Abstract

Local binary patterns (LBP) have been widely used in texture classification. LBP methods obtain a binary pattern by comparing the gray values of pixels in a small circular region with the gray value of the central pixel. Although many conventional LBP methods perform well in texture classification, they describe only the microstructures of texture images, such as edges, corners and spots. Adopting the multi-resolution analysis technique does not change this situation. Moreover, the circular sampling region limits the ability of conventional LBP methods to describe anisotropic features. In this paper, we change the shape of the sampling region to obtain an extended LBP operator. A multi-structure local binary pattern (Ms-LBP) operator is then obtained by applying the extended LBP operator to different layers of an image pyramid. The proposed method is simple yet efficient, and it describes four types of structures: isotropic microstructure, isotropic macrostructure, anisotropic microstructure and anisotropic macrostructure. We demonstrate the performance of our method on two public texture databases, Outex and CUReT. The experimental results show the advantages of the proposed method.

1 Introduction

With the abundance of textures in the natural world, texture analysis is regarded as one of the major parts of the machine vision, and plays an important role in many applications such as surface inspection [1], content-based image retrieval [2], medical imaging [3], etc. Texture classification has been extensively investigated during the last several decades, especially the textures that are captured under different conditions.

The early representative methods for texture classification include the co-occurrence matrix method [4] and filtering-based approaches [5–7]. These methods are sensitive to changes in the illumination and rotation of textures. More recently, many filtering methods build textons to extract robust texture features. Leung and Malik [8] create 3D textons from a stack of texture images captured under different conditions. Schmid [9] uses isotropic “Gabor-like” filters to build textons from a single image for rotation-invariant texture classification. Varma and Zisserman [10] present a statistical algorithm, MR8, which uses 38 filters to build a rotation-invariant texton library from a training set for classifying an unknown texture image. Unlike the texton-based methods, the local binary pattern (LBP) [11] extracts features by comparing the gray values of pixels in a small local region. It has been successfully applied to many computer vision tasks, such as texture analysis [12, 13], description of salient regions [14] and face recognition [15–18]. For texture classification, Mäenpää et al. [19] introduce the uniform patterns, which improve the texture description by selecting a subset of the patterns encoded in LBP form. With this technique, they propose a rotation-invariant uniform pattern (\( \text{LBP}^{\text{riu2}} \)) operator [20] to describe rotated textures. Liao et al. [21] use the 80% dominant local binary patterns (DLBP) to classify rotated textures, but the method needs an SVM to enhance its performance. Ahonen et al. [22] transform the uniform LBP histogram into the frequency domain and propose the local binary pattern histogram Fourier features (LBP-HF) to describe textures. Guo et al. [23] take local variances as the weights of uniform patterns and propose the LBPV operator. By reordering the bins of the LBPV histogram, they create the feature histograms in all possible orientations for classifying rotated textures. 
Later, they [24] propose a completed modeling of the LBP operator (CLBP_S/M/C) that combines three pieces of local information: center grays (CLBP_C), local signs (CLBP_S) and local magnitudes (CLBP_M). By utilizing the temporal domain information, Zhao and Pietikäinen [25] propose the volume local binary patterns (VLBP) and extract local binary patterns from three orthogonal planes (LBP–TOP) for dynamic texture classification.

Although these LBP methods perform well, most of them consider binary patterns in very small regions and extract only the microstructures of images. These microstructures are not enough to describe the texture information. The problem remains when the multi-resolution technique [20] is employed, because this technique merely combines a limited set of neighbor sampling points and radii. The sampling radii are very small, because the stability of LBP values deteriorates rapidly as the neighbor radius increases. Mäenpää and Pietikäinen [26] propose the LBPF operator to extract larger structures within the basic framework of the LBP method. The LBPF employs exponentially growing circular neighborhoods with Gaussian low-pass filtering to extract binary patterns for texture analysis. The LBPF still describes only isotropic microstructures of images, because the size of the circular neighborhood is limited by the sampling radii. Turtinen and Pietikäinen [27] extract the LBP feature at three scales for scene classification, but their features are limited to different non-overlapping blocks. Qian et al. [28] introduce the PLBP operator, which executes the basic LBP on an image pyramid. The PLBP considers only isotropic information. Moreover, its performance is limited by the patterns at the high levels of the image pyramid, which can have a negative effect when a large number of sampling points is used. In this paper, the image pyramid is also employed to generate sampling regions of different sizes. Two kinds of LBP are used to describe isotropic and anisotropic structures: one takes the sampling points on a circular region, and the other takes the sampling points on elliptical regions with four different rotational angles. The LBP with elliptical sampling is sensitive to the rotational angles of samples. 
The literature [16, 29] also extracts the LBP on elliptical regions, but does not consider the rotation-variant problem of the extracted features. Here, we adjust the order of the extracted feature histograms to build the feature in all possible orientations. The corresponding orientation of two samples is obtained by matching the extracted features in all the possible orientations. We carry out the two kinds of LBP on the image pyramid to extract both the microstructures and the macrostructures of texture images. In our work, four types of structures are described: isotropic microstructure, isotropic macrostructure, anisotropic microstructure and anisotropic macrostructure. The histograms of the different extracted information are then given proper weights to enhance the performance of the proposed method. The results of experiments on the Outex database and the CUReT database show the superiority of our method.

This paper is an extension of our previous work [30]. In this current paper, we have extended the Ms-LBP and added the anisotropic microstructure into the original framework. We also provide a more in-depth analysis and more extensive evaluations on the Ms-LBP. The rest of this paper is organized as follows. Section 2 gives a brief overview of the basic LBP method and discusses the missing information of the conventional LBP. Section 3 is devoted to the details of the proposed method. Section 4 presents the implementation of our experiments and reports the results. Section 5 concludes this paper.

2 Local binary patterns

In this section, we review the LBP methods and point out the structures of texture images that are neglected by most of the LBP methods. This is necessary for understanding the advantage of our method.

2.1 The LBP methods

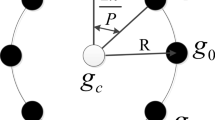

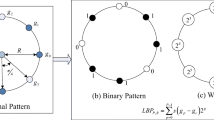

The LBP method [20] characterizes the local structure of the texture image. The basic LBP method considers a small circularly symmetric neighborhood that has P equally spaced pixels on a circle of radius R. Figure 1 shows an example of the local regions with different numbers of sampling points (P) and radii (R).

The LBP value of the center pixel is computed by thresholding the gray values of the P sampling points against the gray value of the center pixel and summing the thresholded values weighted by powers of two. Thus, the LBP label of the center pixel (x, y) is computed by

$$ \text{LBP}_{P,R}(x, y) = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^p \tag{1} $$

$$ s(z) = \begin{cases} 1, & z \ge 0 \\ 0, & z < 0 \end{cases} \tag{2} $$

where \( g_c \) is the gray value of the center pixel and \( g_p \) (p = 0,…, P − 1) is the gray value of the pth sampling point. If the coordinates of \( g_c \) are (0, 0), then the coordinates of \( g_p \) are (−R sin(2πp/P), R cos(2πp/P)). The gray values of sampling points that do not fall exactly at the center of a pixel are estimated by interpolation.
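The computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names `bilinear` and `lbp_value` are hypothetical, bilinear interpolation is assumed as the interpolation scheme, and the coordinate pair (−R sin, R cos) is assumed to mean (row offset, column offset).

```python
import math

def bilinear(img, row, col):
    """Interpolate the gray value at a fractional (row, col) position."""
    r0, c0 = int(math.floor(row)), int(math.floor(col))
    r1 = min(r0 + 1, len(img) - 1)
    c1 = min(c0 + 1, len(img[0]) - 1)
    fr, fc = row - r0, col - c0
    top = (1 - fc) * img[r0][c0] + fc * img[r0][c1]
    bottom = (1 - fc) * img[r1][c0] + fc * img[r1][c1]
    return (1 - fr) * top + fr * bottom

def lbp_value(img, row, col, P=8, R=1.0):
    """Basic LBP label of the pixel at (row, col); neighbors must lie inside img."""
    g_c = img[row][col]
    label = 0
    for p in range(P):
        angle = 2.0 * math.pi * p / P
        # Assumption: (-R sin, R cos) is read as (row offset, column offset).
        # Rounding removes floating-point noise such as cos(pi/2) = 6e-17.
        r = round(row - R * math.sin(angle), 9)
        c = round(col + R * math.cos(angle), 9)
        g_p = bilinear(img, r, c)
        if g_p >= g_c:              # s(g_p - g_c) = 1
            label += 2 ** p
    return label

image = [[1, 9, 1],
         [2, 5, 8],
         [1, 3, 1]]
print(lbp_value(image, 1, 1, P=4, R=1.0))  # prints 3
```

With P = 4 and R = 1 the four sampling points coincide with the right, upper, left and lower neighbors, so only the first two bits are set for this toy image.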

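The basic LBP is sensitive to the orientation of an image. Ojala et al. [20] designate the patterns with at most two 0/1 transitions as the uniform patterns and propose the rotation-invariant uniform pattern operator \( \text{LBP}_{P,R}^{\text{riu2}} \):

$$ \text{LBP}_{P,R}^{\text{riu2}} = \begin{cases} \sum_{p=0}^{P-1} s(g_p - g_c), & U(\text{LBP}_{P,R}) \le 2 \\ P + 1, & \text{otherwise} \end{cases} \tag{3} $$

where

$$ U(\text{LBP}_{P,R}) = \left| s(g_{P-1} - g_c) - s(g_0 - g_c) \right| + \sum_{p=1}^{P-1} \left| s(g_p - g_c) - s(g_{p-1} - g_c) \right| \tag{4} $$

Here \( s(z) \) equals 1 for \( z \ge 0 \) and 0 otherwise, and U counts the 0/1 transitions in the circular pattern.

According to the definition of ‘uniform’, there are P + 1 ‘uniform’ binary patterns in a circularly symmetric neighbor set of P pixels. Equation (3) assigns a unique label to each of them. The assigned labels correspond to the number of ‘1’ bits in the pattern (0, 1,…, P), and all the ‘non-uniform’ patterns are grouped under the label P + 1. Thus, \( \text{LBP}_{P,R}^{\text{riu2}} \) has P + 2 distinct output values. Combined with the local variance (\( \text{VAR}_{P,R} \)), the \( \text{LBP}_{P,R}^{\text{riu2}}/\text{VAR}_{P,R} \) operator usually performs well in texture classification.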
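The labeling rule can be checked with a short Python sketch. The function names `uniformity` and `riu2_label` are hypothetical, and the input is assumed to be the already thresholded bit sequence of one neighborhood.

```python
from itertools import product

def uniformity(bits):
    """Number of 0/1 transitions in the circular bit pattern."""
    P = len(bits)
    # bits[p - 1] wraps around to the last bit when p == 0.
    return sum(bits[p] != bits[p - 1] for p in range(P))

def riu2_label(bits):
    """Rotation-invariant uniform label: count of 1 bits, or P + 1 if non-uniform."""
    if uniformity(bits) <= 2:
        return sum(bits)
    return len(bits) + 1

# Enumerate all 2^8 patterns for P = 8 and collect the distinct labels.
labels = {riu2_label(bits) for bits in product((0, 1), repeat=8)}
print(sorted(labels))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9], i.e. P + 2 values
```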

2.2 Drawback of the conventional LBP

The LBP methods compute patterns only on small local regions. The extracted patterns describe the small structures of images, such as flat areas, spots, corners and edges. The LBP histogram of an image records the occurrence frequencies of these small structures. The performance of the conventional LBP methods is limited, because these methods depend merely on the microstructures of images. The weakness is quite clear when different texture images have the same microstructures. We give an extreme example in Fig. 2 to show the drawback. The uniform LBP features are selected to illustrate the problem; other conventional LBP methods lead to similar conclusions. The first column of Fig. 2 shows two texture images that have the same microstructures but different macrostructures. The second column presents the uniform LBP (P = 8, R = 1) histograms of the two textures in the first column. The uniform LBP method clearly does not help to distinguish the two textures, because they have similar LBP feature histograms (the Euclidean distance is 0.0029). In the third column, the uniform LBP (P = 8, R = 1) histograms are extracted on the second level of the pyramid of the texture images in the first column. Some differences between the two histograms can be seen in the third column (the Euclidean distance is 0.0454). The details of the image pyramid will be described in the next section. The results show that some important information is lost by the conventional LBP methods, which extract only the microstructures of images.

Fig. 2 First column: two texture images that have the same microstructures. Second column: uniform LBP (P = 8, R = 1) histograms of the textures on the left. Third column: uniform LBP (P = 8, R = 1) histograms on the second level of the pyramid of the textures on the left. All the histograms are normalized. The Euclidean distances of the histograms in sub-images (b) and (c) are 0.0029 and 0.0454, respectively

3 Multi-structure local binary patterns

The basic LBP only considers the isotropic microstructures of images. In this section, the shape of the sampling region is changed to extract both isotropic and anisotropic LBP. We execute them on an image pyramid to describe four different types of structures: (1) isotropic microstructure; (2) isotropic macrostructure; (3) anisotropic microstructure; (4) anisotropic macrostructure.

3.1 Extended LBP

The conventional LBP methods take the sampling points in a circular region, which is good for capturing isotropic information. Here, we alter the shape of the sampling region to describe both isotropic and anisotropic structures. The sampling points are obtained not only in the circular region, but also in four elliptical regions. The four ellipses are the same ellipse at four different rotational angles θ: 0°, 45°, 90° and 135°. For each ellipse, the ratio of the major axis to the minor axis is fixed at 2:1, and the semi-minor axis of the elliptical region is set equal to the radius of the circular region. Thus, we can still use the radius R to express the size of the ellipse. Suppose the coordinates of the central pixel in the elliptical region are (0, 0). Then the x-coordinate of the pth sampling point equals 2R cos(2πp/P + θ) cos(θ) + R sin(2πp/P + θ) sin(θ), while its y-coordinate equals −2R cos(2πp/P + θ) sin(θ) + R sin(2πp/P + θ) cos(θ). Figure 3 gives the five sampling types with eight sampling points. We modify the operator \( \text{LBP}_{P,R}^{\text{riu2}} \) into \( \text{LBP}_{T,P,R}^{\text{riu2}} \):

$$ \text{LBP}_{T,P,R}^{\text{riu2}} = \begin{cases} \sum_{p=0}^{P-1} s(g_p - g_c), & U(\text{LBP}_{T,P,R}) \le 2 \\ P + 1, & \text{otherwise} \end{cases} $$

where \( s \) and \( U \) are defined as for \( \text{LBP}_{P,R}^{\text{riu2}} \), \( g_p \) is now the gray value of the pth sampling point of the selected sampling region, and the subscript ‘T’ stands for the sampling type, with T ∈ {0, 1, 2, 3, 4}. T = 0 indicates the circular sampling region, while T = 1, 2, 3, 4 select elliptical sampling with rotational angles 0°, 45°, 90° and 135°, respectively.
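The five sampling types can be generated with a small helper. The sketch below is only an illustration under stated assumptions: the name `sampling_offsets` and the table `ELLIPSE_ANGLES_DEG` are hypothetical, and each returned pair simply follows the coordinate expressions given in the text.

```python
import math

# Rotational angle (in degrees) of the ellipse for each elliptical sampling type T.
ELLIPSE_ANGLES_DEG = {1: 0.0, 2: 45.0, 3: 90.0, 4: 135.0}

def sampling_offsets(T, P, R):
    """Offsets of the P sampling points relative to the central pixel at (0, 0)."""
    offsets = []
    for p in range(P):
        phi = 2.0 * math.pi * p / P
        if T == 0:
            # Circular sampling, as in the basic LBP.
            offsets.append((-R * math.sin(phi), R * math.cos(phi)))
        else:
            # Elliptical sampling: semi-major axis 2R, semi-minor axis R.
            theta = math.radians(ELLIPSE_ANGLES_DEG[T])
            t = phi + theta
            x = 2 * R * math.cos(t) * math.cos(theta) + R * math.sin(t) * math.sin(theta)
            y = -2 * R * math.cos(t) * math.sin(theta) + R * math.sin(t) * math.cos(theta)
            offsets.append((x, y))
    return offsets

for T in range(5):
    radii = [math.hypot(x, y) for x, y in sampling_offsets(T, 8, 1.0)]
    print(T, round(min(radii), 3), round(max(radii), 3))
```

For T = 0 every point lies at distance R from the center, whereas for the elliptical types the distances range between R and 2R.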

3.2 Image pyramid

An image pyramid is created from the original image. We use \( I_l \) to denote the sub-images of the image pyramid, where the subscript l is the level of the pyramid. When building the image pyramid, the Gaussian function G(x, y, σ) is used to smooth the image. Following the SIFT operator [31], we set σ = 1.5.

Suppose the original image is \( I_0 \). The sub-image \( I_l \) (l > 0) is created from the image \( I_{l-1} \) by the following formula:

$$ I_l = \left( G(x, y, \sigma) * I_{l-1} \right) \downarrow 2 $$

where * is the convolution operation and ↓2 denotes downsampling by a factor of 2. Figure 4 gives a three-level image pyramid for extracting different structures.
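A dependency-free sketch of the pyramid construction is given below. The kernel truncation at 3σ, the replicated borders and the choice of keeping every second row and column are assumptions made for illustration; the text does not specify them. The function names are hypothetical.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma (assumed truncation)."""
    radius = int(math.ceil(3 * sigma))
    kernel = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(kernel)
    return [v / total for v in kernel]

def blur_rows(img, kernel):
    """Convolve every row with the kernel, replicating border pixels."""
    radius = len(kernel) // 2
    width = len(img[0])
    return [[sum(kernel[j + radius] * row[min(max(c + j, 0), width - 1)]
                 for j in range(-radius, radius + 1))
             for c in range(width)]
            for row in img]

def transpose(img):
    return [list(col) for col in zip(*img)]

def next_level(img, sigma=1.5):
    """Smooth with a separable Gaussian, then keep every second row and column."""
    kernel = gaussian_kernel(sigma)
    smoothed = transpose(blur_rows(transpose(blur_rows(img, kernel)), kernel))
    return [row[::2] for row in smoothed[::2]]

def build_pyramid(img, levels, sigma=1.5):
    """Return [I_0, I_1, ..., I_{levels-1}]."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(next_level(pyramid[-1], sigma))
    return pyramid

flat = [[7.0] * 16 for _ in range(16)]
print([(len(level), len(level[0])) for level in build_pyramid(flat, 3)])
```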

3.3 Multi-structure local binary pattern feature

The multi-structure local binary pattern (Ms-LBP) is obtained by executing the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) operator on the image pyramid. The isotropic microstructures are obtained by executing the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) (T = 0) operator on the original image, while the anisotropic microstructures are obtained by executing the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) (T = 1, 2, 3, 4) operators on the same image. Similarly, the isotropic macrostructures are obtained by executing the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) (T = 0) operator on the sub-images \( I_l \) (l > 0), while the anisotropic macrostructures are obtained by executing the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) (T = 1, 2, 3, 4) operators on the sub-images \( I_l \) (l > 0). For consistency, our method is written as \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \), where ‘P’, ‘R’ and ‘riu2’ have the same meaning as in the operator \( \text{LBP}_{P,R}^{\text{riu2}} \). The final feature of \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) is composed of the \( \text{LBP}_{T,P,R}^{\text{riu2}} \) histograms of every sub-image of the image pyramid:

$$ H_{l,T}(k) = \sum_{i=1}^{M} \sum_{j=1}^{N} f\left( \text{LBP}_{l,T,P,R}^{\text{riu2}}(i, j),\, k \right), \quad k \in [0, K] $$

$$ f(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases} $$

where \( {\text{LBP}}_{l,T,P,R}^{\text{riu2}} (i,j) \) is the \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) value of the pixel \( I_l(i, j) \); K is the maximal \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) pattern; \( H_{l,T} \) is the \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) histogram of the sub-image \( I_l \); M and N are the sizes of the sub-image of the image pyramid.
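As a rough illustration of how the feature is assembled, the sketch below builds one histogram per pyramid level and sampling type from precomputed label maps. The names `label_histogram` and `ms_lbp_feature` are hypothetical, and normalizing each histogram to unit sum is an assumption made here so that levels of different sizes are comparable.

```python
def label_histogram(label_map, P):
    """Normalized histogram of riu2 labels (values 0 .. P + 1) of one sub-image."""
    hist = [0] * (P + 2)
    for row in label_map:
        for label in row:
            hist[label] += 1
    total = sum(hist)
    return [count / total for count in hist]

def ms_lbp_feature(label_maps, P):
    """label_maps[l][T] is the 2-D label map of level l and sampling type T.

    Returns a dictionary {(l, T): histogram}.
    """
    return {(l, T): label_histogram(label_map, P)
            for l, level in enumerate(label_maps)
            for T, label_map in enumerate(level)}

# Toy input: one pyramid level, two sampling types, 2 x 2 label maps, P = 8.
toy_maps = [[[[0, 1], [1, 9]], [[8, 8], [8, 8]]]]
feature = ms_lbp_feature(toy_maps, P=8)
print(feature[(0, 0)][1], feature[(0, 1)][8])  # prints 0.5 1.0
```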

3.4 Classification principle

There are many ways to evaluate the dissimilarity between a sample and a model. One useful measure is the Chi-square distance, which is employed by many studies [8, 10, 23, 24, 32] in texture classification. The Chi-square distance between a model M and a sample S is computed as follows:

$$ D(S, M) = \sum_{b=1}^{B} \frac{(S_b - M_b)^2}{S_b + M_b} $$

where B is the number of bins; \( S_b \) and \( M_b \) are the values of the sample and the model at the bth bin, respectively.
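A minimal Python version of this distance is shown below. Skipping bins in which both histograms are zero is an assumption added to avoid division by zero; the text does not discuss empty bins.

```python
def chi_square(sample, model):
    """Chi-square distance between two histograms with the same number of bins."""
    distance = 0.0
    for s_b, m_b in zip(sample, model):
        if s_b + m_b > 0:           # assumed handling of empty bins
            distance += (s_b - m_b) ** 2 / (s_b + m_b)
    return distance

print(chi_square([0.5, 0.5], [0.5, 0.5]))  # prints 0.0
print(chi_square([1.0, 0.0], [0.0, 1.0]))  # prints 2.0
```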

The final dissimilarity combines the distances of the four kinds of structure features. The anisotropic part of the proposed method is not rotation invariant, so the rotation problem must be solved first. The anisotropic features are extracted by the \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) (T = 1, 2, 3, 4) operators, which correspond to elliptical sampling at four rotational angles (0°, 45°, 90° and 135°). The \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) (T = 1, 2, 3, 4) values are unchanged when the image is rotated by 180°. Therefore, handling the rotation of the elliptical sampling in four orientations (0°, 45°, 90° and 135°) makes the anisotropic features rotation invariant in eight orientations (0°, 45°, 90°, 135°, 180°, 225°, 270° and 315°). Suppose \( [H_{l,1}\; H_{l,2}\; H_{l,3}\; H_{l,4}] \) are the four anisotropic feature histograms at the lth level of the image pyramid. Circularly shifting the sequence of the four histograms gives the same result as extracting the anisotropic feature histograms from a rotated image. For instance, the sequence \( [H_{l,2}\; H_{l,3}\; H_{l,4}\; H_{l,1}] \) equals the result of executing the \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) (T = 1, 2, 3, 4) operators in turn on the image rotated anticlockwise by 45° or 225°. The other orders of the four histograms behave similarly. Hence, the four circular shifts of the sequence yield the anisotropic feature histograms of an image in eight possible orientations (0°, 45°, 90°, 135°, 180°, 225°, 270° and 315°). We treat the anisotropic feature histograms at all levels of the image pyramid as a whole. The corresponding angle between a testing sample and a training sample is found by searching for the minimum dissimilarity between the anisotropic histograms of the training sample and the anisotropic histograms of the testing sample in all possible orientations. 
Once the corresponding angle has been found, the anisotropic feature distances at the different levels of the image pyramid can be calculated separately. Another factor to consider is the contribution of the different parts of the Ms-LBP operator. Compared with the microstructures, the macrostructures located at the top of the image pyramid provide fewer statistics because of the small sizes of the sub-images at high levels. Intuitively, the higher levels of the image pyramid supply less information about the texture than the lower levels. Moreover, the different structures should make different contributions to classifying samples. Therefore, the final dissimilarity \( D_F(S, M) \) is the weighted sum of all the histogram distances:

$$ D_F(S, M) = \sum_{l=0}^{L} \left[ w_{l,0}\, D(S_{l,0}, M_{l,0}) + w_{l,1}\, D_{\min}(S_l^{an}, M_l^{an}) \right] $$

$$ D_{\min}(S_l^{an}, M_l^{an}) = \sum_{T=1}^{4} D\left( S_{l,\,((T-1+k) \bmod 4)+1},\, M_{l,T} \right) $$

$$ k = \mathop{\arg\min}_{k' \in \{0,1,2,3\}} \sum_{l=0}^{L} \sum_{T=1}^{4} D\left( S_{l,\,((T-1+k') \bmod 4)+1},\, M_{l,T} \right) $$

where D is the Chi-square distance; \( S_{l,T} \) and \( M_{l,T} \) stand for the \( {\text{LBP}}_{T,P,R}^{\text{riu2}} \) histograms at the lth level of the image pyramid of the sample and the model, respectively; \( w_{l,0} \) and \( w_{l,1} \) are the distant weights of the isotropic part and the anisotropic part at the lth level of the image pyramid, respectively; L is the maximum level of the image pyramid; the value k points to the best order of the anisotropic histograms, which corresponds to the appropriate rotational angle of the testing sample relative to the training sample; \( D_{\min}(S_l^{an}, M_l^{an}) \) is the sum of the anisotropic distances between the adjusted \( S_{l,T} \) and the original \( M_{l,T} \).
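The orientation search and the weighted sum can be sketched as follows. This is an illustration under assumptions, not the authors' code: the names `shifted`, `best_shift` and `final_dissimilarity` are hypothetical, the shift index k is taken over the four circular orders of the anisotropic histograms, and the search uses all pyramid levels together as the text describes.

```python
def chi_square(sample, model):
    """Chi-square distance; bins that are empty in both histograms are skipped."""
    return sum((s - m) ** 2 / (s + m) for s, m in zip(sample, model) if s + m > 0)

def shifted(histograms, k):
    """Circularly shift the four anisotropic histograms by k positions."""
    return histograms[k:] + histograms[:k]

def anisotropic_distance(sample_level, model_level, k):
    return sum(chi_square(s, m) for s, m in zip(shifted(sample_level, k), model_level))

def best_shift(sample_an, model_an):
    """Shift k in {0, 1, 2, 3} minimizing the distance summed over all levels."""
    def total(k):
        return sum(anisotropic_distance(s, m, k) for s, m in zip(sample_an, model_an))
    return min(range(4), key=total)

def final_dissimilarity(sample_iso, model_iso, sample_an, model_an, w_iso, w_an):
    """Weighted sum of isotropic and (aligned) anisotropic distances over levels."""
    k = best_shift(sample_an, model_an)
    total = 0.0
    for l in range(len(sample_iso)):
        d_iso = chi_square(sample_iso[l], model_iso[l])
        d_an = anisotropic_distance(sample_an[l], model_an[l], k)
        total += w_iso[l] * d_iso + w_an[l] * d_an
    return total

# Toy example with one level: the sample is the model with its histograms rotated.
model_an = [[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.2, 0.8]]]
sample_an = [[[0.2, 0.8], [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]]
iso = [[0.5, 0.5]]
print(best_shift(sample_an, model_an))  # prints 1
print(final_dissimilarity(iso, iso, sample_an, model_an, [0.5], [0.5]))  # prints 0.0
```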

The classification rate is a good candidate for the weight. All the distant weights should be calculated from the training set. One sample of each class is chosen in turn. The selected samples form a new training set, while the other samples are used for testing. Suppose each class has N samples; then there are N test groups. For each test group, the isotropic and anisotropic features are extracted at each level of the image pyramid. We use one structure feature at one level of the image pyramid at a time to perform the texture classification in the created test group. For the isotropic part, the basic Chi-square distance is used to measure the dissimilarity of the isotropic histograms between a testing sample and a training sample. For the anisotropic part, the best orientation of the testing sample is searched only at the current level of the image pyramid. Thus, the anisotropic dissimilarity \( D(S_l^{an}, M_l^{an}) \) is computed as:

$$ D(S_l^{an}, M_l^{an}) = \min_{k \in \{0,1,2,3\}} \sum_{T=1}^{4} D\left( S_{l,\,((T-1+k) \bmod 4)+1},\, M_{l,T} \right) $$

Different structure features achieve different classification rates. For each structure feature, the classification rate averaged over the test groups is used to build the corresponding structure weight. For the image pyramid, the sub-images at lower levels supply more details of a texture. Here, we assign a weight of \( 1/2^l \) to the lth level of the image pyramid so that the lower levels make a greater contribution. The combinations of the structure weights and the pyramid weights are taken as the distant weights. Finally, all the distant weights are normalized to sum to one. Algorithm 1 presents the pseudo code for calculating the distant weights.
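The weighting scheme can be illustrated with a short sketch. It assumes that the structure weight is the averaged classification rate itself and that the "combination" with the pyramid weight is a product; both are assumptions, since Algorithm 1 is not reproduced here. The name `distant_weights` and the rates in the example are hypothetical.

```python
def distant_weights(rates):
    """rates[l] = (isotropic_rate, anisotropic_rate) averaged over the test groups.

    Returns normalized weights [[w_l0, w_l1], ...] that sum to one.
    """
    # Assumed combination: classification rate times the pyramid weight 1 / 2^l.
    raw = [[rate / (2 ** l) for rate in level] for l, level in enumerate(rates)]
    total = sum(sum(level) for level in raw)
    return [[w / total for w in level] for level in raw]

# Hypothetical rates for a two-level pyramid, used only to exercise the function.
example_rates = [(0.8, 0.9), (0.6, 0.7)]
weights = distant_weights(example_rates)
print([[round(w, 3) for w in level] for level in weights])
```

Under these assumptions a level-1 part with the same rate as a level-0 part receives half of its weight, so the lower levels dominate the final dissimilarity.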

4 Experiments

We demonstrate the performance of the proposed method on two public texture databases: the Outex and the CUReT. These two databases were selected because their texture images are acquired under more varied conditions (viewing angle, orientation and source of illumination) than those of the widely used Brodatz database. Many studies [8, 10, 19–24, 28] use the two databases to study texture features.

4.1 The compared methods

As an LBP-based method, the proposed method is compared against six representative LBP algorithms: \( \text{LBP}_{P,R}^{\text{riu2}} \) [20], \( \text{LBP}_{P,R}^{\text{riu2}}/\text{VAR}_{P,R} \) [20], \( \text{LBP}_{P,R}\text{-HF} \) [22], \( \text{LBPV}_{P,R}^{\text{u2}}\text{GM}_{\text{ES}} \) [23], \( \text{CLBP}\_\text{S}_{P,R}^{\text{riu2}}/\text{M}_{P,R}^{\text{riu2}}/\text{C} \) [24] and \( \text{PLBP}_{P,R}^{\text{riu2}} \) [28]. The MR8 [10], a powerful texton-based method, is also compared.

The \( \text{LBP}_{P,R}^{\text{riu2}} \) and the local variance (\( \text{VAR}_{P,R} \)) are two classical rotation-invariant texture descriptors. The two descriptors express the local information in different ways. Their joint distribution \( \text{LBP}_{P,R}^{\text{riu2}}/\text{VAR}_{P,R} \) usually performs better than the \( \text{LBP}_{P,R}^{\text{riu2}} \) operator alone. The output values of \( \text{VAR}_{P,R} \) are continuous. In the following experiments, the feature distribution of \( \text{VAR}_{P,R} \) is quantized into 16 bins according to [20].

To avoid quantizing the values of \( \text{VAR}_{P,R} \), the \( \text{LBPV}_{P,R}^{\text{u2}}\text{GM}_{\text{ES}} \) [23] takes the local variances as the weights of the corresponding uniform patterns. A rotated texture is classified by exhaustively searching the uniform patterns in all possible orientations. The \( \text{LBP}_{P,R}\text{-HF} \) [22] is also built on the uniform patterns: it transforms the histogram of uniform patterns into Fourier space to describe rotated textures. The \( \text{CLBP}\_\text{S}_{P,R}^{\text{riu2}}/\text{M}_{P,R}^{\text{riu2}}/\text{C} \) [24] operator combines three pieces of information to enhance the classification performance. For \( \text{LBPV}_{P,R}^{\text{u2}}\text{GM}_{\text{ES}} \) and \( \text{CLBP}\_\text{S}_{P,R}^{\text{riu2}}/\text{M}_{P,R}^{\text{riu2}}/\text{C} \), all the textures are normalized to have an average intensity of 128 and a standard deviation of 20 [23, 24].

The MR8 [10] is a texton learning algorithm. For the MR8, all the texture samples are normalized into an average intensity of zero with standard deviation of one [10]. Ten textons are learned from the training samples of each class.

The \( \text{PLBP}_{P,R}^{\text{riu2}} \) [28] executes the basic \( \text{LBP}_{P,R}^{\text{riu2}} \) operators on an image pyramid. Because both the \( \text{PLBP}_{P,R}^{\text{riu2}} \) and the proposed method use the image pyramid as their framework, the image pyramid of \( \text{PLBP}_{P,R}^{\text{riu2}} \) is created in the same way as that of the proposed method. The number of levels of the image pyramid is selected according to the sizes of the images. For the following databases, an image pyramid with four levels is used. To analyze the differences between the \( \text{PLBP}_{P,R}^{\text{riu2}} \) and the proposed method, the \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) without weights is also evaluated in the following experiments. For convenience, the \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) without weights is denoted by \( \text{Ms-LBP}_{P,R,\text{nw}}^{\text{riu2}} \). For the \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \), the weights are first calculated from the training samples according to Algorithm 1. Once the weights have been obtained, the Ms-LBP features are extracted from the training samples and the testing samples. A testing sample is classified according to the nearest neighbor principle with the Chi-square distance. For the \( \text{Ms-LBP}_{P,R,\text{nw}}^{\text{riu2}} \), the weights are ignored when the dissimilarity between models and testing samples is calculated. In other words, each weight in the \( \text{Ms-LBP}_{P,R,\text{nw}}^{\text{riu2}} \) equals one. The percentage of correctly classified samples is used to characterize the performance of the algorithms. The best result for each test suite is marked in bold.
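The nearest neighbor rule and the accuracy measure can be sketched as follows. For brevity, the plain Chi-square distance on a single histogram stands in for the weighted dissimilarity of the full method; the names `classify` and `accuracy` and the toy data are hypothetical.

```python
def chi_square(sample, model):
    """Chi-square distance; bins that are empty in both histograms are skipped."""
    return sum((s - m) ** 2 / (s + m) for s, m in zip(sample, model) if s + m > 0)

def classify(sample_hist, models):
    """Return the label of the model histogram closest to the sample.

    models is a list of (label, histogram) pairs built from the training set.
    """
    label, _ = min(models, key=lambda item: chi_square(sample_hist, item[1]))
    return label

def accuracy(tests, models):
    """Percentage of correctly classified (label, histogram) test pairs."""
    correct = sum(classify(hist, models) == label for label, hist in tests)
    return 100.0 * correct / len(tests)

models = [("canvas", [0.7, 0.2, 0.1]), ("carpet", [0.1, 0.3, 0.6])]
tests = [("canvas", [0.6, 0.3, 0.1]), ("carpet", [0.2, 0.2, 0.6])]
print(accuracy(tests, models))  # prints 100.0
```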

4.2 Results on the Outex database

There are 24 classes of textures in the Outex database. Our experiments were performed on two public test suites of the Outex (http://www.outex.oulu.fi/temp/): Outex_TC_00010 (TC10) and Outex_TC_00012 (TC12). TC10 is used for studying rotation-invariant texture classification, and TC12 is used for studying illumination- and rotation-invariant texture classification. The two test suites contain the same 24 classes of textures, as shown in Fig. 5. Each texture class is collected under three different illuminants (‘inca’, ‘tl84’ and ‘horizon’) and at nine different angles of rotation (0°, 5°, 10°, 15°, 30°, 45°, 60°, 75° and 90°). All the images in the two test suites are gray-scale images. There are 20 non-overlapping 128 × 128 texture samples for each class under each setting. The experimental setups were as follows:

1. For TC10, the classifier was trained with the reference textures of the illuminant ‘inca’ (20 samples of angle 0° in each texture class), while the 160 (8 × 20) samples of the other eight rotational angles in each texture class were used for testing the classifier. Hence, there were 480 (24 × 1 × 20) models and 3,840 (24 × 8 × 20) testing samples in total.

2. For TC12, the classifier was trained with the same training samples as in the TC10 test suite. All samples captured under illuminant ‘tl84’ or ‘horizon’ were used for testing the classifier. Hence, for each illuminant, there were 480 (24 × 20) models and 4,320 (24 × 20 × 9) testing samples in total. For brevity, ‘TC12t’ denotes TC12 ‘tl84’ and ‘TC12h’ denotes TC12 ‘horizon’.

The test suites TC10 and TC12 share the same training set, which contains 480 samples of illuminant ‘inca’ in total. Therefore, they have the same distant weights. Table 1 presents the correct classification rates of the different parts of the proposed method for different numbers of sampling points P and radii R. An image pyramid with four levels was used. For each \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) operator, the distant weights were computed by normalizing the combination of the correct classification rates and the corresponding pyramid weights. Taking the \( \text{Ms-LBP}_{8,1}^{\text{riu2}} \) operator as an example, the weights of its different parts from level 0 to level 3 are 0.273, 0.306, 0.126, 0.148, 0.045, 0.064, 0.017 and 0.022, respectively. Similarly, the weights of the proposed method with other parameters can be calculated according to Algorithm 1. The parts of the proposed method at the lower levels are assigned higher weights than those at the high levels. The reason lies in the image pyramid. The lower levels of the image pyramid supply the details of a texture, and these details contain a lot of information about the texture. In addition, the proposed method uses the distribution of patterns to describe the texture. The distributions at high levels are unstable, because the number of pixels at high levels is very small. Therefore, the high levels are assigned low weights. The stability of the distribution can be seen from the results of the different levels in Table 1: the correct classification rates deteriorate rapidly as the level increases, because the sub-images at high levels are too small to supply enough statistics of structures. The four elliptical sampling regions extract more anisotropic information and give the anisotropic parts a good performance in the image pyramid. 
Therefore, the classification rates of the anisotropic parts are usually higher than those of the isotropic parts at the same levels of the image pyramid.

Table 2 gives the results of the different methods on the TC10 and TC12 test suites. The results of all the LBP-based methods with the multi-resolution technique are also presented in Table 2. The multi-resolution technique [20] is an effective tool for enhancing the performance of LBP methods. The LBP methods with the multi-resolution technique combine the features provided by multiple LBP operators with varying parameters (P, R). The highest scores, 98.89 and 98.52%, are obtained by the \( \text{Ms-LBP}_{16,2}^{\text{riu2}} \) operator on TC12t and TC12h, respectively. For TC10, the combination of \( \text{Ms-LBP}_{16,2,\text{nw}}^{\text{riu2}} \) and \( \text{Ms-LBP}_{24,3,\text{nw}}^{\text{riu2}} \) provides the best score, 99.64%. The superiority of our method is obvious on the test suite TC12, which contains textures that vary in both illuminant and rotation. Textures captured under different illuminants usually have different microstructures, but their macrostructures are very similar. Thus, compared with other operators, our method works well on the TC12 testing sets.

The \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) method usually outperforms its counterparts under the same parameters (P, R) on the same testing sets. This is particularly clear when the parameter (P, R) equals (8, 1). In fact, the conventional LBP methods with eight sampling points supply very limited patterns to express the texture, whereas the \( \text{Ms-LBP}_{8,1}^{\text{riu2}} \) operator extracts more information to describe it. Comparing the operators with a single parameter (P, R), the proposed method obtains its best results with parameter (16, 2), and its performance degrades a little with parameter (24, 3). The same phenomenon can be observed in the results at high levels in Table 1. The reason lies in the small sizes of the sub-images at the high levels of the image pyramid. At the same time, the dimension of the feature histogram increases with the number of sampling points P. It is well known that a high-dimensional histogram with few entries cannot support a statistically stable distribution of features, and the operator \( \text{Ms-LBP}_{24,3}^{\text{riu2}} \) falls into this situation. As can be seen from Table 2, the performance of \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) degrades a little with the large number of sampling points, P = 24. The same holds for \( \text{PLBP}_{24,3}^{\text{riu2}} \) and \( \text{Ms-LBP}_{24,3,\text{nw}}^{\text{riu2}} \). For \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \), the weights are used to limit the negative effect of the patterns at the high levels of the image pyramid. Thus, the \( \text{Ms-LBP}_{P,R}^{\text{riu2}} \) usually performs better than the \( \text{Ms-LBP}_{P,R,\text{nw}}^{\text{riu2}} \).

Although our method performs better than the others, it needs more features to describe textures. Table 3 shows the lengths of the feature vectors extracted by the different methods. The feature vector of the proposed method is longer than those of the compared methods, except for the \( \text{CLBP}\_\text{S}_{P,R}^{\text{riu2}}/\text{M}_{P,R}^{\text{riu2}}/\text{C} \) method.

4.3 Results on the CUReT database

There are 61 materials in the CUReT database. Each material has 205 texture images with different viewpoints, illumination and orientation. Figure 6 shows the materials. In each material, 118 images were captured from a viewing angle of less than 60°. Among the 118 images, 92 images were selected. The selected images had sufficiently large regions to be cropped into sizes of 200 × 200 and all the cropped regions were converted into gray scales. Thus, there were 5,612 images in total. The cropped samples can be downloaded at (http://www.robots.ox.ac.uk/~vgg/research/texclass/).

In our experiments, we randomly selected N samples from each class as training samples and used the others for testing. The number N was set to 6, 12, 23 and 46 in turn. For each number N, the random partition was repeated ten times. The mean accuracy over the ten splits was used to evaluate the algorithms. We learned the distant weights of the Ms-LBP from the smallest training set, which was built in the first run by randomly selecting six samples for each class. The number of selected training samples for each class has little effect on the calculated distant weights. The created training set is divided several times to compute the performance of the different parts of the Ms-LBP, and a different number N only alters the number of times this process is executed. The results of the different parts are finally normalized into relative results. Table 4 gives the correct classification rates of the different parts of the Ms-LBP for different numbers of sampling points P and radii R. With the classification rates, the distant weights are easily obtained according to Algorithm 1.

Table 5 shows the classification results on the CUReT database under the four partition schemes. The proposed method obtains the best scores of 98.88, 96.79, 93.26 and 86.79% when N equals 46, 23, 12 and 6, respectively. The classification accuracies of all the algorithms increase with N, because more training samples supply more information for describing the textures under different capture conditions. Notably, the advantage of the proposed method grows as the number of training samples shrinks. Taking N = 6 as an example, the proposed method improves classification accuracy by an average of 8.17% over the CLBP_S riu2 P,R /M riu2 P,R /C method.

Many materials in the CUReT database have 3D structures, so small shadows appear in the images when these materials are captured from different viewpoints. These shadows produce inaccurate patterns for the conventional LBP methods, because these methods extract patterns only by comparing pixels in a small region. Using more training samples to learn the classifier can reduce the effect of the shadows to some extent, because the training samples and the testing samples are then more likely to contain shadows from the same viewpoints. The proposed method also extracts macrostructures from the high levels of the image pyramid. Since each pixel in the high levels aggregates a large region of the original image, the shadows have little effect on the patterns that represent macrostructures. Therefore, the proposed method performs much better than its counterparts when the training set has fewer samples. This is also why the MR8 method performs well on the CUReT database: its filter bank captures information at large scales, and the filtering operation reduces the effect of small shadows. On the other hand, the filtering operation loses the ability to describe local information precisely. Hence, the MR8 method does not give the expected results on the Outex database. Textures in the Outex database have no viewpoint change, and the local regions are relatively stable between the training samples and the testing samples. Therefore, the LBP methods usually achieve better results on the Outex database than the MR8 method. Since both micro and macro information are extracted, the proposed method performs well on both databases.
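The claim that a small shadow fades at high pyramid levels can be illustrated with a toy example. The sketch below assumes each pyramid level is formed by averaging non-overlapping 2 × 2 blocks, which may differ from the pyramid construction actually used in the paper; the helper names and the 16 × 16 test patch are likewise hypothetical.

```python
def downsample(img):
    """One pyramid step: average each non-overlapping 2x2 block (assumed scheme)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * r][2 * c] + img[2 * r][2 * c + 1]
             + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(w)
        ]
        for r in range(h)
    ]


def build_pyramid(img, levels):
    """Return [level 0 (original), level 1, ...], each level half the side of the previous one."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


# A flat 16x16 patch at gray level 200 with a single dark "shadow" pixel.
img = [[200.0] * 16 for _ in range(16)]
img[5][5] = 0.0

pyramid = build_pyramid(img, 4)
# Gray-level range (max minus min) at each level: how strongly the shadow stands out.
contrast = [max(map(max, lvl)) - min(map(min, lvl)) for lvl in pyramid]
print(contrast)  # [200.0, 50.0, 12.5, 3.125]
```

In this toy setting the shadow's contrast drops by a factor of four per level, so a sign comparison against a neighbouring pixel at a high level is far less likely to be flipped by it than a comparison at the original resolution.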

The PLBP riu2 P,R , Ms-LBP riu2 P,R,nw and Ms-LBP riu2 P,R operators all use the image pyramid to extract macro information of textures. This macro information makes the PLBP riu2 P,R algorithm perform better than the basic LBP riu2 P,R method when only a small number of sampling points is used. The performance of the PLBP riu2 24,3 operator falls, because more patterns are present: the high levels of an image pyramid cannot supply enough pixels to support stable distributions over many patterns. Ms-LBP riu2 P,R,nw gives better scores than PLBP riu2 P,R because it extracts extra anisotropic information. Finally, the weights balance the contributions of the different parts of the proposed method. Thus, Ms-LBP riu2 P,R shows better scores than both Ms-LBP riu2 P,R,nw and PLBP riu2 P,R .
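A rough count shows why many sampling points strain the high pyramid levels. The riu2 operator with P sampling points produces P + 2 histogram bins. The sketch below assumes a 200 × 200 image (the CUReT crop size), a side length halved at each level, and a border of R pixels without a full neighbourhood; the halving factor, the border handling and the function names are illustrative assumptions rather than details taken from the paper.

```python
def riu2_bins(p):
    """Number of histogram bins of a rotation-invariant uniform LBP with p sampling points."""
    return p + 2


def samples_per_bin(side, p, r, level, factor=2):
    """Average number of pixels per histogram bin at a given pyramid level.

    Assumes the side shrinks by `factor` per level and that pixels closer than
    r to the border are skipped because their neighbourhood is incomplete.
    """
    level_side = side // factor ** level
    valid = max(level_side - 2 * r, 0)
    return valid * valid / riu2_bins(p)


for p, r in [(8, 1), (16, 2), (24, 3)]:
    per_level = [round(samples_per_bin(200, p, r, lvl), 1) for lvl in range(4)]
    print(f"P={p}, R={r}: {riu2_bins(p)} bins, pixels per bin by level = {per_level}")
```

Under these assumptions, the fourth level offers roughly 53 pixels per bin for (P, R) = (8, 1) but only about 14 for (24, 3), which is consistent with the less stable histograms described above.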

5 Conclusions

In this paper, we proposed a multi-structure framework based on the LBP to describe textures. Both circular sampling and elliptical sampling were used to extract the rotation-invariant uniform LBP operator. Combined with the image pyramid technique, the framework describes four different structures (isotropic microstructure, anisotropic microstructure, isotropic macrostructure and anisotropic macrostructure). The weights of the extracted features are defined according to their contributions to the images. The experimental results on the Outex database and the CUReT database demonstrate the advantages of our method. The performance of the proposed method is limited by the size of the images, because small images cannot supply large macrostructures. Fortunately, texture images differ from other images in that they are full of repeating patterns. In the future, texture synthesis techniques could therefore be used to create larger texture images, on which more stable Ms-LBPs could be obtained. Moreover, the proposed method can be combined with other local information (such as VAR and LBPV) to improve its discriminative power.

References

Tsai DM, Huang TY (2003) Automated surface inspection for statistical textures. Image Vis Comput 21(4):307–323

Chun YD, Kim NC, Jang IH (2008) Content-based image retrieval using multiresolution color and texture features. IEEE Trans Multimed 10(6):1073–1084

Ji Q, Engel J, Craine E (2000) Texture analysis for classification of cervix lesions. IEEE Trans Med Imaging 19(11):1144–1149

Haralick RM, Shanmugam K, Dinstein I (1973) Textural features for image classification. IEEE Trans Syst Man Cybern 3(6):610–621

Laine A, Fan J (1993) Texture classification by wavelet packet signatures. IEEE Trans Pattern Anal Mach Intell 15(11):1186–1191

Manjunath BS, Ma WY (1996) Texture features for browsing and retrieval of image data. IEEE Trans Pattern Anal Mach Intell 18(8):837–842

Randen T, Husoy J (1999) Filtering for texture classification: a comparative study. IEEE Trans Pattern Anal Mach Intell 21(4):291–310

Leung T, Malik J (2001) Representing and recognizing the visual appearance of materials using three-dimensional textons. Int J Comput Vis 43(1):29–44

Schmid C (2001) Constructing models for content-based image retrieval. In: Proceedings of Conference on Computer Vision and Pattern Recognition. Montbonnot, France, pp 39–45

Varma M, Zisserman A (2005) A statistical approach to texture classification from single images. Int J Comput Vis 62(1):61–81

Ojala T, Valkealahti K, Oja E, Pietikäinen M (2001) Texture discrimination with multidimensional distributions of signed gray-level differences. Pattern Recognit 34(3):727–739

Mäenpää T, Pietikäinen M (2005) Texture analysis with local binary patterns. In: Chen C, Wang P (eds) Handbook of Pattern Recognition and Computer Vision. 3rd edn. World Scientific, Singapore, pp 197–216

Zhang J, Tan T (2002) Brief review of invariant texture analysis methods. Pattern Recognit 35(3):735–747

Heikkilä M, Pietikäinen M, Schmid C (2009) Description of interest regions with local binary patterns. Pattern Recognit 42(3):425–436

Ahonen T, Hadid A, Pietikäinen M (2006) Face description with local binary patterns: application to face recognition. IEEE Trans Pattern Anal Mach Intell 28(12):2037–2041

Liao S, Chung A (2007) Face recognition by using elongated local binary patterns with average maximum distance gradient magnitude. In: Proceedings of Asian Conference on Computer Vision. Tokyo, Japan, pp 672–679

Tan X, Triggs B (2010) Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6):1635–1650

Zhang W, Shan S, Qing L, Chen X, Gao W (2009) Are Gabor phases really useless for face recognition? Pattern Anal Appl 12(3):301–307

Mäenpää T, Ojala T, Pietikäinen M, Soriano M (2000) Robust texture classification by subsets of local binary patterns. In: Proceedings of International Conference on Pattern Recognition. Barcelona, Spain, pp 947–950

Ojala T, Pietikäinen M, Mäenpää T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Liao S, Law M, Chung A (2009) Dominant local binary patterns for texture classification. IEEE Trans Image Process 18(5):1107–1118

Ahonen T, Matas J, He C, Pietikäinen M (2009) Rotation invariant image description with local binary pattern histogram Fourier features. In: Proceedings of 16th Scandinavian Conference on Image Analysis. Oslo, Norway, pp 61–70

Guo Z, Zhang L, Zhang D (2010) Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognit 43(3):706–719

Guo Z, Zhang L, Zhang D (2010) A completed modeling of local binary pattern operator for texture classification. IEEE Trans Image Process 19(6):1657–1663

Zhao G, Pietikäinen M (2007) Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 29(6):915–928

Mäenpää T, Pietikäinen M (2003) Multi-scale binary patterns for texture analysis. In: Proceedings of Scandinavian Conference on Image Analysis. Halmstad, Sweden, pp 885–892

Turtinen M, Pietikäinen M (2006) Contextual analysis of textured scene images. In: Proceedings of British Machine Vision Conference. Edinburgh, UK, pp 849–858

Qian X, Hua X-S, Chen P, Ke L (2011) PLBP: an effective local binary patterns texture descriptor with pyramid representation. Pattern Recognit 44(10):2502–2515

Nanni L, Lumini A, Brahnam S (2010) Local binary patterns variants as texture descriptors for medical image analysis. Artif Intell Med 49(2):117–125

He Y, Sang N, Gao C (2010) Pyramid-based multi-structure local binary pattern for texture classification. In: Proceedings of Asian Conference on Computer Vision. Queenstown, New Zealand, pp 133–144

Lowe D (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Lazebnik S, Schmid C, Ponce J (2005) A sparse texture representation using local affine regions. IEEE Trans Pattern Anal Mach Intell 27(8):1265–1278

Acknowledgments

The authors would like to thank MVG and VGG for sharing the LBP code and the VZ_MR8 code, respectively. The authors also thank Zhenhua Guo, Lei Zhang and David Zhang for sharing the source code of LBPV and CLBP. This work was supported by the Chinese National 863 Grant No. 2009AA12Z109. The authors would like to thank the anonymous referees for their useful suggestions. Researchers may e-mail the authors to obtain the code of the proposed method for study.

Cite this article

He, Y., Sang, N. & Gao, C. Multi-structure local binary patterns for texture classification. Pattern Anal Applic 16, 595–607 (2013). https://doi.org/10.1007/s10044-011-0264-4

DOI: https://doi.org/10.1007/s10044-011-0264-4