Abstract

This paper establishes a non-stochastic analog of the celebrated result by Dubins and Schwarz about reduction of continuous martingales to Brownian motion via time change. We consider an idealized financial security with continuous price paths, without making any stochastic assumptions. It is shown that typical price paths possess quadratic variation, where “typical” is understood in the following game-theoretic sense: there exists a trading strategy that earns infinite capital without risking more than one monetary unit if the process of quadratic variation does not exist. Replacing time by the quadratic variation process, we show that the price path becomes Brownian motion. This is essentially the same conclusion as in the Dubins–Schwarz result, except that the probabilities (constituting the Wiener measure) emerge instead of being postulated. We also give an elegant statement, inspired by Peter McCullagh’s unpublished work, of this result in terms of game-theoretic probability theory.


1 Introduction

This paper is a contribution to the game-theoretic approach to probability. This approach was explored (by e.g. von Mises, Wald and Ville) as a possible basis for probability theory at the same time as the now standard measure-theoretic approach (Kolmogorov), but then became dormant. The current revival of interest in it started with A.P. Dawid’s prequential principle ([15], Sect. 5.1, [16], Sect. 3), and recent work on game-theoretic probability includes monographs [55, 59] and papers [32, 36–39, 61].

The treatment of continuous-time processes in game-theoretic probability often involves nonstandard analysis (see e.g. [55], Chaps. 11–14). The recent paper [60] suggested avoiding nonstandard analysis and introduced the key technique of “high-frequency limit order strategies,” also used in this paper and its predecessors, [67] and [65].

An advantage of game-theoretic probability is that one does not have to start with a full-fledged probability measure from the outset to arrive at interesting conclusions, even in the case of continuous time. For example, ref. [67] shows that continuous price paths satisfy many standard properties of Brownian motion (such as the absence of isolated zeroes) and ref. [65] (developing [68] and [60]) shows that the variation index of a non-constant continuous price path is 2, as in the case of Brownian motion. The standard qualification “with probability one” is replaced with “unless a specific trading strategy increases manyfold the capital it risks” (the formal definitions, assuming zero interest rate, will be given in Sect. 2). This paper makes the next step, showing that the Wiener measure emerges in a natural way in the continuous trading protocol. Its main result contains all main results of [65, 67], together with several refinements, as special cases.

Other results about the emergence of the Wiener measure in game-theoretic probability can be found in [64] and [66]. However, the protocols of those papers are much more restrictive, involving an externally given quadratic variation (a game-theoretic analog of predictable quadratic variation, generally chosen by a player called Forecaster). In the present paper, the Wiener measure emerges in a situation with surprisingly little a priori structure, involving only two players: the market and a trader.

The reader will notice that not only our main result but also many of our definitions resemble those in Dubins and Schwarz’s paper [20], which can be regarded as the measure-theoretic counterpart of this paper. The main difference of this paper is that we do not assume a given probability measure from the outset. A less important difference is that our main result will not assume that the price path is unbounded and nowhere constant (among other things, this generalization is important to include the main results of [65, 67] as special cases). A result similar to that of Dubins and Schwarz was almost simultaneously proved by Dambis [11]; however, Dambis, unlike Dubins and Schwarz, dealt with predictable quadratic variation, and his result can be regarded as the measure-theoretic counterpart of [64] and [66].

Another related result is the well-known observation (see e.g. [27], Theorem 5.39) that in the binomial model of a financial market, every contingent claim can be replicated by a self-financing portfolio whose initial price is the expected value (suitably discounted if the interest rate is not zero) of the payoff function with respect to the risk-neutral probability measure. This insight is, essentially, extended in this paper to the case of an incomplete market (the price for completeness in the binomial model is the artificial assumption that at each step the price can only go up or down by specified factors) and continuous time (continuous-time mathematical finance usually starts from an underlying probability measure, with some notable exceptions discussed in Sect. 12).

This paper’s definitions and results have many connections with several other areas of finance and stochastics, including stochastic integration, the fundamental theorems of asset pricing, and model-free option pricing. These will be discussed in Sect. 12.

The main part of the paper starts with the description of our continuous-time trading protocol and the definition of game-theoretic versions of the notion of probability (outer and inner content) in Sect. 2. In Sect. 3 we state our main result (Theorem 3.1), which becomes especially intuitive if we restrict our attention to the case of the initial price equal to 0 and price paths that do not converge to a finite value and are nowhere constant: the outer and the inner content of any event that is invariant with respect to time transformations then exist and coincide between themselves and with its Wiener measure (Corollary 3.7). This simple statement was made possible by Peter McCullagh’s unpublished work on Fisher’s fiducial probability: McCullagh’s idea was that fiducial probability is only defined on the σ-algebra of events invariant with respect to a certain group of transformations. Section 4 presents several applications (connected with [67] and [65]) demonstrating the power of Theorem 3.1. The fact that typical price paths possess quadratic variation is proved in Sect. 8. It is, however, used earlier, in Sect. 5, where it allows us to state a constructive version of Theorem 3.1. The constructive version, Theorem 5.1, says that replacing time by the quadratic variation process turns the price path into Brownian motion. In Sect. 6 we state generalizations, from events to positive bounded measurable functions, of Theorem 3.1 and part of Theorem 5.1; these are Theorems 6.2 and 6.4, respectively. The easy directions in Theorems 6.2 and 6.4 are proved in the same section. Sections 7 and 9 prove part of Theorem 5.1 and prepare the ground for the proof of the remaining parts of Theorems 5.1 and 6.4 (in Sect. 10) and Theorem 6.2 (in Sect. 11). Section 12 continues the general discussion started in this section.

Words such as “positive,” “negative,” “before,” “after,” “increasing,” and “decreasing” will be understood in the wide sense of ≥ or ≤, as appropriate; when necessary, we add the qualifier “strictly.” As usual, C(E) is the space of all continuous functions on a topological space E equipped with the sup norm. We often omit the parentheses around E in expressions such as C[0,T]:=C([0,T]).

2 Outer content in a financial context

We consider a game between two players, Reality (a financial market) and Sceptic (a trader), over the time interval [0,∞). First Sceptic chooses his trading strategy and then Reality chooses a continuous function ω:[0,∞)→ℝ (the price path of a security).

Let Ω be the set of all continuous functions ω:[0,∞)→ℝ. For each t∈[0,∞), \(\mathcal{F}_{t}\) is defined to be the smallest σ-algebra that makes all functions ω↦ω(s), s∈[0,t], measurable. A process \(\mathfrak{S}\) is a family of functions \(\mathfrak{S}_{t}:\varOmega\to[-\infty,\infty]\), t∈[0,∞), each \(\mathfrak{S}_{t}\) being \(\mathcal{F}_{t}\)-measurable; its sample paths are the functions \(t\mapsto\mathfrak{S}_{t}(\omega)\). An event is an element of the σ-algebra \(\mathcal{F}_{\infty}:=\bigvee_{t\in[0,\infty)}\mathcal{F}_{t}\), also denoted by \(\mathcal{F}\). (We often consider arbitrary subsets of Ω as well.) Stopping times τ:Ω→[0,∞] with respect to the filtration \((\mathcal{F}_{t})\) and the corresponding σ-algebras \(\mathcal{F}_{\tau}\) are defined as usual; ω(τ(ω)) and \(\mathfrak{S}_{\tau(\omega)}(\omega)\) will be simplified to ω(τ) and \(\mathfrak{S}_{\tau}(\omega)\), respectively (occasionally, the argument ω will be omitted in other cases as well).

The class of allowed strategies for Sceptic is defined in two steps. A simple trading strategy G consists of an increasing sequence of stopping times \(\tau_{1}\le\tau_{2}\le\cdots\) and, for each n=1,2,…, a bounded \(\mathcal{F}_{\tau_{n}}\)-measurable function \(h_{n}\). It is required that for each ω∈Ω, \(\lim_{n\to\infty}\tau_{n}(\omega)=\infty\). To such a G and an initial capital c∈ℝ corresponds the simple capital process

\[
\mathfrak{K}^{G,c}_{t}(\omega):=c+\sum_{n=1}^{\infty}h_{n}(\omega)\bigl(\omega(\tau_{n+1}\wedge t)-\omega(\tau_{n}\wedge t)\bigr),\quad t\in[0,\infty) \tag{2.1}
\]

(with the zero terms in the sum ignored, which makes the sum finite for each t); the value \(h_{n}(\omega)\) will be called Sceptic’s bet (or bet on ω, or stake) at time \(\tau_{n}\), and \(\mathfrak{K}^{G,c}_{t}(\omega)\) will be referred to as Sceptic’s capital at time t.
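To make the bookkeeping concrete, the following Python sketch evaluates Sceptic’s capital \(c+\sum_{n}h_{n}(\omega)(\omega(\tau_{n+1}\wedge t)-\omega(\tau_{n}\wedge t))\) on a sampled price path. It is only an illustration under assumptions that are not part of the protocol: the path is known at finitely many grid times, the stopping times are given as grid indices, and there are finitely many bets, the last one being closed at the final stopping time (so all later bets are zero). The function name `simple_capital` is hypothetical.

```python
def simple_capital(path, tau_idx, bets, c):
    """Sceptic's capital c + sum_n h_n * (omega(tau_{n+1} ^ t) - omega(tau_n ^ t)) at each grid index t.

    path    -- path[k] is the price omega at the k-th grid time (hypothetical discretization)
    tau_idx -- increasing grid indices of the stopping times tau_1 <= ... <= tau_{N+1}
    bets    -- bets[n] is the bet held from tau_idx[n] to tau_idx[n + 1]
    c       -- initial capital
    """
    if len(tau_idx) != len(bets) + 1:
        raise ValueError("need one more stopping time than bets")
    capital = []
    for k in range(len(path)):
        total = c
        for n, h in enumerate(bets):
            # min(tau, k) plays the role of tau ^ t; the term vanishes while t <= tau_n
            total += h * (path[min(tau_idx[n + 1], k)] - path[min(tau_idx[n], k)])
        capital.append(total)
    return capital

print(simple_capital([0, 1, 3, 2, 5], tau_idx=[1, 3], bets=[2], c=10))
# [10, 10, 14, 12, 12]
```

In the usage example the bet 2 is held between grid indices 1 and 3, so the capital tracks twice the price change over that stretch and is frozen afterwards.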

A positive capital process is any process \(\mathfrak{S}\) that can be represented in the form

\[
\mathfrak{S}_{t}(\omega):=\sum_{n=1}^{\infty}\mathfrak{K}^{G_{n},c_{n}}_{t}(\omega), \tag{2.2}
\]

where the simple capital processes \(\mathfrak{K}^{G_{n},c_{n}}_{t}(\omega)\) are required to be positive, for all t and ω, and the positive series \(\sum_{n=1}^{\infty}c_{n}\) is required to converge. The sum (2.2) is always positive but allowed to take value ∞. Since \(\mathfrak{K}^{G_{n},c_{n}}_{0}(\omega)=c_{n}\) does not depend on ω, \(\mathfrak{S}_{0}(\omega)\) also does not depend on ω and will sometimes be abbreviated to \(\mathfrak{S}_{0}\).

Remark 2.1

The financial interpretation of a positive capital process (2.2) is that it represents the total capital of a trader who splits his initial capital into a countable number of accounts, and on each account runs a simple trading strategy making sure that this account never goes into debit.

The outer content of a set E⊆Ω (not necessarily \(E\in\mathcal{F}\)) is defined as

\[
\operatorname{\overline{\mathbb{P}}}(E):=\inf\Bigl\{\mathfrak{S}_{0}\Bigm|\forall\omega\in\varOmega:\ \liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\ge\operatorname{\boldsymbol{1}}_{E}(\omega)\Bigr\}, \tag{2.3}
\]

where \(\mathfrak{S}\) ranges over the positive capital processes and \(\operatorname{\boldsymbol{1}}_{E}\) stands for the indicator function of E. In the financial terminology (and ignoring the fact that the inf in (2.3) need not be attained), \(\operatorname{\overline{\mathbb{P}}}(E)\) is the price of the cheapest superhedge for the European contingent claim paying \(\operatorname{\boldsymbol{1}}_{E}\) at time ∞. It is easy to see that the \(\liminf_{t\to\infty}\) in (2.3) can be replaced by \(\sup_{t}\) (and, therefore, by \(\limsup_{t\to\infty}\)): we can always stop (i.e., set all bets to 0) when \(\mathfrak{S}\) reaches the level 1 (or a level arbitrarily close to 1).

We say that a set E⊆Ω is null if \(\operatorname{\overline{\mathbb{P}}}(E)=0\). If E is null, there is a positive capital process \(\mathfrak{S}\) such that \(\mathfrak{S}_{0}=1\) and \(\lim_{t\to\infty}\mathfrak{S}_{t}(\omega)=\infty\) for all ω∈E (it suffices to sum over ϵ=1/2,1/4,… positive capital processes \(\mathfrak{S}^{\epsilon}\) satisfying \(\mathfrak{S}_{0}^{\epsilon}=\epsilon\) and \(\liminf_{t\to\infty}\mathfrak{S}_{t}^{\epsilon}\ge \operatorname{\boldsymbol{1}}_{E}\)). A property of ω∈Ω will be said to hold for typical ω if the set of ω where it fails is null. Correspondingly, a set E⊆Ω is full if \(\operatorname{\overline{\mathbb{P}}}(E^{c})=0\), where \(E^{c}:=\varOmega\setminus E\) stands for the complement of E.
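The summation in the parentheses above can be written out as follows. This is a sketch of the standard argument; it uses only that a countable sum of positive capital processes whose initial capitals form a convergent series is again a positive capital process, and that, by positivity, the lim inf of such a sum is at least the sum of the lim infs of any finitely many of its terms.

```latex
\[
\mathfrak{S}:=\sum_{k=1}^{\infty}\mathfrak{S}^{2^{-k}},
\qquad
\mathfrak{S}_{0}=\sum_{k=1}^{\infty}2^{-k}=1,
\]
\[
\omega\in E\;\Longrightarrow\;
\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)
\ge\sum_{k=1}^{K}\liminf_{t\to\infty}\mathfrak{S}^{2^{-k}}_{t}(\omega)
\ge K
\quad\text{for every }K\in\{1,2,\ldots\}.
\]
```

Since K is arbitrary, \(\lim_{t\to\infty}\mathfrak{S}_{t}(\omega)=\infty\) for all ω∈E.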

We can also define inner content by

\[
\operatorname{\underline{\mathbb{P}}}(E):=1-\operatorname{\overline{\mathbb{P}}}(E^{c})
\]

(intuitively, this is the price of the most expensive subhedge of \(\operatorname{\boldsymbol{1}}_{E}\)). This notion of inner content will not be useful in this paper (but a simple modification will be).

Remark 2.2

Another natural setting is where Ω is defined as the set of all continuous functions ω:[0,T]→ℝ for a given constant T (the time horizon). In this case the definition of outer content simplifies: instead of \(\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\), we have simply \(\mathfrak{S}_{T}(\omega)\) in (2.3).

Remark 2.3

Alternative names (used in e.g. [55]) for outer and inner content are upper and lower probability in the case of sets and upper and lower expectation in the case of functions (the latter case will be considered in Sect. 6). Our terminology essentially follows refs. [31] and [56], but we drop “probability” in outer/inner probability content. We also avoid expressions such as “for almost all” and “almost surely.” Hopefully, this terminology will remind the reader that we do not start from a probability measure on Ω. For terminology used in the finance literature, see Sect. 12.

3 Main result: abstract version

A time transformation is defined to be a continuous increasing (not necessarily strictly increasing) function f:[0,∞)→[0,∞) satisfying f(0)=0. Equipped with the binary operation of composition, (f∘g)(t):=f(g(t)), t∈[0,∞), the time transformations form a (non-commutative) monoid, with the identity time transformation t↦t as the unit. The action of a time transformation f on ω∈Ω is defined to be the composition \(\omega^{f}:=\omega\circ f\in\varOmega\), \((\omega\circ f)(t):=\omega(f(t))\). The trail of ω∈Ω is the set of all ψ∈Ω such that \(\psi^{f}=\omega\) for some time transformation f. (These notions are often defined for groups rather than monoids: see e.g. [47]; in this case the trail is called the orbit. In their “time-free” considerations, Dubins and Schwarz [20, 53, 54] make simplifying assumptions that make the monoid of time transformations a group; we make similar assumptions in Corollary 3.7.) A subset E of Ω is time-superinvariant if, together with any of its elements ω, it contains the whole trail of ω; in other words, if for each ω∈Ω and each time transformation f, it is true that

\[
\omega^{f}\in E\;\Longrightarrow\;\omega\in E. \tag{3.1}
\]
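A minimal Python sketch of the action ω↦ω∘f and of composition of time transformations. The particular functions `f`, `g`, `zero` and the choice ω = sin are arbitrary illustrations, and checking identities on a finite grid is of course not a proof. The sketch checks that acting by f and then by g gives the same path as acting by f∘g, and that the zero time transformation maps every ω to the constant ω(0), so that every ψ with ψ(0)=c lies in the trail of the constant path c.

```python
import math

def act(omega, f):
    """Action of a time transformation f on a path omega: omega^f = omega o f."""
    return lambda t: omega(f(t))

def compose(f, g):
    """Monoid operation on time transformations: (f o g)(t) = f(g(t))."""
    return lambda t: f(g(t))

# Illustrative time transformations (continuous, increasing, vanishing at 0).
f = lambda t: min(t, 2.0)   # bounded and constant after time 2 (not strictly increasing)
g = lambda t: t * t         # strictly increasing and unbounded
zero = lambda t: 0.0        # the zero time transformation
omega = math.sin            # an illustrative continuous price path

grid = [0.1 * k for k in range(50)]

# Acting by f and then by g is the same as acting by f o g.
lhs = [act(act(omega, f), g)(t) for t in grid]
rhs = [act(omega, compose(f, g))(t) for t in grid]
print(lhs == rhs)  # True

# The zero time transformation collapses any path to the constant omega(0).
print(all(act(omega, zero)(t) == omega(0.0) for t in grid))  # True
```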

The time-superinvariant class \(\mathcal{K}\) is defined to be the family of those events (elements of \(\mathcal{F}\)) that are time-superinvariant.

Let c∈ℝ. The probability measure \(\mathcal{W}_{c}\) on Ω is defined by the conditions that ω(0)=c with probability one and, for all 0≤s<t, ω(t)−ω(s) is independent of \(\mathcal{F}_{s}\) and has the Gaussian distribution with mean 0 and variance t−s. (In other words, \(\mathcal{W}_{c}\) is the distribution of Brownian motion started at c.) In this paper, we rely on the classical arguments for the existence of \(\mathcal{W}_{c}\) (see e.g. [35], Chap. 2).
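The defining conditions of the distribution of Brownian motion started at c translate directly into a sampler for the values of ω on a finite time grid: start at c and add independent Gaussian increments whose variance equals the time step. The sketch below (the function name `brownian_path` is ours) only samples finite-dimensional distributions; it does not construct the measure on Ω, for which the classical arguments cited above are needed.

```python
import random

def brownian_path(c, times, rng):
    """Sample omega(t_0), ..., omega(t_n) for Brownian motion started at c.

    `times` must be increasing with times[0] == 0; `rng` is a random.Random instance.
    """
    values = [c]                                   # omega(0) = c with probability one
    for s, t in zip(times, times[1:]):
        # omega(t) - omega(s) is N(0, t - s) and independent of the earlier values
        values.append(values[-1] + rng.gauss(0.0, (t - s) ** 0.5))
    return values

rng = random.Random(0)
times = [k / 100 for k in range(101)]   # grid on [0, 1]
path = brownian_path(1.5, times, rng)
print(len(path), path[0])               # 101 1.5
```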

Theorem 3.1

Let c∈ℝ. Each event \(E\in\mathcal{K}\) such that ω(0)=c for all ω∈E satisfies

\[
\operatorname{\overline{\mathbb{P}}}(E)=\mathcal{W}_{c}(E). \tag{3.2}
\]

The main part of (3.2) is the inequality ≤, whose proof will occupy us in Sects. 7–11. The easy part ≥ will be established in Sect. 6.

Remark 3.2

The time-superinvariant class \(\mathcal{K}\) is closed under countable unions and intersections; in particular, it is a monotone class. However, it is not closed under complementation, and so is not a σ-algebra (unlike McCullagh’s invariant σ-algebras). An example of a time-superinvariant event E such that \(E^{c}\) is not time-superinvariant is the set of all increasing (not necessarily strictly increasing) ω∈Ω satisfying \(\lim_{t\to\infty}\omega(t)=\infty\): the implication (3.1) is violated when ω is the identity function (i.e., ω(t)=t for all t), f=0, and we have \(E^{c}\) in place of E (indeed, \(\omega^{f}\) is then the constant 0, which belongs to \(E^{c}\), whereas ω does not).

Remark 3.3

This remark explains the intuitive meaning of time-superinvariance. Let f be a time transformation. Transforming ω into \(\omega^{f}\) is either trivial (ω is replaced by the constant ω(0), if f=0) or can be split into three steps: (a) remove [T,∞) from the domain of ω, i.e., transform ω into \(\omega':=\omega|_{[0,T)}\), for some T∈(0,∞] (namely, \(T:=\lim_{t\to\infty}f(t)\)); (b) continuously deform the time interval [0,T) into [0,T′) for some T′∈(0,∞], i.e., transform ω′ into ω″∈C[0,T′) defined by ω″(t):=ω′(g(t)) for some increasing homeomorphism g:[0,T′)→[0,T) (e.g., the graph of g can be obtained from the graph of f by removing all horizontal pieces); (c) insert countably many (perhaps a finite number of, perhaps zero) horizontal pieces into the graph of ω″ making sure to obtain an element of Ω (inserting a horizontal piece means replacing ψ with

\[
t\mapsto\begin{cases}\psi(t)&\text{if }t\le a,\\ \psi(a)&\text{if }a\le t\le b,\\ \psi(t-b+a)&\text{if }t\ge b\end{cases}
\]

for some a and b, a<b, in the domain of ψ, or with

\[
t\mapsto\begin{cases}\psi(t)&\text{if }t<c,\\ \lim_{s\to c}\psi(s)&\text{if }t\ge c\end{cases}
\]

if the domain of ψ is [0,c) for some c<∞ and \(\lim_{s\to c}\psi(s)\) exists in ℝ). Therefore, the trail of ω∈Ω consists of all elements of Ω that can be obtained from ω by an application of the following steps: (a) remove any number of horizontal pieces from the graph of ω; let [0,T) be the domain of the resulting function ω′ (it is possible that T<∞; if T=0, output any ω″∈Ω satisfying ω″(0)=ω(0)); (b) assuming T>0, continuously deform the time interval [0,T) into [0,T′) for some T′∈(0,∞]; let ω″ be the resulting function with the domain [0,T′); (c) if T′=∞, output ω″; if T′<∞ and \(\lim_{t\to T'}\omega''(t)\) exists in ℝ, extend ω″ to [0,∞) in any way making sure that the extension belongs to Ω and output the extension; otherwise, nothing is output. A set E is time-superinvariant if and only if application of these last three steps, (a)–(c), never leads outside E.
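Inserting a horizontal piece of length b−a at time a (follow ψ up to time a, stay at ψ(a) until time b, then continue along ψ shifted to the right by b−a) is itself the action of a time transformation, which is why it appears in step (c). The Python sketch below makes this explicit for insertions in the interior of the domain; the helper names are hypothetical.

```python
def flat_insertion_time_change(a, b):
    """Time transformation f: identity on [0, a], equal to a on [a, b], t - (b - a) after b."""
    if not 0 <= a < b:
        raise ValueError("need 0 <= a < b")
    def f(t):
        if t <= a:
            return t
        if t <= b:
            return a
        return t - (b - a)
    return f

def insert_flat_piece(psi, a, b):
    """psi with a horizontal piece of length b - a inserted at time a, i.e. psi o f."""
    f = flat_insertion_time_change(a, b)
    return lambda t: psi(f(t))

psi = lambda t: t * t
flat = insert_flat_piece(psi, 1.0, 3.0)
print([flat(t) for t in (0.5, 2.0, 3.0, 4.0)])  # [0.25, 1.0, 1.0, 4.0]
```

Because the flattened path equals ψ composed with a time transformation, ψ belongs to the trail of the flattened path.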

Remark 3.4

By the Dubins–Schwarz result [20] and Lemma 3.5 below, we can replace \(\mathcal{W}_{c}\) in the statement of Theorem 3.1 by any probability measure P on \((\varOmega,\mathcal{F})\) such that the process \(X_{t}(\omega):=\omega(t)\) is a martingale with respect to P and the filtration \((\mathcal{F}_{t})\), is unbounded P-a.s., is nowhere constant P-a.s., and satisfies \(X_{0}=c\) P-a.s.

Because of its generality, some aspects of Theorem 3.1 may appear counterintuitive. (For example, the conditions we impose on E imply that E contains all ω∈Ω satisfying ω(0)=c whenever E contains the constant c.) In the rest of this section, we specialize Theorem 3.1 to the more intuitive case of divergent and nowhere constant price paths.

Formally, we say that ω∈Ω is nowhere constant if there is no interval \((t_{1},t_{2})\), where \(0\le t_{1}<t_{2}\), such that ω is constant on \((t_{1},t_{2})\); we say that ω is divergent if there is no c∈ℝ such that \(\lim_{t\to\infty}\omega(t)=c\); and we let \(\operatorname{DS}\subseteq\varOmega\) stand for the set of all ω∈Ω that are divergent and nowhere constant. Intuitively, the condition that the price path ω should be nowhere constant means that trading never stops completely, and the condition that ω should be divergent will be satisfied if ω’s volatility does not eventually die away (cf. Remark 5.2 in Sect. 5 below). The conditions of being divergent and nowhere constant in the definition of \(\operatorname{DS}\) are similar to, but weaker than, Dubins and Schwarz’s [20] conditions of being unbounded and nowhere constant.

All unbounded and strictly increasing time transformations f:[0,∞)→[0,∞) form a group, which will be denoted \(\mathcal{G}\). Let us say that an event E is time-invariant if it contains the whole orbit \(\{\omega^{f}\mid f\in\mathcal{G}\}\) of each of its elements ω∈E. It is clear that \(\operatorname{DS}\) is time-invariant. Unlike \(\mathcal{K}\), the time-invariant events form a σ-algebra: \(E^{c}\) is time-invariant whenever E is (cf. Remark 3.2).

The following two lemmas will be needed to specialize Theorem 3.1 to subsets of \(\operatorname{DS}\). First of all, it is not difficult to see that for subsets of \(\operatorname{DS}\), there is no difference between time-invariance and time-superinvariance (which makes the notion of time-superinvariance much more intuitive for subsets of \(\operatorname{DS}\)).

Lemma 3.5

An event \(E\subseteq \operatorname{DS}\) is time-superinvariant if and only if it is time-invariant.

Proof

If E (not necessarily \(E\subseteq \operatorname{DS}\)) is time-superinvariant, ω∈E and \(f\in\mathcal{G}\), we have \(\psi:=\omega^{f}\in E\) as \(\psi^{f^{-1}}=\omega\). Therefore, time-superinvariance always implies time-invariance.

For all ψ∈Ω and time transformations f, \(\psi^{f}\notin \operatorname{DS}\) unless \(f\in\mathcal{G}\) (if f is bounded, \(\psi^{f}\) converges to a finite limit, and if f is constant on some interval, so is \(\psi^{f}\)). Let \(E\subseteq \operatorname{DS}\) be time-invariant, ω∈E, f a time transformation, and \(\psi^{f}=\omega\). Since \(\psi^{f}\in \operatorname{DS}\), we have \(f\in\mathcal{G}\), and so \(\psi=\omega^{f^{-1}}\in E\). Therefore, time-invariance implies time-superinvariance for subsets of \(\operatorname{DS}\). □

Lemma 3.6

An event \(E\subseteq \operatorname{DS}\) is time-superinvariant if and only if \(\operatorname{DS}\setminus E\) is time-superinvariant.

Proof

This follows immediately from Lemma 3.5. □

For time-invariant events in \(\operatorname{DS}\), (3.2) can be strengthened to assert the coincidence of the outer and the inner content of E with \(\mathcal{W}_{c}(E)\). However, the notions of outer and inner content have to be modified slightly.

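For any B⊆Ω, a restricted version of outer content can be defined by

\[
\operatorname{\overline{\mathbb{P}}}(E;B):=\inf\Bigl\{\mathfrak{S}_{0}\Bigm|\forall\omega\in B:\ \liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\ge\operatorname{\boldsymbol{1}}_{E}(\omega)\Bigr\},
\]

with \(\mathfrak{S}\) again ranging over the positive capital processes. Intuitively, this is the definition obtained when Ω is replaced by B: we are told in advance that ω∈B. The corresponding restricted version of inner content is

\[
\operatorname{\underline{\mathbb{P}}}(E;B):=1-\operatorname{\overline{\mathbb{P}}}(E^{c};B).
\]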

We use these definitions only in the case where \(\operatorname{\overline{\mathbb{P}}}(B)=1\). Lemma 7.3 below shows that in this case \(\operatorname{\underline{\mathbb{P}}}(E;B)\le \operatorname{\overline{\mathbb{P}}}(E;B)\).

We say that \(\operatorname{\overline{\mathbb{P}}}(E;B)\) and \(\operatorname{\underline{\mathbb{P}}}(E;B)\) are restricted to B. It should be clear by now that these notions are not related to conditional probability \(\operatorname{\mathbb{P}}(E\mid B)\). Their analogs in measure-theoretic probability are the function \(E\mapsto \operatorname{\mathbb{P}}(E\cap B)\), in the case of outer content, and the function \(E\mapsto \operatorname{\mathbb{P}}(E\cup B^{c})\), in the case of inner content (assuming B is measurable). Both functions coincide with \(\operatorname{\mathbb{P}}\) when \(\operatorname{\mathbb{P}}(B)=1\).

We also use the restricted versions of the notions “null,” “for typical,” and “full.” For example, E being B-null means \(\operatorname{\overline{\mathbb{P}}}(E;B)=0\).

Theorem 3.1 immediately implies the following statement about the emergence of the Wiener measure in our trading protocol (another such statement, more general and constructive but also more complicated, will be given in Theorem 5.1(b)).

Corollary 3.7

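Let c∈ℝ. Each event \(E\in\mathcal{K}\) satisfies

\[
\operatorname{\overline{\mathbb{P}}}(E;\omega(0)=c,\operatorname{DS})=\operatorname{\underline{\mathbb{P}}}(E;\omega(0)=c,\operatorname{DS})=\mathcal{W}_{c}(E) \tag{3.3}
\]

(in this context, ω(0)=c stands for the event {ω∈Ω|ω(0)=c} and the comma stands for the intersection).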

Proof

The events \(E\cap \operatorname{DS}\cap\{\omega\mid \omega(0)=c\}\) and \(E^{c}\cap \operatorname{DS}\cap\{\omega\mid \omega(0)=c\}\) belong to \(\mathcal{K}\); for the first of them, this immediately follows from \(E\in\mathcal{K}\), \(\operatorname{DS}\cap\{\omega\mid\omega(0)=c\}\in\mathcal{K}\), and \(\mathcal{K}\) being closed under intersections (cf. Remark 3.2), and for the second, it suffices to notice that \(E^{c}\cap\operatorname{DS}\cap\{\omega\mid\omega(0)=c\}=\bigl(\operatorname{DS}\setminus(E\cap\operatorname{DS}\cap\{\omega\mid\omega(0)=c\})\bigr)\cap\{\omega\mid\omega(0)=c\}\) (cf. Lemma 3.6). Applying (3.2) to these two events, using \(\mathcal{W}_{c}(\operatorname{DS}\cap\{\omega\mid\omega(0)=c\})=1\), and making use of the inequality \(\operatorname{\underline{\mathbb{P}}}\le \operatorname{\overline{\mathbb{P}}}\) (cf. Lemma 7.3 and Eq. (7.1) below), we obtain

\[
\begin{aligned}
\mathcal{W}_{c}(E)
&=\operatorname{\overline{\mathbb{P}}}(E;\omega(0)=c,\operatorname{DS})
\ge\operatorname{\underline{\mathbb{P}}}(E;\omega(0)=c,\operatorname{DS})\\
&=1-\operatorname{\overline{\mathbb{P}}}(E^{c};\omega(0)=c,\operatorname{DS})
=1-\mathcal{W}_{c}(E^{c})
=\mathcal{W}_{c}(E).
\end{aligned}
\]

□

We can express the equality (3.3) by saying that the game-theoretic probability of E exists and is equal to \(\mathcal{W}_{c}(E)\) when we restrict our attention to ω in \(\operatorname{DS}\) satisfying ω(0)=c.

4 Applications

The main goal of this section is to demonstrate the power of Theorem 3.1; in particular, we shall see that it implies the main results of [67] and [65]. One corollary (Corollary 4.5) of Theorem 3.1 solves an open problem posed in [65], and two other corollaries (Corollaries 4.6 and 4.7) give much more precise results. At the end of the section, we draw the reader’s attention to several events such that Theorem 3.1 together with very simple game-theoretic arguments show that they are full, while the fact that they are full does not follow from Theorem 3.1 alone.

In this section, we deduce the main results of [67] and [65] and other results as corollaries of Theorem 3.1 and the corresponding results for measure-theoretic Brownian motion. It is, however, still important to have direct game-theoretic proofs such as those given in [65, 67]. This will be discussed in Remark 4.11.

The following obvious fact will be used constantly in this paper: restricted outer content is countably (in particular, finitely) subadditive. (Of course, this fact is obvious only because of our choice of definitions.)

Lemma 4.1

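For any B⊆Ω and any sequence of subsets \(E_{1},E_{2},\ldots\) of Ω,

\[
\operatorname{\overline{\mathbb{P}}}\Bigl(\bigcup_{n=1}^{\infty}E_{n};B\Bigr)\le\sum_{n=1}^{\infty}\operatorname{\overline{\mathbb{P}}}(E_{n};B).
\]

In particular, a countable union of B-null sets is B-null.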
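The subadditivity is obvious because a countable sum of positive capital processes with summable initial capitals is again a positive capital process. In slightly more detail (a sketch, assuming the right-hand side is finite, since otherwise there is nothing to prove): fix ϵ>0 and, for each n, choose a positive capital process \(\mathfrak{S}^{n}\) with \(\mathfrak{S}^{n}_{0}\le\operatorname{\overline{\mathbb{P}}}(E_{n};B)+\epsilon2^{-n}\) and \(\liminf_{t\to\infty}\mathfrak{S}^{n}_{t}(\omega)\ge\operatorname{\boldsymbol{1}}_{E_{n}}(\omega)\) for all ω∈B. Then

```latex
\[
\mathfrak{S}:=\sum_{n=1}^{\infty}\mathfrak{S}^{n},
\qquad
\mathfrak{S}_{0}\le\sum_{n=1}^{\infty}\operatorname{\overline{\mathbb{P}}}(E_{n};B)+\epsilon,
\]
\[
\omega\in B\cap E_{m}\;\Longrightarrow\;
\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)
\ge\liminf_{t\to\infty}\mathfrak{S}^{m}_{t}(\omega)\ge1,
\]
```

and the required inequality follows by letting ϵ→0 (for ω∈B outside every \(E_{n}\), the condition \(\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\ge0\) holds automatically by positivity).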

4.1 Points of increase

Let us say that t∈[0,∞) is a point of increase for ω∈Ω if there exists δ>0 such that \(\omega(t_{1})\le\omega(t)\le\omega(t_{2})\) for all \(t_{1}\in((t-\delta)^{+},t]\) and \(t_{2}\in[t,t+\delta)\). Points of decrease are defined in the same way except that \(\omega(t_{1})\le\omega(t)\le\omega(t_{2})\) is replaced by \(\omega(t_{1})\ge\omega(t)\ge\omega(t_{2})\). We say that ω is locally constant to the right of t∈[0,∞) if there exists δ>0 such that ω is constant over the interval [t,t+δ].

A slightly weaker form of the following corollary was proved directly (by adapting Burdzy’s [8] proof) in [67].

Corollary 4.2

Typical ω have no points t of increase or decrease such that ω is not locally constant to the right of t.

This result (without the clause about local constancy) was established by Dvoretzky, Erdős and Kakutani [24] for Brownian motion, and Dubins and Schwarz [20] noticed that their reduction of continuous martingales to Brownian motion shows that it continues to hold for all almost surely unbounded continuous martingales that are almost surely nowhere constant. We apply Dubins and Schwarz’s observation in the game-theoretic framework.

Proof of Corollary 4.2

Let us first consider only the ω∈Ω satisfying ω(0)=0. Consider the set E of all ω∈Ω that have points t of increase or decrease such that ω is not locally constant to the right of t and ω is not locally constant to the left of t (with the obvious definition of local constancy to the left of t; if t=0, every ω is locally constant to the left of t). Since E is time-superinvariant (cf. Remark 3.3), Theorem 3.1 and the Dvoretzky–Erdős–Kakutani result show that the event E is null. And the following standard game-theoretic argument (as in [67], Theorem 1) shows that the event that ω is locally constant to the left but not locally constant to the right of a point of increase or decrease is null. For concreteness, we consider the case of a point of increase. It suffices (see Lemma 4.1) to show that for all rational numbers b>a>0 and D>0, the event that

is null. The simple capital process that starts from ϵ>0, bets \(h_{1}:=1/D\) at \(\tau_{1}:=a\), and bets \(h_{2}:=0\) at time \(\tau_{2}:=\min\{t\ge a\mid\omega(t)\in\{\omega(a)-D\epsilon,\omega(a)+D\}\}\) is positive and turns ϵ (an arbitrarily small amount) into 1 when (4.1) happens. (Notice that this argument works both when t=0 and when t>0.)
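The simple capital process used in this argument can be simulated on a sampled path. For a continuous ω it stops exactly at ω(a)−Dϵ, where the capital is ϵ−ϵ=0, or at ω(a)+D, where it is ϵ+1≥1. The Python sketch below is illustrative only: its name and grid representation are hypothetical, and on a grid the price can jump past the two levels, so the sampled capital may overshoot them slightly.

```python
def stopped_bet_capital(path, a_idx, D, eps):
    """Capital of the strategy in the proof: start with eps, bet h_1 = 1/D at time a, and
    set the bet to h_2 = 0 once omega leaves (omega(a) - D*eps, omega(a) + D).

    path  -- sampled prices (hypothetical grid); a_idx -- grid index of time a
    """
    start = path[a_idx]
    capital = [eps] * (a_idx + 1)        # nothing is at stake up to and including time a
    value, stopped = eps, False
    for x in path[a_idx + 1:]:
        if not stopped:
            value = eps + (x - start) / D  # eps plus (1/D) times the price change since a
            stopped = x <= start - D * eps or x >= start + D
        capital.append(value)
    return capital

print(stopped_bet_capital([0.0, 0.0, 0.5, 1.0, 2.0, 3.0], a_idx=1, D=2.0, eps=0.25))
# [0.25, 0.25, 0.5, 0.75, 1.25, 1.25]
```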

It remains to get rid of the restriction ω(0)=0. Fix a positive capital process \(\mathfrak{S}\) satisfying \(\mathfrak{S}_{0}<\epsilon\) and reaching 1 on ω with ω(0)=0 that have at least one point t of increase or decrease such that ω is not locally constant to the right of t. Applying \(\mathfrak{S}\) to ω−ω(0) gives another positive capital process, which will achieve the same goal but without the restriction ω(0)=0. □

It is easy to see that the qualification about local constancy to the right of t in Corollary 4.2 is essential.

Proposition 4.3

The outer content of the following event is one: There is a point t of increase such that ω is locally constant to the right of t.

Proof

This proof uses Lemma 7.2 stated in Sect. 7 below. Consider the continuous martingale which is Brownian motion that starts at 0 and is stopped as soon as it reaches 1. □

4.2 Variation index

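For each interval [u,v]⊆[0,∞), each p∈(0,∞) and each ω∈Ω, the strong p-variation of ω over [u,v] is defined as

\[
\operatorname{v}_{p}^{[u,v]}(\omega):=\sup_{\kappa}\sum_{i=1}^{n_{\kappa}}\bigl|\omega(t_{i})-\omega(t_{i-1})\bigr|^{p},
\]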

where κ ranges over all partitions \(u=t_{0}\le t_{1}\le\cdots\le t_{n_{\kappa}}=v\) of the interval [u,v]. It is obvious that there exists a unique number \(\operatorname{vi}^{[u,v]}(\omega)\in[0,\infty]\), called the variation index of ω over [u,v], such that \(\operatorname{v}_{p}^{[u,v]}(\omega)\) is finite when \(p>\operatorname{vi}^{[u,v]}(\omega)\) and infinite when \(p<\operatorname{vi}^{[u,v]}(\omega)\); notice that \(\operatorname{vi}^{[u,v]}(\omega)\notin(0,1)\).
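For a path observed only at finitely many times, with the first observation at u and the last at v, one can take the supremum over partitions whose points are drawn from the observation times; this gives a lower bound for \(\operatorname{v}_{p}^{[u,v]}(\omega)\). A short dynamic program computes this discrete supremum exactly in O(n²) time. The sketch below is ours, not taken from the paper.

```python
def strong_p_variation(values, p):
    """Largest sum of |omega(t_i) - omega(t_{i-1})|^p over partitions whose points are
    sample times; values[0] and values[-1] are omega(u) and omega(v)."""
    best = [0.0] * len(values)   # best[j]: largest sum over partitions ending at sample j
    for j in range(1, len(values)):
        best[j] = max(best[i] + abs(values[j] - values[i]) ** p for i in range(j))
    return best[-1]

print(strong_p_variation([0, 1, 2], 2))    # 4.0: one big step beats two small ones when p > 1
print(strong_p_variation([0, 1, 2], 0.5))  # 2.0: refining the partition helps when p < 1
```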

The following result was obtained in [65] (by adapting Bruneau’s [7] proof); in measure-theoretic probability it was established by Lepingle ([40], Theorem 1 and Proposition 3) for continuous semimartingales and Lévy [41] for Brownian motion.

Corollary 4.4

For typical ω∈Ω, the following is true: For any interval [u,v]⊆[0,∞) such that u<v, either \(\operatorname{vi}^{[u,v]}(\omega)=2\) or ω is constant over [u,v].

(The interval [u,v] was assumed fixed in [65], but this assumption is easy to get rid of.)

Proof

Without loss of generality, we restrict our attention to the ω satisfying ω(0)=0 (see the proof of Corollary 4.2). Consider the set of ω∈Ω such that for some interval [u,v]⊆[0,∞), neither \(\operatorname{vi}^{[u,v]}(\omega)=2\) nor ω is constant over [u,v]. This set is time-superinvariant (cf. Remark 3.3), and so in conjunction with Theorem 3.1, Lévy’s result implies that it is null. □

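Corollary 4.4 says that, for typical ω, for every interval [u,v]⊆[0,∞) with u<v,

\[\operatorname{v}_{p}^{[u,v]}(\omega)<\infty\ \text{for all }p>2,\qquad\operatorname{v}_{p}^{[u,v]}(\omega)=\infty\ \text{for all }p<2\ \text{unless }\omega\text{ is constant over }[u,v].\]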

However, it does not say anything about the situation for p=2. The following result completes the picture (solving the problem posed in [65], Sect. 5).

Corollary 4.5

For typical ω∈Ω, the following is true: For any interval [u,v]⊆[0,∞) such that u<v, either \(\operatorname{v}^{[u,v]}_{2}(\omega)=\infty\) or ω is constant over [u,v].

Proof

Lévy [41] proves for Brownian motion that \(\operatorname{v}^{[u,v]}_{2}(\omega)=\infty\) almost surely (for fixed [u,v], which implies the statement for all [u,v]). Consider the set of ω∈Ω such that for some interval [u,v]⊆[0,∞), neither \(\operatorname{v}^{[u,v]}_{2}(\omega)=\infty\) nor ω is constant over [u,v]. This set is time-superinvariant, and so in conjunction with Theorem 3.1, Lévy’s result implies that it is null. □

4.3 More precise results

Theorem 3.1 allows us to deduce much stronger results than Corollaries 4.4 and 4.5 from known results about Brownian motion.

Define \(\ln^{*}u:=1\vee|\ln u|\), u>0, and let ψ:[0,∞)→[0,∞) be Taylor’s [62] function

\[\psi(u):=\frac{u^{2}}{2\ln^{*}\ln^{*}u}\]

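(with ψ(0):=0). For ω∈Ω, T∈[0,∞) and ϕ:[0,∞)→[0,∞), set

\[\operatorname{v}_{\phi,T}(\omega):=\sup_{\kappa}\sum_{i=1}^{n_{\kappa}}\phi\bigl(|\omega(t_{i})-\omega(t_{i-1})|\bigr),\]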

where κ ranges over all partitions \(0=t_{0}\le t_{1}\le\cdots\le t_{n_{\kappa}}=T\) of [0,T]. In the previous subsection we considered the case \(\phi(u):=u^{p}\); another interesting case is ϕ:=ψ. See [6] for a much more explicit expression for \(\operatorname{v}_{\psi,T}(\omega)\).
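The following Python sketch evaluates Taylor’s function, assuming the form ψ(u)=u²/(2 ln* ln* u) with ln* u=1∨|ln u| (the helper names are ours). It shows how slowly ψ(u)/u² tends to 0 as u→0. This is the statement ψ(u)=o(u²), which is the hypothesis of the second part of Corollary 4.6 in the case ϕ(u)=u².

```python
import math


def ln_star(u):
    """ln* u := max(1, |ln u|) for u > 0."""
    return max(1.0, abs(math.log(u)))


def taylor_psi(u):
    """Taylor's function psi(u) = u^2 / (2 ln* ln* u), with psi(0) := 0 (assumed form)."""
    if u == 0:
        return 0.0
    return u * u / (2.0 * ln_star(ln_star(u)))
```

For u in [e^{-e}, 1] the double logarithm is capped at 1 and ψ(u)=u²/2. The ratio ψ(u)/u² is about 0.16 at u=1e-10 and about 0.09 at u=1e-100.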

Corollary 4.6

For typical ω,

Suppose ϕ:[0,∞)→[0,∞) is such that ψ(u)=o(ϕ(u)) as u→0. For typical ω,

Corollary 4.6 refines Corollaries 4.4 and 4.5; it will be further strengthened by Corollary 4.7.

The quantity \(\operatorname{v}_{\psi,T}(\omega)\) is not nearly as fundamental as the following quantity introduced by Taylor [62]: for ω∈Ω and T∈[0,∞), set

\[\operatorname{w}_{T}(\omega):=\lim_{\delta\to0}\sup_{\kappa\in K_{\delta}[0,T]}\sum_{i=1}^{n_{\kappa}}\psi\bigl(|\omega(t_{i})-\omega(t_{i-1})|\bigr),\tag{4.3}\]

where \(K_{\delta}[0,T]\) is the set of all partitions \(0=t_{0}\le\cdots \le t_{n_{\kappa}}=T\) of [0,T] whose mesh is less than δ, i.e., \(\max_{i}(t_{i}-t_{i-1})<\delta\). Notice that the expression after \(\lim_{\delta\to0}\) in (4.3) is increasing in δ; therefore the limit exists and \(\operatorname{w}_{T}(\omega)\le \operatorname{v}_{\psi,T}(\omega)\).
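The inner supremum in (4.3) can be illustrated on sampled data. In the Python sketch below, the names are ours and ψ is again assumed to be u²/(2 ln* ln* u). Partitions are restricted to the sample times and to mesh less than δ, so the value is a lower bound for the inner supremum for any path through these samples. `None` signals that no admissible partition exists. Shrinking δ can only remove partitions, which is the monotonicity in δ noted above.

```python
import math


def taylor_psi(u):
    """Assumed form of Taylor's function: u^2 / (2 ln* ln* u), psi(0) = 0."""
    def ln_star(x):
        return max(1.0, abs(math.log(x)))
    if u == 0:
        return 0.0
    return u * u / (2.0 * ln_star(ln_star(u)))


def psi_sum_mesh(ts, xs, delta):
    """Sup of sum psi(|x_j - x_i|) over partitions of [ts[0], ts[-1]] that use only
    sample times and have mesh < delta; None if no such partition exists."""
    best = [None] * len(ts)
    best[0] = 0.0
    for j in range(1, len(ts)):
        cands = [best[i] + taylor_psi(abs(xs[j] - xs[i]))
                 for i in range(j)
                 if best[i] is not None and ts[j] - ts[i] < delta]
        best[j] = max(cands) if cands else None
    return best[-1]
```

For samples 0, 0.5, 1, 0.5, 0 at times 0, 1, 2, 3, 4, the value is 0.5 for δ=1.5 and 1.0 for δ=2.5, because the larger mesh lets the partition jump over the intermediate samples.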

The following corollary contains Corollaries 4.4–4.6 as special cases. It is similar to Corollary 4.6 but stated in terms of the process \(\operatorname{w}\).

Corollary 4.7

For typical ω,

Proof

First let us check that under the Wiener measure (4.4) holds for almost all ω. It is sufficient to prove that \(\operatorname{w}_{T}=T\) for all T∈[0,∞) a.s. Furthermore, it is sufficient to consider only rational T∈[0,∞). Therefore, it is sufficient to consider a fixed rational T∈[0,∞). And for a fixed T, \(\operatorname{w}_{T}=T\) a.s. follows from Taylor’s result ([62], Theorem 1).

As usual, let us restrict our attention to the case ω(0)=0. In view of Theorem 3.1, it suffices to check that the complement of the event (4.4) is time-superinvariant, i.e., to check (3.1), where E is the complement of (4.4). In other words, it suffices to check that \(\omega^{f}=\omega\circ f\) satisfies (4.4) whenever ω satisfies (4.4). This follows from Lemma 4.8 below, which says that \(\operatorname{w}_{T}(\omega\circ f)=\operatorname{w}_{f(T)}(\omega)\). □

Lemma 4.8

Let T∈[0,∞), ω∈Ω and f be a time transformation. Then we have \(\operatorname{w}_{T}(\omega\circ f)=\operatorname{w}_{f(T)}(\omega)\).

Proof

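Fix T∈[0,∞), ω∈Ω, a time transformation f and c∈[0,∞]. Our goal is to prove

\[\lim_{\delta\to0}\sup_{\kappa\in K_{\delta}[0,f(T)]}\sum_{i=1}^{n_{\kappa}}\psi\bigl(|\omega(t_{i})-\omega(t_{i-1})|\bigr)=c\;\Longrightarrow\;\lim_{\delta\to0}\sup_{\kappa\in K_{\delta}[0,T]}\sum_{i=1}^{n_{\kappa}}\psi\bigl(|\omega(f(t_{i}))-\omega(f(t_{i-1}))|\bigr)=c\tag{4.5}\]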

in the notation of (4.3). Suppose the antecedent in (4.5) holds. Notice that the two \(\lim_{\delta\to0}\) in (4.5) can be replaced by \(\inf_{\delta>0}\).

To prove that the limit on the right-hand side of (4.5) is ≤c, take any ϵ>0. We assume c<∞ (the case c=∞ is trivial). Let δ>0 be so small that

Let δ′>0 be so small that |t−t′|<δ′ implies that |f(t)−f(t′)|<δ. Since \(f(\kappa)\in K_{\delta}[0,f(T)]\) whenever \(\kappa\in K_{\delta'}[0,T]\),

To prove that the limit on the right-hand side of (4.5) is ≥c, take any ϵ>0 and δ′>0. We assume c<∞ (the case c=∞ can be considered analogously). Place a finite number N of points including 0 and T onto the interval [0,T] so that the distance between any pair of adjacent points is less than δ′; this set of points will be denoted \(\kappa_{0}\). Let δ>0 be so small that ψ(|ω(t″)−ω(t′)|)<ϵ/N whenever |t″−t′|<δ. Choose a partition \(\kappa=\{t_{0},\ldots,t_{n}\}\in K_{\delta}[0,f(T)]\) satisfying

Let \(\kappa'=\{t'_{0},\ldots,t'_{n}\}\) be a partition of the interval [0,T] satisfying f(κ′)=κ. This partition will satisfy

and the union \(\kappa''=\{t''_{0},\ldots,t''_{N+n}\}\) (with its elements listed in increasing order) of \(\kappa_{0}\) and κ′ will satisfy

Since \(\kappa''\in K_{\delta'}[0,T]\) and ϵ and δ′ can be taken arbitrarily small, this completes the proof. □

The value \(\operatorname{w}_{T}(\omega)\) defined by (4.3) can be interpreted as the quadratic variation of the price path ω over the time interval [0,T]. Another non-stochastic definition of quadratic variation (see (5.2)) will serve us in Sect. 5 as the basis for the proof of Theorem 3.1. For the equivalence of the two definitions, see Remark 5.6.

4.4 Limitations of Theorem 3.1

We said earlier that Theorem 3.1 implies the main result of [67] (see Corollary 4.2). This is true in the sense that the extra game-theoretic argument used in the proof of Corollary 4.2 was very simple. But this simple argument was essential: in this subsection, we shall see that Theorem 3.1 per se does not imply the full statement of Corollary 4.2.

Let c∈ℝ and E⊆Ω be such that ω(0)=c for all ω∈E. Suppose the set E is null. We can say that the equality \(\operatorname{\overline{\mathbb{P}}}(E)=0\) can be deduced from Theorem 3.1 and the properties of Brownian motion if (and only if) \(\overline{E}\) has probability zero under the distribution of Brownian motion started from c, where \(\overline{E}\) is the smallest time-superinvariant set containing E (it is clear that such a set exists and is unique). It would be nice if all equalities \(\operatorname{\overline{\mathbb{P}}}(E)=0\), for all null sets E satisfying ∀ω∈E:ω(0)=c, could be deduced from Theorem 3.1 and the properties of Brownian motion. We shall see later (Proposition 4.9) that this is not true even for some fundamental null events E; an example of such an event will now be given.


Let us say that a closed interval \([t_{1},t_{2}]\subseteq[0,\infty)\) is an interval of local maximum for ω∈Ω if (a) ω is constant on \([t_{1},t_{2}]\) but not constant on any larger interval containing \([t_{1},t_{2}]\), and (b) there exists δ>0 such that ω(s)≤ω(t) for all \(s\in((t_{1}-\delta)^{+},t_{1})\cup(t_{2},t_{2}+\delta)\) and all \(t\in[t_{1},t_{2}]\). In the case where \(t_{1}=t_{2}\), we say “point” instead of “interval.” It is shown in [67] (Corollary 3) that for typical ω, all intervals of local maximum are points; this also follows from Corollary 4.2, and is very easy to check directly (using the same argument as in the proof of Corollary 4.2). Let E be the null event that ω(0)=c and not all intervals of local maximum of ω are points. Proposition 4.9 says that \(\operatorname{\overline{\mathbb{P}}}(E)=0\) cannot be deduced from Theorem 3.1 and the properties of Brownian motion. This implies that Corollary 4.2 also cannot be deduced from Theorem 3.1 and the properties of Brownian motion, despite the fact that the deduction is possible with the help of a very easy game-theoretic argument.

Before stating and proving Proposition 4.9, we formally introduce the operator \(E\mapsto\overline{E}\) and show that it is a bona fide closure operator. For each E⊆Ω, \(\overline{E}\) is defined to be the union of the trails of all points in E. It can be checked that \(E\mapsto\overline{E}\) satisfies the standard properties of closure operators: \(\overline{\emptyset}=\emptyset\) and \(\overline{E_{1}\cup E_{2}}=\overline{E_{1}}\cup\overline{E_{2}}\) are obvious, and \(\overline{\overline{E}}=\overline{E}\) and \(E\subseteq\overline{E}\) follow from the fact that the time transformations constitute a monoid. Therefore ([25], Theorem 1.1.3 and Proposition 1.2.7), \(E\mapsto\overline{E}\) is the operator of closure in some topology on Ω, which may be called the time-superinvariant topology. A set E⊆Ω is closed in this topology if and only if it contains the trail of any of its elements.

Proposition 4.9

Let c∈ℝ and E be the set of all ω∈Ω such that ω(0)=c and ω has an interval of local maximum that is not a point. Then E and \(\overline{E}\) are events and

Proof

For the equality \(\operatorname{\overline{\mathbb{P}}}(E)=0\), see above. The equality \(\mathcal{W}_{c}(E)=0\) is a well-known fact (and follows from \(\operatorname{\overline{\mathbb{P}}}(E)=0\) and Lemma 6.3 below). It suffices to prove that \(\overline{E}\) is a time-superinvariant event and that \(\mathcal{W}_{c}(\overline{E})=1\); Theorem 3.1 will then imply \(\operatorname{\overline{\mathbb{P}}}(\overline{E})=1\). Both facts follow from the following explicit description of \(\overline{E}\): this set consists of all ω∈Ω with ω(0)=c that are not increasing functions. This can be seen from Remark 3.3 or from the following argument. If ω is increasing, \(\omega^{f}\) will also be increasing for any time transformation f. Combining this with (3.1) we can see that the set of all ω that are not increasing is time-superinvariant; since this set contains E, it also contains \(\overline{E}\). In the opposite direction, we are required to show that any ω∈Ω with ω(0)=c that is not increasing is an element of \(\overline{E}\), i.e., there exists a time transformation f such that \(\omega^{f}\in E\). Fix such ω and find 0≤a<b such that ω(a)>ω(b). Let m∈[0,b] be the smallest element of \(\operatorname{argmax}_{t\in[0,b]}\omega(t)\). Applying the time transformation

to ω, we obtain an element of E. □

Remark 4.10

Another event E that satisfies (4.6) is the set of all ω∈Ω such that ω(0)=c and ω has an interval of local maximum that is not a point, or has an interval of local minimum that is not a point (with the obvious definition of intervals and points of local minimum). Then \(\overline{E}\) is the event that consists of all non-constant ω with ω(0)=c. This is the largest possible \(\overline{E}\) for E satisfying \(\operatorname{\overline{\mathbb{P}}}(E)=0\) (provided we consider only ω with ω(0)=c): indeed, if the constant c is in \(\overline{E}\), c will also be in E, and so \(\operatorname{\overline{\mathbb{P}}}(E)=1\).

Proposition 4.9 shows that Theorem 3.1 does not make all other game-theoretic arguments redundant. What is interesting is that already very simple arguments suffice to deduce all results in [65, 67].

Remark 4.11

Theorem 3.1 does not make the game-theoretic arguments in [65, 67] redundant in another, perhaps even more important, respect either. For example, Corollary 4.2 is an existence result: it asserts the existence of a trading strategy whose capital process is positive and increases from 1 to ∞ when ω has a point t of increase or decrease such that ω is not locally constant to the right of t. In principle, such a strategy could be extracted from the proof of Theorem 3.1, but it would be extremely complicated and non-intuitive; the result would remain essentially an existence result. The proof of Theorem 2 in [67], on the contrary, constructs an explicit trading strategy exploiting the existence of points of increase or decrease. Similarly, the proof of Theorem 1 in [65] constructs an explicit trading strategy whose existence is asserted in Corollary 4.4. The recent paper [63] partially extends Corollary 4.4 to discontinuous price paths, showing that \(\operatorname{vi}^{[0,T]}(\omega)\le2\) for all T<∞ for typical ω. The trading strategy constructed in [63] for profiting from \(\operatorname{vi}^{[0,T]}(\omega)>2\) is especially intuitive: it just combines (following Stricker’s [58] idea) the strategies for profiting from \(\liminf_{t}\omega(t)<a<b<\limsup_{t}\omega(t)\) implicit in the standard proof of Doob’s martingale convergence theorem.

Remark 4.12

All results discussed in this section are about sets of outer content zero or inner content one, and one might suspect that the class of time-superinvariant events is so small that \(\operatorname{\overline{\mathbb{P}}}(E)\in\{0,1\}\) for all c∈ℝ and all time-superinvariant events E such that ω(0)=c when ω∈E; this would have been another limitation of Theorem 3.1. However, it is easy to check that for each p∈[0,1] and each c∈ℝ, there exists a time-superinvariant event E satisfying ω(0)=c for all ω∈E and \(\operatorname{\overline{\mathbb{P}}}(E)=p\). Indeed, without loss of generality we can take c:=p, and we can then define E to be the event that ω(0)=p, ω reaches levels 0 and 1, and ω reaches level 1 before reaching level 0.

5 Main result: constructive version

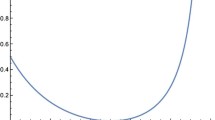

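For each n∈{0,1,…}, let \(\mathbb{D}_{n}:=\{k2^{-n}\mid k\in\mathbb{Z}\}\) and define a sequence of stopping times \(T^{n}_{k}\), k=−1,0,1,2,…, inductively by \(T^{n}_{-1}:=0\),

\[T^{n}_{0}(\omega):=\inf\{t\ge0\mid\omega(t)\in\mathbb{D}_{n}\},\qquad T^{n}_{k}(\omega):=\inf\bigl\{t\ge T^{n}_{k-1}(\omega)\mid\omega(t)\in\mathbb{D}_{n}\ \&\ \omega(t)\ne\omega(T^{n}_{k-1}(\omega))\bigr\},\quad k=1,2,\ldots\]

(as usual, inf∅:=∞). For each t∈[0,∞) and ω∈Ω, define

\[A^{n}_{t}(\omega):=\sum_{k=0}^{\infty}\bigl(\omega(T^{n}_{k}\wedge t)-\omega(T^{n}_{k-1}\wedge t)\bigr)^{2}\]

(cf. (4.2) with p=2) and set

\[\smash{\overline{A}}_{t}(\omega):=\limsup_{n\to\infty}A^{n}_{t}(\omega),\qquad\smash{\underline{A}}_{t}(\omega):=\liminf_{n\to\infty}A^{n}_{t}(\omega).\]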

We shall see later (Theorem 5.1(a)) that the event \(\{\forall t\in[0,\infty):\smash{\overline {A}}_{t}=\smash{\underline{A}}_{t}\}\) is full and that for typical ω the functions \(\smash{\overline{A}}(\omega):t\in[0,\infty)\mapsto\smash {\overline{A}}_{t}(\omega)\) and \(\smash{\underline{A}}(\omega):t\in[0,\infty)\mapsto\smash {\underline{A}}_{t}(\omega)\) are elements of Ω (in particular, they are finite). But in general we can only say that \(\smash{\overline{A}}(\omega)\) and \(\smash{\underline{A}}(\omega)\) are positive increasing functions (not necessarily strictly increasing) that can even take the value ∞. For each s∈[0,∞), define the stopping time
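As an illustration of the grid-based construction, the following Python sketch computes the hitting times \(T^{n}_{k}\) and the sums \(A^{n}_{t}\) for a path obtained by linearly interpolating finitely many samples. Linear interpolation is an assumption made only so that hitting times can be computed exactly. The sketch also assumes the convention that \(T^{n}_{0}\) is the first time the path enters \(\mathbb{D}_{n}\) and that each later \(T^{n}_{k}\) is the first time after \(T^{n}_{k-1}\) at which the path reaches a different point of \(\mathbb{D}_{n}\). All function names are hypothetical.

```python
import math


def dyadic_hits(path, n):
    """Return [(T^n_k, omega(T^n_k)), k = 0, 1, ...] for a piecewise-linear path.

    `path` is a list [(s_0, x_0), (s_1, x_1), ...] with s_0 = 0 and increasing times;
    the path is assumed to interpolate linearly between samples (illustration only).
    """
    h = 2.0 ** -n                                    # grid spacing of D_n
    s, x = path[0]
    hits = [(s, x)] if (x / h).is_integer() else []  # T^n_0 = 0 if omega(0) is in D_n
    last = x                                         # value at the latest hit (or omega(0))
    for (s0, x0), (s1, x1) in zip(path, path[1:]):
        while True:
            up = (math.floor(last / h) + 1) * h      # nearest grid point above `last`
            down = (math.ceil(last / h) - 1) * h     # nearest grid point below `last`
            if x1 >= up:
                target = up
            elif x1 <= down:
                target = down
            else:
                break                                # segment ends strictly between them
            s0 += (target - x0) / (x1 - x0) * (s1 - s0)  # crossing time on the segment
            x0 = last = target
            hits.append((s0, target))
    return hits


def value_at(path, t):
    """Linear interpolation of the samples; constant after the last sample."""
    for (s0, x0), (s1, x1) in zip(path, path[1:]):
        if s0 <= t <= s1:
            return x0 if s1 == s0 else x0 + (t - s0) / (s1 - s0) * (x1 - x0)
    return path[-1][1]


def a_n(path, n, t):
    """A^n_t = sum_k (omega(T^n_k ^ t) - omega(T^n_{k-1} ^ t))^2 with T^n_{-1} = 0."""
    total, prev = 0.0, path[0][1]
    for s, x in dyadic_hits(path, n):
        if s > t:
            break
        total += (x - prev) ** 2
        prev = x
    # the first hitting time after t contributes (omega(t) - omega(last hit))^2
    return total + (value_at(path, t) - prev) ** 2
```

For the straight line from 0 to 1 on [0,1], `a_n(path, n, 1.0)` equals \(2^{n}\cdot(2^{-n})^{2}=2^{-n}\), which tends to 0 as expected for a path of bounded variation.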

(We shall see in Lemma 8.3 that this is indeed a stopping time.) It will be convenient to use the following convention: an event stated in terms of \(A_{\infty}\), such as \(A_{\infty}=\infty\), happens if and only if \(\smash{\overline{A}}=\smash{\underline {A}}\in\varOmega\) and \(A_{\infty}:=\smash{\overline{A}}_{\infty}=\smash{\underline {A}}_{\infty}\) satisfies the given condition.

Let P be a function defined on the power set of Ω and taking values in [0,1] (such as \(\operatorname{\overline{\mathbb{P}}}\) or \(\operatorname{\underline{\mathbb{P}}}\)), and let f:Ω→Ψ be a mapping from Ω to another set Ψ. The pushforward \(Pf^{-1}\) of P by f is the function on the power set of Ψ defined by

\[(Pf^{-1})(E):=P\bigl(f^{-1}(E)\bigr),\qquad E\subseteq\Psi.\]
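The pushforward uses nothing beyond taking preimages. It therefore applies equally to set functions, such as outer content, that are not additive. A minimal Python sketch on a finite set (names ours):

```python
def pushforward(P, f, domain):
    """Return the set function E -> P(f^{-1}(E)) for a set function P on subsets of a
    finite `domain` and a map f defined on `domain`.  No additivity of P is used."""
    def pushed(E):
        preimage = frozenset(w for w in domain if f(w) in E)
        return P(preimage)
    return pushed
```

Take P(A)=1 for every non-empty A, a crude non-additive outer content. Its pushforward by the parity map on {1,2,3,4} assigns 1 both to {0} and to {1}.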

An especially important mapping for this paper is the normalizing time transformation \(\operatorname{ntt}:\varOmega\to\mathbb{R}^{[0,\infty)}\) defined as follows: for each ω∈Ω, \(\operatorname{ntt}(\omega)\) is the time-changed price path \(s\mapsto\omega(\tau_{s})\), s∈[0,∞), with ω(∞) set to, e.g., 0. (We call it “normalizing” since our goal is to ensure \(\smash{\overline{A}}_{t}(\operatorname{ntt}(\omega))=\smash{\underline {A}}_{t}(\operatorname{ntt}(\omega))=t\) for all t≥0 for typical ω.) For each c∈ℝ, let

(as before, the commas stand for conjunction in this context) be the pushforwards of the restricted outer and inner content

respectively, by the normalizing time transformation \(\operatorname{ntt}\).

As mentioned earlier, we use restricted outer and inner content \(\operatorname{\overline{\mathbb{P}}}(E;B)\) and \(\operatorname{\underline{\mathbb{P}}}(E;B)\) only when \(\operatorname{\overline{\mathbb{P}}}(B)=1\). In Sect. 7, (7.2), we shall see that indeed \(\operatorname{\overline{\mathbb{P}}}(\omega(0)=c,A_{\infty}=\infty)=1\).

The next theorem shows that the pushforwards of \(\operatorname{\overline{\mathbb{P}}}\) and \(\operatorname{\underline{\mathbb{P}}}\) we have just defined are closely connected with the Wiener measure. Remember that for each c∈ℝ, \(\mathcal{W}_{c}\) is the probability measure on \((\varOmega,\mathcal{F})\) which is the pushforward of the Wiener measure \(\mathcal{W}\) by the mapping ω∈Ω↦ω+c (i.e., \(\mathcal{W}_{c}\) is the distribution of Brownian motion over the time period [0,∞) started from c).

Theorem 5.1

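(a) For typical ω, the function

\[A(\omega):t\in[0,\infty)\mapsto A_{t}(\omega):=\smash{\overline{A}}_{t}(\omega)=\smash{\underline{A}}_{t}(\omega)\]

exists, is an increasing element of Ω with \(A_{0}(\omega)=0\), and has the same intervals of constancy as ω.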

(b) For all c∈ℝ, the restriction of both \(\smash{\overline{Q}}_{c}\) and \(\smash {\underline{Q}}_{c}\) to \(\mathcal{F}\) coincides with the measure \(\mathcal{W}_{c}\) on Ω (in particular, \(\smash{\underline{Q}}_{c}(\varOmega)=1\)).

Remark 5.2

The value \(A_{t}(\omega)\) can be interpreted as the total volatility of the price path ω over the time period [0,t]. Theorem 5.1(b) implies that typical ω satisfying \(A_{\infty}(\omega)=\infty\) are unbounded (in particular, divergent). If \(A_{\infty}(\omega)<\infty\), the total volatility \(A_{t+1}(\omega)-A_{t}(\omega)\) of ω over (t,t+1] tends to 0 as t→∞, and so the volatility of ω can be said to die away.

Remark 5.3

Theorem 5.1 continues to hold if the restriction “;ω(0)=c,\(A_{\infty}=\infty\))” in the definitions (5.4) and (5.5) is replaced by “;ω(0)=c,ω is unbounded)” (in analogy with [20]).

Remark 5.4

Theorem 5.1 depends on the arbitrary choice (\(\mathbb{D}_{n}\)) of the sequence of grids used to define the quadratic variation process A. To make this less arbitrary, we could consider all grids whose mesh tends to zero fast enough and which are definable in the standard language of set theory (similarly to Wald’s [69] suggested requirement for von Mises’s collectives). Dudley’s [21] result shows that the rate of convergence o(1/log n) of the mesh to zero is sufficient for Brownian motion and partitions of the horizontal (time) axis, and de la Vega’s [17] result shows that this rate is the slowest possible. It is an open question what the optimal rate of convergence is when quadratic variation is defined via partitions of the vertical axis, as in the present paper.

Remark 5.5

In this paper, we construct the quadratic variation A and define the stopping times \(\tau_{s}\) in terms of A. Dubins and Schwarz [20] construct \(\tau_{s}\) directly (in a very similar way to our construction of A). An advantage of our construction (the game-theoretic counterpart of that in [34]) is that the function A(ω) is continuous for typical ω, whereas the event that the function \(s\mapsto\tau_{s}(\omega)\) is continuous has inner content zero. (Dubins and Schwarz’s extra assumptions make this function continuous for almost all ω.)

Remark 5.6

Theorem 3.1 implies that the two notions of quadratic variation that we have discussed so far, \(\operatorname{w}_{t}(\omega)\) defined by (4.3) and \(A_{t}(\omega)\), coincide for all t for typical ω. Indeed, since \(\operatorname{w}_{t}=A_{t}=t\), ∀t∈[0,∞), holds almost surely in the case of Brownian motion (see Lemma 8.4 for \(A_{t}=t\)), it suffices to check that the complement of the event \(\{\forall t\in[0,\infty):\operatorname{w}_{t}=A_{t}\}\) is time-superinvariant. This follows from Lemma 4.8 and the analogous statement for A: if \(\operatorname{w}_{t}(\omega)=A_{t}(\omega)\) for all t, we also have

\[\operatorname{w}_{t}(\omega^{f})=\operatorname{w}_{f(t)}(\omega)=A_{f(t)}(\omega)=A_{t}(\omega^{f})\]

for all t.

6 Functional generalizations

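Theorems 3.1 and 5.1(b) are about outer content for sets, but the former and part of the latter can be generalized to cover the following more general notion of outer content for functionals, i.e., real-valued functions on Ω. The outer content of a positive functional F restricted to a set B⊆Ω is defined by

\[\operatorname{\overline{\mathbb{E}}}(F;B):=\inf\Bigl\{\mathfrak{S}_{0}\Bigm|\forall\omega\in B:\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\ge F(\omega)\Bigr\},\]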

where \(\mathfrak{S}\) ranges over the positive capital processes. This is the price of the cheapest positive superhedge for F when Reality is restricted to choosing ω∈B. Restricted outer content for functionals generalizes restricted outer content for sets: \(\operatorname{\overline{\mathbb{P}}}(E;B)=\operatorname{\overline{\mathbb{E}}}(\operatorname{\boldsymbol{1}}_{E};B)\) for all E⊆Ω. When B=Ω, we abbreviate \(\operatorname{\overline{\mathbb{E}}}(F;B)\) to \(\operatorname{\overline{\mathbb{E}}}(F)\) and refer to \(\operatorname{\overline{\mathbb{E}}}(F)\) as the outer content of F. Notice that \(\operatorname{\overline{\mathbb{E}}}(F;B)=\operatorname{\overline{\mathbb{E}}}(F\operatorname{\boldsymbol{1}}_{B})\).

Let us say that a positive functional F:Ω→[0,∞) is \(\mathcal{K}\)-measurable if for each constant c∈[0,∞), the set {ω∣F(ω)≥c} is in \(\mathcal{K}\). (We need to spell out this definition since \(\mathcal{K}\) is not a σ-algebra; cf. Remark 3.2.) Notice that the \(\mathcal{K}\)-measurability of F means that F is \(\mathcal{F}\)-measurable and, for each ω∈Ω and each time transformation f,

\[F(\omega^{f})\le F(\omega)\tag{6.2}\]

(cf. (3.1)).

Remark 6.1

The presence of ≤ in (6.2) is natural as, intuitively, transforming ω into \(\omega^{f}\) may involve cutting off part of ω (step (a) at the beginning of Remark 3.3). It is clear that \(F(\omega^{f})=F(\omega)\) when  .

In this paper, we shall in fact prove the following generalization of Theorem 3.1.

Theorem 6.2

Let c∈ℝ. Each bounded positive \(\mathcal{K}\)-measurable functional F:Ω→[0,∞) satisfies

\[\operatorname{\overline{\mathbb{E}}}(F;\omega(0)=c)=\int F\,d\mathcal{W}_{c}.\tag{6.3}\]

The proof of the inequality ≥ in (6.3) is easy and is accomplished by the following lemma; it suffices to apply it to \(\mathcal{W}_{c}\) in place of P and to \(F\operatorname{\boldsymbol{1}}_{\{\omega(0)=c\}}\) in place of F.

Lemma 6.3

Let P be a probability measure on \((\varOmega,\mathcal{F})\) such that the process \(X_{t}(\omega):=\omega(t)\) is a martingale with respect to P and the filtration \((\mathcal{F}_{t})\). Then \(\int F \,dP\le \operatorname{\overline{\mathbb{E}}}(F)\) for any positive \(\mathcal{F}\)-measurable functional F.

Proof

Fix a positive \(\mathcal{F}\)-measurable functional F and let ϵ>0. Find a positive capital process \(\mathfrak{S}\) of the form (2.2) such that \(\mathfrak{S}_{0}<\operatorname{\overline{\mathbb{E}}}(F)+\epsilon\) and \(\liminf_{t\to\infty}\mathfrak{S}_{t}(\omega)\ge F(\omega)\) for all ω∈Ω. It can be checked using the optional sampling theorem (it is here that the boundedness of Sceptic’s bets is used) that each addend in (2.1) is a martingale, and so each partial sum in (2.1) is a martingale and (2.1) itself is a local martingale. Since each addend in (2.2) is a positive continuous local martingale, it is a positive continuous supermartingale. Using Fatou’s lemma and the monotone convergence theorem, we now obtain

where t can be assumed to take only integer values. Since ϵ can be arbitrarily small, this implies the statement of the lemma. □

We shall deduce the inequality ≤ in Theorem 6.2 from the following generalization of the part of Theorem 5.1(b) concerning \(\smash{\overline{Q}}_{c}\).

Theorem 6.4

For any c∈ℝ and any bounded positive \(\mathcal{F}\)-measurable functional F:Ω→[0,∞),

(with ∘ standing for composition of two functions and with the convention that \((F\circ \operatorname{ntt})(\omega):=0\) when \(\omega\notin \operatorname{ntt}^{-1}(\varOmega)\)).

We shall check in Sect. 10 that Theorem 6.4 (namely, the inequality ≤ in (6.5)) indeed implies Theorem 5.1(b). In this section, we only prove the easy inequality ≥ in (6.5). In Lemma 8.4, we shall see that \(\smash{\overline{A}}_{t}(\omega)=\smash{\underline {A}}_{t}(\omega)=t\) for all t∈[0,∞) for \(\mathcal{W}_{c}\)-almost all ω; therefore, \(\operatorname{ntt}(\omega)=\omega\) for \(\mathcal{W}_{c}\)-almost all ω. In conjunction with Lemma 6.3, this implies the inequality ≥ in (6.5), i.e.,

Remark 6.5

Theorem 6.2 gives the price of the cheapest superhedge for the contingent claim F, but it is not applicable to the usual contingent claims traded in financial markets, which are not \(\mathcal{K}\)-measurable. The theorem would be applicable to the imaginary contingent claim paying \(f(\omega(\tau_{S}))\) at time \(\tau_{S}\) (cf. (5.3); there is no payment if \(\tau_{S}=\infty\)), where S>0 is a given constant and f is a given positive, bounded and measurable payoff function. (If the interest rate r is constant but different from 0, we can consider the contingent claim paying \(e^{\tau_{S} r}f(\omega (\tau_{S}))\) at time \(\tau_{S}\).) The price of the cheapest superhedge will be \(\int f\,dN_{c,S}\), where c:=ω(0) and \(N_{c,S}\) is the Gaussian distribution with mean c and variance S, if there are no restrictions on ω∈Ω, but will become  if ω is restricted to be positive (as in many real financial markets).
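For Brownian motion started at c, the quadratic variation satisfies \(A_{t}=t\), so \(\tau_{S}=S\) and the imaginary claim pays \(f(c+B_{S})\) with B a standard Brownian motion. In the unrestricted case, Theorem 6.2 thus suggests computing the price as \(\mathbb{E}f(c+\sqrt{S}Z)\) for a standard Gaussian Z. The Python sketch below does this with Simpson’s rule. The quadrature method, the function name and the truncation of the real line to [−12,12] are our assumptions for illustration. The positive-price case is not covered.

```python
import math


def wiener_price(f, c, S, steps=2000, width=12.0):
    """Approximate E f(c + sqrt(S) Z), Z standard Gaussian, by Simpson's rule.

    The real line is truncated to [-width, width]; `steps` must be even.
    """
    h = 2.0 * width / steps
    total = 0.0
    for i in range(steps + 1):
        z = -width + i * h
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)  # Simpson weights 1,4,2,...,4,1
        total += weight * f(c + math.sqrt(S) * z) * math.exp(-z * z / 2)
    return total * h / 3 / math.sqrt(2 * math.pi)
```

As a sanity check, f(x)=x² (unbounded, so outside the setting of Remark 6.5) gives approximately c²+S. The at-the-money payoff f(x)=max(x−c,0) gives approximately \(\sqrt{S/(2\pi)}\).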

Sections 7–11 are mainly devoted to the proof of the remaining statements in Theorems 5.1, 6.2 and 6.4, whereas Sect. 12 is devoted to the discussion of the financial meaning of our results and their connections with related probabilistic and financial literature.

7 Coherence

The following trivial result says that our trading game is coherent, in the sense that \(\operatorname{\overline{\mathbb{P}}}(\varOmega)=1\) (i.e., no positive capital process increases its value between time 0 and ∞ by more than a strictly positive constant for all ω∈Ω).

Lemma 7.1

\(\operatorname{\overline{\mathbb{P}}}(\varOmega)=1\). Moreover, for each c∈ℝ, \(\operatorname{\overline{\mathbb{P}}}(\omega(0)=c)=1\).

Proof

No positive capital process can strictly increase its value on a constant ω∈Ω. □

Lemma 7.1, however, does not even guarantee that the set of non-constant elements of Ω has outer content one. The theory of measure-theoretic probability provides us with a plethora of non-trivial events of outer content one.

Lemma 7.2

Let E be an event that almost surely contains the sample path of a continuous martingale with time interval [0,∞). Then \(\operatorname{\overline{\mathbb{P}}}(E)=1\).

Proof

This is a special case of Lemma 6.3 applied to \(F:=\operatorname{\boldsymbol{1}}_{E}\). □

In particular, applying Lemma 7.2 to Brownian motion started at c∈ℝ gives

and

(for the latter we also need Lemma 8.4 below). Both (7.1) and (7.2) have been used above.

Lemma 7.3

Let \(\operatorname{\overline{\mathbb{P}}}(B)=1\). For every set E⊆Ω, \(\operatorname{\underline{\mathbb{P}}}(E;B)\le \operatorname{\overline{\mathbb{P}}}(E;B)\).

Proof

Suppose \(\operatorname{\underline{\mathbb{P}}}(E;B)>\operatorname{\overline{\mathbb{P}}}(E;B)\) for some E; by the definition of \(\operatorname{\underline{\mathbb{P}}}\), this would mean that \(\operatorname{\overline{\mathbb{P}}}(E;B)+\operatorname{\overline{\mathbb{P}}}(E^{c};B)<1\). Since \(\operatorname{\overline{\mathbb{P}}}(\cdot;B)\) is finitely subadditive (see Lemma 4.1), this would imply \(\operatorname{\overline{\mathbb{P}}}(\varOmega;B)<1\), which is equivalent to \(\operatorname{\overline{\mathbb{P}}}(B)<1\) and therefore contradicts our assumption. □

8 Existence of quadratic variation

In this paper, the set Ω is always equipped with the metric

(and the corresponding topology and Borel σ-algebra, the latter coinciding with \(\mathcal{F}\)). This makes it a complete and separable metric space. The main goal of this section is to prove that the sequence of continuous functions \(t\in[0,\infty)\mapsto A^{n}_{t}(\omega)\) is convergent in Ω for typical ω; this is done in Lemma 8.2. This will establish the existence of A(ω)∈Ω for typical ω, which is part of Theorem 5.1(a). It is obvious that when it exists, A(ω) is increasing and \(A_{0}(\omega)=0\). The last part of Theorem 5.1(a), asserting that the intervals of constancy of ω and A(ω) coincide for typical ω, will be proved in the next section (Lemma 9.4).

Lemma 8.1

For each T>0, for typical ω, \(t\in[0,T]\mapsto A^{n}_{t}\) is a Cauchy sequence of functions in C[0,T].

Proof

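Fix a T>0 and fix temporarily an n∈{1,2,…}. Let κ∈{0,1} be such that \(T^{n-1}_{0}=T^{n}_{\kappa}\), and for each k=1,2,…, let

\[\xi_{k}:=\begin{cases}1&\text{if }\omega(T^{n}_{\kappa+2k})=\omega(T^{n}_{\kappa+2k-2}),\\-1&\text{otherwise}\end{cases}\]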

(this is only defined when \(T^{n}_{\kappa+2k}<\infty\)). If ω were generated by Brownian motion, ξ k would be a random variable taking value j, j∈{1,−1}, with probability 1/2; in particular, the expected value of ξ k would be 0. As the standard backward induction procedure shows, this remains true in our current framework in the following game-theoretic sense: there exists a simple trading strategy that, when started with initial capital 0 at time \(T^{n}_{\kappa+2k-2}\), ends with ξ k at time \(T^{n}_{\kappa+2k}\), provided both times are finite; moreover, the corresponding simple capital process is always between −1 and 1. (Namely, at time \(T^{n}_{\kappa+2k-1}\) bet −2n if \(\omega(T^{n}_{\kappa+2k-1})>\omega(T^{n}_{\kappa+2k-2})\) and bet 2n otherwise.) Notice that the increment of the process \(A^{n}_{t}-A^{n-1}_{t}\) over the time interval \([T^{n}_{\kappa+2k-2},T^{n}_{\kappa+2k}]\) is

i.e., η k =2−2n+1 ξ k .
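The two-step bets described above can be replayed on a toy path that is observed only at the hitting times \(T^{n}_{0},T^{n}_{1},\dots\). This is a minimal sketch with several assumptions. The function name `pair_bets` and the sample moves are hypothetical. The path is assumed to start at a point of the coarser grid \(2^{-n+1}\mathbb{Z}\), so κ=0. The sketch also takes \(\xi_k=1\) when two consecutive steps return ω to its starting value and \(\xi_k=-1\) otherwise, which is the convention under which the stated bets end with \(\xi_k\). The code checks that the gain over each pair equals \(\xi_k\) and that the increment of \(A^{n}-A^{n-1}\) equals \(2^{-2n+1}\xi_k\).

```python
from fractions import Fraction


def pair_bets(steps, n):
    """Replay the two-step bets on a toy path (hypothetical helper).

    `steps` lists the moves (+1 or -1, in units of 2**-n) of omega between
    successive level-n hitting times; the path starts at 0, a point of the
    (n-1)-grid, so kappa = 0 is assumed. Returns (xi_k, gain_k, eta_k).
    """
    h = Fraction(1, 2**n)
    pos = [Fraction(0)]                       # omega at T^n_0, T^n_1, ...
    for s in steps:
        pos.append(pos[-1] + s * h)
    out = []
    for k in range(1, len(steps) // 2 + 1):
        a, b, c = pos[2 * k - 2], pos[2 * k - 1], pos[2 * k]
        xi = 1 if c == a else -1              # back to the start value: xi = 1
        bet = -(2**n) if b > a else 2**n      # bet placed at T^n_{2k-1}
        gain = bet * (c - b)                  # capital change over the pair
        d_an = (b - a) ** 2 + (c - b) ** 2    # increment of A^n over the pair
        d_an1 = (c - a) ** 2                  # one coarse step of A^{n-1}, or 0
        out.append((xi, gain, d_an - d_an1))
    return out


moves = [1, -1, 1, 1, -1, -1, -1, 1, -1, -1]
for xi, gain, eta in pair_bets(moves, n=3):
    print(xi, gain, eta)
```

Only the values at the hitting times are checked here. Between \(T^{n}_{\kappa+2k-1}\) and \(T^{n}_{\kappa+2k}\) the bet is \(\pm2^{n}\) while ω stays within \(2^{-n}\) of its value at \(T^{n}_{\kappa+2k-1}\), which is why the capital stays in [−1,1].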

The game-theoretic version of Hoeffding’s inequality (see Theorem A.1 in the Appendix below) shows that for any constant λ∈ℝ, there exists a simple capital process \(\mathfrak{S}^{n}\) with \(\mathfrak{S}^{n}_{0}=1\) such that for all K=0,1,2,…,

According to Eq. (A.1) in the Appendix (with \(x_n\) corresponding to \(\eta_k\)), such an \(\mathfrak{S}^{n}\) can be defined as the capital process of the simple trading strategy betting the current capital times

on \(A^{n}_{t}-A^{n-1}_{t}\) at each time \(T^{n}_{\kappa+2k-2}\), k∈{1,2,…}. In terms of the original security, this simple trading strategy bets 0 on ω at each time \(T^{n}_{\kappa+2k-2}\) and bets the current capital times

on ω at each time \(T^{n}_{\kappa+2k-1}\), k∈{1,2,…}. It is clear that the process \(\mathfrak{S}^{n}\) is positive: it is constant in each time interval \([T^{n}_{\kappa+2k-2},T^{n}_{\kappa+2k-1}]\), and is linear in ω(t) in each time interval \([T^{n}_{\kappa+2k-1},T^{n}_{\kappa+2k}]\); therefore, its overall positivity follows from its positivity (cf. (8.2)) at the points \(T^{n}_{\kappa+2K}\), K∈{0,1,2,…}.
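One standard way to obtain a positive multiplicative capital process of Hoeffding type when every outcome is \(\pm c\) is to bet the current capital times \(\tanh(\lambda c)/c\) on each outcome. Here \(c=2^{-2n+1}\), since \(\eta_k=2^{-2n+1}\xi_k\). Eq. (A.1) is not reproduced here, so this bet size is a hypothetical choice made for illustration and not necessarily the construction in the Appendix.

Each factor is \(1+\xi_k\tanh(\lambda c)=e^{\lambda c\xi_k}/\cosh(\lambda c)\). The capital after K rounds therefore equals \(e^{\lambda\sum_k\eta_k}/\cosh(\lambda c)^{K}\). Since \(\cosh x\le e^{x^{2}/2}\), it dominates \(\exp(\lambda\sum_{k\le K}\eta_k-K\lambda^{2}c^{2}/2)\).

```python
import math
import random


def hoeffding_capital(xis, lam, c):
    """Capital path when, before each outcome eta_k = c * xi_k (xi_k = +1 or -1),
    one bets the current capital times tanh(lam * c) / c on eta_k.

    Hypothetical bet size, chosen for illustration; each factor
    1 + xi_k * tanh(lam * c) lies in (0, 2), so the capital stays positive.
    """
    capital = 1.0
    path = [capital]
    for xi in xis:
        capital *= 1.0 + xi * math.tanh(lam * c)
        path.append(capital)
    return path


n = 4
c = 2.0 ** (-2 * n + 1)              # |eta_k| = 2^{-2n+1}
rng = random.Random(0)
xis = [rng.choice([1, -1]) for _ in range(200)]   # toy outcomes
K = len(xis)

for lam in (2.0 ** n, -(2.0 ** n)):  # the two choices lambda = +2^n and -2^n
    path = hoeffding_capital(xis, lam, c)
    closed_form = math.exp(lam * c * sum(xis)) / math.cosh(lam * c) ** K
    hoeffding = math.exp(lam * c * sum(xis) - K * (lam * c) ** 2 / 2)
    print(lam, path[-1], closed_form, hoeffding)
```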

Fix temporarily α>0. Since the sum of the positive capital processes \(\mathfrak{S}^{n}\) over n=1,2,… with weights \(2^{-n}\) is again a positive capital process, with initial value 1, none of these processes will ever exceed \(2^{n}\cdot2/\alpha\) except for a set of ω of outer content at most α/2. The inequality

can be equivalently rewritten as

Plugging in the identities

and taking \(\lambda:=2^{n}\), we can transform (8.3) to

which implies

This is true for any K=0,1,2,…; choosing the largest K such that \(T^{n}_{\kappa+2K}\le t\), we obtain

for any t∈[0,∞) (the simple case \(t<T^{n}_{\kappa}\) has to be considered separately). Proceeding in the same way but taking \(\lambda:=-2^{n}\), we obtain

instead of (8.4) and

instead of (8.5), which gives

instead of (8.6). We know that (8.6) and (8.7) hold for all t∈[0,∞) and all n=1,2,… except for a set of ω of outer content at most α.

Now we have all ingredients to complete the proof. Suppose there exists α>0 such that (8.6) and (8.7) hold for all n=1,2,… (this is true for typical ω). First let us show that the sequence \(A^{n}_{T}\), n=1,2,…, is bounded. Define a new sequence \(B^{n}\), n=0,1,2,…, as follows: \(B^{0}:=A^{0}_{T}\), and \(B^{n}\), n=1,2,…, are defined inductively by

(notice that this is equivalent to (8.6) with \(B^{n}\) in place of \(A^{n}_{t}\) and = in place of ≤). As \(A^{n}_{T}\le B^{n}\) for all n, it suffices to prove that the sequence \((B^{n})\) is bounded. If it is not, \(B^{N}\ge1\) for some N. By (8.8), \(B^{n}\ge1\) for all n≥N. Therefore, again by (8.8),

and the boundedness of the sequence \((B^{n})\) follows from \(B^{N}<\infty\) and

Now it is obvious that the sequence \((A^{n}_{t})\) is Cauchy in C[0,T]: (8.6) and (8.7) imply

□

Lemma 8.1, applied to T=1,2,…, implies that for typical ω the sequence \(t\in[0,\infty)\mapsto A^{n}_{t}\) is Cauchy in Ω. Since Ω is complete, we obtain the following corollary.

Lemma 8.2

The event that the sequence of functions \(t\in[0,\infty)\mapsto A^{n}_{t}\) converges in Ω is full.

We can see that the first term in the conjunction in (5.3) holds for typical ω; let us check that \(\tau_s\) itself is a stopping time.

Lemma 8.3

For each s≥0, the function \(\tau_s\) defined by (5.3) is a stopping time.

Proof

It suffices to check that the condition \(\tau_s\le t\) can be written as

where \((q_1,q_2)\) ranges over the non-empty intervals with rational end-points. Let T be the largest number in [0,∞] such that the functions \(\overline{A}|_{[0,T)}\) and \(\underline{A}|_{[0,T)}\) coincide and are continuous; we use A′ as the common notation for \(\overline{A}|_{[0,T)}=\underline{A}|_{[0,T)}\). The condition \(\tau_s\le t\) means that for some t′∈[0,t], the domain of A′ includes [0,t′) and \(\sup_{u<t'}A'_{u}=s\). Now it is clear that condition (8.9) is satisfied if \(\tau_s\le t\). In the opposite direction, suppose (8.9) is satisfied. Then \(\overline{A}_{u}=\underline{A}_{u}\) whenever u∈(0,t) satisfies \(\underline{A}_{u}<s\). Indeed, if we had \(\underline{A}_{u}<\overline{A}_{u}\) for such u, we could choose \((q_1,q_2)\subseteq(0,s)\) satisfying \(\underline{A}_{u}<q_{1}<q_{2}<\overline{A}_{u}\), and there would be no q satisfying the required properties in (8.9): if q≤u, then \(\underline{A}_{q}\le\underline{A}_{u}<q_{1}\), and if q≥u, then \(\overline{A}_{q}\ge\overline{A}_{u}>q_{2}\). Combining this result with (8.9), we can see that there is a function A″ with domain [0,t″)⊆[0,t) such that \(A''_{u}=\overline{A}_{u}=\underline{A}_{u}\) for all u∈[0,t″) and \(\sup A''=s\). The function A″ is increasing and, by (8.9), continuous; this implies \(\tau_s\le t\). □

Let us now consider the case of Brownian motion.

Lemma 8.4

For any c∈ℝ,

Proof

It suffices to consider only rational values of t and, therefore, a fixed value of t. The convergence \(A^{n}_{t}\to t\) (see (5.1)) in probability under the Wiener measure started at c can be deduced from the law of large numbers applied to \(T^{n}_{k}\):

- the law of large numbers implies that \(A^{n}_{t}\to t\) in probability because the increments \(T^{n}_{k}-T^{n}_{k-1}\), k=1,2,…, are independent and identically distributed with expected value \(2^{-2n}\) (this is a combination of the second statement of Theorem 2.49 in [45], which is a corollary of Wald's second lemma, with the strong Markov property of Brownian motion);
- the law of large numbers is applicable because these increments have finite second moments (see the proof of the second statement of Theorem 2.49 in [45]).

It remains to apply Lemma 8.2, which, in combination with Lemma 6.3 (applied to the indicator functions of events), implies that the sequence \((A^{n})\) converges in Ω almost surely. □
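The law-of-large-numbers argument above rests on the fact that Brownian motion started at a grid point needs, on average, time \(h^{2}\) to move a distance h, with \(h=2^{-n}\) here. A discrete analog can be checked exactly, under the assumption that a simple symmetric random walk stands in for Brownian motion. The walk started at 0 needs on average \(m^{2}\) steps to reach ±m. With space step h/m and time step \((h/m)^{2}\), the mean exit time from (−h,h) is therefore \(h^{2}\) for every m. The function name `mean_exit_steps` is hypothetical.

```python
from fractions import Fraction


def mean_exit_steps(m):
    """Expected number of steps for a simple symmetric random walk started at 0
    to first hit -m or m, found by solving the tridiagonal system
    E[x] = 1 + (E[x - 1] + E[x + 1]) / 2,  E[-m] = E[m] = 0,
    for x = -m+1, ..., m-1 with the Thomas algorithm in exact arithmetic."""
    size = 2 * m - 1
    a = c = Fraction(-1, 2)              # sub- and super-diagonal coefficients
    b = Fraction(1)                      # diagonal coefficient
    cp = [Fraction(0)] * size
    dp = [Fraction(0)] * size
    cp[0], dp[0] = c / b, Fraction(1) / b
    for i in range(1, size):             # forward elimination
        denom = b - a * cp[i - 1]
        cp[i] = c / denom
        dp[i] = (1 - a * dp[i - 1]) / denom
    e = [Fraction(0)] * size
    e[-1] = dp[-1]
    for i in range(size - 2, -1, -1):    # back substitution
        e[i] = dp[i] - cp[i] * e[i + 1]
    return e[m - 1]                      # index m - 1 is the start x = 0


# Diffusive scaling: space step h/m, time step (h/m)**2, with h = 2**-n.
n = 3
h = Fraction(1, 2**n)
for m in (1, 2, 4, 8):
    print(m, mean_exit_steps(m) * (h / m) ** 2)
```

The system is solved numerically rather than by quoting the closed form \(m^{2}-x^{2}\), so that the identity is computed instead of assumed.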

Remark 8.5

This section is about the quadratic variation of the price path, but in finance the quadratic variation of the stochastic logarithm (see e.g. [33], p. 134) of a price process is usually even more important than the quadratic variation of the price process itself. A pathwise version of the stochastic logarithm has been studied by Norvaiša in [48, 49]. Consider an ω∈Ω such that A(ω) exists, belongs to Ω, and has the same intervals of constancy as ω; Theorem 5.1(a) says that these conditions are satisfied for typical ω. Fix a time horizon T>0 and suppose additionally that \(\inf_{t\in[0,T]}\omega(t)>0\). The limit

(where we use the same notation as in (5.1)) exists for all t∈[0,T], and the function \(R(\omega):t\in[0,T]\mapsto R_{t}(\omega)\) satisfies ([49], Proposition 56)

In financial terms, the value \(R_{t}(\omega)\) is the cumulative return of the security ω over [0,t] ([48], Sect. 2); in probabilistic terms, R(ω) is the pathwise stochastic logarithm of ω. The quadratic variation of R(ω) can be defined as

(the existence of the limit and the equality are also parts of Proposition 56 in [49]).

9 Tightness

In this section, we do some groundwork for the proof of Theorems 5.1(b) and 6.4, and also finish the proof of Theorem 5.1(a). We start with results that, as the next section shows, imply that \(\underline{Q}_{c}\) is tight in the topology induced by the metric (8.1).

Lemma 9.1

For each α>0 and S∈{1,2,4,…},

Proof

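Let \(S=2^{d}\), where d∈{0,1,2,…}. For each m=1,2,…, divide the interval [0,S] into \(2^{d+m}\) equal subintervals of length \(2^{-m}\). Fix for a moment such an m, and set \(\beta=\beta_m:=(2^{1/4}-1)2^{-m/4}\alpha\) (where \(2^{1/4}-1\) is the normalizing constant ensuring that the \(\beta_m\) sum to α) and

\[ t_i:=\tau_{i2^{-m}},\qquad \omega_i:=\omega(t_i),\qquad i=0,1,\dots,2^{d+m} \qquad (9.2) \]

(we shall be careful to use \(\omega_i\) only when \(t_i<\infty\)).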

We first replace the quadratic variation process A (in terms of which the stopping times \(\tau_s\) are defined) by a version of \(A^{\ell}\) for a large enough ℓ. If τ is any stopping time (we are interested in \(\tau=t_i\) for various i), set, in the notation of (5.1),

(we omit parentheses in expressions of the form x∨y∧z since we have (x∨y)∧z=x∨(y∧z), provided x≤z). The intuition is that \(A^{n,\tau}_{t}(\omega)\) is the version of \(A^{n}_{t}(\omega)\) that starts at time τ rather than 0.

For \(i=0,1,\dots,2^{d+m}-1\), let \(\mathfrak{E}_{i}\) be the event that \(t_i<\infty\) implies that (8.7), with α replaced by γ>0 and \(A^{n}_{t}\) replaced by \(A^{n,t_{i}}_{t}\), holds for all n=1,2,… and \(t\in[t_i,\infty)\). Applying a trading strategy similar to that used in the proof of Lemma 8.1 but starting at time \(t_i\) rather than 0, we can see that the inner content of \(\mathfrak{E}_{i}\) is at least 1−γ. The inequality

holds for all \(t\in[t_i,t_{i+1}]\) and all n on the event \(\{t_{i}<\infty\}\cap\mathfrak{E}_{i}\). For the value \(t:=t_{i+1}\), this inequality implies

(including the case \(t_{i+1}=\infty\)). Applying the last inequality to n=ℓ+1,ℓ+2,… (where ℓ will be chosen later), we obtain that

holds on the whole of \(\{t_{i}<\infty\}\cap\mathfrak{E}_{i}\) except perhaps a null set. The qualification "except a null set" allows us not only to assume that \(A^{\infty,t_{i}}_{t_{i+1}}\) exists in (9.3), but also to assume that \(A^{\infty,t_{i}}_{t_{i+1}}=A_{t_{i+1}}-A_{t_{i}}=2^{-m}\). Let \(\gamma:=\frac{1}{3}2^{-d-m}\beta\) and choose ℓ=ℓ(m) so large that (9.3) implies \(A^{\ell,t_{i}}_{t_{i+1}}\le2^{-m+1/2}\) (this can be done as both the product and the sum in (9.3) are convergent, and so the product can be made arbitrarily close to 1 and the sum can be made arbitrarily close to 0). Doing this for all \(i=0,1,\dots,2^{d+m}-1\) will ensure that the inner content of

is at least 1−β/3.

An important observation for what follows is that the process defined as 0 for t<t i and as \((\omega(t)-\omega(t_{i}))^{2}-A^{\ell,t_{i}}_{t}\) for t≥t i is a simple capital process (corresponding to betting \(2(\omega(T^{\ell}_{k})-\omega(t_{i}))\) at each time \(T^{\ell}_{k}>t_{i}\)). Now we can see that
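The observation above is a pathwise identity. Let \(x_0=\omega(t_i)\), and let \(x_1,x_2,\dots\) be the values of ω at the subsequent grid times. Betting \(2(x_k-x_0)\) at each of these times accumulates \((x_K-x_0)^{2}-\sum_{k=1}^{K}(x_k-x_{k-1})^{2}\), because \((x_{k+1}-x_0)^{2}-(x_k-x_0)^{2}=2(x_k-x_0)(x_{k+1}-x_k)+(x_{k+1}-x_k)^{2}\).

The sketch below checks this exactly on a toy random-walk path. The helper names and the path are hypothetical, and the running sum of squared increments stands in for \(A^{\ell,t_i}\) at grid times only.

```python
import random
from fractions import Fraction


def quadratic_capital(path):
    """Capital (started from 0) of betting 2 * (omega(T_k) - omega(t_i)) on omega
    at each observation time T_k of a toy, discretely observed path.

    path[0] plays the role of omega(t_i); later entries are omega at the
    successive grid times T_k > t_i. Returns the capital after each step.
    """
    start = path[0]
    capital = Fraction(0)
    out = [capital]
    for prev, nxt in zip(path, path[1:]):
        capital += 2 * (prev - start) * (nxt - prev)
        out.append(capital)
    return out


def realized_qv(path):
    """Running sum of squared increments (a discrete stand-in for A^{l, t_i})."""
    total = Fraction(0)
    out = [total]
    for prev, nxt in zip(path, path[1:]):
        total += (nxt - prev) ** 2
        out.append(total)
    return out


rng = random.Random(1)
h = Fraction(1, 8)
path = [Fraction(3, 8)]
for _ in range(50):
    path.append(path[-1] + rng.choice([h, -h]))

cap, qv = quadratic_capital(path), realized_qv(path)
# At every step: capital == (omega - omega(t_i))^2 - realized quadratic variation.
print(all(cap[j] == (path[j] - path[0]) ** 2 - qv[j] for j in range(len(path))))
```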

will hold on the event (9.4), except for a set of ω of outer content at most β/3. Indeed, there is a positive simple capital process taking value at least \(2^{1/2}S+\sum_{i=1}^{j}(\omega_{i} - \omega_{i-1})^{2}-j2^{-m+1/2}\) at time \(t_j\) on the conjunction of the events (9.4) and \(\{t_j<\infty\}\) for all \(j=0,1,\dots,2^{d+m}\). If (9.4) happens but (9.5) fails to happen, this simple capital process will make at least \(2^{1/2}\frac{3}{\beta}S\) at time \(\tau_S\) (in the sense of lim inf if \(\tau_S=\infty\)) out of initial capital \(2^{1/2}S\).

For each ω∈Ω, define

where \(\epsilon=\epsilon_m\) will be chosen later. It is clear that \(|J(\omega)|\le2^{1/2}3S/(\beta\epsilon^{2})\) on the set (9.5).

Consider the simple trading strategy defined as follows. For each \(i=1,\dots,2^{d+m}\) such that \(|\omega(\tau)-\omega_{i-1}|=\epsilon\) for some \(\tau\in[t_{i-1},t_{i}]\cap[0,\infty)\) (this is guaranteed to happen when i∈J(ω)), let τ be the first such time. The strategy's capital increases by \((\omega(t_{i})-\omega(\tau))^{2}-A^{\ell,\tau}_{t_{i}}\) between τ and \(t_i\), and the strategy is not active (i.e., sets the bet to 0) otherwise. (Such a strategy exists, as explained in the previous paragraph.) This strategy will make at least \(\epsilon^{2}\) out of \((2^{1/2}3S/(\beta\epsilon^{2}))2^{-m+1/2}\), provided all three of the events (9.4), (9.5) and

happen. (And we can make the corresponding simple capital process positive by being active for at most \(2^{1/2}3S/(\beta\epsilon^{2})\) values of i and setting the bet to 0 as soon as (9.4) becomes violated.) This corresponds to making at least 1 out of \((2^{1/2}3S/(\beta\epsilon^{4}))2^{-m+1/2}\). Solving the equation \((2^{1/2}3S/(\beta\epsilon^{4}))2^{-m+1/2}=\beta/3\) for ϵ gives \(\epsilon=(2\times3^{2}S2^{-m}/\beta^{2})^{1/4}\). Therefore,

except for a set of ω of outer content at most β. By the countable subadditivity of outer content (Lemma 4.1), (9.6) holds for all m=1,2,… except for a set of ω of outer content at most \(\sum_{m}\beta_{m}=\alpha\).
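The arithmetic behind the choices of γ, the initial capital \(2^{1/2}S\), \(\epsilon_m\) and \(\beta_m\) in this proof can be checked numerically. The values of α, d and m below are arbitrary illustrative choices, not taken from the paper.

```python
import math

# Illustrative values only: any alpha > 0, d >= 0 (S = 2**d) and m >= 1 would do.
alpha, d, m = 0.05, 3, 6
S = 2.0 ** d

beta = (2 ** 0.25 - 1) * 2 ** (-m / 4) * alpha            # beta_m
gamma = 2 ** (-d - m) * beta / 3                           # gamma for this m
eps = (2 * 3 ** 2 * S * 2.0 ** (-m) / beta ** 2) ** 0.25   # epsilon_m

# 2^{d+m} exceptional sets of outer content at most gamma add up to beta/3.
print(math.isclose(2 ** (d + m) * gamma, beta / 3))
# Initial capital 2^{1/2} S equals 2^{d+m} allowances of 2^{-m+1/2}.
print(math.isclose(2 ** (d + m) * 2 ** (-m + 0.5), 2 ** 0.5 * S))
# epsilon solves (2^{1/2} 3 S / (beta eps^4)) 2^{-m+1/2} = beta / 3.
print(math.isclose((2 ** 0.5 * 3 * S / (beta * eps ** 4)) * 2 ** (-m + 0.5), beta / 3))
# The beta_m, m = 1, 2, ..., sum to alpha (the tail beyond m = 400 is negligible).
print(math.isclose(sum((2 ** 0.25 - 1) * 2 ** (-k / 4) * alpha for k in range(1, 400)), alpha))
```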

We have now allowed m to vary and so write \(t^{m}_{i}\) instead of \(t_i\) defined by (9.2). Fix an ω∈Ω satisfying A(ω)∈Ω and (9.6) for m=1,2,…. Intervals of the form \([t^{m}_{i-1}(\omega),t^{m}_{i}(\omega)]\subseteq [0,\infty)\), for m∈{1,2,…} and \(i\in\{1,2,\dots,2^{d+m}\}\), will be called predyadic (of order m). Let \([s_1,s_2]\subseteq[0,S]\) be an interval of length at most δ∈(0,1) with \(\tau_{s_{2}}<\infty\). We can cover \((\tau_{s_{1}}(\omega),\tau_{s_{2}}(\omega))\) by adjacent predyadic intervals with disjoint interiors, without covering any points in the complement of \([\tau_{s_{1}}(\omega),\tau_{s_{2}}(\omega)]\), such that, for some m∈{1,2,…}, there are one or two predyadic intervals of order m and, for each i=m+1,m+2,…, at most two predyadic intervals of order i. (Start by finding the point in \([s_1,s_2]\) of the form \(j2^{-k}\) with integer j and k and the smallest possible k. Then cover \((\tau_{s_{1}}(\omega),\tau_{j2^{-k}}(\omega)]\) and \([\tau_{j2^{-k}}(\omega),\tau_{s_{2}}(\omega))\) by predyadic intervals in the greedy manner.) Combining (9.6) and \(2^{-m}\le\delta\), we obtain

which is stronger than (9.1) as \(2^{9/4}3^{1/2}(2^{1/4}-1)^{-1/2}(1-2^{-1/8})^{-1}\approx228.22\). □
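The greedy covering step can be sketched on the s-axis, before applying \(s\mapsto\tau_s\). Assume, as the covering argument suggests, that predyadic intervals of order m are the images under \(s\mapsto\tau_s\) of the dyadic intervals \([(i-1)2^{-m},i2^{-m}]\). Then it suffices to cover \([s_1,s_2]\) by dyadic intervals.

The sketch makes two further assumptions. It truncates at a finite maximal order (the actual cover is infinite unless \(s_1\) and \(s_2\) are dyadic), and it assumes \(0\le s_1<s_2\) and \(s_2-s_1<1\). The function names are hypothetical.

```python
import math
from collections import Counter
from fractions import Fraction


def coarsest_dyadic(s1, s2):
    """Return (p, k): the point p = j * 2**-k in [s1, s2] with k >= 0 minimal."""
    k = 0
    while True:
        j = math.ceil(s1 * 2**k)
        if Fraction(j, 2**k) <= s2:
            return Fraction(j, 2**k), k
        k += 1


def greedy_dyadic_cover(s1, s2, max_order):
    """Cover [s1, s2] by adjacent dyadic intervals [a, a + 2**-i], i <= max_order,
    leaving gaps shorter than 2**-max_order at the two ends (truncated sketch)."""
    p, k = coarsest_dyadic(s1, s2)
    cover = []
    right = left = p
    for i in range(k + 1, max_order + 1):
        step = Fraction(1, 2**i)
        if right + step <= s2:           # greedy step from p towards s2
            cover.append((right, right + step, i))
            right += step
        if left - step >= s1:            # greedy step from p towards s1
            cover.append((left - step, left, i))
            left -= step
    return sorted(cover)


s1, s2 = Fraction(1, 3), Fraction(3, 5)
cover = greedy_dyadic_cover(s1, s2, max_order=12)
print(sorted(Counter(i for _, _, i in cover).items()))   # intervals per order
```

Each order contributes at most one interval on each side of the coarsest dyadic point, which is where the bound of two intervals per order comes from.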

Now we can prove the following elaboration of Lemma 9.1, which will be used in the next two sections.

Lemma 9.2

For each α>0,

Proof

Replacing α in (9.1) by \(\alpha_S:=(1-2^{-1/2})S^{-1/2}\alpha\) for S=1,2,4,… (where \(1-2^{-1/2}\) is the normalizing constant ensuring that the \(\alpha_S\) sum to α over S), we obtain

The countable subadditivity of outer content now gives

which is stronger than (9.7) as \(230(1-2^{-1/2})^{-1/2}\approx424.98\). □
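The two numerical constants quoted at the ends of the proofs of Lemmas 9.1 and 9.2, and the normalization of the \(\alpha_S\), can be reproduced directly. The value of α below is an arbitrary illustrative choice.

```python
import math

# Constant at the end of the proof of Lemma 9.1 (quoted as approximately 228.22).
c91 = 2 ** (9 / 4) * 3 ** 0.5 * (2 ** 0.25 - 1) ** -0.5 * (1 - 2 ** (-1 / 8)) ** -1
# Constant at the end of the proof of Lemma 9.2 (quoted as approximately 424.98).
c92 = 230 * (1 - 2 ** -0.5) ** -0.5

# alpha_S = (1 - 2^{-1/2}) S^{-1/2} alpha, summed over S = 2^d, d = 0, 1, 2, ...
alpha = 0.01
total = sum((1 - 2 ** -0.5) * (2.0 ** d) ** -0.5 * alpha for d in range(200))

print(f"{c91:.2f} {c92:.2f}", math.isclose(total, alpha))
```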

The following lemma develops inequality (9.5) and will be useful in the proof of Theorem 5.1.

Lemma 9.3

For each α>0,

in the notation of (9.2).

Proof