Abstract

Wind-generated surface gravity waves play an integral role in modulating air-sea exchange processes. Information on wave parameters is also essential for planning marine and coastal activities. It is now well recognized that wind-wave activity shows changing trends over the global ocean basins. Numerous studies have addressed projected changes in significant wave height for the Indian Ocean (IO) region, and a thorough performance evaluation of global climate models (GCMs) over this region is needed for future planning. With this motivation, the present study examined the performance of historical dynamical wave climate simulations generated under the Coordinated Ocean Wave Climate Projections (COWCLIP) experiment. The simulations used near-surface wind speed datasets from 8 CMIP5 (Fifth phase of the Coupled Model Intercomparison Project) GCMs to force a spectral wave model. The skill of the individual GCM forced wave simulations and of the multi-model mean (MMM) in reproducing the significant wave height (SWH) over four sub-domains of the IO was evaluated against the ECMWF Reanalysis 5th Generation (ERA5) datasets. Several performance metrics, namely the Taylor skill score, M-Score, Model Climate Performance Index (MCPI), and Model Variability Index (MVI), are employed to establish the skill of the model simulations. The study reveals that model performance depends strongly on the region and its characteristics. The COWCLIP datasets represent the historical wave climate over the Arabian Sea (AS) and the Bay of Bengal (BoB) remarkably well. However, discrepancies are noticed in the SWH distribution over the South Indian Ocean (SIO), attributed to the limited ability of the models to reproduce the swell wave fields over that region. The MMM constructed from the best-performing models (MRI-CGCM3, ACCESS1.0, INMCM4, HadGEM2-ES, and BCC-CSM1.1) is found to be consistent across all sub-domains. 
The study shows that performance evaluation of GCM forced wave simulations is crucial before employing them in practical applications. The best-performing models identified in this study can be used to establish future scenarios of SWH in a changing climate for the IO region.

1 Introduction

Changes in atmospheric circulation and dynamics can directly affect fluxes at the air-sea interface, sea-level pressure, and wind-waves. Because climate change can alter wind-waves, a proper understanding of their evolution, quantification, and variability is essential and has significant practical applications. The present study focuses on the Indian Ocean region, which is bordered by a highly vulnerable coastline and islands directly affected by sea-level rise, wave-induced flooding, and extreme weather events (Church et al. 2006). These effects call for adaptive planning that can help coastal communities cope with the risks associated with future changes in wind-wave climate (Morim et al. 2018). The availability of high-quality data is therefore key to obtaining the best possible estimates of changes in ocean surface waves.

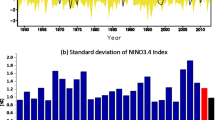

From a broader perspective, wave measurements from long-term records of Voluntary Observing Ships (1900–2000) have established negative trends (−11 cm/decade) for the SIO (Trenberth et al. 2007). Calibrated and validated datasets from satellite altimeters are widely used to interpret global trends in wind and wave climate (Woolf et al. 2002; Meucci et al. 2020; Young et al. 2011; Young and Ribal 2019). A recent study (Gupta et al. 2015) reported that the latitudinal band 40° S–55° S shows the highest impact of climate change in the IO region. Using similar datasets spanning 28 years, Sreelakshmi and Bhaskaran (2020b) reported an increasing trend in extreme wind-waves for the extra-tropical South Indian Ocean belt since 2011.

Among the reanalysis products, ERA5 has been recommended for its advances in data assimilation techniques (Meucci et al. 2020). Studies have reported excellent agreement of ERA5 data with observations and models at both global and regional scales (Stefanakos 2019; Dullaart et al. 2020; Tarek et al. 2020; Rivas and Stoffelen 2019). Using 41 years of ERA5 data, Sreelakshmi and Bhaskaran (2020c) found decreased wind-sea activity over the AS and the head BoB. Recently, Bruno et al. (2020) evaluated the performance of both the wind-sea and swell components for the western AS. Naseef and Kumar (2020) noticed an increasing trend in the maximum SWH (0.73 cm/year) for the IO. A consistent rate of increase in extreme wind speed (0.8–1.2 cm/s per year) and wave height (0.42–0.88 cm per year) has been reported for the south and central AS (Aboobacker et al. 2021).

Climate models contributing to the assessments of the Intergovernmental Panel on Climate Change (IPCC) have attracted considerable attention for their ability to represent historical and future changes in various parameters under climate change scenarios. Ocean wave parameters are not provided by the CMIP models; instead, the models simulate the wind field, sea-ice concentration, and sea level. Prior studies have generated wave projections from CMIP3 and CMIP5 inputs, using both statistical methods (Wang and Swart 2018) and dynamical methods (Hemer et al. 2013a) to develop projections of wind-wave parameters. Future wave conditions have been projected and evaluated across the global oceans (Hemer et al. 2013; Morim et al. 2019) and regional basins (Wang and Swart 2018; Bricheno and Wolf 2018; Gallagher et al. 2016).

A regional study over the Northeast Atlantic Ocean by Perez et al. (2014) analysed the performance of CMIP5 models in simulating wind speed. They reported that ACCESS1.0, EC-Earth, HadGEM2-ES, HadGEM2-CC, and CMCC-CM performed well when compared with the NCEP-NCAR, ERA-40C, and NOAA-CIRES datasets. Zappa et al. (2013) shortlisted EC-Earth, GFDL-CM3, HadGEM2-ES, and MRI-CGCM3 as the best-performing GCMs in reproducing North Atlantic extra-tropical cyclones. For the European region, the wind speeds simulated by EC-Earth, MIROC, and HadGEM2 correlated well during the winter season (Masato et al. 2013). A recent study by Morim et al. (2020) indicated that the MRI-CGCM3 and MRI-ESM1 models overestimated the mean and extreme wind speeds, and noted considerable inter-model uncertainty. Their study indicated a negative bias in wind speed over most of the global oceans, with the exception of the equatorial regions. Among 19 CMIP5 GCMs, EC-Earth was reported as the top-performing model for the North-East Atlantic (Hazeleger et al. 2012). Seasonal differences of 5–10% over the North Atlantic Ocean were found between SWH simulated by the WAVEWATCH III (WWIII) model forced with ERA-Interim winds and with EC-Earth winds (Gallagher et al. 2016).

For the Indian Ocean, seven ensemble members of the EC-Earth model were used to force the WAM model to generate wave parameters, and the simulations were validated against 72 in situ measurements and the ERA-Interim and CFSR datasets (Semedo et al. 2018). The historical SWH simulations show the largest differences in the North Indian Ocean (NIO) and the lowest bias in the extra-tropical SIO. The simulated zonal wind stress over the equatorial IO is weaker than in the QuikSCAT, NCEP-1, and ERA-Interim datasets (Lee et al. 2013). Wave heights simulated by WWIII forced with CMIP5 models exhibited a significant positive bias in the IO region (Casas-Prat et al. 2018), and the overestimation of SWH in some regions is attributed to shortcomings of the SMC grid in representing remote islands. Wang et al. (2015) reported that CSIRO-Mk3.6.0 and EC-Earth exhibited substantial climate change signals in the annual mean SWH for the eastern tropical IO and NIO regions. The WAM model forced with the EC-Earth wind field simulated historical (1950–2010) trends in wind speed and wave height for the IO of 0.13 × 10⁻² m/s per decade and 0.78 × 10⁻² m/decade, respectively (Dobrynin et al. 2012). A study using the MRI-AGCM3.2S model (Kamranzad and Mori 2018; Kamranzad et al. 2017) indicated a decrease in SWH over the NIO and the central SIO. Compared with satellite measurements, their SWAN simulations considerably overestimated SWH near Antarctica owing to the absence of ice cover in the model. Thereafter, Kamranzad and Mori (2019) demonstrated that SWAN outperforms WWIII in simulating SWH against satellite data for the IO region. Many regions of the IO and the west coast of the Maldives are prone to an unstable wind-wave climate under the extreme emission scenario. Another study (Chowdhury et al. 2019) used CMIP5 wind information to force the MIKE21 model; it indicated increased SWH along the Indian coast and, specifically, a 30% rise in wave period along the east coast of India under the RCP 4.5 scenario. High skill of GFDL-CM3 and MRI-CGCM3 was reported in a recent study (Srinivas et al. 2020) on the teleconnection between SWH and the Indian Ocean Dipole.

The Coordinated Ocean-Wave Climate Projections (COWCLIP) project has developed wave climate projections by forcing WWIII with CMIP5 surface winds (Hemer 2010). The team evaluated the performance of the wave simulations over the global oceans by comparing them with ERA40C, ERA-Interim, and NCEP-CFSR data (Hemer and Trenham 2016). Projected SWH changes from CMIP3 models (Hemer et al. 2013) indicated increased wind activity over the eastern equatorial IO region, together with a consistent projected decrease in SWH and wind speed over the North and East IO regions. The CMIP5 multi-model ensemble indicated a projected decrease in SWH over 25.8% of the global ocean area (Hemer et al. 2013). The studies mentioned above explored and presented future wave climate projections for global and regional domains. However, studies that evaluate CMIP5-based SWH in the IO domain with a range of skill analysis methods remain scarce.

The present study therefore uses the COWCLIP datasets developed by the Commonwealth Scientific and Industrial Research Organisation (CSIRO), Australia (Hemer et al. 2012). The goal is to evaluate the skill of 8 GCMs in reproducing the historical wind-wave climate (1979–2005) of the IO region by comparing the simulations with the latest ERA5 wave data. The IO sector is divided into sub-domains based on wind-wave activity to differentiate model performance by region. A comparison over the historical period provides the confidence needed to judge the suitability of the models for future projections. The best-performing models can be used to construct an ensemble mean to assess projected changes in the wind-wave climate of the IO, which will be addressed in a separate study.

2 Data and methodology

2.1 Datasets

2.1.1 COWCLIP-CMIP5 GCM forced wave simulations

The COWCLIP project employs the spectral wave model WAVEWATCH III (version 3.14) (Tolman 2009) to generate global simulations at 1° × 1° spatial resolution (Hemer et al. 2013). The sensitivity of WAVEWATCH III simulations for the Indian Ocean region is documented in many studies (Seemanth et al. 2016; Remya et al. 2020; Swain and Umesh 2018). The model grid is generated using the DBDB2 v3.0 bathymetry and the GSHHS shoreline database, which define the obstruction grid for unresolved boundaries. The model wave spectra were discretized into non-linearly spaced frequency bands between 0.04 and 0.5 Hz with a directional resolution of 15° and 25° (Hemer et al. 2013). Near-surface wind speed and sea-ice area fraction at a temporal resolution of 3 h serve as input for generating the wave parameters. The historical wave simulations covering 26 years (1979–2005) are generated using each of the 8 GCM simulated datasets. The datasets were extracted from the CSIRO archive at http://data-cbr.csiro.au/thredds/catalog/catch_all/CMAR_CAWCRWave_archive/Global_wave_projections/HISTORICAL/CMIP5/catalog.html. This study uses monthly SWH data from the eight individual GCM forced simulations for the IO domain (Table 1).

2.1.2 ERA5-reference datasets

The fifth-generation ECMWF reanalysis (ERA5) replaces ERA-Interim and combines model data with global observations through data assimilation. The ERA5 dataset can be used to assess the skill of CMIP5 GCM forced wave simulations. Altimeter products, available from 1993, are not long enough for long-term climate model evaluation; the reanalysis is therefore the best available wave dataset to represent the historical wind-wave climate. The ERA5 data span 1979 to date with a spatial resolution of 0.25° globally (Hersbach et al. 2020). ERA5 is produced with a 4D-Var data assimilation scheme in cycle CY41R2 of ECMWF's Integrated Forecast System (IFS). The assimilation incorporates satellite observations (infrared and microwave radiances, retrievals from radiance data, GPS radio occultation, scatterometer, and altimeter data), in situ observations (buoys, ships, wind profilers, radar), and snow observations (land stations, satellite). ERA5 products are superior to ERA-Interim in terms of higher spatial and temporal resolution and better representation of precipitation, evaporation, sea surface temperature, and sea ice (Rivas and Stoffelen 2019; He et al. 2021; Tarek et al. 2020; Gleixner et al. 2020). The ERA5 wave model uses a wave spectrum with 24 directions and 30 frequency bands, together with ETOPO2 bathymetry. A revised unresolved-bathymetry scheme and wave advection scheme improve the representation of coastlines and unresolved islands (Bidlot 2012). Additional output parameters describe wave-modified fluxes, swell components, and freak waves (Janssen and Bidlot 2009). Given these benefits, the present study uses monthly SWH data from ERA5, interpolated to a 1° × 1° grid as recommended in the COWCLIP project (Wang et al. 2015). 
Linear interpolation is used so that the ERA5 reference and the GCM forced wave simulations share a homogeneous grid.
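The regridding step can be illustrated with a minimal pure-Python sketch of separable (bilinear) linear interpolation from a fine grid onto a coarser one. It assumes strictly ascending latitude and longitude axes and a field stored as nested lists indexed [lat][lon]; the function names are hypothetical, and the study does not state which software performed the interpolation. For full ERA5 fields a dedicated library regridder would normally be used instead.

```python
from bisect import bisect_right


def _bracket(axis, value):
    """Return (i0, i1, w): value lies between axis[i0] and axis[i1], with weight w on axis[i1]."""
    if value <= axis[0]:  # clamp below the grid
        return 0, 0, 0.0
    if value >= axis[-1]:  # clamp above the grid
        last = len(axis) - 1
        return last, last, 0.0
    i1 = bisect_right(axis, value)
    i0 = i1 - 1
    return i0, i1, (value - axis[i0]) / (axis[i1] - axis[i0])


def regrid_bilinear(field, lats, lons, new_lats, new_lons):
    """Bilinearly interpolate field[lat_index][lon_index] onto a new lat/lon grid.

    lats/lons must be strictly ascending. A NaN (e.g. land) value at any of the
    surrounding source points makes the interpolated value NaN.
    """
    out = []
    for lat in new_lats:
        j0, j1, wy = _bracket(lats, lat)
        row = []
        for lon in new_lons:
            i0, i1, wx = _bracket(lons, lon)
            # Interpolate along longitude on the two bracketing latitude rows ...
            south = (1 - wx) * field[j0][i0] + wx * field[j0][i1]
            north = (1 - wx) * field[j1][i0] + wx * field[j1][i1]
            # ... then along latitude between those two intermediate values.
            row.append((1 - wy) * south + wy * north)
        out.append(row)
    return out


# Usage on a synthetic 0.25-degree field (illustrative values, not ERA5 data).
lats = [0.25 * k for k in range(9)]  # 0.0 .. 2.0
lons = [0.25 * k for k in range(9)]
swh = [[1.0 + 0.5 * la + 0.1 * lo for lo in lons] for la in lats]
coarse = regrid_bilinear(swh, lats, lons, [0.5, 1.5], [0.5, 1.5])
```

Because bilinear interpolation reproduces any field that is linear in latitude and longitude exactly, a synthetic linear field like this one is a convenient check of the implementation.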

2.2 Methodology

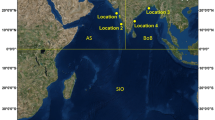

The GCM forced wave simulations produced under the COWCLIP project are evaluated through multiple performance metrics. The IO domain is divided into four sub-domains based on wind-wave activity, namely the Arabian Sea (AS), Bay of Bengal (BoB), Tropical Indian Ocean (TIO), and South Indian Ocean (SIO). The geographical coordinates of each sub-domain are provided in Table 2 and Fig. 1, and the performance metrics are calculated separately for each sub-domain. In addition to the spatial mean, standard deviation, and bias, the study applies several skill metrics that are widely used to evaluate climate models for ocean and atmospheric parameters. Metrics such as the M-Score, Taylor skill, MCPI, and MVI allow the sensitivity of model performance to be tested. The Taylor skill score has been used to evaluate CMIP5 and CMIP6 models (Chen et al. 2021; Fan et al. 2020; Mohan and Bhaskaran 2019; Hirota and Takayabu 2013; Ito et al. 2020; Kusunoki and Arakawa 2015). The M-Score is another important metric for differentiating climate models by their performance (Hemer and Trenham 2016; Katzfey et al. 2016; Bador et al. 2015; Elguindi et al. 2014). The MCPI and MVI represent the relative error of the models with respect to the reference data (Gleckler et al. 2008; Chen and Sun 2015; Werner 2011; Díaz-Esteban et al. 2020; Luo et al. 2020).

2.2.1 Mielke measure (M-Score)

The Mielke measure or M-Score (Mielke Jr 1991; Watterson 1996; Watterson et al. 2014; Watterson 2015) is a non-dimensional metric used to represent the skill of models. It has been widely applied to climate variables such as wave parameters (Hemer and Trenham 2016), sea surface temperature, precipitation, and mean sea-level pressure (Katzfey et al. 2016; Bador et al. 2015; Elguindi et al. 2014). The M-Score is calculated using Eq. 1 as:

\[ M = \frac{2}{\pi}\arcsin\left[1 - \frac{\mathrm{MSE}}{V_x + V_y + \left(G_x - G_y\right)^2}\right] \]

where x corresponds to the modelled (GCM) field, y is the observed field (ERA5 reanalysis), V denotes the spatial variance, and G is the spatial mean of the variable (SWH) over the domain considered. The M-Score is thus based on the mean square error (MSE), non-dimensionalized by the spatial variances of the two fields and the squared difference of their means. The arcsin transformation makes the score behave like the square root of the MSE rather than the MSE itself, which is particularly useful when the correlation coefficient is close to one. The skill scores calculated in this study lie within 0–800, where zero would indicate no skill. After interpolating the x and y fields to the same grid, the scores were calculated for the 8 GCM forced wave simulations against the ERA5 data. The historical period 1979–2005 is used as the common period for all statistical measures.
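A minimal sketch of the M-Score computation follows, assuming the standard Watterson (1996) form M = (2/π) arcsin[1 − MSE / (V_x + V_y + (G_x − G_y)²)] with population variances, and a scaling factor of 1000. The scaling is an assumption suggested by score values such as 765 and 768 quoted in this study. The function name `m_score` is hypothetical; inputs are flattened, co-located grid-point values after regridding.

```python
import math
from statistics import fmean, pvariance


def m_score(model, ref, scale=1000.0):
    """Watterson-type M-Score of a model field against a reference field.

    model, ref: equal-length sequences of collocated values (e.g. SWH at all
    grid points of a sub-domain). Returns
    scale * (2/pi) * arcsin(1 - MSE / (V_x + V_y + (G_x - G_y)**2)).
    """
    if len(model) != len(ref) or not model:
        raise ValueError("fields must be non-empty and of equal length")
    mse = fmean((x - y) ** 2 for x, y in zip(model, ref))
    g_x, g_y = fmean(model), fmean(ref)                    # spatial means G
    v_x, v_y = pvariance(model, g_x), pvariance(ref, g_y)  # spatial variances V
    denom = v_x + v_y + (g_x - g_y) ** 2
    if denom == 0:  # two identical constant fields
        return scale
    # Clamp guards against rounding pushing the argument just outside [-1, 1].
    arg = max(-1.0, min(1.0, 1.0 - mse / denom))
    return scale * (2.0 / math.pi) * math.asin(arg)


ref = [1.2, 1.8, 2.5, 3.1, 4.0]  # synthetic reference SWH (m) at five grid points
biased = [v + 0.3 for v in ref]  # same spatial pattern with a +0.3 m offset
perfect = m_score(ref, ref)      # ~1000 for a perfect match
offset = m_score(biased, ref)    # lower, because the offset inflates the MSE
```

A uniform offset leaves the spatial pattern intact but still lowers the score, which illustrates the point made in the text that the M-Score penalizes bias without revealing its sign.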

2.2.2 Taylor skill score

The Taylor skill score (Taylor 2001) relates the correlation coefficient and standard deviations of the models to those of the observations. It is a useful tool in climate model evaluation, as documented in many studies (Mohan and Bhaskaran 2019; Hirota and Takayabu 2013; Ito et al. 2020; Kusunoki and Arakawa 2015). The Taylor skill score is calculated as follows:

\[ S = \frac{4\,(1 + R)}{\left(\mathrm{SDR} + 1/\mathrm{SDR}\right)^2 \left(1 + R_0\right)} \]

where R is the correlation coefficient of each model with the reference, \(R_0\) is the maximum attainable correlation coefficient (set to 1 in this analysis), and SDR is the ratio of the standard deviation of each model to that of the observations. A Taylor skill score close to 1 indicates better model skill. Taylor diagrams are presented to show the performance of the GCMs in each sub-domain (Krishnan and Bhaskaran 2020). In the Taylor diagram, the reference dataset (ERA5) lies on the abscissa, the azimuthal angle gives the correlation between each model and the reference, and the radial distance from the origin gives the standard deviation. The centred root mean square difference is proportional to the distance between each GCM point and the reference point (Ogata et al. 2014).
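The skill score can be sketched in a few lines, assuming the Taylor (2001) form S = 4(1 + R) / ((SDR + 1/SDR)² (1 + R0)) with population standard deviations. The helper names `correlation` and `taylor_skill` are hypothetical, and the input series are synthetic.

```python
from statistics import fmean, pstdev


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    m_a, m_b = fmean(a), fmean(b)
    cov = fmean((x - m_a) * (y - m_b) for x, y in zip(a, b))
    return cov / (pstdev(a, m_a) * pstdev(b, m_b))


def taylor_skill(model, ref, r0=1.0):
    """Taylor (2001) skill score S = 4(1 + R) / ((SDR + 1/SDR)**2 (1 + R0))."""
    r = correlation(model, ref)
    sdr = pstdev(model) / pstdev(ref)  # model-to-reference standard deviation ratio
    return 4.0 * (1.0 + r) / ((sdr + 1.0 / sdr) ** 2 * (1.0 + r0))


ref = [1.2, 1.8, 2.5, 3.1, 4.0, 3.3]  # synthetic monthly SWH at one grid point (m)
doubled = [2.0 * v for v in ref]       # perfectly correlated, twice the variability
```

With R0 = 1, a perfect match gives S = 1, while the doubled series, although perfectly correlated, scores 4·2 / (2.5² · 2) = 0.64. This shows how the score penalizes a wrong amplitude of variability even when the phase is right.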

2.2.3 Model Climate Performance Index (MCPI)

The MCPI summarizes the overall performance of a model by averaging its relative errors across the fields and domains of the study (Gleckler et al. 2008; Chen and Sun 2015; Werner 2011). To calculate the MCPI, the root mean square difference (RMSD) between each model and the reference dataset is first estimated as follows:

\[ E_{mfr} = \left[\sum_{t}\sum_{j}\sum_{i} W_{ijt}\left(F_{ijt} - R_{ijt}\right)^2\right]^{1/2} \]

where F is the simulated field; R is the reference field; i, j, and t index longitude, latitude, and time; and W is the weighting factor, normalized so that the weights sum to one. The relative error of each wave simulation is then obtained by comparing its RMSD (\(E_{mfr}\)) with the median of all RMSD values (\( {\overline{E}}_{fr} \)):

\[ E'_{mfr} = \frac{E_{mfr} - \overline{E}_{fr}}{\overline{E}_{fr}} \]

The median of the RMSD values is used instead of the mean to reduce the influence of large errors on the results (Gleckler et al. 2008). Relative errors are calculated for the 8 GCM forced wave simulations against ERA5 data in the four sub-domains. Smaller MCPI values indicate better agreement with the reference data, and a negative value indicates better skill than the typical (median) model (Chen and Sun 2015).
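The three MCPI steps (weighted RMSD, relative error against the median, then averaging) can be sketched as below. This is a minimal illustration, not the study's code: the function names are hypothetical, the weights are normalized inside `weighted_rmsd`, and the cos(latitude) area weighting in the usage lines is an assumption, since the text does not specify the weights.

```python
import math
from statistics import median


def weighted_rmsd(model, ref, weights):
    """RMSD over all flattened (lon, lat, time) points; weights are normalised to sum to one."""
    total = sum(weights)
    sq = sum(w * (f - r) ** 2 for f, r, w in zip(model, ref, weights))
    return math.sqrt(sq / total)


def relative_errors(rmsd_by_model):
    """E' = (E - median E) / median E for every model."""
    typical = median(rmsd_by_model.values())
    return {name: (e - typical) / typical for name, e in rmsd_by_model.items()}


def mcpi(rel_errors):
    """Average one model's relative errors over the fields/domains considered."""
    return sum(rel_errors) / len(rel_errors)


# Hypothetical area weights proportional to cos(latitude) for four points.
lat_of_point = [10.0, 10.0, 40.0, 40.0]
w = [math.cos(math.radians(lat)) for lat in lat_of_point]
```

Because the relative error is measured against the median model, exactly half of the ensemble tends to receive negative values, which is why a negative MCPI is read as "better than typical" rather than as an absolute error level.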

2.2.4 Model Variability Index (MVI)

The Model Variability Index is based on the ratio of simulated to observed variance (Gleckler et al. 2008). The MVI is calculated as

\[ \mathrm{MVI} = \frac{1}{F}\sum_{f=1}^{F}\left(\beta_f - \frac{1}{\beta_f}\right)^2 \]

where \(\beta_f^2\) is the ratio of the model variance to the ERA5 variance for variable f, and F is the total number of variables. A model that replicates the observed variability well has an MVI close to zero. The index identifies model problems such as excessively large or small inter-annual variability (Bao et al. 2014) and is useful for evaluating differences in variability between models and observations (Díaz-Esteban et al. 2020; Luo et al. 2020).
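A minimal sketch of the index follows, assuming the Gleckler et al. (2008) form MVI = (1/F) Σ (β − 1/β)², where β² is the model-to-reference variance ratio computed with population variances. The function name `mvi` is hypothetical; each inner list stands for one variable's series.

```python
import math
from statistics import pvariance


def mvi(model_fields, ref_fields):
    """Model Variability Index: mean over the F variables of (beta - 1/beta)**2,
    where beta**2 is the ratio of model variance to reference variance."""
    terms = []
    for model, ref in zip(model_fields, ref_fields):
        beta = math.sqrt(pvariance(model) / pvariance(ref))
        terms.append((beta - 1.0 / beta) ** 2)
    return sum(terms) / len(terms)
```

The (β − 1/β)² form is symmetric in the sense that doubling or halving the standard deviation gives the same penalty (2.25 in both cases), so too much and too little variability are treated alike.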

Using a single method to assess model skill has limitations. The M-Score does not indicate whether the bias is positive or negative, because the score is based on squared differences (Gu et al. 2015). The MCPI, in turn, does not reveal absolute model errors, because it expresses each model's error relative to the spread of model performance, which is variable specific. The MVI provides an overall index of how well the inter-annual variability is represented (Radić and Clarke 2011). For variables with a large degree of variation, the error shown by normalized Taylor diagrams is smaller than the actual error (Gleckler et al. 2008). Therefore, four different metrics are used to evaluate the performance of each model. The multi-model ensemble mean (MMM) is constructed from the five best-performing models identified by these analyses. The performance of the MMM and the individual models is analyzed and discussed in the following sections.
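Constructing the MMM amounts to a point-by-point average over the selected members. The sketch below assumes equal weights for the five members named in the study (the text does not state a weighting scheme); the function and constant names are hypothetical, and the fields are synthetic.

```python
MMM_MEMBERS = ("MRI-CGCM3", "ACCESS1.0", "INMCM4", "HadGEM2-ES", "BCC-CSM1.1")


def multi_model_mean(fields_by_model, members=MMM_MEMBERS):
    """Equal-weight, point-by-point mean of the selected models' SWH fields."""
    selected = [fields_by_model[name] for name in members]
    return [sum(values) / len(selected) for values in zip(*selected)]


# Synthetic two-point fields (not COWCLIP data); CNRM-CM5 is not a member.
fields = {name: [k, 2.0 * k] for k, name in enumerate(MMM_MEMBERS, start=1)}
fields["CNRM-CM5"] = [100.0, 100.0]
mmm = multi_model_mean(fields)
```

The deliberately extreme CNRM-CM5 values do not affect the result, because only the listed members enter the average.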

3 Results and discussions

3.1 Spatial analysis of GCM forced wave simulations

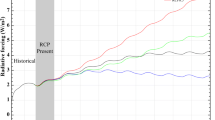

The monthly mean GCM simulated SWH is compared with the ERA5 reanalysis over 26 years (1979–2005). Figure 2 illustrates that the wave simulations by MRI-CGCM3 and INMCM4 reproduce a spatial pattern of SWH similar to that of ERA5. In addition, HadGEM2-ES and ACCESS1.0 reproduce the SWH maximum over the eastern SIO. CNRM-CM5 grossly underestimates SWH over the whole IO compared with ERA5. In ERA5, SWH ranges between 0 and 2 m in the North Indian Ocean (NIO), 2 and 3.5 m in the Tropical Indian Ocean (TIO), and 3.5 and 5 m in the South Indian Ocean (SIO). The MMM constructed from the best-performing models reproduces a pattern similar to that of the reference dataset.

The standard deviation (STD) of a variable represents its dispersion about the mean (Dobrynin et al. 2012; Kumar et al. 2020). Figure 3 shows that the models capture the locations of high STD (the north-western AS and the SIO). The STD distribution of ACCESS1.0 closely matches that of ERA5. MIROC5, on the other hand, simulates a maximum STD of around 1.2 m over the north-western AS, an overestimation of about 0.2 m. As mentioned earlier, the CNRM-CM5 forced SWH shows a larger underestimation over the SIO region.

Bias in climate model simulations has been discussed widely (Krishnan and Bhaskaran 2019a; Xu et al. 2014). Figure 4 shows that most of the GCM forced simulations overestimate SWH relative to ERA5, by up to 1 m. The models show the highest bias over the AS, the equatorial IO, and the south-western SIO. The slightly positive bias over the western TIO is attributed to waves generated by the trade winds and is not related to model resolution (Hemer and Trenham 2016). An underestimation of SWH of 1–1.5 m is depicted over the eastern SIO. Compared with the individual models, the MMM shows a smaller bias over the IO as a whole. The accumulated bias in the wave simulations is attributed to uncertainty in the wave model as well as in the input GCM fields (Hemer and Trenham 2016). CMIP5 GCMs are reported to carry biases arising from model parametrizations and model physics (Ma et al. 2014). Bias errors in a specific region can be linked with phenomena occurring at distant locations (Wang et al. 2014). Nayak et al. (2013) reported that remotely forced long waves generated in the Southern Ocean influence the east coast of India. The wave climate of the SIO comprises both swells and wind-seas generated by the trade wind system, and the biases accumulated in these waves can be spread by swell propagation (Lee et al. 2013). The variations in SWH over the SIO can therefore be attributed to the lower skill of climate models in resolving the complexity of this region.
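The per-grid-point quantities behind the STD and bias maps can be sketched as follows. The sketch assumes that bias is model minus ERA5 (so a positive value means overestimation, as in the text) and that the STD is the population standard deviation of the monthly series. The function names and the grid-point key are hypothetical, and the series are synthetic.

```python
from statistics import fmean, pstdev


def grid_point_stats(model_series, ref_series):
    """Mean bias (model - reference) and temporal STDs at one grid point."""
    bias = fmean(m - r for m, r in zip(model_series, ref_series))
    return {"bias": bias, "std_model": pstdev(model_series), "std_ref": pstdev(ref_series)}


def stats_maps(model, ref):
    """model/ref: {(lat, lon): monthly SWH series}. Returns per-point statistics."""
    return {pt: grid_point_stats(model[pt], ref[pt]) for pt in ref}


point = (10.5, 65.5)  # hypothetical grid point in the AS
maps = stats_maps({point: [1.5, 2.0, 2.5, 3.0]}, {point: [1.0, 1.5, 2.0, 2.5]})
stats = maps[point]
```

A constant +0.5 m offset shows up entirely in the bias while leaving the STD unchanged, which is why the STD and bias maps carry complementary information.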

The Taylor skill scores of each GCM forced wave simulation and of the MMM are shown in Fig. 5. The Taylor skill reflects how well the models reproduce the variability of SWH over the IO domain (Mohan and Bhaskaran 2019; Davini and Cagnazzo 2014; Hirota and Takayabu 2013). A common feature among the models is higher skill (0.8–1.0) over the AS, BoB, and equatorial IO. MIROC5 shows poor skill, close to zero, over the eastern and western TIO and the SIO. The benefit of ensemble averaging is reflected in the MMM, which has the highest Taylor skill score. The lowest skill, over the western TIO, is seen in both the individual models and the MMM.

3.2 Performance evaluation metrics (sub-domain analysis)

In addition to the spatial variability analysis, a detailed investigation of the performance of the individual GCMs over each sub-domain is required. A recent study by Sreelakshmi and Bhaskaran (2020a) established that the highest wave activity occurs over the extra-tropical SIO and the lowest over the AS. Wave period data from CMIP5 and CORDEX datasets showed an overestimation for the SIO region (Chowdhury and Behera 2019). The specific behaviour of the wave climate in the IO domain therefore demands verification of model competency over each sub-domain. The bias and Taylor skill analyses show that the differences between the GCM modelled SWH and ERA5 vary across the AS, BoB, TIO, and SIO regions. Accordingly, performance metrics such as the Taylor diagram, M-Score, MCPI, and MVI are calculated for each IO sub-domain.

First, the Mielke measure (M-Score) is used to rank the GCM forced wave simulations. Hemer and Trenham (2016) obtained the largest M-Score (765) for the full global domain, showing that the wave fields simulated by the COWCLIP models reproduce the global structure relatively well. A detailed analysis of individual sectors provides additional confidence in choosing the best model. The M-Score is calculated for the 8 GCM forced wave simulations and the MMM over the four sub-domains (shown in Fig. 1). In Fig. 6, the BoB shows the highest similarity between the MMM and ERA5 (M-Score of 768) among the four sub-domains. In the three domains other than the AS, the MMM shows higher skill than any individual model. For the AS domain, the wave simulations by the best-performing models HadGEM2-ES, BCC-CSM1.1, and ACCESS1.0 show higher skill than the MMM. These models have M-Scores greater than 600 for the AS, BoB, and SIO, but low skill over the TIO. The MMM shows comparatively low M-Scores for the TIO (633) and SIO (640). CNRM-CM5 (with the lowest M-Score, 179, for the TIO) consistently underperforms in the analysis, as was also noted for the global simulations (Hemer and Trenham 2016). In contrast, CNRM-CM5 performs moderately well for the AS (M-Score of 576). MIROC5 also shows a notably higher score for the AS than for the other three domains. The M-Score analysis reflects the good performance of the MMM in the four sub-domains and suggests that the GCM forced simulations reproduce the wave field structure of the IO reasonably well.

After determining the M-Score, independent checks were performed on the GCM forced wave simulations to ascertain the quality of the wave data. The MCPI measures the relative error derived from the root mean square errors between the simulated and observed fields, while the MVI evaluates the variance of the fields. Figure 7 shows the MCPI and MVI values, demonstrating the spread in model performance and model variability for each domain. The smaller the MCPI and MVI values, the better the model performance (Gleckler et al. 2008). As with the M-Score, the BoB shows the best agreement, with the smallest values of both indices. HadGEM2-ES, BCC-CSM1.1, and ACCESS1.0 show a substantial degree of skill for the AS domain. The INMCM4 wave simulations are weaker for the AS and BoB. CNRM-CM5 underperforms in the TIO and SIO domains. For the BoB, TIO, and SIO domains, the MMM outperforms the individual models. GFDL-CM3, BCC-CSM1.1, and ACCESS1.0 display the lowest skill for the SIO domain. The MMM has higher MVI values than the best individual models for the AS, TIO, and SIO regions. The negative MCPI values of the MMM over the BoB, TIO, and SIO regions indicate better skill than the typical model (Chen and Sun 2015).

The Taylor diagram (Taylor 2001) represents the spread of the models in terms of the correlation coefficient (CC), normalized root mean square error (RMSE), and normalized standard deviation (STD). Taylor diagrams are widely used to analyse CMIP5 model skill (Krishnan and Bhaskaran 2019b; Miao et al. 2014; Semedo et al. 2018). For the BoB domain, the MMM shows better results (higher CC and lower RMSE) than the individual models (Fig. 8), and its standard deviation is closer to that of ERA5. For the SIO domain, the MMM exhibits a higher STD than the reference. The correlation coefficient is highest in the BoB and lowest in the TIO and SIO domains. The Taylor skill score of the MMM in the AS domain lies between the scores of the other three domains. The RMSE is lower in the BoB and TIO regions than in the other two regions. The analysis shows that the MMM is more effective than the individual GCM forced simulations.
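The geometry of the Taylor diagram rests on a law-of-cosines identity between the normalized STD, the correlation, and the normalized centred RMS difference (Taylor 2001). The sketch below computes the three statistics for a synthetic model and reference series and checks that identity. The function name `taylor_stats` is hypothetical, and population statistics are assumed.

```python
import math
from statistics import fmean, pstdev


def taylor_stats(model, ref):
    """Normalised Taylor-diagram statistics of a model series against a reference."""
    m_f, m_r = fmean(model), fmean(ref)
    s_f, s_r = pstdev(model, m_f), pstdev(ref, m_r)
    corr = fmean((f - m_f) * (r - m_r) for f, r in zip(model, ref)) / (s_f * s_r)
    # Centred RMS difference: anomalies about each series' own mean are compared.
    crmsd = math.sqrt(fmean(((f - m_f) - (r - m_r)) ** 2 for f, r in zip(model, ref)))
    return {"std": s_f / s_r, "corr": corr, "crmsd": crmsd / s_r}


s = taylor_stats([1.0, 3.0, 2.0, 5.0, 4.0], [2.0, 2.5, 3.0, 4.5, 3.5])
# Law of cosines underlying the diagram (normalised): E'^2 = sigma^2 + 1 - 2 sigma R.
lhs = s["crmsd"] ** 2
rhs = s["std"] ** 2 + 1.0 - 2.0 * s["std"] * s["corr"]
```

Because only anomalies enter the centred RMS difference, a model's mean bias is invisible on the diagram. This is one reason the study complements it with bias maps and the other metrics.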

The performance evaluation metrics (M-Score, MCPI, MVI, and Taylor skill) are summarized in Fig. 9. Each performance index is standardized to 0–1 to facilitate comparison of the skill of the GCM forced simulations. Performance scores vary by domain and model, although a few models perform well in all domains. The M-Score is highest for HadGEM2-ES in the AS domain, whereas the MMM dominates in the other three domains. The Taylor skill score is highest for the MMM, consistently in all four domains. In terms of MCPI, GFDL-CM3 outperforms the MMM for the AS and BoB, whereas ACCESS1.0, MRI-CGCM3, INMCM4, and BCC-CSM1.1 are better than the MMM for the TIO domain. In the SIO region, the MMM shows notably higher skill than the individual ensemble members. In terms of MVI, HadGEM2-ES, ACCESS1.0, and BCC-CSM1.1 show higher skill than the MMM in the AS and SIO.
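The text states that each index is standardized to 0–1 but not how. One plausible scheme is sketched below as an assumption: min-max scaling, with MCPI and MVI inverted because smaller values are better for them, so that 1 always marks the best model. The function name and the scores in the usage lines are hypothetical, not the study's values.

```python
def standardize(scores, higher_is_better=True):
    """Min-max rescale {model: score} to 0-1 so that 1 always marks the best model."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:  # all models tie
        return {name: 1.0 for name in scores}
    scaled = {name: (s - lo) / (hi - lo) for name, s in scores.items()}
    return scaled if higher_is_better else {n: 1.0 - v for n, v in scaled.items()}


# Hypothetical scores for three models in one sub-domain.
m = standardize({"A": 600.0, "B": 700.0, "C": 800.0})                      # M-Score: higher is better
e = standardize({"A": -0.2, "B": 0.0, "C": 0.3}, higher_is_better=False)  # MCPI: lower is better
```

Min-max scaling is relative to the models being compared, so standardized values are comparable within a sub-domain but not across sub-domains.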

Figure 10a, b summarizes the Total Performance Index (TPI) of each model in the four sub-domains. The TPI values represent the average skill of each model in representing SWH on monthly and seasonal scales. Over the BoB domain, the MMM outperforms the individual models for seasonal SWH, as it does for monthly SWH. The monthly analysis shows better skill of BCC-CSM1.1 and MIROC5 for the AS domain, whereas ACCESS1.0 dominates in the seasonal analysis. Unlike the other domains, the TIO and SIO favour INMCM4 and the MMM for the monthly means and HadGEM2-ES and ACCESS1.0 for the seasonal means. In the seasonal analysis of SWH, HadGEM2-ES, ACCESS1.0, and the MMM perform best among the available models.
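The study describes the TPI as the average skill of each model but gives no formula. The sketch below assumes the TPI is the equal-weight mean of a model's four standardized metric scores (each in 0–1 with 1 = best) and ranks models by it. The function name and the input scores are hypothetical.

```python
from statistics import fmean


def total_performance_index(standardized):
    """standardized: {metric: {model: 0-1 score, 1 = best}}.

    Returns (model, TPI) pairs sorted from best to worst, with TPI taken as the
    equal-weight mean of the model's standardized scores."""
    models = next(iter(standardized.values())).keys()
    tpi = {m: fmean(scores[m] for scores in standardized.values()) for m in models}
    return sorted(tpi.items(), key=lambda item: item[1], reverse=True)


# Hypothetical standardized scores for one sub-domain.
ranking = total_performance_index({
    "M-Score": {"A": 1.0, "B": 0.0},
    "Taylor": {"A": 0.5, "B": 1.0},
    "MCPI": {"A": 0.75, "B": 0.5},
    "MVI": {"A": 0.25, "B": 0.5},
})
```

An equal-weight mean lets one strong metric compensate for a weak one; the same function could be run on monthly and on seasonal scores to produce the two kinds of ranking discussed in the text.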

A few models (HadGEM2-ES, ACCESS1.0, and BCC-CSM1.1) show outstanding performance in this study. The study also supports the competency of the MMM constructed from five selected models (MRI-CGCM3, ACCESS1.0, INMCM4, HadGEM2-ES, and BCC-CSM1.1) over the IO. The Fourth Assessment Report of the IPCC (Randall et al. 2007) noted that the MMM reduces the biases of individual models, retaining only the pervasive errors. GCM forced wave simulations are controlled mainly by the quality of the input wind forcing, which requires sufficient resolution to represent the storm systems that generate surface waves (Hemer and Trenham 2016). The variability in the wind input can therefore be considered a significant factor behind the differences in skill among the wave simulations. Inadequate representation of atmospheric components in a GCM directly affects its near-surface wind speed (Morim et al. 2020). Model sensitivity to uncertainty in the inputs was reported by Krishnan and Bhaskaran (2019a, 2019b, 2020), whose analysis found HadGEM2-ES, ACCESS1.0, and MIROC5 to be the best-performing GCMs for near-surface wind speed over the BoB. A similarly improved performance was noticed in the wave model output forced by the same GCMs, although this correspondence does not hold for all IO sub-domains. Model performance can also vary depending on whether the models and observations agree on external natural influences (e.g. extreme events, ENSO) (De Winter et al. 2013). In this context, Krishnan and Bhaskaran (2019b) noticed that during a few cyclone cases in the BoB, the maximum wind speed simulated by the GCMs underestimated the in situ records from RAMA buoys. 
The bias in the GCM forced wave simulations accumulates primarily over the SIO and can be attributed to the modelling of the sea-swell systems in that region. Swells generated in the SIO propagate towards the North Indian Ocean (NIO) and affect the wind-wave climate of the AS and BoB (Young 1999; Alves 2006; Sabique et al. 2012). In any region, the wind seas generated by local winds are further modified through interaction with these swells (Hanson and Phillips 1999). Vethamony et al. (2013) reported that swells generated near 40° S propagate directly to the BoB without affecting the AS. Nayak et al. (2013) likewise described the propagation of swells from the SIO and their role in modifying the local wind waves along the east coast of India. Besides the swells originating from the SIO, Shamal swells generated near the Arabian Peninsula reach the west coast of India and modify the wave climate there (Aboobacker et al. 2011a, 2011b). Another wave system, the Makran swells, propagates towards the eastern and western parts of the Arabian Sea (Anoop et al. 2020). All these swell systems, their propagation, and their interaction with local wave conditions determine the resultant wind-wave climate of the IO region. Therefore, the weaknesses of the GCM forced wave simulations can be attributed to several causes: the inability of the GCM forcing to reproduce these conditions, the limitations of the wave model in simulating the wave fields, and the intricate complexity of the study area.
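
The remote influence of SIO swells on the NIO can be made concrete with linear deep-water wave theory, in which energy travels at the group velocity c_g = gT/(4π) for wave period T. The sketch below estimates how long swell takes to travel from 40° S (the generation latitude cited from Vethamony et al. 2013) to an assumed target latitude of 15° N in the BoB. The straight meridional path, the chosen periods, and the neglect of dissipation, refraction, and currents are simplifying assumptions made only for illustration.

```python
import math

G = 9.81  # gravitational acceleration (m s^-2)
EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def deep_water_group_velocity(period_s):
    """Linear deep-water group velocity c_g = g T / (4 pi), in m/s."""
    return G * period_s / (4.0 * math.pi)


def meridional_distance_km(lat_start_deg, lat_end_deg):
    """Great-circle distance along a meridian between two latitudes (km)."""
    return EARTH_RADIUS_KM * math.radians(abs(lat_end_deg - lat_start_deg))


def swell_travel_time_days(period_s, lat_start_deg, lat_end_deg):
    """Travel time (days) at the deep-water group velocity along a meridian."""
    distance_m = meridional_distance_km(lat_start_deg, lat_end_deg) * 1000.0
    return distance_m / deep_water_group_velocity(period_s) / 86400.0


# Assumed path: 40 deg S to 15 deg N (about 6100 km); periods are illustrative.
for period in (10.0, 14.0, 18.0):
    cg = deep_water_group_velocity(period)
    days = swell_travel_time_days(period, -40.0, 15.0)
    print(f"T = {period:4.1f} s  c_g = {cg:5.2f} m/s  travel time = {days:4.1f} days")
```

Under these assumptions a 14 s swell needs roughly six and a half days to cover the distance, and longer-period swell arrives sooner. Swell reaching the BoB therefore reflects wind conditions in the SIO about a week earlier, so errors in the GCM winds over the SIO can propagate into the simulated wave climate far to the north.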

4 Conclusions

The historical wave climate of the Indian Ocean was evaluated using the simulations produced under the COWCLIP project. Performance metrics including the Taylor skill score, M-Score, MCPI, and MVI were employed for the analysis, and the GCM forced wave simulations were compared with significant wave heights from the ERA5 reanalysis. This study demonstrates that the skill of the GCM forced wave simulations varies by region. High Taylor skill (0.8–1.0) was evident over the AS, BoB, and the equatorial IO. Among the four sub-domains, the BoB showed the highest similarity between the MMM and ERA5 (M-Score of 768). Based on MCPI and MVI, HadGEM2-ES, ACCESS1.0, and BCC-CSM1.1 outperformed the MMM over the AS and SIO, whereas CNRM-CM5 consistently underperformed. Based on the skill statistics, the multi-model mean was constructed from the five best-performing models (MRI-CGCM3, ACCESS1.0, INMCM4, HadGEM2-ES, and BCC-CSM1.1). The largest inter-model differences occurred over the SIO, which is one of the swell generation areas of the IO. These SWH differences can be attributed to the limited skill of the climate models in resolving the complex wave conditions over this region. More generally, the model biases can be attributed to weaknesses of the GCM forcing in model physics, parametrization, and resolution. The present study provides a first-order analysis of the skill of each GCM forced wave simulation and leverages the advantages of the multi-model mean. A sound understanding of how well the models reproduce the historical wave climate provides confidence in employing them for future wave climate studies. The wave simulations produced under the COWCLIP project include only a limited number of ensemble members; further studies will incorporate additional ensemble members as they become available, together with the corresponding wave height projections for the IO region.
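
The metric definitions are given earlier in the paper. The sketch below shows commonly cited forms of three of them and is not a reproduction of the authors' code. The Taylor skill uses the form from Taylor (2001), S = 4(1 + R) / [(σ̂ + 1/σ̂)² (1 + R₀)], where σ̂ is the ratio of simulated to reference standard deviation and R₀ is taken as 1. The M-Score follows Watterson (1996), M = (2/π) arcsin[1 − MSE / (V_X + V_Y + (G_X − G_Y)²)], with V the variances and G the means. The quoted value of 768 suggests a 0–1000 scaling, which is assumed here. The MVI is taken as the mean of (β − 1/β)² with β = σ_model/σ_ref, one definition used in the CMIP evaluation literature. MCPI is not sketched because its definition is not restated in this section. The input series are hypothetical.

```python
import math
from statistics import mean, pstdev, pvariance


def pearson_r(sim, ref):
    """Pearson correlation using population (1/N) moments."""
    ms, mr = mean(sim), mean(ref)
    cov = mean((s - ms) * (r - mr) for s, r in zip(sim, ref))
    return cov / (pstdev(sim) * pstdev(ref))


def taylor_skill(sim, ref, r0=1.0):
    """Taylor (2001) skill S = 4(1+R) / ((sd_ratio + 1/sd_ratio)^2 (1+R0)); 1 is perfect."""
    sd_ratio = pstdev(sim) / pstdev(ref)
    r = pearson_r(sim, ref)
    return 4.0 * (1.0 + r) / ((sd_ratio + 1.0 / sd_ratio) ** 2 * (1.0 + r0))


def m_score(sim, ref, scale=1000.0):
    """Watterson (1996) M = (2/pi) asin(1 - MSE / (Vx + Vy + (Gx - Gy)^2)), times `scale`."""
    mse = mean((s - r) ** 2 for s, r in zip(sim, ref))
    denom = pvariance(sim) + pvariance(ref) + (mean(sim) - mean(ref)) ** 2
    return scale * (2.0 / math.pi) * math.asin(1.0 - mse / denom)


def model_variability_index(sims, refs):
    """Mean over grid points of (beta - 1/beta)^2, beta = sd_sim / sd_ref; 0 is perfect."""
    terms = []
    for sim, ref in zip(sims, refs):
        beta = pstdev(sim) / pstdev(ref)
        terms.append((beta - 1.0 / beta) ** 2)
    return mean(terms)


ref = [1.2, 1.8, 2.5, 2.1, 1.4, 1.0]  # hypothetical monthly SWH (m) at one grid point
sim = [1.1 * x + 0.05 for x in ref]   # hypothetical model: 10 % too variable, small offset
print(f"Taylor skill: {taylor_skill(sim, ref):.3f}")
print(f"M-Score:      {m_score(sim, ref):.0f}")
print(f"MVI:          {model_variability_index([sim], [ref]):.4f}")
```

The three metrics emphasize different aspects of performance. The Taylor skill rewards correlation and matching spread. The M-Score also penalizes the mean bias through the MSE. The MVI looks only at variability, which is why a model can rank differently under each metric.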

References

Aboobacker VM, Rashmi R, Vethamony P, Menon HB (2011a) On the dominance of pre-existing swells over wind seas along the west coast of India. Cont Shelf Res 31(16):1701–1712. https://doi.org/10.1016/j.csr.2011.07.010

Aboobacker VM, Vethamony P, Rashmi R (2011b) “Shamal” swells in the Arabian Sea and their influence along the west coast of India. Geophys Res Lett 38(3):1–7. https://doi.org/10.1029/2010GL045736

Aboobacker VM, Shanas PR, Al-Ansari EMAS, Sanil Kumar V, Vethamony P (2021) The maxima in northerly wind speeds and wave heights over the Arabian Sea, the Arabian/Persian Gulf and the Red Sea derived from 40 years of ERA5 data. Clim Dyn 56:1037–1052. https://doi.org/10.1007/s00382-020-05518-6

Alves JHGM (2006) Numerical modeling of ocean swell contributions to the global wind-wave climate. Ocean Model 11:98–122. https://doi.org/10.1016/j.ocemod.2004.11.007

Anoop TR, Shanas PR, Aboobacker VM, Kumar VS, Nair LS, Prasad R, Reji S (2020) On the generation and propagation of Makran swells in the Arabian Sea. Int J Climatol 40(1):585–593. https://doi.org/10.1002/joc.6192

Bador M, Boé J, Terray L, Alexander LV (2015) Impact of higher spatial atmospheric resolution on precipitation extremes over land in global climate models. J Geophys Res-Atmos 1–23. https://doi.org/10.1029/2019JD032184

Bao Y, Gao Y, Lü S, Wang Q, Zhang S, Xu J, Li R, Li S, Ma D, Meng X, Chen H, Chang Y (2014) Evaluation of CMIP5 earth system models in reproducing leaf area index and vegetation cover over the Tibetan plateau. J Meteorol Res 28(6):1041–1060. https://doi.org/10.1007/s13351-014-4023-5

Bidlot JR (2012) Present status of wave forecasting at ECMWF. In: ECMWF Workshop on Ocean Waves, 25–27 June 2012

Bricheno LM, Wolf J (2018) Future wave conditions of Europe, in response to high-end climate change scenarios. J Geophys Res Oceans 123:8762–8791. https://doi.org/10.1029/2018JC013866

Bruno MF, Molfetta MG, Totaro V, Mossa M (2020) Performance assessment of ERA5 wave data in a swell dominated region. J Mar Sci Eng 8(3). https://doi.org/10.3390/jmse8030214

Casas-Prat M, Wang XL, Swart N (2018) CMIP5-based global wave climate projections including the entire Arctic Ocean. Ocean Model 123:66–85

Chen H, Sun J (2015) Assessing model performance of climate extremes in China: an intercomparison between CMIP5 and CMIP3. Clim Chang 129(1–2):197–211. https://doi.org/10.1007/s10584-014-1319-5

Chen CA, Hsu HH, Liang HC (2021) Evaluation and comparison of CMIP6 and CMIP5 model performance in simulating the seasonal extreme precipitation in the Western North Pacific and East Asia. Weather Clim Extremes 31:100303

Chowdhury P, Behera MR (2019) Evaluation of CMIP5 and CORDEX derived wave climate in Indian Ocean. Clim Dyn 52(7–8):4463–4482. https://doi.org/10.1007/s00382-018-4391-0

Chowdhury P, Behera MR, Reeve DE (2019) Wave climate projections along the Indian coast. Int J Climatol 39(11):4531–4542

Church JA, White NJ, Hunter JR (2006) Sea-level rise at tropical Pacific and Indian Ocean islands. Glob Planet Chang 53(3):155–168

Davini P, Cagnazzo C (2014) On the misinterpretation of the North Atlantic Oscillation in CMIP5 models. Clim Dyn 43(5–6):1497–1511. https://doi.org/10.1007/s00382-013-1970-y

De Winter RC, Sterl A, Ruessink BG (2013) Wind extremes in the North Sea Basin under climate change: an ensemble study of 12 CMIP5 GCMs. J Geophys Res-Atmos 118(4):1601–1612

Díaz-Esteban Y, Raga GB, Rodríguez OOD (2020) A weather-pattern-based evaluation of the performance of CMIP5 models over Mexico. Climate 8(1):1–18. https://doi.org/10.3390/cli8010005

Dobrynin M, Murawski J, Yang S (2012) Evolution of the global wind wave climate in CMIP5 experiments. Geophys Res Lett 39(17):2–7. https://doi.org/10.1029/2012GL052843

Dullaart JCM, Muis S, Bloemendaal N, Aerts JCJH (2020) Advancing global storm surge modelling using the new ERA5 climate reanalysis. Clim Dyn 54(1–2):1007–1021. https://doi.org/10.1007/s00382-019-05044-0

Elguindi N, Giorgi F, Turuncoglu U (2014) Assessment of CMIP5 global model simulations over the subset of CORDEX domains used in the Phase I CREMA. Clim Chang 125(1):7–21

Fan X, Miao C, Duan Q, Shen C, Wu Y (2020) The performance of CMIP6 versus CMIP5 in simulating temperature extremes over the global land surface. J Geophys Res-Atmos 125(18):e2020JD033031

Gallagher S, Gleeson E, Tiron R, McGrath R, Dias F (2016) Twenty-first century wave climate projections for Ireland and surface winds in the North Atlantic Ocean. Adv Sci Res 13:75–80. https://doi.org/10.5194/asr-13-75-2016

Gleckler PJ, Taylor KE, Doutriaux C (2008) Performance metrics for climate models. J Geophys Res-Atmos 113(D6)

Gleixner S, Demissie T, Diro GT (2020) Did ERA5 improve temperature and precipitation reanalysis over East Africa? Atmosphere 11(9):996

Gu H, Yu Z, Wang J, Wang G, Yang T, Ju Q, Yang C, Xu F, Fan C (2015) Assessing CMIP5 general circulation model simulations of precipitation and temperature over China. Int J Climatol 35(9):2431–2440

Gupta N, Bhaskaran PK, Dash MK (2015) Recent trends in wind-wave climate for the Indian Ocean. Curr Sci 25:2191–2201

Hanson JL, Phillips OM (1999) Wind sea growth and dissipation in the open ocean. J Phys Oceanogr 29(8):1633–1648

Hazeleger W, Wang X, Severijns C, Ştefănescu S, Bintanja R, Sterl A, Wyser K, Semmler T, Yang S, Van den Hurk B, Van Noije T (2012) EC-Earth V2.2: description and validation of a new seamless earth system prediction model. Clim Dyn 39(11):2611–2629

He Y, Wang K, Feng F (2021) Improvement of ERA5 over ERA-Interim in simulating surface incident solar radiation throughout China. J Clim 34(10):3853–3867. https://doi.org/10.1175/JCLI-D-20-0300.1

Hemer MA (2010) Historical trends in Southern Ocean storminess: long-term variability of extreme wave heights at Cape Sorell, Tasmania. Geophys Res Lett 37. https://doi.org/10.1029/2010gl044595

Hemer MA, Katzfey J, Trenham CE (2013) Global dynamical projections of surface ocean wave climate for a future high greenhouse gas emission scenario. Ocean Model 70:221–245. https://doi.org/10.1016/j.ocemod.2012.09.008

Hemer MA, Trenham CE (2016) Evaluation of a CMIP5 derived dynamical global wind wave climate model ensemble. Ocean Model 103:190–203

Hemer MA, Wang XL, Weisse R, Swail VR (2012) Advancing wind-waves climate science: the COWCLIP project. Bull Am Meteorol Soc 93(6):791–796

Hemer MA, Fan Y, Mori N, Semedo A, Wang XL (2013) Projected changes in wave climate from a multi-model ensemble. Nat Clim Chang 3(5):471–476

Hersbach H, Bell B, Berrisford P, Hirahara S, Horányi A, Muñoz-Sabater J, Nicolas J, Peubey C, Radu R, Schepers D, Simmons A, Soci C, Abdalla S, Abellan X, Balsamo G, Bechtold P, Biavati G, Bidlot J, Bonavita M, De Chiara G, Dahlgren P, Dee D, Diamantakis M, Dragani R, Flemming J, Forbes R, Fuentes M, Geer A, Haimberger L, Healy S, Hogan RJ, Hólm E, Janisková M, Keeley S, Laloyaux P, Lopez P, Lupu C, Radnoti G, de Rosnay P, Rozum I, Vamborg F, Villaume S, Thépaut JN (2020) The ERA5 global reanalysis. Q J R Meteorol Soc 146(730):1999–2049. https://doi.org/10.1002/qj.3803

Hirota N, Takayabu YN (2013) Reproducibility of precipitation distribution over the tropical oceans in CMIP5 multi-climate models compared to CMIP3. Clim Dyn 41(11–12):2909–2920. https://doi.org/10.1007/s00382-013-1839-0

Ito R, Shiogama H, Nakaegawa T, Takayabu I (2020) Uncertainties in climate change projections covered by the ISIMIP and CORDEX model subsets from CMIP5. Geosci Model Dev 13(3):859–872. https://doi.org/10.5194/gmd-13-859-2020

Janssen PAEM, Bidlot JR (2009) On the extension of the freak wave warning system and its verification. ECMWF Technical Memorandum 588, 44 pp

Kamranzad B, Mori N (2018) Regional wave climate projection based on super-high-resolution MRI-AGCM3.2S, Indian Ocean. J Jpn Soc Civil Eng Ser B2 (Coast Eng) 74:I_1351–I_1355. https://doi.org/10.2208/kaigan.74.i_1351

Kamranzad B, Mori N (2019) Future wind and wave climate projections in the Indian Ocean based on a super-high-resolution MRI-AGCM3.2S model projection. Clim Dyn 53(3):2391–2410. https://doi.org/10.1007/s00382-019-04861-7

Kamranzad B, Mori N, Shimura T (2017) Spatio-temporal wave climate using nested numerical wave modeling in the northern Indian Ocean. https://doi.org/10.13140/RG.2.2.13908.45448

Katzfey J, Nguyen K, McGregor J, Hoffmann P, Ramasamy S, Van Nguyen H, Van Khiem M (2016) High-resolution simulations for Vietnam - methodology and evaluation of current climate. Asia-Pac J Atmos Sci 52(2):91–106. https://doi.org/10.1007/s13143-016-0011-2

Krishnan A, Bhaskaran PK (2019a) CMIP5 wind speed comparison between satellite altimeter and reanalysis products for the Bay of Bengal. Environ Monit Assess 191:554. https://doi.org/10.1007/s10661-019-7729-0

Krishnan A, Bhaskaran PK (2019b) Performance of CMIP5 wind speed from global climate models for the Bay of Bengal region. Int J Climatol 40:3398–3416. https://doi.org/10.1002/joc.6404

Krishnan A, Bhaskaran PK (2020) Skill assessment of global climate model wind speed from CMIP5 and CMIP6 and evaluation of projections for the Bay of Bengal. Clim Dyn 55(9–10):2667–2687. https://doi.org/10.1007/s00382-020-05406-z

Kumar VS, George J, Joseph D (2020) Hourly maximum individual wave height in the Indian shelf seas—its spatial and temporal variations in the recent 40 years. Ocean Dyn 70(10):1283–1302. https://doi.org/10.1007/s10236-020-01395-z

Kusunoki S, Arakawa O (2015) Are CMIP5 models better than CMIP3 models in simulating precipitation over East Asia? J Clim 28(14):5601–5621. https://doi.org/10.1175/JCLI-D-14-00585.1

Lee T, Waliser DE, Li JLF, Landerer FW, Gierach MM (2013) Evaluation of CMIP3 and CMIP5 wind stress climatology using satellite measurements and atmospheric reanalysis products. J Clim 26(16):5810–5826. https://doi.org/10.1175/JCLI-D-12-00591.1

Luo N, Guo Y, Gao Z, Chen K, Chou J (2020) Assessment of CMIP6 and CMIP5 model performance for extreme temperature in China. Atmos Ocean Sci Lett 13(6):589–597. https://doi.org/10.1080/16742834.2020.1808430

Ma HY, Xie S, Klein SA, Williams KD, Boyle JS, Bony S, Douville H, Fermepin S, Medeiros B, Tyteca S, Watanabe M, Williamson D (2014) On the correspondence between mean forecast errors and climate errors in CMIP5 models. J Clim 27(4):1781–1798. https://doi.org/10.1175/JCLI-D-13-00474.1

Masato G, Hoskins BJ, Woollings T (2013) Winter and summer northern hemisphere blocking in CMIP5 models. J Clim 26(18):7044–7059

Meucci A, Young IR, Hemer M, Kirezci E, Ranasinghe R (2020) Projected 21st century changes in extreme wind-wave events. Sci Adv 6:eaaz7295. https://doi.org/10.1126/sciadv.aaz7295

Miao C, Duan Q, Sun Q, Huang Y, Kong D, Yang T, Ye A, Di Z, Gong W (2014) Assessment of CMIP5 climate models and projected temperature changes over Northern Eurasia. Environ Res Lett 9(5). https://doi.org/10.1088/1748-9326/9/5/055007

Mielke P Jr (1991) The application of multivariate permutation methods based on distance functions in the earth sciences. Earth Sci Rev 31:55–71. http://www.sciencedirect.com/science/article/pii/001282529190042E

Mohan S, Bhaskaran PK (2019) Evaluation of CMIP5 climate model projections for surface wind speed over the Indian Ocean region. Clim Dyn 53(9–10):5415–5435. https://doi.org/10.1007/s00382-019-04874-2

Morim J, Hemer M, Cartwright N, Strauss D, Andutta F (2018) On the concordance of 21st century wind-wave climate projections. Glob Planet Chang 167:160–171

Morim J, Hemer M, Wang XL, Cartwright N, Trenham C, Semedo A, …, Erikson L (2019) Robustness and uncertainties in global multivariate wind-wave climate projections. Nat Clim Chang 9(9):711–718

Morim J, Hemer M, Andutta F, Shimura T, Cartwright N (2020) Skill and uncertainty in surface wind fields from general circulation models: intercomparison of bias between AGCM, AOGCM and ESM global simulations. Int J Climatol 40(5):2659–2673

Nayak S, Bhaskaran PK, Venkatesan R, Dasgupta S (2013) Modulation of local wind-waves at Kalpakkam from remote forcing effects of Southern Ocean swells. Ocean Eng 64:23–35. https://doi.org/10.1016/j.oceaneng.2013.02.010

Ogata T, Ueda H, Inoue T, Hayasaki M, Yoshida A, Watanabe S, Kira M, Ooshiro M, Kumai A (2014) Projected future changes in the Asian monsoon: a comparison of CMIP3 and CMIP5 model results. J Meteorol Soc Jpn 92(3):207–225. https://doi.org/10.2151/jmsj.2014-302

Perez J, Menendez M, Mendez FJ, Losada IJ (2014) Evaluating the performance of CMIP3 and CMIP5 global climate models over the north-east Atlantic region. Clim Dyn 43(9-10):2663–2680. https://doi.org/10.1016/j.ocemod.2015.07.004

Radić V, Clarke GK (2011) Evaluation of IPCC models’ performance in simulating late-twentieth-century climatologies and weather patterns over North America. J Clim 24(20):5257–5274

Randall DA, Wood RA, Bony S, Colman R, Fichefet T, Fyfe J, Kattsov V, Pitman A, Shukla J, Srinivasan J, Stouffer RJ (2007) Climate models and their evaluation. In: Climate change 2007: the physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the IPCC (AR4). Cambridge University Press, pp 589–662

Remya PG, Rabi Ranjan T, Sirisha P, Harikumar R, Balakrishnan Nair TM (2020) Indian Ocean wave forecasting system for wind waves: development and its validation. J Oper Oceanogr 1–16

Rivas MB, Stoffelen A (2019) Characterizing ERA-Interim and ERA5 surface wind biases using ASCAT. Ocean Sci 15(3):831–852. https://doi.org/10.5194/os-15-831-2019

Sabique L, Annapurnaiah K, Balakrishnan Nair TM, Srinivas K (2012) Contribution of Southern Indian Ocean swells on the wave heights in the Northern Indian Ocean - a modelling study. Ocean Eng 43:113–120. https://doi.org/10.1016/j.oceaneng.2011.12.024

Seemanth M, Bhowmick SA, Kumar R, Sharma R (2016) Sensitivity analysis of dissipation parameterizations in a third-generation spectral wave model, WAVEWATCH III for Indian Ocean. Ocean Eng 124:252–273

Semedo A, Dobrynin M, Lemos G, Behrens A, Staneva J, De Vries H, Sterl A, Bidlot JR, Miranda P, Murawski J (2018) CMIP5-derived single-forcing, single-model, and single-scenario wind-wave climate ensemble: configuration and performance evaluation. J Mar Sci Eng. https://doi.org/10.3390/jmse6030090

Sreelakshmi S, Bhaskaran PK (2020a) Regional wise characteristic study of significant wave height for the Indian Ocean. Clim Dyn 54(7–8):3405–3423. https://doi.org/10.1007/s00382-020-05186-6

Sreelakshmi S, Bhaskaran PK (2020b) Spatio-temporal distribution and variability of high threshold wind speed and significant wave height for the Indian Ocean. Pure Appl Geophys. https://doi.org/10.1007/s00024-020-02462-8

Sreelakshmi S, Bhaskaran PK (2020c) Wind-generated wave climate variability in the Indian Ocean using ERA-5 dataset. Ocean Eng 209:107486. https://doi.org/10.1016/j.oceaneng.2020.107486

Srinivas G, Remya PG, Praveen Kumar B, Modi A, Balakrishnan Nair TM (2020) The impact of Indian Ocean dipole on tropical Indian Ocean surface wave heights in ERA5 and CMIP5 models. Int J Climatol 41(3):1619–1632. https://doi.org/10.1002/joc.6900

Stefanakos C (2019) Intercomparison of wave reanalysis based on ERA5 and WW3 databases. In: The 29th International Ocean and Polar Engineering Conference. International Society of Offshore and Polar Engineers

Swain J, Umesh PA (2018) Prediction of uncertainty using the third generation wave model WAVEWATCH III driven by ERA-40 and blended winds in the North Indian Ocean. J Oceanogr Mar Res 5(172):2

Tarek M, Brissette FP, Arsenault R (2020) Evaluation of the ERA5 reanalysis as a potential reference dataset for hydrological modelling over North America. Hydrol Earth Syst Sci 24(5):2527–2544. https://doi.org/10.5194/hess-24-2527-2020

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res-Atmos 106(D7):7183–7192

Tolman HL (2009) User manual and system documentation of WAVEWATCH III™ version 3.14. Technical Note, 220 pp

Trenberth KE, Jones PD, Ambenje P, Bojariu R, Easterling D, Klein Tank A, Parker D, Rahimzadeh F, Renwick JA, Rusticucci M, Soden B (2007) Observations: surface and atmospheric climate change. Chapter 3. Climate Change 15:235–336

Vethamony PV, Rashmi R, Samiksha SV, Aboobacker VM (2013) Recent studies on wind seas and swells in the Indian Ocean: a review. Int J Ocean Clim Syst 4(1):63–73. https://doi.org/10.1260/1759-3131.4.1.63

Wang XL, Swart N (2018) CMIP5-based global wave climate projections including the entire Arctic Ocean. Ocean Model 123:66–85. https://doi.org/10.1016/j.ocemod.2017.12.003

Wang C, Zhang L, Lee SK, Wu L, Mechoso CR (2014) A global perspective on CMIP5 climate model biases. Nat Clim Chang 4(3):201–205. https://doi.org/10.1038/nclimate2118

Wang XL, Feng Y, Swail VR (2015) Climate change signal and uncertainty in CMIP5-based projections of global ocean surface wave heights. J Geophys Res Oceans 121:476–501. https://doi.org/10.1002/2015JC010878

Watterson IG (1996) Non-dimensional measures of climate model performance. Int J Climatol 16(4):379–391

Watterson IG (2015) Improved simulation of regional climate by global models with higher resolution: skill scores correlated with grid length. J Clim 28(15):5985–6000. https://doi.org/10.1175/JCLI-D-14-00702.1

Watterson IG, Bathols J, Heady C (2014) What influences the skill of climate models over the continents? Bull Am Meteorol Soc 95(5):689–700. https://doi.org/10.1175/BAMS-D-12-00136.1

Werner AT (2011) BCSD downscaled transient climate projections for eight select GCMs over British Columbia, Canada. Pacific Climate Impacts Consortium, April 2011, 63 pp

Woolf DK, Challenor PG, Cotton PD (2002) Variability and predictability of the North Atlantic wave climate. J Geophys Res Oceans 107(10). https://doi.org/10.1029/2001jc001124

Xu Z, Chang P, Richter I, Kim W, Tang G (2014) Diagnosing southeast tropical Atlantic SST and ocean circulation biases in the CMIP5 ensemble. Clim Dyn 43(11):3123–3145. https://doi.org/10.1007/s00382-014-2247-9

Young IR (1999) Wind generated ocean waves. Elsevier

Young IR, Ribal A (2019) Multiplatform evaluation of global trends in wind speed and wave height. Science 364(6440):548–552. https://doi.org/10.1126/science.aav9527

Young IR, Zieger S, Babanin AV (2011) Global trends in wind speed and wave height. Science 332:451–455. https://doi.org/10.1126/science.1197219

Zappa G, Shaffrey LC, Hodges KI, Sansom PG, Stephenson DB (2013) A multimodel assessment of future projections of North Atlantic and European extratropical cyclones in the CMIP5 climate models. J Clim 26(16):5846–5862

Acknowledgements

The authors sincerely thank the Department of Science and Technology (DST), Government of India, for the financial support. This study was conducted under the Centre of Excellence (CoE) in Climate Change studies established at IIT Kharagpur funded by DST, Government of India. The study forms a part of the ongoing project ‘Wind-Waves and Extreme Water Level Climate Projections for the East Coast of India’. We acknowledge that the study used wave simulations developed under the Coordinated Ocean Wave Climate Project (COWCLIP) of Commonwealth Scientific and Industrial Research Organisation (CSIRO), Australia. The authors thank Dr. Mark A. Hemer for providing the necessary permissions to use the data and Claire E. Trenham for helping with the details regarding the datasets. We also acknowledge Copernicus Climate Change Service (C3S) (2017): ERA5: Fifth generation of ECMWF atmospheric reanalyses of the global climate. Copernicus Climate Change Service Climate Data Store (CDS), accessed on 02-09-2020. https://cds.climate.copernicus.eu/cdsapp#!/home

Funding

The authors did not receive support from any organization for the submitted work. No funding was received to assist with the preparation of this manuscript.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Athira Krishnan. The first draft of the manuscript was written by Athira Krishnan, and all of the authors commented on previous versions of the manuscript. All of the authors read and approved the final manuscript.

Conceptualization: Prasad K. Bhaskaran; methodology: Prasad K. Bhaskaran and Prashant Kumar; formal analysis and investigation: Athira Krishnan, Prasad K. Bhaskaran, and Prashant Kumar; writing—original draft preparation: Athira Krishnan and Prasad K. Bhaskaran; writing—review and editing: Athira Krishnan, Prasad K. Bhaskaran, and Prashant Kumar; supervision: Prasad K. Bhaskaran

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Krishnan, A., Bhaskaran, P.K. & Kumar, P. CMIP5 model performance of significant wave heights over the Indian Ocean using COWCLIP datasets. Theor Appl Climatol 145, 377–392 (2021). https://doi.org/10.1007/s00704-021-03642-9

DOI: https://doi.org/10.1007/s00704-021-03642-9