Abstract

The present study reports a thorough information theory–based investigation of the time series of summer monsoon (June–September) rainfall over the Himalayas on seasonal and yearly scales. The inherent uncertainty of the rainfall series has been evaluated through Shannon entropy by fitting a probability distribution, and the entropy has been measured on both seasonal and yearly scales. A few parameters relating to the summer monsoon rainfall series over parts of North Mountainous India (NMI) from 1844 to 2005 are considered for computing entropy. Shannon entropy is maximized to examine the relative contributions of these parameters to this meteorological phenomenon. Finally, we show how entropy is affected by fluctuations of the mean rainfall on seasonal and yearly scales. We have also computed the decade-wise intrinsic uncertainty of the rainfall series, since rainfall is the most important parameter for climate risk. In addition, we fitted probability distributions to the rainfall series.


1 Introduction

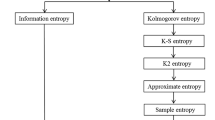

Entropy is the average rate at which a stochastic source of data produces information. The concept of entropy originated with Rudolf Clausius around 1850, and in 1870 Ludwig Boltzmann gave it a statistical interpretation. Subsequently, the idea was developed further by J. Willard Gibbs in thermodynamics and by John von Neumann in quantum mechanics. In 1948, Claude Shannon reintroduced entropy in his paper "A Mathematical Theory of Communication" to establish fundamental limits on signal processing and communication. Information theory is concerned with measuring the information contained in events, random variables, and distributions. Because the information content of a signal is quantified through probabilities, information theory is closely tied to probability theory. Shannon defined entropy as a measure of the uncertainty associated with an event or a variable: the higher the Shannon entropy, the greater the uncertainty about the system. The principle of maximum entropy therefore provides a consistent basis for evaluating geophysical processes. It has also been argued that a probabilistic forecast carries more information than a deterministic one, so that forecasts may be improved by adopting the probabilistic form (Palmer 2000; Richardson 2000). Roulston and Smith (2002) established a convenient analytical framework, based on information theory, for evaluating forecasts and quantifying weather and climate. For a more detailed treatment of Shannon entropy, see Gray (1990). In terms of entropy, the mutual information of X and Y is

\[ I(X;Y) = H(X) + H(Y) - H(X,Y) \]

where X and Y are random variables, H(X) and H(Y) are their entropies, and H(X,Y) is their joint entropy.
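The entropy form of mutual information, I(X;Y) = H(X) + H(Y) − H(X,Y), can be checked numerically on a small joint probability table. The sketch below is illustrative only: the helper names and the two joint distributions are hypothetical, and natural logarithms (nats) are assumed.

```python
import math


def entropy(probs):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint pmf given as a 2-D list."""
    px = [sum(row) for row in joint]            # marginal of X (row sums)
    py = [sum(col) for col in zip(*joint)]      # marginal of Y (column sums)
    pxy = [p for row in joint for p in row]     # flattened joint pmf
    return entropy(px) + entropy(py) - entropy(pxy)


# Hypothetical joint distributions of two binary variables.
independent = [[0.25, 0.25], [0.25, 0.25]]
dependent = [[0.5, 0.0], [0.0, 0.5]]
print(mutual_information(independent))  # approximately 0: knowing Y tells nothing about X
print(mutual_information(dependent))    # approximately ln 2: Y determines X completely
```

Because only unconditional and joint entropies appear, no separate interpretation of conditional entropy is needed.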

Mutual information is the information contained in one process minus the information that remains in it when the other process is known. Although mutual information can also be written in terms of conditional entropy, information theorists have focused on the former expression for I, because it requires no interpretation of what conditional entropy signifies and is better formed than the definition based on conditional entropy. Mathematicians and statisticians have extended the principle of Shannon entropy to models of information sources, coding schemes, and performance measures. Xu (2007) carried out a detailed comparison of relative entropy and the Shannon entropy difference as measures of the information content of observations, and concluded that the Shannon entropy difference captures only the variability part, whereas relative entropy captures both the signal and the variability parts of the information contained in observations. An important application of information theory to the study of rainfall has been reported by Brunsell (2010). Kawachi et al. (2001) applied Shannon entropy to rainfall over Japan. Liu et al. (2011) reviewed applications of entropy to meteorology and allied areas. Nebot et al. (2008) reported a fuzzy-based methodology in combination with Shannon entropy for the study of uncertainty.

Uncertain time series (Radzuan et al. 2013; Kalamaras et al. 2017) can be regarded as sequences of realizations of random variables indexed by time, and they arise naturally in environmental science, meteorology, economics, and other fields. Uncertain time series have been discussed by Lykoudis et al. (2010), Cloke and Pappenberger (2009), Efstathiou and Varotsos (2010), Varotsos et al. (2013, 2015), and Radzuan et al. (2013). The principle of maximum entropy helps explain the distributional properties and persistence of hydrological processes; hydrological examples include Koutsoyiannis (2005), Singh (1997, 2011), Kawachi et al. (2001), Mukhopadhyay and Khan (2015), da Silva et al. (2016), and Agarwal et al. (2016). Chattopadhyay et al. (2018) used Shannon entropy maximization to discuss the relative contributions of different surface parameters to the genesis of a severe weather system over a part of India. A large body of literature has explored the complexity of meteorological processes and their prediction. Examples include Bandyopadhyay et al. (2016); Chattopadhyay (2007); Chattopadhyay and Bandyopadhyay (2008); Chaudhuri (2006); Chaudhuri (2008); Chaudhuri and Chattopadhyay (2005); Dallachiesa et al. (2011); Dash et al. (2007); De et al. (2011a, b); Gardner and Dorling (1998); Gutierrez-Coreaa et al. (2016); Hontoria et al. (2005); Hsieh and Tang (1998); Varotsos et al. (2014); and Cracknell and Varotsos (2007).

Meteorological conditions over India and the subcontinent are significantly influenced by the Himalayas, which act as a major climatic divide. The circulation of air and water depends heavily on them, both in the subcontinent to the south and in the Central Asian highlands to the north. By virtue of their location and great height, the Higher Himalayas block the entry of cold continental air from the north into India in winter and also force the southwesterly (rain-bearing) monsoon winds to give up most of their moisture before crossing to the north. The result is heavy precipitation, as both rain and snow, on the Indian side but arid conditions in Tibet. The average annual precipitation on the southern slopes varies between 60 in. (1530 mm) at Shimla, Himachal Pradesh, and Mussoorie, Uttarakhand, in the western Himalayas and 120 in. (3050 mm) at Darjeeling, West Bengal, in the eastern Himalayas. North of the Great Himalayas, at places such as Skardu, Gilgit, and Leh in the Kashmir portion of the Indus river valley, only 3 to 6 in. (75 to 150 mm) of precipitation occurs.

The summer monsoon season (June to September) contributes the largest share of the annual rainfall over India as a whole. The Indian summer monsoon rainfall (ISMR) results from interactions among a number of complex meteorological processes at different scales. ISMR shows a high degree of spatial variability, ranging from 160 to 1800 mm/year (Kishore et al. 2016). Kishore et al. (2016) also showed that the northeast, the west coast, the western Himalayas, and the north-central parts of India receive the highest annual ISMR.

The Himalayan range, a highly elevated land area, plays a major role in the climate and is regarded as one of the most important mountainous regions in the world. The significance of the Himalayan region and the adjacent Tibetan Plateau in modulating the regional monsoon climate is well documented in the literature (Bhatt and Nakamura 2005). Atmospheric circulation processes, including monsoon depressions and westerly disturbances, are significantly influenced by the Himalayan range. During the summer monsoon, the region experiences abundant rainfall and episodes of flooding; the south-facing slopes and the Gangetic plains are particularly affected. Landslides are a recurrent feature of the Himalayas during the summer monsoon. At the same time, rainfall is of substantial importance for agricultural practices in this region. Many studies have emphasized the role of convection over the Tibetan Plateau and its adjacent region in the monsoon circulation through the release of latent heat. Studies of the summer monsoon over the Himalayas have mostly relied on ground-based or satellite-based investigations. In a recent study, Vellore et al. (2016) carried out a rigorous analysis of the evolution of monsoon-extratropical circulation features using reanalysis circulation products for some extreme precipitation events over the western Himalayas.

In time series analysis, there are two fundamental approaches, namely the time domain and the frequency domain approaches. The two differ in their implementation and are complementary to each other. In the time domain approach, a data series is characterized in the same terms in which it has been observed. Accordingly, and consistent with the term "time domain" in the title, we have applied the entire methodology to the data in the form in which they were reported by the Indian Institute of Tropical Meteorology (IITM), Pune. Here, we explore the intrinsic complexity of the ISMR over the Himalayan region through probabilistic information theory. The motivation for the study is as follows. The pattern of monsoon rainfall over the Himalayan region is more difficult to study than that over the remaining parts of India, owing to the complex topography of the Himalayas. The terrain induces lee waves and the seeder-feeder mechanism, and as a consequence the precipitation process is severely modified. Furthermore, the nature of convection, altitudinal variation, and slope-wise variation contribute to the complexity of the monsoon rainfall pattern over this zone (Shrestha et al. 2000). The details of the methodology, based on the maximization of Shannon entropy, are presented in the subsequent sections. The rest of the paper is organized as follows. In Section 2, we fit normal and log-normal distributions to the rainfall data and, in a subsection, present the methodology for deriving the maximum entropy probability distribution. In Section 3, we demonstrate the computation of Shannon entropy and the maximum entropy probability distribution. Section 4 concludes the paper.

2 Methodology

2.1 Data and source

The methodology adopted in the present work is entropy based. Observational rainfall data over the Himalayan Mountain Region for the period 1844 to 2005 were collected from the India Meteorological Department (IMD). These discrete data were first grouped into a continuous frequency distribution by the exclusive method of classification with a class interval of 50 mm. The calculated mean is 1123.765 mm and the standard deviation is 227.164 mm. We tested the data on various scales for normal and log-normal distributions. A positive random variable X follows a log-normal distribution if its logarithm is normally distributed, i.e., \( \ln X \sim N(\mu, \sigma^2) \). For each of the two distributions, the null hypothesis is that the distribution is a good fit to the data, and the alternative hypothesis is its negation. The χ² goodness-of-fit test did not reject the null hypothesis for either distribution, i.e., the JJAS rainfall data over the study zone are consistent with both probability distributions. The results are shown in Figs. 1 and 2.
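The χ² goodness-of-fit test described above can be sketched with the Python standard library. This is a minimal illustration under stated assumptions, not the authors' procedure: the helper name `chi_square_normal`, the rule of merging adjacent classes until every expected count is at least 5 (a common rule of thumb), and the synthetic input series are all our own choices. The resulting statistic would be compared with a tabulated χ² critical value for the returned degrees of freedom (for example via `scipy.stats.chi2.ppf`, if SciPy is available).

```python
import random
from statistics import NormalDist, fmean, stdev


def chi_square_normal(data, width=50.0, min_expected=5.0):
    """Pearson chi-square statistic for a normal fit to data grouped into
    exclusive classes [a, a + width). Adjacent classes are merged until each
    expected count is at least `min_expected` (hypothetical helper)."""
    dist = NormalDist(fmean(data), stdev(data))
    lo = width * (min(data) // width)                 # first class boundary
    n_classes = int((max(data) - lo) // width) + 1
    observed = [0] * n_classes
    for x in data:
        observed[int((x - lo) // width)] += 1
    n = len(data)
    expected = []
    for k in range(n_classes):
        # Open the outermost classes so that the expected counts sum to n.
        pa = 0.0 if k == 0 else dist.cdf(lo + k * width)
        pb = 1.0 if k == n_classes - 1 else dist.cdf(lo + (k + 1) * width)
        expected.append(n * (pb - pa))
    bins, o_acc, e_acc = [], 0, 0.0
    for o, e in zip(observed, expected):
        o_acc, e_acc = o_acc + o, e_acc + e
        if e_acc >= min_expected:
            bins.append((o_acc, e_acc))
            o_acc, e_acc = 0, 0.0
    if bins and (o_acc or e_acc):                     # fold leftovers into last bin
        o, e = bins[-1]
        bins[-1] = (o + o_acc, e + e_acc)
    stat = sum((o - e) ** 2 / e for o, e in bins)
    dof = len(bins) - 1 - 2                           # mean and sd were estimated
    return stat, dof


# Synthetic series for illustration only (NOT the IMD/IITM observations).
rng = random.Random(42)
synthetic = [rng.gauss(1123.765, 227.164) for _ in range(162)]
stat, dof = chi_square_normal(synthetic)
print(f"chi-square = {stat:.2f} with {dof} degrees of freedom")
```

The same routine could be applied to ln X to test the log-normal hypothesis, with class boundaries chosen on the log scale.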

Figure 1 shows that most rainfall values lie close to the mean of 1123.765 mm: small deviations from the mean occur more frequently than large ones. The standard deviation of 227.164 mm indicates the typical distance of individual values from the mean rainfall. The fitted normal distribution is symmetric, so the numbers of rainfall values below and above the mean are expected to be equal, and in both tails extremely low rainfall values occur as infrequently as extremely high ones.
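The reading of the normal fit above can be made concrete with a short calculation. Assuming a normal distribution with the reported mean and standard deviation (the variable names below are ours), about 68% of seasonal totals are expected within one standard deviation of the mean, and exactly half below the mean.

```python
from statistics import NormalDist

# Normal distribution with the mean and standard deviation reported in the text (mm).
rain = NormalDist(mu=1123.765, sigma=227.164)

lower, upper = rain.mean - rain.stdev, rain.mean + rain.stdev
share_within_1sd = rain.cdf(upper) - rain.cdf(lower)
print(f"{lower:.3f}-{upper:.3f} mm: {share_within_1sd:.3f}")  # 896.601-1350.929 mm: 0.683
# Symmetry: the probability of falling below the mean equals that of falling above it.
print(rain.cdf(rain.mean))  # 0.5
```

These are properties of the fitted model; the empirical shares in the observed series may differ somewhat.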

In Fig. 2, a log-normal distribution is fitted to the seasonal summer monsoon rainfall data for 1844 to 2005 over the Himalayan Mountain Region, after grouping the data into continuous exclusive classes. Here, the larger values tend to lie farther from the mean than the smaller values, so the distribution is skewed to the right (positive skewness).
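The positive skewness of the log-normal fit can be illustrated from the reported moments alone. The text does not state how the log-normal parameters were estimated; the sketch below assumes the method of moments (matching the mean 1123.765 mm and standard deviation 227.164 mm), and the function names are hypothetical. The skewness of a log-normal variable, (e^{σ²} + 2)·√(e^{σ²} − 1), is positive for every σ > 0.

```python
import math


def lognormal_from_moments(mean, sd):
    """Method-of-moments parameters (mu, sigma) of ln X for a log-normal X."""
    sigma2 = math.log(1.0 + (sd / mean) ** 2)
    mu = math.log(mean) - sigma2 / 2.0
    return mu, math.sqrt(sigma2)


def lognormal_skewness(sigma):
    """Skewness of a log-normal variable; positive for any sigma > 0."""
    s2 = sigma ** 2
    return (math.exp(s2) + 2.0) * math.sqrt(math.exp(s2) - 1.0)


mu, sigma = lognormal_from_moments(1123.765, 227.164)
skew = lognormal_skewness(sigma)
print(f"mu = {mu:.3f}, sigma = {sigma:.3f}, skewness = {skew:.2f}")
# mu = 7.004, sigma = 0.200, skewness = 0.61
```

A maximum likelihood fit (mean and standard deviation of ln X) would give slightly different parameters but the same qualitative conclusion.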

2.2 Determining ignorance

As stated in the preceding sections, we intend to quantify the intrinsic uncertainty associated with the JJAS rainfall over the Himalayan mountainous region. This section gives a brief overview of the entropy function required for that purpose; details of the methodology are available in Klir and Folger (2009) and the references therein. In entropy maximization, among all probability distributions consistent with the available evidence, the one chosen must have maximum uncertainty. The primary aim is therefore to determine a probability distribution that maximizes the entropy

\[ H(f) = -\sum_{a} P(f = a)\,\log P(f = a) \]

Here, f is a discrete random variable defined on the probability space (Ω, B, P), where Ω denotes the sample space, B the set of events, and P a function from events to probabilities. The entropy can be regarded not as a function of the particular output values of f but as a function of the partition that f induces on Ω. That is, the partition Q consists of disjoint sets that group the points of Ω according to the output produced by the measurement f. Therefore, the entropy function can be written as

\[ H(f) = H(Q) = -\sum_{F \in Q} P(F)\,\log P(F) \]
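The statement that entropy depends only on the partition induced by f, and not on the labels f assigns to its outputs, can be checked with a toy example. The sample space (the six faces of a fair die), the two measurement functions, and the helper name below are hypothetical; natural logarithms are assumed.

```python
import math
from collections import Counter


def partition_entropy(omega, prob, f):
    """Entropy -sum P(F) ln P(F) over the cells F = f^{-1}(a) of the partition induced by f."""
    cell_prob = Counter()
    for w in omega:
        cell_prob[f(w)] += prob[w]        # accumulate P(F) for the cell containing w
    return -sum(p * math.log(p) for p in cell_prob.values() if p > 0)


omega = range(6)                          # hypothetical sample space: faces of a fair die
prob = {w: 1 / 6 for w in omega}
parity = lambda w: w % 2                  # outputs 0 and 1
parity_labels = lambda w: "even" if w % 2 == 0 else "odd"   # same partition, new labels

h1 = partition_entropy(omega, prob, parity)
h2 = partition_entropy(omega, prob, parity_labels)
print(h1, h2)                             # both equal ln 2
```

Relabelling the outputs leaves the cells, and therefore the entropy, unchanged; only a change of partition (for example, f(w) = w) would change the value.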

2.3 Entropy maximization

Here, we present the methodology based on an old principle of probability theory, also known as the principle of insufficient reason. This principle requires us to eliminate all probability distributions that are inconsistent with the evidence in hand. Among the remaining distributions, we select the one that most fully reflects our ignorance, i.e., the one with maximum entropy (Lesne 2014). Considering a probability distribution function (PDF) defined by the vector p with n components, the ith component being the probability of the ith outcome, we can rewrite Eq. (2) as (Roulston and Smith 2002)

\[ H(\mathbf{p}) = -\sum_{i=1}^{n} p_i \log p_i \qquad (3) \]

We maximize H(p) in Eq. (3) subject to the constraints

The corresponding Lagrangian is

Here, α and β are Lagrange multipliers. The following partial derivatives are obtained from Eq. (7):

For optimization, we set \( \frac{\partial L}{\partial \alpha}=0 \), \( \frac{\partial L}{\partial \beta}=0 \), and \( \frac{\partial L}{\partial p_i}=0 \). Equation (10) gives

From Eqs. (11) and (9), we arrive at

From Eq. (11),

Using Eqs. (5) and (13), we get

Using Eqs. (14) and (9), we finally have

Solving Eq. (15) for β and substituting the result into Eq. (14) yields the maximum entropy probability distribution, and hence the maximum value of H(p).
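For reference, a standard version of the derivation outlined above can be written compactly. This is a sketch under our own assumptions, since the constraint equations are not reproduced in the text: the constraints are taken to be normalization and a prescribed mean E[X] over outcomes x_1, ..., x_n, logarithms are natural, and the paper's equation numbering is not reproduced.

```latex
\begin{aligned}
&\max_{\mathbf p}\; H(\mathbf p) = -\sum_{i=1}^{n} p_i \ln p_i
  \quad\text{subject to}\quad \sum_{i=1}^{n} p_i = 1,\qquad \sum_{i=1}^{n} x_i p_i = E[X],\\
&L = -\sum_{i} p_i \ln p_i - \alpha\Big(\sum_{i} p_i - 1\Big) - \beta\Big(\sum_{i} x_i p_i - E[X]\Big),\\
&\frac{\partial L}{\partial p_i} = -\ln p_i - 1 - \alpha - \beta x_i = 0
  \;\Longrightarrow\; p_i = e^{-1-\alpha}\, e^{-\beta x_i},\\
&\sum_{i} p_i = 1 \;\Longrightarrow\; p_i = \frac{e^{-\beta x_i}}{\sum_{j=1}^{n} e^{-\beta x_j}},\\
&\sum_{i} x_i p_i = E[X] \;\Longrightarrow\;
  \frac{\sum_{i=1}^{n} x_i e^{-\beta x_i}}{\sum_{j=1}^{n} e^{-\beta x_j}} = E[X],\\
&H_{\max} = \beta\, E[X] + \ln \sum_{j=1}^{n} e^{-\beta x_j}.
\end{aligned}
```

The equation for β has no closed-form solution in general, which is why a numerical root finder such as the Newton-Raphson method is needed.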

3 Results and discussion

3.1 Calculation of Shannon entropy

In the present study, we consider the summer monsoon rainfall series of North Mountainous India (NMI) from 1844 to 2005. The mean E[X] of the rainfall series is 1123.765 mm. Because the 162 years of discrete data were converted into a continuous frequency distribution, the number of rainfall values considered here is 26. Values of the pre-assigned parameters before and after rainfall have been included in our calculation. Following Chaudhuri and Chattopadhyay (2003), we varied n from 1 to 26 in Eq. (15) for each case of the parameters. E[X] has been taken as the desired change (%) in the measurements of the parameters. β in Eq. (15) was obtained by the Newton-Raphson method, and a maximum entropy probability distribution was computed for each solution of β. Substituting these probabilities into Eq. (3), we calculated the corresponding entropies, which are plotted in Fig. 3.
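The Newton-Raphson step for β can be sketched as follows. This assumes the standard maximum entropy form with normalization and a fixed-mean constraint, p_i ∝ e^{−βx_i}; the function name, the starting value β = 0, and the tolerance are our own choices, and the support and target means used in the paper are not reproduced. Since the mean m(β) decreases with β and dm/dβ = −Var(β), each update is β ← β + (m(β) − E[X])/Var(β). No safeguard against overshooting is included, so targets very close to the ends of the support may need damping.

```python
import math


def maxent_distribution(xs, target_mean, beta=0.0, tol=1e-12, max_iter=100):
    """Solve sum(x e^{-beta x}) / sum(e^{-beta x}) = target_mean by Newton-Raphson.

    Returns (beta, probabilities, entropy in nats)."""
    if not min(xs) < target_mean < max(xs):
        raise ValueError("target mean must lie strictly inside the support")

    def moments(b):
        # Shift exponents by their maximum for numerical stability.
        logw = [-b * x for x in xs]
        top = max(logw)
        w = [math.exp(v - top) for v in logw]
        z = sum(w)
        p = [wi / z for wi in w]
        mean = sum(pi * x for pi, x in zip(p, xs))
        var = sum(pi * (x - mean) ** 2 for pi, x in zip(p, xs))
        return p, mean, var

    for _ in range(max_iter):
        _, mean, var = moments(beta)
        # g(beta) = mean(beta) - target has derivative g'(beta) = -var(beta).
        step = (mean - target_mean) / var
        beta += step
        if abs(step) < tol:
            break
    else:
        raise RuntimeError("Newton-Raphson did not converge")
    p, _, _ = moments(beta)
    entropy = -sum(pi * math.log(pi) for pi in p if pi > 0)
    return beta, p, entropy


# Jaynes' classic dice example: faces 1..6 with a prescribed mean of 4.5.
xs = list(range(1, 7))
beta, p, h = maxent_distribution(xs, 4.5)
print(f"beta = {beta:.3f}")                   # beta = -0.371
print([round(pi, 4) for pi in p])
print(f"H = {h:.4f} nats (ln 6 = {math.log(6):.4f})")
```

A mean above the midpoint of the support gives β < 0 and probabilities rising with x; a mean below it gives β > 0 and probabilities falling with x.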

Note that Tables 1 and 2 were constructed with the continuous distribution method, whereas Table 3 uses the discrete distribution method. By the continuous distribution method, we mean that the entire data set, available in array form, is converted into a frequency distribution with continuous classes, and the midpoints of the classes are taken as the values realized by the random variable. The discrete distribution method, on the other hand, uses the finite set of values realized by the continuous random variable at discrete points.
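The continuous distribution method just described can be sketched as a grouping step. The sketch assumes exclusive classes [a, a + 50) in mm, with the first lower boundary at the multiple of 50 at or below the smallest value; these conventions, the helper names, the sample-standard-deviation divisor (n − 1), and the input values are our assumptions, not the authors' data.

```python
from collections import Counter


def group_exclusive(data, width=50.0, start=None):
    """Group raw values into exclusive classes [a, a + width) and return
    (midpoint, frequency) pairs; midpoints stand in for the realized values."""
    if start is None:
        start = width * (min(data) // width)
    counts = Counter(int((x - start) // width) for x in data)
    n_classes = max(counts) + 1
    return [(start + (k + 0.5) * width, counts.get(k, 0)) for k in range(n_classes)]


def grouped_mean_sd(table):
    """Mean and sample standard deviation computed from class midpoints."""
    n = sum(f for _, f in table)
    mean = sum(m * f for m, f in table) / n
    var = sum(f * (m - mean) ** 2 for m, f in table) / (n - 1)
    return mean, var ** 0.5


# Hypothetical seasonal totals in mm (NOT the IITM series).
sample = [812.0, 960.5, 1001.2, 1049.9, 1050.0, 1123.8, 1188.4, 1302.7]
table = group_exclusive(sample)
print(table)
print(grouped_mean_sd(table))
```

Under the exclusive convention, a value of exactly 1050.0 falls in the class [1050, 1100), not in [1000, 1050).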

3.2 Entropy as uncertainty

Entropy allows us to make precise statements and perform computations concerning the uncertainty of an outcome. Calling entropy a measure of uncertainty is something of an understatement: under certain assumptions, entropy is the measure of uncertainty. A larger entropy value indicates a greater level of uncertainty, i.e., a less pure (less concentrated) distribution. Throughout, entropy refers to Shannon entropy; although several other entropies exist, we take Shannon entropy to be the one most frequently used in this context.
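The link between entropy and the concentration of a distribution can be seen by comparing three hypothetical distributions over four outcomes (natural logarithms assumed). The entropy is largest for the uniform distribution, where it equals ln 4, and zero when the outcome is certain.

```python
import math


def shannon_entropy(p):
    """Shannon entropy in nats, written as sum p ln(1/p); zero terms are skipped."""
    return sum(pi * math.log(1.0 / pi) for pi in p if pi > 0)


uniform = [0.25] * 4                 # maximal ignorance over four outcomes
peaked = [0.7, 0.1, 0.1, 0.1]        # one outcome clearly favoured
certain = [1.0, 0.0, 0.0, 0.0]       # no uncertainty at all
for name, p in [("uniform", uniform), ("peaked", peaked), ("certain", certain)]:
    print(f"{name:8s} {shannon_entropy(p):.3f}")
```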

We split the data set into 16 subsets of consecutive decade-wise discrete data, computed the mean and standard deviation of each subset, and calculated the Shannon entropy in each case.

Each decade-wise subset contains 10 rainfall values. Following the previous method, we varied n from 1 to 10 in Eq. (15). For each case, E[X] has been taken as the desired change (%) in the measurements of the parameters. β in Eq. (15) was obtained by the Newton-Raphson method for each decade-wise data set, and a maximum entropy probability distribution was computed for each solution of β. Substituting these probabilities into Eq. (3), we calculated the entropy for each case.
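The decade-wise split can be sketched as below. The 162 seasons from 1844 to 2005 yield 16 complete blocks of 10 years plus a remainder of 2 years; the text does not say how these two seasons were handled, so the sketch assumes blocks start in 1844 and keeps the remainder separately. The helper name and the synthetic values are hypothetical, and the per-block entropy would then follow from the maximum entropy step of Eq. (15), which is not repeated here.

```python
import random
from statistics import fmean, stdev


def decade_blocks(years, values, block=10):
    """Split an annual series into consecutive blocks of `block` years,
    returning the complete blocks and any incomplete trailing remainder."""
    pairs = list(zip(years, values))
    n_full = len(pairs) // block
    blocks = [pairs[i * block:(i + 1) * block] for i in range(n_full)]
    return blocks, pairs[n_full * block:]


years = list(range(1844, 2006))                          # 1844-2005: 162 seasons
rng = random.Random(0)
values = [rng.gauss(1123.765, 227.164) for _ in years]   # synthetic, NOT observed data
blocks, leftover = decade_blocks(years, values)
print(len(blocks), [y for y, _ in leftover])             # 16 [2004, 2005]
for blk in blocks:
    rain = [v for _, v in blk]
    print(f"{blk[0][0]}-{blk[-1][0]}: mean {fmean(rain):.1f} mm, sd {stdev(rain):.1f} mm")
```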

4 Conclusion

In the study reported above, we have carried out a thorough information theory–based investigation. Millions of people depend on agriculture for a living, and precipitation, in particular rainfall, has a substantial effect on agriculture. The Himalayan range, a highly elevated land area, also plays a major role in the climate. In this paper, the discrete rainfall data were first grouped into a continuous frequency distribution, and both the normal (Fig. 1) and log-normal (Fig. 2) distributions were found to fit this continuous random variable. The inherent uncertainty of the rainfall series has been evaluated through Shannon entropy by fitting a probability distribution, and the entropy has been measured on seasonal as well as yearly scales. A few parameters relating to the summer monsoon rainfall series over parts of North Mountainous India (NMI) from 1844 to 2005 are considered for computing entropy, and Shannon entropy is maximized to examine the relative contributions of these parameters to this meteorological phenomenon. Most rainfall values lie close to the mean of 1123.765 mm, and small deviations from the mean occur more frequently than large ones. The standard deviation of 227.164 mm indicates the typical distance of individual values from the mean rainfall. Under the fitted normal distribution, which is symmetric, the numbers of rainfall values below and above the mean are equal, and in both tails extremely low rainfall values occur as infrequently as extremely high ones. We also fitted a log-normal distribution (Fig. 2) to the seasonal summer monsoon rainfall data for 1844 to 2005 over the Himalayan Mountain Region, after grouping the data into continuous exclusive classes. In this fit, the larger values tend to lie farther from the mean than the smaller values, so the distribution is skewed to the right (positive skewness).

In the subsequent phase, we applied the entropy maximization procedure to the decade-wise discrete data: β in Eq. (15) was found by the Newton-Raphson method for each decade-wise data set, a maximum entropy probability distribution was computed for each solution of β, and these probabilities were substituted into Eq. (3) to calculate the entropy for each case. The Shannon entropy values obtained from the expected rainfall on a 10-year scale, averaged over the four JJAS months of each year, maintain an almost similar pattern (Fig. 3). This shows that the intrinsic uncertainty of the ISMR over the study zone does not change significantly on the yearly scale. On the decadal scale, too, the entropy (Fig. 4) does not exhibit significant changes over time. The probability distributions corresponding to the maximum entropies, computed through Eq. (14), are presented in Figs. 5 and 6, where the probabilities corresponding to higher rainfall amounts are lower than those corresponding to lower rainfall amounts. This indicates that the associated uncertainty increases as the rainfall amount decreases during the Indian summer monsoon season. Nevertheless, the measures of uncertainty in both scenarios stay in the range of (Nebot et al. 2008; Lesne 2014). This indicates that the ISMR over the study zone maintains its complexity pattern throughout the years. This quantitative measure of uncertainty can be utilized to develop predictive models in future studies.

References

Agarwal A, Maheswaran R, Sehgal V, Khosa R, Sivakumar B, Bernhofer C (2016) Hydrologic regionalization using wavelet-based multiscale entropy method. J Hydrol 538:22–32

Bandyopadhyay N, Bhuiyan C, Saha AK (2016) Heat waves, temperature extremes and their impacts on monsoon rainfall and meteorological drought in Gujarat, India. Nat Hazards 82(1):367–388

Bhatt BC, Nakamura K (2005) Characteristics of monsoon rainfall around the Himalayas revealed by TRMM precipitation radar. Mon Weather Rev 133(1):149–165

Brunsell NA (2010) A multiscale information theory approach to assess spatial-temporal variability of daily precipitation. J Hydrol 385:165–172

Chattopadhyay S (2007) Feed forward artificial neural network model to predict the average summer monsoon rainfall in India. Acta Geophys 55:369–382

Chattopadhyay S, Bandyopadhyay G (2008) Artificial neural network with backpropagation learning to predict mean monthly total ozone in Arosa, Switzerland. Int J Remote Sens 28:4471–4482

Chattopadhyay S, Chattopadhyay G, Midya SK (2018) Shannon entropy maximization supplemented by neurocomputing to study the consequences of a severe weather phenomenon on some surface parameters. Nat Hazards 93:237–247

Chaudhuri S (2006) Predictability of chaos inherent in the occurrence of severe thunderstorms. Adv Complex Syst 9:77–85

Chaudhuri S (2008) Preferred type of cloud in the genesis of severe thunderstorms—a soft computing approach. Atmos Res 88:149–156

Chaudhuri S, Chattopadhyay S (2003) Viewing the relative importance of some surface parameters associated with pre-monsoon thunderstorms through ampliative reasoning. Solstice: An Electronic Journal of Geography and Mathematics, Vol XIV, Number 1. Institute of Mathematical Geography, Ann Arbor

Chaudhuri S, Chattopadhyay S (2005) Neuro-computing based short range prediction of some meteorological parameters during the pre-monsoon season. Soft Comput 9:349–354

Cloke HL, Pappenberger F (2009) Ensemble flood forecasting: a review. J Hydrol 375(3–4):613–626

Cracknell AP, Varotsos CA (2007) Editorial and cover: Fifty years after the first artificial satellite: from Sputnik 1 to ENVISAT. Int J Remote Sens 28(10):2071–2072

da Silva VDPR, Belo Filho AF, Almeida RSR, de Holanda RM, da Cunha Campos JHB (2016) Shannon information entropy for assessing space–time variability of rainfall and streamflow in semiarid region. Sci Total Environ 544:330–338

Dallachiesa M, Nushi B, Mirylenka K, Palpanas T (2011) Similarity matching for uncertain time series: analytical and experimental comparison. Proceedings of the 2nd ACM SIGSPATIAL International Workshop on Querying and Mining Uncertain Spatio-Temporal Data (pp. 8–15), ACM

Dash SK, Jenamani RK, Kalsi SR, Panda SK (2007) Some evidence of climate change in twentieth-century India. Clim Chang 85(3–4):299–321

De SS, Bandyopadhyay B, Paul S (2011a) A neurocomputing approach to the forecasting of monthly maximum temperature over Kolkata, India using total ozone concentration as predictor. Comptes Rendus Geosci 343:664–676

De SS, De BK, Chattopadhyay G, Paul S, Haldar DK, Chakrabarty DK (2011b) Identification of the best architecture of a multilayer perceptron in modelling daily total ozone concentration over Kolkata, India. Acta Geophysica 59:361–376

Efstathiou MN, Varotsos CA (2010) On the altitude dependence of the temperature scaling behaviour at the global troposphere. Int J Remote Sens 31(2):343–349

Gardner MW, Dorling SR (1998) Artificial neural networks (the multilayer perceptron) a review of applications in the atmospheric sciences. Atmos Environ 32:2627–2636

Gray RM (1990) Entropy and information theory. Springer, New York

Gutierrez-Coreaa F-V, Manso-Callejo M-A, Moreno-Regidora M-P, Manrique-Sanchoa M-T (2016) Forecasting short-term solar irradiance based on artificial neural networks and data from neighboring meteorological stations. Sol Energy 134:119–131

Hontoria L, Aguilera J, Zufiria P (2005) An application of the multilayer perceptron: solar radiation maps in Spain. Sol Energy 79:523–530

Hsieh WW, Tang B (1998) Applying neural network models to prediction and data analysis in meteorology and oceanography. Bull Am Meteorol Soc 79:1855–1870

Kalamaras I, Zamichos A, Salamanis A, Drosou A, Kehagias DD, Margaritis G, Papadopoulos S, Tzovaras D (2017) An interactive visual analytics platform for smart intelligent transportation systems management. IEEE Transactions on Intelligent Transportation Systems 19(2):487–496

Kawachi T, Maruyama T, Singh VP (2001) Rainfall entropy for delineation of water resources zones in Japan. J Hydrol 246:36–44

Kishore P, Jyothi S, Basha G, Rao SVB, Rajeevan M, Velicogna I, Sutterley TC (2016) Precipitation climatology over India: validation with observations and reanalysis datasets and spatial trends. Clim Dyn 46(1–2):541–556

Klir GJ, Folger TA (2009) Fuzzy-sets uncertainty and information. Prentice-Hall, Englewood Cliffs

Koutsoyiannis D (2005) Uncertainty, entropy, scaling and hydrological stochastics. 2. Time dependence of hydrological processes and time scaling. Hydrol Sci J 50(3):405–426. https://doi.org/10.1623/hysj.50.3.405.65028

Lesne A (2014) Shannon entropy: a rigorous notion at the crossroads between probability, information theory, dynamical systems and statistical physics. Math Struct Comp Sci 24:e240311

Liu Y, Liu C, Wang D (2011) Understanding atmospheric behaviour in terms of entropy: a review of applications of the second law of thermodynamics to meteorology. Entropy 13:211–240

Lykoudis SP, Argiriou AA, Dotsika E (2010) Spatially interpolated time series of δ18Ο in Eastern Mediterranean precipitation. Glob Planet Chang 71(3–4):150–159

Mukhopadhyay B, Khan A (2015) Boltzmann-Shannon entropy and river flow stability within Upper Indus Basin in a changing climate. Int J River Basin Manag 13(1):87–95

Nebot A, Mugica V, Escobet A (2008) Ozone prediction based on meteorological variables: a fuzzy inductive reasoning approach. Atmos Chem Phys Discuss 8:12343–12370

Palmer TN (2000) Predicting uncertainty in forecasts of weather and climate. Rep Prog Phys 63:71–116

Radzuan NFM, Othman Z, Bakar AA (2013) Uncertain time series in weather prediction. Procedia Technol 11:557–564

Richardson DS (2000) Skill and relative economic value of the ECMWF ensemble prediction system. Q J R Meteorol Soc. 126:649–667

Roulston MS, Smith LA (2002) Evaluating probabilistic forecasts using information theory. Mon Weather Rev 130:1653–1660

Shrestha A, Wake C, Dibb J, Mayewski P (2000) Precipitation fluctuations in the Nepal Himalaya and its vicinity and relationship with some large scale climatological parameters. Int J Climatol 20:317–327

Singh VP (1997) The use of entropy in hydrology and water resources. Hydrol Process 11(6):587–626

Singh VP (2011) Hydrologic synthesis using entropy theory. J Hydrol Eng 16(5):421–433

Varotsos C (2007) Power-law correlations in column ozone over Antarctica. Int J Remote Sens 27:3333–3342

Varotsos CA, Ondov JM, Efstathiou MN, Cracknell AP (2014) The local and regional atmospheric oxidants at Athens (Greece). Environ Sci Pollut Res 21(6):4430–4440

Varotsos CA, Efstathiou MN, Cracknell AP (2013) Plausible reasons for the inconsistencies between the modeled and observed temperatures in the tropical troposphere. Geophys Res Lett 40:4906–4910

Varotsos C, Tzanis C, Efstathiou M, Deligiorgi D (2015) Tempting long-memory in the historic surface ozone concentrations at Athens, Greece. Atmos Pollut Res 6:1055–1057

Vellore RK, Kaplan ML, Krishnan R, Lewis JM, Sabade S, Deshpande N, Singh BB, Madhura RK, Rao MR (2016) Monsoon-extratropical circulation interactions in Himalayan extreme rainfall. Clim Dyn 46(11–12):3517–3546

Xu Q (2007) Measuring information content from observations for data assimilation: relative entropy versus Shannon entropy difference. Tellus A Dyn Meteorol Oceanogr 59:198–209

Yadav RK (2016) On the relationship between Iran surface temperature and northwest India summer monsoon rainfall. Int J Climatol 36(13):4425–4438

Zeng X, Pielke RA, Eykholt R (1993) Chaos theory and its applications to the atmosphere. Bull Am Meteorol Soc 74:631–644

Zhang S, Guo Y, Wang Z (2015) Correlation between flood frequency and geomorphologic complexity of rivers network–a case study of Hangzhou China. J Hydrol 527:113–118

Acknowledgments

The authors are thankful to the anonymous reviewers for their constructive suggestions. The data were collected from the Indian Institute of Tropical Meteorology, Pune.


Cite this article

Saha, S., Chattopadhyay, S. Exploring of the summer monsoon rainfall around the Himalayas in time domain through maximization of Shannon entropy. Theor Appl Climatol 141, 133–141 (2020). https://doi.org/10.1007/s00704-020-03186-4
