Abstract

This study evaluated the ability of Weather Research and Forecasting (WRF) multi-physics ensembles to simulate storm systems known as East Coast Lows (ECLs). ECLs are intense low-pressure systems that develop off the eastern coast of Australia. These systems can cause significant damage to the region. On the other hand, they are also beneficial, as they generate the majority of high inflow to coastal reservoirs. It is in the common interest of both hazard control and water management to correctly capture the ECL features in modeling, in particular, to reproduce the observed spatial rainfall patterns. We simulated eight ECL events using WRF with 36 model configurations, each comprising physics scheme combinations of two planetary boundary layer (pbl), two cumulus (cu), three microphysics (mp), and three radiation (ra) schemes. The performance of each physics scheme combination and of the ensembles of multiple physics scheme combinations was evaluated separately. Results show that using the ensemble average gives higher skill than the median performer within the ensemble. More importantly, choosing a composite average of the better performing pbl and cu schemes can substantially improve the representation of high rainfall both spatially and quantitatively.

1 Introduction

East Coast Lows (ECLs) are intense low-pressure systems that occur off the eastern coast of Australia. Extreme rainfall events associated with ECLs frequently cause significant flash flooding near the coast, as well as major flooding in river systems with headwaters in the Great Dividing Range. In spite of its destructive capacity, the rainfall associated with ECLs has a beneficial role for coastal communities, as it provides significant inflow to coastal storages along the New South Wales (NSW) coast (Pepler and Rakich 2010). Large events can even provide inflow to the headwaters of western flowing rivers, particularly in northeastern NSW. It is important for both hazard control and water management to correctly capture the ECL features in modeling, in particular, to reproduce the observed rainfall amounts and spatial patterns.

The Weather Research and Forecasting (WRF) model (Skamarock et al. 2008) is a numerical weather prediction and atmospheric simulation system designed for operational forecasting, atmospheric research, and dynamical downscaling of Global Climate Models. Previous studies have shown that the WRF model performs well for simulating the regional climate of south-eastern Australia (Evans and McCabe 2010, 2013; Evans and Westra 2012). Evans et al. (2012) evaluated physics scheme combinations for hind-cast simulations of four ECL events using the WRF model. The authors investigated the influence of selecting different planetary boundary layer (pbl), cumulus (cu), microphysics (mp), and radiation (ra) schemes on the accuracy of maximum and minimum temperature, wind speed, mean sea level pressure, and rainfall. Similar sensitivity studies have been conducted for other regions (Yuan et al. 2012; Jankov et al. 2005; Awan et al. 2011). For example, Yuan et al. (2012) used the WRF model configured with two alternative schemes for each of the mp, cu, ra, and land surface physics categories when forecasting winter precipitation in China. The authors of these studies struggled to identify a single “best” physics scheme combination for all variables, although it was clear that some combinations performed better than others for certain variables (Evans et al. 2012).

While sensitivity analysis cannot agree on a best model configuration (Jankov et al. 2005; Evans et al. 2012), other methods can be utilized to maximize the information gained from multiple model runs using different parameterizations. Ensemble averaging is one of them, which is widely used in weather forecasting, seasonal predictions, and climate simulations (Fraedrich and Leslie 1987; Hagedorn et al. 2005; Phillips and Gleckler 2006; Schwartz et al. 2010; Schaller et al. 2011). Many studies have investigated the ensemble averages of various regional climate models and perturbed initial conditions in simulating regional rainfall (Cocke and LaRow 2000; Yuan and Liang 2011; Carril et al. 2012; Ishizaki et al. 2012). Some of them showed that an ensemble average had skill in reproducing heavy rainfall events (Yuan and Liang 2011; Yuan et al. 2012), while others reported that the results were far from satisfactory (Carril et al. 2012).

In this paper, we evaluated the skill of ensemble averages (for the full ensemble and subsets of particular physics schemes) relative to the use of individual ensemble members to capture spatial and distributional properties of rainfall associated with eight ECLs. We used the same physics scheme combinations as those used by Evans et al. (2012) but extended the modeling to cover four more events, in order to give a complete representation of the different types of synoptic events typically associated with ECLs (Speer et al. 2009).

2 Method

2.1 Physics scheme ensemble and model domain

This study used version 3.2.1 of WRF with the Advanced Research WRF dynamical core (Skamarock et al. 2008). Initial and boundary conditions were provided by European Centre for Medium-Range Weather Forecasts interim re-analyses (ERA Interim) (Dee et al. 2011). The experimental configuration consists of: 2 pbl, 2 cu, 3 ra, and 3 mp schemes, giving a total of 36 runs for each event (Table 1). The full details of the experiment setup are described in Evans et al. (2012).
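The count of 36 runs per event follows from taking one scheme from each of the four physics categories (2 × 2 × 3 × 3). A minimal sketch of that enumeration is given below. The scheme labels are the ones used later in this paper, while the dictionary layout and the function name `enumerate_configurations` are illustrative assumptions and not part of the WRF setup.

```python
from itertools import product

# Scheme labels as used in this paper; the data layout is hypothetical.
SCHEMES = {
    "pbl": ["YSU", "MYJ"],
    "cu": ["KF", "BMJ"],
    "mp": ["WSM3", "WSM5", "WDM5"],
    "ra": ["Dudhia/RRTM", "CAM/CAM", "RRTMG/RRTMG"],
}


def enumerate_configurations(schemes):
    """Return every combination of one scheme per physics category."""
    categories = list(schemes)
    return [dict(zip(categories, combo)) for combo in product(*schemes.values())]


configurations = enumerate_configurations(SCHEMES)
print(len(configurations))  # 36
```

Half of these configurations (18) share a given pbl scheme, and a quarter (9) share a given pbl and cu pair, which is where the sub-ensemble sizes quoted later come from.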

Two model domains with one-way nesting (with spectral nudging of wind and geopotential above 500 hPa in the outer domain) were used in this study (see Fig. 1), with grid spacing of 50 and 10 km for the outer and inner model domain, respectively. Both domains had 30 vertical levels. Each run was started 1 week prior to the event for a 2-week period, thus encompassing pre- and post-storm days. The total number of events simulated and resolution chosen were limited by the available computational resources.

2.2 Case study periods

Using mean sea level pressure, wind speed, rainfall, and wave height, Speer et al. (2009) identified six different types of ECLs: (1) ex-tropical cyclones, (2) inland trough lows, (3) easterly trough lows, (4) wave on front lows, (5) decaying front lows, and (6) lows in the westerlies. Unlike Evans et al. (2012), our study of eight ECL events includes examples of all common synoptic ECL types (Table 2). The events were subjectively named based on the location, timing, or type of event.

The eight ECL events were subjectively divided into two categories (strong and weak) according to the observed rainfall amount. Four strong events (NEWY, SURFERS, JUN, and FEB) generally produced more than 200 mm of accumulated rainfall and caused regional or local flooding. In contrast, four weak events (CTLOW, OCT, MAY, and SOLOW) generated less rainfall.

2.3 Observation and evaluation methodology

Gridded daily rainfall data over land with ∼5-km horizontal grid spacing obtained from the Australian Water Availability Project (AWAP) (Jones et al. 2009) were used for evaluation of model simulations. For the evaluation, 13-day accumulated AWAP rainfall was re-gridded to the 10-km resolution domain. Figure 2 shows accumulated AWAP rainfall maps for the eight ECL events.

The first day of each simulation was considered as the spin-up period, and was hence excluded from the analysis. The skill of each WRF physics scheme combination and their ensembles to simulate accumulated rainfall was assessed using: spatial correlation (R), bias, mean absolute error (MAE), root mean square error (RMSE), and equitable threat score (ETS), also known as the Gilbert Skill Score (Wilks 2006). Higher values of R and ETS indicate better forecasts, with a perfect ETS achieving a score of 1. An ETS below zero indicates that random chance would provide a better simulation than the model. MAE, RMSE and bias are all better as they approach zero.
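As an illustration of the continuous metrics listed above, the sketch below computes R, bias, MAE, and RMSE from paired simulated and observed accumulated rainfall values. It assumes the grids have already been flattened to equal-length sequences of land cells, and it assumes bias is defined as the mean of simulated minus observed; the paper does not spell out either detail. The function name `rainfall_metrics` and the sample values are hypothetical.

```python
import math


def rainfall_metrics(simulated, observed):
    """Return R, bias, MAE and RMSE for paired grid-cell rainfall values (mm)."""
    n = len(observed)
    if n == 0 or n != len(simulated):
        raise ValueError("inputs must be non-empty and of equal length")

    # Error statistics; bias is taken as mean(simulated - observed) (assumption).
    errors = [s - o for s, o in zip(simulated, observed)]
    bias = sum(errors) / n
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)

    # Pearson correlation across grid cells (the spatial correlation R).
    mean_s = sum(simulated) / n
    mean_o = sum(observed) / n
    cov = sum((s - mean_s) * (o - mean_o) for s, o in zip(simulated, observed))
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    r = cov / math.sqrt(var_s * var_o) if var_s > 0 and var_o > 0 else float("nan")

    return {"R": r, "bias": bias, "MAE": mae, "RMSE": rmse}


# Made-up values for four grid cells, for illustration only.
metrics = rainfall_metrics(simulated=[20, 40, 100, 200], observed=[10, 50, 120, 250])
print(metrics["bias"], metrics["MAE"])  # -17.5 22.5
```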

The ETS is commonly used in forecast verification to investigate the overall spatial performance of the simulations for different rainfall thresholds (10, 25, 50, 100, 200, and 300 mm for all ECL events). It should be noted that the higher rainfall thresholds have smaller sample sizes, with the 200-mm threshold sampling 1,136 grid points and the 300-mm threshold sampling only 197 grid points from AWAP. For each threshold, each grid cell can be classified as one of: “a” (forecast and observed agree on exceedance of the threshold, i.e., hits), “b” (exceeded in forecast but not in observed, i.e., false alarms), “c” (exceeded in observed but not in forecast, i.e., misses), or “d” (forecast and observed agree on non-exceedance of the threshold). Having classified all grid cells according to a–d, the ETS is then calculated as \( \mathrm{ETS}=\left(a-\mathrm{ar}\right)/\left(a+b+c-\mathrm{ar}\right) \), where ar is the number of hits expected due to random chance and is given by \( \mathrm{ar}=\left(a+b\right)\left(a+c\right)/\left(a+b+c+d\right) \).
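The ETS calculation can be sketched as follows, using the a–d contingency counts and the expression for ar given above. Treating "exceedance" as greater than or equal to the threshold is an assumption, as are the function name `equitable_threat_score` and the sample rainfall values.

```python
def equitable_threat_score(simulated, observed, threshold):
    """ETS for one rainfall threshold from paired grid-cell values (mm)."""
    a = b = c = d = 0
    for s, o in zip(simulated, observed):
        sim_exceeds = s >= threshold  # ">=" is an assumption
        obs_exceeds = o >= threshold
        if sim_exceeds and obs_exceeds:
            a += 1  # hit
        elif sim_exceeds:
            b += 1  # false alarm
        elif obs_exceeds:
            c += 1  # miss
        else:
            d += 1  # agreed non-exceedance

    total = a + b + c + d
    if total == 0:
        raise ValueError("no grid cells supplied")

    ar = (a + b) * (a + c) / total  # hits expected by random chance
    denominator = a + b + c - ar
    if denominator == 0:
        return float("nan")  # threshold exceeded nowhere: ETS is undefined
    return (a - ar) / denominator


# Made-up values for six grid cells: one hit, one false alarm, one miss.
observed = [5, 30, 60, 120, 8, 40]
simulated = [12, 28, 45, 110, 6, 55]
ets_50 = equitable_threat_score(simulated, observed, threshold=50)
print(round(ets_50, 3))  # 0.143
```

With a = b = c = 1 and d = 3, ar = 2 × 2 / 6 and the score is 1/7, well below the value of 1 obtained when simulation and observation agree everywhere.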

2.4 Ensemble integration

Ensemble averages were calculated for each event, using all 36 members and subsets of runs simulated with common pbl, cu, mp, or ra physics schemes. The names and the collection of runs used in ensembles are summarized in Table 3. For example, the “YSU” ensemble is averaged over the 18 runs that use the YSU pbl scheme.
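A minimal sketch of how such sub-ensemble averages could be formed is shown below. It assumes each member is stored as a pair of a configuration dictionary and a flattened accumulated rainfall field; that layout, the function name `ensemble_average`, and the rainfall values are hypothetical and used only for illustration.

```python
def ensemble_average(members, **required_schemes):
    """Cell-wise mean over members whose configuration matches the given schemes.

    members: list of (config, field) pairs, where config maps a physics
    category (e.g. "pbl") to a scheme label and field is a list of
    rainfall values. With no keyword arguments, all members are averaged.
    """
    selected = [
        field
        for config, field in members
        if all(config.get(cat) == name for cat, name in required_schemes.items())
    ]
    if not selected:
        raise ValueError("no members match the requested schemes")
    return [sum(cells) / len(selected) for cells in zip(*selected)]


# Four made-up members on a two-cell grid, for illustration only.
members = [
    ({"pbl": "YSU", "cu": "KF"}, [100.0, 20.0]),
    ({"pbl": "YSU", "cu": "BMJ"}, [60.0, 40.0]),
    ({"pbl": "MYJ", "cu": "KF"}, [80.0, 10.0]),
    ({"pbl": "MYJ", "cu": "BMJ"}, [40.0, 30.0]),
]

print(ensemble_average(members))                      # [70.0, 25.0]
print(ensemble_average(members, pbl="YSU"))           # [80.0, 30.0]
print(ensemble_average(members, pbl="YSU", cu="KF"))  # [100.0, 20.0]
```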

3 Results

The skill of the ensemble averages was assessed using the metrics described in Section 2.3 and the results compared with each other and with the median of the 36 individual members. Inter-event comparisons showed similarity in results for all but the SURFERS event. Therefore, in this section, we focused on the results from the JUN event (typical of the seven events that give similar results) and the SURFERS event to demonstrate our findings.

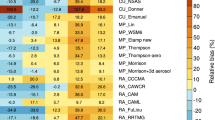

For the JUN event, the ensemble average using all members (ALL) provided substantially higher spatial correlation, lower MAE and RMSE, and better ETS (except for the 300-mm threshold) than those from the median of the 36 individual members (Fig. 3a), but also resulted in more negative bias than the median. Some of the results using ALL (i.e. R, RMSE, ETS10) were even better than the 75th percentile estimate of the 36 individual members. This suggests that ALL provides better estimates for rainfall amounts and patterns compared to the median of the 36 individual members at all thresholds below 300 mm.

Fig. 3 Plots summarizing statistics from 36 members and ensemble averages for the JUN (a) and SURFERS (b) events. The boxes and whiskers show the results from the 36 members. The boxes show the inter-quartile range, the middle horizontal lines show the median, and the whiskers show the best and the worst values. The bias, MAE, and RMSE values were divided by 30, 40, and 80, respectively, to allow them to be plotted in the same graph. The results from five ensembles are shown with different markers. ALL represents the ensemble average of all 36 members, YSU represents the ensemble average for all members using the YSU scheme, KF represents the ensemble average for all members using the KF scheme, YSU_KF represents the ensemble average for all members using both the YSU and KF schemes, and YSU_BMJ represents the ensemble average for all members using both the YSU and BMJ schemes

Ensembles using certain mp (WSM3, WSM5, and WDM5) and ra (Dudhia/RRTM, CAM/CAM, and RRTMG/RRTMG) schemes showed little to no improvement in skill relative to ALL. However, there was a large difference in the results from ensembles using different pbl (YSU, MYJ) and cu (KF, BMJ) schemes. The ensemble using the YSU pbl scheme performed better than the ensemble using the MYJ pbl scheme, and similarly the ensemble using the KF cu scheme performed better than the ensemble using the BMJ cu scheme (not shown). The ensembles using either the YSU pbl scheme or the KF cu scheme outperformed ALL except for ETS 25, where KF was worse than ALL. The best performance was typically given by the ensemble using a combination of the YSU pbl and KF cu schemes (nine members). This ensemble also considerably outperformed the combination using the YSU pbl and BMJ cu schemes. At high rainfall thresholds (200 and 300 mm), this ensemble was even superior to the best result among the 36 individual members.

As mentioned above, results for the SURFERS event were different from those of other events. While ALL gave a higher spatial correlation and better ETS for multiple thresholds (except for 10 and 300 mm) compared to the median of the 36 individual members, it also produced larger bias, MAE, and RMSE compared to the median (Fig. 3b). The ensemble using the YSU pbl scheme performed better than ALL, while the ensemble using the KF cu scheme performed worse than ALL at all rainfall thresholds for ETS but was still better than ALL for bias, MAE, and RMSE. The ensemble using both the YSU pbl scheme and the KF cu scheme showed higher R, smaller bias, MAE, and RMSE, and better skill at rainfall thresholds below 100 mm compared to ALL, but the results were poorer at the 100- and 200-mm rainfall thresholds.

4 Discussion

Ensemble averages have been found to perform consistently as well as, if not better than, the median of individual members when evaluated using common metrics in weather forecasting, seasonal predictions, and climate simulations (Fraedrich and Leslie 1987; Hagedorn et al. 2005; Phillips and Gleckler 2006; Schwartz et al. 2010; Schaller et al. 2011). For many of these metrics (e.g., bias, RMSE), ensemble averaging smooths the field of interest, thus removing any large errors present in the individual ensemble members. However, this smoothing reduces the outlier values and hence the ability to capture extremes. Results presented in Fig. 3 suggest that a judicious choice of ensemble members allows the high rainfall centers to be captured by an ensemble average. For all events, assessments showed that the ensemble mean for all members (ALL) and for members using the YSU pbl scheme (YSU) provided better estimates of rainfall amounts and patterns compared to the median of the individual members, even though they also resulted in larger bias, MAE, and RMSE for the SURFERS event relative to the median. The ensemble using the combination of the YSU pbl scheme and the KF cu scheme (YSU_KF) was superior to all the other ensembles for seven of the eight events. For the SURFERS event, the ensemble using the combination of the YSU pbl scheme and the BMJ cu scheme (YSU_BMJ) gave the best performance for ETS 100–300.
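The smoothing effect described above can be seen in a toy example, sketched here under purely hypothetical numbers: three members each place a rainfall peak of roughly 300 mm in a different grid cell, so the cell-wise ensemble mean never approaches any member's peak. The variable names and values are illustrative and are not taken from the simulations.

```python
# Three made-up members on a three-cell grid; each peak sits in a different cell.
members = [
    [320.0, 40.0, 10.0],
    [30.0, 280.0, 20.0],
    [20.0, 50.0, 310.0],
]

# Cell-wise ensemble mean.
ensemble_mean = [sum(cells) / len(members) for cells in zip(*members)]

member_peaks = [max(field) for field in members]
print("member peaks (mm):", member_peaks)
print("ensemble-mean peak (mm):", round(max(ensemble_mean), 1))

# A 200-mm threshold is exceeded by every member somewhere,
# but nowhere by the ensemble mean.
print(all(peak >= 200 for peak in member_peaks), max(ensemble_mean) >= 200)
```

When members agree on where the rainfall center sits, as a sub-ensemble sharing pbl and cu schemes is more likely to do, the averaged peak is damped far less.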

The unique response of SURFERS prompted further investigation into the synoptic conditions prevailing during this event. We compared the complete rainfall field from WRF (i.e., including ocean grid cells) with observational rainfall data. As described in Evans et al. (2012), the SURFERS event developed from a tropical low that persisted for 5 to 6 days over the Coral Sea, classified as an ex-Tropical Cyclone (xTC) type by Speer et al. (2009). While SURFERS caused flash flooding throughout the region, including Surfers Paradise in Queensland, information from satellite images and the Climate Prediction Center Merged Analysis of Precipitation showed that the major rainfall center was offshore and hence was not captured in the land-based AWAP observations, whereas for all other events the major rainfall centers were on land. As the rainfall evaluation was conducted only over land, the SURFERS simulations were assessed on conditions peripheral to the main storm center. Thus, we propose that the geographical positioning of the main rainfall center of SURFERS relative to the observational rainfall dataset could explain the different behavior of SURFERS relative to the other events.

To further investigate whether the ensembles using the YSU pbl scheme, the KF cu scheme, or a combination of both give more realistic rainfall estimates in different weather conditions, we compared these ensembles with the full member ensemble (ALL) using an average of skill metrics from the eight events. Figure 4a shows differences between the three ensembles (YSU, KF, and YSU_KF) and the full member ensemble (ALL) averaged over eight events. The bias, MAE, and RMSE values were each divided by 100 to allow them to be plotted on the same scale as the other metrics. Positive values for R and ETS and negative values for bias, MAE, and RMSE indicate improvement relative to ALL. The results showed that the ensembles using either the YSU pbl scheme, the KF cu scheme, or their combination generally produced relatively small changes in R, bias, MAE, and RMSE relative to ALL. At higher rainfall thresholds, the ensembles performed much better than ALL based on the ETS. This indicates that an ensemble based on carefully chosen physics schemes can dramatically improve the ability of the ensemble to capture centers of high rainfall. When the SURFERS event was excluded from the statistics, the performance of these ensembles became even better for high rainfall (Fig. 4b). Considering that the ex-Tropical Cyclone type (like the SURFERS event) only constitutes 4 % of observed ECL events (Speer et al. 2009), the climatological performance of these ensembles would be more similar to Fig. 4b.

Fig. 4 Difference between the three ensembles (YSU, KF, and YSU_KF) and the full member ensemble (ALL) averaged over eight events (a) and over seven events (eight events excluding the SURFERS event) (b). The bias, MAE, and RMSE values were each divided by 100 to allow them to be plotted on the same scale as the other metrics. Positive values for R and ETS and negative values for bias, MAE, and RMSE indicate improvement relative to ALL

5 Conclusions

The performance of 36 physics scheme combinations of the WRF model and of their ensembles was evaluated for modeling rainfall totals associated with eight ECL events (four strong and four weak) using five statistical metrics (R, bias, MAE, RMSE, and ETS for six rainfall thresholds).

The results show that the ensemble average generally provides more realistic rainfall estimates compared to the median performer of the individual members. Improvements compared to individual ensemble members were seen in the accuracy of rainfall amount and spatial pattern. Furthermore, based on the sensitivity analyses of physics scheme combinations, we found that the ensembles using the YSU pbl scheme or the KF cu scheme provided improved rainfall estimates compared to both the median performer of individual members and the all-member ensemble mean, particularly at high rainfall thresholds. The ensemble average using the combination of the YSU pbl scheme and the KF cu scheme provided the best results for simulating centers of high rainfall. This points to the value of focusing only on a subset of ensemble runs when calculating an ensemble mean. Depending on the rainfall characteristics of interest, different parameterization-based sub-ensembles may improve the ensemble mean estimate.

References

Awan NK, Truhetz H, Gobiet A (2011) Parameterization-induced error characteristics of MM5 and WRF operated in climate mode over the Alpine Region: an ensemble-based analysis. J Clim 24:3107–3123. doi:10.1175/2011JCLI3674.1

Betts AK (1986) A new convective adjustment scheme. Part I: observational and theoretical basis. Q J Roy Meteorol Soc 121:255–270

Betts AK, Miller MJ (1986) A new convective adjustment scheme. Part II: single column tests using GATE wave, BOMEX, and arctic air-mass data sets. Q J Roy Meteorol Soc 121:693–709

Carril AF, Menéndez CG, Remedio ARC (2012) Performance of a multi-RCM ensemble for South Eastern South America. Clim Dyn 39:2747–2768

Chen F, Dudhia J (2001) Coupling an advanced land-surface/ hydrology model with the Penn State/ NCAR MM5 modeling system. Part I: model description and implementation. Mon Weather Rev 129:569–585

Clough SA, Shephard MW, Mlawer EJ, Delamere JS, Iacono MJ, Cady-Pereira K, Boukabara S, Brown PD (2005) Atmospheric radiative transfer modeling: a summary of the AER codes. J Quant Spectrosc Radiat Transf 91:233–244

Cocke S, LaRow TE (2000) Seasonal predictions using a regional spectral model embedded within a coupled ocean–atmosphere model. Mon Weather Rev 128:689–708

Collins WD, Rasch PJ, Boville BA, Hack JJ, McCaa JR, Williamson DL, Kiehl JT, Briegleb B (2004) Description of the NCAR community atmosphere model (CAM 3.0). NCAR technical note NCAR/TN-464+STR, 226 pp

Dee DP et al (2011) The ERA-Interim reanalysis: configuration and performance of the data assimilation system. Q J R Meteorol Soc 137:553–597

Dudhia J (1989) Numerical study of convection observed during the winter monsoon experiment using a mesoscale two-dimensional model. J Atmos Sci 46:3077–3107

Evans JP, McCabe MF (2010) Regional climate simulation over Australia’s Murray-Darling basin: a multitemporal assessment. J Geophys Res 115(D14). doi:10.1029/2010JD013816

Evans JP, McCabe MF (2013) Effect of model resolution on a regional climate model simulation over southeast Australia. Clim Res. doi:10.3354/cr01151

Evans JP, Westra S (2012) Investigating the mechanisms of diurnal rainfall variability using a regional climate model. J Clim 25:7232–7247. doi:10.1175/JCLI-D-11-00616.1

Evans JP, Ekstrom M, Ji F (2012) Evaluating the performance of a WRF physics ensemble over South-East Australia. Clim Dyn 39:1241–1258. doi:10.1007/s00382-011-1244-5

Fraedrich K, Leslie LM (1987) Combining predictive schemes in short-term forecasting. Mon Weather Rev 115:1640–1644. doi:10.1175/1520-0493

Hagedorn R, Doblas-Reyes F, Palmer T (2005) The rationale behind the success of multi-model ensembles in seasonal forecasting—I. basic concept. Tellus Ser A Dyn Meteorol Oceanogr 57:219–233

Hong S-Y, Lim J-OJ (2006) The WRF single-moment 6-class microphysics scheme (WSM6). J Kor Meteor Soc 42:129–151

Hong S-Y, Dudhia J, Chen S-H (2004) A revised approach to ice microphysical processes for the bulk parameterization of clouds and precipitation. Mon Weather Rev 132:103–120

Hong SY, Noh Y, Dudhia J (2006) A new vertical diffusion package with an explicit treatment of entrainment processes. Mon Weather Rev 134:2318–2341

Ishizaki Y, Nakaegawa T, Takayabu I (2012) Validation of precipitation over Japan during 1985–2004 simulated by three regional climate models and two multi-model ensemble means. Clim Dyn 39:185–206

Janjic ZI (1994) The step-mountain eta coordinate model: further developments of the convection, viscous sublayer and turbulence closure schemes. Mon Weather Rev 122:927–945

Janjic ZI (2000) Comments on “Development and evaluation of a convection scheme for use in climate models”. J Atmos Sci 57:3686

Jankov I, Gallus W Jr, Segal M, Shaw B, Koch S (2005) The impact of different WRF model physical parameterizations and their interactions on warm season MCS rainfall. Weather Forecast 20:1048–1060

Jones D, Wang W, Fawcett R (2009) High-quality spatial climate data-sets for Australia. Aust Meteorol Mag 58:233–248

Kain JS (2004) The Kain-Fritsch convective parameterization: an update. J Appl Meteorol 43:170–181

Kain JS, Fritsch JM (1990) A one-dimensional entraining/ detraining plume model and its application in convective parameterization. J Atmos Sci 47:2784–2802

Kain JS, Fritsch JM (1993) Convective parameterization for mesoscale models: the Kain-Fritsch scheme, the representation of cumulus convection in numerical models. In: Emanuel KA, Raymond DJ (eds) Amer Meteor Soc 246 pp

Mlawer EJ, Taubman SJ, Brown PD, Iacono MJ, Clough SA (1997) Radiative transfer for inhomogeneous atmosphere: RRTM, a validated correlated-k model for the long-wave. J Geophys Res 102:16663–16682

Paulson CA (1970) The mathematical representation of wind speed and temperature profiles in the unstable atmospheric surface layer. J Appl Meteorol 9:857–861

Pepler AS, Rakich CS (2010) Extreme inflow events and synoptic forcing in Sydney catchments. IOP Conf Ser: Earth Environ Sci (EES) 11(012010). doi:10.1088/1755-1315/11/1/012010

Phillips TJ, Gleckler PJ (2006) Evaluation of continental precipitation in 20th century climate simulations: the utility of multimodel statistics. Water Resour Res 42(3). doi:10.1029/2005WR004313

Schaller N, Mahlstein I, Cermak J, Knutti R (2011) Analyzing precipitation projections: a comparison of different approaches to climate model evaluation. J Geophys Res 116(10). doi:10.1029/2010JD014963

Schwartz CS, Kain JS, Weiss SJ, Xue M, Bright DR, Kong F, Thomas KW, Levit JJ, Coniglio MC, Wandishin MS (2010) Toward improved convection-allowing ensembles: model physics sensitivities and optimizing probabilistic guidance with small ensemble membership. Weather Forecast 25:263–280. doi:10.1175/2009WAF2222267.1

Skamarock WC, Klemp JB, Dudhia J, Gill DO, Barker DM, Duda M, Huang XY, Wang W, Powers JG (2008) A description of the advanced research WRF version 3. NCAR, Boulder, NCAR Technical Note

Speer M, Wiles P, Pepler A (2009) Low pressure systems off the New South Wales coast and associated hazardous weather: establishment of a database. Aust Meteorol Oceanogr J 58:29–39

Webb EK (1970) Profile relationships: the log-linear range, and extension to strong stability. Q J Roy Meteorol Soc 96:67–90

Wilks DS (2006) Statistical methods in the atmospheric sciences, 2nd edn. Academic Press, Amsterdam, p 627, International Geophysics Series, 91

Yuan X, Liang XZ (2011) Improving cold season precipitation prediction by the nested CWRF-CFS system. Geophys Res Lett 38, L02706. doi:10.1029/2010GL046104

Yuan X, Liang XZ, Wood EF (2012) WRF ensemble downscaling seasonal forecasts of China winter precipitation during 1982–2008. Clim Dyn 39:2041–2058. doi:10.1007/s00382-011-1241-8

Acknowledgments

This work is made possible by funding from the NSW Environmental Trust for the ESCCI-ECL project, the NSW Office of Environment and Heritage backed NSW/ACT Regional Climate Modelling Project (NARCliM), and the Australian Research Council as part of the Discovery Project DP0772665 and Linkage Project LP120200777. Thanks to the South Eastern Australian Climate Initiative (SEACI) for funding the CSIRO contribution to this study. This research was undertaken on the NCI National Facility in Canberra, Australia, which is supported by the Australian Commonwealth Government.

Ji, F., Ekström, M., Evans, J.P. et al. Evaluating rainfall patterns using physics scheme ensembles from a regional atmospheric model. Theor Appl Climatol 115, 297–304 (2014). https://doi.org/10.1007/s00704-013-0904-2

DOI: https://doi.org/10.1007/s00704-013-0904-2