Abstract

Due to consumer preference for products with ever higher performance, a requirement exists for precise autofocusing microscope systems to perform the inspection process in automated mass production lines. Accordingly, the present study proposes a laser-based microscope system in which a precise autofocusing capability is achieved using a position feedback signal based on the distance L between the geometrical center $(X_c, Y_c)$ of the image captured by the CCD sensor and the centroid $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ of the image. The experimental results show that the proposed system has a positioning accuracy of 2.2 μm and a response time of 1 s given a working range of ±200 μm. The autofocusing performance of the proposed system is thus better than that of a conventional centroid-based system, which typically achieves a positioning accuracy of around 5.2 μm.

1 Introduction

Non-contact inspection is a persistent trend in many electronic, biomedical and industrial applications. As a consequence, a requirement exists for highly precise microscope systems capable of performing the inspection process in automated mass production lines. In practice, implementing such systems requires the use of powerful yet highly efficient autofocusing and image-processing schemes. Thus, the problem of automated microscopy has emerged as an important concern in recent decades (Andersson 2011; Hsu et al. 2009; Liu et al. 2012a, b; Petruck et al. 2012; Rudnaya et al. 2012; Zhang et al. 2008).

Autofocusing is a critical operation in automated microscopy (Zeder and Pernthaler 2009). As a result, many autofocusing microscopes have been proposed. Generally speaking, existing autofocusing microscopes can be classified as either image-based (software) or optics-based (hardware) (Pengo et al. 2009). In image-based autofocusing microscopes, a precise focusing performance is achieved using a feedback signal based on the image sharpness, as quantified by the focus value, for example (Chang et al. 2009; Chen et al. 2010). Such microscopes are cheap, reliable and robust. As a result, they are used in many automated optical inspection applications throughout industry (Abdullah et al. 2009; Brazdilova and Kozubek 2009; Kim and Poon 2009; Lee et al. 2009; Moscaritolo et al. 2009; Shao et al. 2010; Wright et al. 2009). However, image-based autofocusing systems are relatively inefficient in determining the point of maximum focus. As a result, they are impractical for applications with a real-time inspection requirement. Moreover, the maximum achievable focusing accuracy is inevitably limited by depth of focus constraints. In an effort to resolve these limitations, many optics-based autofocusing methods have been proposed (Chao et al. 2010; Kim et al. 2007, 2008; Liu et al. 2012a, b; Rhee et al. 2009; Tanaka et al. 2006).

Optics-based autofocusing methods generally use a laser light source. However, fluctuations of the laser introduce noise, thereby degrading the positioning accuracy of the autofocusing system. In order to optimize the focusing performance of such systems, the power, frequency and geometry of the illuminating laser beam must all be stable. The literature contains many proposals for reducing the power and frequency fluctuations of laser light sources (Andronova and Bershtein 1991; Nakamura et al. 1987; Rodwell et al. 1986; Wang et al. 2000). However, to the best of the current authors’ knowledge, very few methods have been proposed for reducing the geometrical fluctuations. Traditionally, geometrical fluctuations in the laser beam are reduced using a mode-cleaner, namely a Fabry–Perot cavity used in transmission, which reduces the fluctuations by a factor approximately equal to the finesse (Gossler et al. 2003). Alternatively, the fluctuations are reduced using an adaptive optics system based on the interferometric detection of the phase front (Avino et al. 2007). However, such systems are expensive and complex, and are therefore poorly suited to most real-world inspection applications.

Accordingly, the present study proposes an optics-based autofocusing microscope system in which the effects of geometrical fluctuations in the laser beam are minimized by adjusting the position of the objective lens adaptively in accordance with the distance L between the geometrical center $(X_c, Y_c)$ of the image captured by the CCD sensor and the centroid $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ of the image. The autofocusing performance of the proposed system is demonstrated by means of a series of experimental trials using a transparent TFT sample.

2 Structure of laser-based autofocusing microscope system

2.1 Layout of proposed system

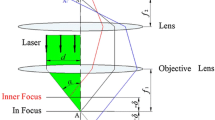

Figure 1 illustrates the basic structure of the proposed autofocusing microscope system. As shown, a light beam is emitted from a laser light source (Hitachi HL6501MG, 658 nm) and is expanded and collimated through an expander lens. The collimated laser beam is bisected by a knife and is then passed through two beam splitters (BSs). The first BS results in an equal reflection and transmission of red light, while the second BS results in a high reflection of red light and a high transmission of the remaining visible light (i.e., wavelengths other than 658 nm). The laser beam emerging from the second BS passes through an objective lens and is incident on the sample surface. The laser beam is reflected from the sample surface, passes back through the objective lens and BSs, enters an achromatic lens, and is finally incident on a CCD sensor (Guppy F-146C).

In accordance with basic geometric optics principles, the shape of the laser spot (or the distance L between the geometrical image center $(X_c, Y_c)$ and the centroid $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ of the image) changes depending on the distance between the sample surface and the objective lens (see Sect. 2.3). In the microscope system proposed in this study, the variation in the distance L is calculated using a self-written algorithm embedded in a signal processing unit. An autofocusing capability is then achieved by using a position feedback signal based on the variation in the value of L to dynamically adjust the position of the objective lens via a linear motor. The autofocusing procedure is observed in real-time using an infinity-corrected optical system (Navitar Zoom 6000).

2.2 Conventional autofocusing method

Figure 2 presents a schematic illustration of the optical path in the proposed autofocusing microscope system. When the laser beam strikes point A on the sample surface (placed at the focal plane 1 of the objective lens), it is reflected from the surface and incident at point A′ on the CCD sensor. From basic geometric principles, it can be shown that

$$\tan\alpha = \frac{d}{f_1} \tag{1}$$

where α is the maximum incidence angle of the laser beam, d is the radius of the collimated laser beam, and $f_1$ is the focal length of the objective lens. When the sample surface is moved from plane 1 to plane 3 (corresponding to a displacement of δ), the laser beam strikes point C on the sample surface and is then reflected. The reflected laser beam intersects plane 1 at point $A_1$ and is then incident on the CCD sensor at point $A_1'$. Again, from basic geometric principles, it can be shown that

$$\overline{AA_1} = 2\delta\tan\alpha \tag{2}$$

The displacement Δ of the incident point on the CCD sensor can be obtained as

$$\Delta = K\,\overline{AA_1} \tag{3}$$

where Δ is the distance between point A′ and point $A_1'$, and K is the total magnification of the objective lens and achromatic lens, and is given by

$$K = \frac{f_2}{f_1} \tag{4}$$

where $f_2$ is the focal length of the achromatic lens. Substituting Eqs. (1), (2) and (4) into (3), the shift Δ of the incident point on the CCD sensor is obtained as

$$\Delta = \frac{2\,d\,f_2}{f_1^{2}}\,\delta \tag{5}$$

Equation (5) indicates that the distance Δ and the defocus distance δ are linearly related.
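The linear dependence of Δ on δ can be checked numerically. The following is a minimal sketch that assumes the relations implied by the definitions above, namely tan α = d/f₁, a plane-1 offset of 2δ tan α, and K = f₂/f₁, so that Δ = 2 d f₂ δ / f₁². The function name `spot_shift` and all numerical parameter values are hypothetical and are not the design values of the system.

```python
def spot_shift(delta_um, d_mm, f1_mm, f2_mm):
    """Shift of the spot on the CCD (um) for a defocus distance delta (um).

    Assumed model: Delta = K * 2 * delta * tan(alpha), with
    tan(alpha) = d / f1 and K = f2 / f1.
    """
    tan_alpha = d_mm / f1_mm        # slope of the marginal ray (dimensionless)
    magnification = f2_mm / f1_mm   # total magnification K (dimensionless)
    return magnification * 2.0 * delta_um * tan_alpha


# Hypothetical parameters chosen only for illustration.
D_MM, F1_MM, F2_MM = 2.0, 20.0, 100.0

for delta in (-200.0, -100.0, 0.0, 100.0, 200.0):
    shift = spot_shift(delta, D_MM, F1_MM, F2_MM)
    print(f"delta = {delta:7.1f} um -> spot shift = {shift:7.1f} um")
```

With these illustrative values the sensitivity 2 d f₂ / f₁² equals 1, so the spot shift on the sensor equals the defocus distance; doubling δ doubles Δ for any choice of parameters.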

Figure 3 illustrates the shape of the laser spot on the CCD sensor given different values of the defocus distance δ. Note that in the figure $(X_c, Y_c)$ and $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ represent the positions of the geometrical image center and the centroid of the image, respectively. Assuming that the position of the geometrical image center $(X_c, Y_c)$ remains constant, the coordinates of the image centroid can be expressed as

where i and j are the pixel row number and pixel column number, respectively, and $P_{ij}$ is the image intensity of the pixel located at the intersection of row i and column j. In traditional autofocusing microscope systems, it is assumed that the geometrical image center $(X_c, Y_c)$ remains constant over time and is located at the origin of the x–y coordinate frame. Thus, the following equations are obtained from Eqs. (6) and (7):

Assuming that the CCD image has a uniform intensity, i.e., $P_{ij}$ is a constant for every image pixel, a linear relationship exists between the centroid coordinates of the image on the CCD sensor and the defocus distance δ [see Eqs. (5), (8) and (9)]. According to this linear relationship, conventional automated microscope systems achieve an autofocusing capability by using a feedback signal based on the centroid coordinates $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ to dynamically adjust the position of the objective lens. This autofocusing method is referred to conventionally as the centroid method (Wang et al. 2000; Weiss et al. 2010).
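As an illustration of the centroid method, the sketch below computes the standard intensity-weighted centroid of a small image. It assumes that this is the quantity the centroid equations express when the geometrical image center lies at the origin; the axis convention (x along the column index j, y along the row index i) and the function name `intensity_centroid` are likewise assumptions made for the example.

```python
def intensity_centroid(image):
    """Return (x_centroid, y_centroid) of a 2-D list of pixel intensities.

    Assumed convention: x runs along columns (index j), y along rows (index i).
    """
    total = 0.0
    sum_x = 0.0
    sum_y = 0.0
    for i, row in enumerate(image):
        for j, p in enumerate(row):
            total += p
            sum_x += j * p   # column index weighted by intensity P_ij
            sum_y += i * p   # row index weighted by intensity P_ij
    if total == 0:
        raise ValueError("image has no intensity; centroid is undefined")
    return sum_x / total, sum_y / total


# Uniform-intensity spot covering columns 1..3 and rows 1..2.
spot = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
x_centroid, y_centroid = intensity_centroid(spot)
print(x_centroid, y_centroid)
```

For a uniform spot the centroid coincides with the middle of the illuminated region, which is why a spot that grows to one side with defocus moves its centroid in proportion to δ.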

2.3 Proposed autofocusing method

In practice, geometrical fluctuations of the laser beam cause the geometrical image center $(X_c, Y_c)$ to vary between measuring times $T_1$ and $T_2$. In other words, the geometrical image center at time $T_1$ differs from the geometrical image center at time $T_2$. As a consequence, the positioning accuracy of the autofocusing system is reduced. Accordingly, the present study proposes a modified autofocusing design.

As shown in Fig. 4, assuming that the geometrical fluctuations of the laser beam affect only the coordinate positions of the laser spot (i.e., the shape of the laser spot is unchanged), the distance L between the geometrical image center $(X_c, Y_c)$ and the centroid $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ of the image can be regarded as a constant for different measuring times ($T_1$, $T_2$) for any given value of the defocus distance δ. In other words, regardless of the geometrical fluctuations of the laser beam, a linear relationship exists between the distance L and the defocus distance δ. That is,

where k is a proportionality constant.

Consequently, having determined the value of L by means of an image processing scheme (see Fig. 5), the defocus distance δ (i.e., the distance through which the objective lens should be shifted to obtain a focused image) can be computed directly from Eq. (10). This section covers the minimum background necessary for the discussion. For more comprehensive coverage, the reader is referred to references (National Instruments 2003, 2004).
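The insensitivity of L to a pure translation of the laser spot can be illustrated with a short sketch. Several assumptions are made here that are not taken from the text: the geometrical center is taken as the center of the bounding box of the above-threshold pixels, Eq. (10) is assumed to read L = kδ, and the names `distance_L`, `defocus_from_L` and the `threshold` parameter are hypothetical.

```python
import math


def distance_L(image, threshold=0.0):
    """Distance between the spot's geometric center and its intensity centroid.

    Assumption: the geometric center is the center of the bounding box of all
    pixels brighter than `threshold`.
    """
    pixels = [(i, j, p) for i, row in enumerate(image)
              for j, p in enumerate(row) if p > threshold]
    if not pixels:
        raise ValueError("no pixel exceeds the threshold")
    rows = [i for i, _, _ in pixels]
    cols = [j for _, j, _ in pixels]
    x_c = (min(cols) + max(cols)) / 2.0      # geometric center, x
    y_c = (min(rows) + max(rows)) / 2.0      # geometric center, y
    total = sum(p for _, _, p in pixels)
    x_cen = sum(j * p for _, j, p in pixels) / total   # centroid, x
    y_cen = sum(i * p for i, _, p in pixels) / total   # centroid, y
    return math.hypot(x_cen - x_c, y_cen - y_c)


def defocus_from_L(L, k):
    """Defocus distance under the assumed form L = k * delta."""
    if k == 0:
        raise ValueError("k must be non-zero")
    return L / k


# The same non-uniform spot at two positions, mimicking beam drift.
spot_t1 = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 4, 2, 1, 0, 0, 0],
    [0, 4, 2, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
spot_t2 = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 4, 2, 1, 0],
    [0, 0, 0, 4, 2, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
print(distance_L(spot_t1), distance_L(spot_t2))
```

The two frames have different centroid coordinates, yet both yield the same L (3/7 of a pixel for this toy spot), so a feedback signal built on L is unaffected by the drift while a signal built on the raw centroid is not.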

2.4 Optical design

A series of ray tracing simulations was performed to determine the optimal values of the major design parameters (d, $f_1$, $f_2$ and k) in the proposed autofocusing microscope system. The corresponding results obtained for a working range of ±200 μm are presented in Table 1. Figure 6 shows the optical model of the autofocusing system constructed using commercial ZEMAX optical design software. Meanwhile, Fig. 7 shows the numerical results obtained for the shape of the laser spot on the CCD sensor given different values of the defocus distance. As predicted by the theoretical analysis results, it is seen that the distance L and the defocus distance δ are linearly related.
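Given pairs of defocus distance and measured L, the proportionality constant k can be estimated with a straight-line fit. The sketch below uses ordinary least squares and keeps the intercept as a free parameter so that it does not depend on how L is signed. The sample values are synthetic and exactly linear; they are not simulation or measurement results, and the function name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys ~ slope * xs + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic samples for illustration only.
defocus_um = [-200.0, -100.0, 0.0, 100.0, 200.0]
L_pixels = [-40.0, -20.0, 0.0, 20.0, 40.0]

k, offset = fit_line(defocus_um, L_pixels)
print(f"k = {k:.3f} pixel/um, offset = {offset:.3f} pixel")
```

A fitted offset close to zero and small residuals would support the linear relation between L and δ over the working range.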

3 Experimental characterization of prototype model

The validity of the proposed autofocusing microscope system was verified by constructing a laboratory-built prototype (see Fig. 8). A series of autofocusing experiments was then performed using a transparent TFT array as a sample. In performing the experiments, the algorithm used to derive the positioning feedback signal was implemented using commercial LabVIEW software.

Figure 9 presents experimental images of the laser spot on the CCD sensor given different values of the defocus distance. It can be seen that the distance L varies linearly with the defocus distance δ. However, a ghost image resulting from the reflection of the laser beam from the lower surface of the transparent TFT sample is also observed (see dashed rectangular area in left-most image). Figure 10a presents a magnified view of the ghost image and the corresponding image intensity signal. It is seen that the intensity of the ghost image is sufficiently strong to affect the calculated value of L, and therefore degrades the positioning accuracy of the autofocusing system. Figure 10b illustrates the formation of the ghost image as the result of the reflection of the laser beam from the lower surface of the transparent sample. The ghost image cannot be avoided when using a transparent sample. However, in the present study, the effect of the ghost image is minimized by means of a filtering operation in the self-written autofocusing algorithm. Figure 11 presents a sequence of real-time images captured by the infinity-corrected optical system during the autofocusing procedure. The images confirm that the proposed system has an excellent autofocusing capability.
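The text does not specify the filtering operation used to suppress the ghost image. One simple possibility, shown purely as an illustration and not as the method used in this study, is to zero every pixel whose intensity falls below a fraction of the peak intensity before L is computed. The function name `suppress_below` and the 0.5 cutoff fraction are hypothetical.

```python
def suppress_below(image, fraction_of_peak):
    """Zero all pixels dimmer than fraction_of_peak times the brightest pixel."""
    if not 0.0 <= fraction_of_peak <= 1.0:
        raise ValueError("fraction_of_peak must lie in [0, 1]")
    peak = max(max(row) for row in image)
    cutoff = fraction_of_peak * peak
    return [[p if p >= cutoff else 0 for p in row] for row in image]


# Bright main spot on the left, weaker ghost reflection on the right.
frame = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 10, 0, 0, 0],
    [0, 8, 9, 0, 2, 3],
    [0, 0, 0, 0, 3, 2],
]
cleaned = suppress_below(frame, 0.5)
for row in cleaned:
    print(row)
```

Such a threshold only works when the ghost is clearly dimmer than the main spot; if the two overlap or have comparable intensity, a spatial mask or a different filter would be needed.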

Fig. 10 (a) Magnified view of ghost image in Fig. 9 and corresponding image intensity signal; (b) formation of ghost reflection

In order to verify the stability of the proposed autofocusing system, an experiment was performed to compare the variation over time of the geometrical center, centroid position, and distance L of the laser spot on the CCD sensor given a constant defocus distance of δ = 200 μm. Figures 12 and 13 present the corresponding experimental results, in which each pixel is equivalent to 1 μm. Comparing the two images presented in Fig. 12, it is seen that the geometry of the measured image at $T_2$ = 60 min is very different from that at $T_1$ = 0. Figure 13 shows that the variation in the centroid coordinates $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$ over a time interval of 270 min is around 5.2 pixels (equivalent to a positioning accuracy of 5.2 μm) while that in the distance L is around 2.2 pixels (equivalent to a positioning accuracy of 2.2 μm). In other words, the assumption of a constant centroid position in the conventional centroid autofocusing method is incorrect, and thus a sub-optimal focusing result is obtained. By contrast, in the method proposed in this study, the defocus distance is adjusted adaptively with changes in the value of L [see Eq. (10)], and thus the focusing performance is improved. Figure 14 presents typical experimental images captured during the autofocusing process. The images demonstrate that the proposed system has a response time of just 1 s given a working range of ±200 μm.

Overall, the experimental results show that the proposed autofocusing microscope system has a high positioning accuracy (approximately 2.2 μm) and a fast response time (~1 s) given a working range of ±200 μm. As a result, the proposed system is well suited to real-world automated inspection applications.

4 Conclusions

This study has presented a highly precise laser-based autofocusing microscope system for automated inspection applications. In the proposed approach, the effects of geometrical fluctuations in the laser beam are mitigated by driving the objective lens of the microscope adaptively using a position feedback signal based on the distance L between the geometrical center of the image $(X_c, Y_c)$ and the centroid of the image $(x_{\mathrm{centroid}}, y_{\mathrm{centroid}})$. The autofocusing performance of the proposed system has been demonstrated using a laboratory-built prototype and a transparent sample (a TFT array). The experimental results have shown that the proposed system has a rapid response time (i.e., ~1 s) given a working range of ±200 μm and achieves a higher positioning accuracy than conventional centroid-based focusing systems. Consequently, the proposed system represents a practical solution for a wide range of automated inspection applications in the electronic, biomedical and industrial fields.

References

Abdullah SJ, Ratnam MM, Samad Z (2009) Error-based autofocus system using image feedback in a liquid-filled diaphragm lens. Opt Eng 48:123602-1–123602-9

Andersson SB (2011) A nonlinear controller for three-dimensional tracking of a fluorescent particle in a confocal microscope. Appl Phys B 104:161–173

Andronova IA, Bershtein IL (1991) Suppression of fluctuations of the intensity of radiation emitted by semiconductor lasers. Sov J Quantum Electron 21:616–618

Avino S, Calloni E, Tierno A et al (2007) High sensitivity adaptive optics control of laser beam based on interferometric phase-front detection. Opt Lasers Eng 45:468–470

Brazdilova SL, Kozubek M (2009) Information content analysis in automated microscopy imaging using an adaptive autofocus algorithm for multimodal functions. J Microsc 236:194–202

Chang HC, Shih TM, Chen NZ et al (2009) A microscope system based on bevel-axial method auto-focus. Opt Lasers Eng 47:547–551

Chao PCP, Chiu CW, Shen JCY (2010) Precision positioning of a three-axis optical pickup via a double phase-lead compensator equipped with auto-tuned parameters. Microsyst Technol 16:117–141

Chen CY, Hwang RC, Chen YJ (2010) A passive auto-focus camera control system. Appl Soft Comput 10:296–303

Gossler S, Casey MM, Freise A et al (2003) Mode-cleaning and injection optics of the gravitational-wave detector GEO600. Rev Sci Instrum 74:3787–3795

Hsu WY, Lee CS, Chen PJ et al (2009) Development of the fast astigmatic auto-focus microscope system. Meas Sci Technol 20:045902-1–045902-9

Kim T, Poon TC (2009) Autofocusing in optical scanning holography. Appl Opt 48:H153–H159

Kim KH, Lee SY, Kim S et al (2007) A new DNA chip detection mechanism using optical pick-up actuators. Microsyst Technol 13:1359–1369

Kim KH, Lee SY, Kim S et al (2008) DNA microarray scanner with a DVD pick-up head. Curr Appl Phys 8:687–691

Lee S, Lee JY, Yang W et al (2009) Autofocusing and edge detection schemes in cell volume measurements with quantitative phase microscopy. Opt Express 17:6476–6486

Liu J, Shi T, Xia Q et al (2012a) Flip chip solder bump inspection using vibration analysis. Microsyst Technol 18:303–309

Liu CS, Hu PH, Lin YC (2012b) Design and experimental validation of novel optics-based autofocusing microscope. Appl Phys B 109:259–268

Moscaritolo M, Jampel H, Knezevich F et al (2009) An image based auto-focusing algorithm for digital fundus photography. IEEE Trans Med Imag 28:1703–1707

Nakamura S, Maeda T, Tsunoda Y (1987) Autofocusing effect due to wavelength change of diode lasers in an optical pickup. Appl Opt 26:2549–2553

National Instruments (2003) IMAQ vision concepts manual. http://www.ni.com/pdf/manuals/322916b.pdf

National Instruments (2004) NI-IMAQ user manual. http://www.ni.com/pdf/manuals/370160d.pdf

Pengo T, Munoz-Barrutia A, Ortiz-De-Solorzano C (2009) Halton sampling for autofocus. J Microsc 235:50–58

Petruck P, Riesenberg R, Kowarschik R (2012) Optimized coherence parameters for high-resolution holographic microscopy. Appl Phys B 106:339–348

Rhee HG, Kim DI, Lee YW (2009) Realization and performance evaluation of high speed autofocusing for direct laser lithography. Rev Sci Instrum 80:073103-1–073103-5

Rodwell MJW, Weingarten KJ, Bloom DM (1986) Reduction of timing fluctuations in a mode-locked Nd:YAG laser by electronic feedback. Opt Lett 11:638–640

Rudnaya ME, Morsche HG, Maubach JML et al (2012) A derivative-based fast autofocus method in electron microscopy. J Math Imaging Vis 44:38–51

Shao Y, Qu J, Li H et al (2010) High-speed spectrally resolved multifocal multiphoton microscopy. Appl Phys B 99:633–637

Tanaka Y, Watanabe T, Hamamoto K et al (2006) Development of nanometer resolution focus detector in vacuum for extreme ultraviolet microscope. Jpn J Appl Phys 45:7163–7166

Wang SH, Tay CJ, Quan C et al (2000) Laser integrated measurement of surface roughness and micro-displacement. Meas Sci Technol 11:454–458

Weiss A, Obotnine A, Lasinski A (2010) Method and apparatus for the auto-focussing infinity corrected microscopes. US Patent 7,700,903 B2

Wright EF, Wells DM, French AP et al (2009) A low-cost automated focusing system for time-lapse microscopy. Meas Sci Technol 20:027003-1–027003-4

Zeder M, Pernthaler J (2009) Multispot live-image autofocusing for high-throughput microscopy of fluorescently stained bacteria. Cytom Part A 75A:781–788

Zhang Z, Feng Q, Gao Z et al (2008) A new laser displacement sensor based on triangulation for gauge real-time measurement. Opt Laser Technol 40:252–255

Acknowledgments

The authors gratefully acknowledge the financial support provided to this study by the National Science Council of Taiwan under Grant NSC 100-2218-E-194-008.

Cite this article

Liu, CS., Lin, YC. & Hu, PH. Design and characterization of precise laser-based autofocusing microscope with reduced geometrical fluctuations. Microsyst Technol 19, 1717–1724 (2013). https://doi.org/10.1007/s00542-013-1883-z

DOI: https://doi.org/10.1007/s00542-013-1883-z