Abstract

Erroneous object distance often causes significant errors in vision-based measurement. In this paper, we propose to apply autofocus to control object distance in order to enhance the measurement accuracy of a machine vision system for robotic drilling. First, the influence of the variation of object distance on the measurement accuracy of the vision system is theoretically analyzed. Then, a Two Dimensional Entropy Sharpness (TDES) function is proposed for autofocus after a brief introduction to various traditional sharpness functions. Performance indices of sharpness functions including reproducibility and computation efficiency are also presented. A coarse-to-fine autofocus algorithm is developed to shorten the time cost of autofocus without sacrificing its reproducibility. Finally, six major sharpness functions (including the TDES) are compared with experiments, which indicate that the proposed TDES function surpasses other sharpness functions in terms of reproducibility and computational efficiency. Experiments performed on the machine vision system for robotic drilling verify that object distance control is accurate and efficient using the proposed TDES function and coarse-to-fine autofocus algorithm. With the object distance control, the measurement accuracy related to object distance is improved by about 87 %.

Keywords

- Object distance

- Measurement accuracy

- Sharpness function

- Autofocus

- Vision-based measurement

- Robotic drilling

1 Introduction

Robotic drilling systems have become a feasible option for fastener hole drilling in aircraft manufacturing because of their low investment, high flexibility, and satisfactory drilling quality. Research and development of flexible robotic drilling systems have been conducted by universities and aircraft manufacturers since the year 2000. Lund University developed an effective robotic drilling prototype system, which demonstrated the great potential of applying robotic drilling in the field of aircraft assembly (Olsson et al. 2010). Electroimpact (EI) Corporation developed the One-sided Cell End effector (ONCE) robotic drilling system, and successfully applied it to the assembly of Boeing’s F/A-18E/F Super Hornet (DeVlieg et al. 2002). By scanning a workpiece with a vision system, the ONCE system can measure the workpiece’s position and correct drilling positions by comparing the nominal and actual positions of the workpiece. Beijing University of Aeronautics and Astronautics (BUAA) developed the MDE60 end-effector for robotic drilling (Bi and Liang 2011), which integrated a vision unit for measuring the locations of workpieces and welding seams. A robotic drilling system developed at Zhejiang University (ZJU) also integrated a vision system, which was applied to measure reference holes for correcting the drilling positions of a workpiece (see Fig. 1).

In robotic drilling, the mathematical model of the work cell (including the robot, workpiece, jigs, etc.) is used as the basis for the generation of robot programs. However, the mathematical model does not exactly coincide with reality regarding the shape, position, and orientation of the workpiece, which leads to position errors of drilled fastener holes. In order to enhance the position accuracy of drilled holes, the robot programs should be created in accordance with the actual assembly status of the workpiece. This is usually achieved by creating some reference holes on the workpiece, whose actual positions are typically measured with a vision system, and correcting the drilling positions of fastener holes according to the differences between the actual and nominal positions of the reference holes (Zhu et al. 2013).

High quality standards in the aerospace industry require that fastener holes drilled by a robotic system have a position accuracy of ±0.2 mm (Summers 2005), which in turn places a stringent requirement on the measurement accuracy of the vision system integrated into the robotic system. Since the image of an object being photographed changes with the variation of object distance (the distance between the camera lens and the object being shot), measurement errors occur when the object is not in focus in vision-based measurements. Therefore, it is important to ensure that object distance is correct and consistent during vision-based measurements. Autofocus, which automatically adjusts the distance between the object being shot and the camera lens to maximize the focus of a scene (Chen et al. 2013), can be used to locate the in-focus position and thus achieve the correct object distance. Focusing has been widely used in bio-engineering, medicine, and manufacturing (Firestone et al. 1991; Geusebroek et al. 2000; Mateos-Pérez et al. 2012; Santos et al. 1997; Liu et al. 2007; Osibote et al. 2010; Hand et al. 2000), where it is used to improve image sharpness. In this paper, we propose to use autofocus to improve the measurement accuracy of a machine vision system.

The rest of the paper is organized as follows: In Sect. 2, measurement errors of a machine vision system with respect to the variation of object distance are theoretically analyzed. Several traditional sharpness functions and a new sharpness function based on two-dimensional entropy are presented in Sect. 3. In Sect. 4, a coarse-to-fine autofocus algorithm for fast autofocus in the robotic drilling environment is discussed. Section 5 addresses experimental evaluations of various sharpness functions. Experiments of object distance control in a machine vision system with the proposed autofocus method are presented in Sect. 6. Finally, conclusions are drawn in Sect. 7.

2 Measurement Errors Induced by Erroneous Object Distance

In robotic drilling, positions of reference holes are measured with a 2D vision system. In order to achieve the required measurement accuracy, error sources of the vision system should be analyzed and controlled. Since the drill axis is perpendicular to the workpiece in robotic drilling, the deviation along the drill axis does not introduce positioning errors of fastener holes. Thus, the major factors affecting the measurement accuracy of the vision system are the flatness of the workpiece surface being measured, the perpendicularity of the optical axis of the camera with respect to the workpiece surface, and the variation of object distance. In robotic drilling for aircraft assembly, the aeronautical components dealt with are often panel structures such as wing and fuselage panels, which have small surface curvature. In addition, the region captured by the camera in a single shot is small. Therefore, it can reasonably be assumed that the region captured by the camera is planar. The optical-axis-to-workpiece perpendicularity can be guaranteed by accurate installation and extra perpendicularity sensors. Owing to improvements in lens production and the use of a camera with a small field of view of 18° (the visible area is 28 mm × 21 mm) and a short object distance, skewness and lens distortion can reasonably be ignored (Zhan and Wang 2012). It is therefore suitable to analyze the influence of object distance on measurement accuracy with the pinhole imaging model.

Measurement errors caused by erroneous object distance in a machine vision system for robotic drilling are illustrated in Fig. 2a. Suppose O is the optical center of a camera, OA is the optical axis, GH is the image plane, and AB is an object plane perpendicular to the optical axis. Ideally, the projection of an object point B onto the image plane is H. The corresponding image point moves to \( H_{1} \) when object distance increases to \( OA_{1} \), and to \( H_{2} \) when object distance decreases to \( OA_{2} \). Thus, measurement errors of the vision system occur when the geometric dimension of the object being measured is calculated from an image acquired at an erroneous object distance.

Let the in-focus object distance OA be z, and suppose object distance fluctuates by \( \Delta z \), so that \( OA_{1} = z +\Delta z \) and \( OA_{2} = z -\Delta z \). For clarity, define \( AB = A_{1} E = A_{2} F = s \).

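From the similarity \( \Delta OAC \sim\Delta OA_{1} E \), where C is the point at which the ray OE crosses the in-focus object plane, it follows that

and the measurement error is

Similarly, from \( \Delta OAD \sim\Delta OA_{2} F \), where D is the point at which the ray OF crosses the in-focus object plane, we can obtain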

thus the measurement error is

Assuming that \( \Delta z \) is positive when object distance increases (and vice versa), Eqs. (2) and (4) can be combined into a unified model of measurement errors as follows.
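One reconstruction of this derivation under the pinhole model is sketched here. It assumes that C and D are the points where the rays OE and OF cross the in-focus object plane, that the error is taken as the apparent lateral position minus the true value s, and that the numbering (1) to (5) follows the equation references in the text. The symbol \( \delta \) for the error is introduced only for this illustration.

\[ \frac{AC}{A_{1}E} = \frac{OA}{OA_{1}} \;\Rightarrow\; AC = \frac{z}{z + \Delta z}\,s \qquad (1) \]

\[ \delta_{1} = AC - AB = -\frac{\Delta z}{z + \Delta z}\,s \qquad (2) \]

\[ \frac{AD}{A_{2}F} = \frac{OA}{OA_{2}} \;\Rightarrow\; AD = \frac{z}{z - \Delta z}\,s \qquad (3) \]

\[ \delta_{2} = AD - AB = \frac{\Delta z}{z - \Delta z}\,s \qquad (4) \]

With \( \Delta z \) treated as a signed quantity (positive when object distance increases), both cases take the single form

\[ \delta(s, z, \Delta z) = -\frac{\Delta z}{z + \Delta z}\,s \qquad (5) \]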

The surface model of Eq. (5) is shown in Fig. 2b. If the in-focus object distance of the vision system is z = 225 mm, object distance deviates by \( \Delta z = \pm 2\,{\text{mm}} \) (the accuracy of industrial robots and the setup accuracy of aircraft panel structures are usually at the mm level), and the distance of a geometric feature from the optical axis is s = 7.5 mm, then the maximum measurement error is 0.07 mm. Such an error is significant for vision-based measurement in robotic drilling, whose target accuracy is typically ±0.1 mm. Moreover, object detection algorithms demand sharply focused images to extract useful information (Bilen et al. 2012). It is therefore essential to use autofocus to achieve the correct object distance and bring the workpiece being shot into focus in vision-based measurements in robotic drilling.
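The numbers in this example can be checked with a short script. This is a minimal sketch that assumes the pinhole-model error takes the form \( -s\,\Delta z/(z + \Delta z) \) with signed \( \Delta z \); the function name `measurement_error` and the sign convention are illustrative assumptions rather than the authors' notation.

```python
def measurement_error(s: float, z: float, dz: float) -> float:
    """Signed lateral measurement error (mm) under a pinhole model.

    s  : true distance of the feature from the optical axis (mm)
    z  : in-focus object distance (mm)
    dz : signed deviation of object distance, positive when it increases (mm)

    Assumed form: the feature at distance z + dz appears at s * z / (z + dz)
    when interpreted at the in-focus distance z, so the error is
    s * z / (z + dz) - s = -s * dz / (z + dz).
    """
    if z + dz <= 0:
        raise ValueError("object distance must stay positive")
    return -s * dz / (z + dz)


if __name__ == "__main__":
    # Values taken from the text: z = 225 mm, s = 7.5 mm, dz = +/- 2 mm.
    for dz in (+2.0, -2.0):
        print(f"dz = {dz:+.1f} mm -> error = {measurement_error(7.5, 225.0, dz):+.4f} mm")
```

With the values from the text, the magnitudes come out at roughly 0.066 mm and 0.067 mm, in line with the 0.07 mm quoted above.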

3 Sharpness Functions and Their Performance Indices

3.1 Sharpness Functions

Autofocus is the process of adjusting object distance or focal length based on the sharpness of acquired images so that the object being photographed is at the focal position. In robotic drilling, camera internal parameters including the focal length are kept constant in order to take correct measurements of the geometric dimensions of workpieces. Therefore, autofocus is achieved by adjusting the distance between the camera lens and the workpiece surface being photographed on the basis of image sharpness measures. The sharpness of an image reflects the amount of high frequency components in the frequency domain of the image (Tsai and Chou 2003), as well as the richness of edges and details in the image. Defocused images inherently carry less information than sharply focused ones (Krotkov 1988). Based on these phenomena, various sharpness functions have been proposed in the literature. Considering frequency of usage and variety, the following classical sharpness functions are selected for comparison with the proposed new sharpness function.

A sharpness function based on the Discrete Cosine Transform (DCTS) (Zhang et al. 2011) uses the amount of high frequency components in an image as the sharpness index of the image: the greater the amount of high frequency components, the better the degree of focus of the image. A sharpness function based on Prewitt Gradient Edge Detection (PS) (Shih 2007) evaluates image sharpness by detecting the edge gradient features of an image using an operator simpler than the Sobel operator. The Laplacian sharpness (LS) function (Mateos-Pérez et al. 2012) instead uses the Laplacian operator to detect gradient features of edges in an image. The variance sharpness (VS) function (Mateos-Pérez et al. 2012) uses the square of the total standard deviation of an image as its sharpness criterion. The one-dimensional entropy sharpness (ODES) function is based on Monkey Model Entropy (MME) (Firestone et al. 1991). MME is the first simple and widely used method for calculating the entropy of an image (Razlighi and Kehtarnavaz 2009), which reflects the average amount of information or uncertainty in the image. According to the definition of information entropy (Shannon 2001), the entropy of an image characterizes its overall statistical property, or more specifically, the overall measure of uncertainty. An in-focus image often has a wider distribution of gray values than out-of-focus images, and the distribution of gray values reflects image sharpness to some extent. Therefore, the entropy of an image can be used as the sharpness measure of the image.
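To make two of these classical measures concrete, the sketch below computes a variance-based and a Laplacian-based sharpness value for a small gray-scale image stored as a list of rows. The exact formulations in the cited works may differ (for example, absolute versus squared responses, or normalization), so the 4-neighbor Laplacian kernel, the sum of squared responses, and the function names are assumptions made for illustration.

```python
def variance_sharpness(img):
    """Variance of all gray values (square of the overall standard deviation)."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def laplacian_sharpness(img):
    """Sum of squared 4-neighbor Laplacian responses over interior pixels."""
    height, width = len(img), len(img[0])
    total = 0
    for i in range(1, height - 1):
        for j in range(1, width - 1):
            lap = (img[i - 1][j] + img[i + 1][j] + img[i][j - 1] + img[i][j + 1]
                   - 4 * img[i][j])
            total += lap * lap
    return total


# Hypothetical test images: a hard step edge versus a smooth ramp.
sharp_edge = [[0, 0, 0, 255, 255, 255] for _ in range(4)]
soft_ramp = [[0, 51, 102, 153, 204, 255] for _ in range(4)]

if __name__ == "__main__":
    for name, image in (("sharp edge", sharp_edge), ("soft ramp", soft_ramp)):
        print(name, variance_sharpness(image), laplacian_sharpness(image))
```

Both measures score the step edge higher than the ramp, which is the behavior a sharpness function needs in order to peak at the in-focus position.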

For an 8-bit gray-scale image, the range of gray values is \( \chi_{1} = \left\{ {0,1,2, \ldots ,255} \right\} \). It is assumed that the gray values of all pixels are independent and identically distributed. The frequency of a gray level \( x\left( {x \in \chi_{1} } \right) \) is \( N_{x} \) in the image with N pixels. A pixel in the image is denoted \( X_{ij} \), where i and j are the row and column numbers, respectively. Thus, the one-dimensional probability distribution \( P\left( x \right) = \Pr \left\{ {X_{ij} = x} \right\} \) can be constructed from the histogram of the image using \( P\left( x \right) = N_{x} / N \). Based on Shannon’s definition of information entropy (Shannon 2001), image entropy can be similarly defined by

and the one-dimensional entropy sharpness (ODES) function (Firestone et al. 1991) is:

Equation (6) is computationally efficient; however, it is not accurate enough for estimating image entropy. In Eq. (6), each pixel is used without considering its adjacent pixels in the image, so the spatial relationships between pixels are neglected. Therefore, less information is available in this statistical property of gray values, and the resulting image entropy is lower than its actual magnitude. In order to describe image entropy more accurately, it is necessary to consider the spatial relationships between pixels in the image entropy calculation. However, the dimension of the random distribution field of gray values increases when more relationships between pixels are included in image entropy estimation, leading to increased computational complexity. A good balance between the accuracy and the complexity of entropy calculation is needed because image processing speed is important for the in-process vision system integrated in the robotic drilling system. Thus, we propose to use the two-dimensional joint entropy of vectors defined by a pixel and its right-adjacent pixel in an image, which was initially used for object extraction (Pal and Pal 1991), as the measure of image entropy.
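A histogram-based entropy of this kind can be sketched in a few lines. The example assumes the standard Shannon form \( -\sum_{x} P(x)\log_{2} P(x) \) with a base-2 logarithm; the base and the function name `entropy_1d` are illustrative assumptions, not details confirmed by the text.

```python
import math
from collections import Counter


def entropy_1d(img):
    """Shannon entropy (bits) of the gray-level histogram of an image.

    img is a list of rows of integer gray values. Each pixel is treated
    independently of its neighbors, so spatial structure is ignored.
    """
    pixels = [p for row in img for p in row]
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Two hypothetical images with identical histograms but different layouts.
blocks = [[0, 0, 255, 255] for _ in range(4)]
stripes = [[0, 255, 0, 255] for _ in range(4)]

if __name__ == "__main__":
    print(entropy_1d(blocks), entropy_1d(stripes))
```

Because only the histogram enters the calculation, the two layouts receive the same value, which illustrates the limitation discussed above.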

The joint gray value of a pixel and its right-adjacent pixel in an image can be represented by \( \left( {x,y} \right) \), and its range is shown in Table 1. Denote the two-dimensional probability distribution of the joint gray values as \( P\left( {x,y} \right) = \Pr \left\{ {X_{ij} = x,X_{i,j + 1} = y} \right\} \), where x and y are the gray values of a pixel \( X_{ij} \) and its right-adjacent pixel \( X_{i,j + 1} \), respectively. This probability distribution can be obtained from the histogram of two-dimensional joint gray values by using \( P\left( {x,y} \right) = N_{xy} / N \), where \( N_{xy} \) is the frequency of a joint gray value \( \left( {x,y} \right) \) in the image with N pixels. The two-dimensional spatial entropy of the image is defined as

Based on Eq. (8), a Two-Dimensional Entropy Sharpness (TDES) function for autofocus can be given as follows:
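As an illustrative sketch (not the authors' implementation), the joint entropy of horizontally adjacent pixel pairs can be computed as below. It assumes the standard Shannon form \( -\sum_{x}\sum_{y} P(x,y)\log_{2} P(x,y) \), a base-2 logarithm, and normalization by the number of pixel pairs rather than by N so that the probabilities sum to one; the function name `entropy_2d` is hypothetical.

```python
import math
from collections import Counter


def entropy_2d(img):
    """Joint entropy (bits) of (pixel, right-adjacent pixel) gray-value pairs.

    img is a list of rows of integer gray values. The last pixel of each row
    has no right neighbor and therefore does not start a pair.
    """
    pair_counts = Counter()
    for row in img:
        for left, right in zip(row, row[1:]):
            pair_counts[(left, right)] += 1
    n_pairs = sum(pair_counts.values())
    if n_pairs == 0:
        raise ValueError("image must have at least two columns")
    return -sum((c / n_pairs) * math.log2(c / n_pairs)
                for c in pair_counts.values())


# Hypothetical images with identical histograms but different spatial layouts.
blocks = [[0, 0, 255, 255] for _ in range(4)]
stripes = [[0, 255, 0, 255] for _ in range(4)]

if __name__ == "__main__":
    print(entropy_2d(blocks), entropy_2d(stripes))
```

Unlike a histogram-only entropy, this measure assigns different values to the two layouts, because the pair statistics capture how gray values are arranged along each row.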

3.2 Performance Indices of Sharpness Functions

In addition to the performance indices of sharpness functions summarized in (Firestone et al. 1991; Shih 2007), such as accuracy, focusing range, unimodality, half width, and sensitivity to environmental parameters, two further indices for the evaluation of sharpness functions are presented in this section: reproducibility and computation efficiency.

Since variation of object distance causes significant errors in vision-based measurements, control of object distance is important to achieve the same in-focus position in multiple autofocus operations. Therefore, reproducibility is used as a performance index for autofocus in the vision system. Reproducibility, which differs from the index “accuracy” in the literature (Firestone et al. 1991), is defined as twice the standard deviation (the radius of a 95 % confidence interval) of the resulting in-focus positions in multiple autofocus operations. The lower the reproducibility value, the more easily the same in-focus position can be achieved and the better object distance can be controlled in multiple autofocus operations. Reproducibility is treated as the most important performance index for autofocus because of its direct and significant impact on the measurement accuracy of a vision system.
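The reproducibility index can be computed directly from a set of repeated in-focus positions. The sketch below assumes the sample standard deviation (n - 1 in the denominator), which the text does not specify, and uses made-up positions purely for illustration.

```python
import statistics


def reproducibility(in_focus_positions):
    """Twice the sample standard deviation of repeated in-focus positions (mm).

    The use of the sample (rather than population) standard deviation is an
    assumption made for this sketch.
    """
    if len(in_focus_positions) < 2:
        raise ValueError("at least two autofocus results are required")
    return 2.0 * statistics.stdev(in_focus_positions)


if __name__ == "__main__":
    # Hypothetical in-focus positions (mm) from three autofocus runs.
    print(reproducibility([96.0, 96.2, 96.4]))
```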

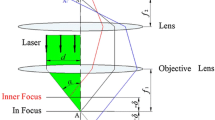

To verify the effectiveness of object distance control in the vision system integrated in the robotic drilling system, the encoder reading of the end-effector can be used as the controllable variable during the autofocus processes of the vision system. The relationship between object distance and the encoder reading is illustrated in Fig. 3. Object distance z is the distance between an object plane and the optical center of the camera lens, which is difficult to measure. However, the variation of object distance in autofocus operations is directly reflected by the encoder reading d, which can be controlled using the control system of the robotic drilling system.

Another important performance index for online vision-based measurements is computation efficiency, which is taken as the time cost of image sharpness estimation.

4 Coarse-to-Fine Autofocus Algorithm

The time cost of image sharpness estimation accounts for part of the total time cost of an autofocus process. A coarse-to-fine autofocus algorithm based on global search (Kehtarnavaz and Oh 2003) is proposed to reduce the time cost and to eliminate the negative influence of local extrema, which often occur in the traditional hill-climbing autofocus algorithm. The coarse-to-fine autofocus algorithm is shown in Fig. 4, in which autofocus is achieved by adjusting object distance z according to the spindle position d of the drilling end-effector.

The detailed procedures of the algorithm are described as follows:

- Step 1: Set autofocus parameters:

  - Range width of coarse autofocus, \( W_{\text{coarse}} \);

  - Range width of fine autofocus, \( W_{\text{fine}} \);

  - Increment of coarse autofocus, \( \Delta d_{\text{coarse}} \);

  - Increment of fine autofocus, \( \Delta d_{\text{fine}} \);

  - Threshold of the difference between the peak position of the sharpness function and the boundaries of the coarse autofocus range in coarse autofocus, \( T_{\text{coarse}} \);

  - Threshold of the difference between the peak position of the sharpness function and the boundaries of the fine autofocus range in fine autofocus, \( T_{\text{fine}} \).

- Step 2: Set the middle position of the coarse autofocus range \( d_{\text{coarse}}^{\text{mid}} \) to the home position of the spindle, where the object being photographed can be roughly seen.

- Step 3: Determine the coarse autofocus range \( \left( {d_{\text{coarse}}^{\min } ,d_{\text{coarse}}^{\max } } \right) \), where \( d_{\text{coarse}}^{\min } = d_{\text{coarse}}^{\text{mid}} - 0.5 \cdot W_{\text{coarse}} \) and \( d_{\text{coarse}}^{\max } = d_{\text{coarse}}^{\text{mid}} + 0.5 \cdot W_{\text{coarse}} \);

- Step 4: Perform coarse autofocus from the starting position \( d_{\text{coarse}}^{\min } \) with the increment \( \Delta d_{\text{coarse}} \) until the destination \( d_{\text{coarse}}^{\max } \) is reached;

- Step 5: Determine \( d_{\text{coarse}}^{\text{peak}} \), the peak position of the sharpness function used in coarse autofocus;

- Step 6: If the termination criteria \( d_{\text{coarse}}^{\text{peak}} - d_{\text{coarse}}^{\min } > T_{\text{coarse}} \) and \( d_{\text{coarse}}^{\max } - d_{\text{coarse}}^{\text{peak}} > T_{\text{coarse}} \) are satisfied, set the middle position of the fine autofocus range \( d_{\text{fine}}^{\text{mid}} \) to \( d_{\text{coarse}}^{\text{peak}} \). Otherwise, set \( d_{\text{coarse}}^{\text{mid}} \) to \( d_{\text{coarse}}^{\text{peak}} \) and go back to Step 3;

- Step 7: Determine the fine autofocus range \( \left( {d_{\text{fine}}^{\min } ,d_{\text{fine}}^{\max } } \right) \), where \( d_{\text{fine}}^{\min } = d_{\text{fine}}^{\text{mid}} - 0.5 \cdot W_{\text{fine}} \) and \( d_{\text{fine}}^{\max } = d_{\text{fine}}^{\text{mid}} + 0.5 \cdot W_{\text{fine}} \);

- Step 8: Perform fine autofocus from the starting position \( d_{\text{fine}}^{\min } \) with the increment \( \Delta d_{\text{fine}} \) until the destination \( d_{\text{fine}}^{\max } \) is reached;

- Step 9: Determine \( d_{\text{fine}}^{\text{peak}} \), the peak position of the sharpness function used in fine autofocus;

- Step 10: If the termination criteria \( d_{\text{fine}}^{\text{peak}} - d_{\text{fine}}^{\min } > T_{\text{fine}} \) and \( d_{\text{fine}}^{\max } - d_{\text{fine}}^{\text{peak}} > T_{\text{fine}} \) are satisfied, accept \( d_{\text{fine}}^{\text{peak}} \) as the result of coarse-to-fine autofocus. Otherwise, set \( d_{\text{fine}}^{\text{mid}} \) to \( d_{\text{fine}}^{\text{peak}} \) and go back to Step 7.
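The ten steps above can be sketched as a pair of nested scans. This is a minimal illustration under stated assumptions, not the authors' implementation: `sharpness_at` is a hypothetical callable that moves the spindle to position d, captures an image, and returns its sharpness; the `max_rounds` guard against endless re-centering is an addition that the steps do not mention.

```python
def scan(sharpness_at, d_min, d_max, step):
    """Evaluate sharpness on a uniform grid; return (peak position, samples)."""
    n = int(round((d_max - d_min) / step))
    positions = [d_min + i * step for i in range(n + 1)]
    values = [sharpness_at(d) for d in positions]
    best = max(range(len(positions)), key=values.__getitem__)
    return positions[best], len(positions)


def run_stage(sharpness_at, mid, width, step, threshold, max_rounds):
    """Scan a range centered at mid; re-center while the peak hugs a boundary."""
    images = 0
    for _ in range(max_rounds):
        d_min, d_max = mid - 0.5 * width, mid + 0.5 * width
        peak, n = scan(sharpness_at, d_min, d_max, step)
        images += n
        # Termination criteria: the peak must lie clear of both boundaries.
        if peak - d_min > threshold and d_max - peak > threshold:
            return peak, images
        mid = peak  # otherwise re-center the range on the peak and rescan
    raise RuntimeError("peak stayed near a range boundary; check parameters")


def coarse_to_fine(sharpness_at, d_home, w_coarse, w_fine,
                   step_coarse, step_fine, t_coarse, t_fine, max_rounds=10):
    """Coarse scan around d_home, then fine scan around the coarse peak."""
    coarse_peak, n_coarse = run_stage(
        sharpness_at, d_home, w_coarse, step_coarse, t_coarse, max_rounds)
    fine_peak, n_fine = run_stage(
        sharpness_at, coarse_peak, w_fine, step_fine, t_fine, max_rounds)
    return fine_peak, n_coarse + n_fine


if __name__ == "__main__":
    # Synthetic single-peak sharpness curve, used only for demonstration.
    def synthetic(d):
        return -(d - 96.2) ** 2

    print(coarse_to_fine(synthetic, 90.0, 20.0, 2.0, 1.0, 0.2, 2.0, 0.2))
```

With a coarse width of 20 mm at 1 mm increments and a fine width of 2 mm at 0.2 mm increments, a run that needs no re-centering evaluates 21 coarse and 11 fine positions.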

5 Experimental Evaluation of Sharpness Functions

5.1 Setup and Procedures of the Experiments

The experimental platform used for sharpness function evaluation is shown in Fig. 5, which consists of a Coord3 coordinate measuring machine (CMM), a Baumer TXG12 CCD camera, an annular light, a clamping unit, a test piece for reference hole detection, and a computer installed with the developed machine vision software.

The CCD camera, which has a lens of fixed focal length, is held by the clamping unit and connected to the computer with an Ethernet cable. The test piece is fixed on the Z-axis of the CMM. The position of the reference hole on the image plane can be adjusted by moving the CMM along its X- and Z-axes. Object distance can be adjusted by moving the CMM along its Y-axis. However, the exact value of object distance is not known. Therefore, we use the position d, which is the reading of the Y-axis coordinate of the CMM, to achieve autofocus.

During the image acquisition processes in focusing operations, the environmental conditions listed in Table 2 were designed to cover as many real scenarios in robotic drilling as possible. The main environmental factor is light intensity, which has a direct impact on the brightness of acquired images. Low light intensity lowers the intensity of each pixel, so that all intensities in an image fall within a narrow range (Jin et al. 2010). However, light intensity is influenced by many factors and is difficult to control and quantify. With light intensity fixed, image brightness can be adjusted through exposure time, and a short exposure time leads to darker images. Hence, exposure time was used as the major influential condition for the evaluation of sharpness functions. In addition, four supplemental influence factors: distribution of light intensity, position of reference holes in images (centered or not), type of reference holes (countersunk or not, see Fig. 6), were also included to test the robustness of the sharpness functions. The steps for sharpness function evaluation are as follows:

- (1) Adjust object distance by moving the CMM along its Y-axis so that the vision system is approximately in focus; the reading of the Y-axis coordinate of the CMM is then \( d = 215\,{\text{mm}} \). Set the focusing range to [205, 225] mm based on this roughly in-focus position d.

- (2) Conduct focusing with the global search strategy (Kehtarnavaz and Oh 2003). First, move the test piece to the position \( d = 205\,{\text{mm}} \); then move the test piece forward in increments of 0.2 mm, capture an image after each move, and compute the sharpness of the image using the various sharpness functions; repeat this process until the position \( d = 225\,{\text{mm}} \) is reached.

- (3) Plot the curves of the various sharpness functions in the XY coordinate system, where the X-axis and Y-axis stand for the position d of the images and the sharpness values of the images normalized to [0, 1], respectively.
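The sweep and normalization in steps (2) and (3) can be sketched as follows. The min-max normalization is an assumption (the text states only that values are normalized to [0, 1]), and `sharpness_at` is a hypothetical callable standing in for moving the test piece, capturing an image, and evaluating one sharpness function.

```python
def global_search(sharpness_at, d_start, d_end, step):
    """Sample sharpness on a uniform grid and min-max normalize it to [0, 1]."""
    n = int(round((d_end - d_start) / step))
    positions = [d_start + i * step for i in range(n + 1)]
    raw = [sharpness_at(d) for d in positions]
    low, high = min(raw), max(raw)
    span = high - low
    # A completely flat curve carries no focus information; map it to zeros.
    normalized = [(v - low) / span if span > 0 else 0.0 for v in raw]
    return positions, normalized


if __name__ == "__main__":
    # Synthetic bell-shaped curve peaking at 215 mm, for demonstration only.
    def synthetic(d):
        return 1.0 / (1.0 + (d - 215.0) ** 2)

    positions, curve = global_search(synthetic, 205.0, 225.0, 0.2)
    print(len(positions), positions[curve.index(max(curve))])
```

Sweeping [205, 225] mm in 0.2 mm increments yields 101 sample positions per run.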

5.2 Performance Evaluation of Sharpness Functions

Curves of various sharpness functions obtained in experiments are shown in Fig. 7, and the characteristics of the sharpness functions are analyzed as follows:

All sharpness functions show their best characteristics when the imaged area of the test piece has no reference hole (see Fig. 7i). The sharpness functions fluctuate when the distribution of light intensity is nonuniform (see Fig. 7g); however, almost all of them remain free of fluctuation in the range close to the in-focus position. The exception is the VS function, which loses its bell-shaped property in this case and cannot be used for autofocus. Thus, the VS function is not considered further.

From the curves of the sharpness functions obtained with different exposure times (Fig. 7a–f), it can be seen that ODES is sensitive to changes in exposure time, while the DCTS, PS, LS, and TDES functions are stable with respect to the exposure times used.

The resulting in-focus positions obtained using the various sharpness functions are shown in Fig. 8. The reproducibility values of the DCTS, PS, LS, ODES, TDES, and VS functions are 0.454, 0.430, 0.535, 0.386, 0.368, and 8.909 mm, respectively. The value for the VS function is far larger than the others, which agrees with the conclusion above that focusing is hard to achieve using the VS function. The TDES function has the smallest reproducibility value, 0.368 mm, which indicates that the same in-focus position is most likely to be obtained using this function in multiple autofocus operations under the various experimental conditions.

The computer used in the experiments was a DELL OPTIPLEX 380 with a 2.93 GHz Intel Core CPU and 2 GB of RAM, running Microsoft Windows XP Professional and Microsoft Visual Studio 2005. The algorithms were implemented in C++ with OpenCV (Bradski and Kaehler 2008). Time costs for sharpness estimation with the various sharpness functions are shown in Table 3; each time cost is the time needed to calculate sharpness values for 101 images of 1296 × 966 pixels acquired under experimental condition 3. Table 3 shows that the time costs of PS and DCTS are significantly longer than those of the other sharpness functions.

From the above discussion, it can be concluded that the TDES sharpness function has the smallest reproducibility value, a reasonably small time cost, a suitable focusing range, and a stable focusing curve. Therefore, the TDES sharpness function is selected for the vision system used in robotic drilling, since both the accuracy and the efficiency of vision-based measurement are important in real assembly scenarios.

6 Experiments of Object Distance Control with Autofocus on a Vision System for Robotic Drilling

The proposed autofocus method has been adopted in the vision-based measurement system. Autofocus experiments have been performed on a robotic drilling end-effector to evaluate the effectiveness and reproducibility of the developed autofocus method. Figure 9 shows the robotic drilling platform for the autofocus experiments, which includes a KUKA industrial robot, a drilling end-effector, a calibration board and its spindle mandril, a Baumer TXG12 CCD camera, an annular light, and a computer system with vision-based measurement software (see Fig. 10).

The CCD camera and the annular light are installed in a housing box of the drilling end-effector. The tool holder of the spindle clamps the spindle mandril, which is connected to the calibration board. The position of the calibration board is adjusted so that the shooting area (a 3 by 3 hole array) is visible to the camera (see Fig. 11). Once the adjustment is completed, the rotation of the spindle is restricted using a locknut. Thus, the only relative motion between the calibration board and the camera is along the drilling direction. The distance between the calibration board and the camera can be adjusted by moving the spindle along the drilling direction through the end-effector controller. Since the position of the spindle directly reflects the object distance, autofocus of the calibration board is achieved by adjusting the spindle position d. The parameters of the autofocus algorithm are set as \( W_{\text{coarse}} = 20\,{\text{mm}} \), \( W_{\text{fine}} = 2\,{\text{mm}} \), \( \Delta d_{\text{coarse}} = 1\,{\text{mm}} \), \( \Delta d_{\text{fine}} = 0.2\,{\text{mm}} \), \( T_{\text{coarse}} = 2\,{\text{mm}} \), and \( T_{\text{fine}} = 0.2\,{\text{mm}} \). The spindle is then moved until the linear encoder reading (the spindle position) is 90 mm, and \( d_{\text{coarse}}^{\text{mid}} \) is set to this value.

The curve of a coarse-to-fine autofocus of the calibration board is shown in Fig. 12. A total of 32 images are captured and used for sharpness calculation with the TDES sharpness function in the autofocus process. According to Fig. 12, the spindle positions \( d_{\text{coarse}}^{\text{peak}} \) and \( d_{\text{fine}}^{\text{peak}} \) are 96.003 and 96.203 mm in the coarse and fine autofocus stages, respectively, during the autofocus of the calibration board. The time cost for computing the sharpness of the 32 images is 0.64 s.

In the experiments, five test pieces with reference holes were used to verify the reproducibility and robustness of the proposed sharpness function and coarse-to-fine autofocus algorithm. After camera calibration, each of the five test pieces was fixed on the calibration board so that it was visible to the camera, and autofocus experiments were performed. The in-focus spindle positions with the five different test pieces are shown in Fig. 13, and the reproducibility value is around 0.275 mm. Thus, the measurement error caused by the inconsistency of object distance is less than 0.009 mm when the in-focus object distance is 225 mm. Accordingly, the measurement accuracy related to object distance is improved by about 87 %.
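The quoted improvement is consistent with taking the 0.07 mm worst-case error of Sect. 2 as the baseline and the 0.009 mm bound above as the controlled error. This choice of baseline is an assumption about how the figure was derived:

\[ \frac{0.07\,{\text{mm}} - 0.009\,{\text{mm}}}{0.07\,{\text{mm}}} \approx 0.87 \]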

7 Conclusions

In this paper, the influence of erroneous object distance on the accuracy of a vision-based measurement system is analyzed, and autofocus is applied to control the object distance in order to improve the measurement accuracy. A TDES function has been proposed for autofocus of the vision-based measurement system in robotic drilling applications. Experimental results show that the overall performance of the proposed TDES function surpasses that of the traditional sharpness functions, making it the most appropriate for vision-based measurement in robotic drilling. A coarse-to-fine autofocus algorithm has also been proposed to shorten the time cost of autofocus. Experiments performed on a robotic drilling end-effector show that the variation of object distance can be controlled to within 0.275 mm using the proposed TDES function and autofocus algorithm, which keeps the measurement error induced by erroneous object distance below 0.009 mm. The method of object distance control based on autofocus for improved vision-based measurement accuracy has been applied in robotic drilling for flight control surface assembly at Xi’an Aircraft Industry (Group) Company Ltd., Aviation Industry Corporation of China.

References

Bi S, Liang J (2011) Robotic drilling system for titanium structures. Int J Adv Manuf Technol 54(5–8):767–774. doi:10.1007/s00170-010-2962-2

Bilen H, Hocaoglu M, Unel M et al (2012) Developing robust vision modules for microsystems applications. Mach Vis Appl 23(1):25–42. doi:10.1007/s00138-010-0267-y

Bradski G, Kaehler A (2008) Learning OpenCV: computer vision with the OpenCV library. O’Reilly Media, Incorporated, Sebastopol

Chen C-C, Chiu S-H, Lee J-H et al (2013) A framework of barcode localization for mobile robots. Int J Robot Autom 28(4):317–330

DeVlieg R, Sitton K, Feikert E et al (2002) ONCE (ONe-sided Cell End effector) robotic drilling system. SAE Technical Paper 2002-01-2626. doi:10.4271/2002-01-2626

Firestone L, Cook K, Culp K et al (1991) Comparison of autofocus methods for automated microscopy. Cytometry Part-A 12(3):195–206. doi:10.1002/cyto.990120302

Geusebroek J-M, Cornelissen F, Smeulders AWM et al (2000) Robust autofocusing in microscopy. Cytometry Part-A 39(1):1–9. doi:10.1002/(SICI)1097-0320(20000101)39:1<1:AID-CYTO2>3.0.CO;2-J

Hand DP, Fox MDT, Haran FM, Peters C, Morgan SA, McLean MA et al (2000) Optical focus control system for laser welding and direct casting. Opt Lasers Eng 34(4–6):415–427

Jin S, Cho J, Kwon K et al (2010) A dedicated hardware architecture for real-time auto-focusing using an FPGA. Mach Vis Appl 21(5):727–734. doi:10.1007/s00138-009-0190-2

Kehtarnavaz N, Oh HJ (2003) Development and real-time implementation of a rule-based auto-focus algorithm. Real-Time Imaging 9(3):197–203. doi:10.1016/S1077-2014(03)00037-8

Krotkov E (1988) Focusing. Int J Comput Vision 1(3):223–237. doi:10.1007/BF00127822

Liu XY, Wang WH, Sun Y (2007) Dynamic evaluation of autofocusing for automated microscopic analysis of blood smear and pap smear. J Microsc 227(1):15–23. doi:10.1111/j.1365-2818.2007.01779.x

Mateos-Pérez JM, Redondo R, Nava R et al (2012) Comparative evaluation of autofocus algorithms for a real-time system for automatic detection of mycobacterium tuberculosis. Cytometry Part-A 81A(3):213–221. doi:10.1002/cyto.a.22020

Olsson T, Haage M, Kihlman H et al (2010) Cost-efficient drilling using industrial robots with high-bandwidth force feedback. Robot Comput Integr Manuf 26(1):24–38. doi:10.1016/j.rcim.2009.01.002

Osibote OA, Dendere R, Krishnan S et al (2010) Automated focusing in bright-field microscopy for tuberculosis detection. J Microsc 240(2):155–163. doi:10.1111/j.1365-2818.2010.03389.x

Pal NR, Pal SK (1991) Entropy: a new definition and its applications. IEEE Trans Syst Man Cybern 21(5):1260–1270

Razlighi QR, Kehtarnavaz N (2009) A comparison study of image spatial entropy. In: Proceedings of SPIE-IS&T electronic imaging, visual communications and image processing, San Jose, CA, pp 72571X-1–72571X-10. doi:10.1117/12.814439

Santos A, Ortiz de Solórzano C, Vaquero JJ et al (1997) Evaluation of autofocus functions in molecular cytogenetic analysis. J Microsc 188(3):264–272. doi:10.1046/j.1365-2818.1997.2630819.x

Shannon CE (2001) A mathematical theory of communication. ACM SIGMOBILE Mob Comput Commun Rev 5(1):3–55. doi:10.1145/584091.584093

Shih L (2007) Autofocus survey: a comparison of algorithms. In: Proceedings of SPIE-IS&T electronic imaging, digital photography III, San Jose, California, pp 65020B1–65020B11

Summers M (2005) Robot capability test and development of industrial robot positioning system for the aerospace industry. SAE Technical Papers 2005-01-3336. doi:10.4271/2005-01-3336

Tsai DM, Chou CC (2003) A fast focus measure for video display inspection. Mach Vis Appl 14(3):192–196. doi:10.1007/s00138-003-0126-1

Zhan Q, Wang X (2012) Hand–eye calibration and positioning for a robot drilling system. Int J Adv Manuf Technol 61(5–8):691–701. doi:10.1007/s00170-011-3741-4

Zhang S, Liu J-H, Li S et al (2011) The research of mixed programming auto-focus based on image processing. In: Zhu R, Zhang Y, Liu B, Liu C (eds) Information computing and applications: Communications in computer and information science. Springer, Berlin, pp 217–225

Zhu W, Qu W, Cao L et al (2013) An off-line programming system for robotic drilling in aerospace manufacturing. Int J Adv Manuf Technol 68(9–12):2535–2545. doi:10.1007/s00170-013-4873-5

Acknowledgments

Project supported by the National Natural Science Foundation of China (No. 51205352).

Copyright information

© 2017 Zhejiang University Press and Springer Science+Business Media Singapore

About this paper

Cite this paper

Mei, B., Zhu, Wd., Ke, Yl. (2017). Autofocus for Enhanced Measurement Accuracy of a Machine Vision System for Robotic Drilling. In: Yang, C., Virk, G., Yang, H. (eds) Wearable Sensors and Robots. Lecture Notes in Electrical Engineering, vol 399. Springer, Singapore. https://doi.org/10.1007/978-981-10-2404-7_27

DOI: https://doi.org/10.1007/978-981-10-2404-7_27

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-2403-0

Online ISBN: 978-981-10-2404-7

eBook Packages: Engineering, Engineering (R0)