Abstract

With the advent of Internet, images and videos are the most vulnerable media that can be exploited by criminals to manipulate for hiding the evidence of the crime. This is now easier with the advent of powerful and easily available manipulation tools over the Internet and thus poses a huge threat to the authenticity of images and videos. There is no guarantee that the evidences in the form of images and videos are from an authentic source and also without manipulation and hence cannot be considered as strong evidence in the court of law. Also, it is difficult to detect such forgeries with the conventional forgery detection tools. Although many researchers have proposed advance forensic tools, to detect forgeries done using various manipulation tools, there has always been a race between researchers to develop more efficient forgery detection tools and the forgers to come up with more powerful manipulation techniques. Thus, it is a challenging task for researchers to develop h a generic tool to detect different types of forgeries efficiently. This paper provides the detailed, comprehensive and systematic survey of current trends in the field of image and video forensics, the applications of image/video forensics and the existing datasets. With an in-depth literature review and comparative study, the survey also provides the future directions for researchers, pointing out the challenges in the field of image and video forensics, which are the focus of attention in the future, thus providing ideas for researchers to conduct future research.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction to image/video forensics and its importance

Compared to text, visual media has proved to be an efficient way of communication. Visual media includes images and videos which provide information very effectively. Various devices are used to capture this type of information. This information is regarded as certification to truthfulness. Also, CCTV footage is presented in a court of law as exploratory evidence. There are so many other fields that need visual material as key information. This increases the need for authenticity and integrity of images. In this era of the digital world, it is almost in every field that we require the authenticity and integrity of images and videos. But there are various easily available tools that can be used to manipulate these images and videos. This poses threat to their authenticity and integrity. It can, therefore, be concluded that “seeing is no longer believing” [1,2,3]. For example, the forgers can take advantage of image manipulation tools to hide the crime evidences, or to impersonate s to defame well-known and reputed persons, an organization or some political party. Thus, it is more important to have a robust and highly efficient tool that can cope up with this problem. Although there is easy availability of powerful manipulation tools, researchers have proposed many techniques to detect these forgeries accurately and efficiently and thus contribute to society in crime and corruption control.

1.1 Novelty of this article

This survey presents a systematic and detailed study in the field of image and video forensics. It was found that many of the researchers have come up with survey articles in this field. However, they have carried out their survey either in image forensics or video forensics and not covering all the topics. And there are very few survey papers that have presented both the domains under one roof. Still there exist no such survey paper that has carried out the survey in all the related categories of both image and video forensics. Thus, we have come up with the latest combined systematic survey on both image and video forensics with the detailed literature review along with the simplified comparative study that can prove to be the backbone of all the future research in these fields. At the end of this survey article, some common challenges are also discussed based on the comprehensive survey carried out in these fields. From these challenges, the future directions can be proposed. The main goal of carrying out this survey has been to provide extensive information about the related research work carried out in these fields. In order to make this possible, the survey was carried out in a systematic manner to analyze and investigate different digital image and video forensic techniques. This paper also provides the quality evaluation that was carried out to ensure that the papers selected for carrying out the survey are of high quality. After quality evaluation, the selected papers were surveyed to answer the formulated research questions. The important points to notice about this survey are as follows:

-

1.

This survey uses the quality assessment approach for identifying the quality papers for study in the concerned fields.

-

2.

This survey provides a systematic and well-organized review with each topic being extensively surveyed.

-

3.

This survey provides an extensive review of image and video forgery detection methods.

-

4.

This survey also suggests the researchers the future research directions by providing the research gaps and challenges of existing studies.

-

5.

This survey aims at providing the review of both image and video forensics under one roof.

This survey is carried out in an organized way and is divided into six main sectionsAs follows: Sect. 1 is an introductory section with first sub-section discussing about the novelty of this article; the second sub-section is about design constraints for this survey with five sub-sub-sections discussing about background, inclusion and exclusion criteria, quality evaluation, research questions and motivation for the readers; the third subsection discusses general structure for image forgery detection; the fourth subsection discusses about general structure for video forgery detection, and the fifth subsection discusses the applications of forensic techniques, and the final subsection summarizes the existing datasets for image and video forensics. In Sect. 2 the literature review of various categories of image forensic approaches are discussed, and in Sect. 3, the literature review of various categories of video forensic approaches have been discussed. In Sect. 4 the various deep learning approaches to image and video forensics are discussed. In Sect. 5 the future directions are discussed and finally in Sect. 6 the conclusions drawn from the survey have been discussed. Figure 1 shows the pictorial representation of various sections of this survey article.

1.2 Design constraints for this survey

In this section, we have discussed the design constraints for this survey. The survey was conducted stepwise which includes survey protocol development, carrying out the survey, experimental results analysis, results reporting, and research finding discussion.

1.2.1 Background

Digital forensic techniques provide a way to authenticate images or videos and check whether they are forged or not. It has been a long way back that this field has come into existence. Since then, it has been a common practice and has been practiced worldwide due to the advent of very powerful and freely available forgery tools like Adobe Photoshop, etc. However, in parallel, researchers are in a queue to develop the powerful forgery detection techniques, also called as forensic techniques. The image forensic techniques are of either active or passive type, whereas the video forensic techniques are of either inter-frame or intra-frame types. The active methods use the information that is hidden in an image at the time of their acquisition or before being publicly published. This hidden information is then used to detect the source and hence forgery. The active forensic methods make the use of watermarking, Digital signatures, and steganography for image authenticity confirmation. Passive-forensic-techniques do.not use acquisition time information for a forensic purpose that is inserted into the image. They use the traces that are left during the image processing steps which may include image acquisition phases or during their storage phase. The passive techniques have been further categorized into tempering operation-based and source identification-based. Tempering operation-based techniques are either of the type-dependent or of the type-independent technique. Each technique has been discussed in the upcoming sections with a detailed literature review and comparative study.

1.2.2 Inclusion and exclusion criteria

The set of rules that determine the research boundaries has been adopted in order to conclude a systematic review on two forensic types of images and video. Moreover, research manuscripts published in top journals like SCI and E-SCI, the research work carried out by proficient scientists, and also those published in top conferences have been included in the survey, whereas the irrelevant manuscripts that were not concerned with the field of our interest have been excluded. This standard has been set only after defining the research question. The main aim of this survey was the qualitative and quantitative research that includes the latest research studies and the other much older research has been excluded.

1.2.3 Quality evaluation

After the inclusion and exclusion criteria were set, the appropriate high-quality papers were selected to carry out the survey. The research topic under consideration is a vast area having many sub-areas with a large high-quality research paper available so far. Thus it was a must to have some rules for the selection of quality papers to carry out this study and according to these rules our survey must have included:

-

1.

High-quality research papers.

-

2.

Research carried out on high-quality dataset

-

3.

High-quality survey articles.

-

4.

Most cited research papers.

-

5.

Must have included sufficient data for analysis.

1.2.4 Research questions

This survey has been carried out to find and categorize various existing literature on forensic approaches in images and videos so as to provide the researchers of these fields with handy information about the work carried out in this field. To carry out this survey, a set of research questions were kept into account and these have been tabulated in the Table 1 given below.

1.2.5 Motivation for the readers

The word forgery means manipulation or modification of contents for fraudulent purposes or to deceive some proof. Forgeries may be done to either images or videos and hence the name image forgery and video forgery, respectively. Forgery has been a custom since old ages and thus is not new to this world. In earlier times, the two or more images were combined using the photomontage process. In this process, the images are overlapped, glued or pasted, or sometimes reordered so as to obtain a single photocopy. But with time, many researchers and developers have come up with forgery tools that are more powerful and easily available over the internet. Thus, it has now become a common custom to manipulate images and videos using these tools. Researchers are racing to develop such a tool that can efficiently and accurately detect forgery. Many researchers have developed many tools for forgery detection, but lack high efficiency and high accuracy. This survey aims at helping the researchers to provide them with the handful of information of research carried in this field so far.

1.3 General structure for image forgery detection

The process of image forgery detection requires a systematic approach in a step-by-step manner as shown in Fig. 2 below.

Step 1: Acquisition The noise is introduced during the acquisition of images or videos due to irregularities in the camera imaging sensors and optical lenses [4]. Color Filter Array (CFA) is used to filter this noise [5]. After that, some Image enhancement process is done before actually storing it in the memory, which results in the addition of more noise. Nowadays we have high-quality acquisition devices which result in lesser noise. Some recent image de-noising techniques include [6,7,8,9,10,11,12].

Step 2: Color to grayscale conversion and dimensionality reduction This process is used to reduce the computational complexity [13].

Step 3: Block division In this step, the resultant image from step 2 is divided into blocks that may be either overlapping or non-overlapping [14]. The nature of block division depends on the constraints like complexity and accuracy.

Step 4: Feature extraction This process includes the extraction of features, also called as descriptors, from the image which may be local or global [15, 16]. Local descriptors denote the texture in blobs, color, patches, corners, and other parameters which are mainly used for image identification and image recognition. Global descriptors denote counter, the shape of the image and are used for object classification and identification in an image and also used from image retrieval.

There are many existing algorithms used for feature extraction so far. Among the existing techniques of feature extraction, we apply the most the most important and efficient algorithms like Mirror-reflection Invariant Feature Transformation (MIFT) [17], Scale Invariant Feature Transformation (SIFT) [18], Discrete Cosine Transform (DCT) and Discrete Wavelet Transform (DWT) [19], Affine SIFT [20], Speeded up Robust Features (SURF) [21], and Singular Value Decomposition (SVD) [22]. Deep learning has also been a prominent technique for feature extraction. There also exist various deep learning based techniques [23,24,25,26,27,28,29,30] for image retrieval.

Step 5: Feature Sorting and matching After feature extraction is done, the resultant feature matrices are sorted so as to bring the identical ones closer to each other using sorting algorithms like KD-Tree sorting, Radix sorting, best bin (BFS) first Sorting, etc. These sorted feature blocks are then matched with every other block using various algorithms [15, 31, 32]. This is achieved by calculating certain parameters which include Euclidean distance, hamming distance, K-nearest neighbor (KNN), shift vectors, pattern entropy, probabilistic matching, clustering, etc.

Step 6: Forgery localization and MMO: After matching, a similarity score obtained is used to locate the forged region. To improve the localization, various mathematical morphological operations are performed.

1.4 General structure for video forgery detection

The video forgery detection also requires a step-by-step process in a systematic approach as shown in Fig. 3 below.

Video is the collection of images also called as frames that vary with time [33, 34]. Thus the process of forgery detection starts from frame extraction from the video and saves them in any image format like jpeg, etc. After the frame extraction, the process for forgery detection remains the same as image forgery detection. For a colored image, the conversion to greyscale is done. After that, the dimensionality reduction algorithm is applied to each image for the purpose of reducing the computational complexity. The resultant images obtained are then divided into blocks. These blocks may be either overlapping or may be non-overlapping blocks. Afterward, the feature extraction is done so as to extract the feature matrices of an image. These feature matrices are then sorted and the sorted feature matrices are then matched with every other feature matrix to obtain the similarity score, which is then used to locate the actual forged region. Once the forged region is located, the last step is attacking for robustness test, in which some attacks are added explicitly to check the optimization and efficiency of the video forgery detection algorithm. These attacks create the forged part in a video which is very tough to detect.

1.5 Applications of forensic techniques

The crime investigating agencies use the forgery detection techniques to get the clue about criminal behind the scene. Without these techniques it would have not been possible to serve the justice to the innocent people who never had done that crime. The major applications of forgery detection techniques are as follows:

I. Crime detection The modification of digital evidences like videos, images by using various tools in order to hide or eliminate the evidence of crime is considered to be the serious crime in the court of law.

-

a.

These crimes include the following:

-

b.

The creation of fake digital documents for defaming any community, industry, any political party or any person.

-

c.

Alteration of property documents in order to impersonate as an owner of that property.

-

d.

Alteration of academic records to falsely make oneself eligible for the job post, promotion to higher post or for achieving admission in some prestigious college without actually being eligible.

-

e.

Alteration of CCTV surveillance images or videos in order to hide or even destroy the evidence of crime.

-

f.

Alteration of medical records to hide the cause of death.

-

g.

Alteration of DNA reports to hide the identity of dead body for political or personal reasons.

-

h.

Alteration of social media videos or images to defame someone, some industry or some political party. The other reason may be to hide the actual source of crime.

-

i.

These crimes can prove to be varying hazardous/dangerous to the society. But using the digital forensic techniques, these crimes can be detected well on time and the culprit may be sent behind the bars.

II. Crime prevention If the forgery and the culprit are detected well in time and the actual criminal gets punished, it can thwart other criminals to do this crime in future and can thus help in prevention of crime. Also the consequences of the crime can be stopped if the forensic analysis is done properly and well on time.

III. Authentication The authentication of digital document is done through digital signatures, watermarks and steganography techniques. If the documents are compromised in any form then the forensic techniques can detect them and hence can find that if they are from authentic source or not.

1.6 Data sources

The datasets available for image forgery detection techniques have been summarized in the Table 2 given below.

The datasets available for video forgery detection have been tabulated in the Table 3 given below.

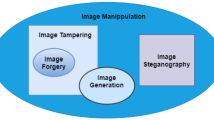

2 Image forensic approaches

There are two main categories of image forgery techniques, namely active and passive [65]. Active approaches include Steganography, Digital Signatures, and Watermarking. The passive digital image forgery methods are categorized into two main categories viz. tempering operation based and source camera identification based. Further, the tempering operation-based techniques are categorized into tempering operation-dependent and tempering operation independently. The dependent techniques include image slicing or image composites and copy-move methods. On the other hand, the independent ones include resampling, retouching, sharpening and blurring, brightness and contrast, image filtering, compression, image processing operations, image cropping and interpolation, and geometric transformations. The source camera identification-based methods include lens aberration, color filter array interpolation, sensor irregularities, and image feature-based techniques. Figure 4 shows the classification graphically. Some of the survey papers related to image forensics are tabularized in Table 4 given below.

2.1 Active approaches

Active forensic approaches rely on trustworthy image acquisition sources like cameras for forensic purposes [74, 75]. At the time of image acquisition, the digital signature [76, 77] and digital watermarking [78,79,80] are computed from the image, which can later be used for modification detection by simply verifying their values. The limitation of active approaches is that the authentication of images takes place at the very moment of their acquisition before actually storing in the memory card, making the use of specially designed digital signature and watermarking chips in the cameras. This limits their applications to very few situations. In contrast to these active approaches, passive approaches do not require prior information of image acquisition. Each of the active techniques has been explained below with the related work.

2.2 Steganography

Steganography refers to the hiding of information in the carrier using the key known as the steganography key. The sender hides the secret information in image pixels using a stego-key which is later read by the receiver using the shared key. Figure 5 shows an example of steganography.

There are two types of techniques, based on domains in which they work; these may be either spatial or frequency domain. Some of the spatial domain techniques include Least Significant Bit (LSB) [81], Pixel Mapping Method (PMM) [82], Random Pixel Selection (RPS) [83], Histogram based [84], and Grey Level Modification (GLM) [85]. LSB techniques use LSBs of image pixels to replace them with some secret information and this technique was able to reduce the system payload by 62.5%. PMM uses some mathematical function to map some image pixels with secret information bits. Similarly, RPS replaces randomly selected bits with the secret information to be hidden. Histogram-based technique hides the secret information in the image histogram and-GLM-technique-hides the information by simply modifying the image grey level. The frequency domain-based techniques are based on Discrete-Wavelet-Transform (DWT) [86], Discrete-Cosine-Transform (DCT) [87], Discrete-Fourier-Transform (DFT) [88], Discrete-Curve-Transform (DCVT) [89] and integer-wavelet- transform- (IWT) [90]. In these methods, the pixels are selected using corresponding transform functions. These pixels are then replaced by the secret information bits. Table 5 given below provides a brief description of various steganography techniques.

2.2.1 Digital signature

The digital signature provides a way to authenticate the document source. It uses the concept of two keys viz. private-and-public. Private-Key is known-to-its-owner whereas public-key-is-known to all. In a digital signature, the hash value is calculated using some hash function and then the hash value is encrypted using the sender's private key. This becomes the digitally signed document. At the receiver side, this document is first decrypted using the public key and then the hash value of this decrypted document is calculated using the same hash function and afterward, these hash values are compared.

If the hash values do not match, then it indicates that the document is modified in between source and destination and if matched, the document is verified to be authentic. The whole process of digital signature is shown in Fig. 6. The digital signature ensures that the content is authentic, reliable, and from an authentic source [91, 92]. There exist various other techniques which use digital signature concept. One of the techniques [93] uses digital-signature for improving Genetic-algorithm (GA) and Particle-swarm-optimization (PSO)-based-watermarking system. Another technique [94] used a digital-signature formed by combining Rivest–Shamir–Adleman (RSA), Vigenere–Cipher and Message-Digest-5 (MD-5), which proved to be robust against different image-forgery-attacks. Another technique [95] developed an improved-digital-signature-technique for improved data-integrity and authentication of biomedical-images in cloud. One more technique [96] was used to keep digital-signature-image-information invisible in cover-image for message-authenticity, integrity and non-repudiation. Another technique [97] combined digital-signature with LBP-LSB-Steganography-Techniques in order to enhance security of medical-images.

2.2.2 Digital watermarking

Digital watermarking involves the insertion of a certain code called as digest into the image right at its acquisition time. This is later used for an image authentication process which includes comparing extracted digest with the original digest [98,99,100]. If this extracted digest and original digest do not match then it means that some modification has happened to the image. Figure 7 shows a brief process of watermark embedding and its extraction. Watermark embedding is done by embedding algorithm which uses embedding key to embed watermark and the watermark extraction algorithm does the reverse process.

For example, in a technique [101] proposed recently, the division of an image into blocks is done based on similarity measurement. Then after blocking, some statistical measures are computed like mean, mode, median, and range of pixel values followed by encryption which encrypted values are then embedded in the image. This encrypted information is later used for forgery detection. Although the watermarking technique is very vigorous, still there are some limitations also. One of these limitations is that not all the devices come up with an inbuilt watermarking mechanism and some of the devices come up with the very expensive embedded watermarking features. The other drawback is that if some modifications are done for image enhancement, the watermarking mechanism is unable to recognize this legitimate modification. One another limitation is that there remains a requirement of an embedded system that can embed digest in an image. The watermark approaches may be Spatial-or-frequency domain. The spatial domain works on Least Significant Bit (LSB) [102, 103], Random Insertion in File (RIF) [104], and Spread Spectrum (SS) [105]. The technique [102] chains LSB and an inverse bit to determine the region to insert the watermark. Frequency domain techniques include discreet-fourier-transform (DFT) [106, 107], discrete-cosine-transform (DCT) [107, 108], singular-value-decomposition (SVD) [109, 110] and discrete-wavelet-transform (DWT) [111] depending upon the transform function used to determine the region in the image for embedding watermark. These techniques along with their brief description are tabulated in the Table 6 given below.

2.2.2.1 Fragile watermarking

These types of watermarks are used for the detection of tampering as they are highly sensitive to any sort of tampering. This makes it intolerable to any change even to only one bit. This type of watermarking is used for complete authentication purpose and any sort of watermark exposure designates the intentional or unintentional modifications to the image [114,115,116,117,118,119,120,121,122,123,124,125,126,127]. There exist various fragile watermarking techniques. Among these techniques, the robust and invisible technique [128] uses Spread Spectrum, Quantization DWT, and HVM. Another color image watermarking technique [129] used Hierarchical and BFW-SR¬ approaches. A reconstruction rate of 80% has been achieved. Another technique proposed [130] is based on Logistic map-based chaotic cryptography and histogram. The results obtained show that this Watermarking technique is secure and also a feasible technique for outsourced data. One another technique proposed related to fragile watermark [131] is based on LWT, DWT, and Amold-Transform (AT). The results obtained in this technique show that this technique is both robust and also secure watermarking technique and is thus best for copyright protection. It supports high capacity and has the capability of detecting any type of forgery attempt. One more imperceptible, secure, and robust technique proposed [132] is based on chaotic amp and Chi-Square test. The results show that this technique offers less complexity than other techniques like SVD based Watermarking and has an optimal watermark payload. These techniques have been summarized in Table 7.

2.2.2.2 Semi-fragile Watermarking

These types of watermarks are capable of being used for forensic purposes. In these techniques, the Authenticator can distinguish between the images whose content is intentionally modified and the authentic images which are intentionally modified with some modification approach that preserves the content of an image. These approaches may include compression technique (JPEG) with a reasonable compression rate [133,134,135,136,137,138,139]. There exist various semi-fragile techniques. The technique [140] uses the Discrete-Fourier-Transform (DFT). This technique makes the use of Substitution-box (S-box) and randomly selects the cover which is decided by the random number that is produced using the chaotic map. Results offered by this technique show that the technique is a secure and robust watermarking technique from all types of forgery attacks. However, its computational complexity is higher. One more technique proposed related to fragile and adaptive watermarking [141] is based on DWT and Set-Partitioning-In-Hierarchical-Tree (SPIHT) structure. After applying DWT on various sub-bands, the coefficients obtained from selected sub-bands are combined using SPHIT-algorithm. The partitioned image is further partitioned into bit-plane images and the selected bit planes of DWT coefficients receive the binary watermark. The results obtained in this technique show that this technique offers high accuracy and is adaptive in nature. One more proposed technique [142] is based on Singular-Value Decomposition (SVD) and-chaotic-permutation. This permutation is used to portion the watermark image into a number of fixed-sized blocks. These blocks are then transformed using SVD and the singular values of the cover receive the watermark using codebook techniques [143, 144]. The results show that this technique is a secure and robust watermarking scheme. Another Watermarking scheme for self-detection of JPEG-compression-forgery [36] embeds a watermark at the time of JPEG2000-compression. In order to generate the watermark, Perceptual-Hash-Function (PHF) has been applied on DWT-coefficients of the image. Another technique was proposed [145] that explores discrete-cosine-transform (DCT)-and-spread-spectrum (SS) to achieve the watermarking. DCT is- applied-on-the cover-image to transform it and-the-DCT-coefficients obtained receive the watermark. This technique is a secure and robust watermarking scheme and offers resistance against various forgery attacks. Table 8 given below summarizes the comparative study of semi-fragile watermarking techniques.

2.2.2.3 Robust watermarking

This type of watermarking algorithm can survive content preserving modification like compression, noise addition, filtering, and also geometric modifications like scaling translation, rotation, shearing, and many more. It is used for ownership authentication [146, 147]. Various robust watermarking techniques have been proposed so far. Recently the robust watermarking technique [148] was proposed which uses lifting wavelet- transform (LWT), singular value decomposition (SVD), multi-objective artificial bee colony optimization (MOABC), and logistic chaotic encryption (LCE) algorithms to create an encrypted watermarking scheme for grayscale images and showed robustness against multiple image processing attacks. Another technique [149] is based on the false positive problem (FPP) of SVD. This technique aims at resolving the FPP problem in previously existed transform domain techniques like DWT-SVD, RDWT-SVD, and IWT-SVD. It can be concluded from the simulation results of this work that if for the watermark embedding, instead of S vector, U vector is used, the problem of FPP can be resolved, and also the maximum values of robustness and imperceptibility can be obtained with the sacrifice of stability reduction which is obtained from S-vector singular values. Another technique [150] is based on DWT and encryption. This watermarking technique is applicable for the protection of image copyright. The technique makes use of Euclidean distance to identify those pixels of DWT-decomposed image that are supposed to receive the watermark. The simulation results obtained from this technique show that this technique is robust against various modifications which include compression, salt and pepper noise, and rotation. However, this technique has not been evaluated against geometric attacks. One more technique [151] is a content-based watermarking scheme for color images based on the local invariant significant bit-plane histogram. The results obtained proved that this technique is robust and shows resistance against the desynchronization attacks, and offers good visual quality and improved detection rates. This method has, however, high computation complexity and also offers less embedding capacity which needs to be taken care of. Another technique [152] is an adaptive watermarking scheme. The scaling factor has been evaluated using Bhattacharyya and Kurtoris technique. The simulation results depicted that it offers a high PSNR than the techniques [153, 154] which are in the same domain. However, the technique offers lower values for NCC, which makes it prone to attacks. Table 9 given below summarizes all these techniques.

2.3 Passive approaches

Passive approaches use intrinsic information and do not require prior information of an acquisition. They detect the image forgery when the watermark or digital signature is unavailable and also, they do not require the original image at the time of comparison. The passive digital image forgery methods are categorized into two main categories: tempering operation based and source camera identification based. Further, the tempering operation-based techniques are categorized into tempering operation-dependent and tempering operation-independent. The dependent techniques include image slicing or image composites and copy-move methods. On the other hand, the independent ones include resampling, retouching, sharpening and blurring, brightness and contrast, image filtering, compression, image processing operations, image cropping and interpolation, and geometric transformations. The source camera identification-based methods include lens aberration, color filter array interpolation, sensor irregularities, and image feature-based techniques. Each of these has been discussed below along with the comparative study.

2.3.1 Image splicing

Image splicing means to cut some object from one image and paste it on some other image [74]. Image splicing forgery is hard to detect than copy-move forgery because, in case of image splicing, different image object with different features and texture are pasted in a different environment. Figure 8 below shows an example of image-splicing.

There do currently exist a number of image-splicing techniques. One of such techniques [155] uses deep learning networks like ResNet-Conv, Mask-RCNN, ResNet101, and ResNet50 to detect the splicing forgery in an image and this technique has the ability to learn to detect the discriminative artifacts from forged regions. The dataset for the training model was a computer-generated- image-splicing dataset from COCO-dataset and set-of-random-objects with transparent backgrounds. The results reported are AUC-value = 0.967. Another technique [156] uses block-based partitioning to explore the Partial blur type inconsistency over the dataset of 800-natural-blurred-photos. This technique has been able to achieve different accuracies at various Spliced-Region-Sizes (SRS). Another technique [157] has used CNN which is a deep learning algorithm to extract the features and the SVM classifier over the CASIAv1.0-and-CASIAv2.0-datasets. The detection accuracy achieved was 96.38%. One more approach [158] has used the auto-encoder-based anomaly feature and SVM. The detection accuracy obtained varies for various datasets viz. 91.88% for Columbia, 98% for CASIAv1.0, and 97% for CASIAv2.0. Another technique [159] explores block-based techniques, Otsu-Based-Enhanced-Local-Ternary-Pattern (OELTP), and SVM as a classifier over the CASIAv1.0, CASIAv2.0, CUISDE, and CISDE datasets, and this technique achieved detection accuracies of 98.25% using CASIAv1.0, 96.59% using CASIAv2.0, and 96.66% using CUISDE-datasets. These techniques along with some other important techniques have been summarized in in Table 10.

2.3.2 Copy move forgery

It is a process in which a certain image object is cut and pasted within an image [50, 166]. This type of forgery is done so as to hide some object in an image and this forgery is easy to get detected because of similar outlines of the object in the same image with similar features like texture, size, lines, curves, and others. Figure 9 given below shows an example of copy-move forgery.

Based on how this forgery is done, copy-move forgery is divided into the following four types:

(1) Plain copy-move-forgery In this forgery, the process is as follows: copy from one region and paste in another region within an image with no additional modifications (see Fig. 10).

(2) Copy-move with reflection attacks Copy-paste with 180° rotation to create an image with an object of different orientation (see Fig. 11).

(3) Copy-move with image inpainting This includes reconstruction of depreciated regions of an image with its corresponding neighboring regions so that it can look like real image. The modification is done in such a way that it becomes undetectable (see Fig. 12).

(4) Multiple copy-move forgery This type of forgery includes copying multiple regions or objects and pasting them in different regions (see Fig. 13).

Currently, there exist many copy-move forgery detection techniques. Some of these recent techniques have been highlighted below. An Adaptive CMFD-SIFT based technique [167] was proposed for copy-move image-forgery detection. This approach offers improvement to invariance to mirror transformation over a CoMoFoD dataset and also provides the F score value greater than 90%. Another recent technique [168] is based on Tetrolet-transform and Lexicographic-sorting. This technique offers high localization and detection accuracy and uses two datasets CoMoFoD and GRIP. The technique [169] is based on scaled ORB features. In this technique, first the Gaussian scale is created and afterward, the FAST and ORB features are extracted in each scale-space followed by removal of mismatched key points using the RANSAC algorithm. This technique has been found to be robust for geometric transformations. However, it suffers high time complexity when dealing with high-resolution images. Another copy-move detection technique [170] is based on FFT, SVD, and PCA. This technique offers high detection accuracy of around 98%. One more recent technique [171] is based on DOA-GAN. Three datasets have been used CASIA-CMFD, USC-ISI-CMFD, and CoMoFoD datasets and offer different accuracies on different datasets. One more technique [172] uses adaptive-attention and residual-refinement-network (RRN) over CASIA-CMFD, USC-ISI-CMFD, and CoMoFoD-datasets and achieves distinct accuracies on each dataset. Another technique [173] is based on interest point detector. This detector is first used to detect all the points of interest. Then afterwards the description of features has been done using Polar Cosine Transform. This technique can be employed in scene recognition or image retrieval and many others. This technique is, however, prone to resizing attacks. These techniques along with some other techniques have been summarized in the Table 11.

2.3.3 Resampling

Resampling means the transformation of image sample into another sample done by increasing or decreasing the pixel numbers of an image [185]. Resampling is done in different ways as follows:

(1) Up-sampling: In this method the number of image pixels is increased as shown in the Fig. 14.

(2) Down-sampling: In this method the number of image pixels is decreased as shown in the Fig. 15.

(3) Mirroring/flipping: In this method, the flipping of an entire image is done either vertically or horizontally as shown in Fig. 16.

(4) Scaling: In this method, the corner points of an image are dragged using various image editing tools like Photoshop (See Fig. 16).

(5) Rotation: In this method, image is rotated across its axis as shown in Fig. 16.

There exist multiple resampling detection techniques. One such technique [186] used two techniques, the A-Contrario-analysis algorithm, and a Deep-Neural-Network. The dataset used was NIST-Nimble (2017) and Nimble (2018). The technique reported an accuracy Area-under-Curve (AUC) = 0.73 and False alarm rate (FAR) = 1. Another technique [187] is based on random matrix theory (RMT). The technique offers a very low computational complexity. One more technique [188] makes the use of probability of residues noise and LRT detector for the detection of resampling forgery. This technique performs better with both compressed and uncompressed images. Another technique [189] is based used multiple algorithms over the UCID dataset and was able to achieve an accuracy of 90% for a high scaling factor. Another CNN-based technique [190] achieved 91.22% accuracy for all Quality Factors and 84.08% for a Quality-factor (QF) = 50. One more technique [191] is deep learning networks like iterative pooling network and branched network (BN) and the performance accuracy was 96.6% and 98.7% for IPN and BN, respectively. Another technique [192] used the Auto-Regressive model and FD detector and achieved true positive rate (TPR) = 98.3% and false positive rate (FPR) = 1% which is considered to be a better accuracy rate. The above-mentioned techniques have been summarized in Table 12 given below.

2.3.4 Retouching

Retouching of an image is done in order to remove the errors in an image like scratches, blemishes, etc. and this process is done in many ways [193]. Retouching is also done to hide the forgery left traces for the illegal attempt and some of the widely used techniques for this are contrast enhancement, sharpening manipulation. Retouching is also used for entertainment media like magazine covers etc. Various approaches to image retouching are shown in Fig. 17 below.

Various retouching detection techniques have been proposed so far. Among these techniques, the technique [194] uses the multiresolution overshoot artifact analysis (MOAA) and non-subsampled contourlet transform (NSCT) classifier for the sharpening detection. Another CE detection technique [195] uses the histogram equalization detection algorithm (HEDA). The technique [196] used an overshoot artifact detector for un-sharp masking sharpening (UMS) detection and this technique shows robustness against post-JPEG compression and Additional Gaussian white noise (AGWN). One more technique [197] used histogram gradient aberration and ringing artifacts to detect the image sharpening operation. Another image sharpening detection technique [198] used edge perpendicular binary coding for USM sharpening detection. Another technique [199] explored deep learning and used CNN for image sharpening detection. The technique [200] used Benford’s law for the contrast enhancement (CE) detection. Another technique [192] used anti-forensics contrast enhancement detection (AFCED) for the detection of CE. The technique [201] used Modified CNN for CE detection. One more recent technique [202] used multi path network (MPN) for the detection of contrast enhancement (CE). These techniques along with their performance are summarized in Table 13.

2.3.5 Image forgery detection using JPEG compression properties

One of the prominent compression-techniques is JPEG compression and is widely used in many applications. Detection of weather an image has undergone any sort of compression or not helps in image forensic investigation. Till date, many researchers came up with their JPEG-compression detection techniques. Among these techniques, we have some of the recent techniques which are worth noting. One of these techniques is [203] which used multi-domain, frequency-domain, and spatial-domain CNNs to locate the DJPEG (Double-JPEG). Another technique [175] is a CNN based DJPEG-detection technique which used aligned and non-aligned JPEG-compression for evaluation purpose. One more technique [204] used stack-auto-encoder for image-forgery-localization for multi-format-images. One more recent technique [205] used Modified-Dense-Net to detect primary-JPEG-compression and used a special-filtering-layer in-network for image classification. Another technique [206] used CNN with preprocessing-layer to detect DJPEG-compression. Another recent DJPEG-compression-detection-technique [207] used 3D-CNN in DCT-domain. One more recent technique [208] used Dense-Net for block-level-DJPEG-detection for image-forgery-localization. These techniques along with the description and dataset used have been summarized in Table 14.

2.3.6 Source camera identification

The source camera identification (SCI) involves the extraction of features that are used during image acquisition using an acquisition device (Camera). These characteristic features include the following:

(1) Lens aberration.

(2) Sensor imperfections.

(3) Color Filter Array (CFA) interpolation.

(4) Interpolation & image-features.

Lens aberration or imperfections refers to the artifacts that result from optical lens of digital camera. Because of lens radial distortion, the straight lines look like the curved lines on the output images. This creates different patterns of different camera models which are then used to identify camera models. Once the source is known, it then becomes easy to detect the forgery. Another type of source camera identification is based on sensor imperfections. These imperfections can be described with the use of sensor pattern noise and pixel artifacts. One of the techniques [210] can detect the source camera using sensor pattern noise. The main cause of this pattern-noise is irregularities of sensors resulted from its manufacturing processes. These patterns are later used for the detection of the source camera of an image. Another characteristic feature used for SCI is CFA interpolation. One of the techniques [211] uses CFA-interpolation. One more characteristic feature used for SCI is image features. The technique [212] differentiates these features into three main categories viz. wavelet-domain statistics, color features and image quality metrics in order to identify the source camera model. One of the limitations of these techniques is that they are not effective for images that are taken from a camera having a similar charge coupled device (CCD) and hence fail to detect the exact source camera model. Other techniques are based on the photo response non uniformity (PRNU), feature extraction, and camera response function (CRF). The technique [213] provides a counter measure in order to avoid the PRNU. Another technique [214] makes the use of the texture features of colored images as a left-out fingerprint of the camera which was used to capture the image. One more technique [215] used to assess the camera response function (CRF) from local invariant planar irradiance points (LPIP).

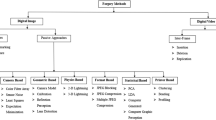

3 Video forgery approaches

As the videos are the collection of frames or images, any sort of modification can be done to these frames and hence to the videos. There are two types of video forgery techniques which are Inter-frame forgery and Intra-frame-forgery methods. The classification of video forgery detection techniques is shown in Fig. 18 below.

3.1 Inter-Frame video forgery

In Inter-frame Video Forgery, we have frame insertion, frame deletion, frame replication and frame duplication which results in the change of frame sequence in the video. This includes frame insertion, deletion and duplication. The various inter-frame video forgery approaches are diagrammatically explained in Fig. 19.

(1) Frame insertion refers to the insertion of a set of frames into the already existing frame sequence in the video.

(2) Frame deletion refers to the deletion of frames from the already existing set of frames in the video.

(3) Frame duplication refers to the duplication of frames or simply copying the set of frames and pastes them in some other location in the existing frame sequence of the video.

(4) Frame replication/shuffling refers to the shuffling or modifying the original order of frames in a video that makes the video different in meaning from the original one.

There exist various inter-frame video forgery detection techniques. These techniques are summarized in Table 15.

3.2 Intra-frame video forgery

In intra-frame video forgery, the alteration of content is done within a frame. Many a times, in a whole length of the video, the objects are put so as to make the altered frame unrecognizable. The intra-frame video forgery is of two types which include:

(1) Splicing Splicing in a video forgery refers to a fresh arrangement of video frames created from the original sequence by adding or removing some object to or from its frames, respectively.

(2) Upscale crop video forgery This refers to the cropping an extreme outer part of a video frame so as to remove the incidence proof and later the size of these frames is increased so that their inner dimension remains unchanged.

(3) Copy-Move forgery It refers to an insertion or the deletion of an object from a video frame take place. It can also be sometimes like copy-paste type forgery and hence is also called as region manipulation forgery. Removal of objects from videos is sometimes compensated by filling the vacant area with similar background content. This is called as inpainting. Inpainting can be carried out in any of the following two ways:

-

Temporal copy and paste Inpainting (TCP) refers to filling up the void with similar pixels from the surrounding coherent blocks or regions of the same video frame.

-

Exemplar-based texture synthesis inpainting: refers to filling up the void with the use of sample textures.

The techniques related to these types of video forgeries along with comparative study are summarized in the Table 15.

4 Deep learning approaches to forensic analysis

Deep learning is one of the promising subsets of machine learning that offers the automatic feature extraction capability without external intervention. It provides combined service of feature extraction and classification. Deep learning network is an interconnected multi-layer network. It has one input layer to feed input to the network and one output layer which provides the actual prediction. In between these layers there exist multiple hidden layers. There are two most important deep learning algorithms which have gained popularity due to their high accuracy rates for pattern recognition from an image.

There are two types of deep learning algorithms: convolutional neural networks (CNNs) and recurrent neural networks (RNNs). CNN is the most prominent deep learning algorithm that uses its convolution layer for the purpose of feature extraction. It finds its applications in pattern recognition and image processing. It has the capability to find the content pattern in an image and thus extract the features from it. RRNs find their applications in natural language processing (NLP) and speech recognition areas due to the fact that these networks process sequential and time series data.

Because of the high accuracy rate for pattern recognition, deep learning has found its application in image and video forensic analysis. Till date many researchers have come up with the image and video forensic techniques based on deep learning algorithms.

4.1 Deep learning-based techniques for image forgery analysis

Chen et al. [255, 256] proposed a CNN based approach which extracts median filtering residuals from image. The first layer of CNN is a filter layer which reduces the interference that arises due to presence of the edges and textures. The removal of interference helps model to investigate the traces left by median filtering. The approach was tested on a dataset of 15,352 images, obtained by composition of five image datasets. A spliced image may consist of traces of multiple devices. A CNN based image forgery detection technique [256] can detect media filtering and cut-paste forgeries using the filtering residuals. Using this 9-layer CNN framework the accuracy rate achieved was 85.14%. Another image forgery detection technique [257] can detect cut-paste and copy-move forgeries using the stacked auto-encoders (SAE). The technique used CASIA v1.0, CASIA v2.0, and Columbia datasets and thus achieved an accuracy of 91.09%. One more CNN based technique [258] can detect Gaussian blurring, Media filtering and resampling using prediction error filters. The dataset used are the images from 12 different camera models, thus achieving an accuracy of 99.10%.Another auto-encoder-based technique [222] can detect the cut-paste forgery. The dataset used various images from six smart phones and a camera, thus achieving an F1-score of 0.41 for basic forgery and 0.37 for post-processing forgeries. One more CNN based technique [223] can detect the cut-paste forgery using the hierarchal representation from the color images. The datasets used were CASIA v1.0, CASIA v2.0, and Columbia gray DVMM and achieved an accuracy of 98.04%, 97.83% and 96.38%, respectively. Another CNN based image forgery detection and localization technique [259] can detect and localize the cut-paste forgery using the source camera model features. The datasets used are Dresden image database, thus achieving a detection accuracy of 81% and localization accuracy of 82%.One more tempering localization technique [260] is based on multi-domain CNN and UCID dataset, thus achieving an accuracy of 95%. Another image splicing localization technique [261] used multi-task fully convolutional network and Columbia, CASIA v1.0, and CASIA v2.0, thereby achieving an F1 score of 0.54 on CASIA v1.0 and 0.61 on Columbia and MCC score of 0.52 on CASIA v1.0 and 0.47 on Columbia. Another copy-move forgery detection technique [262] with source localization used BurstNet, a deep learning network and VGG16 features. The datasets used are CASIA v2.0 and CoMoFoD datasets thereby offering an overall accuracy of 78%. One more image splicing detection technique [263] used Ringed Residual U-Net (RRU-Net) and the datasets of CASIA and COLUMB datasets, thereby achieving an accuracy of 76% and F1 score of 0.84 and 0.91 on CASIA and COLUMB datasets, respectively. Another recent image tempering localization and detection technique [264] used mask regional convolution neural network (Mask R-CNN) and the datasets of COVER and Columbia datasets, thereby achieving an average accuracy of 93% and 97%, respectively (see Figs. 20, 21) (see Table 16).

4.2 Deep learning-based techniques for video forgery analysis

The main task of video forensic analysis is the extraction of key-frame from the video scene. There is no better option other than deep learning techniques for feature extraction. Thus many researchers have come up with the deep learning-based video analysis techniques. One of such techniques [265] is based on ensemble learning for the summarization of the video events and named it as event bagging approach. Another technique [266] is an interest oriented video event summarization approach that represents image information using visual features. One more technique [267] was proposed using a nonconvex low-rank kernel sparse subspace learning for key-frame extraction and motion segmentation. Another event detects and summarization technique [268] for Multiview surveillance videos is based on machine learning technique Ada-Boost approach for the multi-view environment. The potential key frames have been selected for event summarization using deep learning framework CNN. Using these key frames the event boundaries are detected for video skimming. This model has been named as deep event learning boost-up approach (DELTA). The computational time reported for a sample rate of 30 frames per sec was 97.25 s. Another paper [269] introduces a fast and deep event summarization (F-DES) and a local alignment-based FASTA approach for the summarization of multi-view video events. Also a deep learning model has been used for feature extraction which dealt with the problem of variations in illumination and also helped in removing fine texture details to detect the objects in a frame. The FASTA algorithm is then used to capture the interview dependencies among multiple views of the video via local alignment. The computational time reported for a sample rate of 30 frames per second was 91.25 s. Another more related work [270] suggested key frame extraction technique based on Eratosthenes Sieve for event summarization. It combines all the video frames to create an optimal number of clusters using Davies-Bouldin index. The cluster head of each cluster is treated as a key-frame for all summarized frames. The results reported are for three variants AVS, EVS and ESVS and offers a maximum precision rate of 58.5% by ESVS variant, a recall of 50% and F-measure of 53.9%. Also the computational time reported for AVS, EVS, and ESVS are 65 s, 20 s and 20 s, respectively. Another similar work [271] proposed a Genetic algorithm and secret sharing schemes based genetic uses in video encryption with secret sharing (GUESS) model to generate sequence of frames with minimum correlation between the frames. The computational time reported is 16.375 s for 125frames and a block size of 25. Also the correlation reported is minimum and is equal to 0.01 for block size k = 35. Another paper [272] uses the spatial transformer networks (STN) that can efficiently be used for spatial and invariant information extraction from input to feed them to more plain NNs like artificial neural network (ANN) without comprising the performance. The authors suggest that this technique can replace the CNN for basic computer vision problems. The paper has reported different accuracy measures of precision, recall, F-measure and time per epoch for different models. One more recent work [273] has presented local alignment based multi-view summarization for generation of the event summary in the cloud environment. The reported computational time is 65.75 s per video at a sample rate of 30 frames per second and the accuracies of precision, recall and f-score have also been reported for three datasets Office, Lobby and BL-7F.

Some encryption based techniques are also worth discussing. One of the encryption related work [274] came up with a model named as V ⊕ SEE based on Chinese Remainder Theorem and Multi-Secret Sharing scheme for the encryption of video in order to securely transmit it over the internet. The computational time and correlation value between secret frames reported are minimum 15.555 s and 0.0126, respectively, for 125 frames. Another recent work [275] proposed a model for encryption over cloud. In this the key is generated dynamically using the original information without the involvement of the user in the process which makes it hard for an attacker to guess the key. Another work related to data encryption in images [276] explored the multimode approach of data encryption through quantum steganography. Another work [277] have proposed a Polynomial congruence based Multimedia Encryption technique over Cloud (P-MEC) for the encryption of transmitting multi-media over the cloud by introducing a cubic and polynomial congruence that makes it difficult for an attacker to decrypt the encrypted content in a reasonable amount of time. The accuracy measures like MSE, PSNR, and correlation have been reported for four types of images.

5 Future direction

After carrying out the extensive study in a well-organized way, it was found that there still exists a lot of research gap which needs to be dealt with by the upcoming researchers. Furthermore, the forgery detection is now becoming more and more challenging because of the advent of more sophisticated and easily available tools.

Some of the most common challenges in image forgeries are as follows:

-

Feature extraction is the most challenging task in forgery detection process on which the efficiency of the whole process depends. Deep learning is the most preferred one. There are a very few forgery detection techniques proposed till date based on deep learning. So in future deep learning can be explored further.

-

For watermarking schemes, the common challenges include imperceptibility, security, embedding capacity, and computational complexity which need a future focus.

-

Feature dimensionality is another challenge which also needs future attention.

-

Computational complexity of most of the forgery detection techniques is more and needs to be minimized in the future.

-

Lack of robustness against the post-processing operations in the case of many forensic approaches.

-

Most of the techniques also show invariance against the geometrical transformations and hence also need a future focus.

-

Some techniques show a slow feature learning rate which needs to be improved.

-

Improvisation of localization accuracy for most of the forgery detection techniques is also needed.

-

There is a lack of datasets that can cover all the possible attacks.

-

Most of the techniques show vulnerability to different types of forgery-attacks like JPEG Compression and others.

-

The single technique fails to detect all the present forgery types in an image which limits its utilization.

These challenges need a focus in future and hence open the gates for the researchers to carry out their future research in this area.

Furthermore, from the comparative study of video forensics techniques, it was worth noting that although we have many forensic tools and techniques that can efficiently detect video forgery, due to advancement in forgery tools and their easy availability, there still exists a research gap that can be dealt with in future. Some of the important and common challenges in video forgery include the following:

-

Computational complexity There exist many techniques with high computational complexity for forgery detection which need to be dealt with.

-

Currently we have very few anti-forensic techniques. Thus, researchers in the future can develop more such tools.

-

Robustness against post-processing operations requires to focus on in future.

-

Deep-fake detection is another interesting area for future research in the domain of video forensics.

-

Deep learning is gaining huge popularity in every field because of its novel feature extraction capability. There are very few algorithms existing that have explored deep learning techniques. Therefore, it opens a gate for researchers to explore it further in the domain of video forensics.

-

There also exist some machine learning-based video forgery detection techniques. These machine learning algorithms also prove to be very efficient with high detection accuracy and can hence be explored with other video forgery detection techniques in the future.

-

Many existing techniques fail to work for moving background and variable GOP structure videos, which again prove to be the hot topic to focus on in future.

6 Conclusion

With a systematic and well-organized research approach, a detailed and high-quality survey article has been presented. This review article not only provides a comparative study of various existing technologies, but also provides future directions and challenges in the field of image and video forensics. The first section of this article provides the brief introduction of image and video forensics along with their applications and the existing datasets. The following section presented the literature review of various sub categories of image and video forensics. The deep learning-based approaches to both image and video forensics have also been discussed in the separate section keeping in view its importance in the future research. After an in-depth literature review and comparative study, the survey finally provided future directions for researchers, pointing out the challenges in the field of image and video forensics, which are the focus of attention in the future, thus providing ideas for researchers to conduct future research.

References

Farid, H.: Digital doctoring: how to tell the real from the fake. Significance 3(4), 162–166 (2006)

Zhu, B.B., Swanson, M.D., Tewfik, A.H.: When seeing isn’t believing. IEEE Signal Process. Mag. 21(2), 40–49 (2004)

“Photo tampering throughout history,” (2012). http://www.fourandsix.com/photo-tampering-history/

Redi, J.A., Taktak, W., Dugelay, J.-L.: Digital image forensics: a booklet for beginners. Multimed. Tools ppl. 51(1), 133–162 (2010)

Parveen, A., Tayal, A.: An algorithm to detect the forged part in an image. In: Proceedings of 2nd International Conference on Communication and Signal Processing, 1486–1490 (2016)

Yan, C., Li, Z., Zhang, Y., Liu, Y., Ji, X., Zhang, Y.: Depth Image denoising using nuclear norm and learning graph model. ACM Trans. Multimed. Comput. Commun. Appl. 16(4), 1–17 (2021)

Zheng, H., Yong, H., Zhang, L. Deep convolutional dictionary learning for image de-noising. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, 630–641 (2021)

Shi, Q., Tang, X., Yang, T., Liu, R., Zhang, L.: Hyperspectral image de-noising using a 3-D attention denoising network. IEEE Trans. Geosci. Remote Sens., pp. 1–16 (2021)

Yan, C., Hao, Y., Li, L., Yin, J., Liu, A., Mao, Z., Gao, X.: Task-adaptive attention for image captioning. IEEE Trans. Circ. Syst. Video Technol., 1–1 (2021)

Quan, Y., Chen, Y., Shao, Y., Teng, H., Xu, Y., Ji, H.: Image de-noising using complex-valued Deep CNN. Pattern Recognit. 111, 107639 (2020)

Lan, R., Zou, H., Pang, C., Zhong, Y., Liu, Z., Luoet, X.: Image denoising via deep residual convolutional neural networks. SIViP 15, 1–8 (2021)

Cheng, S., Wang, Y., Huang, H., Liu, D., Fan, H., Liu, S.: NBNet: Noise basis learning for image de-noising with subspace projection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4896–4906 (2021)

Jaseela, S., Nishadha, S.G.: Survey on copy move image forgery detection techniques. Int. J. Comput. Sci. Trends Technol. (IJCST) 4(1), 87–91 (2016)

Fadl, S.M., Semary, N.O.A., Hadhoud, M.M.: Copy–rotate–move forgery detection based on spatial domain. In: Proceedings of 9th International Conference on Computer Engineering and Systems, pp. 136–141 (2014)

Ren, X.: An optimal image thresholding using genetic algorithm. Int. Forum Comput. Sci.-Technol. Appl. 1, 169–172 (2009)

Hussain, M., Muhammad, G., Saleh, S.Q., Mirza, A.M., Bebis, G.: Copy–move image Forgery detection using multi-resolution weber descriptors. In: Proceedings of 8th International Conference on Signal Image Technology and Internet Based Systems, pp. 1570–1577 (2013)

Agarwal, V., Mane, V.: Reflective SIFT for improving the detection of copy–move image forgery. In: Proceedings of 2nd International Conference on Research in Computational Intelligence and Communication Networks, pp. 84–88 (2016)

Amerini, I., Ballan, L., Caldelli, R., Bimbo, A.D., Serra, G.: A SIFT-Based forensic method for copy–move attack detection and transformation recovery. IEEE Trans. Inf. Forensics Secur. 6(3), 1099–1110 (2011)

He, Z., Lu, W., Sun, W., Huang, J.: Digital image splicing detection based on markov features in DCT and DWT domain. Pattern Recogn. 45(12), 4292–4299 (2012)

Shahroudnejad, A., Rahmati, M.: Copy–move forgery detection in digital images using affine-SIFT. In: Proceedings of 2nd International Conference of Signal Processing and Intelligent Systems, pp. 1–5 (2016)

Lin, S.D., Wu, T.: An integrated technique for splicing and copy–move forgery image detection. In: Proceedings of 4th International Conference on Image and Signal Processing, 2:1086–1091 (2011)

Ting, Z., Rang-ding, W.: Copy–move forgery detection based on SVD in digital image. In: Proceedings of 2nd International Conference on Image and Signal Processing, 1–5 (2009)

Koppanati, R.K., Kumar, K.: P-MEC: polynomial congruence-based multimedia encryption technique over cloud. IEEE Consum. Electron. Mag. 10(5), 41–46 (2021)

Yan, C., Gong, B., Wei, Y., Gao, Y.: Deep multi-view enhancement hashing for image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 43, 1 (2020)

Chaudhuri, U., Banerjee, B., Bhattacharya, A.: Siamese graph convolutional network for content based remote sensing image retrieval. Comput. Vis. Image Underst. 184, 22–30 (2019)

Tolias, G., Sicre, R., Jegou, H.: Particular object retrieval with ´ integral max-pooling of CNN activations. In: ICLR, pp. 1–12 (2015)

Xu, J., Wang, C., Qi, C., Shi, C., Xiao, B.: Unsupervised part-based weighting aggregation of deep convolutional features for image retrieval. In: AAAI, 2018, 32(1), pp. 7436–7443 (2018)

Liu, H., Wang, R., Shan, S., Chen, X.: Deep supervised hashing for fast image retrieval. In: CVPR, 2016, pp. 2064–2072 (2016)

Yan, K., Wang, Y., Liang, D., Huang, T., Tian, Y.: CNN vs. SIFT for image retrieval: alternative or complementary? In: ACM MM, 2016, 407–411 (2016)

Liu, L., Ouyang, W., Wang, X., Fieguth, P., Chen, J., Liu, X., Pietikainen, M.: Deep learning for generic object detection: a survey. Int. J. Comput. Vis. 128(2), 261–318 (2020)

Sridevi, M., Mala, C., Sandeep, S.: Copy–move image forgery detection in a parallel environment. In: Proceedings of CS & IT Computer Science Conference Proceedings (CSCP), pp. 19–29 (2012)

Kang, L., Cheng, X.P.: Copy–move forgery detection in digital image. In: Proceedings of 3rd International Congress on Image and Signal Processing (CISP), vol. 5, pp. 2419–2421 (2010)

Li, H., Luo, W., Qiu, X., Huang, J.: Image forgery localization via integrating tampering possibility maps. IEEE Trans. Inf. Forensics Secur. 12, 1–13 (2017)

Al-Sanjary, O.I., Sulong, G.: Detection of video forgery: A review of literature. J. Theoret. Appl. Inf. Technol. 74(2), 217–218 (2015)

Ng, T., Chang, S.: A data set of authentic and spliced image blocks (2004)

Hsu, Y., Chang, S.: Detecting image splicing using geometry invariants and camera characteristics consistency. In: 2006 IEEE International Conference on Multimedia and Expo, 549–552 (2006)

Jegou, H., Douze, M., Schmid, C.: Hamming Embedding and Weak geometry consistency for large scale image search. In: Proceedings of the 10th European conference on Computer vision, October, 2008 (2008)

Gloe, T., Bohme, R.: The dresden image database for benchmarking digital image forensics. J. Digital Forensic Pract. 3(2–4), 150–159 (2010)

Amerini, I., Ballan, L., Caldelli, R., Del Bimbo, A., Serra, G.: A SIFT-based forensic method for copy-move attack detection and transformation recovery. IEEE Trans. Inf. Forensics Secur. 6(3), 1099–1110 (2011)

Bas, P., Filler, T., Pevny, T.: (2011). May Break our steganographic system: the ins and outs of organizing BOSS. In: International Workshop on Information Hiding, pp. 59–70 (2011)

Bianchi, T., Piva, A.: Image forgery localization via block-grained analysis of JPEG artifacts. IEEE Trans. Inf. Forensics Secur. 7(3), 1003–1017 (2012)

Christlein, V., Riess, C., Jordan, J., Riess, C., Angelopoulou, E.: An evaluation of popular copy-move forgery detection approaches. IEEE Trans. Inf. Forensics Secur. 7(6), 1841–1854 (2012)

Tralic, D., Zupancic, I., Grgic, S., Grgic, M.: CoMoFoD—New database for copy–move forgery detection. In: International Symposium Electronics in Marine, pp. 49–54 (2013)

Dong, J., Wang, W., Tan, T.: CASIA image tampering detection evaluation database. In: 2013 IEEE China Summit and International Conference on Signal and Information Processing (2013)

Amerini, I., Ballan, L., Caldelli, R., Del-Bimbo, A., Del-Tongo, L., Serra, G.: Copy-move forgery detection and localization by means of robust clustering with J-Linkage. Signal Process. Image Commun. 28(6), 659–669 (2013)

Cozzolino, D., Poggi, G., Verdoliva, L.: Copy-move forgery detection based on PatchMatch. In: 2014 IEEE International Conference on Image Processing (ICIP), pp. 5312–5316 (2014)

Ardizzone, E., Bruno, A., Mazzola, G.: Copy-move forgery detection by matching triangles of keypoints. IEEE Trans. Inf. Forensics Secur. 10, 2084–2094 (2015)

Dang-Nguyen, D.T., Pasquini, C., Conotter, V., Boato, G.: RAISE- A raw images dataset for digital image forensics. In: Proc. 6th ACM Multimed. Syst. Conf. MMSys 2015, pp. 219–224 (2015)

Wattanachote, K., Shih, T.K., Chang, W.-L., Chang, H.-H.: Tamper detection of JPEG image due to seam modifications. IEEE Trans. Inf. Forensics Secur. 10(12), 2477–2491 (2015)

Silva, E., Carvalho, T., Ferreira, A.: A. Rocha, going deeper into copy- move forgery detection: Exploring image telltales via multi-scale analysis and voting processes. J. Vis. Commun. Image Represent. 29, 16–32 (2015)

Zampoglou, M., Papadopoulos, S., Kompatsiaris, Y.: Detecting image splicing in the wild (WEB). In: 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), pp. 1–6 (2015)

Wen, B., Zhu, Y., Subramanian, R., Ng, T.T., Shen, X., Winkler, S.: COVERAGE—a novel database for copy-move forgery detection. In: Proc. - Int. Conf. Image Process. ICIP.2016-August, 161–165 (2016)

National Inst. of Standards and Technology (2016). The 2016 Nimble challenge evaluation dataset, https://www.nist.gov/itl/iad/mig/nimble-challenge, (2016)

Korus, P., Huang, J.J.: Multi-scale analysis strategies in PRNU-based tampering localization. IEEE Trans. Inf. Forensics Secur. 12(4), 809–824 (2017)

Guan, H., Kozak, M., Robertson, E., Lee, Y., Yates, A., Delgado, A., Zhou, D., Kheyrkhah, T., Smith, J., Fiscus, J.: MFC Datasets: Large-Scale Benchmark Datasets for Media Forensic Challenge Evaluation, IEEE Winter Conference on Applications of Computer Vision (WACV 2019), Waikola, HI, [online], https://tsapps.nist.gov/publication/get_pdf.cfm?pub_id=927035. (2019)

Novozámský, A., Mahdian, B., Saic, S.: IMD2020: a large-scale annotated dataset tailored for detecting manipulated images. In: 2020 IEEE Winter Applications of Computer Vision Workshops (WACVW), pp. 71–80 (2020)

Qadir, G., Yahahya, S., Ho, A.: Surrey University Library for Forensic Analysis (SULFA). In: Proceedings of the IETIPR 2012, 3–4 July, London (2012)

Bestagini, P., Milani, S., Tagliasacchi, M., Tubaro, S.: Local tampering detection in video sequences. In: 2013 IEEE 15Th International Workshop on Multimedia Signal Processing (MMSP). IEEE, pp. 488–493 (2013)

Al-Sanjary, O.I., Ahmed, A.A., Sulong, G.: Development of a video tampering dataset for forensic investigation. Forensic Sci. Int. 266, 565–572 (2016)

Chen, S., Tan, S., Li, B., Huang, J.: Automatic detection of object-based forgery in advanced video. IEEE Trans. Circ. Syst. Video Tech. 26(11), 2138–2151 (2016)

D’Avino, D., Cozzolino, D., Poggi, G., Verdoliva, L.: Autoencoder with recurrent neural networks for video forgery detection. Electron. Image 2017(7), 92–99 (2017)

Ulutas, G., Ustubioglu, B., Ulutas, M., Nabiyev, V.V.: Frame duplication detection based on bow model. Multimed. Syst. 24(5), 549–567 (2018)

D’Amiano, L., Cozzolino, D., Poggi, G., Verdoliva, L.: A patch match-based dense-field algorithm for video copy-move detection and localization. IEEE Trans. Circ. Syst. Video Technol. 29, 669–682 (2018)

Panchal, H.D., Shah, H.B.: Video tampering dataset development in temporal domain for video forgery authentication. Multimed. Tools Appl. 79, 24553–24577 (2020)

Ferreira, W.D., Ferreira, C.B., Junior, G.D., Soares, F.: A review of digital image forensics. Comput. Electr. Eng. 85, 106685 (2020)

Birajdar, G.K., Mankar, V.H.: Digital image forgery detection using passive techniques: a survey. Digit. Investig. 10(3), 226–245 (2013)

Farid, H.: A survey of image forgery detection techniques. IEEE Signal Process. Mag. 26, 16–25 (2009)

Qazi, T., Hayat, K., Khan, S.U., Madani, S.A., Khan, I.A., Kołodziej, J., Li, H., Lin, W., Yow, K.C., Xu, C.-Z.: Survey on blind image forgery detection. Image Process IET 7, 660–670 (2013)

Ansari, M.D., Ghrera, S.P., Tyagi, V.: Pixel-based image forgery detection: a review. IETE J. Educ. 55, 40–46 (2014)

Lanh, T.V.L.T., Van-Chong, K.-S., Chong, K.-S., Emmanuel, S., Kankanhalli, M.S.: A survey on digital camera image forensic methods. In: 2007 IEEE International Conference on Multimedia and Expo, pp. 16–19 (2007)

Mahdian, B., Saic, S.: A bibliography on blind methods for identifying image forgery. Signal Process. Image Commun. 25, 389–399 (2010)

Warif, N.B.A., Wahab, A.W.A., Idris, M.Y.I.: Copy-move forgery detection: survey, challenges and future directions. J. Netw. Comput. Appl. 75, 259–278 (2016)

Christlein, V., Riess, C.C., Jordan, J., Riess, C.C., Angelopoulou, E.: An evaluation of popular copy-move forgery detection approaches. IEEE Trans. Inf. Forensics Secur. 7, 1841–1854 (2012)

Friedman, G.L.: Trustworthy digital camera: restoring credibility to the photographic image. IEEE Trans. Consum. Electron. 39(4), 905–910 (1993)

Blythe, P., Fridrich, J.: Secure digital camera. In: Proceedings of the Digital Forensic Research Workshop (DFRWS ’04), pp. 17–19 (2004)

Rivest, R.L., Shamir, A., Adleman, L.: A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 21(2), 120–126 (1978)

Menezes, A.J., VanstoneOorschot, S.S.A.P.C.V.: Handbook of Applied Cryptography, 1st edn. CRC Press, Boca Raton (1996)

Cox, I.J., Miller, M.L., Bloom, J.A.: Digital Watermarking. Morgan Kaufmann Publishers, Berlin (2002). ISBN 978-1-55860-714-9

Barni, M., Bartolini, F.: Watermarking systems engineering: enabling digital assets security and other applications. In: Signal Processing and Communications. Marcel Dekker (2004)

Cox, I.J., Miller, M.L., Bloom, J., Fridrich, J., Kalker, T.: Digital Watermarking and Steganography, 2nd edn. Morgan Kaufmann, San Francisco (2008)

Carneiro-Tavares, J.R., Madeiro-Bernardino-Junior, F.: Word-hunt: a LSB steganography method with low expected number of modifications per pixel. IEEE Lat. Am. Trans. 14(2), 1058–1064 (2016)

Laskar, S.A., Hemachandran, K.: Steganography based on random pixel selection for efficient data hiding. Int. J. Comput. Eng. Technol. (IJCET) 4, 31–44 (2013)