Abstract

We present a proof of qualitative stochastic homogenization for a nonconvex Hamilton–Jacobi equations. The new idea is to introduce a family of “sub-equations” and to control solutions of the original equation by the maximal subsolutions of the latter, which have deterministic limits by the subadditive ergodic theorem and maximality.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Motivation and overview

We study the Hamilton–Jacobi equation

The potential \(V\) is assumed to be a bounded, stationary–ergodic random potential. We prove that, in the limit as the length scale \(\varepsilon > 0\) of the correlations tends to zero, the solution \(u^\varepsilon \) of (1.1), subject to an appropriate initial condition, converges to the solution \(u\) of the effective, deterministic equation

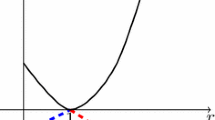

The effective Hamiltonian \(\overline{H}\) is, in general, a non-radial, nonconvex function whose graph inherits the basic “mexican hat” shape of that of the spatially independent Hamiltonian \(p\mapsto (|p|^2-1)^2\). As we will show, it typically has two “flat spots” (regions in which it is constant), with one a neighborhood of the origin and at which \(\overline{H}\) attains a local maximum and the other a neighborhood of \(\{ |p| = 1\}\) and at which \(\overline{H}\) attains its global minimum. See Fig. 1.

A cross section of the graph of \(\overline{H}\), illustrated in the case \(\inf V = 0\) and \(\sup V = \frac{2}{5}\). The difference \(h(0) - (\overline{H}(0) - \inf V)\) is precisely \(\max \{ 1, \sup V\}\). The regions \(K_i\) are defined below in (2.30). While \(\overline{H}\) is even, it is not radial, in general, unless for example the law of \(V\) is invariant under rotations

Qualitative stochastic homogenization results for convex Hamilton–Jacobi equations were first obtained independently by Rezakhanlou and Tarver [21] and Souganidis [23] and subsequent qualitative results were obtained by Lions and Souganidis [15–17], Kosygina, Rezakhanlou and Varadhan [12], Kosygina and Varadhan [13], Schwab [22], Armstrong and Souganidis [2, 3] and Armstrong and Tran [4]. Quantitative homogenization results were proved in Armstrong et al. [1] (see also Matic and Nolen [19]).

In contrast to the periodic setting, in which nonconvex Hamiltonians are not more difficult to handle than convex Hamiltonians (c.f. [9, 14]), extending the results of [21, 23] to the nonconvex case has remained, until now, completely open (except for the quite modest extension to level-set convex Hamiltonians [3] and the forthcoming work [6], which considers a first-order motion with a sign-changing velocity). The issue of whether convexity is necessary for homogenization in the random setting is mentioned prominently as an open problem for example in [11, 16, 17]. As far as we know, in this paper we present the first stochastic homogenization result for a genuinely non-convex Hamilton–Jacobi equation.

A new proof of qualitative homogenization for convex Hamilton–Jacobi equations in random environments was introduced in [3], based on comparison arguments which demonstrate that maximal subsolutions of the equation (also called solutions of the metric problem) control solutions of the approximate cell problem. This new argument is applicable to merely level-set convex Hamiltonians and lead to the quantitative results of [3], among other developments. Several of the comparison arguments we make in the proofs of Lemmas 3.2–3.6, below, which are at the core of the argument for our main result, rely on some of the ideas introduced in [3]. The metric problem was also used to obtain dynamical information in Davini and Siconolfi [7, 8] and as the basis of numerical schemes for computing \(\overline{H}\) in Oberman et al. [20] and Luo et al. [18].

The proof of our main result is based on comparison arguments, in which we control the solution \(v^\delta \) of the approximate cell problem

by the maximal subsolutions of the following family of “sub-equations”

where the real parameters \(\mu \) and \(\sigma \) range over \(-\inf V \le \mu < \infty \) and \(\sigma \in [-1,1]\). Notice that (1.4) for \(\sigma = \pm 1\) can be formally derived from the metric problem associated to (1.1), which is roughly

by taking the square root of (1.5). As it turns out that we must consider (1.4) also for \(-1<\sigma <1\), in order to “connect” the branches of the square root function. The key insight is that, while the solutions of both (1.4) and (1.5) have a subadditive structure and thus deterministic limits by the ergodic theorem, there is more information contained in the former than the latter. Indeed, as we show, there is just enough information in (1.4) to allow us to deduce that (1.1) homogenizes.

1.2 Precise statement of the main result

The random potential is modeled by a probability measure on the set of all potentials. To make this precise, we take

to be the space of real-valued, bounded and uniformly continuous functions on \(\mathbb {R}^d\). We define \(\mathcal {F}\) to be the \(\sigma \)-algebra on \(\Omega \) generated by pointwise evaluations, that is

The translation group action of \(\mathbb {R}^d\) on \(\Omega \) is denoted by \(\{ T_y\}_{y\in \mathbb {R}^d}\), that is, \(T_y:\Omega \rightarrow \Omega \) is defined by

We consider a probability measure \(\mathbb {P}\) on \((\Omega ,\mathcal {F})\) satisfying the following properties: there exists \(K_0>0\) such that

for every \(E \in \mathcal {F}\) and \(y\in \mathbb {R}^d\),

and

We now present the main result. Recall that, for each \(\varepsilon > 0 \) and \(g\in {{\mathrm{BUC}}}(\mathbb {R}^d)\), there exists a unique solution \(u^\varepsilon (\cdot ,g)\in C(\mathbb {R}^d\times [0,\infty ))\) of (1.1) in \(\mathbb {R}^d\times (0,\infty )\), subject to the initial condition \(u^\varepsilon (x,0,g) = g(x)\). All differential equations and inequalities in this paper are to be interpreted in the viscosity sense (see [10]).

Theorem 1.1

Assume \(\mathbb {P}\) is a probability measure on \((\Omega ,\mathcal {F})\) satisfying (1.6), (1.7) and (1.8). Then there exists \(\overline{H} \in C(\mathbb {R}^d)\) satisfying

such that, if we denote, for each \(g\in {{\mathrm{BUC}}}(\mathbb {R}^d)\), the unique solution of (1.2) subject to the initial condition \(u(x,0) = g(x)\) by \(u(x,t,g)\), then

Some qualitative properties of \(\overline{H}\), including the confirmation that its basic shape resembles that of Fig. 1, are presented in Sect. 2.2.

1.3 Outline of the paper

In Sect. 2.1, we introduce the maximal subsolutions of (1.4), study their relationship to (1.5) and show that they homogenize. We construct \(\overline{H}\) in Sect. 2.2 and study some of its qualitative features. The proof of Theorem 1.1 is the focus of Sect. 3, where we compare the maximal subsolutions of (1.4) to the solutions of (1.3).

2 Identification of the effective Hamiltonian

Following the metric problem approach to homogenization introduced in [3], one is motivated to consider, for \(\mu \in \mathbb {R}\), maximal subsolutions of the equation

Unfortunately, unlike the convex setting, it turns out (as is well-known) that the maximal subsolutions of (2.1) do not encode enough information to identify \(\overline{H}\), much less prove homogenization. This is not surprising since, by the subadditive nature of the maximal subsolutions, if they could identify \(\overline{H}\) then the latter would necessarily be convex. Instead, we consider maximal subsolutions of the “sub-equation”

with we take the parameters \(\mu \ge -\inf _{\mathbb {R}^d} V\) and \(\sigma \in [-1,1]\). The idea is that we can control solutions of (1.1) by the maximal subsolutions of (2.2), varying the parameters \(\mu \) and \(\sigma \) in an appropriate way. Observe that we may formally derive (2.2) with \(\sigma =\pm 1\) from (2.1) by taking the square root of the equation.

2.1 The maximal subsolutions of (2.2)

We define the maximal subsolutions of (2.2) and review their deterministic properties. Throughout this subsection we suppose for convenience that

For every \(\mu \ge 0\), \(-1 \le \sigma \le 1\) and \(z\in \mathbb {R}^d\), we define

Clearly this definition is void if (2.2) possesses no subsolutions, which occurs if and only if the right-hand side is not nonnegative, that is, if and only if

In this case, we simply take \(m_{\mu ,\sigma } \equiv -\infty \). Otherwise, we note that \(m_{\mu ,\sigma } \ge 0\).

In the next proposition, we summarize some basic properties of \(m_{\mu ,\sigma }\) and relate it to the equation

Note that (2.5) is the same as (2.1) in the case that \(\sigma \in \{ -1, 1\}\).

Proposition 2.1

Fix \(V \in \Omega \) satisfying (2.3), \(\mu \ge 0\) and \(\sigma \in [-1,1]\) such that

For every \(y,z\in \mathbb {R}^d\),

For every \(x,y,z\in \mathbb {R}^d\),

For every \(z\in \mathbb {R}^d\), \(m_\mu (\cdot ,z) \in C^{0,1}(\mathbb {R}^d)\) and

and, moreover,

Proof

Since (2.2) is a convex equation, a function \(u\in {{\mathrm{USC}}}(\mathbb {R}^d)\) is a subsolution of (2.2) if and only if \(u\in C^{0,1}_{\mathrm {loc}}(\mathbb {R}^d)\) (and thus \(u\) is differentiable almost everywhere) and \(u\) satisfies (2.2) at almost every point of \(\mathbb {R}^d\). See e.g. [5] or [3, Lemma 2.1]. Since \(V\) is uniformly bounded, a subsolution must in fact be globally Lipschitz, i.e., \(u\in C^{0,1}(\mathbb {R}^d)\). Thus, for each \(z\in \mathbb {R}^d\), \(m_{\mu ,\sigma }(\cdot ,z)\) is the supremum of a family of equi-Lipschitz functions on \(\mathbb {R}^d\) and hence belongs to \(C^{0,1}(\mathbb {R}^d)\). As \(u\) is the supremum of a family of subsolutions of (2.2), we have

We also obtain from the above characterization of subsolution of (2.2) that \(u \in {{\mathrm{USC}}}(\mathbb {R}^d)\) is a subsolution of (2.2) if and only if \(-u\) is also a subsolution of (2.2). This together with the definition of \(m_{\mu ,\sigma }\) yields (2.7) as well as that

Finally, by the maximality of \(m_{\mu ,\sigma }(\cdot ,z)\), the Perron method yields that

A proof of (2.14) can also be found in [3, Proposition 3.2].

The subadditivity (2.8) of \(m_{\mu ,\sigma }\) is immediate from maximality. Indeed, since \(m_{\mu ,\sigma }(\cdot ,z) - m_{\mu ,\sigma }(x,z)\) is a subsolution of (2.2) in \(\mathbb {R}^d\), we may use it as an admissible function in the definition of \(m_{\mu ,\sigma }(y,x)\). This yields (2.8).

Proceeding with the demonstration of (2.9), (2.10) and (2.11), we select a smooth test function \(\phi \in C^{\infty }(\mathbb {R}^d)\) and \(x_0 \in \mathbb {R}^d\) such that

which is equivalent to

According to (2.13) and (2.16),

If \(\sigma \le 0\), then (2.17) implies that

This completes the proof of (2.11). If \(y_0 \ne z\), then (2.14) and (2.15) yield

which, together with (2.17), gives

Rearranging the equation and squaring the previous line, we get

In view of the fact that (2.15) and (2.16) are equivalent, and that (2.18) is symmetric in \(\phi \) and \(-\phi \), we have proved both (2.9) and (2.10). \(\square \)

2.2 Limiting shapes of \(m_{\mu ,\sigma }\) and identification of \(\overline{H}\)

Since \(m_{\mu ,\sigma }\) is defined to be maximal , the subadditive ergodic theorem implies that \(m_{\mu ,\sigma }\) is deterministic in the rescaled macroscopic limit. The precise statement we need is summarized in Proposition 2.5. Before presenting it, we first observe that \(\inf _{\mathbb {R}^d} V\) and \(\sup _{\mathbb {R}^d} V\) are deterministic quantities, thanks to the ergodicity hypothesis.

Lemma 2.2

There exist \(\overline{v},\underline{v}\in \mathbb {R}\) such that

Proof

For each \(t\in \mathbb {R}\), the events \(\left\{ V \in \Omega \,:\, \inf _{\mathbb {R}^d} V < t \right\} \) and \(\left\{ V \in \Omega \,:\, \sup _{\mathbb {R}^d} V > t \right\} \) are invariant under translations and therefore have probability either 0 or 1 by (1.8). We take \(\overline{v}\) to be the largest value of \(t\) for which \(\mathbb {P}\left[ \sup _{\mathbb {R}^d} V > t \right] = 1\) and \(\underline{v}\) to be the smallest value of \(t\) for which \(\mathbb {P}\left[ \inf _{\mathbb {R}^d} V < t \right] = 1\). In view of (1.6), we have \(-K_0\le \underline{v} \le \overline{v} \le K_0\). The statement of the lemma follows.

We assume throughout the rest of the paper that \(\underline{v} = 0\). Note that we may, without loss of generality, subtract any constant we like from the random potential \(V\) without altering the statement of Theorem 1.1.

The value of \(\overline{v}\) prescribes, almost surely, the set of parameters \((\mu ,\sigma )\) for which \(m_{\mu ,\sigma }\) is finite, i.e., for which (2.6) holds. We denote this by

It is convenient to set

Note that if \(\kappa \ge 0\), then \((\kappa ,-1) \in \mathcal {A}\) and \(\kappa \) is the largest value of \(\mu \) for which \((\mu ,-1) \in \mathcal {A}\). If on the other hand \(\kappa < 0\), then \((\mu ,-1) \not \in \mathcal {A}\) for every \(\mu \ge 0\).

We also define the following subset \(\mathcal {A}'\) of \(\mathcal {A}\), which consists of those parameters which play a role in the proof of Theorem 1.1:

Observe that there exists a unique element \((\mu _*,\sigma _*)\in \mathcal {A}'\) for which

In fact, with \(\kappa \) as above, we have

We next establish some simple bounds on the growth of \(m_{\mu ,\sigma }\).

Lemma 2.3

Assume \((\mu ,\sigma )\in \mathcal {A}\) and \(V\in \Omega \) satisfies \(\inf _{\mathbb {R}^d} V = 0\) and \(\sup _{\mathbb {R}^d} V = \overline{v}\). Then, for every \(y,z\in \mathbb {R}^d\), we have the following: in the case that \(\sigma \le 0\),

and, in the case that \(\sigma \ge 0\),

Proof

The arguments for (2.23) and (2.24) are almost the same, so we only give the proof of (2.23). The lower bound is immediate from the definition of \(m_{\mu ,\sigma }(\cdot ,z)\) and the fact that the left side of (2.23), as a function of \(y\), is a subsolution of (2.2) in \(\mathbb {R}^d\). To get the upper bound, we observe that any subsolution \(u \in {{\mathrm{USC}}}(\mathbb {R}^d)\) of (2.2) satisfies

In particular, by the characterization of subsolutions mentioned in the first paragraph of the proof of Proposition 2.1, we deduce that (2.25) holds at almost every point of \(\mathbb {R}^d\). This implies that \(u\) is Lipschitz with constant \(\left( 1 +\sigma \mu ^{1/2} \right) ^{1/2}\). This argument applies to \(m_{\mu ,\sigma }(\cdot ,z)\) by (2.12). Since \(m_{\mu ,\sigma }(z,z) = 0\), we obtain the upper bound of (2.23). \(\square \)

We next prove some continuity and monotonicity properties for the function \((\mu ,\sigma ) \mapsto m_{\mu ,\sigma }(y,z)\) on \(\mathcal {A}\).

Lemma 2.4

Fix \(V\in \Omega \) for which \(\inf _{\mathbb {R}^d} V= 0\) and \(\sup _{ \mathbb {R}^d}V = \overline{v}\) and suppose that \((\mu ,\sigma ) \in \mathcal {A}\) is such that \(\sigma (\mu +\overline{v})^{1/2} > -1\). Then

For every pair \((\mu ,\sigma ) , (\nu ,\tau ) \in \mathcal {A}\) and \(y,z\in \mathbb {R}^d\), we have

Moreover, for every \((\mu ,\sigma ), (\nu ,\tau ) \in \mathcal {A}\) with \(\sigma \mu < \tau \nu \), there exists \(c>0\) such that, for all \(y,z\in \mathbb {R}^d\),

Proof

Let \(0< \varepsilon < 1\), and observe that, by (2.12), for \(\lambda := 1-\varepsilon \), the function \(w:= \lambda m_{\mu ,\sigma }(\cdot ,z)\) is a subsolution of the equation

Observe that the infimum over \(\mathbb {R}^d\) of the term in parentheses on the right-hand side is positive by assumption. Thus if \((\nu ,\tau )\) is sufficiently close to \((\mu ,\sigma )\), we have that for all \(y\in \mathbb {R}^d\),

By maximality, we deduce that \(w \le m_{\nu ,\tau }(\cdot ,z)\) for all \((\nu ,\tau )\) sufficiently close to \((\mu ,\sigma )\), depending on \(\varepsilon \). According to the bounds in Lemma 2.3, we obtain, for a constant \(C>0\) depending only on \((\mu ,\sigma ,\overline{v})\), the estimate

Reversing the roles of \((\mu ,\sigma )\) and \((\nu ,\tau )\), using that \(\tau (\nu +\overline{v}) >-1\) for \((\nu ,\tau )\) close to \((\mu ,\sigma )\), and arguing similarly, we get, for all \((\nu ,\tau )\) sufficiently close to \((\mu ,\sigma )\), that

This completes the proof of (2.26).

The monotonicity property (2.27) is immediate from the definition (2.4) since the condition on the right of (2.27) implies that the right side of (2.2) is larger for \((\nu ,\tau )\) than for \((\mu ,\sigma )\), and hence the admissible class of subsolutions in (2.4) is larger.

The strict monotonicity property in the last statement of the lemma follows from the fact, which is easy to check from the characterization of subsolutions mentioned in the proof of Proposition 2.1, that \(y \mapsto m_{\mu ,\sigma }(y,z) + c|y-z|\) is a subsolution of

provided \(c>0\) is sufficiently small, depending on a lower bound for \(\sigma (\nu -\mu )\). \(\square \)

The following proposition is a special case of, for example, [3, Proposition 4.1] or [4, Proposition 2.5]), and so we do not present the proof. The argument is an application of the subadditive ergodic theorem, using the subadditivity of \(m_{\mu ,\sigma }\), (2.8).

Proposition 2.5

For each \((\mu ,\sigma )\in \mathcal {A}\), there exists a convex, positively homogeneous function \(\overline{m}_{\mu ,\sigma } \in C(\mathbb {R}^d)\) such that

We are now ready to construct \(\overline{H}\). We continue by introducing two functions

defined by

We take \(\overline{H}^{\,-}\!(p):= -\infty \) if the admissible set in its definition is empty. Since \(\mu \mapsto \overline{m}_{\mu ,-1}(\cdot )\) is decreasing, we see that \(\overline{H}^-(p) = -\infty \) if and only if there exists \(y \in \mathbb {R}^d\) such that \(\overline{m}_{0,-1}(y) < p\cdot y\). We define the effective Hamiltonian to be the maximum of these:

Observe that since, for all \(\mu ,\nu \ge 0\),

we have that

Therefore we can also write

We next check that \(\overline{H}\) is coercive, i.e., that (1.9) holds.

Lemma 2.6

For every \(p\in \mathbb {R}^d\),

Proof

According to Proposition 2.5 and Lemmas 2.2 and 2.3, for every \(\mu \ge 0\),

provided that \((\mu ,-1)\in \mathcal {A}\), and

In view of the definition of \(\overline{H}\), this yields the estimate (2.29). \(\square \)

In order to describe \(\overline{H}\) further, we partition \(\mathbb {R}^d\) into four regions, generally corresponding to the following features in the graph of \(\overline{H}\): the flat hilltop, the flat valley, the slope between the latter two, and the unbounded region outside the flat valley (see Fig. 1). We define

Here \(\partial \phi (x_0)\) denotes the subdifferential of a convex function \(\phi :\mathbb {R}^d\rightarrow \mathbb {R}\) at \(x_0\in \mathbb {R}^d\),

and we write \(\partial \phi (E) := \cup \left\{ \partial \phi (x)\,:\, x\in \mathbb {E}\right\} \) for \(E\subseteq \mathbb {R}^d\).

We remark that \(0 \in K_1\) by the nonnegativity of \(m_{\mu ,\sigma }\) and \(K_2 = \emptyset \) if and only if \(\mu _* = 0\). Since \(m_{\mu ,0}(y,0) = \overline{m}_{\mu ,0}(y) = |y|\) for every \(\mu \ge 0\), we see that \(\partial B_1 \subseteq K_3\). Finally, we note that \(K_4\) is unbounded, while \(K_1\cup K_2 \cup K_3\) is bounded.

The following proposition gives us a representation of \(\overline{H}\) which is convenient for the proof of Theorem 1.1. It also confirms that the basic features of \(\overline{H}\) are portrayed accurately in Fig. 1.

Proposition 2.7

For each \(p\in \mathbb {R}^d\setminus K_1\), there exists a unique \(\mu \ge 0\) such that, for some \((\mu ,\sigma ) \in \mathcal {A}'\), we have \(p \in \partial \overline{m}_{\mu ,\sigma }(\partial B_1)\). In particular, \(\{ K_1,K_2,K_3,K_4\}\) is a disjoint partition of \(\mathbb {R}^d\). Moreover, with \(\mu _*\) as defined in (2.22), we have

Proof

We move along the path \(\mathcal {A}'\) starting at \((\mu _*,\sigma _*)\). If \(\mu _*>0\) and hence \(\sigma _*=-1\), then we move in straight line segments from \((\mu _*,-1)\) to \((0,-1)\) to \((0,1)\) to \((\infty ,1)\); otherwise, if \(\mu _*=0\), then we move first from \((0,\sigma _*)\) to \((0,1)\) and then to \((\infty ,1)\).

By Lemma 2.4, the graph of the positively homogeneous, convex function \(\overline{m}_{\mu ,\sigma }\) is continuous and increasing as we move along the path. Therefore, given \(p\in \mathbb {R}^d\setminus K_1\), we can stop at the first point \((\mu ,\sigma )\in \mathcal {A}'\setminus \{ (\mu _*,\sigma _*) \}\) in the path at which the graph of \(\overline{m}_{\mu ,\sigma }\) is tangent to that of the plane \(y\mapsto p\cdot y\). Indeed, \(p\not \in K_1\) ensures that the plane \(p\cdot y\) is not below the graph of \(\overline{m}_{\mu ,\sigma }\) for every \((\mu ,\sigma )\in \mathcal {A}'\setminus \{ (\mu _*,\sigma _*) \}\), and this point must be reached at or before \(((|p|^2-1)^2,1)\), by the estimate (2.24). The uniqueness of \(\mu \) follows from the last statement of Lemma 2.4. This completes the proof of the first statement. The formula (2.31) is then immediate from the definition of \(\overline{H}\) and Lemma 2.4. \(\square \)

3 Proof of homogenization

We consider, for each \(p\in \mathbb {R}^d\) and \(\delta > 0\), the approximate cell problem

It is classical that, for every \(p\in \mathbb {R}^d\) and \(\delta > 0\), there exists a unique viscosity solution \(v^\delta =v^\delta (\cdot ,p) \in C(\mathbb {R}^d)\) of (3.1) subject to the growth condition

In fact, by comparing \(v^\delta (\cdot ,p)\) to constant functions we immediately obtain that \(v^\delta (\cdot ,p)\) is bounded and

It follows from (3.2) and the coercivity of the equation that \(v^\delta \) is Lipschitz and, for \(C>0\) depending only on an upper bound for \(|p|\) and \(\sup _{\mathbb {R}^d} V\), we have

Using (3.3) and comparing \(v^\delta (\cdot ,p)\) to \(v^\delta (\cdot ,q) \pm C\delta ^{-1}|p-q|\), we obtain, for a constant \(C> 0\) depending only on an upper bound for \(\max \{ |p|,|q| \}\) and \(\sup _{\mathbb {R}^d} V\), the estimate

By the perturbed test function method, Theorem 1.1 can be reduced to the following proposition.

Proposition 3.1

We omit the demonstration that Proposition 3.1 implies Theorem 1.1, since it is classical and can also be obtained for example by applying [1, Lemma 7.1]. The argument for Proposition 3.1 is broken into the following five lemmas. Recall that \(\mathcal {A}\) is the set of admissible parameters \((\mu ,\sigma )\) defined in (2.19).

Lemma 3.2

Lemma 3.3

Lemma 3.4

Lemma 3.5

Lemma 3.6

Postponing the proof of the lemmas, we show first that they imply Proposition 3.1.

Proof of Proposition 3.1 According to (3.4), using also Lemma 2.2 to control the constant in (3.4) on an event of full probability, it suffices to prove that

By [3, Lemma 5.1], to obtain (3.6), it suffices to show that

Indeed, while the Hamiltonian in [3] is assumed to be convex in \(p\), the argument for [3, Lemma 5.1] relies only on a \(\mathbb {P}\)-almost sure, uniform Lipschitz bound on \(v^\delta (\cdot ,p)\) (which we have in (3.3), using again Lemma 2.2 to control the constant), and therefore the lemma holds in our situation notwithstanding the lack of convexity.

To obtain (3.7), we consider the partition \(\{ K_1,K_2,K_3,K_4\}\) of \(\mathbb {R}^d\) given by (2.30) and Proposition 2.7 and check that, for each \(i \in \{ 1,2,3,4\}\),

In view of the formula (2.31), we see that:

-

For \(i=1\), we consider two cases. If \(\kappa \le 0\), then \(\mu _* = 0\) and, in view of the fact that \(K_1 \subseteq B_1\), we obtain (3.8) for \(i=1\) from Lemmas 3.4 and 3.6. If \(\kappa > 0\), then \(\mu _*=\kappa >0\) and \(\sigma _*=-1\) and we have \((\mu ,-1) \in \mathcal {A}\) for all \(0\le \mu < \mu _*\), and thus (3.8) for \(i=1\) follows from Lemmas 3.3 and 3.6.

-

For \(i=3\), we get (3.8) immediately from Lemmas 3.4 and 3.5.

-

For \(i=4\), the claim (3.8) is a consequence of Lemmas 3.2 and 3.5.

This completes the argument. \(\square \)

We obtain each of the five auxiliary lemmas stated above by a comparison between the functions \(m_{\mu ,\sigma }\) and \(v^\delta \), with the exception of Lemma 3.4, which is much simpler.

Proof of Lemma 3.2 Fix \((\mu ,1) \in \mathcal A\) and \(p\in \partial \overline{m}_{\mu ,1}(\partial B_1)\). Select \(e\in \partial B_1\) such that \(p\in \partial \overline{m}_{\mu ,1}(e)\). This implies that, for every \(y\in \mathbb {R}^d\),

Suppose that \(V\in \Omega \) and \(\delta >0\) are such that

If \(c>0\) is sufficiently small, then the function

satisfies

Due to (3.2), there exists \(s>0\), independent of \(\delta \), such that

and specializing (3.11) to the domain \(U\) yields, in view of the definition of \(\theta \), that

We observe next that, due to (2.10) and (3.12), the function

is a supersolution of the equation

In view of \(0\in U\), (3.12), (3.13) and (3.14), the comparison principle yields

Rearranging the previous inequality and using (3.12), we find that

Notice that (3.9) and the positive homogeneity of \(\overline{m}_{\mu ,1}\) implies that

Combining the previous two lines, we obtain

We have shown that (3.10) implies (3.15). We therefore obtain the conclusion of the lemma by applying Proposition 2.5. \(\square \)

Proof of Lemma 3.3 The proof is similar to Lemma 3.2. The difference is that we use (2.11) rather than (2.10), which means that we do not have to take the vertex of \(m_{\mu ,\sigma }\) to be far away from the origin in the definition of the function \(\tilde{m}\). The argument is therefore easier and the statement of the lemma is stronger.

Fix \((\mu ,-1) \in \mathcal A\) and \(p\in \partial \overline{m}_{\mu ,-1}(0)\). This implies that, for every \(y\in \mathbb {R}^d\),

Suppose that \(V\in \Omega \) and \(\delta >0\) are such that

If \(c>0\) is sufficiently small, then the function

satisfies

Due to (3.2), there exists \(s>0\), independent of \(\delta \), such that

and restricting (3.18) to the domain \(U\) we obtain, in view of the definition of \(\theta \), that

According to (2.10), the function

is a supersolution of the equation

In view of \(0\in U\), (3.19), (3.20) and (3.21), the comparison principle yields

Rearranging the previous inequality and using (3.19), we find that

Using (3.16), we get

We have shown that (3.17) implies (3.22). We therefore obtain the conclusion of the lemma from Proposition 2.5. \(\square \)

Proof of Lemma 3.4 Fix \(p\in \mathbb {R}^d\), \(V\in \Omega \) for which \(\inf _{\mathbb {R}^d} V = 0\) and \(\sup _{\mathbb {R}^d} V = \overline{v}\) and let \(\theta > 0\). Select \(y_\theta \in \mathbb {R}^d\) and a number \(r>0\) such that \(\sup _{B_r(y_\theta )} V \le \theta \). Let \(\varphi \) be any smooth function on \(B_r(y_\theta )\) such that \(\varphi (x)\rightarrow +\infty \) as \(x \rightarrow \partial B_r(y_\theta )\). Then \(v^\delta (\cdot ,p) - \varphi \) attains a local maximum at some point \(y \in B_r(y_\theta )\). The equation (3.1) then gives

Letting \(r\rightarrow 0\), we obtain that

In view of (3.3), we have

where \(C>0\) depends only on an upper bound for \(|p|\) and \(\overline{v}\). Sending first \(\delta \rightarrow 0\) and then \(\theta \rightarrow 0\) yields

We have shown that \(\inf _{\mathbb {R}^d} V = 0\) and \(\sup _{\mathbb {R}^d} V =\overline{v}\) imply (3.24) for all \(p\in \mathbb {R}^d\). We therefore obtain the conclusion of the lemma by an appeal to Lemma 2.2. \(\square \)

Proof of Lemma 3.5 The argument is similar to that of Lemma 3.2. We fix \((\mu ,\sigma ) \in \mathcal A\) and \(p\in \partial \overline{m}_\mu (\partial B_1)\). Select \(e\in \partial B_1\) such that \(p \in \partial \overline{m}_{\mu ,\sigma }(e)\). Since \(\overline{m}_{\mu ,\sigma }\) is positively homogeneous, this means that, for every \(y\in \mathbb {R}^d\),

We suppose that for fixed \(V\in \Omega \) and \(\delta >0\) we have

We define

and notice that, for \(c>0\) sufficiently small, \(w\) satisfies

By (3.2), there exists \(s>0\), which independent of \(\delta \), such that

In view of (3.26), (3.27) and (3.28), we have

We next employ (2.9), (3.28), and the fact that \(\sigma ^2 \le 1\) to deduce that the function

is a subsolution of the equation

The usual comparison hence implies

Rearranging the above and using (3.28) to achieve that

By the symmetric property (2.7) of \(m_{\mu ,\sigma }\) and (3.25), we get

We have shown that (3.26) implies (3.32). The conclusion of the lemma therefore follows from Proposition 2.5. \(\square \)

Proof of Lemma 3.6 Fix \(p\in \overline{B}_1\) and \(V\in \Omega \) for which \(\sup _{\mathbb {R}^d} V = \overline{v}\). Suppose \(\theta ,\delta > 0\) are such that

Define

and check that, if \(c>0\) is sufficiently small, then \(w\) satisfies

By (3.2), there exists \(s>0\), which is independent of \(\delta \), such that

In view of (3.33), (3.34) and (3.35), we have

Set \(\eta := \frac{1}{4} \min \{\theta ,1\}\), select \(y_\theta \in \mathbb {R}^d\) such that

According to (2.23) and (2.24), \(m_{\mu ,0}(y,z) = |y-z|\) for every \(\mu \ge 0\) and \(y,z\in \mathbb {R}^d\). Define

We claim that \(\tilde{m}\) is a subsolution of the equation

In view of (2.9), it suffices to check (3.38) at the vertex point \(y_\theta \). We consider a smooth test function \(\phi \) such that

It is evident that

Thus \(\left( |p+D\phi (y_\theta )|^2 - 1\right) ^2\le 1\) and so we deduce from (3.37) that

This completes the proof of (3.38).

Applying the comparison principle, in view of \(0\in U\), (3.36) and (3.38), we obtain

Rearranging the above expression and using (3.35), we deduce that

By the usual triangle inequality, this yields

We have shown that (3.33) and \(\sup _{\mathbb {R}^d} V = \overline{v}\) implies (3.39). As \(p\in \overline{B}_1\), (3.39) is impossible for \(\delta < \theta /(8|y_\theta |)\).

We have shown that \(\sup _{\mathbb {R}^d} V = \overline{v}\) implies that, for every \(p\in \overline{B}_1\) and \(\theta > 0\),

Thus \(\sup _{\mathbb {R}^d} V = \overline{v}\) implies

We therefore obtain the statement of the lemma after an appeal to Lemma 2.2. \(\square \)

References

Armstrong, S.N., Cardaliaguet, P., Souganidis, P.E.: Error estimates and convergence rates for the stochastic homogenization of Hamilton–Jacobi equations. J. Am. Math. Soc. 27, 479–540 (2014)

Armstrong, S.N., Souganidis, P.E.: Stochastic homogenization of Hamilton-Jacobi and degenerate Bellman equations in unbounded environments. J. Math. Pures Appl. (9), 97(5):460–504 (2012)

Armstrong, S.N., Souganidis, P.E.: Stochastic homogenization of level-set convex Hamilton–Jacobi equations. Int. Math. Res. Notices 2013(15), 3420–3449 (2013)

Armstrong, S.N., Tran, H.V.: Stochastic homogenization of viscous Hamilton–Jacobi equations and applications. Anal. PDE 7(8), 1969–2007 (2014)

Barron, E.N., Jensen, R.: Semicontinuous viscosity solutions for Hamilton-Jacobi equations with convex Hamiltonians. Commun. Partial Differ. Equ. 15(12), 1713–1742 (1990)

Ciomaga, A., Souganidis, P.E., Tran, H.V.: Stochastic homogenization of interfaces with changing sign velocity (in preparation)

Davini, A., Siconolfi, A.: Metric techniques for convex stationary ergodic Hamiltonians. Calc. Var. Partial Differ. Equ. 40(3–4), 391–421 (2011)

Davini, A., Siconolfi, A.: Weak KAM theory topics in the stationary ergodic setting. Calc. Var. Partial Differ. Equ. 44(3–4), 319–350 (2012)

Evans, L.C.: Periodic homogenisation of certain fully nonlinear partial differential equations. Proc. R. Soc. Edinb. Sect. A 120(3–4), 245–265 (1992)

Evans, L.C.: Partial differential equations, vol. 19 of Graduate Studies in Mathematics. American Mathematical Society, Providence, RI (1998)

Kosygina, E.: Homogenization of stochastic Hamilton-Jacobi equations: brief review of methods and applications. In: Stochastic Analysis and Partial Differential Equations, vol. 429 of Contemp. Math., pp. 189–204. Amer. Math. Soc., Providence, RI (2007)

Kosygina, E., Rezakhanlou, F., Varadhan, S.R.S.: Stochastic homogenization of Hamilton–Jacobi–Bellman equations. Commun. Pure Appl. Math. 59(10), 1489–1521 (2006)

Kosygina, E., Varadhan, S.R.S.: Homogenization of Hamilton–Jacobi–Bellman equations with respect to time-space shifts in a stationary ergodic medium. Commun. Pure Appl. Math. 61(6), 816–847 (2008)

Lions, P.-L., Papanicolaou, G.C., Varadhan, S.R.S.: Homogenization of Hamilton–Jacobi equations. Unpublished preprint (1987)

Lions, P.-L., Souganidis, P.E.: Correctors for the homogenization of Hamilton–Jacobi equations in the stationary ergodic setting. Commun. Pure Appl. Math. 56(10), 1501–1524 (2003)

Lions, P.-L., Souganidis, P.E.: Homogenization of “viscous” Hamilton–Jacobi equations in stationary ergodic media. Commun. Partial Differ. Equ. 30(1–3), 335–375 (2005)

Lions, P.-L., Souganidis, P.E.: Stochastic homogenization of Hamilton–Jacobi and “viscous”-Hamilton–Jacobi equations with convex nonlinearities—revisited. Commun. Math. Sci. 8(2), 627–637 (2010)

Luo, S., Yu, Y., Zhao, H.: A new approximation for effective Hamiltonians for homogenization of a class of Hamilton–Jacobi equations. Multiscale Model. Simul. 9(2), 711–734 (2011)

Matic, I., Nolen, J.: A sublinear variance bound for solutions of a random Hamilton–Jacobi equation. J. Stat. Phys. 149(2), 342–361 (2012)

Oberman, A.M., Takei, R., Vladimirsky, A.: Homogenization of metric Hamilton–Jacobi equations. Multiscale Model. Simul. 8(1), 269–295 (2009)

Rezakhanlou, F., Tarver, J.E.: Homogenization for stochastic Hamilton–Jacobi equations. Arch. Ration. Mech. Anal. 151(4), 277–309 (2000)

Schwab, R.W.: Stochastic homogenization of Hamilton–Jacobi equations in stationary ergodic spatio-temporal media. Indiana Univ. Math. J. 58(2), 537–581 (2009)

Souganidis, P.E.: Stochastic homogenization of Hamilton–Jacobi equations and some applications. Asymptot. Anal. 20(1), 1–11 (1999)

Acknowledgments

The third author was partially supported by NSF CAREER Award #1151919.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by F. H. Lin.

Rights and permissions

About this article

Cite this article

Armstrong, S.N., Tran, H.V. & Yu, Y. Stochastic homogenization of a nonconvex Hamilton–Jacobi equation. Calc. Var. 54, 1507–1524 (2015). https://doi.org/10.1007/s00526-015-0833-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00526-015-0833-2