Abstract

Retinopathy of prematurity (ROP) is a sight-threatening disorder that primarily affects preterm infants. It is a leading cause of lifelong vision impairment and childhood blindness. Digital fundus images of preterm infants obtained with a RetCam ophthalmic imaging device are typically used for ROP screening. ROP is often accompanied by Plus disease, which is characterized by high levels of arteriolar tortuosity and venous dilation. Current diagnostic procedures regard the presence of Plus disease as a factor of prognostic significance in determining the stage, progression and severity of ROP. Our aim is to develop a diagnostic method that distinguishes images of retinas with ROP from healthy ones and whose output can be interpreted by medical experts. We investigate the quantification of retinal blood vessel tortuosity via a novel U-COSFIRE (Combination Of Shifted Filter Responses) filter and propose a computer-aided diagnosis tool for automated ROP detection. The proposed methodology involves the segmentation of retinal blood vessels using a set of B-COSFIRE filters at different scales, followed by the detection of tortuous vessels in the obtained vessel map by means of U-COSFIRE filters. We also compare the proposed technique with an angle-based diagnostic method that uses the magnitude and orientation responses of the multi-scale B-COSFIRE filters. We carried out experiments on a new data set of 289 infant retinal images (89 with ROP and 200 healthy) that we collected from the Indian programme KIDROP (Karnataka Internet Assisted Diagnosis of Retinopathy of Prematurity). We used 10 images (5 with ROP and 5 healthy) to learn the parameters of our methodology and the remaining 279 images (84 with ROP and 195 healthy) for performance evaluation. We achieved a sensitivity of 0.98 and a specificity of 0.97 on the 279 test images. The obtained results and the explainable character of the method demonstrate the effectiveness of the proposed approach in assisting medical experts.


1 Introduction

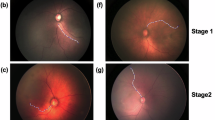

Retinopathy of prematurity (ROP) is a retinal disorder that causes visual impairment in premature, low-birth-weight babies who are born before 31 weeks of gestation [6]. It causes abnormal blood vessels to grow in the inner regions of the eyes, which can lead to detachment of the retina and possibly blindness. Statistics from the World Health Organization indicate that ROP is not only the leading cause of childhood blindness but also a great challenge for treatment and management, owing to the high inter-expert variability of its diagnosis [15]. The Committee for International Classification of Retinopathy of Prematurity (ICROP) [13] established that ROP treatment should be initiated once Plus disease is diagnosed. Plus disease is characterized by high levels of tortuosity and dilation of the retinal blood vessels, as shown in Fig. 1b. The most common approach to ROP screening involves the visual quantification of tortuosity by a retinal expert.

Recent advances in neonatal care have improved the survival rates of low-birth-weight infants, especially in developing countries. For instance, of the 26 million babies born every year in India, approximately two million have low birth weight and are at risk of developing ROP [41]. Screening programmes conducted in rural areas generate large amounts of image data, and their manual labelling is difficult and time-consuming. There is thus a need for an automatic system that can handle large volumes of image data and accurately diagnose the pathology. In this paper, we focus on developing the backbone of such a diagnostic system, with a novel algorithm for the automatic detection of ROP in retinal fundus images of infants.

The computerized analysis of retinal images for the automatic detection of pathologies such as diabetic retinopathy, age-related macular degeneration, ROP and glaucoma is of wide interest to the image processing and artificial intelligence research communities. Although deep learning approaches obtain very good results, often surpassing human observers in quantitative terms, their predictions are not easily interpretable. This limits the use of such systems in real diagnostic scenarios, where a clear explanation of the decisions has to be provided.

To address this concern, we design a novel pipeline for automated and explainable ROP diagnosis, which we constructed under the guidance of ROP-expert ophthalmologists. It consists of two main steps, namely blood vessel segmentation followed by the detection and evaluation of tortuous vessels. The decision scheme that detects ROP in retinal images implements, to some extent, the diagnostic process of medical doctors, who evaluate the number and severity of tortuous vessels in the retinal images. We propose U-COSFIRE filters for tortuous vessel detection. In contrast to existing methods, which we discuss in Sect. 2, U-COSFIRE filters do not require explicit modelling of the geometrical properties of the vessel-curvature points. Instead, they are configured automatically from a single example of interest. This is an advantage because, in the operating phase of a diagnostic system, medical operators can configure additional tortuous vessel detectors for particular cases, which contributes to the flexibility and scalability of the proposed pipeline. The response maps of the U-COSFIRE filters can be interpreted directly by medical doctors, as they highlight the high-curvature points of the vessels.

To the best of our knowledge, there are no public data sets for benchmarking methods for ROP diagnosis in retinal images. We thus validate the proposed method on a new data set of 289 images, which we acquired from KIDROP, the world's largest tele-medicine network for ROP screening and assistance [19]. Furthermore, we implemented an existing method for vessel tortuosity quantification [33] and compare its performance with that achieved by the proposed pipeline based on U-COSFIRE filters. We summarize the contributions of this work as follows:

- novel U-COSFIRE filters for the detection of high-curvature vessel points, and an approach for the quantification of tortuosity level from their magnitude response maps;
- extension of the B-COSFIRE filters with an explicit multi-scale framework for the segmentation of retinal blood vessels with varying thickness;
- a pipeline for automated diagnosis of ROP from retinal images, whose outputs are explainable, and that employs the proposed U-COSFIRE and multi-scale B-COSFIRE filters.

The paper is organized as follows. In Sect. 2, we give an account of the state-of-the-art approaches for vessel delineation, tortuosity estimation and ROP diagnosis. In Sect. 3, we describe the proposed methods and their use for the design of an automated ROP diagnosis system, while in Sect. 4, we present the data set that we collected and the results that we achieved. We also provide a comparison with other methods and a discussion of the results in Sect. 5. Finally, we draw conclusions in Sect. 6.

2 Related works

Existing works on retinal vessel segmentation and on the evaluation of tortuosity were surveyed in [1, 10, 49]. Retinal blood vessel delineation approaches are based on either supervised or unsupervised learning techniques. Supervised methods use manually segmented data to train a classifier, and their performance depends essentially on the set of features derived from the training samples. The classification model learns from the feature vectors and distinguishes vessel pixels from non-vessel ones. Unsupervised vessel segmentation methods rely on the responses of vessel-selective filters followed by thresholding operations.

In [34], the authors introduced a supervised classification technique that relies on feature vectors derived from the properties of Gabor filters, with a Gaussian mixture model (GMM) and a discriminative support vector machine (SVM) as classifiers. Another supervised classification method, proposed in [42], relies on a feature space of pixel intensity values and Gabor wavelet responses combined with a GMM classifier. In [40], an SVM-based supervised classification using feature vectors derived from the responses of a line detector and the grey levels of the target pixels was proposed.

The unsupervised methods proposed in [2, 7, 8, 17] use the matched filter as the main filtering element, followed by thresholding operations. The multi-scale filtering approach proposed in [25] uses the responses of the matched filter at three different scales for the segmentation and width estimation of retinal blood vessels. The literature also contains a considerable amount of work that uses Gabor filters for vessel segmentation; the works in [20, 38, 39] are a few examples. Gabor filters have been widely accepted as computational models of simple cells in the visual cortex. The more recent Combination of Receptive Fields (CORF) computational model [5], however, exhibits more properties of real simple cells than Gabor filters and outperforms them in contour detection, an important biological functionality of simple cells. The CORF model and the trainable filters for visual pattern recognition [3] inspired the B-COSFIRE blood vessel delineation operator [4, 43], first proposed as an unsupervised approach and later wrapped in a supervised method [44]. Besides blood vessel segmentation, B-COSFIRE filters were effectively employed to detect other elongated patterns [45, 48]. The delineation performance of the B-COSFIRE filters was substantially improved by the addition of an inhibitory component, based on the push-pull inhibition phenomenon known to occur in area V1 of the visual cortex, which resulted in a new operator for the delineation of elongated patterns named RUSTICO [46].

A substantial amount of work has also been reported on the estimation of blood vessel tortuosity. Most of these approaches consider the length-to-chord (LTC) measure [27] as a major parameter for tortuosity evaluation. LTC is the ratio of the actual length of a curved segment of a given blood vessel to the length of the straight line (chord) connecting its end points. Two studies [12, 28] considered other properties of the vessel axis, such as curvature and directional changes, for tortuosity evaluation. A semi-automatic tortuosity evaluation method based on grouping vessel segments with constant-sign curvature was proposed in [14]. In [33], an angle-based method was applied to the orientation response maps of Gabor filters to detect tortuous vessel segments.
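As a concrete illustration of the LTC measure, the following Python sketch computes the ratio for a vessel centreline given as an ordered list of (x, y) points. It is a minimal sketch, not the implementation used in [27]: the arc length is approximated by the length of the polyline, and the function name `length_to_chord` is our own.

```python
import math

def length_to_chord(points):
    """Length-to-chord (LTC) ratio of a centreline given as ordered (x, y) points.

    The arc length is approximated by summing the lengths of consecutive
    segments; the chord is the straight line between the two end points.
    A straight segment gives 1.0, and the value grows with tortuosity.
    """
    if len(points) < 2:
        raise ValueError("at least two points are needed")
    arc = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    chord = math.dist(points[0], points[-1])
    if chord == 0:
        raise ValueError("end points coincide: the chord length is zero")
    return arc / chord

straight = [(0, 0), (1, 0), (2, 0), (3, 0)]
# Half circle of radius 1, densely sampled: LTC approaches pi / 2.
half_circle = [(math.cos(k * math.pi / 100), math.sin(k * math.pi / 100)) for k in range(101)]

print(length_to_chord(straight))               # 1.0
print(round(length_to_chord(half_circle), 3))  # 1.571
```

Note that LTC depends only on the total length and on the end points, so a single wide arc and several small oscillations can yield the same value.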

3 Proposed pipeline

The pipeline for ROP diagnosis that we propose consists of seven steps, namely pre-processing, blood vessel segmentation with multi-scale B-COSFIRE filters, vessel-map binarization, thinning of the vessel tree map, U-COSFIRE filtering for detection of high-curvature points, analysis of connected components of the high-curvature locations, and a diagnostic decision based on the number of connected components. Figure 2 shows a schematic view of the proposed pipeline. In the following, we elaborate on each of these steps.

Schematic outline of the proposed pipeline. a Input image, b pre-processing, c vessel segmentation using multi-scale B-COSFIRE filter, d binarization, e thinning of the vessel tree map, f curvature-points detection, g connected components analysis, and h assessment of ROP. Images (c), (d), (e), and (g) are inverted for better visualization

3.1 Pre-processing

We resize the given RGB fundus images to \(800 \times 600\) pixels and process only the green channel of the images as it contains the highest contrast between the blood vessels and the background [30].

3.2 Multi-scale B-COSFIRE vessel segmentation

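We employ the trainable B-COSFIRE filters for vessel segmentation, originally proposed in [4]. A B-COSFIRE filter can be configured by presenting a single pattern of interest to a configuration algorithm. Effective delineation is achieved by combining the responses of a symmetric and an asymmetric B-COSFIRE filter, configured on a vessel-like and a vessel-ending-like pattern, respectively. Formally, their responses are combined as:
\[C_{\phi ,{\hat{\sigma }},{\tilde{\sigma }}}(x,y) = \left| S_{\phi , {\hat{\rho }}, {\hat{\sigma }},{\hat{\sigma }}_0, {\hat{\alpha }}}(x,y) + A_{\phi , {\tilde{\rho }}, {\tilde{\sigma }}, {\tilde{\sigma }}_0, {\tilde{\alpha }}}(x,y) \right| _t\]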

where \(S_{\phi , {{\hat{\rho }}}, {{\hat{\sigma }}},{\hat{\sigma }}_0, {{\hat{\alpha }}}}(x,y)\) and \(A_{\phi , {{\tilde{\rho }}}, {{\tilde{\sigma }}}, {{\tilde{\sigma }}}_0, {{\tilde{\alpha }}}}(x,y)\) are the responses of a vessel-selective and a vessel-ending-selective filter, respectively. The parameter \(\sigma \) is a scale parameter (i.e. the standard deviation of the outer Gaussian of a Difference-of-Gaussians (DoG) used as contributing filter to the B-COSFIRE filter) that regulates the selectivity of the B-COSFIRE filters to vessels of a certain thickness. The parameter \(\phi \) indicates the orientation preference of the filter, while \({\hat{\rho }}\) and \({\tilde{\rho }}\) are the radii of the respective filter supports. The pairs of parameters \({({\hat{\sigma }}_0,~{{\hat{\alpha }}})}\) and \({({{\tilde{\sigma }}}_0,~{{\tilde{\alpha }}})}\) control the tolerance to deformations of the pattern with respect to the one used for the configuration; up to some extent, the tolerance to curvilinear vessels increases with increasing values of these parameters. The symbol \(|.|_t\) denotes the thresholding of the combined filter response at a fraction t of its maximum value. The symmetric and asymmetric filters have different parameter values since they are selective for different parts of the vessels. In order to delineate vessels with different orientations, a rotation-tolerant response map R is constructed by superimposing the responses \(C_{{\phi ,{\hat{\sigma }},{\tilde{\sigma }}}}(x,y)\) obtained for different values of \(\phi \), i.e. by taking their pixel-wise maximum:
\[R_{({\hat{\sigma }},{\tilde{\sigma }})}(x,y) = \max _{\phi } \left\{ C_{\phi ,{\hat{\sigma }},{\tilde{\sigma }}}(x,y) \right\} \]
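The thresholding \(|.|_t\) and the superposition over orientations can be sketched with NumPy as follows. This is an illustrative sketch rather than the implementation of [4]: the helper names are hypothetical, the symmetric and asymmetric responses are assumed to be summed before thresholding, and the superposition is assumed to be a pixel-wise maximum.

```python
import numpy as np

def threshold_fraction(response, t):
    """|.|_t: set to zero every value below t times the maximum of the map."""
    out = np.array(response, dtype=float, copy=True)
    out[out < t * out.max()] = 0.0
    return out

def combine(sym, asym, t):
    """Assumed combination: sum of symmetric and asymmetric responses, then |.|_t."""
    return threshold_fraction(np.asarray(sym) + np.asarray(asym), t)

def rotation_tolerant(maps_per_orientation):
    """Superposition across orientations, taken as a pixel-wise maximum."""
    return np.max(np.stack(maps_per_orientation), axis=0)

# 12 preferred orientations spaced pi/12 apart, as in the text.
orientations = [k * np.pi / 12 for k in range(12)]
```

Given hypothetical lists `S` and `A` holding the symmetric and asymmetric responses computed for the 12 orientations, the rotation-tolerant map would be `rotation_tolerant([combine(s, a, t) for s, a in zip(S, A)])`.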

Here, we consider a set of 12 preferred orientations with an angular spacing of \(\pi /12\) radians. The configuration of a B-COSFIRE filter relies on two parameters, namely \(\sigma \) and \(\rho \). The parameter \(\sigma \) determines the selectivity of the filter to curvilinear patterns of a given thickness: given a line pattern of interest of thickness w pixels, we compute \(\sigma = w/1.92\) [36], consistently with the relation \(\tau =1.92\sigma \) given in Sect. 4.3. The parameter \(\rho \) regulates the size in pixels of the support region of the filter. For more technical details about the B-COSFIRE filters, we refer the reader to [4].
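The relation between line thickness and scale parameter, \(\tau = 1.92\sigma \) (Sect. 4.3), gives a quick check of which vessel widths a chosen scale targets. The short Python sketch below (the function names are our own) converts in both directions and applies the conversion to the symmetric scales {1.4, 2.2, 3.4} used in Sect. 4.3.

```python
def thickness_for_sigma(sigma):
    """Line thickness (pixels) that maximises the DoG response: tau = 1.92 * sigma."""
    return 1.92 * sigma

def sigma_for_thickness(tau):
    """Inverse relation: sigma = tau / 1.92."""
    return tau / 1.92

# Vessel widths targeted by the symmetric B-COSFIRE scales.
for s in (1.4, 2.2, 3.4):
    print(s, round(thickness_for_sigma(s), 2))   # 2.69, 4.22 and 6.53 pixels
```

The three symmetric scales thus target vessels roughly 2.7, 4.2 and 6.5 pixels wide in the resized \(800 \times 600\) images.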

High tortuosity of the blood vessels affects the variability of vessel thickness across the retina, which makes it difficult to track the vessels with single-scale filtering. In [47], robustness to scale was achieved by forming pixel-wise feature vectors derived from a bank of B-COSFIRE filters tuned to vessels of various thicknesses. Using these vectors, a classifier was trained to differentiate vessel pixels from the background.

In this work, we extend the B-COSFIRE filters to a multi-scale setting and obtain both scale- and rotation-tolerant responses at every pixel location. We replace the \(\sigma \) parameter of the B-COSFIRE filter with a vector \(\underline{{\varvec{\sigma }}}\) of preferred scales, which constitutes a scale space in which the B-COSFIRE response map is computed. For a B-COSFIRE filter S with preferred orientation \(\phi \), we formally define the scale-tolerant response \(S_M\) as:

where \(N_s\) is the number of considered scales. Subsequently, we compute the response map of a multi-scale rotation-tolerant B-COSFIRE filter as the superposition of the multi-scale response maps computed for each preferred orientation. In this work, we employ multi-scale symmetric and asymmetric B-COSFIRE filters, and define their combined response map \(R_{M, (\hat{\underline{{\varvec{\sigma }}}}, \tilde{\underline{{\varvec{\sigma }}}}) }(x,y)\) for multi-scale vessel segmentation as:

where \(C_{M,(\phi ,\hat{ \underline{{\varvec{\sigma }}}}, \tilde{\underline{{\varvec{\sigma }}}}) }(x,y)\) is the combined multi-scale symmetric and asymmetric B-COSFIRE filter response computed for a given orientation \(\phi \), which we formally define as:

3.3 Vessel-tree binarization and thinning

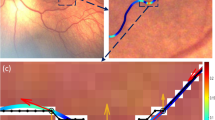

We threshold the B-COSFIRE magnitude response map \(R_{M, (\hat{\underline{{\varvec{\sigma }}}}, \tilde{\underline{{\varvec{\sigma }}}}) }(x,y)\) using Otsu's method [35]. When applied to the B-COSFIRE magnitude response map, this method first identifies a grey level that separates the region containing the blood vessels from the background. An illustration of this first thresholding step is provided in Fig. 3a, b, which show the B-COSFIRE response map and the resulting binary map, respectively. Subsequently, we apply Otsu's method a second time, only to the pixels that fall within the white area determined by the first thresholding operation (Fig. 3c).
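The two-pass thresholding can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: Otsu's threshold is computed from a 256-bin histogram, the final vessel map is assumed to keep the pixels at or above the second threshold, and the function names are hypothetical. A library routine such as scikit-image's `skimage.filters.threshold_otsu` could replace `otsu_threshold`.

```python
import numpy as np

def otsu_threshold(values, nbins=256):
    """Otsu's threshold: the bin edge that maximises the between-class variance."""
    hist, edges = np.histogram(values, bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)          # pixel count at or below each bin
    w1 = w0[-1] - w0                            # pixel count above each bin
    s0 = np.cumsum(hist * centers)              # cumulative intensity sum
    mu0 = np.divide(s0, w0, out=np.zeros_like(s0), where=w0 > 0)
    mu1 = np.divide(s0[-1] - s0, w1, out=np.zeros_like(s0), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]                         # upper edge of the last background bin

def double_otsu(response):
    """First pass: vessel region vs background. Second pass: Otsu again,
    restricted to the pixels kept by the first pass (assumed final map)."""
    first = response >= otsu_threshold(response.ravel())
    second_t = otsu_threshold(response[first])
    return first & (response >= second_t)

# Toy response map: background (0.0), weak responses (0.5), strong responses (1.0).
toy = np.array([0.0] * 1000 + [0.5] * 300 + [1.0] * 100).reshape(28, 50)
vessels = double_otsu(toy)
print(vessels.sum())   # 100: only the strongest responses survive both passes
```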

From the resulting binary map, we extract the thinned vessels by means of a morphological thinning operation. The thinned vessels often have unwanted short spurs, which are formed as a result of small irregularities in the boundary of the original vessel maps. We remove them by pruning with 10 iterations. Finally, we consider the remaining thinned vessel segments for the analysis of tortuosity.
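Thinning itself is available in common libraries (for example, `skimage.morphology.skeletonize` in scikit-image). The NumPy sketch below covers only the pruning step, applied to an already thinned boolean map: it removes end points a fixed number of times. Its definition of an end point (a skeleton pixel whose foreground neighbours form a single contiguous run around it) is our assumption, since the pruning routine used by the authors is not specified. This simple form of pruning also shortens every genuine free vessel end by up to 10 pixels.

```python
import numpy as np

# The 8 neighbour offsets in circular (clockwise) order, starting north.
RING = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]

def end_points(skel):
    """Skeleton pixels whose foreground neighbours form exactly one contiguous run."""
    h, w = skel.shape
    p = np.pad(skel, 1)
    ring = [p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w] for dy, dx in RING]
    transitions = np.zeros((h, w), dtype=np.int32)
    for a, b in zip(ring, ring[1:] + ring[:1]):
        transitions += ~a & b          # count 0 -> 1 changes around the pixel
    return skel & (transitions == 1)

def prune(skel, iterations=10):
    """Remove end points `iterations` times (spurs shorter than that disappear)."""
    skel = np.asarray(skel, dtype=bool).copy()
    for _ in range(iterations):
        ends = end_points(skel)
        if not ends.any():
            break
        skel[ends] = False
    return skel

# Toy skeleton: a 30-pixel horizontal vessel with a 3-pixel spur at its middle.
skel = np.zeros((15, 32), dtype=bool)
skel[10, 0:30] = True
skel[7:10, 15] = True
print(prune(skel, iterations=10).sum())   # 10
```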

3.4 U-COSFIRE filters for high-curvature point detection

The proposed methodology is based on the trainable B-COSFIRE filter for blood vessel delineation that was proposed in [4]. We configure new COSFIRE filters to be selective for tortuous patterns, which resemble ‘U’-shapes in geometry. The COSFIRE filters that we propose take as input a set of responses of a DoG filter, whose relative locations are found in an automatic configuration process carried out on a prototypical U-shape pattern, as shown in Fig. 4a. The DoG function, which we denote by \(DoG_{\sigma }(x,y)\), is defined as:

The configuration process involves the determination of key points along a set of concentric circles around the centre of the given prototype.

For the configuration of a U-COSFIRE filter, we first convolve the input prototype pattern I with a DoG function of standard deviation \({\sigma }\):
\[c_{\sigma }(x,y) = \left| (I \star DoG_{\sigma })(x,y) \right| ^+\]

where the operation \(|.|^+\) suppresses negative values. It is usually referred to as half-wave rectification or rectified linear unit (ReLU). Figure 4b shows the thresholded DoG response map computed for the prototype input image shown in Fig. 4a. The structure of the proposed filter is determined from the DoG responses through an automatic configuration process, which results in a set U of n 3-tuples that describe the geometric properties of the prototype pattern:
\[U = \left\{ (\sigma _i, \rho _i, \phi _i) \mid i = 1, \ldots , n \right\} \]

The automatic configuration of the U-COSFIRE filter is shown in Fig. 5. The centre of the filter is labelled as ‘1’, and the DoG responses along a number of concentric circles around the centre point are considered. The points labelled from ‘2’ to ‘9’ indicate the locations of the local maxima along the concentric circles. The number of local maxima depends on the shape complexity of the pattern as well as on the number of concentric circles. Below is the set U of 3-tuples that is determined from the input pattern shown in Fig. 4a.

Every tuple describes the characteristics of a local maximum response along the concentric circles, with \(\sigma _i\) representing the standard deviation of the DoG function, and \((\rho _i,\phi _i)\) representing the polar coordinates of its location with respect to the filter support centre.
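The configuration step can be illustrated with a simplified Python sketch. It is a hypothetical illustration, not the procedure of [4]: for each radius, the rectified DoG response is sampled at the nearest pixel every 1 degree along a circle around the centre, and samples that exceed a fraction t of the map maximum (t = 0.2 is an arbitrary default here) and are local maxima along the circle become \((\sigma _i, \rho _i, \phi _i)\) tuples. Angles are assumed to be measured counter-clockwise, with image rows growing downwards.

```python
import math
import numpy as np

def configure_tuples(response, center, rhos, sigma, t=0.2, n_samples=360):
    """Hypothetical COSFIRE-style configuration on a rectified DoG response map."""
    cy, cx = center
    h, w = response.shape
    min_value = t * response.max()
    tuples = []
    for rho in rhos:
        if rho == 0:  # the centre of the filter itself
            if response[cy, cx] > min_value:
                tuples.append((sigma, 0.0, 0.0))
            continue
        phis = np.arange(n_samples) * 2 * math.pi / n_samples
        ys = np.clip(np.rint(cy - rho * np.sin(phis)).astype(int), 0, h - 1)
        xs = np.clip(np.rint(cx + rho * np.cos(phis)).astype(int), 0, w - 1)
        vals = response[ys, xs]
        # A plateau (same pixel hit by consecutive samples) yields a single peak,
        # reported at the last sample of the plateau.
        peaks = (vals > min_value) & (vals >= np.roll(vals, 1)) & (vals > np.roll(vals, -1))
        tuples.extend((sigma, float(rho), float(phis[i])) for i in np.flatnonzero(peaks))
    return tuples

# Toy rectified DoG response with one bright point 5 pixels right of the centre.
resp = np.zeros((21, 21))
resp[10, 15] = 1.0
print(configure_tuples(resp, center=(10, 10), rhos=[0, 5], sigma=0.52))
# one tuple with rho = 5.0 and phi of about 0.087 rad (nearest-pixel sampling)
```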

The response of a U-COSFIRE filter is obtained with the same steps described in [4]. The method involves the computation of intermediate response maps, one for each tuple in the configured set U, followed by the fusion of such maps with the weighted geometric mean function. An intermediate feature map for a tuple i is achieved in four steps: (1) convolution of the input image with a DoG function with standard deviation \(\sigma _i\), (2) suppression of the negative values with the ReLU function mentioned above, (3) blurring of the thresholded DoG response map with a Gaussian function of standard deviation \({\hat{\sigma }}_i\) that grows linearly with the distance \(\rho _i\): \(\hat{\sigma _i} = {\sigma _0 + \alpha \rho _i}\), and (4) shifting of the blurred DoG responses by the vector \([\rho _i, \pi -\phi _i]\). The constants \({\sigma {_0}}\) and \(\alpha \) regulate the tolerance to deformations of the prototype pattern, and they are determined empirically. We refer the reader to [4] for all technical details.
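The four steps that produce one intermediate feature map can be sketched in NumPy as below. This is a hedged sketch, not the implementation of [4]: it assumes a centre-on DoG (a Gaussian with standard deviation \(0.5\sigma _i\) minus one with standard deviation \(\sigma _i\)), zero padding at the borders, a shift rounded to whole pixels, and it reads the vector \([\rho _i, \pi -\phi _i]\) directly in (column, row) coordinates so that a response found at angle \(\phi _i\) is moved back onto the filter centre. The values of \(\sigma _0\) and \(\alpha \) in the example are hypothetical.

```python
import math
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _grid(radius):
    """Integer coordinate grids covering [-radius, radius] in both directions."""
    return np.mgrid[-radius:radius + 1, -radius:radius + 1]

def dog_kernel(sigma):
    """Centre-on DoG (assumed sign): Gaussian(0.5 * sigma) minus Gaussian(sigma)."""
    y, x = _grid(int(math.ceil(3 * sigma)))
    d2 = x ** 2 + y ** 2

    def gauss(s):
        return np.exp(-d2 / (2 * s ** 2)) / (2 * math.pi * s ** 2)

    return gauss(0.5 * sigma) - gauss(sigma)

def gaussian_kernel(sigma):
    y, x = _grid(max(1, int(math.ceil(3 * sigma))))
    k = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def filter2d(image, kernel):
    """Same-size correlation with zero padding (equal to convolution for symmetric kernels)."""
    ry, rx = kernel.shape[0] // 2, kernel.shape[1] // 2
    padded = np.pad(image, ((ry, ry), (rx, rx)))
    return np.einsum("ijkl,kl->ij", sliding_window_view(padded, kernel.shape), kernel)

def shift(image, dy, dx):
    """Translate by (dy, dx) whole pixels; uncovered pixels become zero."""
    h, w = image.shape
    out = np.zeros_like(image)
    if abs(dy) >= h or abs(dx) >= w:
        return out
    out[max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)] = \
        image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    return out

def intermediate_map(image, sigma_i, rho_i, phi_i, sigma0, alpha):
    dog = np.maximum(filter2d(image, dog_kernel(sigma_i)), 0.0)      # steps 1-2: DoG + ReLU
    blurred = filter2d(dog, gaussian_kernel(sigma0 + alpha * rho_i))  # step 3: blur grows with rho_i
    angle = math.pi - phi_i                                           # step 4: shift by [rho_i, pi - phi_i]
    dx = int(round(rho_i * math.cos(angle)))
    dy = int(round(rho_i * math.sin(angle)))   # read as a row offset (rows grow downwards)
    return shift(blurred, dy, dx)

# A bright point 5 pixels right of (15, 15), read by a tuple with rho = 5, phi = 0.
img = np.zeros((31, 31))
img[15, 20] = 1.0
m = intermediate_map(img, sigma_i=1.0, rho_i=5, phi_i=0.0, sigma0=0.5, alpha=0.1)
print(np.unravel_index(np.argmax(m), m.shape))   # peak moved onto the centre (15, 15)
```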

We denote by \(r_U(x,y)\) the COSFIRE filter response that is the weighted geometric mean of all intermediate feature responses \(u_{\sigma _i,\rho _i,\phi _i}(x,y)\):

where

and

The threshold parameter t (\(0<t<1\)) is a fraction of the maximum filter response. The selectivity of a U-COSFIRE filter for a particular orientation depends on the orientation of the prototype used to configure the filter. To achieve selectivity for different orientations, we construct a bank of U-COSFIRE filters from the one configured above. For a given orientation preference \(\psi \), we alter the angular parameters in the set U to form a new set \(R_\psi (U)\):
\[R_\psi (U) = \left\{ (\sigma _i, \rho _i, \phi _i + \psi ) \mid \forall \, (\sigma _i, \rho _i, \phi _i) \in U \right\} \]

The multi-orientation response map \({\hat{r}}_{U}(x,y)\) at any pixel location is the maximum superposition of the response maps evaluated with different preferred orientations:
\[{\hat{r}}_{U}(x,y) = \max _{\psi \in \Psi } \left\{ r_{R_\psi (U)}(x,y) \right\} \]

Here, we set \(\Psi =\{0,\frac{\pi }{36},\! \ldots ,\!\frac{35\pi }{36}\}\) so that we have a bank of 36 U-COSFIRE filters tuned for 36 different directions that vary in intervals of \(\pi /36\).
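The construction of the filter bank and the fusion of intermediate maps can be sketched as follows. This is a hedged NumPy illustration with hypothetical names: `fuse` computes a weighted geometric mean followed by \(|.|_t\), with positive weights supplied by the caller (the weighting function of [4] is not reproduced here), and `multi_orientation` takes the pixel-wise maximum over the 36 rotated tuple sets, given a caller-supplied function that computes \(r_U\) for a tuple set.

```python
import math
import numpy as np

# Psi = {0, pi/36, ..., 35*pi/36}: 36 preferred orientations, as in the text.
PSI = [k * math.pi / 36 for k in range(36)]

def rotate_tuples(U, psi):
    """R_psi(U): add psi to the polar angle of every (sigma, rho, phi) tuple."""
    return [(s, r, (p + psi) % (2 * math.pi)) for s, r, p in U]

def fuse(maps, weights, t):
    """Weighted geometric mean of intermediate maps, followed by |.|_t.

    Weights are assumed positive; a zero in any intermediate map forces a
    zero output at that pixel, which is what makes COSFIRE filters selective.
    """
    stack = np.stack(maps).astype(float)
    w = np.asarray(weights, dtype=float).reshape(-1, 1, 1)
    with np.errstate(divide="ignore"):
        logs = np.log(stack)          # log(0) = -inf, and exp(-inf) = 0
    fused = np.exp((w * logs).sum(axis=0) / w.sum())
    fused[fused < t * fused.max()] = 0.0
    return fused

def multi_orientation(filter_response, U):
    """r_hat_U: pixel-wise maximum over the bank of rotated filters.

    `filter_response` is a hypothetical callable mapping a tuple set to r_U.
    """
    return np.max(np.stack([filter_response(rotate_tuples(U, psi)) for psi in PSI]), axis=0)
```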

3.5 Estimation of vessel tortuosity

We analyse the connected components of the U-COSFIRE filter response map. We determine its eight-connected components by scanning the pixels vertically from top to bottom and then horizontally from left to right. The scanning process results in an integer map in which each object is assigned to a particular set of pixels, and zero values correspond to background pixels. For visualization purposes, we label each connected component (a group of pixels with the same label) in the integer map with a pseudo-random colour.
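Eight-connected component labelling can be sketched with a breadth-first search, as in the Python example below (the function name is hypothetical). Unlike the column-wise scan described above, it scans row by row, so the label numbers may differ, but the number of components, which is what the diagnosis uses, is the same. `scipy.ndimage.label(mask, structure=np.ones((3, 3)))` offers an equivalent library routine.

```python
from collections import deque
import numpy as np

def label_components(mask):
    """Label 8-connected foreground components; returns (labels, count), 0 = background."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    h, w = mask.shape
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and labels[y, x] == 0:
                count += 1                      # new component found
                labels[y, x] = count
                queue = deque([(y, x)])
                while queue:                    # flood-fill all 8-connected neighbours
                    cy, cx = queue.popleft()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = cy + dy, cx + dx
                            if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                                labels[ny, nx] = count
                                queue.append((ny, nx))
    return labels, count

mask = np.zeros((6, 6), dtype=bool)
mask[0, 0] = mask[1, 1] = True    # diagonal neighbours: one component
mask[4, 3:6] = True               # a separate horizontal run
labels, n = label_components(mask)
print(n)   # 2
```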

We compute the number of connected components in the U-COSFIRE response map, which corresponds to the number of detected high-curvature points, and use it in the next steps for the automation of ROP diagnosis. We also explore other approaches to characterize the tortuosity level from the U-COSFIRE response map, namely number of nonzero pixels, sparse density, entropy, and the number of local maxima. We present a comparative analysis of the results achieved by these approaches in Sect. 4.4.

3.6 ROP diagnosis by the analysis of vessel tortuosity

One of the main indicators for the diagnosis of ROP is the detection of Plus disease. Studies from the medical literature indicate that high tortuosity in at least two quadrants of a given retinal fundus image is an indication of the presence of Plus disease [13]. We compute the number of connected components in the U-COSFIRE response map as a measure of the vessel tortuosity severity in a given retinal image.

The recorded count of connected components is then used to assess the presence of Plus disease: a high value indicates abnormal tortuosity in the image. We designed this decision rule under the strict supervision of ROP-expert ophthalmologists and kept it simple and clear, which makes it interpretable by humans.

4 Experiments

4.1 Data Set

We assessed the effectiveness of the proposed methodology on a new data set of 289 retinal fundus images that we acquired from KIDROP [19], the largest tele-medicine network across the globe, which aims to eradicate ROP-related infant blindness. All images are disc-centred retinal images, in which the optic disc (OD) lies in the image centre. The images were captured using a \(RetCam3^{TM}\) camera with a resolution of \(1600 \times 1200\) pixels. Of the 289 images, 10 are used solely for the configuration of the parameters and are not used for performance analysis. The images used for parameter configuration were randomly selected and contain an equal number of images of healthy and unhealthy retinas. Of the remaining 279 images (validation set), 84 are labelled as showing signs of Plus disease, while the rest are marked as healthy (see Table 1 for the data set details). The infant retinal images were labelled independently by three clinical experts with experience in ROP detection. The label of each image was assigned by majority voting, and we consider it as the ground truth.

4.2 Evaluation

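For the performance evaluation of the proposed pipeline, we computed the specificity (Sp), sensitivity (Se), precision (Pr) and F-measure (F):
\[Sp = \frac{TN}{TN+FP}, \quad Se = \frac{TP}{TP+FN}, \quad Pr = \frac{TP}{TP+FP}, \quad F = \frac{2 \cdot Pr \cdot Se}{Pr + Se}\]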

where TP, FP, TN, and FN stand for true positives, false positives, true negatives and false negatives, respectively. We count a TP when an image labelled as showing ROP is correctly classified, and an FN when it is incorrectly classified. We consider an image of a healthy retina as TN if it is correctly classified and as FP if it is classified as containing signs of ROP.
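Using the standard definitions of these scores, all four follow directly from the confusion-matrix counts. The Python sketch below (the function name is our own) uses made-up counts, 8 TP, 2 FP, 18 TN and 2 FN, not the results reported in this paper, and assumes that all denominators are nonzero.

```python
def screening_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, precision and F-measure from confusion-matrix counts."""
    se = tp / (tp + fn)          # fraction of ROP images detected
    sp = tn / (tn + fp)          # fraction of healthy images correctly rejected
    pr = tp / (tp + fp)          # fraction of positive decisions that are correct
    f = 2 * pr * se / (pr + se)  # harmonic mean of precision and sensitivity
    return {"Se": se, "Sp": sp, "Pr": pr, "F": f}

print(screening_metrics(tp=8, fp=2, tn=18, fn=2))
# Se = 0.8, Sp = 0.9, Pr = 0.8, F = 0.8 (up to floating-point rounding)
```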

We also evaluated the overall performance of the proposed pipeline by computing the receiver operating characteristic (ROC) curve and the area under it (AUC). We constructed the ROC curve by varying the threshold \(t^\star \) on the number of connected components corresponding to tortuous points, which we use to evaluate the presence of Plus disease and diagnose ROP.

In addition to the above performance metrics, we also computed the Matthews correlation coefficient (MCC) and Cohen's kappa coefficient (\(\kappa \)), which are suitable for evaluating binary classification methods when the classes are unbalanced. The MCC is formally defined as:
\[MCC = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}}\]

Its values range between \(-1\) and \(+1\), where \(+1\) indicates a perfect prediction, 0 indicates a random prediction, and \(-1\) corresponds to completely opposite predictions. The kappa coefficient \((\kappa )\) also accounts for class imbalance and is defined as:
\[\kappa = \frac{p_\mathrm{obs} - p_\mathrm{exp}}{1 - p_\mathrm{exp}}\]

where \({p_\mathrm{obs}}\) is the observed agreement and \(p_\mathrm{exp}\) is the expected agreement. The \(\kappa \) values vary between \(-1\) and \(+1\), with values less than or equal to 0 indicating no agreement, and values greater than 0 indicating the degree of agreement with a maximum of 1 (perfect agreement) [9, 29].
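For a binary confusion matrix, both coefficients can be computed directly from the four counts, as in the Python sketch below (function names are our own). The expected agreement \(p_\mathrm{exp}\) is obtained from the marginals of the predicted and true labels. The example counts (8 TP, 2 FP, 18 TN, 2 FN) are hypothetical.

```python
import math

def mcc(tp, fp, tn, fn):
    """Matthews correlation coefficient; 0.0 when a marginal is empty."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def cohen_kappa(tp, fp, tn, fn):
    """Cohen's kappa for a binary confusion matrix."""
    n = tp + fp + tn + fn
    p_obs = (tp + tn) / n
    # Chance agreement: predicted-positive x actual-positive plus
    # predicted-negative x actual-negative, over n squared.
    p_exp = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    return (p_obs - p_exp) / (1 - p_exp)

print(mcc(8, 2, 18, 2), cohen_kappa(8, 2, 18, 2))   # both about 0.7
```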

4.3 Parameter configuration

The values of the COSFIRE filter parameters are to be set according to the characteristics of the pattern of interest. For instance, the parameters \({\hat{\sigma }}\) and \({\tilde{\sigma }}\) control the selectivity to vessels of a certain thickness and have to be tuned according to the vessels in the images at hand [47]. The relationship between the thickness \(\tau \) (in pixels) of a line and the standard deviation \(\sigma \) of the outer Gaussian function of the DoG function that gives the maximum response is defined as \(\tau =1.92\sigma \) [24].

Example of single- (a–c) and multi-scale (d) B-COSFIRE filter response maps obtained for the input image shown in Fig. 2a. The parameters of the single-scale filters are (a) \({\hat{\sigma }} = 1.4\), \( {\tilde{\sigma }} = 0.6\); (b) \({\hat{\sigma }} = 2.2\), \( {\tilde{\sigma }} = 1.4\); (c) \({\hat{\sigma }} = 3.4\), \( {\tilde{\sigma }} = 2.4\); while those of the multi-scale filter (d) are \(\hat{\underline{{\varvec{\sigma }}}}\) = {1.4, 2.2, 3.4}, \( \tilde{\underline{{\varvec{\sigma }}}}\) = {0.6, 1.4, 2.4}. All images are inverted for clearer visualization

In Fig. 6, we show the intermediate responses of several B-COSFIRE filters to the input image shown in Fig. 2a. Multi-scale filtering (Fig. 6d) increases the robustness to noise and improves the detection of vessels with respect to single-scale B-COSFIRE filters (Fig. 6a–c). It also makes the proposed approach more robust to artifacts in the background, which reduces false detections of blood vessels.

We consider the vectors \(\hat{\underline{{\varvec{\sigma }}}} = \{1.4, 2.2, 3.4\}\) and \(\tilde{\underline{{\varvec{\sigma }}}} = \{0.6, 1.4, 2.4\}\) for the symmetric and asymmetric filters, respectively. We selected these values by visually inspecting the COSFIRE response maps of the training images. In Table 2, we report the configuration parameters of both symmetric and asymmetric B-COSFIRE filters for the KIDROP data set.

As to the configuration of the U-COSFIRE filter, we determine its parameters empirically on the 10 training images. We use a synthetic prototype U-shape pattern of size \(19 \times 19\) pixels (see Fig. 4) and set \(\sigma =0.52\) and \(\rho =8\). We experimented with different prototype sizes and configuration parameters, and found that a prototype of \(19 \times 19\) pixels is well suited to the data set at hand. In the rightmost column of Table 2, we report the configuration parameters of the U-COSFIRE filter that is best suited to detect the tortuous vessel segments in the images of the KIDROP data set.

We determined the configuration of the B-COSFIRE and U-COSFIRE parameters in close collaboration with ROP experts, who helped us evaluate the quality of the response maps computed in the intermediate steps of the proposed method. We coupled this knowledge-driven evaluation with a quantitative analysis of the overall performance on the training set of images. In Sect. 5, we report the results of the sensitivity analysis of the parameters involved in our approach.

4.4 Results

In this section, we present the results that we achieved on the KIDROP data set and compare them with a state-of-the-art method for ROP diagnosis proposed by Oloumi et al. [33], which relies on an angle-based measure of blood vessel tortuosity. That method requires a semi-automatic procedure for the removal of the optic disc, which the method that we propose does not need. We also provide details about the implementation of the method proposed by Oloumi et al. [33] and the results that it obtained.

4.4.1 ROP detection using U-COSFIRE filters

We achieved a sensitivity of 0.98 and a specificity of 0.97, which correspond to an F-score of 0.97, with the proposed diagnostic method, which counts the connected components of the U-COSFIRE filter response map to estimate vessel tortuosity. In Fig. 7, we show examples of the evaluation performed on two images with and without Plus disease (Fig. 7a, g, respectively). The number of connected components detected in the response map of the U-COSFIRE tortuosity detector is 123 for the retinal image with ROP (Fig. 7e) and 10 for the healthy image (Fig. 7k). This example illustrates how the proposed U-COSFIRE filter extracts information that is useful for evaluating the tortuosity of blood vessels and the presence of Plus disease for ROP detection in retinal images.

Responses obtained for two sample input images, N18 and N28 of the KIDROP data set. The top two rows show the responses obtained for the image N18 with signs of Plus disease, and the bottom two rows show the output for the healthy image N28. (a, g) Input images, (b, h) multi-scale B-COSFIRE magnitude response maps obtained after pre-processing the green channel of the RGB input images, (c, i) binarized images, (d, j) skeletons obtained from the binary images, (e, k) U-COSFIRE magnitude response maps, and (f, l) connected components labelled with different colours superimposed on the green channel of the input images. The connected components are encircled for better clarity

To analyse the overall performance of the proposed methodology and construct the ROC curve, we proceed as follows:

1. set the threshold \(t^\star = 1\);
2. classify as positive (i.e. with ROP) the images whose number of connected components is greater than \(t^\star \), and as negative (i.e. without ROP) the other images;
3. compute TP, FP, TN and FN, and from them the metrics \(\mathrm{Pr} = \frac{TP}{TP+FP}\), \(\mathrm{Se} = \frac{TP}{TP+FN}\), \(\mathrm{Sp} = \frac{TN}{TN+FP}\) and \(F = \frac{2\,\mathrm{Pr}\cdot \mathrm{Se}}{\mathrm{Pr}+\mathrm{Se}}\);
4. set \(t^\star = t^\star + 1\) and repeat steps 2 to 4 until \(t^\star \) equals the maximum number of connected components found in any image of the data set.
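The threshold sweep in steps 1 to 4 can be sketched as follows. This is an illustrative implementation, not the authors' code: it takes the per-image connected-component counts and ground-truth labels as plain lists, also computes the specificity needed for the ROC curve, and estimates the AUC with the trapezoidal rule after adding the (0, 0) and (1, 1) end points. The trapezoidal AUC is our assumption; the section does not state how the reported AUC was integrated.

```python
def roc_sweep(counts, labels):
    """Sweep t* = 1, 2, ..., max(counts) and return the metrics at each threshold.

    counts: number of connected components per image
    labels: True for images with ROP, False for healthy ones
    """
    results = []
    for t in range(1, max(counts) + 1):
        pred = [c > t for c in counts]  # step 2: positive if count > t*
        tp = sum(p and y for p, y in zip(pred, labels))
        fp = sum(p and not y for p, y in zip(pred, labels))
        fn = sum(not p and y for p, y in zip(pred, labels))
        tn = sum(not p and not y for p, y in zip(pred, labels))
        se = tp / (tp + fn) if tp + fn else 0.0
        sp = tn / (tn + fp) if tn + fp else 0.0
        pr = tp / (tp + fp) if tp + fp else 0.0
        f = 2 * pr * se / (pr + se) if pr + se else 0.0
        results.append({"t": t, "Se": se, "Sp": sp, "Pr": pr, "F": f})
    return results


def auc_trapezoid(results):
    """Trapezoidal area under the ROC curve (Se versus 1 - Sp)."""
    points = sorted({(0.0, 0.0), (1.0, 1.0)}
                    | {(1 - r["Sp"], r["Se"]) for r in results})
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

The operating point used for MCC and \(\kappa \) can then be chosen as `max(results, key=lambda r: r["F"])`.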

In Fig. 8, we depict the ROC curve that we obtained. The black dots represent the performance (Se and Sp) achieved at each value of the threshold \(t^\star \), while the solid black line is their interpolation, shown for visualization purposes. We achieved \(AUC=0.9945\), which indicates that the proposed methodology separates retinal images with and without ROP very reliably. For the threshold \(t^\star \) that yields the best F-score, the MCC and \(\kappa \) values are 0.95 and 0.94, respectively.
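For reference, the Matthews correlation coefficient and Cohen's kappa [9, 29] can be computed from the four entries of the confusion matrix at the selected threshold. The sketch below uses the standard definitions; the function names and the argument order (TP, FP, FN, TN) are our own choices.

```python
import math


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def cohen_kappa(tp, fp, fn, tn):
    """Cohen's kappa between the predicted and the true labels."""
    n = tp + fp + fn + tn
    p_o = (tp + tn) / n  # observed agreement
    # agreement expected by chance, from the row and column marginals
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    return (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0
```

Both measures equal 1 for a perfect classifier and 0 for one that agrees with the ground truth only at chance level, which makes them less sensitive than accuracy to the imbalance between the 84 ROP and 195 healthy test images.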

We also investigate alternative measurements computed from the U-COSFIRE response maps that could help discriminate between retinal images of patients with and without ROP. We compute the number of pixels with nonzero value in the response map (NZ), the sparse density (SD), the number of local maximum points (LM) and the entropy (E). In Fig. 9, we plot the values of these features computed on retinal images of healthy patients (blue lines) and of patients affected by ROP (red lines). In all cases, the considered features separate healthy and unhealthy cases well, which is partly due to the robustness of U-COSFIRE processing in detecting high-curvature vessel points. Although all measurements give satisfactory results, we use the number of connected components in the proposed methodology for ROP diagnosis, as it yields the most robust and the highest performance. We report and discuss these results in Sect. 5.
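The following sketch shows one possible way to compute three of these features from a response map. The exact definitions are not given in this section, so we assume that LM counts positive pixels that are not smaller than any of their 8 neighbours, and that E is the Shannon entropy (in bits) of a 256-bin histogram of the nonzero responses. SD is omitted because its definition is not given here. All names are hypothetical.

```python
import numpy as np


def response_features(response: np.ndarray, bins: int = 256) -> dict:
    """Hypothetical NZ, LM and E features of a U-COSFIRE response map."""
    r = np.asarray(response, dtype=float)
    nz = int(np.count_nonzero(r))  # NZ: number of nonzero pixels

    # LM: positive pixels that are >= all of their 8 neighbours (assumed definition)
    padded = np.pad(r, 1, mode="constant", constant_values=-np.inf)
    h, w = r.shape
    is_max = r > 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            neighbour = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
            is_max &= r >= neighbour
    lm = int(is_max.sum())

    # E: Shannon entropy (bits) of the histogram of positive responses (assumed definition)
    values = r[r > 0]
    if values.size == 0:
        entropy = 0.0
    else:
        hist, _ = np.histogram(values, bins=bins)
        p = hist[hist > 0] / values.size
        entropy = float(-(p * np.log2(p)).sum())
    return {"NZ": nz, "LM": lm, "E": entropy}
```

Under these definitions, tortuous vessels produce more scattered high-curvature responses and thus larger NZ, LM and E values, consistent with the separation shown in Fig. 9.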

4.4.2 ROP detection using Oloumi et al.'s [33] angle-based method

We briefly describe the angle-based vessel tortuosity evaluation method proposed by Oloumi et al. [33] for the diagnosis of Plus disease and ROP detection. We implemented this method and applied it to the vessel map detected by the B-COSFIRE filter employed in this work, so that its vessel tortuosity estimation can be compared directly with that of the proposed U-COSFIRE filters.

In addition to the response \(R_{M, (\hat{\underline{{\varvec{\sigma }}}}, \tilde{\underline{{\varvec{\sigma }}}}) }(x,y)\) of a rotation-tolerant multi-scale B-COSFIRE filter for vessel segmentation, we also compute the orientation map \(O_{M, (\hat{\underline{{\varvec{\sigma }}}}, \tilde{\underline{{\varvec{\sigma }}}}) }(x,y)\), which contains, at each pixel location (x, y), the orientation \(\phi \) (in radians) at which the B-COSFIRE filter achieves the highest response.
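As an illustration, assume that the B-COSFIRE responses have already been computed for a discrete set of preferred orientations and stacked into an array of shape (number of orientations, H, W); this layout is hypothetical. The magnitude map is then the maximum over the orientations, and the orientation map stores the angle \(\phi \) of the winning filter:

```python
import numpy as np


def orientation_map(oriented_responses: np.ndarray, orientations: np.ndarray):
    """Return (magnitude, orientation) maps from a stack of oriented responses.

    oriented_responses: shape (n_orientations, H, W), one response per angle phi
    orientations: the n_orientations angles phi, in radians
    """
    best = np.argmax(oriented_responses, axis=0)  # index of the strongest orientation
    magnitude = np.take_along_axis(oriented_responses, best[None], axis=0)[0]
    return magnitude, orientations[best]
```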

The method proposed by Oloumi et al. [33] for vessel tortuosity quantification requires the removal of the optic disc (OD), which we perform by removing an elliptic region of size \(48 \times 40\) pixels whose centre point is indicated by the user. We then apply the thresholding and thinning operations described in Sect. 3. Skeleton pixels that have three or more neighbouring skeleton pixels are extracted to identify branching points, which are then enlarged by morphological dilation. The image with the dilated branching points is XOR-ed with the original skeleton image so that the branching points are removed and only the individual vessel segments are used for the evaluation of tortuosity.
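The preprocessing above can be sketched with plain NumPy on a binary skeleton. This is a minimal illustration under stated assumptions: the \(48 \times 40\) ellipse is taken to have horizontal and vertical semi-axes of 24 and 20 pixels, the dilation uses a \(3 \times 3\) square element, and, instead of a literal XOR, we keep only the skeleton pixels outside the dilated branching regions (an AND-NOT), so that dilated pixels lying off the skeleton are not added back. The function names are hypothetical.

```python
import numpy as np


def neighbour_count(skel: np.ndarray) -> np.ndarray:
    """Number of 8-connected skeleton neighbours of every pixel."""
    padded = np.pad(skel.astype(int), 1)
    h, w = skel.shape
    total = np.zeros((h, w), dtype=int)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                total += padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    return total


def dilate(mask: np.ndarray, radius: int = 1) -> np.ndarray:
    """Binary dilation with a (2*radius+1) x (2*radius+1) square element."""
    padded = np.pad(mask, radius)
    h, w = mask.shape
    out = np.zeros_like(mask, dtype=bool)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out |= padded[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
    return out


def split_into_segments(skel, od_centre, od_axes=(24, 20), radius=1):
    """Remove the elliptic OD region and the dilated branching points."""
    skel = skel.astype(bool).copy()
    yy, xx = np.mgrid[:skel.shape[0], :skel.shape[1]]
    cy, cx = od_centre            # user-indicated OD centre (row, column)
    a, b = od_axes                # assumed horizontal and vertical semi-axes
    skel[((xx - cx) / a) ** 2 + ((yy - cy) / b) ** 2 <= 1] = False
    branch = skel & (neighbour_count(skel) >= 3)  # three or more neighbours
    return skel & ~dilate(branch, radius)
```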

The median absolute deviation (MAD) [37] is computed at each location along the thinned vessel segments to estimate the linearity of every pixel: pixel locations with a MAD value of 0 belong to linear structures, while the others belong to curved structures. Next, the locations with nonzero MAD values are used to compute the local tortuosity index (LTI) [32].
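To make the linearity test concrete, the sketch below assumes, as the description suggests, that the MAD is computed over the orientation values (for instance, read from the B-COSFIRE orientation map) within a small window along an ordered vessel segment. The window half-size of 2 pixels is a hypothetical choice, and the \(\pi \)-periodicity of orientations is ignored for simplicity.

```python
import statistics


def mad(values):
    """Median absolute deviation: median(|x - median(x)|)."""
    m = statistics.median(values)
    return statistics.median([abs(v - m) for v in values])


def nonlinear_mask(orientations, half_window=2):
    """Flag the pixels of an ordered segment whose local orientation MAD is nonzero.

    orientations: orientation (radians) at consecutive pixels of a thinned segment
    half_window: hypothetical neighbourhood half-size along the segment
    """
    n = len(orientations)
    flags = []
    for i in range(n):
        window = orientations[max(0, i - half_window):min(n, i + half_window + 1)]
        flags.append(mad(window) != 0)  # MAD == 0 -> linear, otherwise curved
    return flags
```

Because the B-COSFIRE orientations are sampled at discrete angles, a straight vessel yields identical orientation values in every window and hence a MAD of exactly 0.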

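Finally, the average tortuosity \(\mu _{s_i}\) of every nonlinear segment \(s_i\) with P pixels is computed as the mean of the LTI values of its pixels:

\[\mu _{s_i} = \frac{1}{P}\sum _{p=1}^{P} \mathrm{LTI}(x_p, y_p),\]

where \((x_p, y_p)\) denotes the location of the p-th pixel of \(s_i\).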

Based on its average tortuosity \(\mu _{s_i}\), each segment is marked as either abnormally tortuous or normal by comparison with a threshold. We set this threshold to 0.01 by comparing the tortuosity measures of the vessels that an ophthalmologist marked as normal or tortuous in the training images. Then, we compute the length of each tortuous segment following [11]: we add 1 for every pair of vertically or horizontally adjacent pixels and \(\sqrt{2}\) for every pair of diagonally adjacent pixels.
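The averaging, thresholding and length computation can be sketched as follows. The per-pixel LTI values are taken as input, since the LTI formula of [32] is not reproduced here; each segment is assumed to be an ordered list of (row, column) pixels; and a segment is labelled tortuous when its mean LTI exceeds 0.01 (whether the comparison is strict is our assumption). The function names are hypothetical.

```python
import math


def segment_length(pixels):
    """Length of an ordered 8-connected pixel chain:
    +1 per horizontal/vertical step, +sqrt(2) per diagonal step."""
    length = 0.0
    for (y0, x0), (y1, x1) in zip(pixels, pixels[1:]):
        diagonal = abs(y1 - y0) == 1 and abs(x1 - x0) == 1
        length += math.sqrt(2) if diagonal else 1.0
    return length


def tortuous_length(segments, lti_values, threshold=0.01):
    """Total length of the segments whose mean LTI exceeds `threshold`.

    segments: list of ordered pixel chains [(row, col), ...]
    lti_values: per-pixel LTI values of each segment (computed as in [32])
    """
    total = 0.0
    for pixels, lti in zip(segments, lti_values):
        mu = sum(lti) / len(lti)  # average tortuosity of the segment
        if mu > threshold:        # abnormally tortuous
            total += segment_length(pixels)
    return total
```

In practice, this total would be accumulated separately for each of the four quadrants of the image.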

As recommended by the International Classification of ROP (ICROP) [13], high tortuosity in at least two quadrants is an indication of Plus disease. We compute the total length of the abnormally tortuous vessel segments in each of the four quadrants of a retinal image. Using minimum tortuous vessel length thresholds \(L_\mathrm{min}\) determined from the training images, Plus disease is diagnosed if:

- the total tortuous length in one quadrant is at least \(L_\mathrm{min} = 350\) pixels, or
- the total tortuous length in each of at least two quadrants is at least \(L_\mathrm{min} = 175\) pixels.
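The decision rule above can be written as a short function of the four quadrant totals; the function name and default arguments are ours, with the two thresholds taken from the text:

```python
def plus_disease(quadrant_lengths, single=350, pair=175):
    """Plus disease if one quadrant has >= `single` pixels of tortuous vessels,
    or at least two quadrants each have >= `pair` pixels."""
    if len(quadrant_lengths) != 4:
        raise ValueError("expected one total tortuous length per quadrant")
    if any(length >= single for length in quadrant_lengths):
        return True
    return sum(length >= pair for length in quadrant_lengths) >= 2
```

Applied to the quadrant lengths reported for the two images in Fig. 10 (239, 180, 330 and 297 pixels versus 45, 2, 61 and 57 pixels), the rule returns a positive decision for the first image, because all four quadrants exceed 175 pixels, and a negative one for the second.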

The method of Oloumi et al. [33] achieved a specificity of 0.93 and a sensitivity of 0.90, correctly detecting Plus disease, and hence ROP, in 76 cases. More specifically, it correctly classified 260 of the 279 images of the KIDROP test set (\(TP = 76\) and \(TN = 184\)); of the misclassified images, 11 are FP and 8 are FN. In Fig. 10, we show examples of the results achieved by the method of Oloumi et al. [33] on two images of patients with and without Plus disease. The total lengths of the tortuous vessel segments in the four quadrants of the image with signs of Plus disease (Fig. 10a) are 239, 180, 330 and 297 pixels (red segments in Fig. 10c). The corresponding values for the image of the healthy patient are 45, 2, 61 and 57 pixels.

Fig. 10 Response maps obtained for the images shown in Fig. 7: (a, d) binarized response maps of the input images obtained after OD removal, (b, e) locations with nonzero MAD values, shown as black pixels (the images are inverted for better clarity), (c, f) colour-coded tortuous vessel segments, where green segments represent linear structures and red segments represent curved ones. The top row corresponds to the image N18 with ROP, and the bottom row to the image N28 with a healthy retina.

5 Results comparison and discussion

We compared the results of the proposed U-COSFIRE filters for vessel tortuosity estimation and diagnosis of ROP in retinal images with those obtained by the method of Oloumi et al. [33]. We evaluated both tortuosity analysis algorithms on the same vessel segmentation map, namely the one provided by the B-COSFIRE filter that we extended with multi-scale processing.

Furthermore, we included other approaches for the quantification of vessel tortuosity in the response map of the proposed U-COSFIRE filters. Given the response map of the U-COSFIRE filter, we computed the number of pixels with nonzero value (NZ), sparse density (SD), the number of local maximum points (LM) and the entropy (E).

In Table 3, we report the results of the considered methods. The method that we propose (U-COSFIRE+CC), which decides on the presence of Plus disease, and thus diagnoses ROP, by counting the connected components of the U-COSFIRE filter response map, achieved the highest F-score and sensitivity, higher than those of the method of Oloumi et al. [33] on the KIDROP data set. For each of the methods based on U-COSFIRE filters, we also computed the MCC and \(\kappa \) values at the threshold \(t^\star \) that gives the highest average F-score on the data set. These values, summarized in Table 3, confirm the effectiveness of the proposed methodology for ROP detection.

The results of the local maxima analysis of the U-COSFIRE magnitude response map (U-COSFIRE+LM) are comparable with those achieved by counting the connected components (U-COSFIRE+CC). Hence, the LM feature can be used as an additional tool for ROP screening. The other features, namely NZ, SD and E, achieve promising results but fall short of the best performance.

It is worth pointing out that the classification errors made by our method are all false positive detections, caused by particular artefacts in the images (Fig. 11). The proposed approach did not produce any false negatives, which is desirable in medical practice, since a missed diagnosis puts the health of the patient at risk. Among the causes of false positive detections, we observed that thin choroidal vessels in the background (Fig. 11a) may also be segmented, resulting in the false detection of blood vessels and a consequently erroneous estimation of vessel curvature. Tessellation, a condition of the retina caused by reduced pigmentation of the retinal pigment epithelium, may also lead to false detection of blood vessels (Fig. 11b). Occlusion of blood vessels (Fig. 11c), termed the small pupil artefact, is another main cause of misclassification: the occluded vessels are not visible, which results in poor segmentation. Finally, the foveal and peri-foveal reflexes (Fig. 11d) present in infant fundus images may also lead to misclassification.

We performed a sensitivity analysis to study the effect of each of the parameters \(\sigma \), \(\sigma _0\), and \(\alpha \), changing the value of one parameter at a time while keeping the other two fixed. Each parameter was incremented or decremented from its optimal value (\(\sigma =0.52\), \(\sigma _{0}=0.8\), \(\alpha =0.5\)) in steps of 0.1. For each experiment, we computed the average MCC on the training set of 10 images. As threshold \(t^*\), we used the maximum number of connected components obtained for the normal images of the training set with the optimal parameters. In Table 4, we report the outcomes of these experiments. The performance is least sensitive to \(\sigma \), followed by \(\sigma _{0}\) and \(\alpha \). The low sensitivity to \(\sigma \) is explained by the fact that the input to the U-COSFIRE filter is the thinned response map obtained after the multi-scale B-COSFIRE filtering process.
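The one-at-a-time procedure can be sketched generically. Here `evaluate` is a hypothetical callable that, given a parameter dictionary, runs the pipeline on the training images and returns the average MCC; the number of steps on each side of the optimum (`n_steps`) is also an assumption, since the text only specifies the step size of 0.1.

```python
def one_at_a_time(evaluate, optimum, step=0.1, n_steps=2):
    """Vary one parameter at a time around `optimum`, keeping the others fixed.

    evaluate: hypothetical callable mapping a parameter dict to a score (e.g. MCC)
    optimum: optimal values, e.g. {"sigma": 0.52, "sigma0": 0.8, "alpha": 0.5}
    Returns {parameter name: [(value, score), ...]}.
    """
    report = {}
    for name, best in optimum.items():
        rows = []
        for k in range(-n_steps, n_steps + 1):
            params = dict(optimum)  # the other parameters stay at their optimum
            params[name] = round(best + k * step, 10)  # avoid float drift in the grid
            rows.append((params[name], evaluate(params)))
        report[name] = rows
    return report
```

A parameter whose rows show a nearly constant score is one to which the method is insensitive, which is the behaviour reported above for \(\sigma \).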

Notably, there are no public benchmark data sets of retinal fundus images for the evaluation of ROP detection methods. For discussion purposes, however, in Table 5 we include the results reported in existing works, which were obtained on different proprietary data sets. Only the results reported in the last two rows can be compared directly. In some studies, tortuosity is evaluated on manually selected blood vessels restricted to a pre-defined area around the OD.

Heneghan et al. [16] and Kiely et al. [21] used the tortuosity and width of blood vessels for ROP assessment, with length-to-chord measures for tortuosity evaluation. The results reported in [12] and [23] were based on the tortuosity of arterioles alone, measured with an angle-based approach. Wallace et al. [50] and Oloumi et al. [31] proposed similar approaches that differ in how they define tortuosity: the former used length-to-chord measures, while the latter used an angle-based approach that relies on a local tortuosity index. In Table 5, we include only the works that reported the achieved sensitivity and specificity for ROP classification. Although a direct comparison of the results is not possible, it is worth pointing out that our study uses the largest data set reported in the literature so far. Furthermore, all existing methods involve OD removal, often performed semi-automatically with manual input from the user (Fig. 10a, d), which is not required by our proposed method based on U-COSFIRE filters and connected component analysis (Fig. 7c, i). The method that we propose is fully automatic and is therefore more suitable for use in a mass screening programme.

A strength of the proposed method is that its decision process for diagnosing ROP in an image is explainable. This is a direct consequence of designing it in close collaboration with medical doctors and experts in ROP, with the aim of deploying the proposed approach in medical studies on a large number of patients within the KIDROP programme in Bangalore. Further work on the proposed method can be directed towards optimizing its parameters during medical trials and mass screening operations. Reinforcement learning strategies [26, 51] or online optimization techniques [18], such as those based on bandit optimization [22], can be explored to cope with distribution shifts in the characteristics of images taken from different population groups, or to limit diagnostic errors by incorporating medical feedback. In the retinal images of patients with ROP, one can observe high arterial tortuosity and venous dilation. Future developments of the present work could also include the classification of the extracted blood vessels into arteries and veins, which would allow a focused analysis of arteriolar vessels to diagnose ROP more robustly. In addition to tortuosity, it would be worth exploring the impact of blood vessel width on ROP diagnosis.

6 Conclusion

We propose an effective methodology for ROP diagnosis in retinal fundus images and achieve high performance on a new data set that we collected in collaboration with the KIDROP programme. The method that we put forward is based on a novel U-COSFIRE filter for the detection of high-tortuosity vessel points in retinal images, followed by tortuosity quantification via connected component analysis. The contributions of this work are threefold: (a) a method for the detection of tortuous vessels based on the novel U-COSFIRE filters, (b) the extension of the B-COSFIRE filters with a multi-scale processing framework and (c) a novel fully automated and explainable pipeline for ROP diagnosis, which is based on vessel segmentation followed by U-COSFIRE filtering and connected component analysis for the quantification of vessel tortuosity.

We achieved better results (\(\hbox {Se}=0.98\), \(\hbox {Sp}=0.97\), \(F=0.97\)) than those obtained by a state-of-the-art tortuosity quantification method proposed by Oloumi et al. [31, 33] (\(\hbox {Se}=0.90\), \(\hbox {Sp}=0.93\), \(F=0.88\)) on the KIDROP data set. These results demonstrate the effectiveness of the proposed methodology, which is now undergoing medical trials for deployment in large-scale diagnostics.

Notes

Image files named T1–T10 of the KIDROP data set were used as training images.

References

Abràmoff MD, Garvin MK, Sonka M (2010) Retinal imaging and image analysis. IEEE Rev Biomed Eng 3:169–208

Al-Rawi M, Qutaishat M, Arrar M (2007) An improved matched filter for blood vessel detection of digital retinal images. Comput Biol Med 37(2):262–267

Azzopardi G, Petkov N (2012) Trainable COSFIRE filters for keypoint detection and pattern recognition. IEEE Trans Pattern Anal Mach Intell 35(2):490–503

Azzopardi G, Strisciuglio N, Vento M, Petkov N (2015) Trainable COSFIRE filters for vessel delineation with application to retinal images. Med Image Anal 19(1):46–57

Azzopardi G, Petkov N (2012) A CORF computational model of a simple cell that relies on LGN input outperforms the Gabor function model. Biol Cybern pp 1–13

Blencowe H, Lawn JE, Vazquez T, Fielder A, Gilbert C (2013) Preterm-associated visual impairment and estimates of retinopathy of prematurity at regional and global levels for 2010. Pediatr Res 74(S1):35–49

Chaudhuri S, Chatterjee S, Katz N, Nelson M, Goldbaum M (1989) Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans Med Imaging 8(3):263–269

Chutatape O, Zheng L, Krishnan SM (1998) Retinal blood vessel detection and tracking by matched Gaussian and Kalman filters. In: Proceedings of the 20th annual international conference of the IEEE engineering in medicine and biology society, 1998, vol 6, pp 3144–3149. IEEE

Cohen J (1960) A coefficient of agreement for nominal scales. Educ Psychol Measur 20(1):37–46

Fraz MM, Remagnino P, Hoppe A, Uyyanonvara B, Rudnicka AR, Owen CG, Barman SA (2012) Blood vessel segmentation methodologies in retinal images-a survey. Comput Methods Programs Biomed 108(1):407–433

Freeman H (1961) On the encoding of arbitrary geometric configurations. IRE Trans Electron Comput 2:260–268

Gelman R, Martinez-Perez ME, Vanderveen DK, Moskowitz A, Fulton AB (2005) Diagnosis of plus disease in retinopathy of prematurity using retinal image multiscale analysis. Invest Ophthalmol Vis Sci 46(12):4734–4738

Gole GA, Ells AL, Katz X, Holmstrom G, Fielder AR, Capone A Jr, Flynn JT, Good WG, Holmes JM, McNamara J et al (2005) The international classification of retinopathy of prematurity revisited. JAMA Ophthalmol 123(7):991–999

Grisan E, Foracchia M, Ruggeri A (2008) A novel method for the automatic grading of retinal vessel tortuosity. IEEE Trans Med Imaging 27(3):310–319

Gschließer A, Stifter E, Neumayer T, Moser E, Papp A, Pircher N, Dorner G, Egger S, Vukojevic N, Oberacher-Velten I et al (2015) Inter-expert and intra-expert agreement on the diagnosis and treatment of retinopathy of prematurity. Am J Ophthalmol 160(3):553–560

Heneghan C, Flynn J, O’Keefe M, Cahill M (2002) Characterization of changes in blood vessel width and tortuosity in retinopathy of prematurity using image analysis. Med Image Anal 6(4):407–429

Hoover A, Kouznetsova V, Goldbaum M (2000) Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imaging 19(3):203–210

Kar P, Li S, Narasimhan H, Chawla S, Sebastiani F (2016) Online optimization methods for the quantification problem. In: Proceedings of the 22nd ACM SIGKDD. https://doi.org/10.1145/2939672.2939832

Karnataka internet assisted diagnosis of retinopathy of prematurity. http://kidrop.org/

Kharghanian R, Ahmadyfard A (2012) Retinal blood vessel segmentation using Gabor wavelet and line operator. Int J Mach Learn Comput 2(5):593

Kiely AE, Wallace DK, Freedman SF, Zhao Z (2010) Computer-assisted measurement of retinal vascular width and tortuosity in retinopathy of prematurity. Arch Ophthalmol 128(7):847–852

Korda N, Szörényi B, Li S (2016) Distributed clustering of linear bandits in peer to peer networks. In: Proceedings of the 33rd international conference on international conference on machine learning—vol 48, ICML’16, pp 1301–1309

Koreen S, Gelman R, Martinez-Perez ME, Jiang L, Berrocal AM, Hess DJ, Flynn JT, Chiang MF (2007) Evaluation of a computer-based system for plus disease diagnosis in retinopathy of prematurity. Ophthalmology 114(12):e59–e67

Kruizinga P, Petkov N (2000) Computational model of dot-pattern selective cells. Biol Cybern 83(4):313–325

Li Q, You J, Zhang D (2012) Vessel segmentation and width estimation in retinal images using multiscale production of matched filter responses. Expert Syst Appl 39(9):7600–7610

Liu S, Ngiam KY, Feng M (2019) Deep reinforcement learning for clinical decision support: a brief survey. arXiv preprint arXiv:1907.09475

Lotmar W, Freiburghaus A, Bracher D (1979) Measurement of vessel tortuosity on fundus photographs. Graefe’s Arch Clin Exp Ophthalmol 211(1):49–57

Makkapati VV, Ravi VVC (2015) Computation of tortuosity of two dimensional vessels. In: Eighth International conference on advances in pattern recognition (ICAPR), 2015, pp 1–4. IEEE

McHugh ML (2012) Interrater reliability: the kappa statistic. Biochem Medica 22(3):276–282

Mendonca AM, Campilho A (2006) Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imaging 25(9):1200–1213

Oloumi F, Rangayyan RM, Ells AL (2016) Computer-aided diagnosis of retinopathy in retinal fundus images of preterm infants via quantification of vascular tortuosity. J Med Imaging 3(4):044505

Oloumi F, Rangayyan RM, Ells AL (2014) Assessment of vessel tortuosity in retinal images of preterm infants. In: 36th annual international conference of the IEEE engineering in medicine and biology society (EMBC), 2014, pp 5410–5413. IEEE

Oloumi F, Rangayyan RM, Ells AL (2015) Computer-aided diagnosis of plus disease in retinal fundus images of preterm infants via measurement of vessel tortuosity. In: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 4338–4342. IEEE

Osareh A, Shadgar B (2009) Automatic blood vessel segmentation in color images of retina. Iran J Sci Technol 33(B2):191

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

Petkov N, Visser WT (2005) Modifications of center-surround, spot detection and dot-pattern selective operators. Tech. Rep. CS 2005-9-01, Institute of Mathematics and Computing Science, University of Groningen, The Netherlands

Pham-Gia T, Hung T (2001) The mean and median absolute deviations. Math Comput Modell 34(7–8):921–936

Ramlugun GS, Nagarajan VK, Chakraborty C (2012) Small retinal vessels extraction towards proliferative diabetic retinopathy screening. Expert Syst Appl 39(1):1141–1146

Rangayyan RM, Ayres FJ, Oloumi F, Oloumi F, Eshghzadeh-Zanjani P (2008) Detection of blood vessels in the retina with multiscale Gabor filters. J Electron Imaging 17(2):023018–023018

Ricci E, Perfetti R (2007) Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans Med Imaging 26(10):1357–1365

Sen P, Rao C, Bansal N (2015) Retinopathy of prematurity: an update. Sci J Med Vis Res Foun 33(2):93–6

Soares JV, Leandro JJ, Cesar RM, Jelinek HF, Cree MJ (2006) Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging 25(9):1214–1222

Strisciuglio N, Vento M, Azzopardi G, Petkov N (2015) Unsupervised delineation of the vessel tree in retinal fundus images. Comput Vis Med Image Process 1:149–155

Strisciuglio N, Azzopardi G, Vento M, Petkov N (2016) Supervised vessel delineation in retinal fundus images with the automatic selection of B-COSFIRE filters. Mach Vis Appl 27(8):1137–1149

Strisciuglio N, Azzopardi G, Petkov N (2017) Detection of curved lines with B-COSFIRE filters: a case study on crack delineation. In: International conference on computer analysis of images and patterns, pp 108–120. Springer

Strisciuglio N, Azzopardi G, Petkov N (2019) Robust inhibition-augmented operator for delineation of curvilinear structures. IEEE Trans Image Process. https://doi.org/10.1109/TIP.2019.2922096

Strisciuglio N, Azzopardi G, Vento M, Petkov N (2015) Multiscale blood vessel delineation using B-COSFIRE filters. In: International conference on computer analysis of images and patterns, pp 300–312. Springer

Strisciuglio N, Petkov N (2017) Delineation of line patterns in images using B-COSFIRE filters. In: International conference and workshop on bioinspired intelligence (IWOBI), 2017, pp 1–6. IEEE

Sutter FK, Helbig H (2003) Familial retinal arteriolar tortuosity: a review. Surv Ophthalmol 48(3):245–255

Wallace DK, Jomier J, Aylward SR, Landers MB (2003) Computer-automated quantification of plus disease in retinopathy of prematurity. J Am Assoc Pediatr Ophthalmol Strabismus 7(2):126–130

Yu C, Liu J, Nemati S (2019) Reinforcement learning in healthcare: a survey. arXiv preprint arXiv:1908.08796

Ethics declarations

Conflict of Interest:

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ramachandran, S., Strisciuglio, N., Vinekar, A. et al. U-COSFIRE filters for vessel tortuosity quantification with application to automated diagnosis of retinopathy of prematurity. Neural Comput & Applic 32, 12453–12468 (2020). https://doi.org/10.1007/s00521-019-04697-6

DOI: https://doi.org/10.1007/s00521-019-04697-6