Abstract

Understanding the pattern of financial activities and predicting their development and changes are research hotspots in academic and financial circles. Because financial data contain complex, incomplete and fuzzy information, predicting their development trends is an extremely difficult challenge. Fluctuations in financial data depend on a myriad of correlated, constantly changing factors. Therefore, predicting and analysing financial data is a nonlinear, time-dependent problem. Deep neural networks (DNNs) combine the advantages of deep learning (DL) and neural networks and can solve nonlinear problems more satisfactorily than conventional machine learning algorithms. In this paper, financial product price data are treated as a one-dimensional series generated by the projection of a chaotic system composed of multiple factors onto the time dimension, and the price series is reconstructed using the time series phase-space reconstruction (PSR) method. A DNN-based prediction model is designed based on the PSR method and long short-term memory (LSTM) networks for DL and is used to predict stock prices. The proposed model and several other prediction models are used to predict multiple stock indices for different periods. A comparison of the results shows that the proposed prediction model has higher prediction accuracy.

1 Introduction

In recent years, financial activities have been increasingly growing in number with rapid economic development, and their variation trend has also become increasingly complex. Understanding the pattern of financial activities and predicting their development and changes are focuses of research in academic and financial circles. An approximate prediction of financial data using one or a series of methods can help understand the development of and changes in the financial market at the macroscopic level and provide a basis for investors and for-profit organizations to make trade decisions and plans at the microscopic level, thereby allowing them to maximize profits. Because financial data contain complex, incomplete and fuzzy information, predicting their development trend is an extremely difficult challenge [1, 2].

Before the emergence of efficient machine learning algorithms, researchers in China and elsewhere generally used various statistical and econometric methods to build prediction models. Conventional statistical and econometric models are linear and can be used to predict and analyse financial products only after nonlinear relationships have been transformed into linear ones. As an important branch of machine learning algorithms, neural networks (NNs) have the following advantages over conventional statistical methods: they are numeric, data-driven and adaptive. Therefore, NNs have a higher capacity to analyse inaccurate and noisy data and have been extensively used to predict time series. By design, the more recent deep learning (DL) algorithms can be trained with massive volumes of nonlinear data, can be used to construct deep NNs (DNNs) with multiple hidden layers and can capture more abstract nonlinear relationships between data. Compared to conventional machine learning algorithms, these DL algorithms can solve nonlinear problems more satisfactorily [3].

In 1988, White predicted the changes in daily stock returns of IBM using NNs [4]. Zhang combined an auto-regressive integrated moving average (ARIMA) model with an artificial NN (ANN) to predict time series and conducted a comparative study. The results showed that the ANN was more advantageous in analysing and processing nonlinear data [5]. In 2009, Vanstone and Finnie [6] proposed an empirical methodology for designing automated trade systems based on ANNs. Jasemi et al. [7] studied the information hidden in Japanese stock candlestick charts using a multi-layer perceptron (MLP) model. Ticknor proposed a stock index price prediction model that uses a Bayesian network and determined its effectiveness based on the data for Microsoft Corp. and Goldman Sachs Group Inc. stocks [8]. Wang [9] proposed a stochastic time effective function NN and combined it with principal component analysis to predict the prices of multiple securities. Xiong et al. predicted the linear pattern of a time series using an ARIMA model, estimated the nonlinear residual of the time series using an NN model and combined the results to form the final prediction for the time series. They examined the effectiveness of the hybrid prediction model through simulations based on an RMB exchange rate series [10]. Based on the theory for the relationship between volume and price, Wu et al. [11] performed an empirical study on the relationship between volume and price in China’s stock market using the exponential generalized auto-regressive conditional heteroskedasticity (EGARCH) model and a backpropagation NN. Li [12] predicted error series using empirical mode decomposition in conjunction with a support vector machine (SVM).

The primary objective of DL is to obtain highly robust feature sets from complex real-world data and, on this basis, acquire highly correlated information. Deep nonlinear topologies of DNNs can be built by stacking hidden layers with nonlinear activation functions and used to analyse and model data with complex structures. Compared to conventional shallow NNs, DNNs can better represent complex high-dimensional functions, such as highly varying functions [13]. In addition, the data processing capacity of computer chips has improved dramatically. In particular, graphics processing units are highly suitable for deep model training. Moreover, an increasing number of research results have been achieved in related fields, such as machine learning and information and signal processing. Furthermore, relevant techniques for storing large datasets have also improved. These advances allow DL algorithms to fit highly nonlinear functions better and have driven their rapid popularization and development. Today, DL has achieved marked results in the classification, speech recognition and computer vision fields. Shen et al. constructed a deep belief network using a continuous restricted Boltzmann machine and combined it with the conjugate gradient method to predict exchange rates. Through a comparison, they found that the DL network structure was superior to conventional feedforward NNs [14]. Ding et al. predicted the changes in stock index prices from an event-driven perspective. They constructed a prediction model using a convolutional NN and used it to measure the short- and long-term effects of events on the changes in stock prices. The accuracy of their method was 6% higher than that of a conventional method for the stocks in the Standard and Poor’s (S&P) 500 [15]. Zhao et al. [16] constructed a prediction model using a de-noising auto-encoder and performed multiple sets of experiments based on datasets extended using the bagging method. They also used the prediction model to predict oil prices. Krauss et al. studied the application of several integrated methods, including DNNs, gradient boosted regression trees and random forests, in statistical arbitrage. In addition, they proposed an equal-weighted integrated model based on multiple models and, with it, achieved excess earnings in the S&P 500 [17]. In recent years, several studies have also addressed stock price prediction using DL methods [18,19,20].

2 Financial data prediction and DNNs

2.1 Financial data prediction

Financial data, particularly stock index data, are often treated as a time series affected by multiple variable factors. These factors can generally be classified into two types: macroscopic variables, which affect the market on a long-term scale (e.g. economic policies or national gross domestic product), and microscopic variables, which affect the market on a microscopic scale (e.g. random events, irrational emotional fluctuations of investors and market rumours). By analysing macroscopic and microscopic factors, decisions can be made for the financial market, which is a dynamic system with constantly changing factors. However, because these factors often change, it can be considered that they are affected by an unknown system. It is nearly impossible to collect all the macroscopic and microscopic variables and determine the extents of their influence. Therefore, financial time series prediction is often viewed as one of the most difficult research areas in the literature relating to time series and machine learning.

The first step of financial time series analysis is to observe financial time series data. Commonly observed financial variables include price (stock price, stock index, exchange rate and futures price), return (stock return, stock index return, interest rate and futures return), fluctuation, trade volume and companies’ financial variables (bond issuance and hedging tools). Because the rate of return is unaffected by the scale of investment and, as a stable series, exhibits excellent statistical properties, it is often used as a measure of trading experiments.

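Assuming \( P_{t} \) is the asset price at time \( t \) and \( R_{t} \) denotes the simple return, holding one asset between time \( t - 1 \) and time \( t \) earns the single-period simple gross return given in formula (1) when dividends and dividend returns are not considered:

$$ 1 + R_{t} = \frac{{P_{t} }}{{P_{t - 1} }} $$(1)

The corresponding single-period simple return is given as formula (2):

$$ R_{t} = \frac{{P_{t} }}{{P_{t - 1} }} - 1 = \frac{{P_{t} - P_{t - 1} }}{{P_{t - 1} }} $$(2)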
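As a minimal sketch of the return definitions, the snippet below computes single-period simple gross returns and simple returns from a list of prices. It assumes the standard definitions, \( P_{t} /P_{t - 1} \) for the gross return and \( P_{t} /P_{t - 1} - 1 \) for the simple return, which is what formulas (1) and (2) are taken to denote. The function names and the sample prices are illustrative and are not part of the experiments in this paper.

```python
def simple_gross_returns(prices):
    """Single-period simple gross returns P_t / P_{t-1} for consecutive prices."""
    return [p_t / p_prev for p_prev, p_t in zip(prices, prices[1:])]


def simple_returns(prices):
    """Single-period simple returns P_t / P_{t-1} - 1."""
    return [gross - 1.0 for gross in simple_gross_returns(prices)]


prices = [100.0, 110.0, 99.0]  # hypothetical closing prices
returns = simple_returns(prices)
```

Because each return is a ratio of consecutive prices, it does not depend on the amount invested, which is the scale-invariance property mentioned above.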

Compared to ordinary time series, financial time series also have some unique statistical properties, including the following:

- Outliers: A financial time series can be easily affected by macroscopic factors (e.g. human and policy factors) and contains data points that differ significantly from the rest of the series.

- Trend: A mid-/long-term upward or downward trend can be observed from a financial time series in any observation dimension.

- Mean reversion: A financial time series has a tendency to revert to its mean level.

- Volatility clustering: Large fluctuations are often closely followed by large fluctuations, whereas small fluctuations are often closely followed by small fluctuations.

2.2 DNNs

NNs are an important area of the machine learning field and are a type of data-driven algorithm. They are adaptive, have a relatively strong capacity to approximate nonlinear functions and can be used to satisfactorily predict nonlinear, time-varying data. However, NNs often rely heavily on the feature attributes of the input data. Sparse input features and missing data will likely result in under-fitted predictions. If the input features are highly correlated and there are more feature attributes than samples, the model training process will be prolonged and there will be a risk of over-fitting. ANNs are the precursor of DNNs, which currently are a focus of research in the machine learning field. DNNs differ from conventional ANNs in two main respects. (1) Most DNNs consist of multiple hidden layers or multi-stage nonlinear information processing steps. (2) Successively deeper and more abstract hidden layers are constructed using supervised or unsupervised feature representation methods. By incorporating knowledge and advanced technologies from many fields, DL has evolved into an enormous branch of machine learning in terms of both techniques and system structures. However, the basic feature of DL is still to build multi-layer models for processing nonlinear information. Based on the model structure, the employed technique and the application scenario, DL is roughly categorized into two types, namely unsupervised (semi-supervised) learning and supervised learning. Supervised DL models are more efficient in the training and testing processes, have more flexible frameworks and are more suitable for the end-to-end learning of complex systems compared to conventional dichotomous models. In contrast, unsupervised DL models, particularly probabilistic generative models, are easier to understand and can be more easily embedded with domain knowledge, combined with other models and used to address uncertain problems. Nonetheless, it is often difficult to use unsupervised DL models for reasoning and learning in complex systems. Figure 1 shows the approximate categorization of DL model structures.

3 DNN-based stock price prediction method

3.1 Description of problem

The price time series for most financial products can be treated as data series that change continuously within the trade range. However, because there are certain time intervals within the trade range, financial time series cannot be treated as completely continuous data. In addition, there is generally a relatively significant difference in trends between series observed at different intervals. Here, stock index data are taken as an example. A long-term upward trend may be observed at a seasonal interval. However, within this long-term period, the mid- and short-term trends observed at daily or hourly intervals may fluctuate or even be downward during many stages. To reduce the number of factors affecting the experiments, to ensure consistency in the comparison experiments and in consideration of China’s T + 1 trading system, stock price data are selected as the main study object in the experiments conducted in this study, and trading days are used as observation intervals. A discrete stock index price series is recorded: \( y_{1} ,y_{2} , \ldots ,y_{t} , \ldots ,y_{n} \). Thus, when predicting a financial time series, all the historical data are known at time \( t \) and are used to search for a nonlinear process that emulates price changes. A prediction model is constructed and used to predict \( y_{t} \), as indicated in formula (3). The ultimate goal is to select a suitable model and optimal parameters based on the observed time series data to approximate the process \( f \).

where d is the duration of the delay, \( n \) is the time span that requires consideration and \( w(t) \) is the noise in the data observed at time \( t \).

To more clearly describe the analysis and prediction of stock index price series, the process of building a stock index price prediction model is abstracted into three stages, namely data analysis and processing, prediction model building and experimental result prediction and evaluation, as shown in Fig. 2. The first stage involves pre-processing processes (e.g. data acquisition and de-noising), phase-space reconstruction (PSR) and data structuring processes (e.g. label defining and test set partitioning) and eventually produces an input dataset and an output dataset effectively applicable to the prediction model. At the second stage, a prediction model is built using various machine learning methods. In addition, reasonable model parameters are selected, and the model is adjusted and optimized. Furthermore, prediction is performed using the model based on the generated input and output data. At the third stage, evaluation metrics for prediction results are defined, and the results obtained using the prediction model are subjected to multi-dimensional analysis. The evaluation results are presented in the form of charts.

3.2 PSR of price data

It has been only several decades since most financial products that focus on stock indices were created. The number of time series data points generated with trading days as observation intervals is generally within several thousand, which is smaller than the datasets in other machine learning fields. When the dataset contains insufficient data, a model trained using a machine learning method is often over-fitted. In this study, to increase the data volume, data are folded and replicated multiple times using the common sliding window method. To increase the dimensionality, price data are extended dimensionally through PSR. At time \( t \), a known time series \( [y_{t} = x(t),\;t = 1, \ldots ,n] \) undergoes PSR, which can be expressed as formula (4):

The series consisting of the reconstructed data is given as formula (5):

where \( \tau \) is the delay interval, which allows the two observation data \( x(t) \) and \( x(t + \tau ) \) at the endpoints of \( \tau \) to be independent of one another to some extent but not completely uncorrelated and m is the embedding dimension of the generated series. Reasonably extending the embedding dimension can increase the analytical potential of a dynamic system structure.

In this study, \( \tau \) and \( m \) are calculated using the mutual information method and an improved Cao false nearest neighbour method, respectively. PSR is performed after \( \tau \) and \( m \) are determined. The reconstructed data are denoted by \( X(t) \). The input and output of the supervised learning model are then defined as follows: the input is the \( k \) reconstructed data points before time \( t \), \( X(i),\;i = t - k,t - k + 1, \ldots ,t - 1 \), and the output is the reconstructed data at time \( t \), \( X(t) \). In the last step of the experiment, the data of the last dimension \( x(t - (m - 1)\tau ) \) of the predicted reconstructed data \( X(t) \) are used as the predicted price series, which is subsequently evaluated by comparison with the actual price data.
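The reconstruction and data structuring steps can be sketched as follows. This is a minimal illustration, not the code used in the experiments: it assumes the forward delay-embedding convention \( X(t) = [x(t),x(t + \tau ), \ldots ,x(t + (m - 1)\tau )] \), and the function names `delay_embed` and `sliding_windows`, the placeholder series and the window length `k = 10` are illustrative choices.

```python
def delay_embed(series, tau, m):
    """Return delay vectors [x(t), x(t+tau), ..., x(t+(m-1)*tau)] (assumed convention)."""
    span = (m - 1) * tau
    if len(series) <= span:
        raise ValueError("series too short for the chosen tau and m")
    return [[series[t + j * tau] for j in range(m)]
            for t in range(len(series) - span)]


def sliding_windows(vectors, k):
    """Pair k consecutive delay vectors (input) with the next delay vector (target)."""
    count = len(vectors) - k
    inputs = [vectors[i:i + k] for i in range(count)]
    targets = [vectors[i + k] for i in range(count)]
    return inputs, targets


series = list(range(30))              # placeholder data, not real prices
X = delay_embed(series, tau=3, m=5)   # each X[t] is an m-dimensional vector
inputs, targets = sliding_windows(X, k=10)
```

Each training sample is then a window of `k` reconstructed vectors, and the label is the reconstructed vector that immediately follows the window.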

3.3 DNN-based prediction model

Recurrent NNs (RNNs) [21] achieve explicit modelling of time through self-connection of the hidden layers and record long-term information by improving the nodes in the hidden layers. RNNs have achieved marked results in natural language processing and audio frequency analysis. In conventional RNNs, there are links between the nodes in the hidden layers. Owing to these recurrent feedback links, network models have a memory ability. Thus, RNNs can model information on a time scale. The duration of information transfer can be treated as the model depth. However, earlier RNNs are unable to model information with a long time span and can lead to a vanishing gradient problem when used to build large time scale models. By optimizing the nodes, deep RNNs are able to efficiently model on a time scale and prevent the occurrence of a vanishing gradient problem.

RNN models that use long short-term memory (LSTM) nodes are effective and scalable when used to address a number of problems involving series data. These models are general-purpose, capture long-term time-dependent information effectively and have achieved exceptional results in handwriting recognition, natural language translation and audio data analysis. An LSTM [22] node consists of so-called dynamic gate structures, including input, forget and output gate structures, as shown in Fig. 3.

In addition, an LSTM node structure also contains a recurrent logic structure, which is referred to as a nerve cell and is used to record node information.

An LSTM node is described below:

1. Input data weights: \( W_{i} ,W_{f} ,W_{c} ,W_{o} \).

2. Recurrent data weights: \( R_{i} ,R_{f} ,R_{c} ,R_{o} \).

3. Peephole weight: \( V \in {\mathbb{R}}^{N} \).

4. Offsets: \( b_{i} ,b_{f} ,b_{c} ,b_{o} \in {\mathbb{R}}^{N} \).

5. \( x_{t} ,\;y_{t} \) are the input and output of the node at time \( t \), respectively.

6. \( f_{t} \) is the output of the forget gate at time \( t \), which is expressed as formula (6):

$$ f_{t} = \sigma (W_{f} x_{t} + R_{f} h_{t - 1} + b_{f} ) $$(6)

7. \( i_{t} \) is the output of the input gate at time \( t \), which is expressed as formula (7):

$$ i_{t} = \sigma (W_{i} x_{t} + R_{i} h_{t - 1} + b_{i} ) $$(7)

8. \( \tilde{C}_{t} \) and \( C_{t} \) are the input and cell structure of the node at time \( t \), respectively, which are expressed as formulas (8) and (9):

$$ \tilde{C}_{t} = \tanh (W_{c} x_{t} + R_{c} h_{t - 1} + b_{c} ) $$(8)

$$ C_{t} = i_{t} \odot \tilde{C}_{t} + f_{t} \odot C_{t - 1} $$(9)

9. \( o_{t} \) is the output of the output gate at time \( t \), which is expressed as formula (10):

$$ o_{t} = \sigma (W_{o} x_{t} + R_{o} h_{t - 1} + b_{o} ) $$(10)

10. The final output \( h_{t} \) of the node is expressed as formula (11):

$$ h_{t} = o_{t} \odot \tanh (C_{t} ) $$(11)
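To make the gate equations concrete, the following pure-Python sketch performs one forward step of a single LSTM node layer according to formulas (6) to (11). It is an illustration only: the peephole weight \( V \) is omitted because it does not appear in the formulas above, and the helper names (`affine`, `lstm_step`), the `params` dictionary layout and the all-zero toy weights are assumptions made for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(x, h, W, R, b):
    """Compute W x + R h + b row by row for list-of-lists matrices."""
    return [sum(w * xi for w, xi in zip(w_row, x))
            + sum(r * hi for r, hi in zip(r_row, h)) + bias
            for w_row, r_row, bias in zip(W, R, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; params maps 'f', 'i', 'c', 'o' to a (W, R, b) tuple."""
    f_t = [sigmoid(z) for z in affine(x_t, h_prev, *params["f"])]        # formula (6)
    i_t = [sigmoid(z) for z in affine(x_t, h_prev, *params["i"])]        # formula (7)
    c_tilde = [math.tanh(z) for z in affine(x_t, h_prev, *params["c"])]  # formula (8)
    c_t = [i * g + f * c
           for i, g, f, c in zip(i_t, c_tilde, f_t, c_prev)]             # formula (9)
    o_t = [sigmoid(z) for z in affine(x_t, h_prev, *params["o"])]        # formula (10)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]                   # formula (11)
    return h_t, c_t


# Toy example: 2 inputs, 1 hidden unit, all weights and offsets set to zero.
zero = ([[0.0, 0.0]], [[0.0]], [0.0])
params = {"f": zero, "i": zero, "c": zero, "o": zero}
h_t, c_t = lstm_step([1.0, -1.0], [0.0], [2.0], params)
```

With all-zero weights every gate outputs 0.5 and the candidate input is 0, so the new cell state is half the previous cell state. This shows how the forget gate scales the information carried over from the previous step.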

In an LSTM nerve cell model, the input gate determines how much information can be added to the nerve cell, and the output gate determines how much information can be output after processing. The forget gate determines how much of the cell state of the previous moment will be retained for calculation in the subsequent moment. Owing to this node structure, LSTM NNs are able to capture mid- and long-term dependencies when used to model series data and, unlike earlier RNNs, do not suffer from the vanishing gradient problem over long time scales.

The model established in this study is a multi-hidden layer LSTM network structure consisting of LSTM nodes with an m-dimensional vector that has undergone PSR at time t, \( X(t) \), as the input and the reconstructed vector at time \( t + 1 \), \( X(t + 1) \), as the output. Figure 4 shows the established DNN-based model.

The input and output layers each contain m nodes, corresponding to the reconstructed actual time series data at time t and the reconstructed predicted time series data at time t + 1, respectively. The hidden layers consist of multiple LSTM node layers and are connected with one another in the same manner as the hidden layers of the RNNs mentioned earlier. Hidden layer architectures are mostly designed based on experience from previous studies. Hence, to reduce the number of comparison parameters in the experiment, all the hidden layers of the model are set to contain the same number of nodes. Thus, the model contains L hidden layers, each of which contains K LSTM nodes. An optimal combination of L and K is selected by traversing the grid in the experiment.
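The grid traversal over L and K can be sketched as below. This is an illustration only: `toy_score` is a hypothetical stand-in for training the model with a given structure and returning a validation score, and the candidate values are assumptions chosen for the example rather than the exact grid used in the experiments.

```python
from itertools import product


def grid_search(layer_options, node_options, evaluate):
    """Return the (L, K) pair with the highest score from evaluate(L, K)."""
    return max(product(layer_options, node_options),
               key=lambda pair: evaluate(*pair))


def toy_score(num_layers, num_nodes):
    """Hypothetical score; a real run would train the network and return, e.g., DA."""
    return -abs(num_layers - 3) - abs(num_nodes - 32) / 16


best_layers, best_nodes = grid_search(range(1, 9), [8, 16, 20, 32, 48, 64], toy_score)
```

In practice, `evaluate` is the expensive part, so the grid is usually kept small or the parameters are tuned one at a time.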

In addition, the final output is the reconstructed data on day t + 1, so the model has m outputs, unlike ordinary regression experiments, which have only one output. Therefore, the loss function of the model is modified: the mean of the squared differences between the predicted and actual values over all dimensions is used as the loss function. Formula (12) describes the loss function of each training sample:
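A minimal sketch of such a per-sample loss is given below. It assumes that the loss is the element-wise mean squared error between the predicted and actual m-dimensional reconstructed vectors. This reading of the description is an assumption, and the function name and sample vectors are illustrative.

```python
def reconstruction_loss(predicted, actual):
    """Mean of squared differences over the m dimensions of one sample (assumed form)."""
    if len(predicted) != len(actual):
        raise ValueError("vectors must have the same dimension")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


loss = reconstruction_loss([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```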

3.4 Evaluation metrics for prediction results

As mentioned previously, the final output of the model established in the experiment is an m-dimensional vector that has undergone PSR. To allow comparison with other time series analysis methods and prediction models and to ensure that the experimental results are normative, the final experimental results are calculated using the price data. In other words, the data of the last dimension of the reconstructed vector, \( x^{\prime } (t + (m - 1) \cdot \tau ) \), are used as the final predicted values.

To effectively evaluate the established prediction model, the predicted price data are analysed using four metrics from prediction accuracy and error perspectives based on the actual price data.

Directional accuracy (DA) is expressed as formula (13):

where trend’ is the predicted trend and trend is the actual trend. Because noise is removed from the stock index data using the wavelet de-noising technique, the obtained price series contains identical values for consecutive days. Thus, the trend at time t is defined as formula (14):

Mean root square error (MRSE) is expressed as formula (15):

Mean absolute per cent error (MAPE) is expressed as formula (16):

Pearson correlation coefficient (CORR) is expressed as formula (17):

where \( y_{t} \) is the actual price and \( y^{\prime }_{t} \) is the predicted price.

The prediction accuracy of the model is used as the main analytical metric for evaluating its prediction capacity, which is a direct estimate of the trend in the financial data. The higher DA is, the higher the prediction capacity of the model is. MRSE, MAPE and CORR are used to evaluate the closeness of the predicted price data to the actual price data. The lower MRSE and MAPE are and the closer CORR is to 1, the more reliable the prediction results produced by the model are.
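The four metrics can be sketched in plain Python as follows. This is a hedged illustration rather than the evaluation code of this study. The trend is assumed to be the sign of the change relative to the previous actual price, which may differ in detail from formula (14). MRSE is implemented as the square root of the mean squared error. All function names and sample values are illustrative.

```python
import math


def sign(d):
    return (d > 0) - (d < 0)


def directional_accuracy(actual, predicted):
    """Share of steps where predicted and actual moves have the same sign (assumed trend rule)."""
    hits = sum(
        sign(actual[t] - actual[t - 1]) == sign(predicted[t] - actual[t - 1])
        for t in range(1, len(actual))
    )
    return hits / (len(actual) - 1)


def mrse(actual, predicted):
    """Square root of the mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in per cent."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def corr(actual, predicted):
    """Pearson correlation coefficient."""
    n = len(actual)
    mean_a, mean_p = sum(actual) / n, sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)


actual = [10.0, 11.0, 10.0, 12.0]      # hypothetical prices
predicted = [10.0, 10.5, 10.8, 11.0]   # hypothetical predictions
da = directional_accuracy(actual, predicted)
```

In this toy example every predicted move has the correct direction even though the magnitudes are off, which illustrates why DA and the error metrics are reported separately.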

4 Experimental results and analysis

4.1 Description of the experimental data

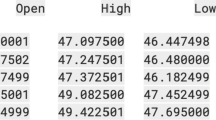

Stock market index data were selected as experimental data based on the following several factors: high accessibility, stable market performance and relatively high analytical significance. Data for six stock indices for various market environments, namely the S&P 500, the Dow Jones industrial average (DJIA), the Nikkei 225 (N 225), the Hang Seng index (HSI), the China Securities index 300 (CSI 300) and the ChiNext index, were obtained from Yahoo Finance (finance.yahoo.com), TuShare financial data interface (tushare.org) and relevant organizations. The analytical data are the closing prices for each trading day. Table 1 presents a description of the selected data.

In this study, three sets of experiments were performed on the time series data for the six stock indices to establish a reasonable model structure and to examine the effectiveness of the proposed PSR-DNN-combined approach in analysing and predicting financial time series.

4.2 PSR analysis of various stock indices

Before establishing a prediction model, τ and m were first determined for each stock index using the PSR method, as shown in Figs. 5, 6, 7, 8, 9 and 10.

As shown in the mutual information delay variation chart and m discriminant chart for each stock index, there was no significant difference in τ and m between the stock indices. The mutual information reached its first and second minimum values at τ = 3 and τ = 6, respectively, for all the stock indices. The discriminant value for m approached its extreme value at m = 5 and reached it at m = 6, and the values at m = 5 and m = 6 were close.
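The selection of τ from the first minimum of the mutual information can be sketched as follows. This is a minimal illustration using a simple equal-width histogram estimator. The estimator, the number of bins and the synthetic sine series are assumptions for this example and are not necessarily what was used to produce the charts.

```python
import math
from collections import Counter


def mutual_information(series, tau, bins=8):
    """Histogram estimate of I(x(t); x(t+tau)) in nats; bins is an assumed setting."""
    lo, hi = min(series), max(series)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant series

    def bin_of(value):
        return min(int((value - lo) / width), bins - 1)

    pairs = [(bin_of(a), bin_of(b)) for a, b in zip(series, series[tau:])]
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(a for a, _ in pairs)
    py = Counter(b for _, b in pairs)
    return sum(c / n * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())


def first_local_minimum(values):
    """Index of the first interior local minimum, or None if there is none."""
    for i in range(1, len(values) - 1):
        if values[i] < values[i - 1] and values[i] <= values[i + 1]:
            return i
    return None


series = [math.sin(0.3 * t) for t in range(500)]  # synthetic data, not stock prices
mi = [mutual_information(series, tau) for tau in range(1, 21)]
idx = first_local_minimum(mi)
tau_star = None if idx is None else idx + 1  # mi[0] corresponds to tau = 1
```

The delay at the first local minimum is a common choice because the two coordinates \( x(t) \) and \( x(t + \tau ) \) are then as independent as possible without being separated so far that their dynamical relationship is lost.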

Based on the above description of the experiment, the PSR parameters for all the stock index price data were set as follows: τ = 3, and m = 5. The reconstructed series of a known price series \( [y_{t} \; = \;x(t),\;t\; = \;1,\;2, \ldots ,n] \) is as follows:

To demonstrate the effectiveness of the PSR method, a comparison experiment was performed on the subsequently adjusted and optimized LSTM prediction model. Table 2 summarizes the experimental results (the mean of each evaluation metric for each stock index obtained from multiple sets of experiments based on the data for a 6-year period).

The experimental results show a relatively significant difference in prediction accuracy for the S&P 500 and the ChiNext index: the prediction accuracy for these two indices was, on average, over 3% higher when the PSR method was used than when it was not. For the other stock indices, the prediction accuracy was approximately 1% higher when the PSR method was used. Overall, although the use of the PSR method decreases the goodness of fit between the predicted and actual values, prediction accuracy matters more in practical application. The results also show that the MRSE and CORR were within their respective acceptable ranges. Thus, it can be concluded that the use of the PSR method can help improve the model’s prediction performance.

4.3 Analysis of the DNN structure

The three main parameters of the established DNN-based prediction model, namely the number of hidden layers, the number of LSTM nodes in the hidden layers and the activation function of the LSTM output, were determined through an experiment. Other hyperparameters were similarly set based on the grid searching method and the relevant literature [18, 19] as follows: time step, 10; initial learning rate, 0.001; the initial weights follow the normal distribution pattern; probability for preservation of nodes, 0.4; and number of iterations, 5000. To facilitate better observation and comparison of the experimental results, PSR, de-noising and normalization were first performed in the model parameter experiment. In addition, relatively fine experimental data were selected: 500 sets of data for the S&P 500 for a nearly 2-year (January 4, 2010 to February 3, 2012) period.

First, the number of LSTM nodes in the hidden layers was analysed through an experiment under the following conditions: number of hidden layers, 3, and activation function of the output, hyperbolic tangent (tanh) function. Table 3 and Fig. 11 present the experimental results for the test set.

The experimental results demonstrate that there was no significant difference between the graphs plotted by the established single-LSTM hidden layer models with various numbers of nodes. The data in Table 3 indicate that the model prediction accuracy changed with the number of nodes in the hidden layers. Relatively excellent results were obtained when the number of nodes in the hidden layers was set to 20, 32 or 48. Thus, the number of nodes in the hidden layers of the subsequent experimental model was set to 32.

After the number of LSTM nodes in the hidden layers was determined, the number of hidden layers was determined through an experiment. Models established in most of the relevant studies (including those on RNNs) do not contain too many hidden layers. Thus, the experimental range for the number of hidden layers was set to [1, 8]. Table 4 and Fig. 12 present the experimental results.

The experimental results show that as the number of hidden layers increased, data fluctuations occurred in greater intervals and became smoother. There was a relatively large difference between the predicted and actual values. Considering the very small number of sample sets used as training data, it is prudent to establish a model with not too many layers to prevent over-fitting. As demonstrated by the experimental results, the best prediction results were achieved with three hidden layers.

After obtaining a deep RNN with three hidden layers, each of which contains 32 nodes, the activation function of the final output gate of each LSTM node was determined through an experiment. The tanh and rectified linear unit (ReLU) functions were selected as candidates. Table 5 and Fig. 13 present the experimental results.

Clearly, the fitted predicted values for the intervals with relatively significant fluctuations obtained when the ReLU function was selected as the activation function of the output gate of each LSTM node were inferior to those obtained when the tanh function was selected as the activation function.

4.4 Performance analysis of the prediction model

To determine the effectiveness of the established model in predicting financial data, multiple prediction models were used to predict the data for the six stock indices for various market environments. Table 6 summarizes the description of the comparison experimental models. To facilitate analysis and evaluation, the experimental results were consolidated by year and are presented in Figs. 14, 15, 16, 17, 18 and 19 and Tables 7, 8, 9, 10, 11 and 12.

To facilitate observation, the figures show the data for 2016, including the actual data and the data predicted by various prediction models. Each dataset contains approximately 235 data points. Although there are some slight differences between the curves produced by the models for different stock indices, common findings are described below:

Regarding the goodness of fit to the series, the deep LSTM network model was superior to the SVM and deep MLP models.

The sections of the curves produced by the deep LSTM network model and the deep MLP model for small fluctuations in the stock indices were softer, whereas the corresponding results predicted by the support vector regression (SVR) and ARIMA methods were basically identical to those of the previous day. Tables 7, 8, 9, 10, 11 and 12 summarize the experimental data in more detail.

The following can be found from the experimental data:

- (1)

The maximum prediction accuracy of the LSTM model reached 62.87% (for the S&P 500 for 2012).

- (2)

There were some differences in prediction results between different stock indices and between different periods. On average, the prediction accuracy for the S&P 500 was the highest, whereas the prediction accuracy for the ChiNext index was the lowest.

- (3)

Based on MRSE, MAPE and CORR, the goodness of fit of the results produced by the deep LSTM network model was slightly lower than that of the results produced by the ARIMA method but higher than those of the results produced by the SVR and MLP models.

- (4)

Based on DA, the prediction accuracy of the LSTM model for the price series (average: 56.85%) was much higher than that of the ARIMA model and 3–5% higher than those of the SVR and MLP models.
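Findings (3) and (4) can look contradictory, so a small worked example may help. The sketch below computes the four kinds of metric on made-up numbers. The formulas are common textbook definitions and are an assumption here: MRSE is taken to be a root-mean-square error, and DA is taken to be the percentage of days on which the predicted move relative to the previous actual value has the same sign as the actual move. The function names and the toy series are hypothetical and are not taken from the experiments.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error (assumed reading of the MRSE metric)."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


def corr(actual, predicted):
    """Pearson correlation coefficient between the two series."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)


def directional_accuracy(actual, predicted):
    """Percentage of days on which the predicted move has the sign of the actual move.

    Assumed definition: both moves are measured from the previous actual value.
    A predicted move of zero never counts as a hit.
    """
    hits = 0
    for t in range(1, len(actual)):
        actual_move = actual[t] - actual[t - 1]
        predicted_move = predicted[t] - actual[t - 1]
        if actual_move * predicted_move > 0:
            hits += 1
    return 100.0 * hits / (len(actual) - 1)


# Made-up index values, for illustration only.
actual = [100, 102, 101, 104, 103]
# A forecast that simply repeats the previous day's value.
persistence = [actual[0]] + actual[:-1]
# A forecast that gets every direction right but overshoots.
trend_model = [100, 104, 98, 107, 100]

for name, pred in (("persistence", persistence), ("trend_model", trend_model)):
    print(name, rmse(actual, pred), mape(actual, pred),
          corr(actual, pred), directional_accuracy(actual, pred))
```

On these toy numbers, the forecast that repeats the previous day's value has the smaller error but never predicts the direction of a move, whereas the overshooting forecast has the larger error and a DA of 100%. This is one way a method can score well on error-based metrics and poorly on DA at the same time.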

The prediction model in this study was established based only on historical price data, without considering macroscopic factors (e.g. relevant policies, the market system and investor psychology). The S&P 500 is an index for a developed market in which investors are more rational and policies are sounder; consequently, its price fluctuations are more in line with the market rules. The average prediction accuracy for the ChiNext index was the lowest. Of all the stock indices, the ChiNext index is the youngest (inaugurated in October 2009). ChiNext is a second-tier stock market, which can be seen as a low-entry-requirement, high-risk and stringently regulated stock market. As a result, the ChiNext index is more easily affected by macroscopic factors, such as policy control, industrial changes and investor psychology, and these factors cannot be determined from price data alone. Nevertheless, the DNN-based prediction model was still able to achieve relatively high prediction accuracy for some time intervals. Thus, the errors in the above-mentioned experimental results are within the acceptable range and are in basic agreement with expectations. The deep LSTM network prediction model that uses the PSR method for price series analysis can predict financial data relatively accurately.

5 Conclusions

In this paper, a prediction model is proposed for financial price data, which are non-stationary and relatively noisy time series. The model is developed in three steps: time series data processing, network model building, and result evaluation and analysis. The PSR method for time series analysis is combined with a DNN-based LSTM network model. Data de-noising and normalization are performed at the pre-processing stage, and the data are structured by partitioning them with a time window. Subsequently, a DNN-based model is designed and optimized by selecting an optimum activation function and optimization method. Four metrics are then used to evaluate the effectiveness of the model. Finally, various prediction methods are compared: the conventional ARIMA linear analytical method, the conventional SVR machine learning method, a deep MLP model, a deep LSTM model involving no PSR process and the deep LSTM model combined with PSR. The comparison of the prediction results for various stock indices and periods demonstrates that the established DNN-based prediction model displays a higher prediction capacity than the other models.
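The pre-processing steps summarized above can be sketched as follows. This is a minimal illustration of min-max normalization and a standard time-delay embedding, which is the usual form of PSR, followed by pairing each reconstructed vector with the next value as the prediction target. The embedding dimension `dim`, the delay `tau`, the function names and the toy prices are all assumptions made for illustration; the de-noising step is omitted, and nothing here reproduces the settings used in the experiments.

```python
def min_max_normalize(series):
    """Scale a series linearly to the range [0, 1]."""
    lo, hi = min(series), max(series)
    return [(x - lo) / (hi - lo) for x in series]


def delay_embed(series, dim, tau):
    """Time-delay embedding: vector i is (x[i], x[i+tau], ..., x[i+(dim-1)*tau])."""
    last_start = len(series) - (dim - 1) * tau
    return [[series[i + k * tau] for k in range(dim)] for i in range(last_start)]


def make_supervised(series, dim, tau):
    """Pair each embedded vector with the value one step after its last component."""
    span = (dim - 1) * tau
    samples = []
    for i, vector in enumerate(delay_embed(series, dim, tau)):
        target_index = i + span + 1
        if target_index < len(series):
            samples.append((vector, series[target_index]))
    return samples


# Made-up closing prices, for illustration only.
prices = [10, 12, 11, 13, 15, 14, 16, 18]

scaled = min_max_normalize(prices)
for vector, target in make_supervised(prices, dim=3, tau=2):
    print(vector, "->", target)
```

With `dim=3` and `tau=2`, the first reconstructed vector is built from the first, third and fifth prices, and its target is the sixth price. In a real experiment, the scaling bounds would normally be taken from the training portion of the series only, so that no information from the test period leaks into the inputs.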

References

Zhang L, Wang F, Xu B, Chi W, Wang Q, Sun T (2018) Prediction of stock prices based on LM-BP neural network and the estimation of overfitting point by RDCI. Neural Comput Appl 30(5):1425–1444

Oliveira ALI, Meira SRL (2006) Detecting novelties in time series through neural networks forecasting with robust confidence intervals. Neurocomputing 70(1):79–92

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436

White H (1988) Economic prediction using neural networks: the case of IBM daily stock returns 451–458

Zhang GP (2003) Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 50:159–175

Vanstone B, Finnie G (2009) An empirical methodology for developing stockmarket trading systems using artificial neural networks. Expert Syst Appl 36(3):6668–6680

Jasemi M, Kimiagari AM, Memariani A (2011) A modern neural network model to do stock market timing on the basis of the ancient investment technique of Japanese Candlestick. Expert Syst Appl 38(4):3884–3890

Ticknor JL (2013) A Bayesian regularized artificial neural network for stock market forecasting. Expert Syst Appl 40(14):5501–5506

Wang J (2015) Forecasting stock market indexes using principle component analysis and stochastic time effective neural networks. Neurocomputing 156:68–78

Xiong Z (2011) Research on RMB exchange rate forecasting model based on combining ARIMA with Neural networks. J Quant Tech Econ 28(06):64–76 (in Chinese)

Wu Q, Wang C, Tang Y (2013) Empirical research on volume-price relationship based on GARCH models and BP neural network. J Sichuan Univ Nat Sci Edn 50(04):703–708 (in Chinese)

Li X, Zhang Z (2014) Support vector machine method for financial time series prediction based on simultaneous error prediction. J Tianjin Univ Sci Technol 47(01):86–94 (in Chinese)

Schmidhuber J (2015) Deep learning in neural networks: an overview. Neural Netw 61:85–117

Shen F, Chao J, Zhao J (2015) Forecasting exchange rate using deep belief networks and conjugate gradient method. Neurocomputing 167:243–253

Ding X, Zhang Y, Liu T et al (2015) Deep learning for event-driven stock prediction. In: Twenty-fourth international joint conference on artificial intelligence, pp 2327–2333

Zhao Y, Li J, Yu L (2017) A deep learning ensemble approach for crude oil price forecasting. Energy Econ 66:9–16

Krauss C, Do XA, Huck N (2017) Deep neural networks, gradient-boosted trees, random forests: statistical arbitrage on the S&P 500. Eur J Oper Res 259(2):689–702

Song Y, Lee JW, Lee J (2018) A study on novel filtering and relationship between input-features and target-vectors in a deep learning model for stock price prediction. Appl Intell 49:1–15

Minh DL, Sadeghi-Niaraki A, Huy HD et al (2018) Deep learning approach for short-term stock trends prediction based on two-stream gated recurrent unit network. IEEE Access 6:1–1

Göçken M, Özçalici M, Boru A, Dosdogru AT (2019) Stock price prediction using hybrid soft computing models incorporating parameter tuning and input variable selection. Neural Comput Appl 31(2):577–592

Graves A, Jaitly N (2014) Towards end-to-end speech recognition with recurrent neural networks. In: International conference on machine learning, pp 1764–1772

Greff K, Srivastava RK, Koutnik J et al (2016) LSTM: a search space Odyssey. IEEE Trans Neural Netw 28:1–11

Acknowledgements

This work was supported by the Natural Science Foundation of China (No. 61673354), the Fundamental Research Funds for the Central Universities, China University of Geosciences (Wuhan), the State Key Lab of Digital Manufacturing Equipment and Technology, Huazhong University of Science and Technology (DMETKF2018020) and the Open Research Project of the Hubei Key Laboratory of Intelligent Geo-Information Processing, China University of Geosciences (Wuhan).

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest regarding this work.

Cite this article

Yu, P., Yan, X. Stock price prediction based on deep neural networks. Neural Comput & Applic 32, 1609–1628 (2020). https://doi.org/10.1007/s00521-019-04212-x

DOI: https://doi.org/10.1007/s00521-019-04212-x