Abstract

The electrocardiogram (ECG) records the electrical conduction of the heart and is widely used to diagnose many types of disease, such as abnormal heart rhythm (arrhythmia). However, abnormal ECG characteristics are very difficult to detect. ECG signals are nonlinear and complex, and they are also affected by noise, which makes direct information extraction very difficult. Therefore, in this study we propose a very deep convolutional neural network (VDCNN) that uses small filters throughout the whole network to reduce the effect of noise and improve performance. Our approach introduces multi-canonical correlation analysis (MCCA), a method that learns selective adaptive layers' features such that the resulting representations are highly linearly correlated, which speeds up training. Moreover, a Q-Gaussian multi-class support vector machine (QG-MSVM), which has better learning performance and generalization ability in ECG signal processing, is used for classification. The result is a more accurate architecture that distinguishes normal sinus rhythm (NSR) heartbeats from three common types of arrhythmia: atrial fibrillation (A-Fib), atrial flutter (AFL), and paroxysmal supraventricular tachycardia (PSVT), without any noise filtering or pre-processing. Experimental results show that the proposed algorithm outperforms state-of-the-art methods.


1 Introduction

Cardiovascular diseases (CVDs) are among the leading causes of death worldwide. Hence, fast and accurate techniques for automatically detecting abnormal heartbeats in electrocardiogram (ECG) signals are crucial for the clinical diagnosis of CVDs.

Arrhythmia (also called dysrhythmia) is a type of CVD in which the heartbeat is irregular: the heart may beat too fast (tachycardia), too slow (bradycardia), or with an irregular rhythm [1]. The most common test used to diagnose arrhythmias is the ECG, a simple and painless test that detects and records normal and abnormal individual heartbeats [2]. The most frequent deadly arrhythmias affecting the elderly population are atrial fibrillation (A-Fib), atrial flutter (AF), and paroxysmal supraventricular tachycardia (PSVT), described as follows.

A-Fib is the most common sustained cardiac arrhythmia, and its prevalence continues to grow rapidly. A-Fib occurs when action potentials fire very rapidly and chaotically within the pulmonary veins or atrium. The result is a very fast atrial rate of 400–600 beats per minute. Because the atrial rate is so fast, the action potentials produced have very low amplitude, so P waves are not seen on the ECG of patients with atrial fibrillation [3]. AF is an arrhythmia in which the upper chambers of the heart (atria) beat faster than the lower ones (ventricles), which puts the heart rhythm out of sync [4]. PSVT consists of episodes of rapid heart rate that start in a part of the heart above the ventricles. PSVT has a number of specific causes: it can develop when doses of the heart medicine digitalis are too high, and it can also occur with a condition known as Wolff–Parkinson–White syndrome, which is most often seen in young people and infants [5]. Figure 1 illustrates plots of normal sinus rhythm (NSR), A-Fib, AF, and PSVT ECG signals.

As shown in Fig. 1, the arrhythmia signals are noisy, nonlinear, and complex, which makes their characteristics difficult to detect. To tackle these problems, many computer-aided diagnosis (CAD) systems have been developed to detect arrhythmias automatically and assign them to their respective classes [6,7,8,9,10,11,12,13,14]. Sahoo et al. [6] proposed a multi-resolution wavelet transform method to classify four types of ECG beats, namely normal (N), left bundle branch block (LBBB), right bundle branch block (RBBB), and paced beats (P), using a neural network (NN) and a support vector machine (SVM) classifier, with an average accuracy of 96.67%. Ebrahimzadeh et al. [9] presented a system for classifying normal heartbeats and premature ventricular contractions (PVC). The authors used the stationary wavelet transform (SWT) for noise reduction in the ECG signals, represented the signals with higher-order statistics and Hermite basis function expansions, and finally classified them with multi-layer perceptron (MLP) neural networks, with an accuracy of 98%. Desai et al. [13] implemented a CAD system to diagnose four classes of arrhythmia (NSR, A-Fib, AF, and ventricular fibrillation (V-Fib)) using the rotation forest (ROF) ensemble method, with an accuracy of 98.37%. Acharya et al. [14] also proposed a CAD system for the automated diagnosis of four classes (NSR, A-Fib, AFL, and V-Fib), in which entropy features extracted from the ECG signals were classified with a decision tree (DT) classifier, with an accuracy of 96.3%. However, these CAD systems follow the conventional pipeline in which the ECG signals are pre-processed, features are extracted from the pre-processed signals, and the features are then classified; this pipeline gives low performance when validated on a separate dataset.

Recently, convolutional neural networks (CNNs) have been employed for the automated classification of ECG signals. Acharya et al. [15] employed an eleven-layer CNN to automatically classify ECG signals into four classes, NSR, A-Fib, AF, and ventricular fibrillation (V-Fib), with an accuracy of 94.90%; they used the Daubechies 6 wavelet [16] for signal denoising and baseline removal. Zubair et al. [17] extracted R-peak-based ECG beat patterns to train a three-layer CNN that classified ECG beats into five classes (normal, fusion beat, supraventricular ectopic beat, unknown beat, and ventricular ectopic beat), although with low accuracy. Acharya et al. [18] developed a 9-layer deep CNN to automatically identify five categories of heartbeats in ECG signals: non-ectopic (N), supraventricular ectopic (S), ventricular ectopic (V), fusion (F), and unknown (Q). They used a pre-processing algorithm to remove noise from the ECG signals and achieved accuracies of 94.03% and 93.47% on the original and noise-free ECGs, respectively. However, these works [15, 17, 18] used pre-processing techniques to remove noise, which is time-consuming. In addition, they used relatively large filters in the first convolution layers, which increases the number of parameters and leaves fewer ReLU non-linearities, resulting in a less discriminative decision function. In our study, we address these problems by using very small receptive fields throughout the whole network.

The major contributions of this paper are fourfold:

1. We propose a novel feature extraction and combination method that uses a very deep convolutional neural network (VDCNN) to extract very deep features and reduce the effect of noise, and then applies feature-level fusion, because the feature level carries more information and detail, which leads to better classification performance.

2. We use small convolution filters to decrease the number of parameters in each layer and to control the model size as the network goes deeper. In addition, we integrate more ReLU layers to obtain a more discriminative decision function at a lower computational cost. The resulting, more accurate network architecture is used as a fixed feature extractor that extracts very deep features from the ECG signals without any noise filtering or pre-processing.

3. To speed up training (i.e. remove irrelevant features) and improve classification accuracy, we propose multi-canonical correlation analysis (MCCA), an algorithm that learns selective adaptive layers' features such that the resulting representations are highly linearly correlated.

4. Extensive experiments on three publicly available arrhythmia ECG databases [19] show that our algorithm performs better than other state-of-the-art methods and that it can serve as a tool to help clinicians confirm their diagnosis.

2 Methodology

This work presents a novel approach to automatically detect the NSR, A-Fib, AF, and PSVT classes of ECG signals using a VDCNN, without any noise filtering or pre-processing. Figure 2 shows the architecture of the proposed VDCNN model, which consists of three main stages: very deep feature extraction, fusion of the learned layers' features with the MCCA algorithm, and classification with the QG-MSVM classifier. Each stage of the proposed algorithm is described in more detail in the following sections.

2.1 The proposed network

Using deep neural networks to learn effective feature representations has become very popular in biometric recognition [20,21,22,23,24], and such networks are also employed in the medical field as automated diagnostic tools to aid clinicians [25,26,27,28,29]. In contrast to the aforementioned CNNs, which usually have five or seven layers, the proposed network is based on the VDCNN for feature extraction, which has a much deeper architecture (up to 19 weight layers) and can therefore provide many informative features [30]. We steadily increase the network depth by adding more convolutional layers, which is feasible because very small (3 × 3) convolution filters are used in all layers. The proposed algorithm fuses the very deep features learned by the network: the outputs of selected layers serve as a descriptor that represents the input signal with informative and significant features. In this paper, the outputs of the first and second fully connected layers of the proposed network are used as the feature descriptor of the ECG signal for arrhythmia detection.

2.1.1 Configuration

In our network configuration, a stack of convolutional layers is followed by three fully connected layers. All hidden layers use the rectified linear unit (ReLU) activation and do not contain local response normalization (LRN), because LRN does not improve performance on our ECG dataset but increases time complexity and memory consumption. The final layer is the softmax layer.

There are two reasons for using very small 3 × 3 receptive fields instead of quite large receptive fields in the first Conv. layers (e.g. 11 × 11 with stride 4 [31] or 7 × 7 with stride 2 [32]). First, small filters decrease the number of parameters, and therefore the computational cost, as the CNN goes deeper. A stack of three 3 × 3 convolution layers has the same effective receptive field as a single 7 × 7 layer [30]. If each layer has z input and z output channels, the stack is parameterized by \( 3(3^{2}z^{2}) = 27z^{2} \) weights, whereas a single 7 × 7 Conv. layer requires \( 7^{2}z^{2} = 49z^{2} \) weights, i.e. about 81% more (49/27 ≈ 1.81). In addition, using small filters throughout the whole network reduces the effect of noise and achieves better performance [33]. Second, a ReLU is integrated after each convolution in the stack, which gives a more discriminative decision function at a lower computational cost.
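The parameter comparison above can be reproduced with a short Python sketch. It counts convolution weights only (biases ignored, as in the text) and assumes every layer in the stack has z input and z output channels; the helper names are illustrative, and z = 64 is an arbitrary example value, since the ratio does not depend on z.

```python
def stacked_conv_weights(kernel: int, layers: int, channels: int) -> int:
    """Weights of `layers` stacked kernel x kernel convs with `channels` in/out each (no biases)."""
    return layers * (kernel * kernel * channels * channels)


def effective_receptive_field(kernel: int, layers: int) -> int:
    """Receptive field (in samples/pixels) of `layers` stacked stride-1 kernel x kernel convs."""
    return layers * (kernel - 1) + 1


z = 64  # example channel count; any z gives the same ratio
small = stacked_conv_weights(kernel=3, layers=3, channels=z)  # 27 z^2
large = stacked_conv_weights(kernel=7, layers=1, channels=z)  # 49 z^2

print(effective_receptive_field(3, 3), effective_receptive_field(7, 1))  # 7 7
print(small, large)  # 110592 200704
print(f"extra parameters for one 7x7 layer: {large / small - 1:.0%}")  # 81%
```

Both configurations see a 7 × 7 neighbourhood, but the stacked version uses fewer weights and applies three non-linearities instead of one.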

2.2 Feature fusion based on multi-canonical correlation analysis (MCCA)

ECG signals have special characteristics (nonlinearity and complexity): they cannot be characterized at a single fixed size and need information from different sizes of the signal [34]. Hence, we propose multi-canonical correlation analysis (MCCA) to learn selective adaptive layers' features such that the resulting representations are highly linearly correlated, which speeds up training and improves performance. In this section, we first introduce the basic idea of the canonical correlation analysis (CCA) fusion technique and then present our feature-level fusion method (MCCA).

2.2.1 Canonical correlation analysis (CCA)

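Canonical correlation analysis (CCA) is a well-known technique for finding the correlations between two sets of representations. Suppose that \( X \in \Re^{p \times n} \) and \( Y \in \Re^{q \times n} \) are two matrices, each containing n training feature vectors. CCA seeks the projection directions a and b that maximize the pairwise correlation between the projected features \( X^{*} = a^{\rm T} X \) and \( Y^{*} = b^{\rm T} Y \) across the two feature sets [35], as shown in Fig. 3.

The transformation matrices a and b are found by solving the eigenvalue equations in Eq. (1) [36]:

\[ V_{xx}^{-1} V_{xy} V_{yy}^{-1} V_{yx} \hat{a} = \Lambda^{2} \hat{a}, \qquad V_{yy}^{-1} V_{yx} V_{xx}^{-1} V_{xy} \hat{b} = \Lambda^{2} \hat{b} \tag{1} \]

where \( V_{xx} \in \Re^{p \times p} \) and \( V_{yy} \in \Re^{q \times q} \) are the within-set covariance matrices of X and Y, \( V_{xy} \in \Re^{p \times q} \) is the between-set covariance matrix (with \( V_{yx} = V_{xy}^{\rm T} \)), \( \hat{a} \) and \( \hat{b} \) are the eigenvectors, and \( \Lambda^{2} \) is the diagonal matrix of eigenvalues, i.e. of the squared canonical correlations. The number of nonzero eigenvalues in each equation is \( d = {\rm rank}(V_{xy}) \le \min(n, p, q) \); the corresponding canonical correlations are sorted in decreasing order \( r_{1} \ge r_{2} \ge \cdots \ge r_{d} \), and a and b consist of the sorted eigenvectors associated with the nonzero eigenvalues. Because the eigenvectors are normalized so that each canonical variate has unit variance, the covariance matrix of the transformed very deep features \( (X^{*}, Y^{*}) \) takes the form in Eq. (2):

\[ V^{*} = \begin{bmatrix} I & \Lambda \\ \Lambda & I \end{bmatrix}, \qquad \Lambda = {\rm diag}(r_{1}, r_{2}, \ldots, r_{d}) \tag{2} \]

This matrix shows that each canonical variate is correlated only with its counterpart of the same index in the other set. Finally, the feature-level fusion is implemented by summation, as in Eq. (3):

\[ C = X^{*} + Y^{*} = a^{\rm T} X + b^{\rm T} Y \tag{3} \]

where C denotes the very deep discriminant features (VDDFs).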
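A minimal NumPy sketch of CCA fusion by summation follows. Assumptions: features are stored one column per sample, as in the text; a small ridge term `reg` (our addition, not from the paper) keeps the covariance matrices invertible, which matters for high-dimensional layer outputs; and instead of calling an eigen-solver on the eigenvalue equations of Eq. (1) directly, the sketch takes the SVD of the whitened between-set covariance, which yields the same projection directions and returns the canonical correlations as singular values. The function names are illustrative.

```python
import numpy as np


def _inv_sqrt(cov: np.ndarray) -> np.ndarray:
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(cov)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def cca_fuse(X: np.ndarray, Y: np.ndarray, reg: float = 1e-6):
    """CCA feature-level fusion by summation.

    X: (p, n) and Y: (q, n) feature matrices, one column per sample.
    Returns (C, r): fused features C = a^T X + b^T Y of shape (d, n), computed on
    centered features, and the canonical correlations r in decreasing order.
    """
    n = X.shape[1]
    Xc = X - X.mean(axis=1, keepdims=True)  # center each feature
    Yc = Y - Y.mean(axis=1, keepdims=True)
    Vxx = Xc @ Xc.T / (n - 1) + reg * np.eye(X.shape[0])  # within-set covariances
    Vyy = Yc @ Yc.T / (n - 1) + reg * np.eye(Y.shape[0])  # (ridge keeps them invertible)
    Vxy = Xc @ Yc.T / (n - 1)                             # between-set covariance

    # Singular values of the whitened cross-covariance are the canonical correlations,
    # i.e. the square roots of the eigenvalues of Vxx^-1 Vxy Vyy^-1 Vyx.
    Kx, Ky = _inv_sqrt(Vxx), _inv_sqrt(Vyy)
    U, s, Wt = np.linalg.svd(Kx @ Vxy @ Ky)
    d = int(np.sum(s > 1e-10))  # number of nonzero canonical correlations
    a = Kx @ U[:, :d]           # projection directions for X (unit-variance variates)
    b = Ky @ Wt.T[:, :d]        # projection directions for Y
    C = a.T @ Xc + b.T @ Yc     # fusion by summation
    return C, s[:d]


# Toy data: two latent signals shared by both views, plus view-specific noise.
rng = np.random.default_rng(0)
n = 500
latent = rng.normal(size=(2, n))
X = np.vstack([latent, rng.normal(size=(3, n))]) + 0.1 * rng.normal(size=(5, n))
Y = np.vstack([latent, rng.normal(size=(2, n))]) + 0.1 * rng.normal(size=(4, n))
C, r = cca_fuse(X, Y)
print(C.shape)         # (4, 500)
print(np.round(r, 2))  # two correlations close to 1, the rest close to 0
```

Because each SVD pair gives positively correlated variates, summing \( X^{*} \) and \( Y^{*} \) reinforces the shared components rather than cancelling them.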

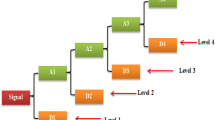

2.2.2 Multi-canonical correlation analysis (MCCA)

Multi-canonical correlation analysis (MCCA) generalizes CCA to more than two sets of features. Assume that we have k sets of features \( F_{i} \in \Re^{p_{i} \times n} \), i = 1, 2,…, k, sorted by rank so that rank(F1) ≥ rank(F2) ≥ \( \cdots \) ≥ rank(Fk). To attain the maximum possible length of the fused feature vector, MCCA applies CCA to two feature sets at a time: at each step, the two feature sets with the highest ranks are fused together, as illustrated in Fig. 4.
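The fusion order described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: it stores each feature set with one column per sample, uses a compact summation-based pairwise CCA with a small ridge term `reg` (an assumption added for numerical stability), and re-sorts the remaining sets by rank after every fusion, which is our reading of "at each step". The function names are hypothetical.

```python
import numpy as np


def cca_sum(X, Y, reg=1e-6):
    """Pairwise CCA fusion by summation (X: p x n, Y: q x n, columns are samples)."""
    n = X.shape[1]
    Xc = X - X.mean(axis=1, keepdims=True)
    Yc = Y - Y.mean(axis=1, keepdims=True)

    def inv_sqrt(m):
        vals, vecs = np.linalg.eigh(m)
        return vecs @ np.diag(vals ** -0.5) @ vecs.T

    Kx = inv_sqrt(Xc @ Xc.T / (n - 1) + reg * np.eye(X.shape[0]))
    Ky = inv_sqrt(Yc @ Yc.T / (n - 1) + reg * np.eye(Y.shape[0]))
    U, s, Wt = np.linalg.svd(Kx @ (Xc @ Yc.T / (n - 1)) @ Ky)
    d = int(np.sum(s > 1e-10))  # keep directions with nonzero canonical correlation
    return (Kx @ U[:, :d]).T @ Xc + (Ky @ Wt.T[:, :d]).T @ Yc


def mcca_fuse(feature_sets):
    """Fuse k >= 2 feature sets by repeatedly applying CCA to the two highest-rank sets."""
    sets = list(feature_sets)
    while len(sets) > 1:
        sets.sort(key=np.linalg.matrix_rank, reverse=True)  # highest rank first
        first, second = sets.pop(0), sets.pop(0)
        sets.append(cca_sum(first, second))  # fused set goes back into the pool
    return sets[0]


# Three toy "layer outputs" that share a 3-D latent signal.
rng = np.random.default_rng(1)
n = 300
latent = rng.normal(size=(3, n))
F1 = np.vstack([latent, rng.normal(size=(3, n))])  # rank 6
F2 = np.vstack([latent, rng.normal(size=(2, n))])  # rank 5
F3 = np.vstack([latent, rng.normal(size=(1, n))])  # rank 4
fused = mcca_fuse([F3, F1, F2])
print(fused.shape)  # (4, 300)
```

Each pairwise step keeps at most min(rank) components, so the final length is bounded by the smallest-rank set; fusing the highest-rank sets first avoids shrinking the vector earlier than necessary.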

2.3 Classification

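In this study, a Q-Gaussian multi-class support vector machine (QG-MSVM) is proposed to classify the ECG signals into four classes (NSR, A-Fib, AF, and PSVT), using the Q-Gaussian function as the SVM kernel function [37, 38]. The Q-Gaussian function is obtained by replacing the exponential in the standard Gaussian function with the q-exponential, which maximizes the entropy under certain constraints, as in Eq. (4):

\[ K(x, y) = e_{q}\left( -\frac{\lVert x - y \rVert^{2}}{\sigma^{2}} \right) \tag{4} \]

where q is a real-valued parameter and \( e_{q} \) is the q-exponential function defined in [39] and given by Eq. (5):

\[ e_{q}(z) = \begin{cases} \left[ 1 + (1 - q) z \right]^{1/(1 - q)}, & q \ne 1 \text{ and } 1 + (1 - q) z > 0 \\ 0, & q \ne 1 \text{ and } 1 + (1 - q) z \le 0 \\ \exp(z), & q = 1 \end{cases} \tag{5} \]

For q ≠ 1, Eq. (4) can therefore be rewritten as Eq. (6):

\[ K(x, y) = \left[ 1 - (1 - q) \frac{\lVert x - y \rVert^{2}}{\sigma^{2}} \right]_{+}^{1/(1 - q)} \tag{6} \]

where \( [u]_{+} = \max(u, 0) \).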

After extensive experiments, \( \frac{1}{\sigma^{2}} \) was set to 0.5 and q to 1.5.
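A plain-Python sketch of the q-Gaussian kernel with the reported settings (q = 1.5, 1/σ² = 0.5). It assumes the kernel has the form \( K(x, y) = e_{q}(-\lVert x - y \rVert^{2}/\sigma^{2}) \), i.e. that the tuned quantity 1/σ² multiplies the squared Euclidean distance directly; if the original kernel uses a different scaling (for example 2σ²), only `inv_sigma2` changes. The function names are illustrative.

```python
import math


def q_exp(z: float, q: float) -> float:
    """Tsallis q-exponential e_q(z); reduces to exp(z) when q == 1."""
    if q == 1.0:
        return math.exp(z)
    base = 1.0 + (1.0 - q) * z
    return base ** (1.0 / (1.0 - q)) if base > 0.0 else 0.0


def q_gaussian_kernel(x, y, q: float = 1.5, inv_sigma2: float = 0.5) -> float:
    """Assumed form K(x, y) = e_q(-||x - y||^2 / sigma^2), with 1/sigma^2 = inv_sigma2."""
    sq_dist = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return q_exp(-inv_sigma2 * sq_dist, q)


def gram_matrix(samples, **kernel_args):
    """Kernel (Gram) matrix over a list of feature vectors."""
    return [[q_gaussian_kernel(a, b, **kernel_args) for b in samples] for a in samples]


# With q = 1.5 and 1/sigma^2 = 0.5 the kernel equals (1 + 0.25 * ||x - y||^2) ** -2.
print(q_gaussian_kernel([0.0, 0.0], [2.0, 0.0]))  # 0.25
print(gram_matrix([[0.0], [1.0]]))
```

For q > 1 the kernel decays polynomially rather than exponentially, so it has heavier tails than the Gaussian kernel. A Gram matrix like this can be passed to an SVM that accepts precomputed kernels (for example scikit-learn's `SVC(kernel="precomputed")`, which handles multiple classes with a one-vs-one scheme); whether QG-MSVM uses the same multi-class strategy is not stated here.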

3 Experimental results

In this study, three public arrhythmia ECG databases, which are available for download [19], are used for the experiments. We first describe the datasets and then discuss the classification results.

3.1 ECG datasets

We obtained the NSR (normal sinus rhythm) ECG signals from the MIT-BIH Arrhythmia Database (mitdb), the A-Fib (atrial fibrillation) and AF (atrial flutter) ECG signals from the MIT-BIH Atrial Fibrillation Database (afdb), and the PSVT (paroxysmal supraventricular tachycardia) ECG signals from the MIT-BIH Supraventricular Arrhythmia Database (svdb). In this work, we use Lead II ECG signals, because Lead II is the most commonly used lead and is often the best lead for interpreting the heart's rhythm.

Table 1 gives the details of the ECG signals used in this study. We used ECG segments of 10 s duration, and the total number of ECG segments is 111,901.
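A sketch of cutting records into 10-s segments. It assumes non-overlapping windows with the incomplete tail discarded (the windowing scheme is not stated here) and a record already loaded as a list of Lead II samples. The sampling rates are those of the PhysioNet databases (360 Hz for mitdb, 250 Hz for afdb, 128 Hz for svdb), so a 10-s segment contains a different number of samples in each database; the names are illustrative.

```python
# Sampling rates of the three PhysioNet databases (samples per second).
SAMPLING_RATE_HZ = {"mitdb": 360, "afdb": 250, "svdb": 128}


def segment(signal, fs: int, seconds: float = 10.0):
    """Split a 1-D signal into non-overlapping windows of `seconds`; drop the incomplete tail."""
    win = int(round(fs * seconds))
    return [signal[i:i + win] for i in range(0, len(signal) - win + 1, win)]


# Example: 35 s of a dummy mitdb record gives three complete 10-s segments.
fs = SAMPLING_RATE_HZ["mitdb"]
dummy = [0.0] * (35 * fs)
segs = segment(dummy, fs)
print(len(segs), len(segs[0]))  # 3 3600
```

If the network expects a fixed input length, segments from the 250-Hz and 128-Hz databases would need resampling or padding; how this was handled is not described in this section.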

3.2 Experiment configuration and results

The proposed algorithm was trained on a PC workstation with a 2.7-GHz CPU, 32 GB of memory, and a mid-range graphics processing unit (GPU). All methods were implemented in MATLAB R2016a on Microsoft Windows 10 Pro (64-bit). The ECG samples were randomly split into training (80%) and testing (20%) sets, and the experiment was repeated for five runs; after each training run, the CNN model was evaluated on the test set. The overall performance of the system is the average result over the five runs.
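The evaluation protocol above (random 80/20 split, five runs, averaged test performance) can be sketched in plain Python. `train_and_test` is a hypothetical callback standing in for training the VDCNN/MCCA/QG-MSVM pipeline and returning its test accuracy, and seeding each run with its index is an assumption made for reproducibility.

```python
import random
from statistics import mean


def random_split(n_samples, train_fraction, seed):
    """Shuffle sample indices and split them into disjoint train/test index lists."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    cut = int(round(train_fraction * n_samples))
    return idx[:cut], idx[cut:]


def evaluate_runs(n_samples, train_and_test, runs=5, train_fraction=0.8):
    """Repeat a random split `runs` times; return the mean test score and the per-run scores."""
    scores = []
    for run in range(runs):
        train_idx, test_idx = random_split(n_samples, train_fraction, seed=run)
        scores.append(train_and_test(train_idx, test_idx))
    return mean(scores), scores


# Hypothetical stand-in for "train the model, then measure test accuracy":
# it only checks the split and reports the test fraction, not a real accuracy.
def placeholder_train_and_test(train_idx, test_idx):
    assert not set(train_idx) & set(test_idx)  # train and test never overlap
    return len(test_idx) / (len(train_idx) + len(test_idx))


avg, per_run = evaluate_runs(1000, placeholder_train_and_test)
print(avg, len(per_run))  # 0.2 5
```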

During training, the ECG waveform is fed to the network and passed through a stack of convolutional (Conv.) layers with very small (3 × 3) filters. The network contains five max-pooling layers, each performed over a 2 × 2-pixel window with stride 2; each max-pooling layer follows some of the Conv. layers (not every Conv. layer is followed by max-pooling). The convolutional stack, whose depth differs between architectures, is followed by three fully connected (FC) layers: the first two have 4096 channels each, and the third performs C-way ECG classification and therefore has C channels, one for each class. The final layer is the softmax layer.
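To make this configuration concrete, the sketch below traces feature-map sizes and parameter counts through a VGG-style stack. The exact layer list and input size of the ECG network are not given in this section, so the VGG-16 layout of [30] (13 Conv. layers in five blocks, each block ending in 2 × 2 max-pooling) and a hypothetical 224 × 224 input with 3 channels are assumptions for illustration; `num_classes = 4` matches the four ECG classes.

```python
# VGG-16-style configuration: integers are 3x3 conv layers (output channels),
# "M" is 2x2 max-pooling with stride 2.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def trace(config, in_size, in_channels, fc_sizes):
    """Return (final spatial size, conv parameter count, FC parameter count)."""
    size, channels, conv_params = in_size, in_channels, 0
    for layer in config:
        if layer == "M":
            size //= 2  # 2x2 window with stride 2 halves each spatial dimension
        else:
            # 3x3 conv with padding 1 and stride 1 keeps the spatial size
            conv_params += 3 * 3 * channels * layer + layer  # weights + biases
            channels = layer
    fc_params, width = 0, size * size * channels  # flattened feature map
    for units in fc_sizes:
        fc_params += width * units + units
        width = units
    return size, conv_params, fc_params


num_classes = 4  # NSR, A-Fib, AF, PSVT
size, conv_p, fc_p = trace(VGG16_CONV, in_size=224, in_channels=3,
                           fc_sizes=[4096, 4096, num_classes])
print(size, conv_p, fc_p)  # 7 14714688 119562244
```

Under these assumptions most parameters sit in the first FC layer, even though all convolutions use 3 × 3 filters; the 4096-D outputs of the first two FC layers are the ones the text uses as the ECG feature descriptor.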

Figure 5 presents the confusion matrices for two cases: (a) the proposed algorithm without the fusion technique and (b) the proposed algorithm with the fusion technique. Figure 5 shows that, with fusion, all NSR, A-Fib, and AF segments are correctly classified and fewer than 2% of the ECG heartbeats are misclassified. Without fusion, all NSR and AF segments are correctly classified and fewer than 10% of the ECG heartbeats are misclassified; the errors occur in the detection of PSVT and A-Fib. Figure 6 compares the average accuracy of the proposed algorithm with and without the fusion technique.

Table 2 shows the average classification performance for each class, and Table 3 summarizes the overall average classification performance over all classes (accuracy, equal error rate (EER), sensitivity, and specificity).
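Per-class figures of this kind can be derived from a confusion matrix such as those in Fig. 5. The plain-Python sketch below computes overall accuracy and one-vs-rest sensitivity and specificity; the confusion-matrix counts are made up for illustration and are not the paper's results. The text does not say how per-class values are averaged into the overall figures (macro-averaging is a common choice), and EER is omitted because it needs classifier scores rather than hard predictions.

```python
def per_class_metrics(cm):
    """One-vs-rest sensitivity and specificity for each class of a square confusion matrix.

    cm[i][j] = number of samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(map(sum, cm))
    metrics = []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp  # other classes predicted as c
        tn = total - tp - fn - fp
        metrics.append({"sensitivity": tp / (tp + fn), "specificity": tn / (tn + fp)})
    return metrics


def overall_accuracy(cm):
    """Fraction of samples on the diagonal of the confusion matrix."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


# Hypothetical 4-class counts (rows/columns: NSR, A-Fib, AF, PSVT), not results from the paper.
cm = [[50, 0, 0, 0],
      [0, 48, 0, 2],
      [0, 0, 50, 0],
      [0, 3, 0, 47]]
print(overall_accuracy(cm))  # 0.975
for name, m in zip(["NSR", "A-Fib", "AF", "PSVT"], per_class_metrics(cm)):
    print(name, round(m["sensitivity"], 3), round(m["specificity"], 3))
```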

Figure 7 shows how the accuracy of the classifier varies across the five runs, with and without fusion.

The classification accuracy of the proposed algorithm is compared with several state-of-the-art methods and the results are shown in Table 4.

4 Discussion

The proposed very deep learning structure for ECG signal detection is motivated by the application of such structures to image analysis and classification. Several CNN-based studies have been carried out for the automated detection of abnormal ECG signals. Acharya et al. [42] proposed a tool for the automated differentiation of shockable and non-shockable ventricular arrhythmias from 2-s ECG segments, and obtained an accuracy of 93.18% in classifying the two types of ECG signals. In [43], Acharya et al. proposed a CNN-based approach for the automated detection of normal and myocardial infarction (MI) ECG beats, achieving accuracies of 93.53% and 95.22% using ECG beats with noise and with noise removed, respectively. Acharya et al. [15] developed an eleven-layer CNN structure to classify ECG signals into four classes, obtaining 92.50% accuracy with 2-s ECG segments and 94.90% accuracy with 5-s ECG segments. Luo et al. [41] proposed a patient-specific heartbeat classification framework using a time–frequency representation and a deep neural network (DNN) classifier to classify heartbeats into four classes, and reported an overall accuracy of 97.5%. Unlike previous studies that extract features after pre-processing or segmenting the ECG signals, as in [44], we use a very deep convolutional neural network to extract very deep features directly from the original ECG, which makes the proposed system suitable for real-time arrhythmia detection.

The results indicate that our proposed algorithm is more robust than the state-of-the-art works listed in Table 4. Most of the previous works used relatively large filters in the first convolution layers, which increases the number of parameters and leaves fewer ReLU non-linearities, resulting in a less discriminative decision function. In our study, we address these problems by using very small receptive fields throughout the whole network. In addition, our proposed algorithm does not require any pre-processing, whereas most of the works reported in Table 4 used pre-processing techniques. Our results are comparable to those of the previous works in Table 4, which indicates that filtering or other pre-processing is not necessary for arrhythmia classification. In this paper, the outputs of the first and second fully connected layers of the proposed network are used as the feature descriptor of the ECG signal for arrhythmia detection. Figure 8 illustrates the selection criterion: the two layers that achieve the highest accuracy among all layers of the proposed model are selected.

To make our work more robust and efficient, we proposed a fusion technique named MCCA, which increases the accuracy by about 2.6 percentage points (from 94.74% to 97.37%) compared with the proposed algorithm without fusion. With fusion, the sensitivity is 98.75% and the specificity is 99.22%, which is comparable to the previous works in Table 4.

The computational cost of the proposed system is relatively low: the algorithm runs on a computer with a 2.7-GHz CPU and 32 GB of RAM. Moreover, the proposed algorithm uses smaller filters (3 × 3) than other methods, so the implementation is economical and requires only simple hardware.

To the best of our knowledge, this is the first study to implement a very deep CNN for the automated detection of NSR, A-Fib, AF, and PSVT ECG signals without any pre-processing.

Based on the results, the proposed algorithm can be summarized as follows:

1. It can be argued that the proposed algorithm is robust, reliable, and efficient compared with other state-of-the-art ECG classification algorithms.

2. The proposed algorithm overcomes several problems that confronted most previous algorithms, such as a large number of parameters and the effect of noise.

3. To take advantage of our very deep network, we work directly on the two-dimensional ECG signal instead of a one-dimensional signal, which always needs a segmentation process.

4. The computational cost of our algorithm is lower than that of other CNN-based algorithms.

5. The proposed algorithm can serve as a tool to help clinicians confirm their diagnosis.

Finally, we stress that a novel ECG algorithm based on a very deep CNN for classifying the four classes (NSR, A-Fib, AF, and PSVT) has been proposed and experimentally validated.

5 Conclusion

In this work, we propose a very deep CNN with a QG-MSVM classifier to classify four classes (NSR, A-Fib, AF, and PSVT) using 111,901 ECG segments without any pre-processing. Furthermore, we propose MCCA for feature-level fusion, which combines the selective adaptive layers' features of the ECG to improve performance and speed up training. The proposed algorithm achieved an accuracy of 94.74% without fusion, and the accuracy improved to 97.37% with MCCA. Experimental results show that the proposed algorithm is more accurate than state-of-the-art methods. Hence, our algorithm has the potential to be implemented in clinical settings, where it can serve as a tool to help clinicians confirm their diagnosis. In future work, we intend to extend the proposed model to detect more abnormal cases.

References

Arrhythmia Irregular Heartbeat Center (2017) Heart disease and abnormal heart rhythm (arrhythmia). https://www.medicinenet.com/arrhythmia_irregular_heartbeat/article.htm. Accessed 12 Nov 2017

National Heart, Lung and Blood Institute (2017) How is heart failure diagnosed? https://www.nhlbi.nih.gov/health/health-topics/topics/hf/diagnosis. Accessed 12 Nov 2017

Atrial Fibrillation ECG Review (2017) https://www.healio.com/cardiology/learn-the-heart/ecg-review/ecg-topic-reviews-and-criteria/atrial-fibrillation-review. Accessed 12 Nov 2017

Healthline (2017) Atrial flutter. https://www.healthline.com/health/heart-disease/atrial-flutter#overview1. Accessed 12 Nov 2017

Medications for Paroxysmal Supraventricular Tachycardia (2017) https://www.drugs.com/condition/paroxysmal-supraventricular-tachycardia.html. Accessed 12 Nov 2017

Sahoo S et al (2016) De-noising of ECG signal and QRS detection using Hilbert transform and adaptive thresholding. Procedia Technol 25:68–75

De Albuquerque VHC et al (2016) Robust automated cardiac arrhythmia detection in ECG beat signals. Neural Comput Appl 1:1–15

Acharya UR et al (2016) Application of empirical mode decomposition (EMD) for automated identification of congestive heart failure using heart rate signals. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2612-1

Ebrahimzadeh A et al (2016) Classification of ECG signals using hermite functions and MLP neural networks. J AI Data Min 4(1):55–65

Alfaro-Ponce M et al (2017) Automatic detection of electrocardiographic arrhythmias by parallel continuous neural networks implemented in FPGA. Neural Comput Appl. https://doi.org/10.1007/s00521-017-3051-3

Andersen RS et al (2017) A novel approach for automatic detection of atrial fibrillation based on inter beat intervals and support vector machine. In: 2017 39th annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 2039–2042

Garcia G et al (2017) Inter-patient ECG heartbeat classification with temporal VCG optimized by PSO. Sci Rep 7(1):10543

Desai U, Martis RJ, Acharya UR, Nayak CG, Seshikala G, Ranjan SK (2016) Diagnosis of multiclass tachycardia beats using recurrence quantification analysis and ensemble classifiers. J Mech Med Biol 16(1):1640005

Acharya UR, Fujita H, Adam H, Oh SL, Tan JH, Sudarshan VK, Koh JEW (2016) Automated characterization of Arrhythmias using nonlinear features from tachycardia ECG beats. In: IEEE international conference on systems, man, and cybernetics

Acharya UR et al (2017) Automated detection of arrhythmias using different intervals of tachycardia ECG segments with convolutional neural network. Inf Sci 405:81–90

Singh BN, Tiwari AK (2006) Optimal selection of wavelet basis function applied to ECG signal denoising. Digit Signal Process A Rev J 16(3):275–287

Zubair M, Kim J, Yoon CW (2016) An automated ECG beat classification system using convolutional neural networks. In: IEEE 6th international conference on IT convergence and security

Acharya UR et al (2017) A deep convolutional neural network model to classify heartbeats. Comput Biol Med 89:389–396

Goldberger AL et al (2000) PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 101(23):e215–e220

Acharya UR et al (2017) Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput Biol Med. https://doi.org/10.1016/j.compbiomed.2017.09.017

An X et al (2014) A deep learning method for classification of EEG data based on motor imagery. ICIC 8590:203–210

Sun Y et al (2014) Deep learning face representation from predicting 10,000 classes. In: 2014 IEEE conference on computer vision and pattern recognition, pp 1891–1898

Hinton G et al (2012) Deep neural networks for acoustic modeling in speech recognition: the shared views of four research groups. IEEE Signal Process Mag 29(6):82–97

Wang X et al (2017) CSI-based fingerprinting for indoor localization: a deep learning approach. IEEE Trans Veh Technol 66:763–776

Burlina P et al (2017) Comparing humans and deep learning performance for grading AMD: a study in using universal deep features and transfer learning for automated AMD analysis. Comput Biol Med 82:80–86

Greenspan H et al (2016) Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans Med Imaging 35:1153–1159

Gargeya R, Leng T (2017) Automated identification of diabetic retinopathy using deep learning. Ophthalmology 124(7):962–969

Ravì D et al (2017) Deep learning for health informatics. IEEE J Biomed Health Inf 21:4–21

Havaei M et al (2017) Brain tumor segmentation with deep neural networks. Med Image Anal 35:18–31

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: Proceedings of international conference learning represent. (ICLR), San Diego, CA, pp 1–14

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: NIPS, pp 1106–1114

Sermanet P, Eigen D, Zhang X, Mathieu M, Fergus R, LeCun Y (2014) OverFeat: integrated recognition, localization and detection using convolutional networks. In: Proceedings of ICLR

Nogueira RF, de Alencar Lotufo R, Machado RC (2016) Fingerprint liveness detection using convolutional neural networks. IEEE Trans Inf Forensics Secur 11(6):1206–1213

Rodriguez R et al (2015) Feature extraction of electrocardiogram signals by applying adaptive threshold and principal component analysis. J Appl Res Technol 13(2):261–269

Amrani M (2017) Deep feature extraction and combination for synthetic aperture radar target classification. J Appl Remote Sens 11(4):1

Haghighat M, Abdel-Mottaleb M, Alhalabi W (2016) Fully automatic face normalization and single sample face recognition in unconstrained environments. Expert Syst Appl 47:23–34

Hammad M, Wang K (2017) Fingerprint classification based on a Q-Gaussian multiclass support vector machine. In: Proceedings of the 2017 international conference on biometrics engineering and application. ACM

Silva L et al (2010) Reconstruction of multivariate signals using q-Gaussian radial basis function network. IEEE Comput Cardiol 2010:465–468

Tsallis C (1994) What are the numbers that experiments provide? Centro Brasileiro de Pesquisas Físicas, Rio de Janeiro

Isin A, Ozdalili S (2017) Cardiac arrhythmia detection using deep learning. Procedia Comput Sci 120:268–275

Luo K et al (2017) Patient-specific deep architectural model for ECG classification. J Healthc Eng 2017:1–13

Acharya UR et al (2018) Automated identification of shockable and non-shockable life-threatening ventricular arrhythmias using convolutional neural network. Future Gener Comp Syst 79:952–959

Acharya UR et al (2017) Application of deep convolutional neural network for automated detection of myocardial infarction using ECG signals. Inf Sci 415:190–198

Jia F, Lei Y, Guo L, Lin J, Xing S (2017) A neural network constructed by deep learning technique and its application to intelligent fault diagnosis of machines. Neurocomputing 248:98–109

Acknowledgements

This work was supported by the MOE–Microsoft Key Laboratory of Natural Language Processing and Speech, Harbin Institute of Technology, the Major State Basic Research Development Program of China (973 Program 2015CB351804) and the National Natural Science Foundation of China under Grant Nos. 61572155, 61672188, and 61272386.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Amrani, M., Hammad, M., Jiang, F. et al. Very deep feature extraction and fusion for arrhythmias detection. Neural Comput & Applic 30, 2047–2057 (2018). https://doi.org/10.1007/s00521-018-3616-9


DOI: https://doi.org/10.1007/s00521-018-3616-9