Abstract

The extreme learning machine (ELM) is a recently proposed approach to single hidden layer feed-forward networks that uses a much simpler training method. While conventional kernel-based classifiers rely on a single kernel, in practice it is often desirable to base classifiers on combinations of multiple kernels. In this paper, we address multiple-kernel learning (MKL) for ELM by formulating it as a semi-infinite linear program (SILP). We further extend this idea by integrating it with MKL techniques. The kernel function in this ELM formulation no longer needs to be fixed but can be learned automatically as a combination of multiple kernels. Two formulations of multiple-kernel classifiers are proposed. The first is based on a convex combination of the given base kernels, while the second uses a convex combination of the so-called equivalent kernels. Empirically, the second formulation is particularly competitive. Experiments on a large number of toy and real-world data sets (including a high-magnification sampling rate image data set) show that the resulting classifier is fast and accurate and can be trained easily by simply modifying the linear program.

1 Introduction

A principled approach to the automatic selection of kernels is to learn a linear combination \(K = \sum\nolimits_{j = 1}^{m} {\mu_{j} } K_{j}\) with mixing coefficients μ together with the model parameters. This framework, named multiple-kernel learning (MKL), was first introduced in [1], where two kinds of constraints on μ and K were considered, leading to semi-definite programming and QCQP approaches, respectively. For appropriately designed sub-kernels \(K_{j}\), the optimized combination coefficients can then be used to understand which features of the examples are important for discrimination: if an accurate classification can be obtained with a sparse weighting \(\mu_{j}\), the resulting decision function is easy to interpret. Intuitively, sparseness of μ makes sense when the expected number of meaningful kernels is small. Requiring that only a small number of features contribute to the final kernel implicitly assumes that most of the features to be selected are equally informative. In other words, sparseness is appropriate when the kernels already contain a few good features that alone capture almost all of the characteristic traits of the problem. This also implies that the features are highly redundant. MKL is now generally recognized as a powerful tool for various machine learning problems [2, 3].

The extreme learning machine (ELM) was proposed by Huang et al. [4, 5]. ELM is a type of single hidden layer feed-forward neural network (SLFN) whose core is a fixed hidden layer containing a large number of nonlinear nodes. The input weights and hidden layer biases of ELM are chosen randomly beforehand, and only the output weights need to be calculated. The only factor that users need to set is the size of ELM (the number of hidden nodes) [6]. Benefiting from its simple structure and effective training method, ELM has been used successfully in many practical tasks such as prediction, fault diagnosis, recognition, classification, and signal processing.

Although ELM has achieved notable success, there is still room for improvement. Some researchers have focused on optimizing the learning algorithm of ELM. Han et al. [7] encoded a priori information to improve the function approximation of ELM. Kim et al. [8] introduced a variable projection method to reduce the dimension of the parameter space. Zhu et al. [9] used a differential evolutionary algorithm to select the input weights of ELM.

Other researchers have concentrated on optimizing the structure of ELM. Wang et al. [10] made a proper selection of the input weights and biases of ELM in order to improve its performance. Li et al. [11] proposed a structure-adjustable online ELM learning method, which can adjust the number of hidden layer RBF nodes. Huang et al. [12, 13] proposed an incremental structure ELM, which increases the number of hidden nodes gradually. Meanwhile, another incremental approach, referred to as the error-minimized extreme learning machine (EM-ELM), was proposed by Feng et al. [14]. All these incremental ELMs start from a small hidden layer and add random hidden nodes to it. During the growth of the network, the output weights are updated incrementally. An alternative way to optimize the structure of ELM is to train an ELM that is larger than necessary and then prune the unnecessary nodes during learning. A pruned ELM (PELM) was proposed by Rong et al. [15, 16] for classification problems. Yoan et al. [17] proposed an optimally pruned extreme learning machine (OP-ELM) methodology. There are also other attempts to optimize the structure of ELM, such as CS-ELM [18] proposed by Lan et al., which uses a subset model selection method. Zong et al. [29] put forward the weighted extreme learning machine for imbalance learning. The kernel trick applied to ELM was introduced in previous work [30].

Lanckriet et al. [1] pioneered the learning of parameterized combinations of kernels, in which the optimal kernel is obtained as a linear combination of pre-specified kernels. This procedure, known in the literature as multiple-kernel learning (MKL), allows kernels to be chosen more automatically from the data and enhances the autonomy of the machine learning process [27]. MKL is also particularly valuable in applications [32]: by learning a linear combination of kernel matrices, MKL offers the advantage of integrating heterogeneous data from multiple sources such as vectors, strings, trees, and graphs. Recently, MKL has been applied successfully to combine various heterogeneous data sources in practice [19]. Compared with the existing L∞-norm MKL method, the L2-norm MKL can lead to non-sparse solutions and offers advantages when applied to biomedical problems. Ye et al. [20] showed that multiple-kernel learning for LS-SVM can be formulated as an SDP problem, which has much lower computational complexity than that of C-SVM. Sonnenburg et al. [22] applied a semi-infinite linear programming (SILP) strategy that reuses SVM implementations to solve the subproblems inside the MKL optimization more efficiently, which made MKL applicable to large-scale data sets. Yang et al. [26] used the elastic net regularizer on the kernel combination coefficients as a constraint for MKL. Gu et al. [28] used multi-kernel regression to learn the map between the space of high-magnification sampling rate image patches and the space of blurred high-magnification sampling rate image patches, the latter being the interpolation results generated from the corresponding low-resolution images. Liu et al. [33] designed sparse, non-sparse, and radius-incorporated MK-ELM algorithms.

We address multiple-kernel learning for ELM by formulating it as a semi-infinite linear program (SILP). In addition, the proposed algorithm optimizes the regularization parameter in a unified framework along with the kernels, which makes the learning system more automatic. Empirical results on benchmark data sets show that multiple-kernel learning for ELM (MKL-ELM) performs competitively compared with the traditional ELM algorithm.

2 Kernelized extreme learning machine

We first briefly review the ELM proposed in [5, 6, 31]. The essence of ELM is that its hidden layer need not be tuned. The output function of ELM for generalized SLFNs is
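The displayed equation is absent from this copy. Judging from the symbol definitions that follow and from the standard ELM form in [5], it presumably reads as below; this is a reconstruction, with \(g(\cdot)\) the activation function and L the number of hidden nodes.

```latex
\[
f_{L}(x_{j}) = \sum_{i=1}^{L} \beta_{i}\, g(w_{i} \cdot x_{j} + b_{i}), \qquad j = 1, \ldots, N, \tag{1}
\]
```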

where \(w_{i} \in R^{n}\) is the weight vector connecting the input nodes and the ith hidden node, \(b_{i} \in R\) is the bias of the ith hidden node, \(\beta_{i} \in R\) is the weight connecting the ith hidden node and the output node, and \(f_{L} (x) \in R\) is the output of the SLFN. Here \(w_{i} \cdot x_{j}\) denotes the inner product of \(w_{i}\) and \(x_{j}\); \(w_{i}\) and \(b_{i}\) are the learning parameters of the hidden nodes, which are randomly chosen before learning.

If a standard SLFN with at most \(\tilde{N}\) hidden nodes can approximate these N samples with zero error, then there exist \(\beta_{i}\), \(w_{i}\), and \(b_{i}\) such that
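The display for Eq. (2) did not survive extraction. Written out from the zero-error condition just stated, with \(t_{j}\) the target of sample \(x_{j}\) (the entries of T defined below), it would take the following form; treat it as a reconstruction rather than the authors' typeset equation.

```latex
\[
\sum_{i=1}^{\tilde{N}} \beta_{i}\, g(w_{i} \cdot x_{j} + b_{i}) = t_{j}, \qquad j = 1, \ldots, N. \tag{2}
\]
```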

Equation (2) can be written compactly as \({\mathbf{H}}\beta = {\mathbf{T}}. \quad (3)\)

Then, Eq. (3) becomes a linear system, and its smallest norm least squares solution is \(\beta = {\mathbf{H}}^{\dag } {\mathbf{T}},\)

where \({\mathbf{H}}^{\dag }\) is the Moore–Penrose generalized inverse [4] of the hidden layer output matrix H.

\({\mathbf{T}} = [t_{1} , \ldots ,t_{N} ]^{T}\), and \(\beta = [\beta_{1} ,\beta_{2} , \ldots ,\beta_{L} ]^{T} .\)

H is called the hidden layer output matrix of the network [4, 5]; the ith column of H is the ith hidden node's output vector with respect to the inputs \(x_{1} ,x_{2} , \ldots ,x_{N}\), and the jth row of H is the output vector of the hidden layer with respect to the input \(x_{j}\).

It has been proven in [5, 6] that SLFNs with randomly generated hidden nodes have the universal approximation capability, so the hidden nodes can be generated randomly at the beginning of learning. As introduced in [6], one of the methods to calculate the Moore–Penrose generalized inverse of a matrix is the orthogonal projection method: \({\mathbf{H}}^{\dag } = {\mathbf{H}}^{T} ({\mathbf{HH}}^{T} )^{ - 1} .\)

According to the ridge regression theory [25], one can add a positive value to the diagonal of \({\mathbf{HH}}^{T}\); the resultant solution is more stable and tends to have better generalization performance:
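The regularized solution itself is missing here. In [6] it is written as follows, where C is the regularization parameter that reappears in Sect. 3.2; the block is a reconstruction obtained by adding \({\mathbf{I}}/C\) to \({\mathbf{HH}}^{T}\) in the orthogonal projection formula above.

```latex
\[
\beta = {\mathbf{H}}^{T} \left( \frac{{\mathbf{I}}}{C} + {\mathbf{HH}}^{T} \right)^{-1} {\mathbf{T}}.
\]
```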

The feature mapping \(h(x)\) is usually known to users in ELM. However, if a feature mapping \(h(x)\) is unknown to users, a kernel matrix for ELM can be defined as follows [6]:
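The definition is not reproduced in this copy. Following [6], and assuming the symbol \(\Omega_{\text{ELM}}\) used there, the kernel matrix would be defined through inner products of the hidden layer feature mapping:

```latex
\[
\Omega_{\text{ELM}} = {\mathbf{HH}}^{T}, \qquad
\left( \Omega_{\text{ELM}} \right)_{i,j} = h(x_{i}) \cdot h(x_{j}) = K(x_{i}, x_{j}).
\]
```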

Thus, the output function of kernel ELM classifier can be written compactly as:
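The closed form is missing from this copy; in [6] the kernel ELM output is \(f(x) = [K(x,x_{1}), \ldots ,K(x,x_{N})]\,({\mathbf{I}}/C + \Omega_{\text{ELM}})^{-1} {\mathbf{T}}\). The following is a minimal NumPy sketch under that assumption. The function names, the toy data, and the choices \(\sigma^{2} = 20\) and C = 10 are illustrative and not taken from the paper.

```python
import numpy as np

def gaussian_kernel(A, B, sigma2):
    """k(x, y) = exp(-||x - y||^2 / sigma2) for every row of A against every row of B."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / sigma2)

def fit_kernel_elm(K_train, T, C):
    """Solve (I / C + Omega) alpha = T for the coefficient vector alpha."""
    n = K_train.shape[0]
    return np.linalg.solve(np.eye(n) / C + K_train, T)

def predict_kernel_elm(K_new_train, alpha):
    """f(x) = [K(x, x_1), ..., K(x, x_N)] alpha, one row of K_new_train per query point."""
    return K_new_train @ alpha

# Hypothetical toy data: two well-separated Gaussian blobs with labels -1 / +1.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-3.0, 1.0, size=(20, 2)),
               rng.normal(3.0, 1.0, size=(20, 2))])
T = np.concatenate([-np.ones(20), np.ones(20)])

alpha = fit_kernel_elm(gaussian_kernel(X, X, sigma2=20.0), T, C=10.0)

# Classify the two blob centres by the sign of the output function.
X_new = np.array([[-3.0, -3.0], [3.0, 3.0]])
scores = predict_kernel_elm(gaussian_kernel(X_new, X, sigma2=20.0), alpha)
labels = np.sign(scores)
```

Solving the linear system with `np.linalg.solve` avoids forming the inverse explicitly; the term \({\mathbf{I}}/C\) keeps the system well conditioned.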

ELM aims to minimize the training error as well as the norm of the output weights [6].

In fact, according to (8), the classification problem for ELM with multi-output nodes can be formulated as:
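The optimization problem is not shown in this copy. In [6] the multi-output ELM classification problem is stated as below; this reconstruction assumes the same notation, with \(h(x_{i})\) the hidden layer output for sample \(x_{i}\) and C the regularization parameter.

```latex
\[
\begin{aligned}
\min_{\beta,\, \xi} \quad & \frac{1}{2} \left\| \beta \right\|^{2} + \frac{C}{2} \sum_{i=1}^{N} \left\| \xi_{i} \right\|^{2} \\
\text{s.t.} \quad & h(x_{i})\, \beta = t_{i}^{T} - \xi_{i}^{T}, \qquad i = 1, \ldots, N,
\end{aligned}
\]
```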

where \({\varvec{\upxi}}_{i} = [\xi_{i,1} , \ldots ,\xi_{i,m} ]^{T}\) is the training error vector of the m output nodes with respect to the training sample \(x_{i}\). Based on the KKT conditions, training ELM is equivalent to solving the following dual optimization problem:

Algorithm 1: Given a training set \(\left\{ {(x_{i} ,t_{i} )} \right\}_{i = 1}^{N} \subset R^{n} \times R\), activation function \(g( \cdot )\), and the hidden node number L:

- Step 1: Randomly assign input weight \(w_{i}\) and bias \(b_{i}\), \(i = 1, \ldots ,L.\)
- Step 2: Calculate the hidden layer output matrix H.
- Step 3: Calculate the output weight β: \(\beta = {\mathbf{H}}^{\dag } {\mathbf{T}}.\)
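The three steps of Algorithm 1 can be sketched in NumPy as follows. This is an illustration rather than the authors' implementation: the sigmoid activation, the uniform sampling range for \(w_{i}\) and \(b_{i}\), and the toy labels are assumptions.

```python
import numpy as np

def hidden_output(X, W, b):
    """Hidden layer output matrix H (N x L) with a sigmoid activation g."""
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def train_elm(X, T, n_hidden, rng):
    # Step 1: randomly assign input weights w_i and biases b_i.
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    # Step 2: calculate the hidden layer output matrix H.
    H = hidden_output(X, W, b)
    # Step 3: output weights beta = pinv(H) T (minimum norm least squares solution).
    beta = np.linalg.pinv(H) @ T
    return W, b, beta

def predict_elm(X, W, b, beta):
    return hidden_output(X, W, b) @ beta

# Hypothetical toy problem: XOR-like labels in {-1, +1}.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 2))
T = np.sign(X[:, 0] * X[:, 1])

W, b, beta = train_elm(X, T, n_hidden=10, rng=rng)
train_accuracy = np.mean(np.sign(predict_elm(X, W, b, beta)) == T)
```

Only Step 3 involves any fitting, which is why training reduces to a single pseudoinverse computation.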

3 Multiple-kernel-learning algorithms for extreme learning machine

3.1 Minimum norm least squares (LS) solution of SLFNs

Contrary to the common understanding that all the parameters of SLFNs need to be adjusted, the input weights \(w_{i}\) and the hidden layer biases \(b_{i}\) do not in fact need to be tuned, and the hidden layer output matrix H can remain unchanged once random values have been assigned to these parameters at the beginning of learning. For fixed input weights \(w_{i}\) and hidden layer biases \(b_{i}\), as seen from Eq. (11), training an SLFN is simply equivalent to finding a least squares solution \(\hat{\beta }\) of the linear system \({\mathbf{H}}\beta = {\mathbf{T}}\): \(\left\| {{\mathbf{H}}\hat{\beta } - {\mathbf{T}}} \right\| = \min_{\beta } \left\| {{\mathbf{H}}\beta - {\mathbf{T}}} \right\|.\)

If the number \(\tilde{N}\) of hidden nodes is equal to the number N of distinct training samples, \(\tilde{N} = N\), the matrix H is square and invertible when the input weight vectors \(w_{i}\) and the hidden biases \(b_{i}\) are randomly chosen, and SLFNs can approximate these training samples with zero error.

However, in most cases the number of hidden nodes is much smaller than the number of distinct training samples, \(\tilde{N} \ll N\), so H is a non-square matrix, and there may not exist \(w_{i}\), \(b_{i}\), \(\beta_{i}\) (\(i = 1, \ldots ,\tilde{N}\)) such that \({\mathbf{H}}\beta = {\mathbf{T}}.\) The smallest norm least squares solution of the above linear system is \(\hat{\beta } = {\mathbf{H}}^{\dag } {\mathbf{T}},\)

where \({\mathbf{H}}^{\dag }\) is the Moore–Penrose generalized inverse of matrix H [21].

3.2 QCQP formulation

In the scenario of ELM, the duality gap of Eq. (9) and its dual program is zero since the Slater constraint qualification holds, and we get

The Lagrangian dual yields:

The Lagrangian function is given as

To obtain the dual, the derivatives of the Lagrangian function with respect to the primal variables α have to vanish

According to Eq. (18), Eq. (16) can be formulated as:

We get its dual program:

since the Slater constraint qualification holds. We consider the parameterization \(K = \sum\nolimits_{i = 1}^{p} {\mu_{i} K_{i} }\) with the additional affine constraint \(\sum\nolimits_{i = 1}^{p} {\mu_{i} } = 1\) and the positive semi-definiteness constraint \(K \succeq 0\). Adding these constraints to Eq. (20) and minimizing with respect to μ gives:

i.e.,

When the weights \(\mu_{i}\) are constrained to be nonnegative and the \(K_{i}\) are positive semi-definite, the constraint \(\sum\nolimits_{i = 1}^{p} {\mu_{i} K_{i} } \succeq 0\) is satisfied automatically. In that case, we again consider the parameterization \(K = \sum\nolimits_{i = 1}^{p} {\mu_{i} K_{i} }\) with the constraints \(\sum\nolimits_{i = 1}^{p} {\mu_{i} } = 1\) and \(\mu_{i} \ge 0\). Substituting this into Eq. (20) and minimizing with respect to μ gives the following formulation:

where \(\mu^{T} {\mathbf{1}}^{p} = 1\) means \(\sum\nolimits_{i = 1}^{p} {\mu_{i} = 1}\) (p is the number of kernels). Note that the order of the minimization and the maximization is interchanged in the first equation. This interchange is valid because the objective is convex in μ and concave in α, the minimization problem is strictly feasible in μ, and the maximization problem is strictly feasible in α [1]. Equation (23) can be reformulated as the following QCQP

Such a QCQP problem can be solved efficiently with general-purpose optimization software packages, like MOSEK [23], which solve the primal and dual problems simultaneously using the interior point methods. The obtained dual variables can be used to fix the optimal kernel coefficients.

In some cases, the performance of ELM depends critically on the values of C. We show that the formulations (22) and (24) can be reformulated slightly, and this new formulation leads naturally to the estimation of the regularization parameter C in a joint framework. As can be seen from Eq. (22), the identity matrix appears in exactly the same form as kernel matrices; we can treat the regularization parameter as one of the coefficients for the kernel matrix and optimize them simultaneously. This leads to the following formulation:

where \(\mu_{0} = \tfrac{1}{C}\) and \(K_{0} = I\). To optimize the regularization parameter in Eq. (24), we modify Eq. (23) slightly:

where \(K_{0}\) stands for the identity matrix I and \(\mu_{0}\) denotes the reciprocal of the regularization parameter. Replacing \(\alpha^{T} K_{i} \alpha\) by t and moving it to the constraints, we get the following quadratically constrained linear program:

We will show that the joint optimization of C works better in most cases in comparison with the approach of pre-specifying C.
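The idea of treating the regularization term as an extra kernel, with \(K_{0} = I\) and \(\mu_{0} = 1/C\), can be checked numerically. The sketch below only illustrates the algebraic identity \({\mathbf{I}}/C + \sum\nolimits_{i = 1}^{p} {\mu_{i} K_{i} } = \sum\nolimits_{i = 0}^{p} {\mu_{i} K_{i} }\); it does not optimize μ, and the data, the two base kernels, the weights, and the value of C are arbitrary assumptions.

```python
import numpy as np

def combine_kernels(kernels, weights):
    """Weighted sum of kernel matrices: sum_i mu_i K_i."""
    return sum(w * K for w, K in zip(weights, kernels))

# Hypothetical data and two base kernels.
rng = np.random.default_rng(1)
X = rng.normal(size=(15, 3))
t = rng.choice([-1.0, 1.0], size=15)
K1 = X @ X.T                    # linear kernel
K2 = (1.0 + X @ X.T) ** 2       # polynomial kernel with d = 2
mu = np.array([0.5, 0.5])       # fixed kernel weights, for illustration only
C = 4.0

# Conventional view: pre-specified C added to the diagonal of the combined kernel.
K = combine_kernels([K1, K2], mu)
alpha_fixed = np.linalg.solve(np.eye(15) / C + K, t)

# Joint view: the identity matrix is kernel K_0 with coefficient mu_0 = 1 / C.
kernels_aug = [np.eye(15), K1, K2]
mu_aug = np.concatenate(([1.0 / C], mu))
alpha_joint = np.linalg.solve(combine_kernels(kernels_aug, mu_aug), t)

same_solution = np.allclose(alpha_fixed, alpha_joint)
```

Because both views lead to the same linear system, any procedure that learns the kernel coefficients can learn \(\mu_{0}\), and hence C, at the same time.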

3.3 SILP formulation

In this section, we show how these problems can be resolved by considering a novel dual formulation of the QCQP as a semi-infinite linear programming (SILP) problem. In the following formulation, \(K_{j}\) represents the jth kernel matrix in a set of p + 1 kernels, with the (p + 1)th kernel being the identity matrix. The MKL-ELM is formulated as

The MKL-ELM is presented in Algorithm 2.

Step 1 optimizes θ as a linear program. Since the regularization coefficient is automatically estimated as \(\theta_{p+1}\), Step 3 simplifies to a linear problem as

4 Empirical results

In this section, we perform classification experiments on the following data sets: Banana, Breast Cancer, Titanic, Waveform, German, Image, Heart, Diabetes, Ringnorm, Thyroid, Twonorm, Flare Solar, and Splice (Table 1).

In the experiments, we will compare the following:

1. SK-ELM: single-kernel ELM
2. MK-ELM(MEB) [33]: the radius-incorporated multiple-kernel ELM

4.1 Multiple different kinds of kernels

Here, four different kernel functions, namely the polynomial kernel \(k_{1} (x,y) = (1 + x^{T} y)^{d}\), the Gaussian kernel \(k_{2} (x,y) = \exp \left( { - \left\| {x - y} \right\|^{2} /\sigma^{2} } \right)\), the linear kernel \(k_{3} (x,y) = x^{T} y\), and the Laplacian kernel \(k_{4} (x,y) = \exp \left( { - \left\| {x - y} \right\|/p} \right)\), are selected to construct the multiple kernel \(k = \sum\nolimits_{i = 1}^{4} {\mu_{i} k_{i} }\), where the kernel parameters are fixed as d = 2, \(\sigma^{2} = 20\), and p = 5 before the experiments. All kernel matrices \(K_{i}\) are normalized by replacing \(K_{i} (m,n)\) with \(K_{i} (m,n)/\sqrt {K_{i} (m,m)K_{i} (n,n)}\) so that each has a unit diagonal [1, 24]. Table 2 gives the results of the single-kernel ELM experiments on the Image, Ringnorm, and other data sets. The criteria TRA (%) and TSA (%) denote the accuracy on the training set and on the testing set, respectively. As can be seen from Table 2, the proposed algorithm is promising. Most of the testing rates are high, basically attaining 90 % in the Laplacian kernel cases. A testing rate of about 88.66 % is obtained for the Ringnorm set in the ELM cases, while for the Image data set it exceeds 90.64 %. On the one hand, the comparisons in Table 2 suggest that the MKL-ELM has a higher (or at least the same) testing rate than the single-kernel ELM; on the other hand, the former is more time-consuming than the latter in parameter selection. The MKL-ELM therefore again appears to be a more promising tool than the single-kernel one.
Furthermore, most experimental results indicate that the proposed algorithm has higher accuracy and better stability than the MK-ELM (MEB), SK-ELM, and ELM on the multi-class problems, as can be observed from the multiple-kernel experimental results in Table 2. Moreover, the proposed algorithm is less time-consuming than the MK-ELM (MEB).
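The kernel construction described above can be sketched as follows, using the stated parameters d = 2, \(\sigma^{2} = 20\), and p = 5 and the unit-diagonal normalization. The random data and the uniform weights \(\mu_{i} = 0.25\) are placeholders; in the paper the weights are learned, so this block shows only how the combined matrix is assembled.

```python
import numpy as np

def base_kernels(X, d=2, sigma2=20.0, p=5.0):
    """Polynomial, Gaussian, linear and Laplacian kernel matrices on the rows of X."""
    G = X @ X.T
    diag = np.diag(G)
    sq = np.maximum(diag[:, None] + diag[None, :] - 2.0 * G, 0.0)  # ||x - y||^2
    return [
        (1.0 + G) ** d,             # k1: polynomial
        np.exp(-sq / sigma2),       # k2: Gaussian
        G,                          # k3: linear
        np.exp(-np.sqrt(sq) / p),   # k4: Laplacian
    ]

def normalize_kernel(K):
    """Replace K(m, n) by K(m, n) / sqrt(K(m, m) K(n, n)) to obtain a unit diagonal."""
    s = np.sqrt(np.diag(K))
    return K / np.outer(s, s)

# Hypothetical data; the uniform weights are placeholders, not learned values.
X = np.random.default_rng(0).normal(size=(12, 3))
kernels = [normalize_kernel(K) for K in base_kernels(X)]
mu = np.full(4, 0.25)
K_multi = sum(m * K for m, K in zip(mu, kernels))
```

Normalization puts the four kernels on a common scale, so the weights \(\mu_{i}\) are comparable across kernels with very different raw magnitudes.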

Table 3 reports the optimal kernel weights \(\left\{ {\mu_{i} } \right\}_{i = 1}^{4}\) for each kernel function together with the experimental results obtained through MKL, i.e., the SILP formulation in the ELM-SILP. The sum of the average values of \(\mu_{1}\), \(\mu_{2}\), \(\mu_{3}\), \(\mu_{4}\), and \(\mu_{0}\) is not equal to 1. The fact that the optimal kernel weights satisfy \(\mu_{1} = \mu_{2} = 0\) while \(\mu_{3}, \mu_{4} \ne 0\) helps explain why the MKL-ELM is likely to perform better than the SK-ELM. Moreover, the TRA of the MKL-ELM can attain the maximum TRA of the SK-ELM. A higher TRA indicates better fitting capability of the MKL-ELM, while a higher TSA indicates better prediction capability. These experimental results suggest that the MKL-ELM has a lower upper bound on the expected risk and may give a better data representation than the SK-ELM.

4.2 Fusing kernel experiments

In this section, experiments are carried out with fusing kernel learning (FKL) to exhibit the performance of the fusing kernel. In every feature set, five Gaussian kernel functions with bandwidths \(\sigma^{2} \in \{ 0.1, 1, 10, 20, 40\}\) are used to form the fusing kernel \(k = \sum\nolimits_{i = 1}^{2} {\left( {\sum\nolimits_{j = 1}^{5} {\mu_{ij} k_{ij} } } \right)}\). The results indicate that the fusing kernel not only provides a good data representation of the feature sets but also identifies which kernels fit the underlying problem. Insight into the reasoning made by the fusing kernel can be gained from the kernel weights reported in Table 4, which also lists the TRA, TSA, and CPU time (s). The fusing kernel attains very high TRA and TSA. It should be pointed out, however, that the accuracy improvement of the fusing kernel model comes at the cost of increased model complexity, which is reflected in the increased CPU time in Table 4.
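The double sum over feature sets and bandwidths can be assembled as in the following sketch. The two random feature sets and the uniform weights \(\mu_{ij} = 0.1\) are assumptions made for illustration; the learned weights reported in Table 4 are not reproduced here.

```python
import numpy as np

BANDWIDTHS = (0.1, 1.0, 10.0, 20.0, 40.0)  # sigma^2 values from the text

def gaussian_kernel_matrix(X, sigma2):
    """Gaussian kernel exp(-||x - y||^2 / sigma2) on the rows of X."""
    G = X @ X.T
    diag = np.diag(G)
    sq = np.maximum(diag[:, None] + diag[None, :] - 2.0 * G, 0.0)
    return np.exp(-sq / sigma2)

def fusing_kernel(feature_sets, mu):
    """k = sum_i sum_j mu[i, j] * k_ij, with i over feature sets and j over bandwidths."""
    n = feature_sets[0].shape[0]
    K = np.zeros((n, n))
    for i, X in enumerate(feature_sets):
        for j, sigma2 in enumerate(BANDWIDTHS):
            K += mu[i, j] * gaussian_kernel_matrix(X, sigma2)
    return K

# Two hypothetical feature sets describing the same 10 samples.
rng = np.random.default_rng(0)
feature_sets = [rng.normal(size=(10, 4)), rng.normal(size=(10, 6))]
mu = np.full((2, len(BANDWIDTHS)), 0.1)  # placeholder weights summing to 1
K_fused = fusing_kernel(feature_sets, mu)
```

Each pair of feature set and bandwidth contributes one base kernel, so the number of weights to learn grows with both, which is consistent with the increased CPU time noted above.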

Figure 1 shows the training time for a varying number of samples for QCQP and SILP. The largest sample size handled is 400 for QCQP and 1,300 for the SILP approach. In terms of training time, our approach is the fastest, three times faster than QCQP. In fact, our algorithm can solve problems of various scales. We find that SILP terminates because Matlab runs out of memory, and QCQP terminates because of the same problem in MOSEK.

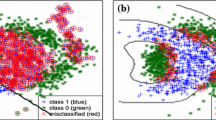

In Fig. 2, the benchmark data set consisted of 2,000 samples. We used different kernel widths to construct the RBF kernel matrices and increased the number of kernel matrices from 2 to 200. The QCQP formulations had memory issues when the number of kernels was larger than 80.

In Fig. 3, the benchmark data were made up of two linear kernel matrices and 1,000 samples. The samples were equally and randomly divided into various numbers of classes. The number of classes gradually increased from 2 to 20.

5 Conclusions and future research

In this paper, we investigate multiple-kernel learning based on ELM for classification. The problem is formulated as convex programs, and thus globally optimal solutions are guaranteed. The final kernel functions of the multiple-kernel models are determined by black-box learning from the initially proposed kernel functions. In principle, the kernel functions used to construct the multiple-kernel models may differ from problem to problem, so insight into the problem can guide the selection of kernel functions and improve performance. Although some convex optimization problems are computationally expensive, the proposed formulation can be solved efficiently. Furthermore, we consider the problem of optimizing the kernel, thus approaching the desirable goal of automated model selection. To evaluate the proposed algorithms, we have conducted extensive experiments, which demonstrate their effectiveness.

The proposed MKL-ELM classifier learns the optimal combination of multiple kernels on large-scale data sets. Although the multiple-kernel ELM shows some advantages over the single-kernel ELM both theoretically and experimentally, much work remains for the future, such as developing efficient algorithms for the large-scale training problems that result from an increasing number of kernels.

From a practical point of view, our method can be applied easily in many applications, such as pattern recognition, time series prediction, high-magnification sampling rate image inpainting, and bioinformatics.

References

1. Lanckriet G, Cristianini N, Ghaoui LE, Bartlett P, Jordan MI (2004) Learning the kernel matrix with semi-definite programming. J Mach Learn Res 5:27–72

2. Schölkopf B, Smola AJ (2002) Learning with kernels. The MIT Press, Cambridge

3. Shawe-Taylor J, Cristianini N (2004) Kernel methods for pattern analysis. Cambridge University Press, Cambridge

4. Huang GB, Chen L, Siew CK (2006) Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans Neural Netw 17:879–892

5. Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

6. Huang GB, Zhou H, Ding X, Zhang R (2012) Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern B Cybern 42:513–529

7. Han F, Huang DS (2006) Improved extreme learning machine for function approximation by encoding a priori information. Neurocomputing 69:2369–2373

8. Kim CT, Lee JJ (2008) Training two-layered feedforward networks with variable projection method. IEEE Trans Neural Netw 19:371–375

9. Zhu QY, Qin AK, Suganthan PN, Huang GB (2005) Evolutionary extreme learning machine. Pattern Recogn 38:1759–1763

10. Wang Y, Cao F, Yuan Y (2011) A study on effectiveness of extreme learning machine. Neurocomputing 74:2483–2490

11. Li GH, Liu M, Dong MY (2010) A new online learning algorithm for structure-adjustable extreme learning machine. Comput Math Appl 60:377–389

12. Huang GB, Chen L (2007) Convex incremental extreme learning machine. Neurocomputing 70:3056–3062

13. Huang GB, Li MB, Chen L, Siew CK (2008) Incremental extreme learning machine with fully complex hidden nodes. Neurocomputing 71:576–583

14. Feng G, Huang GB, Lin Q, Gay R (2009) Error minimized extreme learning machine with growth of hidden nodes and incremental learning. IEEE Trans Neural Netw 20:1352–1357

15. Rong HJ, Ong YS, Tan AH, Zhu Z (2008) A fast pruned-extreme learning machine for classification problem. Neurocomputing 72:359–366

16. Rong HJ, Huang GB, Sundararajan N, Saratchandran P (2009) Online sequential fuzzy extreme learning machine for function approximation and classification problems. IEEE Trans Syst Man Cybern B Cybern 39:1067–1072

17. Yoan M, Sorjamaa A, Bas P, Simula O, Jutten C, Lendasse A (2010) OP-ELM: optimally pruned extreme learning machine. IEEE Trans Neural Netw 21:158–162

18. Lan Y, Soh YC, Huang GB (2010) Constructive hidden nodes selection of extreme learning machine for regression. Neurocomputing 73:3191–3199

19. Yu S, Falck T, Daemen A et al (2010) L2-norm multiple kernel learning and its application to biomedical data fusion. BMC Bioinformatics 11:1–53

20. Ye JP, Ji SW, Chen JH (2008) Multi-class discriminant kernel learning via convex programming. J Mach Learn Res 9:719–758

21. Serre D (2002) Matrices: theory and applications. Springer, New York

22. Sonnenburg S, Rätsch G, Schäfer C, Schölkopf B (2006) Large scale multiple kernel learning. J Mach Learn Res 7:1531–1565

23. Andersen ED, Andersen AD (2000) The MOSEK interior point optimizer for linear programming: an implementation of the homogeneous algorithm. In: Frenk H, Roos C, Terlaky T, Zhang S (eds) High performance optimization. Kluwer Academic Publishers, Norwell, USA, pp 197–232

24. Bach FR, Lanckriet GRG, Jordan MI (2004) Multiple kernel learning, conic duality, and the SMO algorithm. In: Proceedings of the 21st International Conference on Machine Learning (ICML). ACM, Banff, pp 6–13

25. Hoerl AE, Kennard RW (1970) Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12:55–67

26. Yang H, Xu Z, Ye J, King I, Lyu MR (2011) Efficient sparse generalized multiple kernel learning. IEEE Trans Neural Netw 22:433–446

27. Gönen M, Alpaydın E (2011) Multiple kernel learning algorithms. J Mach Learn Res 12:2211–2268

28. Gu Y, Qu Y, Fang T, Li C, Wang H (2012) Image super-resolution based on multikernel regression. In: Proceedings of the 21st International Conference on Pattern Recognition (ICPR)

29. Zong WW, Huang GB, Chen Y (2013) Weighted extreme learning machine for imbalance learning. Neurocomputing 101:229–242

30. Parviainen E, Riihimäki J, Miche Y, Lendasse A (2010) Interpreting extreme learning machine as an approximation to an infinite neural network. In: Proceedings of the International Conference on Knowledge Discovery and Information Retrieval, pp 65–73

31. Huang GB, Wang D, Lan Y (2011) Extreme learning machines: a survey. Int J Mach Learn Cybernet 2:107–122

32. Hinrichs C, Singh V, Peng J, Johnson S (2012) Q-MKL: matrix-induced regularization in multi-kernel learning with applications to neuroimaging. In: NIPS, pp 1430–1438

33. Liu X, Wang L, Huang GB, Zhang J, Yin J (2013) Multiple kernel extreme learning machine. Neurocomputing. doi:10.1016/j.neucom.2013.09.072

Acknowledgments

We give warm thanks to Prof. Guangbin Huang, Prof. Zhihong Men, and the anonymous reviewers for helpful comments. This work was supported by the Zhejiang Provincial Natural Science Foundation of China (No. LR12F03002) and the National Natural Science Foundation of China (No. 61074045).


Cite this article

Li, X., Mao, W. & Jiang, W. Multiple-kernel-learning-based extreme learning machine for classification design. Neural Comput & Applic 27, 175–184 (2016). https://doi.org/10.1007/s00521-014-1709-7
