Abstract

In this paper, stochastic techniques are developed to solve the 2-dimensional Bratu equations with the help of feed-forward artificial neural networks, optimized with particle swarm optimization (PSO) and sequential quadratic programming (SQP) algorithms. A hybrid of these two algorithms, referred to as the PSO-SQP method, is also studied. The original 2-dimensional equations are solved by first transforming them into equivalent one-dimensional boundary value problems (BVPs), which are then modeled using neural networks. The optimization problem of training the network weights is addressed using particle swarm techniques for global search, integrated with an SQP method for rapid local convergence. The methodology is evaluated on three test cases of BVPs for the Bratu equations. Monte Carlo simulations and extensive analyses are carried out to validate the accuracy, convergence and effectiveness of the schemes. The proposed results are compared with the available exact solutions as well as with reported numerical results.

1 Introduction

Artificial intelligence techniques based on neural networks optimized with efficient global and local search methodologies have been used extensively to solve a variety of linear and nonlinear systems based on ordinary and partial differential equations [1–5]. For example, stochastic solvers based on neural networks optimized with evolutionary computing and swarm intelligence algorithms have been employed to solve nonlinear oscillatory systems in both stiff and non-stiff scenarios [6, 7], nonlinear magnetohydrodynamics (MHD) problems [8], fluid dynamics problems based on the nonlinear Jeffery–Hamel flow equations [9], the nonlinear Schrödinger equation [10], one-dimensional Bratu problems arising in the fuel ignition model [11, 12], Troesch’s problem arising in the study of the confinement of a plasma column by radiation pressure [13, 14] and nonlinear singular systems based on Lane–Emden–Fowler equations [15]. Interested readers are referred to a survey article [16] that summarizes the importance, history, recent developments and future directions of research in this area. The applicability of these methods has also been extended to the effective solution of differential equations of fractional order [17, 18]; for instance, the fractional Riccati differential equation and the Bagley–Torvik fractional system [19, 20] are other significant applications of such solvers. Recently, an evolutionary computing approach based on genetic algorithms (GAs) and the interior-point method (IPM) was applied to the 2-dimensional (2D) Bratu problem by transforming it into an equivalent one-dimensional ordinary differential equation [21]. This study extends the previous works by applying a hybrid, or memetic, computing technique based on artificial neural networks (ANNs), particle swarm optimization (PSO) and sequential quadratic programming (SQP) algorithms to solve the transformed boundary value problem (BVP) of the 2D Bratu-type equation.

Since their introduction by Kennedy and Eberhart [22], PSO algorithms have been used extensively in diverse fields as an alternative to genetic algorithms for obtaining better optimal results. PSO techniques belong to a class of global optimization techniques that were developed on the basis of mathematical modeling of the behavior of birds and fish. Discrete and continuous versions of the algorithms have been developed along with many variants, and these are broadly used as expert systems for constrained and unconstrained optimization problems. A few examples in which PSO methods have performed exceedingly well include mobile communication, sensor networks, inventory control, multiprocessor scheduling, controls and stock market prediction [23–26]. These optimizers are good candidates for finding the optimal design parameters of neural network models for difficult nonlinear singular BVPs of differential equations.

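In the present study, an alternative stochastic numerical solution is developed for the transformed form of the 2D Bratu equation, whose generic n-dimensional form is given as:

\[ \Delta u + \mu e^{u} = 0 \quad \text{in } \Omega, \qquad u = 0 \quad \text{on } \partial\Omega. \tag{1} \]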

Here ∆ is the Laplacian operator, u represents the solution to the equation, and μ is a real number. Usually the domain Ω is taken to be the unit interval [0, 1] in ℜ, the unit square [0, 1] × [0, 1] in ℜ², or the unit cube [0, 1] × [0, 1] × [0, 1] in ℜ³. In case Ω = B₁ is the unit ball in ℜⁿ, n > 1, the n-dimensional Bratu equation (1) is transformed into an equivalent one-dimensional nonlinear BVP with singularity at the origin as follows [21, 27–29]:

\[ u''(r) + \frac{n-1}{r}\,u'(r) + \mu e^{u(r)} = 0, \quad 0 < r < 1, \qquad u'(0) = 0, \quad u(1) = 0. \tag{2} \]

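A detailed derivation of this transformation is given in [29]. The 2D Bratu problem is derived from (2) by taking n = 2 as:

\[ u''(r) + \frac{1}{r}\,u'(r) + \mu e^{u(r)} = 0, \quad 0 < r < 1, \qquad u'(0) = 0, \quad u(1) = 0. \tag{3} \]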
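As a brief check of this reduction (a sketch assuming a radially symmetric solution u = u(r) with r = |x|; the full derivation is the one in [29]), the chain rule gives, for each coordinate \( x_i \),

\[ \frac{\partial u}{\partial x_i} = u'(r)\,\frac{x_i}{r}, \qquad \frac{\partial^2 u}{\partial x_i^2} = u''(r)\,\frac{x_i^2}{r^2} + u'(r)\left(\frac{1}{r} - \frac{x_i^2}{r^3}\right). \]

Summing over i = 1, …, n and using \( \sum_i x_i^2 = r^2 \) yields

\[ \Delta u = u''(r) + \frac{n-1}{r}\,u'(r), \]

so that \( \Delta u + \mu e^{u} = 0 \) becomes \( u'' + \frac{n-1}{r}u' + \mu e^{u} = 0 \) on 0 < r < 1. Smoothness of a radially symmetric solution at the center of the ball requires u′(0) = 0, and the condition u = 0 on the boundary of the unit ball becomes u(1) = 0.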

The Bratu problem (1–3) arises in the simplification of solid fuel ignition models in thermal combustion theory. These problems have a long history; well-known generalizations are the “Liouville–Gelfand” or “Liouville–Gelfand–Bratu” problem, named in recognition of the mathematicians Liouville and Gelfand [30–32]. The problem arises extensively in physical problems in applied science and engineering, such as chemical reactor theory, nanotechnology, radiative heat transfer, and the Chandrasekhar model for the expansion of the universe [31–35]. Recent articles giving solutions of Bratu-type equations include the non-polynomial spline method of Jalilian [36], the one-point pseudospectral collocation method of Boyd [37] and the Lie-group shooting method (LGSM) of Abbasbandy et al. [38].

In this study, the strength of ANNs is exploited once again to develop an approximate mathematical model for the Bratu-type BVP. The accuracy of the model depends on the tuning of the adjustable parameters, i.e., the weights of the ANNs. A swarm intelligence technique based on the PSO algorithm, the SQP method and the hybrid PSO-SQP scheme are used to train the weights of the ANNs. The viability and reliability of this method have been validated by a large number of independent runs of the algorithms along with their detailed statistical analysis. The results are compared with the reported exact and numerical solutions.

2 Neural network modeling

In this section, a detailed description of modeling of BVPs of Bratu-type equations using ANNs is presented. We also formulate an unsupervised fitness function for the network.

2.1 Mathematical model

An approximate mathematical model for the Bratu equations is developed with the help of feed-forward ANNs by exploiting their universal function approximation capability. It is well known that the solution u(r) to the equation and its kth derivative u⁽ᵏ⁾ can be modeled by the following continuous neural network mapping [1, 5, 21]:

\[ \hat{u}(r) = \sum_{i=1}^{m} \alpha_i f(w_i r + \beta_i), \qquad \hat{u}^{(k)}(r) = \sum_{i=1}^{m} \alpha_i \frac{d^k}{dr^k} f(w_i r + \beta_i). \tag{4} \]

Here αᵢ, wᵢ and βᵢ are the adaptive (real-valued) network parameters, and m is the number of neurons in the network. f is the activation function, normally taken as the log-sigmoid function for the hidden layers, with a linear function used for the output layers:

\[ f(x) = \frac{1}{1 + e^{-x}}. \tag{5} \]

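Using the log-sigmoid function in Eq. (4), the updated form of the solution u(r) to Eq. (3), and its first (u′) and second (u″) derivatives, respectively, are written as:

\[ \begin{aligned} \hat{u}(r) &= \sum_{i=1}^{m} \frac{\alpha_i}{1 + e^{-(w_i r + \beta_i)}}, \\ \hat{u}'(r) &= \sum_{i=1}^{m} \frac{\alpha_i w_i\, e^{-(w_i r + \beta_i)}}{\left(1 + e^{-(w_i r + \beta_i)}\right)^{2}}, \\ \hat{u}''(r) &= \sum_{i=1}^{m} \alpha_i w_i^{2} \left[ \frac{2\, e^{-2(w_i r + \beta_i)}}{\left(1 + e^{-(w_i r + \beta_i)}\right)^{3}} - \frac{e^{-(w_i r + \beta_i)}}{\left(1 + e^{-(w_i r + \beta_i)}\right)^{2}} \right]. \end{aligned} \tag{6} \]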

An arbitrary combination of the networks given in the above set of equations is used to develop the approximate model of second-order differential equations, in particular Eq. (3).
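A minimal Python sketch of such a network and its first two derivatives with respect to r is shown below. It assumes a single hidden layer of log-sigmoid neurons with a linear output; the function name `network` and the example weights in the usage note are hypothetical and are not values from the study.

```python
import math


def network(r, alpha, w, beta):
    """Return (u_hat, du_hat, d2u_hat) at input r.

    alpha, w, beta are equal-length lists holding the adaptive
    parameters of the m hidden neurons.
    """
    u = du = d2u = 0.0
    for a, wi, b in zip(alpha, w, beta):
        x = wi * r + b
        # Log-sigmoid 1 / (1 + exp(-x)), written with tanh to avoid overflow.
        s = 0.5 * (1.0 + math.tanh(0.5 * x))
        ds = s * (1.0 - s)            # f'(x)
        d2s = ds * (1.0 - 2.0 * s)    # f''(x)
        u += a * s
        du += a * wi * ds             # chain rule: d/dr f(w r + b) = w f'
        d2u += a * wi * wi * d2s
    return u, du, d2u
```

For example, `network(0.4, [0.5, -1.2, 0.3], [1.0, -0.7, 2.0], [0.1, 0.4, -0.3])` returns the model value and both derivatives at r = 0.4 for three neurons; the derivatives are analytic, so no numerical differentiation is needed during training.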

2.2 Fitness function

The fitness function for the Bratu equations in terms of the unsupervised error function ε is defined as the sum of the mean squared errors:

\[ \varepsilon = \varepsilon_1 + \varepsilon_2, \tag{7} \]

and the error ε₁ associated with the differential equation is formulated as:

Here the interval r ∈ [0, 1] is divided into K steps \( (r_0 = 0, r_1, r_2, \ldots, r_K = 1) \) with step size h, and \( \hat{u}(r),\;\hat{u}^{\prime}\;\text{and}\;\hat{u}^{\prime\prime} \) are the outputs of the neural networks as given by the set of Eqs. (6).

Similarly, the error ε₂ due to the boundary conditions can be written as:

It can be seen that for weights αᵢ, wᵢ and βᵢ for which the functions ε₁ and ε₂ approach zero, the value of the unsupervised error ε also approaches zero. The ANN thus approximates the solution u(r) of Eq. (3) with û(r) as given by (6). The architecture of the network is presented graphically in Fig. 1 and will be referred to as a differential equation artificial neural network (DE-ANN).
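A minimal Python sketch of how ε = ε₁ + ε₂ could be evaluated for Eq. (3) is given below. Several details are assumptions rather than statements from the text: the residual of the differential equation is multiplied by r so that the 1/r term stays finite at r = 0, ε₁ is averaged over the K + 1 grid points, ε₂ is taken as the mean of the two squared boundary residuals, and the names `network` and `fitness` as well as the packing of all weights into one list are hypothetical.

```python
import math


def network(r, params):
    """Return (u, u', u'') for weights packed as [alpha..., w..., beta...]."""
    m = len(params) // 3
    u = du = d2u = 0.0
    for a, wi, b in zip(params[:m], params[m:2 * m], params[2 * m:]):
        s = 0.5 * (1.0 + math.tanh(0.5 * (wi * r + b)))  # log-sigmoid
        ds = s * (1.0 - s)
        u += a * s
        du += a * wi * ds
        d2u += a * wi * wi * ds * (1.0 - 2.0 * s)
    return u, du, d2u


def fitness(params, mu, h=0.1):
    """Unsupervised error eps = eps1 + eps2 for the 2D Bratu BVP."""
    K = round(1.0 / h)
    e1 = 0.0
    for k in range(K + 1):
        r = k * h
        u, du, d2u = network(r, params)
        # Assumption: Eq. (3) multiplied by r, i.e. r u'' + u' + mu r e^u.
        res = r * d2u + du + mu * r * math.exp(u)
        e1 += res * res
    e1 /= K + 1
    du0 = network(0.0, params)[1]   # boundary condition u'(0) = 0
    u1 = network(1.0, params)[0]    # boundary condition u(1) = 0
    e2 = 0.5 * (du0 ** 2 + u1 ** 2)
    return e1 + e2
```

With 10 neurons the parameter list has 30 entries, and `fitness(params, 0.5)` evaluates the error on the 11 grid points r = 0, 0.1, …, 1. No target values of u(r) enter the function, which is what makes the training unsupervised.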

Fig. 1 Neural network architecture for the nonlinear singular system given in (3): a for the error function ε₁ associated with the differential equation, b for the error function ε₂ associated with the boundary conditions

3 Learning procedures

In this section, we give a brief introduction to the PSO and SQP algorithms, which are used to train the weights of the DE-ANN. The procedure for execution of the algorithms along with their parameter settings is described stepwise.

Swarm intelligence techniques, often referred to as particle swarm optimization (PSO) algorithms, originated from the work of Kennedy and Eberhart [22]. In the standard PSO method, each candidate solution to an optimization problem is taken as a particle in the search space. The exploration and exploitation of the entire search space is carried out by a population of particles referred to as a “swarm.” Every particle of the swarm has its own fitness value according to the problem-specific objective. The particles of the swarm are initialized randomly; the position and velocity of every particle are updated in each flight according to the previous local best \( p_{LB}(t-1) \) and global best \( p_{GB}(t-1) \) positions. The generic updating formulae for particle velocity and position in the standard continuous PSO are written as:

\[ \begin{aligned} v(t) &= \omega\, v(t-1) + c_1\, rd_1 \left( p_{LB}(t-1) - x(t-1) \right) + c_2\, rd_2 \left( p_{GB}(t-1) - x(t-1) \right), \\ x(t) &= x(t-1) + v(t), \end{aligned} \tag{10} \]

where the vector x represents a particle of the swarm X, which consists of a sufficient number of particles, v is the velocity vector of the particle, ω is the inertia weight (taking values between 0 and 1 and decreasing linearly over the course of the search), c₁ and c₂ are the local and global social acceleration constants, respectively, and rd₁ and rd₂ are random vectors with values between 0 and 1. The elements of the velocity are bounded as \( v_i \in [-v_{\max}, v_{\max}] \), where \( v_{\max} \) is the maximum velocity. If the velocity exceeds these limits, it is reset to the respective lower or upper bound. The global learning capability of PSO is exploited to find the optimal results, in combination with a local search based on the efficient sequential quadratic programming (SQP) technique for further fine-tuning of the results.
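The update of a single particle, including the velocity bound and a linearly decreasing inertia weight, can be sketched in Python as follows. The function names `pso_step` and `inertia` are hypothetical, and the start and end values 0.9 and 0.4 of the inertia weight are common illustrative choices, not settings taken from this study.

```python
import random


def pso_step(x, v, p_lb, p_gb, omega, c1, c2, v_max, rng=random):
    """One velocity and position update for a single particle.

    x, v, p_lb and p_gb are equal-length lists of floats: the current
    position, current velocity, local best and global best positions.
    """
    new_x, new_v = [], []
    for xi, vi, pl, pg in zip(x, v, p_lb, p_gb):
        vel = (omega * vi
               + c1 * rng.random() * (pl - xi)
               + c2 * rng.random() * (pg - xi))
        # Reset to the violated bound if the velocity leaves [-v_max, v_max].
        vel = max(-v_max, min(v_max, vel))
        new_v.append(vel)
        new_x.append(xi + vel)
    return new_x, new_v


def inertia(t, t_max, w_start=0.9, w_end=0.4):
    """Inertia weight decreasing linearly from w_start to w_end."""
    return w_start - (w_start - w_end) * t / t_max
```

A fresh random number is drawn for every element, which corresponds to treating rd₁ and rd₂ as random vectors rather than scalars.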

The SQP methods belong to a class of nonlinear programming techniques that are well suited to constrained optimization problems. Their importance is well established on the basis of efficiency, accuracy and percentage of successful solutions over a large number of test problems. A broad introduction and review of mathematical programming techniques for large-scale numerical optimization is given by Nocedal and Wright [39]. A good source of reference material on SQP algorithms can be found in [40, 41]. The SQP methods have been used by many researchers in diverse areas of applied science and engineering. A few recently published articles can be seen in [42, 43].

Keeping in view the strengths of the PSO and SQP algorithms, our intention is to use these schemes along with their hybrid approach referred to as PSO-SQP, for training the weights of the DE-ANN. The generic flow diagram of the process of the hybrid PSO-SQP technique is shown graphically in Fig. 2, while the necessary detailed procedural steps are given as follows:

- Step 1: Initialization: Initialize the swarm, consisting of particles generated randomly using bounded real values. Each particle has as many elements as the number of unknown adaptive parameters of the DE-ANN. The parameters are initialized as per the settings given in Table 1, and then the execution of the algorithm is started.

- Step 2: Fitness evaluation: Calculate the fitness value of each particle using the fitness function given in (7) and subsequently Eqs. (8) and (9).

- Step 3: Termination criteria: Terminate the algorithm if either of the following criteria is satisfied:

  - A fitness value less than a pre-specified tolerance is achieved, e.g., ε ≤ 10⁻¹⁶.

  - The maximum number of cycles is executed.

  If either of the above criteria is met, then go to step 5.

- Step 4: Renewal: Update the velocity and position of the particles using Eq. (10), and go to step 2 for the next execution cycle, i.e., flight.

- Step 5: Refinement: The MATLAB built-in function FMINCON with the SQP algorithm is used to run the SQP method for fine-tuning of the results. The following procedure is adopted for the SQP algorithm:

  (a) Initialization: The best fitted particle from the PSO technique is taken as the start point, or initial weight vector, for the SQP algorithm; bounds and other declarations for program and tool initialization are given in Table 1.

  (b) Evaluation of fitness: Calculate the value of the fitness function ε as given in (7) and subsequently Eqs. (8) and (9).

  (c) Termination criteria: Terminate the iterative process of updating the weights of the ANNs if any of the following criteria is fulfilled:

    - The predefined total number of iterations is completed.

    - Any of the limits for function tolerance (TolFun), maximum function evaluations (MaxFunEvals), X-tolerance (TolX) or constraint tolerance (TolCon) is reached, as defined in Table 1.

    If a termination criterion is fulfilled, then go to step 6.

  (d) Updating of weights: A step increment in the SQP procedure is made; go to step 5(c).

- Step 6: Storage: Store the values of the best global individual along with its fitness and the time taken for this run of the algorithm.

- Step 7: Statistical analysis: Repeat steps 1–6 for a sufficiently large number of independent runs of the algorithm in order to have reliable results for subsequent statistical analysis.

Table 1 Parameter settings for PSO and SQP techniques

Table 2 Comparison of the results for μ = 0.5
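The procedural steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the MATLAB implementation used in the study: the swarm size, number of flights, bounds and acceleration constants are placeholder values rather than those of Table 1, and a simple derivative-free pattern search stands in for the FMINCON/SQP refinement of step 5. The names `pso`, `local_refine` and `hybrid` are hypothetical.

```python
import random
import time


def pso(f, dim, n_particles=20, n_flights=200, bound=5.0, v_max=1.0,
        c1=2.0, c2=2.0, tol=1e-16, seed=0):
    """Steps 1-4: global search; returns the best position and fitness."""
    rng = random.Random(seed)
    xs = [[rng.uniform(-bound, bound) for _ in range(dim)]
          for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    p_lb = [list(x) for x in xs]                 # local best positions
    f_lb = [f(x) for x in xs]                    # step 2: fitness evaluation
    g = min(range(n_particles), key=f_lb.__getitem__)
    p_gb, f_gb = list(p_lb[g]), f_lb[g]          # global best
    for t in range(n_flights):                   # step 3: maximum cycles
        if f_gb <= tol:                          # step 3: fitness tolerance
            break
        omega = 0.9 - 0.5 * t / n_flights        # linearly decreasing inertia
        for i in range(n_particles):             # step 4: renewal
            for d in range(dim):
                vel = (omega * vs[i][d]
                       + c1 * rng.random() * (p_lb[i][d] - xs[i][d])
                       + c2 * rng.random() * (p_gb[d] - xs[i][d]))
                vs[i][d] = max(-v_max, min(v_max, vel))
                xs[i][d] += vs[i][d]
            fi = f(xs[i])
            if fi < f_lb[i]:
                p_lb[i], f_lb[i] = list(xs[i]), fi
                if fi < f_gb:
                    p_gb, f_gb = list(xs[i]), fi
    return p_gb, f_gb


def local_refine(f, x, step=0.1, tol=1e-10, max_iter=10000):
    """Step 5 stand-in: pattern search started from the PSO result."""
    fx = f(x)
    it = 0
    while step > tol and it < max_iter:
        improved = False
        for i in range(len(x)):
            for d in (step, -step):
                y = list(x)
                y[i] += d
                fy = f(y)
                if fy < fx:                      # accept only improvements
                    x, fx, improved = y, fy, True
                    break
        if not improved:
            step *= 0.5                          # shrink the search pattern
        it += 1
    return x, fx


def hybrid(f, dim, seed=0):
    """Steps 1-6 for one independent run: weights, fitness, time taken."""
    start = time.perf_counter()
    x, fx = pso(f, dim, seed=seed)
    x, fx = local_refine(f, x)
    return x, fx, time.perf_counter() - start
```

Step 7 then corresponds to calling `hybrid` with different seeds, for example `[hybrid(f, 30, seed=s) for s in range(100)]`, and collecting the returned fitness values and times for the statistical analysis. Because the local stage accepts only improvements, the hybrid result is never worse than the PSO result it starts from.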

4 Simulation and results

In this section, we present the results of simulations of the designed methodology applied to the nonlinear Bratu problem with singularity at the origin for three variants corresponding to different values of μ. The Bratu equation (3) has zero, one or two solutions depending on whether \( \mu > \mu_c \), \( \mu = \mu_c \) or \( \mu < \mu_c \), respectively, where \( \mu_c \) is the critical value of the coefficient μ (\( \mu_c = 2 \) [27–29]). Results for the three cases μ = 0.5, 1 and 2 are given here and compared with the exact solution. A statistical analysis is also provided in each case.
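The dependence of the number of solutions on μ can be illustrated with the classical radially symmetric closed form u(r) = ln[8c / (μ(1 + c r²)²)], which satisfies the differential equation in (3) and u′(0) = 0 for any c > 0. The condition u(1) = 0 then requires μ(1 + c)² = 8c, a quadratic in c whose discriminant is proportional to 16 − 8μ and vanishes at μ = 2. The Python sketch below is given under these assumptions for μ > 0; the closed form is not necessarily written in the same way as the exact solutions quoted from [21], and the function names are hypothetical.

```python
import math


def bratu_constants(mu):
    """Positive roots c of mu * (1 + c)**2 = 8 * c, for mu > 0.

    Each root gives one solution of the 2D Bratu BVP, so the list has
    two entries for mu < 2, one for mu = 2 and none for mu > 2.
    """
    disc = 16.0 - 8.0 * mu
    if disc < 0.0:
        return []
    root = math.sqrt(disc)
    if root == 0.0:
        return [(4.0 - mu) / mu]
    return [(4.0 - mu - root) / mu, (4.0 - mu + root) / mu]


def exact_u(r, mu, c):
    """Closed-form solution u(r) = ln(8 c / (mu (1 + c r^2)^2))."""
    return math.log(8.0 * c / (mu * (1.0 + c * r * r) ** 2))
```

For instance, `bratu_constants(0.5)` returns two constants, and `exact_u(r, 0.5, c)` evaluated with the smaller one gives the lower-branch solution on the grid r = 0, 0.1, …, 1.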

4.1 Bratu equation for μ = 0.5

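Consider the BVP of the form (3), given in this case as [21]:

\[ u''(r) + \frac{1}{r}\,u'(r) + 0.5\, e^{u(r)} = 0, \quad 0 < r < 1, \qquad u'(0) = 0, \quad u(1) = 0. \tag{11} \]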

The exact solution of the above problem is given as: [21]

To solve this case of the Bratu equation using the proposed DE-ANN algorithm, we take 10 neurons in each hidden layer of the ANN, which results in 30 weights (αᵢ, wᵢ and βᵢ). The training set is taken from inputs r ∈ [0, 1] with a step of h = 0.1, i.e., a total of 11 grid points in the entire domain. The fitness function ε for this problem is formulated using Eqs. (7), (8) and (9) as:

The optimization of the weights of the DE-ANN is carried out using the PSO, SQP and PSO-SQP algorithms for one hundred independent runs with the parameter settings given in Table 1. One set of weights learned by the PSO, SQP and hybrid PSO-SQP methods, with fitness values of 1.2417 × 10⁻⁸, 1.3753 × 10⁻¹³ and 2.6895 × 10⁻¹², respectively, is tabulated in Appendix Table 10. The solution obtained using the first equation of set (6) and the weights given in Table 10 for the 11 input points in r ∈ [0, 1] with a step size of 0.1 is given in Table 2. The exact solution and the reported results [21] of GA, IPA and GA-IPA for the problem are also tabulated in Table 2 for the same inputs. The absolute error (AE) is defined as the absolute deviation from the exact solution; its maximum values for the PSO, SQP and PSO-SQP techniques are 4.37 × 10⁻⁶, 2.53 × 10⁻⁷ and 2.31 × 10⁻⁷, respectively, while for the reported GA, IPA and GA-IPA they are 6.15 × 10⁻⁵, 7.56 × 10⁻⁷ and 5.55 × 10⁻⁷, respectively. It is seen that the accuracy of the present methods is generally superior to that of the reported results.

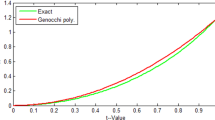

The accuracy and convergence of the proposed algorithms are examined by calculating the values of the mean absolute error (MAE) and fitness achieved (FA) based on one hundred independent runs. Results are shown graphically in Fig. 3 on a semi-log scale in order to bring out small differences in values. In Fig. 3a, c, the values of the fitness function ε and MAE are plotted, respectively, for each algorithm against the number of independent runs of the algorithms. The runs are renumbered so that FA and MAE are plotted in ascending order in Fig. 3b, d. The values of the fitness function ε as given in (13) lie in the range 10⁻⁵–10⁻⁸ for the PSO algorithm, while for both SQP and PSO-SQP they lie in the range 10⁻⁸–10⁻¹³. Similarly, the values of MAE are of the order of 10⁻³–10⁻⁶ for the PSO technique, while for both the SQP and PSO-SQP schemes they lie in the range 10⁻⁵–10⁻⁸. It is seen that there is no noticeable difference between SQP and PSO-SQP for this variant of BVP (3), and both are relatively better than the PSO algorithm.

The statistical analysis of the results for each solver is now performed using the mean and standard deviation (STD) along with the minimum (MIN) and maximum (MAX) values of the absolute error. The results are tabulated in Table 3, along with the reported results of GA-IPA, for inputs between 0 and 1 with a step size of 0.2. It is seen that the mean value lies in the range 10⁻⁴–10⁻⁵ for the PSO technique, while for both the SQP and PSO-SQP algorithms it lies between 10⁻⁵ and 10⁻⁸. It is also observed that there is no noticeable difference in the values of the statistical parameters between the present SQP and PSO-SQP methods and the reported GA-IPA method; however, the results of the hybrid PSO-SQP scheme are generally more accurate than those of the SQP technique, and they are much better than the solutions given by the PSO algorithm. Moreover, the reported results of GA given in [21] are generally inferior to those of the proposed PSO algorithm.
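The per-input statistics described here can be computed as in the following Python sketch. The function name `ae_statistics` and the data layout are hypothetical, and the use of the sample standard deviation (division by R − 1) is an assumption, since the text does not state which estimator is used.

```python
import statistics


def ae_statistics(exact, runs):
    """MIN, MAX, mean and STD of the absolute error at each input point.

    exact: list of exact values u_i, one per input point.
    runs:  list of runs, each a list of approximations u_hat_i
           (at least two runs are needed for the sample STD).
    """
    table = []
    for i, u in enumerate(exact):
        ae = [abs(u - run[i]) for run in runs]   # AE over all runs
        table.append({
            "min": min(ae),
            "max": max(ae),
            "mean": statistics.fmean(ae),
            "std": statistics.stdev(ae),
        })
    return table
```

Each entry of the returned list corresponds to one row of a table such as Table 3, i.e., one input point and one solver.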

4.2 Bratu equation for μ = 1.0

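The nonlinear BVP of the form (3) for μ = 1.0 is given as [21]:

\[ u''(r) + \frac{1}{r}\,u'(r) + e^{u(r)} = 0, \quad 0 < r < 1, \qquad u'(0) = 0, \quad u(1) = 0. \tag{14} \]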

The exact solution of the above equation is given as: [21]

The given problem (14) is solved using the same methodology as applied for the case μ = 0.5; however, the fitness function ε formulated in this case is:

Again, optimization of the weights of the DE-ANN is carried out with the PSO, SQP and PSO-SQP algorithms for one hundred independent runs. A set of trained weights is given in Appendix Table 11 for the three techniques, with fitness values of 2.8207 × 10⁻⁹, 1.2113 × 10⁻¹¹ and 3.1141 × 10⁻¹¹, respectively. The solution to problem (14) is now determined using these weights for inputs r ∈ [0, 1] with a step size of 0.1, and results are presented in Table 4 along with the reported and exact solutions. The maximum values of the AE for the proposed PSO, SQP and PSO-SQP methods are 1.34 × 10⁻⁵, 4.91 × 10⁻⁸ and 2.43 × 10⁻⁷, respectively, while for the reported GA, IPA and GA-IPA [21] these values are 1.94 × 10⁻⁴, 6.38 × 10⁻⁷ and 2.03 × 10⁻⁷, respectively. Generally, the proposed results are relatively better than the reported results.

The values of the MAE and the fitness function ε as given in Eq. (16) are computed for the 100 independent runs of the three techniques, and results are plotted in Fig. 4. The value of ε lies in the range 10⁻⁵–10⁻⁸, 10⁻²–10⁻¹³ and 10⁻⁹–10⁻¹³ for the PSO, SQP and PSO-SQP methods, respectively. Similarly, the value of MAE for the three respective algorithms lies in the range 10⁻³–10⁻⁵, 10⁰–10⁻⁸ and 10⁻⁴–10⁻⁸. It is observed that for some independent runs the results of the SQP algorithm are not convergent, while the PSO and PSO-SQP methods remain convergent for all runs. As far as accuracy is concerned, the values of MAE for the convergent cases of the SQP and PSO-SQP algorithms are better than those of the PSO algorithm. Generally, the hybrid PSO-SQP approach provides the most accurate and convergent results for this case of the Bratu equation.

The accuracy of the proposed algorithms is analyzed further on the basis of the statistical parameters calculated for 100 independent runs of each solver. The results are presented in Table 5 for inputs 0–1 with a step size of 0.2. The reported results [21] of the statistical analysis for the hybrid approach GA-IPA are also tabulated in Table 5 for the same inputs. It can be seen that the values of MIN, MAX, mean and STD of the AE are lowest for the hybrid approach PSO-SQP. The mean values of the AE calculated for the PSO, SQP and PSO-SQP algorithms lie in the range 10⁻⁴–10⁻⁵, 10⁻²–10⁻³ and 10⁻⁵–10⁻⁸, respectively. The results of the SQP method deteriorate because of convergence problems observed in a few runs; the SQP technique is a local search method and is therefore more likely to get stuck in a local minimum. The PSO-SQP technique stands out as the most accurate and convergent of the three proposed schemes, and generally its results are in good agreement with those of the reported GA-IPA technique.

4.3 Bratu equation for μ = 2.0

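The singular BVP of the nonlinear Bratu equation (3) is given for this case as [21]:

\[ u''(r) + \frac{1}{r}\,u'(r) + 2\, e^{u(r)} = 0, \quad 0 < r < 1, \qquad u'(0) = 0, \quad u(1) = 0. \tag{17} \]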

The exact solution to the above equation is given as: [21]

Equation (17) is solved following the procedure outlined above for the two cases; however, the fitness function ε in this case is formulated as:

Optimal weights for the DE-ANN are learned using the PSO, SQP and PSO-SQP algorithms for one hundred independent runs. One set of weights is tabulated in Appendix Table 12 for the three algorithms. The values of the unsupervised error (19) are 1.8234 × 10⁻⁶, 1.8791 × 10⁻¹⁰ and 1.5703 × 10⁻¹⁰, respectively, for the three proposed methods. The solution to equation (17) is approximated using the weights in Table 12 for inputs r ∈ [0, 1] with a step size of 0.1, and results are presented in Table 6 along with the reported results. The maximum values of the AE for the proposed PSO, SQP and PSO-SQP algorithms are 3.16 × 10⁻², 7.90 × 10⁻⁵ and 3.18 × 10⁻⁴, respectively, while for the reported GA, IPA and GA-IPA methods these values are 1.19 × 10⁻¹, 5.89 × 10⁻⁴ and 2.82 × 10⁻⁴, respectively.

The values of MAE and FA as given in expression (19) are also computed for 100 independent runs of the three proposed algorithms, and results are shown graphically in Fig. 5. It can be seen that the value of ε achieved lies in the range 10⁻⁴–10⁻⁶, 10⁰–10⁻¹² and 10⁻⁸–10⁻¹² for the PSO, SQP and PSO-SQP methods, respectively, while the values of MAE lie in the range 10⁻⁴–10⁻⁶, 10⁰–10⁻¹² and 10⁻⁸–10⁻¹² for the three respective algorithms. A few runs of the SQP method diverge in this case also, while both the PSO and PSO-SQP algorithms remain consistently convergent, the hybrid scheme being the most accurate.

The accuracy of the PSO, SQP and PSO-SQP solvers is further investigated based on statistical analysis of the 100 independent runs. The mean, STD, MIN and MAX values of the AE are given in Table 7 for inputs 0–1 with a step size of 0.2. The reported result for the hybrid approach GA-IPA is also given in the table for the same inputs. It is seen that the values of MIN, MAX, mean and STD of the AE are generally lowest for the PSO-SQP hybrid technique. Mean values of the AE for the PSO, SQP and PSO-SQP algorithms lie in the range 10⁻¹–10⁻³, 10⁻¹–10⁻² and 10⁻³–10⁻⁶, respectively. The MIN values of the AE for the SQP and PSO-SQP methods are better than those of the PSO algorithm. Moreover, the values of the statistical parameters of the proposed PSO-SQP scheme match closely with the reported results of GA-IPA.

5 Comparative analyses of the solvers

In this section, a comparative analysis is presented for the numerical computations carried out in the last section based on values of the MAE, global mean absolute error (GMAE), mean fitness achieved (MFA), convergence rate and computational time taken for each algorithm.

The reliability and effectiveness of the proposed solvers have been analyzed by computing the values of MAE and FA for 100 independent runs of each algorithm. The accuracy and convergence of the results based on values of MAE ≤ 10⁻³, 10⁻⁴, 10⁻⁵ and 10⁻⁶ are provided in Table 8. The percentage acceptability of the solution û(r) for the three cases μ = 0.5, 1.0 and 2.0 is also given in the table. It is seen that the acceptability of the results based on MAE ≤ 10⁻⁴ for the case of μ = 1.0 is >90 % for all the proposed schemes. It is also observed that with an increase in the value of the coefficient μ the problem becomes stiffer and the accuracy decreases. Reliable solutions are still provided by the SQP and PSO-SQP methods even for the case μ = 2.0, i.e., when μ is close to its critical value; the accuracy of the solution, however, degrades close to the critical value.

The values of the fitness function ε as given in Eqs. (13), (16) and (19) are also calculated for 100 independent runs using weights optimized by the PSO, SQP and PSO-SQP algorithms. Results for the solvers are given in Table 8 based on values of FA, or the unsupervised error, ε ≤ 10⁻³, 10⁻⁵, 10⁻⁷ and 10⁻⁹. It is seen that the PSO-SQP algorithm gives consistently better and convergent results as compared to the other techniques. The rate of convergence decreases with an increase in the value of the coefficient μ, especially when it is near its critical value.
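The percentage of runs meeting each accuracy or convergence level can be computed as in the short Python sketch below. The function name `acceptance_percent` is hypothetical, and the values in the usage note are made-up illustrations, not results from Table 8.

```python
def acceptance_percent(values, thresholds):
    """Percentage of runs whose value (MAE or FA) is <= each threshold.

    values:     one MAE or fitness value per independent run.
    thresholds: acceptance levels such as 1e-3, 1e-4, 1e-5, 1e-6.
    """
    n = len(values)
    return {th: 100.0 * sum(v <= th for v in values) / n
            for th in thresholds}
```

For four hypothetical runs with values `[1e-4, 5e-6, 2e-3, 1e-7]`, the call `acceptance_percent(values, (1e-3, 1e-5))` gives 75 % at the level 10⁻³ and 50 % at the level 10⁻⁵.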

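Two additional performance factors, GMAE and MFA, are introduced here in order to better compare the results of the proposed solvers:

\[ \text{GMAE} = \frac{1}{R}\sum_{j=1}^{R}\left(\frac{1}{P}\sum_{i=1}^{P}\left|u_i - \hat{u}_{i}^{j}\right|\right), \qquad \text{MFA} = \frac{1}{R}\sum_{j=1}^{R}\varepsilon_j. \]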

Here P and R are integers representing the total number of grid points and the total number of independent runs of the solver, respectively. \( u_i \) denotes the exact solution of the Bratu equation for the ith input, \( \hat{u}_{i}^{j} \) is the approximate solution for the ith input and the jth independent run, and \( \varepsilon_j \) represents the fitness achieved in the jth run of the algorithm. These operators allow an in-depth evaluation of the performance of the algorithms, because small values of GMAE and MFA mean that the algorithm consistently produces accurate and convergent results.
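Following these definitions, the two operators can be sketched in Python as below, assuming that GMAE averages the absolute error first over the P grid points and then over the R runs, and that MFA is the plain average of the fitness achieved. The function names `gmae` and `mfa` are hypothetical.

```python
def gmae(exact, runs):
    """Global mean absolute error over P grid points and R runs.

    exact: list of the P exact values u_i.
    runs:  list of R runs, each a list of the P approximations u_hat_i.
    """
    P, R = len(exact), len(runs)
    per_run = [sum(abs(u - uh) for u, uh in zip(exact, run)) / P
               for run in runs]                  # MAE of each run
    return sum(per_run) / R


def mfa(fitness_values):
    """Mean fitness achieved over the R independent runs."""
    return sum(fitness_values) / len(fitness_values)
```

Because both operators are averages over all runs, a few divergent runs are enough to raise them noticeably, which is why they are sensitive to the reliability of a solver and not only to its best result.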

In our experimentation, we have taken 11 inputs or grid points, i.e., P = 11, and 100 independent runs, i.e., R = 100. The values of the GMAE and MFA are computed for the Bratu problems with μ = 0.5, 1.0 and 2.0 for the three proposed methods. The proposed results are provided in Table 9 along with the values of the respective standard deviations (STD). The reported results of GA, IPA and GA-IPA are also included in the same table for both global performance operators. It is evident that the results of the PSO-SQP hybrid approach are the best when compared to the PSO and SQP methods. Divergence is observed for a few runs of the SQP technique, and this has a significant impact on the values of GMAE and MFA. The solution û(r) obtained by the PSO-SQP hybrid approach agrees with the exact solution u(r) to up to 9, 8 and 5 decimal places for the μ = 0.5, 1.0 and 2.0 cases, respectively. In comparison with the reported results, it is observed that the proposed PSO results are consistently better than those of GAs for all three variants of the Bratu problem, while the results of the two hybrid approaches match closely and are better than those of the single algorithms used for optimization.

The computational complexity of the proposed algorithms is examined on the basis of the average time taken for their execution, along with the values of STD. The computational time analysis is based on 100 independent runs of each solver, and results are given in Table 9 for the mean execution time (MET) and its STD. It is observed that the computational time taken by the PSO-SQP method is a bit longer than that taken by the PSO and SQP techniques, but on the other hand it has a consistent performance advantage in terms of accuracy and reliability. The computational time taken by the proposed PSO, SQP and PSO-SQP is relatively less than that of the reported results [21] for GA, IPA and GA-IPA, while the results are obtained with almost the same level of accuracy and convergence. This analysis is carried out on a Dell Precision 390 Workstation, with Intel(R) Core(TM) 2 CPU 6000@2.40 GHz, 2.00 GB RAM, and running MATLAB version 2011a.
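A timing analysis of this kind can be sketched in Python as follows. The function name `mean_execution_time` and the convention that a solver is called with the run index as its only argument are hypothetical, and the sample standard deviation is an assumption; absolute timings naturally depend on the hardware and software environment.

```python
import statistics
import time


def mean_execution_time(solver, n_runs=100):
    """Return (MET, STD) of the wall-clock time over independent runs.

    solver: callable taking the run index (e.g. used as a random seed).
    n_runs: number of independent runs, at least two.
    """
    times = []
    for run in range(n_runs):
        start = time.perf_counter()
        solver(run)
        times.append(time.perf_counter() - start)
    return statistics.fmean(times), statistics.stdev(times)
```

The same harness can be applied to each solver in turn so that the reported MET and STD values are obtained under identical conditions.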

6 Conclusions

The nonlinear 2-dimensional Bratu equation has been solved effectively by an alternative approach using DE-ANNs optimized with the PSO, SQP and PSO-SQP algorithms, after the original problem is transformed into an equivalent one-dimensional nonlinear BVP with singularity at the origin.

Comparison of the results with exact solutions shows that the PSO-SQP method gives an absolute error in the range 10⁻⁷–10⁻¹⁰, 10⁻⁷–10⁻¹⁰ and 10⁻⁴–10⁻⁶ for the cases μ = 0.5, 1.0 and 2.0, respectively. In general, the PSO-SQP hybrid scheme not only gave more accurate results than PSO and SQP but was also relatively better than the reported numerical results of GA, IPA and GA-IPA.

The reliability and effectiveness of the proposed artificial intelligence techniques were validated by a large number of independent runs of the algorithms and their statistical analysis. The PSO-SQP method provided convergence in all independent runs for all three cases.

Comparative analysis of the three proposed algorithms showed that the PSO-SQP technique invariably provided the best mean fitness values and also the minimum value of the global mean absolute error for all problem cases, but it had a slightly longer computational time. The SQP technique provides results with comparable accuracy, but convergence is a problem. The results of the PSO algorithm converge for all problem cases, but the accuracy attained is lower than that of the other two approaches.

Comparison based on the global performance operators shows that the results of PSO are relatively better than those of GA, while the hybrid PSO-SQP approach achieves the same level of accuracy and convergence as GA-IPA but with relatively lower computational complexity.

Other optimization techniques, such as ant/bee colony optimization, genetic programming and differential evolution, can also be applied to solve the BVPs for Bratu-type equations; this can be a topic of further research on the subject.

References

Parisi DR, Mariani MC, Laborde MA (2003) Solving differential equations with unsupervised neural networks. Chem Eng Process 42(8–9):715–721

Khan JA, Raja MAZ, Qureshi IM (2011) Stochastic computational approach for complex non-linear ordinary differential equations. Chin Phys Lett 28(2):020206–020209

Yazdi HS, Pourreza R (2010) Unsupervised adaptive neural-fuzzy inference system for solving differential equations. Appl Soft Comput 10(1):267–275

Shirvany Y, Hayati M, Moradian R (2009) Multilayer perceptron neural networks with novel unsupervised training method for numerical solution of the partial differential equations. Appl Soft Comput 9(1):20–29

Beidokhti RS, Malek A (2009) Solving initial-boundary value problems for systems of partial differential equations using neural networks and optimization techniques. J Franklin Inst 346(9):898–913

Khan JA, Raja MAZ, Qureshi IM (2011) Hybrid evolutionary computational approach: application to van der Pol oscillator. Int J Phys Sci 6(31):7247–7261. doi:10.5897/IJPS11.922

Khan JA, Raja MAZ, Qureshi IM Novel (2011) Approach for van der Pol oscillator on the continuous time domain. Chin Phys Lett 28:110205. doi:10.1088/0256-307X/28/11/110205

Raja MAZ, Samar R (2014) Numerical treatment for nonlinear MHD Jeffery–Hamel problem using neural networks optimized with interior point algorithm. Neurocomputing 124:178–193. doi:10.1016/j.neucom.2013.07.013

Raja MAZ, Samar R (2014) Numerical treatment of nonlinear MHD Jeffery–Hamel problems using stochastic algorithms. Comput Fluids 91:28–46

Monterola C, Saloma C (2001) Solving the nonlinear Schrodinger equation with an unsupervised neural network. Opt Express 9(2):16

Raja MAZ, Ahmad SI (2014) Numerical treatment for solving one-dimensional Bratu problem using neural networks. Neural Comput Appl 24(3–4):549–561. doi:10.1007/s00521-012-1261-2

Raja MAZ (2014) Solution of one-dimension Bratu equation arising in fuel ignition model using ANN optimized with PSO and SQP. Connect Sci. doi:10.1080/09540091.2014.907555

Raja MAZ (2014) Stochastic numerical techniques for solving Troesch’s problem. Inf Sci. doi:10.1016/j.ins.2014.04.036

Raja MAZ (2014) Unsupervised neural networks for solving Troesch’s problem. Chin Phys B 23(1):018903

Khan JA, Raja MAZ, Qureshi IM (2011) Numerical treatment of nonlinear Emden–Fowler equation using stochastic technique. Ann Math Artif Intell 63(2):185–207

Kumar M, Yadav N (2011) Multilayer perceptrons and radial basis function neural network methods for the solution of differential equations: a survey. Comput Math Appl 62(10):3796–3811

Raja MAZ, Khan JA, Qureshi IM (2011) Swarm intelligent optimized neural networks for solving fractional differential equations. Int J Innov Comput Inf Control 7(11):6301–6318

Raja MAZ, Khan JA, Qureshi IM (2010) Evolutionary computational intelligence in solving the fractional differential equations. Lecture notes in computer science, vol 5990, part 1. Springer, ACIIDS Hue City, Vietnam, pp 231–240

Raja MAZ, Khan JA, Qureshi IM (2011) Solution of fractional order system of Bagley–Torvik equation using evolutionary computational intelligence. Math Probl Eng 2011:1–18, Article ID 765075

Raja MAZ, Khan JA, Qureshi IM (2010) A new stochastic approach for solution of Riccati differential equation of fractional order. Ann Math Artif Intell 60(3–4):229–250

Raja MAZ, Ahmad SI, Samar R (2013) Neural network optimized with evolutionary computing technique for solving the 2-dimensional Bratu problem. Neural Comput Appl 23(7–8):2199–2210. doi:10.1007/s00521-012-1170-4

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks, vol 4, Perth, Australia. IEEE Service Center, Piscataway, NJ, pp 1942–1948

Sivanandam SN, Visalakshi P (2007) Multiprocessor scheduling using hybrid particle swarm optimization with dynamically varying inertia. Int J Comput Sci Appl 4(3):95–106

Li G-D, Masuda S, Yamaguchi D, Nagai M (2009) The optimal GNN-PID control system using particle swarm optimization algorithm. Int J Innov Comput Inf Control 5(10):3457–3470

de A Araujo R (2010) Swarm-based translation-invariant morphological prediction method for financial time series forecasting. Inf Sci 180(24):4784–4805

Li X, Wang J (2007) A steganographic method based upon JPEG and particle swarm optimization algorithm. Inf Sci 177(15):3099–3109

Syam MI, Hamdan A (2006) An efficient method for solving Bratu equations. Appl Math Comput 176:704–713

Syam MI (2007) The modified Broyden-variational method for solving nonlinear elliptic differential equations. Chaos Solitons Fractals 32:392–404

Gidas B, Ni W, Nirenberg L (1979) Symmetry and related properties via the maximum principle. Commun Math Phys 68:209–243

Bratu G (1914) Sur les équations intégrales non linéaires. Bull Soc Math France 43:113–142

Gelfand IM (1963) Some problems in the theory of quasi-linear equations. Trans Am Math Soc Ser 2:295–381

Jacobsen J, Schmitt K (2002) The Liouville–Bratu–Gelfand problem for radial operators. J Differ Equ 184:283–298

Buckmire R (2003) On exact and numerical solutions of the one-dimensional planar Bratu problem. http://faculty.oxy.edu/ron/research/bratu/bratu.pdf

Frank-Kamenetski DA (1955) Diffusion and heat exchange in chemical kinetics. Princeton University Press, Princeton, NJ

Wan YQ, Guo Q, Pan N (2004) Thermo-electro-hydrodynamic model for electrospinning process. Int J Nonlinear Sci Numer Simul 5(1):5–8

Jalilian R (2010) Non-polynomial spline method for solving Bratu’s problem. Comput Phys Commun 181:1868–1872

Boyd JP (2011) One-point pseudospectral collocation for the one-dimensional Bratu equation. Appl Math Comput 217:5553–5565

Abbasbandy S, Hashemi MS, Liu C-S (2011) The Lie-group shooting method for solving the Bratu equation. Commun Nonlinear Sci Numer Simul 16:4238–4249

Nocedal J, Wright SJ (1999) Numerical optimization. Springer Series in Operations Research, Springer, Berlin

Fletcher R (1987) Practical methods of optimization. Wiley, New York

Schittkowski K (1985) NLQPL: a FORTRAN-subroutine solving constrained nonlinear programming problems. Ann Oper Res 5:485–500

Sivasubramani S, Swarup KS (2011) Sequential quadratic programming based differential evolution algorithm for optimal power flow problem. IET Gener Transm Distrib 5(11):1149–1154

Aleem SHEA, Zobaa AF, Abdel Aziz MM (2012) Optimal C-type passive filter based on minimization of the voltage harmonic distortion for nonlinear loads. IEEE Trans Ind Electron 59(1):281–289

Appendix

One set of design parameters of the DE-ANN optimized with PSO, SQP and PSO-SQP is tabulated in Tables 10, 11 and 12 for the Bratu equations given in problems 1, 2 and 3, respectively. The weights in this appendix are given as real numbers to 14 decimal places so that the results in the main body of the manuscript can be reproduced exactly and unnecessary rounding errors are avoided.

About this article

Cite this article

Raja, M.A.Z., Ahmad, SuI. & Samar, R. Solution of the 2-dimensional Bratu problem using neural network, swarm intelligence and sequential quadratic programming. Neural Comput & Applic 25, 1723–1739 (2014). https://doi.org/10.1007/s00521-014-1664-3

DOI: https://doi.org/10.1007/s00521-014-1664-3