Abstract

This paper investigates the problem of adaptive neural control for a class of strict-feedback stochastic nonlinear systems with multiple time-varying delays and input saturation. The problem is solved via the backstepping technique and the minimal learning parameter algorithm. Based on the Razumikhin lemma and the approximation capability of neural networks, a new adaptive neural control scheme is developed. The proposed control scheme ensures that the error variables are semi-globally uniformly ultimately bounded in the sense of four-moment, while all the signals in the closed-loop system are bounded in probability. Two simulation examples are provided to demonstrate the effectiveness of the proposed control approach.

1 Introduction

During the past decades, the problem of adaptive control for nonlinear systems has been extensively investigated in the control community, and many remarkable results have been reported in the literature; see references [1–5]. By introducing the backstepping technique, the restriction of the matching condition has been removed for nonlinear systems [1]. In addition, many approximation-based adaptive control schemes have been reported to deal with uncertain nonlinear systems with unknown nonlinear functions; see [6–16] for the deterministic cases, and [17–22] and the references therein for stochastic nonlinear systems. In [16], a novel adaptive fuzzy control scheme was proposed for nonlinear strict-feedback systems, in which only one adaptive parameter needs to be estimated online regardless of the order of the system. By combining fuzzy logic systems (FLS) with the backstepping technique, a class of strict-feedback stochastic nonlinear systems with unknown virtual control gain sign was considered in [17]. In [18–20], adaptive fuzzy output-feedback control schemes were presented for stochastic nonlinear systems whose states are not all available. However, none of the aforementioned works considers time delays.

Time delays and stochastic disturbances, which are often encountered in practical applications, are sources of instability and degradation of system performance. Recently, stability analysis and controller design for nonlinear time-delay systems have received increasing attention; see [23–35]. In general, there are two main methods for handling nonlinear systems with time delays. One is the Lyapunov–Krasovskii approach. For example, without requiring measurements of all the states, the authors of [29] designed an adaptive neural output-feedback controller for a class of stochastic nonlinear strict-feedback systems with time-varying delays. The other is the Lyapunov–Razumikhin approach, which is more concise than the Lyapunov–Krasovskii method for stability analysis and controller design. Nevertheless, only a few works [32–35] have investigated adaptive neural or fuzzy control of nonlinear time-varying delay systems by the Lyapunov–Razumikhin approach. It is worth noting that the main limitation of these results is that the time-delay functions only include the previous time-varying delay states. Thus, it is imperative to put forward an adaptive neural control scheme for stochastic nonlinear systems with all state time-varying delays by the Lyapunov–Razumikhin approach.

As another source of instability and performance degradation in practical systems, input saturation has attracted significant attention, and there exists extensive research on control systems with input saturation [36–43]. In [38], the authors investigated the problem of robust controller design for uncertain discrete time-delay systems with control input saturation. By introducing an auxiliary design system, an adaptive tracking control scheme was proposed in [40] for a class of uncertain multi-input multi-output nonlinear systems with non-symmetric input constraints. However, to the best of our knowledge, no results have been reported on adaptive neural or fuzzy control for stochastic nonlinear time-varying delay systems with input saturation.

Motivated by the aforementioned observations, this paper investigates the problem of adaptive neural control for strict-feedback stochastic nonlinear systems with multiple time-varying delays and input saturation. To design the controller, neural networks are employed to approximate the unknown nonlinear functions, and the Razumikhin lemma is used to deal with the time-delay terms. The proposed controller guarantees that all the signals in the closed-loop system are bounded in probability. The main contributions are summarized as follows: (1) For the first time, the Lyapunov–Razumikhin approach is utilized to solve the control problem for a class of strict-feedback stochastic nonlinear systems with all state time-varying delays, with guaranteed stability of the closed-loop system. (2) A novel adaptive neural control scheme is given for strict-feedback stochastic nonlinear time-delay systems with input saturation, which is more general than the existing results [32–34]. (3) The designed control scheme contains only one adaptive parameter to be estimated online, so the computational burden is significantly reduced, which makes the algorithm easy to implement in practice.

The remainder of this paper is organized as follows. Section 2 provides some preliminary results and the problem formulation. The controller design and stability analysis are given in Sect. 3. Two examples are provided in Sect. 4 to demonstrate the effectiveness of the results. Section 5 concludes the paper.

2 Preliminaries and problem formulation

In this section, some useful concepts and lemmas are introduced to develop the main result of the paper, and neural networks are then presented for approximating the unknown nonlinear functions. Finally, the problem of adaptive neural control for a class of stochastic nonlinear time-varying delay systems is formulated.

2.1 Preliminary results

Consider the following stochastic nonlinear time-delay system

\[dx(t)=f(t,x(t),x(t-\tau(t)))\,dt+g(t,x(t),x(t-\tau(t)))\,dw \tag{1}\]

with initial condition \(\{x(s):-\tau\leq s \leq 0\}=\xi\in C_{F_{0}}^{b}([-\tau,0];R^{n})\), where \(\tau(t):R^{+}\rightarrow[0,\tau]\) is a Borel measurable function; \(x(t) \in R^{n}\) denotes the state vector and \(x(t-\tau(t))\) is the state vector with time delay; \(w\) is an r-dimensional standard Wiener process defined on the complete probability space \((\Upomega, F, \{F_{t}\}_{t\geq 0},P)\) with \(\Upomega\) being a sample space, \(F\) being a σ-field, \(\{F_{t}\}_{t\geq 0}\) being a filtration, and \(P\) being a probability measure. \(f(\cdot)\) and \(g(\cdot)\) are locally Lipschitz functions satisfying \(f(t, 0, 0) = 0\) and \(g(t, 0, 0) = 0\).

Definition 1

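For any given \(V(t, x)\in C^{1,2}([-\tau,\infty)\times R^{n})\) related to the stochastic nonlinear time-delay system (1), define the infinitesimal generator \(\mathcal{L}\) as follows:

\[\mathcal{L}V(t,x)=\frac{\partial V}{\partial t}+\frac{\partial V}{\partial x}f+\frac{1}{2}\mathrm{Tr}\left\{g^{T}\frac{\partial^{2}V}{\partial x^{2}}g\right\} \tag{2}\]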

where Tr(A) is the trace of a matrix A.

Definition 2

([34]) Let \(p \geq 1\). The solution \(\{x(t), t \geq 0\}\) of the stochastic nonlinear time-delay system (1) with initial condition \(\xi \in \Upomega_{0}\) (where \(\Upomega_{0}\) is some compact set including the origin) is said to be p-moment semi-globally uniformly ultimately bounded if there exists a constant \(\bar{d}\) such that

Lemma 1

(Razumikhin Lemma [34]) Let \(p \geq 1\) and consider the stochastic nonlinear time-delay system (1). Suppose there exist a function \(V(t,x)\in C^{1,2}([-\tau,\infty)\times R^{n})\) and positive constants \(c_{1}, c_{2}, \mu_{1}, \mu_{2}\) and \(q > 1\) satisfying the following inequalities

for all t ≥ 0, such that

Then the solution x(t, ξ) of system (1) is p-moment uniformly ultimately bounded.

Lemma 2

(Young’s inequality [2]) For any \((x,y)\in R^{2}\), the following inequality holds:

\[xy\leq\frac{\varepsilon^{p}}{p}|x|^{p}+\frac{1}{q\varepsilon^{q}}|y|^{q},\]

where \(\varepsilon > 0, p>1, q>1\), and (p − 1) (q − 1) = 1.
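As a quick sanity check, Young's inequality in the weighted form \(xy\leq\frac{\varepsilon^{p}}{p}|x|^{p}+\frac{1}{q\varepsilon^{q}}|y|^{q}\) with \((p-1)(q-1)=1\) can be tested on random samples. The following is only an illustrative sketch; the function names `young_rhs` and `worst_violation` and the sampling ranges are assumptions made for this example.

```python
import random


def young_rhs(x: float, y: float, eps: float, p: float) -> float:
    """Right-hand side of Young's inequality for exponent p > 1 and weight eps > 0."""
    q = p / (p - 1.0)  # conjugate exponent, so that (p - 1)(q - 1) = 1
    return (eps ** p / p) * abs(x) ** p + abs(y) ** q / (q * eps ** q)


def worst_violation(trials: int = 10000, seed: int = 1) -> float:
    """Largest value of x*y - rhs over random samples; it should never be positive."""
    rng = random.Random(seed)
    worst = float("-inf")
    for _ in range(trials):
        x = rng.uniform(-5.0, 5.0)
        y = rng.uniform(-5.0, 5.0)
        eps = rng.uniform(0.1, 3.0)   # illustrative range for the weight
        p = rng.uniform(1.1, 6.0)     # illustrative range for the exponent
        worst = max(worst, x * y - young_rhs(x, y, eps, p))
    return worst


violation = worst_violation()
```

In the backstepping steps the weight \(\varepsilon\) acts as a free design knob: a smaller weight on one factor is paid for by a larger weight on the other.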

Lemma 3

([6]) For any \(\eta \in R\) and \(\varepsilon > 0\), the following holds:

\[0\leq|\eta|-\eta\tanh\left(\frac{\eta}{\varepsilon}\right)\leq\delta\varepsilon,\]

where \(\delta\) is a constant that satisfies \(\delta = e^{-(\delta+1)}\); i.e., \(\delta \approx 0.2785\).
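The bound \(0\leq|\eta|-\eta\tanh(\eta/\varepsilon)\leq\delta\varepsilon\) and the fixed-point relation \(\delta=e^{-(\delta+1)}\), whose root is approximately 0.2785, can both be checked numerically. The sketch below is illustrative only; the helper names and the scan range are assumptions for this example.

```python
import math

DELTA = 0.2785  # approximate root of delta = exp(-(delta + 1))


def tanh_gap(eta: float, eps: float) -> float:
    """Return |eta| - eta * tanh(eta / eps), the quantity bounded in Lemma 3."""
    return abs(eta) - eta * math.tanh(eta / eps)


def max_gap(eps: float, n: int = 20001, span: float = 10.0) -> float:
    """Scan eta over [-span * eps, span * eps] and return the largest gap found."""
    etas = (eps * span * (2.0 * k / (n - 1) - 1.0) for k in range(n))
    return max(tanh_gap(eta, eps) for eta in etas)


# Residual of the fixed-point equation; it should be close to zero.
residual = DELTA - math.exp(-(DELTA + 1.0))

# Largest observed gap for a few values of eps, to compare against DELTA * eps.
worst = {eps: max_gap(eps) for eps in (0.1, 1.0, 5.0)}
```

The scan indicates that the gap scales linearly with \(\varepsilon\), which is why the residual terms \(\delta\sigma_{i}\) in the later design steps can be made small through the design constants.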

Lemma 4

([25]) Consider a dynamic system of the following form

where ϱ and κ are positive constants and w(t) is a positive function. By choosing the initial condition \(\hat{\theta}(0) \geq 0\), we have \(\hat{\theta}(t) \geq 0\) for all t ≥ 0.

Remark 1

Since \(\hat{\theta}(\cdot)\) is an estimate of the unknown positive constant \(\theta\), choosing \(\hat{\theta}(0) \geq 0\) is always reasonable. This result will be used in the backstepping design procedure.
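The nonnegativity property of Lemma 4 can be illustrated with a short simulation. The sketch below assumes a first-order dynamic of the form \(\dot{\hat{\theta}}=-\varrho\hat{\theta}+\kappa w(t)\) with \(w(t)\geq 0\). This form is an assumption made for illustration, as are the forward-Euler discretization, the function name `simulate_theta` and the numerical values.

```python
import math
from typing import Callable, List


def simulate_theta(theta0: float, rho: float, kappa: float,
                   w: Callable[[float], float],
                   t_end: float = 10.0, dt: float = 1e-3) -> List[float]:
    """Forward-Euler integration of the assumed dynamic theta' = -rho*theta + kappa*w(t)."""
    if theta0 < 0.0:
        raise ValueError("the initial estimate must be nonnegative")
    if rho * dt >= 1.0:
        raise ValueError("step size too large: need rho * dt < 1")
    steps = int(round(t_end / dt))
    theta = theta0
    trajectory = [theta]
    for k in range(steps):
        # With rho*dt < 1 and w >= 0, each Euler step maps [0, inf) into itself.
        theta += dt * (-rho * theta + kappa * w(k * dt))
        trajectory.append(theta)
    return trajectory


# Hypothetical positive driving signal and gains, chosen only for the demonstration.
trajectory = simulate_theta(0.0, rho=2.0, kappa=0.5,
                            w=lambda t: 1.0 + math.sin(t) ** 2)
```

Starting from \(\hat{\theta}(0)=0\), the simulated estimate never leaves the nonnegative half-line, which is the property the backstepping design relies on.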

2.2 Neural networks

In this paper, the radial basis function (RBF) neural networks are used to approximate an unknown continuous function \(f(Z): R^{q}\rightarrow R\),

where \(Z \in \Upomega_{Z} \subset R^{q}\) represents the input vector and \(q\) denotes the neural network input dimension. \(W=[w_{1}, w_{2}, \dots, w_{l}]^{T} \in R^{l}\) is the weight vector; \(l > 1\) denotes the neural network node number. \(S(Z)=[s_{1}(Z), s_{2}(Z), \dots, s_{l}(Z)]^{T} \in R^{l}\) is the basis function vector with \(s_{i}(Z)\) defined by

where \(\mu_{i} = [\mu_{i1}, \mu_{i2}, \ldots, \mu_{iq}]^{T}\) is the center of the receptive field and \(\eta\) is the width of the Gaussian function. For any unknown nonlinear function \(f(Z)\) defined over a compact set \(\Upomega_{Z}\subset R^{q}\), there exist a neural network \(W^{\ast^{T}}S(Z)\) and an arbitrary accuracy \(\varepsilon>0\) such that

\[f(Z)=W^{\ast^{T}}S(Z)+\delta(Z),\]

where \(W^{\ast}\) is the ideal constant weight vector and is expressed as

and δ(Z) is the approximation error, which satisfies \(|\delta(Z)| \leq \varepsilon\).
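To make the structure of the approximator concrete, the sketch below evaluates a Gaussian RBF network \(W^{T}S(Z)\). It assumes the common basis-function form \(s_{i}(Z)=\exp(-\|Z-\mu_{i}\|^{2}/\eta^{2})\) with a single shared width \(\eta\); that exact form, the function names and the sample weights are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def gaussian_basis(z: Sequence[float],
                   centers: Sequence[Sequence[float]],
                   width: float) -> List[float]:
    """Basis vector S(Z), assuming s_i(Z) = exp(-||Z - mu_i||^2 / width^2)."""
    basis = []
    for mu in centers:
        sq_dist = sum((zk - mk) ** 2 for zk, mk in zip(z, mu))
        basis.append(math.exp(-sq_dist / width ** 2))
    return basis


def rbf_output(weights: Sequence[float],
               z: Sequence[float],
               centers: Sequence[Sequence[float]],
               width: float) -> float:
    """Network output W^T S(Z)."""
    return sum(w * s for w, s in zip(weights, gaussian_basis(z, centers, width)))


# Seven centers spaced evenly in [-3, 3] for a scalar input, with unit width.
centers = [[-3.0 + k] for k in range(7)]
weights = [1.0] * 7  # placeholder weights, not identified from any data
basis_at_origin = gaussian_basis([0.0], centers, 1.0)
output_at_origin = rbf_output(weights, [0.0], centers, 1.0)
```

Each basis value lies in \((0, 1]\) and equals 1 only when the input coincides with a center, so \(\|S(Z)\|\) stays bounded regardless of the weights.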

Lemma 5

([25]) Consider the Gaussian RBF networks (9) and (10). Let \(\rho := \frac{1}{2}\min _{i\neq j}\|\mu_{i}-\mu_{j}\|\). Then we can take an upper bound of \(\|S(Z)\|\) as

Remark 2

It has been pointed out in [25] that the constant \(s\) is finite and independent of both the neural network input and the number of neural network nodes.

2.3 Problem formulation

Consider a class of strict-feedback stochastic nonlinear systems with time-varying delays in the following form

where \(\bar{x}_{n}=[x_{1},\ldots, x_{n}]^{T}\in R^{n}\) and \(y \in R\) denote the state vector and the output of the system, respectively; \(\bar{x}_{i}=[x_{1},\ldots, x_{i}]^{T}\in R^{i}\) \((i=1, 2, \ldots, n)\); \(w\) is defined as in system (1); \(q_{i}(\bar{x}_{n, \tau(t)})\) are unknown smooth nonlinear time-delay functions with \(q_{i}(0)=0\), defined by \(q_{i}(\bar{x}_{n, \tau(t)})= q_{i}(x_{1}(t-\tau_{1}(t)), x_{2}(t-\tau_{2}(t)), \ldots, x_{n}(t-\tau_{n}(t)))\); and \(\tau_{i}(t): R^{+}\rightarrow [0,\tau]\) is an uncertain time-varying delay. For \(t \in [-\tau, 0]\), \(\bar{x}_{n}(t)=\phi(t)\), where the initial function \(\phi(t)\) is smooth and bounded. \(f_{i}(\cdot), g_{i}(\cdot):R^{i}\rightarrow R\) and \(\psi_{i}^{T}(\cdot):R^{i}\rightarrow R^{r}\) represent the unknown smooth nonlinear functions with \(f_{i}(0)=0\) and \(\psi_{i}^{T}(0)=0\) \((1\leq i\leq n)\). Moreover, \(u \in R\) denotes the input signal subject to symmetric saturation nonlinearity expressed as follows:

where \(u_{M} > 0\) is a known bound of \(u(t)\). Obviously, when \(|v(t)| = u_{M}\), the saturation function has two sharp corners, so the backstepping technique cannot be applied directly. To solve this problem, the saturation is approximated by a smooth function defined as

It follows that Eq. (14) becomes

where \(d(v)=\mathrm{sat}(v)-g(v)\) is a bounded function in time and its bound can be constrained by

Applying the mean-value theorem and choosing \(v_{0}=0\), it is easy to obtain that

From (15)−(17), system (13) can be transformed as follows:

The control objective is to design an adaptive neural controller for system (13) such that the error variables are semi-globally uniformly ultimately bounded in the sense of four-moment, while all the signals in the closed-loop system are bounded in probability.
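The smoothing of the saturation nonlinearity can be checked numerically. The sketch below assumes the commonly used hyperbolic-tangent approximation \(g(v)=u_{M}\tanh(v/u_{M})\); this specific form, the function names and the scan range are assumptions made for illustration. Under that assumption, the mismatch \(d(v)=\mathrm{sat}(v)-g(v)\) is largest at \(|v|=u_{M}\), where it equals \(u_{M}(1-\tanh(1))\).

```python
import math


def sat(v: float, u_max: float) -> float:
    """Symmetric hard saturation with bound u_max."""
    return max(-u_max, min(u_max, v))


def smooth_sat(v: float, u_max: float) -> float:
    """Assumed smooth approximation g(v) = u_max * tanh(v / u_max)."""
    return u_max * math.tanh(v / u_max)


def max_mismatch(u_max: float, n: int = 40001, span: float = 5.0) -> float:
    """Largest |sat(v) - g(v)| over a uniform grid on [-span*u_max, span*u_max]."""
    vs = (u_max * span * (2.0 * k / (n - 1) - 1.0) for k in range(n))
    return max(abs(sat(v, u_max) - smooth_sat(v, u_max)) for v in vs)


U_MAX = 6.0  # saturation bound used in the simulation examples
analytic_bound = U_MAX * (1.0 - math.tanh(1.0))
observed_bound = max_mismatch(U_MAX)
```

Because the mismatch is bounded by a known constant, it can be treated as a bounded disturbance in the final backstepping step, while the smooth part \(g(v)\) is differentiable everywhere.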

To achieve the goal, the following assumptions are imposed on the system (18).

Assumption 1

The signs of \(g_{i}(\bar{x}_{i}), i=1, 2, \ldots, n\) are known. There exist unknown constants \(b_{m}\) and \(b_{M}\) such that \(g_{i}(\bar{x}_{i})\) satisfies

Remark 3

Assumption 1 implies that the function \(g_{i}(\bar{x}_{i})\) is either strictly positive or strictly negative. Without loss of generality, it is further assumed that \(b_{m} \leq g_{i}(\bar{x}_{i}) \leq b_{M}.\) The constants \(b_{m}\) and \(b_{M}\) do not appear in the designed controller, so they can be unknown.

Assumption 2

([43]) For the function \(g_{{v_{u} }}\) there exists an unknown positive constant \(g_{m}\) such that

Remark 4

According to Assumptions 1 and 2, the following inequality holds:

with \(b=\min\{b_{m}, b_{m}g_{m}\}\) being an unknown constant.

Assumption 3

([35]) Suppose that \(Q_{ij}(\cdot)\) is a class-\(\mathcal{K}_{\infty}\) function, and the time-delay term \(q_{i}(\bar{x}_{n, \tau(t)})\) satisfies

To develop the backstepping design scheme, we need to make the following coordinate transformations:

Based on the Razumikhin lemma, the intermediate control function \(\alpha_{i}(Z_{i})\), the actual control law \(v\) and the adaptive law \(\hat{\theta}\) are obtained in the backstepping procedure. Define a constant

where \(b\) is given in Remark 4. \(\|W_{i}^{*}\|\) will be specified later. Let \(\hat{\theta}\) denote the estimate of the unknown constant \(\theta\). Moreover, \(\tilde{\theta}=\theta-\hat{\theta}\) is the parameter error.

The intermediate control function \(\alpha_{i}\), the control law \(v\) and the adaptive law \(\hat{\theta}\) for the strict-feedback stochastic nonlinear time-delay system (13) will be constructed in the following forms:

where \(k_{i}, \epsilon_{i}, \lambda, \gamma, \eta\) are positive design parameters, \(Z_{1}=x_{1}\in\Upomega_{Z_{1}}\subset R^{1}\), \(Z_{i}=[\bar{x}^{T}_{i}, \hat{\theta}]\in\Upomega_{Z_{i}}\subset R^{i+1}, (i=2, 3,\ldots, n)\).

Before the backstepping design procedure, we give a useful lemma first, which will be used to deal with the time-delay term in the control design procedure.

Lemma 6

For the coordinate transformations (23), the following inequality holds:

where \(Z(t)=[z_{1}, z_{2}, \ldots, z_{n}, |\tilde{\theta}|^{1/2}]^{T}\), \(\varrho\) is a constant, and \(\phi(s)=s(a_{0}s+b_{0})\) is an unknown class \(\mathcal{K}_{\infty}\) function with \(a_{0}\) and \(b_{0}\) being positive constants.

Proof

From Lemma 5 and the definition of \(\alpha_{i}\) in (25), it follows that

Substituting (29) into (23) gives

where \(Z(t)=\left[z_{1}, z_{2}, \ldots, z_{n}, |\tilde{\theta}|^{1/2}\right]^{T}, \phi(s)=s(a_{0}s+b_{0}), a_{0}= \sum\nolimits_{j=1}^{n}s_{j}, b_{0}=\sum\nolimits_{j=1}^{n}\left(k_{j}+\frac{3}{4}\right)+1\), and \(\varrho=\sum\nolimits_{j=1}^{n}s_{j}|\theta|\).

3 Controller design and stability analysis

3.1 Controller design

The backstepping design procedure is used in this section to construct the adaptive neural controller. In each step, an RBF neural network is employed to approximate the unknown continuous nonlinear function, and an intermediate control function \(\alpha_{i}\) is obtained to stabilize the corresponding subsystem; the actual control law \(v\) is designed in the final step. For simplicity, \(S_{i}(Z_{i})\) is sometimes denoted by \(S_{i}\), and \(f_{i}\), \(g_{i}\) and \(\psi_{i}\) stand for \(f_{i}(\bar{x}_{i})\), \(g_{i}(\bar{x}_{i})\) and \(\psi_{i}(\bar{x}_{i})\), respectively.

Step 1: Let \(z_{1}=x_{1}\). Then we have

Consider a Lyapunov function \(V_{1}\) as

From (2), the infinitesimal generator of \(V_{1}\) satisfies

By using Young’s inequality, it follows that

For the time-delay term \(q_{1}(\bar{x}_{n,\tau(t)}),\) by using Assumption 3 and Lemma 6, we can obtain the following inequality

where \(\bar{Q}_{1j}(s)=Q_{1j}(2\phi(s))\). \(\bar{Q}_{1j}(s)\) is still a class \(\mathcal{K}_{\infty}\) function, and it can be rewritten as \(\bar{Q}_{1j}(s)=s\phi_{1j}(s)\) with \(\phi_{1j}(s)\) being a continuous function.

Combining \(\|Z\| \leq \|\bar{Z}_{1}\|+\sum\nolimits_{k=2}^{n}|z_{k}|\) with Lemma 3 yields

where \(l_{1}=qn\), and \(F_{1}=\sum\nolimits_{j=1}^{n}(\bar{Q}_{1j}(l_{1}\|\bar{Z}_{1}\|)+Q_{1j}(2\varrho))\).

Substituting inequalities (32) and (33) into (31), we have

where \(\bar{f}_{1}=f_{1}+\sum\nolimits_{j=1}^{n}\sum\nolimits_{k=2}^{n}\frac{3}{4}l_{1}^{\frac{4}{3}}z_{1}+\frac{9}{4\eta_{1}^{2}}\|\psi_{1}\|^{4}+F_{1}\tanh\left(\frac{z_{1}^{3}F_{1}}{\sigma_{1}}\right)+\frac{3}{4}z_{1}\). Obviously, \(\bar{f}_{1}\) is an unknown nonlinear function because it contains the unknown functions \(f_{1}\) and \(\psi_{1}\), so it cannot be implemented in practice. Hence, there exists a neural network \(W_{1}^{*^{T}}S_{1}(Z_{1}), Z_{1}=x_{1}\in \Upomega_{Z_{1}}\subset R^{1}\) such that

where \(\delta_{1}(Z_{1})\) denotes the approximation error and \(\varepsilon_{1}\) is a positive constant.

Based on Lemma 3 and the definition of θ, we have

Combining inequalities (34) and (36) implies that the following inequality holds

Adding and subtracting \(\alpha_{1}\) in (37) and using \(z_{2}=x_{2}-\alpha_{1}\), we get

Taking the intermediate control function in (25) with \(i=1\) and applying Young’s inequality gives

By using inequalities (39) and (40), it follows that

where \(c_{1}=k_{1}b>0, \rho_{1}=\delta(\sigma_{1}+b\theta\epsilon_{1})+\frac{1}{4}(\varepsilon_{1}^{4}+\eta_{1}^{2})\). The last term in (41) will be dealt with in the next step.

Step i \((2\leq i\leq n-1)\): Let \(z_{i}=x_{i}-\alpha_{i-1}\). The error dynamic system can be written as

where

Choose the Lyapunov function candidate \(V_{i}\) as

According to (42)–(44) and (2), we have

Repeating the deduction of Step 1, we obtain

where \(c_{j}=k_{j}b > 0,\,(j=1,2,\ldots,i-1)\), \(\rho_{1}=\delta(\sigma_{1}+b\theta\epsilon_{1})+\frac{1}{4}(\varepsilon_{1}^{4}+\eta_{1}^{2})\), and \(\rho_{j}=\delta(\sigma_{j}+\kappa_{j}+b\theta\epsilon_{j})+\frac{1}{4}(\varepsilon_{j}^{4}+\eta_{j}^{2}),\, j=2,3,\ldots,i-1\).

From Young’s inequality, the rightmost term in (45) satisfies

By using the Razumikhin Lemma, Young’s inequality and Lemma 3 to deal with the time-delay term in (45), the following inequalities hold

where \(\bar{Z}_{i}=[z_{1},z_{2},\ldots,z_{i},|\tilde{\theta}|^{1/2}]^{T}, l_{i} = q((n-i)+1),\) and \(\bar{Q}_{ij}(s)=s\phi_{ij}(s)\).

On the basis of (48) and (49), we have

where \(F_{i} =\sum\nolimits_{j=1}^{n}(\bar{Q}_{ij}(l_{i}\|\bar{Z}_{i}\|)+Q_{ij}(2\varrho))+\sum\limits_{m=1}^{i-1}\sum\limits_{j=1}^{n}\left|\frac{\partial\alpha_{i-1}}{\partial x_{m}}\right|(\bar{Q}_{mj}(l_{i}\|\bar{Z}_{i}\|)+Q_{mj}(2\varrho))\).

Substituting (46), (47) and (50) into (45), it follows that

where

The function \(\varphi_{i}(Z_{i})\) will be specified later. Thus, \(\bar{f}_{i}(Z_{i})\) can be approximated by the neural network \(W_{i}^{*^{T}}S_{i}(Z_{i}), Z_{i}=[\bar{x}_{i}, \hat{\theta}]^{T}\in \Upomega_{Z_{i}}\subset R^{i+1}\) such that

It is easy to verify

Similar to the aforementioned steps, we have

Using the intermediate control function \(\alpha_{i}\) in (25) and Young’s inequality, we obtain

Based on (55), (56) and (54), it follows that

where \(c_{j}=k_{j}b > 0,\, j=1,2,\ldots,n-1, \rho_{1}=\delta(\sigma_{1}+b\theta\epsilon_{1})+\frac{1}{4}(\varepsilon_{1}^{4}+\eta_{1}^{2})\), and \(\rho_{j}=\delta(\sigma_{j}+\kappa_{j}+b\theta\epsilon_{j})+\frac{1}{4}(\varepsilon_{j}^{4}+\eta_{j}^{2}), j=2, 3,\ldots,n-1\).

Step n: This is the final step, in which the actual controller \(v\) is developed. From \(z_{n}=x_{n}-\alpha_{n-1}\), we have

Consider the stochastic Lyapunov function \(V_{n}\) as

From Definition 1, we obtain

where \(\mathcal{L}\alpha_{n-1}\) is given in (43) with i = n, and \(\mathcal{L}V_{n-1}\) denotes (57) with i = n − 1.

For the last term in (60), applying Young’s inequality leads to

Based on the similar method to deal with the time-delay terms in (60), the following inequality can be obtained

where \(F_{n} = \sum\nolimits_{j=1}^{n}(\bar{Q}_{nj}(l_{n}\|\bar{Z}_{n}\|)+Q_{nj}(2\varrho))+\sum\nolimits_{m=1}^{n-1}\sum\nolimits_{j=1}^{n}|\frac{\partial\alpha_{n-1}}{\partial x_{m}}|(\bar{Q}_{mj}(l_{n}\|\bar{Z}_{n}\|)+Q_{mj}(2\varrho))\).

Substituting (61) and (62) into (60) gives

where

Hence, there exists a neural network \(W_{n}^{*^{T}}S_{n}(Z_{n}), Z_{n}=[\bar{x}_{n}, \hat{\theta}]^{T}\in \Upomega_{Z_{n}}\subset R^{n+1}\) such that

On the basis of (63), (64), we have

Based on the inequality (16) and the actual control input v in (26), the following inequalities hold

In view of inequalities (66) and (67), we have

Furthermore, choose the adaptive law as described in (27):

From Young’s inequality, the following inequality holds

Then, with the help of (68) and (70), it follows that

where \(c_{j}=k_{j}b > 0,\,(j=1,2,\ldots,n), \rho_{1}=\delta(\sigma_{1}+b\theta\epsilon_{1})+\frac{1}{4}(\varepsilon_{1}^{4}+\eta_{1}^{2}), \rho_{j}=\delta(\sigma_{j}+\kappa_{j}+b\theta\epsilon_{j})+\frac{1}{4}(\varepsilon_{j}^{4}+\eta_{j}^{2}),\, (j=2, 3,\ldots,n-1)\), and \(\rho_{n}=\delta(\sigma_{n}+\kappa_{n}+b\theta\epsilon_{n})+\frac{1}{4}(\eta_{n}^{2}+\varepsilon_{n}^{4})+\frac{1}{4g_{m}}\eta^{2}b_{M}D^{4}+\frac{b\gamma}{2\lambda}\theta^{2}\).

3.2 Stability analysis

So far, based on the Razumikhin lemma and the backstepping technique, the adaptive neural controller design has been completed. The main result is now summarized in the following theorem.

Theorem 1

Consider the stochastic nonlinear time-delay system (13) subject to input saturation (14) under Assumptions 1–3. For bounded initial conditions with \(\hat{\theta}(0) \geq 0,\) the intermediate control function \(\alpha_{i}\) in (25), the actual control law \(v\) in (26), and the adaptive law \(\hat{\theta}\) in (27) guarantee that the error variables are semi-globally uniformly ultimately bounded in the sense of four-moment while all the signals in the closed-loop system are bounded in probability.

Proof

Choosing the stochastic Lyapunov function as \(V=V_{n}\) yields

From the definition of \(\hat{\theta}\), we have

Applying Lemma 4 to the last term in (73) results in

where \(\Uptheta_{j}=\lambda s_{j}\sum\nolimits_{i=2}^{j}|z_{i}^{3}\frac{\partial\alpha_{i-1}}{\partial\hat{\theta}}|\), which means that

Combining (73), (74) and (75), it follows that the rightmost term of (72) is negative. Clearly,

where \(\mu_{1}=\min\{4c_{j}, \gamma, j=1, 2, \ldots, n \}\) and \(\mu_{2}=\sum\nolimits_{j=1}^{n}\rho_{j}\).

Hence, from (76) and the Razumikhin lemma, the error variables are semi-globally uniformly ultimately bounded in the sense of four-moment, and \(\tilde{\theta}\) is bounded in probability. Since \(\theta\) is a constant, \(\hat{\theta}\) is bounded in probability. Since \(\alpha_{i}\) is a function of \(z_{i}\) and \(\hat{\theta}\), \(\alpha_{i}\) is also bounded in probability. Consequently, all the signals in the closed-loop system are bounded in probability.

Remark 5

By appropriately choosing the design parameters \(k_{i}, \epsilon_{i}, \lambda, \gamma, \eta\), for example, by first choosing \(k_{i}\) and \(\gamma\) properly and then choosing \(\epsilon_{i}, \eta\) sufficiently small and \(\lambda\) sufficiently large, all the signals in the closed-loop system can be made to converge to a small neighborhood of the origin.

4 Simulation examples

In this section, two simulation examples are used to illustrate the effectiveness of the proposed control approach.

Example 1

Consider the following second-order nonlinear time-delay system

where \(u_{M}\) is chosen as \(u_{M}=6\). The time-varying delays are defined as \(\tau_{1}(t)=1+5\sin(t)\), \(\tau_{2}(t)=1+3\cos(t)\). According to Theorem 1, the intermediate control function \(\alpha_{1}\) and the control law \(v\) are chosen, respectively, as

where \(z_{1}=x_{1}, z_{2}=x_{2}-\alpha_{1}, Z_{1}=z_{1} \in R^{1}, Z_{2}=[z_{1}, z_{2}, \hat{\theta}]\in R^{3}\). The adaptive law is given as

In the simulation, neural network \(W_{1}^{\ast^{T}}S_{1}(Z_{1})\) contains 7 nodes with centers spaced evenly in \([-3, 3]\), neural network \(W_{2}^{\ast^{T}}S_{2}(Z_{2})\) includes 343 nodes with centers spaced evenly in \([-3, 3]\times[-3, 3]\times[0, 3]\), and all widths are equal to 1. The design parameters are chosen as \(k_{1}=15, k_{2}=5, \epsilon_{1}=\epsilon_{2}=2, \lambda=0.5, \gamma=1\) and \(\eta=1\). The simulation results are shown in Figs. 1, 2, 3 and 4 with the initial condition \(\phi(t)=[0.1,-0.2]^{T}, t\in[-\tau, 0], \hat{\theta}(0)=0\). Figure 1 gives the response of the state variables \(x_{1}\) and \(x_{2}\). Figure 2 illustrates the trajectory of the adaptive law \(\hat{\theta}\). Figure 3 depicts the trajectory of the saturation function output signal \(u\). Figure 4 shows the control input signal \(v\).
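The node counts quoted for the two networks are consistent with a regular grid of seven centers per input dimension, since \(7^{3}=343\). The sketch below builds such grids; the seven-per-axis layout is an inference from the node counts, and the helper names are assumptions made for this example.

```python
from itertools import product
from typing import List, Sequence, Tuple


def linspace(lo: float, hi: float, n: int) -> List[float]:
    """Return n evenly spaced points on [lo, hi], endpoints included."""
    step = (hi - lo) / (n - 1)
    return [lo + k * step for k in range(n)]


def grid_centers(intervals: Sequence[Tuple[float, float]],
                 nodes_per_axis: int) -> List[Tuple[float, ...]]:
    """Cartesian grid of RBF centers with the same number of nodes on every axis."""
    axes = [linspace(lo, hi, nodes_per_axis) for lo, hi in intervals]
    return list(product(*axes))


# One-dimensional input Z_1 and three-dimensional input Z_2.
centers_1 = grid_centers([(-3.0, 3.0)], 7)
centers_2 = grid_centers([(-3.0, 3.0), (-3.0, 3.0), (0.0, 3.0)], 7)
```

Adding a fourth interval to the same helper gives \(7^{4}=2401\) centers, which shows how quickly a grid-based RBF network grows with the input dimension.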

Example 2

To further show the control capability of the proposed approach, consider a third-order stochastic nonlinear time-delay system of the following form:

where \(u_{M}=6\) is the upper bound of the input saturation, and \(\tau_{1}(t)=1+2\sin(t), \tau_{2}(t)=2+4\cos(t), \tau_{3}(t)=5+3\sin(t)\) are the time-varying delays. The intermediate control function \(\alpha_{i}\), the control law \(v\), and the adaptive law \(\hat{\theta}\) are chosen as

where \(z_{1}=x_{1}, z_{2}=x_{2}-\alpha_{1}, z_{3}=x_{3}-\alpha_{2}, Z_{1}=z_{1}\in R^{1}, Z_{2}=[z_{1}, z_{2}, \hat{\theta}]\in R^{3}, Z_{3}=[z_{1}, z_{2}, z_{3}, \hat{\theta}]\in R^{4}\).

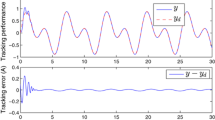

The design parameters are chosen as \(k_{1}=5, k_{2}=8, k_{3}=10, \epsilon_{1}=\epsilon_{2}=4, \epsilon_{3}=5, \lambda=2, \gamma=0.4\) and \(\eta=2\) in the simulation. The initial conditions are chosen as \(\phi(t)=[0.1,-0.2,0.3]^{T}, t\in[-\tau, 0], \hat{\theta}(0)=0.4\), and the neural networks are chosen as follows. Neural networks \(W_{1}^{\ast^{T}}S_{1}(Z_{1})\) and \(W_{2}^{\ast^{T}}S_{2}(Z_{2})\) are given as in Example 1, and \(W_{3}^{\ast^{T}}S_{3}(Z_{3})\) is chosen to contain 2401 nodes with centers spaced evenly in \([-3, 3]\times[-3, 3]\times[-3, 3]\times[0, 3]\), with all widths equal to 1. The simulation results are shown in Figs. 5, 6, 7 and 8. Figure 5 exhibits the response of the state variables \(x_{1}\), \(x_{2}\) and \(x_{3}\). The trajectory of the adaptive law \(\hat{\theta}\) is given in Fig. 6. Figure 7 depicts the trajectory of the saturation function output signal \(u\). The control input signal \(v\) is shown in Fig. 8.
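Simulations of stochastic systems with time-varying delays are often carried out with a fixed-step Euler–Maruyama scheme and a history buffer for the delayed state. The sketch below shows that mechanism on a hypothetical scalar system \(dx=(-2x+0.5\,x(t-\tau(t)))\,dt+0.1\,x\,dw\) with \(\tau(t)=1+0.5\sin(t)\). This system, its coefficients and the function name `simulate` are assumptions made purely for illustration; they are not the example systems or the controller of this paper.

```python
import math
import random
from typing import List


def simulate(t_end: float = 10.0, dt: float = 1e-3, tau_max: float = 1.5,
             x0: float = 0.1, seed: int = 0) -> List[float]:
    """Euler-Maruyama integration of a hypothetical scalar stochastic delay system."""
    rng = random.Random(seed)
    n_hist = int(round(tau_max / dt))   # samples needed to cover [-tau_max, 0]
    n_steps = int(round(t_end / dt))
    xs = [x0] * (n_hist + 1)            # constant initial function on [-tau_max, 0]
    for k in range(n_steps):
        t = k * dt
        tau = 1.0 + 0.5 * math.sin(t)   # time-varying delay, stays in [0.5, 1.5]
        lag = int(round(tau / dt))      # delay expressed in whole time steps
        x_now = xs[-1]
        x_delayed = xs[-1 - lag]        # look up x(t - tau(t)) in the buffer
        dw = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment with variance dt
        drift = -2.0 * x_now + 0.5 * x_delayed
        diffusion = 0.1 * x_now
        xs.append(x_now + drift * dt + diffusion * dw)
    return xs[n_hist:]                  # samples of x on [0, t_end]


trajectory = simulate(t_end=2.0)
```

The history buffer must cover the largest delay value, and the delay is rounded to a whole number of steps, so the step size limits how finely the delay can be resolved.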

5 Conclusions

In this paper, an adaptive neural control design scheme has been proposed for a class of stochastic nonlinear systems with multiple time-varying delays and input saturation. In addition, the number of online adaptive learning parameters is reduced to one, so the computational burden is significantly reduced, which makes the developed results more applicable. It has been proved that the error variables are semi-globally uniformly ultimately bounded in the sense of four-moment, while all the signals in the closed-loop system are bounded in probability.

References

Krstić M, Kanellakopoulos I, Kokotovic PV (1995) Nonlinear and adaptive control design. Wiley, New York

Deng H, Krstić M (1997) Stochastic nonlinear stabilization, Part I: a backstepping design. Syst Control Lett 32(3):143–150

Krishnamurthy P, Khorrami F, Jiang ZP (2002) Global output feedback tracking for nonlinear systems in generalized output-feedback canonical form. IEEE Trans Autom Control 47(5):814–819

Zhang Z, Chen W (2009) Adaptive output feedback control of nonlinear systems with actuator failures. Inf Sci 179(24):4249–4260

Wang CL, Lin Y (2012) Multivariable adaptive backstepping control: a norm estimation approach. IEEE Trans Autom Control 57(4):989–995

Polycarpou MM (1996) Stable adaptive neural control scheme for nonlinear systems. IEEE Trans Autom Control 41(3):447–451

Zhang HG, Bien Z (2000) Adaptive fuzzy control of MIMO nonlinear systems. Fuzzy Sets Syst 115(2):191–204

Zhang TP, Wen H, Zhu Q (2010) Adaptive fuzzy control of nonlinear systems in pure feedback form based on input-to-state stability. IEEE Trans Fuzzy Syst 18(1):80–93

Tong SC, Li YM (2007) Direct adaptive fuzzy backstepping control for a class of nonlinear systems. Int J Innov Comput Inf Control 3(4):887–896

Tong SC, Li YM (2009) Observer-based fuzzy adaptive control for strict-feedback nonlinear systems. Fuzzy Sets Syst 160(12):1749–1764

Tong SC, Liu CL, Li YM (2010) Fuzzy-adaptive decentralized output-feedback control for large-scale nonlinear systems with dynamical uncertainties. IEEE Trans Fuzzy Syst 18(5):845–861

Boulkroune A, Tadjine M, M’Saad M, Farza M (2010) Fuzzy adaptive controller for MIMO nonlinear systems with known and unknown control direction. Fuzzy Sets Syst 161(6):797–820

Boulkroune A, M’Saad M, Farza M (2012) Adaptive fuzzy tracking control for a class of MIMO nonaffine uncertain systems. Neurocomputing 93:48–55

Boulkroune A, M’Saad M (2012) On the design of observer-based fuzzy adaptive controller for nonlinear systems with unknown control gain sign. Fuzzy Sets Syst 201:71–85

Boulkroune A, M’Saad M, Farza M (2012) Fuzzy approximation-based indirect adaptive controller for multi-input multi-output non-affine systems with unknown control direction. IET Control Theory Appl 6(17):2619–2629

Chen B, Liu XP, Liu KF, Lin C (2009) Direct adaptive fuzzy control of nonlinear strict-feedback systems. Automatica 45(6):1530–1535

Wang YC, Zhang HG, Wang YZ (2006) Fuzzy adaptive control of stochastic nonlinear systems with unknown virtual control gain function. Acta Autom Sin 32(2):170–178

Tong SC, Li Y, Li YM, Liu YJ (2011) Observer-based adaptive fuzzy backstepping control for a class of stochastic nonlinear strict-feedback systems. IEEE Trans Syst Man Cybern B 41(6):1693–1704

Tong SC, Li YM, Wang T (2013) Adaptive fuzzy decentralized output feedback control for stochastic nonlinear large-scale systems using DSC technique. Int J Robust Nonlinear Control 23(4):381–399

Wang T, Tong SC, Li YM (2013) Adaptive neural network output feedback control of stochastic nonlinear systems with dynamical uncertainties. Neural Comput Appl 23(5):1481–1494

Psillakis HE, Alexandridis AT (2007) NN-based adaptive tracking control of uncertain nonlinear systems disturbed by unknown covariance noise. IEEE Trans Neural Netw 18(6):1830–1835

Wang HQ, Chen B, Lin C (2012) Direct adaptive neural control for strict-feedback stochastic nonlinear systems. Nonlinear Dyn 67(4):2703–2718

Boulkroune A, M’Saad M, Farza M (2011) Adaptive fuzzy controller for multivariable nonlinear state time-varying delay systems subject to input nonlinearities. Fuzzy Sets Syst 164(1):45–65

Chen B, Liu XP, Liu KF, Lin C (2010) Fuzzy-approximation-based adaptive control of strict-feedback nonlinear systems with time delays. IEEE Trans Fuzzy Syst 18(5):883–891

Wang M, Chen B, Zhang SY, Luo F (2010) Direct adaptive neural control for stabilization of nonlinear time-delay systems. Sci China Ser F 53(4):800–812

Zhou Q, Shi P, Xu SY, Li HY (2013) Adaptive output feedback control for nonlinear time-delay systems by fuzzy approximation approach. IEEE Trans Fuzzy Syst 21(2):301–313

Wang HQ, Chen B, Lin C (2012) Adaptive neural control for strict-feedback stochastic nonlinear systems with time-delay. Neurocomputing 77(1):267–274

Li TS, Li RH, Wang D (2011) Adaptive neural control of nonlinear MIMO systems with unknown time delays. Neurocomputing 78(1):83–88

Chen WS, Jiao LC, Li J, Li RH (2010) Adaptive NN backstepping output-feedback control for stochastic nonlinear strict-feedback systems with time-varying delays. IEEE Trans Syst Man Cybern B 40(3):939–950

Chen WS, Jiao LC, Wu JS (2012) Decentralized backstepping output-feedback control for stochastic interconnected systems with time-varying delays using neural networks. Neural Comput Appl 21(6):1375–1390

Zhang TP, Ge SS (2007) Adaptive neural control of MIMO nonlinear state time-varying delay systems with unknown dead-zones and gain signs. Automatica 43(6):1021–1033

Chen B, Liu XP, Liu KF, Lin C (2010) Direct adaptive fuzzy control for nonlinear systems with time-varying delays. Inf Sci 180(5):776–792

Yu ZX, Jin ZH, Du HB (2012) Adaptive neural control for a class of nonaffine stochastic nonlinear systems with time-varying delay: a Razumikhin–Nussbaum method. IET Control Theory Appl 6(1):14–23

Yu ZX, Du HB (2011) Adaptive neural control for uncertain stochastic nonlinear strict-feedback systems with time-varying delays: a Razumikhin functional method. Neurocomputing 74(12/13):2072–2082

Yu ZX, Yu ZS (2012) Adaptive neural dynamic surface control for nonlinear pure-feedback systems with multiple time-varying delays: a Lyapunov–Razumikhin method. Asian J Control 15(4):1–15

Annaswamy AM, Wong JE (1997) Adaptive control in the presence of saturation non-linearity. Int J Adapt Control Signal Process 11(1):3–19

Lan WY, Huang J (2003) Semiglobal stabilization and output regulation of singular linear systems with input saturation. IEEE Trans Autom Control 48(7):1274–1280

Xu SY, Feng G, Zou Y, Huang J (2012) Robust controller design of uncertain discrete time-delay systems with input saturation and disturbances. IEEE Trans Autom Control 57(10):2604–2609

Chen M, Ge SS, How B (2010) Robust adaptive neural network control for a class of uncertain MIMO nonlinear systems with input nonlinearities. IEEE Trans Neural Netw 21(5):796–812

Chen M, Ge SS, Ren BB (2011) Adaptive tracking control of uncertain MIMO nonlinear systems with input constraints. Automatica 47(3):452–455

Wen CY, Zhou J, Liu ZT, Su HY (2011) Robust adaptive control of uncertain nonlinear systems in the presence of input saturation and external disturbance. IEEE Trans Autom Control 56(7):1672–1678

Li YM, Tong SC, Li TS (2013) Direct adaptive fuzzy backstepping control of uncertain nonlinear systems in the presence of input saturation. Neural Comput Appl 23(5):1207–1216

Wang HQ, Chen B, Liu XP, Liu KF, Lin C (2013) Robust adaptive fuzzy tracking control for pure-feedback stochastic nonlinear systems with input constraints. IEEE Trans Cybernetics 43(6):2093–2104

Acknowledgments

The authors would like to thank the anonymous reviewers for their helpful comments that improve the quality of the paper. This work was supported by the National Natural Science Foundation of P.R. China under Grants 61374086, 61174137, 61104064, 61374153, 61203024, 61170054 and the Graduate Innovation and Creativity Foundation of Jiangsu Province under Grant CXZZ13_0209.

Cite this article

Cui, G., Jiao, T., Wei, Y. et al. Adaptive neural control of stochastic nonlinear systems with multiple time-varying delays and input saturation. Neural Comput & Applic 25, 779–791 (2014). https://doi.org/10.1007/s00521-014-1548-6

DOI: https://doi.org/10.1007/s00521-014-1548-6