Abstract

For mobile communication traffic series, an accurate multistep prediction result plays an important role in network management, capacity planning, traffic congestion control, channel equalization, etc. A novel time series forecasting based on echo state networks and multiplicative seasonal ARIMA model are proposed for this multiperiodic, nonstationary, mobile communication traffic series. Motivated by the fact that the real traffic series exhibits periodicities at the cycle of 6, 12, and 24 h, as well as 1 week, we isolate most of mentioned above features for each cell and integrate all the wavelet multiresolution sublayers into two parts for consideration of alleviating the accumulated error. On seasonal characters, multiplicative seasonal ARIMA model is to predict the seasonal part, and echo state networks are to deal with the smooth part because of its prominent approximation capabilities and convenience. Experimental results on real traffic dataset show that proposed method performs well on the prediction accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Time series forecasting is an active research area that has received considerable attention for application in variety of areas [1]. Generally, time series forecasting models can be grouped into two types: single models and combined ones [2]. Both theoretical and empirical investigations have indicated that a single model may not be sufficient to capture all the features of complex time series [1]. On the one hand, combined models, with the property of approximately onefold of each isolated component, are widely used to improve the prediction performance [2, 3]. Actually, most combined models are based on decomposition techniques. Fourier methods, empirical mode decomposition, and wavelet multiresolution analysis (MRA) are the most commonly used techniques in combined models [4]. On the other hand, single models use one model to predict time series directly. Many classical linear models such as the autoregressive (AR), autoregressive moving average (ARMA), and multiplicative seasonal ARIMA [5] have been widely used, while to deal with the nonlinear time series, an effective model is support vector machine (SVM) [6]. The principle SVM adopted is the structure risk minimization. With consideration of its high accuracy and good generalization capabilities, SVM is successfully used in many applications of machine learning and time series forecasting. However, it is difficult for SVM to choose kernel functions in different applications. Another effective nonlinear model is the artificial neural networks (ANNs). With the flexible nonlinear mapping capability, ANNs can approximate any continuous measurable function with arbitrarily desired accuracy [7]. Actually, due to the slow convergence and high computational training costs, training ANNs is hard in practice. Echo state network (ESNs) [8] is a new architecture of ANN. The ESNs differ from traditional ANN methods, and in that only the connections from the dynamic reservoir to the output neurons need to be trained [9]. Training ESNs [8] becomes a linear regression task, which solves the problems of slow convergence and suboptimal solutions for ANNs models.

Specifically, for our mobile communication traffic series, an accurate multistep prediction result plays an important role in network management, capacity planning, traffic congestion control, channel equalization, etc. The aim of this work is to take advantage of wavelet MRA to extract meaningful features for traffic series and improve the prediction accuracy by wavelet-based combined models.

The remainder of the paper is organized as follows. Section 2.1 describes the motivation of this work. Section 2.2 presents the framework and related works of paper, such as wavelet methods for time series analysis and forecasting, multiplicative seasonal ARIMA model, and ESNs. In Sect. 2.3, we describe our traffic series forecasting method. The experiment results and discussions are presented in Sect. 3. At last, we conclude in Sect. 4.

2 Method

2.1 Motivation

Our further observations on the real traffic series show the two main characteristics are multiperiodicities and nonstationary. So, it is difficult for a single model to capture all the features of this traffic series, even if with this promising echo state networks alone. The basic idea of this paper is to take advantage of wavelet MRA to extract meaningful features for traffic series and improve the prediction accuracy by combined models. The main challenge in this procedure is the process of forming a novel wavelet MRA, in which meaningful features can be lined up. In order to extract meaningful sublayers, Fourier spectrum was taken as prior knowledge for this multiple scales traffic series analysis. Actually, we showed that the real traffic series exhibits periodicities at the cycle of 6, 12, and 24 h, as well as 1 week. Motivated by this finding, we isolated most of mentioned above features for each cell using our modified wavelet MRA and integrated all the wavelet multiresolution sublayers into two parts, for consideration of alleviating the accumulated error. After that, appropriate models were employed to predict each of them individually. With consideration of seasonal character, we use the multiplicative seasonal ARIMA model to predict the seasonal part, while use echo state networks to predict the smooth part because of its prominent approximation capabilities and convenience.

2.2 Framework

Combining with prior knowledge of Fourier spectrum, a novel wavelet multiresolution analysis and forecasting method, based on echo state networks and multiplicative seasonal ARIMA model, are proposed in this paper. Figure 1 presents the framework of our proposed method. Our method first calculates the Fourier spectrum for this multiperiodic series, then lists prominent period components with clear physical meanings as prior knowledge. After that, it extracts meaningful features with wavelet multiresolution analysis technique based on the above prior knowledge. Further, with the consideration of alleviating the accumulated error, we integrate all the sublayers into two parts, that is, the smooth part and seasonal part by adding all the details in our multiresolution analysis. At last, a combined model is employed to predict the corresponding two parts, and the results are integrated to gain the final prediction value. With consideration of seasonal character, we use the multiplicative seasonal ARIMA model to predict the seasonal part, while we use ESN to predict the smooth part because of its prominent approximation capabilities and convenience.

2.2.1 Wavelet MRA

To meet the needs for adaptive time–frequency analysis, the wavelet theory was developed in the late 1980s by Mallat and Daubechies [10, 11]. The wavelet transform is an effective tool in signal processing due to its attractive properties such as time–frequency localization and multirate filtering [12]. Using these properties, one can extract the desired features from an input signal characterized by certain local properties in time and frequency.

The aim of this work is to take advantage of wavelet MRA to extract meaningful features for traffic series and improve the prediction accuracy by wavelet-based combined models. One essential question in this procedure is the process of forming a wavelet MRA, in which meaningful features can be lined up; this process involves the choice of wavelet decomposition algorithms, filters, and decomposition levels [2]. To date, these wavelet decomposition algorithms, filters, and decomposition levels are mainly chosen subjectively based on experience [7] or trial and error method [12]. The main drawback of choice based on these methods is that the performance of the corresponding MRA can be evaluated only after the final prediction result is given. The first problem is the choice of decomposition methods. The two popular discrete wavelet algorithms are the discrete wavelet transform (DWT) algorithm and the maximal overlap discrete wavelet transform (MODWT) algorithm. The major difference between these two algorithms is that the DWT algorithm is shift-variable, while the MODWT algorithm is shift-invariable. Shift-variable means that if we delete (or add) a few values from (or to) the front or the end of the input series, the decomposition results would be not the same as heretofore [2]. For our real world traffic series analysis and prediction, the shift-invariable algorithm is preferred as the values used to establish the prediction model should stay in the same series. Another problem associated with wavelet MRA is the choice of wavelet filters. In fact, the rough principle here adopted is that the signal analyzed and wavelet filters chosen can match each other, and the sublayers extracted can follow the original signal. The number of decomposition levels is other problem in wavelet MRA, and the basic principle here we adopted is that lining up as many meaningful features of minimum decomposition levels as possible.

Combined with Fourier spectrum and MODWT algorithm, a novel wavelet MRA is proposed for multiperiodic traffic series in this paper. Notice that our modified wavelet MRA method used is shift-invariable and associated with zero phase filter, which allows the lining up of features in the details and smooth meaningfully.

2.2.2 Multiplicative seasonal ARIMA model

We use Multiplicative Seasonal ARIMA models (MSARIMA) for the seasonal part of traffic forecasting.

In an ARIMA (p, d, q) model, the next value of a variable is assumed to be a linear function of random errors and several past observations. That is, the underlying mechanism that generates the time series with the mean u has the form:

where a t and y t are random error and the actual value at time period t, respectively. \( \phi (B) = 1 - \sum\nolimits_{i = 1}^{p} {\varphi_{i} } B_{i} \) and \( \theta (B) = 1 - \sum\nolimits_{j = 1}^{q} {\theta_{j} } B_{j} \) are polynomials in B of degree p and q, \( \phi_{i} \left( {i = 1,{ 2}, \ldots ,p} \right) \) and \( \theta_{j} \left( {j = 1,{ 2}, \ldots ,q} \right) \) are model parameters, ∇ = (1 − B), B is the backward shift operator, p and q are integers and often referred to as orders of the model, and d is an integer and often referred to as order of differencing. Random error a t is assumed to be independently and identically distributed with a mean of zero and a constant variance of \( \sigma^{2} \). The Box-Jenkins methodology for ARIMA models forecasting includes three iterative steps of model identification, parameter estimation, and diagnostic checking. This three-step model building process is typically repeated several times until a satisfactory model is finally achieved. The final selected model can then be used for our prediction task.

Notice that stationarity is a necessary condition in building an ARIMA model. A stationary time series is characterized by statistical characteristics such as the mean and the autocorrelation coefficient being constant over time. When the observed time series presents trend (periodicity), differencing (seasonal differencing) is applied to the data to remove the trend (periodicity) before an ARIMA model can be fitted. MSARIMA is an extension of ARIMA, where seasonality in the data is accommodated using seasonal differencing [13].

MSARIMA (p, d, q)(P, D, Q) s Model: In MSARIMA model [5], the seasonal components of the ARIMA model are denoted by ARIMA (P, D, Q) s , where capitalized variables represent the seasonal components of the model, and s indicates the order of periodicity. The MSARIMA model can be expressed as ARIMA (p, d, q)(P, D, Q)s.

where p is the order of nonseasonal AR process, P is the order of seasonal AR process, q is the order of nonseasonal MA process, and Q is the order of seasonal MA process. \( \phi_{p} (B) \) is the nonseasonal AR operator, \( \theta_{q} (B) \) is the nonseasonal MA operator, \( \Upphi_{P} (B^{S} ) \) is the seasonal AR operator, \( \Uptheta_{Q} (B^{S} ) \) is the seasonal MA operator, B is the backshift operator, \( \nabla^{d} \) is the nonseasonal dth differencing, \( \nabla_{S}^{D} \) is the seasonal Dth differencing at s number of lags, y t is the forecast value for period t, s equals 168 h (or 24 h) for our traffic series.

2.2.3 Echo state networks

Figure 2 presents a typical structure of ESNs which consists of input units, DR, and output units. ESNs with K input units, N dynamic reservoir processing elements, and L output units can be expressed as

In (3) and (4), \( x\left( n \right) = \left( {x_{1} \left( n \right), \ldots ,x_{N} \left( n \right)} \right),y\left( n \right) = \left( {y_{1} \left( n \right), \ldots ,y_{L} \left( n \right)} \right), \) \( u\left( n \right) = \left( {u_{1} \left( n \right), \ldots ,u_{K} \left( n \right)} \right) \) are activations of the DR processing elements, output units, and input units at time step n, respectively. The functions \( f = \left( {f_{ 1} , \ldots ,f_{N} } \right) \) are activation functions for DR processing elements (implemented as tanh functions in this paper). The functions \( f^{\text{out}} = (f_{1}^{\text{out}} , \ldots ,f_{L}^{\text{out}} ) \) are the output units’ output functions. By an N × K input weight matrix \( W^{\text{in}} = (w_{ij}^{\text{in}} ) \), the input is tied to DR processing elements. The DR processing elements are connected by an N × N matrix W = (w ij ). \( W^{\text{back}} = (w_{ij}^{\text{back}} ) \) is an N × L matrix for the connections that project back from the output to DR. And DR is tied to the output units by an L × (K + N + L) matrix \( W^{\text{out}} = (w_{ij}^{\text{out}} ) \). The variable (u(n + 1), x(n + 1), y(n)) is the input, internal, and output vectors [8].

One advantage of ESN is high accuracy. Another one is that the training process of ESN is a simple linear regression task. One disadvantage of ESN is that it is not suitable for long-term forecasting. With the property of “short-term memory”, ESN is not suitable for long-term forecasting and then we extend the mobile communication traffic series with ARIMA model before using ESN.

2.3 Procedure

The raw data were collected in cells, on an hourly basis, by the China Mobile Communications Corporation (CMCC) Heilongjiang Company, Limited. Initial observations on this traffic show long-term trends, multiperiodicities, and self-similar properties. Our goal is to provide prior knowledge for mobile communication traffic MRA. Figure 3 presents the traffic data in one cell. This figure indicates the presence of daily and weekly periodicities.

Considering this multiperiodicities properties, we take the Fourier spectrum, along with life experience, as the prior knowledge for wavelet decomposition. This means that the prominent period components revealed in the Fourier spectrum with clear physical meaning, such as 24- and 168-h period components, may be regarded as the guides in choosing wavelet decomposition algorithms, filters, and decomposition levels. Correspondingly, the aim of our MRA is to line up as many prominent period components of minimum decomposition levels as possible. This novel MRA and forecasting technique involve a five-step process explained below.

Step 1. Calculate the Fourier spectrum for traffic series [14] and list prominent period components with clear physical meanings for each cell.

Step 2. Let X be a mobile communication traffic series with N data points {X t : t = 0,1,…,N − 1}. In our experiments, N = 1,008. With the MODWT algorithm [15] and its inverse, the traffic series X can be reconstructed as

Equation (5) defines a MRA of X in terms of the jth level MODWT details D j , the J level MODWT smooth S J , and the error term a t , where J is the wavelet decomposition level and \( a_{t} \sim WN ( 0 ,\delta_{a}^{2} ) \). In the wavelet theory, the relatively high-frequency component D J may be viewed as noise, thus D J and a t may be integrated as one part. We chose the MODWT algorithm because each component of a MODWT-based MRA is associated with a zero phase filter, which allows the lining up of events in the details and smooth meaningfully with events in X.

Step 3. In this step, use the Haar wavelet filter for the MRA. The reason for this will be discussed in the experiment section.

Step 4. Starting with one, increase the decomposition level J stepwise to line up as many components as possible, whose dominant periods are in accordance with the previous Fourier spectrum. This means that J must be increased and the dominant period of each sublayer must be checked until the maximum period revealed in the Fourier spectrum is lined up, where a dominant period refers to the first prominent period.

Step 5. For further evaluation, undertake 1 week ahead predictions to compare our method with several other methods. Using the proposed MRA, we decompose the traffic series into two parts (the smooth part S J and seasonal part ΣD j ) to alleviate the accumulated error by adding all the details [2]. Next, we use the MSARIMA model [5] to predict the seasonal part, while use echo state networks to predict the smooth part. After that, the results are integrated to gain the final prediction value. For comparison, we use MSARIMA models, echo state networks, LS-SVM models, wavelet-MSARIMA models to predict the traffic series, respectively.

The time complexity of proposed MRA: when N is an integer multiple of 2J, the DWT can be computed using O(N) multiplications, whereas our proposed MRA requires O(Nlog2 N) multiplications. There is thus a computational price to pay for using our proposed MRA, but its computational burden is the same as the widely used FFT algorithm and hence is usually quite acceptable [15].

3 Results

In this section, we validated our proposed method by experiments on real network traffic of Cells in Heilongjiang Province, northeastern China. Dataset with the characteristic of multiperiodicities in real world may be suitable for our proposed method. Figure 6 in Ref. [16] shows that the electricity price dataset in Spain market is also with the property of multiperiodicities, so it may be suitable for our proposed method.

3.1 Performance metrics

In this paper, two metrics have been used to quantitatively evaluate the prediction performance, including mean absolute error (MAE) and normalized mean square error (NMSE). Mean absolute error (MAE) is defined as

where \( \mathop x\limits^{ \wedge } (t) \) and \( x(t) \) are the predicted value and the actual value at time t, respectively. M is the total prediction number, in our experiments M = 168. As prediction accuracy increases, MAE decreases. Normalized mean square error (NMSE) is defined as

where \( \sigma^{2} \) is the variance of the time series over prediction duration. The smaller the NMSE is, the better the prediction performance.

3.2 Traffic data

The raw data were collected in cells, on an hourly basis, by the China Mobile Communications Corporation (CMCC) Heilongjiang Company, Limited. Data from two cells were used to validate the performance of the proposed method, spanning November 17, 2007–January 4, 2008. Cell 1 was located in Harbin, and Cell 2 was in Daqing. Notice that the interval between two successive data points is 1 h, thus the duration of the traffic set lasts for 7 weeks. We divided the mentioned above dataset into two parts: one spanning November 17, 2007–December 28, 2007 (6 weeks) and the other spanning December 29, 2007–January 4, 2008 (1 week). The former was used for wavelet MRA and prediction model training, while the last 168 data points were used for prediction test.

3.3 MRA and prediction results

In this section, we present our modified wavelet MRA and discuss the prediction of proposed method. After that, we compare the results with that of four other models.

According to our MRA method, we first calculated the Fourier spectrum for Cell 1. Figure 4 illustrates the 6 most prominent period components, that is, periods of 6, 8, 12, 24, 84, and 168 h.

Figure 5 shows the MODWT MRA of level J = 7 for Cell 1 using the Haar wavelet filter. Note that only the final 672 points MRA results are listed for clarity and brevity. Our choice of level J = 7 was based on the fact that the dominant period of the resulting detail D 7 was 168-h. For further analysis, we present a detail of Fig. 5 in Fig. 6, that is, D 7 for X. Figure 6 shows that each undulation of D 7 corresponds exactly to 7 undulations of X, implying that the dominant period of D 7 might be 168-h, since the dominant period of X is 24-h.

Details of Fig. 5, D 7 for X

Likewise, Fig. 7 presents D 3 for X, where each undulation of X corresponds exactly to 2 undulations of D 3; this implies that the dominant period of D 3 might be 12-h. Other sublayers can be analyzed in the same way. To confirm our conjectures, we check the dominant period of each sublayer with FFT, and the results are listed in Table 1. From Table 1, we can see that 5 of the 6-period components are isolated into detail layers in the proposed MRA, including D 7 of the 168-h dominant period and D 3 of the 12-h dominant period. In our experiments, the choice of wavelet filter was based on the performance of D 3 to follow X, as the ease of judgment. From Fig. 7, we can see that D 3 follows the original traffic X in terms of the 12-h dominant period component.

Details of Fig. 5, D 3 for X

It is interesting to note that this conclusion is suitable for almost all cells, including Cell 2, in our further experiments. The prominent periods of X for Cell 2 and the dominant period of each sublayer are also listed in Table 1. It is interesting to note that we have identified the component of the 168-h dominant period, that is, D 7, which is also of level J = 7. Four in five period components revealed in the Fourier spectrum have been identified in our MRA. Other MRA results for Cell 2 are similar to that of Cell 1.

With consideration of alleviating the accumulated error, we integrate all the details as one layer—the seasonal part. Then, we use MSARIMA model to predict the seasonal part as its seasonal characteristic and no obvious “trend” information. Parameter estimation is done using maximum likelihood estimation. The best model is chosen as the one that provides the smallest AIC (Akaike Information Criterion) with orders which do not exceed 2. We obtain the fitted model as follows: ARIMA(2,0,2) × (0,1,2)168, with \( \phi_{1} = - 0. 3 6 4 3 \), \( \phi_{2} = 0. 60 9 4 \), \( \theta_{1} = - 0. 8 70 1 \), \( \theta_{2} = 0. 10 6 3 \), \( \Uptheta_{1} = 0. 8 1 6 7 \), \( \Uptheta_{2} = 0. 1 8 3 2 \) for Cell 1. For Cell 2, we obtain the fitted model as follows: ARIMA(1,0,1) × (0,1,0)168, with \( \phi_{1} = 0. 4 4 6 9 \), \( \theta_{1} = 0. 7 7 8 8 \).

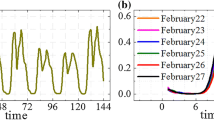

We use echo state networks to predict the smooth part for the consideration of follows: The smooth part is nonlinear without obvious seasonal feature, and ESNs has been shown to be powerful to solve complex machine learning tasks, especially for this kind of low-frequency sublayer without obvious seasonal feature. Considering the conflict between multistep forecasting demand of traffic series and short-term memory property of ESNs, we extend the smooth part to 1 week later with MSARIMA model, before modeling and forecasting with ESNs.

The training of ESNs in this paper can be described as follows: Set the parameters of ESNs including W in, W, N, SR, IS, etc. Initialize connection matrices W in and W using random weights, and they should be sparse. Collect x(n) by feeding training samples into (3). Calculate W out with pseudo-inverse method by (4).

The size N should reflect both the length T of the training data and the difficulty of the task. As a rule of thumb, N should not exceed an order of magnitude of T/10–T/2. The setting of SR is crucial for subsequent model performance. It should be small for fast teacher dynamics and large for slow teacher dynamics. Typically, SR needs to be hand-tuned by trying out several settings. Standard settings of SR lie in a range between 0.45 and 0.85. Large input scaling parameter IS implies that the network is strongly driven by input, while small IS means that the network state is only slightly excited around the dynamical reservoir’s resting state. Typical ranges for IS are 0.1–0.5.

In this paper, the number of DR processing elements N = 30, ESNs reservoir spectral radius SR = 0.75, and ESNs input scaling parameter IS = 0.4. After that, we can use this trained echo state networks to achieve 1 week ahead prediction for the smooth part in Cell 1 and Cell 2, respectively.

After the prediction of seasonal and smooth part, the results are integrated to gain the final prediction value. MAE and NMSE were used, to compare the prediction performance of our method with four other models. Table 2 gives the MAE and NMSE of Cell 1. From Table 2, we can see that the proposed method improved the prediction accuracy at least 13 % over other four methods.

In summary, Cell 1 of the proposed method leads to three main results. First, five out of the six prominent period components revealed in the Fourier spectrum have been identified, and all detail sublayers in our MRA have clear physical meanings. Second, the smooth S 7 can be viewed as the long-term trend given that the dominant period of D 7 is 168 h, that is, the maximum period in Fig. 4. Third, the proposed method improved the prediction accuracy at least 13 % over other four methods.

Similarly, the prediction results in Table 2 for Cell 2 also demonstrate the effectiveness of proposed method and improved the prediction accuracy at least 12 % over other four methods.

4 Conclusions

For mobile communication traffic series, an accurate multistep prediction result plays an important role in network management, capacity planning, traffic congestion control, channel equalization, etc. A novel wavelet multiresolution analysis and forecasting method, based on echo state networks and multiplicative seasonal ARIMA model, are proposed for mobile communication traffic in this paper. Using our modified wavelet MRA, we decompose the traffic series into the smooth part and seasonal part. After that, appropriate models are employed to predict each of them individually. Experimental results on real traffic demonstrate that the proposed method is feasible for traffic series forecasting.

References

Khashei M, Bijari M (2011) A novel hybridization of artificial neural networks and ARIMA models for time series forecasting. Appl Soft Comput 11(2):2664–2675

Sun HL, Jin YH (2009) Network traffic prediction by a wavelet-based combined model. Chin Phys B 18(11):4760–4768

Xie YC, Zhang YL, Ye ZR (2007) Short-term traffic volume forecasting using Kalman filter with discrete wavelet decomposition. Comput Aided Civil Infrastruct Eng 22(5):326–334

Guo K, Zhang X (2008) Application of EMD method to friction signal processing. Mech Syst Signal Process 22:248–259

Goh G, Law R (2002) Modeling and forecasting tourism demand for arrivals with stochastic nonstationary seasonality and intervention. Tour Manag 23(5):499–510

Tay F, Cao L (2001) Application of support vector machines in financial time series forecasting. Int J Manag Sci 29(4):309–317

Jiang XM, Adeli H (2005) Dynamic wavelet neural network model for traffic flow forecasting. J Transp Eng ASCE 131(10):771–779

Schrauwen B, Verstraeten D, Campenhout JV (2007) An overview of reservoir computing: theory, applications and implementations. European Symposium on Artificial Neural Networks 2007, pp 471–482

Jaeger H, Haas H (2004) Harnessing nonlinearity: predicting chaotic system and saving energy in wireless communication. Science 304:78–80

Mallat SG (1989) A theory of multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Machine Intell 11(7):674–693

Daubechies I (1990) The wavelet transformation, time-frequency localization and signal analysis. IEEE Trans Inf Theory 36:961–1005

Rocha Reis AJ, Alves da Silva AP (2005) Feature extraction via multiresolution analysis for short-term load forecasting. IEEE Trans Power Syst 20(1):189–198

Nobre FF, Monteiro ABS (2001) Dynamic linear model and SARIMA: a comparison of their forecasting performance in epidemiology. Stat Med 20(20):3051–3069

Papagiannaki K (2005) Long-term forecasting of internet backbone traffic. IEEE Trans Neural Netw 16(5):1110–1124

Percival DB, Walden AT (2000) Wavelet methods for time series analysis. Cambridge University Press, Cambridge, pp 159–205

Pindoriya NM, Singh SN, Singh SK (2008) An adaptive wavelet neural network-based energy price forecasting in electricity Markets. IEEE Trans Power Syst 23(3):1423–1432

Author information

Authors and Affiliations

Corresponding author

Additional information

Yu Peng and Miao Lei contributed equally to this research.

Rights and permissions

About this article

Cite this article

Peng, Y., Lei, M., Li, JB. et al. A novel hybridization of echo state networks and multiplicative seasonal ARIMA model for mobile communication traffic series forecasting. Neural Comput & Applic 24, 883–890 (2014). https://doi.org/10.1007/s00521-012-1291-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-012-1291-9