Abstract

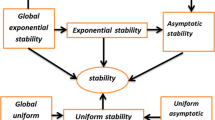

This paper considers the existence, uniqueness, and global asymptotic stability of the equilibrium point for a class of high-order Hopfield neural networks with mixed delays and impulses. The mixed delays include a constant delay in the leakage term (i.e., "leakage delay") and time-varying delays. Based on the Lyapunov stability theory, together with the linear matrix inequality (LMI) approach and the free-weighting matrix method, some less conservative delay-dependent sufficient conditions are presented for the global asymptotic stability of the equilibrium point of the considered neural networks. These conditions are expressed in terms of LMIs and can be easily checked with the MATLAB LMI toolbox. In addition, two numerical examples are given to illustrate the applicability of the results.

1 Introduction

Because high-order neural networks have stronger approximation properties, faster convergence rates, greater storage capacity, and higher fault tolerance than lower-order neural networks, they have been the object of intensive analysis by numerous authors in recent years. In particular, there are extensive results in the literature on the existence and stability of equilibrium points of high-order neural networks [1, 2, 3]. By using M-matrix and linear matrix inequality (LMI) techniques, the authors in [4] and [5] investigated the global exponential stability and robust stability of the equilibrium point of high-order Hopfield-type neural networks without delays. For high-order Hopfield neural networks with constant time delays, existence and global asymptotic stability conditions were obtained in [2] based on the LMI approach and under the assumption that the activation functions are monotonically nondecreasing. Numerous articles have been published on the dynamic behavior of high-order neural networks with different time delays; see, for example, [6] and references therein.

The convergence dynamics of impulsive neural networks have also been considered; see, for example, [7, 8, 9] and references therein. The exponential stability of impulsive high-order Hopfield-type neural networks with time-varying delays was studied in [10], where the delays are not required to be differentiable. We note that the conditions in [10] were obtained based on simple Lyapunov functionals, which may lead to some conservatism when they are used to study the exponential stability of delayed high-order neural networks without impulses. To the best of the authors' knowledge, few authors have considered high-order Hopfield neural networks with impulses; for instance, [10, 11] investigated impulsive high-order Hopfield neural networks with time delays. Moreover, so far there has been very little work on neural networks with time delay in the leakage (or "forgetting") term [14, 15, 16, 17]. This is due to some theoretical and technical difficulties [12]. In fact, time delay in the leakage term also has a great impact on the dynamics of neural networks. As pointed out by Gopalsamy [13], time delay in the stabilizing negative feedback term has a tendency to destabilize a system.

Motivated by the above discussion, this paper studies the global asymptotic stability of a class of impulsive high-order Hopfield-type neural networks with time-varying delays and time delay in the leakage term. Based on the Lyapunov stability theory, together with the LMI approach and the free-weighting matrix method, some less conservative delay-dependent sufficient conditions are presented for the global asymptotic stability of the equilibrium point of the considered neural networks. Two numerical examples are provided to demonstrate the effectiveness of the proposed stability criteria.

Notations

Throughout this paper, the superscript T denotes transposition, and the notation X ≥ Y (respectively, X > Y), where X and Y are symmetric matrices, means that X − Y is positive semi-definite (respectively, positive definite). I is the identity matrix with appropriate dimension; \(\parallel \cdot \parallel\) is the Euclidean vector norm and \(\Uptheta=\{1,2,\ldots, n\}\). For any interval \(J\subseteq R\) and any set \(V\subseteq R^{k}\) \((1\leq k \leq n)\), \(C(J,V)\) is the set of continuous functions \(\varphi:J \rightarrow V\), and \(PC^{1}(J,V)\) is the set of functions \(\varphi:J\rightarrow V\) that are continuously differentiable everywhere except at a finite number of points t, at which \(\varphi(t^{+}), \varphi(t^{-}), \dot{\varphi}(t^{+})\) and \(\dot{\varphi}(t^{-})\) exist and \(\varphi(t^{+})=\varphi(t), \dot{\varphi}(t^{+})=\dot{\varphi}(t)\), where \(\dot{\varphi}\) denotes the derivative of \(\varphi\). \([\bullet]^\ast\) denotes the integer function. The notation * always denotes the symmetric block in a symmetric matrix. Matrices, if not explicitly stated, are assumed to have compatible dimensions.

2 Model description and preliminaries

Consider a continuous-time high-order Hopfield-type neural network with time-varying delays and impulsive perturbations:

where \(x_{i}(t)\) is the neural state, n denotes the number of neurons, \(\phi_{i}(t)\) is the initial condition, which is continuous on \([-\bar{\tau}, 0]\), and \(\tau_{j}(t) \geq 0\) represents the transmission delay, which satisfies \(\tau_{1} \leq \tau_{j}(t) \leq \tau_{2}\), with \(\bar{\tau}=\max\{\tau_{2}, \sigma\}\). The first term on the right-hand side of system (1) is the leakage term of the neural network, and σ ≥ 0 denotes the leakage delay (or forgetting delay). \(\tilde{f}_{i}(x_{i}(t))\) and \(\tilde{g}_{i}(x_{i}(t))\) are the neuron activation functions; \(c_{i} > 0\) is a constant that denotes the rate at which the ith cell resets its potential to the resting state when isolated from the other cells and inputs; \(a_{ij}\) and \(b_{ij}\) are the first-order synaptic weights of the neural network; and \(d_{ijl}\) and \(e_{ijl}\) are the second-order synaptic weights. \(J_{i}\) denotes the ith component of an external input introduced from outside the network to the ith cell. The activation functions in (1) are assumed to satisfy the following assumptions:

Assumption 1

There exist constants \(\mu_{j} > 0\), \(\nu_{j} > 0\), \(\gamma_{ij}\) and \(\sigma_{ij}\) (i = 1, 2 and \(j=1,2,\ldots,n)\) such that \(\gamma_{ij} < \sigma_{ij}\)

To carry out the model transformation used in Section 4, we also need the following assumptions.

Assumption 2

For any \(i, j, l=1,2,\ldots, n\), \(d_{ijl}+d_{ilj} \neq 0\) and \(e_{ijl}+e_{ilj} \neq 0\).

Assumption 3

The transmission delay τ(t) is time-varying and satisfies \(0 \leq h_{1} \leq \tau(t) \leq h_{2}\) and \(\dot{\tau}(t)<\mu\), where \(h_{1}\), \(h_{2}\) and μ are some positive constants.

Assumption 4

The leakage delay σ ≥ 0 is a constant.

Assumption 5

\({J_k(\cdot):\mathbb{R}^n\times \mathbb{R}^n\rightarrow\mathbb{R}^n, k\in \mathbb{Z}_+,}\) are some continuous functions.

Assumption 6

The impulse times \(t_{k}\) satisfy \(0=t_0 <t_{1}<\cdots <t_{k}\rightarrow\infty\) and \({\inf_{k\in \mathbb{Z}_+}\{t_k-t_{k-1}\}>0.}\)

3 Existence and uniqueness theorems

We denote an equilibrium point of the impulsive network (1) by the constant vector \({x^\ast =[x^\ast_1, x^\ast_2,\ldots, x^\ast_n]^T\in \mathbb{R}^n, }\) where the components \(x^\ast_i\) are governed by the algebraic system

As usual, the impulsive operator in this paper is viewed as a perturbation of the equilibrium point of system (1) without impulse effects; that is, the functions \(J_{ik}(\cdot)\) satisfy \({ J_{ik}(x^\ast_i)\equiv0, i\in\Uptheta, k \in \mathbb{Z}_{+}.}\)

Theorem 3.1

Under Assumption 1, the network (1) has a unique equilibrium point if the following inequality holds:

Proof

Consider a mapping \({\Upphi(u)=(\Upphi_1(u),\Upphi_2(u),\ldots,\Upphi_n(u))^T\in \mathbb{R}^n}\) as follows

where \({u=(u_1,u_2,\ldots,u_n)\in \mathbb{R}^n.}\) Let \({v=(v_1,v_2,\ldots,v_n)\in \mathbb{R}^n;}\) then by Assumption 1 we get

which implies that

where

in view of condition (4).

Thus, we obtain

This means that the mapping \({\Upphi: \mathbb{R}^n\rightarrow \mathbb{R}^n}\) is a contraction on \({\mathbb{R}^n}\) endowed with the Euclidean vector norm \(\|\bullet\|\). Thus, there exists a unique fixed point \({u^\ast\in \mathbb{R}^n}\) such that \(\Upphi(u^\ast)=u^\ast\), which defines the unique solution of system (3). The proof is complete. □
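The contraction argument also suggests a simple way to approximate the equilibrium numerically: iterate the map until successive iterates agree. The following is a minimal sketch under stated assumptions, not the authors' computation. It assumes that the algebraic system (3) reads \(c_{i}x_{i}=\sum_{j}(a_{ij}\tilde{f}_{j}+b_{ij}\tilde{g}_{j})+\sum_{j}\sum_{l}(d_{ijl}\tilde{f}_{j}\tilde{f}_{l}+e_{ijl}\tilde{g}_{j}\tilde{g}_{l})+J_{i}\) with \(\tilde{f}=\tilde{g}=\tanh\); this form, the weights, and the names `phi` and `fixed_point` are illustrative choices.

```python
import math

def phi(u, c, a, b, d, e, J):
    """Assumed map Phi: Phi_i(u) = (first-order + second-order terms + J_i) / c_i."""
    n = len(u)
    f = [math.tanh(x) for x in u]  # assumed activation f~ = tanh
    g = [math.tanh(x) for x in u]  # assumed activation g~ = tanh
    out = []
    for i in range(n):
        s = J[i]
        for j in range(n):
            s += a[i][j] * f[j] + b[i][j] * g[j]
            for l in range(n):
                s += d[i][j][l] * f[j] * f[l] + e[i][j][l] * g[j] * g[l]
        out.append(s / c[i])
    return out

def fixed_point(u0, args, tol=1e-12, max_iter=10_000):
    """Picard iteration u <- Phi(u); converges when Phi is a contraction."""
    u = list(u0)
    for _ in range(max_iter):
        v = phi(u, *args)
        if max(abs(x - y) for x, y in zip(u, v)) < tol:
            return v
        u = v
    raise RuntimeError("no convergence; the map may not be a contraction")

# Hypothetical two-neuron data, chosen small enough that Phi is a contraction.
c = [2.0, 2.0]
a = [[0.1, -0.1], [0.05, 0.1]]
b = [[0.05, 0.05], [-0.1, 0.05]]
d = [[[0.05, 0.05], [0.05, 0.05]], [[0.05, -0.05], [0.05, 0.05]]]
e = [[[0.05, -0.05], [0.05, 0.05]], [[0.05, 0.05], [-0.05, 0.05]]]
J = [1.0, -0.5]
args = (c, a, b, d, e, J)

x_star = fixed_point([0.0, 0.0], args)
x_other = fixed_point([5.0, -5.0], args)  # a different start reaches the same point
residual = max(abs(p - q) for p, q in zip(phi(x_star, *args), x_star))
```

Starting the iteration from two different initial vectors and obtaining the same limit mirrors the uniqueness statement of Theorem 3.1 for this particular data set.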

4 Global asymptotic stability results

Under Assumption 2, system (1) is transformed into the following form:

where \(\xi_{ijl}(u_{l}(t))=(d_{ijl}\tilde{f}_{l}(x_{l}(t))+d_{ilj}\tilde{f}_{l}(x_{l}^{*}))/(d_{ijl}+d_{ilj})\) if it lies between \(\tilde{f}_{l}(x_{l}(t))\) and \(\tilde{f}_{l}(x_{l}^{*})\) and \(\theta_{ijl}(u_{l}(t-\tau_{l}(t)))= (e_{ijl}\tilde{g}_{l}(x_{l}(t-\tau_{l}(t)))+e_{ilj}\tilde{g}_{l}(x_{l}^{*}))/(e_{ijl}+e_{ilj})\) if it lies between \(\tilde{g}_{l}(x_{l}(t-\tau_{l}(t)))\) and \(\tilde{g}_{l}(x_{l}^{*})\).
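The definition of \(\xi_{ijl}\) can be checked numerically. The sketch below assumes that the second-order term of system (1) is \(\sum_{j}\sum_{l} d_{ijl}\tilde{f}_{j}\tilde{f}_{l}\) and verifies, for random data, that its deviation from the equilibrium value equals \(\sum_{j}\sum_{l}(d_{ijl}+d_{ilj})\,\xi_{ijl}\,(\tilde{f}_{j}-\tilde{f}_{j}^{*})\). The weights and activation values are hypothetical and are chosen so that Assumption 2 holds.

```python
import random

random.seed(0)
n = 3
# Hypothetical second-order weights; all positive, so d[i][j][l] + d[i][l][j] != 0.
d = [[[random.uniform(0.5, 1.5) for _ in range(n)] for _ in range(n)] for _ in range(n)]
f = [random.uniform(-1.0, 1.0) for _ in range(n)]       # stands for f~_l(x_l(t))
f_star = [random.uniform(-1.0, 1.0) for _ in range(n)]  # stands for f~_l(x_l^*)

def xi(i, j, l):
    """xi_ijl as defined in the text: a weighted mean of f_l and f_l^*."""
    return (d[i][j][l] * f[l] + d[i][l][j] * f_star[l]) / (d[i][j][l] + d[i][l][j])

max_gap = 0.0
for i in range(n):
    # Deviation of the quadratic term from its value at the equilibrium.
    lhs = sum(d[i][j][l] * (f[j] * f[l] - f_star[j] * f_star[l])
              for j in range(n) for l in range(n))
    # The same quantity written with the symmetrized weights and xi.
    rhs = sum((d[i][j][l] + d[i][l][j]) * xi(i, j, l) * (f[j] - f_star[j])
              for j in range(n) for l in range(n))
    max_gap = max(max_gap, abs(lhs - rhs))

# With weights of equal sign, xi is a convex combination and lies between f_l and f_l^*.
between = all(min(f[l], f_star[l]) <= xi(i, j, l) <= max(f[l], f_star[l])
              for i in range(n) for j in range(n) for l in range(n))
```

The identity is exact; `max_gap` only reflects floating-point rounding. The division in `xi` is the reason Assumption 2 excludes \(d_{ijl}+d_{ilj}=0\).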

If we denote \(u(t)=[u_{1}(t), u_{2}(t),\ldots, u_{n}(t)]^{T}, \, f(u(t))=[f_{1}(u_{1}(t)), f_{2}(u_{2}(t)),\ldots, f_{n}(u_{n}(t))]^{T}\), \(g(u(t-\tau(t)))=[g_{1}(u_{1}(t-\tau_{1}(t))), g_{2}(u_{2}(t-\tau_{2}(t))),\ldots, g_{n}(u_{n}(t-\tau_{n}(t)))]^{T}, C=\hbox{diag}\{c_{1}, c_{2}, \ldots, c_{n}\}\), A = (a ij ) n×n , B = (b ij ) n×n , \(\mathcal{D}=[D_{1}, D_{2},\ldots, D_{n}]^{T}, \) where \(D_{i}=\hbox{diag}\{d_{i1}, d_{i2},\ldots, d_{in}\}, d_{ij}=[d_{ij1}+d_{i1j}, d_{ij2}+d_{i2j},\ldots, d_{ijn}+d_{inj}]^{T}\), \(\mathcal{E}=[E_{1}, E_{2}, \ldots, E_{n}]^{T}, \) where \(E_{i}=\hbox{diag}\{e_{i1}, e_{i2}, \ldots, e_{in}\}, e_{ij}=[e_{ij1}+e_{i1j}, e_{ij2}+e_{i2j}, \ldots, e_{ijn}+e_{inj}]^{T}\), \(\Upxi(u(t))=\hbox{diag}\{\xi_{1}^{T}(u(t)), \xi_{2}^{T}(u(t)), \ldots, \xi_{n}^{T}(u(t))\}_{n \times n^{3}}\) where \(\xi_{i}(u(t))=[\xi_{i1}^{T}(u(t)), \xi_{i2}^{T}(u(t)), \ldots, \xi_{in}^{T}(u(t))]^{T}\), \(\xi_{ij}(u(t))=[\xi_{ij1}^{T}(u_{1}(t)), \xi_{ij2}^{T}(u_{2}(t)), \ldots, \xi_{ijn}^{T}(u_{n}(t))]^{T}\) and \(\Uptheta(u(t-\tau(t)))=\hbox{diag}\{\theta_{1}^{T}(u(t-\tau(t))), \theta_{2}^{T}(u(t-\tau(t))), \ldots, \theta_{n}^{T}(u(t-\tau(t)))\}_{n \times n^{3}}\) where \(\theta_{i}(u(t-\tau(t)))=[\theta_{i1}^{T}(u(t-\tau(t))), \theta_{i2}^{T}(u(t-\tau(t))), \ldots, \theta_{in}^{T}(u(t-\tau(t)))]^{T}\), \(\theta_{ij}(u(t-\tau(t)))=[\theta_{ij1}^{T}(u_{1}(t-\tau_{1}(t))), \theta_{ij2}^{T}(u_{2}(t-\tau_{2}(t))), \ldots, \theta_{ijn}^{T}(u_{n}(t-\tau_{n}(t)))]^{T}\), then the above system can be written in the following vector-matrix form with impulsive perturbation:

or in equivalent form:

Remark 4.1

System (4) can be rewritten in the following vector-matrix form:

where \({\tilde{\Upxi}=\hbox{diag}\{\xi, \xi, \ldots, \xi\}_{n \times n}, \xi=(\xi_{1}, \xi_{2}, \ldots, \xi_{n})^{T}, \tilde{\mathcal{D}}=(D_{1}+D_{1}^{T}, D_{2}+D_{2}^{T}, \ldots, D_{n}+D_{n}^{T})^{T}, \tilde{\Uptheta}=\hbox{diag}\{\theta, \theta, \ldots, \theta\}_{n \times n}, \theta=(\theta_{1}, \theta_{2}, \ldots, \theta_{n})^{T}}\), and \({\tilde{\mathcal{E}}=(E_{1}+E_{1}^{T}, E_{2}+E_{2}^{T}, \ldots, E_{n}+E_{n}^{T})^{T}. }\) In fact, ξ l and θ l in the aforementioned equality should be ξ ijl (u(t)) and θ ijl (u(t − τ(t))) in (5), respectively. Obviously, they are generally relevant to weights d ijl , d ilj and \(e_{ijl}, e_{ilj}, i,j,l=1,2,\ldots, n; \) therefore, the aforementioned form is identical to system (5) only if ξ ijl (u(t)) and θ ijl (u(t − τ(t))) are independent of the weights d ijl , d ilj and \(e_{ijl}, e_{ilj}, i,j,l=1,2,\ldots, n. \)

In order to obtain the main results, we need the following lemmas.

Lemma 4.2

[18] Let X, Y and P be real matrices of appropriate dimensions with P > 0. Then, for any positive scalar \(\epsilon\), the following matrix inequality holds:
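As a sanity check, the sketch below tests a common form of this kind of bound, \(X^{T}Y+Y^{T}X \leq \epsilon X^{T}PX+\epsilon^{-1}Y^{T}P^{-1}Y\), on random matrices. That the lemma of [18] takes exactly this form is an assumption made here; the matrices and the name `gap_min_eig` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 4, 3
X = rng.standard_normal((n, m))
Y = rng.standard_normal((n, m))
G = rng.standard_normal((n, n))
P = G @ G.T + n * np.eye(n)  # symmetric positive definite by construction

def gap_min_eig(eps):
    """Smallest eigenvalue of (right-hand side - left-hand side) for a given eps > 0."""
    lhs = X.T @ Y + Y.T @ X
    rhs = eps * X.T @ P @ X + (1.0 / eps) * Y.T @ np.linalg.inv(P) @ Y
    return float(np.linalg.eigvalsh(rhs - lhs).min())

# The gap should be positive semi-definite for every positive eps.
min_eigs = [gap_min_eig(eps) for eps in (0.01, 0.1, 1.0, 10.0)]
```

The bound follows from expanding \((\sqrt{\epsilon}P^{1/2}X-\epsilon^{-1/2}P^{-1/2}Y)^{T}(\sqrt{\epsilon}P^{1/2}X-\epsilon^{-1/2}P^{-1/2}Y)\geq 0\), so the free scalar \(\epsilon\) trades off the two quadratic terms without ever violating the inequality.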

Lemma 4.3

[19] Given any real matrix \(M = M^{T} > 0\) of appropriate dimension and a vector function \({\omega(\cdot): [a, b] \rightarrow \mathbb{R}^{n}}\), such that the integrations concerned are well defined, then
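The integral inequality of [19] is usually stated as \(\left(\int_{a}^{b}\omega(s)\,ds\right)^{T}M\left(\int_{a}^{b}\omega(s)\,ds\right)\leq (b-a)\int_{a}^{b}\omega^{T}(s)M\omega(s)\,ds\). The sketch below evaluates both sides with a midpoint rule for one hypothetical choice of ω and M; it is an illustration of the inequality under that assumed form, not part of the proof.

```python
import numpy as np

a, b, N = 0.0, 2.0, 2000
h = (b - a) / N
s = a + h * (np.arange(N) + 0.5)  # midpoints of the N subintervals

# Hypothetical vector function omega: [a, b] -> R^3, sampled row by row.
omega = np.stack([np.sin(3.0 * s), np.exp(-s), s**2 - 1.0], axis=1)

# Symmetric, strictly diagonally dominant with positive diagonal, hence M > 0.
M = np.array([[2.0, 0.5, 0.0],
              [0.5, 1.0, 0.2],
              [0.0, 0.2, 1.5]])

integral = omega.sum(axis=0) * h                                  # approximates the integral of omega
lhs = float(integral @ M @ integral)                              # quadratic form of the integral
rhs = float((b - a) * np.einsum("ki,ij,kj->", omega, M, omega) * h)  # (b - a) times integral of the quadratic form
```

For a uniform grid the discrete sums satisfy the same inequality exactly (it is the Cauchy–Schwarz inequality in the inner product induced by M), so `lhs <= rhs` holds regardless of the quadrature error.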

5 Main results

In this section, we present a delay-dependent criterion for the asymptotic stability of system (7) with Assumptions 1 to 6.

Theorem 5.1

For given scalars τ2 > τ1 ≥ 0, σ and η, the equilibrium point of (7) is globally asymptotically stable if there exist a symmetric matrix \(P=\left[ \begin{array}{ccc} P_{11} & P_{12} & P_{13} \\ P_{12}^{T} & P_{22} & P_{23}\\ P_{13}^{T} & P_{23}^{T} & P_{33} \end{array} \right]>0, \) positive diagonal matrices \(Q_{l}, l=1,2,\ldots,5, R_{1}, S_{1}, S_{2}, T_{1}, T_{2}, T_{3}, \) positive scalars \(\epsilon_{j}, j=1,2,\ldots,14, \) and a real matrix X such that the following LMIs hold:

where \(\Upomega=[\Upomega_{ij}]_{12 \times 12}, \Uppsi=[\Uppsi]_{14 \times 12}, \Upupsilon=\hbox{diag}\{\epsilon_{1}I,\epsilon_{2}I, \ldots, \epsilon_{14}I\}, \)

Proof

Construct a Lyapunov–Krasovskii functional in the form

where

Calculating the upper right derivative of V along the solution of (7) at the continuous interval \({[t_{k-1}, t_{k}), k \in \mathbb{Z}_{+}, }\) we get

By Lemma 4.3, we have

Furthermore, one can infer from inequality (2) that the following matrix inequalities hold for any positive diagonal matrices \(Z_{k}\) (k = 1, 2, 3) with compatible dimensions:

The following equation holds for any real matrix X with a compatible dimension

By Lemma 4.2, the following inequalities hold for any positive scalars \(\epsilon_{i}, (i=1,2,\ldots,14)\)

Since

and \(\sum_{j=1}^{n}\|\xi_{ij}(u(t))\|^{2} \leq \sum_{l=1}^{n}\mu_{l}^{2}, \sum_{j=1}^{n}\|\theta_{ij}(u(t))\|^{2} \leq \sum_{l=1}^{n}\nu_{l}^{2}\) it follows that

Substituting (11–41) into (10) and making some manipulations, we have

where

From Lemma 4.2 and the well-known Schur complement, \(D^{+}V(t,u(t)) < 0\) holds for \({t \in [t_{k-1}, t_{k}), \quad k \in \mathbb{Z}_{+}}\) if the LMI (9) is true.
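For readers less familiar with the Schur complement step, recall that a symmetric block matrix \(S=\left[\begin{array}{cc} A & B \\ B^{T} & C \end{array}\right]\) satisfies S < 0 if and only if C < 0 and \(A-BC^{-1}B^{T}<0\). The sketch below checks this equivalence numerically on small hypothetical blocks; the blocks are not those of the LMI (9), and the helper names are illustrative.

```python
import numpy as np

def is_neg_def(S):
    """True if the symmetric part of S has only negative eigenvalues."""
    return bool(np.linalg.eigvalsh((S + S.T) / 2.0).max() < 0.0)

def schur_test(A, B, C):
    """Return (S < 0 checked directly, S < 0 checked via the Schur complement)."""
    S = np.block([[A, B], [B.T, C]])
    direct = is_neg_def(S)
    via_schur = is_neg_def(C) and is_neg_def(A - B @ np.linalg.inv(C) @ B.T)
    return direct, via_schur

A = -2.0 * np.eye(3)
C = -np.eye(2)
weak = schur_test(A, 0.1 * np.ones((3, 2)), C)    # small coupling: S stays negative definite
strong = schur_test(A, 2.0 * np.ones((3, 2)), C)  # large coupling: S is no longer negative definite

# Both tests should agree on randomly generated blocks as well.
rng = np.random.default_rng(1)
agree = True
for _ in range(200):
    G = rng.standard_normal((2, 2))
    C_rand = -(G @ G.T + np.eye(2))  # negative definite by construction
    B_rand = rng.uniform(0.0, 2.0) * rng.standard_normal((3, 2))
    direct, via_schur = schur_test(A, B_rand, C_rand)
    agree = agree and (direct == via_schur)
```

This is the mechanism that turns a condition containing an inverse, such as \(A-BC^{-1}B^{T}<0\), into a condition that is linear in the unknown blocks and can therefore be handled by an LMI solver.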

It follows from (9) that

where \(\Uppi^{*}=-\Uppi\). Suppose that \({t \in [t_{n-1}, t_{n}), n \in \mathbb{Z}_{+}}\). Then, integrating inequality (42) over each interval \([t_{k-1}, t_{k})\), \(1 \leq k \leq n-1\), we derive that

which implies that

In order to analyze inequality (43), we need to consider the change of V at impulse times. First, it follows from (8) that

in which the last equivalent relation is obtained by the well-known Schur complements. Secondly, from model (7), it can be obtained that

which together with (44) yields

Thus, we can deduce that

Substituting the above inequality into (43) yields

Applying Lemma 4.3 and (45), we have

Similarly,

Hence, it can be obtained that

where

So the solution u(t) of model (7) is uniformly bounded on \([0,\infty)\). Thus, considering the continuity of the activation function f (i.e., Assumption 1), it can be deduced from system (6) that there exists some constant M > 0 such that \({\|\dot{u}(t)\|\leq M, t\in[t_{k-1},t_k), k\in \mathbb{Z}_+.}\) This implies that \({| \dot{u}_i(t) |\leq M, t\in[t_{k-1},t_k), k\in \mathbb{Z}_+,i\in \Uptheta,}\) where \(\dot{u}\) denotes the right-hand derivative of u at impulsive times.

In the following, we shall prove \(||u(t)||\rightarrow0\) as \(t\rightarrow\infty.\) We first show that

Obviously, this is equivalent to proving that \(|u_i(t_k)| \rightarrow 0\) as \(t_k \rightarrow \infty, i \in \Uptheta\). First, note that \({|\dot{u}_i(t)| \leq M, t \in [t_{k-1},t_k), k\in \mathbb{Z}_+}\); then for any \(\epsilon>0\), there exists a \(\delta=\frac{\epsilon}{2M}>0\) such that, for any \({t{'},t{''}\in [t_{k-1},t_k),k\in \mathbb{Z}_+}\), \(|t'-t''|<\delta\) implies

By Assumption 6, we define \(\bar{\delta}=\min\{\delta, \frac{1}{2}\theta\},\) where \({\theta=\inf_{k\in \mathbb{Z}_+}\{t_{k}-t_{k-1}\}>0.}\) From (45), it can be obtained that

which implies

Applying Lemma 4.3, we get

So for the \(\epsilon\) given above, there exists a \(T=T(\epsilon)>0\) such that \(t_{k} > T\) implies

From the continuity of \(|u_{i}(t)|\) on \([t_{k},t_{k}+\bar{\delta}]\) and the integral mean value theorem, there exists some constant \(\xi_k\in[t_{k},t_{k}+\bar{\delta}]\) such that

which leads to

Combining (47) with (49), one may deduce that for any \(\epsilon>0, \) there exists a \(T=T(\epsilon)>0\) such that \(t_{k} > T\) implies

This completes the proof of (46).

Now we are in a position to prove that \(|u_i(t)|\rightarrow0\) as \(t \rightarrow\infty,i\in \Uptheta.\)

In fact, it follows from (47) that for any \(\epsilon>0,\) there exists a \(\delta=\frac{\epsilon}{2M}>0\) such that, for any \({t{'},t{''}\in [t_{k-1},t_k),k\in \mathbb{Z}_+}\), \(|t'-t''|<\delta\) implies

Since (46) holds, there exists a constant \(T_1=T_1(\epsilon)>0\) such that

In addition, applying the same argument as (48), we can deduce that

where \({\bar{\delta}=\min\{\delta, \frac{1}{2}\theta\}, \theta=\inf_{k\in \mathbb{Z}_+}\{t_{k}-t_{k-1}\}>0.}\) So for the \(\epsilon\) given above, there exists a constant \(T_2=T_2(\epsilon)>0\) such that

Set \({T^\ast=\min\{t_l | t_l\geq \max\{T_1,T_2\}, l\in \mathbb{Z}_+\}.}\) Now, we claim that \(|u_i(t)|\leq \epsilon, t>T^\ast.\) In fact, for any \(t>T^\ast\), assume without loss of generality that \(t\in [t_m,t_{m+1}), m\geq l.\) We consider the following two cases:

Case 1. \(t\in [t_m,t_m+\bar{\delta}].\)

In this case, it is obvious from (50) and (51) that

$$ |u_i(t)|\leq|u_i(t)-u_i(t_m)|+|u_i(t_m)|\leq \frac{\epsilon}{2}+\frac{\epsilon}{2}=\epsilon. $$

Case 2. \(t\in [t_m+\bar{\delta}, t_{m+1}).\)

In this case, we know that \(u_{i}(s)\) is continuous on \([ t-\bar{\delta},t]\subseteq [t_m,t_{m+1}).\) By the integral mean value theorem, there exists at least one point \(\tau_t\in[t-\bar{\delta},t]\) such that

$$ \int_{t-\bar{\delta}}^{t} |u_i(s)| ds=|u_i(\tau_t)|\bar{\delta}, $$

which together with (52) yields \(|u_i(\tau_t)|<\frac{\epsilon}{2}.\) Then, in view of \(\tau_t\in[t-\bar{\delta},t],\) we obtain

$$ |u_i(t)|\leq|u_i(t)-u_i(\tau_t)|+|u_i(\tau_t)|\leq \frac{\epsilon}{2}+\frac{\epsilon}{2}=\epsilon. $$

So in either case we have proved that \(|u_i(t)|\leq \epsilon, t>T^\ast.\) Therefore, the zero solution of system (7) is globally asymptotically stable, which implies that model (1) has a unique equilibrium point which is globally asymptotically stable. This completes the proof.

For system (1), when \(a_{ij}=d_{ijl}=0, i, j, l=1,2,\ldots,n, \) it reduces to the following high-order Hopfield neural networks with time-varying delays and impulsive perturbations:

where \(i=1,2,\ldots,n. \) Before proceeding, we make the following assumption, which is used in the next theorem.

Assumption 7

There exist constants \(\nu_{j} > 0\) and \(\sigma_{j}\), \(j=1,2,\ldots,n\), such that \(\gamma_{j} < \sigma_{j}\)

System (5) can be rewritten in the following form:

or in equivalent form:

Now, we present a global asymptotic stability result for the delayed high-order neural networks with time delay in the leakage term and impulsive perturbations.

Theorem 5.2

For given scalars τ2 > τ1 ≥ 0, σ and η, the equilibrium point of (4) is globally asymptotically stable if there exist a symmetric matrix \(P=\left[ \begin{array}{ccc} P_{11} & P_{12} & P_{13} \\ P_{12}^{T} & P_{22} & P_{23}\\ P_{13}^{T} & P_{23}^{T} & P_{33} \end{array} \right]>0,\) positive diagonal matrices \(Q_{l}, l=1,2,\ldots,5, R_{1}, S_{1}, S_{2}, T_{1}, T_{2}, T_{3}, \) positive scalars \(\epsilon_{j}, j=4,6,8,10,12,14, \) and a real matrix X such that the following LMIs hold:

where \(\hat{\Upomega}=[\hat{\Upomega}_{ij}]_{11 \times 11}, \hat{\Uppsi}=[\hat{\Uppsi}]_{6 \times 11}, \hat{\Upupsilon}=\hbox{diag}\{\epsilon_{4}I,\epsilon_{6}I, \epsilon_{8}I, \epsilon_{10}I, \epsilon_{12}I, \epsilon_{14}I\}\),

Remark 5.3

The augmented Lyapunov–Krasovskii functional used in this paper, which contains triple integral and leakage delay terms, is new and more efficient than the one used in [6].

Remark 5.4

Recently, a few authors have added triple integral terms to the Lyapunov–Krasovskii functional to derive stability results for neural networks; see, for example, [20]. However, the leakage delay was not taken into account in these triple integral terms. Motivated by this, we have included the leakage delay in the triple integrals, which is one of the reasons for the reduced conservatism. The free-weighting matrix method has also been applied to obtain less conservative stability conditions. In [6], the authors used a few free-weighting matrices and obtained stability results that are less conservative than those previously published in the literature. However, there is still room for improvement over the results in [6]. Motivated by this, we introduce some triple integral terms for interval time-varying delays in the Lyapunov–Krasovskii functional and derive some less conservative stability results. This plays an important role in the further reduction of conservatism, and we find a better upper bound than the one reported in [6].

When there is no leakage delay, model (7) becomes

For model (59), the following result follows from Theorem 5.1.

Theorem 5.5

For given scalars τ2 > τ1 ≥ 0, σ and η, the equilibrium point of (4) is globally asymptotically stable if there exist a symmetric matrix \(P=\left[ \begin{array}{ccc} P_{11} & P_{12} & P_{13} \\ P_{12}^{T} & P_{22} & P_{23}\\ P_{13}^{T} & P_{23}^{T} & P_{33} \end{array} \right]>0\), positive diagonal matrices \(Q_{l}\) (l = 1, 2, 4), \(S_{1}, S_{2}, T_{1}, T_{2}\), positive scalars \(\epsilon_{j}, j=1,2,\ldots,12, \) and a real matrix X such that the following LMI holds:

where \(\tilde{\Upomega}=[\tilde{\Upomega}_{ij}]_{10 \times 10}, \tilde{\Uppsi}=[\tilde{\Uppsi}]_{12 \times 10}, \tilde{\Upupsilon}=\hbox{diag}\{\epsilon_{1}I,\epsilon_{2}I, \ldots, \epsilon_{12}I\}\),

When there is no leakage delay, model (56) becomes

For model (61), the following result follows from Theorem 5.2.

Theorem 5.6

For given scalars τ2 > τ1 ≥ 0, σ and η, the equilibrium point of (61) is globally asymptotically stable if there exist a symmetric matrix \(P=\left[ \begin{array}{ccc} P_{11} & P_{12} & P_{13} \\ P_{12}^{T} & P_{22} & P_{23}\\ P_{13}^{T} & P_{23}^{T} & P_{33} \end{array} \right]>0, \) positive diagonal matrices \(Q_{l}\) (l = 1, 2, 4), \(S_{1}, S_{2}, T_{1}, T_{2}\), positive scalars \(\epsilon_{j}, j=4,6,10,12,14, \) and a real matrix X such that the following LMIs hold:

where \(\hat{\Upomega}=[\hat{\Upomega}_{ij}]_{11 \times 11}, \hat{\Uppsi}=[\hat{\Uppsi}]_{6 \times 11}, \hat{\Upupsilon}=\hbox{diag}\{\epsilon_{4}I,\epsilon_{6}I, \epsilon_{8}I, \epsilon_{10}I, \epsilon_{12}I, \epsilon_{14}I\}, \)

6 Numerical examples

Example 4.1

Consider the delayed impulsive neural networks (5) with

Obviously, Assumptions 1 and 2 are satisfied with

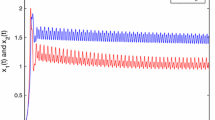

Let us take \(\sigma=0.1, \Updelta_{1}=0, \eta=1\) and \(\Updelta_{2}=0.7\). By using the MATLAB LMI Control Toolbox to solve the LMIs in Theorem 5.1, we obtain the following feasible solution:

We now discuss the same example for the nominal system (i.e., with the impulse term removed from the reported theorems). Table 1 shows the maximum allowable upper bound of the time delay τ2 for different fixed values of τ1 and σ.

In addition, the time-varying delay was chosen as \(\tau(t)=0.0335 \sin t+2\) in Theorem 1 of [6] for η = 0.0335. However, if we set \(\tau(t)=0.1131 \sin t+2310, \) then from Theorem 5.5 we can verify that system (59) has a unique equilibrium point, which is globally asymptotically stable for the same η. Hence, the results presented in this paper are less conservative than those in [6].
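The delay bounds that enter the stability conditions can be read off a given delay function numerically. The following minimal sketch does this for the delay \(\tau(t)=0.0335 \sin t+2\) quoted from [6]: it estimates the lower bound, the upper bound, and the largest value of the derivative on a grid. The grid and the finite-difference step are arbitrary choices made for this illustration.

```python
import math

def tau(t):
    """Time-varying delay quoted from [6]."""
    return 0.0335 * math.sin(t) + 2.0

step = 1e-3
ts = [k * step for k in range(int(4.0 * math.pi / step) + 1)]  # two periods of sin

tau_min = min(tau(t) for t in ts)  # estimate of the lower delay bound
tau_max = max(tau(t) for t in ts)  # estimate of the upper delay bound

dt = 1e-6
# Central finite difference as an estimate of the derivative of tau.
dtau_max = max((tau(t + dt) - tau(t - dt)) / (2.0 * dt) for t in ts)
```

For this delay the estimates are close to 1.9665, 2.0335 and 0.0335, respectively, so the derivative bound coincides with the value η = 0.0335 used for the comparison.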

Example 4.2

Consider the delayed impulsive neural networks (18) with

Obviously, Assumptions 2 and 3 are satisfied with

Let us take \(\sigma=0.1, \Updelta_{1}=0, \eta=1\) and \(\Updelta_{2}=0.4\). By using the MATLAB LMI Control Toolbox to solve the LMIs in Theorem 5.2, we obtain the following feasible solution:

We now discuss the same example for the nominal system (i.e., with the impulse term removed from the reported theorems). Table 2 shows the maximum allowable upper bound of the time delay τ2 for different fixed values of τ1 and σ.

In addition, the time-varying delay was chosen as \(\tau(t)=0.1023 \sin t+2\) in Theorem 1 of [6] for η = 0.1023. However, if we set \(\tau(t)=0.1023 \sin t+2795, \) then from Theorem 5.6 we can verify that system (61) has a unique equilibrium point, which is globally asymptotically stable for the same η. Hence, the results presented in this paper are less conservative than those in [6].

Remark 4.3

In the simulations, one may find that the high-order Hopfield neural network (5) with σ = 0 is globally asymptotically stable (see Fig. 1). If we take σ = 1.5, it is easy to check with the MATLAB LMI toolbox that the LMIs (8–9) have no feasible solution, which means that our results cannot guarantee the stability of the high-order Hopfield neural network (5) in Example 4.1. In this case, the simulations show that the network (5) is indeed not stable (see Fig. 2). This illustrates the effectiveness of the developed results. The corresponding simulations for Example 4.2 are shown in Figs. 3 and 4.
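The destabilizing effect of a leakage delay can already be seen on a scalar test problem. The sketch below is not the network of Example 4.1; it integrates the linear equation \(\dot{x}(t)=-c\,x(t-\sigma)\) with an explicit Euler scheme and a constant initial history. For this scalar equation the classical stability condition is cσ < π/2, so with the hypothetical value c = 2 the choice σ = 0.1 should decay and σ = 1.5 should grow. The function names and step size are illustrative.

```python
def simulate_leakage(c, sigma, t_end=40.0, h=1e-3, x0=1.0):
    """Explicit Euler for x'(t) = -c * x(t - sigma) with history x(t) = x0 on [-sigma, 0]."""
    m = int(round(sigma / h))        # leakage delay measured in time steps
    n_steps = int(round(t_end / h))
    x = [x0] * (m + 1)               # samples on [-sigma, 0], all equal to x0
    for _ in range(n_steps):
        # x[-1] is the current state, x[-1 - m] is the state sigma time units earlier.
        x.append(x[-1] - h * c * x[-1 - m])
    return x[m:]                     # samples on [0, t_end]

def tail_peak(x, h=1e-3, window=10.0):
    """Largest |x| over the last `window` time units of the trajectory."""
    k = int(round(window / h))
    return max(abs(v) for v in x[-k:])

peak_small = tail_peak(simulate_leakage(c=2.0, sigma=0.1))  # c * sigma = 0.2 < pi / 2
peak_large = tail_peak(simulate_leakage(c=2.0, sigma=1.5))  # c * sigma = 3.0 > pi / 2
```

With the small delay the trajectory has essentially vanished by the end of the run, whereas with the large delay it oscillates with growing amplitude, in line with the observation of Gopalsamy [13] that a delay in the stabilizing negative feedback term tends to destabilize a system.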

7 Conclusion

In this paper, we have investigated a class of high-order Hopfield neural networks with time delay in the leakage term under impulsive perturbations. A sufficient condition ensuring the existence and uniqueness of the equilibrium point of the high-order Hopfield neural networks has been derived by using the contraction mapping theorem. Next, some sufficient conditions for global asymptotic stability have been derived for a class of high-order Hopfield-type neural networks with mixed delays and impulsive perturbations. A new method has been proposed to obtain the delay-dependent stability criteria by introducing an appropriate Lyapunov–Krasovskii functional that includes triple integral terms. Less conservative results have been derived by applying the free-weighting matrix method and LMI techniques. Finally, the effectiveness of the proposed results has been demonstrated by two numerical examples.

References

1. Cao JD, Liang JL, Lam J (2004) Exponential stability of high-order bidirectional associative memory neural networks with time delays. Physica D Nonlinear Phenom 199:425–436

2. Xu BJ, Liu XZ, Liao XX (2003) Global asymptotic stability of high-order Hopfield type neural networks with time delays. Comput Math Appl 45:1729–1737

3. Zhang BY, Xu SY, Li YM, Chu YM (2007) On global exponential stability of high-order neural networks with time-varying delays. Phys Lett A 366:69–78

4. Xu B, Liu X, Liao X (2008) Stability analysis of high-order Hopfield type neural networks with uncertainty. Neurocomputing 71:508–512

5. Xu B, Wang Q, Liao X (2006) Global exponential stability of high order Hopfield type neural networks. Appl Math Comput 174:98–116

6. Zheng C-D, Zhang H, Wang Z (2011) Novel exponential stability criteria of high-order neural networks with time-varying delays. IEEE Trans Syst Man Cybern Part B 41:486–496

7. Li XD, Chen Z (2009) Stability properties for Hopfield neural networks with delays and impulsive perturbations. Nonlinear Anal Real World Appl 10:3253–3265

8. Mohamad S (2007) Exponential stability in Hopfield-type neural networks with impulses. Chaos Solitons Fractals 32:456–467

9. Mohamad S, Gopalsamy K, Akca H (2008) Exponential stability of artificial neural networks with distributed delays and large impulses. Nonlinear Anal Real World Appl 9:872–888

10. Liu X, Teo KL, Xu B (2005) Exponential stability of impulsive high-order Hopfield-type neural networks with time-varying delays. IEEE Trans Neural Netw 16:1329–1339

11. Xu B, Xu Y, He L (in press) LMI-based stability analysis of impulsive high-order Hopfield-type neural networks. Math Comput Simul. doi:10.1016/j.matcom.2011.02.008

12. Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

13. Gopalsamy K (1992) Stability and oscillations in delay differential equations of population dynamics. Kluwer, Dordrecht

14. Peng S (2010) Global attractive periodic solutions of BAM neural networks with continuously distributed delays in the leakage terms. Nonlinear Anal Real World Appl 11:2141–2151

15. Li X, Cao J (2010) Delay-dependent stability of neural networks of neutral type with time delay in the leakage term. Nonlinearity 23:1709–1726

16. Li X, Fu X, Balasubramaniam P, Rakkiyappan R (2010) Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal Real World Appl 11:4092–4108

17. Li X, Rakkiyappan R, Balasubramaniam P (2011) Existence and global stability analysis of equilibrium of fuzzy cellular neural networks with time delay in the leakage term under impulsive perturbations. J Franklin Inst 348:135–155

18. Zhang H, Wang Z, Liu D (2008) Global asymptotic stability of recurrent neural networks with multiple time-varying delays. IEEE Trans Neural Netw 19:855–873

19. Gu K (2000) An integral inequality in the stability problem of time-delay systems. In: Proceedings of the 39th IEEE conference on decision and control, Sydney, Australia, Dec 2000, pp 2805–2810

20. Chen J, Sun J, Liu GP, Rees GP (2010) New delay-dependent stability criteria for neural networks with time-varying interval delay. Phys Lett A 374:4397–4405

Cite this article

Rakkiyappan, R., Pradeep, C., Vinodkumar, A. et al. Dynamic analysis for high-order Hopfield neural networks with leakage delay and impulsive effects. Neural Comput & Applic 22 (Suppl 1), 55–73 (2013). https://doi.org/10.1007/s00521-012-0997-z

DOI: https://doi.org/10.1007/s00521-012-0997-z