Abstract

Principal Component Analysis (PCA) is a powerful technique for extracting structure from possibly high-dimensional data sets. It is readily performed by solving an eigenvalue problem or by using iterative algorithms that estimate the principal components. This paper proposes a new method for the online identification of a nonlinear system modelled in a Reproducing Kernel Hilbert Space (RKHS). The PCA technique is used twice. First, we exploit Kernel PCA (KPCA), a nonlinear extension of PCA to the RKHS, which transforms the input data by a nonlinear mapping into a high-dimensional feature space in which PCA is performed. Second, we use Reduced Kernel Principal Component Analysis (RKPCA) to update the principal components that represent the observations selected by the KPCA method.


1 Introduction

Since the introduction of Support Vector Machines (SVM) [19], many learning algorithms have been transferred to a kernel representation [2, 7]. The main benefit is that nonlinearities can be handled without solving a nonlinear optimization problem. The transfer is accomplished implicitly by means of a nonlinear map into a Reproducing Kernel Hilbert Space (RKHS) \( F_{k} \) [9].

Kernel methods have been successfully applied to a large class of problems, such as the identification of nonlinear systems [2, 3, 16, 17], system diagnosis [24], time series prediction [13], face recognition [26] and biological data processing for medical diagnosis [23]. The attractiveness of such algorithms stems from their elegant treatment of data generated by nonlinear processes. However, these techniques suffer from computational complexity, as the memory requirement and the training time increase rapidly with the number of observations. For large data sets (for example in image processing, computer vision or object recognition), kernel methods, despite their ability to deal with nonlinearities, are therefore computationally limited: an eigendecomposition of the Gram matrix can become too time-consuming to extract the principal components, and the identification of the system parameters becomes a difficult task. To overcome this burden, theoretical foundations for online learning algorithms with kernel methods in reproducing kernel Hilbert spaces have recently been proposed [4, 5, 14, 15, 18, 22, 25]. Online kernel algorithms are also more useful when the system to be identified is time-varying, because they can automatically track changes in a system model with time-varying and time-lagging characteristics.

In this paper, we propose a new method for the online identification of the parameters of a nonlinear system modelled in a Reproducing Kernel Hilbert Space (RKHS). This method uses Reduced Kernel Principal Component Analysis (RKPCA), which selects the observations that best approximate the principal components retained by the Kernel Principal Component Analysis (KPCA) method [2]. The selected observations are used to build an RKHS model with a reduced number of parameters. The proposed online identification method updates the list of retained principal components, and then the RKHS model, by evaluating the error between the model output and the process output. The proposed technique may be very helpful for designing adaptive control strategies for nonlinear systems.

The paper is organized as follows. In Sect. 2, we recall the definition of a Reproducing Kernel Hilbert Space (RKHS). Section 3 is devoted to modeling in RKHS. The Reduced Kernel Principal Component Analysis (RKPCA) method is presented in Sect. 4. In Sect. 5, we propose the new online RKPCA method. The proposed algorithm has been tested on the identification of the Wiener-Hammerstein benchmark [21] and of a chemical reactor [8].

2 Reproducing kernel Hilbert space

Let \( E \subset {\mathbb R}^{d} \) be an input space and \( L^{2}(E) \) the Hilbert space of square integrable functions defined on E. Let \( k:E \times E \to \mathbb{R} \) be a continuous positive definite kernel. It is proved [6, 9] that there exist a sequence of orthonormal eigenfunctions \( (\psi_{1}, \psi_{2}, \ldots, \psi_{l}) \) in \( L^{2}(E) \) and a sequence of corresponding real positive eigenvalues \( (\sigma_{1}, \sigma_{2}, \ldots, \sigma_{l}) \) (where l can be infinite) such that

$$ k(x,x^{\prime }) = \sum\limits_{i = 1}^{l} {\sigma_{i} \psi_{i} (x)\psi_{i} (x^{\prime })} ,\quad x,x^{\prime } \in E \qquad (1) $$

Let \( F_{k} \subset L^{2} (E) \) be a Hilbert space associated to the kernel k and defined by:

where \( \varphi_{i} = \sqrt {\sigma_{i} } \psi_{i} \) i = 1, …, l. The scalar product in the space F k is given by:

The kernel k is said to be a reproducing kernel of the Hilbert space F k if and only if the following conditions are satisfied.

where \( k(x, \cdot ) \) denotes the function \( x^{\prime } \mapsto k(x,x^{\prime } ) \) for \( x^{\prime } \in E \). \( F_{k} \) is called a reproducing kernel Hilbert space (RKHS) with kernel k and dimension l. Moreover, every RKHS has a unique positive definite reproducing kernel, and vice versa [10].

Among the possible reproducing kernels, we mention the Radial Basis function (RBF) defined as:

with μ a fixed parameter.
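As a minimal sketch of how such a kernel can be evaluated numerically, the following Python code computes a Gaussian RBF kernel and the matrix of pairwise kernel values over a data set. The scaling \( 2\mu^{2} \) in the exponent and the function names `rbf_kernel` and `gram_matrix` are assumptions made for illustration; the exact form used in the paper may differ by a constant factor.

```python
import numpy as np


def rbf_kernel(x, x_prime, mu):
    """Gaussian RBF kernel value; the 2 * mu**2 scaling is an assumption."""
    diff = np.asarray(x, dtype=float) - np.asarray(x_prime, dtype=float)
    return float(np.exp(-np.dot(diff, diff) / (2.0 * mu ** 2)))


def gram_matrix(X, mu):
    """Matrix of kernel values k(x_i, x_j) for all pairs of rows of X."""
    X = np.asarray(X, dtype=float)
    sq_norms = np.sum(X ** 2, axis=1)
    # Squared Euclidean distances between all pairs of rows.
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    # Clip tiny negative values caused by rounding before exponentiating.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * mu ** 2))
```

The resulting matrix is symmetric with a unit diagonal, and its eigenvalues are non-negative up to rounding, which is the numerical counterpart of the positive definiteness required above.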

3 RKHS models

Consider a set of observations \( \{ x^{(i)} ,y^{(i)} \}_{i = 1, \ldots ,M} \), where \( x^{(i)} \in {\mathbb R}^{n} \) and \( y^{(i)} \in \mathbb{R} \) are respectively the system input and output. According to statistical learning theory (SLT) [19, 20], the identification problem in the RKHS \( F_{k} \) can be formulated as the minimization of a regularized empirical risk. It thus consists in finding the function \( f^{*} \in F_{k} \) such that

where M is the number of measurements and λ is a regularization parameter chosen to ensure the generalization ability of the solution \( f^{*} \). According to the representer theorem [9], the solution \( f^{*} \) of the optimization problem (6) is a linear combination of the kernel k applied to the M measurements \( x^{(i)} \), i = 1, …, M:

$$ f^{*} (x) = \sum\limits_{i = 1}^{M} {a_{i} k(x^{(i)} ,x)} \qquad (7) $$

To solve the optimization problem (6), we can use kernel methods such as the Support Vector Machine (SVM) [11], the Least Squares Support Vector Machine (LSSVM) [7], the Regularization Network (RN) [3] or Kernel Partial Least Squares (KPLS) [12]. In [2], Kernel Principal Component Analysis (KPCA) was proposed. This method revisits the regularization idea by seeking the solution of the identification problem in a subspace \( F_{kpca} \) spanned by the so-called principal components, and yields an RKHS model with M parameters.
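To make the representer-theorem form concrete, here is a hedged Python sketch of a regularization-network style fit. It assumes that the empirical risk is the mean squared error plus \( \lambda \|f\|^{2} \) and that the kernel is the Gaussian RBF with a \( 2\mu^{2} \) scaling; under those assumptions the coefficients solve the linear system \( (K + \lambda M I)a = y \). The function names are illustrative and do not come from the paper.

```python
import numpy as np


def gaussian_gram(A, B, mu):
    """Kernel values between rows of A and rows of B (assumed Gaussian form)."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * mu ** 2))


def fit_regularization_network(X, y, mu, lam):
    """Coefficients a_i of f(x) = sum_i a_i k(x_i, x) for a squared-error risk."""
    M = X.shape[0]
    K = gaussian_gram(X, X, mu)
    # Stationarity of (1/M) * ||y - K a||^2 + lam * a^T K a gives this system.
    return np.linalg.solve(K + lam * M * np.eye(M), y)


def predict(X_train, a, X_new, mu):
    """Evaluate the kernel expansion at the rows of X_new."""
    return gaussian_gram(X_new, X_train, mu) @ a
```

The model keeps one coefficient per training observation, which is the cost that the reduced method of the next section aims to lower.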

In the next section, we present the Reduced KPCA method, in which the retained principal components given by the KPCA are approximated by a set of input observation vectors. This approximation relies on a set of particular training observations and allows the construction of an RKHS model with far fewer parameters.

4 RKPCA method

Consider a nonlinear system with an input \( u \in \mathbb{R} \) and an output \( y \in \mathbb{R} \), from which we extract a set of observations \( \{ u^{(i)} ,y^{(i)} \}_{i = 1, \ldots ,M} \). Let \( F_{k} \) be an RKHS with kernel k. To build the input vector \( x^{(i)} \) of the RKHS model, we use the NARX (Nonlinear AutoRegressive with eXogenous input) structure:

The set of observations becomes \( D = \{ x^{(i)} ,y^{(i)} \}_{i = 1, \ldots ,M} \) where \( x^{(i)} \in {\mathbb R}^{{m_{u} + m_{y} + 1}} \) and \( y^{(i)} \in \mathbb{R} \) and the RKHS model of this system based on (7) can be written as:
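As an illustration of the NARX regressor described above, the following Python sketch stacks delayed inputs and outputs into vectors of dimension \( m_{u} + m_{y} + 1 \). The exact ordering of the lags is an assumption (the current input, \( m_{u} \) past inputs, then \( m_{y} \) past outputs), and the name `build_narx_regressors` is hypothetical.

```python
import numpy as np


def build_narx_regressors(u, y, m_u, m_y):
    """Return (X, targets) with X[i] = [u(k), ..., u(k - m_u), y(k - 1), ..., y(k - m_y)].

    The lag ordering is an assumption; only the dimension m_u + m_y + 1
    is taken from the text.
    """
    u = np.asarray(u, dtype=float)
    y = np.asarray(y, dtype=float)
    start = max(m_u, m_y)  # first index with a full history
    rows = []
    for k in range(start, len(u)):
        past_u = u[k - m_u:k + 1][::-1]  # u(k), u(k-1), ..., u(k-m_u)
        past_y = y[k - m_y:k][::-1]      # y(k-1), ..., y(k-m_y)
        rows.append(np.concatenate([past_u, past_y]))
    return np.array(rows), y[start:]
```

For example, with `m_u = 2` and `m_y = 1` each regressor has four entries, and the first usable sample is the one at index 2.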

Define the mapping Φ:

where φ i are given in (2).

The Gram matrix K associated with the kernel k is the M × M square matrix with entries

$$ K_{i,j} = k(x^{(i)} ,x^{(j)} ),\quad i,j = 1, \ldots ,M \qquad (11) $$

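The kernel trick [10] states that

$$ \left\langle {\Upphi (x),\Upphi (x^{\prime } )} \right\rangle = k(x,x^{\prime } ),\quad \forall x,x^{\prime } \in E \qquad (12) $$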

We assume that the transformed data \( \{ \Upphi (x^{(i)} )\}_{i = 1, \ldots ,M} \in {\mathbb R}^{l} \) are centered [2]. The empirical covariance matrix of the transformed data is symmetric and of dimension l. It is written as follows:

$$ C_{\phi } = \frac{1}{M}\sum\limits_{i = 1}^{M} {\Upphi (x^{(i)} )\Upphi (x^{(i)} )^{T} } \qquad (13) $$

Let \( l^{\prime } \) be the number of eigenvectors \( \{ V_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) of the matrix \( C_{\phi } \) that correspond to the nonzero positive eigenvalues \( \{ \lambda_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \). It is proved in [2] that \( l^{\prime } \) is less than or equal to M.

Because of the large size l of \( C_{\phi } \), computing the eigenvectors \( \{ V_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) can be difficult. The KPCA method shows that these eigenvectors are related to the eigenvectors \( \{ \beta_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) of the Gram matrix K according to [1]:

where \( (\beta_{j,i} )_{j = 1, \ldots ,p} \) are the components of \( \{ \beta_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) associated to their nonzero eigenvalues \( \mu_{1} > \cdots > \mu_{{l^{'} }}. \)

The principle of the KPCA method consists in arranging the eigenvectors \( \{ \beta_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) in decreasing order of their corresponding eigenvalues \( \{ \mu_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \). The principal components are the first p vectors \( \{ V_{j} \}_{j = 1, \ldots ,p} \), associated with the highest eigenvalues, and are often sufficient to describe the structure of the data [1, 2]. The number p satisfies the Inertia Percentage Criterion (IPC) given by:

where

The RKHS model provided by the KPCA method is [1].

Since the principal components are linear combinations of the transformed input data \( \{ \Upphi (x^{(i)} )\}_{i = 1, \ldots ,M} \) [3], the Reduced KPCA approximates each vector \( V_{j} \), j = 1, …, p, by a transformed input datum \( \Upphi (x_{b}^{(j)} ) \in \{ \Upphi (x^{(i)} )\}_{i = 1, \ldots ,M} \) that has a high projection value in the direction of \( V_{j} \) [1].

The projection of \( \Upphi (x^{(i)} ) \) on \( V_{j} \), denoted \( \tilde{\Upphi }(x^{(i)} )_{j} \in \mathbb{R} \), can be written as:

According to (14) and (12), the relation (18) is written:

To select the vectors \( \{ \Upphi (x_{b}^{(j)} )\} \), we project all the vectors \( \{ \Upphi (x^{(i)} )\}_{i = 1, \ldots ,M} \) on each principal component \( \{ V_{j} \}_{j = 1, \ldots ,p} \) and we retain the \( x_{b}^{(j)} \in \{ x^{(i)} \}_{i = 1, \ldots ,M} \) that satisfies

where ζ is a given threshold.

Once the vectors \( \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \) corresponding to the p principal components \( \{ V_{j} \}_{j = 1, \ldots ,p} \) are determined, we transform the vector \( \Upphi (x) \in {\mathbb R}^{l} \) into the vector \( \hat{\Upphi }(x) \in {\mathbb R}^{p} \) that belongs to the space spanned by \( \{ \Upphi (x_{b}^{(j)} )\}_{j = 1, \ldots ,p} \), and the proposed reduced model is

where

and according to the kernel trick (12), the model (21) is

where

The model (23) is simpler than the one provided by the KPCA. The identification problem can be formulated as the minimization of a regularized least squares criterion written as:

where ρ is a regularization parameter and \( \hat{a} = (\hat{a}_{1} , \ldots ,\hat{a}_{p} )^{T} \) is the parameter estimate vector.

The solution of the problem (25) is

With:

and \( I_{p} \in {\mathbb R}^{p \times p} \) is the p × p identity matrix.

The RKPCA algorithm is summarized by the following five steps:

1. Determine the nonzero eigenvalues \( \{ \mu_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) and the eigenvectors \( \{ \beta_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) of the Gram matrix K.

2. Arrange the \( \{ \beta_{j} \}_{{j = 1, \ldots ,l^{\prime } }} \) in decreasing order of the corresponding eigenvalues.

3. For the p retained principal components, choose the \( \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \) that satisfy (20).

4. Solve (25) to determine \( \hat{a}^{*} \in {\mathbb R}^{p} \).

5. The reduced RKHS model is given by (23).
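The five steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the kernel is taken as a Gaussian RBF with a \( 2\mu^{2} \) scaling, the feature-space centering is omitted, the number p is chosen from a cumulative-eigenvalue ratio with an assumed default of 0.95, and the selection rule simply keeps, for each component, the not-yet-selected observation with the largest absolute projection (the threshold ζ of (20) is not used). The names `rkpca_fit` and `rkpca_predict` are hypothetical.

```python
import numpy as np


def gaussian_gram(A, B, mu):
    """Kernel values between rows of A and rows of B (assumed Gaussian form)."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * mu ** 2))


def rkpca_fit(X, y, mu, inertia=0.95, rho=1e-6):
    K = gaussian_gram(X, X, mu)

    # Steps 1-2: eigenpairs of K, sorted by decreasing eigenvalue.
    eigvals, eigvecs = np.linalg.eigh(K)
    eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]
    keep = eigvals > 1e-10 * eigvals[0]  # drop numerically zero eigenvalues
    eigvals, eigvecs = eigvals[keep], eigvecs[:, keep]

    # Number p of retained components from the cumulative inertia (assumed rule).
    ratio = np.cumsum(eigvals) / eigvals.sum()
    p = min(int(np.searchsorted(ratio, inertia)) + 1, len(eigvals))

    # Step 3: projection of each Phi(x_i) on V_j, with V_j scaled to unit norm.
    proj = K @ eigvecs[:, :p] / np.sqrt(eigvals[:p])
    selected = []
    for j in range(p):
        for i in np.argsort(-np.abs(proj[:, j])):
            if int(i) not in selected:  # keep the selected observations distinct
                selected.append(int(i))
                break
    Xb = X[selected]

    # Step 4: regularized least squares on the p reduced regressors.
    G = gaussian_gram(X, Xb, mu)
    a_hat = np.linalg.solve(G.T @ G + rho * np.eye(p), G.T @ y)
    return Xb, a_hat


def rkpca_predict(Xb, a_hat, X_new, mu):
    """Step 5: reduced model, sum over j of a_j * k(x_b^(j), x)."""
    return gaussian_gram(X_new, Xb, mu) @ a_hat
```

The reduced model evaluates only p kernel functions per prediction instead of M, which is the point of the reduction.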

5 Online RKPCA method

In this section, we propose an online Reduced Kernel Principal Component Analysis method, which consists in updating the vectors that approximate the principal components. The proposed method is detailed in the following steps.

First, to determine the optimal value of the parameter μ of the kernel associated with the RKHS model, an offline learning step is carried out on a set of n observations \( I = \{ (x^{(1)} ,y^{(1)} ), \ldots ,(x^{(n)} ,y^{(n)} )\} \) until the resulting RKHS model correctly approximates the nonlinear system. Then, we apply the RKPCA method to reduce the number of model parameters, and the resulting model is written as:

Let I n be the set of observations that correspond to the retained principal components. \( I_{n} = \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \)

At time instant (n + 1), the RKHS model output is obtained according to (28) as:

The error between the estimated output and the real one is

If \( e^{(n + 1)} < \varepsilon_{1} \), where \( \varepsilon_{1} \) is a given threshold, the model approximates the system behavior sufficiently well. Otherwise, an update of the RKHS model is required, which can be accomplished either by updating the model parameters or by updating the retained principal components.

In both cases, we calculate the projection of \( \Upphi (x^{(n + 1)} ) \) onto the space \( F_{kpca} \) spanned by \( \{ \Upphi (x_{b}^{(j)} )\}_{j = 1, \ldots ,p} \). This projection is denoted \( \hat{\Upphi }(x^{(n + 1)} ) \), and its j-th component is given by:

A good approximation of \( \Upphi (x^{n + 1} ) \) by \( \hat{\Upphi }(x^{n + 1} ) \) requires satisfying the following condition:

The set I n is updated to \( I_{n + 1} = \{ I_{n} , \, x^{(n + 1)} \} \) to determine the parameters \( \{ \hat{a}_{j} \}_{j = 1, \ldots ,p} \) of the RKHS model (28).

If (32) is not satisfied, we update the set \( \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \) using the observation set \( I_{n + 1} \). We then build the Gram matrix corresponding to \( I_{n + 1} \), given by:

and we compute its eigenvalues.

According to relations (15), (16) and (33), we determine the new \( p^{\prime } \) principal components. Then, we use the RKPCA to determine the new set \( \{ x_{b}^{(j)} \}_{{j = 1, \ldots ,p^{\prime } }} \) that approximates the \( p^{\prime } \) retained principal components. The RKPCA model is given by:

Finally, we estimate the parameters \( \hat{a}_{j} ,\quad j = 1, \ldots ,p^{\prime } \).

In the following, we summarize the algorithm of the online RKPCA method.

6 Online RKPCA algorithm

6.1 Offline phase

1. Using (15) and (16), determine the p retained principal components from a set of n measurements. Then determine the set \( I_{n} = \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \) according to (20). The RKHS model is given by:

$$ \tilde{y} = \sum\limits_{j = 1}^{p} {\hat{a}_{j} } k(x_{b}^{(j)} ,x) $$

6.2 Online phase

1. At time instant (n + 1), a new observation \( (x^{(n + 1)} ,y^{(n + 1)} ) \) is available. If \( e^{(n + 1)} < \varepsilon_{1} \), the model approximates the behavior of the system sufficiently well; otherwise, the RKHS model (28) must be updated using the projection \( \hat{\Upphi }(x^{(n + 1)} ) \) given by (31).

2. If (32) is satisfied, use the set \( I_{n + 1} = \{ I_{n} ,x^{(n + 1)} \} \) to update the parameters \( \{ \hat{a}_{j} \}_{j = 1, \ldots ,p} \); otherwise, update the set \( \{ x_{b}^{(j)} \}_{j = 1, \ldots ,p} \) using the set \( I_{n + 1} \) and relations (15), (16) and (33). The new RKHS model is given by (34), and its parameters \( \hat{a}_{j} \) can be determined by the least squares method.
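The control flow of the online phase can be sketched in Python as below. Several parts are assumptions and are labeled as such: the kernel is a Gaussian RBF with a \( 2\mu^{2} \) scaling, and condition (32) is replaced by a swapped-in test, namely the squared residual of projecting \( \Upphi (x^{(n + 1)} ) \) onto the span of the retained vectors (an approximate-linear-dependence style criterion with a hypothetical threshold `eps2`). The re-selection step reuses a simplified largest-projection rule without the threshold ζ. All function names are illustrative.

```python
import numpy as np


def gram(A, B, mu):
    """Kernel values between rows of A and rows of B (assumed Gaussian form)."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * mu ** 2))


def fit_params(X, y, Xb, mu, rho):
    """Regularized least squares for the coefficients of the reduced model."""
    G = gram(X, Xb, mu)
    return np.linalg.solve(G.T @ G + rho * np.eye(len(Xb)), G.T @ y)


def reselect(X, y, mu, inertia):
    """Recompute the retained observations from the Gram matrix of X."""
    vals, vecs = np.linalg.eigh(gram(X, X, mu))
    vals, vecs = np.clip(vals[::-1], 0.0, None), vecs[:, ::-1]
    ratio = np.cumsum(vals) / vals.sum()
    p = min(int(np.searchsorted(ratio, inertia)) + 1, len(vals))
    chosen = []
    for j in range(p):
        # The projection on V_j is proportional to the entries of beta_j.
        for i in np.argsort(-np.abs(vecs[:, j])):
            if int(i) not in chosen:
                chosen.append(int(i))
                break
    return X[chosen], y[chosen]


def online_step(Xb, yb, a, x_new, y_new, mu, eps1, eps2, inertia=0.95, rho=1e-6):
    x_new = np.atleast_2d(np.asarray(x_new, dtype=float))
    error = abs(y_new - (gram(x_new, Xb, mu) @ a)[0])
    if error < eps1:
        return Xb, yb, a, "kept"
    X_aug = np.vstack([Xb, x_new])  # I_{n+1} = {I_n, x^(n+1)}
    y_aug = np.append(yb, y_new)
    # Swapped-in test for (32): squared residual of the feature-space projection.
    k_b = gram(Xb, x_new, mu)[:, 0]
    coeffs = np.linalg.solve(gram(Xb, Xb, mu) + 1e-10 * np.eye(len(Xb)), k_b)
    residual = 1.0 - k_b @ coeffs  # k(x, x) = 1 for the Gaussian kernel
    if residual <= eps2:
        return Xb, yb, fit_params(X_aug, y_aug, Xb, mu, rho), "parameters updated"
    Xb_new, yb_new = reselect(X_aug, y_aug, mu, inertia)
    a_new = fit_params(X_aug, y_aug, Xb_new, mu, rho)
    return Xb_new, yb_new, a_new, "components updated"
```

Each call handles one new observation and returns the possibly updated retained set, its outputs, the coefficients and a status string, so the function can be applied in a loop over the incoming data.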

7 Simulations

The proposed method has been tested for modeling a Wiener Hammerstein benchmark and a chemical reactor.

7.1 Description of the Wiener-Hammerstein benchmark model

The system to be modelled is sketched in Fig. 1. It consists of an electronic nonlinear system with a Wiener-Hammerstein structure that was built by Gerd Vandersteen [21]. This process was adopted as a nonlinear system benchmark at SYSID 2009.

7.2 Results

To build the RKHS model, we use the RBF Kernel (Radial Basis Function)

We use a heuristic approach, sequential forward search, in which the inputs are selected one at a time, to choose the input vector that yields the minimal normalized mean square error (NMSE) between the real output and the estimated one. The selected vector is \( x(k) = \left\{ {u(k - 1),u(k - 2),u(k - 4), \ldots ,u(k - 15),y(k - 1)} \right\}^{T} \in {\mathbb R}^{15} \), obtained with a validation NMSE of 0.063.

The chosen thresholds are

We performed the online identification using the online RKPCA algorithm developed in Sect. 5. The total number of observations is 187,000.

The parameter estimate vector is

The number of the retained principal components is p ′ = 16. They form the columns of the following matrix.

In Fig. 2a–c, we present the system output and the model output during the online identification. We show the performance of our algorithm in the training sample windows [4800, 5800], \( [10^{5} , 1.1 \times 10^{5} ] \) and [183200, 184200]. The model output agrees with the system output: the normalized mean square error is equal to 0.087. This shows the good performance of the proposed online identification method.

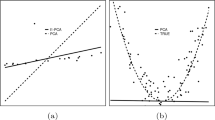

To evaluate the performance of the proposed method, we plot in Fig. 3 the evolution of the NMSE. The error decreases as the number of observations increases.
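For reference, an error curve of this kind can be computed as in the following Python sketch. The normalization behind the reported figures is not stated in the text, so the sketch assumes one common definition, the sum of squared errors divided by the sum of squared outputs; the names `nmse` and `running_nmse` are illustrative.

```python
import numpy as np


def nmse(y_true, y_pred):
    """Sum of squared errors over sum of squared outputs (assumed definition)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sum((y_true - y_pred) ** 2) / np.sum(y_true ** 2))


def running_nmse(y_true, y_pred):
    """NMSE over the first k samples, for k = 1, ..., len(y_true).

    Assumes the first output sample is nonzero, so that no division by zero occurs.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.cumsum((y_true - y_pred) ** 2) / np.cumsum(y_true ** 2)
```

Plotting `running_nmse` against the sample index gives a curve that shows how the accumulated error evolves as observations arrive.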

Table 1 summarizes the results of the online identification algorithm in terms of kernel parameter, NMSE and number of parameters.

7.3 Chemical reactor modeling

7.3.1 Process description

The process is a Continuous Stirred Tank Reactor (CSTR), a nonlinear system used to carry out chemical reactions [8]. A diagram of the reactor is given in Fig. 4.

The physical equations describing the process are

where h(t) is the height of the mixture in the reactor and \( w_{1} \) (resp. \( w_{2} \)) is the feed of reactant 1 (resp. reactant 2) with concentration \( C_{b1} \) (resp. \( C_{b2} \)). The feed of the reaction product is \( w_{0} \) and its concentration is \( C_{b} \). \( k_{1} \) and \( k_{2} \) are the reactant consumption rates. The temperature in the reactor is assumed constant and equal to the ambient temperature. We are interested in modeling the subsystem presented in Fig. 5.

For the purpose of the simulations, we used the CSTR model of the reactor provided with Simulink in Matlab. The parameter μ = 208 is determined by cross-validation in the offline phase.

The input vector of RKHS model is

The number of observations is 300.

In Fig. 6, we plot the output of the online reduced kernel principal component analysis model together with the system output. Both outputs agree, with a normalized mean square error equal to 0.083%.

In Fig. 7, we plot the evolution of the NMSE. The online identification algorithm reaches an error below 1% from the 20th training sample onward.

Table 2 summarizes the performance of the online identification algorithm in terms of kernel parameter, NMSE and number of parameters.

8 Conclusion

In this paper, we have proposed an online reduced kernel principal component analysis method for the identification of nonlinear system parameters. Through several experiments, we showed the accuracy and good scaling properties of the proposed method. The algorithm has been tested on the identification of a Wiener-Hammerstein benchmark model and of a chemical reactor, and the results were satisfactory. The proposed technique may be very helpful for designing adaptive control strategies for nonlinear systems.

References

1. Aissi I (2009) Modélisation, Identification et Commande Prédictive des systèmes non linéaires par utilisations des espaces RKHS, thèse de doctorat, ENIT Tunisie

2. Scholkopf B, Smola A, Muller K-R (1998) Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput 10:1299–1319

3. Scholkopf B, Smola A (2002) Learning with kernels. The MIT Press, Cambridge

4. Richard C, Bermudez JCM, Honeine P (2009) Online prediction of time series data with kernels. IEEE Trans Signal Process 57:1058–1067

5. Kuzmin D, Warmuth MK (2007) Online kernel PCA with entropic matrix updates. In: Proceedings of the 24th international conference on machine learning, Corvallis, OR

6. Mercer J (1909) Functions of positive and negative type and their connection with the theory of integral equations. Philos Trans R Soc 209:415–446

7. Suykens JAK, Van Gestel T, De Brabanter J, De Moor B, Vandewalle J (2002) Least squares support vector machines. World Scientific, Singapore

8. Demuth H, Beale M, Hagan M (2007) Neural network toolbox 5, User's guide, The MathWorks

9. Wahba G (2000) An introduction to model building with reproducing kernel Hilbert spaces. Technical report No 1020, Department of Statistics, University of Wisconsin-Madison

10. Aronszajn N (1950) Theory of reproducing kernels. Trans Am Math Soc 68(3):337–404

11. Cristianini N, Shawe-Taylor J (2000) An introduction to support vector machines. Cambridge University Press, Cambridge

12. Rosipal R, Trejo LJ (2001) Kernel partial least squares in reproducing kernel Hilbert spaces. J Mach Learn Res 2:97–123

13. Fernandez R (1999) Predicting time series with a local support vector regression machine. In: ACAI conference

14. Günter S, Schraudolph NN, Vishwanathan SVN (2007) Fast iterative kernel principal component analysis. J Mach Learn Res 8:1893–1918

15. Chin T-J, Schindler K, Suter D (2006) Incremental kernel SVD for face recognition with image sets. In: Proceedings of the 7th international conference on automatic face and gesture recognition FGR

16. Lauer F (2008) Machines à Vecteurs de Support et Identification de Systèmes Hybrides, thèse de doctorat de l'Université Henri Poincaré, Nancy 1, octobre 2008, Nancy, France

17. Taouali O, Aissi I, Villa N, Messaoud H (2009) Identification of nonlinear multivariable processes modelled on reproducing kernel Hilbert space: application to Tennessee process. In: ICONS, 2nd IFAC international conference on intelligent control systems and signal processing. Istanbul, pp 1–6

18. Vovk V (2008) Leading strategies in competitive on-line prediction. Theor Comput Sci 405:285–296

19. Vapnik VN (1995) The nature of statistical learning theory. Springer, New York

20. Vapnik VN (1998) Statistical learning theory. Wiley, New York

21. Vandersteen G (1997) Identification of linear and nonlinear systems in an errors-in-variables least squares and total least squares framework, PhD thesis, Vrije Universiteit Brussel

22. Vovk V (2006) On-line regression competitive with reproducing kernel Hilbert spaces. Technical report arXiv:cs.LG/0511058 (version 2), arXiv.org e-Print archive, Jan 2006

23. Veropoulos K, Cristianini N, Campbell C (1999) The application of support vector machines to medical decision support: a case study. In: ACAI conference

24. Ghate VN, Dudul SV (2009) Induction machine fault detection using support vector machine based classifier. WSEAS Trans Syst 8:591–603

25. Wang W, Men C, Lu W (2008) Online prediction model based on support vector machine. Neurocomputing 71:550–558

26. Yang MH (2002) Kernel eigenfaces vs. kernel Fisherfaces: face recognition using kernel methods. In: IEEE FGR, pp 215–220

Cite this article

Taouali, O., Elaissi, I. & Messaoud, H. Online identification of nonlinear system using reduced kernel principal component analysis. Neural Comput & Applic 21, 161–169 (2012). https://doi.org/10.1007/s00521-010-0461-x

DOI: https://doi.org/10.1007/s00521-010-0461-x