Abstract

This paper deals with the problem of global stability of stochastic reaction–diffusion recurrent neural networks with continuously distributed delays and Dirichlet boundary conditions. The influence of diffusion, noise and continuously distributed delays on the stability of the system is discussed. New stability conditions are derived by using the Lyapunov method, inequality techniques and stochastic analysis. Under these sufficient conditions, global exponential stability in the mean square holds regardless of the system delays. The proposed results extend those in the earlier literature and are easier to verify.


1 Introduction

The stability of recurrent neural networks has attracted considerable attention because of their potential applications in classification, parallel computing, associative memory, signal and image processing and, especially, in solving difficult optimization problems. In practice, significant time delays (constant delays, time-varying delays and, in particular, continuously distributed delays) are ubiquitous both in neural processing and in signal transmission. For example, in modeling biological neural networks it is necessary to take time delays into account because information is processed at a finite speed. Time delays may lead to bifurcation, oscillation, divergence or instability, which may be harmful to a system [1, 2]. The stability of neural networks with delays has been studied in [2–24] and the references therein.

In addition to delay effects, a neural system is usually affected by external perturbations. Synaptic transmission is a noisy process brought about by random fluctuations in the release of neurotransmitters and by other probabilistic causes [9]. Therefore, it is important to consider stochastic effects on the stability of delayed recurrent neural networks.

Moreover, in both biological and artificial neural networks, diffusion effects cannot be avoided when electrons move in asymmetric electromagnetic fields. Hence, it is essential to consider state variables that vary in both time and space. Neural networks with diffusion terms are commonly described by partial differential equations. The works [10–15] have considered the stability of neural networks with diffusion terms, but all of them under Neumann boundary conditions.

Neural network models with Dirichlet boundary conditions have been considered in [16, 21], but those works concentrated on deterministic systems and did not take random perturbations into consideration. To the best of our knowledge, few authors have considered global exponential stability in the mean square of stochastic reaction–diffusion recurrent neural networks with continuously distributed delays and Dirichlet boundary conditions. Motivated by this, we study the stability of stochastic reaction–diffusion neural networks with both continuously distributed delays and Dirichlet boundary conditions. We use a method similar to that of [21], but treat the more general case in which stochastic perturbations are present. The influence of diffusion and continuously distributed delays on the stability of the system is also discussed. New conditions ensuring global exponential stability in the mean square are derived by using the Lyapunov method, inequality techniques and stochastic analysis. These conditions show that stability is independent of the magnitude of the delays but depends on the magnitude of the noise and of the diffusion effects. Therefore, under these sufficient conditions, diffusion and noise play an important role in the stability of the system. The proposed results extend those in the earlier literature and are easier to verify.

This paper is organized as follows. In Sect. 2, we present the mathematical model of stochastic reaction–diffusion recurrent neural networks with continuously distributed delays and Dirichlet boundary conditions, together with some preliminaries. Our main results are given in Sect. 3. In Sect. 4, two examples are provided to illustrate the effectiveness of the results. Conclusions are drawn in Sect. 5.

2 Model description and preliminaries

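Consider the following stochastic reaction–diffusion delayed recurrent neural networks with Dirichlet boundary conditions:

$$ \begin{aligned} \hbox{d}u_i(t,x)&=\sum_{k=1}^m\frac{\partial}{\partial x_k}\left(D_{ik}\frac{\partial u_i}{\partial x_k}\right)\hbox{d}t+\left[-b_iu_i(t,x)+\sum_{j=1}^nc_{ij}f_j(u_j(t,x))\right.\\&\quad+\left.\sum_{j=1}^nd_{ij}\int\limits_{-\infty}^tK_{ij}(t-s)g_j(u_j(s,x))\hbox{d}s+J_i\right]\hbox{d}t+\sum_{j=1}^n\sigma_{ij}(u_j(t,x))\hbox{d}w_j(t),\quad x\in X,\\ u_i(t,x)&=0,\quad (t,x)\in[0,+\infty)\times\partial X,\\ u_i(s,x)&=\phi_i(s,x),\quad (s,x)\in(-\infty,0]\times X,\quad i=1,2,\ldots,n. \end{aligned} \tag{1} $$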

In the above model,

(i) \(n\geq 2\) is the number of neurons in the network; \(x=(x_1,x_2,\ldots,x_m)^T\in X\subset R^m\), where \(X=\{x=(x_1,x_2,\ldots,x_m)^T:|x_i|<l_i,\ i=1,2,\ldots,m\}\) is a bounded set with piecewise smooth boundary \(\partial X\) and \(\mathrm{mes}\,X>0\) in the space \(R^m\);

(ii) \(u(t,x)=(u_1(t,x),u_2(t,x),\ldots,u_n(t,x))^T\in R^n\), and \(u_i(t,x)\) is the state of the ith neuron at time t and in space x;

(iii) the smooth function \(D_{ik}>0\) represents the transmission diffusion operator along the ith unit; \(b_i>0\) represents the rate with which the ith unit resets its potential to the resting state in isolation, when disconnected from the network and external inputs; \(c_{ij}\) and \(d_{ij}\) denote the connection strengths of the jth unit on the ith unit without and with distributed delay, respectively; \(J_i\) is the constant external input to the ith unit;

(iv) \(f_j(u_j(t,x))\) and \(g_j(u_j(t,x))\) denote the activation functions of the jth unit at time t and in space x; \(\phi(t,x)=(\phi_1(t,x),\phi_2(t,x),\ldots,\phi_n(t,x))^T\), where each \(\phi_i(t,x)\) is a continuous function;

(v) \(w(t)=(w_1(t),\ldots,w_n(t))^T\) is an n-dimensional Brownian motion defined on a complete probability space \((\Omega,{\mathcal{F}},P)\) with the natural filtration \(\{{\mathcal{F}}_t\}_{t\geq 0}\) generated by \(\{w(s):0\leq s\leq t\}\);

(vi) the delay kernels \(K_{ij}:[0,+\infty)\to[0,+\infty)\) (i, j = 1, 2, …, n) are real-valued nonnegative continuous functions satisfying the following conditions:

(a) \(\int_0^{\infty}K_{ij}(s)\,\hbox{d}s=1\),

(b) \(\int_0^{\infty}sK_{ij}(s)\,\hbox{d}s<\infty\),

(c) there exists a positive μ such that \(\int_0^{\infty}s\,\hbox{e}^{\mu s}K_{ij}(s)\,\hbox{d}s<\infty\).
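Conditions (a)–(c) are easy to check numerically for a concrete kernel. The sketch below, which assumes only NumPy, approximates the three integrals with a composite Simpson rule on the truncated interval [0, 80] (the truncation point and grid size are arbitrary choices) for the two kernels that appear in the examples of Sect. 4, K(s) = s e^{−s} and K(s) = e^{−s}, using the illustrative value μ = 0.5. For these kernels the exact values are 1, 2, 2/(1 − μ)³ and 1, 1, 1/(1 − μ)², respectively, so condition (c) holds for every μ ∈ (0, 1).

```python
import numpy as np


def simpson(fun, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n must be even."""
    s = np.linspace(a, b, n + 1)
    y = fun(s)
    step = (b - a) / n
    return step / 3.0 * (y[0] + y[-1] + 4.0 * y[1:-1:2].sum() + 2.0 * y[2:-1:2].sum())


def kernel_conditions(K, mu, upper=80.0):
    """Approximate the integrals in conditions (a), (b) and (c) on [0, upper]."""
    cond_a = simpson(K, 0.0, upper)
    cond_b = simpson(lambda s: s * K(s), 0.0, upper)
    cond_c = simpson(lambda s: s * np.exp(mu * s) * K(s), 0.0, upper)
    return cond_a, cond_b, cond_c


def gamma_kernel(s):
    return s * np.exp(-s)  # K(s) = s e^{-s}, the kernel of Example 1


def exp_kernel(s):
    return np.exp(-s)  # K(s) = e^{-s}, the kernel of Example 2


print(kernel_conditions(gamma_kernel, mu=0.5))  # close to (1, 2, 16)
print(kernel_conditions(exp_kernel, mu=0.5))    # close to (1, 1, 4)
```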

Let \(C[(-\infty,0]\times X,R^n]\) denote the Banach space of continuous functions mapping \((-\infty,0]\times X\) into \(R^n\) with the norm \(\|\phi\|=\sup_{-\infty<\theta\leq 0}|\phi(\theta)|\), where \(|\cdot|\) is the Euclidean norm in \(R^n\), and let \(x_t=\{x(t+\theta):-\infty<\theta\leq 0\}\) for \(t\geq 0\). Denote by \(C=C^b_{{\mathcal{F}}_0}[(-\infty,0]\times X,R^n]\) the family of all bounded \({\mathcal{F}}_0\)-measurable random variables ϕ satisfying \(\|\phi\|_{L^p}^p=\sup_{-\infty<\theta\leq 0}E\|\phi(\theta)\|^p<\infty\), where E stands for the mathematical expectation with respect to the given probability measure P. For ϕ ∈ C, define the norm

Let \(L^2(X)\) be the space of square-integrable real-valued Lebesgue measurable functions on X. It is a Banach space for the \(L^2\)-norm

where \(u(t,x)=(u_1(t,x),u_2(t,x),\ldots,u_n(t,x))^T\in R^n\). Define

Throughout this paper, we make the following assumptions on system (1):

(A1) \(f_j\), \(g_j\) and \(\sigma_{ij}\) are Lipschitz continuous with Lipschitz constants \(F_j>0\), \(G_j>0\) and \(L_{ij}>0\), respectively, for i, j = 1, 2, …, n.

(A2) \(B-C^+F-D^+G\) is a nonsingular M-matrix, where \(F=\mathrm{diag}(F_1,\ldots,F_n)\), \(G=\mathrm{diag}(G_1,\ldots,G_n)\), \(B=\mathrm{diag}(b_1,\ldots,b_n)\), \(C^+=(|c_{ij}|)_{n\times n}\) and \(D^+=(|d_{ij}|)_{n\times n}\).
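Assumption (A2) can be checked mechanically. A Z-matrix (one whose off-diagonal entries are all nonpositive) is a nonsingular M-matrix if and only if all of its leading principal minors are positive; the sketch below uses that characterization with NumPy. The helper names and the two-neuron parameter values are hypothetical and serve only as an illustration.

```python
import numpy as np


def a2_matrix(b, C, D, F, G):
    """Build B - C^+ F - D^+ G from the data in (A1)-(A2)."""
    return np.diag(b) - np.abs(C) @ np.diag(F) - np.abs(D) @ np.diag(G)


def is_nonsingular_m_matrix(A, tol=1e-12):
    """Z-matrix test followed by positivity of all leading principal minors."""
    A = np.asarray(A, dtype=float)
    off_diagonal = A - np.diag(np.diag(A))
    if np.any(off_diagonal > tol):
        return False
    n = A.shape[0]
    return all(np.linalg.det(A[:k, :k]) > tol for k in range(1, n + 1))


# Hypothetical two-neuron data (not taken from the paper), F = G = I
C = np.array([[0.5, -0.4], [0.3, 0.2]])
D = np.array([[0.1, 0.2], [-0.3, 0.5]])
F = G = np.ones(2)
print(is_nonsingular_m_matrix(a2_matrix([3.0, 3.0], C, D, F, G)))  # True
print(is_nonsingular_m_matrix(a2_matrix([0.5, 0.5], C, D, F, G)))  # False
```

With b = (3, 3) the matrix is [[2.4, −0.6], [−0.6, 2.3]], whose leading minors 2.4 and 5.16 are positive; with b = (0.5, 0.5) the first diagonal entry is already negative.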

If there is no noise in system (1), it reduces to the following deterministic system:

$$ \begin{aligned} \hbox{d}u_i(t,x)&=\sum_{k=1}^m\frac{\partial}{\partial x_k}\left(D_{ik}\frac{\partial u_i}{\partial x_k}\right)\hbox{d}t+\left[-b_iu_i(t,x)+\sum_{j=1}^nc_{ij}f_j(u_j(t,x))\right.\\&\quad+\left.\sum_{j=1}^nd_{ij}\int\limits_{-\infty}^tK_{ij}(t-s)g_j(u_j(s,x))\hbox{d}s+J_i\right]\hbox{d}t, \quad x\in X. \end{aligned} \tag{2} $$

Let

$$ \begin{aligned}H_i(u_i)&=-b_iu_i+\sum_{j=1}^nc_{ij}f_j(u_j)\\&\quad+\sum_{j=1}^nd_{ij}\int\limits_{-\infty}^tK_{ij}(t-s)g_j(u_j)\hbox{d}s+J_i,\quad i=1,2,\ldots,n. \end{aligned} $$

Then the solutions of \(H_i(u_i)=0\) (i = 1, 2, …, n) are the equilibrium points of system (2).

Since, by condition (a), \(\int_{-\infty}^tK_{ij}(t-s)\,\hbox{d}s=\int_0^{\infty}K_{ij}(s)\,\hbox{d}s=1\), we have

$$ \begin{aligned}H_i(u_i)&=-b_iu_i+\sum_{j=1}^nc_{ij}f_j(u_j)+\sum_{j=1}^nd_{ij}g_j(u_j)\int\limits_{-\infty}^tK_{ij}(t-s)\hbox{d}s+J_i\\&=-b_iu_i+\sum_{j=1}^nc_{ij}f_j(u_j)+\sum_{j=1}^nd_{ij}g_j(u_j)+J_i,\qquad i=1,2,\ldots,n. \end{aligned} $$

It then follows from hypotheses (A1) and (A2) (see [24]) that system (2) has a unique equilibrium point \(u^*=(u^*_1,u^*_2,\ldots,u^*_n)^T\). Suppose further that

(A3) \(\sigma_{ij}(u^*_j)=0\) for i, j = 1, 2, …, n.

Then \(u^*=(u^*_1,\ldots,u^*_n)^T\) is also an equilibrium point of system (1) whenever (A1)–(A3) hold.
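Numerically, the equilibrium point \(u^*\) of system (2) solves \(b_iu_i=\sum_j c_{ij}f_j(u_j)+\sum_j d_{ij}g_j(u_j)+J_i\). One simple way to compute it, which is a standard alternative rather than the argument of [24], is the fixed-point iteration \(u\leftarrow B^{-1}(Cf(u)+Dg(u)+J)\). When (A2) holds, the nonnegative matrix \(B^{-1}(C^+F+D^+G)\) has spectral radius less than one, and since it bounds the iteration map componentwise, the iterates converge to the unique equilibrium. The parameters, the tanh activations and the input J below are hypothetical.

```python
import numpy as np


def equilibrium(b, C, D, J, f=np.tanh, g=np.tanh, tol=1e-12, max_iter=10_000):
    """Fixed-point iteration u <- B^{-1}(C f(u) + D g(u) + J) for system (2)."""
    b = np.asarray(b, dtype=float)
    u = np.zeros_like(b)
    for _ in range(max_iter):
        u_next = (C @ f(u) + D @ g(u) + J) / b
        if np.max(np.abs(u_next - u)) < tol:
            return u_next
        u = u_next
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical data satisfying (A2) with F = G = I (tanh is 1-Lipschitz)
b = np.array([3.0, 3.0])
C = np.array([[0.5, -0.4], [0.3, 0.2]])
D = np.array([[0.1, 0.2], [-0.3, 0.5]])
J = np.array([1.0, -0.5])
u_star = equilibrium(b, C, D, J)
residual = -b * u_star + C @ np.tanh(u_star) + D @ np.tanh(u_star) + J
print(u_star, np.max(np.abs(residual)))
```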

We end this section by introducing the definition of globally exponential stability in the mean square and a useful lemma.

Definition 1

For every \(\phi \in C=C^b_{{\mathcal{F}}_0}[(-\infty,0]\times X,R^n]\), the equilibrium solution of system (1) is said to be globally exponentially stable in the mean square if there exist scalars α > 0 and β > 0 such that

Lemma 1

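[16] Let X be a cube \(|x_i|<l_i\) (i = 1, 2, …, m), and let h(x) be a real-valued function belonging to \(C^1(X)\) that vanishes on the boundary ∂X of X, i.e., \(h(x)|_{\partial X}=0\). Then

$$ \int_X h^2(x)\,\hbox{d}x\leq l_i^2\int_X\left(\frac{\partial h(x)}{\partial x_i}\right)^2\hbox{d}x,\qquad i=1,2,\ldots,m. $$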
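Lemma 1 is a Poincaré-type (Wirtinger) inequality. Assuming its form is \(\int_X h^2\,\hbox{d}x\leq l_i^2\int_X(\partial h/\partial x_i)^2\,\hbox{d}x\), as in [16], the sketch below checks it numerically on the square \(|x_1|,|x_2|<1\) (so \(l_1=l_2=1\)) for two hypothetical test functions that vanish on the boundary. The grid size and the trapezoidal quadrature are arbitrary choices.

```python
import numpy as np


def trapezoid_2d(values, h):
    """Trapezoidal rule on a uniform square grid with spacing h."""
    w = np.ones(values.shape[0])
    w[0] = w[-1] = 0.5
    return h * h * (w @ values @ w)


def poincare_sides(h_fun, dh_dx1, l=1.0, N=401):
    """Return (int h^2, l^2 * int (dh/dx1)^2) over the square (-l, l)^2."""
    x = np.linspace(-l, l, N)
    step = x[1] - x[0]
    X1, X2 = np.meshgrid(x, x, indexing="ij")
    lhs = trapezoid_2d(h_fun(X1, X2) ** 2, step)
    rhs = l**2 * trapezoid_2d(dh_dx1(X1, X2) ** 2, step)
    return lhs, rhs


# h = (1 - x1^2)(1 - x2^2): exact sides are 256/225 and 128/45
poly = (lambda x1, x2: (1 - x1**2) * (1 - x2**2),
        lambda x1, x2: -2 * x1 * (1 - x2**2))
# First Dirichlet eigenfunction: exact sides are 1 and pi^2 / 4
eig = (lambda x1, x2: np.cos(np.pi * x1 / 2) * np.cos(np.pi * x2 / 2),
       lambda x1, x2: -np.pi / 2 * np.sin(np.pi * x1 / 2) * np.cos(np.pi * x2 / 2))
for h_fun, dh in (poly, eig):
    lhs, rhs = poincare_sides(h_fun, dh)
    print(f"{lhs:.4f} <= {rhs:.4f}: {lhs <= rhs}")
```

The eigenfunction gives the smallest ratio of the two sides, 4/π² ≈ 0.405, so the constant \(l_i^2\) in the lemma is not sharp; it is simply a convenient bound.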

3 Main results

In this section, we employ the Itô formula and martingale theory to derive a sufficient condition for the global exponential stability in the mean square of the stochastic reaction–diffusion recurrent neural networks (1) with continuously distributed delays and Dirichlet boundary conditions. The usual continuously distributed delayed RNNs without diffusion or stochastic perturbation are included as special cases of system (1).

Theorem 1

The stochastic reaction–diffusion delayed recurrent neural network (1) with Dirichlet boundary conditions is globally exponentially stable in the mean square if there exist constants \(r_i>0\), \(\beta_{ij}>0\) (i, j = 1, 2, …, n) such that

in which \(F_i\), \(G_i\) and \(L_{ij}\) (i, j = 1, 2, …, n) are the Lipschitz constants in (A1).

Proof

If condition (3) holds, we can always find a sufficiently small number γ > 0 such that, for i = 1, 2, …, n,

Let us consider the functions

From (4) and (A2), we have \(p_i(0)<-\gamma<0\), and \(p_i(y_i)\) is continuous for \(y_i\in[0,+\infty)\). In addition, \(p_i(y_i)\to+\infty\) as \(y_i\to+\infty\). Thus, by the intermediate value theorem, there exists a constant \(\epsilon_i\in(0,+\infty)\) such that

for i = 1, 2, …, n.

By choosing \(\epsilon=\min_{1\leq i\leq n}\{\epsilon_i\}>0\), we have

for i = 1, 2, …, n.
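The choice of ε above rests on the intermediate value theorem: each \(p_i\) is continuous, negative at 0 and tends to \(+\infty\), so it has a positive root \(\epsilon_i\). Because the actual \(p_i\) depend on the data of condition (3), the sketch below uses hypothetical stand-ins of the same qualitative shape, \(p_i(y)=y+k_i(\hbox{e}^{y}-1)-\gamma_i\), locates each root by bisection and takes \(\epsilon=\min_i\epsilon_i\). For these increasing stand-ins, \(p_i(\epsilon)\leq 0\) then holds for every i.

```python
import math


def bisect_root(p, lo=0.0, hi=1.0, tol=1e-12):
    """Root of a continuous p with p(lo) < 0 and p -> +inf, by bisection."""
    while p(hi) <= 0.0:  # expand the bracket until the sign changes
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def make_p(k, gamma):
    # Hypothetical stand-in for p_i: continuous, p(0) = -gamma < 0, p -> +inf
    return lambda y: y + k * (math.exp(y) - 1.0) - gamma


ps = [make_p(0.3, 0.5), make_p(0.8, 0.2)]
eps_i = [bisect_root(p) for p in ps]
eps = min(eps_i)
print(eps_i, eps, [p(eps) <= 1e-9 for p in ps])
```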

Let \(u^*=(u^*_1,\ldots,u^*_n)^T\) be an equilibrium point of system (1) and let \(z_i=u_i-u^*_i\). Then Eq. (1) is equivalent to

where

For system (6), we construct the following Lyapunov functional:

with

and

Applying the Itô formula to \(V_1(t)\) and \(V_2(t)\), respectively, we obtain

and

Since

substituting (9) into (7) and combining with (8), we have

From the Dirichlet boundary conditions and Lemma 1, we have

Since \(\int_0^t\int_X 2\hbox{e}^{\epsilon s}\sum_{i=1}^nr_iz_i(s,x)\sum_{j=1}^n\sigma_{ij}(u_j(s,x))\hbox{d}x\hbox{d}w_j(s)\) is a martingale [20], we have

Therefore, taking expectations on both sides of (10), we obtain

It follows from (5) that

Since

and

combining (12) and (13) with (11), we have

where

is a constant. This completes the proof.
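Taking expectations in the proof removes the stochastic integral because, for an adapted square-integrable integrand, \(\int_0^t\hbox{e}^{\epsilon s}Y(s)\,\hbox{d}w(s)\) is a martingale starting at zero and therefore has zero mean. The Monte Carlo sketch below illustrates this for the hypothetical scalar integrand Y(s) = w(s), approximating the integral by left-point (Itô) sums; ε, the horizon, the step count and the sample size are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
eps, T, steps, paths = 0.5, 1.0, 500, 4000
dt = T / steps
s = np.arange(steps) * dt                              # left endpoints s_k
dW = rng.normal(0.0, np.sqrt(dt), size=(paths, steps))
W_left = np.cumsum(dW, axis=1) - dW                    # w(s_k), with w(0) = 0
# Ito sums: the integrand is evaluated at the left endpoint of each step
ito_integral = np.sum(np.exp(eps * s) * W_left * dW, axis=1)
mean = ito_integral.mean()
std_err = ito_integral.std(ddof=1) / np.sqrt(paths)
print(f"sample mean {mean:.4f} (standard error {std_err:.4f})")
```

Evaluating the integrand at the left endpoint is what makes each increment independent of the integrand; a midpoint rule would converge to the Stratonovich integral instead, whose mean is not zero here.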

In Theorem 1, if we take \(D_{ik}=0\) (i = 1, …, n, k = 1, …, m), system (1) reduces to the following stochastic recurrent neural network with continuously distributed delays:

Corollary 1

Under assumptions (A1)–(A3), if there exist constants \(r_i>0\), \(\beta_{ij}>0\) (i, j = 1, 2, …, n) such that

Then for all \(\phi \in C=C^b_{{{\mathcal{F}}}_0}[(-\infty,0],R^n],\) the equilibrium solution of system (14) is globally exponentially stable in the mean square.

For system (1), when \(\sigma_{ij}=0\) (i, j = 1, 2, …, n), it reduces to the deterministic recurrent neural network (2). Hence we have the following corollary.

Corollary 2

Under assumptions (A1)–(A2), if there exist constants \(r_i>0\), \(\beta_{ij}>0\) (i, j = 1, 2, …, n) such that

Then for all \(\phi \in C=C^b_{{{\mathcal{F}}}_0}[(-\infty,0],R^n],\) the equilibrium point of system (2) is globally exponentially stable.

Proof

If there is no stochastic perturbation, the solution of system (2) is deterministic. By the Cauchy–Schwarz inequality and Theorem 1, we have \(\|z(t)\|=E\|z(t)\|\leq(E\|z(t)\|^2)^{1/2}\leq\alpha^{1/2}(E\|\phi\|^2)^{1/2}\hbox{e}^{-\frac{\epsilon}{2}t}\) for all t ≥ 0.

Thus system (2) is globally exponentially stable, which recovers the result of [21].

Remark 1

Theorem 1 extends the result of [21] to systems with stochastic perturbations.

Remark 2

Since \((E|x(t)|^{\hat{p}})^{1/\hat{p}}\leq (E|x(t)|^p)^{1/p}\) for \(0<\hat{p}<p\), pth moment exponential stability implies \(\hat{p}\)th moment exponential stability (see [19]). Therefore, for system (1), global exponential stability in the mean square (p = 2) implies mean value exponential stability (\(\hat{p}=1\)) of the equilibrium solution.
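The moment inequality in Remark 2 (Lyapunov's inequality) holds for every probability distribution, including the empirical distribution of a sample, so it can be checked directly on simulated data. The sketch below uses a hypothetical heavy-tailed sample (Student t with 5 degrees of freedom) and compares the \(\hat{p}\)th and pth moment norms, including the case \(\hat{p}=1\), p = 2 used to pass from mean square to mean value stability.

```python
import numpy as np


def moment_norm(samples, p):
    """(E|X|^p)^(1/p) for the empirical distribution of the samples."""
    return np.mean(np.abs(samples) ** p) ** (1.0 / p)


rng = np.random.default_rng(2)
x = rng.standard_t(df=5, size=100_000)  # hypothetical sample of x(t)
for p_hat, p in [(1.0, 2.0), (0.5, 1.0), (2.0, 3.0)]:
    lower, upper = moment_norm(x, p_hat), moment_norm(x, p)
    print(p_hat, p, lower <= upper)
```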

Remark 3

Global exponential stability is the term used for deterministic systems. To study the effect of stochastic forces on the stability of the continuously distributed delayed RNNs (1), one usually considers almost sure exponential stability, mean square exponential stability and mean value exponential stability of the equilibrium solution. In general, pth moment exponential stability and almost sure stability do not imply each other, and additional conditions are required to deduce one from the other (see [19]).

Remark 4

In [18], the activation functions are assumed to be bounded. In Corollary 1, by contrast, the stability conditions are obtained without assuming boundedness, monotonicity or differentiability of the activation functions, or symmetry of the synaptic interconnection weights. Hence the proposed results are easier to verify.

4 An example

In this section, we present two numerical examples to demonstrate the effectiveness of the proposed results.

Example 1

Consider the following stochastic reaction–diffusion delayed recurrent neural networks:

where \(X=\{x:|x_i|<1,\ i=1,2\}\) and \(D_{11}=0.5\), \(D_{12}=0.5\), \(D_{21}=0.3\), \(D_{22}=0.7\), \(b_1=0.5\), \(b_2=0.4\), \(c_{11}=0.5\), \(c_{12}=0.4\), \(c_{21}=0.3\), \(c_{22}=0.2\), \(d_{11}=0.1\), \(d_{12}=0.2\), \(d_{21}=0.3\), \(d_{22}=0.5\), \(L_{11}=L_{12}=L_{21}=L_{22}=0.4\); \(J=(J_1,J_2)^T\) is the constant input vector; \(K_{ij}(t)=t\hbox{e}^{-t}\) (i, j = 1, 2); and \(g_j(x)=\arctan(x)\), \(f_j(x)=0.5(|x+1|-|x-1|)\) (j = 1, 2). Clearly, \(f_j(\cdot)\) and \(g_j(\cdot)\) satisfy the Lipschitz condition with \(F_j=G_j=1\). A simple calculation gives

Choosing \(r_1=r_2=1\) and \(\beta_{11}=\beta_{12}=\beta_{21}=\beta_{22}=1\), we have

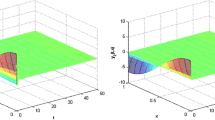

Hence, it follows from Theorem 1 that system (16) is globally exponentially stable in the mean square. Figure 1 gives the numerical results of Example 1.
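The simulation behind Figure 1 cannot be reproduced from the text alone, because the input vector J and the noise intensities \(\sigma_{ij}\) are not specified. The sketch below is a hypothetical reconstruction under stated assumptions: J = 0 (so that u* = 0), \(\sigma_{ij}(u_j)=0.4u_j\) (Lipschitz constant \(L_{ij}=0.4\), and (A3) holds), a constant-in-time initial history that vanishes on ∂X, a centred finite-difference Laplacian on a uniform grid and Euler–Maruyama time stepping. The distributed delay with kernel \(K(t)=t\hbox{e}^{-t}\) is handled with the linear chain trick: if a' = −a + g(u) and y' = −y + a, then \(y(t)=\int_{-\infty}^tK(t-s)g(u(s))\,\hbox{d}s\). The function returns a Monte Carlo estimate of \(E\|u(t)\|_2^2\) at every time step.

```python
import numpy as np


def simulate_example1(T=10.0, dt=0.0025, N=21, paths=8, seed=0):
    """Euler-Maruyama sketch of Example 1 on X = (-1, 1)^2 with u = 0 on the boundary.

    Hypothetical choices: J = 0, sigma_ij(u_j) = 0.4 * u_j, constant initial history.
    """
    rng = np.random.default_rng(seed)
    Dif = np.array([[0.5, 0.5], [0.3, 0.7]])    # D_ik
    b = np.array([0.5, 0.4])
    C = np.array([[0.5, 0.4], [0.3, 0.2]])      # c_ij
    Dd = np.array([[0.1, 0.2], [0.3, 0.5]])     # d_ij
    L = 0.4                                     # sigma_ij(u) = L * u (assumed)

    def f(v):
        return 0.5 * (np.abs(v + 1.0) - np.abs(v - 1.0))

    g = np.arctan
    x = np.linspace(-1.0, 1.0, N)
    h = x[1] - x[0]
    X1, X2 = np.meshgrid(x, x, indexing="ij")
    shape = np.cos(np.pi * X1 / 2) * np.cos(np.pi * X2 / 2)
    u = np.empty((paths, 2, N, N))
    u[:, 0], u[:, 1] = 1.0 * shape, -0.8 * shape

    def apply_dirichlet(v):
        v[..., 0, :] = v[..., -1, :] = 0.0
        v[..., :, 0] = v[..., :, -1] = 0.0

    apply_dirichlet(u)
    # Constant history u(s) = u(0) for s <= 0 gives a(0) = y(0) = g(u(0))
    a, y = g(u), g(u)

    def diffusion(v):
        # sum_k D_ik d^2 v_i / dx_k^2 on interior points (boundary values stay 0)
        out = np.zeros_like(v)
        c = v[..., 1:-1, 1:-1]
        d11 = (v[..., 2:, 1:-1] - 2 * c + v[..., :-2, 1:-1]) / h**2
        d22 = (v[..., 1:-1, 2:] - 2 * c + v[..., 1:-1, :-2]) / h**2
        out[..., 1:-1, 1:-1] = Dif[:, 0, None, None] * d11 + Dif[:, 1, None, None] * d22
        return out

    def mean_square(v):
        # Monte Carlo estimate of E ||u(t)||_2^2 using a Riemann sum over the grid
        return np.mean(np.sum(v**2, axis=(1, 2, 3)) * h * h)

    history = [mean_square(u)]
    for _ in range(int(round(T / dt))):
        dW = rng.normal(0.0, np.sqrt(dt), size=(paths, 2))  # increments of w_1, w_2
        drift = (diffusion(u) - b[:, None, None] * u
                 + np.einsum("ij,pjxy->pixy", C, f(u))
                 + np.einsum("ij,pjxy->pixy", Dd, y))
        # sum_j sigma_ij(u_j) dw_j is the same for i = 1, 2 since sigma_ij = L * u_j
        noise = np.einsum("pj,pjxy->pxy", dW, L * u)[:, None]
        a, y = a + dt * (g(u) - a), y + dt * (a - y)
        u = u + dt * drift + noise
        apply_dirichlet(u)
        history.append(mean_square(u))
    return np.array(history)


ms = simulate_example1()
print(ms[0], ms[-1])
```

The time step is chosen inside the explicit stability limit of the diffusion part (roughly dt ≤ h²/(2(D_i1 + D_i2)) on this grid); a smaller grid spacing requires a proportionally smaller dt.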

Example 2

Consider a stochastic reaction–diffusion recurrent neural network with continuously distributed delays:

where \(\tanh(x)=\frac{\hbox{e}^x-\hbox{e}^{-x}}{\hbox{e}^x+\hbox{e}^{-x}}\), \(K(t)=\hbox{e}^{-t}\) and \(X=\{x:|x_i|<1,\ i=1,2\}\).

It is obvious that \(F_j=G_j=1\) (j = 1, 2) and

which is a nonsingular M-matrix. Choosing \(r_1=r_2=1\) and \(\beta_{11}=\beta_{12}=\beta_{21}=\beta_{22}=1\), we have

and

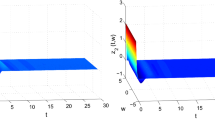

It follows from Theorem 1 that system (17) is globally exponentially stable in the mean square.

5 Conclusions

As pointed out in Sect. 1, stochastic perturbations and diffusion do exist in neural networks, owing to random fluctuations and the asymmetry of the field. Thus it is necessary and rewarding to study stochastic effects on the stability of reaction–diffusion neural networks. In this paper, new conditions ensuring global exponential stability in the mean square of the considered system are derived by using the Lyapunov method, inequality techniques and stochastic analysis. These results show that the stability conditions for system (1) are independent of the magnitude of the delays but depend on the magnitude of the noise and of the diffusion effects. Therefore, under these conditions, global exponential stability in the mean square holds regardless of the system delays. The proposed results extend those in the earlier literature and are easier to verify. Our methods are also applicable to more general stochastic reaction–diffusion neural networks with time delays.

References

[1] Grossberg S (1988) Nonlinear neural networks: principles, mechanisms and architectures. Neural Netw 1:17–61

[2] Liao X, Wong K, Leung CS, Wu Z (2001) Hopf bifurcation and chaos in a single delayed neuron equation with non-monotonic activation function. Chaos Solitons Fractals 12:1535–1547

[3] Arik S (2002) An analysis of global asymptotic stability of delayed cellular neural networks. IEEE Trans Neural Netw 13:1239–1242

[4] Cao J (2001) Global stability conditions for delayed CNNs. IEEE Trans Circuits Syst I 48:1330–1333

[5] Chen T, Amari S (2001) Stability of asymmetric Hopfield networks. IEEE Trans Neural Netw 12:159–163

[6] Chen W, Guan Z, Lu X (2004) Delay-dependent exponential stability of neural networks with variable delays. Phys Lett A 326:355–363

[7] Cohen MA, Grossberg S (1983) Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Syst Man Cybern 13:815–826

[8] Song Q, Cao J, Zhao Z (2006) Periodic solutions and its exponential stability of reaction–diffusion recurrent neural networks with continuously distributed delays. Nonlinear Anal Real World Appl 7:65–80

[9] Haykin S (1994) Neural networks. Prentice-Hall, Englewood Cliffs

[10] Song Q, Cao J (2005) Global exponential stability and existence of periodic solutions in BAM networks with delays and reaction–diffusion terms. Chaos Solitons Fractals 23:421–430

[11] Zhao Y, Wang G (2005) Existence of periodic oscillatory solution of reaction–diffusion neural networks with delays. Phys Lett A 343:372–383

[12] Liang J, Cao J (2003) Global exponential stability of reaction–diffusion recurrent neural networks with time-varying delays. Phys Lett A 314:434–442

[13] Wang L, Gao Y (2006) Global exponential robust stability of reaction–diffusion interval neural networks with time-varying delays. Phys Lett A 350:342–348

[14] Wang L, Xu D (2003) Asymptotic behavior of a class of reaction–diffusion equations with delays. J Math Anal Appl 281:439–453

[15] Zhao Z, Song Q, Zhang J (2006) Exponential periodicity and stability of neural networks with reaction–diffusion terms and both variable and unbounded delays. Comput Math Appl 51:475–486

[16] Lu J (2008) Global exponential stability and periodicity of reaction–diffusion delayed recurrent neural networks with Dirichlet boundary conditions. Chaos Solitons Fractals 35:116–125

[17] Arnold L (1972) Stochastic differential equations: theory and applications. Wiley, New York

[18] Hu J, Zhong S, Liang L (2006) Exponential stability of stochastic delayed cellular neural networks. Chaos Solitons Fractals 27:1006–1010

[19] Mao X (1997) Stochastic differential equations and applications. Ellis Horwood, Chichester

[20] Karatzas I, Shreve SE (1991) Brownian motion and stochastic calculus, 2nd edn. Springer, Berlin

[21] Lu J, Lu L (2007) Global exponential stability and periodicity of reaction–diffusion recurrent neural networks with distributed delays and Dirichlet boundary conditions. Chaos Solitons Fractals. doi:10.1016/j.chaos.2007.06.040

[22] Yang H, Chu T (2007) LMI conditions for the stability of neural networks with distributed delays. Chaos Solitons Fractals 34:557–563

[23] Song Q, Cao J (2006) Stability analysis of Cohen-Grossberg neural networks with both time-varying and continuously distributed delays. J Comput Appl Math 197:188–203

[24] Zhang J (2003) Global exponential stability of neural networks with variable delays. IEEE Trans Circuits Syst I 50:288–290

Acknowledgements

The authors are grateful to the referees for their careful reading and very constructive comments on the original manuscript.


Additional information

Research supported by National Science Foundation of China (No. 10371133).


Cite this article

Liu, Z., Peng, J. Delay-independent stability of stochastic reaction–diffusion neural networks with Dirichlet boundary conditions. Neural Comput & Applic 19, 151–158 (2010). https://doi.org/10.1007/s00521-009-0268-9
