Abstract

This paper introduces a new meta-heuristic technique, named geometric mean optimizer (GMO) that emulates the unique properties of the geometric mean operator in mathematics. This operator can simultaneously evaluate the fitness and diversity of the search agents in the search space. In GMO, the geometric mean of the scaled objective values of a certain agent’s opposites is assigned to that agent as its weight representing its overall eligibility to guide the other agents in the search process when solving an optimization problem. Furthermore, the GMO has no parameter to tune, contributing its results to be highly reliable. The competence of the GMO in solving optimization problems is verified via implementation on 52 standard benchmark test problems including 23 classical test functions, 29 CEC2017 test functions as well as nine constrained engineering problems. The results presented by the GMO are then compared with those offered by several newly proposed and popular meta-heuristic algorithms. The results demonstrate that the GMO significantly outperforms its competitors on a vast range of the problems. Source codes of GMO are publicly available at https://github.com/farshad-rezaei1/GMO.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Optimization techniques in the field of evolutionary computation can be categorized into two classes: individual-based versus population-based techniques. Individual-based techniques are set to begin the process of optimization by a single search agent usually generated randomly in a search space attempting to find the global optimum of the problem. This category of algorithms benefits from some advantages and suffers from some disadvantages. The main shortcoming is premature convergence emerging in solving problems with unimodal and multi-modal search landscapes. In unimodal problems, premature convergence usually occurs when the algorithm converges to the global optimum very slowly, while in multi-modal optimization problems, the local optima entrapment is known as the main reason for premature convergence (Mirjalili 2015). Indeed, individual-based algorithms begin the optimization from an initial random point in the search space and usually can only search for the optimum solution in the proximity of that initial random point, without being able of escaping from that region to search other promising regions in the search space. On the contrary, population-based techniques start the optimization process by generating a set of initial random solutions and improving their quality by lapse of iterations. This category of the techniques is of less probability to be stuck in the local optima in multi-modal problems and also conducts the search process more rapidly than the first category when solving the unimodal problems. However, more computational cost as a result of evaluating much more objective functions are two substantial disadvantages this category suffers from as compared to the individual-based problems. Some of the popular individual-based algorithms include single candidate optimizer (Shami et al. 2022).

Moreover, the optimization approaches can also be laid in two different categories: stochastic versus deterministic approaches. Stochastic approaches do not need the derivation of the mathematical functions, as these algorithms frequently change the variables and evaluate the resulting objective functions to reach the best-fitted variables at the framework of a solution. Stochastic algorithms have also lower chances to fall in local optima than the deterministic algorithms (Paper 2015).

Evolutionary algorithms mainly tend to be stochastic and population-based, so they are considered to be the best options to solve any optimization problem with any degree of complexity in variable domain and in the objective function. Some of the most popular and widely used evolutionary algorithms are genetic algorithm (GA) (Goldberg and Holland 1988), and differential evolution (DE) (Storn and Price 1997). It is worth mentioning that the no free lunch (NFL) theorem (Wolpert and Macready 1997) let new evolutionary algorithms be created, since according to this theory, the performances of all algorithms are the same when implemented on several optimization problems. Thus, one algorithm may be highly effective when solving some optional series of problems but is absolutely ineffective on some other series. In the past decade, the researchers have proposed numerous new evolutionary algorithms as required by the NFL theorem. These algorithms can be broken down into four main categories:

-

(1)

Swarm-based algorithms: cuckoo search (CS) (Gandomi et al. 2013), bat algorithm (BA) (Yang 2010), grey wolf optimizer (GWO) (Mirjalili et al. 2014), moth-flame optimization (MFO) [1], Harris Hawks optimizer (HHO) (Heidari et al. 2019), whale optimization algorithm (WOA) (Mirjalili and Lewis 2016), gradient-based optimizer (GBO) (Ahmadianfar et al. 2020), and Aquila optimizer (AO) (Abualigah et al. 2021c).

-

(2)

Physics-based algorithms: gravitational search algorithm (GSA) (Rashedi et al. 2009), galaxy-based search algorithm (GbSA) (Hosseini 2011), black hole (BH) algorithm (Hatamlou 2013), colliding bodies optimization (CBO) (Kaveh and Mahdavi 2014), equilibrium optimizer (EO) (Faramarzi et al. 2020), and flow direction algorithm (FDA) (Karami et al. 2021).

-

(3)

Human-based algorithms: group search optimizer (GSO) (He et al. 2009), interior search algorithm (ISA) (Gandomi 2014), nomadic people optimizer (NPO) (Salih and Alsewari 2020), and teaching–learning-based optimization (TLBO)(Rao et al. 2011).

-

(4)

Evolutionary techniques: gradient evolution (GE) (Kuo and Zulvia 2015), biogeography-based optimization (BBO) (Simon and Member 2008), and space transformation search (Wang et al. 2009).

Every optimization algorithm must accomplish two missions to solve an optimization problem: exploration and exploitation. At the exploration phase, the positions of the search agents are frequently varied while kept diversified to be able of searching as broadly as possible. When transiting from the exploration to the exploitation stage, the search agents must gradually avoid further moving in the space and intensify locally searching their possibly high-fitness neighborhood. Every evolutionary algorithm must be able to hold a balance between these two key phases of any optimization process to make this process be properly and accurately done without leaving any chance for the premature convergence to occur (El et al. 2017).

As it is known, in a variety of the other meta-heuristics, there are two separate mechanisms to do exploration and exploitation, and thus, these two phases may be inconsistent, as a solution may have high fitness but low diversity or is of high diversity but low fitness. In this case, deciding on which particle should be selected as a guide may be very difficult and even if be done, this selection may disrupt the exploration–exploitation balance at numerous iterations of the algorithm. So, there is a need to design an index that is able to simultaneously evaluate fitness and diversity of the solutions as two major factors to be considered during the optimization process. As is clear, the high fitness is a major player to choose the guide of the search agents in the exploitation phase of the optimization process, and the diversity is the major factor helping designate the guide of the search agents in the exploration step. The integration of the abilities to evaluate these two major factors can facilitate the process of selection of the guides such that the best possible guides can be selected by the optimizer. The importance of this issue shows itself better when knowing that it is impossible to delineate a certain boundary between the exploration process and the exploitation step over the course of iterations of an optimization process. Accordingly, the major motivation of the authors of this paper is to introduce a new optimization algorithm while benefitting from an index evaluating fitness and diversity at the same time.

As mentioned above, this paper proposes a novel algorithm, called geometric mean optimizer (GMO). The geometric mean operator in mathematics is found so effective to enhance the abilities which an optimization algorithm should have. Utilizing this operator, the GMO algorithm can deal with both diversity of the search agents and the fitness of them at the same time by evaluating a single simple index, named dual-fitness index (DFI). This index is calculated for all agents in the population and used to define a local guide for each search agent during its search process. The introduction of the DFI in the proposed GMO can make knowing the phase of the optimization (exploration or exploitation) unnecessary and neutral during the optimization process that is being on its right way to be accomplished despite ambiguity in the boundary between the exploration and exploitation phases of an optimization process. Designing the DFI index in GMO is the major novelty of this algorithm against any other meta-heuristic. Among the other advantages of the GMO, there are multiple unique guides designated for the search agents at the proper distances from them in the GMO to firstly help them avoid getting trapped in local optima, and secondly not engaged in a drift causing to lose a large number of good solutions when fast moving toward their guides. Moreover, a Gaussian mutation is applied on the guides in the GMO to further give stochastic nature to the search process of this algorithm as a way to better explore the search space of any optimization problem. At last but not least, the GMO has no parameter to tune, contributing its results to be highly reliable.

The organization of the rest of this paper can be summarized as follows: Sect. 2 is dedicated to present the theory of the GMO, its unique properties helping the GMO to effectively solve the optimization problems, and the steps to implement GMO in detail. In Sect. 3, the results of testing the performance of the proposed algorithm and its rivals on numerous benchmark test functions are introduced. In this section, a statistical analysis is also presented to benchmark how significant the results the proposed algorithm presents are when become superior to its competitors. A qualitative analysis is also conducted in this section to further investigate the convergence behavior of the GMO. In Sect. 4, the results of solving several engineering problems by the GMO are compared to those offered by a large number of other optimizers. Section 5 highlights the strengths and weaknesses of the proposed GMO algorithm. Finally, in Sect. 6, the major conclusions are introduced.

2 Methodology

2.1 Geometric mean optimizer (GMO)

GMO is a meta-heuristic technique, utilizing the behavior of several search agents when socially interacting with each other. The way these agents should interact to yield the best result must be delineated in any optimization algorithm. Here, the unique mathematical properties of the GMO algorithm when handling optimization problems are first explained and then the detailed formulation by help of which this algorithm solves the problems is presented. Assume \({X}_{i}=({x}_{i1}, {x}_{i2}, \dots , {x}_{iD})\) and \({V}_{i}=\left({v}_{i1}, {v}_{i2}, \dots , {v}_{iD}\right)\) are the ith agent’s position and velocity, respectively. According to the requirements mentioned in Sect. 1 for solving the optimization problems, the GMO can simultaneously evaluate the fitness and diversity of the search agents in the search space by a single index, named the dual-fitness index (DFI). The way to calculate the DFI is presented in the remainder of this section in detail. Referring to the fuzzy logic concepts, first introduced by Zadeh (Zadeh 1965), the similarity among several variables can be expressed by multiplying the fuzzy membership function (MF) values attributed to those variables. This multiplication is known as the product-based Larsen implication function, vastly applied in the fuzzy systems. In contrast, the geometric average of n membership degrees (MD) can be expressed by \(\sqrt[n]{{\mu }_{1}\times {\mu }_{2}\times \dots \times {\mu }_{n}}\). As a result, the multiplication of several MDs, i.e., \(({\mu }_{1}\times {\mu }_{2}\times \dots \times {\mu }_{n})\), can be treated as the geometric average of them, while not having the nth root, in which \({\mu }_{i}\) is the ith MD and i = 1, 2, …, n. The experimental results that are presented in Sect. 3 of this paper revealed that the nth root should be neglected in the geometric average operator employed in the GMO. Therefore, this form of the geometric average named the pseudo-geometric average and calculated over the MFs of several variables, can reflect the average and similarity, simultaneously, among them.

According to some mathematical underpinnings mentioned above, the structure of GMO is to be explained here. In GMO, the comprehensive fitness of a search agent can be calculated with regard to its opposites’ finesses. Here, the “opposite” agents of a certain agent are referred to as the set of individuals forming the whole population of agents/elite agents excepting the certain agent itself. At each iteration, the personal best-so-far agent position (the position identified to be the best position that agent has experienced till that iteration) is selected. Then, for all opposite personal best-so-far agents of a certain agent, the multiplication of the objective values, transformed into the fuzzy MFs, is calculated. It is noteworthy that in the implementation of GMO, the fuzzy membership functions must be directly proportional to the fuzzified variables and thus must be ascending in the domain. In a minimization process, the larger the pseudo-geometric average of the MFs obtained for the opposite agents of a focused agent, the more fitted that agent will be. This is because in this case, two tasks are simultaneously fulfilled for the focused agent:

-

(1)

The opposite agents’ MF values are averagely larger, revealing that the focused agent is of relatively less MF and thus the less objective value.

-

(2)

The similarity among the opposite agents’ MFs/objectives is at a higher level, meaning that these agents are gathered in a closed region and thus, the diversity of them is at a lower level. Consequently, the focused agent is located in a relatively more diversified region in the search space.

Both these highlight the capability of the focused agent to have a more suitable situation in terms of both fitness and diversity together in a minimization process, as compared to another agent having less pseudo-geometric average on its opposite agents. To define the MFs, we have used the following formula.

In Eq. (1), a and c stand for the parameters of the sigmoidal MF. Indeed, this function assigns an MD to “high x values” set to the variable x. Since the parameters a and c of Eq. (1) are unknown in each fuzzification effort, there is a need to tune these parameters via a trial-and-error technique. The trial and error procedure has been demonstrated to be an inexact and time-consuming process for parameter tuning in fuzzy systems. Hence, it is better to determine these parameters via other efficient methods. One of these methods can be making the fuzzy membership functions’ parameters such as a and c in Eq. (1) dependent on the statistical parameters. Considering this idea, Rezaei et al. (Rezaei et al. 2017) proved that in an ascending sigmoidal MF, \(c=\mu \) and \(a=\frac{-4}{\sigma \sqrt{e}}\); where \(\mu \) and \(\sigma \) represent the mean and standard deviation of the x values, respectively. Also, e is Napier’s constant. Substituting x by the objective function value calculated for a personal best-so-far search agent, the fuzzy MF value of this agent can be calculated according to Eq. (2).

where \(Z_{best,j}^{t}\) is the objective function value of the jth personal best-so-far agent at the tth iteration; \({\mu }^{t}\) and \({\sigma }^{t}\) are the mean and standard deviation of the objective function values of all personal best-so-far agents at the tth iteration, respectively; \({\mathrm{MF}}_{j}^{t}\) is the MF value of the jth personal best-so-far agent, and \(N\) is the population size. Then, a novel index, we name the dual-fitness index (DFI), can be calculated for a search agent as follows:

where \(N\) is the population size and \({\text{DFI}}_{i}^{t}\) is the dual-fitness index of the ith agent at the tth iteration. For making all personal best-so-far agents contribute to generating a unique global guide agent for each agent, a weighted average of all opposite personal best-so-far agents are defined whose weights are the DFI values that are assigned to them. Equation (4) indicates the mentioned relation:

where \(Y_{i}^{t}\) is the position vector of the unique global guide agent calculated for the agent i at iteration t, \({X}_{j}^{\mathrm{best}}\) stands for the personal best-so-far position vector of the jth search agent, and \({DFI}_{j}^{t}\) is the dual-fitness index of the jth search agent at the tth iteration. Moreover, ε is a very small positive number added to the denominator of Eq. (4) to avoid singularity. It is noteworthy that the ε is necessary to be added here only when solving the simple problems especially the problems with the optimum at the center of their domains. However, adding the ε is unnecessary when solving the more complicated problems, especially the problems with the optimum at a position away from the center of the domain. If there is no prior information about the problem decided to be solved, it is recommended not to add the ε to the denominator of Eq. (4) and consequently Eq. (5). For making the algorithm search process more effective as well as alleviating the computational burden of performing the algorithm, it is recommended to involve only the elite personal best-so-far agents in the calculation of each guide agent. For this purpose, all personal best-so-far agents are sorted from the highest DFI to the lowest DFI and the first Nbest personal best-so-far agents are selected as the elite agents. As a simple way to set the number of Nbest, it can be linearly decreased over the course of iterations, such that it is equal to the population size at the first iteration and is equal to 2 at the final iteration. Considering the value of 2 for Nbest at the final iteration mainly helps make the elite agents always change their positions to further keep diversity. Thus, Eq. (4) can be transformed to Eq. (5) when elitism is addressed in finding the guide agents.

To make the \(Y_{i}^{t}\) more stochastic in nature which can help diversity of the guide agents be better preserved, these agents are mutated in GMO. The mutation scheme is considered to be a Gaussian mutation. The equation imposing this form of mutation on the guide agents is as follows:

where \(randn\) is a random vector generated from the standard normal distribution; \(Std^{t}\) is the standard deviation vector calculated for the personal best-so-far agents at the tth iteration; \({Std}_{\mathrm{max}}^{t}\) is a vector consisting of the maximum standard deviation values of the personal best-so-far agents’ dimensions at the tth iteration, and \(w\) is a parameter decreasing the mutation step size by lapse of iterations and calculated through Eq. (9). Finally, \({Y}_{i,\mathrm{mut}}^{t}\) is the mutated \({Y}_{i}^{t}\) used to guide the search agents. As can be seen, the more the standard deviation of a dimension in the search space, the smaller the mutation step of the guide agents to maintain the existing high diversity among the best agents, contributing to keep the whole population diversified in the search space. On the other hand, the less the standard deviation of a dimension in the search space, a larger step is taken in the mutation mechanism, to broaden the search space and enhance the diversity of the agents in the certain dimension.

Finally, the updating equations can be formulated for each search agent according to Eqs. (7) to (9):

where \( w\) is a control parameter, t is the number of current iteration, and \({t}_{max}\) is the maximum number of iterations. \({V}_{i}^{t}\) is the velocity vector of the ith search agent at the tth iteration, \({V}_{i}^{t+1}\) is the velocity vector of the ith search agent at \(\left(t+1\right)\) th iteration, \({Y}_{i,\mathrm{mut}}^{t}\) is the position vector of the unique global guide for the agent i at iteration t and \({X}_{i}^{t}\) is the ith agent’s position vector at iteration t. In addition, \(\varphi \) is a scaling parameter vector, delineating the steps the agent \(i\) takes toward its guide; and \(rand\) is a random number generated in [0, 1]. As can be seen, the length of the vector of \(\varphi \) is decreasing, as it is varied in the ranges [0, 2], [0.1, 1.9], [0.2, 1.8], …, [0.8, 1.2], [0.9, 1.1], and [1, 1] = \(\left\{1\right\}\), as the iterations go on. This decreasing order considered for the \(\varphi \) ranges is able of enhancing the exploration capability of the GMO at the initial iterations as well as highly increasing the focus on the exploitation at the final iterations to help make the exploration–exploitation transition well-balanced. Figure 1 illustrates the flowchart of the GMO.

2.2 Differences between GMO and PSO

Within this section, we are willing to compare the strategies used by each of the GMO and PSO algorithms to solve the optimization problems to disambiguate the possible similarities between these two methods. To do so, we first explain the search process of the PSO and then mention several substantial differences there are between the two algorithms. Assume that \({X}_{i}=\left({x}_{i1}, {x}_{i2}, \dots , {x}_{iD}\right)\) and \({V}_{i}=\left({v}_{i1}, {v}_{i2}, \dots , {v}_{iD}\right)\) are the vectors containing the position and velocity of the ith particle in each dimension, respectively, and D is the dimensionality of the search space. If \({Pbest}_{i}^{t}=\left({p}_{i1}, {p}_{i2}, \dots , {p}_{iD}\right)\) is the ith personal best (Pbest) position and \({Gbest}^{t}=\left({p}_{g1}, {p}_{g2}, \dots , {p}_{gD}\right)\) represents the global best (Gbest) position of the whole swarm, the velocity- and position-updating procedure of the particles in PSO are illustrated in Eqs. (10) and (11), respectively (Eberhart and Kennedy 1995).

where i Є{1, 2, …, N}; N is the population size; t is the current number of iteration; w is the inertia weight; r1 and r2 are two random vectors in the interval [0, 1], and c1 and c2 are two tunable parameters, usually set to 2. As can be inferred from the updating procedure of the PSO, each particle has some tendency to move toward both its Pbest and Gbest. The mission of the Pbests is maintaining the particles diversified to do exploration, while the role of the Gbest is providing the particles with the access to the high-fitness regions to do exploitation. There are many substantial discrepancies between the GMO and PSO algorithms that are briefly listed below.

-

(1)

In GMO, a novel single index, named dual-fitness index (DFI), is defined to simultaneously evaluate the fitness and diversity of the agents. While, in a variety of the other meta-heuristics including the PSO, there are two separate mechanisms to do so, and thus, the exploration and exploitation phases may be inconsistent, as a particle may have high fitness but low diversity or is of high diversity but low fitness. In this case, deciding on which particle should be selected as a guide may be very difficult and even if be done, this selection may disrupt the exploration–exploitation balance at numerous iterations of the algorithm. Designing the DFI index in GMO is the major novelty of this developed algorithm against the PSO and any other meta-heuristic.

-

(2)

In PSO, each particle has two guides (Pbest and Gbest), attracting it toward themselves. As these guides may be occasionally conflicting in attracting the particles, the particles’ movements may be biased to one of these two guides and thus get unbalanced, contributing them to be stuck in local optima when the Gbest is too near to the particles or making them get into a drift if the Gbest is too far away from the particles helping numerous optima be missed by the particles. Both these behaviors can bring about the premature convergence in the PSO, while, in GMO, each search agent is assigned a single and unique global guide that is positioned at a proper distance from each agent, hindering premature convergence.

-

(3)

In PSO, there are two control parameters (c1 and c2) in the velocity updating procedure of the algorithm with two random numbers to be generated and assigned to each one, while in GMO, there is a single control parameter (\(\varphi \)) which requires only one random number to be generated to calculate its value. As a result, the complexity of the GMO can be less than that of the PSO, making the GMO a faster algorithm than the PSO.

-

(4)

In PSO, there is no interaction between the particles, whereas, in GMO, the unique guide of each search agent is obtained as the average of several elite agents exchanging their information with that guide and thus can positively affect the search process of each search agent.

-

(5)

In PSO, there is a single guide (Gbest) to impede the particles to be trapped in local optima especially when solving the multi-modal problems, while in GMO, multiple guides attempt to make the search agents escape from the local.

-

(6)

In GMO, a Gaussian mutation strategy is carefully set at the body of the algorithm to impart a more uncertain nature to the guides, especially at the initial stages of the optimization, while in PSO, there is no mutation imposed on the guide particles and thus, there is no enforcement for the particles to escape from the local optima, if trapped in them.

-

(7)

In GMO, a smooth transition is conducted from the exploration to the exploitation, as the search agents are gradually approaching their guides, while in PSO, the tendency of the particles to their Pbests and Gbest is always the same over the course of iterations. This fact means that a particle may completely focus on its guides (Pbest and/or Gbest) at the initial iterations of the PSO, while distancing itself from its guides and searching for the possible good positions within this distance at the final iterations. In this case, the exploration and exploitation procedures are not properly performed throughout the optimization process.

2.3 The potential merits of GMO and its computational complexity

The GMO has the ability to integrate the evaluation procedure for the fitness and diversity of the existing solutions in the search space to delineate the guide agents. This is a unique ability that discriminates this algorithm from any other meta-heuristic and is proposed to use in this algorithm for the first time. Since the measurement of the fitness and diversity follow two completely different and independent mechanisms in the meta-heuristics, there may be always a chance to choose inappropriate guide agents, leading the other agents in an unbalanced and ineffective way. If the algorithm is in the exploration phase that entails the search agents to be scattered as much as possible, the different mechanisms to lead the search agents may take effect in a way that agents are so far from the high-fitness area and their movement toward this area is retarded. In the multi-modal problems, this method to excessively stress the exploration process may prompt the agents to get trapped in local optima. Taking highly different mechanisms to lead the search agents may also gather these agents in a highly closed and densely populated region nearby the high-fitness area at the exploitation phase of the search process, speeding up the convergence but at the same time, leading to premature convergence.

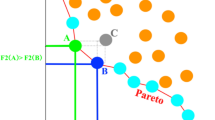

The GMO attempts to change this commonly followed way to guide the search agents by defining a novel index representing the fitness and diversity of each search agent in an integrated way, thus impeding the possible disruptions in the exploration and exploitation phases of the algorithm to more effectively accomplish the optimization process. In GMO, there are multiple guide agents calculated at each of the iterations helping better keep the diversity among the solutions found during the search process. Although these guides are computed so precisely by an integrated index, their merits are still uncertain. As a solution, a Gaussian mutation procedure is applied to them to promote their role as the guide agents. As the exploration and exploitation abilities of the search agents are made balanced in the GMO, these agents are set to gradually approach their guides, in contrast to some other meta-heuristics allowing the agents to always have a stochastic movement around their guide search agents, disregarding if the algorithm is at its early or late iterations. An elitism mechanism is also incorporated into the GMO to improve its performance and speed up its convergence to the optimum. These potential merits are quantitatively evaluated through conducting numerous comparisons between the GMO and a vast range of the other newly proposed and widely used meta-heuristic algorithms. A schematic way to conduct the optimization process in the GMO is illustrated in Fig. 2. It is worth mentioning that in this figure, the average fitness of the four separate regions is different such that: \(\overline{{\mathrm{fitness} }_{R1}}\ge \overline{{\mathrm{fitness} }_{R2}}\ge \overline{{\mathrm{fitness} }_{R3}}\ge {\overline{\mathrm{fitness}} }_{R4}\), in which \(R\) denotes the \(Region\). Accordingly, we have a relation as follows: \({DFI}_{1}^{t}>{DFI}_{3}^{t}>{DFI}_{5}^{t}\).

Computation complexity of the GMO depends on the complexity of four main sections in the algorithm: (1) fitness evaluations, (2) sorting mechanism of the elite solutions, (3) guide solution computation mechanism, and (4) updating procedure of the solutions. The computational complexity of the fitness evaluations is \(O(M\times N)\), and the computational complexity of the sorting mechanism of the elite solutions and the guide solution computation mechanism is both \(O(M\times {N}^{2})\). Finally, the complexity of the updating procedure of the solutions is \(O(M\times N\times D)\). Consequently, the computational complexity of the GMO is \(O\left(M\times N\times (N+D)\right)\). It is worth mentioning that \(M\) is the maximum number of iterations, \(N\) is the number of search agents, and \(D\) is the number of decision variables.

3 Results and discussion

3.1 Test problems

To investigate how eligible the GMO algorithm is when solving the optimization problems, it is implemented on 52 benchmark functions including 23 widely used classical benchmark test problems, and 29 CEC2017 test function suite (Yao et al. 1999; Wu et al. 2017). The aim is to minimize these benchmark functions. D is set to 30 for the classical benchmark functions and is assumed to 50 for all the functions included in the CEC2017 test suite.

3.2 Comparison with other well-known algorithms

3.2.1 Preparation of the algorithms

To investigate the competence of the GMO to tackle a variety of difficulties included in the optimization problems, the GMO was compared with six popular and newly proposed algorithms as the first set of the examinees. These methods include: (1) Harris Haws optimizer (HHO) (Bui et al. 2019); (2) arithmetic optimization algorithm (AOA) (Abualigah et al. 2021a); (3) Aquila optimizer (AO) (Abualigah et al. 2021c), (4) gradient-based optimizer (GBO) (Ahmadianfar et al. 2020); (5) flow direction algorithm (FDA) (Karami et al. 2021); and (6) equilibrium optimizer (EO) (Faramarzi et al. 2020). The parameter settings of these algorithms as well as the proposed GMO are presented in Table 1. The population size and the maximum number of iterations of all algorithms implemented on first 23 test problems were set to 50 and 1000, except for the fixed-dimensional multi-modal problems (F14-F23), the maximum number of iterations of which was set to 500. Moreover, the number of solutions and maximum iterations was assumed to be 50 and 1000 for all algorithms when applied to the CEC2017 functions. All methods were run for 30 independent times. The final experimental results are shown in Tables 2, 3 and 4. The results corresponding to the best-performing algorithms are emboldened in these tables. The abbreviations “Ave” and “Std” in these tables stand for the average and standard deviation of the final results achieved during all runs. The convergence curves of the algorithms plotted for the classical benchmark functions are shown in Figs. 3 and 4.

3.2.2 Comparative results on the unimodal functions

The experimental results obtained from running algorithms on the unimodal problems indicate that GMO significantly outperforms all the comparative algorithms on 10 out of 14 (71%) of all the performance criteria. This desirable performance indicates the supreme ability of GMO to do exploitation, as having proper exploitation ability is the most important challenge in solving unimodal functions. Since strong exploration ability can also speed up the convergence to the optimal point when an algorithm attempts to solve the unimodal functions, the remarkable performance of GMO to solve this category of the benchmark functions also represents its ability to perfectly explore the search space of these functions. The assignment of the unique and diversified guide agents to each agent and taking the diversity of the solutions into account as a major player to delineate the competence of the guide agents are among the other major factors holding diversity among the search agents and enhance the exploration capability of the proposed GMO algorithm.

The convergence curves depicted for the competitive algorithms are shown in Fig. 3. As can be inferred, the GMO algorithm is significantly faster when converging to the optimal point of all of the functions falling in this category. On F1, F3, and F4, the GMO is still willing to converge to the optimal point even after the stopping criterion is fulfilled, while its competitors seem to be stagnated at the late iterations. Furthermore, the GWO shows the fastest convergence behavior on the F6 and is the only algorithm reaching the optimal objective.

3.2.3 Comparative results on the multi-modal functions

The results received upon applying the algorithms to these problems indicate that the GMO is superior to the other competing methods on 7 out of 12 (58%) of the quality criteria, behaving similar to the GBO and slightly better than the AO and HHO each of which outperforms the other algorithms on 7, 6 and 6 out of 12 (58, 50, and 50%) of the criteria. It is clear that making the diversity be integrated into the main fitness of the solutions to enable the algorithm to assign the appropriate weights to each agent during the calculation of the unique guide agents could be very effective to help premature convergence have a little chance to occur when solving the multi-modal functions comprising a large number of local optima in their search space. In addition, imposing the mutation on the guide agents could further contribute the search agents to avoid local optima. The results of solving these functions are included in Table 2. The convergence curves are plotted in Fig. 4. This figure indicates the significantly faster convergence of the GMO to the optimal solution on both F10 and F13 functions, on which the GMO reaches the best solution at the end of the search process. On F9 and F11, the GMO reaches the zero value as the analytical optimal objective value of these problems, just like its competitors except for FDA and AOA on F11 and FDA on F9. The convergence rate of the GMO seems to be slightly less than the other algorithms on all the unimodal and multi-modal test problems at the early iteration; however, this rate is gradually boosted as the optimization process approaches its final stages. This behavior of the GMO is related to its high ability to diversify the search agents at the early iterations, which in turn can provide the bed to more accurately converge to the optimal point at the end of the optimization process.

3.2.4 Comparative results on the fixed-dimension multi-modal functions

The results of applying the comparative algorithms to this category of the classical benchmark functions suggest that the proposed GMO is superior to its rivals only on 3 out of 10 (30%) of the benchmark functions. While the FDA, GBO, and EO show better performance on these problems, outperforming their competitors on 5, 4, and 4 out of 10 (50, 40, and 40%) of the test functions. The HHO and AO behave similar to the GMO, and AOA shows the worst performance among the other algorithms. As can be inferred, the proposed GMO algorithm is not so suitable for solving simple and/or low-dimensional optimization problems. Accordingly, it is recommended to employ this algorithm to solve complex, high-dimensional, and practical problems to receive the most desirable results that the other optimizers are unable to offer.

3.2.5 Comparative results on the CEC2017 benchmark functions

This set of the functions is a very challenging and hard-to-handle one for any optimization algorithm. The functions included in this set seem more realistic as compared to those included in the 23 classical benchmark functions, as the CEC2017 test suite comprises a variety of the composition, hybrid, unimodal, and multi-modal functions. Among these functions, the composite ones are the combination of various shifted, rotated, and biased multi-modal functions and provide a challenging environment for investigating the eligibility of the proposed algorithm in solving optimization problems. Here, all the functions included in the CEC2017 test suite are set to be 50-dimensional to further challenge the capabilities of the proposal to handle such large-scale and complex optimization problems. As the results suggest, the proposed algorithm performs far better than the other competitors on this test bed, such that the GMO can achieve the best performance criteria on 23 out of 58 (40%) of all cases, along with reaching the best averaged final results on 12 out of 29 (41%) of all cases. In detail, the GMO outperforms half of the composite functions, 2 out of 10 hybrid functions, and half of the unimodal and multi-modal functions. While only FDA has better performance than that of the GMO on the hybrid problems, outperforming the other competitors on 4 out of 10 hybrid functions. The HHO, AOA, and AO show the worst performance among the others, being able to outperform none of their rivals on the CEC2017 test suite.

The major characteristic making the proposed GMO the leading algorithm to solve such a challenging test suite is hidden in its highly enhanced exploration capability. Referring to the structure of this algorithm, the abilities to evaluate the fitness and diversity of the search agents are integrated into a single measure which in turn, can help both these major features of the agents be consistently and interactively evaluated. This property the GMO benefits from is a unique one, not allowing the diversity among the solutions to be lost in favor of achieving the high-fitness regions at the late iterations, and also not letting the search agents remain far away from the high-fitness region and get trapped in local optima at the early iterations. Among the other strengths of the GMO leading to this performance on CEC2017 test functions, the mutation mechanism imposed on the guide agents may be of high importance, since the guide agents are always uncertain to rely as the best existing agents in terms of both fitness and diversity. The elitism mechanism incorporated into the GMO is another factor helping the convergence of the algorithm get accelerated. The latter point is especially important when dealing with the unimodal functions or the small- and middle-scale optimization problems. As the final point, having multiple guide agents that are averaged in the search space can considerably boost the exploratory ability of the proposal and help the better interactions and information exchanges among the search agents during the optimization process.

3.3 Comparison with other popular algorithms

3.3.1 Preparation of the algorithms

To further investigate the merits of the GMO to compete a series of classical and popular optimizers as well as a couple of recently proposed and efficient optimization algorithms, the GMO was compared with six other algorithms as the second set of the examinees including: (1) genetic algorithm (GA); (2) particle swarm optimization (PSO); (3) grey wolf optimizer (GWO), (4) gravitational search algorithm (GSA); (5) termite life cycle optimizer (TLCO) (Minh et al. 2023); and (6) K-means optimizer (KO) (Minh et al. 2022). The parameter settings of these algorithms are delineated in Table 4. Just like the first category of the examined algorithms, the population size and the maximum number of iterations of these algorithms were set to 50 and 1000, when implemented on the classical 23 test problems, except for the fixed-dimension multi-modal problems (F14-F23), the maximum number of iterations of which was set to 500. In addition, number of search agents and maximum number of iterations were assumed to be 50 and 1000 for this set of the algorithms when applied to the CEC2017 functions. All optimizers were run for 30 independent times. The final experimental results are shown in Tables 5, 6 and 7. The results corresponding to the best-performing algorithms are shown in bold in these tables. The abbreviations “Ave” and “Std” in these tables denote the average and standard deviation of the final results achieved during 30 runs.

3.3.2 Comparative results on the classical benchmark functions

The results obtained from implementing the above-mentioned algorithms on the unimodal problems indicate that GMO significantly outperforms the GA on 18 out of 23 (78%) of all the performance criteria. The GMO also dominates PSO on 18 out of 23 (78%) of the criteria over the entire 23 classical functions. When the GMO is compared with two more recently proposed and well-known algorithms such as GWO and GSA, the outperformance rates of the GMO are still extended. The proposal performs much better than the GWO and GSA on 17 out of 23 (74%) and 18 out of 23 (78%) of all the performance criteria, respectively. The noticeable point is that the GMO absolutely dominates the GWO and GSA on all the unimodal and multi-modal functions and slightly performs worse that these algorithms on the fixed-dimension functions. This performance is a sign of the robustness of the GMO in solving any type of the global optimization problems, especially when they are high-dimensional in domain. Also, the results suggest the strong exploration and exploitation abilities of the GMO when facing the classical problems, as the high-dimensional problems over which the GMO is fully dominant, are quite desirable examples to test these two abilities any user expects any optimizer to be equipped with. As per the experimental results, the GMO is also superior to two newly proposed algorithms, namely TLCO and KO on 9 out of 13 (69%) of all the unimodal and multi-modal functions included in the examined classical benchmark functions, while each of these two competitors slightly outperforms the GMO on 8 out of 13 (62%) of the unimodal and multi-modal functions. The KO is rated as the best-performing algorithm on 10 fixed-dimension functions with a dominance rate of 70%, a rate very close to that the TLCO offers on this test bed. These results indicate that the GMO is specifically designed to handle large-scale and so complicated problems. The blunt reason for this inference is its brilliant performance when facing the first 13 high-dimensional problems and its slight weakness in solving the 13 fixed-dimension problems in the classical test functions. This point also clarifies that the GMO is of higher convergence accuracy than the convergence speed, contributing such a proposed optimizer to better tackle the problems with large dimensions, complicated domains, and constraints. It is noteworthy that the results of solving engineering design problems mentioned at the end of this paper clearly demonstrate the abilities asserted that the GMO benefits from when solving complicated problems.

3.3.3 Comparative results on the CEC2017 benchmark functions

To further examine the real abilities of the proposed algorithm in solving optimization problems, the performance of the GMO and the second set of the optimizers is all tested on the CEC2017 test suite. Here, the dimensions of all the CEC2017 benchmark functions are set to be 50 to challenge the abilities of the proposal to handle such large-scale and complex optimization problems. As the results suggest, the proposed algorithm performs far better than the other competitors on this test bed, such that the GMO can reach the best performance criteria on 29 out of 58 (50%) of all the problems, while achieving the best average optimal objective function values on 19 out of 29 (66%) of all the problems. In detail, the GMO is superior to its competitors on 7 out of 10 (70%) of the composite functions, 6 out of 10 (60%) of the hybrid functions, and 6 out of 9 (67%) of the unimodal and multi-modal functions, while the PSO is the closest rival of the GMO, achieving the best performance criteria on 10 out of 58 (17%), and the best average results on 6 out of 29 (21%) of the entire test problems of the CEC2017 suite. The GWO performs slightly better than the GSA, reaching the best performance criteria on 7 versus 6 out of 29 criteria. However, the GWO offers the best average results on 3 problems as well, while the GSA yields no best average results against the other examinees. After the GA that is rated as the worst optimizer, absolutely losing the competition to the other algorithms, the KO and TLCO stand on the second and third places as the optimizers not performing desirably on this test suite. As these two optimizers performed desirably on the 23 classical benchmark functions, the undesirable performance of them on the CEC2017 suite clearly indicate that the convergence speed of these optimizers is much higher than their convergence accuracy, at least compared to the other four optimizers examined on this test bed. In other words, the strength of the TLCO and KO is in their exploitation ability, while their exploration ability can be enhanced.

3.4 Statistical analysis

In statistical analysis, the Wilcoxon rank-sum test is used as a nonparametric test to delineate whether a couple of sets of solutions are significantly different [43]. When assuming the results of a series of algorithms as the solutions, the best-performing algorithm is usually compared to its competitors to benchmark the significance of its dominances over them. The output of this test is a number named p-value. If the test results in a p-value < 0.05 for an algorithm, it is assumed to be statistically significantly different compared to another algorithm taking part in this test.

The test results are presented in Tables 8, 9, 10 and 11. In these tables, the expression N/A mentioned in each row represents that the algorithm ahead of the corresponding row is “not applicable” in the test, meaning that this algorithm cannot be compared to itself. Also, the p-values exceeding 0.05 are underlined in these tables. On the classical benchmark functions, the GMO outperforms the first set of its competitors on 11 out of 23 (48%) of the benchmark functions, while GBO outperforms the others on 8 out of 23 (35%) and the HHO and AO both outperform their competitors on 7 out of 23 benchmarks (30%). The other algorithms show worse performance than the mentioned algorithms as the leading ones. On the CEC2017 functions, the GMO dominates its rivals on 12 out of 29 (41%), trailed by the EO and FDA each of which outperforms the other algorithms on 7, 6, and 4 out of 29 functions, meaning 24, 21, and 14% of all cases. As can be seen, the GMO shows much better performance on such a hard-to-solve set of the benchmark functions. However, as the Wilcoxon test suggests, there is a close competition among the examined algorithms in this paper on the classical 23 benchmark test problems, such that the rate of the significant outperformance is low among the comparative algorithms. In detail, among the first set of the examinees, the GMO, GBO, HHO, and AO that were identified to be the best-performing algorithms among the others to solve the classical benchmark functions, can only be significantly superior to two other algorithms among their rivals. While on the CEC2017 test problems, the GMO and FDA are significantly superior to all the other algorithms except for one, while the EO shows significant superiority to all other algorithms.

On the classical test problems, the GMO outperforms the second set of its competitors on 9 out of 23 benchmarks (39%), while the TLCO and KO are superior to the other competitive algorithms on 14 and 15 out of 23 benchmarks (61) and (65%), respectively. The other examinees perform far worse than the GMO, TLCO, and KO, outperforming their rivals on maximum two test functions included in the classical functions. On the CEC2017 test suite, the GMO is superior to the other optimizers on 19 out of 29 (66%) of all the problems, followed by the PSO as its closest rival outperforming the other algorithms on 6 out of 29 (21%) of all the test functions of this suite. As mentioned before, the other optimizers such as TLCO and KO perform not desirably on the CEC2017 suite, at least when compared to their performance on the classical functions and also the performance of their competitors on the CEC2017 test bed. As the Wilcoxon test suggests, the TLCO and KO that were identified to be the best-performing algorithms to solve the classical problems cannot significantly outperform their rivals on these functions, such that the TLCO has a significant dominance over the all its competitors except for the GMO, and the KO is significantly superior to the other algorithms except for the TLCO and GMO. The GMO is also significantly superior to all its rivals from the second set of the examined optimizers except for the GSA, TLCO, and KO. While on the CEC2017 test suite, the GMO is not only the best-performing algorithm among the other six ones, but also shows significant superiority to all its competitors, the characteristics that none of the other examinees in the second set benefit from.

The statistical test reveals that the CEC2017 may be a more appropriate bed for benchmarking the effectiveness of each of the examined algorithms when evaluated individually or as compared to the other examinees, especially considering the CEC2017 test suite as a more challenging and more realistic test bed for evaluating the efficiency of each of the optimizers. Overall, the proposed GMO shows the best performance whether evaluated in terms of the dominance over its rivals or examined in terms of the significance of its dominances when solving both classical benchmarks and the CEC2017 test bed.

3.5 Qualitative analysis for the convergence of GMO

In this subsection, we qualitatively analyze the convergence behavior of the proposed GMO algorithm using five criteria illustrated for several benchmark functions selected among the 23 classical functions. These functions include a variety of unimodal and multi-modal problems adopted in a 2D domain. The detailed analytical results are presented in Figs. 5 and 6, depicted for unimodal and multi-modal functions, respectively.

The first column indicates the search history of the GMO when optimizing each test problem, illustrating the individual and interactional behavior of the search agents during the optimization process. As can be seen in Figs. 5 and 6, the search agents are all scattered across the full domain of all problems, showing the diversity of the solutions are properly maintained during the search process. In addition, the search agents are able to efficiently explore the search space and find the fittest region of the search space to focus on this region sufficiently to finally exploit the optimal or near-optimal solution of each problem.

The second column illustrates the position of the optimal solution at its first dimension. As can be seen, the optimal solution fluctuates at the early iterations of the optimization process, but soon converges to its optimal position in the search space. As shown in the second column for all exemplary test problems, the fluctuation of the optimal solution’s first position is continued up to 50th iteration and then, the curve of the best position is flattened. This behavior of the best (optimal) solution indicates the fast convergence of the proposed algorithm on all the test functions.

The third column illustrates the average fitness of the population depicted iteration-by-iteration. As can be seen, the average fitness is high at the early iterations, while slightly fluctuating up to 50th iteration and then converges to the optimal fitness of each problem. Among the problems examined in this analysis, F9 has somehow different behavior, such that the average fitness of each iteration experiences severe fluctuations at the early iterations, being continued to the nearby of the final iterations and suddenly converges to the optimal fitness of the problem. The main reason explaining this behavior may be hidden in the nature of this function. The F9 is a highly multi-modal function, and reaching the optimal solution of this problem is a difficult task and cannot be soon accomplished. Generally, the third column again evidences the high convergence rate of the GMO when solving a variety of the unimodal and multi-modal optimization problems.

The fourth column illustrates the trajectory of the position of the first solution at the first dimension of the test functions. As this column suggests, the fluctuations of the first solution’s position are high at the early iterations and are gradually decreased over the course of iterations to converge to its optimal position as a task fulfilled after 50th iteration on all problems. It is worth noticing that on the unimodal functions, the position of the first solution fluctuates just around the optimal solution that is located at the center of the domain; however, when dealing with the multi-modal functions, this position begins the search from one corner of the domain and gradually finds its right path to reach its optimal position in the search space. This is another evidence of difficulty of the multi-modal functions to be solved as compared to the unimodal ones.

The fifth column illustrates the convergence curve of the GMO when handling the exemplary test functions. As this curve displays the best fitness function found iteration-by-iteration, the convergence behavior of the algorithm can be easily identified. As can be seen in this column when depicted for all problems, the high convergence rate of the proposed algorithm is clearly inferred. Finally, this qualitative analysis stresses the abilities of the GMO to both maintain the diversity of the solutions and helping them rapidly converge to the optimal point on the optimization problems.

4 Comparative results on engineering problems

To validate the proposal to tackle other types of difficulties an optimization problem may be engaged with, the GMO is implemented on nine constrained engineering problems. The penalty functions are added to the cost functions of these problems for constraint handling. Furthermore, 50 search agents, and 1000 iterations, are set to be used in GMO. The algorithm is run 30 times, and the best results achieved are presented versus those reached by its competitors. It is worth noticing that the results corresponding to the best-performing algorithm are emboldened in the tables presented for all problems.

4.1 Welded beam design problem

Coello (Coello Coello 2000) first proposed the welded beam problem which aims to minimize the construction cost. The main objective function is subject to constraints on shear stress (\(\tau \)), bending stress (\(\sigma \)) in the beam, buckling load on the bar (\(P\)), end deflection of the beam (\(\delta \)), and side constraints. The problem includes four design variables consisting of \(h({x}_{1})\), \(l({x}_{2})\), \(t({x}_{3})\), and \(b({x}_{4})\), as shown in Fig. 7. The problem is formulated as follows.

Welded beam scheme (Mahdavi et al. 2007)

Subject to:

where

Table 11 shows the optimal cost function and design variables computed by the GMO and the other comparative algorithms upon solving the problem. As the results suggest, the GMO can reach the minimum cost among the other algorithms on such a highly constrained and complex real life problem.

4.2 Tension/compression spring design problem

Arora (Arora 2004) first introduced this problem. This problem aims to minimize the weight of a tension/compression spring (TCS), shown in Fig. 8, subject to some constraints. The design variables are wire diameter \(d\left( {x_{1} } \right)\), mean coil diameter \(D\left( {x_{2} } \right)\), and number of active coils \(N\left( {x_{3} } \right)\). The problem is formulated as follows.

Structure of tension/compression spring (Mahdavi et al. 2007)

Subject to:

Table 12 indicates the final results the GMO and its rivals can reach when the problem is solved. As can be seen, the GMO reaches the minimum weight of the spring, while not violating any of the constraints. However, the optimal results of the HS, RO, GSA, and AOA, violate the constraint g2, and the results of the OBSCA violate the constraint g1. The constraints violated are underlined in the table. As can be seen, the optimal objective value achieved by the OBSCA and AOA are less than that of the proposed GMO algorithm, however, these values cannot be accepted as their corresponding optimal variables violate one of the constraints of the problem. As a result, the GMO outperforms all its competitors on this engineering problem as it could calculate the least weight for the beam while fully satisfying the constraints of the problem.

4.3 Speed reducer design problem

This problem targets to minimizing the weight of a speed reducer, subject to numerous constraints (Mezura-Montes and Coello 2005). A graphical image of the speed reducer is displayed in Fig. 9. The variables \({x}_{1}\) to \({x}_{7}\) represent \(b\), \(m\), \(z\), \({l}_{1}\), \({l}_{2}\), \({d}_{1}\) and \({d}_{2}\), respectively, as depicted in Fig. 9. Having 11 constraints in its structure, this problem is provided with a high difficulty which can make it much harder to solve for any algorithm. This problem is formulated as follows.

Speed reducer scheme (Abualigah et al. 2021b)

Subject to:

Table 13 shows the final results calculated by the algorithms upon solving this problem. As can be seen, the proposed GMO algorithm reaches the minimum weight of the speed reducer achieved by all the competitors. The success of the proposed algorithm in solving such complex problem confirms the strength of the GMO and the novel approach it has utilized to effectively explore and intensively exploit the search space when dealing with an optimization problem.

4.4 Pressure vessel design problem

The goal of this problem is to reach the minimum fabrication cost of a pressure vessel depicted in Fig. 10. The design variables are Ts, Th, R, and L, represented by \({x}_{1}\), \({x}_{2}\), \({x}_{3}\), and \({x}_{4}\), respectively, in the problem formulation which is presented as follows.

Pressure vessel scheme (Abualigah et al. 2021b)

Subject to:

The results of solving this problem by several examined algorithms are shown in Table 14. As can be seen, the GMO can find the lowest cost among the other competitive algorithms. As is clear in the problem formulation, this problem is a highly constrained one and the superiority of the proposed GMO to its rivals demonstrates its high competence to solve such problems.

4.5 Three-bar truss design problem

The goal of this problem is minimizing the weight of a three-bar truss subject to stress, deflection, and buckling constraints. This truss has three variable cross section areas marked by A1, A2, and A3. As depicted in Fig. 11, \({A}_{1}={A}_{3}\). Thus, there are only two areas as the decision variables to be optimized which are denoted by \({x}_{1}\) and \({x}_{2}\) in the problem formulation presented below.

Three-bar truss structure (Abd Elaziz et al. 2017)

Subject to:

Table 15 shows the results obtained by numerous algorithms employed to solve this problem. As this table suggest, the results found by the first five algorithms in the table, violate the constraint g1. As a result, their optimal objective function values are not validated. The constraints violated are underlined in the table. Among the remaining four algorithms; the MFO finds the best objective value, however, it is larger than the value obtained by the proposed GMO. Accordingly, the GMO is superior to all its competitors on such a problem with a difficult constrained search space.

4.6 Cantilever beam design problem

The objective of this problem is to minimize the weight of a cantilever beam consisting of five hollow blocks with variable heights and constant thicknesses, depicted in Fig. 12. Thus, there are five decision variables needed to be optimized denoted by \(x_{1}\) to \(x_{5}\) as the blocks’ heights. This problem is formulated as follows.

Cantilever beam problem (Mahdavi et al. 2007)

Subject to:

As the results obtained by several algorithms including the GMO suggest in Table 16, the proposed GMO reaches the minimum weight of the cantilever beam among those obtained by all the other competitors.

4.7 Gas transmission compressor design problem

The goal of this problem is to minimize the cost of delivering 100 million cft gas per a day. The decision variables \({x}_{1}\), \({x}_{2}\), and \({x}_{3}\) are the gas transmission parameters expressed as the distance between the two compressors, the compression ratio value, and value of the inside diameter of the gas pipe, respectively. A schematic image of this problem is shown in Fig. 13. This problem is formulated as follows.

Gas transmission compressor design problem (Kumar et al. 2021)

Subject to:

As can be seen in Table 17, the proposal can calculate the best objective function value, contributing the GMO to be superior to all of its rivals taking part in this very close competition.

4.8 Himmelblau’s nonlinear constrained optimization problems (version-I)

This problem is a highly constrained engineering problem, widely used in the literature to examine the eligibility of the optimization algorithms. The problem has two versions. The first version of this problem is formulated as follows.

Subject to:

where

Table 18 shows the optimal results each of the examined algorithms can find when solving this problem. While the optimal objective values calculated by all the comparative algorithms are so close to each other, the proposed GMO algorithm can achieve significantly lowest objective value compared to the other competitors, demonstrating high merits of the proposal to solve such problem.

4.9 Himmelblau’s nonlinear constrained optimization problems (version-II)

A group of researchers used 0.00026 instead of 0.0006262 as the coefficient of the second term in the first constraint, resulting in the second version of the Himmelblau’s problem. Table 19 shows the optimal results that several algorithms can find for this problem. The results of three algorithms violate the constraint g1 and are invalid. The constraints violated are underlined in the table. Among all examined algorithms, the proposed GMO can reach the far lowest objective value and thus significantly outperforms all its competitors.

As can be seen, the GMO is superior to the numerous optimizers when solving all of the engineering problems. These problems are highly constrained and these constraints strongly limit the search space. Since the optimal solution of these problems must be located in the feasible region whose boundaries are delineated by the numerous constraints embedded in the problems’ formulations, superiority of the proposed GMO in solving these problems indicates the great and promising performance of this algorithm. This means that the GMO not only can desirably solve a variety of the standard benchmark test problems, but can be noticed as a favorite algorithm to solve engineering problems as the real-world cases in the optimization field.

The key factor contributing to such performance of the GMO may be hidden in the new approach this algorithm takes when designating the guide search agents. In GMO, the abilities to measure the fitness and diversity as two key factors to be focused in selecting or computing the guide solutions are integrated. This integration is carried out using the geometric mean operator in mathematics whose unique properties to compute the mean of a number of variables are found highly effective in designating a weight for each solution in the search space to determine its overall eligibility to guide the other solutions. Presenting such comprehensive weights can highly assure the algorithm that the most competent search agents are computed as the guides. These guides are consistently calculated so throughout the optimization process, whether the GMO is in the exploration or exploitation phase. Since there is no boundary between these two major phases of the optimization process in the GMO, there is no chance for the possible disruptions and interruptions occurring when needing the algorithm to show more exploration or exploitation activities in some stages of the optimization, contributing all the movements of the search agents to be made balanced and reliable to help the proposal accomplish the optimization process in the best way. Imposing mutation on the guides to mitigate their intrinsic uncertainty, embedding an elitism mechanism in the algorithm, gradually propelling the search agents toward their guides, and having the efficient computations for this algorithm are all among the other facilities the proposed algorithm benefits from. Hence, the GMO can be strongly recommended as one of the best algorithms in the field of meta-heuristic optimization.

5 The pros and cons of the proposed GMO

As the results of solving global optimization problems (classical benchmark functions and the CEC2017 test suite) as well as the constrained engineering design problems clearly indicated, the high capabilities of the proposed GMO algorithm to both explore and exploit the search space of the optimization problems with a variety of search domains, dimensionality, and difficulties are demonstrated. Furthermore, the GMO is capable of handling complicated engineering design problems with a large number of constraints, stressing its power to effectively explore the search space and detect the real position of the optimal solution wherever it is placed in the domain. Among the advantages of the proposed GMO, its ability to simultaneously evaluate the fitness and diversity by means of the dual-fitness index, computational efficiency, having no parameter to tune, and designating multiple and distinct guides for every solution as a way to help the algorithm avoid premature convergence, are the most eminent and effective ones. However, there are some minor weaknesses that can be handled such as lack of a strategy to set the accurate value of decrease in the steps of the guides when mutated, lack of a self-learning mechanism to accurately determine the rate of decay in the inertia weight multiplied by the velocity term, and occasional occurrence of singularity when calculating the guide positions that makes the algorithm to add \(\varepsilon \) to the denominator of the guides when handling the unimodal or simple problems and not to add \(\varepsilon \) when dealing with the real-world and more complicated problems.

6 Conclusion

This paper presented a novel meta-heuristic optimization algorithm, called geometric mean optimizer (GMO), inspired by the unique properties of the geometric mean operator in mathematics. The geometric mean operator can simultaneously represent the average and similarity of several variables of the same scale. If these variables are assumed as the normalized objective function values of a number of the opposites of a focused search agent in the search space, the geometric mean of these variables can simultaneously represent the fitness and diversity of the focused agent. The higher this geometric mean, the higher the similarity between the opposite agents, and thus, the higher the density of the area these agents are distributed in. Simultaneously, the higher the geometric mean of the opposite agents of an agent, the higher the average objective values of these agents. In this way, the focused agent may be more diversified and has the lower objective/higher fitness value at the same time, in a minimization process. This geometric mean operator, which is turned into a root-free form and named the pseudo-geometric mean operator in the present work, can be employed as a weight assigned to each personal best-so-far agent found by the GMO algorithm at each of the iterations. Then, a weighted averaging process is conducted over the opposite agents of a focused agent and the resulting average agent is adopted as the unique and only guide of the focused search agent. In this algorithm, the guides are all imposed a Gaussian mutation operator. This act can help further strengthen the exploration ability of the proposed GMO.

The strengths of the proposed GMO algorithm to handle the optimization problems can be summarized in five main points: (1) integrating the abilities to evaluate fitness and diversity of the search agents to yield them a weight to better diversify the search space while facilitating access to the high-fitness areas; (2) mitigating the uncertainty of the guide agents via mutating them to impede the GMO to get trapped in prematurely identified local optima while reducing the possibility of drift occurrence during the search agents’ movements toward their guides; (3) incorporating an elitism mechanism into the GMO to further enhance the exploitation capability and expedite its convergence to the optimum; (4) considering the velocity for each search agent to keep pushing the agents to continue their exploration in the search space; and (5) having no parameter to tune.

The proposed GMO was then validated via comparison with two sets of classical, newly proposed and popular meta-heuristics, with reference to some performance criteria. The results obtained in solving 52 standard benchmark test problems and nine engineering problems demonstrated the superiority of the proposal to all its competitors.

As future work, it is suggested to apply GMO to solve some large-scale and broader engineering problems to further investigate the strengths and weaknesses of this algorithm in finding the optimum of such problems to remove the shortcomings the proposal may suffer from.

Data availability

Data will be made available on reasonable request.

References

Abd Elaziz M, Oliva D, Xiong S (2017) An improved opposition-based sine cosine algorithm for global optimization. Expert Syst Appl 90:484–500. https://doi.org/10.1016/j.eswa.2017.07.043

Abualigah L, Diabat A, Mirjalili S et al (2021a) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609. https://doi.org/10.1016/j.cma.2020.113609

Abualigah L, Elaziz MA, Sumari P et al (2021b) Reptile Search Algorithm (RSA): a nature-inspired meta-heuristic optimizer. Expert Syst Appl 191:116158. https://doi.org/10.1016/j.eswa.2021.116158

Abualigah L, Yousri D, Abd Elaziz M et al (2021c) Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput Ind Eng 157:107250. https://doi.org/10.1016/j.cie.2021.107250

Ahmadianfar I, Bozorg-Haddad O, Chu X (2020) Gradient-based optimizer: a new metaheuristic optimization algorithm. Inf Sci (ny) 540:131–159. https://doi.org/10.1016/j.ins.2020.06.037

Arora J (2004) Introduction to optimum design. Elsevier, Netherlands

Baykaso A, Ozsoydan FB (2015) Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl Soft Comput 36:152–154

Baykasoğlu A, Akpinar Ş (2017) Weighted Superposition Attraction (WSA): a swarm intelligence algorithm for optimization problems – Part 1: Unconstrained optimization. Appl Soft Comput 56:520–540. https://doi.org/10.1016/j.asoc.2015.10.036

Beightler CS, Phillips DT (1976) Applied geometric programming. John Wiley & Sons, UK

Ben Guedria N (2016) Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl Soft Comput 40:455–467. https://doi.org/10.1016/j.asoc.2015.10.048

Bui DT, Moayedi H, Kalantar B et al (2019) Harris Hawks optimization: a novel swarm intelligence technique for spatial assessment of landslide susceptibility. Sensors 19:3590. https://doi.org/10.3390/s19163590

Chakraborty P, Roy GG, Das S et al (2009) An improved harmony search algorithm with differential mutation operator. Fundam Informaticae 95:401–426. https://doi.org/10.3233/FI-2009-157

Cheng M-Y, Prayogo D (2014) Symbiotic organisms search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112. https://doi.org/10.1016/j.compstruc.2014.03.007

Chickermane H, Gea HC (1996) Structural optimization using a new local approximation method. Int J Numer Methods Eng 39:829–846. https://doi.org/10.1002/(SICI)1097-0207(19960315)39:5%3c829::AID-NME884%3e3.0.CO;2-U

Coello CAC (2000) Treating constraints as objectives for single-objective evolutionary optimization. Eng Optim 32:275–308. https://doi.org/10.1080/03052150008941301

Coello Coello CA (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41:113–127. https://doi.org/10.1016/S0166-3615(99)00046-9

Czerniak JM, Zarzycki H, Ewald D (2017) AAO as a new strategy in modeling and simulation of constructional problems optimization. Simul Model Pract Theory 76:22–33. https://doi.org/10.1016/j.simpat.2017.04.001

Deb K (1991) Optimal design of a welded beam via genetic algorithms. AIAA J 29:2013–2015. https://doi.org/10.2514/3.10834

Dimopoulos GG (2007) Mixed-variable engineering optimization based on evolutionary and social metaphors. Comput Methods Appl Mech Eng 196:803–817. https://doi.org/10.1016/j.cma.2006.06.010

Duary A, Rahman MS, Shaikh AA et al (2020) A new hybrid algorithm to solve bound-constrained nonlinear optimization problems. Neural Comput Appl 32:12427–12452. https://doi.org/10.1007/s00521-019-04696-7

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: Micro Machine and Human Science, 1995. MHS’95., In: Proceedings of the sixth international symposium on . pp 39--43

El AMA, Ewees AA, Hassanien AE (2017) Whale optimization algorithm and moth-flame optimization for multilevel thresholding image segmentation. Expert Syst Appl 83:242–256. https://doi.org/10.1016/j.eswa.2017.04.023

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowledge-Based Syst 191:105190–105454. https://doi.org/10.1016/j.knosys.2019.105190

Fesanghary M, Mahdavi M, Minary-Jolandan M, Alizadeh Y (2008) Hybridizing harmony search algorithm with sequential quadratic programming for engineering optimization problems. Comput Methods Appl Mech Eng 197:3080–3091. https://doi.org/10.1016/j.cma.2008.02.006

Gandomi AH (2014) Interior search algorithm (ISA): a novel approach for global optimization. ISA Trans 53:1168–1183. https://doi.org/10.1016/j.isatra.2014.03.018

Gandomi AH, Yang X-S, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29:17–35. https://doi.org/10.1007/s00366-011-0241-y

Garg H (2019) A hybrid GSA-GA algorithm for constrained optimization problems. Inf Sci 478:499–523. https://doi.org/10.1016/j.ins.2018.11.041

Goldberg DE, Holland JH (1988) Genetic algorithms and machine learning. Mach Learn 3:95–99. https://doi.org/10.1023/A:1022602019183

Hatamlou A (2013) Black hole: a new heuristic optimization approach for data clustering. Inf Sci 222:175–184. https://doi.org/10.1016/j.ins.2012.08.023

He Q, Wang L (2007a) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20:89–99. https://doi.org/10.1016/j.engappai.2006.03.003

He Q, Wang L (2007b) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186:1407–1422. https://doi.org/10.1016/j.amc.2006.07.134

He S, Wu QH, Saunders JR (2009) Group search optimizer: an optimization algorithm inspired by animal searching behavior. IEEE Trans Evol Comput 13:973–990. https://doi.org/10.1109/TEVC.2009.2011992

Heidari AA, Mirjalili S, Faris H et al (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872. https://doi.org/10.1016/j.future.2019.02.028

Hosseini HS (2011) Principal components analysis by the galaxy-based search algorithm: a novel metaheuristic for continuous optimisation. Int J Comput Sci Eng 6:132. https://doi.org/10.1504/IJCSE.2011.041221

Huang FZ, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186:340–356. https://doi.org/10.1016/j.amc.2006.07.105

Karami H, Anaraki MV, Farzin S, Mirjalili S (2021) Flow direction algorithm (FDA): a novel optimization approach for solving optimization problems. Comput Ind Eng 156:107224–115115. https://doi.org/10.1016/j.cie.2021.107224

Kaveh A, Khayatazad M (2012) A new meta-heuristic method: ray optimization. Comput Struct 112–113:283–294. https://doi.org/10.1016/j.compstruc.2012.09.003

Kaveh A, Mahdavi VR (2014) Colliding bodies optimization: a novel meta-heuristic method. Comput Struct 139:18–27. https://doi.org/10.1016/j.compstruc.2014.04.005

Kaveh A, Talatahari S (2010) An improved ant colony optimization for constrained engineering design problems. Eng Comput 27:155–182. https://doi.org/10.1108/02644401011008577