Abstract

This paper addresses the shortcomings of the recently proposed whale optimization algorithm (WOA). A whale optimization algorithm hybridized with the artificial bee colony algorithm (ACWOA) is proposed to overcome WOA's slow convergence, low precision, and tendency to fall into local optima. ACWOA integrates the artificial bee colony algorithm and chaotic mapping, which helps the search avoid local optima and improves the quality of the initial solution. In addition, a nonlinear convergence factor and an adaptive inertia weight coefficient are added to accelerate convergence. To verify the performance of the improved algorithm, 20 benchmark functions and the CEC2019 multimodal multi-objective benchmark functions are used to compare ACWOA with classical population intelligence algorithms (PSO, MVO, and GWO) and recent state-of-the-art algorithms (CWOA, HWPSO, and HIWOA). The proposed algorithm is also applied to two well-known engineering mathematical models and a real application (quality process control). The experiments show that ACWOA is strongly competitive in convergence speed and solution accuracy and has practical value in complex mathematical model scenarios.


1 Introduction

Fields such as machine learning, image processing, and neural networks give rise to large-scale, complex global optimization problems. In such problems the variables are closely coupled and the dimensionality is high, so it is very difficult to obtain good results with traditional optimization algorithms (Luo and Shi 2018). To solve large-scale, complex, high-dimensional optimization problems, new optimization algorithms are constantly being developed, and population intelligence algorithms are among the most popular research topics. These algorithms are built by simulating the way animals live in nature (Kennedy and Eberhart 2002; Rajabioun 2011; Rashedi et al. 2009; Failed 2021d). Animals in nature face complex and ever-changing problems (such as hunting prey, handling food, and searching for paths), and the cooperation and interconnection between individuals form a powerful group force to solve them. For example, Marco Dorigo proposed the ant colony optimization (ACO) algorithm after observing how ants search for paths to food (Dong et al. Oct 2018). Mirjalili proposed the gray wolf optimization (GWO) algorithm by observing how gray wolves hunt their prey (Mirjalili et al. 2014). Karaboga proposed the artificial bee colony (ABC) algorithm based on the honey-collecting behavior of bees (Karaboga 2010). Kennedy proposed the well-known particle swarm optimization (PSO) algorithm (Niknam and Amiri 2010). Population intelligence algorithms have brought great practical value to society and have been applied successfully in many projects (Talbi et al. 2004; He et al. Nov 2015; Yang et al. 2019; Cai et al. Aug 2019; Failed 2021a, b; Mohammadzadeh and Gharehchopogh 2021). However, each algorithm has its own advantages and disadvantages depending on the application environment (Singh et al. 2015; Zhang et al. 2019).

The whale optimization algorithm (WOA) is a population intelligence algorithm that originates from the feeding behavior of whales. Because it is simple to implement and has few parameters, it has been successfully applied in various fields (Mirjalili and Lewis May 2016; Mohammadzadeh and Gharehchopogh 2021). In the WOA, each individual represents a solution to the problem. According to the historical optimal value in the population, each individual is assigned a fitness function value and a speed with which to update its location. After repeated position updates, the optimal solution of the problem is obtained. However, during the search the individuals move quickly in the early stage, so the algorithm easily falls into a local optimum. As the number of iterations increases, the diversity of the population decreases, and individuals lose the ability to search. For some complex problems, the final result is therefore not good enough.

The artificial bee colony (ABC) algorithm is also a high-quality population intelligence algorithm. It has an extremely high exploratory ability and maintains a rich, diverse population. However, it converges slowly and has low accuracy in the later iterations.

How to balance the exploitation and exploration of such algorithms is still a focus of research (Gharehchopogh and Gholizadeh 2019; Mehne and Mirjalili Jul 2018; Ding et al. 2018d). To address this problem and make up for the shortcomings of the two algorithms (WOA and ABC), we propose a combination of the whale optimization algorithm and the artificial bee colony algorithm. The main contributions of this paper are the following:

1. To avoid local optima and improve accuracy, we combine the whale optimization algorithm with the artificial bee colony algorithm so that each makes up for the shortcomings of the other. The whales are given the behavior of bees. For each individual, three candidates are compared greedily through the fitness function: the position produced by the whale movement, the position produced by the honey-collecting movement, and the position of the whale at the previous moment. The candidate with the best fitness value is retained, and the other two are eliminated.

2. To improve the sensitivity of population initialization, we use a chaotic mapping model instead of a uniform random distribution model. Chaos theory makes the initial conditions more sensitive and produces a more varied range of values (Sayed et al. Jul 2018).

3. To further accelerate convergence, we use a nonlinear convergence factor and adaptive inertia weights.

4. We evaluate the proposed algorithm against other advanced optimization algorithms in multiple settings (20 benchmark functions, two well-known engineering mathematical models, and a real application).

The rest of this paper is organized as follows: In Sect. 2, we review the related works. In Sect. 3, a detailed introduction to the traditional WOA algorithm, artificial bee colony algorithm, and chaotic mapping is given. Section 4 details the architecture of the ACWOA algorithm. Sections 5 and 6, respectively, describe the comparison between ACWOA and other advanced algorithms on various experiments (20 test benchmark functions, two well-known mathematical models, and a real application). The algorithms are analyzed and discussed from the experimental results. Section 7 summarizes the results of this study and the outlook for the future.

2 Related works

The whale optimization algorithm is a population intelligence algorithm proposed in 2016 by Mirjalili, a scholar at Griffith University in Australia (Mirjalili and Lewis May 2016). The algorithm is inspired by the hunting behavior of humpback whales (bubble-net attack behavior). The whales hunt by encircling prey, attacking prey, and searching for prey (Sun et al. 2018a). The WOA algorithm simulates this predation process to find the optimal solution. Because of its simple principle, convenient implementation, and small number of parameters, the WOA is widely used in various fields (Gharehchopogh and Gholizadeh 2019). Karlekar uses a support vector machine based on whale optimization for medical data classification (Karlekar and Gomathi 2018). The algorithm has been applied to a nonlinear Gaussian adaptive PID controller (Khadanga et al. 2018b, c). It has also been used by Aziz for image segmentation (Abd El Aziz et al. 2017).

Although the WOA algorithm has achieved results in many engineering applications, it converges prematurely and easily falls into local optima when dealing with some complex or high-dimensional optimization problems (Gharehchopogh and Gholizadeh 2019; Abdel-Basset et al. Aug 2018; Mohapatra et al. 2017; Khadanga et al. 2018c). In recent years, experts and scholars have proposed many improvements to compensate for these shortcomings. Mafarja improves the performance of the WOA algorithm by adding crossover and mutation (Mafarja and Mirjalili 2018). Mafarja also improves the solutions found by hybridizing the WOA algorithm with the classical simulated annealing algorithm (Mafarja and Mirjalili 2017). Sun, Y. J. applies the Levy flight strategy to the WOA algorithm to avoid local optima and adds quadratic interpolation to improve the local exploitation capacity (Sun et al. 2018). Yan, Z. H. adds a weight coefficient to the WOA algorithm to improve the convergence rate and accuracy (Yan et al. 2018). Mohammadzadeh et al. propose adding a flower pollination algorithm (FPA) to WOA to improve efficiency (Mohammadzadeh and Gharehchopogh 2021). These measures have been confirmed in practical engineering applications.

Many schemes have also been proposed to overcome the shortcomings of the ABC algorithm. For example, Nouria Rahnema et al. propose a combined algorithm of ABC and WOA (ABCWOA). Random memory (RM) and elite memory (EM) are introduced into the ABC algorithm, where RM is used in the search phase of the WOA algorithm and EM is used to improve the convergence of the algorithm (Rahnema and Gharehchopogh 2020). Peng Shao et al. propose an enhanced ABC algorithm using the refraction principle (EABC-RP). In the search phase, unified opposition-based learning (UOBL) based on the refraction principle is applied to the ABC algorithm to increase population diversity. For exploitation, the UOBL is used to effectively avoid local optima (Shao et al. 2020).

Some measures (such as chaotic mapping, nonlinear convergence, and adaptive inertia weights) can also improve population intelligence algorithms. Gharehchopogh (2021) applies various chaotic maps to the vortex search algorithm (VSA) to obtain better performance. Sayed, G. I. improves the quality of the initial solution of the WOA algorithm using chaotic maps (Sayed et al. 2018). Converting linear convergence to nonlinear convergence and assigning individual weight values to the population can also lead to better results (Tang et al. 2019).

The algorithm proposed in this paper is most similar to the ABCWOA algorithm proposed by Nouria Rahnema et al. (Rahnema and Gharehchopogh 2020). The difference from ABCWOA is that we use a greedy decision-making method to update the position of individuals in the population. For each individual, the position produced by the whale movement, the position produced by the honey-collecting movement, and the position of the whale at the previous moment are compared through the fitness function; the candidate with the best fitness value is retained, and the other two are eliminated. In addition, a threshold is set: when the position of a whale has not changed for that many updates, the whale is converted into a “detection whale” to help the search jump out of the local optimum. Finally, chaotic mapping, nonlinear convergence, and adaptive weights are added to further improve the performance of the algorithm.

3 Background

3.1 Whale optimization algorithm

The whale optimization algorithm is a novel optimization algorithm whose mechanism is derived from the social behavior of humpback whales. Adult humpback whales are typically 13–15 m long and feed on small fish and shrimp. Their special predation method is bubble-net predation, which includes three activities (surrounding prey, spirally attacking prey, and randomly searching for prey). The location of the optimal solution is not known in advance during the predation process. Through interaction and cooperation between individuals, the individual positions are continuously updated, and finally an approximate optimal solution is obtained (Mirjalili and Lewis May 2016).

3.2 The activity of surrounding prey

All individual whales are initialized by uniform random placement, and the whale closest to the prey is identified. The movement of the other whales is guided by this optimal individual, so that whales in non-optimal positions move closer to the position of the optimal whale. The position of an individual whale is updated as follows:

where \(\vec{X}(t)\) and \(\vec{X}(t{ + 1})\) represent the current location of the individual whale and its location at the next moment, respectively. \(\overrightarrow {{X_{{{\text{best}}}} }} (t)\) is the location of the whale closest to the prey. \(\vec{a}\) is a linear convergence factor whose value decreases linearly from 2 to 0 as the number of iterations increases. \(t\) is the current iteration number, and \(t_{\max }\) is the maximum number of iterations. \(\overrightarrow {{{\text{rand}}}}\) is a random number ranging from 0 to 1.
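As a minimal sketch of the surrounding-prey update described above, the following Python code assumes the standard WOA form from Mirjalili and Lewis (2016), in which the coefficients are \(A = 2a \cdot {\text{rand}} - a\) and \(C = 2 \cdot {\text{rand}}\). The function names `linear_a` and `encircle` are hypothetical, and drawing a fresh random number per dimension is an assumption.

```python
import random


def linear_a(t, t_max):
    """Linear convergence factor: decreases from 2 to 0 over the run."""
    return 2.0 - 2.0 * t / t_max


def encircle(x, x_best, a, rng=random):
    """Move whale x toward x_best (assumed standard WOA encircling step)."""
    new_x = []
    for xj, bj in zip(x, x_best):
        A = 2.0 * a * rng.random() - a  # coefficient in [-a, a)
        C = 2.0 * rng.random()          # coefficient in [0, 2)
        D = abs(C * bj - xj)            # distance to the scaled best position
        new_x.append(bj - A * D)
    return new_x
```

With `a = 0` the step returns `x_best` itself, which matches the description that the whales close in on the best individual as the convergence factor shrinks.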

3.3 The activity of spirally attacking prey

The humpback whale circles the prey with its characteristic bubble-net spiraling behavior. The mathematical model of the individual position is as follows:

where the logarithmic spiral shape constant b is 1 and \(l \in [ - 1,1]\) is a random number. It is worth noting that \(q\) is a random number in [0, 1], and its value determines whether the whale population surrounds the prey or spirally attacks the prey. Each behavior has a 50% probability.
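The spiral movement can be sketched in the same way. The code below assumes the usual logarithmic-spiral form of WOA, \(D' e^{bl} \cos (2\pi l) + X_{\text{best}}\) with \(D' = |X_{\text{best}} - X|\); the function name `spiral` is hypothetical.

```python
import math
import random


def spiral(x, x_best, b=1.0, rng=random):
    """Spiral toward x_best along a logarithmic spiral (assumed standard form)."""
    l = rng.uniform(-1.0, 1.0)  # random number in [-1, 1]
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    # |x_best - x| is the per-dimension distance between the whale and the prey
    return [abs(bj - xj) * factor + bj for xj, bj in zip(x, x_best)]
```

A whale that already sits on `x_best` stays there, because the distance term is zero.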

3.4 The activity of searching for prey

The search for prey is divided into two categories. One is the surrounding behavior of Formula (1), in which the whale population moves closer to \(\overrightarrow {{X_{{{\text{best}}}} }} (t)\). The other is to select the location of a random whale and make the other whales update their locations relative to it. The detailed model is as follows:

where \(\overrightarrow {X}_{{{\text{rand}}}} (t)\) represents the location of a randomly selected whale. The other whales are guided by \(\overrightarrow {X}_{{{\text{rand}}}} (t)\) rather than \(\overrightarrow {{X_{{{\text{best}}}} }} (t)\), which increases the diversity of the population and improves the global search capability. Which update the whale population performs depends on \(\left| A \right|\): when \(\left| A \right| < 1\), the whale population performs Formula (1), and when \(\left| A \right| \ge 1\), the whale population performs Formula (6). As the number of iterations increases, \(\vec{a}\) decreases linearly. Once \(\vec{a}\) falls below 1, the whale population only moves toward \(\overrightarrow {{X_{{{\text{best}}}} }} (t)\).

The pseudo-code of the WOA algorithm is as follows, and the flowchart of the WOA algorithm is shown in Fig. 1.
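As a rough Python counterpart to the WOA procedure described in this section, the sketch below combines the three behaviors into one loop. It is an illustrative reading of the standard algorithm rather than the authors' implementation: the names `woa` and `sphere`, the clamping of positions to the bounds, the use of one scalar \(A\) and \(C\) per whale, and updating the best whale immediately are all assumptions.

```python
import math
import random


def sphere(x):
    """Simple unimodal test function used only for illustration."""
    return sum(v * v for v in x)


def woa(f, dim, lo, hi, n=30, t_max=200, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    best = min(pop, key=f)[:]
    best_fit = f(best)
    for t in range(t_max):
        a = 2.0 - 2.0 * t / t_max  # linear convergence factor, 2 -> 0
        for i in range(n):
            x = pop[i]
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                # |A| < 1: surround the best whale; otherwise follow a random whale
                target = best if abs(A) < 1.0 else pop[rng.randrange(n)]
                new_x = [tj - A * abs(C * tj - xj) for xj, tj in zip(x, target)]
            else:
                # spiral attack around the best whale (shape constant b = 1)
                l = rng.uniform(-1.0, 1.0)
                s = math.exp(l) * math.cos(2.0 * math.pi * l)
                new_x = [abs(bj - xj) * s + bj for xj, bj in zip(x, best)]
            pop[i] = [min(max(v, lo), hi) for v in new_x]  # keep inside bounds
            fit = f(pop[i])
            if fit < best_fit:
                best, best_fit = pop[i][:], fit
    return best, best_fit
```

A call such as `woa(sphere, 5, -10.0, 10.0)` returns the best position found and its fitness; the best fitness can only improve over the run because the best whale is replaced only by a strictly better one.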

3.5 Artificial bee colony algorithm

In 2005, Karaboga proposed a new population intelligence algorithm, the artificial bee colony algorithm, by observing the honey-collecting behavior of bee colonies (Xue et al. 2018). The principle of the algorithm comes from the cooperation of the bees. The bees have different identities and perform different behaviors. They move collectively by exchanging information within the colony and finally find the location of the largest honey source (the optimal solution to the problem).

The artificial bee colony algorithm divides the bee colony into three identities: employed bees, onlooker bees, and scout bees. Each bee knows the honey source at its current location, which represents a possible solution to the optimization problem. The employed bees make up 50% of the population, and the rest are onlooker bees. The employed bees look for food near their food source from memory and share food-related information with the onlooker bees. The onlooker bees identify the best food source by comparison and look for food around it. At this point, some employed bees are converted into scout bees to find new food sources. After repeated updates, the final result is obtained. The specific implementation process is as follows.

Suppose that a possible solution of the high-dimensional complex problem is \(X_{i} = \{ x_{i1} ,x_{i2} ,...,x_{i\dim } \}\). First, the whole population is initialized. The specific mathematical expression is as follows:

where dim is the number of dimensions and i indexes the individuals in the population. \(r_{1}\) is a random number in [0, 1]. \(x_{\max }^{j}\) and \(x_{\min }^{j}\) are the upper and lower boundaries of the jth dimension, respectively.
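The initialization step can be illustrated with a short Python sketch. It assumes the usual ABC form \(x_{ij} = x_{\min }^{j} + r_{1} (x_{\max }^{j} - x_{\min }^{j} )\), which is consistent with the symbols defined above; the function name `init_population` is hypothetical.

```python
import random


def init_population(n, x_min, x_max, rng=random):
    """Create n honey sources, each drawn uniformly inside the per-dimension bounds."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(x_min, x_max)]
        for _ in range(n)
    ]
```

For example, `init_population(30, [-5.0] * 30, [5.0] * 30)` would create 30 candidate solutions in 30 dimensions.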

Secondly, the employed bees establish a new food source near their respective locations. The specific location update is as follows:

where \(x_{ij}\) is the jth dimension of the position of the ith honey source at the current moment and \(x_{kj}\) is the jth dimension of another, randomly selected (non-ith) honey source. \(r_{2}\) is a random number in [− 1, 1], and \(x_{ij}^{ * }\) represents the position of a new honey source. The original possible solution \(X_{i} = \{ x_{i1} ,x_{i2} ,...,x_{i\dim } \}\) is compared with the new possible solution \(X_{i}^{ * } = \{ x_{i1}^{ * } ,x_{i2}^{ * } ,...,x_{i\dim }^{ * } \}\). If the fitness value of the new possible solution \({\text{F}}(X_{i}^{ * } )\) is better than that of the original possible solution \({\text{F}}(X_{i} )\), the original honey source is replaced by the new honey source; otherwise, the original honey source is retained.
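A minimal sketch of this employed-bee step, assuming the standard ABC neighborhood update \(x_{ij}^{ * } = x_{ij} + r_{2} (x_{ij} - x_{kj} )\) on one randomly chosen dimension and a minimization problem, might look as follows. The function name `employed_bee_step` is hypothetical.

```python
import random


def employed_bee_step(pop, i, f, rng=random):
    """Perturb one random dimension of source i and keep the better of old and new."""
    n, dim = len(pop), len(pop[i])
    j = rng.randrange(dim)                           # dimension to perturb
    k = rng.choice([m for m in range(n) if m != i])  # another (non-ith) source
    r2 = rng.uniform(-1.0, 1.0)
    candidate = pop[i][:]
    candidate[j] = pop[i][j] + r2 * (pop[i][j] - pop[k][j])
    # greedy replacement: lower fitness is treated as better (assumption)
    return candidate if f(candidate) < f(pop[i]) else pop[i]
```

Because of the greedy comparison, the returned honey source is never worse than the one it started from.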

Thirdly, after the employed bees have searched, they pass their results to the onlooker bees. Each onlooker bee selects a honey source to follow according to a probability. The specific probability formula is as follows:

\(p_{i}\) is the probability of following the ith honey source and \(F_{i}\) represents the fitness value of \(X_{i}\). According to the probability value \(p_{i}\), the onlooker bees select the corresponding food source and perform a position update according to Formula (11). If \(X_{i}\) has not yielded a better honey source by the time its counter reaches the threshold Limit, the bee associated with it becomes a scout bee and looks for a new honey source. The mathematical model for its location update is Formula (10).
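The onlooker selection can be illustrated with a roulette-wheel sketch. It assumes the common ABC choice \(p_{i} = F_{i} /\sum\nolimits_{n} {F_{n} }\), where a larger, non-negative \(F_{i}\) marks a better source; the formula actually used in the paper may differ, and the function names are hypothetical.

```python
import random


def selection_probabilities(fitness):
    """Probability of following each source, proportional to its fitness value."""
    total = sum(fitness)
    return [fv / total for fv in fitness]


def roulette_pick(probs, rng=random):
    """Pick an index with probability probs[i] (roulette-wheel selection)."""
    r, acc = rng.random(), 0.0
    for i, p in enumerate(probs):
        acc += p
        if r < acc:
            return i
    return len(probs) - 1  # guard against floating-point round-off
```

With fitness values `[1.0, 3.0]`, the second source is followed three times as often as the first.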

3.6 Chaos map

Most population algorithms use a Gaussian or uniform distribution to initialize individuals, but the initial population produced in this way is not sensitive. Chaos theory makes the initial conditions more sensitive and produces a more varied range of values (Sayed et al. 2018). Therefore, many experts and scholars construct different chaotic maps to replace random initialization. It has been confirmed (Sayed et al. 2018; Gupta and Deep 2019; Li et al. 2018e) that chaotic maps yield better results in most population intelligence algorithms. Five common chaotic maps are detailed in Table 1. Different population initialization values can be obtained by choosing different mapping functions.

4 ACWOA

This section describes the proposed ACWOA algorithm in detail, which integrates the artificial bee colony algorithm and chaotic map to improve the global search ability and adds nonlinear convergence factor and adaptive weight coefficient to improve its convergence rate.

4.1 Adding chaotic mapping

The quality of the initial population directly affects the performance of the entire algorithm. Therefore, how to obtain a good initial population has been discussed by many scholars and experts. It is common to use chaotic map initialization instead of uniform random initialization (Cai et al. Aug 2019; Sayed et al. Jul 2018; Li et al. 2018e), and the reported experimental results are good. In this paper, the Logistic chaotic map is selected to initialize the population (Gandomi et al. Feb 2013).
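To illustrate chaotic initialization, the following sketch uses the well-known Logistic map \(x_{k + 1} = \mu x_{k} (1 - x_{k} )\) with \(\mu = 4\) and scales each value into the search bounds. The seed value `x0`, the choice \(\mu = 4\), and the function name `logistic_init` are assumptions; the exact settings used in the paper are not stated in this section.

```python
def logistic_init(n, dim, lo, hi, x0=0.7, mu=4.0):
    """Fill an n x dim population with a Logistic-map sequence scaled to [lo, hi]."""
    pop, x = [], x0
    for _ in range(n):
        individual = []
        for _ in range(dim):
            x = mu * x * (1.0 - x)  # chaotic sequence, stays in [0, 1]
            individual.append(lo + x * (hi - lo))
        pop.append(individual)
    return pop
```

The seed `x0` should avoid values such as 0, 0.25, 0.5, 0.75, and 1, for which the sequence collapses to a fixed point.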

4.2 Inserting artificial bee colony algorithm

When improving population intelligence algorithms, many experts and scholars raise performance by hybridizing with other algorithms. The ultimate goal is to improve the diversity and convergence rate of the algorithm. For example, the PSO algorithm is added to the WOA algorithm (Ding et al. 2018d). A fusion of PSO and biogeography-based optimization is applied in a novel computer-aided diagnosis (Zhang et al. 2015). A hybrid of the GWO algorithm and cellular automata is applied in urban growth simulation (Cao et al. Aug 2019). Experimental results show that the hybrid algorithms improve both local and global search capabilities. Inspired by this, this paper adds the artificial bee colony algorithm to the traditional WOA algorithm to improve the exploration ability and avoid falling into local optima.

In this paper, the whales are given the honey-collecting behavior of bees. A randomly chosen dimension of each individual whale is updated according to Formula (10), the fitness value \({\text{F}}(X_{i}^{ * } )\) of the resulting position is computed, and a greedy strategy is then applied. The position produced by the whale movement, the position produced by the honey-collecting movement, and the position of the whale at the previous moment are compared through the fitness function; the candidate with the best fitness value is retained, and the other two are eliminated. The model is as follows:

where \(\overrightarrow {{X_{i}^{ * } }} (t + 1)\) indicates the position produced by the bee movement at the next moment. \(\overrightarrow {{X_{i} }} (t)\) and \(\overrightarrow {{X_{i} }} (t{ + }1)\), respectively, represent the current position of the ith whale and the position produced by the whale movement at the next moment. \(F( \cdot )\) represents the corresponding fitness function. Next, we set a counter Li (i = 1, 2, …, N) and a threshold Limit. When the position of an individual changes, that is, when the greedy selection keeps the whale-movement or the bee-movement candidate (\(\overrightarrow {{X_{i} }} (t{ + }1) = \overrightarrow {{{\text{X}}_{i} }} (t + 1)\) or \(\overrightarrow {{X_{i} }} (t{ + }1) = \overrightarrow {{X_{i}^{ * } }} (t + 1)\)), Li = 0. Otherwise, Li = Li + 1. If Li = Limit, the individual has not changed position for a long time. At this point, the individual is converted into a “detection whale.” The “detection whale” updates its location according to Formula (10).
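The greedy three-way selection and the stagnation counter described above can be sketched as follows. The sketch assumes a minimization problem; the function names `greedy_select` and `update_counter` are hypothetical, and using `>=` rather than `=` for the threshold test is a defensive choice, not something stated in the paper.

```python
def greedy_select(x_prev, x_whale, x_bee, f):
    """Return the best of the three candidates and whether the position changed."""
    # min() keeps the earliest candidate on ties, so x_prev wins a tie
    best = min((x_prev, x_whale, x_bee), key=f)
    return best, best is not x_prev


def update_counter(L_i, changed, limit):
    """Reset the counter on a change; otherwise increment it.

    The second return value is True when the individual should become
    a "detection whale".
    """
    L_i = 0 if changed else L_i + 1
    return L_i, L_i >= limit
```

With Limit = 20, a whale whose position has survived 20 consecutive greedy comparisons unchanged is flagged for re-initialization as a detection whale.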

4.3 Nonlinear convergence factor

The most important parameter in the original WOA algorithm is \(A\), which directly determines the convergence speed and accuracy of the algorithm. As Formula (2) shows, \(A\) is mainly determined by \(a\). As the number of iterations increases, \(a\) decreases gradually, which strengthens the local exploitation ability of the algorithm and accelerates convergence but also increases the possibility of falling into a local optimum. Conversely, at the initial stage of the iteration the global exploration ability is strong but the convergence speed is slow. To improve the convergence rate in the early stage, it is necessary to speed up the decrement of \(a\). Moreover, the search environment is complex and variable, and a linear decrement cannot fully reflect the nonlinear search process. Therefore, a new type of nonlinear convergence factor is proposed here. The specific model is as follows:

Therefore, the motion update models of Formulas (1) and (8) are changed to Formulas (15) and (16), respectively:

4.4 Adaptive weight coefficient

Previous studies (Eberhart and Shi 2000; Fan and Chiu 2007) show that the weight coefficient directly affects the search ability of the algorithm. Therefore, improving algorithms by inserting an inertia weight coefficient is also a hot topic. A common feature of population intelligence algorithms is that the local exploitation ability keeps increasing as the number of iterations increases. In the ideal final state, the whole population lies in the vicinity of the optimal value, which is the position of the optimal individual. To reflect that the position of the optimal individual becomes more and more important as the number of iterations increases, an adaptive weight coefficient is introduced. The following is the specific expression of the adaptive coefficient proposed in this paper.

Therefore, Formula (6) of the traditional WOA algorithm is updated to Formula (18).

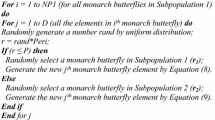

The pseudo-code of the ACWOA algorithm is as follows, and the flowchart of the ACWOA algorithm is shown in Fig. 2.

4.5 Experimental results and analysis of benchmark functions

To test the performance of the proposed ACWOA algorithm, this paper uses 20 standard benchmark functions and the CEC2019 multimodal multi-objective benchmark functions for experimental evaluation. The simulation experiments are run on a Windows 10 (64-bit) operating system with an Intel(R) Core(TM) i7-8750 CPU at 2.20 GHz and 16 GB of memory, using MATLAB 2014b. The traditional WOA algorithm, three advanced improved WOA algorithms (CWOA, HWPSO, and HIWOA), and three classical population intelligence algorithms (PSO, MVO, and GWO) are selected for performance comparison.

4.6 Set up the experiment and benchmark function

To keep the experiment fair, the number of solutions \(N\) is set to 30 and the dimension dim is 30. (For some test functions, the dimension is fixed.) The maximum number of iterations \(t_{\max }\) is 1000, and each algorithm is run separately on each function. The maximum number of fitness function evaluations \(T_{\max }\) is 10000, and the number of evaluations is counted in each run. After many simulation experiments, we set the threshold Limit = 20. The number of runs is \(N_{r}\) = 20, and the standard deviation, average value, and optimal value are computed over these runs. Most of the parameters in this paper are based on the literature (Mirjalili and Lewis 2016). Other parameter values are obtained from experimental experience. The algorithms HIWOA, HWPSO, CWOA, WOA, PSO, MVO, and GWO are described in detail in the literature (Kennedy and Eberhart 2002; Mirjalili et al. 2014, 2015; Mirjalili and Lewis 2016; Gandomi et al. 2013; Laskar et al. 2019; Tang et al. 2019).

Tables 2, 3, and 4 provide a detailed description of the 20 standard test functions used in this experiment. These functions are roughly divided into three categories: single-peak functions (which have one and only one global optimal solution), variable-dimensional multi-peak functions (which have two or more optimal solutions when the default dimension dim is 30), and fixed dimension multimodal functions (which have two or more global optimal solutions for a given dimension). F1–F7 are the single-peak functions, F8–F12 are the variable-dimensional multi-peak functions, and F13–F20 are the fixed dimension multimodal functions.

To further verify the effectiveness of the ACWOA algorithm, we also add CEC2019 multimodal multi-objective benchmark functions. These functions are described in Table 5.

4.7 Evaluation measures

The metrics used to evaluate the performance of each algorithm are the average, standard deviation, and optimal value of the fitness values; the specific descriptions are given in Table 6.
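As a small illustration of these three metrics, the sketch below summarizes the final fitness values of repeated runs of one algorithm on one function. It assumes a minimization problem and uses the population standard deviation; whether the paper uses the population or the sample form is not stated here, and the function name `summarize` is hypothetical.

```python
import statistics


def summarize(run_results):
    """Average, standard deviation, and best (lowest) fitness over independent runs."""
    return {
        "mean": statistics.fmean(run_results),
        "std": statistics.pstdev(run_results),  # population form (assumption)
        "best": min(run_results),
    }
```

For each algorithm and test function, `run_results` would hold one final fitness value per independent run.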

4.8 Analysis of experimental results

This section compares the proposed ACWOA algorithm with the other seven optimization algorithms described above. Tables 7, 8, 9, 10, 11, and 12 provide the average values, the best values, and the standard deviations of the fitness values of the respective algorithms.

4.9 Average fitness value

Tables 7 and 8 show the average values of the respective algorithms on the 30 test functions.

On the single-peak functions, the proposed ACWOA has the best performance on F1, F2, F3, F4, and F7. The comparison algorithm HWPSO performs well on F4 and F5.

On the variable-dimensional multi-peak functions, ACWOA has the best performance on F8, F9, F10, and F12, ranking second on F11. The WOA and ACWOA perform best on F8 and F10. The GWO has the best performance on F10. The PSO performs well on F11. HIWOA has the best performance on F8 and F10.

On the fixed dimension multimodal functions, all algorithms have the best performance on F15, F16, and F17. The ACWOA achieves the best performance on F13, F14 and F19, ranking third on F18 and F20. The WOA performs best on F18. The GWO performs best on F18 and F20. The MVO and HIWOA both achieve the best solution on F13.

On the CEC2019 test functions, the ACWOA has the best performance on F21, F22, F23, F25, F26, and F28, ranking second on F27 and F30 and ranking third on F24 and F29. GWO, PSO, MVO, and HIWOA perform well on functions F24, F30, F27, and F21, respectively.

4.10 Best fitness value

Tables 9 and 10 provide the best values of the respective algorithms on the 30 test functions.

On the single-peak functions, the proposed ACWOA has the best performance on F1, F2, F3, F4, F5, and F7, ranking third on F6. The comparison algorithms HWPSO and HIWOA perform well on F6 and F4, respectively.

On the variable-dimensional multi-peak functions, ACWOA has the best performance on F8, F9, F10, and F12, ranking third on F11. However, PSO achieves the best performance on F11. WOA, CWOA, HWPSO, GWO, and HIWOA perform well on functions F8 and F10.

On the fixed dimension multimodal functions, all algorithms have the best performance on F13 and F15–F20. The ACWOA has the best performance on F14.

On the CEC2019 test functions, the ACWOA has the best performance on F21, F22, F23, F24, F25, and F26, ranking second on F27 and F30 and ranking third on F29. GWO performs well on functions F21, F28, and F29. PSO has the best performance on F26 and F30. MVO and HIWOA achieve the best performance on F27 and F21, respectively.

4.11 Standard deviation

To test the stability of the algorithm, the standard deviation is used to measure the performance of the algorithm on the 30 test functions, as shown in Tables 11 and 12.

On the single-peak functions, the proposed ACWOA has the best performance on F1, F2, F3, F5, and F7, ranking second on F4 and F6. The comparison algorithm HIWOA performs well on F1 and F4. HWPSO has the best performance on F6.

On the variable-dimensional multi-peak functions, ACWOA has the best performance on F8, F9, F10, and F12, ranking second on F11. However, the PSO achieves the best performance on F11. WOA, CWOA, and HIWOA perform well on functions F8 and F10. GWO performs well on function F10.

On the fixed dimension multimodal functions, ACWOA ranks second on F20 and third on F17, and has the best performance on the other functions. The comparison algorithm HWPSO performs well on F15 and F16. PSO achieves the best performance on F15, F16, and F17. GWO performs well on function F20.

On the CEC2019 test functions, the ACWOA has the best performance on F21, F26, and F28, ranking second on functions F22, F24, F29, and F30. GWO achieves the best performance on F26, F27, and F29. MVO has the best performance on F24 and F30. CWOA and HIWOA achieve the best performance on F23 and F22, respectively.

4.12 Convergence comparison

To compare the convergence speed of the various algorithms, convergence curves are given for one single-peak function (F7), one variable-dimensional multi-peak function (F9), one fixed dimension multimodal function (F14), and one CEC2019 test function (F21). As shown in Fig. 3, the fitness value of the ACWOA algorithm decreases rapidly as the number of iterations increases on all four functions, indicating that convergence is significantly accelerated. The curves also suggest that the ACWOA algorithm is able to jump out of local optima. On F7, F9, F14, and F21, the ACWOA algorithm converges strongly and reaches very good results.

4.13 Statistical comparison

To further compare the performance of each algorithm, we use the Mann–Whitney U test and Friedman’s test. The Mann–Whitney U test results of ACWOA against the other algorithms are given in Table 13, where the marks (+/≈/−) denote a “win,” a “tie,” and a “loss” for ACWOA, respectively. Compared with the WOA algorithm, ACWOA has better performance on 26 functions and is “tied” on 4 functions. Compared with the HIWOA algorithm, the proposed algorithm “wins” on 23 functions, is “tied” on 5 functions, and has no “loss.” Compared with CWOA, HWPSO, GWO, PSO, and MVO, ACWOA “wins” on 24, 24, 23, 24, and 25 functions, respectively.
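The pairwise comparison can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the procedure used to produce Table 13: it uses the normal approximation without tie correction, a significance level of 0.05, and the median to decide the direction of a significant difference. The function names are hypothetical.

```python
import math
import statistics

def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_u(a, b):
    """Two-sided Mann-Whitney U test (normal approximation, no tie correction)."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p value
    return u, p

def mark(proposed, other, alpha=0.05):
    """'+' if proposed is significantly better (lower), '-' if worse, else '≈'."""
    _, p = mann_whitney_u(proposed, other)
    if p >= alpha:
        return "≈"
    return "+" if statistics.median(proposed) < statistics.median(other) else "-"

# Hypothetical per-run results on one minimization problem.
print(mark([1, 2, 3, 4, 5], [6, 7, 8, 9, 10]))
```

Counting the marks over all test functions gives the win/tie/loss totals of the kind quoted above.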

To obtain an overall ranking, we apply Friedman’s test to all eight algorithms. The results in Table 14 show that ACWOA has the best Friedman rank. Meanwhile, the p value is 1.50e-05, which indicates that the performance differences among the algorithms are statistically significant.
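The Friedman ranking can be illustrated with a short sketch. It assumes a score matrix with one row per test function and one column per algorithm (lower is better); the matrix below is hypothetical and does not reproduce Table 14. The statistic is computed without tie correction, and the p value, which would come from a chi-square distribution with k − 1 degrees of freedom, is omitted.

```python
def rank_row(row):
    """1-based ranks for one row; tied values share the average rank."""
    sorted_vals = sorted(row)
    ranks = []
    for v in row:
        first = sorted_vals.index(v)            # 0-based position of first occurrence
        count = sorted_vals.count(v)            # number of tied entries
        ranks.append(first + (count + 1) / 2)   # average of positions first+1..first+count
    return ranks

def friedman(scores):
    """Return (mean rank per algorithm, Friedman chi-square statistic)."""
    n = len(scores)       # number of test functions (blocks)
    k = len(scores[0])    # number of algorithms (treatments)
    rank_sums = [0.0] * k
    for row in scores:
        for j, r in enumerate(rank_row(row)):
            rank_sums[j] += r
    mean_ranks = [s / n for s in rank_sums]
    chi2 = 12.0 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in mean_ranks)
    return mean_ranks, chi2

# Hypothetical results: 4 functions (rows) x 3 algorithms (columns).
scores = [[1, 2, 3], [1, 3, 2], [1, 2, 3], [2, 1, 3]]
mean_ranks, chi2 = friedman(scores)
print(mean_ranks, chi2)
```

The algorithm with the smallest mean rank is the one reported as having the best Friedman rank.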

5 Application of ACWOA in a classical mathematical model

To further verify that the ACWOA algorithm has practical value in complex scenarios, the proposed algorithm, the classical intelligent population algorithms (PSO, MVO, and GWO), and the recent state-of-the-art algorithms (CWOA, HWPSO, and HIWOA) are applied to two well-known mathematical models (the spring design and the photovoltaic design) and a real application (the control process of a welding production line) (Chen et al. 2019). In this experiment, each algorithm is run 30 times independently with 1000 iterations per run, and the best solution is taken. Since these mathematical models have multiple constraints, each algorithm must find the combination of variables that satisfies these constraints while giving the optimal fitness value. According to the literature (Coello 2002), penalty functions are divided into static penalty, dynamic penalty, adaptive penalty, death penalty, etc. In this paper, the static penalty proposed by Hoffmeister and Sprave is used to compute the fitness value in the spring model, and the death penalty is used as the penalty function in the photovoltaic model. A detailed description of these penalty functions can be found in Coello (2002).
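The difference between the two penalty styles can be sketched as follows. This is a simplified reading, not the exact formulation used in the experiments: the static penalty is assumed to add the Euclidean norm of the constraint violations to the objective, constraints are assumed to be written as g(x) ≤ 0, and the toy objective and constraint are hypothetical.

```python
import math

def static_penalty(objective, constraints, x):
    """Objective plus the Euclidean norm of the constraint violations.

    Each constraint g is assumed to be satisfied when g(x) <= 0.
    """
    violations = [max(0.0, g(x)) for g in constraints]
    return objective(x) + math.sqrt(sum(v * v for v in violations))

def death_penalty(objective, constraints, x):
    """Reject any infeasible point outright by giving it infinite fitness."""
    if any(g(x) > 0 for g in constraints):
        return math.inf
    return objective(x)

# Toy minimization problem (illustrative only).
def sphere(x):
    return sum(v * v for v in x)

constraints = [lambda x: 1.0 - x[0]]  # feasible when x[0] >= 1

print(static_penalty(sphere, constraints, [0.5, 0.0]))
print(death_penalty(sphere, constraints, [0.5, 0.0]))
print(static_penalty(sphere, constraints, [2.0, 0.0]))
```

With a static penalty, an infeasible point still receives a finite, graded fitness, so the search can move through slightly infeasible regions. The death penalty discards such points entirely.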

5.1 Spring design problem

The spring design problem is a classic problem in mathematical models (Dhar 2017). It involves three design variables: wire diameter \(d\), average coil diameter \(D\), and the number of active coils \({\text{Num}}\). The goal is to find the combination of these variables that minimizes the mass of the spring. The relevant constraints are as follows:

We conduct 30 experiments on each algorithm. It can be seen from Table 9 that the proposed ACWOA algorithm has the best penalty function value compared with the other algorithms. With \(d\), \(D\), and \({\text{Num}}\) equal to 0.05231571, 0.37198223, and 10.4602112, respectively, ACWOA obtains the minimum value of this optimization task among the above algorithms. HIWOA, CWOA, and HWPSO rank 4th, 5th, and 6th, respectively, all ahead of the traditional WOA algorithm. The GWO algorithm performs well in this model, ranking second, whereas the MVO algorithm is not suitable for this model and gives the worst result. Therefore, the ACWOA algorithm can be a very effective auxiliary tool for solving the spring problem. Figure 4 shows the box plot of the experimental results of each algorithm on this model. The mean and median values of the ACWOA algorithm are 0.01304 and 0.01313, respectively, which is the best performance. The traditional WOA has the best stability, although its mean and median values are higher, at 0.01352 and 0.01361, respectively.
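As a rough sanity check on the reported design variables, the sketch below evaluates the spring mass in the form commonly used for this benchmark in the literature, \(({\text{Num}} + 2)Dd^{2}\). This form is an assumption here and may differ from the objective used in the paper; the constraints and the penalty term are deliberately left out, and the function name `spring_mass` is hypothetical.

```python
def spring_mass(d, D, num):
    """Spring mass in its commonly used benchmark form: (Num + 2) * D * d^2."""
    return (num + 2.0) * D * d ** 2

# Design variables reported for ACWOA in the text.
best = spring_mass(0.05231571, 0.37198223, 10.4602112)
print(f"{best:.6f}")
```

Under this assumed objective the value is roughly 0.0127, slightly below the mean of 0.01304 reported for ACWOA, as expected for the best run.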

5.2 Photovoltaic design problem

The photovoltaic design model is similar to the spring design model described above. The difference is that there are four dimensions in this model: shell depth \(d_{s}\), head depth \(d_{h}\), inner radius \(R\), and interface width \(L\). The goal is to find the best solution to minimize the total cost (Mirjalili and Lewis 2016). The detailed description and constraints are as follows:

We conduct 30 experiments on each algorithm. The results of the various algorithms in this optimization task are given in Table 10. ACWOA ranks first, ahead of the PSO algorithm, with a minimum of 5345.116, obtained with \(d_{s}\), \(d_{h}\), \(R\), and \(L\) equal to 1.2588, 0.6222, 65.2252, and 10.000, respectively. The recent state-of-the-art algorithms CWOA (6th), HWPSO (7th), and HIWOA (3rd) are better than WOA. The classical intelligent population algorithms PSO (2nd), MVO (4th), and GWO (5th) are effective in this model. Therefore, the ACWOA algorithm still has practical value in photovoltaic design. Figure 5 shows the box plot of the experimental results of each algorithm on this model. The mean and median of ACWOA are 5388.137 and 5398.5536, respectively. The mean and median of PSO are 5388.317 and 539.804, respectively. ACWOA is the best on average, but PSO is better than ACWOA on the median. The traditional WOA algorithm does not perform well in the photovoltaic design model.

5.3 The control process of a welding production line

We further evaluate the performance of the ACWOA algorithm in a practical application (the quality process control of automated welding of car bodies for Geely cars). At present, the traditional scheme uses a simple control chart to monitor the X, Y, and Z coordinates of the hole center. However, this scheme has some disadvantages. On the one hand, it does not effectively consider the economic situation. On the other hand, it can only control one-dimensional independent variables, resulting in poor performance. To obtain better performance, we design a multivariate Bayesian VSI control chart. Some of the economic parameters are calculated from empirical data, but the statistical parameters can only be obtained by using population intelligence algorithms. The quality control model is described as follows:

The economic parameters are obtained from extensive experiments: fixed cost (b = 4.9), variable sampling cost (c = 0.6), cost in the out-of-control state (M = 111), cost of search (A = 115.5), cost of additional repairs (R = 349.5), and \(\theta\) = 0.013. \(\pi_{0p} = 0.5\) and \(\pi_{1p} = 0.5\) are the state probability and the steady-state probability, respectively. \(T_{0} = 1\) and \(T_{1} = 2\) are the investigation time and the expected time, respectively. The variables to be solved are the control variable \(p_{Si}\) (i = 1, 2), the sample size (n), and the sampling periods (h1, h2). It is worth noting that \(p_{Ri} = 0.5\,p_{Si}\) (i = 1, 2) denotes the alarm limits. We conduct 30 experiments on each algorithm. ACWOA is compared with the other algorithms, and the results are shown in Fig. 6. The best fitness of each algorithm can be read from this figure: 18.457 (WOA), 19.15 (CWOA), 17.15 (HWPSO), 21.4144 (GWO), 17.115 (ACWOA), 27.123 (PSO), 19.175 (MVO), and 19.4096 (HIWOA). ACWOA has the best performance, with a mean of 19.171 and a median of 20.175. PSO falls into a local optimum in this model: although it has the best stability, it has the worst accuracy.

6 Summary and prospect

The main idea of this paper is to embed a chaotic map and the artificial bee colony algorithm into the WOA algorithm. The ability of the artificial bee colony algorithm to jump out of local optima is added to the WOA, which helps the algorithm avoid falling into a local optimum. Adding chaotic maps is also very effective for improving the quality of the initialized population. In addition, the nonlinear convergence factor and the adaptive weight value are added to the position update of the whale to improve the convergence speed of the algorithm. Finally, the proposed algorithm is compared with six classical and recent state-of-the-art algorithms on 30 standard benchmark functions, two classical mathematical models, and a practical application. It is evaluated by various metrics such as optimal value, mean value, standard deviation, convergence curve, and penalty function. The experimental results show that the ACWOA algorithm is competitive in optimization problems and ranks first among the compared algorithms on most benchmark functions. In the mathematical models and the practical application, ACWOA also performs well and has practical value. However, ACWOA has two shortcomings. One is that the threshold Limit is obtained experimentally and is not adaptive. In different practical problems, Limit will affect the detection ability of the population, so designing an adaptive Limit is a challenge. The other is the question of which mapping model to choose for initialization. We use the classic Logistic model. However, different mapping models construct different initializations, which will affect the final convergence result. In the future, the method can be further integrated with other effective and practical algorithms to obtain a better hybrid algorithm. Meanwhile, it can be applied to multiple practical engineering fields to solve optimization problems in complex environments.
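To make the initialization question concrete, the sketch below generates a population from a Logistic map sequence. It is a minimal illustration under assumed settings: the control parameter `mu = 4.0`, the seed `x0 = 0.7`, and the function name `logistic_population` are illustrative choices and are not taken from this paper.

```python
def logistic_population(pop_size, dim, lower, upper, x0=0.7, mu=4.0):
    """Generate pop_size points in [lower, upper]^dim from a Logistic map sequence.

    x0 should avoid fixed or eventually fixed points such as 0, 0.25, 0.5, 0.75, and 1.
    """
    population = []
    x = x0
    for _ in range(pop_size):
        individual = []
        for _ in range(dim):
            x = mu * x * (1.0 - x)  # Logistic map iteration, stays in [0, 1]
            individual.append(lower + x * (upper - lower))
        population.append(individual)
    return population

for individual in logistic_population(3, 2, -10.0, 10.0):
    print(individual)
```

Replacing the update line with another chaotic map changes the initial population, which is why the choice of mapping model can influence the final convergence result.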

References

Abd El Aziz M, Ewees AA, Hassanien AE (2017) Whale optimization algorithm and moth-flame optimization for multilevel thresholding image segmentation. Expert Syst Appl 83:242–256

Abdel-Basset M, Manogaran G, El-Shahat D, Mirjalili S (2018) A hybrid whale optimization algorithm based on local search strategy for the permutation flow shop scheduling problem. Future Gener Comput Syst Int J Esci 85:129–145

Cai ZH, Lou J, Zhao J, Wu K, Liu NJ, Wang YX (2019) Quadrotor trajectory tracking and obstacle avoidance by chaotic grey wolf optimization-based active disturbance rejection control. Mech Syst Signal Process 128:636–654

Cao M, Huang MX, Xu RQ, Lu GN, Chen M (2019) A grey wolf optimizer-cellular automata integrated model for urban growth simulation and optimization. Trans GIS 23:672–687

Chen H, Xu Y, Wang M, Zhao X (2019) A balanced whale optimization algorithm for constrained engineering design problems. Appl Math Model 71:45–59

Coello CAC (2002) Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: a survey of the state of the art. Comput Methods Appl Mech Eng 191:1245–1287

Dhar PL (2017) Introduction to optimum design. Ther Syst Des Simul 2017:385–407

Ding T, Chang L, Li CS, Feng C, Zhang N (2018d) A mixed-strategy-based whale optimization algorithm for parameter identification of hydraulic turbine governing systems with a delayed water hammer effect. Energies 11:2367

Dong XS, Dong WY, Call YL (2018) Ant colony optimisation for coloured travelling salesman problem by multi-task learning. IET Intel Transp Syst 12:774–782

Eberhart RC, Shi Y (2000) Comparing inertia weights and constriction factors in particle swarm optimization. IEEE, New York

Fan SKS, Chiu YY (2007) A decreasing inertia weight particle swarm optimizer. Eng Optim 39:203–228

Gandomi AH, Yun GJ, Yang XS, Talatahari S (2013) Chaos-enhanced accelerated particle swarm optimization. Commun Nonlinear Sci Numer Simul 18:327–340

Gharehchopogh FS, Gholizadeh H (2019) A comprehensive survey: Whale optimization algorithm and its applications. Swarm Evol Comput 48:1–24

Gharehchopogh FS, Farnad B, Alizadeh A (2021a) A farmland fertility algorithm for solving constrained engineering problems. Concurr Comput Pract Exp 33:17

Gharehchopogh FS, Maleki I, Dizaji ZA (2021) Chaotic vortex search algorithm: metaheuristic algorithm for feature selection. Evolut Intell 2021(4)

Goldanloo MJ, Gharehchopogh FS (2021) A hybrid OBL-based firefly algorithm with symbiotic organisms search algorithm for solving continuous optimization problems. J Supercomput 2021:1–34

Gupta S, Deep K (2019) An efficient grey wolf optimizer with opposition-based learning and chaotic local search for integer and mixed-integer optimization problems. Arab J Sci Eng 44:7277–7296

He Q, Hu XT, Ren H, Zhang HQ (2015) A novel artificial fish swarm algorithm for solving large-scale reliability-redundancy application problem. ISA Trans 59:105–113

Karaboga D (2010) Artificial bee colony algorithm. Scholarpedia 5:6915

Karlekar NP, Gomathi N (2018) OW-SVM: Ontology and whale optimization-based support vector machine for privacy-preserved medical data classification in cloud. Int J Commun Syst 31:e3700

Kennedy J, Eberhart R (2002) Particle swarm optimization. In: ICNN'95-international conference on neural networks

Khadanga RK, Padhy S, Panda S, Kumar A (2018b) Design and analysis of multi-stage PID controller for frequency control in an islanded micro-grid using a novel hybrid whale optimization-pattern search algorithm. Int J Numer Model Electron Netw Devices Fields 31:e2349

Laskar NM, Guha K, Chatterjee I, Chanda S, Baishnab KL, Paul PK (2019) HWPSO: a new hybrid whale-particle swarm optimization algorithm and its application in electronic design optimization problems. Appl Intell 49:265–291

Li MS, Zhang HJ, Liu L, Chen BS, Guan LX, Wu Y (2018e) A quantitative structure-property relationship model based on chaos-enhanced accelerated particle swarm optimization algorithm and back propagation artificial neural network. Appl Sci Basel 8:1121

Luo J, Shi B (2018) A hybrid whale optimization algorithm based on modified differential evolution for global optimization problems. Appl Intell 49:1982–2000

Mafarja MM, Mirjalili S (2017) Hybrid Whale Optimization Algorithm with simulated annealing for feature selection. Neurocomputing 260:302–312

Mafarja M, Mirjalili S (2018) Whale optimization approaches for wrapper feature selection. Appl Soft Comput 62:441–453

Mehne HH, Mirjalili S (2018) A parallel numerical method for solving optimal control problems based on whale optimization algorithm. Knowl Based Syst 151:114–123

Mirjalili S, Lewis A (2016) The Whale optimization algorithm. Adv Eng Softw 95:51–67

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey Wolf optimizer. Adv Eng Softw 69:46–61

Mirjalili S, Mirjalili SM, Hatamlou A (2015) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27:495–513

Mohammadzadeh H, Gharehchopogh FS (2021) A multi-agent system based for solving high-dimensional optimization problems: a case study on email spam detection. Int J Commun Syst 34(3):e4670

Mohammadzadeh H, Gharehchopogh FS (2021) A novel hybrid whale optimization algorithm with flower pollination algorithm for feature selection: case study email spam detection. Comput Intell 37:176–209

Mohapatra P, Das KN, Roy S (2017) A modified competitive swarm optimizer for large scale optimization problems. Appl Soft Comput 59:340–362

Niknam T, Amiri B (2010) An efficient hybrid approach based on PSO, ACO and k-means for cluster analysis. Appl Soft Comput 10:183–197

Puchta EDP, Bassetto P, Biuk LH, Itaborahy MA, Converti A, Kaster MD et al (2021) Swarm-inspired algorithms to optimize a nonlinear gaussian adaptive PID controller. Energies 2021:14

Rahnema N, Gharehchopogh FS (2020) An improved artificial bee colony algorithm based on whale optimization algorithm for data clustering. Multimed Tools Appl 79:32169–32194

Rajabioun R (2011) Cuckoo optimization algorithm. Appl Soft Comput J 11:5508–5518

Rashedi E, Nezamabadi-pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179:2232–2248

Sahu PR, Hota PK, Panda S (2018c) Modified whale optimization algorithm for fractional-order multi-input SSSC-based controller design. Optim Control Appl Methods 39:1802–1817

Sayed GI, Darwish A, Hassanien AE (2018) A New chaotic whale optimization algorithm for features selection. J Classif 35:300–344

Shao P, Yang L, Tan L, Li GQ, Peng H (2020) Enhancing artificial bee colony algorithm using refraction principle. Soft Comput 24:15291–15306

Singh RP, Dixit M, Silakari S (2015) Image contrast enhancement using GA and PSO: a survey. In: International conference on computational intelligence and communication networks

Sun YJ, Wang XL, Chen YH, Liu ZJ (2018) A modified whale optimization algorithm for large-scale global optimization problems. Expert Syst Appl 114:563–577

Sun WZ, Wang JS, Wei X (2018a) An improved whale optimization algorithm based on different searching paths and perceptual disturbance. Symmet Basel 10:210

Talbi H, Batouche M (2004) Hybrid particle swarm with differential evolution for multimodal image registration

Tang C, Sun W, Wu W, Xue M (2019) A hybrid improved whale optimization algorithm. In: IEEE 15th international conference on control and automation (ICCA), 2019. https://doi.org/10.1109/ICCA.2019.8900003

Xue Y, Jiang JM, Zhao BP, Ma TH (2018) A self-adaptive artificial bee colony algorithm based on global best for global optimization. Soft Comput 22:2935–2952

Yan ZH, Sha JX, Liu B, Tian W, Lu JP (2018) An ameliorative whale optimization algorithm for multi-objective optimal allocation of water resources in Handan, China. Water 10:87

Yang CH, Yang HS, Chuang LY (2019) PBMDR: a particle swarm optimization-based multifactor dimensionality reduction for the detection of multilocus interactions. J Theor Biol 461:68–75

Zaman HRR, Gharehchopogh FS (2021) An improved particle swarm optimization with backtracking search optimization algorithm for solving continuous optimization problems. Eng Comput. https://doi.org/10.1007/s00366-021-01431-6

Zhang YD, Wang SH, Dong ZC, Phillip P, Ji GL, Yang JQ (2015) Pathological brain detection in magnetic resonance imaging scanning by wavelet entropy and hybridization of biogeography-based optimization and particle swarm optimization. Prog Electromag Res Pier 152:41–58

Zhang K, Huang Q, Zhang Y (2019) Enhancing comprehensive learning particle swarm optimization with local optima topology. Inf Sci 471:1–18

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant U1813205, Independent Research Project of State Key Laboratory of Advanced Design and Manufacturing for Vehicle Body 71765003, and Hunan Key Laboratory of Intelligent Robot Technology in Electronic Manufacturing Open Foundation Grant 2017TP1011.

Funding

Funding was provided by Innovative Research Group Project of the National Natural Science Foundation of China (U1813205).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Tang, C., Sun, W., Xue, M. et al. A hybrid whale optimization algorithm with artificial bee colony. Soft Comput 26, 2075–2097 (2022). https://doi.org/10.1007/s00500-021-06623-2

DOI: https://doi.org/10.1007/s00500-021-06623-2