Abstract

This paper presents a novel metaheuristic algorithm, the life choice-based optimizer (LCBO), which is based on the typical decision-making ability of humans to attain their goals while learning from fellow members. LCBO is investigated on 29 popular benchmark functions, including six CEC-2005 functions, and its performance is benchmarked against seven optimization techniques, including recent ones. Further, different abilities of LCBO, such as exploitation, exploration and local minima avoidance, are also investigated and reported. In addition, scalability is tested on several benchmark functions with the dimension increased up to 200. Furthermore, two engineering optimization benchmark problems, namely pressure vessel design and cantilever beam design, are optimized using LCBO, and the results are compared with those of other recently reported algorithms. The comparative results of all the above experiments reveal the clear superiority of LCBO over the other metaheuristic optimization algorithms considered. Therefore, based on the presented investigations, it is concluded that LCBO is a promising optimizer for engineering problems.


1 Introduction

Optimization is the process of obtaining the optimum result for a given problem while satisfying certain constraints. Many requirements in fields such as science, mathematics, engineering and finance can be framed as optimization problems. Applications of optimization include training neural networks, tuning controllers and designing digital filters. Although many classical optimization algorithms exist, they are problem dependent and require gradient information to reach an optimum solution. Further, in some cases, classical methods fail to attain the global optimum because they get stuck in local optima, which makes them unsuitable for that particular problem.

The present fast-paced engineering world is the result of continuous improvements over several centuries, achieved in particular through inspiration from the numerous intelligent processes that exist in nature. Understanding and modelling such processes has led to the development of many optimization techniques, which have been a driving force in solving a large number of complex problems involving several variables.

Over the past few decades, metaheuristic algorithms have been introduced to solve many complex optimization problems, and they are gaining popularity for the following key reasons:

Simplicity Most metaheuristic algorithms are based on simple phenomena that can be represented and described by simple mathematical expressions and methods.

Independence from gradients Unlike traditional optimization methods such as gradient descent, metaheuristic algorithms do not use gradients. This is particularly helpful when the function under consideration has no gradient or its gradient is difficult to obtain.

Local optima avoidance Owing to their randomness and explicit exploration, metaheuristic algorithms have an inherent capability to avoid local optima.

Problem independence Most metaheuristic algorithms treat problems as black boxes and can therefore be applied as general-purpose algorithms.

Further, the two most desired features of an optimizer are exploitation and exploration, and its overall capability depends heavily on both. Exploitation refers to intensively searching promising regions of the search space for the global optimum, while exploration refers to searching for new promising regions. Improved exploitation leads to fast convergence to the optimal solution, and improved exploration helps avoid local optima. On the other hand, excessive exploitation leads to premature convergence to a local optimum before the solutions can reach the vicinity of the global optimum, and excessive exploration slows convergence towards the global optimum. Therefore, a good optimization algorithm must balance exploitation and exploration.

2 Related works

As mentioned above, understanding and modelling many natural processes and phenomena has led to several optimization techniques that have been very helpful in solving complex scientific problems. These algorithms can be broadly divided into the following four categories:

Evolutionary algorithms This category is based on evolutionary processes in nature. In this class of algorithms, a random population is first generated and the fitness of each member is calculated. A new generation is then evolved according to the rules of the particular evolutionary algorithm. Genetic algorithm (GA) (Holland 1992) is the most popular algorithm in this category; in GA, a new population is generated through crossover, cloning and mutation. Many other algorithms have been developed in this category, including Evolutionary Strategy (François 1998), Genetic Programming (Koza 1994), Population-Based Incremental Learning (Baluja 1994), Fast Evolutionary Programming (Yao and Liu 1996), Differential Evolution (Storn and Price 1997), Grammatical Evolution (Ryan and Collins 1998), Enhanced GA (Coello and Montes 2002), Gene Expression Programming (Ferreira 2006), Co-Evolutionary Differential Evolution (CEDE) (Huang et al. 2007), Biogeography-Based Optimizer (Simons 2008), Asexual Reproduction Optimization (Farasat et al. 2010), States of Matter (Cuevas et al. 2014), Adaptive Dimensional Search (Hasançebi and Azad 2015), Stochastic Fractal Search (SFS) (Salimi 2015) and Multi-Verse Optimizer (MVO) (Mirjalili et al. 2016).

Swarm-based optimization algorithms This class of algorithms is based on the social behaviour of animals that collectively form swarms, and the algorithms are inspired by how members of a swarm interact with each other to find food. Particle Swarm Optimization (PSO) (Kennedy and Eberhart 1995) is the most popular algorithm in this category. In PSO, each particle changes its position based on its personal best, the global best, its previous velocity and an inertia weight. Since then, several swarm-based algorithms have been proposed. Some examples are Ant Colony Optimization (Dorigo and Di Caro 1999), Marriage in Honey Bees Optimization Algorithm (Abbass 2002), Wasp Swarm Optimization (Pinto et al. 2005), Bees Algorithm (Pham et al. 2006), Cat Swarm Optimization (Chu et al. 2006), Co-Evolutionary Particle Swarm Optimization (CEPSO) (Krohling and dos Santos Coelho 2006), Glow-Worms Optimization (Krishnanand and Ghose 2006), Artificial Bee Colony (Karaboga and Basturk 2007), Monkey Search Algorithm (Zhao and Tang 2008), Bee Collecting Pollen (Lu and Zhou 2008), Dolphin Partner Optimization (Yang et al. 2009), Group Search Optimizer (He et al. 2009), Cuckoo Search Algorithm (CSA) (Yang 2009b), Termite Colony Optimization (Hedayatzadeh et al. 2010), Firefly Algorithm (Yang 2009a), Bat Algorithm (BA) (Yang 2010), Hunting Search (Oftadeh et al. 2010), Enhanced PSO (Gao and Hailu 2010), Krill Herd Algorithm (Gandomi and Alavi 2012), Migrating Birds Optimization (Duman et al. 2012), Fruit Fly Algorithm (Pan 2012), Flower Pollination Algorithm (Yang 2012), Enhanced CSA (Gandomi et al. 2013), Dolphin Echolocation Algorithm (Kaveh and Farhoudi 2013), Social Spider Optimization (Cuevas et al. 2013), Symbiotic Organisms Search (Cheng and Prayogo 2014), Grey Wolf Optimizer (Mirjalili et al. 2014), Bird Mating Optimizer (Askarzadeh 2014), Animal Migration Optimization (Li et al. 2014), Chicken Swarm Optimization (Meng et al. 2014), Firework Algorithm (Tan and Zhu 2015), Moth Flame Optimization (Mirjalili 2015a), Ant Lion Optimizer (Mirjalili 2015b), Elephant Herding Optimization (Wang et al. 2016a), Monarch Butterfly Optimization (Wang et al. 2016b), Dragonfly Algorithm (Mirjalili 2016a, b), Whale Optimization Algorithm (Mirjalili and Lewis 2016), Lion Optimization Algorithm (Yazdani and Jolai 2016), Spotted Hyena Optimizer (SHO) (Dhiman and Kumar 2017) and Salp Swarm Algorithm (Mirjalili et al. 2017).
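For readers unfamiliar with PSO, the update described above can be sketched as follows. This is a minimal sketch of the canonical inertia-weight velocity update; the function name `pso_step` and the coefficient defaults `w`, `c1` and `c2` are illustrative assumptions, not values used in this paper.

```python
import numpy as np


def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=None):
    """One inertia-weight PSO update for a whole swarm.

    x, v, pbest: arrays of shape (num_particles, dim); gbest: shape (dim,).
    w (inertia) and c1, c2 (acceleration coefficients) are illustrative defaults.
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)  # fresh uniform factors per particle and dimension
    r2 = rng.random(x.shape)
    # Previous velocity scaled by inertia, plus pulls towards personal and global bests
    v_new = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
    return x + v_new, v_new
```

After each step, a particle's personal best (and, if needed, the global best) is replaced whenever its new position has a lower cost.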

Physics-inspired optimization These methods draw inspiration from physical processes in nature. One of the oldest algorithms in this category is Simulated Annealing (SA) (Van Laarhoven and Aarts 1987). Annealing means heating a solid and then letting it cool slowly; SA models this process mathematically to solve optimization problems. Other examples of physics-based optimization algorithms are Harmony Search (Woo Geem et al. 2001), Big Bang–Big Crunch (Erol and Eksin 2006), Colonizing Weeds (Mehrabian and Lucas 2006), Gravitational Search Algorithm (Rashedi et al. 2009), Intelligent Water Drops (Hosseini 2009), Charged System Search (Kaveh and Talatahari 2010), Grenade Explosion Method (Ahrari and Atai 2010), Chemical-Reaction-Inspired Metaheuristic (Lam and Li 2010), Artificial Chemical Reaction Optimization Algorithm (Alatas 2011), Galaxy-Based Search Algorithm (Hosseini 2011), Curved Space Optimization (Moghaddam et al. 2012), Water Cycle Algorithm (Eskandar et al. 2012), Black Hole Algorithm (Hatamlou 2013), Mine Blast Algorithm (Sadollah et al. 2013), Colliding Bodies Optimization (Kaveh and Mahdavi 2014), Forest Optimization Algorithm (Ghaemi and Feizi-Derakhshi 2014), Optics Inspired Optimization (Husseinzadeh Kashan 2014), Ecogeographic-Based Optimization (Zheng et al. 2014), Ray Optimization Algorithm (Kaveh 2014b), Tree Seed Algorithm (Kiran 2015), Water Wave Optimization (Zheng 2015), Lightning Search Algorithm (Shareef et al. 2015), Ions Motion Algorithm (Hatamlou et al. 2015), Runner-Root Algorithm (Merrikh-Bayat 2015), Electromagnetic Field Optimization (Abedinpourshotorban et al. 2016), Water Evaporation Optimization (Kaveh and Bakhshpoori 2016), Vibrating Particles System (Kaveh and Ilchi Ghazaan 2017) and Thermal Exchange Optimization (Kaveh and Dadras 2017).
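The core of SA is the rule that decides whether to accept a worse solution at the current temperature. The sketch below shows the standard Metropolis acceptance rule together with a geometric cooling schedule; the function names and the geometric schedule are illustrative choices, since other cooling schedules are also used in practice.

```python
import math
import random


def metropolis_accept(delta, temperature, rng=random):
    """Accept a move that changes the cost by `delta` (minimization).

    Improving moves are always accepted; worsening moves are accepted with
    probability exp(-delta / temperature), which shrinks as the solid "cools".
    """
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)


def geometric_cooling(t0, alpha, k):
    """Temperature after k cooling steps, T_k = T0 * alpha**k (0 < alpha < 1)."""
    return t0 * alpha ** k
```

At high temperature almost any move is accepted (exploration); as the temperature falls, the search becomes increasingly greedy (exploitation).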

Human-based optimization This class draws inspiration from the behaviour and activities of humans. Humans are the most intelligent species on Earth, which makes their behaviour a good source of inspiration for optimization algorithms. One of the recent algorithms in this class is the Jaya algorithm (Venkata 2016), which is inspired by the human tendency to follow the best and avoid the worst. Other examples of human-based algorithms are Tabu Search (Glover 1989), Seeker-Based Optimization (Dai et al. 2006), Imperialist Competitive Algorithm (Atashpaz-Gargari and Lucas 2007), Teaching Learning-Based Optimization (Rao et al. 2007), Interior Search (Gandomi 2014), Soccer League Competition Algorithm (Moosavian and Kasaee Roodsari 2014), Exchange Market Algorithm (Ghorbani and Babaei 2014), Group Counselling Optimization Algorithm (Eita and Fahmy 2014), Tug of War Optimization (Kaveh and Zolghadr 2016), Most Valuable Player Algorithm (Bouchekara 2017) and Volleyball Premier League Algorithm (Moghdani and Salimifard 2018).

In addition to the above major classes, several algorithms are inspired by mathematical concepts such as geometry and algebra. The Method of Moving Asymptotes (Svanberg 1987), Nonlinear Integer and Discrete Programming (NIDP) (Sandgren 1990), Generalized Convex Approximation (Chickermane and Gea 1996) and Sine Cosine Algorithm (Mirjalili 2016b) are examples of such algorithms.

From the above survey, one can infer that different metaheuristic algorithms have been developed to target different problems. Therefore, in the interest of technical development, new algorithms continue to be needed and evaluated on particular problems in order to obtain better results than existing algorithms. The new algorithm introduced in this paper, the life choice-based optimizer (LCBO), belongs to the category of human-based algorithms. It is based on how a person makes decisions in life to attain his/her goal. Generally, a person makes decisions based on parameters that depend on his/her peers; this fact has been the key motivation of this work. Further, according to the no free lunch (NFL) theorem (Wolpert and Macready 1997), no algorithm performs best on all problems. Thus, although several optimization algorithms already exist, as listed above, there is always a need to develop better methods. Furthermore, such techniques need to be developed and tested on different scientific problems, as they save the time and effort of the scientific community and thereby make a significant contribution to the domain.

The paper is organized into six sections. Following the introduction and the survey of related work in Sects. 1 and 2, respectively, Sect. 3 presents the inspiration, mathematical formulation and representation of LCBO. Section 4 provides the details of the 29 benchmark functions used, which consist of unimodal, multimodal and six CEC-2005 composite functions (Liang et al. 2005; Suganthan et al. 2005). In Sect. 5, LCBO is tested on the benchmark functions, and the obtained results are compared with those of other recently reported popular algorithms. This section also includes the scalability and convergence tests for increased dimensions, as well as the investigation of LCBO on two engineering benchmark problems, namely pressure vessel design (PVD) and cantilever beam design (CBD), together with the comparative performance results. Finally, Sect. 6 draws conclusions and presents the future scope of research for LCBO. The mathematical descriptions of the investigated engineering problems are given in the “Appendix” section.

3 Life choice-based optimizer

This section presents the inspiration behind LCBO and its mathematical model, including all the underlying formulations and their expressions.

3.1 Inspiration

The LCBO algorithm is inspired by careful observation of the life cycle of a human being and his/her work ethic during active life, when a person is motivated and has several different aims and targets to achieve. Humans are arguably the most intelligent species and are far more strategic than other species. Humans have always taken inspiration from nature and thereby learnt new things; for example, certain yogasanas such as Gomukhasana (Cow-Face Pose) and Simhasana (Lion Pose) are practised worldwide for a healthy lifestyle. The ability to learn from fellow creatures and species has always been a crucial factor in helping humans become far superior to any other species. Humans have understood the significance of the food chain and the life cycle that nature has imposed on all species. Because humans realize the significance of each species and the role it plays in sustaining life, instead of driving other species to extinction they consider animals and plants as part of one big family and focus on mutual survival. Humans have also built restricted zones for animals, created wildlife reserves throughout the world and are highly resolute in protecting endangered species from extinction. They have tamed animals and adopted them as pets, again focusing on mutual survival. Thus, humans have the capability to understand things better than any other species, which is why much effort and investment have gone into creating machines that can think and act like humans, such as recently built humanoid robots. Recently, Sophia, a humanoid robot, became the first robot to receive citizenship of a country (Saudi Arabia) and was also named the United Nations Development Programme Innovation Champion, the first humanoid to hold a United Nations title (https://www.hansonrobotics.com/sophia/). Therefore, there is considerable scope to develop new technologies based on human behaviour, and the novel LCBO algorithm is accordingly inspired by the choices and thinking patterns of humans in accomplishing a target.

Inspiration from the Jaya optimization technique The proposed algorithm is also inspired by the recently established Jaya algorithm, which makes use of selective influence. In Jaya (Venkata 2016), only the best and worst search agents affect the current search agent, whereas in the proposed optimizer, Eq. 6, which is only one optional branch of the algorithm selected by random number generation, lets the best and the next-better search agents (explained later in Sect. 3.2.2) affect the current search agent, resulting in better exploitation.
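To make the contrast concrete, the Jaya update can be sketched as follows, using its commonly cited form \( X^{\prime} = X + r_{1} (X_{best} - |X|) - r_{2} (X_{worst} - |X|) \) with uniform random \( r_{1}, r_{2} \). The function name is illustrative; in Jaya, as in LCBO, the candidate replaces the current agent only if it is better.

```python
import numpy as np


def jaya_candidate(x, best, worst, rng=None):
    """Jaya candidate: move towards the best agent and away from the worst one."""
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(np.shape(x))  # uniform random factors in [0, 1)
    r2 = rng.random(np.shape(x))
    return x + r1 * (best - np.abs(x)) - r2 * (worst - np.abs(x))
```

Only two reference agents (best and worst) enter this update; LCBO's corresponding branch instead draws on the best agent and the agent ranked immediately above the current one.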

3.2 LCBO algorithm

LCBO can be completely described by three concepts, which are presented in the subsections below.

3.2.1 Learning from the common best group

Humans are always inspired by someone, whether a senior, a celebrity or a fellow peer. When a person has a target in sight, he/she studies how the best people in that field work in order to create a strategy to achieve it. He/she always tries to take something useful from the best in the field, derives a pattern or parameter by observing the superior person’s efficiency and works on it, so as to develop the skills needed to achieve the target or solve the problem under consideration. For a given population \( X \) sorted by fitness value (cost function), Eq. 1 represents the learning-from-the-best feature of the LCBO algorithm:

Here, in the summation, \( k \) varies from \( 1 \) to \( n \), where \( n \) is an algorithm parameter equal to the ceiling of the square root of the population size. \( X_{j} \) is the \( j{\text{th}} \), i.e. current, search agent being processed, and \( X_{j}^{{\prime }} \) is its candidate new position: \( X_{j} \) is updated to \( X_{j}^{{\prime }} \) only if \( X_{j}^{{\prime }} \) has better fitness than \( X_{j} \). Figure 1 depicts this feature of the algorithm. The search agent at the centre of the circles represents the current search agent. It is affected only by the positions of the common group of the \( n \) best search agents, and the level of influence of each is decided by random numbers, as shown in Fig. 1 by arrows of variable lengths.
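Because the body of Eq. 1 is not reproduced in this text, the sketch below only assumes a form consistent with the description: a randomly weighted average of the \( n \) best agents, \( X_{j}^{\prime} = \frac{1}{n}\sum_{k=1}^{n} r_{k} X_{k} \) with each \( r_{k} \) uniform in [0, 1), followed by keep-if-better replacement. The function names and the exact weighting are hypothetical.

```python
import math

import numpy as np


def learn_from_best_group(pop_sorted, rng=None):
    """Hypothetical Eq. 1 candidate from the n best agents.

    pop_sorted: (pop_size, dim) positions sorted best-first by fitness.
    Assumed form: X_j' = (1/n) * sum_{k=1..n} r_k * X_k, r_k ~ U[0, 1).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = math.ceil(math.sqrt(pop_sorted.shape[0]))  # n = ceil(sqrt(population size))
    weights = rng.random((n, 1))                   # one random influence per best agent
    return (weights * pop_sorted[:n]).sum(axis=0) / n


def greedy_accept(x, x_new, f):
    """Replace x by x_new only if x_new has a lower (better) cost."""
    return x_new if f(x_new) < f(x) else x
```

With the population of 30 used in Sect. 5, \( n = \lceil \sqrt{30} \rceil = 6 \) agents form the common best group.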

3.2.2 Knowing very next best

Everyone wants to achieve his/her targets, such as landing a dream job or buying a dream car, but accomplishing such large targets takes a lot of time and perseverance. Instead of focusing entirely on a massive target, one must be able to recognize one's current position and the nearest target in sight, and understand how to move from the current position to a better one. Therefore, one needs to focus both on the final destination, as discussed above, and on the very next destination in order to achieve future goals. This operation is implemented with the help of the following equations:

Here, \( f1 \) and \( f2 \) vary linearly from \( 0 \) to 1 and from 1 to 0, respectively, over the iterations; \( r1 \) is a constant equal to 2.35; \( X_{j - 1} \) is the position of the search agent whose fitness, as ranked up to the previous iteration, is immediately better than that of the current search agent; and \( X_{1} \) is the best search agent position achieved up to the previous iteration. \( X_{j} \) is updated to \( X_{j}^{{\prime }} \) only if \( X_{j}^{{\prime }} \) has better fitness than \( X_{j} \). As Fig. 2 shows, the current search agent is affected only by the search agent with the best fitness value and the agent with the immediately better fitness value, and the level of influence is determined by Eqs. 3–6.
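Since Eqs. 3–6 are not reproduced in this text, the following sketch assumes one plausible form consistent with the description: \( X_{j}^{\prime} = X_{j} + rand \cdot f1 \cdot r1 (X_{1} - X_{j}) + rand \cdot f2 \cdot r1 (X_{j-1} - X_{j}) \). Which of \( f1 \) and \( f2 \) weights which term is an assumption, as is the function name; the schedules and \( r1 = 2.35 \) follow the text.

```python
import numpy as np

R1 = 2.35  # constant r1 given in the text


def next_best_candidate(pop_sorted, j, it, max_it, rng=None):
    """Hypothetical 'knowing the very next best' candidate for agent j.

    pop_sorted: (pop_size, dim) positions ranked best-first in the previous
    iteration (0-based), so row 0 is X_1 and row j-1 is the next-better X_{j-1}.
    Assumed form: X_j' = X_j + rand*f1*r1*(X_1 - X_j) + rand*f2*r1*(X_{j-1} - X_j).
    """
    rng = np.random.default_rng() if rng is None else rng
    f1 = it / max_it  # rises linearly from 0 to 1
    f2 = 1.0 - f1     # falls linearly from 1 to 0
    x = pop_sorted[j]
    best = pop_sorted[0]
    better = pop_sorted[max(j - 1, 0)]  # the best agent has no better neighbour
    return (x
            + rng.random() * f1 * R1 * (best - x)
            + rng.random() * f2 * R1 * (better - x))
```

Under this assumed pairing, early iterations follow mainly the next-better agent and later iterations mainly the best one; because \( r1 > 1 \), a candidate can overshoot its reference agent, which keeps some exploration in the move.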

3.2.3 Reviewing mistakes

When humans are stuck or the technique they have been using to solve a problem is not working, they have the natural intelligence to review and analyse that technique and to try alternative methods. They are also capable of working in reverse to evaluate and approach the problem in a completely different manner. In the algorithm, this behaviour increases exploration by looking at the problem from a completely different perspective.

The technique described by Eq. 7 is named the Avi escape technique and is used as a generalized technique to increase the exploration of the algorithm. Here, \( Xmax \) and \( Xmin \) are the upper and lower bound values, respectively, and \( X_{j} \) is the current agent being evaluated. The technique is similar to GA (Holland 1992) and CSA (Yang and Deb 2009), in which new agents are created using the upper and lower bound values.
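A minimal sketch, assuming Eq. 7 reflects the current agent through the search bounds as \( X_{j}^{\prime} = Xmax - (X_{j} - Xmin) \cdot rand \), with an independent uniform number per dimension. This exact form and the function name are assumptions for illustration; the useful property it shows is that an agent inside the bounds is mapped to a new point that also lies inside them, typically on the "opposite side" of the search space.

```python
import numpy as np


def avi_escape(x, lb, ub, rng=None):
    """Hypothetical Avi-escape candidate: a randomized reflection through the bounds.

    Assumed form: X_j' = Xmax - (X_j - Xmin) * rand, one uniform number per dimension.
    For lb <= x <= ub the result lies in (lb, ub].
    """
    rng = np.random.default_rng() if rng is None else rng
    r = rng.random(np.shape(x))
    return ub - (x - lb) * r
```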

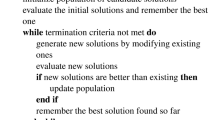

As with other optimization methods, LCBO starts from a given population size, lower and upper bounds and number of iterations. In the first iteration, the population is generated, the fitness of each agent is evaluated and the agents are sorted by fitness. The positions and fitness values of the agents are then updated iteratively until the target fitness is obtained or the maximum number of iterations is reached. For each agent update, only one of the three operations described above is executed, chosen according to the value of a random number. The pseudocode presented in the next section describes these operations in order.
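Putting the three operations together, the loop described above might look as follows. This is a sketch under stated assumptions, not the authors' code: the branch thresholds (0.875 and 0.7 on a uniform random number), the exact operator forms (Eqs. 1–7 are not reproduced here), the clipping to the bounds and the name `lcbo_sketch` are all illustrative choices. The function returns the best position, its cost and a best-so-far history that can be plotted as a convergence curve.

```python
import math

import numpy as np


def lcbo_sketch(cost, lb, ub, pop_size=30, max_it=1000, seed=None):
    """Illustrative LCBO-style loop; not the authors' reference implementation.

    Assumptions: branch thresholds 0.875 / 0.7, the operator forms below,
    clipping to the bounds, and 'best'/'next-better' agents taken from the
    ranking at the start of each iteration.
    """
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    dim = lb.size
    n = math.ceil(math.sqrt(pop_size))  # size of the common best group
    r1 = 2.35                           # constant given in Sect. 3.2.2

    pop = lb + rng.random((pop_size, dim)) * (ub - lb)
    fit = np.array([cost(x) for x in pop])
    history = []  # best cost after each iteration (convergence curve)

    for it in range(max_it):
        order = np.argsort(fit)          # rank agents best-first
        pop, fit = pop[order], fit[order]
        ref = pop.copy()                 # ranking produced by the previous iteration
        f1 = it / max_it                 # rises linearly from 0 to 1
        f2 = 1.0 - f1                    # falls linearly from 1 to 0
        for j in range(pop_size):
            u = rng.random()             # selects exactly one of the three operations
            if u > 0.875:
                # Learning from the common best group (assumed form of Eq. 1)
                w = rng.random((n, 1))
                cand = (w * ref[:n]).sum(axis=0) / n
            elif u < 0.7:
                # Knowing the very next best (assumed form of Eqs. 3-6)
                better = ref[max(j - 1, 0)]
                cand = (pop[j]
                        + rng.random() * f1 * r1 * (ref[0] - pop[j])
                        + rng.random() * f2 * r1 * (better - pop[j]))
            else:
                # Reviewing mistakes / Avi escape (assumed form of Eq. 7)
                cand = ub - (pop[j] - lb) * rng.random(dim)
            cand = np.clip(cand, lb, ub)
            c = cost(cand)
            if c < fit[j]:               # keep the candidate only if it is better
                pop[j], fit[j] = cand, c
        history.append(float(fit.min()))

    best = int(np.argmin(fit))
    return pop[best], float(fit[best]), history
```

For example, `lcbo_sketch(lambda z: float(np.sum(z ** 2)), -100 * np.ones(30), 100 * np.ones(30))` runs the sketch on a 30-dimensional sphere function with the population size (30) and iteration count (1000) used in Sect. 5. Because replacement is greedy, the recorded history never increases.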

3.3 Pseudocode

The following is the pseudocode of the LCBO.

4 Details of the used benchmark functions

Determining the optimization efficiency of an algorithm requires rigorous testing. The importance of exploration and exploitation has already been discussed in the Introduction, and the benchmark test functions used to check the overall performance of the algorithm have therefore been carefully chosen, as presented in the following subsections. For systematic evaluation of LCBO, the 29 chosen functions are divided into the following three groups.

4.1 Unimodal functions (functions 1 to 7)

Each of the chosen unimodal functions (1 to 7) has only a single optimum, which is therefore also its global minimum. These functions are used to test the exploitation ability of an algorithm: algorithms that optimize them well have strong exploitation ability. As there is only one minimum, the LCBO algorithm should be able to move quickly towards the global minimum. The details of these functions are presented in Table 1.
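Because a unimodal function has no local optimum other than the global one, even a purely exploitative search that only accepts improving moves should approach its minimum. The sketch below illustrates this with a simple random hill climber on the sphere function \( f(x) = \sum_{i} x_{i}^{2} \). Table 1 is not reproduced here, so the choice of the sphere function, the step size `sigma` and the function names are illustrative assumptions.

```python
import numpy as np


def sphere(x):
    """Unimodal sphere function; its only minimum is f(0) = 0."""
    return float(np.sum(np.asarray(x, dtype=float) ** 2))


def hill_climb(f, x0, steps=5000, sigma=1.0, seed=0):
    """Pure exploitation: perturb the current point and keep it only if better."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    fx = f(x)
    for _ in range(steps):
        cand = x + sigma * rng.standard_normal(x.shape)  # small Gaussian step
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx
```

On a multimodal function, the same climber would stop at whichever local minimum it reaches first, which is why exploration is needed there.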

4.2 Multimodal benchmark functions (functions 8 to 23)

These functions have many local optima and are therefore more difficult to solve than unimodal functions, because the search agents can get stuck in a local optimum and be unable to escape. Functions 8 to 13 are variable-dimension and functions 14 to 23 are fixed-dimension multimodal benchmark functions; their mathematical details are given in Tables 2 and 3. The difficulty of these functions increases with the size of the search area, the number of local optima and the number of dimensions. Since the ability to explore new search regions plays a vital role in optimizing these functions, they are well suited to assessing the exploration ability of an algorithm.
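The difference from unimodal functions can be made concrete by counting local minima along a one-dimensional slice. The sketch below uses the Rastrigin function \( f(x) = 10d + \sum_{i} (x_{i}^{2} - 10\cos(2\pi x_{i})) \) on \( [-5.12, 5.12] \), a standard multimodal benchmark; Tables 2 and 3 are not reproduced here, so this choice and the helper names are illustrative. Even in one dimension, the slice has 11 local minima (near the integers -5 to 5), only one of which is the global minimum at the origin.

```python
import numpy as np


def rastrigin(x):
    """Multimodal Rastrigin function over the last axis; global minimum f(0) = 0."""
    x = np.asarray(x, dtype=float)
    d = x.shape[-1]
    return 10.0 * d + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x), axis=-1)


def count_local_minima_1d(values):
    """Count interior grid points that are lower than both of their neighbours."""
    v = np.asarray(values)
    return int(np.sum((v[1:-1] < v[:-2]) & (v[1:-1] < v[2:])))


xs = np.linspace(-5.12, 5.12, 10241)   # grid spacing 0.001
f_slice = rastrigin(xs[:, None])       # evaluate each grid point as a 1-D input
n_minima = count_local_minima_1d(f_slice)
print(n_minima)  # 11
```

In \( d \) dimensions the number of such minima grows roughly as \( 11^{d} \), which illustrates why difficulty rises with dimension.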

4.3 CEC composite benchmark functions (functions 24 to 29)

These six composite benchmark functions are taken from CEC-2005. They are rotated and shifted variants of classical standard functions and are the most difficult of all the benchmark functions considered, with a large number of local optima that are very difficult to escape from. The functions are listed in Table 4; their detailed equations and expressions are available in the CEC-2005 technical reports (Liang et al. 2005; Suganthan et al. 2005).

5 Results and discussions

This section presents the experimental setup used to conduct the tests, the evaluation procedure and the results obtained on the benchmark functions. Subsection 5.1 describes the experimental setup, including the population size, number of iterations and system software. Subsection 5.2 compares the results of LCBO with those of other algorithms. Subsection 5.3 analyses the results of functions 1 to 13 at high dimension (up to 200) to assess the adaptability of LCBO to high-complexity problems. Subsection 5.4 analyses the convergence curves. Since solving engineering problems is an important test of any proposed optimization method, two important design benchmark problems, PVD and CBD, are investigated with LCBO in subsection 5.5.

5.1 Experimental setup

To investigate the optimization capability of LCBO, each of the functions described earlier was optimized 30 times independently, and the average fitness value over the 30 runs, along with the standard deviation, was recorded for each function or application. The technique offering the lowest average fitness and standard deviation is considered the winner. All investigations were carried out in MATLAB™ on 64-bit Windows 10 running on a 7th-generation Intel Core i5 processor (2.5 GHz) with 8 GB RAM.
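The reporting protocol above (30 independent runs, mean and standard deviation, lowest mean wins) can be sketched as follows. The optimizer interface `optimizer(seed) -> best_cost`, the use of the sample standard deviation and tie-breaking by standard deviation are assumptions; the text does not specify them.

```python
import numpy as np


def summarize_runs(optimizer, n_runs=30):
    """Run `optimizer(seed)` n_runs times with distinct seeds; return (mean, std).

    `optimizer` is any callable returning the best cost found in one run.
    The sample standard deviation (ddof=1) is an assumption.
    """
    costs = np.array([optimizer(seed) for seed in range(n_runs)], dtype=float)
    return float(costs.mean()), float(costs.std(ddof=1))


def rank_methods(summaries):
    """Order method names by mean cost, breaking ties by standard deviation."""
    return sorted(summaries, key=lambda name: summaries[name])
```

Here `summaries` maps each method name to its `(mean, std)` pair, so Python's tuple ordering compares means first and standard deviations second.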

5.2 Benchmark functions’ testing results

This section presents a comparative study of LCBO and other popular and recent algorithms, organized into three subsections (Sects. 5.2.1, 5.2.2 and 5.2.3) for a detailed and complete analysis. For the comparison, the results of seven previously reported optimization techniques, namely SHO, GWO, PSO, MFO, MVO, SCA and GA, on the 29 benchmark functions are used. Of these, SHO, GWO, MFO, MVO and SCA are the most recent, having been reported in 2017, 2014, 2015, 2016 and 2016, respectively. The results and parameters of these algorithms on the above benchmark functions were reported in the SHO paper (Dhiman and Kumar 2017), where the population size and number of iterations were 30 and 1000, respectively, for each algorithm. To ensure a fair comparison, the same population size and number of iterations were used for LCBO.

5.2.1 Functions 1–7 (unimodal)

Table 5 presents the average fitness values and standard deviations obtained for the unimodal functions. As mentioned above, the results of the seven other optimization methods as reported by Dhiman and Kumar (2017) are presented alongside those of LCBO. LCBO gives the best results for functions 1 to 5 and the second-best results for functions 6 and 7. Therefore, it is concluded that LCBO is superior to the seven other methods for optimizing unimodal benchmark functions.

5.2.2 Functions 8–23 (multimodal)

As with the unimodal functions, functions 8 to 23 were investigated for multimodal function optimization. The results in Table 6 show that LCBO is the clear winner for functions 8, 9, 10, 11, 13, 15, 18, 19, 20, 21 and 23, and is the second or third best for functions 12, 14, 16, 17 and 22. Therefore, LCBO can also be concluded to be superior to the seven other methods for optimizing multimodal benchmark functions.

5.2.3 Functions 24–29 (composite CEC benchmark functions)

To further test the capability of LCBO, the next experiment was the optimization of complex functions. For this purpose, six composite benchmark functions were taken from CEC 2005. These are rotated and shifted variants of classical standard functions and are therefore the most complex of all the benchmark functions. They also have multiple local optima from which it is usually difficult to escape. The results in Table 7 show that LCBO gives the best result for four of the six functions (24, 25, 26 and 28). This confirms LCBO’s ability to escape local minima and move towards the global minimum, and it reflects a good balance between its exploration and exploitation abilities.

5.3 Scalability test

The scalability test is an important component of evaluating any optimization technique, as it assesses the ability of the technique to handle high-dimensional test functions. The size of the search space grows exponentially with the number of dimensions, and solving a problem with a large number of unknown variables is always a challenge. In this test, functions 1 to 13, defined in Tables 1 and 2, were used with the dimension increased from 30 to 200. The population size and number of iterations were kept at 30 and 1000, respectively, and, as in the previous subsection, 30 independent trials were executed and the average and standard deviation were recorded. For comparison, the scalability results of seven techniques, namely ALO, PSO, SMS, BA, FPA, CSA and GA, as reported in Mirjalili (2015b), were used. Note that in that work the competing methods used a population size of 100 and 5000 iterations, against 30 and 1000 for LCBO, which puts LCBO at a considerable disadvantage. The results of the scalability test are presented in Table 8, which shows that LCBO gives the best result for all functions from 1 to 13 except function 6. This demonstrates the dominance of LCBO in dealing with high-dimensional functions and shows that it achieves better results with a significantly lower number of function evaluations. The performance of the other algorithms is considerably poorer than that of LCBO, which also highlights how difficult high-dimensional problems are.
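The size of this handicap can be quantified with a rough count of objective-function evaluations, assuming (as a simplification) one evaluation per agent per iteration and ignoring the initial population:

```latex
\begin{aligned}
\text{competing methods: } & 100 \times 5000 = 5 \times 10^{5} \text{ evaluations},\\
\text{LCBO: } & 30 \times 1000 = 3 \times 10^{4} \text{ evaluations},\\
\text{ratio: } & \frac{5 \times 10^{5}}{3 \times 10^{4}} = \frac{50}{3} \approx 16.7 .
\end{aligned}
```

Under this assumption, LCBO used roughly one-seventeenth of the evaluation budget of the competing methods.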

5.4 Convergence analysis

Having completed the scalability analysis, the next step was to study convergence, as the convergence pattern is useful for understanding the exploration and exploitation abilities of an optimization algorithm. For this purpose, a total of 16 scalable functions were tested, and their convergence was recorded for dimensions of 30, 50, 80, 100 and 200. Figures 3 and 4 depict the convergence plots of LCBO at these dimensions for the unimodal and multimodal functions, respectively. As evident from these plots, LCBO converges consistently at all the tested dimensions and thus passes the scalability test.

Figure 5 shows the convergence of LCBO on the CEC test functions; again, LCBO converges in all the presented cases.

Based on the above-presented results, the following remarks about LCBO can be inferred.

The exploitation ability of LCBO is strong, as shown by the results on the unimodal functions.

The exploration ability of LCBO algorithm is great as can be seen from its superior result than the other algorithms. In none of the multimodal functions, it offered unsatisfactory result and was always in top 3 in about 95% of the benchmark functions.

In multimodal composite CEC functions, which are extremely difficult to handle, it gave best result in four out of six functions.

LCBO has a very good balance between exploration and exploitation and thus has a very wide scope for modification and future work.

Optimization techniques are ultimately applied to real-life problems, and they are expected to be effective tools there as well. LCBO was therefore also applied to two benchmark engineering problems, described in the following subsection.

5.5 Engineering applications

Engineering problems are generally constrained, so optimization algorithms must be adapted before they can be applied to them. Penalty functions of different types are commonly used to handle constraints. The basic idea is that when a search agent goes out of range or violates a constraint, a penalty is added to its cost function so that infeasible agents are disfavoured during the search. The popular types of penalty functions are the following.

Static penalty: The penalty is independent of the iteration number; it varies with the square of the magnitude of the constraint violation.

Dynamic penalty: The penalty depends on the current iteration (time) and may increase or decrease with it; usually it increases as the iterations progress.

Annealing penalty: The penalty coefficients are changed across iterations, generally increasing with the iteration count and whenever the algorithm gets stuck in a local optimum; only the constraints active in the current iteration are considered.

Death penalty: Any search agent that violates a constraint is assigned zero fitness, so there is no need to compute the extent of the violation.
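Three of the penalty types above can be sketched in Python for a minimization problem whose inequality constraints are written as \( g_i(x) \le 0 \). The coefficient values and the \( (c\,t)^{\alpha} \) weight of the dynamic penalty are illustrative assumptions, and the annealing penalty is omitted because it additionally needs a stagnation test. Zero fitness under the death penalty is represented here as an infinite cost, which is an assumed convention for minimization.

```python
def static_penalty(cost, g_values, coeff=1e6):
    """Static penalty: independent of the iteration; grows with the squared violation."""
    return cost + coeff * sum(max(0.0, g) ** 2 for g in g_values)

def dynamic_penalty(cost, g_values, t, c=0.5, alpha=2.0):
    """Dynamic penalty: the weight (c*t)**alpha increases with iteration t."""
    return cost + (c * t) ** alpha * sum(max(0.0, g) ** 2 for g in g_values)

def death_penalty(cost, g_values):
    """Death penalty: any violation makes the agent infinitely bad (zero fitness);
    the amount of violation is never computed."""
    if any(g > 0 for g in g_values):
        return float("inf")
    return cost

# A point violating one constraint (g = 0.5 > 0) at iteration t = 10:
g = [-1.0, 0.5]
print(static_penalty(2.0, g, coeff=10.0))   # 4.5
print(dynamic_penalty(2.0, g, t=10))        # 8.25
print(death_penalty(2.0, g))                # inf
```

The death penalty is the simplest of the three, since it needs no coefficients; its drawback is that it gives the search no information about how close an infeasible agent is to the feasible region.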

In this work, the death penalty was imposed for both of the design problems below: search agents violating any constraint were assigned zero fitness.

5.5.1 Pressure vessel design

In this problem, the cost of fabricating the vessel is to be minimized. The mathematical description of the pressure vessel design (PVD) problem is the same as in (Salimi 2015), and the expressions are given in the Appendix. The cost function is minimized subject to four constraints. The population size and number of iterations for PVD were set to 30 and 400, respectively. The performance of LCBO was compared with GA, CEPSO, CEDE, PSO, NIDP and SFS, as reported in (Salimi 2015). Table 9 presents the best result obtained over 30 trials of LCBO and compares it with the results reported in (Salimi 2015). Table 9 shows that LCBO attains the lowest cost among these methods and is therefore the most suitable of them for pressure vessel design, while satisfying all the given constraints.
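For illustration, the PVD cost and constraints in the form commonly used in the literature can be written in Python as below, combined with the death penalty used in this work. The coefficients are the widely cited ones and are assumed here rather than copied from this paper; (Salimi 2015) and the Appendix remain the reference for the exact formulation, including the variable ranges (commonly \( 0 \le T_s, T_h \le 99 \) and \( 10 \le R, L \le 200 \)).

```python
import math

def pvd_cost(x):
    """Fabrication cost of the vessel; x = (Ts, Th, R, L)."""
    ts, th, r, l = x
    return (0.6224 * ts * r * l + 1.7781 * th * r**2
            + 3.1661 * ts**2 * l + 19.84 * ts**2 * r)

def pvd_constraints(x):
    """Inequality constraints g1..g4, each satisfied when <= 0."""
    ts, th, r, l = x
    return [
        -ts + 0.0193 * r,                                          # g1: shell thickness vs radius
        -th + 0.00954 * r,                                         # g2: head thickness vs radius
        -math.pi * r**2 * l - (4 / 3) * math.pi * r**3 + 1296000,  # g3: minimum volume
        l - 240,                                                   # g4: length limit
    ]

def pvd_fitness(x):
    """Death penalty: infeasible designs get an infinite cost (zero fitness)."""
    if any(g > 0 for g in pvd_constraints(x)):
        return float("inf")
    return pvd_cost(x)

# A design close to the best value commonly reported in the literature (about 6059.7):
print(round(pvd_cost([0.8125, 0.4375, 42.0984, 176.6366]), 1))
```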

In addition, a statistical analysis of the 30 cost function values was performed, and the results are presented in Table 10. Table 10 shows that the best, mean and worst cost values of LCBO are the lowest among the investigated methods. The number of function evaluations (FE) indicates the computational load of a method; as seen from Table 10, LCBO requires a very small number of FEs and is therefore computationally light.
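The statistics reported in Table 10 can be computed from the final costs of the independent runs as sketched below. The FE count assumes one cost evaluation per search agent per iteration, which is an assumption about how evaluations are counted; with the PVD settings (30 agents, 400 iterations) it gives 12,000.

```python
import statistics

def summarize_runs(costs):
    """Best, mean, worst and sample standard deviation of the final costs
    of independent runs of a minimization algorithm."""
    return {
        "best": min(costs),
        "mean": statistics.mean(costs),
        "worst": max(costs),
        "std": statistics.stdev(costs),
    }

def function_evaluations(pop, iters):
    """FE count, assuming one evaluation per search agent per iteration."""
    return pop * iters

print(function_evaluations(30, 400))      # 12000
print(summarize_runs([3.0, 1.0, 2.0]))    # toy data, not results from the paper
```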

5.5.2 Cantilever beam design

Cantilever beam design (CBD) is one of the most widely used engineering benchmark problems. The mathematical description of the CBD problem is the same as in (Chickermane and Gea 1996), and brief expressions are given in the Appendix. In this problem, the optimum values of the five parameters of the beam must be found within the given bounds, so that the cost function is minimized while the given constraint is satisfied.
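A Python sketch of the CBD problem in the form commonly used in the literature (for example in (Mirjalili 2015a)) is given below, again with the death penalty used in this work. The coefficients and the bounds \( 0.01 \le x_i \le 100 \) are the widely cited ones and are assumed here; the Appendix gives the formulation used in this paper.

```python
def cbd_cost(x):
    """Beam weight: 0.0624 * (x1 + x2 + x3 + x4 + x5)."""
    return 0.0624 * sum(x)

def cbd_constraint(x):
    """Tip-displacement constraint, satisfied when <= 0."""
    x1, x2, x3, x4, x5 = x
    return 61 / x1**3 + 37 / x2**3 + 19 / x3**3 + 7 / x4**3 + 1 / x5**3 - 1

def cbd_fitness(x, lb=0.01, ub=100.0):
    """Death penalty: designs outside the bounds or violating the constraint
    get an infinite cost (zero fitness)."""
    if any(not (lb <= xi <= ub) for xi in x) or cbd_constraint(x) > 0:
        return float("inf")
    return cbd_cost(x)

# A design close to the best value commonly reported in the literature (about 1.34):
print(round(cbd_cost([6.0160, 5.3092, 4.4943, 3.5015, 2.1527]), 3))
```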

The population size and number of iterations for CBD were set to 30 and 1000, respectively. The performance of LCBO was compared with MFO, MMA, GCA_1, GCA_2, CSA and SOS, the same algorithms considered in (Mirjalili 2015a). Table 11 presents the best result obtained over 30 trials of LCBO and compares it with the results reported in (Mirjalili 2015a). Table 11 shows that LCBO attains the lowest cost among these methods and is hence the most suitable of them for CBD, while satisfying the given constraint.

In both case studies, LCBO obtained the lowest cost among the compared methods while satisfying all constraints, i.e. it performed very well on both engineering problems.

6 Conclusion

In this work, a life choice-based optimizer (LCBO) has been proposed and investigated. LCBO draws on the fundamental choices humans make in life to set priorities and keep moving ahead to improve and achieve their objectives. The LCBO algorithm has been described, and its exploration and exploitation performance has been assessed on several benchmark functions, including unimodal, multimodal and composite CEC-2005 benchmark functions. Detailed investigations on scalability and convergence were conducted and presented. Additionally, LCBO was applied to two important practical engineering problems. The performance comparison between LCBO and other popular algorithms showed the superiority of LCBO over the compared algorithms on the optimization problems considered.

Overall, based on the presented investigations, it is concluded that LCBO is a competent algorithm that can compete with recent algorithms such as the Spotted Hyena Optimizer, Moth-Flame Optimizer, Ant Lion Optimizer and Grey Wolf Optimizer, as well as with standard algorithms such as Particle Swarm Optimization and the Genetic Algorithm. Possible directions for future research include a multi-objective form and a binary version of LCBO, as well as applications of the algorithm to optimization and parameter determination in various other fields.

References

Abbass H (2002) MBO: marriage in honey bees optimization-a Haplometrosis polygynous swarming approach. Inst Electr Electron Eng 1:207–214

Abedinpourshotorban H, Mariyam Shamsuddin S, Beheshti Z, Jawawi D (2016) Electromagnetic field optimization: a physics-inspired metaheuristic optimization algorithm. Swarm Evol Comput 26:8–22

Ahrari A, Atai A (2010) Grenade explosion method—a novel tool for optimization of multimodal functions. Appl Soft Comput J 10(4):1132–1140

Alatas B (2011) ACROA: artificial chemical reaction optimization algorithm for global optimization. Expert Syst Appl 38(10):13170–13180

Askarzadeh A (2014) Bird mating optimizer: an optimization algorithm inspired by bird mating strategies. Commun Nonlinear Sci Numer Simul 19(4):1213–1228

Atashpaz-Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: IEEE congress on evolutionary computation (CEC 2007), pp 4661–4667

Baluja S (1994) Population-based incremental learning: a method for integrating genetic search based function optimization and competitive learning. https://www.ri.cmu.edu/pub_files/pub1/baluja_shumeet_1994_2/baluja_shumeet_1994_2.pdf. Accessed 2 June 1994

Bouchekara H (2017) Most valuable player algorithm: a novel optimization algorithm inspired from sport. Oper Res 1–57

Cheng M, Prayogo D (2014) Symbiotic organisms search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112

Chickermane H, Gea H (1996) Structural optimization using a new local approximation method. Int J Numer Methods Eng 39(5):829–846

Chu S-C, Tsai P-W, Pan J-S (2006) Cat swarm optimization. PRICAI 2006: trends in artificial intelligence. PRICAI 2006. Lect Notes Comput Sci 4099:854–858

Coello C, Montes M (2002) Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Adv Eng Inform 16(3):193–203

Cuevas E, Cienfuegos M, Zaldívar D, Pérez-Cisneros M (2013) A swarm optimization algorithm inspired in the behavior of the social-spider. Expert Syst Appl 40(16):6374–6384

Cuevas E, Echavarría A, Ramírez-Ortegón M (2014) An optimization algorithm inspired by the states of matter that improves the balance between exploration and exploitation. Appl Intell 40(2):256–272

Dai C, Zhu Y, Cheng W (2006) Seeker optimization algorithm. Computational intelligence and security. CIS 2006. Lect Notes Comput Sci 4456:167–176

Dhiman G, Kumar V (2017) Spotted hyena optimizer: a novel bio-inspired based metaheuristic technique for engineering applications. Adv Eng Softw 114:48–70

Dorigo M, Di Caro G (1999) Ant colony optimization: a new meta-heuristic. In: Proceedings of the 1999 congress on evolutionary computation-CEC99, pp 1470–1477

Duman E, Uysal M, Alkaya A (2012) Migrating birds optimization: a new metaheuristic approach and its performance on quadratic assignment problem. Inf Sci 217:65–77

Eita M, Fahmy M (2014) Group counseling optimization. Appl Soft Comput J 22:585–604

Erol O, Eksin I (2006) A new optimization method: big bang–big crunch. Adv Eng Softw 37(2):106–111

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012) Water cycle algorithm—a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110–111:151–166

Farasat A, Menhaj M, Mansouri T, Moghadam M (2010) ARO: a new model-free optimization algorithm inspired from asexual reproduction. Appl Soft Comput J 10(4):1284–1292

Ferreira C (2006) Gene expression programming: mathematical modeling by an artificial intelligence. Studies in computational intelligence, vol 21. Springer, Berlin

François O (1998) An evolutionary strategy for global minimization and its Markov chain analysis. IEEE Trans Evol Comput 2(3):77–90

Gandomi A (2014) Interior search algorithm (ISA): a novel approach for global optimization. ISA Trans 53(4):1168–1183

Gandomi A, Alavi A (2012) Krill herd: a new bio-inspired optimization algorithm. Commun Nonlinear Sci Numer Simul 17(12):4831–4845

Gandomi A, Yang X, Alavi A (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(1):17–35

Gao L, Hailu A (2010) Comprehensive learning particle swarm optimizer for constrained mixed-variable optimization problems. Int J Comput Intell Syst 3(6):832–842

Ghaemi M, Feizi-Derakhshi M (2014) Forest optimization algorithm. Expert Syst Appl 41(15):6676–6687

Ghorbani N, Babaei E (2014) Exchange market algorithm. Appl Soft Comput J 19:177–187

Glover F (1989) Tabu search—part I. ORSA J Comput 1(3):190–206

Hasançebi O, Azad S (2015) Adaptive dimensional search: a new metaheuristic algorithm for discrete truss sizing optimization. Comput Struct 154:1–16

Hatamlou A (2013) Black hole: a new heuristic optimization approach for data clustering. Inf Sci 222:175–184

Hatamlou A, Javidy B, Mirjalili S (2015) Ions motion algorithm for solving optimization problems. Appl Soft Comput 32:72–79

He S, Wu Q, Saunders J (2009) Group search optimizer: an optimization algorithm inspired by animal searching behavior. IEEE Trans Evol Comput 13(5):973–990

Hedayatzadeh R, Salmassi F, Keshtgari M, Akbari R, Ziarati K (2010) Termite colony optimization: a novel approach for optimizing continuous problems. In: Proceedings—2010 18th Iranian conference on electrical engineering, ICEE, pp 553–558

Holland J (1992) Adaptation in natural and artificial systems. The MIT Press, Cambridge

Hosseini H (2009) The intelligent water drops algorithm: a nature-inspired swarm-based optimization algorithm. Int J Bio Inspired Comput 1(1/2):71–79

Hosseini H (2011) Principal components analysis by the galaxy-based search algorithm: a novel metaheuristic for continuous optimisation. Int J Comput Sci Eng 6(1/2):132–140

Huang F, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186(1):340–356

Husseinzadeh Kashan A (2014) A new metaheuristic for optimization: optics inspired optimization (OIO). Comput Oper Res 55:99–125

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Global Optim 39(3):459–471

Kaveh A (2014) Advances in metaheuristic algorithms for optimal design of structures. Springer, Berlin

Kaveh A, Bakhshpoori T (2016) Water evaporation optimization: a novel physically inspired optimization algorithm. Comput Struct 167:69–85

Kaveh A, Dadras A (2017) A novel meta-heuristic optimization algorithm: thermal exchange optimization. Adv Eng Softw 110:69–84

Kaveh A, Farhoudi N (2013) A new optimization method: dolphin echolocation. Adv Eng Softw 59:53–70

Kaveh A, Ilchi Ghazaan M (2017) Vibrating particles system algorithm for truss optimization with multiple natural frequency constraints. Acta Mech 228(1):307–322

Kaveh A, Mahdavi V (2014) Colliding bodies optimization: a novel meta-heuristic method. Comput Struct 139:18–27

Kaveh A, Talatahari S (2010) A novel heuristic optimization method: charged system search. Acta Mech 213(3–4):267–289

Kaveh A, Zolghadr A (2016) A novel meta-heuristic algorithm: tug of war optimization. Int J Optim Civil Eng 6(4):469–492

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN’95—international conference on neural networks, pp 1942–1948

Kiran M (2015) TSA: tree-seed algorithm for continuous optimization. Expert Syst Appl 42(19):6686–6698

Koza JR (1994) Genetic programming II: automatic discovery of reusable subprograms. MIT Press, Cambridge

Krishnanand KN, Ghose D (2006) Glowworm swarm based optimization algorithm for multimodal functions with collective robotics applications. Multiagent Grid Syst 2(3):209–222

Krohling R, Dos Santos Coelho L (2006) Coevolutionary particle swarm optimization using gaussian distribution for solving constrained optimization problems. IEEE Trans Syst Man Cybern B Cybern 36(6):1407–1416

Lam A, Li V (2010) Chemical-reaction-inspired metaheuristic for optimization. IEEE Trans Evol Comput 14(3):381–399

Li X, Zhang J, Yin M (2014) Animal migration optimization: an optimization algorithm inspired by animal migration behavior. Neural Comput Appl 24(7–8):1867–1877

Liang J, Suganthan P, Deb K (2005) Novel composition test functions for numerical global optimization. Swarm Intell Symp 2005:68–75

Lu X, Zhou Y (2008) A novel global convergence algorithm: bee collecting pollen algorithm. Advanced intelligent computing theories and applications. With aspects of artificial intelligence. ICIC 2008. Lect Notes Comput Sci 5227:518–525

Mehrabian A, Lucas C (2006) A novel numerical optimization algorithm inspired from weed colonization. Ecol Inform 1(4):355–366

Meng X, Liu Y, Gao X, Zhang H (2014) A new bio-inspired algorithm: chicken swarm optimization. Advances in swarm intelligence. ICSI 2014. Lect Notes Comput Sci 8794:86–94

Merrikh-Bayat F (2015) The runner-root algorithm: a metaheuristic for solving unimodal and multimodal optimization problems inspired by runners and roots of plants in nature. Appl Soft Comput J 33:292–303

Mirjalili S (2015a) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249

Mirjalili S (2015b) The ant lion optimizer. Adv Eng Softw 83:80–98

Mirjalili S (2016a) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27(4):1053–1073

Mirjalili S (2016b) SCA: a sine cosine algorithm for solving optimization problems. Knowl Based Syst 96:120–133

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Mirjalili S, Mirjalili S, Lewis A (2014) Grey Wolf Optimizer. Adv Eng Softw 69:46–61

Mirjalili S, Mirjalili S, Hatamlou A (2016) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27(2):495–513

Mirjalili S, Gandomi A, Mirjalili S, Saremi S, Faris H, Mirjalili S (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Moghaddam F, Moghaddam R, Cheriet M (2012) Curved space optimization: a random search based on general relativity theory

Moghdani R, Salimifard K (2018) Volleyball premier league algorithm. Appl Soft Comput J 64:161–185

Moosavian N, Kasaee Roodsari B (2014) Soccer league competition algorithm: a novel meta-heuristic algorithm for optimal design of water distribution networks. Swarm Evol Comput 17:14–24

Oftadeh R, Mahjoob M, Shariatpanahi M (2010) A novel meta-heuristic optimization algorithm inspired by group hunting of animals: hunting search. Comput Math Appl 60(7):2087–2098

Pan W-T (2012) A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl Based Syst 26:69–74

Pham D, Eldukhri E, Soroka A, Ghanbarzadeh A, Kog E, Otri S, Zaidi M (2006) The bees algorithm-a novel tool for complex optimisation problems. In: Intelligent production machines and systems, pp 454–459

Pinto P, Runkler TA, Sousa JM (2005) Wasp swarm optimization of logistic systems. In: Ribeiro B, Albrecht RF, Dobnikar A, Pearson DW, Steele NC (eds) Adaptive and natural computing algorithms. Springer, Vienna, pp 264–267

Rao RV, Vimal JS, Vakharia DP (2007) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Ryan C, Collins J (1998) Grammatical evolution: evolving programs for an arbitrary language. Genet Program Lect Notes Comput Sci 1391:83–96

Sadollah A, Bahreininejad A, Eskandar H, Hamdi M (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput J 13(5):2592–2612

Salimi H (2015) Stochastic fractal search: a powerful metaheuristic algorithm. Knowl Based Syst 75:1–18

Sandgren E (1990) Nonlinear integer and discrete programming in mechanical design optimization. J Mech Des 112(2):223–229

Shareef H, Ibrahim A, Mutlag A (2015) Lightning search algorithm. Appl Soft Comput J 36:315–333

Simon D (2008) Biogeography-based optimization. IEEE Trans Evol Comput 12(6):702–713

Storn R, Price K (1997) Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–349

Suganthan PN, Hansen N, Liang JJ, Deb K, Chen YP, Auger A, Tiwari S (2005) Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. KanGAL report, 2005005

Svanberg K (1987) The method of moving asymptotes—a new method for structural optimization. Int J Numer Methods Eng 24:359–373

Tan Y, Zhu Y (2015) Fireworks algorithm for optimization. Advances in swarm intelligence. ICSI 2010. Lect Notes Comput Sci 6145:355–364

Van Laarhoven PJM, Aarts EHL (1987) Simulated annealing. In: Simulated annealing: theory and applications. Springer, Dordrecht, pp 7–15

Venkata RR (2016) Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int J Ind Eng Comput 7(1):19–34

Wang G, Deb S, Coelho L (2016a) Elephant herding optimization. In: Proceedings—2015 3rd international symposium on computational and business intelligence, ISCBI 2015, pp 1–5

Wang G, Zhao X, Deb S (2016b) A novel monarch butterfly optimization with greedy strategy and self-adaptive. In: Proceedings—2015 2nd international conference on soft computing and machine intelligence, ISCMI 2015, pp 45–50

Wolpert D, Macready W (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82

Woo Geem Z, Hoon Kim J, Loganathan G (2001) A new heuristic optimization algorithm: harmony search. Simul Trans Soc Model Simul Int 76(2):60–68

Sophia Robot. https://www.hansonrobotics.com/sophia/

Yang X-S (2009) Firefly algorithms for multimodal optimization. Stochastic algorithms: foundations and applications. SAGA 2009. Lect Notes Comput Sci 5792:169–178

Yang X-S (2010) A new metaheuristic Bat-inspired Algorithm. Stud Comput Intell 284:65–74

Yang X-S (2012) Flower pollination algorithm for global optimization. Unconventional computation and natural computation. UCNC 2012. Lect Notes Comput Sci 7445:240–249

Yang X-S, Deb S (2009) Cuckoo search via levy flights. In: 2009 World congress on nature & biologically inspired computing (NaBIC), pp 210–214

Yang S, Jiang J, Yan G (2009) A dolphin partner optimization. In: Proceedings of the 2009 WRI global congress on intelligent systems, GCIS 2009, vol 1, pp 124–128

Yao X, Liu Y (1996) Fast evolutionary programming. Computational intelligence and intelligent systems. ISICA 2010. Commun Comput Inf Sci 107:79–86

Yazdani M, Jolai F (2016) Lion optimization algorithm (LOA): a nature-inspired metaheuristic algorithm. J Comput Des Eng 3(1):24–36

Zhao R, Tang W (2008) Monkey algorithm for global numerical optimization. J Uncertain Syst 2(3):165–176

Zheng Y (2015) Water wave optimization: a new nature-inspired metaheuristic. Comput Oper Res 55:1–11

Zheng Y, Ling H, Xue J (2014) Ecogeography-based optimization: enhancing biogeography-based optimization with ecogeographic barriers and differentiations. Comput Oper Res 50:115–127

Acknowledgements

The authors thank the anonymous reviewers, whose comments during the revision process helped improve the quality and clarity of this paper. The MATLAB code of LCBO may be provided to researchers and developers on request.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Appendix

The engineering problems used in this paper are pressure vessel design and cantilever beam design. Their mathematical formulations, i.e. the cost functions to be minimized, the constraints and the variable ranges, are given below.

1.1 Pressure vessel design

The objective of this problem is to minimize the total cost of material, forming and welding of the cylindrical vessel shown in Fig. 6. Both ends of the vessel are capped, and the head is hemispherical. There are four variables in this problem: the thickness of the shell (\( T_{s} \)), the thickness of the head (\( T_{h} \)), the inner radius (\( R \)) and the length of the cylindrical section without the head (\( L \)). The function \( f\left( {T_{s} ,T_{h} ,R,L} \right) \) is to be minimized subject to the following four constraints \( g1 \), \( g2 \), \( g3 \) and \( g4 \) and variable ranges:

1.2 Cantilever beam design

The cantilever beam shown in Fig. 7 consists of five elements, each with a hollow cross section of constant thickness. An external force acts at the free end of the cantilever. The weight of the beam is to be minimized subject to an upper limit on the vertical displacement of the free end. The design variables are the heights (or widths) \( x_{i} \) of the cross sections of the elements. Note that the lower bounds on these design variables are very small and the upper bounds very large, so the bounds do not become active in the problem. The problem is formulated using classical beam theory as follows:

subject to the following constraint and range of variables:

Cite this article

Khatri, A., Gaba, A., Rana, K.P.S. et al. A novel life choice-based optimizer. Soft Comput 24, 9121–9141 (2020). https://doi.org/10.1007/s00500-019-04443-z