Abstract

Spectral efficiency (SE) and energy efficiency (EE) are both important metrics in massive multiple-input multiple-output (MIMO) systems. However, maximizing EE and SE is always conflicting with each other, and they can hardly be achieved simultaneously. In this paper, we focus on the tradeoff optimization between EE and SE in multiuser massive MIMO systems in terms of the number of transmit antennas and the transmit power. Different from the previous EE-oriented or SE-oriented method, the EE–SE tradeoff problem is formulated into a multi-objective optimization problem. To efficiently attain the Pareto optimal front (POF) of EE–SE tradeoff, a multi-objective adaptive genetic algorithm, inspired by the non-dominated sorting genetic algorithm (NSGA-II), is proposed to improve the convergence speed. Experimental comparisons against several well-known multi-objective algorithms show that the proposed algorithm can quickly adapt to the true POF of EE–SE tradeoff and maintain good performance on benchmark functions in terms of the adopted performance metrics.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The recent increasing of multimedia applications has spurred a massive access of mobile telecom devices, which enables a quite concern of steadily rising energy costs. According to the latest data, information and communications technology (ICT) accounts for about 10% of the world’s energy consumption (Jiang et al. 2016). To this end, the concept of green communication has been put forward. Specially, in the future fifth-generation (5G) green communications, apart from pursuing high spectral efficiency (SE), the energy efficiency (EE) defined as bits per Joule (bits/J) (Kwon and Birdsall 1986) becomes another major metric that needs to be considered in the network design. Massive multiple-input multiple-output (MIMO) technique, using a large-scale transmit antennas to serve a much smaller number of users, is recognized as a promising technology in 5G cellular networks to provide high SE and EE. Nevertheless, one issue that comes along with using large number of antennas is the heavy energy consumption. Since SE-oriented designs are always achieved at huge power consumption (Tsilimantos et al. 2016; Lin et al. 2014), it is a big challenge to achieve the optimal EE and SE simultaneously. Therefore, the tradeoff between EE and SE becomes an urgent but important issue to be addressed.

The existing investigations on the tradeoff of EE–SE can be mainly divided into three categories: (1) to characterize the EE–SE relationship as accurately as possible (Onireti et al. 2011; Verdu 2002; Héilot et al. 2012), (2) to maximize either EE or SE while imposing constraints on the other (Mohammed et al. 2014; Huang and Qiu 2014) and (3) to formulate the EE–SE tradeoff optimization as a multi-objective optimization (MOO) problem (Amin et al. 2014, 2016; Deng et al. 2013).

To exploit the tradeoff relationship between EE and SE, early works were usually committed to derive the closed-form expression in terms of system parameters. In Verdu 2002, a novel and generic EE–SE tradeoff expression was derived in terms of numerous antenna configurations, but only in the low-SE regime. Compared with Verdu (2002), a wider range of SE values and antenna configurations were considered for the closed-form approximation of EE–SE tradeoff in Héilot et al. (2012). Similarly in Onireti et al. (2011), the closed-form approximation of EE–SE tradeoff was proposed based on Wyner model, which showed great similarity with Monte Carlo simulation. Although the closed-form approximation was not so accurate, it had the advantage of simple form.

The second category aimed at further characterizing a unique solution for global maximization. For example, an analytical method that maximizes EE subject to constrained SE was investigated in Mohammed et al. (2014). In Huang and Qiu (2014), the optimal selection of the base station (BS) antennas was obtained to maximize EE with fixed SE requirement for random beam-forming multi-antenna systems.

For the two categories mentioned above, the first one, i.e., characterizing the tradeoff relationship of EE–SE, can hardly provide enough guidance for system design due to the lack of global optimal solution, while the second category cannot dynamically track with the design objectives under the continually changing circumstances efficiently (Amin et al. 2016). Toward this point, the MOO approach is chosen to deal with the EE–SE tradeoff problems.

In Amin et al. (2014), the EE–SE tradeoff was proved to be equivalent to the optimization that minimizes the total energy consumption and maximizes the channel capacity. In Deng et al. (2013), the Pareto optimal for EE–SE tradeoff set was characterized firstly. Then, the weighted product scalarization method, similar to the Cobb–Douglas production function in economics (Cobb and Douglas 1928), was applied to convert the MOO problem into a single-objective optimization (SOO) problem. Furthermore, the unified EE–SE tradeoff metric was confirmed to be quasi-concave in terms of the transmit power, yielding a unique global optimum. Tang et al. (2014) provided an alternative way to convert the MOO problem into SOO problem. That is, by introducing an additional concept called resource efficiency, the tradeoff between EE and SE was exploited. In addition, the new system metric was optimized by the power allocation in single-cell OFDMA downlink systems.

However, in reality, due to the multi-objectivity, the goal of solving MOO problems is to find a set of tradeoff solutions instead of a single optimal solution. Thus, in Liu et al. (2017), Liu first formulated the EE–SE tradeoff MOO problem into a SOO problem. Afterward, a set of EE–SE tradeoff solutions were obtained using two developed algorithms, i.e., the weighted-sum particle swarm optimization (WS-PSO) algorithm and the normal-boundary intersection particle swarm optimization (NBI-PSO). Although this method can make tradeoff between EE and SE under various preferences, it may suffer from not fully exploiting entire Pareto optimal front. This is because a uniform spread of weight coefficient can hardly produce a uniform spread of Pareto front. To overcome this drawback, the most recently developed multi-objective evolutionary algorithms were investigated.

In this paper, the optimization of EE–SE tradeoff in large-scale MIMO system is first formulated into a MOO problem regarding the number of transmit antennas and transmit power. Inspired by NSGA-II, a multi-objective adaptive genetic algorithm, called MAGA, is proposed to solve the complicated MOO problem. In MAGA, a special mating parent selection is first adopted in the preliminary stage so that the solutions can be drawn toward the true Pareto front quickly. Then, a mutation among a small part of individuals is conducted to uniform the distribution. During the evaluation of solutions, a simple fitness assignment strategy is utilized, thereby making the proposed algorithm efficient from a burden of computational cost. For the sake of fairness, we also develop a more rational distribution metric based on Schott’s spacing metric for the discovered Pareto front. And classical evolutionary algorithms are simulated to testify the potential benefits of MAGA. Simulation results indicate that MAGA outperforms the other algorithms with respect to the convergence, while retains good performance in distribution and diversity.

The rest of the paper is organized as follows. Section 2 presents the system model for the downlink transmissions of multiuser massive MIMO systems. Section 3 formulates the EE–SE tradeoff as a mixed-integer continuous-variable-based MOO problem; thereby, the POF of EE–SE tradeoff is characterized. In Sect. 4, MAGA is presented in details to solve the MOO problem, together with the other evolutionary algorithms and performance metrics. The experimental setup and simulation results are shown in Sect. 5. Finally, Sect. 6 concludes the paper.

2 System model

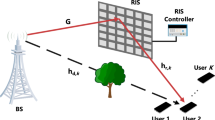

2.1 Massive MIMO system with linear precoding

Consider a downlink multiuser massive MIMO system deployed with Nt transmit antennas and K single antenna users, which is typical for a cellular network. Assume that Nt is large and satisfies \( N_{t} \gg K \). The channel matrix \( {\mathbf{H}} \), denoted as \( {\mathbf{H}}{ = [}{\mathbf{h}}_{1}^{T} \, {\mathbf{h}}_{2}^{T} \ldots {\mathbf{h}}_{K}^{T} ]^{T} \), is completely known at the transmitter. \( {\mathbf{h}}_{k}^{T} \) indicates the channel between the BS and kth user, which is a \( 1 \times N_{t} \) vector and has zero-mean complex Gaussian elements with variance 1/2 for each dimension.

To deal with the interference among users, a simple but effective zero-forcing beam-forming (ZF-BF) technique is employed. The ZF-BF precoding matrix \( {\mathbf{W = }}\text{[}{\mathbf{w}}_{1} \text{ }{\mathbf{w}}_{2} \ldots {\mathbf{w}}_{K} \text{]} \) can be obtained by:

where \( \left( \cdot \right)^{H} \) and \( \left( \cdot \right)^{ - 1} \) are the conjugate transpose and the inverse operation, respectively. Then, the received signal of the kth user, \( {\mathbf{y}}_{k} \), can be written as:

where \( \rho \) indicates the total transmit power, and \( {\mathbf{x}}_{k} \) denotes the kth user’s signal. \( \gamma \) is the normalization factor of kth user’s signal, which is expressed as \( \gamma = {{\left\| {\mathbf{W}} \right\|_{F}^{2} } \mathord{\left/ {\vphantom {{\left\| {\mathbf{W}} \right\|_{F}^{2} } K}} \right. \kern-0pt} K} \), where \( \left\| \cdot \right\|_{F} \) means the Frobenius norm. \( {\mathbf{n}}_{k} \) is the zero-mean complex Gaussian noise at the kth receiver. Without loss of generality, the noise variance is assumed to be 1/2 for each dimension.

2.2 Power consumption model

In the previous studies, the total consumed power of BS has always been calculated as the addition of transmit power and a constant quantity accounting for the circuit consumption. However, this model may not well reflect the actual situations because circuit power consumption in each radio frequency chain is also non-negligible in proportion to the number of transmit antennas through other analog devices. According to Li et al. (2014) and Björnson et al. (2015), the total power consumption is the sum of transmit power \( \rho \) and circuit power consumption Pc, i.e., \( P_{\varSigma } = \rho + P_{c} \). The circuit power consumption Pc is modeled as:

where \( P_{\text{DAC}} \), \( P_{\text{mix}} \), \( P_{\text{filt}} \), \( P_{\text{syn}} \), \( P_{\text{LNA}} \), \( P_{\text{IFA}} \), \( P_{\text{filr}} \) and \( P_{\text{ADC}} \) are the power consumption for the digital-to-analog converter (DAC), the mixer, the active filters at the transmitter side, the frequency synthesizer, the low-noise amplifier, the intermediate-frequency amplifier, the active filters at the receiver side and the analog-to-digital converter (ADC) at the BS, respectively. To simplify notation, \( P_{\text{c}} \) is denoted as:

where \( P_{1} = 2P_{\text{syn}} + P_{\text{LNA}} + P_{\text{mix}} + P_{\text{IFA}} + P_{\text{filr}} + P_{\text{ADC}} \) and \( P_{2} = P_{\text{DAC}} + P_{\text{mix}} + P_{\text{filt}} \).

3 Problem statement

In this section, the optimization of EE–SE tradeoff in downlink massive MIMO system is formulated into a MOO problem in terms of the number of antennas and transmit power. Additionally, effects of system parameters on EE and SE are investigated. On the basis of which, the POF of the EE–SE tradeoff is characterized.

3.1 EE and SE definition

From (2), the capacity for the kth user over a bandwidth of B Hz can be expressed as:

Then, the sum rate capacity is:

According to Jung et al. (2013), \( \left\| {\mathbf{W}} \right\|_{F}^{2} \) can be approximated as \( \left\| {\mathbf{W}} \right\|_{F}^{2} \approx \sum\nolimits_{k = 1}^{K} {\frac{1}{{\left\| {{\mathbf{h}}_{k} } \right\|^{2} }}} \) and follows Gaussian distribution for large K and \( N_{t} \). According to the inequality relationship between the harmonic average and the arithmetic average, we have:

Denoting \( \lambda_{l} = \sum\nolimits_{k = 1}^{K} {\left| {h_{l,k} } \right|^{2} } \), \( l = 1,2, \ldots ,N_{t} \) and \( \lambda_{1} \ge \lambda_{2} \ge \cdots \ge \lambda_{N} \), then \( \lambda_{l} \) follows Chi-square distribution for large \( N_{t} \). Considering that random transmit antenna selection (TAS) in massive MIMO systems can achieve near-optimal performance but with much lower complexity, it is employed in this paper to choose L antennas from the available \( N_{t} \) ones. According to Jung et al. (2013), the capacity with TAS is given by:

According to Hesami and Laneman (2011), we have:

Therefore, the capacity can be further written as:

The SE is defined as the number of bits per unit of bandwidth, which is characterized as:

Finally, the EE, defined as the number of bits per unit of energy, can be obtained from (4) and (11) as:

3.2 Discussion about the relation of EE–SE

In order to better characterize the EE–SE tradeoff, how the number of transmit antennas \( L \) and transmit power \( \rho \) affect the EE and SE is investigated firstly. From (11), it can be discovered that \( \eta_{\text{SE}} \) is strictly increasing with \( \rho \) when the number of antennas \( L \) is constant. To better characterize the relationship between the SE and the number of antennas \( L \), lets take the first derivative with respect to \( L \) for fixed transmit power \( \rho \), i.e.,

Due to the fact that the number of available antennas \( L \) is no more than the maximum number of the antennas \( N_{t} \), we have \( \frac{{\partial \eta_{\text{SE}} }}{\partial L} \ge 0 \), from which we know the SE is strictly increasing with \( L \). As for \( \eta_{\text{EE}} \), two lemmas are presented below.

Lemma 1:

\( \eta_{\text{EE}} \)increases firstly and then decreases with\( \rho \)for fixed\( L \).

The proof is similar to the appendix B in Li et al. (2014), and details are not provided here for limited space.

Lemma 2:

\( \eta_{\text{EE}} \)increases firstly and then decreases with\( L \)for fixed\( \rho \).

Proof:

First take the number of transmit antennas as a continuous variable, and then take the derivative operations twice. Following the proof of Lemmas 1 and 2 can be proved.□

In order to get a comprehensive insight into the impact of both \( L \) and \( \rho \) on EE, the achievable EE performance versus different values of \( \rho \) and \( L \) is displayed in Fig. 1. It can be observed that there exists a maximum \( \eta_{\text{EE}} \). However, too many antennas or too large transmit power can cause a decrease in \( \eta_{\text{EE}} \), which is contrary to the tendency of \( \eta_{\text{SE}} \) regarding large \( \rho \) or \( L \). That is to say, \( \eta_{\text{SE}} \) strictly increases over the \( L \) and \( \rho \), while the \( \eta_{\text{EE}} \) behaved concave shape on curve when \( L \) and \( \rho \) increase. Thus, there exists a tradeoff between the EE and the SE.

3.3 Pareto optimal front of EE–SE tradeoff

To maximize the EE and SE simultaneously, the EE–SE tradeoff is converted to a mixed-integer continuous-variable-based MOO problem expressed below:

where \( \rho^{\hbox{max} } \) is the maximum value of transmit power.

In general, the MOO problem owns a set of tradeoff solutions instead of a single optimal solution. For \( {\rm P}(1) \), the POF, i.e., the image of the Pareto optimal set (POS) in the objective space is shown in the red curve in Fig. 2, which well agrees with the conclusion derived in Sect. 3.2 that the POF of EE–SE tradeoff is neither convex nor concave.

Theoretically, the ideal solution of EE–SE tradeoff is to get the true POF of the optimization problem \( P(1) \). However, \( P(1) \) is a NP-hard problem that does not have closed-form solution, which means to get the true POF usually suffers from prohibitive computational burden by using the exhaustive search method. Toward this end, a better way to implement is to take an approximation of the POF to select the final preferred solutions.

4 Algorithm design and performance metric

4.1 The proposed MAGA algorithm

Due to the advantages of simple in concept and fast convergence rate, multi-objective evolutionary algorithms are extensively employed to handle the MOO problems. Besides, the multi-objective evolutionary algorithms are good at optimizing the mixed integer continuous variable simultaneously, as the property of which is particularly suitable for the optimization problem \( P(1) \) in dealing with the number of transmit antennas and the transmit power. NSGA-II is one of the potential evolutionary algorithms for solving the MOO problems and is widely used or extended in many real-world applications. Considering that the non-dominated sorting of NSGA-II takes a time-consuming process, in this section, a multi-objective adaptive genetic algorithm, i.e., MAGA is proposed based on NSGA-II to further improve the efficiency.

4.1.1 Main framework

The pseudocode of the proposed MAGA algorithm is summarized in Algorithm I. Initially, a random parent population \( {\mathbf{P}} \) with \( N \) individuals is generated, and the corresponding values of objective functions for each individual are calculated. Then, environment selection operation (“Algorithm II”) is executed on \( {\mathbf{P}} \), producing an archive population \( {\mathbf{A}} \) where the non-dominated individuals are preserved.

From Lu and Yen (2003) and Tiwari et al. (2010), we know that mating selection is critical to population evolution since selecting mating parents from \( {\mathbf{P}} \) can demonstrate high population diversity, while selecting parents from \( {\mathbf{A}} \) can significantly speed up the convergence. Inspired by that, an adaptive procedure is designed based on the number of non-dominated individuals. Usually, it is supposed to obtain \( N \) non-dominated individuals with the shortest time, then to guide the solutions toward a uniformly spread-out POF. Therefore, when the number of non-dominated solutions is small, the non-dominated individuals are used to guide the evolutionary of dominated individuals via genetic operators; while when the number of non-dominated individuals is large enough, apart from the normal crossover, a novel mutation is performed with small step size among the members in sparse regions. Detail steps are provided as follows.

Denote \( \left| \cdot \right| \) as the cardinality of a set. If \( \left| {\mathbf{A}} \right| \) is not larger than the population size \( N \), the generate offspring operation (“Algorithm III”) is adopted on \( {\mathbf{P}} \) and \( {\mathbf{A}} \) to produce \( N \) offspring solutions. Then, the environment selection is implemented among the combined population of \( {\mathbf{P}} \) and \( {\mathbf{A}} \), yielding the new parent population \( {\mathbf{P}} \) and the new archive population \( {\mathbf{A}} \). If \( \left| {\mathbf{A}} \right| \) is larger than \( N \), apply crossover operators on \( {\mathbf{P}} \) with crossover probability \( p_{\text{c}} \), and apply mutation operators with the mutation probability \( p_{\text{m}} \). Specially, the mutation operator is performed on the least crowded points with a small mutation step size. Finally, the new offspring individuals are combined with \( {\mathbf{P}} \) and the environment selection is implemented to preserve new \( {\mathbf{P}} \) and \( {\mathbf{A}} \).

Repeat the above process until the stopping criterion is reached.

4.1.2 Environmental selection

The environment selection procedures are summarized in “Algorithm II,” which aims to evaluate the individuals and determine the \( N \) non-dominated solutions from the combined population. The fast non-dominated sorting approach of NSGA-II is rather fine-grained, since each of the individuals is ranked with a non-domination level. Similarly, the fitness assignment method (Jiang and Yang 2017) defining the fitness value as the number of individuals that dominates it is also time-consuming, although it is more concise. In this paper, the quick non-dominated sorting algorithm (Q-NDSA) (Bechikh et al. 2015) is developed for non-dominated sorting. To be different, the individuals are simply categorized into non-dominated ones and dominated ones without caring how poor a solution is. This strategy is beneficial because once an individual is marked as dominated one, and it will never be compared with the other individuals. Thus, it can significantly reduce the number of comparisons and improve the computational efficiency. Detail steps are provided as follows.

Firstly, a fast non-dominated sorting algorithm is developed inspired by the Q-NDSA, so that each member of the population \( i \) is assigned to a fitness value \( F(i) \). In the proposed algorithm, we extend the fitness value just to determine whether the member is dominated or not by:

Afterward, the non-dominated solutions, i.e., the members with a fitness value of zero, are preserved to the archive \( {\mathbf{A}} \). If \( \left| {\mathbf{A}} \right| \) is less than or equal to \( N \), the non-dominated solutions in \( {\mathbf{A}} \) together with \( N - \left| {\mathbf{A}} \right| \) additional random selected solutions from the parent population are copied to a new parent population \( {\mathbf{P}} \). Otherwise, a crowded-comparison operator (Deb et al. 2002) is performed to reduce \( {\mathbf{A}} \) to \( N \) non-dominated individuals, in order that the best diversity possible is demonstrated of the truncated \( {\mathbf{A}} \).

4.1.3 Offspring generation

As illustrated above, when the number of non-dominated individuals is small, the mating parent can be selected from the parent population \( {\mathbf{P}} \) and the archive population \( {\mathbf{A}} \). The mating selection and genetic operation are summarized in “Algorithm III.”

4.1.4 Computational complexity

Next lets discuss the computational complexity of the entire algorithm. In each iteration, computational complexity is mainly produced by the offspring generation and environment selection, including the developed Q-NDSA sorting, the crowding distance assignment and the sorting according fitness value. Since the generation of an offspring solution takes \( O\left( M \right) \) computations, where \( M \) is the number of objectives, then the whole offspring generation requires \( O\left( {MN} \right) \) computations to produce \( N \) offspring solutions, where \( N \) is the population size. Due to the simple fitness assignment, the developed Q-NDSA sorting has a computational complexity of \( O(N{ \log }\text{(}N\text{))} \), which is smaller than the primary complexity of Q-NDSA (Bechikh et al. 2015) with \( O\left( {N^{2} } \right) \) computations. Additionally, the crowding distance calculation takes \( O(MN{ \log }\text{(}N\text{))} \) computations, and the sorting according fitness value requires \( O(N{ \log }\text{(}N\text{))} \) computations. In summary, the overall computational complexity of MAGA for each iteration is \( O(MN{ \log }\text{(}N\text{))} \), which is mainly governed by the crowding distance calculation.

4.2 Compared algorithm

Several well-known multi-objective optimization algorithms are employed for comparison in our studies. They are the NSGA-II, the multi-objective particle swarm optimization (MOPSO), the non-dominated sorting chemical reaction optimization (NCRO) and NSGA-III, representing different classes of multi-objective evolutionary algorithms.

-

1.

NSGA-II: it is a representative genetic algorithm proposed by Deb et al. (2002). Three benefits of NSGA-II are a fast non-dominated sorting approach with \( O\left( {MN^{2} } \right) \) computational complexity, an elitist mechanism conducted by regarding the combined individuals of the parent and offspring population as mating pool, as well as the crowded-comparison operator guiding the selection process toward a uniform spread-out POF. Due to its good performance of diversity and convergence in the obtained non-dominated front, NSGA-II has been widely used in handling MOO problems.

-

2.

MOPSO: the MOPSO is motivated by the simulation of the food searching activities of a swarm of particles (Coello Coello et al. 2004). It has been shown that MOPSO is relatively easy to implement. During the iterative process, each particle updates its velocity and the parent position corresponding to both the local best position and the global best position. In order to guide the flight of the particles, an external repository is extended to keep a historical record of the non-dominated vectors. Different from the elitist mechanism in NSGA-II, MOPSO extracts the non-dominated solutions from the parent population firstly. Then these non-dominated solutions are combined with the repository for another selection, which may cause huge time complexity if there are many non-dominated solutions.

-

3.

NCRO: chemical reaction optimization (CRO) is a state of art heuristic algorithm, inspired by the interaction between molecules during chemical reactions (Lam and Li 2010). In CRO, a solution in the mathematical domain is represented by the structure of a molecular. A molecular possesses potential energy (PE) and kinetic energy (KE) modeled as the vector of objective function values and the tolerance of the solutions to have worse objective function values afterward. What’s more, four kinds of elementary reactions are designed, where two ineffective collisions are implemented for local search and the other two reactions are used to improve the diversity. To exploit the CRO characteristics in solving MOO problems, NCRO is put forward involving Pareto ranking scheme and crowded-comparison operator in CRO (Bechikh et al. 2015).

-

4.

NSGA-III: NSGA-III is a reference-point-based multi-objective evolutionary algorithm following NSGA-II framework. Different from NSGA-II, NSGA-III improves the selection operator with a number of well-spread reference points, which can either be predefined in a structured manner or supplied preferentially by the user. The NSGA-III algorithm has been proved to be able to brilliantly find a well-converged and well-diversified set of solutions repeatedly over a certain number of iterations.

4.3 Performance metric

According to Zitzler et al. (2000), we know that there are three issues that are normally taken into account for a quantitative assessment of the algorithms:

-

1.

Minimize the discrepancy of the approximated POF produced by MOO algorithms with respect to the true POF.

-

2.

Maximize the spread achieved among the obtained solutions to keep a rich diversity.

-

3.

Maximize the extent of uniformity, so that a distribution of vectors as smooth as possible can be obtained.

Based on the above notions, three metrics are employed to measure the performance of the algorithms as follows:

-

1.

Inverted Generational Distance (\( {\text{IGD}} \)): It is used to evaluate the diversity and convergence by calculating the distance between the true POF and the approximated POF. Denote a set of uniformly spread points along the true POF as \( {\text{POF}} \), and the approximated POF as \( {\text{POF}}^{*} \), respectively, then the IGD is defined as (Yuan et al. 2015):

$$ {\text{IGD}} = \frac{1}{{n_{\text{POF}} }}\sum\limits_{i = 1}^{{n_{\text{POF}} }} {d_{i} } $$(16)where \( n_{\text{POF}} \) is the number of individuals in \( {\text{POF}} \), \( d_{i} \) indicates the Euclidean distance between the \( i\text{th} \) member in \( {\text{POF}} \) and its nearest member in \( {\text{POF}}^{*} \).

-

2.

Maximum Spread (\( {\text{MS}} \)): It numerically reflects the extent that the approximated POF covers the true POF as given by (Goh and Tan 2007):

$$ {\text{MS}} = \sqrt {\frac{1}{M}\sum\limits_{k = 1}^{M} {\left[ {\frac{{\hbox{min} \left[ {\overline{{{\text{POF}}_{k} }} ,\overline{{{\text{POF}}_{k}^{*} }} } \right] - \hbox{max} \left[ {\underline{{{\text{POF}}_{k} }} ,\underline{{{\text{POF}}_{k}^{*} }} } \right]}}{{\overline{{{\text{POF}}_{k} }} - \underline{{{\text{POF}}_{k} }} }}} \right]^{2} } } $$(17)where \( \overline{{POF_{k} }} \) and \( \underline{{POF_{k} }} \) are the maximum and the minimum values in terms of the \( k\text{th} \) objective in \( POF \), respectively. Similar definitions are for \( \overline{{POF_{k}^{*} }} \) and \( \underline{{POF_{k}^{*} }} \).

-

3.

Spacing Metric (\( S \)): the spacing metric measures how evenly the individuals in \( {\text{POF}}^{*} \) are distributed. Schott (Schott 1995) has developed this kind of metric regarding the distance between the \( i\text{th} \) solution and its nearest solution. However, it is invalid if partial individuals are clustered. To overcome this drawback, a new spacing metric is adopted in this paper. Firstly, the population is sorted according to the objectives in ascending order, and then the distance between two adjacent members of the \( i\text{th} \) member is calculated. The new spacing metric is expressed as:

$$ S = \sqrt {\frac{1}{{n_{{{\text{POF}}^{*} }} - 1}}\sum\limits_{i = 1}^{{n_{{{\text{POF}}^{*} }} }} {\left( {D_{i} - \bar{D}} \right)^{2} } } $$(18)where \( D_{i} \) is the normalized Euclidean distance between the two adjacent solutions of the \( i\text{th} \) member. For the boundary solutions, \( D_{i} \) indicates the double normalized Euclidean distance between the solution and its nearest member. \( \bar{D} \) is the average value of \( D_{i} \).

In addition to the assessment indexes presented above, we also investigate the number of non-dominated solutions and time complexity analysis below.

5 Simulation results

In this section, simulations are carried out to validate the efficiency of the proposed MAGA algorithm. For comparison, classical multi-objective optimization algorithms, such as NSGA-II, NSGA-III, MOPSO and NCRO, are also employed. Our performed experiments are divided into two parts. The first one is to deal with the EE–SE tradeoff optimization problem, while the second is to handle the two-objective benchmark functions.

5.1 EE–SE Tradeoff Optimization Problem

In this section, simulations are carried out to testify the performance of the proposed MAGA versus other evolutionary algorithms in dealing with the EE–SE tradeoff problem. The default parameters for problem \( {\mathbf{{\rm P}(1)}} \) are: \( P_{1} = 162.5\,{\text{mW}} \), \( P_{2} = 48.2\,{\text{mW}} \), the number of users \( K = 20 \), the maximum number of antennas \( N_{t} = 100 \) and the transmit power \( \rho \in [ - \,40,20]\,{\text{dBW}} \). For all the simulations, the population size is set to 300 and the archive (or the repository) size is the same as the population size. Besides, for all these GA-based evolutionary approaches, a real-number representation is adopted, and the simulation binary crossover (SBX) and the polynomial mutation are chosen as the recombination and the mutation operators, respectively. All these algorithms terminate after 100 iterations. According to Duan and Gan (2015), Deb et al. (2002), Coello Coello et al. (2004), Lam and Li (2010), and Bechikh et al. (2015), the specific parameters for the algorithms in the simulation are summarized in Table 1.

The obtained approximated POFs of MAGA, NSGA-II, MOPSO, NCRO and NSGA-III are shown in Fig. 3. The true POF is also provided for reference. It can be seen that all those algorithms can find solutions along the true POF. However, NSGA-II could rarely produce solutions at the high-EE region, and an uneven distribution of POF is obtained using NSGA-II and MOPSO. By contrast, MAGA and NCRO turns out to be more competitive, owning good diversity and maintaining a uniform distribution.

Figure 4 illustrates the required iterations for the algorithms to get 300 non-dominated solutions. It is clear that NCRO and MOPSO need 6 iterations, thus having the worst convergence. Owing to the selection of mating parents from both \( {\mathbf{P}} \) and \( {\mathbf{A}} \), the proposed MAGA only needs 3 iterations and converges faster than other algorithms.

To further testify the computation efficiency of the proposed algorithm, the CPU time required by different algorithms is provided in Table 2, in which a massive MIMO system with available antennas varying from 1 to 100 and the transmit power varying in range of [0, l] is considered. For comparison, the CPU time of extracting the true POF by the exhaustive search method is also provided, which takes about 2165 s to extract all non-dominated solutions. However, the evolutionary optimization algorithms are more efficient in handling this problem. Specifically, MAGA and NCRO only require about 3 s to run out 100 iterations, which is much less than MOPSO, NSGA-II and NSGA-III. Compared with NCRO, MAGA requires less running time resulting from its special fitness assignment. It is important to point out that MOPSO, NSGA-II and NSGA-III have the computational complexity of \( O\left( {MN^{2} } \right) \) for one generational cycle, while NCRO has a time complexity of \( O\left( {N^{2} } \right) \) for the worst case. However, MAGA has \( O(MN{ \log }\text{(}N\text{))} \) computational complexity for each iteration as illustrated in Sect. 4.1.

Since the performance metrics presented in Sect. 4.3 can statistically reflect the closeness, distribution, coverage and convergence of the obtained POF to true POF, they are employed here to testify the performance of different algorithms in dealing with the EE–SE tradeoff optimization. The best values are highlighted in bold face. The corresponding results of MS metric are provided in Table 3, which can be considered as the percentage that the objective space of approximated POF covers that of the true POF. Clearly, all the algorithms have strong tracking ability to adapt to the true POF soon. The approximations of MAGA and NCRO can almost cover the whole true POF at last, while NSGA-III performs poorly. MOPSO and NSGA-II are not competitive although they also perform well.

Table 4 presents the spacing metric S obtained by all these algorithms. The results of S show that NSGA-III and MOPSO have an uneven data distribution, besides the NSGA-III algorithm performs unsteadily during the evolution. By contrast, MAGA outperforms all the other algorithms with good convergence owing to the small range of mutation among individuals of the sparse region. Both NSGA-II and NCRO employ the crowded-comparison operator, providing comparable or slightly better performance as MAGA at the later stage.

Table 5 compares the IGD performance metric values of different algorithms. Since IGD measures the distance between approximated POF and uniformly distributed true POF, it is more comprehensive than the other metrics. The results indicate that NSGA-III provides the worst results in this measurement, suggesting its poor search ability. The MAGA algorithm obtains the best approximations in terms of the true POF except a slight superiority degradation at the later stage compared with NCRO. Nevertheless, the difference between them is slight.

In summary, NSGA-II is passable in convergence, distribution and diversity; it has the disadvantage of time-consuming evolution. MOPSO and NSGA-III show obvious drawbacks in all these aspects. Although NCRO and MAGA seem equally competitive regarding the distribution, diversity and time complexity, MAGA significantly outperforms NCRO in convergence due to the guide of non-dominated solutions.

5.2 Benchmark functions test

In this part, classical benchmark functions are employed to evaluate the performance of different algorithms mentioned above. Four extensively used bi-objective Zitzler–Deb–Thiele’s (ZDT) test instances (Zitzler et al. 2000) with different difficulties are adopted and described in Table 6. Since more variables are required in the test functions than in the EE–SE tradeoff problem, 500 individuals are generated in each population and all these algorithms run for a maximum number of 300 iterations. Other parameter settings are the same as in Sects. 5.1.

Note that in order to fully assess our proposed algorithm, the metrics of S, IGD and CPU time described in Sect. 4.3 are still employed, while the MS metric is canceled since sometimes it’s extremely difficult for the approximate POFs to cover the true POF.

5.2.1 Test function ZDT1

In Fig. 5 the approximate POFs on ZDT1 are shown, which can readily reflect the tracking ability of different algorithms. It can be seen that both MOSPO and NCRO are capable of finding the POF, and the solutions obtained by the proposed algorithm are very close to the true POF, while NSGA-II and NSGA-III exhibit poor performance in terms of the tracking ability and the diversity.

To get an insight into the evolutionary process, the number of non-dominated solutions versus the iterations is also shown in Fig. 6. Although MOPSO seems to get most non-dominated solutions, it has an unfair advantage due to the special non-dominated solutions preservation. That is to say, the non-dominated solutions produced at each iteration are collected together to select new non-dominated solutions. In that case, it is easy to get 500 non-dominated solutions if there is no better solution. By contrast, the other algorithms just search non-dominated solutions in the present population. In this sense, the proposed algorithm owns a competitive advantage in fast evolution.

Table 7 provides the statistical mean values of S, IGD and CPU time required by different algorithms. The best values obtained by different algorithms are highlighted in bold. The S metric of MOPSO is much smaller after 200 iterations, which mainly benefits from the large number of non-dominated solutions. The proposed MAGA algorithm can achieve a comparable S value as MOPSO after 300 iterations. Besides, MOPSO has the best IGD values, which measures the Euclidean distance between the approximate POF and the true POF indicated by Fig. 5. However, MOPSO takes more CPU time than MAGA and NCRO.

5.2.2 Test function ZDT2

Figure 7 shows the approximated POFs on ZDT2 obtained by different algorithms. It can be discovered that NSGA-II and NSGA-III perform much worse in dealing with such kind of optimization problem. MOPSO exhibits best performance, which is slightly better than NCRO and MAGA.

Figure 8 provides a graphical view of the number of non-dominated solutions versus the iterations on ZDT2. It reveals very similar results as Fig. 6. The number of non-dominated solutions produced by MAGA grows stably and is relatively high when compared with NCRO, NSGA-II and NSGA-III.

The statistical mean values of S, IGD and CPU time on ZDT2 achieved by different algorithms are listed in Table 8, which shows that MOPSO has the most competitive distribution and coverage of true POF. However, the convergence speed of MOPSO is much slower when compared with MAGA and NCRO. In contrast to NSGA-II and NSGA-III, MAGA provides an acceptable performance in terms of S and IGD, but has fast convergence speed.

5.2.3 Test function ZDT3

Figure 9 shows the approximate POFs on ZDT3 achieved by different algorithms. It is clear that the POF is convex and disconnected. The non-dominated solutions attained by MOPSO cannot cover the whole true POF. NCRO has the best performance among those algorithms. Our proposed MAGA algorithm also offers a good performance and performs better than NSGA-II and NSGA-III.

The number of non-dominated solutions versus the iterations on ZDT3 is illustrated in Fig. 10. It can be noticed that the number of non-dominated solutions obtained by MOPSO is a consequence of its reserved accumulations. Besides, due to the guide of non-dominated solution, the proposed MAGA algorithm offers a more stable process of evolution.

The statistical mean values of different performance metrics on ZDT3 are illustrated in Table 9. MOPSO, NSGA-II and NSGA-III exhibit poor performance in terms of approximate POFs. From the IGD and CPU time, it is known that NCRO can achieve much closer POF to true POF with less time, which is slightly better than MAGA. The results of S metric indicate that the POF of MAGA is almost as uniform as that of NCRO.

5.2.4 Test function ZDT4

The benchmark function ZDT4 is a tough optimization problem with a number of local Pareto optimal fronts, which easily make the algorithm trapped in the local optimal. Thus, in order to get out of the local optimum, certain changes should be made in the decision domain (Deb et al. 2002). The approximate POFs obtained by different algorithms on ZDT4 are shown in Fig. 11. It is observed that NSGA-II, NSGA-III and MAGA can get better solutions than NCRO and MOPSO, indicating the strong search ability of crossover and mutation operations in GA-based algorithms. Furthermore, due to the guide of non-dominated solutions, MAGA exhibits its competitive advantage over the other algorithms in dealing with this problem.

Figure 12 shows the number of non-dominated solutions versus the iterations on ZDT4. Different from the conclusions drawn from the first three benchmark functions ZDT1-ZDT3, the advantage of NCRO disappears with only several non-dominated solutions. For MOPSO, as stated before, it is not fair to compare it with other algorithms because its non-dominated solutions are consequences of the accumulation. Compared with NSGA-II and NSGA-III, MAGA can obtain most non-dominated solutions. Besides, the big fluctuations in the curve of MAGA readily reflect the orderly evolution of MAGA. Specifically, once a new non-dominated solution appears, more solutions will be attracted to develop non-dominated solutions; as a result, an expanded non-dominated population is resulted.

The corresponding statistical mean values of S, IGD and CPU time are provided in Table 10, which reveals that MAGA is able to track the true POF with well-distributed solutions. Both MAGA and NCRO take less than 60 s to complete 300 iterations of evolution. However, NCRO attains much fewer solutions than MAGA.

6 Conclusion

In this paper, the tradeoff optimization between EE and SE in multiuser massive MIMO systems has been investigated. The EE–SE tradeoff relationship with respect to the number of BS antennas and transmit power has been derived, on the basis of which the EE–SE tradeoff optimization problem is modeled as a mixed-integer-continuous-variable MOO problem. To efficiently solve the MOO problem, a multi-objective adaptive genetic algorithm, called MAGA, has been developed, in which improvement is employed in mating selection and fitness assignment. Simulation results indicate that MAGA can search the EE–SE tradeoff POF in massive MIMO system with a faster convergence speed when compared with the other algorithms. Moreover, it shows comparable performance as the classical MOO algorithms in terms of quality evaluation metrics on the benchmark functions.

References

Amin O, Bedeer E, Ahmed MH, Dobre OA (2014) Energy efficiency and spectral efficiency trade-off for OFDM systems with imperfect channel estimation. In: Proceedings of the 2014 IEEE international conference on communications (ICC), Sydney, Australia, pp 355–3558

Amin O, Bedeer E, Ahmed MH, Dobre OA (2016) Energy efficiency-spectral efficiency tradeoff: a multiobjective optimization approach. IEEE Trans Veh Technol 65(4):1975–1981

Bechikh S, Chaabani A, Said LB (2015) An efficient chemical reaction optimization algorithm for multi-objective optimization. IEEE Trans Cybern 45(10):2051–2064

Björnson E, Sanguinetti L, Hoydis J, Debbah M (2015) Optimal design of energy-efficient multi-user MIMO systems: is massive MIMO the answer? IEEE Trans Wirel Commun 14(6):3059–3075

Cobb CW, Douglas PH (1928) A theory of production. Am Econ Rev 18(1):139–165

Coello Coello AC, Pulido GT, Lechuga MS (2004) Handling multiple objectives with particle swarm optimization. IEEE Trans Evol Comput 8(3):256–279

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6:182–197

Deng L, Rui Y, Cheng P, Zhang J, Zhang QT, Li MQ (2013) A unified energy efficiency and spectral efficiency tradeoff metric in wireless networks. IEEE Commun Lett 17(1):55–58

Duan H, Gan L (2015) Orthogonal multiobjective chemical reaction optimization approach for the brushless DC motor design. IEEE Trans Magn 51(1):1–7

Goh C, Tan KC (2007) An investigation on noisy environments in evolutionary multiobjective optimization. IEEE Trans Evol Comput 11(3):354–381

Héilot F, Imran MA, Tafazolli R (2012) On the energy efficiency-spectral efficiency trade-off over the MIMO Rayleigh fading channel. IEEE Trans Commun 60(5):1345–1356

Hesami P, Laneman JN (2011) Limiting behavior of receive antenna selection. In: Proceedings of the IEEE annual conference on information sciences and systems, Baltimore, USA, pp 1–6

Huang Y, Qiu L (2014) On the energy efficiency-spectral efficiency tradeoff in random beamforming. IEEE Wirel Commun Lett 3(5):461–464

Jiang SY, Yang SX (2017) A steady-state and generational evolutionary algorithm for dynamic multiobjective optimization. IEEE Trans Evol Comput 21(1):65–82

Jiang D, Zhang P, Lv Z, Song H (2016) Energy-efficient multi-constraint routing algorithm with load balancing for smart city applications. IEEE Internet Things J 3(6):1437–1447

Jung M, Kim T, Min K et al (2013) Asymptotic distribution of system capacity in multiuser MIMO systems with large number of antennas. In: Proceedings of the 2013 IEEE 77th vehicular technology conference (VTC Spring), Las Vegas, USA, pp 1–5

Kwon H, Birdsall T (1986) Channel capacity in bits per Joule. IEEE J Ocean Eng 11(1):97–99

Lam AYS and Li VOK (2010) Chemical-reaction-inspired metaheuristic for optimization. IEEE Trans Evol Comput 14(3):381–399

Li H, Song L, Zhu D, Lei M (2014) Energy efficiency of large-scale multiple antenna systems with transmit antenna selection. IEEE Trans Commun 62(2):638–647

Lin IC, Han S, Xu Z, Pan Z (2014) EE–SE relationship in MIMO systems. In: Proceedings of the 2014 IEEE international wireless symposium (IWS), Beijing, China, pp 1–4

Liu ZJ, Du WM, Sun DC (2017) Energy and spectral efficiency tradeoff for massive MIMO systems with transmit antennas selection. IEEE Trans Veh Technol 66(5):4453–4457

Lu H, Yen GG (2003) Rank-density-based multiobjective genetic algorithm and benchmark test function study. IEEE Trans Evol Comput 7(4):325–343

Mohammed K, Huq S, Mumtaz S et al (2014) Energy efficiency optimization in MU-MIMO systems with spectral efficiency constraint. In: Proceedings of the 2014 IEEE symposium on computers and communications (ISCC), pp 1–5

Onireti O, Hliot F, Imran MA (2011) Closed-form approximation for the trade-off between energy efficiency and spectral efficiency in the uplink of cellular network. In: Proceedings of the 11th European wireless conference, Vienna, Austria, pp 706–711

Schott J (1995) Fault tolerant design using single and multicriteria genetic algorithm optimization. M.S. thesis, Massachusetts Technology

Tang J, So D, Alsusa E, Hamdi K (2014) Resource efficiency: a new paradigm on energy efficiency and spectral efficiency tradeoff. IEEE Trans Wirel Commun 13(8):4656–4669

Tiwari S, Fadel G, Deb K (2010) AMGA2: improving the performance of the archive-based micro-genetic algorithm for multi-objective optimization. Eng Optim 43(4):377–401

Tsilimantos D, Gorce JM, Runser KJ, Poor HV (2016) Spectral and energy efficiency trade-offs in cellular networks. IEEE Trans Wirel Commun 15(1):54–66

Verdu S (2002) Spectral efficiency in the wideband regime. IEEE Trans Inf Theory 48(6):1319–1343

Yuan Y, Xu H, Zhang B, Yao X (2015) Balancing convergence and diversity in decomposition-based many-objective optimizers. IEEE Trans Evol Comput 19(5):694–716

Zitzler E, Deb K, Thiele L (2000) Comparison of multiobjective evolutionary algorithms: empirical results. Evol Comput 8(2):173–195

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (No. 61201135), in part by the Chinese Postdoctoral Science Foundation (3464), in part by the Shaanxi Natural Science Foundation (No. 2015JQ6245), in part by the Fundamental Research Funds for the Central Universities (No. 7214569601) and in part by the 111 Project (B08038).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by V. Loia.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Hei, Y., Zhang, C., Song, W. et al. Energy and spectral efficiency tradeoff in massive MIMO systems with multi-objective adaptive genetic algorithm. Soft Comput 23, 7163–7179 (2019). https://doi.org/10.1007/s00500-018-3356-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-018-3356-x