Abstract

Hesitant fuzzy linguistic (HFL) variables handle uncertainty well, and the Muirhead mean (MM) operator can capture correlations among any number of inputs through an adjustable parameter vector; by changing the parameter values, it generalizes several existing operators. However, the traditional MM is only suitable for crisp numbers. In this article, we extend the MM operator to the HFL environment and propose two new aggregation operators: the HFL Muirhead mean (HFLMM) operator and the weighted HFL Muirhead mean (WHFLMM) operator. We also discuss some desirable properties of these operators and some special cases obtained for particular parameter values. Moreover, a multiple-attribute decision-making (MADM) method under the HFL environment is developed based on the WHFLMM operator. Lastly, a numerical example illustrates the feasibility of the proposed method.


1 Introduction

Since the fuzzy set was first proposed by Zadeh (1965), research on it and its extensions has developed rapidly and found a number of applications (Abdullah et al. 2017; Qayyum et al. 2016). However, a fuzzy set is sometimes unable to express complex fuzzy information. Further research on fuzzy set theory has developed in two directions. One direction adds a non-membership degree, which led to the intuitionistic fuzzy set (IFS) (Atanassov 1986); the other adds multiple membership degrees, which led to the hesitant fuzzy set (HFS) (Torra 2010). The membership degree in an HFS can be expressed by a number of possible values (Torra 2010; Torra and Narukawa 2009), and many research results about HFSs have been achieved. Torra (2010) explored the relationship between HFS and IFS and showed that the envelope of an HFS is an IFS. Xia and Xu (2011) proposed some aggregation techniques for HFSs and applied them to multiple-attribute decision-making (MADM) problems. Xu and Xia (2011) proposed diverse distance measures for HFSs. Wei (2012) proposed some prioritized aggregation operators for HFSs.

Owing to the complexity of practical decision problems, people generally give the attribute values for a given project by combining qualitative and quantitative evaluation. In particular, natural language terms such as “very good,” “good,” “bad,” and “very bad” make it easy to express a person’s judgment of a specific object. On the basis of the HFS and the linguistic term (LT), Lin et al. (2014) put forward the definition of the HFL set (HFLS), which allows several membership degrees to belong to a certain LT, and further proposed some HFL aggregation operators, in particular induced HFL correlated aggregation operators, HFL prioritized aggregation operators, and HFL power aggregation operators. An HFLS can therefore express uncertain information more conveniently than an HFS or linguistic variables alone.

In the last few years, many new aggregation operators have been studied (Liu et al. 2016a; Liu and Tang 2016; Liu and Teng 2016; Liu and Wang 2017; Rahman et al. 2017a, b). In particular, some of them can consider the relationships among input arguments. For example, based on the Bonferroni mean (BM), Zhu et al. (2012) proposed the geometric BM for HFSs, which combines the BM with the geometric mean (GM). Xu and Yager (2011) put forward the BM for intuitionistic fuzzy numbers (IFNs), and Liu et al. (2017) proposed interaction partitioned BM (PBM) operators for IFNs. Liu and Li (2017) proposed power BM operators for interval-valued IFNs (IVIFNs), Liu et al. (2014b) proposed the intuitionistic linguistic (IL) weighted BM, Liu and Liu (2016) put forward the intuitionistic uncertain linguistic (IUL) PBM, and Liu et al. (2016b) put forward the improved BM for multi-valued neutrosophic numbers. Liu and Chen (2017) proposed some Heronian mean (HM) operators for IFNs, Yu and Wu (2012) proposed HM operators for IVIFNs, and Liu (2017) proposed some power HM operators for IVIFNs.

Liu et al. (2014a) put forward the IULHM operators, and Liu and Teng (2017) proposed the normal neutrosophic number HM operators. Liu and Shi (2017) developed some HM operators for neutrosophic uncertain linguistic numbers. The BM and HM operators take into account only the interrelationship between two input arguments. To consider the relationships among any number of arguments, Maclaurin (1729) first proposed the Maclaurin symmetric mean (MSM), and Detemple and Robertson (1979) then proposed the generalized MSM. Further, a more general operator, the Muirhead mean (MM) (Muirhead 1902), was proposed; it can consider correlations among any number of inputs through an adjustable parameter vector, and the BM and MSM (Qin and Liu 2014) operators are special cases of the MM operator. Thus, the MM generalizes some existing operators and is well suited to MADM problems.

As discussed above, the HFLS is good at expressing uncertainty, so it is helpful for handling uncertain and vague information, while the MM can consider correlations among any number of inputs through an adjustable parameter vector and generalizes some existing operators. Hence, it is meaningful to extend the MM to aggregate HFL information. Motivated by this idea, the goals and contributions of this article are (1) to extend the MM operator to HFL information; (2) to explore some desirable properties and special cases of the proposed operators; (3) to develop a MADM method for HFL information based on the proposed operators; and (4) to show the feasibility and advantages of the proposed method.

To achieve these goals, the remainder of the article is organized as follows. In Sect. 2, we briefly introduce some concepts of linguistic information, HFL information, and the MM operator. In Sect. 3, we put forward the HFL Muirhead mean operators and explore their desirable properties and some special cases. In Sect. 4, we put forward a decision-making method with HFL information based on the proposed operators. In Sect. 5, we use a practical example to verify the effectiveness of the proposed method and compare it with other methods. In Sect. 6, we conclude the article.

2 Preliminaries

In this section, we will present some concepts and operational laws of the HFLS and the MM operator.

2.1 HFS

Definition 1

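(Torra 2010) Given a fixed set Y, an HFS on Y is described as follows:

$$\begin{aligned} F=\{ {\langle {y,h_F (y)} \rangle |y\in Y} \}, \end{aligned}$$(1)

where \(h_F(y)\) is a set of some values in [0,1], expressing the possible membership degrees of the element \(y\in Y\) to the set F. For convenience, \(hf=h_F(y)\) is called a hesitant fuzzy element (HFE).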

Definition 2

(Xia and Xu 2011) For a given HFE hf, we define \(s({hf})=\frac{1}{\# hf}\mathop {\sum }\nolimits _{\gamma \in hf} \gamma \) as the score function of hf, where \(\# hf\) is the number of the elements in hf.

For any two HFEs \(hf_1\) and \(hf_2\) , if \(s({hf_1 })>s({hf_2})\), then \(hf_1 >hf_2 \), and if \(s({hf_1})=s({hf_2})\), then \(hf_1 =hf_2 \).
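As a minimal sketch of Definition 2, the score function and the induced comparison can be written in a few lines of Python. The function names `hfe_score` and `compare_hfe` are illustrative choices, not part of the original method, and an HFE is assumed to be given as a plain collection of membership degrees.

```python
from typing import Iterable


def hfe_score(hf: Iterable[float]) -> float:
    """Score of a hesitant fuzzy element: the mean of its membership degrees."""
    values = list(hf)
    if not values:
        raise ValueError("an HFE needs at least one membership degree")
    return sum(values) / len(values)


def compare_hfe(hf1: Iterable[float], hf2: Iterable[float]) -> int:
    """Return 1 if hf1 > hf2, -1 if hf1 < hf2, and 0 if the scores are equal."""
    s1, s2 = hfe_score(hf1), hfe_score(hf2)
    return (s1 > s2) - (s1 < s2)
```

For instance, `compare_hfe([0.6, 0.8], [0.7, 0.8])` compares the scores 0.7 and 0.75, so the second HFE is ranked higher.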

2.2 Linguistic term set (LTS)

Let \(Sf=\{{sf_t |t=1,2,\ldots ,u} \}\) be a set of LTs with odd cardinality, where the label \(sf_t\) represents a possible value of a linguistic variable. It should satisfy the following properties [4]:

(1) The ordered set: if \(t>v\), then \(sf_t >sf_v \);

(2) The negation operator: neg\(({sf_t})=sf_v\) such that \(t+v=u+1\);

(3) The max operator: if \(sf_t\ge sf_v\), then \(\max ({sf_t ,sf_v})=sf_t\);

(4) The min operator: if \(sf_t\le sf_v\), then \(\min ({sf_t, sf_v})=sf_t\).

For example, Sf can be defined as:

\(Sf=\{{sf_1 ,sf_2 ,sf_3 ,sf_4 ,sf_5 } \}\,=\) {very poor, poor, medium, good, very good}.
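The five-term example can be sketched in Python to show how the negation operator acts on indices. This is only an illustration under the assumption that terms are identified by their 1-based index t; the names `LABELS`, `neg`, and `label` are hypothetical.

```python
LABELS = ("very poor", "poor", "medium", "good", "very good")  # sf_1 ... sf_5


def neg(t: int, u: int = len(LABELS)) -> int:
    """Index v of neg(sf_t), defined by t + v = u + 1."""
    if not 1 <= t <= u:
        raise ValueError(f"index {t} outside 1..{u}")
    return u + 1 - t


def label(t: int) -> str:
    """Label of sf_t (1-based index)."""
    return LABELS[t - 1]
```

Under this indexing, the negation of "poor" (t = 2) is "good" (v = 4), and the middle term "medium" is its own negation, which is why an odd cardinality is convenient.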

2.3 HFLS

Definition 3

(Lin et al. 2014) Given a fixed set Y, an HFLS on Y can be described as follows:

$$\begin{aligned} A=\{ {\langle {y,s_{\theta (y)} ,h_A (y)} \rangle |y\in Y} \}, \end{aligned}$$(2)

where \(h_A(y)\) is a subset of [0,1], expressing the possible membership degrees of the element \(y\in Y\) to the linguistic term \(s_{\theta ( y )} \), and \(\theta (y)\in [1,u]\). For convenience, we call \(af=\langle {s_{\theta (y)} ,h_A (y)}\rangle \) an HFL element (HFLE), and A is the set of all HFLEs.

Definition 4

(Lin et al. 2014) For an HFLE \(af=\langle s_{\theta (y)}, h_A (y) \rangle \) with \(hf=h_A(y)\), we define \(s({af} )=( {\frac{1}{\# hf}\mathop {\sum }\nolimits _{\gamma \in hf} \gamma })s_{\theta (y)}\) as the score function of af, where \(\# hf\) is the number of elements in hf.

For two HFLEs \(af_1 \) and \(af_2 \), if \(s( {af_1 } )>s({af_2 } )\), then \(af_1 >af_2 \), and if \(s({af_1})=s({af_2})\), then \(af_1 =af_2 \).
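The following Python sketch illustrates Definition 4 on three illustrative HFLEs. It assumes that the scalar multiple of a linguistic term is read through its index, so that the score is represented by the number mean(h) · θ; the tuple representation `(theta, memberships)` and the names `hfle_score` and `rank_hfles` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

HFLE = Tuple[float, Sequence[float]]  # (theta, membership degrees)


def hfle_score(af: HFLE) -> float:
    """Index of the score s(af): mean membership degree times theta."""
    theta, h = af
    return theta * sum(h) / len(h)


def rank_hfles(elements: Dict[str, HFLE]) -> List[str]:
    """Names of the HFLEs sorted by descending score."""
    return sorted(elements, key=lambda name: hfle_score(elements[name]), reverse=True)


elements = {
    "af1": (2, [0.6, 0.8]),  # <s_2, {0.6, 0.8}>
    "af2": (4, [0.7, 0.8]),  # <s_4, {0.7, 0.8}>
    "af3": (1, [0.6, 0.7]),  # <s_1, {0.6, 0.7}>
}
ranking = rank_hfles(elements)
```

Here the score indices are 1.4, 3.0, and 0.65, so the second element ranks first and the third ranks last.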

For the HFLEs \(af=\langle s_{\theta ( {af} )}, h_A ( {af} ) \rangle \), \(af_1 =\langle s_{\theta ( {af_1 } )} ,h_A ( {af_1 } ) \rangle \), and \(af_2 =\langle {s_{\theta ( {af_2 } )} ,h_A ( {af_2 } )} \rangle \), some new operations on them are given as follows:

(1)

$$\begin{aligned} af_{1} \oplus af_{2} = \left\langle s_{\theta ( af_{1} ) + \theta ( af_{2} )} ,\bigcup \limits _{\gamma ( af_{1} ) \in h( af_{1} ) ,\gamma ( af_{2} ) \in h( af_{2} )} \left\{ \gamma ( af_{1} ) + \gamma ( af_{2} ) - \gamma ( af_{1} ) \gamma ( af_{2} ) \right\} \right\rangle , \end{aligned}$$(3)

(2)

$$\begin{aligned} af_{1} \otimes af_{2} = \left\langle s_{\theta ( af_{1} ) \times \theta ( af_{2} )} ,\bigcup \limits _{\gamma ( af_{1} ) \in h( af_{1} ) ,\gamma ( af_{2} ) \in h( af_{2} )} \left\{ \gamma ( af_{1} ) \gamma ( af_{2} ) \right\} \right\rangle , \end{aligned}$$(4)

(3)

$$\begin{aligned} \lambda af=\left\langle s_{\lambda \theta ( af )} ,\bigcup \limits _{\gamma ( af )\in h( af )} \left\{ 1-( 1-\gamma ( af ) )^{\lambda } \right\} \right\rangle , \end{aligned}$$(5)

(4)

$$\begin{aligned} af^{\lambda }=\left\langle s_{\theta ( af )^{\lambda }} ,\bigcup \limits _{\gamma ( af )\in h( af )} \left\{ \gamma ( af )^{\lambda } \right\} \right\rangle . \end{aligned}$$(6)
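The four operational laws in Eqs. (3)-(6) can be sketched in Python as follows. This is an illustrative implementation under the assumption that an HFLE is stored as a linguistic index together with a set of membership degrees; the class `HFLE` and the helper names `make`, `add`, `mul`, `scale`, and `power` are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class HFLE:
    theta: float          # index of the linguistic term s_theta
    h: FrozenSet[float]   # possible membership degrees in [0, 1]


def make(theta: float, h: Iterable[float]) -> HFLE:
    return HFLE(theta, frozenset(h))


def add(a: HFLE, b: HFLE) -> HFLE:
    """Eq. (3): indices add; memberships combine as x + y - x*y."""
    return HFLE(a.theta + b.theta,
                frozenset(x + y - x * y for x, y in product(a.h, b.h)))


def mul(a: HFLE, b: HFLE) -> HFLE:
    """Eq. (4): indices multiply; memberships combine as x*y."""
    return HFLE(a.theta * b.theta,
                frozenset(x * y for x, y in product(a.h, b.h)))


def scale(lam: float, a: HFLE) -> HFLE:
    """Eq. (5): index scaled by lam; memberships become 1 - (1 - x)**lam."""
    return HFLE(lam * a.theta, frozenset(1 - (1 - x) ** lam for x in a.h))


def power(a: HFLE, lam: float) -> HFLE:
    """Eq. (6): index and memberships are both raised to the power lam."""
    return HFLE(a.theta ** lam, frozenset(x ** lam for x in a.h))
```

For example, adding ⟨s_2, {0.6, 0.8}⟩ and ⟨s_4, {0.7, 0.8}⟩ gives the index 6 and the four membership degrees 0.88, 0.92, 0.94, and 0.96 (up to floating-point rounding), one for each pair of hesitant values.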

2.4 MM operator

Muirhead (1902) originally put forward the MM operator, which is shown as follows:

Definition 5

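(Muirhead 1902) Let \(\phi _i ( {i=1,2,3,\ldots ,n} )\) be a set of positive numbers, and let \(P=( {p_1 ,p_2 ,\ldots ,p_n } )\in R^{n}\) be a vector of parameters. Suppose

$$\begin{aligned} \hbox {MM}^{P}( {\phi _1 ,\phi _2 ,\ldots ,\phi _n } )=\left( {\frac{1}{n!}\sum \limits _{\sigma \in S_n } {\prod \limits _{js=1}^n {\phi _{\sigma (js)}^{p_{js} } } } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}. \end{aligned}$$(7)

Then, MM\(^{P}\) is called the MM operator, where \(\sigma (js)(js=1,2,\ldots , n)\) is any permutation of \(( {1,2,\ldots ,n} )\), and \(S_n \) is the set of all permutations of \(( {1,2,\ldots ,n})\).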

Furthermore, from Eq. (7), we can see that

(1) If \(P=( {1,0,\ldots ,0} )\), the MM reduces to MM\(^{(1,0,\ldots ,0)}( {\phi _1 ,\phi _2 ,\ldots ,\phi _n } )=\frac{1}{n}\mathop {\sum }\nolimits _{j=1}^n {\phi _j } \), which is the arithmetic averaging operator.

(2) If \(P=( {1/n,1/n,\ldots ,1/n} )\), the MM reduces to MM\(^{( {1/n,1/n,\ldots ,1/n} )}( {\phi _1 ,\phi _2 ,\ldots ,\phi _n } )=\mathop {\prod }\nolimits _{j=1}^n {\phi _j^{1/n} }\), which is the GM operator.

(3) If \(P=( {1,1,0,0,\ldots ,0} )\), the MM reduces to MM\(^{( {1,1,0,0,\ldots ,0} )}( {\phi _1 ,\phi _2 ,\ldots ,\phi _n } )=( {\frac{1}{n( {n-1} )}\mathop {\mathop {\sum }\nolimits _{i,j=1}}\limits _{i\ne j}^n {\phi _i\phi _j} } )^{\frac{1}{2}}\), which is the BM operator (Liu et al. 2014b).

(4) If \(P=(\overbrace{1,1,\ldots ,1}^k,\overbrace{0,0,\ldots ,0}^{n-k})\), the MM reduces to MM\(^{(\overbrace{1,1,\ldots ,1}^k,\overbrace{0,0,\ldots ,0}^{n-k})}( {\phi _1 ,\phi _2 ,\ldots ,\phi _n } )=( {\frac{\mathop {\sum }\nolimits _{1\le i_1<\cdots <i_k \le n} \mathop {\prod }\nolimits _{j=1}^k \phi _{i_j } }{C_n^k }} )^{\frac{1}{k}}\), which is the MSM operator (Maclaurin 1729).
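The special cases above can be checked numerically with a short Python sketch of the crisp MM operator. It enumerates all n! permutations directly, so it is only intended for small n; the function name `muirhead_mean` is an illustrative choice, and the parameter vector is assumed to have non-negative entries with a positive sum.

```python
from itertools import permutations
from math import factorial, prod
from typing import Sequence


def muirhead_mean(values: Sequence[float], p: Sequence[float]) -> float:
    """Crisp Muirhead mean: average over all permutations, then the 1/sum(p) power."""
    n = len(values)
    if len(p) != n:
        raise ValueError("values and p must have the same length")
    if sum(p) <= 0:
        raise ValueError("sum(p) must be positive")
    total = sum(
        prod(values[sigma[j]] ** p[j] for j in range(n))
        for sigma in permutations(range(n))
    )
    return (total / factorial(n)) ** (1 / sum(p))


data = [1.0, 2.0, 4.0]
arithmetic = muirhead_mean(data, (1, 0, 0))        # arithmetic average, 7/3
geometric = muirhead_mean(data, (1/3, 1/3, 1/3))   # geometric mean, 2
bonferroni = muirhead_mean(data, (1, 1, 0))        # BM, sqrt(14/3)
```

With three inputs, the vector (1, 1, 0) is also the MSM case with k = 2, so the BM and MSM values coincide for this data.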

3 Hesitant fuzzy linguistic MM aggregation operators

In this section, we propose the MM operator for HFL information (HFLMM) and the weighted HFLMM (WHFLMM) operator, and we discuss their properties and special cases.

3.1 HFLMM operator

Definition 6

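Let \(af_i =\langle {s_{\theta ( {af_i } )} ,h_A ( {af_i } )} \rangle ( {i=1,2,\ldots ,n} )\) be a set of HFLEs, and \(P=( {p_1 ,p_2 ,\ldots ,p_n } )\in R^{n}\) be a vector of parameters. If

$$\begin{aligned} \hbox {HFLMM}^{P}( {af_1 ,af_2 ,\ldots ,af_n } )=\left( {\frac{1}{n!}\mathop \oplus \limits _{\sigma \in S_n } \mathop \otimes \limits _{js=1}^n af_{\sigma (js)}^{p_{js} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}, \end{aligned}$$(8)

then we call \(\hbox {HFLMM}^{P}\) the HFLMM operator, where \(\sigma (js)(js=1,2,\ldots ,n)\) is any permutation of \(({1,2,\ldots ,n} )\), and \(S_n\) is the collection of all permutations of \(( {1,2,\ldots ,n} )\).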

Theorem 1

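Let \(af_i =\langle {s_{\theta ({af_i})} ,h_A ({af_i} )}\rangle ({i=1,2,\ldots ,n})\) be a set of HFLEs, then the result produced by Definition 6 can be expressed as

$$\begin{aligned}&\hbox {HFLMM}^{P}( {af_1 ,af_2 ,\ldots ,af_n } )=\left\langle s_{\left( {\frac{1}{n!}\sum \nolimits _{\sigma \in S_n } {\prod \nolimits _{js=1}^n {\theta ( {af_{\sigma (js)} } )^{p_{js} }} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}} ,\right. \\&\quad \left. \bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ \left( 1-\prod \limits _{\sigma \in S_n } \left( 1-\prod \limits _{js=1}^n \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right) ^{\frac{1}{n!}} \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }} \right\} \right\rangle . \end{aligned}$$(9)

Proof

According to the operational laws of the HFLEs, we have

$$\begin{aligned} af_{\sigma (js)}^{p_{js} } =\left\langle s_{\theta ( {af_{\sigma (js)} } )^{p_{js} }} ,\bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right\} \right\rangle \end{aligned}$$

and

$$\begin{aligned} \mathop \otimes \limits _{js=1}^n af_{\sigma (js)}^{p_{js} } =\left\langle s_{\prod \nolimits _{js=1}^n \theta ( {af_{\sigma (js)} } )^{p_{js} }} ,\bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ \prod \limits _{js=1}^n \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right\} \right\rangle , \end{aligned}$$

then

$$\begin{aligned} \mathop \oplus \limits _{\sigma \in S_n } \mathop \otimes \limits _{js=1}^n af_{\sigma (js)}^{p_{js} } =\left\langle s_{\sum \nolimits _{\sigma \in S_n } \prod \nolimits _{js=1}^n \theta ( {af_{\sigma (js)} } )^{p_{js} }} ,\bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ 1-\prod \limits _{\sigma \in S_n } \left( 1-\prod \limits _{js=1}^n \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right) \right\} \right\rangle . \end{aligned}$$

Further, we can obtain

$$\begin{aligned} \frac{1}{n!}\mathop \oplus \limits _{\sigma \in S_n } \mathop \otimes \limits _{js=1}^n af_{\sigma (js)}^{p_{js} } =\left\langle s_{\frac{1}{n!}\sum \nolimits _{\sigma \in S_n } \prod \nolimits _{js=1}^n \theta ( {af_{\sigma (js)} } )^{p_{js} }} ,\bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ 1-\prod \limits _{\sigma \in S_n } \left( 1-\prod \limits _{js=1}^n \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right) ^{\frac{1}{n!}} \right\} \right\rangle . \end{aligned}$$

Therefore

$$\begin{aligned}&\left( {\frac{1}{n!}\mathop \oplus \limits _{\sigma \in S_n } \mathop \otimes \limits _{js=1}^n af_{\sigma (js)}^{p_{js} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}=\left\langle s_{\left( {\frac{1}{n!}\sum \nolimits _{\sigma \in S_n } {\prod \nolimits _{js=1}^n {\theta ( {af_{\sigma (js)} } )^{p_{js} }} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}} ,\right. \\&\quad \left. \bigcup \limits _{\gamma ( {af_{\sigma (js)} } )\in h_A ( {af_{\sigma (js)} } )} \left\{ \left( 1-\prod \limits _{\sigma \in S_n } \left( 1-\prod \limits _{js=1}^n \gamma ( {af_{\sigma (js)} } )^{p_{js} } \right) ^{\frac{1}{n!}} \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }} \right\} \right\rangle . \end{aligned}$$

\(\square \)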

Example 1

Let \(af_1 =\langle {s_2 ,\{ {0.6,0.8} \}} \rangle \), \(af_2 =\langle {s_4 ,\{ {0.7, 0.8} \}} \rangle \), and \(af_3 =\langle {s_1 ,\{ {0.6, 0.7} \}} \rangle \) be three HFLEs, then according to (9), we can use the \(\hbox {HFLMM}\) operator to aggregate them shown as follows (suppose \(P=( {1,2,1} )\)).
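The aggregation in Example 1 can be reproduced with a small Python sketch of the closed form in Theorem 1, using the linguistic and membership expressions that also appear in the proof of monotonicity below. The function name `hflmm` and the tuple representation `(theta, memberships)` are illustrative assumptions; the sketch enumerates all permutations and all combinations of hesitant values, so it is only practical for small inputs.

```python
from itertools import permutations, product
from math import factorial, prod
from typing import List, Sequence, Tuple

HFLE = Tuple[float, Sequence[float]]  # (theta, membership degrees)


def hflmm(elements: Sequence[HFLE], p: Sequence[float]) -> Tuple[float, List[float]]:
    """Closed-form HFLMM: returns (linguistic index, sorted membership degrees)."""
    n = len(elements)
    if len(p) != n or sum(p) <= 0:
        raise ValueError("p must match the inputs and have a positive sum")
    perms = list(permutations(range(n)))
    root = 1 / sum(p)
    thetas = [e[0] for e in elements]
    # Linguistic part: crisp Muirhead mean of the indices.
    theta = (sum(prod(thetas[s[j]] ** p[j] for j in range(n)) for s in perms)
             / factorial(n)) ** root
    # Membership part: one value for each choice of a degree from every input.
    members = set()
    for gammas in product(*(e[1] for e in elements)):
        inner = prod(
            (1 - prod(gammas[s[j]] ** p[j] for j in range(n))) ** (1 / factorial(n))
            for s in perms
        )
        members.add((1 - inner) ** root)
    return theta, sorted(members)


example = [(2, [0.6, 0.8]), (4, [0.7, 0.8]), (1, [0.6, 0.7])]
theta, members = hflmm(example, (1, 2, 1))
```

For this input, the sum over the six permutations of the index products is 112, so the linguistic index equals (112/6)^(1/4). Because the closed form is symmetric in its arguments, two different choices of hesitant values can lead to the same membership degree, so the resulting set may contain fewer distinct values than the number of combinations.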

Next, we discuss some desirable properties of the \(\hbox {HFLMM}\) operator.

Theorem 2

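(Idempotency). If \(af_i =af=\langle {s_{\theta ( {af} )} ,h_A ( {af} )} \rangle \) for all \(i=1,2,\ldots ,n\), then

$$\begin{aligned} \hbox {HFLMM}^{P}( {af_1 ,af_2 ,\ldots ,af_n } )=af. \end{aligned}$$(10)

Proof

Since \(af=\langle {s_{\theta ({af})} ,h_A ({af} )} \rangle \), based on Theorem 1, we have

$$\begin{aligned} \hbox {HFLMM}^{P}( {af,af,\ldots ,af} )&=\left\langle s_{\left( {\frac{1}{n!}\sum \nolimits _{\sigma \in S_n } {\prod \nolimits _{js=1}^n {\theta ( {af} )^{p_{js} }} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}} ,\bigcup \limits _{\gamma ( {af} )\in h_A ( {af} )} \left\{ \left( 1-\prod \limits _{\sigma \in S_n } \left( 1-\prod \limits _{js=1}^n \gamma ( {af} )^{p_{js} } \right) ^{\frac{1}{n!}} \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }} \right\} \right\rangle \\&=\left\langle s_{\left( \theta ( {af} )^{\sum \nolimits _{js=1}^n {p_{js} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }}} ,\bigcup \limits _{\gamma ( {af} )\in h_A ( {af} )} \left\{ \left( \gamma ( {af} )^{\sum \nolimits _{js=1}^n {p_{js} } } \right) ^{\frac{1}{\sum \nolimits _{js=1}^n {p_{js} } }} \right\} \right\rangle \\&=\left\langle {s_{\theta ( {af} )} ,h_A ( {af} )} \right\rangle =af, \end{aligned}$$

which finishes the proof of Theorem 2. \(\square \)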

Theorem 3

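(Monotonicity). Let \(af_{js} =\langle {s_{\theta ({af_{js} } )} ,h_A ( {af_{js} } )} \rangle \) and \({a}'_{js} =\langle {s_{\theta ( {{a}'_{js} } )} ,h_A ( {{a}'_{js} } )} \rangle \) \((js=1,2,\ldots ,n)\) be two sets of HFLEs. If \(\theta ( {af_{js} } )\ge \theta ( {{a}'_{js} } )\) and \(h_A ( {af_{js} } )\ge h_A ( {{a}'_{js} } )\) for all \(js=1,2,\ldots , n\), then

$$\begin{aligned} \hbox {HFLMM}^{P}(af_1 ,af_2 ,\ldots ,af_n )\ge \hbox {HFLMM}^{P}({a}'_1 ,{a}'_2 ,\ldots ,{a}'_n ). \end{aligned}$$(11)

Proof

By Theorem 1, both aggregated values have the same closed form, so it suffices to compare their linguistic parts and their membership parts under the conditions \(\theta ( {af_{js} } )\ge \theta ( {{a}'_{js} } )\) and \(h_A ( {af_{js} } )\ge h_A ( {{a}'_{js} } )\).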

Since \(\theta ( {af_{js} } )\ge \theta ( {{a}'_{js} } )\), then \(( {\frac{1}{n!}\mathop {\sum }\nolimits _{\sigma \in S_n } {\mathop {\prod }\nolimits _{js=1}^n {\theta ( {af_{\sigma (js)}} )^{p_{js} }} } } )^{\frac{1}{\mathop {\sum }\nolimits _{js=1}^n {p_{js} } }}\ge ( {\frac{1}{n!}\mathop {\sum }\nolimits _{\sigma \in S_n } {\mathop {\prod }\nolimits _{js=1}^n {\theta ( {{a}'_{\sigma (js)}} )^{p_{js} }} } } )^{\frac{1}{\mathop {\sum }\nolimits _{js=1}^n {p_{js} } }}\).

Since \(h_A ( {af_{js} } )\ge h_A ( {{a}'_{js} } )\), then \(\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {af_{\sigma (js)} } )^{p_{js} }} \ge \mathop {\prod }\nolimits _{js=1}^n {\gamma ( {{a}'_{\sigma (js)} } )^{p_{js} }} \),

and \(( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {af_{\sigma (js)} } )^{p_{js} }} } )\le ( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {{a}'_{\sigma (js)} } )^{p_{js} }} } )\).

further, \(\mathop {\prod }\nolimits _{\sigma \in S_n } {( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {af_{\sigma (js)} } )^{p_{js} }} } )^{\frac{1}{n!}}} \le \mathop {\prod }\nolimits _{\sigma \in S_n } {( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {{a}'_{\sigma (js)} } )^{p_{js} }} } )^{\frac{1}{n!}}} \),

so, \(( {1-\mathop {\prod }\nolimits _{\sigma \in S_n } {( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {af_{\sigma (js)} } )^{p_{js} }} } )^{\frac{1}{n!}}} } )^{\frac{1}{\mathop {\sum }\nolimits _{js=1}^n {p_{js} } }}\ge ( {1-\mathop {\prod }\nolimits _{\sigma \in S_n } {( {1-\mathop {\prod }\nolimits _{js=1}^n {\gamma ( {{a}'_{\sigma (js)} } )^{p_{js} }} } )^{\frac{1}{n!}}} } )^{\frac{1}{\mathop {\sum }\nolimits _{js=1}^n {p_{js} } }}\),

i.e., \(\hbox {HFLMM}^{P}(af_1 ,af_2 ,\ldots ,af_n )\ge \hbox {HFLMM}^{P}({a}'_1 ,{a}'_2 ,\ldots ,{a}'_n )\),

which finishes the proof of Theorem 3. \(\square \)

Theorem 4

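(Boundedness). Suppose \(af^{-}=\min (af_1 ,af_2 , \ldots ,af_n )\) and \(af^{+}=\max (af_1 ,af_2 ,\ldots ,af_n)\), then

$$\begin{aligned} af^{-}\le \hbox {HFLMM}^{P}(af_1 ,af_2 ,\ldots ,af_n )\le af^{+}. \end{aligned}$$(12)

Proof

According to Theorem 3, we have

$$\begin{aligned} \hbox {HFLMM}^{P}(af^{-},af^{-},\ldots ,af^{-})\le \hbox {HFLMM}^{P}(af_1 ,af_2 ,\ldots ,af_n )\le \hbox {HFLMM}^{P}(af^{+},af^{+},\ldots ,af^{+}), \end{aligned}$$

and according to Theorem 2, we have

$$\begin{aligned} \hbox {HFLMM}^{P}(af^{-},af^{-},\ldots ,af^{-})=af^{-},\quad \hbox {HFLMM}^{P}(af^{+},af^{+},\ldots ,af^{+})=af^{+}. \end{aligned}$$

So, we can get \(af^{-}\le \hbox {HFLMM}^{P}(af_1 ,af_2 ,\ldots ,af_n )\le af^{+}\),

which finishes the proof of Theorem 4. \(\square \)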

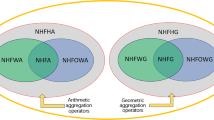

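Next, we investigate some special cases of the HFLMM operator for different parameter vectors.

(1) When \(P=( {1,0,\ldots ,0})\), the HFLMM operator reduces to the HFLAM operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(1,0,\ldots ,0)}( {af_1 ,af_2 ,\ldots ,af_n } )=\frac{1}{n}\mathop \oplus \limits _{j=1}^n af_j . \end{aligned}$$(13)

(2) When \(P=({\lambda ,0,\ldots ,0} )\), the HFLMM operator reduces to the HFL GAM operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(\lambda ,0,\ldots ,0)}( {af_1 ,af_2 ,\ldots ,af_n } )=\left( {\frac{1}{n}\mathop \oplus \limits _{j=1}^n af_j^{\lambda } } \right) ^{\frac{1}{\lambda }}. \end{aligned}$$(14)

(3) When \(P=({1,1,0,0,\ldots ,0} )\), the HFLMM operator reduces to the HFL BM operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(1,1,0,0,\ldots ,0)}( {af_1 ,af_2 ,\ldots ,af_n } )=\left( {\frac{1}{n( {n-1} )}\mathop {\mathop \oplus \limits _{i,j=1}}\limits _{i\ne j}^n ( {af_i \otimes af_j } )} \right) ^{\frac{1}{2}}. \end{aligned}$$(15)

(4) When \(P=(\overbrace{1,1,\ldots ,1}^k,\overbrace{0,0,\ldots ,0}^{n-k})\), the HFLMM operator reduces to the HFLMSM operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(\overbrace{1,1,\ldots ,1}^k,\overbrace{0,0,\ldots ,0}^{n-k})}( {af_1 ,af_2 ,\ldots ,af_n } )=\left( {\frac{\mathop \oplus \limits _{1\le i_1<\cdots <i_k \le n} \mathop \otimes \limits _{j=1}^k af_{i_j } }{C_n^k }} \right) ^{\frac{1}{k}}. \end{aligned}$$(16)

(5) When \(P=( {1,1,\ldots ,1} )\), the HFLMM operator reduces to the HFL geometric averaging operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(1,1,\ldots ,1)}( {af_1 ,af_2 ,\ldots ,af_n } )=\left( {\mathop \otimes \limits _{j=1}^n af_j } \right) ^{\frac{1}{n}}. \end{aligned}$$(17)

(6) When \(P=( {1/n,1/n,\ldots ,1/n} )\), the HFLMM operator reduces to the HFL geometric averaging operator (Lin et al. 2014).

$$\begin{aligned} \hbox {HFLMM}^{(1/n,1/n,\ldots ,1/n)}( {af_1 ,af_2 ,\ldots ,af_n } )=\mathop \otimes \limits _{j=1}^n af_j^{\frac{1}{n}} . \end{aligned}$$(18)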

3.2 WHFLMM operator

Definition 7

Let \(af_i =\langle {s_{\theta ( {af_i } )} ,h_A ( {af_i } )} \rangle ( {i=1,2,\ldots ,n} )\) be a set of HFLEs, \(P=( {p_1 ,p_2 ,\ldots ,p_n } )\in R^{n}\) be a vector of parameters, and \(\omega =( {\omega _1 ,\omega _2 ,\ldots ,\omega _n } )^{T}\) be the weight vector of \(af_{js}\ (js=1,2,\ldots ,n)\), with \(\omega _i \in [ {0,1} ]\), \(i=1,2,\ldots ,n\) and \(\mathop {\sum }\nolimits _{i=1}^n {\omega _i =1} \). If

then we call \(\hbox {WHFLMM}^{P}\) the WHFLMM operator, where \(\sigma (js) (js=1,2,\ldots ,n)\) is any permutation of \(( {1,2,\ldots ,n} )\), and \(S_n \) is the collection of all permutations of \(( {1,2,\ldots ,n} )\).

Theorem 5

Let \(af_i =\langle {s_{\theta ( {af_i } )} ,h_A ( {af_i } )} \rangle ( {i=1,2,\ldots ,n} )\) be a set of HFLEs, \(P=( {p_1 ,p_2 ,\ldots ,p_n } )\in R^{n}\) be a vector of parameters, and \(\omega =( {\omega _1 ,\omega _2 ,\ldots ,\omega _n } )^{T}\) be the weight vector of \(af_{js}\ (js=1,2,\ldots ,n)\), with \(\omega _i \in \left[ {0,1} \right] , i=1,2,\ldots ,n\) and \(\mathop {\sum }\nolimits _{i=1}^n {\omega _i =1} \). Then,

The proof of this theorem is similar to that of Theorem 1, and it is omitted here.

Example 2

Let \(af_1 =\langle {s_2 , \{ {0.6,0.8} \}} \rangle \), \(af_2 =\langle {s_4 ,\{ {0.7, 0.8} \}} \rangle \), and \(af_3 =\langle {s_1 ,\{ {0.6,0.7} \}} \rangle \) be three HFLEs, and \(\omega =(0.3,0.4,0.3)^{T}\) be the weighted vector of \(af_i (i=1,2,3)\), then we can use the WHFLMM operator to aggregate the three HFLEs. Suppose \(P=(1,2,1)\), by Formula (20), we can get

In light of the operational laws of the HFLEs, the WHFLMM operator also has the following desirable properties.

Theorem 6

(Monotonicity). If \(\theta ( {af_{js} } )\ge \theta ( {{a}'_{js} } ),h_A ( {af_{js} } )\ge h_A ( {{a}'_{js} } )\) for all js, then

Theorem 7

(Boundedness). Suppose \(af^{-}=\min (af_1 ,af_2 ,\ldots ,af_n )\) and \(af^{+}=\max (af_1 ,af_2 , \ldots ,af_n )\), then

The proofs of the above theorems are similar to those of the corresponding theorems for the HFLMM operator, so they are omitted here.

4 A group decision-making approach based on the WHFLMM operator

In this section, we use the proposed WHFLMM operator to solve a MADM problem for ERP system selection [adapted from Lin et al. (2014)].

A MADM problem for selecting an ERP system with HFL information is described as follows. Let \(Af=\{ {Af_1 ,Af_2 ,\ldots ,Af_m } \}\) be a set of alternatives and \(Gf=\{ {Gf_1 ,Gf_2 ,\ldots ,Gf_n } \}\) be a set of attributes. The decision maker (DM) uses an HFLE \(\langle {s_{\theta ( {a_{ij} } )} ,h( {a_{ij} } )} \rangle \) to describe the attribute \(Gf_j\) of the alternative \(Af_i \). Suppose the weight vector of the attributes is \(\omega =(\omega _1 ,\omega _2 ,\ldots ,\omega _n )\) satisfying \(\omega _j \ge 0(j=1,2,\ldots ,n),\mathop {\sum }\nolimits _{j=1}^n {\omega _j } =1\), and the decision matrix \(H=( {\tilde{h}( {a_{ij} } )} )_{m\times n} =( {\langle {s_{\theta ( {a_{ij} } )} ,h( {a_{ij} } )} \rangle } )_{m\times n} \) is the HFL decision matrix, where \(\langle {s_{\theta ( {a_{ij} } )} ,h( {a_{ij} } )} \rangle ( i=1,2, \ldots ,m )( {j=1,2,\ldots ,n} )\) takes the form of HFLEs.

Then, we utilize the WHFLMM operator to handle the ERP system selection problem with HFL information.

Procedure 1: Standardization of the attributes

In practical decision-making problems, two types of attributes may exist, i.e., cost attributes and benefit attributes. To eliminate the difference in types, we need to convert them into the same type.

Suppose the converted decision matrix is expressed by \(\hat{{H}}=[ {\hat{{h}}_{( {a_{ij} } )}}]_{m\times n} \), and an attribute of the cost type can be converted into the benefit type by

Procedure 2: Calculate the comprehensive evaluation value as follows:

Procedure 3: Rank \(\hat{{h}}_i\ ( {i=1,2,\ldots ,m} )\) in descending order in light of Definition 4.

Procedure 4: End.
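The control flow of Procedures 2 and 3 can be sketched in Python as follows. This is only a skeleton under stated assumptions: all attributes are taken to be of the benefit type already, the aggregation operator is passed in as a callable, and `placeholder_aggregate` is a deliberately simple stand-in that is not the WHFLMM operator. The toy matrix is invented for illustration and is not the data of the numerical example.

```python
from typing import Callable, Dict, List, Sequence, Tuple

HFLE = Tuple[float, Sequence[float]]  # (theta, membership degrees)


def hfle_score(af: HFLE) -> float:
    """Score index from Definition 4: mean membership degree times theta."""
    theta, h = af
    return theta * sum(h) / len(h)


def rank_alternatives(
    matrix: Dict[str, List[HFLE]],
    aggregate: Callable[[List[HFLE]], HFLE],
) -> Tuple[List[str], Dict[str, float]]:
    """Aggregate each row (Procedure 2), then rank by score (Procedure 3)."""
    overall = {name: aggregate(row) for name, row in matrix.items()}
    scores = {name: hfle_score(value) for name, value in overall.items()}
    order = sorted(scores, key=scores.get, reverse=True)
    return order, scores


def placeholder_aggregate(weights: Sequence[float]) -> Callable[[List[HFLE]], HFLE]:
    """Stand-in aggregator (NOT WHFLMM): weighted means of indices and of mean memberships."""
    def aggregate(row: List[HFLE]) -> HFLE:
        theta = sum(w * t for w, (t, _) in zip(weights, row))
        gamma = sum(w * sum(h) / len(h) for w, (_, h) in zip(weights, row))
        return theta, [gamma]
    return aggregate


# Hypothetical two-alternative, two-attribute matrix for illustration only.
toy_matrix = {
    "X1": [(2, [0.5]), (4, [0.5])],
    "X2": [(3, [0.8]), (5, [0.6, 1.0])],
}
order, scores = rank_alternatives(toy_matrix, placeholder_aggregate((0.5, 0.5)))
```

Passing the aggregator as a parameter keeps the ranking logic independent of the operator, so an implementation of the WHFLMM operator with a chosen parameter vector P could be substituted for the placeholder without changing the rest of the procedure.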

5 Numerical example

In the following, we use a practical problem to illustrate the application of the developed method. Assume a company wants to select an ERP system [adapted from Lin et al. (2014)]. After collecting all available information about ERP vendors and systems, we pick five ERP systems \(Af_i\ ({i=1,2,3,4,5})\) as candidates. The company invites a number of outside specialists to give their evaluation values. To make the problem clear, the company picks four attributes: (1) function and technology \(Gf_1 \), (2) strategic fitness \(Gf_2 \), (3) vendor’s ability \(Gf_3 \), and (4) vendor’s reputation \(Gf_4 \), and the attribute weight vector is \(\omega =( {0.2,0.1,0.3,0.4} )^{T}\). To keep the evaluation results independent, the DMs are required to assess the five candidate ERP systems \(Af_i\ ({i=1,2,3,4,5} )\) under the above four attributes anonymously, and the HFL decision matrix \(H=( {\tilde{h}( {a_{ij} } )} )_{5\times 4} =( {\langle {s_{\theta ( {a_{ij} } )} ,h( {a_{ij} } )} \rangle } )_{5\times 4} \) is presented in Table 1, where \(\langle {s_{\theta ( {a_{ij} } )}, h( {a_{ij} } )} \rangle ( {i=1,2,3,4,5} )( j=1,2, 3,4 )\) takes the form of HFLEs.

Then, we can use the above method to choose the best ERP system(s).

5.1 Evaluation steps of the proposed method

Procedure 1: Because all attributes are of the benefit type, it is not necessary to transform the attribute values.

Procedure 2: Calculate the comprehensive evaluation values by Formula (24) with \(P=( {1,1,\ldots ,1} )\); we have

Procedure 3: Calculating the score of each \(\hat{{h}}_i\ ( i=1,2,3,4,5 )\) by Definition 4, we have

Procedure 4: Rank the alternatives

In light of Definition 4, the result is \(Af_3 \succ Af_2 \succ Af_5 \succ Af_1 \succ Af_4 \).

The best is alternative \(Af_3 \), i.e., the third ERP system is the best one.

5.2 Discussion

To understand the impact of the parameter vector P on the MADM problem in this example, we use different values of P to obtain the ranking results, which are shown in Table 2.

From Table 2, we can see that the score values vary with the parameter vector P and that the ranking results differ accordingly. Generally speaking, DMs can select particular values; for example, when \(P=(1,1,1,1)\), the WHFLMM operator reduces to the HFLWG operator (Lin et al. 2014). Furthermore, for the WHFLMM operator, the score values decrease as more interrelationships among attributes are considered. Hence, different P can be regarded as reflecting the DMs’ risk preference.

To demonstrate the validity and advantages of the proposed method, we apply two existing methods, namely those based on the HFLWA operator and the HFLWG operator in Lin et al. (2014), to this example; the ranking results are listed in Table 3.

From Table 3, we can see that these two methods yield the same best alternative \(Af_3 \), although the ranking results differ. Further, the scores and ranking produced by the HFLWA operator are exactly the same as those produced by our method when \(P=(1,0,0,0)\), while the scores and ranking produced by the HFLWG operator are exactly the same as those produced by our method when \(P=(1,1,1,1)\) or \(P=(0.25,0.25,0.25,0.25)\). This is because the HFLWA operator is the special case of our method when \(P=(1,0,0,0)\), and the HFLWG operator is the special case of our method when \(P=(1,1,1,1)\) or \(P=(0.25,0.25,0.25,0.25)\). These results show that our proposed method is effective and feasible and is also more general than the methods proposed by Lin et al. (2014).

Table 4 compares the two existing methods with our proposed method with respect to some features.

Compared with our method, the method based on the HFLWA operator proposed by Lin et al. (2014) can easily integrate vague information, and its computation is relatively simple. However, its disadvantage is that it does not take the correlation between the inputs into account because it assumes that the inputs are independent. The method proposed in this article can take the correlation among all inputs into account, and it provides a general and flexible aggregation function because it generalizes most existing aggregation operators. For example, the HFLWA operator proposed by Lin et al. (2014) is a special case of the WHFLMM operator when \(P=(1,0,\ldots ,0,0)\), and the WHFLMM operator reduces to the HFLWG operator when \(P=(1,1,\ldots ,1,1)\). So, the method in this article is more universal and flexible for solving MADM problems than the method based on the HFLWA operator (Lin et al. 2014).

6 Conclusion

MADM problems based on HFL information have a wide range of applications in a variety of fields. Because HFL variables are good at handling uncertainty and the MM operator can capture the correlations among any number of inputs through the parameter vector P, in this article we extended the MM operator to handle HFLEs and put forward some MM aggregation operators for HFL information, namely the HFLMM operator and the WHFLMM operator. We also discussed some desirable properties and special cases of these operators. Lastly, a MADM method with HFL information based on the WHFLMM operator was proposed. This method is more universal and effective than some other methods for processing HFL information in practical MADM problems.

In future work, we will apply the proposed method to practical decision-making problems and extend the proposed operators and methods to other fuzzy information environments.

References

Abdullah S, Ayub S, Hussain I, Khan MY (2017) Analyses of S-boxes based on interval valued intuitionistic fuzzy sets and image encryption. Int J Comput Intell Syst 10:851–865

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20:87–96

Detemple D, Robertson J (1979) On generalized symmetric means of two variables. Univ Beograd Publ Elektrotehn Fak Ser Mat Fiz No 634(677):236–238

Lin R, Zhao XF, Wang HJ, Wei GW (2014) Hesitant fuzzy linguistic aggregation operators and their application to multiple attribute decision making. J Intell Fuzzy Syst 27(1):49–63

Liu P (2017) Multiple attribute group decision making method based on interval-valued intuitionistic fuzzy power Heronian aggregation operators. Comput Ind Eng 108:199–212

Liu P, Chen SM (2017) Group decision making based on Heronian aggregation operators of intuitionistic fuzzy numbers. IEEE Trans Cybern 47(9):2514–2530

Liu P, Li H (2017) Interval-valued intuitionistic fuzzy power Bonferroni aggregation operators and their application to group decision making. Cogn Comput 9(4):494–512

Liu ZM, Liu PD (2016) Intuitionistic uncertain linguistic partitioned Bonferroni means and their application to multiple attribute decision making. Int J Syst Sci 48:1092–1105

Liu P, Shi L (2017) Some neutrosophic uncertain linguistic number Heronian mean operators and their application to multi-attribute group decision making. Neural Comput Appl 28(5):1079–1093

Liu P, Tang G (2016) Multi-criteria group decision-making based on interval neutrosophic uncertain linguistic variables and Choquet integral. Cogn Comput 8(6):1036–1056

Liu P, Teng F (2016) An extended TODIM method for multiple attribute group decision-making based on 2-dimension uncertain linguistic variable. Complexity 21(5):20–30

Liu PD, Teng F (2017) Multiple attribute group decision making methods based on some normal neutrosophic number Heronian mean operators. J Intell Fuzzy Syst 32:2375–2391

Liu P, Wang P (2017) Some improved linguistic intuitionistic fuzzy aggregation operators and their applications to multiple-attribute decision making. Int J Inf Technol Dec Mak 16(3):817–850

Liu PD, Liu ZM, Zhang X (2014a) Some intuitionistic uncertain linguistic Heronian mean operators and their application to group decision making. Appl Math Comput 230:570–586

Liu PD, Rong LL, Chu YC, Li YW (2014b) Intuitionistic linguistic weighted Bonferroni mean operator and its application to multiple attribute decision making. Sci World J 2014:1–13

Liu P, He L, Yu XC (2016a) Generalized hybrid aggregation operators based on the 2-dimension uncertain linguistic information for multiple attribute group decision making. Group Decis Negot 25(1):103–126

Liu P, Zhang L, Liu X, Wang P (2016b) Multi-valued neutrosophic number Bonferroni mean operators and their application in multiple attribute group decision making. Int J Inf Technol Dec Mak 15(5):1181–1210

Liu P, Chen SM, Liu J (2017) Some intuitionistic fuzzy interaction partitioned Bonferroni mean operators and their application to multi-attribute group decision making. Inf Sci 411:98–121

Maclaurin C (1729) A second letter to Martin Folkes, Esq.; concerning the roots of equations, with demonstration of other rules of algebra. Philos Trans R Soc Lond Ser A 36:59–96

Muirhead RF (1902) Some methods applicable to identities and inequalities of symmetric algebraic functions of n letters. Proc Edinb Math Soc 21(3):144–162

Qayyum A, Abdullah S, Aslam M (2016) Cubic soft expert sets and their application in decision making. J Intell Fuzzy Syst 31(3):1585–1596

Qin JD, Liu XW (2014) An approach to intuitionistic fuzzy multiple attribute decision making based on Maclaurin symmetric mean operators. J Intell Fuzzy Syst 27(5):2177–2190

Rahman K, Abdullah S, Ahmed R, Ullah M (2017a) Pythagorean fuzzy Einstein weighted geometric aggregation operator and their application to multiple attribute group decision making. J Intell Fuzzy Syst 33(1):635–647

Rahman K, Abdullah S, Shakeel M, Sajjad M, Khan A, Ullah M (2017b) Interval-valued Pythagorean fuzzy geometric aggregation operators and their application to group decision making problem. Cogent Math 4:1338638. https://doi.org/10.1080/23311835.2017.1338638

Torra V (2010) Hesitant fuzzy sets. Int J Intell Syst 25:529–539

Torra V, Narukawa Y (2009) On hesitant fuzzy sets and decision. In: The 18th IEEE international conference on fuzzy systems, Jeju Island, Korea, pp 1378–1382

Wei GW (2012) Hesitant fuzzy prioritized operators and their application to multiple attribute group decision making. Knowl Based Syst 31:176–182

Xia M, Xu ZS (2011) Hesitant fuzzy information aggregation in decision making. Int J Approx Reason 52(3):395–407

Xu ZS, Xia M (2011) Distance and similarity measures for hesitant fuzzy sets. Inf Sci 181(11):2128–2138

Xu ZS, Yager RR (2011) Intuitionistic fuzzy Bonferroni means. IEEE Trans Syst Man Cybern Part B Cybern 41(2):568–578

Yu DJ, Wu YY (2012) Interval-valued intuitionistic fuzzy Heronian mean operators and their application in multi-criteria decision making. Afr J Bus Manag 6(11):4158–4168

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–356

Zhu B, Xu ZS, Xia MM (2012) Hesitant fuzzy geometric Bonferroni means. Inf Sci 205(1):72–85

Acknowledgements

This paper is supported by the National Natural Science Foundation of China (Nos. 71771140, 71471172, and 71271124), the Special Funds of Taishan Scholars Project of Shandong Province (No. ts201511045), Shandong Provincial Social Science Planning Project (Nos. 16CGLJ31 and 16CKJJ27), the Natural Science Foundation of Shandong Province (No. ZR2017MG007), the Teaching Reform Research Project of Undergraduate Colleges and Universities in Shandong Province (No. 2015Z057), and Key research and development program of Shandong Province (No. 2016GNC110016). The authors also would like to express appreciation to the anonymous reviewers and editors for their very helpful comments that improved the paper.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Human and animals rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by X. Li.


Cite this article

Liu, P., Li, Y., Zhang, M. et al. Multiple-attribute decision-making method based on hesitant fuzzy linguistic Muirhead mean aggregation operators. Soft Comput 22, 5513–5524 (2018). https://doi.org/10.1007/s00500-018-3169-y


DOI: https://doi.org/10.1007/s00500-018-3169-y