Abstract

Numerical methods on Shishkin meshes are widely used for solving singularly perturbed problems, yet few approaches exist for choosing the Shishkin mesh transition parameter. In this paper, we first use the cubic B-spline collocation method on a Shishkin mesh to solve a singularly perturbed convection–diffusion problem with two small parameters. We then transform the selection of the Shishkin mesh transition parameter into a nonlinear unconstrained optimization problem, which is solved by the self-adapting differential evolution (jDE) algorithm. A numerical example is used to verify the performance of the presented method. The experimental results show that the approach is efficient and that, compared with other evolutionary algorithms, the jDE algorithm performs better and more stably.

1 Introduction

In this paper, we consider the following singularly perturbed convection–diffusion problem with two small parameters

where \(0<\varepsilon \ll 1\) and \(0<\mu \ll 1\). The functions b(x), c(x) and f(x) are assumed to be sufficiently smooth satisfying

where \(b^*\) and \(c^*\) are two positive constants. When \(\mu =0\) or \(\mu =1\), the problem reduces to a reaction–diffusion problem or a convection–diffusion problem, respectively. Problems of this kind arise in transport phenomena in chemistry and biology (Bigge and Bohl 1985). The nature of the two-parameter problem was examined asymptotically by O’Malley (1967), where the ratio of \(\mu \) to \(\varepsilon \) plays a significant role in the solution. For this problem, two boundary layers occur, at \(x=0\) and \(x=1\). Because of these layers, standard numerical methods applied on a uniform mesh fail to give a satisfactory numerical solution. Thus, much attention has been focused on non-uniform meshes adapted to singularly perturbed problems.

Recently, Gracia et al. (2006) used a second-order monotone numerical scheme combined with a piecewise-uniform Shishkin mesh to solve problem (1). Linß (2010) presented a streamline-diffusion finite element method (SDFEM) on a Shishkin mesh. Furthermore, Linß and Roos (2004) developed a first-order upwind difference scheme on a piecewise-uniform Shishkin mesh. Roos and Uzelac (2003) also proposed an SDFEM on a Shishkin mesh to solve problem (1). Herceg (2011) presented a finite difference scheme, discretized on a Bakhvalov-type mesh, for a class of linear singularly perturbed boundary value problems with two small parameters. Kadalbajoo and Yadaw (2008) solved problem (1) by using the cubic B-spline collocation method on a piecewise-uniform Shishkin mesh.

In summary, the literature above shows that the upwind finite difference scheme applied on a Shishkin mesh is very popular for solving the singularly perturbed convection–diffusion equation with two small parameters. This mesh contains two transition points \(\lambda _1\) and \(\lambda _2\), whose definitions differ between papers. In Miller et al. (1996), the authors defined \(\lambda _1\) and \(\lambda _2\) as

where \(\sigma _1,\sigma _2\) are two positive constants, \(\mu _1\) and \(\mu _2\) are defined in Miller et al. (1996), and N, our discretization parameter, is a positive even integer. They then divided each of the intervals \([0,\lambda _1]\) and \([1-\lambda _2,1]\) into N/4 subintervals, and \([\lambda _1,1-\lambda _2]\) into N/2 subintervals. In practical computation, the numerical results for problem (1) depend on the choice of the constants \(\sigma _1,\sigma _2\). As far as we know, no method is available for calculating the grid parameters \(\sigma _1\) and \(\sigma _2\). Therefore, it is important to develop a clear method for obtaining the best Shishkin mesh parameters.

In recent years, various improved intelligence algorithms or hybrid intelligence algorithms have been designed to solve optimization problems (Ouyang and Yang 2016), such as PSO with neighborhood operator (Suganthan 1999), distance-based locally informed PSO (Qu et al. 2013), hybrid PSO algorithm (Ouyang et al. 2014), hybrid genetic algorithm (Xu et al. 2014), hybrid chemical reaction optimization (Xu et al. 2015), parallel hybrid PSO (Ouyang et al. 2015), heterogeneous CLPSO algorithm (Lynn and Suganthan 2015), multi-population DE algorithm (Wu et al. 2016), hybrid harmony search algorithm (Ouyang et al. 2016a), hybrid cultural algorithm (Ali et al. 2016a, b) and hybrid invasive weed optimization algorithm (Ouyang et al. 2016b).

As we know, the differential evolution (DE) algorithm (Storn and Price 1997) is a fast and simple method that performs well on a wide variety of problems. It is a population-based stochastic search technique, which is inherently parallel. The DE algorithm is a relatively new nonlinear search and optimization approach that is particularly well suited to complicated optimization problems. Owing to its simple structure, easy implementation and good computational efficiency, the DE algorithm has been used successfully in many areas, such as mechanical engineering (Abderazek et al. 2015), signal processing (Liu and Lampinen 2005), pattern recognition (Das and Konar 2009) and parameter estimation (Gong and Cai 1976). Recently, some hybrid DE algorithms (Gong et al. 2011, 2015) were also presented for global numerical optimization.

In view of the advantages of the differential evolution algorithm for parameter estimation, the main work of this paper is to use the jDE algorithm (Brest et al. 2006) to obtain the best mesh transition points. More specifically, we first use the B-spline collocation technique developed in Kadalbajoo and Yadaw (2008) to compute the numerical solution of problem (1). Then, we use the double-mesh principle (Matthews et al. 2002) to estimate the absolute errors. Finally, we transform the choice of the mesh parameters into a nonlinear unconstrained optimization problem and use the jDE algorithm to find two suitable mesh transition points and the corresponding numerical results for problem (1).

The remainder of this paper is organized as follows. Section 2 gives a brief introduction to the mesh selection strategy. Section 3 presents a detailed theoretical analysis of the B-spline collocation method. Section 4 introduces a differential evolution algorithm to optimize the Shishkin mesh parameters. Section 5 reports and discusses the numerical experimental results. Finally, the paper concludes with Sect. 6.

2 Mesh selection strategy

First, we use a piecewise-uniform grid to divide the interval [0,1] into three subintervals:

where the transition parameters are given by

where \(\delta _1\) and \(\delta _2\) are two positive parameters. Then, we place N / 4, N / 2 and N / 4 mesh points in three subregions \([0,\lambda _1]\), \([\lambda _1,1-\lambda _2]\) and \([1-\lambda _2,1]\), respectively. Finally, the mesh widths can be obtained as follows:
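The construction described above can be sketched in Python. This is only a minimal illustration: the function name `shishkin_mesh` is hypothetical, the transition parameters are passed in as plain numbers `lam1` and `lam2` rather than computed from \(\delta _1\) and \(\delta _2\), and N is assumed to be divisible by 4.

```python
def shishkin_mesh(n, lam1, lam2):
    """Piecewise-uniform mesh on [0, 1] with n intervals (n divisible by 4).

    lam1 and lam2 are the transition parameters; how they are chosen is
    left to the caller (they are hypothetical inputs in this sketch).
    """
    if n % 4 != 0:
        raise ValueError("n must be divisible by 4")
    if not (0.0 < lam1 and 0.0 < lam2 and lam1 + lam2 < 1.0):
        raise ValueError("need 0 < lam1, 0 < lam2 and lam1 + lam2 < 1")
    q = n // 4
    h1 = lam1 / q                        # mesh width in [0, lam1]
    h2 = (1.0 - lam1 - lam2) / (2 * q)   # mesh width in [lam1, 1 - lam2]
    h3 = lam2 / q                        # mesh width in [1 - lam2, 1]
    left = [i * h1 for i in range(q)]
    middle = [lam1 + i * h2 for i in range(2 * q)]
    right = [1.0 - lam2 + i * h3 for i in range(q)]
    return left + middle + right + [1.0]
```

For example, `shishkin_mesh(8, 0.1, 0.2)` returns nine nodes, with the two transition points at indexes 2 and 6.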

3 B-spline collocation method

Let \(\overline{\varOmega }^N=\{x_0,x_1,x_2,\ldots ,x_N\}\) be a Shishkin mesh defined in Sect. 2, and then, the cubic B-spline functions (Kadalbajoo and Yadaw 2008) are given as follows:

where \(i=0,1,2,\ldots ,N\). For the above functions (2), we introduce four additional knots \(x_{-2}<x_{-1}<x_0\) and \(x_{N+2}>x_{N+1}>x_N\). Each function \(B_i(x)\) is twice continuously differentiable on the entire real line. In addition, for each \(x_j\), \(j=0,1,\ldots ,N\), we have

Similarly, we can show that

and

The dimension of the cubic B-spline function space is \(N+3\). Similar to (2), we first define two extra cubic B-spline functions \(B_{-1}\) and \(B_{N+1}\). Then, the cubic B-spline function space can be given as follows

Thus, for any cubic spline function S(x) in this space, we have

where \(a_i\) are unknown real coefficients.

Here, we use the function S(x) defined in (6) to approximate the exact solution of (1), which yields

and

By using the values of the B-spline functions \(B_i\) and their derivatives at the mesh points of \(\overline{\varOmega }^N\), we obtain the following system of \(N+1\) linear equations with \(N+3\) unknown variables

where \(0\le i \le N\).

From the boundary conditions, we have

and

Next, eliminating \(a_{-1}\) from the first equation of (9) and (10), we obtain

Similarly, we have

Finally, we can get the following system of \(N+1\) linear equations with \(N+1\) unknown variables

where \(x_N=(a_0,a_1,\ldots ,a_{N})^{\mathrm {T}}\) is the vector of unknown real coefficients, with right-hand side

and

where

It is easy to see that the matrix T is strictly diagonally dominant and hence nonsingular. Thus, we can solve the above system of equations (14) for \(a_0,a_1,\ldots ,a_N\). Furthermore, we obtain \(a_{-1}\) and \(a_{N+1}\) by substituting \(a_0,a_1,\ldots ,a_N\) into the boundary conditions (10) and (11). Hence the collocation method using a basis of cubic B-spline functions applied to problem (1) has a unique solution.
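As an illustration of how a system such as (14) might be solved in practice, the sketch below uses the Thomas algorithm. It assumes that T is tridiagonal, as is usual for cubic B-spline collocation but not stated explicitly here; the function name `solve_tridiagonal` and the three-list storage of the matrix are hypothetical. Strict diagonal dominance guarantees that none of the pivots vanishes.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Solve a tridiagonal system by forward elimination and back substitution.

    lower[i] multiplies x[i-1] in row i (lower[0] is unused),
    upper[i] multiplies x[i+1] in row i (upper[-1] is unused).
    """
    n = len(diag)
    c = [0.0] * n   # modified super-diagonal
    d = [0.0] * n   # modified right-hand side
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x
```

The cost is linear in the number of unknowns, which matters here because the system has to be solved once on the N mesh and once on the 2N mesh for every candidate pair \((\delta _1,\delta _2)\).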

4 A differential evolution algorithm to optimize the Shishkin mesh parameters

4.1 The objective function

In general, the exact solution of problem (1) is not available, especially for the nonlinear problem. Thus, we use the double-mesh principle developed in Matthews et al. (2002) to estimate the absolute errors of the numerical solution of problem (1). For each \(\varepsilon \), \(\mu \) and N, the solution of (7) is a function of the two variables \(\delta _1\) and \(\delta _2\). So, we let \(\mathbf S _{\varepsilon ,\mu }^N(\delta _1,\delta _2)\) be the solution of the approximate scheme on the original Shishkin mesh. Similarly, we let \(\mathbf S _{\varepsilon ,\mu }^{2N}(\delta _1,\delta _2)\) be the solution on the mesh produced by uniformly bisecting the original mesh. Then we can use the following formula to estimate the maximum point-wise error

In practical computation, one should choose the parameters \(\delta _1\) and \(\delta _2\) so that the value of \(E_{\varepsilon ,\mu }^N(\delta _1,\delta _2)\) is as small as possible. Therefore, in this paper, we transform the problem of mesh parameter calculation into the following nonlinear unconstrained optimization problem

The objective function (16) is an implicit function of the variables \(\delta _1\) and \(\delta _2\) and is not differentiable, so some traditional optimization methods are not suitable for solving it. In addition, if the objective function (16) has many local extreme points, traditional optimization methods may fail to find the global optimum efficiently.
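The double-mesh estimate that serves as the objective can be sketched as follows. The sketch assumes that the estimate is the maximum difference between the two numerical solutions at the nodes of the original mesh, which are the even-indexed nodes of the bisected mesh; the helper names `bisect_mesh` and `double_mesh_error` are hypothetical, and the solver that produces the nodal values is left out.

```python
def bisect_mesh(mesh):
    """Insert the midpoint of every interval, doubling the number of intervals."""
    fine = []
    for a, b in zip(mesh[:-1], mesh[1:]):
        fine.extend([a, 0.5 * (a + b)])
    fine.append(mesh[-1])
    return fine


def double_mesh_error(coarse_values, fine_values):
    """Maximum nodal difference between the N and 2N solutions.

    fine_values[2 * i] is the fine-mesh value at the i-th coarse node.
    """
    if len(fine_values) != 2 * len(coarse_values) - 1:
        raise ValueError("fine mesh must be the bisection of the coarse mesh")
    return max(abs(c - fine_values[2 * i]) for i, c in enumerate(coarse_values))
```

An optimizer then only needs a function that maps \((\delta _1,\delta _2)\) to this number, which is why a derivative-free method is a natural fit.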

4.2 A brief review of differential evolution algorithm

The differential evolution (DE) algorithm presented by Storn and Price (1997) is an effective and practical intelligent optimization algorithm. It aims at solving an optimization problem by evolving a population of D-dimensional parameter vectors, so-called individuals, which encode the candidate solutions, i.e., \(\mathbf x _{i,G}=(x_{1i,G},\ldots ,x_{Di,G})\), \(i=1,\ldots ,\hbox {NP}\), toward the global optimum. Here, NP is the number of individuals in the population and \(\mathbf x _{i,G}\) is the ith target vector at generation G. First, the initial population is chosen by sampling individuals uniformly at random within the search space bounded by the minimum and maximum bounds \(\mathbf x _{min}\) and \(\mathbf x _{max}\). Then, the DE algorithm proceeds through three operations: mutation, crossover and selection.

4.2.1 Mutation

After initialization, for each target vector \(\mathbf x _{i,G}\), a mutant vector \(\mathbf v _{i,G}=(v_{1i,G}, v_{2i,G}, \ldots , v_{Di,G})\) is generated by

where \(r_1,r_2,r_3\in [1,\hbox {NP}]\) are mutually different random indexes and \(F\in [0,2]\) is a scaling constant that controls the amplification of the difference vector \((\mathbf x _{r_2,G}-\mathbf x _{r_3,G})\).
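The mutation step can be sketched in Python as follows. The rule is taken to be the classic DE/rand/1 form \(\mathbf v _{i,G}=\mathbf x _{r_1,G}+F(\mathbf x _{r_2,G}-\mathbf x _{r_3,G})\), which agrees with the description of F above. The function name `mutate`, the list-of-lists population and the extra requirement that the three indexes also differ from i are assumptions of this sketch, which therefore needs at least four individuals.

```python
import random


def mutate(population, i, f, rng=random):
    """Return the DE/rand/1 mutant vector for the target with index i."""
    # Assumption: r1, r2, r3 are distinct and also different from i.
    candidates = [k for k in range(len(population)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)
    x1, x2, x3 = population[r1], population[r2], population[r3]
    return [a + f * (b - c) for a, b, c in zip(x1, x2, x3)]
```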

4.2.2 Crossover

A trial vector \(\mathbf u _{i,G+1}=(u_{1i,G+1},u_{2i,G+1},\ldots ,u_{Di,G+1})\) is obtained by the crossover operator, according to the following scheme

where \(j=1,2,\ldots ,D\) and \(r(j)\in [0,1]\) is the jth evaluation of a uniform random number generator. \(\hbox {CR}\in [0,1]\) is a user-specified constant which controls the fraction of parameter values copied from the mutant vector. \(rn(i)\in \{1,2,\ldots ,D\}\) is a randomly chosen index which ensures that \(\mathbf u _{i,G+1}\) gets at least one element from \(\mathbf v _{i,G+1}\).
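A minimal sketch of this crossover, assuming the usual binomial scheme in which component j is taken from the mutant vector when \(r(j)\le \hbox {CR}\) or \(j=rn(i)\) and from the target vector otherwise. The function name `binomial_crossover` and the use of Python lists are illustrative choices.

```python
import random


def binomial_crossover(target, mutant, cr, rng=random):
    """Mix target and mutant component-wise; one mutant component is forced."""
    d = len(target)
    forced = rng.randrange(d)   # plays the role of rn(i)
    return [
        mutant[j] if (rng.random() <= cr or j == forced) else target[j]
        for j in range(d)
    ]
```

With `cr = 1.0` the trial vector equals the mutant vector, while a very small `cr` leaves the trial vector almost identical to the target apart from the forced component.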

4.2.3 Selection

A greedy selection scheme is given by

where \(j=1,2,\ldots ,D\). In other words, if, and only if, the trial vector \(\mathbf u _{i,G+1}\) has an objective function value less than or equal to that of the corresponding target vector \(\mathbf x _{i,G}\), the trial vector replaces the target vector and enters the population of the next generation. Otherwise, the old vector \(\mathbf x _{i,G}\) remains in the population for the next generation.
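The greedy rule amounts to a single comparison, as the following sketch shows. The name `select` and the callable `objective` are hypothetical; in the setting of this paper the objective would be the double-mesh error estimate, while a simple sum of squares stands in for it in the usage line below.

```python
def select(target, trial, objective):
    """Keep the trial vector if it is at least as good as the target."""
    return trial if objective(trial) <= objective(target) else target
```

For instance, with `objective = lambda x: sum(v * v for v in x)`, the call `select([1.0, 1.0], [0.5, 0.5], objective)` keeps the trial vector because its objective value is smaller.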

The DE algorithm can be used to solve multi-objective problems, non-differentiable problems, and so on. It is very efficient in many diverse engineering applications, such as neural networks (Piotrowski 2014) and image processing (Ali et al. 2014).

4.3 Previous work related to DE algorithm

The effectiveness of the standard DE algorithm in solving a complicated optimization problem depends strongly on the chosen mutation strategy and its associated parameter values. In the past few years, many DE researchers have proposed techniques for choosing trial vector generation strategies and their associated control parameter settings. According to Storn and Price (1997), the DE algorithm is very sensitive to the choice of the parameters F and CR. The suggested choices are \(F\in [0.5,1]\), \(\hbox {CR}\in [0.8,1]\) and \(\hbox {NP}=10D\). Liu and Lampinen (2005) set the control parameters to \(F=0.9\), \(\hbox {CR}=0.9\). Ali and Törn (2004) chose \(\hbox {CR}=0.5\) and used the following scheme to calculate F

where \(f_{max}\) and \(f_{min}\) are the maximum and minimum objective function values of the vectors \(\mathbf{x}_{i,G}\), respectively. Gämperle et al. (2002) considered different parameter settings for DE on the Sphere, Rosenbrock and Rastrigin functions. In their experimental results, the scaling factor F is equal to 0.6, the crossover rate CR lies in [0.3, 0.9], and NP lies in [3D, 8D]. Rönkkönen et al. (2005) suggested using F values in [0.4, 0.95] and CR values in [0, 0.2]. Recently, several researchers (Zaharie 2003; Zaharie and Petcu 2003; Abbass 2002) have developed approaches to control the parameters F and CR. Very recently, more and more researchers have paid attention to self-adaptive DE algorithms; see, e.g., Qin and Suganthan (2005), Omran et al. (2005) and Rahnamayan et al. (2008).

4.4 Self-adapting differential evolution (jDE) algorithm

Based on the above literature review, the effectiveness of the conventional DE algorithm in solving a numerical optimization problem depends on the selected mutation strategy and its associated parameter values. Therefore, choosing suitable control parameter values for the conventional DE algorithm is a very important task. Brest et al. (2006) proposed a self-adapting differential evolution (jDE) algorithm by introducing two new control parameters \(\tau _1\) and \(\tau _2\) to adjust the values of F and CR. The new parameters \(F_{i,G+1}\) and \(\hbox {CR}_{i,G+1}\) are calculated by

where \(\hbox {rand}_j\in [0,1]\), \(j=1,2,3,4\), are uniform random values, and \(F_l=0.1\) and \(F_u=0.9\) are the lower and upper bounds of F, respectively. From Equations (21)–(22), the new F takes a value from [0.1, 1.0] in a random manner and the new CR takes a value from [0, 1].
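The self-adaptation rule can be sketched as below. The sketch assumes the usual jDE form, in which F is resampled as \(F_l+\hbox {rand}_1 F_u\) when \(\hbox {rand}_2<\tau _1\) and CR is resampled as \(\hbox {rand}_3\) when \(\hbox {rand}_4<\tau _2\), with the old values kept otherwise; this is consistent with the ranges [0.1, 1.0] and [0, 1] quoted above. The values \(\tau _1=\tau _2=0.1\) are an assumption, since they are not stated in this section, and the function name `jde_update` is hypothetical.

```python
import random

F_L, F_U = 0.1, 0.9      # bounds quoted in the text
TAU1, TAU2 = 0.1, 0.1    # assumed values; not stated in this section


def jde_update(f_old, cr_old, rng=random):
    """Return the (F, CR) pair an individual carries into the next generation."""
    rand1, rand2, rand3, rand4 = (rng.random() for _ in range(4))
    f_new = F_L + rand1 * F_U if rand2 < TAU1 else f_old
    cr_new = rand3 if rand4 < TAU2 else cr_old
    return f_new, cr_new
```

Each individual keeps its own pair (F, CR), and the update is applied before the mutation step so that the new values influence the trial vector generated in the same generation.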

In our paper, in order to solve the above nonlinear optimization problem (16), we will use the technique presented in (21)–(22) to obtain the control parameters F and CR. For the population size NP, we do not change it during the run.

5 Numerical experiments

In this section, the following numerical example is given to illustrate the effectiveness of the presented method

For two given parameters \(\delta _1\) and \(\delta _2\), let \(\texttt {S}^N\) and \(\texttt {S}^{2N}\) be the numerical solutions which are calculated on N and 2N mesh intervals, respectively. Then, the following formula is defined:

Here, to solve the above problem (23)–(24), we first use the jDE algorithm to solve the optimization problem (16) and obtain the optimal parameters \(\delta _1\) and \(\delta _2\) and the corresponding numerical solution. The algorithms were implemented as an M-file in MATLAB 2012a on a PC with a 32-bit Windows 7 operating system, 4 GB of RAM and a 3.10 GHz Core(TM) i5 processor.

In the experiment, in order to illustrate the advantages of the jDE algorithm (Brest et al. 2006) in solving the optimization problem (16), we also calculate the numerical results by using seven other algorithms: the (original) DE, particle swarm optimization (PSO) (Kennedy and Eberhart 1995), comprehensive learning particle swarm optimization (CLPSO) (Liang et al. 2006), real-coded genetic algorithm (rcGA) (Ono and Kobayashi 1997), covariance matrix adaptation evolution strategy (CMA-ES) (Hansen et al. 2003), self-adaptive DE (SaDE) (Qin et al. 2009) and JADE (Zhang and Sanderson 2009).

Throughout this paper, the parameter settings of each stochastic algorithm are as follows:

(1) The maximum number of generations is \(D=50\) and the population size is \(\hbox {NP}=50\).

(2) For the PSO and CLPSO algorithms, the two accelerating factors are both set to 0.5, and the inertia weight factor is set to 0.45.

(3) For the (original) DE, SaDE and JADE algorithms, the scaling factor is \(F=0.5\) and the crossover probability is \(\hbox {CR}=0.1\).

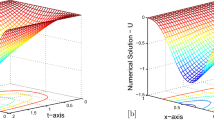

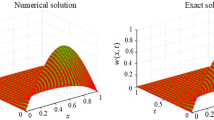

In our experiment, for different values of \(\varepsilon \), \(\mu \) and N, the numerical results of 30 independent runs are summarized in Tables 1–9. The tables list the maximum and minimum values of \(E_{\varepsilon ,\mu }^{N}\) together with the corresponding parameters \(\delta _1\) and \(\delta _2\). To compare the robustness of the algorithms, the mean value of \(E_{\varepsilon ,\mu }^{N}\) and its variance are also given. Comparing the maximum, minimum and mean values in Tables 1–9 shows that the computing precision of jDE is slightly higher than that of the other seven algorithms (PSO, CLPSO, rcGA, CMA-ES, DE, SaDE and JADE). Comparing the variances shows that jDE is also slightly more stable than these seven algorithms. The experimental results therefore indicate that the jDE algorithm has a certain competitive advantage in both computing precision and stability.

The comparison of statistical data shows that the jDE algorithm gives better results than the rcGA, PSO, CLPSO and CMA-ES algorithms. Furthermore, the jDE algorithm performs better than the original DE, while the original DE algorithm does not always perform better than PSO. To further illustrate the advantages of the jDE algorithm, the convergence curves of the jDE, DE, SaDE, JADE and PSO algorithms are plotted in Figs. 1, 2 and 3 for \(\varepsilon =10^{-8}, \mu =10^{-10}\), \(\varepsilon =10^{-10}, \mu =10^{-12}\) and \(\varepsilon =10^{-12}, \mu =10^{-14}\), respectively, with \(N=32\). These figures show that the jDE algorithm converges faster than the other four algorithms.

6 Conclusions

The Shishkin mesh method is frequently used to solve singularly perturbed problems. However, almost all authors select the parameters of the Shishkin mesh transition points arbitrarily. This work can be viewed as a preliminary step toward a numerical method for obtaining the Shishkin mesh transition points. Specifically, we transform the Shishkin mesh transition parameter selection problem into a nonlinear unconstrained optimization problem, which is solved by the self-adapting differential evolution (jDE) algorithm. The experimental results show that the jDE algorithm has a certain competitive advantage over state-of-the-art algorithms in computing precision, stability and convergence speed. The method presented in this paper can be extended to other types of singularly perturbed problems.

References

Abbass HA (2002) The self-adaptive Pareto differential evolution algorithm. In: Proceedings of the congress evolutionary computation, Honolulu, HI, pp 831–836

Abderazek H, Ferhat D, Atanasovska I (2015) A differential evolution algorithm for tooth profile optimization with respect to balancing specific sliding coefficients of involute cylindrical spur and helical gears. Adv Mech Eng 7(9):1–11

Ali MM, Törn A (2004) Population set-based global optimization algorithms: some modifications and numerical studies. Comput Oper Res 31(10):1703–1725

Ali M, Ahn CW, Pant M (2014) Multi-level image thresholding by synergetic differential evolution. App Soft Comput 17:1–11

Ali MZ, Awad NH, Suganthan PN, Duwairi RM, Reynolds RG (2016a) A novel hybrid Cultural Algorithms framework with trajectory-based search for global numerical optimization. Inf Sci 334–335(C):219–249

Ali MZ, Suganthan PN, Reynolds RG, Al-Badarneh AF (2016b) Leveraged neighborhood restructuring in cultural algorithms for solving real-world numerical optimization problems. IEEE Trans Evol Comput 20(2):218–231

Bigge J, Bohl E (1985) Deformations of the bifurcation diagram due to discretization. Math Comput 45(172):393–403

Brest J, Greiner S, Boskovic B et al (2006) Self-adapting control parameters in differential evolution: a comparative study on numerical benchmark problems. IEEE Trans Evolut Comput 10(6):646–657

Das S, Konar A (2009) Automatic image pixel clustering with an improved differential evolution. Appl Soft Comput 9(1):226–236

Gämperle R, Müller SD, Koumoutsakos P (2002) A parameter study for differential evolution. In: Grmela A, Mastorakis NE (eds) Advances in intelligent systems, fuzzy systems, evolutionary computation. WSEAS Press, Interlaken, pp 293–298

Gong W, Cai Z (1976) Parameter optimization of PEMFC model with improved multi-strategy adaptive differential evolution. Eng Appl Artif Intel 27(C):28–40

Gong W, Cai Z, Ling CX (2011) DE/BBO: a hybrid differential evolution with biogeography-based optimization for global numerical optimization. Soft Comput 15(4):645–665

Gong W, Zhou A, Cai Z (2015) A multioperator search strategy based on cheap surrogate models for evolutionary optimization. IEEE Trans Evolut Comput 19(5):746–758

Gracia JL, O’Riordan E, Pickett ML (2006) A parameter robust second order numerical method for a singularly perturbed two-parameter problem. Appl Numer Math 56(7):962–980

Hansen N, Muller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol Comput 11(1):1–18

Herceg D (2011) Fourth-order finite-difference method for boundary value problems with two small parameters. Appl Math Comput 218(2):616–627

Kadalbajoo MK, Yadaw AS (2008) B-spline collocation method for a two-parameter singularly perturbed convection-diffusion boundary value problems. Appl Math Comput 201(1–2):504–513

Kennedy J, Eberhart RC (1995) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks (Perth, Australia). IEEE Service Center, Piscataway, NJ, pp 1942–1948

Liang JJ, Qin AK, Suganthan PN, Baskar S (2006) Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evolut Comput 10(3):281–295

Linß T (2010) A posteriori error estimation for a singularly perturbed problem with two small parameters. Int J Numer Anal Model 7(3):491–506

Linß T, Roos HG (2004) Analysis of a finite-difference scheme for a singularly perturbed problem with two small parameters. J Math Anal Appl 289(2):355–366

Liu W, Wang P, Qiao H (2012) Part-based adaptive detection of workpieces using differential evolution. Signal Process 92(2):301–307

Liu J, Lampinen J (2005) A fuzzy adaptive differential evolution algorithm. Soft Comput 9(6):448–462

Lynn N, Suganthan PN (2015) Heterogeneous comprehensive learning particle swarm optimization with enhanced exploration and exploitation. Swarm Evol Comput 24:11–24

Matthews S, O’Riordan E, Shishkin GI (2002) A numerical method for a system of singularly perturbed reaction–diffusion equations. J Comput Appl Math 145:151–166

Miller JJH, O’Riordan E, Shishkin GI (1996) Fitted numerical methods for singular perturbation problems. Error estimates in the maximum norm for linear problems in one and two dimensions. World Scientific, Singapore

O’Malley RE Jr (1967) Two-parameter singular perturbation problems for second order equations. J Math Mech 16:1143–1164

Omran MGH, Salman A, Engelbrecht AP (2005) Self-adaptive differential evolution. In: Lecture notes in artificial intelligence. Springer, Berlin, pp 192–199

Ono I, Kobayashi S (1997) A real coded genetic algorithm for function optimization using unimodal normal distributed crossover. In: International conference on genetic algorithms, East Lansing, MI, USA, pp 246–253

Ouyang A, Yang Z (2016) An efficient hybrid algorithm based on harmony search and invasive weed optimization. In: 2016 12th international conference on natural computation, fuzzy systems and knowledge discovery (ICNC-FSKD), Changsha, pp 167–172. doi:10.1109/FSKD.2016.7603169

Ouyang A, Li K, Truong TK, Sallam A, Sha EHM (2014) Hybrid particle swarm optimization for parameter estimation of Muskingum model. Neural Comput Appl 25(7–8):1785–1799

Ouyang A, Tang Z, Zhou X, Xu Y, Pan G, Li K (2015) Parallel hybrid PSO with CUDA for 1D heat conduction equation. Comput Fluids 110:198–210

Ouyang A, Peng X, Liu Y, Fan L, Li K (2016a) An efficient hybrid algorithm based on HS and SFLA. Int J Pattern Recognit Artif Intell 30(5):1659012 (1–25)

Ouyang A, Peng X, Wang Q, Wang Y, Truong TK (2016b) A parallel improved iwo algorithm on gpu for solving large scale global optimization problems. J Intell Fuzzy Syst 31(2):1041–1051

Piotrowski AP (2014) Differential Evolution algorithms applied to neural network training suffer from stagnation. Appl Soft Comput 21:382–406

Qin AK, Suganthan PN (2005) Self-adaptive differential evolution algorithm for numerical optimization. In: Proceedings of the IEEE congress evolutionary computation, Edinburgh, Scotland, pp 1785–1791

Qin AK, Huang VL, Suganthan PN (2009) Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans Evolut Comput 13(2):398–417

Qu BY, Suganthan PN, Das S (2013) A distance-based locally informed particle swarm model for multimodal optimization. IEEE Trans Evol Comput 17(3):387–402

Rahnamayan S, Tizhoosh HR, Salama MMA (2008) Opposition-based differential evolution. IEEE Trans Evolut Comput 12(1):64–79

Rönkkönen J, Kukkonen S, Price KV (2005) Real-parameter optimization with differential evolution. In: Proceedings of the IEEE congress evolutionary computation, Edinburgh, Scotland, pp 506–513

Roos HG, Uzelac Z (2003) The SDFEM for a convection–diffusion problem with two small parameters. Comput Methods Appl Math 3(3):443–458

Storn R, Price K (1997) Differential evolution: a simple and efficient adaptive scheme for global optimization over continuous spaces. J Global Optim 11(4):341–359

Suganthan PN (1999) Particle swarm optimiser with neighbourhood operator. In: Proceedings of the 1999 congress on evolutionary computation, 1999 (CEC 99), Washington, DC

Wu G, Mallipeddi R, Suganthan PN, Wang R, Chen H (2016) Differential evolution with multi-population based ensemble of mutation strategies. Inf Sci 329:329–345

Xu Y, Li K, Hu J, Li K (2014) A genetic algorithm for task scheduling on heterogeneous computing systems using multiple priority queues. Inf Sci 270:255–287

Xu Y, Li K, He L, Zhang L (2015) A hybrid chemical reaction optimization scheme for task scheduling on heterogeneous computing systems. IEEE Trans Parallel Distrib Syst 26(12):3208–3222

Zaharie D (2003) Control of population diversity and adaptation in differential evolution algorithms. In: Matousek R, Osmera P (eds) Proceeding of the mendel 9th international conference soft computing, Brno, Czech Republic, pp 41–46

Zaharie D, Petcu D (2003) Adaptive pareto differential evolution and its parallelization. In: Proceedings of the 5th international conference on parallel process applied mathematics, Czestochowa, Poland, pp 261–268

Zhang J, Sanderson AC (2009) Jade: adaptive differential evolution with optional external archive. IEEE Trans Evolut Comput 13(5):945–958

Acknowledgements

This work was supported by the National Natural Science Foundation of China (11301044, 11401054, 61662090, 11461011), the general Project of Hunan provincial education department (14C0047), the Natural Science Foundation of Guizhou Provincial Education Department (No. KY[2016]018), the Scientific Research Fund of Hunan Provincial Education Department (No. 13C333), the Doctoral Foundation of Zunyi Normal College (No. BS[2015]13), the open fund of Key Laboratory of Guangxi High Schools for Complex System and Computational Intelligence (No. 15CI03D), Natural Science Foundation of Guangxi Education Department (No. ZD2014080).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Cite this article

Luo, XQ., Liu, LB., Ouyang, A. et al. B-spline collocation and self-adapting differential evolution (jDE) algorithm for a singularly perturbed convection–diffusion problem. Soft Comput 22, 2683–2693 (2018). https://doi.org/10.1007/s00500-017-2523-9

DOI: https://doi.org/10.1007/s00500-017-2523-9