Abstract

This paper presents a novel denoising approach based on smoothing linear and nonlinear filters combined with an optimization algorithm. The cuckoo search algorithm is employed to determine the optimal sequence of filters for each kind of noise. The proposed approach removes Gaussian, speckle, and salt and pepper noise from images. The denoising behaviour of nonlinear filters and wavelet shrinkage threshold methods is also analysed and compared with the proposed approach. Results show the robustness of the proposed filter compared with state-of-the-art methods in terms of peak signal-to-noise ratio and image quality index. Furthermore, a comparative analysis between the cuckoo search algorithm and the genetic algorithm is provided.

1 Introduction

Digital images carry significant information that is used in many real-world applications such as remote sensing, defence and medicine. However, users of these applications suffer from quality degradation caused during image transmission over networks. Extracting useful information from degraded images without knowing the type of noise that has corrupted them is a challenge in real-world applications. Noise types that can destroy image textures include Gaussian, speckle, and salt and pepper noise. Numerous techniques have been developed to suppress noise and improve image quality, such as linear filters, nonlinear filters and wavelet threshold-based strategies. Most of the techniques developed so far do not attempt to reduce the effects of multiple noise types. Hence, several hybrid techniques have been proposed to enhance the visual quality of a received image by removing multiple noise types.

In this paper we investigate the denoising behaviour of linear filters, nonlinear filters and wavelet shrinkage threshold methods. Spatial domain filters, both linear and nonlinear, remove noise by applying arithmetic operations to the pixels in a neighbourhood and replacing the original pixel value with the result. Frequency domain filters, such as wavelet shrinkage methods, instead suppress noise by shrinking or thresholding the coefficients of the high-frequency detail sub-bands while retaining the low-frequency approximation. Nonlinear filters, e.g. bilateral, Lee and Kuan, achieve better edge preservation than linear filters, whereas linear filters are efficient at noise reduction but cause blurring.

The appropriate filter for a particular image depends on the type of noise that has corrupted it. Careful selection is necessary because filters are designed for specific noise types.

To remove Gaussian noise, filters such as the bilateral, mean, Wiener, circular, cone, pyramid and Gaussian low-pass filters have been designed (Lakshmi et al. 2012; Dangeti 2003; Tomasi and Manduchi 1998). The median filter, by contrast, is more effective for removing salt and pepper noise (Mohapatra et al. 2007). Speckle noise can be reduced with Lee and Kuan filters (Velaga and Kovvada 2012; Benes and Riha 2012). Window size is also a key factor in noise removal with neighbourhood filters: smaller windows do not remove noise completely, while larger windows blur edges.
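To make the noise-type point concrete, here is a minimal NumPy sketch (not the authors' MATLAB code) of mean and median neighbourhood filters; the helper names `window_filter`, `mean_filter` and `median_filter` are illustrative. On a flat image with isolated impulses, the median filter removes the impulses entirely, whereas the mean filter only spreads them over the window.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def window_filter(img: np.ndarray, size: int, reducer) -> np.ndarray:
    """Apply a size x size neighbourhood operation (e.g. np.mean, np.median) at every pixel."""
    pad = size // 2
    padded = np.pad(img, pad, mode="reflect")            # mirror the image borders
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return reducer(windows, axis=(-2, -1))


def mean_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    return window_filter(img, size, np.mean)


def median_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    return window_filter(img, size, np.median)


# Flat grey image with two isolated impulses: one "salt" and one "pepper" pixel.
img = np.full((16, 16), 0.5)
img[5, 5], img[10, 10] = 1.0, 0.0

print(median_filter(img, 3)[5, 5])  # impulse replaced by the neighbourhood median
print(mean_filter(img, 3)[5, 5])    # impulse only attenuated and spread out
```

Increasing `size` copes with denser impulse noise but blurs edges more, which is the window-size trade-off described above.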

Wavelets, on the other hand, have been successfully employed to remove Gaussian noise. Wavelet shrinkage methods used for this purpose include Bayes Shrink (Chang et al. 2000), SURE Shrink (Donoho and Johnstone 1995) and Visu Shrink (Donoho 1992). However, wavelet shrinkage methods destroy image textures when applied to remove Gaussian noise. Their disadvantages include the difficulty of computing an accurate threshold and of deciding how the threshold should be applied. Moreover, complex statistical models of wavelet coefficients do not improve denoising results as much as expected (Bai 2008), and building and training such models takes more time. The denoising behaviour of wavelets is examined experimentally in Sect. 4.
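As an illustration of wavelet shrinkage, the sketch below implements a single-level VisuShrink-style denoiser in NumPy: an orthonormal 2-D Haar transform, a noise estimate from the median absolute value of the diagonal detail band, the universal threshold \(\sigma \sqrt{2 \ln N}\) and soft thresholding of the detail bands. It is a simplified stand-in, assuming a Haar wavelet and one decomposition level, whereas the experiments in Sect. 4 use db4 with three levels; all function names are hypothetical.

```python
import numpy as np


def haar2(x):
    """One level of the orthonormal 2-D Haar transform (height and width must be even)."""
    p, q = x[0::2, 0::2], x[0::2, 1::2]
    r, s = x[1::2, 0::2], x[1::2, 1::2]
    a = (p + q + r + s) / 2  # approximation (low-pass) band
    h = (p - q + r - s) / 2  # column-difference detail band
    v = (p + q - r - s) / 2  # row-difference detail band
    d = (p - q - r + s) / 2  # diagonal detail band
    return a, h, v, d


def ihaar2(a, h, v, d):
    """Exact inverse of haar2."""
    x = np.empty((2 * a.shape[0], 2 * a.shape[1]))
    x[0::2, 0::2] = (a + h + v + d) / 2
    x[0::2, 1::2] = (a - h + v - d) / 2
    x[1::2, 0::2] = (a + h - v - d) / 2
    x[1::2, 1::2] = (a - h - v + d) / 2
    return x


def soft_threshold(c, t):
    """Shrink coefficients towards zero by t; those with |c| <= t become zero."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)


def visu_shrink(noisy):
    a, h, v, d = haar2(noisy)
    sigma = np.median(np.abs(d)) / 0.6745        # robust noise estimate from the diagonal band
    t = sigma * np.sqrt(2 * np.log(noisy.size))  # universal threshold
    # Only the detail bands are thresholded; the approximation band is kept as-is.
    return ihaar2(a, soft_threshold(h, t), soft_threshold(v, t), soft_threshold(d, t))


rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
denoised = visu_shrink(noisy)
mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((denoised - clean) ** 2)
```

Because the universal threshold grows with image size, it tends to zero out most detail coefficients, which is one way to see why such methods can smooth away texture.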

The advantage of the aforementioned filters is their simplicity. However, their limitations motivated us to look for new ways of removing noise while preserving image textures. We therefore explored the effectiveness of applying filters in a sequence determined by an optimization algorithm, namely the cuckoo search algorithm (CSA). The proposed idea is verified using linear filters only; nonlinear filters, frequency domain filters and their combinations remain unexplored. The proposed hybrid filter aims to reduce Gaussian, speckle, and salt and pepper noise.

The rest of the paper is organized as follows. Section 2 discusses existing non-artificial intelligence (non-AI) and AI image denoising techniques. Section 3 presents the proposed approach for image denoising. The results and performance analysis of the proposed and existing approaches are then discussed in Sect. 4. Finally, Sect. 5 concludes the paper and highlights future directions.

2 Related work

This section presents recent research on denoising corrupted digital images, which play a vital role in a wide range of applications, e.g. medicine and satellite imaging. A large number of AI and non-AI techniques have been developed to date for images with different textures, resolutions, formats and noise types. Some of them are discussed and analyzed below to highlight the significance of the proposed approach. The work discussed is categorized into satellite images, medical images and general images.

2.1 Satellite images

Pragada and Sivaswamy (2008) proposed a method that uses matched bi-orthogonal wavelets to denoise satellite and medical images corrupted with additive white Gaussian noise. The algorithm decomposes the noisy image into sub-bands with decomposition filters, applying four levels of wavelet decomposition. The sub-bands are then thresholded using the Bayes and Bi-Shrink algorithms, and the final image is reconstructed by applying reconstruction filters. The method proposed by Chitroub (2003) de-correlates, compresses and enhances noisy satellite images. It employs a principal component analysis approach based on neural networks, with adaptive learning and an acceptable convergence rate. The method presented by Chen et al. (2013) focuses on removing multiplicative noise from aerial images. The authors claim that their model, based on partial differential equations (PDE), outperforms the Aubert–Aujol (AA) model for multiplicative noise removal and edge preservation in terms of signal-to-noise ratio (SNR) and edge preservation index (EPI).

2.2 Medical images

Ilango and Marudhachalam (2011) proposed techniques to denoise medical images contaminated with Gaussian noise, improving their quality for correct diagnosis. They proposed three hybrid techniques for noise reduction: a hybrid cross median filter, a hybrid min-filter and a hybrid max-filter. Their experiments show that the hybrid max-filter outperforms various existing techniques. Pižurica et al. (2003) proposed a wavelet-based method for denoising medical ultrasound and magnetic resonance images corrupted with Rician and speckle noise. The basic idea of this method is to approximate the required probabilities and probability density functions empirically. The authors claim that the method has low complexity and adapts itself to unknown noise types. The method presented by Anand and Sahambi (2008) removes white Gaussian noise from magnetic resonance images using a bilateral filter in the un-decimated wavelet domain. Approximation coefficients are obtained by applying the un-decimated wavelet transform to the noisy image; a bilateral filter is then applied to these coefficients to remove noise while preserving edges, and the image is reconstructed from the denoised coefficients. The method proposed by Tayel et al. (2011) uses threshold-based neural networks to denoise breast cancer tumour images. It works in two steps: image denoising to make the image clearer, followed by image segmentation to extract the region of interest. Wavelets are combined with neural networks through thresholds to obtain efficient detection and denoising of medical images. Roy et al. (2010) present a hybrid filter, combining wavelets and a bilateral filter, for removing Gaussian noise from X-ray, ultrasound and telescopic images. Applying the bilateral filter before and after wavelet decomposition produces better results than state-of-the-art algorithms. The image quality metrics peak signal-to-noise ratio (PSNR) and image quality index (IQI) were used to assess the performance of this hybrid filter.

2.3 General images

Ville et al. (2003) developed a denoising filter based on fuzzy theory to reduce additive noise in images. Portilla et al. (2003) proposed a statistical model of coefficients to reduce Gaussian noise. It follows a three-step procedure: first, the image is decomposed into pyramid sub-bands; second, each sub-band is denoised based on the observed noisy coefficients; and third, the pyramid transform is inverted to obtain the denoised image. Dabov et al. (2007) extended the BM3D filter to colour images to remove Gaussian noise. The algorithm performs regularized inversion using BM3D, first with hard thresholding and then with Wiener filtering. In another novel approach, Laparra et al. (2010) estimate wavelet coefficients implicitly using support vector regression (SVR). The estimated wavelet coefficients are encoded using anisotropic kernels to eliminate both Gaussian and non-Gaussian noise. Compared with wavelet methods that model the coefficients explicitly, this approach improves denoising performance. Kumar (2013) designed a method combining Gaussian/bilateral filtering with wavelet thresholding to remove Gaussian noise. The bilateral and Gaussian filters are first applied to the noisy image, yielding an image \(x\); wavelet decomposition and thresholding are also applied to the noisy image, yielding \(y\); and the denoised image is obtained by combining \(x\) and \(y\). The performance of this method is satisfactory compared with other methods in terms of PSNR, IQI and visual information fidelity (VIF). The bilateral filter has also been used to denoise multi-resolution images (Zhang and Gunturk 2008): the filter is applied to the sub-bands of a signal decomposed with a wavelet filter bank and combined with wavelet thresholding to effectively remove Gaussian noise. Luisier et al. (2010) proposed a Poisson–Gaussian unbiased risk estimator (PURE-LET) methodology to optimize transform-domain methods and remove mixed Poisson–Gaussian noise. Their results show satisfactory denoising performance in terms of PSNR compared with state-of-the-art algorithms.

Gupta et al. (2010) devised a hybrid filter from linear and nonlinear smoothing filters to remove both additive and multiplicative noise. The filters used in this hybrid filter are mean, median, mode, circular, pyramid, cone and Gaussian filters of size 5 \(\times \) 5. A genetic algorithm (GA) determines the optimal sequence of filters, with SNR or PSNR as its fitness function. Satisfactory results were obtained in comparison with standalone filters.

The techniques discussed above reduce Gaussian and Poisson noise in general images, with the exception of the approach of Gupta et al., which attempts to remove Gaussian, speckle, and salt and pepper noise by applying smoothing filters in a sequence generated by GA. The drawbacks of this hybrid approach are the small number of filters used, the use of the same window size for all smoothing filters, and a comparison made only against the constituents of the hybrid filter. The limitations of GA add further drawbacks: GA requires tuning many parameters and can get stuck in a local optimum, which prevents it from generating optimal solutions.

The focus of this paper is, thus, to explore the effectiveness of applying smoothing linear and nonlinear filters and a sharpening filter in sequence to eliminate Gaussian, speckle, and salt and pepper noise at various noise intensities. The sequence is determined using CSA with PSNR as its fitness function. CSA was selected because of its outstanding performance over GA and particle swarm optimization (PSO) in several domains (Yang and Deb 2009). To address the drawbacks of Gupta's approach, we chose 12 filters of different window sizes and compared the results with wavelet shrinkage methods and nonlinear filters renowned for noise removal and edge preservation. The proposed method is discussed in detail in Sect. 3.

3 Proposed hybrid filter

The goal of this research is to analyze the denoising behaviour of several nonlinear filters and wavelet shrinkage methods against the proposed filter. Moreover, because standalone smoothing filters are less accurate, we examine whether applying them in sequence is more effective. CSA, a soft computing technique, is employed to determine the sequence of smoothing filters.

This section is organized as follows: first, CSA is briefly introduced together with its advantages and applications; second, the proposed filter and its advantages are discussed in detail.

3.1 Cuckoo search algorithm

CSA is inspired by the behaviour of cuckoos, a bird species with an exceptional way of living and an aggressive reproduction strategy. Cuckoos lay their eggs in the nests of host birds, showing a remarkable ability to select nests and to remove existing eggs so as to improve the hatching chances of their own. Host birds that detect the alien eggs either throw them away or abandon the nest and build a new one elsewhere. Cuckoos search for new nest locations using random walks that follow Levy-flight behaviour, which is also observed in insects such as fruit flies: these explore their surroundings using straight flight paths punctuated by sudden 90\(^{\circ }\) turns. The step length of a Levy flight is drawn from the Levy distribution, which has infinite mean and variance. The Levy distribution is given by (Yang 2010):

where \(u\) is the location and \(\lambda \) is the step size at time \(t\).
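Levy-distributed step lengths are commonly generated in cuckoo search implementations with Mantegna's algorithm, sketched below under that assumption (the paper does not say which generator was used). It draws \(s = u / |v|^{1/\beta}\) with \(u \sim N(0, \sigma^2)\) and \(v \sim N(0, 1)\); the choice \(\beta = 1.5\) is a common default rather than a value stated in this paper, and gives a step-length tail decaying roughly as \(t^{-(1+\beta)}\). The function names are illustrative.

```python
import math
import random
from typing import List, Optional


def mantegna_sigma(beta: float) -> float:
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_steps(n: int, beta: float = 1.5, rng: Optional[random.Random] = None) -> List[float]:
    """Draw n heavy-tailed step lengths s = u / |v|^(1/beta), u ~ N(0, sigma^2), v ~ N(0, 1)."""
    rng = rng or random.Random()
    sigma = mantegna_sigma(beta)
    return [rng.gauss(0.0, sigma) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta) for _ in range(n)]


# A continuous cuckoo move, scaled by the step size 0.05 used in this paper.
rng = random.Random(0)
position = 0.0
for step in levy_steps(5, rng=rng):
    position += 0.05 * step
```

Most steps are small, but occasional very large ones let the search jump to distant regions, which is the property usually credited for CSA's exploration.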

Three idealized rules best describe CSA:

1. Each cuckoo lays one egg at a time and dumps it in a randomly selected nest.
2. High-quality eggs (i.e. the best solutions) are kept and passed to the next generation, while the others are discarded.
3. The total number of host nests is fixed, and the host bird discovers a cuckoo's egg with a probability \(p_{\text {a}} \in [0, 1]\).

CSA has been applied with considerable success to optimization problems in a wide range of applications, including spring and welded beam design, steel frames, semantic web service design and embedded systems (Yang and Deb 2014). It has been used to optimize the design of rotor blades (Ernst et al. 2012) and, combined with quantum-inspired principles, to solve the knapsack problem (Layeb 2011). Chandrasekaran and Sishaj (2012) used CSA together with a Lagrangian algorithm and fuzzy set theory to optimize the multi-objective unit commitment problem. Syberfeldt and Lidberg (2012) proposed an extension of CSA and used it to optimize an engine manufacturing line.

The above discussion shows that CSA is widely and efficiently used in optimization problems. CSA has been reported to outperform GA, PSO and artificial bee colony (ABC) (Yang and Deb 2009, 2014). Much of its popularity comes from its randomization: cuckoos move by random walks, which makes the search more exploratory and less prone than GA to getting stuck in a local optimum. Another advantage of CSA is that it has fewer parameters to tune than other optimization algorithms: only the initial population of nests and the probability of discovering bad nests need to be varied to obtain optimized results. Owing to its effectiveness in solving optimization problems, we use CSA to determine the optimal sequence of filters in the proposed algorithm.

3.2 Hybrid smoothing filter

The trend towards hybrid approaches has grown with the advent of AI techniques. A combination of AI and non-AI approaches is expected to produce more effective results, usually at the cost of higher computational complexity. This trade-off is acceptable when accuracy is the main concern.

Keeping in mind the limitations of standalone filters and wavelet shrinkage threshold methods, we devised a hybrid filter, based on smoothing linear and nonlinear filters of several window sizes and a sharpening filter, whose sequence is determined via CSA to remove Gaussian, speckle, and salt and pepper noise at various intensities. We chose smoothing and sharpening filters because we first wanted to validate whether the approach works, and their simple design made them a natural starting point. The filters used in the design of the proposed hybrid filter are listed below:

In CSA, an egg represents a solution. A nest can contain more than one solution; in our case, however, each nest contains exactly one. Initially, a population of three nests was generated, each containing a fixed-length permutation of four filters; variable-length permutations are left for future investigation. These permutations of filters are the solutions. For instance, the permutations 3–4–5–1 and 9–8–12–5 are two different solutions containing different sequences of filters. A single sequence cannot remove all kinds of noise from different images, so separate sequences are required for images polluted with different noise types, and CSA finds the optimal sequence for eliminating a particular noise type from an image.
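Because the list of the twelve filters is not reproduced in this text, the sketch below uses a hypothetical bank of six NumPy filters (IDs 1–6) purely to illustrate the encoding: a nest holds one ordered sequence of four distinct filter IDs, and denoising applies the filters from left to right. `FILTER_BANK`, `random_sequence` and `apply_sequence` are illustrative names, not the authors' code.

```python
import random
from functools import reduce

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window(img, size, reducer):
    """Neighbourhood operation with mirrored borders."""
    win = sliding_window_view(np.pad(img, size // 2, mode="reflect"), (size, size))
    return reducer(win, axis=(-2, -1))


# Hypothetical, reduced filter bank: the IDs and filters are illustrative only.
FILTER_BANK = {
    1: lambda im: _window(im, 3, np.mean),    # mean 3x3
    2: lambda im: _window(im, 5, np.mean),    # mean 5x5
    3: lambda im: _window(im, 3, np.median),  # median 3x3
    4: lambda im: _window(im, 5, np.median),  # median 5x5
    5: lambda im: np.clip(2 * im - _window(im, 3, np.mean), 0.0, 1.0),  # unsharp-mask sharpening
    6: lambda im: _window(im, 7, np.median),  # median 7x7
}
SEQ_LEN = 4


def random_sequence(rng: random.Random) -> list:
    """One nest = one solution: an ordered sequence of SEQ_LEN distinct filter IDs."""
    return rng.sample(sorted(FILTER_BANK), SEQ_LEN)


def apply_sequence(img: np.ndarray, seq: list) -> np.ndarray:
    """Apply the filters in order, e.g. [3, 4, 5, 1] = median3 -> median5 -> sharpen -> mean3."""
    return reduce(lambda im, fid: FILTER_BANK[fid](im), seq, img)


rng = random.Random(0)
seq = random_sequence(rng)
noisy = np.full((32, 32), 0.5)
noisy[8, 8] = 1.0  # a single salt pixel
out = apply_sequence(noisy, seq)
```

Encoding solutions as ID sequences keeps the search space discrete (here 6 × 5 × 4 × 3 orderings; 12 × 11 × 10 × 9 with twelve filters), so each candidate can be scored simply by running the sequence.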

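After the nests are generated, their fitness is evaluated with the objective function, namely the PSNR between the original image \(I\) and the image \(\hat{I}\) denoised by the nest's filter sequence:

\[ \mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \bigl( I(i,j) - \hat{I}(i,j) \bigr)^2 \]

where \(M \times N\) is the image size and \(\mathrm{MAX}_I\) is the maximum possible pixel value (e.g. 255 for 8-bit images).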

Only the best nests, i.e. those with higher PSNR values, are carried over to the next generation. New solutions (cuckoos) are created by random walks with a step size of 0.05. The worst nests are abandoned with probability \(p_{\text {a}} = 0.25\), while the best nests are kept and passed to the next generations. This process is repeated until the stopping criterion is met.
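The fitness evaluation reduces to a PSNR computation. A minimal sketch, assuming images stored as NumPy arrays; `psnr` is a hypothetical helper, and `peak` must match the pixel range (1.0 for images scaled to [0, 1], 255 for 8-bit images).

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between a reference image and an estimate."""
    # Cast to float first so that uint8 differences cannot wrap around.
    mse = np.mean((reference.astype(float) - estimate.astype(float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10 * np.log10(peak ** 2 / mse))
```

In the hybrid filter, the fitness of a nest would then be `psnr(original, denoised)`, where `denoised` is the noisy image after applying that nest's filter sequence; higher is better.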

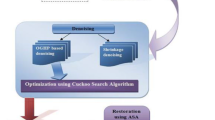

The original and noisy images are provided to CSA as input. A population of nests is generated, each containing one solution, i.e. a fixed-length permutation of filters. Each permutation is used to denoise the noisy image, and the PSNR of the result, \(f_1\), is its fitness. A cuckoo, i.e. a random permutation of four filters, is then generated and applied to the same image, giving a PSNR value \(f_2\). The two values are compared: if \(f_2\) is higher than \(f_1\), the permutation in the randomly selected nest is replaced by the cuckoo's permutation; otherwise, the nest's permutation is passed unchanged to the next generation. The iterations continue until the best PSNR value for the noisy image stops improving: if it remains unchanged for more than 50 iterations, the optimization stops. The flow diagram of the proposed approach is given in Fig. 1.
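The loop described above can be sketched as a discrete cuckoo search over filter sequences, using this paper's parameters as defaults (three nests, four of twelve filters, \(p_{\text {a}} = 0.25\), stop after 50 iterations without improvement). Several details are our assumptions rather than the authors' method: the text does not say how the 0.05 step size maps onto permutations, so a cuckoo is made here by perturbing a nest with a heavy-tailed number of moves (drawn with `random.paretovariate` as a simple power-law stand-in for a Levy flight); each nest other than the current best is abandoned with probability \(p_{\text {a}}\); and `new_cuckoo` and `cuckoo_search` are hypothetical names. The fitness is any function of a sequence; in the hybrid filter it would denoise the noisy image with the sequence and return the PSNR against the original.

```python
import random


def new_cuckoo(seq, n_filters, rng):
    """Perturb a sequence by a heavy-tailed number of moves (discrete stand-in for a Levy flight)."""
    cuckoo = list(seq)
    moves = min(len(cuckoo), int(rng.paretovariate(1.5)))  # power-law tail, at least one move
    for _ in range(moves):
        if rng.random() < 0.5:
            i, j = rng.sample(range(len(cuckoo)), 2)
            cuckoo[i], cuckoo[j] = cuckoo[j], cuckoo[i]  # reorder two filters
        else:
            unused = [f for f in range(1, n_filters + 1) if f not in cuckoo]
            cuckoo[rng.randrange(len(cuckoo))] = rng.choice(unused)  # swap in an unused filter
    return cuckoo


def cuckoo_search(fitness, n_filters=12, seq_len=4, n_nests=3, pa=0.25,
                  patience=50, max_iter=1000, seed=0):
    """Maximize fitness(seq) over ordered sequences of distinct filter IDs (n_filters > seq_len)."""
    rng = random.Random(seed)
    rand_seq = lambda: rng.sample(range(1, n_filters + 1), seq_len)
    nests = [rand_seq() for _ in range(n_nests)]
    scores = [fitness(s) for s in nests]
    top = max(range(n_nests), key=scores.__getitem__)
    best_seq, best_score, stale = list(nests[top]), scores[top], 0

    for _ in range(max_iter):
        # 1. Each nest produces a cuckoo; it replaces a random nest j only if fitter (f2 > f1).
        for i in range(n_nests):
            cuckoo = new_cuckoo(nests[i], n_filters, rng)
            f2 = fitness(cuckoo)
            j = rng.randrange(n_nests)
            if f2 > scores[j]:
                nests[j], scores[j] = cuckoo, f2
        # 2. Every nest except the current best is abandoned with probability pa.
        top = max(range(n_nests), key=scores.__getitem__)
        for i in range(n_nests):
            if i != top and rng.random() < pa:
                nests[i] = rand_seq()
                scores[i] = fitness(nests[i])
        # 3. Keep the best solution; stop once it has not improved for `patience` iterations.
        top = max(range(n_nests), key=scores.__getitem__)
        if scores[top] > best_score:
            best_seq, best_score, stale = list(nests[top]), scores[top], 0
        else:
            stale += 1
            if stale > patience:
                break
    return best_seq, best_score


# Toy fitness for illustration: number of positions matching a target sequence.
target = [3, 4, 5, 1]
toy = lambda s: sum(a == b for a, b in zip(s, target))
seq, score = cuckoo_search(toy, patience=3000, max_iter=20000, seed=1)
```

Because a cuckoo only replaces a nest when it is strictly fitter, and the best nest is never abandoned, the best fitness in the population never decreases; the patience counter then implements the "unchanged for more than 50 iterations" stopping rule.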

4 Results and discussion

This section presents experimental results demonstrating the performance of the proposed approach on standard 512 \(\times \) 512 images (‘Lena’, ‘Pirate’, ‘Mandrill’), shown in Fig. 2, contaminated with Gaussian, speckle, and salt and pepper noise at different degradation levels. The results of the proposed filter are evaluated against wavelet methods and nonlinear filters using the image quality metrics PSNR and IQI. The implementation details, the parameters of the denoising approaches, and the noise types and intensities used are given below.
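For reference, the image quality index is commonly taken to be Wang and Bovik's universal quality index \(Q = \frac{4 \sigma_{xy} \mu_x \mu_y}{(\sigma_x^2 + \sigma_y^2)(\mu_x^2 + \mu_y^2)}\), which combines correlation, luminance and contrast distortion; we assume, without confirmation from the text, that this is the IQI reported here. The sketch below computes a single global value for brevity, whereas the usual definition averages \(Q\) over sliding windows (typically 8 \(\times \) 8). The helper name `iqi` is hypothetical.

```python
import numpy as np


def iqi(x: np.ndarray, y: np.ndarray) -> float:
    """Global universal image quality index in [-1, 1]; 1 only when y equals x."""
    x = x.astype(float).ravel()
    y = y.astype(float).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    # Undefined (division by zero) for two constant images with zero mean or zero variance.
    return float(4 * cov * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))
```

Unlike PSNR, the index falls below 1 for a pure brightness shift or contrast change even when the structure is intact, which is why the two metrics can rank filters differently.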

4.1 Implementation details and parameters

All experiments were implemented in MATLAB and run on a Pentium IV PC. All standard test images have a resolution of 512 \(\times \) 512. For the wavelet thresholding methods, the db4 wavelet is used with three decomposition levels. The methods used for comparison are Bayes Shrink, Visu Shrink, SURE Shrink, and the bilateral, Lee and Kuan filters. The parameters of CSA are:

The initial population of nests (\(n\)) is three, with a permutation of four filters in each nest; the probability of finding bad nests (\(p_{\text {a}}\)) is 0.25; and the Levy-flight step size taken by cuckoos is 0.05.

Two experiments were conducted to assess the performance of the existing and proposed denoising approaches with various noises at different levels.

Experiment 1: White Gaussian noise at 0.01, 0.05 and 0.1 and salt and pepper noise at 0.05, 0.1 and 0.5 on standard images.

Experiment 2: Speckle noise at 0.01, 0.05 and 0.1 on standard images.
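The noise levels above are consistent with MATLAB's `imnoise` conventions (variance for Gaussian and speckle noise, density for salt and pepper noise), but the text does not state the calls used, so the NumPy sketch below is an assumption-labelled approximation of those conventions for images scaled to [0, 1]. The function names are hypothetical.

```python
import numpy as np


def add_gaussian(img, var, rng, mean=0.0):
    """Additive white Gaussian noise with the given variance, clipped to [0, 1]."""
    return np.clip(img + rng.normal(mean, np.sqrt(var), img.shape), 0.0, 1.0)


def add_speckle(img, var, rng):
    """Multiplicative noise J = I + n * I, with n uniform, zero mean and the given variance."""
    half_width = np.sqrt(3 * var)  # a uniform variable on [-w, w] has variance w^2 / 3
    n = rng.uniform(-half_width, half_width, img.shape)
    return np.clip(img + n * img, 0.0, 1.0)


def add_salt_pepper(img, density, rng):
    """Set about density * 100 % of pixels to 0 (pepper) or 1 (salt) with equal probability."""
    out = img.copy()
    u = rng.random(img.shape)
    out[u < density / 2] = 0.0
    out[(u >= density / 2) & (u < density)] = 1.0
    return out


# Example: the three Gaussian noise levels of Experiment 1 on a flat test image.
rng = np.random.default_rng(0)
img = np.full((256, 256), 0.5)
gaussian_versions = [add_gaussian(img, v, rng) for v in (0.01, 0.05, 0.1)]
```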

4.2 Experiment 1: Gaussian and salt and pepper noise

The first experiment compares the performance of the proposed hybrid filter with state-of-the-art algorithms on standard images contaminated with Gaussian noise of intensity 0.1 and salt and pepper noise of intensity 0.5. PSNR and IQI values for these highest noise intensities are given in Tables 1 and 2, with the best values highlighted, while graphs for the remaining noise intensities are provided in Figs. 7 and 8. Visual comparisons are also provided to examine the denoising behaviour of all the approaches in more depth.

The proposed approach performs well: standalone filters cause blurring and are not very effective at removing noise, but their combination as optimized by CSA proves proficient. Table 1 presents the PSNR and IQI values of the denoising approaches after Gaussian noise removal. The proposed approach increases PSNR by around 3.5 % on average and by up to 10 % in the best case. Although its IQI does not match that of the filters specialized for Gaussian noise, it improves IQI by around 0.3 on average relative to the other filters listed. The trend for salt and pepper noise is similar, as shown in Table 2: the average PSNR increase over all filters is around 18, and the average IQI increase over the non-specialized filters is close to 0.16.

Table 1 shows that the proposed filter outperforms the other approaches on the Lena and Pirate images, while the bilateral filter performs slightly better than the proposed one in terms of both PSNR and IQI on the Mandrill image. Visual comparisons also reveal that the bilateral filter, a nonlinear filter specifically designed for Gaussian noise, preserves edges better, which results in higher PSNR values. Even so, the performance of the proposed filter on the Mandrill image is satisfactory, as the visual comparison between the bilateral and proposed filters in Fig. 3 shows. This comparison suggests that the lower PSNR of the proposed filter stems from weaker edge preservation rather than weaker noise suppression. On the Lena and Pirate images the proposed filter obtains higher PSNR values, which is expected because PSNR is the objective function of CSA and all filter sequences are evaluated in terms of PSNR.

Unlike the bilateral filter, the Lee and Kuan filters are not efficient at reducing Gaussian noise and therefore have lower PSNR values; their poor Gaussian noise removal is also visible in the visual comparisons. However, their IQI values are higher than those of the proposed filter because they do not destroy image textures.

The hybrid filter outperforms the three wavelet shrinkage methods in terms of both PSNR and IQI. Visual comparisons further show that these methods destroy image textures and contrast, which is the main reason for their lower PSNR and IQI values. Figure 4 shows the denoised Lena images obtained with all the denoising approaches after removing Gaussian noise of intensity 0.1; the proposed filter successfully suppresses the noise.

It is evident from Fig. 4 that wavelets destroy image textures while removing noise. Nonlinear filters are good at edge preservation, while the denoising performance of the proposed filter is comparatively better.

Table 2 lists the PSNR and IQI values of the denoising approaches after salt and pepper noise removal. The proposed filter performs much better than all the other techniques in terms of PSNR, and in terms of IQI it is surpassed only by the Lee and Kuan filters. Visual comparisons, however, show that neither of these two filters is effective at removing Gaussian or salt and pepper noise.

The denoising results of the proposed filter are also compared with those of the median filter, which is popular for its effectiveness in salt and pepper noise removal. A 5 \(\times \) 5 window is used for the median filter instead of the widely used 3 \(\times \) 3 window, because the 5 \(\times \) 5 median filter performed better than the 3 \(\times \) 3 one. A visual comparison between the median filter and the proposed filter after removing salt and pepper noise at intensity 0.5 is given in Fig. 5.

Figure 6 shows the denoised Lena images produced by all the denoising approaches after removing salt and pepper noise at intensity 0.5. The performance of the proposed filter is exceptional in this case.

Neither wavelets nor nonlinear filters could remove salt and pepper noise. It is worth noting that the sequence that eliminates salt and pepper noise does not consist of the median filter alone, the filter usually favoured for salt and pepper noise; it combines median 3 \(\times \) 3, median 5 \(\times \) 5, cone and Gaussian filters. Hence, without knowing the noise type, CSA finds an optimal sequence to reduce it. This is the real benefit of the proposed filter.

A PSNR comparison of the hybrid filter with the state-of-the-art approaches on the Lena image with Gaussian noise at 0.01 and 0.05 and salt and pepper noise at 0.05 and 0.1 is shown in Figs. 7 and 8, respectively. For Gaussian noise the average PSNR increase is 6.3 %, whereas for salt and pepper noise it is just above 28 %.

4.3 Experiment 2: Speckle noise

The second experiment compares the proposed hybrid filter with existing approaches on standard images corrupted with speckle noise of intensity 0.1. Both PSNR and IQI values are computed and analysed.

As listed in Table 3, the PSNR values of the proposed hybrid filter are higher than those of all other denoising techniques except the bilateral filter. The Lee and Kuan filters outperform it in terms of IQI, mainly because these filters are designed specifically for speckle noise reduction; nevertheless, the visual quality of the image denoised by the hybrid filter is as good as theirs. The PSNR of the bilateral filter is again slightly higher than that of the proposed filter, but the visual quality of their denoised images is similar. Figure 9 shows visual comparisons of the state-of-the-art and proposed algorithms on the Lena image polluted with speckle noise of intensity 0.1. Although the Lee and Kuan filters score better for speckle noise, the visual comparison shows that they are good at preserving edges rather than at suppressing noise. Among the wavelet methods, SURE Shrink performs better than the other two on the Lena and Pirate images, while Bayes Shrink performs better on the Mandrill image. Overall, the proposed approach increases PSNR by around 1.5 and IQI by around 0.1 on average.

A PSNR comparison of the hybrid filter with the state-of-the-art approaches on the Lena image with speckle noise at 0.01 and 0.05 is shown in Fig. 10, where the average increase is around 5 % across these noise levels.

4.4 Comparison with genetic algorithms

In this section, we compare the proposed hybrid filter with the hybrid smoothing filter that used GA to generate its sequence of filters. Five images of different sizes, contaminated with several types of noise, are used in this experiment. The proposed hybrid smoothing filter outperforms the hybrid filter presented by Gupta et al. Table 4 lists the PSNR and IQI values of the denoised images obtained with the hybrid filters generated through GA and CSA. The noise intensities used in this experiment are as follows:

Gaussian noise with mean 0 and variance 0.25, and salt and pepper noise with density 0.05. Poisson noise, on the other hand, is data-dependent: it is not generated independently and added to the image, but derived from the pixel values themselves. We generated Poisson noise by drawing from a Poisson distribution based on the data of the input image. For instance, for a uint8 input pixel with value 20, the corresponding output pixel is drawn from a Poisson distribution with mean 20.
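The data-dependent Poisson noise just described can be sketched in NumPy as follows: each pixel value of the uint8 image is used as the mean of a Poisson draw. This mirrors the description above; whether the authors used MATLAB's `imnoise` 'poisson' option or their own code is not stated, and `add_poisson` is a hypothetical name.

```python
import numpy as np


def add_poisson(img_u8: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Data-dependent Poisson noise: each output pixel ~ Poisson(mean = input pixel value)."""
    counts = rng.poisson(img_u8.astype(float))
    return np.clip(counts, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
img = np.full((256, 256), 20, dtype=np.uint8)  # every pixel has value 20
noisy = add_poisson(img, rng)
```

Since a Poisson variable's variance equals its mean, brighter regions receive proportionally more noise, which is why this noise cannot simply be added as an image-independent pattern.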

A visual comparison between the two hybrid filters is given in Figs. 11 and 12 to investigate their denoising behaviour more closely. Figure 11 shows that the image denoised using CSA has sharper edges and is clearer than the GA-denoised image, and Fig. 12 again shows that the CSA-generated filter suppresses noise better than the GA-generated one.

Table 4 shows that the proposed filter performs better than the other hybrid filter on all images in terms of PSNR. The GA-generated hybrid filter has a slightly higher IQI on only one image, the fourth. The proposed hybrid filter uses only PSNR as its objective function and therefore produces filter sequences with higher PSNR values. Although IQI is not the objective function of CSA, the proposed filter performs well in terms of IQI on all images except the one mentioned above, and the decrease in IQI on that image is not significant. On the other images, the largest IQI increase is around 0.78 and the average increase is 0.3. For PSNR, the average increase is around 18 %, roughly doubling in the best case.

The proposed method effectively removes all the noise types tested, at both lower and higher intensities. This denoising behaviour makes it more reliable than the other approaches. However, its computational cost is much higher than that of the other methods. Despite this inefficiency, CSA remains attractive compared with other AI techniques because it has fewer parameters to tune.

The contributions of this research work are summarized as follows:

- Improved the denoising performance of filters by applying them in a sequence.
- Explored CSA as an optimization tool.
- Developed a hybrid image denoising technique.
- Devised a more reliable denoising method that outperforms the other techniques compared.
- Studied the behaviour of different denoising algorithms on different kinds of noise at different noise intensities.
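The first contribution, applying filters in a sequence, can be illustrated with a small sketch. The sketch assumes a hypothetical three-filter bank (3×3 mean, 3×3 median, 5×5 median), not necessarily the bank used in the paper. It scores every fixed-length permutation of that bank by PSNR against the clean image. This exhaustive search stands in for CSA and is feasible only for very small banks. As the bank and the sequence length grow, the number of permutations explodes, and a metaheuristic such as CSA becomes attractive.

```python
import itertools
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(img: np.ndarray, k: int) -> np.ndarray:
    """All k x k neighbourhoods of img, with edge replication at the borders."""
    return sliding_window_view(np.pad(img, k // 2, mode="edge"), (k, k))


# Hypothetical filter bank for illustration only.
FILTERS = {
    "mean3": lambda im: _windows(im, 3).mean(axis=(-2, -1)),
    "median3": lambda im: np.median(_windows(im, 3), axis=(-2, -1)),
    "median5": lambda im: np.median(_windows(im, 5), axis=(-2, -1)),
}


def apply_sequence(img: np.ndarray, seq) -> np.ndarray:
    """Apply the named filters one after another."""
    out = img.astype(np.float64)
    for name in seq:
        out = FILTERS[name](out)
    return out


def psnr(ref: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    mse = np.mean((ref.astype(np.float64) - test) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def best_sequence(clean: np.ndarray, noisy: np.ndarray, length: int):
    """Exhaustively score every fixed-length permutation of the bank by PSNR."""
    scored = ((psnr(clean, apply_sequence(noisy, s)), s)
              for s in itertools.permutations(FILTERS, length))
    return max(scored)  # (best PSNR, best filter sequence)
```

PSNR is used as the score here because it is the objective the proposed method optimises. Swapping in another metric only requires changing the scoring expression.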

5 Conclusion and future directions

We proposed a hybrid filter whose sequence is determined by an optimization algorithm, the cuckoo search algorithm (CSA). Different denoising algorithms were compared and evaluated in terms of PSNR and IQI. The proposed technique improves the performance of filters by applying them in a sequence. This paper has explored CSA as an optimization tool and studied the behaviour of different denoising algorithms on different kinds of noise at various intensities. The proposed approach outperforms the other approaches in removing Gaussian, salt and pepper, and speckle noise. On average, a 5–28 % rise in PSNR is measured for different noises at varying intensities. Moreover, compared with GA, the average PSNR improvement is around 18 %, doubling in certain cases. Hence, we conclude that the proposed method effectively removes all the tested noise types at various intensities.

As shown in this study, CSA can yield better results than other techniques. However, it can be computationally expensive, and different variants of CSA are a current research focus. This motivates a comparison of the AI techniques used in image processing to determine which is best suited to such applications; this is left as future work. A limitation of CSA in our case is its objective function. We considered only PSNR, but other image quality metrics, or combinations of them, could be used to improve the results further. For example, IQI, VIF, IQI together with PSNR, or IQI together with VIF could serve as the objective function to yield better visual quality. Another drawback is that the proposed filter performs denoising using fixed-length permutations of filters, which is less practical; variable-length permutations should therefore be explored in future work. The proposed approach can also be extended to other resolutions, file formats and noise intensities. The filter was tested on 2-D images and can be extended to 3-D images by applying 3-D filters. Finally, although the proposed method increases the PSNR of the denoised images, this could be improved further with the help of additional nonlinear filters, wavelets and transforms.
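One possible form of the multi-metric objective suggested above is a weighted sum of PSNR and IQI. The sketch below is hypothetical. PSNR is capped at an assumed ceiling of 50 dB and divided by it so that both terms lie roughly in [0, 1], and the weight `w` is a free parameter; neither value comes from this study. IQI is computed globally over the whole image, not over sliding windows.

```python
import numpy as np


def psnr(ref, test, peak=255.0):
    mse = np.mean((ref.astype(np.float64) - test.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def iqi(ref, test):
    """Global (single-window) universal image quality index."""
    x, y = ref.astype(np.float64).ravel(), test.astype(np.float64).ravel()
    mx, my = x.mean(), y.mean()
    cxy = np.mean((x - mx) * (y - my))
    return float(4 * cxy * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2)))


def combined_objective(ref, test, w=0.5, psnr_cap=50.0):
    """Weighted sum of capped, normalised PSNR and IQI (higher is better).
    w and psnr_cap are hypothetical tuning choices."""
    p = min(psnr(ref, test), psnr_cap) / psnr_cap
    return w * p + (1 - w) * iqi(ref, test)
```

Setting `w = 1` recovers a PSNR-only objective like the one used in this paper, while smaller values of `w` give structural fidelity (IQI) more influence over which filter sequence is selected.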

Abbreviations

- AI: Artificial intelligence
- CSA: Cuckoo search algorithm
- db4: Daubechies-4 wavelet
- GA: Genetic algorithm
- IQI: Image quality index
- PSNR: Peak signal-to-noise ratio
- PSO: Particle swarm optimization
- SNR: Signal-to-noise ratio

References

Anand CS, Sahambi JS (2008) MRI denoising using bilateral filter in redundant wavelet domain. In: IEEE conference

Bai R (2008) Wavelet shrinkage based image denoising using soft computing. Dissertation, University of Waterloo, Waterloo, Ontario

Benes R, Riha K (2012) Medical image denoising by improved Kuan filter. Digital Image Process Comput Graph 10(1)

Chandrasekaran K, Simon Sishaj P (2012) Multi-objective unit commitment problem using Cuckoo search Lagrangian method. Int J Eng Sci Technol 4(2):89–105

Chang SG, Yu B, Vetterli M (2000) Adaptive wavelet thresholding for image denoising and compression. IEEE Trans Image Process 9(9):1532–1546

Chitroub S (2003) Principal component analysis by neural network. Remote sensing images compression and enhancement. IEEE, ICECS, Application

Dabov K, Foi A, Katkovnik V, Egiazarian K (2007) Image restoration by sparse 3D transform-domain collaborative filtering. IEEE Trans Image Process 16(8):2080–2095

Dangeti S (2003) Denoising techniques: a comparison. Dissertation, Andhra University College of Engineering, Visakhapatnam

Donoho DL (1992) De-noising by soft-thresholding. Dissertation, Stanford University, California

Donoho DL, Johnstone IM (1995) Adapting to unknown smoothness via wavelet shrinkage. J Am Stat Assoc 90(432):1200–1224

Ernst B, Bloh M, Seume JR, González AG (2012) Implementation of the “Cuckoo Search” algorithm to optimize the design of wind turbine rotor blades

Gupta S, Kumar R, Panda SK (2010) A genetic algorithm based sequential hybrid filter for image smoothing. Int J Signal Image Process 1(4):242–248

Ilango G, Marudhachalam R (2011) New hybrid filtering techniques for removal of Gaussian noise from medical images. ARPN J Eng Appl Sci 6(2):15–18

Kumar BKS (2013) Image denoising based on Gaussian/bilateral filter and its method noise thresholding. Signal Image Video Process 7:1159–1172. doi:10.1007/s11760-012-0372-7

Lakshmi B, Kavita P, Ramu K (2012) A parallel model for noise reduction of images using smoothing filters and image averaging. Indian J Comput Sci Eng (IJCSE) 2(6):837–844

Laparra V, Gutiérrez J, Camps-Valls G, Malo J (2010) Image denoising with kernels based on natural image relations. J Mach Learn Res 11:873–903

Layeb A (2011) A novel quantum inspired cuckoo search for Knapsack problems. Int J Bio Inspir Comput 3(5):297–305

Chen Lixia, Liu Yanxiong, Liu Xujiao, Wang Xuewen (2013) A novel model to remove multiplicative noise. J Comput Inf Syst 9(11):4223–4229

Luisier F, Blu T, Unser M (2010) Image denoising in mixed Poisson-Gaussian noise. IEEE Trans Image Process 20(3):696–708

Matlab 6.1, Image processing toolbox. http://www.mathworks.com/access/helpdesk/help/toolbox/images/images.shtml

Mohapatra S (2008) Development of impulsive noise detection schemes for selective filtering in images. Dissertation, National Institute of Technology Rourkela, Orissa

Mohapatra S, Sa KP, Majhi B (2007) Impulsive noise removal image enhancement technique. In: 6th WSEAS international conference on circuits, systems, electronics, control and signal processing (CSECS-2007), Cairo, Egypt, pp 317–322

Portilla J, Strela V, Wainwright MJ, Simoncelli EP (2003) Image denoising using scale mixtures of Gaussians in the wavelet domain. IEEE Trans Image Process 12(11):1338–1351

Pragada S, Sivaswamy J (2008) Image denoising using matched biorthogonal wavelets. In: 6th Indian conference on computer vision, graphics and image processing, IEEE

Pižurica A, Philips W, Lemahieu I, Acheroy M (2003) A versatile wavelet domain noise filtration technique for medical imaging. IEEE Trans Med Imaging 22(3):323–331

Roy S, Sinha N, Sen AK (2010) A new hybrid image denoising method. Int J Inf Technol Knowl Manag 2(2):491–497

Sharma D (2008) A comparative analysis of thresholding techniques used in image denoising through wavelets. Dissertation, Thapar University, Patiala

Syberfeldt A, Lidberg S (2012) Real-world simulation-based manufacturing optimization using Cuckoo search. In: Laroque C, Himmelspach J, Pasupathy R, Rose O, Uhrmacher AM (eds) Proceedings of the 2012 winter simulation conference

Tayel MB, Abdou MA, Elbagoury AM (2011) An efficient thresholding neural network technique for high noise densities environments. Int J Image Process (IJIP) 5(4):403–416

Tomasi C, Manduchi R (1998) Bilateral filtering for gray and color images. In: Proceedings of the 1998 IEEE international conference on computer vision, Bombay, India

The USC SIPI database, USC Viterbi School of Engineering, University of Southern California, United States

Van De Ville D, Nachtegael M, Van der Weken D et al (2003) Noise reduction by fuzzy image filtering. IEEE Trans Fuzzy Syst 11(4):429–436

Velaga S, Kovvada S (2012) Efficient techniques for denoising of highly corrupted impulse noise images. Int J Soft Comput Eng 2(4):253–257

Yang X-S, Deb S (2009) Cuckoo search via Levy flights. In: Proceedings of world congress on nature and biologically inspired computing NaBIC (2009) India. IEEE Publications, USA, pp 210–214

Yang X-S, Deb S (2014) Cuckoo search: recent advances and applications. Neural Comput Appl. doi:10.1007/s00521-013-1367-1

Yang X-S (2010) Engineering optimisation: an introduction with metaheuristic applications. Wiley, New York

Zhang M, Gunturk BK (2008) Multiresolution bilateral filtering for image denoising. IEEE Trans Image Process 17(12):2324–2333


Additional information

Communicated by V. Loia.


Cite this article

Malik, M., Ahsan, F. & Mohsin, S. Adaptive image denoising using cuckoo algorithm. Soft Comput 20, 925–938 (2016). https://doi.org/10.1007/s00500-014-1552-x
