Abstract

Image segmentation techniques have been widely applied in many fields such as pattern recognition and feature extraction. For the primate visual attention model, the perceptual organization is an important process to automatically extract the desirable features. In this article, we propose a new method called an automatic multilevel thresholding algorithm using the stratified sampling and Tabu Search (AMTSSTS) by imitating the primate visual perceptual behaviors. In the AMTSSTS algorithm, a gray image is treated as a population with the gray values of pixels as the individuals. First, the image is evenly divided into several strata (blocks), and a sample is drawn from each stratum. Second, a Tabu Search-based optimization is applied to each sample to maximize the ratio between mean and variance for each sample. The threshold number and threshold values are preliminarily determined based on the optimized samples, and are further optimized by a deterministic method which includes a new local criterion function with property of local continuity of an image. Results of extensive simulations on Berkeley datasets indicate that AMTSSTS can obtain more effective, efficient and smooth segmentation, and can be applied to complex and real-time environments.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image segmentation is an essential and important operation for feature extraction, pattern recognition and classification. It is the process of assigning a label to every pixel in an image. Pixels with the same annotated label constitute a region, and the pixels inside this region share similar visual characteristics. Each region is homogeneous and connected. The union of any two spatially adjacent regions is not homogenous.

For intensity images, there are four popular approaches: threshold techniques, edge-based methods, region-based techniques, and connectivity-preserving relaxation methods. Of these, the image thresholding methods are the most widely used in image segmentation. The thresholding techniques involve bi-level thresholding and multilevel thresholding. Bi-level thresholding techniques classify the pixels into two groups; the first group includes the pixel values that are above a certain threshold value and the second group includes the pixel values that are below the threshold value. Multilevel thresholding techniques divide the pixels into several classes; that is, for the pixels that can be grouped as the same class, their pixel values should be within a certain range of threshold values.

Many image thresholding methods can be found in Ma et al. (2010), Sezgin and Sankur (2004). In general, the thresholding methods can be also classified into parametric and nonparametric approaches. In the parametric approaches, the gray-level distribution of each class has a probability density function that is generally assumed to obey a Gaussian distribution and will attempt to find an estimate of the parameters of distribution that will best fit the given histogram data. In the nonparametric approaches, they find the thresholds that separate the gray-level regions of an image in an optimal manner based on discriminating criteria such as the between-class variance, entropy, etc. They are easy to extend to multilevel thresholding; however, the amount of thresholding computation significantly increases with this extension. To overcome this problem, some recent multilevel thresholding techniques based on intelligent optimization algorithms (IOAs) have been proposed and shown promising results. However, they still suffer from many problems. We will discuss these problems in Sect. 2.

The structure of this paper is as follows. Section 2 introduces the related work. Section 3 formulates our problem, and presents the related methods and our proposed algorithm. Section 4 provides rigorous discussion regarding our proposed algorithm from the three different aspects: the optimization method, the sampling method, and algorithmic complexity. Section 5 discusses the experimental results. Finally, Sect. 6 concludes our findings and further work in the future research.

2 Related work

IOAs that were proposed for the multilevel thresholding are divided into several categories, genetic algorithms (GAs) (Hammouche et al. 2008; Bosco 2001; Lai and Chang 2009; Lin et al. 2010; Yin 1999; Yang et al. 2003; Lai and Tseng 2004; Cao et al. 2008), particle swarm optimization algorithms (PSOs) (Chander et al. 2011; Yin 2007; Zahara et al. 2005; Gao et al. 2010; Fan and Lin 2007), ant colony optimization algorithms (ACOs) (Malisia and Tizhoosh 2006; Tao et al. 2008), artificial neural networks (ANNs) (Yang et al. 2003; Bhattacharyya et al 2011), fuzzy computing algorithms (FCs) (Chen and Zhang 2004; Li and Staunton 2007; Tan and Isa 2011), simulated annealing algorithms (SAs) (Hou et al. 2006), and honey bee mating optimization algorithms (HBMOs) (Horng 2010).

Hammouche et al. (2008) proposed a fast multilevel thresholding technique based on GA. This technique employed wavelet transform to reduce the length of the histogram, but it was time consuming to choose the appropriate number of thresholds and threshold values by optimizing the automatic thresholding criterion (ATC) proposed by Yen et al. (1995) cited in Hammouche et al. (2008). Lai and Chang (2009) proposed a clustering-based approach using a hierarchical evolutionary algorithm (HEA) for automatic medical image segmentation with the help of the particular property of HEA, but this approach initialized the number of regions randomly. Chander et al. (2011) proposed a new variant of PSO for image segmentation, of which an iterative procedure was proposed to obtain initial thresholds, however, its computational time was still somewhat expensive. Yin (2007) developed a recursive programming technique for computing the minimum cross entropy thresholding (MCET) objective function which stores the results of preceding tries as the basis for the computation of succeeding ones; it can reduce the order of magnitude of computing the multilevel thresholds to some extent. Gao et al. (2010) proposed the quantum-behaved PSO method, which employed the cooperative method (CQPSO) to conquer the curse of dimensionality. Although the cooperative method ensured that the search space was searched more thoroughly and the chances of finding better solutions were improved, it increased computational complexity. Tao et al. (2008) proposed a fuzzy entropy method that incorporated the ACO method for the segmentation of infrared images. The ACO method was used to obtain the optimal combination of the fuzzy parameters; however, how to automatically decide the threshold number in these works (Yin 2007; Zahara et al. 2005; Gao et al. 2010; Fan and Lin 2007; Malisia and Tizhoosh 2006; Tao et al. 2008; Chang and Chung 2001; Bhattacharyya et al 2011) was not mentioned.

Chen and Zhang (2004) proposed two variants of fuzzy C-means (FCM) clustering method with spatial constraints for image segmentation, which were robust to noise and outliers. Chuang et al. (2006) presented a fuzzy C-means algorithm that incorporated spatial information into the membership function for image segmentation, which was also a powerful method for noisy image segmentation. Li and Staunton (2007) proposed a modified fuzzy C-means segmentation method for uneven illumination images, which was based on both pixel intensity and the average intensity of its neighborhood that was within a segment of the biased illumination field. The FCM method has been a popular algorithm in computer vision and pattern recognition due to its clustering validity and simplicity of implementation, but it often encounters two unavoidable difficulties of deciding the threshold number and obtaining the initial thresholds that are properly distributed. In addition, the papers in Chen and Zhang (2004), Chuang et al. (2006), Li and Staunton (2007) did not cover the method of automatically deciding the threshold number, either. Later, Tan and Isa (2011) presented a novel histogram thresholding fuzzy C-means hybrid (HTFCM) approach for color image segmentation, which consists of the histogram thresholding module and the FCM module. The histogram thresholding module was a good solution to overcome the two problems of FCM that were mentioned above, but it still had higher computational complexity in comparison with the technique that uses the stratified sampling technique in this paper. In Hou et al. (2006), Horng (2010), the authors did not discuss the problem of automatically choosing the threshold number, either.

Although many segmentation methods based on IOAs have been used to reduce the computational time in thresholding, they are limited in the following three aspects. First, the threshold number is predetermined, which implies that users must identify the number of regions beforehand; however, this demand is strict and costly in practice. Second, they need preprocessing to reduce or remove the noise to enhance the convergence rate or to improve the stability using some special techniques (Hammouche et al. 2008; Gao et al. 2010; Chen and Zhang 2004). Third, the IOA is employed to optimize the global criterion function based on between-class variance (Otsu 1979), entropy (Li et al. 1995; Cheng and Lui 1997; Huang and Wang 1995) and minimum bayes error (Kittler and Illingworth 1986; Ye and Danielsson 1988); however, no consideration is given to local continuity of an image.

To rectify these limitations, an automatic multilevel thresholding algorithm (AMTSSTS) using stratified sampling and Tabu Search is proposed. This method is based on the study that higher visual processes of primate visual system appear to select a subset of available sensory information before further processing (Tsotsos et al. 1995; Itti et al. 1998). The fundamental idea of AMTSSTS is “forecasting + optimization”. “Forecasting” corresponds to “select a subset of available sensory information”, and “optimization” corresponds to “further processing”. Hence, AMTSSTS is very different from aforementioned algorithms. In AMTSSTS, the threshold number and threshold values are automatically forecasted by the prediction method combining stratified sampling with Tabu Search, and then they are optimized by an optimization algorithm. In addition, instead of the global criterion function based on between-class variance or entropy method, we did propose a new local criterion function, because each of the pixels in a region is similar with respect to some characteristics or computed properties such as intensity and texture.

3 The proposed method

3.1 Problem formulation

A formal definition of segmentation is given below (1). Let \(I\) be an image and \(H\) be a homogeneity predicate defined over connected pixel groups. The image \(I\) is then partitioned into a set of different regions {\(R_{1} ,R_{2} ,\ldots ,R_{M}\)}, which is formulated as follows:

In the following subsections, we will describe our proposed algorithm in detail.

3.2 Tabu Search

The Tabu search algorithm (Glover 1989, 1990) proposed by Glover was successfully applied to different combinatorial problems such as planning and scheduling problems (Glover and Laguna 1997). It was an iterative heuristic optimization method based on the local search techniques that started from a feasible solution. This method moved to another solution at each iteration by trying to search unexplored regions of the solutions space, and it also attempted to avoid repetitions with the help of a tabu list. This list is a short-term memory mechanism containing solutions that have been visited in the recent past (less than tt iterations ago, where tt called the tabu tenure is the number of previous solutions to be stored). Note that the Tabu Search algorithm excludes the solutions in the tabu list. Moreover, a variation of a tabu list prohibits solutions that have certain attributes or prevents certain moves. The new solution selected at each iteration is the best one belonging to \(N(s)\), the neighborhood of the current solution \(s\). In general, the neighborhood contained all the solutions that could be obtained from \(s\) using non-tabu moves. However, the tabu list raised the problem that might hinder some excellent solutions, which might not have been visited. That is to say, when a single attribute was marked as a tabu, this typically resulted in more than one solution being tabu. Some of these solutions that must now be avoided could be of excellent quality and might not have been visited. To mitigate this problem, “aspiration criteria” are introduced to override a solution’s tabu state.

The Tabu Search heuristic follows the general guidelines (Glover and Laguna 1997). Let \(s\) and \(s^*\) denote the current and best known solutions, respectively. \(G\) is the iteration counter and is used to control the loop in the following pseudo code (as shown in Fig. 1). \(H\) is the tabu list.

In the following section, we will present our AMTSSTS framework.

3.3 Framework of AMTSSTS

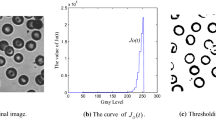

In our method, we treat any pixel value of a gray image as an individual over the population. The proposed AMTSSTS method consists of four main steps: first, an image is evenly divided into some image blocks (strata), and a sample containing a group of pixels is taken from each stratum; second, every sample is optimized by the Tabu Search algorithm whose fitness function is the ratio of its mean and variance; third, the threshold number and threshold values are forecasted according to the optimized samples; and finally, the threshold number and threshold values are optimized by an optimization algorithm. The process of AMTSSTS is shown in Fig. 2. An example of using our segmentation method is further displayed in Fig. 3.

3.3.1 Stratified sampling

Stratified sampling is a method of sampling from a population. In a stratified sample, the sampling frame is divided into non-overlapping groups or strata, e.g., geographical areas, age groups, or genders. A random or systematic sampling technique is then applied within each stratum. This often improves the representativeness of the sample by reducing sampling error. Stratification always achieves great precision provided that the strata have been chosen so that members of the same stratum are as similar as possible with regard to the characteristic of interest. The bigger the differences are between the strata, the greater the gain is in precision. The fitness function in our Tabu Search method is designed according to this principle.

An intensity image \(I\) is treated as a population which contains \(m \, \times \, n\) gray values. We can predict the number of objects in an image by stratified sampling. The sampling method is as follows: an image \(I\) is evenly divided into \(\omega \) strata (as shown in Fig. 4 (\(\omega \) =16)). A sample is then drawn from the middle part of each stratum. The choice of selecting a sample size is discussed in Sect. 4.

3.3.2 The optimization method of samples using Tabu Search

This step is to optimize each sample separately using Tabu Search. The optimized samples can contain salient information about the threshold number and threshold values, and it will be more efficient if the parallelism among samples is developed. The steps and features of our Tabu Search method are described as follows:

-

1.

Fitness function All the samples of an image are arranged from left to right and top to bottom orientation. They are denoted as \(\omega _{1}\), \(\omega _{2}\), ..., \(\omega _{16}\). The mean and variance of \(\omega _{i}\) are \(m_{i}\) and \(s_{i;}\) respectively, where \(i=\) 1, 2,...,16. The fitness function of each sample designed according to Sect. 3.3.1, is then formulated as \(f_{i} = m_{i}/s_{i}\). Note that we prefer a larger \(f_{i}\), \(m_{i}\), and the smaller \(s_{i}\) (In the program, the variance \(s_{i}\) adds 0.0001 temporarily when estimating \(f_{i}\) so as to avoid the exception of dividing by zero raised.)

-

2.

Initialization The initial solutions are generated by Stratified Sampling. Only one solution (sample) is drawn from each stratum.

-

3.

Neighborhood An initial solution \(s\) in each stratum has eight types of moves according to the direction of movement in the corresponding stratum. These are classified as “up move ”, “top-right move”, “right move”, “bottom-right”, “down move”, “bottom-left”, “left move”, and “top-left move”. After the current solution \(s\) selects a move randomly, the new solution \(s' \)at each iteration is generated. Note that the move in every direction follows uniform distribution. More types of moves are needed to yield better solution; however, this increase causes more computational burden.

-

4.

Tabu list, Tabu tenure, aspiration criteria The Tabu list of each stratum is shown in Table 1. The status of each direction is initially set to 0 (if applicable). If \(s' \)is better than \(s\), then \(s \, \leftarrow \, s'\), or else the move is forbidden and the corresponding direction is set to 1 (if not applicable). When all kinds of moves are forbidden, the Tabu list will be reinitialized. This is the aspiration criterion and the tabu tenure strategy in our algorithm.

Table 1 The initialization of Tabu list -

5.

Stopping criteria The search stops after a fixed number of generations is performed or after a maximum number of consecutive generations with no improvement in the best solution. In our experiment, we adopt the former, and \(G\) is set to 20.

3.3.3 The forecasting method for the number of threshold and threshold values

The third step is to forecast the threshold number and threshold values based on the optimized samples. The forecasting results fall into two categories:

-

1.

\(m_{1}=m_{2}={\cdots }=m_{16}\), the threshold number is equal to 1. This is the lower limit of the threshold number in our experiments given in Sect. 5.

-

2.

\(\exists _{i,j}\), \(m_{i}\ne m_{j}\), where \(i\ne j\) or \(\exists _{u,v}\), \(s_{u}\ne s_{v}\), where \(u\ne v\), \(u\), \(v=\)1,...,16, the threshold number is greater than 1 and not greater than 16. The upper limit of the threshold number is 16.

The procedure of our forecasting method is described as follows:

-

1.

\(\omega _{1}\) is treated as a benchmark threshold value of the first category, if the mean of \(\omega _{i}\) is between \(m_{1}\times \) (1\(-\) rangeMean) and \(m_{1}\times \) (1 + rangeMean), and the variance is between \(s_{1}\times (1-{ rangeStd})\) and \(s_{1}\times (1+{ rangeStd})\), then \(\omega _{i}\) belongs to the first category. The rangeMean and rangeStd are measured in percentage, and their ranges are between 0 and 100 %. In our experiment, the gray levels of an image range over [0, 255].

-

2.

In the same way, we treat the first sample \(\omega _{j}\) that is not yet categorized so far as another benchmark threshold value; if the mean of \(\omega _{i}\) (\(i=j\),..., 16) is between \(m_{j}\times \) (1-rangeMean) and \(m_{j}\times (1+{ rangeMean})\); and the variance is between \(s_{j}\times (1-{ rangeStd})\) and \(s_{j}\times (1+{ rangeStd})\), then \(\omega _{i}\) belongs to the second category.

-

3.

All the benchmark threshold values are the forecasting threshold values, the number of which is the threshold number. Empirically, rangeMean can be set to 30 % in \(m_{i}\times \) (1 \(\pm \) rangeMean) and rangeStd can be set to 20 % in \(s_{i}\times \) (1 \(\pm \) rangeStd).

3.3.4 The deterministic optimization algorithm

The fourth step is to further optimize the threshold number and threshold values obtained from the above by implementing a deterministic method which is described in Sect. 4.

4 Algorithm theoretical analysis

4.1 Design and analysis of the deterministic optimization algorithm

Let the gray levels of an image range over [0, \(L\)] and \(h(i)\) denotes the occurrence of gray level \(i\). Let:

Assuming that there are \(M\) thresholds: {\(t_{1}, t_{2},{\ldots }, t_{M}\)}, (1\(\le M\le L-1\)), which divide the original image into \(M\) +1 classes represented as:

For multilevel thresholding, the formula based on Otsu’s (Otsu 1979) method is computed as follows:

where \(t_{0}=\) 0. The optimal thresholds \(t_{1}^*, t_{2}^*\) ,..., \(t_{M}^*\) are the gray levels that maximize (3),

i.e.,

In the Otsu’s method, we exhaustively search for the optimal threshold by maximizing inter-class variance. The k-means clustering aims to partition the \(n\) observations into \(k\) sets (\(k\, \le \, n\)) by minimizing the within-cluster sum of squares. They both adopt the Euclidean distance measure as the similarity metric and squared error metric as the criterion function. The Otsu’s method is extensively employed as the global criterion function of an image (Gao et al. 2010). Because an image has the property of local continuity, we believe that a local criterion function will be better for image thresholding. Therefore, a new local criterion function (4) is designed in the proposed algorithm.

Assuming that the set of the forecasting threshold values \(F_{C}=\) {\(t_{1}, t_{2}\) ,..., \(t_{M}\)}, \(t_{1} \le \,t _{2}\) ,..., \(\le \, t_{M}\), is arranged on a line segment (see Fig. 5). Let \(t_{r}\) denote an arbitrary forecasting threshold value and \(t_{r}^*\) be the corresponding optimal threshold value. We obtain \(t_{r}^*\) by Eq. (5).

When we obtain the first optimal threshold value \(t_{1}^{*}\), \(F_{C}\) is immediately upgraded to \(F_{C}'\) = {\(t_{1}^{*}, t_{2} ,\ldots , t_{M}\)}. Consequently, the second optimal threshold value \(t_{2}*\) is obtained according to \(F_{C}'\), until \(F_{C}'=\) {\( t_{1}^{*}, t_{2}^{*},\ldots ,t_{M}^{*} \)}, which is the set of the optimized threshold values.

Moreover, the threshold number will sometimes decrease after it is optimized: that is, when two optimized threshold values are the same or similar, the threshold number will decrease (see Fig. 6).

Assuming that \(t_{r-{1}}^{*}\) is obtained, a possible case that \(t_{r}^{*}\) will be equal to \(t_{r-{1}}^{*}\) is presented as follows:

Theorem 1

Let \(A=\frac{\sum \nolimits _{i=t_{r-2}^*}^{t_r } {i\times P_i} }{t_{r}-t_{r-2}^*+1}=\frac{\sum \nolimits _{i=t_{r-1}^*}^{t_{r+1} } {i\times P_i} }{t_{r+1} -t_{r-1}^*+1}\),\(A\le t_{r-1}^*\times P_{t_{r-1}^*} (\text{ or } A\ge t_{r-1}^*\times P_{t_{r-1}^*} )\) and \(f(i)=i\times P_{i}\) is a strictly monotone increasing (or decreasing) function in \([t_{r},t_{r+1}]\), then \(t_{r}^{*} =t_{r-{1}}^{*}\).

Proof

See Appendix \(\square \)

4.2 Analysis of the stratified sampling method

The stratified sampling technique is similar to cluster analysis, which is the assignment of a set of observations into subsets (called clusters), so that observations in the same cluster are similar. This sampling is a method of sampling from a population, so it has lower computational complexity. However, Clustering needs to analyze all the pixels, and many clustering algorithms require the specification of the number of clusters, prior to the execution of the algorithm. What important is that an image has the property of local continuity and similarity, so it seems that the stratified sampling method is much suitable for automatic multilevel thresholding.

When we open our eyes on a scene, our visual system can automatically segment it into a set of objects in the scene. In computer vision, how many samples should we draw from an image and how large should each sample be to represent the scene (image) better? We think it must be related to the content, size, and grayscale of an intensity image. Therefore, a thorough analysis is given in the following subsection.

4.2.1 Analysis on the number of samples

The segmented results of the two images are shown in Table 2. “\(a\times b\)” denotes the number of rows of the samples is \(a\) and the number of columns is \(b\). The sample size is 20 \(\times \) 20 \(=\) 400. “Forecasted” denotes the forecasted thresholds by the forecasting method above, and “Optimized” denotes the optimized thresholds by the optimization algorithm above. From Table 2, the following observations can be made: the greater the number of samples is, the more the same/similar elements will sit between the set of “Forecasted” threshold values and the set of “Optimized” threshold values, but the higher the algorithmic complexity. The experimental results of other images show the same conclusion.

4.2.2 Analysis on the sample size

The experimental results of the four images are shown in Table 3. “\(c\times d\)” denotes the sample size. From Table 3, we can conclude that the smaller the sample size is, the better its corresponding fitness is in general. The experimental results of other images show the same conclusion. But if the sample size is too small, it cannot represent the image better.

4.2.3 Analysis of time complexity

The computational time of the thresholding methods based on the Otsu’s method is very expensive when the exhaustive search is applied. For \(M\) thresholds, the complexity is \(O(L^{M})\), which grows exponentially with the threshold number. For AMTSSTS, the complexity of the Tabu Search method is \(O(G*\omega *tt)\), the complexity of the forecasting method is \(O({\omega *(\omega -1)}/2)\), the complexity of the deterministic optimization algorithm is \(O(M*L)\); where \(G\) is the generation number, \(\omega \) is the number of samples, tt is the Tabu tenure. A simple calculation shows that AMTSSTS has lower complexity and is insensitive to the threshold number, which is also shown in Table 5.

5 Experiments

5.1 Setup

We compare the performance of the proposed AMTSSTS algorithm with other algorithms. They are (1) the clustering-based approach using a hierarchical evolutionary algorithm (CHEA) (Lai and Chang 2009), (2) the quantum-behaved PSO algorithm employing the cooperative method (CQPSO) (Gao et al. 2010), and (3) the maximum entropy-based honey bee mating optimization thresholding (MEHBMOT) method (Horng 2010). CHEA can automatically determine the threshold number. For CQPSO and MEHBMOT, their threshold numbers are preset to values estimated by the proposed method.

The experiments are implemented on the Berkeley segmentation data set (Research 0000). The segmented results of nine images are shown in Fig. 9. Figures 7 and 8 display these nine original images with their corresponding histograms. In AMTSSTS, the image is 8-bit grayscale and the size is 481\(\times \)321. The sample size is 10\(\times \)10 and the number of samples is 16; that is, the upper limit of the threshold number is 16 and it is also easy to distinguish between the levels of brightness by human eyes. The other parameters of AMTSSTS are given in Sect. 3.3 and the parameters of other algorithms are set the same as described in their corresponding paper, except that the generation numbers in CQPSO and MEHBMOT are set to 100. We then conduct our experiments on a Compad notebook, with 512 MB RAM and a single core 1.6 GHz CPU, Microsoft Windows XP, and Matlab 7.2. Moreover, three aspects of the performance evaluation are taken into consideration in the experiments: (1) the comparison of segmentation results, (2) the comparison of threshold values, peak signal to noise ratio (PSNR), and computation time, and (3) the ability to conquer “the curse of dimensionality”. In our experiments, when two thresholds satisfy the condition that the absolute value of their difference is not more than 5, we will unify them. Consequently, the threshold number obtained through the experiments may be less than \(M\); i.e., the initial number of thresholds in CQPSO and MEHBMOT.

5.2 Results

5.2.1 Comparison of segmentation results

Figure 9 displays the visual interpretation of the segmentation results. The findings show that our method outperformed others in terms of segmentation quality; in particular, those images of Fig. 9a–f, h images. When the segmented images are enlarged, the following findings are observed: in Fig. 9a, our method obtained the clearer results with 7 thresholds than those of CHEA with 6 thresholds. In Fig. 9c, the segmented result of our method was absolutely clear, except that the upper of the sky was dim. Although CHEA removed the blur of the sky, the bridge and boat were blurred. CQPSO obtained even worse results than those of CHEA. MEHBMOT could clearly separate the sky and water, but the scene was a bit obscured and gloomy. In Fig. 9d, the result of CHEA was more blurred than that of our method and the writing was illegible; the result of CQPSO was also a bit vague. The results of MEHBMOT and our method were similar. In Fig. 9f, the three faces of the results of CHEA and CQPSO were vague and the profiles were not very clear. However, our method and MEHBMOT showed promising results and the segmentation result of our method was much clear and precise. In Fig. 9h, our method also produced a more continuous and smoother segmentation result than others.

5.2.2 Comparison of threshold values, PSNR, computation time

In this section, we use peak signal to noise ratio (PSNR) (Horng 2010; Arora et al. 2008) to evaluate the quality of segmented images. PSNR is defined and measured in decibel (dB), and as follows:

where RMSE is the root mean-squared error defined as:

where \(I \) and \(I'\) are the original and segmented images of size \(m\, \times \,n\), respectively. A larger value of PSNR means that the quality of the segmented image is better. Furthermore, as the threshold number increases, the PSNR tends to be larger. Table 4 shows the comparative analyses of AMTSSTS, CHEA, CQPSO, and MEHBMOT in terms of threshold values, PSNR, and computational time over different images. CHEA was the slowest among the four methods. For AMTSSTS, the computation time was insensitive to the threshold number and was faster than other algorithms. The PSNR of images ‘a1’, ‘b1’, ‘e1’, ‘f1’ and ‘h1’ (as shown in Fig. 9) was the largest among the four methods. The PSNR of image ‘i1’ in Fig. 9 was smallest and the PSNR values of the images ‘c1’, ‘d1’ and ‘g1’ were close to the best. Moreover, the visual inspection of the segmented images shows that AMTSSTS can obtain clearer and more complete segmentation images for video image compression.

Note that in Table 4, \(M\) was predetermined in CQPSO and MEHBMOT according to that estimated by AMTSSTS; \(M\) was automatically determined in CHEA and AMTSSTS.

5.2.3 Curse of dimensionality

To verify the searching ability of our proposed method on high dimensionality, we tested each image, with small values of rangeMean and rangeStd. Table 5 shows the mean computation time (seconds) for 100 runs. This is also consistent with earlier findings suggesting that our proposed method is robust under high dimensionality.

6 Conclusion and future work

This article proposed the AMTSSTS algorithm for automating the multilevel thresholding selection procedure using stratified sampling and a new local criterion function, which extends our preliminary research (Jiang et al. 2012). It has been shown in the previous sections, with the help of results obtained for nine benchmark images, that the proposed method was able to converge rapidly and to produce better segmentation results than several new and developed methods from recent years. In addition, the algorithm has the following characteristics: (a) it does not need to consider any auxiliary or extra image information such as contextual or textual properties. The threshold number does not need to be known in advance, (b) AMTSSTS avoids analyzing histograms and has a lower computing complexity that is almost independent from the threshold number, and (c) the proposed algorithm can produce effective, efficient, and smoother results. We also presented the detailed analysis from three aspects: its optimization method, sampling method and algorithmic complexity.

In our future research, we will further attempt to find a better method to forecast the threshold values and its numbers, with the help of statistical methods and the theory of computer vision. Furthermore, we will apply it to complex and real-time image analysis problem, such as automatic target recognition and medical image analysis.

References

Arora S, Acharya J, Verma A, Panigrahi PK (2008) Multilevel thresholding for image segmentation through a fast statistical recursive algorithm. Pattern Recognit Lett 29(2):119–125

Bhattacharyya S et al (2011) Multilevel image segmentation with adaptive image context based thresholding. Appl Soft Comput 11:946–962

Bosco GL (2001) A genetic algorithm for image segmentation. In: Proceedings of IEEE international conference on image analysis and processing, Palermo, pp. 262–266

Cao L, Bao P, Shi ZK (May 2008) The strongest schema learning GA and its application to multilevel thresholding. Image Vis Comput 26(5):716–724

Chander A, Chatterjee A, Siarry P (2011) A new social and momentum component adaptive PSO algorithm for image segmentation. Exp Syst Appl 38:4998–5004

Chang CY, Chung PC (2001) Medical image segmentation using a contextual-constraint-based Hopfield neural cube. Image Vis Comput 19:669–678

Chen S, Zhang D (2004) Robust image segmentation using FCM with spatial constraints based on new kernel-induced distance measure. IEEE Trans Syst Man Cybern B 34:1907–1916

Cheng HD, Lui YM (1997) Automatic bandwidth selection of fuzzy membership function. Inf Sci 103:1–21

Chuang KS, Tzeng HL, Chen S et al (2006) Fuzzy C-means clustering with spatial information for image segmentation. Comput Med Imaging Graph 30:9–15

Fan SKS, Lin Y (2007) A multi-level thresholding approach using a hybrid optimal estimation algorithm. Pattern Recognit Lett 28:662–669

Gao H, Xu W, Sun J, Tang Y (2010) Multilevel thresholding for image segmentation through an improved quantum-behaved particle swarm algorithm. IEEE Trans Instrum Meas 59(4):934–946

Glover F (1989) Tabu search-part I. ORSA J Comput 1(3):190–206

Glover F (1990) Tabu search-part II. ORSA J Comput 2(1):4–32

Glover F, Laguna M (1997) Tabu Search. Kluwer, Boston

Hammouche K, Diaf M, Siarry P (2008) A multilevel automatic thresholding method based on a genetic algorithm for a fast image segmentation. Comput Vis Image Understand 109(2):163– 175

Horng MH (2010) A multilevel image thresholding using the honey bee mating optimization. Appl Math Comput 215(9):3302–3310

Hou Z, Hu Q, Nowinski WL (2006) On minimum variance thresholding. Pattern Recognit. Lett. 27:1732–1743

http://www.eecs.berkeley.edu/Research/Projects/CS/vision/bsds/

Huang LK, Wang MJ (1995) Image thresholding by minimizing the measure of fuzziness. Pattern Recognit 28:41–51

Itti L, Koch C, Niebur E (1998) A model of saliency-based visual attention for rapid scene analysis. IEEE Trans Pattern Anal Mach Intell 20(11):1254–1259

Jiang Y, Tsai P, Hao Z, Cao L (2012) A novel auto-parameters selection process for image segmentation, WCCI 2012. IEEE World Congress on Computational Intelligence, Brisbane, Australia, 10–15 June 2012

Kittler J, Illingworth J (1986) Minimum error thresholding. Pattern Recognit 19:41–47

Lai CC, Tseng DC (2004) A hybrid approach using Gaussian smoothing and genetic algorithm for multilevel thresholding. Int J Hybrid Intell Syst 1(3):143–152

Lai CC, Chang CY (2009) A hierarchical evolutionary algorithm for automatic medical image segmentation. Exp Syst Appl 36:248– 259

Li X, Zhao Z, Cheng HD (1995) Fuzzy entropy threshold approach to breast cancer detection. Inf Sci 4:49–56

Lin Z, Wang Z, Zhang Y (2010) Optimal evolution algorithm for image thresholding[J]. J Comput-Aided Des Comput Graph 22(7):1201–1206 (In Chinese)

Li M, Staunton RC (Nov. 2007) A modified fuzzy C-means image segmentation algorithm for use with uneven illumination patterns. Pattern Recognit 40(11):3005–3011

Ma Z, Tavares JMRS, Jorge RN, Mascarenhas T (2010) A review of algorithms for medical image segmentation and their applications to the female pelvic cavity. Comput Methods Biomech Biomed Eng 13(2):235–246

Malisia AR, Tizhoosh HR (2006) Image thresholding using ant colony optimization. In: Proceedings of the 3rd Canadian conference on computer and robot vision, Waterloo, pp 26–31

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern SMC-9:62–66 (1979)

Sezgin M, Sankur B (2004) Survey over image thresholding techniques and quantitative performance evaluation. J Electron Imaging 13(1):146–165

Tan KS, Isa NAM (2011) Color image segmentation using histogram thresholding-fuzzy C-means hybrid approach. Pattern Recognit 44:1–15

Tao WB, Jin H, Liu LM (May 2008) Object segmentation using ant colony optimization algorithm and fuzzy entropy. Pattern Recognit Lett 28(7):788–796

Tsotsos JK, Culhane SM, Wai WYK, Lai YH, Davis N, Nuflo F (1995) Modelling visual attention via selective tuning. Artif Intell 78(1–2):507–545

Yang ZH, Pu ZB, Qi ZQ (2003) Relative entropy multilevel thresholding method based on genetic algorithm. In: IEEE international conference on neural networks and signal processing, Nanjing, China, pp 583–586

Ye Q, Danielsson P (1988) On minimum error thresholding and its implementations. Pattern Recognit Lett 7:201–206

Yen JC, Chang FJ, Chang S (1995) A new criterion for automatic multilevel thresholding. IEEE Trans Image Process IP-4:370–378 (1995)

Yin PY (1999) A fast scheme for optimal thresholding using genetic algorithms. Signal Process 72:85–95

Yin PY (2007) Multilevel minimum cross entropy threshold selection based on particle swarm optimization. Appl Math Comput 184:503–513

Zahara E, Fan SKS, Tsai DM (2005) Optimal multi-thresholding using a hybrid optimization approach. Pattern Recognit Lett 26:1082– 1095

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by V. Loia.

Appendix: Proofs for Sect. 4

Appendix: Proofs for Sect. 4

Proof of Theorem 1

Proof Let \(\beta =\vert t_{r-1}^*\times P_{t_{r-1}^*} -A\vert \).

According to Eqs. (6) and (7), we have,

When \(v_r^*\in [t_r,t_{r+1}]\), \(f(v_r^*)\) is a strictly monotone increasing function, hence \(\vert v_r^*\times P_{v_r^*} -A\vert >\beta \). So, we can obtain the function

which can get the minimum value when \(v_r^*=t_{r-1}^*\) in Eq. (7); i.e., \(t_r^*=t_{r-1}^*\). The similar method for the case\(A\ge t_{r-1}^*\times P_{t_{r-1}^*} \).

Rights and permissions

About this article

Cite this article

Jiang, Y., Tsai, P., Hao, Z. et al. Automatic multilevel thresholding for image segmentation using stratified sampling and Tabu Search. Soft Comput 19, 2605–2617 (2015). https://doi.org/10.1007/s00500-014-1425-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-014-1425-3