Abstract

This paper proposes a framework named multi-objective ant colony optimization based on decomposition (MoACO/D) to solve bi-objective traveling salesman problems (bTSPs). In the framework, a bTSP is first decomposed into a number of scalar optimization subproblems using Tchebycheff approach. To suit for decomposition, an ant colony is divided into many subcolonies in an overlapped manner, each of which is for one subproblem. Then each subcolony independently optimizes its corresponding subproblem using single-objective ant colony optimization algorithm and all subcolonies simultaneously work. During the iteration, each subproblem maintains an aggregated pheromone trail and an aggregated heuristic matrix. Each subcolony uses the information to solve its corresponding subproblem. After an iteration, a pheromone trail share procedure is evoked to realize the information share of those subproblems solved by common ants. Three MoACO algorithms designed by, respectively, combining MoACO/D with AS, MMAS and ACS are presented. Extensive experiments conducted on ten bTSPs with various complexities manifest that MoACO/D is both efficient and effective for solving bTSPs and the ACS version of MoACO/D outperforms three well-known MoACO algorithms on large bTSPs according to several performance measures and median attainment surfaces.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In real-world applications, there are lots of multi-objective combinatorial optimization problems, where several conflicting objectives have to be optimized simultaneously and the goal is to find a set of solutions with good trade-offs among different objectives, usually called Pareto solutions (Miettinen 1999). Inspired by the foraging behavior of real ants, Dorigo proposed a novel metaheuristic algorithm, ant colony optimization (ACO), to solve classical traveling salesman problems (TSPs) (Dorigo et al. 1996; Dorigo and Stützle 2004). Subsequently various versions of ACO were designed, such as ant colony system (ACS) (Dorigo and Gambardella 1997), Max–Min ant system (MMAS) (Stützle and Hoos 2000) and rank-based ant system (ASrank) (Bullnheimer et al. 1999). As an excellent technique for solving single-objective combinatorial optimization problems, ACO is also suitable for multi-objective combinatorial optimization problems, as it is a colony-based optimization approach which can obtain a certain number of Pareto solutions in a single run (García-Martínez et al. 2007; Angus and Woodward 2009).

In the past two decades, various versions of multi-objective ACO (MoACO) were presented (Mariano and Morales 1999; Iredi et al. 2001; T’kindt et al. 2002; Barán and Schaerer 2003; Doerner et al. 2006; López-Ibáñez and Stützle 2010a; Cardoso et al. 2011). In García-Martínez et al. (2007), a taxonomy of MoACO was discussed in terms of the number of pheromone trails and heuristic matrices. Also a comparative analysis was made using experiments carried out on bi-objective traveling salesman problems (bTSPs) and some guidelines on how to devise MoACO algorithm were given. Angus and Woodward (2009) summarized existing MoACO algorithms and provided a classification method, which is an extension of the ACO framework in Gambardella et al. (1999). In what follows, we group various MoACO algorithms into two main classes. In the first class, several objectives were dealt with in a lexicographic ordering manner, such as in MOAQ (Mariano and Morales 1999) and SACO (T’kindt et al. 2002). This class requires the prior knowledge of problems, which could hardly be obtained in real-world applications. The second class of MoACO simultaneously handles several objectives by the use of multiple pheromone trails (Iredi et al. 2001), multiple heuristic matrices (Barán and Schaerer 2003) or a problem-specific pheromone update scheme (Doerner et al. 2006). When designing multi-objective algorithms, two dilemmas are encountered, i.e., (1) a good balance among conflicting objectives is difficult to maintain in the process of iteration, which results in an uneven Pareto front; and (2) it is difficult to approach the Pareto optimal front. These predicaments can be narrowed to the problem of how to appropriately configure the algorithm. Recently, López-Ibáñez and Stützle (2010a) proposed a software framework, which allowed to instantiate the most prominent MoACO algorithms, and the authors also applied automatic algorithm configuration techniques to MoACO algorithm.

It is well known that a Pareto solution to a multi-objective optimization problem (MOP) could be an optimal solution of a scalar optimization problem whose objective is an aggregation of all objectives in the MOP (Miettinen 1999). To obtain a set of Pareto solutions, a MOP can be decomposed into a number of scalar optimization subproblems and thus single-objective problem solvers can be used without many modifications. In Zhang and Li (2007) and Peng et al. (2009), the idea was introduced into a multi-objective genetic algorithm and better results than NSGA-II (Deb et al. 2002) and MOGLS (Ishibuchi and Murata 1998; Jaszkiewicz 2002) were obtained by testing multi-objective 0–1 knapsack problems, multi-objective continuous optimization problems and/or bTSPs. To the best of our knowledge, no investigation focuses on using the decomposition idea to enhance the MoACO performance.

In this paper, a framework named multi-objective ant colony optimization based on decomposition (MoACO/D) is proposed to solve bTSPs. To use the decomposition idea to specify MoACO, a bTSP is first decomposed into a number of scalar optimization subproblems, each of which maintains an aggregated pheromone trail and an aggregated heuristic matrix. In order to adapt the decomposition, an ant colony is divided into subcolonies in an overlapped way, each of which is for one subproblem. During the iteration, each subcolony uses the aggregated pheromone trail and the aggregated heuristic matrix to solve its corresponding subproblem. After an iteration, a pheromone trail share procedure is evoked to realize the information share of those subproblems solved by common ants. It is worth noting that each subcolony independently optimizes its corresponding subproblem using single-objective ACO algorithm and all subcolonies simultaneously work in a single iteration. Based on the framework, three MoACO algorithms designed by, respectively, combining MoACO/D with AS, MMAS and ACS are presented. In the experiments, two important parameters in every MoACO/D variation are discussed in an empirical way. A large number of experiments are conducted on ten bTSPs with various complexities and experimental results show that MoACO/D is efficient and effective for solving bTSPs and the ACS version of MoACO/D obtains the best performance in eight out of ten bTSPs in terms of several measures and median attainment surfaces.

2 Problem statement

As an extension of a traditional TSP, a bTSP is to seek for paths simultaneously satisfying two conflicting objectives. As usual, a bTSP can be modeled as an undirectedly weighted graph G(L, A), where L is a set of vertices and A is a set of undirected arcs linking each pair of vertices. Thus a solution π to a bTSP is a permutation of L vertices. A bTSP can be formulated as

where f 1(π) and f 2(π) are two objective functions; c mπ (i)π (j) is the cost value of the arc (i, j) connecting the vertices i and j in the mth objective, \(m = 1,2, i,j \in \{ 1,2, \ldots ,L\}\) and i ≠ j. The cost could be related to distance, time, money or energy.

In the theory of computational complexity, a bTSP belongs to the class of NP-complete problem. Lots of engineering problems, such as multi-objective network structure design problems, multi-objective machine scheduling problems and multi-objective vehicle routing problems, can be formulated as bTSPs. Consequently, a bTSP is frequently employed as a benchmark problem to evaluate the performance of multi-objective optimization algorithms (García-Martínez et al. 2007; Peng et al. 2009; López-Ibáñez and Stützle 2010b; Jaszkiewicz and Zielniewicz 2009).

3 MoACO/D

This section starts from the decomposition approach and then turns to the detailed description of MoACO/D framework. Finally, the characteristics of MoACO/D and a contrast with existing approaches are presented. For the convenience of discussion, a bTSP is taken as an example in this section.

3.1 Decomposition

This section discusses the decomposition method, the Tchebycheff approach, which will be used in MoACO/D to convert a bTSP into a number of scalar optimization subproblems. The Tchebycheff approach can be described as follows (Miettinen 1999): Let \(\{{\varvec{\lambda}^{1}},{\varvec{\lambda}^{2}}, \ldots, {\varvec{\lambda}^{N}}\} \hbox{ and } {\varvec{z}^{*}} = (z_{1}^{*},z_{2}^{*})\) denote a set of uniform spread weighted vectors and a reference point, respectively, where \(z_{i}^{*} = \min \{ {{f_{i}}(\pi )|\pi \in \Upomega }\}, i = 1,2; \) then a bTSP can be decomposed into N scalar optimization subproblems and MoACO/D minimizes all these N subproblems simultaneously in a single run. The objective function of the kth subproblem is depicted as

where \({\varvec{\lambda}}^{k} = (\lambda_{1}^{k},\lambda_{2}^{k})\) is the weight vector of the kth subproblem, \(k=1, 2, \ldots, N; \,\pi\) is a feasible solution of a bTSP.

If \(\pi^{*}\) is a Pareto solution of a bTSP, there exists a weight vector \({\varvec{\lambda}}\) such that \(\pi^{*}\) is the optimal solution of (2). On the other hand, given a weight vector \(\varvec{\lambda}, \) the optimal solution of (2) is a Pareto solution of the bTSP. Thus with a set of evenly spread weight vectors and a good solver for (2), we can obtain Pareto solutions having good approximations and distributions.

3.2 MoACO/D

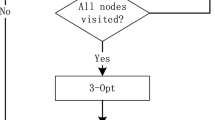

Given N evenly spread weight vectors, MoACO/D first decomposes a bTSP into N scalar optimization subproblems, and an ant colony with N ants is divided into N subcolonies according to the distance between vectors. Meanwhile, each subproblem maintains an aggregated pheromone trail and an aggregated heuristic matrix, which are initialized before the process of iteration and never aggregated again during the run. MoACO/D also uses an external unbounded archive to store non-dominated solutions. During the iteration, each subcolony independently and simultaneously optimizes its subproblem using corresponding pheromone trail and heuristic matrix. After an iteration, a pheromone trail share procedure is evoked to implement the information share of those subproblems solved by common ants, and solutions stored in the archive are updated. The pseudocode for MoACO/D is shown in Fig. 1, where each step is described as follows:

-

(1)

This step consists of three processes: the decomposition of a bTSP, the division of an ant colony and the initialization of aggregated pheromone trails and aggregated heuristic matrices. Their detailed descriptions are as follows:

-

(a)

Decompose bTSP This process uses the weight vectors to transform an original bTSP into a number of scalar subproblems problems and N aggregated distance matrices are generated, one for every subproblem. MoACO/D tackles the bTSP by solving these subproblems during the run. Given N evenly spread weight vectors \(\{{\varvec{\lambda}^{1}},{\varvec{\lambda}^{2}}, \ldots, {\varvec{\lambda}^{N}}\}, {\varvec{\lambda}^{k}} = (\lambda _{1}^{k},\lambda_{2}^{k}), \) satisfying λ k1 , λ k2 ≥ 0 and \(\lambda_{1}^{k} + \lambda_{2}^{k} = 1,\, k = 1,\ldots, N, \) a bTSP is converted into N scalar optimization subproblems using Tchebycheff approach. The objective function of each subproblem is formulated in (2). However, as usual, the process of finding the exact reference point \({\varvec{z}^{*}}\) in (2) is very time consuming; hence, we use \({\varvec{z}}\) for substitution at the initial step, i.e., \({\varvec{z}} = [{f_{1}}(\pi ), {f_{2}}(\pi)],\, t = 1, \) where [f 1(π), f 2(π)] is the objective vector produced by a random solution π. Then along with the algorithm running, \({\varvec{z}}\) will be updated.

-

(b)

Divide ant colony To adapt the decomposition, a colony with N ants is divided into N subcolonies in an overlapped manner, which is similar to the proposal of Iredi et al. (2001). To be specific, the kth subcolony for the kth subproblem is denoted as \(S(k), S(k) = \{ {i}_{1}, {{i}_{2}},\ldots, {i}_{T}\}\) and 1 < T < N, where \({i_1}, {i_2}, \ldots, {i_T}\) are the indexes of the closest T weight vectors to \({\varvec{\lambda}}^{k}.\) Here, the Euclidean distance is used to measure the closeness between any two weight vectors; thus, the closest weight vector to \({\varvec{\lambda}}^{k}\) is itself, i.e., i 1 = k. Then apply \(A(h), A(h) = \mathop {\cup}\limits_{k =1, 2, \ldots, N} \{ k|h \in S(k)\} , \) to represent a set of the indexes of the subproblems solved by the hth ant. The allocation leads to that ants belong to multiple subcolonies at the same time. Hence, each ant will solve at least one subproblem and construct more than one solution per iteration, that is, \(| {A(h)}|\geq 1. \) Besides, a relationship among subproblems is built by the use of A(h), which will help to implement information share among subproblems during the run, as stated in the next step.

To clearly illustrate the division procedure, Fig. 2 shows an example with ten ants and five weight vectors in the neighborhood of each weight vector, i.e., N = 10 and T = 5. Therefore, in every iteration 50 solutions are constructed. In this figure, the ten ants are labeled as 1, 2, …, 10, denoted by heavy points, and the ten straight lines represent ten subproblems. The distances between each pair of adjacent ants are 2, 2, 3, 1, 1, 2, 2, 1, 3, respectively. A straight line under several ants implies the subproblems solved by these ants. In this division, \(S(1) = S(2)= S(3) = \{1, 2, 3, 4, 5\}, S(4) = S(5) = \{3, 4, 5, 6,7\}, S(6) = \{4, 5, 6, 7, 8\}, S(7) = \{5, 6, 7, 8, 9\}, S(8) = S(9) = S(10) = \{6, 7, 8, 9, 10\}, A(1) = A(2) = \{ 1,2,3\}, A(3) = \{ 1, 2, 3, 4, 5\}, A(4) = \{1, 2, 3, 4, 5, 6\}, A(5) = \{1, 2, 3, 4, 5, 6, 7\},A(6) = A(7) = \{4, 5, 6, 7, 8, 9, 10\}, A(8) = \{6, 7,8, 9, 10\}, A(9) = \{7, 8, 9, 10\}, A(10) = \{8, 9,10\}.\)

-

(c)

Initialize pheromone trails and heuristic matrices In MoACO/D, each subproblem maintains an aggregated pheromone trail and an aggregated heuristic matrix, which are initialized using weight vector \({\varvec{\lambda}}\) before iteration and will never be aggregated again during the run. For the kth subproblem, the pheromone trail \({\mathbf{T}}^{k} = {[\tau_{ij}^{k}]_{L \times L}}\) is initialized as

$$ \tau_{ij}^{k} = \tau_{0}^{k}, $$(3)where τ k0 is the initial pheromone trail whose value depends on the single-objective ACO optimizer used in MoACO/D. The heuristic matrix \({\mathbf{I}}^{k} = {[\eta_{ij}^{k}]_{L \times L}}\) is calculated by weighted cost values as

$$ \eta_{ij}^{k} = 1\left/\sum\limits_{m = 1}^{2} {\lambda_{m}^{k}c_{ij}^{m}}, \right. $$(4)where c m ij is the cost value connecting the vertices i and j for the mth objective.

-

(a)

-

(2)

Five processes are involved in this step: tour construction, pheromone trails update, reference point update, pheromone trail share and external archive update. Each process is further described as follows:

-

(a)

Construct tours For every subcolony, each ant employs an ACO optimizer to construct a tour for corresponding subproblem using aggregated pheromone trail and heuristic matrix.

-

(b)

Update pheromone trails After all ants have constructed their tours for corresponding subproblems, the pheromone trails are updated. This step depends on the ACO optimizer used in MoACO/D.

-

(c)

Update reference point After all ants in S(k) finish their construction of tours, each component z m in reference point \({\user2{z}}\) is updated with the minimum of the objective value in \(\{ {{f_m}({\pi^h})|h \in S(k)}\},\, m=1, 2, \) that is

$$ {z}_{m} = \mathop{\min}\limits_{h \in S(k)} \{{f_{m}}({\pi^{h}})\}. $$(5)Through update, the reference point \({\user2{z}}\) will approach the point \({\user2{z}}^{*}.\) Therefore, according to the idea of Tchebycheff decomposition, the solution of (2) will more likely be a Pareto solution of (1).

-

(d)

Share pheromone trails Pheromone trail share is realized by the use of best ant b k and the indexes set A(b k ). First, the best ant b k in S(k) is the one that has a tour with the minimal fitness, i.e.,

$${b}_{k} = \arg \mathop{\min}\limits_{h \in S(k)} \{ {g}_{k}({\pi^{h}}|{\varvec{\lambda}}^{k},{\user2{z}}) \}. $$(6)Then the tour \(\pi^{b_{k}}\) traveled by the ant b k is applied to update the pheromone trails \({\mathbf{T}}^{{p_1}}, {\mathbf{T}}^{p_{2}}, \ldots ,{\mathbf{T}}^{p_{|A({b_{k}})|}}\) of all the subproblems \(p_{1}, p_{2}, \ldots ,p_{| A({b_{k}})|} ({p_{r}} \in A({b_{k}}), r = 1, 2, \ldots ,| {A({b_{k}})}|)\) solved by the ant b k . To be specific, for the subproblem \({p_{r}}, {p_{r}} \in A({b_{k}}), \) the pheromone levels \(\tau_{ij}^{p_r}\) of all the arcs (i, j) belonging to the tour \(\pi^{b_{k}}\) is updated by

$$ \tau_{ij}^{p_{r}} = \tau_{ij}^{p_{r}} + \Updelta \tau^{p_{r}}, $$(7)where \(\Updelta \tau^{p_{r}}\) is the pheromone quantity deposited on the tour \(\pi^{b_{k}}\) for the subproblem p r and is calculated by

$$\Updelta \tau^{p_{r}} = 1\left/\sum\limits_{m = 1}^{2} \lambda_{m}^{p_{r}}f_{m}(\pi^{b_{k}}).\right. $$(8)The solution \(\pi^{b_k}\) is used to update the pheromone trails of more than one colony per iteration, i.e., the pheromone trails \({\mathbf{T}}^{p_{r}}\) of the subproblem \(p_{r}, p_{r} \in A(b_{k}),\, r = 1, 2, \ldots ,| {A({b_{k}})} |. \) The aim is to implement information share among subproblems solved by common ants, which will guide the search directions of the ants for other subproblems in the subsequent iterations.

-

(e)

Update archive An external unbounded archive is used to collect Pareto solutions found during the run. To be specific, for the hth objective vector \(F(\pi^{h}), h \in S(k), \) all the objective vectors dominated by F(πh) are removed from the archive, and if no objective vector in the archive dominates F(πh), F(πh) is added into the archive. When the algorithm stops, we obtain the final approximate Pareto solutions in the archive.

-

(a)

MoACO/D introduces the division strategy of an ant colony to specify the decomposition approach of a bTSP and uses a single-objective ACO optimizer to solve each subproblem. Thus, we can obtain a good Pareto front with a good approximation and an even distribution.

3.3 Characteristics

MoACO/D possesses some characteristics, i.e., (1) weights are assigned to subproblems rather than ants; (2) an ant belongs to multiple subcolonies at the same time and hence constructs more than one solution per iteration; and (3) the same solution \(\pi^{b_{k}}\) is further used for updating the pheromone trails of more than one subproblems to implement information share among subproblems. In the light of the taxonomy proposed by López-Ibáñez and Stützle (2010), MoACO/D belongs to the category that uses one pheromone trail and one heuristic matrix per colony, weighted sum aggregation and many weight vectors.

The first distinction between MoACO/D and the existing approaches is the way that handles multiple objectives during the run. In MoACO/D, the original MOP is transformed into a number of scalar optimization problems, whereas most existing approaches use a lexicographic ordering or cooperative search manner and the non-dominated sorting to handle the multiple objectives.

The other distinction is the pheromone trail update, including two aspects: which solution is utilized to update the pheromone trails and which pheromone trails are updated. Except for the general pheromone trails update as in single-objective ACO algorithms, MoACO/D employs a pheromone trail share procedure, which is also an pheromone trail update procedure. In MoACO/D, for each subproblem the optimal solution searched by an ant is used to update the pheromone trails of all the subproblems solved by the ant, which implements the information share among the subproblems, and hence guides the search direction in subsequent iterations, whereas in Iredi et al. (2001), two methods called update by origin and update by region are used. The former relates to that an ant only updates the pheromone trails in its own colony, and the latter is that after splitting the non-dominated front found so far into many parts with equal size, the ants found solutions in the ith part only update the pheromone trail of subcolony i. In López-Ibáñez et al. (2004), another pheromone update strategy is introduced. The non-dominated solutions are first distributed among colonies and then only the best solutions with respect to each objective are allowed to deposit pheromone in the respective pheromone trail of each colony.

4 MoACO/D variants

In this section, we present three MoACO algorithms by respectively combining MoACO/D with ant systems (AS) (Dorigo et al. 1996), Max–Min ant system (MMAS) (Stützle and Hoos 2000) and ant colony system (ACS) (Dorigo and Gambardella 1997). The three procedures in MoACO/D, pheromone trail initialization, tour construction and pheromone update, are specified. For simplicity, we name the three algorithms as MoACO/D-AS, MoACO/D-MMAS and MoACO/D-ACS, respectively.

4.1 MoACO/D-AS

AS is the first ACO algorithm developed by Dorigo et al. (1996). In AS, a good choice is to set the initial pheromone trails to N/C, where N is the number of ants and C is the length of a feasible tour. To make AS suitable for MoACO/D, for the kth subproblem the initial pheromone trail τ k0 in (3) is assigned as

where π is a feasible tour of bTSP. Then for kth subproblem, each ant \(h, h \in S(k),\) chooses a random vertex as its starting point, and it passes through the rest L − 1 vertices to form a whole tour. At each pace, the ant chooses the next unvisited vertex j when it stays at the vertex i following the probability

where α and β are two parameters which determine the relative influence of the pheromone trail and the heuristic information, and \(\Upomega (i)\) is the set of vertices that the ant h has not visited yet. After all the ants have constructed their tours, the pheromone trail associated with every edge is evaporated by reducing all pheromones by a constant scale as

where \(\rho \in (0,1]\) is the evaporation rate. Subsequently, all ants in S(k) deposit pheromone on the arcs they have crossed in their tour as

where \(\Updelta \tau_{ij}^{h}\) is the amount of pheromone that the ant h deposits on the arc (i, j), calculated as

4.2 MoACO/D-MMAS

As compared with AS, MMAS introduces several modifications: pheromone trail limits, pheromone initialization and pheromone update. In MMAS, the recommended pheromone trail limits are \(\tau_{\min } = \tau_{\max }(1 - \root{L}\of{{0.05}})/((L/2 - 1)\root{L}\of{{0.05}}) \hbox{ and }\tau_{\max} = 1/\rho C, \) where C is the length of the optimal tour. The initial pheromone trail τ0 is set to the upper pheromone trail τmax. In MoACO/D-MMAS, we modify these characteristics to suit for bTSPs. For the kth subproblem, the upper pheromone trail τ kmax is initialized as

and once a new best tour is found, which is measured by the corresponding scalar objective function of kth subproblem, the value of τ kmax is updated. After all ants in S(k) finish their tour construction, pheromone trails are updated by applying evaporation as in (3), followed by the deposit of new pheromone as

where \(\Updelta {\tau^{{b_k}}} = 1/\sum\nolimits_{m = 1}^{2} {\lambda_m^{k}{f_{m}}(\pi^{b_{k}})} \) and b k is the best ant determined by (6).

4.3 MoACO/D-ACS

ACS is another successor of AS and it introduces three major modifications, including modifying the transition rule, introducing local pheromone update and global pheromone update. In ACS, a recommended value for initializing the pheromone trails is set to 1/LC, where C is the length of a feasible tour. To adapt this initialization to bTSP, for the kth subproblem, we initialize τ k0 in (3) as

where π is a feasible tour of bTSP. It is worth noting that τ k0 will be reinitialized after all ants in S(k) have constructed their tours as done in MACS (Barán and Schaerer 2003). Then for kth subproblem, every ant in S(k) chooses a random vertex as its starting point and chooses the next unvisited vertex j when it stays at the vertex i using a pseudorandom proportional transition rule as

where q is a random number ranged in [0, 1]; q 0 is a constant between 0 and 1; \({\hat{i}}\) is a random variable selected by the probability in (10). Once an ant crosses the arc (i, j) the local pheromone update is performed using the formula

where ξ is a preset constant varying in [0, 1]. After all ants have constructed their tours, the best ant is allowed to add pheromone in the tour \(\pi^{b_{k}}. \) In MoACO/D-ACS, this update procedure is implemented by (15). Additionally, the initial pheromone trail τ k0 is reinitialized by applying the weighted average objective function values, i.e.,

where \({\hat{f}}_{m}^{k}\) is the average of the mth objective value \(f(\pi^{h}), h \in S(k), m = 1, 2, \) that is,

5 Experimental results and analysis

In this section, we start from the construction of bTSP instances using benchmark TSPs and then turn to make appropriate configurations for three MoACO/D variants in an empirical way. Subsequently, extensive experiments are conducted on bTSPs. Experimental results compared with three existing MoACO approaches verify the efficiency and effectiveness of the presented MoACO/D framework.

5.1 bTSP instances

In this section, 8 bTSPs, called KroAB50, KroCD50, KroAB100, KroAD100, KroBC100, KroCD100, KroAB150 and KroAB200, having 50, 50, 100, 100, 100, 100, 150 and 200 cities, respectively, are constructed using pairs of benchmark TSPs and the same way as in García-Martínez et al. (2007). The benchmark TSPs, labeled KroA100, KroB100, KroC100, KroD100, kroA150, kroB150, KroA200 and KroB200, are available on the website http://www.iwr.uni-heidelberg.de/groups/comopt/software/TSPLIB95/tsp/. To be specific, KroAB100, KroAD100, KroBC100, KroCD100, KroAB150 and KroAB200 are constructed using KroA100 and KroB100, KroA100 and KroD100, KroB100 and KroC100, KroC100 and KroD100, KroA150 and KroB150, KroA200 and KroB200, respectively. KroAB50 and KroCD50 are constructed using the first 50 cities of KroA100 and KroB100, and the first 50 cities of KroC100 and KroD100, respectively. All the benchmark TSPs used are completely independent; hence, the objectives of these bTSP instances are also independent and non-correlated. Furthermore, in order to show the scalability of the algorithm (how does the growth of the number of cities deteriorate the algorithm performance?), two extra instances with 300 cities, EucildAB300 and EucildCD300 downloaded from the website http://eden.dei.uc.pt/paquete/tsp/, are employed to construct the test bed. Due to difficulties and complexities of these bTSP instances, they are challenging enough to evaluate various multi-objective optimization algorithms (García-Martínez et al. 2007; Jaszkiewicz and Zielniewicz 2009; López-Ibáñez and Stützle 2010b).

5.2 Parameter setting

In MoACO/D, except for general parameters in ACO, another two important parameters are involved, the number N of subproblems or subcolonies or ants in colony and the number T of ants in each subcolony. To obtain appropriate configurations of three MoACO/D variants for further experiments, we use KroAB50 as an example to investigate the effects of these parameters on the performance of MoACO/D-AS, MoACO/D-MMAS and MoACO/D-ACS in an empirical way. In our experiments, the values 5, 10, 15, 20, 25, 30 for N and the values 2, 5, 10 for T are considered. Because of the condition 2 < T < N must be satisfied, there are 15 combinations of N and T. The other parameters are set to the recommended values in single-objective ACO algorithms (Dorigo and Stützle 2004): α = 1, β = 2 for all MoACO/D variants, ρ = 0.5, 0.02 and 0.1 for MoACO/D-AS, MoACO/D-MMAS and MoACO/D-ACS, respectively, q 0 = 0.9 and ξ = 0.1 for MoACO/D-ACS. The weighted vectors are generated using a statistical method, that is, \({\varvec{\lambda}}^{k} = (\lambda^{k},1 - \lambda^{k}),\, k = 1, 2, \ldots, N \hbox{ and } {\lambda^{k}}\) is a random variable following a uniform distribution in the range of (0, 1). The maximal number 2e4 of function evaluations (FEs) is used as the termination condition of all tests and each case is performed for 15 runs independently. All the algorithms in this contribution are implemented on the platform MATLAB 7.6a and all the tests are conducted on PC with 2.4 GHz CPU, 2 GB RAM and Windows XP OS.

Since the quantitative performance evaluation of a multi-objective optimizer is also an MOP (Zitzler et al. 2003), no single metric is able to reflect the performance of a multi-objective algorithm at every aspect. In this paper, several metrics are used: cardinality \(|{{\mathcal{P}}|}\) of the approximation set \({\mathcal{P}}, S\) metric, R1 R metric, R3 R metric and \(\epsilon\) indicator (including \(I_\epsilon ({\mathcal{P}}_{0},{\mathcal{P}})\) and \(I_\epsilon ({\mathcal{P}}, {\mathcal P}_{0})\)) (see Appendix). The S metric allows us to measure the diversity of approximation \({{\mathcal{P}}}\) and the proximity of \({\mathcal{P}}\) to the reference set \({{{\mathcal{P}}_{0}}. }\) The R1 R and R3 R metrics measure the quality of \({\mathcal{P}}\) based on the reference set \({\mathcal{P}}_0.\) The other two indicators, \(I_\epsilon ({\mathcal{P}}_0,{\mathcal{P}})\) and \(I_\epsilon ({\mathcal{P}}, {\mathcal{P}}_{0})\) measure the extent of superiority of reference set \({{{\mathcal {P}}_{0}}}\) over \({\mathcal{P}}.\) According to the definitions of these metrics, the best values of \(S( \le 1), R{1_{R}}( \ge 0.5), R3_{R}( \le 0), I_\epsilon ({\mathcal{P}}_{0},{\mathcal{P}})(\le 1) \hbox{ and } I_\epsilon({\mathcal{P}},{\mathcal{P}}_{0})( \ge 1)\) are 1, 0.5, 0, 1 and 1, respectively.

In the experiments, for every MoACO/D variant, the reference set \({\mathcal{P}}_0\) is generated by the union of all solutions obtained from all runs and all cases except for the dominated ones. Tables 1, 2 and 3 provide the mean results of the metrics when T and N cover all combinations for MoACO/D-AS, MoACO/D-MMAS and MoACO/D-ACS, respectively. According to Tables 1 and 3, T and N could be assigned as 5 and 30 for both MoACO/D-AS and MoACO/D-ACS because they get the best results in 4 out of 6 metrics. Moreover, when T is fixed and as the number of N increases, the values of S and \(|{\mathcal{P}}|\) increase. Also R3 R and \(I_\epsilon({\mathcal {P}}, {\mathcal{P}}_0)\) obtain better results when N is a larger value. Table 2 manifests that T = 2 and N = 20 is an appropriate configuration for MoACO/D-MMAS since it obtains better overall results compared with other combinations. In the next section, these configurations are utilized to make comparisons with other three MoACO algorithms.

5.3 Comparisons with three MoACO approaches

The survey paper García-Martínez et al. (2007) made a convincingly comparative analysis of eight MoACO algorithms reported in literature and two well-known evolutionay multi-objective optimization algorithms, NSGA-II (Deb et al. 2002) and SPEA2 (Zitzler et al. 2001), by using six bTSPs. The experimental results show that the three algorithms, MACS (Barán and Schaerer 2003), BIANT (Iredi et al. 2001; García-Martínez et al. 2007) and UNBI (Iredi et al. 2001; García-Martínez et al. 2007), outperform the other seven approaches. Therefore, we consider the three algorithms, MACS, BIANT and UNBI, as benchmark algorithms in the following experiments. To show the main characteristics of the three algorithms, we give a very brief review of them as follows:

-

MACS (Barán and Schaerer 2003) is a variation of MACS-VRPTW (Gambardella et al. 1999) proposed by Gambardella et al. Contrary to its predecessor, MACS uses a single pheromone trail and several heuristic matrices. During the local pheromone update, the amount of pheromone deposited on the tour is not fixed, but will be reinitialized at the end of each iteration using the average values of Pareto solutions. The global pheromone update is performed using every solution of the current approximation set after an iteration.

-

BIANT (Iredi et al. 2001; García-Martínez et al. 2007) is characterized by multiple pheromone trails and heuristic matrices aggregated by weighted product. As it was originally designed for a bi-criteria vehicle routing problem, García-Martínez et al. modified it to suit for bTSP, i.e., each ant which generated non-dominated solutions at the current iteration is used to update pheromone trail, and the amount of pheromone deposited on the trip is the inverse of the cost of its solution according to the objective function.

-

UNBI (Iredi et al. 2001; García-Martínez et al. 2007) is a modified version of BIANT, which divides an ant colony into Nc subcolonies and each objective in a subcolony has a pheromone trail. When pheromone trails are updated, each ant only contributes to those pheromone trails associated with the subcolony that it belongs to.

To compare the performance of these six algorithms, ten bTSPs with various cites discussed in Sect. 5.1 are considered. To make a fair comparison, we tuned the number N of ants of MACS, BIANT and UNBI and the number Nc of colony of UNBI in the same way as done in previous discussion of MoACO/D variants. Then we chose the best configurations for them to carry out experiments. All parameter values for the algorithms are presented in Table 4. We independently execute each algorithm on each bTSP instance for 15 runs; thus for every bTSP, 15 approximation sets can be obtained for each algorithm.

The presentation of all approximation sets of each algorithm in a single graph is usually confusing and misleading. It is difficult to separate out individual approximation sets and to clearly understand the distribution of the location and extent of the different approximation sets over multiple runs (Knowles 2005). Since an attainment surface can emphasize the distribution and indicate the quality of the individual point, and the visualization of attainment surface allows us to characterize and/or summarize the behavior of a single algorithm in many runs in a graphical manner, we use the attainment surface to visualize the outcomes of the experimental results.

Attainment surface, originally proposed in Fonseca and Fleming (1996), relates to a boundary which separates the objective space into two parts: the objective vectors that are attained by the outcomes returned by an optimizer and those that are not. The notion of attainment surface is formalized in the concept of %-attainment surface, which is the line separating the objective space attained by % of the runs of an algorithm (López-Ibáñez et al. 2010). In this contribution, the median attainment surface, which delimits the region attained by 50% of the runs, is considered to present the average behavior of MoACO/D as well as three benchmark algorithms. We use the software programmed by Knowles (2005), which can be downloaded from the website http://dbkgroup.org/knowles/plot_attainments/, to implement the function, and the resolution 100 is considered in the paper.

The experimental results obtained by the six algorithms are shown in Fig. 3, where each curve provides an estimation of the median attainment surface obtained from 15 approximation sets for a specific algorithm and a bTSP and it implies the location, dispersion, and even skewness of the distribution of solutions in each region of the trade-off surface. From the median attainment surfaces in every plot, three MoACO/D variants would appear to produce good results more often than MACS, BIANT and UNBI on large bTSPs. Specifically, three MoACO/D variants obtain similar median attainment surfaces on all cases and obtain better median attainment surfaces in the center region of the trade-off surface compared with MACS, BIANT and UNBI, while they obtain slightly worse median attainment surfaces in the upper-left and lower-right regions of the trade-off surface than MACS and UNBI. BIANT and UNBI perform similarly. Whereas, along with the increase of the number of cities, the results obtained by BIANT and UNBI are deteriorated severely in the central regions of the trade-off surface, especially in the case of EuclidAB300 and EuclidCD300. As for MACS, the median attainment surfaces are not as good as other algorithms in the center region of the trade-off surface on all bTSPs. However, MACS possesses best spreads and its performance does not degenerate so badly as that of BIANT and UNBI when large-scale bTSPs are considered. According to the plots, MoACO/D variants seem to best approximate to real Pareto front in most regions and MACS seems to generate approximation sets with best spreads. Moreover, we can infer from the plots that MoACO/D-ACS perform best in the three MoACO/D variants.

To quantitatively compare the performance of six algorithms, six metrics used in the section of parameter setting and the average computation time are considered. For each bTSP the reference set \({\mathcal{P}}_{0}\) is generated by the union of all solutions obtained from 6 algorithm and 15 runs, except for the dominated ones. Tables 5, 6, 7, 8, 9, 10, 11, 12, 13 and 14 summarize the statistics of these metrics on ten bTSPs. Through analyzing the statistical results, we can draw some conclusions:

-

Except for KroAB50 and KroCD50, the numbers of non-dominated solutions obtained by MoACO/D-MMAS and MoACO/D-ACS are larger than other approaches, especially much larger than MACS. In the cases of KroAB50 and KroCD50, UNBI gets more non-dominated solutions than other algorithms and BIANT gets more solutions than UNBI on KroAB150, KroAB200, EuclidAB300 and EuclidCD300. As the cardinality of the approximation set could not reflect the quality of solutions, we cannot say that MoACO/D variants outperform others at this moment, and vice versa.

-

Except for KroAB50 and KroCD50, MoACO/D-ACS obtains the best mean S values which affirms the approximation set obtained by MoACO/D-ACS has best diversity and best approximates to the pseudo Pareto set on large bTSPs. MoACO/D-MMAS is the second best approach on KroAB150, KroAB200, EuclidAB300 and EuclidCD300 while MoACO/D-AS performs better than MACS, BIANT and UNBI on EuclidAB300 and Euclid-CD300. Moreover, according to S values UNBI is the best approach on KroAB50 and KroCD50 and MACS is the worst one on all bTSPs.

-

For all bTSPs, MoACO/D-ACS obtains the best mean values for metrics R1 R and R3 R , that is, the values of R1 R and R3 R best approach to 0.5 and 0, respectively, among the six algorithms. The results indicate that MoACO/D-ACS has the largest probability to produce the approximation set well approximating to the pseudo Pareto set among six algorithms. In addition, according to the statistical values, MoACO/D-AS and MoACO/D-MMAS outperforms MACS, BIANT and UNBI on KroAB150, KroAB200, EuclidAB300 and EuclidCD300 and the performance of MACS is poorer than other algorithms referring to R1 R and R3 R metrics.

-

For all bTSPs, MoACO/D-ACS obtains the best mean values in terms of \(I_\epsilon({\mathcal{P}}_{0}, {\mathcal{P}})\) and obtains slight worse values than BIANT or UNBI with respect to \(I_\epsilon({\mathcal{P}}, {\mathcal{P}}_{0}),\) which implies that the extent of superiority of reference set \({\mathcal{P}}_{0}\) over sets generated by MoACO/D-ACS is less than other algorithms to some extent. As for MoACO/D-AS and MoACO/D-MMAS, medium values are obtained. We can also learn from the results that MACS gets the worst values, which implies that reference set \({\mathcal{P}}_{0}\) dominates the sets returned by MACS to a large extent.

-

Referring to average computation time, three MoACO/D variants cost much less time than other algorithms, especially on larger bTSPs, which reflects the efficiency of MoACO/D framework. The reason is that calculating the probability of next vertex to be visited is much simpler in MoACO/D because it only uses one pheromone trail and one heuristic matrix, whereas in MACS, BIANT and UNBI multiple pheromone trails and multiple heuristic matrices are used, which results in a higher computation time. However, MoACO/D simultaneously maintains many pheromone trails and heuristic matrices; hence more memory is required.

To make a comparison for any pair of algorithms, C metric (see Appendix) is employed. The metric evaluates the performance of two algorithms by mapping from the ordered pair sets into a number in the interval [0, 1]. The box plots based on the C metric are shown in Fig. 4, where each rectangle contains ten box plots representing the distribution of the C values for a certain ordered pair of algorithms. From left to right, ten box plots relate to KroAB50, KroCD50, KroAB100, KroAD100, KroBC100, KroCD100, KroAB150, KroAB200, EuclidAB300 and EucidCD300, respectively. In each rectangle, the bottom, middle and top dash lines refer to scales 0, 0.5 and 1, respectively. Each rectangle, representing algorithms \({\mathcal{P}}_{1}\) and \({\mathcal{P}}_{2}\) associated with the corresponding row and column, gives the fraction of \({\mathcal{P}}_{2}\) dominated by \({\mathcal{P}}_1, \) that is \(C({\mathcal{P}}_{1}, {\mathcal{P}}_{2}). \)

The box plots clearly show that for all bTSPs MoACO/D-ACS dominates more than half of the outcomes returned by MACS, BIANT and UNBI, while MACS has the worst performance among six algorithms with respect to C metric. The plots also illustrate that MoACO/D-AS and MoACO/D-MMAS perform better than BIANT on average over eight out of ten bTSPs, and MoACO/D-AS and MoACO/D-MMAS are superior to UNBI on large bTSPs. Moreover, the plots indicate that MoACO/D-ACS performs best among the three MoACO/D variants, followed by MoACO/D-MMAS.

Through extensive experiments and thorough analysis using many performance metrics as well as visualization of the median attainment surfaces, we can easily draw conclusions that MoACO/D framework is efficient and effective for bTSPs, especially on large cases, and the approach combining MoACO/D framework with ACS performs best against two other MoACO/D variants and three benchmark algorithms. The advantage of MoACO/D comes from the unique way that handles the MOP, i.e., MoACO/D transforms an original MOP into many scalar optimization subproblems using Tchebycheff approach and all subproblems are treated equally using single-objective ACO algorithm. Moreover, the pheromone trail share is designed to implement information share among subproblems, which also contributes to the performance of MoACO/D variants.

6 Conclusions

ACO is one of the most powerful population-based search approaches in solving a TSP, a well-known NP-hard combinatorial optimization problem. In the community of multiple objective optimization, how to obtain a Pareto front with good approximation and even distribution is always an ongoing issue. This paper proposes a framework, MoACO/D, for solving bTSPs based on Tchebycheff decomposition. In MoACO/D, a bTSP is decomposed into a number of scalar optimization subproblems and an ant colony is divided into a certain number of subcolonies with overlapping parts to suit for the decomposition. Three MoACO algorithms designed by, respectively, combining MoACO/D with AS, MMAS and ACS are presented. Extensive experiments performed on ten bTSPs with different complexities show that the MoACO/D framework is efficient and effective for solving bTSPs. Among the three MoACO/D variants, MoACO/D-ACS obtains better performance than three well-known MoACO algorithms and the other two variants are competitive to the benchmark MoACO algorithms on larger bTSPs in terms of several performance measures and median attainment surface. Our further work will focus on improving MoACO/D framework performance by introducing an appropriate local search technique and applying it to other multi-objective combinatorial problems.

References

Angus D, Woodward C (2009) Multiple objective ant colony optimisation. Swarm Intell 3(1):69–85

Barán B, Schaerer M (2003) A multiobjective ant colony system for vehicle routing problem with time windows. In: Proceedings of the twenty first IASTED international conference on applied informatics, pp 97–102

Bullnheimer B, Hartl R, Strauss C (1999) An improved ant system algorithm for the vehicle routing problem. Ann Oper Res 89:319–328

Cardoso P, Jesus M, Márquez A (2011) ε-DANTE: an ant colony oriented depth search procedure. Soft Comput 15:149–182

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Doerner K, Gutjahr W, Hartl R, Strauss C, Stummer C (2006) Pareto ant colony optimization with ILP preprocessing in multiobjective project portfolio selection. Eur J Oper Res 171(3):830–841

Dorigo M, Gambardella L (1997) Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput 1(1):53–66

Dorigo M, Stützle T (2004) Ant colony optimization. MIT Press, Cambridge

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Tran Syst Man Cybern Part B 26(1):29–41

Fonseca C, Fleming P (1996) On the performance assessment and comparison of stochastic multiobjective optimizers. In: Vogit H-M, Ebeling W, Rechenberg I, Schwefel HS (eds) Proceedings of PPSN-IV, fourth international conference on parallel problem solving from nature. Lecture notes in computer science, vol 1141. Springer, Berlin, pp 584–593

Gambardella L, Taillard E, Agazzi G (1999) MACS-VRPTW: A multiple ant colony system for vehicle routing problems with time windows. In: Corne D, Dorigo M, Glover F (eds) New ideas in optimization. McGraw-Hill, pp 63–76

García-Martínez C, Cordón O, Herrera F (2007) A taxonomy and an empirical analysis of multiple objective ant colony optimization algorithms for the bi-criteria TSP. Eur J Oper Res 180(1):116–148

Hansen M (1998) Metaheuristics for multiple objective combinatorial optimization. PhD thesis

Hansen M, Jaszkiewicz A (1998) Evaluating the quality of approximations to the non-dominated set. Tech. rep. Technical report IMM-REP-1998-7, Technical University of Denmark

Iredi S, Merkle D, Middendorf M (2001) Bi-criterion optimization with multi colony ant algorithms. In: Zitzler E, Deb K, Thiele L, Coello C, Corne D (eds) First International Conference on evolutionary multi-criterion optimization. Lecture notes in computer science, vol 1993. Springer, Berlin, pp 359–372

Ishibuchi H, Murata T (1998) A multi-objective genetic local search algorithm and its application to flowshop scheduling. IEEE Trans Syst Man Cybern Part C Appl Rev 28(3):392–403

Jaszkiewicz A (2002) On the performance of multiple-objective genetic local search on the 0/1 knapsack problem—a comparative experiment. IEEE Trans Evol Comput 6(4):402–412

Jaszkiewicz A, Zielniewicz P (2009) Pareto memetic algorithm with path relinking for bi-objective traveling salesperson problem. Eur J Oper Res 193:885–890

Knowles J (2005) A summary-attainment-surface plotting method for visualizing the performance of stochastic multiobjective optimizers. In: Proceedings of the 5th international conference on intelligent systems design and applications. IEEE, Washington, DC, USA, pp 552–557

López-Ibáñez M, Stützle T (2010a) Automatic configuration of multi-objective ant colony optimization algorithms. In: Dorigo M, Birattari M, Di Caro G, Doursat R, Engelbrecht A, Floreano D, Gambardella L, Grob R, Sahin E, Stützle T, Sayama H (eds) ANTS 2010. Lecture notes in computer science, vol 6234, Springer, Berlin, pp 95–106

López-Ibáñez M, Stützle T (2010b) The impact of design choices of multiobjective ant colony optimization algorithms on performance: an experimental study on the biobjective TSP. In: Proceedings of the 12th annual conference on genetic and evolutionary computation, ACM, Portland, Oregon, USA, pp 71–78

López-Ibáñez M, Paquete L, Stützle T (2004) On the design of ACO for the biobjective quadratic assignment problem. In: Dorigo M (ed) Proceedings of ANTS. Lecture notes in computer science, vol 3172, Springer, Heidelberg, pp 224–225

López-Ibáñez M, Paquete L, Stützle T (2010) Empirical methods for the analysis of optimization algorithms. In: Dorigo M, Birattari M, Di Caro G, Doursat R, Engelbrecht A, Floreano D, Gambardella L, Grob R, Sahin E, Stützle T, Sayama H (eds) Exploratory analysis of stochastic local search algorithms in biobjective optimization. Springer, Berlin, pp 209–222

Mariano C, Morales E (1999) A multiple Ant-Q algorithm for the design of water distribution irrigation network. Tech. rep. Technical report HC-9904, Instituto Mexicano de Tecnología del Agua

Miettinen K (1999) Nonlinear multiobjective optimization. Kluwer, Boston

Peng W, Zhang Q, Li H (2009) Comparison between MOEA/D and NSGA-II on the multi-objective travelling salesman problem. In: Goh C, Ong Y, Tan K (eds) Multi-objective memetic algorithms. Studies in computational intelligence, vol 171. Springer, Berlin, pp 309–324

Stützle T, Hoos H (2000) MAX–MIN ant system. Future Gener Comput Syst 16(8):889–914

T’kindt V, Monmarché N, Tercinet F, Laügt D (2002) An ant colony optimization algorithm to solve a 2-machine bicriteria flowshop scheduling problem. Eur J Oper Res 142(2):250–257

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11(6):712–731

Zitzler E, Thiele L (1998) Multiobjective optimization using evolutionary algorithms—a comparative case study. In: Eiben A, Bäck T, Schaerer M, Schwefel H (eds) Parallel problem solving from nature V. Springer, Berlin, pp 292–301

Zitzler E, Thiele L (1999) Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Trans Evol Comput 3(4):257–271

Zitzler E, Laumanns M, Thiele L (2001) SPEA2: improving the strength pareto evolutionary algorithm. Tech. rep. Switzerland, Tech. rep. TIK-Rep, 103

Zitzler E, Thiele L, Laumanns M, Fonseca C, Fonseca V (2003) Performance assessment of multiobjective optimizers: an analysis and review. IEEE Trans Evol Comput 7(2):117–132

Acknowledgments

The authors are very grateful to the Area Editor, Prof. Carlos A. Coello Coello, and the anonymous reviewers for their insightful recommendations and comments to improve this paper. This work was supported by the National Natural Science Foundation of China (61170016), the Project Sponsored by the Scientific Research Foundation for the Returned Overseas Chinese Scholars, State Education Ministry, the Scientific and Technological Funds for Young Scientists of Sichuan (09ZQ026-040), the Fund of Engineering Research Center for Transportation Safety, Ministry of Education (WHUTERCTS2010A01), by the Fundamental Research Funds for the Central Universities (SWJTU11ZT07, SWJTU09ZT10), and the Open Research Fund of Key Laboratory of Signal and Information Processing, Xihua University (SZJJ2009-003).

Author information

Authors and Affiliations

Corresponding author

Appendix: Performance metrics

Appendix: Performance metrics

1.1 S metric

S metric, also named the hypervolume ratio (Zitzler and Thiele 1998), relates to the ratio of the hypervolume of an approximation set \({\mathcal{P}}\) and an optimum Pareto set or pseudo-optimum Pareto set \({\mathcal{P}}_{0}, \) depicted in equation

where \(\hbox{HV}({\mathcal{P}})\) and \(\hbox{HV}({\mathcal{P}}_{0})\) are the hypervolumes of set \({\mathcal{P}}\) and \({\mathcal{P}}_{0}, \) respectively, where the hypervolume relates to the area of coverage of a set and an anti-idea point with respect to the objective for a two-objective MOP, defined as

where q i is a non-dominated vector in \({\mathcal{P}};\, \hbox{vol}_{i}\) is the volume between the anti-idea solution and vector q i . For a minimization MOP, it’s assumed that the anti-idea point is the maximum value for each objective.

The S metric can be used as the basis of a dominance compliant comparison and possess the advantage of measuring both diversity and proximity. S value less than one indicates the approximation set \({\mathcal{P}}\) is not as good as \({\mathcal{P}}_{0}\) and if S value equals to 1, then \({\mathcal{P}}={\mathcal{P}}_{0}. \) Therefore, the larger the value S is, the better the \({\mathcal{P}}\) approximation set is.

1.2 R1 R and R3 R metrics

The R1 R metric (Hansen 1998) calculates the probability that an reference set \({\mathcal{P}}_{0}\) is better than an approximation set \({\mathcal{P}}\) over a set of utility functions U, defined as

where \(u \in U\) is a utility function mapping each set to an utility measure; p(u) is the probability density of the utility function u, and

with \(u({\mathcal {P}}) = \mathop{\min}\limits_{q \in {\mathcal{P}} \{ u(q)\} .}\)

The R3 R metric (Hansen and Jaszkiewicz 1998) measures the probability of superiority of an reference set \({\mathcal{P}}_{0}\) over approximation set \({\mathcal{P}}, \) formulated as

where U and p(u) are defined as in R1 R metric.

According to the metrics, the more the value R1 R is near to \(\frac{1}{2}\) or the smaller the value R3 R is, the better the approximation set \({\mathcal{P}}\) is. Additionally, both R1 R metric and R3 R metric require a set of utility functions U which must be defined. In this contribution, the Tchebycheff utility function set (Hansen and Jaszkiewicz 1998) is used, that is,

Therefore, the original integration in (23) and (25) can be superseded by

1.3 \({\varvec{\epsilon}}\) indicator

For a minimization problem with m positive objectives, an objective vector \({\user2{a}} = ({a_{1}},{a_{2}},\ldots,{a_{m}})\) is said to \(\epsilon\) dominate another objective vector \({\user2{b}} = (b_{1},b_{2},\ldots,b_{m}), \) written as \(a\succcurlyeq_\epsilon b, \) if and only if

for a given \(\epsilon > 0. \) Given two approximation sets, \({\mathcal{P}}_1 \hbox{ and } {\mathcal{P}}_2, \,\epsilon\) indicator measures (Zitzler et al. 2003) the smallest amount \(\epsilon\) such that any solution \(q_{2} \in {\mathcal {P}}_2\) is \(\epsilon\) dominated by at least one solution \(q_{1} \in {\mathcal{P}}_1,\) i.e.,

When \(I_\epsilon({\mathcal {P}}_{1},{\mathcal{P}}_{2}) < 1,\) all solutions in \({\mathcal{P}}_{2}\) are dominated by a solution in \({\mathcal{P}}_1.\) If \(I_\epsilon({\mathcal{P}}_1,{\mathcal{P}}_2) = 1 \hbox{ and } I_{\epsilon}({\mathcal {P}}_{2},{\mathcal{P}}_{1}) = 1, {\mathcal{P}}_{1} \hbox{ and } {\mathcal{P}}_{2}\) represent the same approximation set. If \(I_{\epsilon}({\mathcal{P}}_1,{\mathcal{P}}_2) > 1\) and \(I_{\epsilon}({\mathcal{P}}_2,{\mathcal{P}}_1) > 1, {\mathcal{P}}_1 \hbox{ and } {\mathcal{P}}_{2}\) are incomparable. When the optimum Pareto set or pseudo-optimum Pareto set is considered, i.e., \(I_\epsilon({\mathcal {P}}_0, {\mathcal {P}}) \hbox{ and } {I_{\epsilon}}({\mathcal{P}}, {\mathcal {P}}_{0}), I_{\epsilon}({\mathcal{P}}_{0}, {\mathcal{P}}) \le 1 \hbox{ and } I_{\epsilon}({\mathcal{P}},{\mathcal{P}}_{0}) \ge 1, \) and the more the value is near to 1, the better the set \({\mathcal{P}}\) is.

1.4 C metric

C metric (Zitzler and Thiele 1999) aims to evaluate the performance of two multi-objective algorithms by comparing the approximation Pareto sets. Let \({\mathcal{P}}_1, {\mathcal{P}}_2\) be two approximation Pareto sets obtained by two algorithms, the function C defines a mapping from the ordered pair \(({\mathcal{P}}_1, {\mathcal{P}}_2)\) to the interval [0, 1], i.e.,

where \({\mathbf{a}} \succcurlyeq {\mathbf{b}}\) implies that the solution \({\mathbf{a}}\) dominates the solution \({\mathbf{b}}. \) The value \(C({\mathcal{P}}_1, {\mathcal{P}}_{2}) = 1\) means that all objective vectors in \({\mathcal{P}}_{2}\) are dominated by at least one objective vector in \({\mathcal{P}}_{1}. \) On the contrary, \(C({\mathcal{P}}_{1}, {\mathcal{P}}_{2}) = 0\) represents the case that no point in \({\mathcal{P}}_{2}\) is dominated by any point in \({\mathcal{P}}_1.\) Note that both directions have to be considered, since \(C({\mathcal{P}}_{1}, {\mathcal{P}}_{2})\) is not equal to \(1- C({\mathcal{P}}_{2} ,{\mathcal{P}}_{1}). \)

Rights and permissions

About this article

Cite this article

Cheng, J., Zhang, G., Li, Z. et al. Multi-objective ant colony optimization based on decomposition for bi-objective traveling salesman problems. Soft Comput 16, 597–614 (2012). https://doi.org/10.1007/s00500-011-0759-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-011-0759-3