Abstract

This paper presents a general spatio-temporal model for assessing the air quality impact of environmental policies which are introduced as abrupt changes. The estimation method is based on the EM algorithm, and the model makes it possible to estimate both the impact on air quality over a region and the reduction of human exposure following the considered environmental policy. Moreover, impact testing is proposed as a likelihood ratio test, and the number of observations after intervention needed to achieve a certain power for a minimal reduction is computed. An extensive case study concerns the introduction of the congestion charge in the city of Milan. The resulting estimated reduction of airborne particulate matters and total nitrogen oxides motivates the methods introduced, while its derivation illustrates both implementation and inferential issues.

1 Introduction

Environmental, energy and industrial policies are often motivated by the need to improve air quality by reducing pollutant concentrations. This is usually pursued through a supposedly appropriate cut in emissive activity, for example a reduction of car traffic, the substitution of carbon with green energy or the requalification of heavy industry. Hence a fundamental step is to assess whether a given policy actually achieves a relevant reduction of pollutant concentrations. In this paper, we develop a general statistical methodology for spatiotemporal impact assessment.

The general approach proposed is motivated by and benchmarked on a real application related to the impact of traffic reduction. In particular, on January 16, 2012, the Municipality of Milan introduced a new traffic restriction system known as the congestion charge. This requires drivers to pay a fee of five Euros for entering the central area, known as “Area C”, which lies inside the so-called “Cerchia dei Bastioni”. According to the Municipality, car traffic decreased by 36 % in Area C in the first two months. At the overall city level, the Municipality reported a traffic reduction of 6 %, which started at the beginning of January, before the congestion charge. This preemptive reduction may be partly due to the preparatory campaign run by the administration and partly to an overall decrease in gas consumption at the national level caused by the economic crisis.

January and February were cold and heavily polluted months with a large number of days exceeding the thresholds fixed by the European regulations (see Arduino and Fassò 2012). Although the congestion charge is intended as a measure of traffic control, the question which arises is whether there has been an impact on air quality or not. Air quality is usually defined according to the concentrations of various pollutants entering a suitable air quality index, see e.g. Bruno and Cocchi (2007). We will focus here on two important compounds entering most air quality indexes, namely particulate matters and nitrogen oxides, which are important for their toxicity. Particulate matters are predominantly secondary pollutants. Hence they have a background level which is large in percentage and difficult to reduce through local traffic policies. Moreover, their health effects are known to depend not only on their concentration but also on particle size, composition and black carbon content. Therefore, extensive data on particle numbers (see e.g. Hong-di and Wei-Zhen 2012) or on black carbon content (see e.g. Janssen et al. 2011) would be very useful to understand air quality from a health protection point of view. To a large extent, total nitrogen oxides are compounds of primary pollutants. Hence they are closely linked to local traffic and have a background level which is smaller in percentage than that of particulate matters.

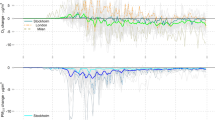

In general, comparing concentrations before and after intervention is a non-trivial problem. In fact, considering PM10 concentration in Milan city center depicted in Fig. 1, we note that the January–February mean of the last three years is 71.3 μg/m3 which is smaller than the after-intervention mean, obtained between January 16 and the end of February, which is 76.7 μg/m3.

Hence the air quality impact of the congestion charge may be obscured by atmospheric conditions, which are known to greatly affect the air quality of the Po Valley basin in general and the Milan area in particular. Moreover a second possible confounder is related to the economic crisis which is reducing both car use and gas consumption around Italy.

This paper aims at an early estimation of the spatially distributed reduction in the yearly average of pollutant concentrations. To this end, it focuses on the following three scientific questions, which are exemplified by means of the Milan case study.

1. The first point is whether or not the congestion charge has a measurable permanent impact on air quality after adjusting for meteorological conditions and for the traffic reduction due to the economic crisis.

2. The second point is whether the impact differs between the intervention area (Area C) and the rest of the city.

3. Finally, the third point is related to the spatial and temporal information content required to draw sound conclusions, that is, the number of days required to “observe” a statistically significant permanent impact with high probability and the number of stations required to understand the spatial impact.

In time series analysis, the non-spatial part of questions one and two is treated by intervention analysis, following the celebrated paper of Box and Tiao (1975). See also e.g. Hipel and McLeod (2005). Soni et al. (2004) discuss spatio-temporal intervention analysis in the context of neurological signal analysis using STARMA models. For water quality monitoring in river networks, Clement et al. (2006) considered a spatiotemporal model based on directed acyclic graphs. Here, we extend these methods to a general multivariate spatiotemporal air quality model and develop some examples related to the Milan congestion charge. In principle, intervention analysis for air quality time series could also be based on neural networks, for example extending Corani (2005) or Gardner and Dorling (1999). Nevertheless, this approach does not exploit the spatial dimension in the same explicit way and it is not considered further here.

The rest of the paper is organized as follows. We begin Sect. 2 with a preliminary vector autoregressive model and develop the section with a general spatiotemporal model, which is capable of various levels of complexity according to the information content of the underlying monitoring network. The model allows us to estimate the impact on air quality and the reduction of human exposure following the considered environmental policy. Maximum likelihood estimation is obtained by a version of the EM algorithm and impact testing is proposed as a likelihood ratio test. Moreover we give formulas for the minimum number of observations after intervention guaranteeing a certain high probability of detecting a prespecified hypothetical reduction of pollution. In Sect. 3 the above approach is applied to the introduction of the congestion charge in Milan city. To do this, the general model is tailored to the reduced monitoring network that the environmental agency, ARPA Lombardia, implemented for monitoring particulate matters (PM10 and PM2.5) and nitrogen oxides (NOx) in the city. The concentration reduction is then assessed for the above pollutants using a preliminary approach based on vector autoregressions and a final spatiotemporal model, named STEM, which is used when the spatial information contained in the monitoring network is sufficient. Section 4 discusses the results and gives concluding remarks to the paper, which is closed by an acknowledgment section.

2 Impact modelling

In this section we discuss the extension of intervention analysis to spatiotemporal data. To begin with, we introduce impact modelling in the simple case where data are collected by a single station, and no spatial correlation arises, or by a limited number of stations, and spatiotemporal correlation is modelled by a vector autoregression, see e.g. Hipel and McLeod (2005). This approach is suggested here only as a preliminary analysis to be integrated by a full spatiotemporal model as discussed in Sect. 2.2.

2.1 Autoregressive approach

Let \(y(s_{j},t)\) be the observation of a pollutant concentration from the station located at \(s_{j},\) \(j=1,\ldots,q,\) at time \(t=1,\ldots,n.\) Moreover, let \(y_{t}=\left( y\left( s_{1},t\right) ,\ldots,y\left( s_{q},t\right) \right)\) be the vector of all concentrations at time \(t\) and, similarly, let \(x_{t}\) be the covariate vector including trends and confounders. Hence the exploratory intervention model is given by

\[y_{t}=Gy_{t-1}+bx_{t}-a_{t}+e_{t}, \qquad (1)\]

where \(b\) is the coefficient matrix of the covariates.

In this model, \(e_{t}\) is a Gaussian white noise and the impact \(a_{t}\) is a step function vanishing before the intervention time \(t^{\ast}. \) For \(t\geq t^{\ast}\) we have \(a_{t}=a,\) which is a \(q\)-dimensional vector whose positive elements define the pollution reduction size at the corresponding location \(s_{j},\) \(j=1,\ldots,q.\) The persistence matrix \(G\) is taken as a diagonal matrix:

\[G=\mathrm{diag}\left(g_{1},\ldots,g_{q}\right).\]

Note that the multivariate approach is important here, because the errors \(e_{t}\) may be strongly (spatially) correlated.

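According to the AR(1) dynamics, the scalar steady state impact on each component of \(y_{t}\) is given by

\[d=\frac{a}{1-g},\]

where \(a\) and \(g\) denote the impact and the persistence coefficient of the station considered.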

Moreover, ignoring the uncertainty of the pre-intervention estimation of \(g,\) we get the approximate variance for \(\hat{d},\) namely

\[Var\left(\hat{d}\right)\cong\frac{Var\left(\hat{a}\right)}{\left(1-\hat{g}\right)^{2}}.\]

It follows that the city average steady state effect is given by \(\bar {d}=\sum_{j=1}^{q}\hat{d}_{j}p_{j}\) with variance given by the well known quadratic form \(Var\left(\bar{d}\right) =p^{\prime}Var\left(\hat {D}\right)p.\) In the last formula, \(\hat{D}=\left(\hat{d}_{1},\ldots,\hat {d}_{q}\right)\) and the weights \(p=\left(p_{1},\ldots,p_{q}\right)\) can be based on the population density.
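The steady state effect, its approximate variance and the weighted city average can be illustrated with a short calculation. The following is a minimal sketch, not the code used for the paper: the function names and all numerical inputs are hypothetical, and the covariance of \(\hat{D}\) is obtained by rescaling the covariance of the impact estimates with the factors \(1/(1-g_{j}),\) which extends the scalar variance formula above under the same assumption that the uncertainty of \(g\) is ignored.

```python
def steady_state_effects(a, g, var_a):
    """Steady state AR(1) impacts d_j = a_j / (1 - g_j) and their approximate covariance.

    a     : list of q step-impact estimates
    g     : list of q persistence coefficients (diagonal of G), each with |g_j| < 1
    var_a : q x q covariance matrix of the impact estimates (list of lists)
    The uncertainty of g is ignored, as in the text.
    """
    scale = [1.0 / (1.0 - gj) for gj in g]
    d = [aj * sj for aj, sj in zip(a, scale)]
    q = len(a)
    var_d = [[scale[i] * var_a[i][j] * scale[j] for j in range(q)] for i in range(q)]
    return d, var_d


def city_average(d, var_d, p):
    """Weighted average d_bar = sum_j d_j p_j and its variance p' Var(D) p."""
    d_bar = sum(dj * pj for dj, pj in zip(d, p))
    q = len(p)
    var_bar = sum(p[i] * var_d[i][j] * p[j] for i in range(q) for j in range(q))
    return d_bar, var_bar


# Hypothetical inputs for three stations (illustrative values only).
a_hat = [0.10, 0.12, 0.08]
g_hat = [0.20, 0.25, 0.20]
var_a_hat = [[0.0016, 0.0008, 0.0008],
             [0.0008, 0.0016, 0.0008],
             [0.0008, 0.0008, 0.0016]]
weights = [0.5, 0.3, 0.2]  # assumed population shares, summing to one

d_hat, var_d_hat = steady_state_effects(a_hat, g_hat, var_a_hat)
d_bar, var_d_bar = city_average(d_hat, var_d_hat, weights)
print(d_hat, d_bar, var_d_bar ** 0.5)
```

The off-diagonal terms of the covariance matrix matter here: with positively correlated station estimates, the variance of the city average is larger than the value obtained by treating the stations as independent.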

2.2 STEM approach

Following the modern hierarchical modeling approach to spatiotemporal data, we consider here a general model able to assess the impact on air quality of an environmental rule in a geographic region \({{\mathcal{D}}.}\) To do this, we define a spatiotemporal model for the observed concentration at coordinates \({s\in{\mathcal{D}}}\) and day t = 1, 2,…, n, denoted by y(s,t), able to capture the effect of the environmental intervention, dated \(t^{\ast }=n-m+1\) and observed for \(m=n-t^{\ast}+1\) days, namely

The quantity \(\alpha\left(s,t\right)\) represents the expected spatiotemporal impact of the environmental intervention and \(\alpha(s,t)=0\) for \(t<t^{\ast}.\) In general terms, \(\alpha\) is a suitable spatiotemporal process and the effectiveness of an environmental policy can be assessed by the expected impact on pollution concentrations over region \({{\mathcal{D}}}\) and time horizon \(M,\) which is given by

The weighting function \(p\left(s,t\right)\) may be used for averaging, e.g. \({p\left(s,t\right) =\left\vert {\mathcal{D}}\right\vert ^{-1},}\) or risk assessment. For example, we may be interested in human exposure and, following Finazzi et al. (2013), we may take the weighting function p(s, t) as the dynamic population distribution or a time-invariant p(s) as a static population distribution over the study area. If \(\Updelta<0\) then the impact is negative and we have an increase in pollutant concentration.
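A discrete counterpart of this weighted impact may help to fix ideas. The sketch below is only an illustration under stated assumptions: the region is replaced by a finite set of grid cells, the time horizon by a finite set of days, and the weights are normalised to sum to one over all cells and days. The function name and the example values are hypothetical.

```python
def weighted_impact(alpha, p):
    """Discrete weighted average of an impact surface.

    alpha : alpha[t][k], impact on day t and grid cell k (after intervention)
    p     : p[t][k], non-negative weights, e.g. population counts;
            they are normalised here to sum to one (an assumption of this sketch)
    """
    total_weight = sum(sum(row) for row in p)
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")
    weighted_sum = sum(a * w
                       for a_row, p_row in zip(alpha, p)
                       for a, w in zip(a_row, p_row))
    return weighted_sum / total_weight


# Two days, three cells: a reduction of 0.2 in the first two cells, none in the third.
alpha_map = [[0.2, 0.2, 0.0],
             [0.2, 0.2, 0.0]]
population = [[100, 50, 50],
              [100, 50, 50]]  # hypothetical static population distribution
delta = weighted_impact(alpha_map, population)
print(delta)
```

With uniform weights the same function returns the plain space-time average of the impact.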

The simplest model for reduction assessment is given by a scalar deterministic impact

\[\alpha\left(s,t\right)=\alpha\quad\text{for }t\geq t^{\ast}, \qquad (3)\]

which assumes constant impact over time and space after intervention. At an intermediate complexity level, we may use α(s, t) = α(s) which gives a time-invariant reduction map, appropriate for assessing a localized permanent stationary impact. Of course the choice among the above alternatives relies also on the spatial information content of the monitoring network.

Confounders may be covered by a linear confounder model component βx(t) or, more generally, by a time varying linear component β(t) x(t), where β(t) is a deterministic varying coefficient vector or a stochastic process. For example, Finazzi and Fassò (2011) use Markovian dynamics for β(t).
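Collecting the terms introduced so far, namely the impact \(\alpha(s,t),\) the confounder component and the spatiotemporal error \(\zeta(s,t)\) discussed next, the structure of model (2) can be sketched as follows. This is a reconstruction under assumptions rather than a quotation: the minus sign is chosen so that a positive \(\alpha\) denotes a reduction, the covariates are written as \(x(t)\) following the notation of the previous paragraph, and any intercept or trend is assumed to be included in \(x(t).\)

```latex
y(s,t) = -\alpha(s,t) + \beta(t)^{\prime} x(t) + \zeta(s,t). \tag{2}
```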

In Eq. (2), the spatiotemporal error \(\zeta(s,t)\) allows for spatial and temporal correlation using either separability or non-separability, see e.g. Porcu et al. (2006), Bruno et al. (2009) and Cameletti et al. (2011). In this paper, in the light of the limited spatial information available, we use a simple additively separable latent process with three components adapted from Fassò and Finazzi (2011), namely

\[\zeta\left(s,t\right)=z\left(t\right)+\omega\left(s,t\right)+\varepsilon\left(s,t\right), \qquad (4)\]

where \(z(t)=\gamma z(t-1)+\eta(t)\) is a stable Gaussian Markovian process, with \(\vert \gamma{\vert} <1\) and \(\sigma_{\eta}^{2}=Var(\eta).\) The purely spatial component \(\omega(s,t)\) is given by iid time replicates of a zero mean Gaussian spatial random field characterized by a suitable spatial covariance function \(\gamma(\vert s-s^{\prime}\vert ).\) Finally \(\varepsilon(s,t)\) is a Gaussian measurement error iid over time and space, with variance \(\sigma_{\varepsilon}^{2}.\)
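The three-component error structure can be made concrete by simulation. The following is a minimal sketch under stated assumptions: the spatial covariance is taken to be exponential, \(\sigma_{\omega}^{2}\exp(-h/\rho),\) which is only one admissible choice, and the function names, station coordinates and parameter values are all hypothetical.

```python
import math
import random


def cholesky(matrix):
    """Lower-triangular Cholesky factor of a symmetric positive definite matrix."""
    n = len(matrix)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                low[i][j] = (matrix[i][j] - s) / low[j][j]
    return low


def simulate_error(coords, n_days, gamma, sigma_eta, sigma_omega, rho, sigma_eps, seed=0):
    """Simulate zeta(s,t) = z(t) + omega(s,t) + eps(s,t) at the given sites."""
    rng = random.Random(seed)
    q = len(coords)
    # Assumed exponential covariance for omega: sigma_omega^2 * exp(-distance / rho).
    cov = [[sigma_omega ** 2 * math.exp(-math.dist(si, sj) / rho) for sj in coords]
           for si in coords]
    low = cholesky(cov)
    # Start the common AR(1) component from its stationary distribution.
    z = rng.gauss(0.0, sigma_eta / math.sqrt(1.0 - gamma ** 2))
    out = []
    for _ in range(n_days):
        z = gamma * z + rng.gauss(0.0, sigma_eta)                 # common temporal effect
        white = [rng.gauss(0.0, 1.0) for _ in range(q)]
        omega = [sum(low[i][k] * white[k] for k in range(i + 1))  # spatially correlated,
                 for i in range(q)]                               # independent over time
        out.append([z + omega[i] + rng.gauss(0.0, sigma_eps) for i in range(q)])
    return out


# Three hypothetical stations with coordinates in km.
sites = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
zeta = simulate_error(sites, n_days=200, gamma=0.5, sigma_eta=0.3,
                      sigma_omega=0.2, rho=3.0, sigma_eps=0.1)
print(len(zeta), len(zeta[0]))
```

In this construction all temporal dependence comes from the common component \(z(t),\) while the correlation between stations on a given day comes from both \(z(t)\) and \(\omega(s,t).\)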

2.3 Estimation and inference

We denote the parameter array characterizing model (2) by \(\theta=(\theta_{\alpha},\theta_{{\sim}\alpha}),\) where \(\theta_{\alpha}\) is the component related solely to the effect \(\alpha\left(\cdot\right) =\alpha\left( \cdot|\theta_{\alpha}\right)\) and \(\theta_{{\sim}\alpha}\) is the parameter component for the global dynamics of \(y\) independent of the intervention. Although in general the impact could depend on the whole of \(\theta,\) that is \(\Updelta =\Updelta\left( \theta\right),\) it is convenient to define models for which \(\Updelta\) depends solely on \(\theta_{\alpha}.\) In the simple case of Eq. (3), we have \(\alpha=\theta_{\alpha }\propto\Updelta( \alpha).\)

With this notation, the estimated model parameter array is given by \(\hat{\theta}=( \hat{\theta}_{\alpha},\hat{\theta}_{{\sim}\alpha}),\) which may be computed using maximum likelihood as in Fassò and Finazzi (2011). In particular the estimates are computed using the EM algorithm, hence the acronym STEM for this approach. Note that the EM algorithm relies on a posteriori common latent effects, namely \(\hat{z}(t) =E(z(t) |Y),\) which are computed by the Kalman smoother, and a posteriori local effects, namely \(\hat{\omega}( s,t) =E (\omega(s,t) |Y),\) which are computed by Gaussian conditional expectations. Efficient software for EM estimation, filtering and kriging, called D-STEM, has been recently introduced by Finazzi (2012) and is used extensively in Sect. 3.

Using the above model, the effectiveness of an environmental measure may be proven by rejecting the non-change hypothesis given by

\[H_{0}:\Updelta=0. \qquad (5)\]

Suppose that \(\Updelta\left( \theta_{\alpha}\right) =0\) for \(\theta_{\alpha}=0.\) We can then test the above no-effect hypothesis by the (one sided) likelihood ratio test. In particular, if \(\theta_{\alpha}=\alpha\) is a simple scalar parameter, we can approximate the likelihood ratio test by a simple t test and reject \(H_{0}\) for large significant values of \(\frac{\hat{\alpha}}{se\left(\hat{\alpha}\right) },\) where \(se( \hat{\alpha})\) is a suitable estimate of \(\sqrt{Var(\hat{\alpha}) }.\) Moreover, if \(\alpha(s)\) is a Gaussian field, it may be estimated by kriging-like computations, giving the impact map \(\hat{\alpha}(\cdot)\) and the \(1-p\) level approximate confidence bands given by

where \(z_{p}\) is the \((1-p)\) quantile of the standard Gaussian distribution.

2.4 Days for detection and information

Under regularity assumptions, the observed Fisher information matrix \(I_{n},\) for large \(n\) and \(m,\) may be approximately partitioned as follows

where the matrix blocks \(i_{\alpha},i_{{\sim}\alpha}\) and \(i_{\alpha ,{\sim}\alpha}\) are the corresponding blocks of the non singular information \(i_{\theta}=-E\left( \frac{\partial^{2}}{\partial\theta \partial\theta^{\prime}}\log\left( f\left(\theta\right)\right)\right).\) It follows that the precision in the estimation of the pollution reduction \(\alpha\) depends mainly on \(m.\)

Hence in testing (5) for \(\theta_{\alpha}=\alpha,\) the number of days required to detect a reduction of size \(\alpha^{\ast}\) with high probability \(\pi\) is computed with formulas which generalise the classical sampling results. In particular, for the simple scalar parametrization of \(\alpha\left( \cdot\right),\) applying the well known matrix inversion lemma (see e.g. Gentle 2007, Sect. 3.4.1) to expression (6), we have the approximated formula

3 Milan case study

The monitoring network of Milan city, depicted in Fig. 2, is composed of one station for PM2.5, four stations for PM10 and eight stations for NOx. We consider the log-transformed and centered concentrations between January 1, 2009, and July 20, 2012, totalling n = 1297 days, of which m = 186 are after the introduction of the congestion charge, dated January 16, 2012.

In order to adjust for the confounders, we considered meteorological conditions in Milan, namely wind speed (daily maximum and average) and direction, humidity, temperature, solar radiation and pressure. Moreover, we used the additional covariates given by the concentration readings of the same pollutants from Meucci station in the city of Bergamo, which is approximately 45 km North-East of Milan. This is an important proxy for all other meteorological and economic factors which are common to the northern plain of Lombardy.

Considering the limited amount of spatiotemporal information contained in the data, in order to avoid overfitting and shadowing of the change point \(t^{\ast},\) we use a deterministic \(\beta\left( t\right) \) with a minimal seasonal structure given by:

Similarly the spatial covariance function of the spatial component \(\omega\left(s,t\right)\) in Eq. (4) is given by

Although r could be estimated as a continuous parameter governing mean square differentiability of ω, due to the limited spatial information, we calibrated this parameter only for r = 1 or 2 and concluded that r = 1 is better in terms of attained log-likelihood, both for particulate matters and nitrogen oxides.

3.1 Fine particulate matters

We start with the single station on fine particulate matters PM2.5, namely Pascal station, which is a ground station external to “Area C” and located in the relatively central quarter named “Città studi”. Here, fine particulate concentrations have a three-year average of approximately 30 μg/m3 before intervention. The January–February three-year average before intervention is 53.7 μg/m3, while the mean observed after January 16 is 54.1 μg/m3; this slight increase calls the congestion charge effect into question.

To fit the model for fine particulate matters, we use the centered log transformed concentrations, which have variance \(\sigma_{y}^{2}=0.70,\) and, after row deletion of missing data, we get the fitted model of Table 1 based on the remaining 1038 observations. Using the seasonal structure of Eq. (8) for \(b,\) it is worth noting that wind speed has an effect both in summer and winter while, not surprisingly, solar radiation is not significant in winter. After adjusting for Bergamo concentrations, which are quite significant in the model, the residual autocorrelation is not large, resulting in \(g=0.212.\)

Since we are in log scale, the permanent effect, computed as either \(\hat{a}\) or \(\hat{d}\) of Eq. (1), may be interpreted as a percent change. According to this model, at Pascal station, we observe a 0.12 permanent reduction of PM2.5 on log scale with a one-sided p value smaller than 0.2 %. From the bottom row of Table 1, we see that the fit is quite good. The residuals are satisfactorily close to white noise but moderately non-Gaussian, as shown by Fig. 3 and by the unreported kurtosis, which is larger than three. Although some alternatives to conditional Gaussian models could be developed, see e.g. Bartoletti and Loperfido (2010) or Nadarajah (2008), the above results were validated by simulation experiments and by comparison with robust estimation methods, which gave results very close to those of Table 1.

Days for detection

From the practical point of view, it is important to know the number of days required to detect a certain reduction in the annual average of PM2.5. Following the approach which gives formula (7), we consider the t-test for the hypothesis of no impact \(H_{0}:d=0\) against a reduction \(d>0,\) based on large values of the statistic \(\frac{\hat{a}}{se\left( \hat{a}\right) }.\) Using the nominal significance level p = 5 %, the number of days for detecting a permanent reduction of size \(d^{\ast}=0.10\) in log scale with probability π = 85 % is given by \(m\geq m^{\ast}=162,\) as shown in Table 1, which is consistent with the above results.
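The logic of such a calculation can be illustrated with the classical one-sided power formula. The sketch below is not formula (7), which is based on the Fisher information blocks; it assumes instead that the standard error of the estimated reduction shrinks as \(\sigma/\sqrt{m},\) where \(\sigma\) is a per-day standard deviation whose value here is purely hypothetical, as are the function names.

```python
import math
from statistics import NormalDist


def days_for_detection(effect, sd_per_day, alpha_level=0.05, power=0.85):
    """Smallest m giving at least the requested power in a one-sided z test.

    Assumes se(estimate) = sd_per_day / sqrt(m), i.e. information grows
    linearly with the number m of post-intervention days.
    """
    z_alpha = NormalDist().inv_cdf(1.0 - alpha_level)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) * sd_per_day / effect) ** 2)


def power_at(m, effect, sd_per_day, alpha_level=0.05):
    """Power of the same test after m post-intervention days."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha_level)
    return 1.0 - NormalDist().cdf(z_alpha - effect * math.sqrt(m) / sd_per_day)


# Reduction of 0.10 in log scale, 5 % level, 85 % power, as in the text;
# the per-day standard deviation 0.45 is a hypothetical value.
m_star = days_for_detection(effect=0.10, sd_per_day=0.45)
print(m_star, round(power_at(m_star, 0.10, 0.45), 3))
```

Because the required number of days scales with the inverse square of the effect size, halving the reduction to be detected multiplies the required days by roughly four.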

3.2 Particulate matters

For PM10, we have three sites: two are traffic stations located inside “Area C”, namely Verziere and Senato stations, and the third is Pascal, a ground station located in Città studi, which is a semi-peripheral area with a pattern similar to the city center.

Table 2 shows the fitted model for PM10. We see that the persistence coefficients g are small and very close to one another, denoting the same weak autocorrelation after adjusting for the Po valley concentration proxy given by Bergamo measurement and local meteorological covariates. Both average and maximum wind speed have an effect on PM10 with a clear seasonal behaviour. The last column shows that fitting is quite satisfactory. We note that, after introducing the Bergamo proxy, only local conditions given by wind speed enter as additional covariates.

As in the previous section, a moderate residual non normality indicated by kurtosis larger than three for all components does not jeopardize the results of Table 2. Moreover, Fig. 4 shows that the residuals can be assumed to be a white noise and the importance of the multivariate approach is appreciated by the marked residual correlation shown in Table 3.

Note that the global three-year average for these stations before intervention is about 45 μg/m3, so the average reduction of d = 0.137 in log scale, with se = 0.053, corresponds approximately to 6 μg/m3 for the yearly average. According to the data, this impact is stronger in Area C.
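The conversion from a log-scale reduction to concentration units can be checked with a short calculation. The sketch below assumes natural logarithms, since the base is not stated in the text, and uses the figures of the paragraph above, namely d = 0.137 and a baseline of 45 μg/m3; the function name is illustrative.

```python
import math


def log_reduction_to_concentration(d_log, baseline):
    """Convert a reduction d_log on the natural-log scale to concentration units."""
    relative = 1.0 - math.exp(-d_log)   # exact relative reduction
    return relative, relative * baseline


rel, absolute = log_reduction_to_concentration(0.137, 45.0)
print(f"relative reduction: {rel:.3f}, absolute: {absolute:.2f} ug/m3")
# First-order approximation, reading d directly as a percent change:
print(f"linear approximation: {0.137 * 45.0:.2f} ug/m3")
```

Both the exact conversion and the first-order approximation round to about 6 μg/m3, in agreement with the value quoted above.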

STEM approach

In the light of the preliminary analysis, we go further with the approach of Sect. 2, obtaining the fitted model of Table 4. To avoid dependence on initial values, the EM algorithm has been replicated 100 times, applying beta distributed random perturbations (see Footnote 1) to the initial estimates, which have been computed using the method of moments. Generally speaking the standard errors are small, with the exception of the spatial correlation parameter ρ, which has quite a large uncertainty. This is not surprising since, with only three stations, it is not easy to estimate spatial correlation. The reduction parameter α is positive, denoting a significant permanent reduction of particulate concentrations of 0.085 in log scale for the city center with one sided p value smaller than 1 %.
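The random restart scheme described in Footnote 1 can be sketched in a few lines. The initial values below are hypothetical, the function name is illustrative, and the rule for choosing among the 100 runs is an assumption, since the text does not state it.

```python
import random


def perturb_initial_values(theta0, rng):
    """Multiply each initial value by 3B, with B ~ Beta(4, 8), so that E(3B) = 1."""
    return [value * 3.0 * rng.betavariate(4, 8) for value in theta0]


rng = random.Random(2012)
theta0 = [0.1, 0.5, 2.0]   # hypothetical method-of-moments estimates
starts = [perturb_initial_values(theta0, rng) for _ in range(100)]
# Each starting point would be passed to the EM routine; keeping the run with
# the largest log-likelihood is an assumed selection rule, not taken from the text.
print(starts[0])
```

Since \(B\) lies between 0 and 1, each perturbed value lies between 0 and three times the original one, and the perturbation leaves the initial values unchanged on average.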

Moreover, the likelihood ratio test for the hypothesis of no effect, namely \(H_{0}:\alpha=0,\) gives a test statistic 2log(LR) = 13.2 with a p value smaller than 0.1 %. Finally, the number of days for detection of formula (7), with nominal significance level p = 5 %, a permanent reduction of size \(\alpha^{\ast}=0.10\) and power π = 85 %, turns out to be \(m^{\ast}=154,\) which is consistent with the above results.
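The reported p values can be checked against the usual large-sample reference distributions. The sketch below assumes a chi-square distribution with one degree of freedom for 2log(LR) and a standard Gaussian distribution for the t ratio; halving the chi-square tail for the one-sided alternative is the standard convention for a scalar parameter and is stated here as an assumption. The function names are illustrative.

```python
import math


def chi2_1df_pvalue(stat):
    """Upper-tail probability of a chi-square variable with one degree of freedom."""
    return math.erfc(math.sqrt(stat / 2.0))


def one_sided_z_pvalue(estimate, se):
    """Upper-tail standard Gaussian probability for the ratio estimate / se."""
    return 0.5 * math.erfc(estimate / se / math.sqrt(2.0))


p_lr = chi2_1df_pvalue(13.2)      # chi-square reference for 2log(LR) = 13.2
p_lr_one_sided = p_lr / 2.0       # halved for the one-sided alternative alpha > 0
p_arx = one_sided_z_pvalue(0.137, 0.053)   # ARX average reduction and its se
print(p_lr, p_lr_one_sided, p_arx)
```

Under these assumptions the likelihood ratio statistic of 13.2 gives a p value below 0.1 %, in line with the value reported above.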

According to the ARX model, Area C has a slightly larger PM10 reduction, but the difference in the \(a\) coefficients of Table 2 is far from significant. Using the STEM model, the corresponding analysis is based on the a posteriori local effects of Sect. 2.3, that is \(\bar{\omega}\left( s\right) =\frac{1}{m}\sum_{t=t^{\ast}}^{n}\hat{\omega}\left( s,t\right),\) which are smaller than 1 % and have non significant p values for Pascal, Senato and Verziere. Hence we can conclude that the reduction in PM10 is approximately constant around the city center, but we have no information on peripheral areas.

3.3 Nitrogen oxides

As mentioned above, NOx are monitored in Milan city more extensively than particulate matters. In fact, in addition to the previous three stations, we have four traffic stations near the internal bypass and one ground station in the green area of Parco Lambro, near the eastern circular highway and exposed to Linate airport emissions. Using this relatively larger network, we will address the spatial variability of the congestion charge impact around the city. We first observe that NOx are given as hourly data. In order to have high quality daily data, and considering that the STEM approach can handle missing data, we defined as missing those daily averages based on fewer than 20 validated observations. An additional meteorological covariate, which was not significant with particulate matters, enters at this stage. This is the prevalence of south-westerly wind (SW-PWD), defined as the number of hours per day when the prevailing wind is from SW.
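The rule for building daily data from hourly readings can be written down directly. This is a minimal sketch: the function name, the use of `None` or NaN to mark non-validated hours and the example values are assumptions made for illustration.

```python
import math


def daily_mean(hourly, min_valid=20):
    """Daily average of hourly readings; NaN when too few validated values.

    hourly : iterable of up to 24 readings, with None or NaN marking invalid hours
    """
    valid = [v for v in hourly if v is not None and not math.isnan(v)]
    if len(valid) < min_valid:
        return float("nan")   # the day is treated as missing
    return sum(valid) / len(valid)


complete_day = [40.0] * 24
sparse_day = [40.0] * 19 + [None] * 5   # only 19 validated hours
print(daily_mean(complete_day), daily_mean(sparse_day))
```

Days returned as NaN are then handled as missing observations by the estimation procedure rather than being imputed beforehand.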

The vector ARX model for these eight stations is reported in Table 5. We keep this as a preliminary analysis model even if, with such a number of spatial locations, a vector approach begins to show its limitations and the need for a unified approach such as STEM becomes evident. The proxy from Bergamo and the seasonal effect on wind are again decisive for the good model fit, reported in the last column of Table 5. As mentioned above, the wind direction from South West is significant for various stations. Despite the limited number of monitoring stations, the impact of the congestion charge on NOx is far from constant around the city.

In Pascal station, we have the maximum reduction, more than 0.28 in log scale, with a p value close to zero. Surprisingly, this value is much larger than inside the traffic restricted Area C, where the permanent effect estimated by the ARX model is positive but very small, especially in Verziere. Moreover, in peripheral areas, at Liguria and Lambro stations, we have statistically significant increases in NOx concentrations. Note that this fact is not a model artifact but reflects the local behaviour, as shown in Table 7. These points will be partially overcome in the final STEM model.

The residuals of this model are satisfactorily Gaussian, white noise and homoskedastic according to standard tests. Note that the estimated permanent reduction for Pascal is quite large, \(\hat{d}=0.44\) with a standard error se = 0.05, and will be discussed further in the next section.

STEM approach

In order to investigate the urban variability further and to reach a unified conclusion about the congestion charge impact on nitrogen oxides concentrations, we consider a STEM model with spatially varying impact. In the light of the limited spatial information contained in Milan's eight-station network, we use the following simple impact model for \(t\geq t^{\ast}\text{:}\)

\[\alpha\left(s,t\right)=\begin{cases}\alpha_{1} & \text{if } s \text{ is in the city center,}\\ \alpha_{2} & \text{otherwise,}\end{cases}\]

where, for the purpose of this paper, the city center is defined by Area C plus Città studi, as discussed in the previous section. The estimated model, reported in Table 6, clearly shows the difference between the impact in the city center, where a permanent reduction of about 0.22 in log scale is estimated, and the peripheral area, where NOx concentrations show an increase of 0.07, although not statistically significant. To confirm the non-increase in the peripheral area, we tested the hypothesis \(\alpha_{2}=0\) using the likelihood ratio test. This approach gives a restricted log-likelihood of 6515.0 and a non significant LR statistic with p value = 15.7 %. Interestingly, the spatial correlation parameter ρ is very close to the PM10 case but, as expected, the standard deviation is smaller, reflecting the greater spatial information of the nitrogen oxides network. Moreover, the number of days for detection of formula (7), with nominal significance level p = 5 %, a permanent reduction of size \(\alpha^{\ast}=0.20\) and power π = 85 %, turns out to be \(m^{\ast}=39,\) showing that, with this kind of spatial information, about one month is sufficient to detect a permanent effect of about 20 % in size.

The local effects \(\bar{\omega}(s)\) of Sect. 2.3 are reported in Table 7. Note that, considering for example Pascal, if we sum up \(\alpha_{1}\) from Table 6 and \(-\bar{\omega}(s)\) from Table 7 we get a total reduction of 0.402, which is quite close to the estimate \(\hat{d}\) of the ARX model. Note that these results reflect the change in the unadjusted seasonal average concentration. For example, in the last two columns of Table 7, we have the comparison of the average concentrations in the period January 16–July 20 before and after the congestion charge introduction, in the years 2009–2011 and 2012 respectively.

4 Discussion and conclusions

In order to answer the three scientific questions on air quality impact raised in Sect. 1, we introduced a general approach, based on the STEM model, for spatiotemporal impact assessment of air quality policies, allowing both estimation and testing.

The answer to the first question, on the presence of a “permanent impact”, is positive. In particular, we showed that the congestion charge operating in Milan center since January 16, 2012, has a significant permanent impact on air quality in terms of particulate matters and nitrogen oxides concentrations, at least in the city center. The air quality impact has been estimated after adjusting for meteorological factors and other common forcing factors, such as the economic crisis. Interestingly, the reduction in PM2.5, PM10 and NOx concentrations, estimated using both a preliminary vector autoregressive model and the STEM approach, is not confined inside the traffic restricted area.

The second question, on the spatial distribution of the air quality change, has an articulated answer. We observed that, despite the reduced number of monitoring stations in the city, the impact has a noticeable spatial variability which is different for PM10 and NOx. In particular, after the traffic intervention, a significant reduction of both particulate matters and nitrogen oxides has been estimated in the city center. The reduction of particulate matters, which is about 8 %, or 3.6 μg/m3, in the city center, is slightly higher in the intervention area, but the spatial variations around the city center, either inside or outside Area C, can be neglected. Moreover, consistently with being mostly primary pollutants, nitrogen oxides show a larger reduction, namely 22 %, or 13 ppb, in the city center. Surprisingly, the reduction is higher in Città studi, outside the intervention area, while in Verziere, which is inside Area C, the reduction is barely significant. As expected, in the peripheral areas of the city, we observe only non significant changes of nitrogen oxides.

Considering the third question on spatiotemporal information, we observe that the Milan data give a substantially clear picture of air quality impact for all three contaminants considered, since they satisfy the required number of days for detection \(m^{\ast}\) for each of them. In particular, the 20 % permanent reduction of nitrogen oxides could be detected after approximately 40 days with high probability. From the spatial point of view, we observe that the Milan data are sufficient for discriminating between the city center and peripheral areas. Nonetheless, their spatial information content is not enough for estimating a detailed city map, either for pollutant concentrations or for human exposure, as could be done by applying the dynamical kriging capabilities of the STEM approach proposed in this paper to a more informative monitoring network.

Notes

At each replication, the initial values have been rescaled between 0 and three times their value by multiplication with random numbers \(3B,\) where the \(B\)'s have been drawn from the Beta distribution with parameters 4 and 8, so that \(E(3B)=1.\)

References

Arduino G, Fassò A (2012) Environmental regulation in the European Union. In: El-Shaarawi AH, Piegorsch WW (eds) Encyclopedia of environmetrics, 2nd edn. Wiley, New York, pp 886–889

Bartoletti S, Loperfido N (2010) Modelling air pollution data by the skew-normal distribution. Stoch Env Res Risk Assess 24:513–517

Box GEP, Tiao GC (1975) Intervention analysis with applications to economic and environmental problems. J Am Stat Assoc 70(349):70–79

Bruno F, Cocchi D (2007) Recovering information from synthetic air quality indexes. Environmetrics 18:345–359

Bruno F, Guttorp P, Sampson PD, Cocchi D (2009) A simple non-separable, non-stationary spatiotemporal model for ozone. Environ Ecol Stat 16:515–529

Cameletti M, Ignaccolo R, Bande S (2011) Comparing spatio-temporal models for particulate matter in Piemonte. Environmetrics 22(8):985–996

Clement L, Thas O, Vanrolleghem PA, Ottoy JP (2006) Spatio-temporal statistical models for river monitoring networks. Water Sci Technol 53(1):9–15

Corani G (2005) Air quality prediction in Milan: feed-forward neural networks, pruned neural networks and lazy learning. Ecol Model 185:513–529

Fassò A, Finazzi F (2011) Maximum likelihood estimation of the dynamic coregionalization model with heterotopic data. Environmetrics 22(6):735–748

Finazzi F (2012) D-STEM: a statistical software for multivariate space-time environmental data modeling. In Goncalves AM et al (eds), Proceedings of the international workshop on spatio-temporal modelling (METMA VI). Guimaraes, 12–14 September 2012, ISBN 978-989-97939-0-3.

Finazzi F, Fassò A (2011) Spatio temporal models with varying coefficients. In Cafarelli B (ed) Spatial data methods for environmental and ecological processes—2nd edn, Foggia, 1–2 September 2011. ISBN: 978-88-96025-12-3. Also in: Graspa WP, 2011, http://www.graspa.org, ISSN: 2037-7738.

Finazzi F, Scott ME, Fassò A (2013) A model based framework for air quality indices and population risk evaluation. With an application to the analysis of Scottish air quality data. J R Stat Soc C 62(2):287–308

Gardner MW, Dorling SR (1999) Neural network modelling and prediction of hourly NOx and NO2 concentrations in urban air in London. Atmos Environ 33:709–719

Gentle JE (2007) Matrix algebra: theory, computations, and applications in statistics. Springer, New York

Graf-Jaccottet M, Jaunin MH (1998) Predictive models for ground ozone and nitrogen dioxide time series. Environmetrics 9:393–406

Hipel KW, McLeod AI (2005) Time series modelling of water resources and environmental systems. © 2005 Hipel & McLeod. http://www.stats.uwo.ca/faculty/aim/1994Book/.

Hong-di H, Wei-Zhen L (2012) Urban aerosol particulates on Hong Kong roadsides: size distribution and concentration levels with time. Stoch Env Res Risk Assess 26:177–187

Janssen NA, Hoek G, Simic-Lawson M, Fischer P, van Bree L, ten Brink H, Keuken M, Atkinson RW, Anderson HR, Brunekreef B, Cassee FR (2011) Black carbon as an additional indicator of the adverse health effects of airborne particles compared with PM10 and PM2.5. Environ Health Perspect 119:1691–1699

Nadarajah S (2008) A truncated inverted beta distribution with application to air pollution data. Stoch Env Res Risk Assess 22:285–289

Porcu E, Gregori P, Mateu J (2006) Nonseparable stationary anisotropic space–time covariance functions. Stoch Env Res Risk Assess 21:113–122

Soni P, Chan Y, Preissl H, Eswaran H, Wilson J, Murphy P, Lowery CL (2004) Spatial-temporal analysis of non-stationary fMEG Data. Neurol Clin Neurophysiol 100:1–6

Acknowledgements

This research is part of Project EN17, ‘Methods for the integration of different renewable energy sources and impact monitoring with satellite data’, funded by Lombardy Region government under ‘Frame Agreement 2009’. The help of Angela Locatelli and Francesco Miazzo for data handling has been much appreciated by the author.

Fassò, A. Statistical assessment of air quality interventions. Stoch Environ Res Risk Assess 27, 1651–1660 (2013). https://doi.org/10.1007/s00477-013-0702-5

DOI: https://doi.org/10.1007/s00477-013-0702-5