Abstract

Introduction

Over the past decade, simulation-based training has come to the foreground as an efficient method for training and assessment of surgical skills in minimal invasive surgery. Box-trainers and virtual reality (VR) simulators have been introduced in the teaching curricula and have substituted to some extent the traditional model of training based on animals or cadavers. Augmented reality (AR) is a new technology that allows blending of VR elements and real objects within a real-world scene. In this paper, we present a novel AR simulator for assessment of basic laparoscopic skills.

Methods

The components of the proposed system include: a box-trainer, a camera and a set of laparoscopic tools equipped with custom-made sensors that allow interaction with VR training elements. Three AR tasks were developed, focusing on basic skills such as perception of depth of field, hand-eye coordination and bimanual operation. The construct validity of the system was evaluated via a comparison between two experience groups: novices with no experience in laparoscopic surgery and experienced surgeons. The observed metrics included task execution time, tool pathlength and two task-specific errors. The study also included a feedback questionnaire requiring participants to evaluate the face-validity of the system.

Results

Between-group comparison demonstrated highly significant differences (p < 0.01) in all performance metrics and tasks, denoting the simulator’s construct validity. Qualitative analysis of the instruments’ trajectories highlighted differences between novices and experts regarding smoothness and economy of motion. Subjects’ ratings on the feedback questionnaire highlighted the face-validity of the training system.

Conclusions

The results highlight the potential of the proposed simulator to discriminate between groups with different expertise, providing a proof of concept for the use of AR as a core technology for laparoscopic simulation training.

In medical education, the Halstedian principle “see one, do one, teach one” has been the main apprenticeship model of training for more than a century: trainees observe how a task is performed by an expert clinician and then repeat the same task under guidance [1, 2]. During the past decades, however, following the popular paradigm of pilot training in modern aviation [3], simulation has been introduced into medical education as an alternative to the traditional model of training [4]. In general, medical simulation refers to a wide range of educational tools and practices that allow trainees to practice their skills in a safe environment before refining them in the real world. Mannequins, synthetic bench models, animal and human cadaver tissues, human actors playing the patient’s role, and virtual reality (VR) simulation are some of the most popular training tools employed in several medical specialties [5, 6].

In minimally invasive surgery (MIS), the initial step towards simulation-based training was the introduction of box-trainers, where surgeons can practice their skills on synthetic models or inanimate models of human organs. These systems allow the acquisition and enhancement of fundamental surgical skills, such as hand-eye coordination and perception of depth of field, via the use of the actual endoscopic tools. Consequently trainees experience a realistic sense of force feedback during tool–tissue interaction, which provides a better training experience. A negative aspect is that training on box-trainers can prove impractical and costly since the models need replacement after each trial (e.g. cutting tissue), and performance assessment is obtainable only via expert supervision or review of the recorded videos [7].

Over the last decade, VR simulators have been introduced as an alternative paradigm for training in MIS. In principle, VR-based training refers to the graphical representation of 3D anatomical structures on a 2D monitor, with which the trainee is able to interact by manipulating a mechanical interface that captures the necessary kinematic parameters. The existing state-of-the-art VR simulation systems employ highly sophisticated multisensory equipment, advanced computer graphics and physics-based modeling techniques, aiming to reproduce tasks that imitate real-life surgical scenarios [8]. Based on the growing evidence that computer-based simulation training leads to improved patient care, VR simulators are now acknowledged as a certified tool for teaching fundamental as well as advanced technical and cognitive skills in MIS [9, 10]. A significant advantage of VR simulators over box trainers is that the former provide flexibility in the selection of the training scenario, which can be tailored to the trainee’s educational needs. Additionally, the capability for real-time data collection allows the acquisition of various important parameters related to the tool kinematics and the errors committed, providing an automated and objective assessment of performance [11, 12]. Despite these advantages, VR simulators are often criticized for poor representation of some anatomical organs and tasks, and also for providing a moderate sense of haptics during interaction [13]. Furthermore, in contrast to box trainers, VR-based training requires significant financial investment, the return on which remains ambiguous [14].

Augmented reality (AR) is a relatively new, hybrid technology that allows superimposition of VR elements into a scene captured by a camera, resulting in a realistic mixture of real and virtual elements. To date, AR has gained widespread attention in an increasing number of technological developments. Characteristic examples include: assembly instructions for automotive manufacturing [15], virtual representation of missing parts in archeological monuments [16], instructions delivery for aerospace maintenance [17], and AR mobile applications [18]. In the field of MIS, significant research has been conducted, mostly on the visual enhancement of the surgical field with 3D anatomical models that are pre-constructed from CT or MRI data [19–21]. In this context, the AR systems are based on two core elements: a display device that performs superimposition of the 3D (virtual) models onto the real world scene, and a technique for continuous estimation of the spatiotemporal relationship (i.e. tracking) between the real and virtual worlds. Typical display technologies include video monitors [19], head-mounted displays [22] and projection-based devices [23], whereas tracking is usually achieved by means of electromagnetic (EM) sensors [24], optical-infrared sensors [25], visual pattern-markers [12] and color tags [26].

Despite the significant developments in AR-assisted surgery, there is a significant gap in the literature regarding studies that explore AR technology for skills training in MIS. Most relevant studies are focused on the enhancement of the scene with features that provide visual instructions and guidance information to the trainee. The only commercially available AR system having these qualities is the ProMIS simulator [27], which has been employed for performance assessment of technical skills such as laparoscopic suturing [28, 29].

Although the aforementioned studies have opened the opportunity for introducing core elements of AR technology into surgical education, the presented implementations do not allow the trainee to interact with virtual training models rendered on the screen. If such a system were to be developed, the VR elements should have the ability to respond to collisions and other types of forces applied by the actual endoscopic tools, similarly to the training models used in box-trainers (e.g. pegs, cutting tissue, etc.). Reproducing these tasks in an AR environment, though, is not trivial due to a series of interrelated technical challenges such as: tracking and pose estimation of the tools, tracking and geometric modeling of the physical world, 3D rendering of the virtual objects, and physics-based simulation of the interactions occurring between the VR objects and the physical world (e.g. collisions with the box surface and endoscopic tools). Hence, implementing tasks that involve interaction between the actual endoscopic tools and the (training) VR models would clearly signify an important step towards the development of a genuine AR surgical simulator. In addition, important assets that apply in VR simulation, such as automated performance assessment and flexibility in modifying the difficulty level of the tasks, would also be applicable in AR-based training. Initial studies in this direction have recently been published by our group, and promising results were obtained regarding the potential of developing AR tasks based solidly on computer vision algorithms [12, 26]. However, key degrees of freedom (DOF) such as grasping and tip rotation could not be captured under that framework, thus limiting the training value of the implemented AR tasks.

The aim of this paper is to propose the development of a simulation system for training and assessment of basic laparoscopic skills in an AR environment. In contrast to our previous works where tool tracking was based on image analysis techniques, in this paper we present a multisensory interface that can be easily attached to the handle of the endoscopic tool providing sufficient information about the tool kinematics. The proposed system allows the implementation of training scenarios for technical skills acquisition such as perception of depth of field, hand-eye coordination and bimanual operation. Based on this system, the trainee is able to interact with various virtual elements introduced into the box-trainer, using the actual laparoscopic instrumentation (camera and tools). Ultimately, our goal is to bridge the gap between box-trainers and VR simulators, and demonstrate that an AR-based training system could utilize the important assets of both training modalities: the increased sense of visual realism and force-feedback provided by the endoscopic tools, combined with the flexibility of VR in the development of training scenarios and the opportunity for automated performance assessment based on real-time data collection.

Methods

Hardware setup

The main components of the system include: a standard PC with a monitor (Intel® Core™ 2 Duo 3.1 GHz), a fire-wire camera with appropriate wide-angle lenses (PtGrey Flea®2), a box-trainer, a pair of laparoscopic tools (Fig. 1), and three different types of sensors attached to the laparoscopic tool. The sensors (Fig. 2) are used to provide eight DOF information regarding the tool kinematics: 3D pose of the tool (6 DOF), shaft rotation (1 DOF), and opening angle of the tooltip (1 DOF). Moreover, a fiducial pattern marker is placed on the bottom surface of the box trainer to help us define the global coordinate system of the simulation environment.

To obtain the pose of the tool, trakStar™ (Ascension Tech Corp., Burlington, VT) EM position-orientation sensors are employed. The EM transmitter is placed at a fixed position within the box-trainer (see Fig. 1), whereas the sensors are attached to the tool handles as shown in Fig. 2. The pose of the receivers with respect to the tool, as well as the pose of the transmitter with respect to the global reference frame, are obtained through a calibration process.
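The calibration described above yields two fixed transforms, which can be chained with the live sensor reading to express the tool pose in the global (marker) frame. The following is a minimal sketch of that chaining, assuming 4x4 homogeneous matrices; the frame names, helper functions and numeric values are hypothetical illustrations and are not taken from the original system.

```python
# Hypothetical frame names: G = global (marker) frame, T = EM transmitter,
# S = EM sensor on the handle, P = tool frame (e.g. the tooltip).

def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(x, y, z):
    """Homogeneous transform for a pure translation (rotation omitted for brevity)."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def tool_pose_in_global(g_from_t, t_from_s, s_from_p):
    """Chain the transforms G<-T (calibration), T<-S (tracking), S<-P (calibration)."""
    return matmul4(matmul4(g_from_t, t_from_s), s_from_p)

# Illustrative values only (millimetres), not measured data.
g_from_t = translation(100.0, 0.0, 50.0)   # transmitter pose from calibration
t_from_s = translation(-20.0, 30.0, 10.0)  # live EM sensor reading
s_from_p = translation(0.0, 0.0, 300.0)    # sensor-to-tool offset from calibration

pose = tool_pose_in_global(g_from_t, t_from_s, s_from_p)
tip_position = [pose[0][3], pose[1][3], pose[2][3]]
```

In practice each transform would also carry a rotation; the same multiplication order applies.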

A custom-made rotary encoder controlled via an Arduino microcontroller board is employed to measure the rotation of the shaft. In particular, the encoder consists of a magnetic rotor firmly attached to the shaft, and a plastic stator attached to the handle as shown in Fig. 2. Inside the stator, two Hall effect sensors (HFs), positioned with an approximate 90° angle separation, detect changes in the sinusoidal waveform that the magnetic rotor generates during rotation. The voltage output of the HFs is digitized using the microcontroller’s analog-to-digital converter (ADC). The shaft rotation angle is calculated with the CORDIC algorithm [30]. This setup allows us to obtain angular measurements for the full 360° range of the shaft revolution. The microcontroller is equipped with a 10-bit ADC, corresponding to an angle resolution of 0.35°.
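Because the two Hall effect sensors are roughly 90° apart, their outputs can be treated as the sine and cosine of the shaft angle, and CORDIC in vectoring mode recovers that angle using only shift-and-add style rotations. The sketch below illustrates the idea in floating point; it assumes both signals are centred on an ADC midpoint of 512 with equal amplitude, and the function names are hypothetical. A microcontroller implementation would typically use integer shifts and a lookup table instead. The quoted resolution follows from 360°/2^10 ≈ 0.35°.

```python
import math

# Elementary rotation angles atan(2^-i) in degrees, as used by CORDIC.
CORDIC_ANGLES = [math.degrees(math.atan(2.0 ** -i)) for i in range(16)]

def cordic_angle_deg(x, y):
    """Return the angle of vector (x, y) in [0, 360) using CORDIC vectoring mode."""
    if x == 0 and y == 0:
        raise ValueError("angle undefined for the zero vector")
    angle = 0.0
    # Pre-rotate by 180 degrees so the vector lies in the right half-plane,
    # where the iteration is guaranteed to converge.
    if x < 0:
        x, y = -x, -y
        angle = 180.0
    for i, step in enumerate(CORDIC_ANGLES):
        factor = 2.0 ** -i
        if y > 0:
            # Rotate the vector clockwise and add the step to the result.
            x, y = x + y * factor, y - x * factor
            angle += step
        else:
            # Rotate the vector counter-clockwise and subtract the step.
            x, y = x - y * factor, y + x * factor
            angle -= step
    return angle % 360.0

def shaft_angle_from_adc(adc_sin, adc_cos, midpoint=512):
    """Convert two 10-bit quadrature readings to a shaft angle in degrees.

    Assumes (hypothetically) that both sensors are centred on `midpoint`
    and have equal amplitude.
    """
    return cordic_angle_deg(adc_cos - midpoint, adc_sin - midpoint)
```

For example, `shaft_angle_from_adc(812, 512)` corresponds to a vector pointing along the sine axis and evaluates to approximately 90°.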

The opening angle of the tooltip is acquired via a specially designed IR proximity sensor attached to the trigger of the tool as shown in Fig. 2. The sensor consists of an IR LED emitter–receiver pair. The receiver measures the amount of emitted IR light reflected by the handle of the instrument. The voltage output of the IR receiver is inversely proportional to the distance between the trigger and the handle. In order to translate distance information into an angle value, a pre-calibration process is required. This process provides the maximum and minimum amount of IR reflectance corresponding to the maximum and minimum opening angle, respectively. Additionally, the maximum opening angle of the tooltip has to be known. For the laparoscopic tools employed, the tooltip angle ranges from 0° to 45°. The output of the IR proximity sensor is fed to the microcontroller ADC, and the transformation of the measured reflectance into angle units is provided by:

where \( \varphi_{\text{curr}} \) is the calculated opening angle, \( R_{\text{curr}} \) is the reflectance measurement, \( \varphi_{\max} \) is the maximum opening angle of the tooltip, and \( R_{\max} \) and \( R_{\min} \) are the maximum and minimum reflectance values, respectively, obtained from the pre-calibration process.
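The equation itself does not appear in this copy of the text. One mapping consistent with the definitions above is a linear interpolation between the two calibration points, \( \varphi_{\text{curr}} = \varphi_{\max} (R_{\text{curr}} - R_{\min}) / (R_{\max} - R_{\min}) \); this form is an assumption, not a confirmed transcription of the original formula. A minimal sketch under that assumption, with a hypothetical function name and an added clamp for noisy readings:

```python
def tooltip_opening_angle(r_curr, r_min, r_max, phi_max=45.0):
    """Map a reflectance reading linearly onto [0, phi_max] degrees.

    Assumes a linear relation between reflectance and opening angle;
    phi_max defaults to the 45 degree range quoted for the tools used.
    """
    if r_max == r_min:
        raise ValueError("calibration values must differ")
    fraction = (r_curr - r_min) / (r_max - r_min)
    # Clamp readings that fall slightly outside the calibrated range.
    fraction = min(1.0, max(0.0, fraction))
    return phi_max * fraction
```

With illustrative calibration values of 200 and 1000 ADC counts, a reading of 600 maps to 22.5°.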

Simulation engine

The software engine for the VR-based laparoscopic tasks was developed using Ogre3D as a framework for managing both the creation of the graphical user interface and the rendering of the mixed reality scene for the tasks. The ARToolkitPro library was used to calculate the spatial relationship between the camera and the box-trainer reference frame in terms of rotation and translation, by tracking the pose of the pattern marker described earlier. Simulation of the physical behavior of the virtual objects (such as collision detection, response between tools and virtual objects, and soft body deformations) was implemented with the Bullet real-time physics engine. In order to meet the specific needs of each task and achieve realistic behavior of the virtual objects, several modifications and supplementary algorithms were applied to the Bullet source code. Finally, the 3D models of the virtual objects employed in the simulation tasks were created in Blender3D.

Task description

Based on the aforementioned simulation engine, three training tasks were developed, targeting different technical skills in laparoscopic surgery (Fig. 3).

Task 1: instrument navigation (IN)

A total of eight rounded white buttons, each one enclosed in a black cylinder, are introduced to the center of the box-trainer (Fig. 3A). The task requires the user to hit the buttons sequentially, each one when it is highlighted in green. The order in which the buttons are highlighted is random and also varies among training sessions. The user is given a time limit of 8 s for each button. Two types of error were recorded during this task: the number of missed targets due to time expiration, and the number of tooltip collisions with either the base of the box-trainer or the black cylinders.
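The session logic of this task can be illustrated with a short sketch: a random permutation of the eight buttons, and a count of targets missed because the 8 s limit expired. The function names and the sample timings are hypothetical; the source does not describe how the task logic was implemented.

```python
import random

BUTTON_COUNT = 8      # number of buttons, from the task description
TIME_LIMIT_S = 8.0    # per-button time limit, from the task description

def button_order(rng):
    """Return a random permutation of the button indices for one session."""
    order = list(range(BUTTON_COUNT))
    rng.shuffle(order)
    return order

def count_missed(hit_times):
    """Count targets missed because the time limit expired.

    `hit_times` holds the seconds taken per button, or None if never hit.
    """
    return sum(1 for t in hit_times if t is None or t > TIME_LIMIT_S)

order = button_order(random.Random())
# Illustrative timings only, not recorded data.
missed = count_missed([2.1, 7.9, None, 8.5, 3.0, 4.2, 1.8, 6.6])
```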

Task 2: peg transfer (PT)

This task is based on a virtual peg-board consisting of four cylindrical targets and an equal number of torus-shaped pegs introduced sequentially into the scene (Fig. 3B). Each target has a distinctive color. The trainee has to lift and transfer each peg to the target with the same color. When a peg has been transferred, another peg of a different color is introduced. Two types of error were recorded during this task: unsuccessful transfer attempts and peg drops. Unsuccessful attempts occur when a peg is dropped away from the center of the box-trainer or when it is transferred to a target with a different color, while peg drops occur each time a peg is dropped accidentally during transfer.

Task 3: clipping (CL)

A virtual vein is introduced into the scene as shown in Fig. 3C. The center of the vein is highlighted in green and two different locations on either side of its center are highlighted in red. The width of each highlighted region is 5 mm. The goal is to apply a clip at the center of each of the red regions using a virtual clip applicator. Once both clips are applied, the user has to use virtual scissors to cut at the center of the green region. Two different metrics were recorded during this task: the distance error during clipping/cutting with respect to the center of the corresponding region, and the number of unsuccessful clipping/cutting attempts, which are recorded when the user clips/cuts outside the highlighted area.
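The two metrics of this task can be sketched as follows, assuming positions are measured in millimetres along the vein axis (a one-dimensional simplification introduced here for illustration). The function name is hypothetical; only the 5 mm region width comes from the task description.

```python
REGION_WIDTH_MM = 5.0  # width of each highlighted region, from the task description

def score_attempt(position_mm, region_center_mm):
    """Return (distance_error_mm, successful) for one clip or cut.

    An attempt counts as successful when it lands inside the highlighted
    region, i.e. within half the region width of its center.
    """
    error = abs(position_mm - region_center_mm)
    return error, error <= REGION_WIDTH_MM / 2.0
```

For instance, a clip placed 1 mm from the region center is successful with a distance error of 1 mm, while one placed 4 mm away falls outside the 5 mm region and counts as an unsuccessful attempt.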

Study design and statistical analysis

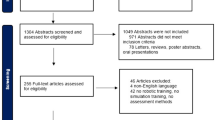

We collected data from subjects with two different levels of expertise: ten experienced surgeons (experts), and ten individuals with no experience in laparoscopic surgery (novices). Each participant performed two trials of each task. Prior to performance, each subject performed a trial session of each task to enhance familiarization.

The collected data regarding the performance of the two groups were statistically analyzed using the MATLAB® Statistics toolbox (MathWorks, Natick, MA, USA). Between-group comparison of performance metrics was undertaken with the Mann–Whitney U test (5 % level of significance).
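For readers who want to reproduce this kind of between-group comparison outside MATLAB, the sketch below computes the Mann–Whitney U statistic with a two-sided p value from the normal approximation (average ranks for ties, tie-corrected variance, no continuity correction). This is an illustrative implementation, not the toolbox routine used in the study, which may apply an exact method for small samples; the sample values are hypothetical and are not the study data.

```python
import math

def mann_whitney_u(sample_a, sample_b):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Returns (U, p), where U is the smaller of the two U statistics.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    pooled = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    rank_sum_a = 0.0
    tie_term = 0.0
    i = 0
    while i < len(pooled):
        # Find the run of tied values starting at index i.
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0          # mean of ranks i+1 .. j
        t = j - i
        tie_term += t ** 3 - t
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    u_a = rank_sum_a - n_a * (n_a + 1) / 2.0
    u = min(u_a, n_a * n_b - u_a)
    n = n_a + n_b
    variance = n_a * n_b / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return u, 1.0
    z = (u - n_a * n_b / 2.0) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2.0))    # two-sided tail probability
    return u, p

# Hypothetical completion times in seconds, for illustration only.
experts = [41, 38, 45, 50, 36, 43, 39, 47, 42, 40]
novices = [95, 120, 88, 130, 101, 76, 110, 99, 140, 92]
u_stat, p_value = mann_whitney_u(experts, novices)
```

With two fully separated groups of ten, as in this made-up example, U is 0 and the approximate p value falls well below 0.01.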

Questionnaire

To further investigate the training value of the simulator, the subjects from both experience groups were asked to complete a questionnaire after the completion of the study protocol. Using a 5-point Likert scale scoring system, the provided choices were “none”, “low”, “medium”, “high” and “very high”. Each of the three training tasks was assessed based on the following criteria:

1. How do you rate the realism of the graphical representation of the VR objects?

2. How do you rate the realism of the interaction between the instruments and the VR objects?

3. How do you rate the difficulty of the task?

4. How important was the lack of force feedback during tool-object interaction?

5. How restrictive in performing a task was the attachment of sensors on the laparoscopic tool?

Results

To evaluate the construct validity of the proposed system, a statistical comparison of the two experience groups was performed with regard to the three tasks described previously. The performance metrics included the two types of errors for each task, the task completion time, and the total pathlength of the laparoscopic tools. Figures 4, 5 and 6 illustrate bar charts of the four performance metrics for the three training tasks, respectively. It is clear that experts outperform novices in all tasks and metrics. Table 1 lists the median values of each group and the p values obtained from the between-group comparison test. In almost all performance metrics, the p values denote a highly significant performance difference between the groups (p < 0.01). The only metric that demonstrates a slightly reduced difference between the groups is error 1 for PT, although the measured p value is still significant (p < 0.05). From the same figures, it can also be noticed that the interquartile range of the experts is clearly smaller than that of the novices, indicating robust performance. This is especially noticeable for time and pathlength, where the results for the experts group demonstrate a very small interquartile range across all tasks. Additionally, for the PT task one can notice that error 1 for experts is 0 %, which indicates that none of them missed a peg across the attempted trials. Similarly, for the CL task the error 2 of the experts group is 0 %, indicating that none of them missed a target during the trials. It is also important to notice that for the novices group the completion time and instrument pathlength are two to three times higher than those of the experts group for all training tasks, indicating the potential of these metrics to capture the difference in experience between the two groups. The actual numerical results for this comparison are provided in Table 1.
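The text does not spell out how the pathlength metric was computed. A common definition, assumed here, is the sum of Euclidean distances between consecutive sampled tooltip positions. A minimal sketch with a hypothetical function name:

```python
import math

def path_length(points):
    """Sum of Euclidean distances between consecutive 3D positions."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))
```

For example, the three points (0, 0, 0), (3, 4, 0) and (3, 4, 12) give a pathlength of 5 + 12 = 17 in the units of the input. Sensor noise inflates this sum, so tracked positions are often smoothed before the metric is computed.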

Figure 7 illustrates a plot of the trajectory of the instrument controlled by the dominant hand of the subject that is closest to the median of the total pathlength. It is clear that the expert’s trajectory is smoother and more confined compared to that of the novice. Especially for the CL task, the expert demonstrates a fine pattern of movements, whereas the novice performs multiple retractions of the tool to locate the targets. Moreover, the tool trajectory of the novice is less targeted compared to that of the expert subject, and it is also accompanied by a significant amount of jitter.

Table 2 illustrates the subjects’ ratings for the face validity of the proposed training system. With regard to the graphical representation and the physics-based behavior of the AR tasks, the subjects from both groups agreed that the attributed realism was more than sufficient (high–very high) to provide the expected training qualities. The subjects also found the difficulty of the IN task to be lower compared to PT and CL. For these two tasks, the novices group encountered greater difficulty in performing them compared to experts. Regarding the sense of force feedback, both groups seem to agree that its absence does not play a significant role for the tasks that do not involve soft-tissue deformations (IN and PT). For the CL task however, force feedback seems to be important, based on the subjects’ ratings. The subjects also concluded that the sensors attached to the laparoscopic instruments do not seem to restrict the maneuvers performed by the user during task performance.

Discussion

In this paper we describe the development of an AR laparoscopic simulator that focuses on the assessment of basic surgical skills. In contrast to the existing AR-based training platforms (ProMIS [28]), our system is a genuine AR-based training system that allows the user to interact in real-time with rigid and deformable VR models. An important advantage of the proposed system is the increased sense of visual realism, emerging from the realistic mixture of real world and VR models that AR technology provides. The improved visual feedback provides better understanding of the position of the VR elements, which in turn gives the trainee enhanced perception of depth compared to purely virtual environments. In addition to the visual realism, the proposed system allows trainees to gain better familiarization with the actual operating conditions, since actual laparoscopic instrumentation is employed to perform the tasks. Another advantage of the proposed simulator is that its cost is significantly lower than that of a commercial VR laparoscopic simulator, since it employs custom-made low-cost sensors and open-source graphics libraries. Hence the proposed system appears to be a low-cost alternative to commercial VR simulators for distinguishing users with different experience in laparoscopic surgery.

A potential drawback of the proposed simulator is the lack of force feedback during interaction. Although there are a few options for commercially available force feedback devices, it would be problematic to integrate them into the proposed AR setup since they are bulky and would therefore obscure the visual field of view if they were placed inside the box trainer. To overcome this limitation, a device that would provide force feedback without affecting the camera field of view could be employed, but to the best of our knowledge a device with such characteristics is not currently available. Nevertheless, the importance of force feedback in the acquisition of basic surgical skills is subject to controversy [26]. Studies indicate that for basic skills, force feedback does not play a critical role in the efficiency of a training platform [31, 32]. In addition, some studies claim that an inaccurate implementation of force feedback results in poor quality of simulation that could lead to adverse effects and bad habits [33]. However, there are studies reporting that even a simulator without force feedback can provide effective transfer of training [34]. To assess its significance in the proposed setup, we asked the participants to rate the impact of the lack of force feedback for each task practiced. Based on the replies received, it was concluded that although force feedback would be an important asset, it is not crucial for training tasks that do not involve interaction with soft tissues. This may be due to the fact that interaction with soft tissue requires gentle hand movements, and hence force feedback would provide better realization of the applied forces, helping the surgeon not to damage the tissue.

Besides the importance of force feedback, the questionnaire statements aimed to rate other key aspects of our simulator such as its realism, the difficulty of the training tasks and the potential motion-restrictive effects that the attachment of sensors to the tool may produce. First, we asked the participants to evaluate the visual representation and physics-based behavior of the virtual models. The two experience groups seemed to agree that the proposed simulator provides, overall, more than a sufficient sense of realism. Additionally, novices faced greater difficulties in achieving the goals of the proposed tasks compared to the experts group. Both experience groups also agreed that the attachment of the sensory equipment to the laparoscopic tools did not reduce their freedom to perform the required hand maneuvers, and consequently did not impede task performance. Overall, the questionnaire replies indicate that the AR simulator was well accepted both by novices and experts.

The training tasks focus on basic surgical skills such as perception of depth of field and hand-eye coordination. These tasks were designed to be similar to those offered by commercial VR simulators and box trainers. Although one could certainly develop more complex tasks, our main purpose here was to provide a proof of concept about the educational potential of these tasks in an AR setting. Similarly, the observed metrics are to some extent similar to the metrics employed for performance evaluation in the current VR simulators.

The between-group comparisons of the two experience groups show that the proposed tasks exhibit construct validity. For all metrics, and especially for time and pathlength, our results show significant performance differences between experts and novices. These results can be interpreted as indicative of the simulator’s potential to discriminate between groups with different levels of surgical experience.

Since AR and VR have in essence the same capabilities, the presented system allows the same flexibility in task prototyping that a VR platform would offer. Consequently, besides the three training tasks demonstrated in this paper, our future work includes the implementation of additional scenarios that will allow further investigation of the simulator’s training efficiency as well as the transferability of the skills acquired with the proposed system to the operating environment. In the same context, we aim to conduct a study comparing the training value of our simulator with that of a commercial VR simulator. Finally, to further enhance the robustness of our simulator, we aim to improve the stability of the sensors employed, and also improve their design in a modular setup with portable characteristics.

References

Kotsis SV, Chung KC (2013) Application of the “see one, do one, teach one” concept in surgical training. Plast Reconstr Surg 131(5):1194–1201. doi:10.1097/PRS.0b013e318287a0b3

Rodriguez-Paz JM, Kennedy M, Salas E, Wu AW, Sexton JB, Hunt EA, Pronovost PJ (2009) Beyond “see one, do one, teach one”: toward a different training paradigm. Postgrad Med J 85(1003):244–249. doi:10.1136/qshc.2007.023903

McGreevy JM (2005) The aviation paradigm and surgical education. J Am Coll Surg 201(1):110–117. doi:10.1016/j.jamcollsurg.2005.02.024

Satava RM (1993) Virtual reality surgical simulator. Surg Endosc 7(3):203–205

Willaert WI, Aggarwal R, Van Herzeele I, Cheshire NJ, Vermassen FE (2012) Recent advancements in medical simulation: patient-specific virtual reality simulation. World J Surg 36(7):1703–1712. doi:10.1007/s00268-012-1489-0

Okuda Y, Bryson EO, DeMaria S Jr, Jacobson L, Quinones J, Shen B, Levine AI (2009) The utility of simulation in medical education: what is the evidence? Mt Sinai J Med 76(4):330–343. doi:10.1002/msj.20127

Munz Y, Almoudaris AM, Moorthy K, Dosis A, Liddle AD, Darzi AW (2007) Curriculum-based solo virtual reality training for laparoscopic intracorporeal knot tying: objective assessment of the transfer of skill from virtual reality to reality. Am J Surg 193(6):774–783. doi:10.1016/j.amjsurg.2007.01.022

Hanna L (2010) Simulated surgery: the virtual reality of surgical training. Surgery (Oxford) 28(9):463–468

Mohammadi Y, Lerner MA, Sethi AS, Sundaram CP (2010) Comparison of laparoscopy training using the box trainer versus the virtual trainer. J Soc Laparoendosc Surg 14(2):205–212. doi:10.4293/108680810X12785289144115

De Paolis LT (2012) Serious game for laparoscopic suturing training. In: CISIS’12 proceedings of the 2012 sixth international conference on complex, intelligent and software intensive systems, 4–6 July 2012. pp 481–485. doi:10.1109/cisis.2012.175

Gor M, McCloy R, Stone R, Smith A (2003) Virtual reality laparoscopic simulator for assessment in gynaecology. BJOG 110(2):181–187

Lahanas V, Loukas C, Nikiteas N, Dimitroulis D, Georgiou E (2011) Psychomotor skills assessment in laparoscopic surgery using augmented reality scenarios. In: The 17th international conference on digital signal processing (DSP), 2011, 6–8 July 2011, pp 1–6. doi:10.1109/icdsp.2011.6004893

Palter VN, Grantcharov TP (2010) Simulation in surgical education. Can Med Assoc J 182(11):1191–1196. doi:10.1503/cmaj.091743

Lewandowski WA (2006) return on investment (ROI) model to measure and evaluate medical simulation using a systematic results-based approach. In: Medicine meets virtual reality, pp 24–27

Nolle S, Klinker G (2006) Augmented reality as a comparison tool in automotive industry. In: IEEE/ACM international symposium on mixed and augmented reality, 2006 (ISMAR 2006), 22–25 Oct 2006, pp 249–250. doi:10.1109/ismar.2006.297829

Vlahakis V, Karigiannis J, Tsotros M, Gounaris M, Almeida L, Stricker D, Gleue T, Christou IT, Carlucci R, Ioannidis N (2001) Archeoguide: first results of an augmented reality, mobile computing system in cultural heritage sites. Paper presented at the proceedings of the 2001 conference on virtual reality, archeology, and cultural heritage, Glyfada, Greece

Haritos T, Macchiarella ND (2005) A mobile application of augmented reality for aerospace maintenance training. In: Proceedings of the 24th digital avionics systems conference, 2005 (DASC 2005), 30 Oct–3 Nov 2005, pp vol 1,5.B.3–5.1-9 Vol. 1. doi:10.1109/dasc.2005.1563376

van Krevelen DWF, Poelman R (2010) A survey of augmented reality technologies, applications and limitations. Int J Virtual Real 9(2):1–20

Su LM, Vagvolgyi BP, Agarwal R, Reiley CE, Taylor RH, Hager GD (2009) Augmented reality during robot-assisted laparoscopic partial nephrectomy: toward real-time 3D-CT to stereoscopic video registration. Urology 73(4):896–900. doi:10.1016/j.urology.2008.11.040

Azuma RT (1997) A survey of augmented reality. Presence: Teleoper Virtual Environ 6(4):355–385

Nijmeh AD, Goodger NM, Hawkes D, Edwards PJ, McGurk M (2005) Image-guided navigation in oral and maxillofacial surgery. Br J Oral Maxillofac Surg 43(4):294–302. doi:10.1016/j.bjoms.2004.11.018

Fuchs H, Livingston MA, Raskar R, Colucci Dn, Keller K, State A, Crawford JR, Rademacher P, Drake SH, Meyer AA (1998) Augmented reality visualization for laparoscopic surgery. Paper presented at the proceedings of the first international conference on medical image computing and computer-assisted intervention

Volonte F, Pugin F, Bucher P, Sugimoto M, Ratib O, Morel P (2011) Augmented reality and image overlay navigation with OsiriX in laparoscopic and robotic surgery: not only a matter of fashion. J Hepato Biliary Pancreat Sci 18(4):506–509. doi:10.1007/s00534-011-0385-6

Pagador JB, Sanchez LF, Sanchez JA, Bustos P, Moreno J, Sanchez-Margallo FM (2011) Augmented reality haptic (ARH): an approach of electromagnetic tracking in minimally invasive surgery. Int J Comput Assist Radiol Surg 6(2):257–263. doi:10.1007/s11548-010-0501-0

Weiss CR, Marker DR, Fischer GS, Fichtinger G, Machado AJ, Carrino JA (2011) Augmented reality visualization using image overlay for MR-guided interventions: system description, feasibility, and initial evaluation in a spine phantom. Am J Roentgenol 196(3):W305–W307. doi:10.2214/AJR.10.5038

Loukas C, Lahanas V, Georgiou E (2013) An integrated approach to endoscopic instrument tracking for augmented reality applications in surgical simulation training. Int J Med Robot Comput Surg 9(4):e34–e51

Botden SM, Jakimowicz JJ (2009) What is going on in augmented reality simulation in laparoscopic surgery? Surg Endosc 23(8):1693–1700. doi:10.1007/s00464-008-0144-1

Van Sickle KR, McClusky DA 3rd, Gallagher AG, Smith CD (2005) Construct validation of the ProMIS simulator using a novel laparoscopic suturing task. Surg Endosc 19(9):1227–1231. doi:10.1007/s00464-004-8274-6

Oostema JA, Abdel MP, Gould JC (2008) Time-efficient laparoscopic skills assessment using an augmented-reality simulator. Surg Endosc 22(12):2621–2624. doi:10.1007/s00464-008-9844-9

Lakshmi B, Dhar AS (2010) CORDIC architectures: a survey. VLSI design 2010. doi:10.1155/2010/794891

Maithel S, Sierra R, Korndorffer J, Neumann P, Dawson S, Callery M, Jones D, Scott D (2006) Construct and face validity of MIST-VR, Endotower, and CELTS. Surg Endosc 20(1):104–112

Woodrum DT, Andreatta PB, Yellamanchilli RK, Feryus L, Gauger PG, Minter RM (2006) Construct validity of the LapSim laparoscopic surgical simulator. Am J Surg 191(1):28–32

Panait L, Akkary E, Bell RL, Roberts KE, Dudrick SJ, Duffy AJ (2009) The role of haptic feedback in laparoscopic simulation training. J Surg Res 156(2):312–316

Seymour NE, Gallagher AG, Roman SA, O’Brien MK, Bansal VK, Andersen DK, Satava RM (2002) Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg 236(4):458

Disclosures

Mr. Vasileios Lahanas, Dr. Constantinos Loukas, Mr. Nikolaos Smailis and Dr. Evangelos Georgiou have no conflicts of interest or financial ties to disclose.

Cite this article

Lahanas, V., Loukas, C., Smailis, N. et al. A novel augmented reality simulator for skills assessment in minimal invasive surgery. Surg Endosc 29, 2224–2234 (2015). https://doi.org/10.1007/s00464-014-3930-y

DOI: https://doi.org/10.1007/s00464-014-3930-y