Abstract

Background

The design of flexible endoscopes has not changed significantly in the past 50 years. A trend is observed towards a wider application of flexible endoscopes, with an increasing role in complex intraluminal therapeutic procedures. The nonintuitive and non-ergonomic steering mechanism now forms a barrier to the extension of flexible endoscope applications. Automating the navigation of endoscopes could be a solution to this problem. This paper summarizes the current state of the art in image-based navigation algorithms. The objectives are to find the most promising navigation system(s) to date and to indicate fields for further research.

Methods

A systematic literature search was performed using three general search terms in two medical–technological literature databases. Papers were selected according to the inclusion criteria. A total of 135 papers were analyzed, of which 26 were ultimately included.

Results

Navigation is often based on visual information, which means steering the endoscope using the images that the endoscope itself produces. Two main techniques are described: lumen centralization and visual odometry. Although the research results are promising, no successful, commercially available automated flexible endoscopy system exists to date.

Conclusions

Automated systems that employ conventional flexible endoscopes show the most promising prospects in terms of cost and applicability. To produce such a system, the research focus should lie on finding low-cost mechatronics and technologically robust steering algorithms. Additional functionality and increased efficiency can be obtained through software development. The first priority is to find real-time, robust steering algorithms. These algorithms need to handle bubbles, motion blur, and other image artifacts without disrupting the steering process.


Flexible endoscopes are used in a variety of clinical applications, for both diagnosis and therapy. Apart from miniaturization of the cameras, flexible endoscope design has changed little over the past 50 years [1]. Flexible endoscopes come in various lengths and thicknesses, making them suitable for examining almost any hollow, tube-like structure in the human body [2]. Examples include the bowel, stomach, bile ducts, lungs, and even the salivary glands [3] and brain [4]. The most commonly performed procedures are oesophagogastroduodenoscopy (gastroscopy) and colonoscopy [2]. Generally, a flexible endoscope consists of a long, flexible tube with a light source and a lens at the tip (Fig. 1). A lens, a CMOS or CCD chip, and a video processor together convert the image into an electrical signal. The chip is usually located directly at the tip.

The endoscope is inserted into the organ of choice, usually through a natural orifice. The steering mechanism is generic, but the complexity of the environment differs between organs, which places different requirements on the flexible endoscope. Many endoscopists consider endoscope steering mechanisms non-intuitive. Procedural challenges and physical complaints due to non-ergonomic design are common [5–7].

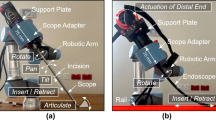

Flexible endoscopy is also the technology of choice for Natural Orifice Transluminal Endoscopic Surgery (NOTES), one of the latest trends in minimally invasive surgery. Example devices include the Anubis-scope, the R-scope, the Direct Drive system, and the EndoSamurai [8]. Steering and controlling the instruments are major design challenges for these devices. Additionally, screening programs for colorectal cancer require technological solutions to increase the efficiency of endoscopy procedures. Both the growing complexity of intraluminal interventions and the increasing demand for endoscopy procedures in general have moved scientists to research possibilities for automated steering of these endoscopes.

Robust navigation algorithms could help automate flexible endoscope steering. Steering can be subdivided into an actuation part (the mechanical steering action), performed by the physical endoscope, and a navigation part (sensing, steering control, and/or navigation), performed by the system processor or a connected computer. Sensing in flexible endoscope systems is often done using the images made by the endoscopy system itself. This technique is referred to as “visual navigation.” In this paper, the focus lies on developing a (visual) navigation tool for flexible endoscopes, which mainly concerns the navigation part. However, the actuators need to react to the navigation information, so the actuation part cannot be completely ignored.

To navigate in any environment, knowledge about the environment itself (Where can I go without problems?), about the current direction (Where am I heading now?), and about the target (Where do I want to end up?) is indispensable. Information about the environment might be obtained using either prior knowledge of the anatomy or current images [9]. Assumptions based on pre-procedural data (prior knowledge from CT or MRI scans, for instance) only hold during the procedure if the organ(s) remain stationary. This is often not the case in the highly deformable organs investigated with flexible endoscopes; bronchoscopy and ventriculoscopy are exceptions to this rule. Moreover, organ anatomy varies greatly among patients, which means that even without considering organ elasticity, it would be very hard to develop a predictive model of these organs. One of the most important requirements for an automated steering technique therefore is the ability to deal with an unpredictable and unexplored environment.

In this review, we describe solutions for the flexible endoscope steering problem based on visual navigation and indicate fields for further research. Two main techniques can be distinguished. The first is based on keeping a certain target in the center of the image. Usually this target is the center of the lumen, so we refer to this technique as lumen centralization. The second, visual odometry [10, 11], is an alternative technique for automated steering based on automatic detection of key points and their subsequent tracking in the next image. A more elaborate explanation is provided in the Results section.

Materials and methods

A systematic literature database search was performed in September 2012. Two databases known for their medical and technological content were selected: Scopus and Medline. Search terms were: flexible endoscopy AND navigation (11 results); automat* AND flexible endoscopy (10 results); and application AND flexible endoscope (114 results). The results were obtained from unrestricted searches over all literature for these terms in the article, title, or abstract. The abstract, introduction, and conclusion were read, unless exclusion criteria were already met within one of these sections. Papers were included if more than one of the following elements was present: full text in English, a flexible endoscopy procedure, an endoscopic platform using flexible endoscopes, an image-guided endoscope application, or the presentation or testing of an endoscope system that (partly) steers automatically. For papers that described a complete automated image-based endoscopic navigation system, the “cited by” function of Scopus was also used (13 results).

Results

Most papers (approximately 100) could be excluded immediately because they focused on the clinical application of flexible endoscopes, i.e., the feasibility or comparison of certain clinical techniques, and did not discuss the technological background of flexible endoscopes. Nineteen papers, most of them focusing on automating flexible endoscopy, were included using the inclusion criteria, and four additional papers were included to provide the necessary technological background. Included papers were categorized as either lumen centralization or visual odometry papers and were read for advantages and disadvantages of the described technique(s). The appendix provides more detail on the content of the included papers. The general theory of both approaches is explained first, before the research details of lumen centralization and visual odometry are discussed.

Concepts in visual navigation for automated endoscope steering

Most navigation systems for flexible endoscopy use endoscope images as sensory input. In endoscope navigation, a distinction can be made between the tip orientation and the tip (heading) direction (Fig. 2). The former refers to the bending of the tip and can be expressed as an angle (in radians or degrees) for the x- or the y-axis, with respect to the endoscope shaft. Tip orientation can only be detected externally, not from endoscopic images. The latter (tip direction) refers to the direction in which the tip is currently traveling, or where the tip will end up if no steering action is undertaken. This direction can be expressed in two or three dimensions, in image or Euclidean coordinates, and can be obtained from endoscopic images.
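The following minimal Python sketch makes the image-based tip direction concrete. It back-projects an image point (for example, an estimated heading point) to a unit direction vector, then expresses that vector as two angles relative to the optical axis. It assumes an ideal, undistorted pinhole camera with known intrinsic parameters `fx`, `fy`, `cx`, `cy`. Real endoscope optics are strongly wide-angle and would first need distortion correction. The function names are hypothetical and are not taken from the reviewed systems.

```python
import math


def pixel_to_direction(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a unit direction in the camera frame
    (x right, y down, z along the optical axis), assuming an undistorted
    pinhole camera with focal lengths fx, fy (pixels) and principal point cx, cy."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def direction_to_angles(d):
    """Return the horizontal and vertical angles (degrees) between the
    direction d and the optical axis."""
    dx, dy, dz = d
    return (math.degrees(math.atan2(dx, dz)), math.degrees(math.atan2(dy, dz)))


# A point one focal length to the right of the image center lies 45 degrees off-axis.
print(direction_to_angles(pixel_to_direction(820, 240, 500, 500, 320, 240)))
```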

In lumen centralization, the target direction for an endoscope is found from properties of the lumen shown in the images. The target, usually the center of the lumen, is the deepest area of the environment and usually appears as a darker area in the images. Depth and image intensity can be extracted automatically from images, which makes them suitable for automated target finding. Steering is based on keeping the target in the center of the image (Fig. 3), so that the endoscope travels towards the target as it is advanced. This technique is mainly used to determine and influence tip orientation.
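The centralization principle can be sketched as a proportional controller on the offset between the detected target and the image center. In this hypothetical Python sketch, the gain, the dead band, and the mapping of the normalized outputs to the bending actuators are all assumptions for illustration. The reviewed systems do not necessarily use this control law.

```python
def steering_command(target, image_size, gain=0.5, deadband=0.05):
    """Return normalized bending commands (pan, tilt) in [-1, 1] that move the
    target (x, y) towards the image center. Positive pan/tilt means the target
    lies right of / below the center (sign convention is an assumption)."""
    w, h = image_size
    # normalized offset of the target from the image center, in [-1, 1]
    ex = (target[0] - w / 2) / (w / 2)
    ey = (target[1] - h / 2) / (h / 2)

    def control(e):
        # ignore small offsets to avoid jitter, otherwise proportional and clipped
        if abs(e) < deadband:
            return 0.0
        return max(-1.0, min(1.0, gain * e))

    return control(ex), control(ey)


print(steering_command((640, 240), (640, 480)))  # target at right edge -> pan only
```

A proportional law is the simplest choice. Endoscope tips show hysteresis and cable slack, so a practical controller would likely need additional compensation.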

With visual odometry, motion in the images is detected. In flexible endoscopy, this motion is directly related to camera displacement, and therefore to endoscope displacement, because the camera is located at the tip. Automatically detected key points not only provide environmental information but can also be used to define a target, using information about their location, relative distance, or intensity properties. Thus, this technique may provide information about both tip orientation and tip direction, although the latter in a more indirect manner (see “Results” section).

Research in automating flexible endoscopy

Lumen centralization

As described in the introduction, the lumen center can be seen as a target for the flexible endoscope. It can be found by searching for the darkest region in an image (dark region segmentation) or for the deepest region (depth estimation).

An automated navigation and advisory system for endoscopes based on lumen centralization was described in 1996 [12]. Dark region segmentation was used to find the central lumen area of the colon, and visible contours of the bowel wall were used as an indication of tip orientation. Dedicated hardware was assembled to enable real-time endoscope steering. System reliability was demonstrated in anatomical (in vitro) models; no experiments using an in vivo model were described. In 1999, the researchers from [12] published additional techniques to support dark region-based lumen centralization [13]. Chettaoui et al. [14], Bricault et al. [15], Reilink et al. [16, 17], Zhiyun [18], and Zhen et al. [19] all used a variety of methods to find the darkest region in endoscopy images. Chettaoui et al. [14] even described a way to differentiate the lumen from diverticula. Such distinctions may become very important, especially for fully automated steering systems for colonoscopes and gastroscopes. Automated detection of the edges or contours that surround the central lumen area is another approach within the lumen centralization technique [20–22].
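The following sketch is a minimal illustration of dark region segmentation and is not the method of any specific cited paper. It thresholds a grayscale image relative to its intensity range, keeps the largest 4-connected dark region, and returns that region's centroid as the lumen estimate. The threshold ratio is an assumed parameter. Images are plain lists of rows so that the example has no dependencies.

```python
from collections import deque


def dark_region_centroid(img, ratio=0.2):
    """Return the centroid (x, y) of the largest connected dark region of a
    grayscale image given as a list of rows (hypothetical lumen detector)."""
    h, w = len(img), len(img[0])
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    # pixels darker than lo + ratio * (hi - lo) count as candidate lumen pixels
    thresh = lo + ratio * (hi - lo)
    dark = [[img[y][x] <= thresh for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    best = []
    for y in range(h):
        for x in range(w):
            if not dark[y][x] or seen[y][x]:
                continue
            # breadth-first flood fill of one 4-connected dark component
            component, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                component.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and dark[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) > len(best):
                best = component
    # the darkest pixel always passes the threshold, so best is never empty
    n = len(best)
    return (sum(px for _, px in best) / n, sum(py for py, _ in best) / n)
```

Keeping only the largest region is one simple way to ignore small dark spots such as shadows behind folds. It does not distinguish the lumen from diverticula, which is the problem addressed by Chettaoui et al. [14].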

Finding the lumen as the deepest part of the image requires information about the depth of the visible scene. “Depth estimation” is defined here as calculating the three-dimensional (3D) relief, or 3D reconstruction, of the environment from the monocular (single-lens) camera images produced by the endoscope. Several techniques were found in the literature to perform depth estimation on flexible endoscopy images. Because the light source is located at the tip, a nearby pixel generally appears brighter than a pixel that is far away. This allows depths to be estimated from a single image.
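The brightness-depth relation can be sketched under strong simplifying assumptions. Assume a point light source at the camera, uniform surface reflectance, and surfaces facing the camera. Intensity then falls off with the inverse square of distance, so relative depth is proportional to 1/sqrt(I). The helper below is hypothetical and yields depth only up to an unknown scale.

```python
import math


def relative_depth(img, eps=1e-6):
    """Relative depth map from a grayscale image (list of rows), assuming
    intensity ~ 1 / depth**2. The brightest pixel gets depth 1.0."""
    # invert the assumed inverse-square law; eps guards against zero intensity
    inv = [[1.0 / math.sqrt(max(v, eps)) for v in row] for row in img]
    nearest = min(min(row) for row in inv)
    return [[d / nearest for d in row] for row in inv]


print(relative_depth([[100, 25]]))  # a 4x darker pixel is about 2x farther away
```

This relation fails on specular highlights, mucus, and color changes, which is one reason depth from intensity alone is fragile.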

Another method of depth estimation uses structured light, an “active method” to obtain depth information from a scene [23, 24]. The idea is to project a known pattern onto a surface and to capture images of this pattern with a camera. Depth irregularities of the surface deform the pattern, and analysis of these deformations yields an accurate and robust depth estimate. This technique is applied in industrial endoscopes but was not found applied in clinical endoscopes [25]. Zhang et al. [25] describe the essentials and possibilities of the technology very clearly.
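For a calibrated projector-camera pair, depth follows from triangulation of the observed pattern shift. The sketch below assumes a rectified setup with parallel optical axes and a known baseline. It also assumes the correspondence problem is already solved, which is what coded patterns are designed to achieve [23]. Here `reference_x` is the image column at which the pattern feature would appear for a point at infinity. Names and units are illustrative assumptions.

```python
def depth_from_pattern_shift(observed_x, reference_x, focal_px, baseline_mm):
    """Depth (same unit as baseline_mm) of a pattern feature, using the
    rectified triangulation relation z = f * b / disparity."""
    # shift of the projected feature relative to its position at infinity
    disparity = observed_x - reference_x
    if disparity <= 0:
        raise ValueError("non-positive disparity: depth undefined for this setup")
    return focal_px * baseline_mm / disparity


print(depth_from_pattern_shift(125, 100, 500, 5))  # 25 px shift -> 100 mm
```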

“Shape from shading” is a final depth estimation technique used in flexible endoscopy [12, 15, 26, 27]. This technique is based on the reflective properties of the surface. In endoscopy, the light source and the observer (the lens) are in the same plane, which makes it possible to invert the reflectance equation. Inverting the equation provides information about the orientation of the surface, from which the 3D surface coordinates can subsequently be reconstructed [28].
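For reference, one common form of the reflectance equation is shown below. It assumes a Lambertian surface and a point light source located at the camera's center of projection. Here \(\mathbf{p}\) is a surface point in camera coordinates, \(\mathbf{n}(\mathbf{p})\) its unit normal (pointing towards the camera), and \(\rho\) combines surface albedo and source intensity. This is an illustrative model; the cited works may use different or more detailed reflectance models.

```latex
I(u, v) = \rho \, \frac{\mathbf{n}(\mathbf{p}) \cdot \hat{\mathbf{s}}(\mathbf{p})}{\lVert \mathbf{p} \rVert^{2}},
\qquad
\hat{\mathbf{s}}(\mathbf{p}) = -\frac{\mathbf{p}}{\lVert \mathbf{p} \rVert}
```

Shape from shading solves this relation for the unknown normals, and hence depths, at all pixels simultaneously, usually with additional smoothness constraints. This coupled problem helps explain why computation time is a concern.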

Disadvantages of lumen centralization

Although research has shown that lumen detection is feasible for colonoscopic navigation, steering towards the target (the centralization) still poses difficulties. None of the mentioned techniques has been successfully applied in vivo. The main assumption in all techniques is that by centralizing the lumen center, the endoscope will travel the right path through the organ. Artifacts, such as residual organ fluids or rinsing fluids, make image interpretation difficult. Moreover, the lumen center cannot always be obtained from an image. In addition, multiple forces (intra-abdominal pressure from the patient, insertion pressure from the endoscopist, and intrinsic force from the endoscope) influence the endoscope images by causing unpredictable motion. This motion in turn alters the baseline situation, which at times makes the main assumption false (Figs. 3 and 4). For an accurate result, the previous direction and movement need to be taken into account, as well as the environment and information about tip orientation. These data may help to estimate where the lumen center is located, even if it is not in the field of view. The endoscope can then be steered with this lumen center location “in mind.”
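Taking previous movement into account can be sketched with a simple alpha-beta filter on the lumen position in image coordinates. When the lumen is detected, the estimate is corrected. When it is not, the estimate is extrapolated with the last estimated image-plane velocity. This is a hypothetical illustration that assumes roughly constant apparent motion between frames. It ignores the tip orientation and endoscope kinematics that a real system would also need.

```python
class LumenTracker:
    """Alpha-beta filter on the lumen position (x, y) in image coordinates
    (hypothetical helper). The estimate may leave the image bounds, which is
    how the lumen is kept "in mind" when it is out of view."""

    def __init__(self, alpha=0.5, beta=0.1):
        self.alpha, self.beta = alpha, beta
        self.pos = None           # estimated (x, y)
        self.vel = (0.0, 0.0)     # estimated displacement per frame

    def update(self, measurement=None):
        """Feed one frame's detection (or None if not detected); return the estimate."""
        if self.pos is None:
            # initialize on the first detection
            if measurement is not None:
                self.pos = tuple(float(c) for c in measurement)
            return self.pos
        # predict with constant image-plane velocity
        pred = tuple(p + v for p, v in zip(self.pos, self.vel))
        if measurement is None:
            # lumen not detected: keep the extrapolated estimate
            self.pos = pred
            return pred
        # correct position and velocity with the prediction residual
        residual = tuple(m - p for m, p in zip(measurement, pred))
        self.pos = tuple(p + self.alpha * r for p, r in zip(pred, residual))
        self.vel = tuple(v + self.beta * r for v, r in zip(self.vel, residual))
        return self.pos
```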

Depth information about the environment would partly compensate for the lack of information in dark region segmentation. However, specular reflections, caused by the reflective properties of the mucosa (Fig. 5), have high pixel intensity. Intensity-based depth calculations will therefore place these reflections extremely close to the camera, while their real location lies on the organ wall. In shape from shading, specular reflections lead to apparently irregular surface properties. A solution to this problem is to apply preprocessing steps to the images, such as filtering [24, 29], but the exact tip orientation remains unclear.
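A simple form of such preprocessing is sketched below. Pixels at or above an assumed saturation threshold are treated as specular highlights. Each is replaced by the mean of the non-highlight pixels in a small window. The threshold and window radius are illustrative assumptions, and this is only one of many possible filters.

```python
def suppress_specular(img, threshold=240, radius=2):
    """Return (filtered image, highlight mask) for a grayscale image given as
    a list of rows. Highlight pixels are replaced by the local mean of
    non-highlight pixels; the input image is not modified."""
    h, w = len(img), len(img[0])
    # pixels at or above the threshold are assumed to be specular highlights
    mask = [[img[y][x] >= threshold for x in range(w)] for y in range(h)]
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            # mean of non-highlight pixels in a (2 * radius + 1)^2 window
            vals = [img[ny][nx]
                    for ny in range(max(0, y - radius), min(h, y + radius + 1))
                    for nx in range(max(0, x - radius), min(w, x + radius + 1))
                    if not mask[ny][nx]]
            if vals:
                out[y][x] = sum(vals) / len(vals)
    return out, mask
```

The returned mask can also be used to exclude highlight pixels from later depth or flow calculations, instead of trusting the filled-in values.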

Shape from shading theoretically provides information about the environment and possibly about tip orientation. Previous endoscope motion can be derived from this information, which makes it a seemingly ideal technique for visual endoscope navigation. However, the main problem in shape from shading is computation time [26]. Additionally, some surfaces, specifically mucosa-covered surfaces, reflect light unpredictably due to the presence of mucus and fluids [12]. Unpredictable reflection makes inversion of the reflectance equation unreliable and leads to inaccurate 3D surface coordinates.

Visual odometry

Visual odometry comprises the (automatic) detection of key points, or unique recognition points, and their tracking throughout an image sequence, with the goal of deriving position and orientation information. These key points, such as vascular junctions in the organ wall, are found automatically by the software. “Optical flow” is the technique that calculates the pixel displacement of each key point between two images; these displacements are then used to calculate endoscope movement. The key point displacements can be depicted as a field of vectors indicating the direction and length of each shift. Such a field is called an “optical flow field” (Fig. 6).

Optical flow field (with outliers). It is often assumed that large arrows indicate nearby objects. However, as shown, the deeper part of the lumen displays large arrows as well, while nearby parts display small arrows, so relying on this assumption reduces the robustness of the steering algorithm. One prominent vector, just below the center of the image, points in a deviating direction. This vector is an outlier, caused by specular reflection.
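Optical flow can be computed in many ways. The Lucas-Kanade method is one widely used example, chosen here for illustration and not necessarily the method used in the cited work. The sketch below implements a single-level Lucas-Kanade estimate for one patch. It assumes brightness constancy and a small, uniform displacement within the patch, and solves the resulting 2 × 2 least-squares system. The patch must lie at least `radius + 1` pixels away from the image border.

```python
def lucas_kanade_patch(img1, img2, cx, cy, radius=3):
    """Estimate the displacement (u, v) of the square patch centred at pixel
    (cx, cy) from frame img1 to frame img2 (grayscale, lists of rows).
    Single-level Lucas-Kanade: assumes brightness constancy and a small,
    uniform displacement inside the patch."""
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - radius, cy + radius + 1):
        for x in range(cx - radius, cx + radius + 1):
            # spatial gradients by central differences, temporal by frame difference
            ix = (img1[y][x + 1] - img1[y][x - 1]) / 2.0
            iy = (img1[y + 1][x] - img1[y - 1][x]) / 2.0
            it = img2[y][x] - img1[y][x]
            # accumulate the structure tensor and the mixed terms
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("patch lacks texture in two directions (aperture problem)")
    # solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt]
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v
```

Practical implementations usually work coarse-to-fine on an image pyramid to handle larger displacements. OpenCV's `calcOpticalFlowPyrLK` is one example.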

Optical flow can be calculated in different ways; in this paper, all optical flow methodologies are referred to as “optical flow” or “optical flow calculation.” In many applications, optical flow is used to find the point from which all displacement vectors in the optical flow field seem to emerge, the so-called Focus of Expansion (FOE). The FOE ideally corresponds to the current heading direction of the endoscope and can be obtained in any environment, as long as there are detectable displacements.
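A standard way to estimate the FOE from a sparse flow field is a least-squares intersection of lines. Each line passes through a key point and runs along that point's flow vector. The sketch below assumes pure forward translation without camera rotation, so that all flow vectors radiate from a single point. The helper name is hypothetical.

```python
import math


def estimate_foe(points, flows, min_len=1e-6):
    """Least-squares Focus of Expansion: the image point (x, y) minimizing the
    summed squared perpendicular distance to the lines through each key point
    along its flow vector. Vectors shorter than min_len are ignored."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (px, py), (fx, fy) in zip(points, flows):
        norm = math.hypot(fx, fy)
        if norm < min_len:
            continue
        # unit normal of the line through p along f; the line is n . q = n . p
        nx, ny = -fy / norm, fx / norm
        d = nx * px + ny * py
        a11 += nx * nx
        a12 += nx * ny
        a22 += ny * ny
        b1 += nx * d
        b2 += ny * d
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("flow vectors are (nearly) parallel; FOE undefined")
    # solve the 2 x 2 normal equations
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)
```

Camera rotation adds a flow component that does not radiate from the FOE. It would have to be compensated first, for example by using the tip orientation if it is known.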

Usually, detection and tracking of landmarks are combined in one application, and many different ways to do this were proposed between 1980 and 2011. Previous research on human colonoscopy images [29, 30] concluded that automated endoscope navigation is feasible. Optical flow algorithms can also be tested on simulated, computer-rendered images. Reilink et al. [16] roughly modeled the colon and used an optical flow algorithm to steer the tip of the endoscope towards the lumen center. They also implemented a steering algorithm and tested it on colon images from an endoscopy simulator (Accutouch, CAE Healthcare, Montreal, QC, Canada) [17]. Masson et al. [31] compare three different tracking algorithms in a simulated setting. Deguchi et al. [32] discuss a technique to calculate a 3D shape reconstruction from endoscopic images. This method, called the Factorization Method [33], is based on the assumption that the observed object remains constant while the camera motion causes a detectable optical flow pattern. Distant points produce a displacement pattern with small vectors, whereas nearby points produce large vectors.

Deguchi et al. [34] extended the visual odometry technique by comparing bronchoscopy images to pre-procedural CT images. The endoscopist is then able to see not only the inside of the lungs but also the surrounding anatomy and the exact location of the endoscope. Note that the bronchi form a relatively stable environment, which makes prior knowledge reliable. Their results seem promising and solve some of the problems encountered when using optical flow.

Disadvantages of visual odometry

In visual odometry, the same forces mentioned in the Disadvantages of Lumen Centralization still cause problems in image interpretation. However, combined knowledge of the traveled path and the environment may predict adequate progression of the endoscope, even if the images are not suitable for highly accurate analyses. This information can be obtained from reliable optical flow calculations, which means that the right optical flow algorithm needs to be applied. To our knowledge, no systematic comparison of optical flow algorithms on human endoscopic images has been done to date.

Optical flow algorithms are furthermore very sensitive to illumination changes [31], motion blur, and fluid artifacts, and computation time can be problematic for some algorithms. Specular reflections will have to be filtered out to reduce the effect of illumination changes. However, these reflections only lead to falsely tracked landmarks in their immediate neighborhood, which does not necessarily mean that the complete image is tracked falsely.
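The local nature of such outliers can be exploited. The sketch below applies a local median test, a generic robust filter chosen here for illustration. A vector is rejected if it deviates strongly from the median of its k nearest neighbors, relative to how much those neighbors themselves scatter. The parameters `k`, `max_dev`, and `eps` are assumptions and would need tuning on real endoscopic data. The function requires at least two vectors.

```python
import math
from statistics import median


def reject_flow_outliers(points, flows, k=4, max_dev=3.0, eps=0.1):
    """Return a list of booleans (True = keep) flagging flow vectors that
    disagree with the median flow of their k nearest neighbours."""
    keep = []
    for i, (p, f) in enumerate(zip(points, flows)):
        # indices of the k nearest other key points
        nbrs = sorted((j for j in range(len(points)) if j != i),
                      key=lambda j: math.dist(p, points[j]))[:k]
        # component-wise median flow of the neighbourhood
        mx = median(flows[j][0] for j in nbrs)
        my = median(flows[j][1] for j in nbrs)
        # robust scale: median deviation of the neighbours from that median
        spread = median(math.hypot(flows[j][0] - mx, flows[j][1] - my) for j in nbrs)
        dev = math.hypot(f[0] - mx, f[1] - my)
        keep.append(dev <= max_dev * (spread + eps))
    return keep
```

Because the test is local, a falsely tracked landmark near a reflection is removed without discarding the rest of the flow field.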

Optical flow has the advantage of providing orientation information by tracking endoscope progression, which theoretically makes a predictive algorithm possible. However, the scale of the detected pixel displacement is uncertain: with a single camera, a small movement close to the organ wall can produce the same displacement as a larger movement farther away. Unless velocity is measured, it needs to be estimated to provide 3D orientation and environmental information, which introduces large inaccuracies.
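The scale ambiguity can be made explicit with a pinhole projection. The sketch below computes the image displacement of a 3D point caused by a pure camera translation. Scaling both the scene and the translation by the same factor yields exactly the same flow. This is why the displacement scale cannot be recovered from the images alone. The function and its coordinate conventions are assumptions for illustration: the camera looks along +Z, with unit focal length by default.

```python
def translational_flow(point, translation, focal=1.0):
    """Image displacement (du, dv) of a static 3D point (X, Y, Z) in camera
    coordinates when the camera translates by (tx, ty, tz), no rotation."""
    X, Y, Z = point
    tx, ty, tz = translation
    # in the moved camera frame the point is shifted by -translation
    Xn, Yn, Zn = X - tx, Y - ty, Z - tz
    if Zn <= 0 or Z <= 0:
        raise ValueError("point must stay in front of the camera")
    return (focal * (Xn / Zn - X / Z), focal * (Yn / Zn - Y / Z))


near = translational_flow((1.0, 2.0, 10.0), (0.0, 0.0, 1.0))
scaled = translational_flow((2.0, 4.0, 20.0), (0.0, 0.0, 2.0))  # everything x2
print(near, scaled)  # identical flow: scale is not observable
```

The same function also shows the depth dependence noted for the Factorization Method. For the same lateral position and camera motion, a point twice as far away produces a much smaller flow vector.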

Discussion

The purpose of this paper is to describe the most promising approaches to flexible endoscope navigation from currently known research. Two fields of interest are identified: lumen centralization and visual odometry. Neither has yet led to a commercially available steering system, mainly because fundamental technological problems remain unsolved.

Both techniques mainly face robustness challenges, and computation time sometimes prevents a real-time solution. Other challenges exist as well. Predictive organ models are difficult to design because the organs are highly deformable. Furthermore, many techniques assume that organs are rigid tubes. Because most organs are in fact non-rigid tissues that collapse without internal counter-forces from air or fluids, this assumption reduces the robustness of image-based methods for estimating tip orientation.

In lumen centralization techniques, the lumen cannot be discerned in all images. Most lumen centralization techniques are very sensitive to illumination changes at the organ surfaces, more so than optical flow algorithms. Other problems include image distortion due to residual organ fluids or rinsing fluids. Additionally, the navigational models underlying the steering algorithms are flawed, because steering inside rigid tubes is not similar to steering inside organs.

As mentioned in the introduction, the actuation mechanism of flexible endoscopes needs to be adjusted to implement navigation algorithms. Such “adjustments” are mostly additional hardware devices, which will probably result in additional costs for hospitals. Accessory hardware to facilitate automated navigation is therefore considered a disadvantage. The upside of accessory hardware is that such systems hold potential for performing more complex procedures [8]. Adjusting the hardware of a system or building a completely new one may lead to another kind of automated flexible endoscopy system [35, 36]. However, the currently available systems are not ideal [8, 37]. Yeung and Gourlay [8] discuss all currently available flexible endoscopic “multitasking platforms,” i.e., platforms that can be employed simultaneously for diagnostics and therapeutics. Here, “available” means at least in an advanced prototype state. Multitasking platforms are specifically designed to perform highly complex intraluminal interventions or even complete NOTES procedures. These platforms tend to be technologically complex, and most require a completely new endoscope design, such as the Anubis endoscope (Karl Storz GmbH & Co. KG, Tuttlingen, Germany) and the EndoSamurai (Olympus Corp., Tokyo, Japan). Of the discussed platforms, only two use conventional flexible endoscopes, which are suggested to be an easier and cheaper option: the Direct Drive Endoscopic System (DDES, Boston Scientific, Natick, MA) and the Incisionless Operating Platform (IOP, USGI Inc., San Clemente, CA). Flexible endoscopy systems could theoretically even be extended to complete telemanipulation platforms, comparable to the Da Vinci surgical system (Intuitive Surgical, Inc., Sunnyvale, CA). In telemanipulation platforms, the mechatronics that move the instruments are controlled by a computer, which can be programmed to add functionality to the instruments, such as automated navigation.

Despite the challenges mentioned, automating endoscope steering has potential. It may make endoscope introduction easier and consequently increase procedure efficiency. It also holds potential to lower the costs of diagnostic and minor therapeutic intraluminal procedures and to increase patient safety. At the University of Twente, a telemanipulation system for flexible endoscopes is being developed [29, 38]. The primary goal is to develop a cockpit-like surgical platform for complex intraluminal interventions and to improve current diagnostics and therapies. The idea behind the design is to make a low-cost, complete system that is suitable for current clinical practice. For most hardware, the proof-of-principle level has been achieved. Additionally, software is being developed for intuitive insertion of the endoscope. The steering model is improved by assuming a completely unpredictable environment rather than a rigid tube. A target lock, automatic loop detection, and real-time positional feedback are among the planned functions of the system.

Therapeutic interventions could also benefit from automated steering. Mori et al. [39] aim to track the camera motion of a bronchoscope in real time by using pre-procedural data in the form of CT images. This information can then be employed as a roadmap for an endoscopic navigation system. Performing procedures inside the bronchi and through the bronchial wall becomes easier, because relevant lymph nodes and the point of interest can be localized exactly. Their method could possibly be extended to other organs, such as the colon.

Some advanced surgical procedures, such as peroral endoscopic myotomy (POEM), a peroral form of the Heller myotomy, are already performed with flexible endoscopes. Automated systems could help to expand this field further. Automated position control, improved visualization through image processing, and an ergonomic user interface instead of steering knobs are all possibilities of telemanipulated flexible endoscopy systems. If these functionalities are indeed realized, surgery through flexible endoscopes may become a superior method of minimally invasive surgery.

In conclusion, vision-based navigation for endoscope steering is widely investigated and likely to enter the clinic in the near future. The implementation of automated flexible endoscope steering possibly holds major advantages for physicians, patients, and the extension of the flexible endoscopy field in general. Research is currently focused on developing low-cost hardware solutions and technologically robust steering algorithms, in which real-time implementation of visual navigation techniques will play a major role. Clinical applications are theoretically numerous, and the first ones are expected within 5 years.

References

Waye JD, Rex DK, Williams CB (2009) Colonoscopy: principles and practice, 2nd edn. Blackwell Publishing Ltd., Chichester, pp 267–345

Schwab K, Singh S (2011) An introduction to flexible endoscopy. Surgery (Oxford) 29:80–84

Nahlieli O, Neder A, Baruchin AM (1994) Salivary gland endoscopy: a new technique for diagnosis and treatment of sialolithiasis. J Oral Maxillofac Surg 52:1240–1242

Fukushima T, Ishiijima B, Hirakawa K, Nakamura N, Sano K (1973) Ventriculofiberscope: a new technique for endoscopic diagnosis and operation. J Neurosurg 38:251–256

Pedrosa MC, Farraye FA, Shergill AK, Banerjee S, Desilets D, Diehl DL, Kaul V, Kwon RS, Mamula P, Rodriguez SA, Varadarajulu S, Song LMWK, Tierney WM (2010) Minimizing occupational hazards in endoscopy: personal protective equipment, radiation safety, and ergonomics. Gastrointest Endosc 72:227–235

Kuperij N, Reilink R, Schwartz MP, Stramigioli S, Misra S, Broeders IAMJ (2011) Design of a user interface for intuitive colonoscope control. In: IEEE International Conference on Intelligent Robots and Systems, pp 2076–2082

Saunders BP, Fukumoto M, Halligan S, Jobling C, Moussa ME, Bartram CI, Williams CB (1996) Why is colonoscopy more difficult in women? Gastrointest Endosc 43:124–126

Yeung BPM, Gourlay T (2012) A technical review of flexible endoscopic multitasking platforms. Int J Surg 10:1–10

Baumhauer M, Feuerstein M, Meinzer HP, Rassweiler J (2008) Navigation in endoscopic soft tissue surgery: perspectives and limitations. J Endourol 22:751–766

Scaramuzza D, Fraundorfer F (2011) Visual odometry: part I—the first 30 years and fundamentals. IEEE Robot Autom Mag 18:80–92

Fraundorfer F, Scaramuzza D (2012) Visual odometry: part II—matching, robustness and applications. IEEE Robot Autom Mag 19:78–90

Gillies D, Khan G (1996) Vision based navigation system for an endoscope. Image Vis Comput 14:763–772

Kwoh CK, Khan GN, Gillies DF (1999) Automated Endoscope Navigation and Advisory System from medical imaging. In: SPIE’s International Conference on Physiology and Function for Multidimensional Images 3660:214–224

Chettaoui H, Thomann G, Ben Amar C, Redarce T (2006) Extracting and tracking colon “pattern” from colonoscopic images. In: IEEE Canadian Conference on Computer and Robot Vision, pp 65–71

Bricault I, Ferretti G, Cinquin P (1998) Registration of real and CT-derived virtual bronchoscopic images to assist transbronchial biopsy. IEEE Trans Med Imaging 17:703–714

Reilink R, Stramigioli S, Misra S (2010) Image-based flexible endoscope steering. In: IEEE/RSJ International Conference on Intelligent Robots and Systems, p 6

Reilink R, Stramigioli S, Kappers AML, Misra S (2011) Evaluation of flexible endoscope steering using haptic guidance. Int J Med Robot Comp Assist Surg MRCAS 7:178–186

Zhiyun X (2000) Computerized detection of abnormalities in endoscopic oesophageal images. Nanyang Technological University, Singapore

Zhen Z, Jinwu Q, Yanan Z, Linyong S (2006) An intelligent endoscopic navigation system. In: IEEE International Conference on Mechatronics and Automation, pp 1653–1657

Krishnan SM, Tan CS, Chan KL (1994) Closed-boundary extraction of large intestinal lumen. Proceedings of 16th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp 610–611

Tjoa MP, Krishnan SM, Zheng MM (2003) A novel endoscopic image analysis approach using deformable region model to aid in clinical diagnosis. In: Proceedings of the 15th Annual International Conference of the IEEE EMBS, pp 710–713

Xia S, Krishnan SM, Tjoa MP, Goh PMY (2003) A novel methodology for extracting colon’s lumen from colonoscopic images. J Syst Cybern Inform 1:7–12

Batlle J, Mouaddib E, Salvi J (1998) Recent progress in coded structured light as a technique to solve the correspondence problem: a survey. Pattern Recognit 31:963–982

Salvi J, Pagès J, Batlle J (2004) Pattern codification strategies in structured light systems. Pattern Recognit 37:827–849

Zhang G, He J, Li X (2005) 3D vision inspection for internal surface based on circle structured light. Sens Actuators 122:68–75

Mekaouar A, Ben Amar C, Redarce T (2009) New vision based navigation clue for a regular colonoscope’s tip. Proc SPIE 7261:72611B-9

Ciuti G, Visentini-Scarzanella M, Dore A, Menciassi A, Dario P, Yang G (2012) Intra-operative monocular 3D reconstruction for image-guided navigation in active locomotion capsule endoscopy. In: IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), pp 768–774

Rashid HU, Burger P (1992) Differential algorithm for the determination of shape from shading using a point light source. Image Vis Comput 10(2):119–127

Van der Stap N, Reilink R, Misra S, Broeders IAMJ, Van der Heijden F (2012) The use of the focus of expansion for automated steering of flexible endoscopes. In: Proceedings of the 4th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, pp 13–18

Van Der Stap N, Reilink R, Misra S, Broeders IAMJ, Van Der Heijden F (2012) A feasibility study of optical flow-based navigation during colonoscopy. Int J Comput Assist Radiol Surg 7(S1):S235

Masson N, Nageotte F, Zanne P, De Mathelin M (2009) In vivo comparison of real-time tracking algorithms for interventional flexible endoscopy. In: ISBI, pp 1350–1353

Deguchi K, Sasano T, Arai H, Yoshikawa Y (1994) 3-D shape reconstruction from endoscope image sequences by the factorization method. In: IAPR Workshop on Machine Vision Applications (MVA’94), pp 455–459

Poelman CJ, Kanade T (1997) A paraperspective factorization method for shape and motion recovery. IEEE Trans Pattern Anal Mach Intell 19:206–218

Deguchi D, Mori K, Suenaga Y, Hasegawa J, Toriwaki J, Natori H, Takabatake H (2003) New calculation method of image similarity for endoscope tracking based on image registration in endoscope navigation. Int Congr Ser 1256:460–466

Deguchi D, Mori K, Feuerstein M, Kitasaka T, Maurer CR, Suenaga Y, Takabatake H, Mori M, Natori H (2009) Selective image similarity measure for bronchoscope tracking based on image registration. Med Image Anal 13:621–633

Phee SJ, Ng WS, Chen IM, Seow-Choen F, Davies BL (1997) Locomotion and steering aspects in automation of colonoscopy. Part one. A literature review. IEEE Eng Med Biol 16:85–96

Karimyan V, Sodergren M, Clark J, Yang GZ, Darzi A (2009) Navigation systems and platforms in natural orifice translumenal endoscopic surgery (NOTES). Int J Surg 7:297–304

Ruiter J, Rozeboom E, Van Der Voort M, Bonnema M, Broeders I (2012) Design and evaluation of robotic steering of a flexible endoscope. In: IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), pp 761–767

Mori K, Deguchi D, Sugiyama J, Suenaga Y, Toriwaki J, Maurer CR, Takabatake H, Natori H (2002) Tracking of a bronchoscope using epipolar geometry analysis and intensity-based image registration of real and virtual endoscopic images. Med Image Anal 6:321–336

Disclosures

N. van der Stap and F. van der Heijden have no conflicts of interest or financial ties to disclose. I. Broeders is partner in the Teleflex project, funded by Pieken in de Delta Oost Nederland (PIDON).


Appendix


Cite this article

van der Stap, N., van der Heijden, F. & Broeders, I.A.M.J. Towards automated visual flexible endoscope navigation. Surg Endosc 27, 3539–3547 (2013). https://doi.org/10.1007/s00464-013-3003-7
