Abstract

In many real-world optimization problems, the objective function evaluation is subject to noise, and we cannot obtain the exact objective value. Evolutionary algorithms (EAs), a type of general-purpose randomized optimization algorithm, have been shown to be able to solve noisy optimization problems well. However, previous theoretical analyses of EAs mainly focused on noise-free optimization, which makes the theoretical understanding largely insufficient for the noisy case. Meanwhile, the few existing theoretical studies under noise often considered the one-bit noise model, which flips a randomly chosen bit of a solution before evaluation; while in many realistic applications, several bits of a solution can be changed simultaneously. In this paper, we study a natural extension of one-bit noise, the bit-wise noise model, which independently flips each bit of a solution with some probability. We analyze the running time of the (\(1+1\))-EA solving OneMax and LeadingOnes under bit-wise noise for the first time, and derive the ranges of the noise level for polynomial and super-polynomial running time bounds. The analysis on LeadingOnes under bit-wise noise can be easily transferred to one-bit noise, and improves the previously known results. Since our analysis discloses that the (\(1+1\))-EA can be efficient only under low noise levels, we also study whether the sampling strategy can bring robustness to noise. We prove that using sampling can significantly increase the largest noise level allowing a polynomial running time, that is, sampling is robust to noise.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In real-world optimization tasks, the exact objective (i.e., fitness) function evaluation of candidate solutions is often impossible, instead we can obtain only a noisy one due to a wide range of uncertainties [21]. For example, in machine learning, a prediction model is evaluated only on a limited amount of data, which makes the estimated performance deviate from the true performance; in product design, the design variables can be subject to perturbations due to manufacturing tolerances, which brings noise.

In the presence of noise, the difficulty of solving an optimization problem may increase. Evolutionary algorithms (EAs) [5], inspired by natural phenomena, are a type of randomized metaheuristic optimization algorithm. They are likely to be able to handle noise, since the corresponding natural phenomena have been well processed in noisy natural environments. In fact, EAs have been successfully applied to solve many noisy optimization problems [7, 22].

Compared with the application, the theoretical analysis of EAs is far behind. But in the last two decades, much effort has been devoted to the running time analysis (an essential theoretical aspect) of EAs. Numerous analytical results for EAs solving synthetic problems as well as combinatorial problems have been derived, e.g., [4, 23]. Meanwhile, a few general approaches for running time analysis have been proposed, e.g., drift analysis [10, 12, 20], fitness-level methods [8, 32], and switch analysis [34].

However, previous running time analyses of EAs mainly focused on noise-free optimization, where the fitness evaluation is exact. Only a few pieces of work on noisy evolutionary optimization have been reported, which mainly considered two kinds of noise models, prior and posterior. The prior noise comes from the variation on a solution, e.g., one-bit noise [13] flips a random bit of a binary solution before evaluation with probability p. The posterior noise comes from the variation on the fitness of a solution, e.g., additive noise [19] adds a value randomly drawn from some distribution. Droste [13] first analyzed the (\(1+1\))-EA on the OneMax problem in the presence of one-bit noise and showed that the tight range of the noise probability p allowing a polynomial running time is \(O(\log n/n)\), where n is the problem size. Gießen and Kötzing [19] recently studied the LeadingOnes problem, and proved that the expected running time is polynomial if \(p \le 1/(6en^2)\) and exponential if \(p=1/2\). They also considered additive noise with variance \(\sigma ^2\), and proved that the (\(1+1\))-EA can solve OneMax and LeadingOnes in polynomial time when \(\sigma ^2=O(\log n/n)\) and \(\sigma ^2\le 1/(12en^2)\), respectively.

For inefficient optimization of the (\(1+1\))-EA under high noise levels, some implicit mechanisms of EAs were proved to be robust to noise. In [19], it was shown that the (\(\mu \)+1)-EA with a small population of size \(\varTheta (\log n)\) can solve OneMax in polynomial time even if the probability of one-bit noise reaches 1. The robustness of populations to noise was also proved in the setting of non-elitist EAs [9, 26]. However, Friedrich et al. [17] showed the limitation of populations by proving that the (\(\mu \)+1)-EA needs super-polynomial time for solving OneMax under additive Gaussian noise \(\mathcal {N}(0,\sigma ^2)\) with \(\sigma ^2 \ge n^3\). This difficulty can be overcome by the compact genetic algorithm (cGA) [17] and a simple Ant Colony Optimization (ACO) algorithm [16], both of which find the optimal solution in polynomial time with a high probability. ACO was also shown to be able to efficiently find solutions with reasonable approximations on some instances of the single-destination shortest path problem with edge weights disturbed by noise [11, 15, 33].

The ability of explicit noise handling strategies was also theoretically studied. Qian et al. [29] proved that the threshold selection strategy is robust to noise: the expected running time of the (\(1+1\))-EA using threshold selection on OneMax under one-bit noise is always polynomial regardless of the noise probability p. For the (\(1+1\))-EA solving OneMax under one-bit noise with \(p=1\) or additive Gaussian noise \(\mathcal {N}(0,\sigma ^2)\) with \(\sigma ^2 \ge 1\), the sampling strategy was shown to be able to reduce the running time from exponential to polynomial [28]. The robustness of sampling to noise was also proved for the (\(1+1\))-EA solving LeadingOnes under one-bit noise with \(p=1/2\) or additive Gaussian noise with \(\sigma ^2 \ge n^2\). Akimoto et al. [2] proved that sampling with a large sample size can make optimization under additive unbiased noise behave as optimization in a noise-free environment. The interplay between sampling and implicit noise-handling mechanisms (e.g., crossover) has been statistically studied in [18].

The noise models considered in the studies mentioned above are summarized in Table 1. We can observe that for the prior noise model, one-bit noise was mainly considered, which flips a random bit of a solution before evaluation with probability p. However, the noise model, which can change several bits of a solution simultaneously, may be more realistic and needs to be studied, as mentioned in the first noisy theoretical work [13].

In this paper, we study the bit-wise noise model, which is characterized by a pair (p, q) of parameters. It happens with probability p, and independently flips each bit of a solution with probability q before evaluation. We analyze the running time of the (\(1+1\))-EA solving OneMax and LeadingOnes under bit-wise noise with two specific parameter settings \((p,\frac{1}{n})\) and (1, q). The ranges of p and q for a polynomial upper bound and a super-polynomial lower bound are derived, as shown in the first two rows of Table 2. For the (\(1+1\))-EA on LeadingOnes, we also transfer the running time bounds from bit-wise noise \((p,\frac{1}{n})\) to one-bit noise by using the same proof procedure. As shown in the bottom right of Table 2, our results improve the previously known ones [19].

Note that for the (\(1+1\))-EA on LeadingOnes, the current analysis (as shown in the last column of Table 2) does not cover all the ranges of p and q. We thus conduct experiments to estimate the expected running time for the uncovered values of p and q. The empirical results show that the currently derived ranges of p and q allowing a polynomial running time are possibly tight.

From the results in Table 2, we find that the (\(1+1\))-EA is efficient only under low noise levels. For example, for the (\(1+1\))-EA solving OneMax under bit-wise noise \((p,\frac{1}{n})\), the expected running time is polynomial only when \(p=O(\log n/n)\). We then study whether the sampling strategy can bring robustness to noise. Sampling is a popular way to cope with noise in fitness evaluation [3], which, instead of evaluating the fitness of one solution only once, evaluates the fitness multiple (m) times and then uses the average to approximate the true fitness. We analyze the running time of the (\(1+1\))-EA using sampling under both bit-wise noise and one-bit noise. The ranges of p and q for a polynomial upper bound and a super-polynomial lower bound are shown in Table 3. Our analysis covers all the ranges of p and q. Note that for proving a polynomial upper bound, it is sufficient to show that using sampling with a specific sample size m can guarantee a polynomial running time, while for proving a super-polynomial lower bound, we need to prove that using sampling with any polynomially bounded m fails to guarantee a polynomial running time. Compared with the results in Table 2, we find that using sampling significantly improves the noise-tolerance ability. For example, by using sampling with \(m=4n^3\), the (\(1+1\))-EA now can always solve OneMax under bit-wise noise \((p,\frac{1}{n})\) in polynomial time.

From the analysis, we also find the reason why sampling is effective or not. Let f(x) and \(f^n(x)\) denote the true and noisy fitness of a solution, respectively. For two solutions x and y with \(f(x)>f(y)\), when the noise level is high (i.e., the values of p and q are large), the probability \(\mathrm {P}(f^n(x) \le f^n(y))\) (i.e., the true worse solution y appears to be better) becomes large, which will mislead the search direction and then lead to a super-polynomial running time. In such a situation, if the expected gap between \(f^n(x)\) and \(f^n(y)\) is positive, sampling will increase this trend and make \(\mathrm {P}(f^n(x) \le f^n(y))\) sufficiently small; if it is negative (e.g., on OneMax under bit-wise noise (1, q) with \(q\ge 1/2\)), sampling will continue to increase \(\mathrm {P}(f^n(x) \le f^n(y))\), and obviously will not work. We also note that if the positive gap between \(f^n(x)\) and \(f^n(y)\) is too small (e.g., on OneMax under bit-wise noise (1, q) with \(q= 1/2-1/n^{\omega (1)}\)), a polynomial sample size will be not sufficient and sampling also fails to guarantee a polynomial running time.

This paper extends our preliminary work [27]. Since the theoretical analysis on the LeadingOnes problem is not complete, we add experiments to complement the theoretical results (i.e., Sect. 4.4). We also add the robustness analysis of sampling to noise (i.e., Sect. 5). Note that the robustness of sampling to one-bit noise has been studied in our previous work [28]. It was shown that sampling can reduce the running time of the (\(1+1\))-EA from exponential to polynomial on OneMax when the noise probability \(p=1\) as well as on LeadingOnes when \(p=1/2\). Therefore, our results here are more general. We prove that sampling is effective for any value of p, as shown in the last row of Table 3. Furthermore, we analyze the robustness of sampling to bit-wise noise for the first time.

The rest of this paper is organized as follows. Section 2 introduces some preliminaries. The running time analysis of the (\(1+1\))-EA on OneMax and LeadingOnes under noise is presented in Sections 3 and 4, respectively. Section 5 analyzes the (\(1+1\))-EA using sampling. Section 6 concludes the paper.

2 Preliminaries

In this section, we first introduce the optimization problems, noise models and evolutionary algorithms studied in this paper, respectively, then introduce the sampling strategy, and finally present the analysis tools that we use throughout this paper.

2.1 OneMax and LeadingOnes

In this paper, we use two well-known pseudo-Boolean functions OneMax and LeadingOnes. The OneMax problem as presented in Definition 1 aims to maximize the number of 1-bits of a solution. The LeadingOnes problem as presented in Definition 2 aims to maximize the number of consecutive 1-bits counting from the left of a solution. Their optimal solution is \(11\ldots 1\) (briefly denoted as \(1^n\)). It has been shown that the expected running time of the (\(1+1\))-EA on OneMax and LeadingOnes is \(\varTheta (n \log n)\) and \(\varTheta (n^2)\), respectively [14]. In the following analysis, we will use \({\mathrm {LO}}(x)\) to denote the number of leading 1-bits of a solution x.

Definition 1

(OneMax) The OneMax Problem of size n is to find an n bits binary string \(x^*\) such that

Definition 2

(LeadingOnes) The LeadingOnes Problem of size n is to find an n bits binary string \(x^*\) such that

2.2 Bit-Wise Noise

There are mainly two kinds of noise models: prior and posterior [19, 21]. Let \(f^n(x)\) and f(x) denote the noisy and true fitness of a solution x, respectively. The prior noise comes from the variation on a solution, i.e., \(f^n(x)=f(x')\), where \(x'\) is generated from x by random perturbations. The posterior noise comes from the variation on the fitness of a solution, e.g., additive noise \(f^n(x)=f(x)+\delta \) and multiplicative noise \(f^n(x)=f(x)\cdot \delta \), where \(\delta \) is randomly drawn from some distribution. Previous theoretical analyses involving prior noise [9, 13, 19, 28, 29] often focused on a specific model, one-bit noise. As presented in Definition 3, it flips a random bit of a solution before evaluation with probability p. However, in many realistic applications, noise can change several bits of a solution simultaneously rather than only one bit. We thus consider the bit-wise noise model. As presented in Definition 4, it happens with probability p, and independently flips each bit of a solution with probability q before evaluation. We use bit-wise noise (p, q) to denote the bit-wise noise model with a scenario of (p, q).

Definition 3

(One-bit Noise) Given a parameter \(p \in [0,1]\), let \(f^{n}(x)\) and f(x) denote the noisy and true fitness of a solution \(x \in \{0,1\}^n\), respectively, then

where \(x'\) is generated by flipping a uniformly randomly chosen bit of x.

Definition 4

(Bit-wise Noise) Given parameters \(p,q \in [0,1]\), let \(f^{n}(x)\) and f(x) denote the noisy and true fitness of a solution \(x \in \{0,1\}^n\), respectively, then

where \(x'\) is generated by independently flipping each bit of x with probability q.

To the best of our knowledge, only bit-wise noise (1, q) has been recently studied. Gießen and Kötzing [19] proved that for the (\(1+1\))-EA solving OneMax, the expected running time is polynomial if \(q=O(\log n /n^2)\) and super-polynomial if \(q=\omega (\log n /n^2)\). Besides bit-wise noise (1, q), we also study another specific model bit-wise noise \((p,\frac{1}{n})\) in this paper. Note that bit-wise noise \((p,\frac{1}{n})\) is a natural extension of one-bit noise; their random behaviors of perturbing a solution correspond to the two common mutation operators, bit-wise mutation and one-bit mutation, respectively.

To investigate whether the performance of the (\(1+1\))-EA for bit-wise noise with two scenarios of (p, q) and \((p',q')\) where \(p\cdot q=p'\cdot q'\) can be significantly different, we consider the OneMax problem under bit-wise noise \((1, \frac{\log n}{30n})\) and \((\frac{\log n}{30n}, 1)\). The comparison gives a positive answer. For bit-wise noise \((1, \frac{\log n}{30n})\), we know that the (\(1+1\))-EA needs super-polynomial time to solve OneMax [19], while for bit-wise noise \((\frac{\log n}{30n}, 1)\), we will prove in Theorem 7 that the (\(1+1\))-EA can solve OneMax in polynomial time. Thus, the analysis on general bit-wise noise without fixing p or q would be complicated, and \(p\cdot q\) may not be the only deciding factor. We leave it as a future work.

2.3 (\(1+1\)) Evolutionary Algorithm

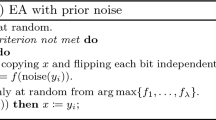

The (\(1+1\))-EA as described in Algorithm 1 is studied in this paper. For noisy optimization, only a noisy fitness value \(f^n(x)\) instead of the exact one f(x) can be accessed, and thus line 4 of Algorithm 1 is “if \(f^{n}(x') \ge f^{n}(x)\)” instead of “if \(f(x') \ge f(x)\)”. Note that the reevaluation strategy is used as in [11, 13, 19]. That is, besides evaluating \(f^{n}(x')\), \(f^{n}(x)\) will be reevaluated in each iteration of the (\(1+1\))-EA. The running time is usually defined as the number of fitness evaluations needed to find an optimal solution w.r.t. the true fitness function f for the first time [2, 13, 19].

Algorithm 1

((\(1+1\))-EA) Given a function f over \(\{0,1\}^n\) to be maximized, it consists of the following steps:

2.4 Sampling

In noisy evolutionary optimization, sampling has often been used to reduce the negative effect of noise [1, 6]. As presented in Definition 5, it approximates the true fitness f(x) using the average of multiple (m) independent random evaluations. For the (\(1+1\))-EA using sampling, line 4 of Algorithm 1 changes to be “if \(\hat{f}(x') \ge \hat{f}(x)\)”. Its pseudo-code is described in Algorithm 2. Note that the sample size \(m=1\) is equivalent to that sampling is not used. The effectiveness of sampling was not theoretically analyzed until recently. Qian et al. [28] proved that sampling is robust to one-bit noise and additive Gaussian noise. Particularly, under one-bit noise, it was shown that sampling can reduce the running time exponentially for the (\(1+1\))-EA solving OneMax when the noise probability \(p=1\) and LeadingOnes when \(p=1/2\).

Definition 5

(Sampling) Sampling first evaluates the fitness of a solution m times independently and obtains the noisy fitness values \(f^n_1(x),\ldots ,f^n_m(x)\), and then outputs their average, i.e.,

Algorithm 2

((\(1+1\))-EA with sampling) Given a function f over \(\{0,1\}^n\) to be maximized, it consists of the following steps:

2.5 Analysis Tools

The process of the (\(1+1\))-EA solving any pseudo-Boolean function with one unique global optimum can be directly modeled as a Markov chain \(\{\xi _t\}^{+\infty }_{t=0}\). We only need to take the solution space \(\{0,1\}^n\) as the chain’s state space (i.e., \(\xi _t \in \mathcal {X}=\{0,1\}^n\)), and take the optimal solution \(1^n\) as the chain’s optimal state (i.e., \(\mathcal {X}^*=\{1^n\}\)). Note that we can assume without loss of generality that the optimal solution is \(1^n\), because unbiased EAs treat the bits 0 and 1 symmetrically, and thus the 0 bits in an optimal solution can be interpreted as 1 bits without affecting the behavior of EAs. Given a Markov chain \(\{\xi _t\}^{+\infty }_{t=0}\) and \(\xi _{\hat{t}}=x\), we define its first hitting time (FHT) as \(\tau =\min \{t \mid \xi _{\hat{t}+t} \in \mathcal {X}^*,t\ge 0\}\). The mathematical expectation of \(\tau \), \(\mathrm {E}(\tau \mid \xi _{\hat{t}}=x)=\sum \nolimits ^{+\infty }_{i=0} i\cdot \mathrm {P}(\tau =i \mid \xi _{\hat{t}}=x)\), is called the expected first hitting time (EFHT) starting from \(\xi _{\hat{t}}=x\). If \(\xi _{0}\) is drawn from a distribution \(\pi _{0}\), \(\mathrm {E}(\tau \mid \xi _{0}\sim \pi _0) = \sum \nolimits _{x\in \mathcal {X}} \pi _{0}(x)\mathrm {E}(\tau \mid \xi _{0}=x)\) is called the EFHT of the Markov chain over the initial distribution \(\pi _0\). Thus, the expected running time of the (\(1+1\))-EA starting from \(\xi _0 \sim \pi _0\) is equal to \(1+2\cdot \mathrm {E}(\tau \mid \xi _{0} \sim \pi _0)\), where the term 1 corresponds to evaluating the initial solution, and the factor 2 corresponds to evaluating the offspring solution \(x'\) and reevaluating the parent solution x in each iteration. If using sampling, the expected running time of the (\(1+1\))-EA is \(m+2m\cdot \mathrm {E}(\tau \mid \xi _{0} \sim \pi _0)\), since estimating the fitness of a solution needs m number of independent fitness evaluations. Note that we consider the expected running time of the (\(1+1\))-EA starting from a uniform initial distribution in this paper.

In the following, we give three drift theorems that will be used to analyze the EFHT of Markov chains in the paper. The additive drift theorem [20] as presented in Theorem 1 is used to derive upper bounds on the EFHT of Markov chains. To use it, a function V(x) has to be constructed to measure the distance of a state x to the optimal state space \(\mathcal {X}^*\). Then, we need to investigate the progress on the distance to \(\mathcal {X}^*\) in each step, i.e., \(\mathbb {E}(V(\xi _t)-V(\xi _{t+1}) \mid \xi _t)\). An upper bound on the EFHT can be derived through dividing the initial distance by a lower bound on the progress.

Theorem 1

(Additive Drift [20]) Given a Markov chain \(\{\xi _t\}^{+\infty }_{t=0}\) and a distance function V(x), if for any \(t \ge 0\) and any \(\xi _t\) with \(V(\xi _t) > 0\), there exists a real number \(c>0\) such that

then the EFHT satisfies that \(\mathrm {E}(\tau \mid \xi _0) \le V(\xi _0)/c.\)

The negative drift theorem [24, 25] as presented in Theorem 2 was proposed to prove exponential lower bounds on the EFHT of Markov chains, where \(X_t\) is usually represented by a mapping of \(\xi _t\). It requires two conditions: a constant negative drift and exponentially decaying probabilities of jumping towards or away from the goal state. To relax the requirement of a constant negative drift, the negative drift theorem with self-loops [30] as presented in Theorem 3 has been proposed, which takes into account large self-loop probabilities.

Theorem 2

(Negative Drift [24, 25]) Let \(X_t\), \(t\ge 0\), be real-valued random variables describing a stochastic process. Suppose there exists an interval [a, b] \(\subseteq \mathbb {R}\), two constants \(\delta ,\epsilon >0\) and, possibly depending on \(l:=b-a\), a function r(l) satisfying \(1\le r(l)=o(l/\log (l))\) such that for all \(t\ge 0\) the following two conditions hold:

Then there is a constant \(c>0\) such that for \(T:=\min \{t \ge 0: X_t \le a \mid X_0 \ge b\}\) it holds \(\mathrm {P}(T \le 2^{cl/r(l)})=2^{-\varOmega (l/r(l))}\).

Theorem 3

(Negative Drift with Self-loops [30]) Let \(X_t\), \(t\ge 0\), be real-valued random variables describing a stochastic process. Suppose there exists an interval \([a,b] \subseteq \mathbb {R}\), two constants \(\delta ,\epsilon >0\) and, possibly depending on \(l:=b-a\), a function r(l) satisfying \(1\le r(l)=o(l/\log (l))\) such that for all \(t\ge 0\) the following two conditions hold:

Then there is a constant \(c>0\) such that for \(T:=\min \{t \ge 0: X_t \le a \mid X_0 \ge b\}\) it holds \(\mathrm {P}(T \le 2^{cl/r(l)})=2^{-\varOmega (l/r(l))}\).

3 The OneMax Problem

In this section, we analyze the running time of the (\(1+1\))-EA on OneMax under bit-wise noise. Note that for bit-wise noise (1, q), it has been proved that the expected running time is polynomial if and only if \(q=O(\log n/n^2)\), as shown in Theorem 4.

Theorem 4

[19] For the (\(1+1\))-EA on OneMax under bit-wise noise (1, q), the expected running time is polynomial if \(q =O(\log n/n^2)\) and super-polynomial if \(q=\omega (\log n / n^2)\).

For bit-wise noise \((p,\frac{1}{n})\), we prove in Theorems 5 and 6 that the tight range of p allowing a polynomial running time is \(O(\log n/n)\). Instead of using the original drift theorems, we apply the upper and lower bounds of the (\(1+1\))-EA on noisy OneMax in [19]. Let \(x^k\) denote any solution with k number of 1-bits, and \(f^n(x^{k})\) denote its noisy objective value, which is a random variable. Lemma 1 intuitively means that if the probability of recognizing the true better solution by noisy evaluation is large (i.e., Eq. (1)), the running time can be upper bounded. Particularly, if Eq. (1) holds with \(l=O(\log n)\), the running time can be polynomially upper bounded. On the contrary, Lemma 2 shows that if the probability of making a right comparison is small (i.e., Eq. (2)), the running time can be lower bounded. Particularly, if Eq. (2) holds with \(l=\varOmega (n)\), the running time can be exponentially lower bounded. Both Lemmas 1 and 2 are proved by applying standard drift theorems, and can be used to simplify our analysis. Note that in the original upper bound of the (\(1+1\))-EA on noisy OneMax (i.e., Theorem 5 in [19]), it requires that Eq. (1) holds with only \(j=k\), but the proof actually also requires that noisy OneMax satisfies the monotonicity property, i.e., for all \(j< k<n\), \(\mathrm {P}(f^n(x^k)<f^n(x^{k+1})) \le \mathrm {P}(f^n(x^j)<f^n(x^{k+1}))\). We have combined these two conditions in Lemma 1 by requiring Eq. (1) to hold with any \(j \le k\) instead of only \(j=k\).

Lemma 1

[19] Suppose there is a positive constant \(c \le 1/15\) and some \(2 < l \le n/2\) such that

then the (\(1+1\))-EA optimizes f in expectation in \(O(n \log n) +n2^{O(l)}\) iterations.

Lemma 2

[19] Suppose there is some \(l \le n/4\) and a constant \(c \ge 16\) such that

then the (\(1+1\))-EA optimizes f in \(2^{\varOmega (l)}\) iterations with a high probability.

First, we apply Lemma 1 to show that the expected running time is polynomially upper bounded for bit-wise noise \((p,\frac{1}{n})\) with \(p=O(\log n/n)\).

Theorem 5

For the (\(1+1\))-EA on OneMax under bit-wise noise \((p,\frac{1}{n})\), the expected running time is polynomial if \(p =O(\log n/n)\).

Proof

We prove it by using Lemma 1. For some positive constant b, suppose that \(p \le b\log n/n\). We set the two parameters in Lemma 1 as \(c=\min \{\frac{1}{15},b\}\) and \(l=\frac{2b\log n}{c} \in (2,\frac{n}{2}]\).

For any \(j \le k<n\), \(f^n(x^j) \ge f^n(x^{k+1})\) implies that \(f^n(x^j) \ge k+1\) or \(f^n(x^{k+1}) \le k\), either of which happens with probability at most p. By the union bound, we get \(\forall j \le k<n\),

For any \(j \le k < n-l\), we easily get

By Lemma 1, we know that the expected running time is \(O(n \log n)+n2^{O(2b\log n/c)}\), i.e., polynomial. \(\square \)

Next we apply Lemma 2 to show that the expected running time is super-polynomial for bit-wise noise \((p,\frac{1}{n})\) with \(p=\omega (\log n/n)\). Note that for \(p=1-O(\log n/n)\), we actually give a stronger result that the expected running time is exponential.

Theorem 6

For the (\(1+1\))-EA on OneMax under bit-wise noise \((p,\frac{1}{n})\), the expected running time is super-polynomial if \(p =\omega (\log n/n) \cap 1-\omega (\log n/n)\) and exponential if \(p =1-O(\log n/n)\).

Proof

We use Lemma 2 to prove it. Let \(c = 16\). The case \(p =\omega (\log n/n) \cap 1-\omega (\log n/n)\) is first analyzed. For any positive constant b, let \(l=b\log n\). For any \(k \ge n-l\), we get

To make \(f^n(x^k)=k\), it is sufficient that the noise does not happen, i.e., \(\mathrm {P}(f^n(x^k)=k) \ge 1-p\). To make \(f^n(x^{k+1})\le k\), it is sufficient to flip one 1-bit and keep other bits unchanged by noise, i.e., \(\mathrm {P}(f^n(x^{k+1})\le k) \ge p\cdot \frac{k+1}{n}(1-\frac{1}{n})^{n-1}\). Thus,

Since \(c\frac{n-k}{n} \le c\frac{l}{n}=\frac{cb\log n}{n}\), the condition of Lemma 2 holds. Thus, the expected running time is \(2^{\varOmega (b\log n)}\) (where b is any constant), i.e., super-polynomial.

For the case \(p =1-O(\log n/n)\), let \(l=\sqrt{n}\). We use another lower bound \(p(1-\frac{1}{n})^{n}\) for \(\mathrm {P}(f^n(x^k)=k)\), since it is sufficient that no bit flips by noise. Thus, we have

Since \(c\frac{n-k}{n} \le \frac{c\sqrt{n}}{n}\), the condition of Lemma 2 holds. Thus, the expected running time is \(2^{\varOmega (\sqrt{n})}\), i.e., exponential. \(\square \)

To show that the performance of the (\(1+1\))-EA for bit-wise noise with two scenarios (p, q) and \((p',q')\) where \(p\cdot q=p'\cdot q'\) can be significantly different, we compare the expected running time of the (\(1+1\))-EA for bit-wise noise \( (1,\frac{\log n}{30n}) \) and \((\frac{\log n}{30n},1)\). For the former case, we know from Theorem 4 that the expected running time is super-polynomial, while for the latter case, we prove in the following theorem that the expected running time can be polynomially upper bounded.

Theorem 7

For the (\(1+1\))-EA on OneMax under bit-wise noise \((\frac{\log n}{30n},1)\), the expected running time is polynomial.

Proof

We use Lemma 1 to prove it. For any \( j\le k<n \), \( f^n(x^j)\ge f^n(x^{k+1}) \) implies that the fitness evaluation of \( x^j \) or \( x^{k+1} \) is affected by noise, whose probability is at most \( 2\cdot \frac{\log n}{30n} =\frac{\log n}{15n}\). Thus, we have \( \mathrm {P}(f^n(x^j)<f^n(x^{k+1}))\ge 1-\frac{\log n}{15n}\). It is then easy to verify that the condition of Lemma 1 holds with \( c=\frac{1}{15} \) and \( l=\log n \). Thus, the expected running time is polynomial. \(\square \)

4 The LeadingOnes Problem

In this section, we first analyze the running time of the (\(1+1\))-EA on the LeadingOnes problem under bit-wise noise \((p,\frac{1}{n})\) and bit-wise noise (1, q), respectively. Then, we transfer the analysis from bit-wise noise \((p,\frac{1}{n})\) to one-bit noise; the results are complementary to the known ones recently derived in [19]. However, our analysis does not cover all the ranges of p and q. For those values of p and q where no theoretical results are known, we conduct experiments to empirically investigate the running time.

4.1 Bit-Wise Noise \((p,\frac{1}{n})\)

For bit-wise noise \((p,\frac{1}{n})\), we first apply the additive drift theorem (i.e., Theorem 1) to prove that the expected running time is polynomial when \(p=O(\log n/n^2)\).

Theorem 8

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise \((p,\frac{1}{n})\), the expected running time is polynomial if \(p =O(\log n/n^2)\).

Proof

We use Theorem 1 to prove it. For some positive constant b, suppose that \(p \le b\log n /n^2\). Let \(\theta \in (0,1)\) be some constant close to 0. We first construct a distance function V(x) as, for any x with \({\mathrm {LO}}(x)=i\),

where \(c=\frac{2b\log n}{1-\theta }+1\). It is easy to verify that \(V(x \in \mathcal {X}^*=\{1^n\})=0\) and \(V(x \notin \mathcal {X}^*)>0\).

Then, we investigate \(\mathrm {E}(V(\xi _t)-V(\xi _{t+1}) \mid \xi _t=x)\) for any x with \({\mathrm {LO}}(x)<n\) (i.e., \(x \notin \mathcal {X^*}\)). Assume that currently \({\mathrm {LO}}(x)=i\), where \(0\le i \le n-1\). Let \(\mathrm {P}_{mut}(x,x')\) denote the probability of generating \(x'\) by mutation on x. We divide the drift into two parts: positive \(\mathrm {E}^+\) and negative \(\mathrm {E}^-\). That is,

where

For the positive drift \(\mathrm {E}^+\), we need to consider that the number of leading 1-bits is increased. By mutation, we have

since it needs to flip the \((i+1)\)-th bit (which must be 0) of x and keep the i leading 1-bits unchanged. For any \(x'\) with \({\mathrm {LO}}(x') \ge i+1\), \(f^n(x') < f^n(x)\) implies that \(f^n(x') \le i-1\) or \(f^n(x) \ge i+1\). Note that,

since at least one of the first i leading 1-bits of \(x'\) needs to be flipped by noise;

since it needs to flip the first 0-bit of x and keep the leading 1-bits unchanged by noise. By the union bound, we get

where the last inequality holds with sufficiently large n, since \(p=O(\log n/n^2)\) and \(\theta \in (0,1)\) is some constant close to 0. Furthermore, for any \(x'\) with \(V(x')\ge i+1\),

By combining Eqs. (6), (9) and (10), we have

where the last inequality is by \((1-\frac{1}{n})^{i} \ge (1-\frac{1}{n})^{n-1} \ge \frac{1}{e}\ge \frac{1}{3}\).

For the negative drift \(\mathrm {E}^-\), we need to consider that the number of leading 1-bits is decreased. By mutation, we have

since it needs to flip at least one leading 1-bit of x. For any \(x'\) with \({\mathrm {LO}}(x') \le i-1\) (where \(i \ge 1\)), \(f^n(x') \ge f^n(x)\) implies that \(f^n(x') \ge i\) or \(f^n(x) \le i-1\). Note that,

since for the first i bits of \(x'\), it needs to flip the 0-bits (whose number is at least 1) and keep the 1-bits unchanged by noise;

since at least one leading 1-bit of x needs to be flipped by noise. By the union bound, we get

Furthermore, according to the definition of the distance function (i.e., Eq. (3)), we have for any \(x'\) with \(0 \le {\mathrm {LO}}(x') \le i-1\),

By combining Eqs. (11), (14) and (15), we have

Thus, by subtracting \(\mathrm {E}^-\) from \(\mathrm {E}^+\), we have

where the second inequality is by \(c=\frac{2b\log n}{1-\theta }+1\) and \(p \le b \log n/n^2\). Note that \(V(x)\le (1+\frac{c}{n})^n \le e^c=e^{\frac{2b\log n}{1-\theta }+1}=en^{\frac{2b}{1-\theta }}\). By Theorem 1, we get

i.e., the expected running time is polynomial. \(\square \)

Next we use the negative drift with self-loops theorem (i.e., Theorem 3) to show that the expected running time is super-polynomial for bit-wise noise \((p,\frac{1}{n})\) with \(p=\omega (\log n/n) \cap o(1)\).

Theorem 9

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise \((p,\frac{1}{n})\), if \(p =\omega (\log n/n) \cap o(1)\), the expected running time is super-polynomial.

Proof

We use Theorem 3 to prove it. Let \(X_t=|x|_0\) be the number of 0-bits of the solution x after t iterations of the (\(1+1\))-EA. Let c be any positive constant. We consider the interval \([0,c \log n]\), i.e., the parameters \(a=0\) (i.e., the global optimum) and \(b=c \log n\) in Theorem 3.

Then, we analyze the drift \(\mathrm {E}(X_t-X_{t+1} \mid X_t=i)\) for \(1\le i<c \log n\). As in the proof of Theorem 8, we divide the drift into two parts: positive \(\mathrm {E}^+\) and negative \(\mathrm {E}^-\). That is,

where

Note that the drift here depends on the number of 0-bits due to the definition of \(X_t\). It is different from that in the proof of Theorem 8, which depends on the number of leading 1-bits due to the definition of the distance function (i.e., Eq. (3)).

For the positive drift \(\mathrm {E}^+\), we need to consider that the number of 0-bits is decreased. For mutation on x (where \(|x|_0=i\)), let X and Y denote the number of flipped 0-bits and 1-bits, respectively. Then, \(X \sim B(i,\frac{1}{n})\) and \(Y \sim B(n-i,\frac{1}{n})\), where \(B(\cdot ,\cdot )\) is the binomial distribution. To estimate an upper bound on \(\mathrm {E}^+\), we assume that the offspring solution \(x'\) with \(|x'|_0 <i\) is always accepted. Thus, we have

For the negative drift \(\mathrm {E}^-\), we need to consider that the number of 0-bits is increased. We analyze the \(n-i\) cases where only one 1-bit is flipped (i.e., \(|x'|_0=i+1\)), which happens with probability \(\frac{1}{n}(1-\frac{1}{n})^{n-1}\). Assume that \({\mathrm {LO}}(x)=k \le n-i\). If the j-th (where \(1 \le j \le k\)) leading 1-bit is flipped, the offspring solution \(x'\) will be accepted (i.e., \(f^n(x') \ge f^n(x)\)) if \(f^n(x') \ge j-1\) and \(f^n(x) \le j-1\). Note that,

where the equality is since it needs to keep the \(j-1\) leading 1-bits of \(x'\) unchanged, and the last inequality is by \(p=o(1)\);

where the first equality is since at least one of the first j leading 1-bits of x needs to be flipped by noise. Thus, we get

If one of the \(n-i-k\) non-leading 1-bits is flipped, \({\mathrm {LO}}(x')={\mathrm {LO}}(x)=k\). We can use the same analysis procedure as Eq. (9) in the proof of Theorem 8 to derive that

where the last inequality is by \(p=o(1)\). Combining all the \(n-i\) cases, we get

By subtracting \(\mathrm {E}^-\) from \(\mathrm {E}^+\), we get

To investigate condition (1) of Theorem 3, we also need to analyze the probability \(\mathrm {P}(X_{t+1} \ne i \mid X_t=i)\). For \(X_{t+1}\ne i\), it is necessary that at least one bit of x is flipped and the offspring \(x'\) is accepted. We consider two cases: (1) at least one of the k leading 1-bits of x is flipped; (2) the k leading 1-bits of x are not flipped and at least one of the last \(n-k\) bits is flipped. For case (1), the mutation probability is \(1-(1-\frac{1}{n})^k\) and the acceptance probability is at most \(p\frac{k+1}{n}\) by Eq. (14). For case (2), the mutation probability is \((1-\frac{1}{n})^{k}(1-(1-\frac{1}{n})^{n-k}) \le \frac{n-k}{n}\) and the acceptance probability is at most 1. Thus, we have

When \(k<n-np\), we have

where the second inequality is by \(n-k>np\) and \(i< c \log n\), the third inequality is by \(p =\omega (\log n/n)\) and the last is by \(n-k>np\). When \(k\ge n-np\), we have

where the second inequality is by \(p=o(1)\) and \(i< c \log n\), the third is by \(p=\omega (\log n/n)\) and the last is by \(n-k \le np\). Combining Eqs. (23), (24) and (25), we get that condition (1) of Theorem 3 holds with \(\epsilon =\frac{1}{576}\).

For condition (2) of Theorem 3, we need to show \(\mathrm {P}(|X_{t+1}-X_{t}|\ge j \mid X_t =i) \le \frac{r(l)}{(1+\delta )^j}\cdot \mathrm {P}(X_{t+1} \ne i \mid X_t=i)\) for \(i \ge 1\). For \(\mathrm {P}(X_{t+1} \ne i \mid X_t=i)\), we analyze the n cases where only one bit is flipped. Using the similar analysis procedure as \(\mathrm {E}^-\), except that flipping any bit rather than only 1-bit is considered here, we easily get

For \(|X_{t+1}-X_{t}|\ge j\), it is necessary that at least j bits of x are flipped and the offspring solution \(x'\) is accepted. We consider two cases: (1) at least one of the k leading 1-bits is flipped; (2) the k leading 1-bits are not flipped. For case (1), the mutation probability is at most \(\frac{k}{n}\left( {\begin{array}{c}n-1\\ j-1\end{array}}\right) \frac{1}{n^{j-1}}\) and the acceptance probability is at most \(p\frac{k+1}{n}\) by Eq. (14). For case (2), the mutation probability is at most \((1-\frac{1}{n})^k\left( {\begin{array}{c}n-k\\ j\end{array}}\right) \frac{1}{n^{j}}\) and the acceptance probability is at most 1. Thus, we have

By combining Eq. (26) with Eq. (27), we get that condition (2) of Theorem 3 holds with \(\delta =1\) and \(r(l)=144\).

Note that \(l=b-a=c \log n\). By Theorem 3, the expected running time is \(2^{\varOmega (c \log n)}\), where c is any positive constant. Thus, the expected running time is super-polynomial. \(\square \)

For \(p=\varOmega (1)\), we can use the negative drift theorem (i.e., Theorem 2) to derive a stronger result that the expected running time is exponentially lower bounded.

Theorem 10

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise \((p,\frac{1}{n})\), the expected running time is exponential if \(p=\varOmega (1)\).

Proof

We use Theorem 2 to prove it. Let \(X_t=i\) be the number of 0-bits of the solution x after t iterations of the (\(1+1\))-EA. We consider the interval \(i \in [0,n^{1/2}]\). To analyze the drift \(\mathrm {E}(X_t-X_{t+1} \mid X_t=i)=\mathrm {E}^+-\mathrm {E}^-\), we use the same analysis procedure as Theorem 9. For the positive drift, we have \(\mathrm {E}^+ \le \frac{i}{n}=o(1)\). For the negative drift, we re-analyze Eqs. (20) and (21). From Eqs. (18) and (19), we get that \(\mathrm {P}(f^n(x') \ge j-1) \ge p(1-\frac{j-1}{n})\) and \(\mathrm {P}(f^n(x) \le j-1) \ge \frac{pj}{3n}\). Thus, Eq. (20) becomes

For Eq. (21), we need to analyze the acceptance probability for \({\mathrm {LO}}(x')={\mathrm {LO}}(x)=k\). Since it is sufficient to keep the first \((k+1)\) bits of x and \(x'\) unchanged in noise, Eq. (21) becomes

By applying the above two inequalities to Eq. (22), we have

where the equality is by \(p=\varOmega (1)\). Thus, \(\mathrm {E}^+-\mathrm {E}^-=-\varOmega (1)\). That is, condition (1) of Theorem 2 holds.

Since it is necessary to flip at least j bits of x, we have

which implies that condition (2) of Theorem 2 holds with \(\delta =1\) and \(r(l)=2\). Note that \(l=n^{1/2}\). Thus, by Theorem 2, the expected running time is exponential. \(\square \)

4.2 Bit-Wise Noise (1, q)

For bit-wise noise (1, q), the proof idea is similar to that for bit-wise noise \((p,\frac{1}{n})\). The main difference led by the change of noise is the probability of accepting the offspring solution, i.e., \(\mathrm {P}(f^n(x') \ge f^n(x))\). We first prove that the expected running time is polynomial when \(q =O(\log n/n^3)\).

Theorem 11

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise (1, q), the expected running time is polynomial if \(q =O(\log n/n^3)\).

Proof

The proof is very similar to that of Theorem 8. The change of noise only affects the probability of accepting the offspring solution in the analysis. For some positive constant b, suppose that \(q \le b \log n /n^3\).

For the positive drift \(\mathrm {E}^+\), we need to re-analyze \(\mathrm {P}(f^n(x') \ge f^n(x))\) (i.e., Eq. (9) in the proof of Theorem 8) for the parent x with \({\mathrm {LO}}(x)=i\) and the offspring \(x'\) with \({\mathrm {LO}}(x') \ge i+1\). By bit-wise noise (1, q), Eqs. (7) and (8) change to

Thus, by the union bound, Eq. (9) becomes

where the last inequality holds with sufficiently large n, since \(q=O(\log n/n^3)\) and \(\theta \in (0,1)\) is some constant close to 0.

For the negative drift \(\mathrm {E}^-\), we need to re-analyze \(\mathrm {P}(f^n(x') \ge f^n(x))\) (i.e., Eq. (14) in the proof of Theorem 8) for the parent x with \({\mathrm {LO}}(x)=i\) (where \(i \ge 1\)) and the offspring \(x'\) with \({\mathrm {LO}}(x') \le i-1\). By bit-wise noise (1, q), Eqs. (12) and (13) change to

Thus, by the union bound, Eq. (14) becomes

where the second inequality is by \((1-q)^{i-1} \ge 1-(i-1)q\) and \(1-2q >0\) for \(q=O(\log n/n^3)\).

By applying Eq. (30) and Eq. (31) to \(\mathrm {E}^+\) and \(\mathrm {E}^-\), respectively, Eq. (16) changes to

That is, the condition of Theorem 1 still holds with \(\frac{1-\theta }{3n^2}\). Thus, the expected running time is polynomial. \(\square \)

Next we prove that the expected running time is super-polynomial when q is in the range of \(\omega (\log n/n^2) \cap o(1/n)\).

Theorem 12

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise (1, q), if \(q =\omega (\log n/n^2) \cap o(1/n)\), the expected running time is super-polynomial.

Proof

We use the same analysis procedure as Theorem 9. The only difference is the probability of accepting the offspring solution \(x'\) due to the change of noise. For the positive drift, we still have \(\mathrm {E}^+ \le \frac{i}{n}\), since we optimistically assume that \(x'\) is always accepted in the proof of Theorem 9.

For the negative drift, we need to re-analyze \(\mathrm {P}(f^n(x') \ge f^n(x))\) for the parent solution x with \({\mathrm {LO}}(x)=k\) and the offspring solution \(x'\) with \({\mathrm {LO}}(x')=j-1\) (where \(1 \le j \le k+1\)). For \(j\le k\), to derive a lower bound on \(\mathrm {P}(f^n(x') \ge f^n(x))\), we consider the j cases where \(f^n(x)=l\) and \(f^n(x') \ge l\) for \(0 \le l \le j-1\). Since \(\mathrm {P}(f^n(x)=l)=(1-q)^lq\) and \(\mathrm {P}(f^n(x') \ge l)=(1-q)^l\), Eq. (20) changes to

where the last inequality is by \((1-q)^{2j} \ge 1-2qj \ge 1/2\) since \(q =o(1/n)\). For \(j=k+1\) (i.e., \({\mathrm {LO}}(x')={\mathrm {LO}}(x)=k\)), we can use the same analysis as Eq. (30) to derive a lower bound \(1-q(k+1) \ge 1/2\), where the inequality is by \(q=o(1/n)\). Thus, Eq. (21) also holds here, i.e.,

By applying Eqs. (32) and (33) to \(\mathrm {E}^-\), Eq. (22) changes to

Thus, we have

For the upper bound analysis of \(\mathrm {P}(X_{t+1} \ne i \mid X_t=i)\) in the proof of Theorem 9, we only need to replace the acceptance probability \(p\frac{k+1}{n}\) in the case of \({\mathrm {LO}}(x') < {\mathrm {LO}}(x)\) with \((k+1)q\) (i.e., Eq. (31)). Thus, Eq. (23) changes to

To compare \(\mathrm {E}(X_t-X_{t+1} \mid X_t=i)\) with \(\mathrm {P}(X_{t+1} \ne i \mid X_t=i)\), we consider two cases: \(k < n-n^2q\) and \(k \ge n-n^2q\). By using \(q = \omega (\log n /n^2)\) and applying the same analysis procedure as Eqs. (24) and (25), we can derive that condition (1) of Theorem 3 holds with \(\epsilon =\frac{1}{192}\).

For the lower bound analysis of \(\mathrm {P}(X_{t+1} \ne i \mid X_t=i)\), by applying Eqs. (32) and (33), Eq. (26) changes to

For the analysis of \(|X_{t+1}-X_{t}|\ge j\), by replacing the acceptance probability \(p\frac{k+1}{n}\) in the case of \({\mathrm {LO}}(x') < {\mathrm {LO}}(x)\) with \((k+1)q\), Eq. (27) changes to

That is, condition (2) of Theorem 3 holds with \(\delta =1, r(l)=48\). Thus, the expected running time is super-polynomial. \(\square \)

For \(q=\varOmega (1/n)\), we prove a stronger result that the expected running time is exponentially lower bounded.

Theorem 13

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise (1, q), the expected running time is exponential if \(q=\varOmega (1/n)\).

Proof

We use Theorem 2 to prove it. Let \(X_t=i\) be the number of 0-bits of the solution x after t iterations of the (\(1+1\))-EA. We consider the interval \(i \in [0,n^{1/2}]\). To analyze the drift \(\mathrm {E}(X_t-X_{t+1} \mid X_t=i)\), we use the same analysis procedure as the proof of Theorem 9.

We first consider \(q = \varOmega (1/n) \cap o(1)\). We need to analyze the probability \(\mathrm {P}(f^n(x') \ge f^n(x))\), where the offspring solution \(x'\) is generated by flipping only one 1-bit of x. Let \({\mathrm {LO}}(x)=k\). For the case where the j-th (where \(1 \le j \le k\)) leading 1-bit is flipped, as the analysis of Eq. (32), we get

If \((1-q)^{2j}<\frac{1}{2}\), \(\frac{1-(1-q)^{2j}}{2} \ge \frac{1}{4}\); otherwise, \((1-q)^{2j} \frac{qj}{1-q} \ge \frac{qj}{2}\). Thus, we have

For the case that flips one non-leading 1-bit (i.e., \({\mathrm {LO}}(x')={\mathrm {LO}}(x)=k\)), to derive a lower bound on \(\mathrm {P}(f^n(x') \ge f^n(x))\), we consider \(f^{n}(x)=l\) and \(f^{n}(x') \ge l\) for \(0 \le l \le k\). Thus,

where the last inequality is by \(q=o(1)\). By applying the above two inequalities to Eq. (22), we get

If \(k \ge \frac{n}{2}\), \(\sum ^{k}_{j=1}\min \{\frac{1}{4},\frac{qj}{2}\}=\varOmega (n)\) since \(q=\varOmega (1/n)\). If \(k <\frac{n}{2}\), \(\frac{n-i-k}{2}=\varOmega (n)\) since \(i \le \sqrt{n}\). Thus, \(\mathrm {E}^{-}=\varOmega (1)\).

For \(q=\varOmega (1)\), we use the trivial lower bound q for the probability of accepting the offspring solution \(x'\), since it is sufficient to flip the first leading 1-bit of x by noise. Then,

Thus, for \(q=\varOmega (1/n)\), we have

That is, condition (1) of Theorem 2 holds. Its condition (2) trivially holds with \(\delta =1\) and \(r(l)=2\). Thus, the expected running time is exponential. \(\square \)

4.3 One-Bit Noise

For the (\(1+1\))-EA on LeadingOnes under one-bit noise, it has been known that the expected running time is polynomial if \(p \le 1/(6en^2)\) and exponential if \(p=1/2\) [19]. We extend this result by proving in Theorem 14 that the expected running time is polynomial if \(p =O(\log n/n^2)\) and super-polynomial if \(p=\omega (\log n/n)\). The proof can be accomplished in the same way as that of Theorems 8, 9 and 10 for bit-wise noise \((p,\frac{1}{n})\). This is because although the probabilities \(\mathrm {P}(f^n(x') \ge f^n(x))\) of accepting the offspring solution are different, their bounds used in the proofs for bit-wise noise \((p,\frac{1}{n})\) still hold for one-bit noise.

Theorem 14

For the (\(1+1\))-EA on LeadingOnes under one-bit noise, the expected running time is polynomial if \(p =O(\log n/n^2)\), super-polynomial if \(p=\omega (\log n/n) \cap o(1)\) and exponential if \(p=\varOmega (1)\).

Proof

We re-analyze \(\mathrm {P}(f^n(x') \ge f^n(x))\) for one-bit noise, and show that the bounds on \(\mathrm {P}(f^n(x') \ge f^n(x))\) used in the proofs for bit-wise noise \((p,\frac{1}{n})\) still hold for one-bit noise.

For the proof of Theorem 8, Eqs. (7) and (8) change to

and thus Eq. (9) still holds; Eqs. (12) and (13) change to

and thus Eq. (14) still holds.

For the proof of Theorem 9, Eqs. (18) and (19) change to

and thus Eq. (20) still holds.

For the proof of Theorem 10, Eq. (28) still holds by the above two equalities; Eq. (29) still holds since the probability of keeping the first \((k+1)\) bits of a solution unchanged in one-bit noise is \(1-p\frac{k+1}{n} \ge p(1-\frac{k+1}{n})\). \(\square \)

4.4 Experiments

In the previous three subsections, we have proved that for the (\(1+1\))-EA solving the LeadingOnes problem, if under bit-wise noise \((p,\frac{1}{n})\), the expected running time is polynomial when \(p=O(\log n /n^2)\) and super-polynomial when \(p=\omega (\log n /n)\); if under bit-wise noise (1, q), the expected running time is polynomial when \(q=O(\log n /n^3)\) and super-polynomial when \(q=\omega (\log n /n^2)\); if under one-bit noise, the expected running time is polynomial when \(p=O(\log n /n^2)\) and super-polynomial when \(p=\omega (\log n /n)\). However, the current analysis does not cover all the ranges of p and q. We thus have conducted experiments to complement the theoretical results.

For bit-wise noise \((p,\frac{1}{n})\), we do not know whether the running time is polynomial or super-polynomial when \(p=\omega (\log n/n^2) \cap O(\log n/n)\). We empirically estimate the expected running time for \(p=(\log n/n)^2\), \(\log n/n^{3/2}\) and \(\log n/n\). On each problem size n, we run the (\(1+1\))-EA 1000 times independently. In each run, we record the number of fitness evaluations until an optimal solution w.r.t. the true fitness function is found for the first time. Then the total number of evaluations of the 1000 runs are averaged as the estimation of the expected running time. To show the relationship between the estimated expected running time and the problem size n clearly, we plot the curve of \(\log (\text {estimated expected running time})/\log n\), as shown in Fig. 1. Note that in subfigures (a) and (b), the problem size n is in the range from 5 to 100, while in subfigure (c), n is from 5 to 45. This is because the expected running time with \(n > 45\) in subfigure (c) is too large to be estimated. We can observe that all the curves continue to rise as n increases, which suggests that the expected running time for the three tested p values is all \(n^{\omega (1)}\), i.e., super-polynomial. For bit-wise noise (1, q) and one-bit noise, we also empirically estimate the expected running time for the values of q and p, which are uncovered by our theoretical analysis. The results are plotted in Figs. 2 and 3, respectively, which are similar to that observed for bit-wise noise \((p,\frac{1}{n})\).

Therefore, these empirical results suggest that the expected running time is super-polynomial for the uncovered ranges of p and q in theoretical analysis, and thus the currently derived ranges of p and q allowing a polynomial running time might be tight. The rigorous analysis is not easy. We may need to analyze transition probabilities between fitness levels more precisely, and design an ingenious distance function or use more advanced analysis tools. We leave it as a future work.

Estimated expected running time (ERT) for the (\(1+1\))-EA on OneMax under noise. Note that for the three studied noise models, the largest noise levels allowing a polynomial running time derived in theoretical analysis are \(O(\log n/n)\), \(O(\log n/n^2)\) and \(O(\log n/n)\), respectively. a Bit-wise noise \((p,\frac{1}{n})\). b Bit-wise noise (1, q). c One-bit noise

Since the theoretical results are all asymptotic, we also empirically compare the expected running time to see when the asymptotic behaviors can be clearly distinguished. For each problem and each kind of noise, we estimate the expected running time for the largest noise level (denoted by a) allowing a polynomial running time derived in our theoretical analysis, and then compare it with the estimated expected running time for one relatively smaller noise level \(a/\log n\), and two relatively larger noise levels \(a\cdot \log n\) and \(a \cdot \sqrt{n}\). For example, for the OneMax problem under bit-wise noise \((p,\frac{1}{n})\), the largest noise level allowing a polynomial running time is \(O(\log n/n)\); thus we compare the estimated expected running time for \(p=1/n\), \(\log n/n\), \((\log n)^2/n\) and \(\log n/n^{1/2}\). The results are plotted in Figs. 4 and 5. We can observe that for the OneMax and LeadingOnes problems, the asymptotic polynomial behaviors (i.e., ‘green \(\circ \)’ and ‘red \(\times \)’) can be distinguished when the problem size n reaches nearly 200; while the asymptotic behaviors of polynomial (i.e., ‘green \(\circ \)’ and ‘red \(\times \)’) and super-polynomial (i.e., ‘blue \(\bigtriangleup \)’ and ‘black \(*\)’) can be clearly distinguished when n reaches 50 and 100, respectively.

5 The Robustness of Sampling to Noise

From the derived results in the above two sections, we can observe that the (\(1+1\))-EA is efficient for solving OneMax and LeadingOnes only under low noise levels. For example, for the (\(1+1\))-EA solving OneMax under bit-wise noise \((p,\frac{1}{n})\), the optimal solution can be found in polynomial time only when \(p=O(\log n/n)\). In this section, we analyze the robustness of the sampling strategy to noise. Sampling as presented in Definition 5 evaluates the fitness of a solution multiple (m) times independently and then uses the average to approximate the true fitness. We show that using sampling can significantly increase the largest noise level allowing a polynomial running time. For example, if using sampling with \(m=4n^3\), the (\(1+1\))-EA can always solve OneMax under bit-wise noise \((p,\frac{1}{n})\) in polynomial time, regardless of the value of p.

Estimated expected running time (ERT) for the (\(1+1\))-EA on LeadingOnes under noise. Note that for the three studied noise models, the largest noise levels allowing a polynomial running time derived in theoretical analysis are \(O(\log n/n^2)\), \(O(\log n/n^3)\) and \(O(\log n/n^2)\), respectively. a Bit-wise noise \((p,\frac{1}{n})\). b Bit-wise noise (1, q). c One-bit noise

5.1 The OneMax Problem

We prove in Theorems 15 and 18 that under bit-wise noise \((p,\frac{1}{n})\) or one-bit noise, the (\(1+1\))-EA can always solve OneMax in polynomial time by using sampling. For bit-wise noise (1, q), the tight range of q allowing a polynomial running time is \(1/2-1/n^{O(1)}\), as shown in Theorems 16 and 17. Let \(x^k\) denote any solution with k number of 1-bits, and \(f^n(x^{k})\) denote its noisy objective value. For proving polynomial upper bounds, we use Lemma 1, which gives a sufficient condition based on the probability \(\mathrm {P}(f^n(x^j) < f^{n}(x^{k+1}))\) for \(j \le k\). But for the (\(1+1\))-EA using sampling, the probability changes to be \(\mathrm {P}(\hat{f}(x^j) < \hat{f}(x^{k+1}))\), where \(\hat{f}(x^j)=\frac{1}{m}\sum ^{m}_{i=1}f^n_i(x^j)\) as shown in Definition 5. Lemma 1 requires a lower bound on \(\mathrm {P}(\hat{f}(x^j) < \hat{f}(x^{k+1}))\). Our proof idea as presented in Lemma 3 is to derive a lower bound on the expectation of \(f^{n}(x^{k+1})-f^n(x^j)\) and then apply Chebyshev’s inequality. We will directly use Lemma 3 in the following proofs. For proving super-polynomial lower bounds, we use Lemma 2 by replacing \(\mathrm {P}(f^n(x^k) < f^{n}(x^{k+1}))\) with \(\mathrm {P}(\hat{f}(x^k) < \hat{f}(x^{k+1}))\). Let poly(n) indicate any polynomial of n.

Lemma 3

Suppose there exists a real number \(\delta > 0\) such that

then the (\(1+1\))-EA using sampling with \(m=n^3/\delta ^2\) needs polynomial number of iterations in expectation for solving noisy OneMax.

Proof

We use Lemma 1 to prove it. For any \(j\le k<n\), let \(Y_{k,j}=f^n(x^{k+1})-f^n(x^j)\) and \(\hat{Y}_{k,j}=\hat{f}(x^{k+1})-\hat{f}(x^j)\). We then need to analyze the probability \(\mathrm {P}(\hat{f}(x^j)<\hat{f}(x^{k+1}))=\mathrm {P}(\hat{Y}_{k,j}>0)\).

Denote the expectation \(\mathrm {E}(Y_{k,j})\) as \(\mu _{k,j}\) and the variance \(\mathrm {Var}(Y_{k,j})\) as \(\sigma ^2_{k,j}\). It is easy to verify that \(\mathrm {E}(\hat{Y}_{k,j})=\mu _{k,j}\) and \(\mathrm {Var}(\hat{Y}_{k,j})=\sigma ^2_{k,j}/m\). By Chebyshev’s inequality, we have

Since \(\mu _{k,j}\ge \delta >0\), \(\sigma ^2_{k,j}=\mathrm {E}(Y^2_{k,j})-\mu ^2_{k,j} \le n^2\) and \(m=n^3/\delta ^2\), we have

where the last inequality holds with sufficiently large n. Let \(l=\log n\). Then, \(\mathrm {P}(\hat{Y}_{k,j}> 0) \ge 1-\frac{\log n}{15n} > 1- \frac{l}{n}\). Let \(c=\frac{1}{15}\). For \(k<n-l, \mathrm {P}(\hat{Y}_{k,j} >0) \ge 1-c\frac{n-k}{n}\). Thus, the condition of Lemma 1 (i.e., Eq. (1)) holds. We then get that the expected number of iterations is \(O(n\log n)+n2^{O(\log n)}=n^{O(1)}\), i.e., polynomial. \(\square \)

For bit-wise noise \((p,\frac{1}{n})\), we apply Lemma 3 to prove that the (\(1+1\))-EA using sampling with \(m=4n^3\) can always solve OneMax in polynomial time, regardless of the value of p.

Theorem 15

For the (\(1+1\))-EA on OneMax under bit-wise noise \((p,\frac{1}{n})\), if using sampling with \(m=4n^3\), the expected running time is polynomial.

Proof

We use Lemma 3 to prove it. Since \(\mathrm {E}(f^n(x^j))=j(1-\frac{p}{n})+(n-j)\frac{p}{n}=(1-\frac{2p}{n})j+p\), we have, for any \(j \le k < n\),

where the last inequality holds with \(n \ge 4\). Thus, by Lemma 3, we get that the expected number of iterations of the (\(1+1\))-EA using sampling with \(m=4n^3\) is polynomial. Since each iteration takes \(2m=8n^3\) number of fitness evaluations, the expected running time is also polynomial. \(\square \)

For bit-wise noise (1, q), we prove in the following two theorems that by using sampling, the tight range of q allowing a polynomial running time is \(1/2-1/n^{O(1)}\).

Theorem 16

For the (\(1+1\))-EA on OneMax under bit-wise noise (1, q) with \(q= 1/2-1/n^{O(1)}\), if using sampling, there exists some \(m = O(poly(n))\) such that the expected running time is polynomial.

Proof

We use Lemma 3 to prove it. Since \(q= 1/2-1/n^{O(1)}\), there exists a positive constant c such that \(q\le 1/2-1/n^c\). It is easy to verify that \(\mathrm {E}(f^n(x^j))=j(1-q)+(n-j)q = (1-2q)j+nq\). Thus, for any \(j \le k < n\),

By Lemma 3, we get that if using sampling with \(m=n^{3+2c}/4\), the expected number of iterations is polynomial, and then the expected running time is polynomial. Thus, the theorem holds. \(\square \)

Theorem 17

For the (\(1+1\))-EA on OneMax under bit-wise noise (1, q) with \(q=1/2-1/n^{\omega (1)}\) or \(q\ge 1/2\), if using sampling with any \(m=O(poly(n))\), the expected running time is exponential.

Proof

We use Lemma 2 to prove it. Note that for the (\(1+1\))-EA using sampling, we have to analyze \(\mathrm {P}(\hat{f}(x^k)< \hat{f}(x^{k+1}))\) instead of \(\mathrm {P}(f^n(x^k)< f^{n}(x^{k+1}))\).

Let Z denote a random variable which satisfies that \(\mathrm {P}(Z=0)=q\) and \(\mathrm {P}(Z=1)=1-q\). In the following proof, each \(Z_i\) is an independent random variable, which has the same distribution as Z. We have \( f^n(x^k)=\sum ^k_{i=1}Z_i+\sum ^n_{i=k+1}(1-Z_i)\), and then,

Since \(\hat{f}(x^k)=\frac{1}{m}\sum ^{m}_{i=1} f^n_i(x^k)\), which is the average of m independent evaluations, we have

where \(Z^*=\sum ^{m-1}_{j=0}\sum _{i=2nj+3}^{2nj+n+1}Z_i-\sum ^{m-1}_{j=0}\sum _{i=2nj+n+2}^{2n(j+1)}Z_i\). To make \(\hat{f}(x^k) \ge \hat{f}(x^{k+1})\), it is sufficient that \(Z^* \le 0\) and \(\sum ^{m-1}_{j=0}\sum _{i=2nj+1}^{2nj+2}Z_i \le m\). That is,

Since \(Z^*\) is the difference between the sum of the same number of \(Z_i\), \(Z^*\) has the same distribution as \(-Z^*\). Thus, \(\mathrm {P}(Z^*\le 0)+\mathrm {P}(Z^*\ge 0)=\mathrm {P}(Z^*\le 0)+\mathrm {P}(-Z^*\le 0)=2\mathrm {P}(Z^*\le 0)\ge 1\), which implies that

We then investigate \(\mathrm {P}(\sum ^{m-1}_{j=0}\sum _{i=2nj+1}^{2nj+2}Z_i\le m)\). Since \(\sum ^{m-1}_{j=0}\sum _{i=2nj+1}^{2nj+2}Z_i\) is the sum of 2m independent random variables which have the same distribution as Z, we have

For any \(t<m\), let \(r= \frac{(1-q)^tq^{2m-t}}{(1-q)^{2m-t}q^t} =(\frac{q}{1-q})^{2m-2t}\). If \(q\ge 1/2\), we have \(r\ge 1\). If \(q=1/2-1/n^{\omega (1)}\), we have

where the first inequality is by \(q\le 1/2\), the second inequality is by \(1-2q=2/n^{\omega (1)}\) and \(1-q \ge 1/2\), and the last is by \(m=O(poly(n))\). Thus, \(\mathrm {P}(\sum ^{m-1}_{j=0}\sum _{i=2nj+1}^{2nj+2}Z_i \le m)>1/3\cdot \mathrm {P}(\sum ^{m-1}_{j=0}\sum _{i=2nj+1}^{2nj+2}Z_i > m)\), which implies that

By applying Eqs. (35) and (36) to Eq. (34), we get

Let \(c=16\) and \(l=n/128\). For any \(n-l\le k<n\),

i.e., the condition of Lemma 2 holds. Thus, the expected number of iterations is \(2^{\varOmega (n/128)}\), and the expected running time is exponential. \(\square \)

For one-bit noise, we show that using sampling with \(m =4n^3\) is sufficient to make the (\(1+1\))-EA solve OneMax in polynomial time.

Theorem 18

For the (\(1+1\))-EA on OneMax under one-bit noise, if using sampling with \(m =4n^3\), the expected running time is polynomial.

Proof

It is easy to verify that the expectation of \(f^n(x^j)\) (i.e., \(\mathrm {E}(f^n(x^j))\)) under one-bit noise is the same as that under bit-wise noise \((p,\frac{1}{n})\). Thus, the proof can be finished in the same way as that of Theorem 15. \(\square \)

From the above analysis, we can intuitively explain why sampling is always effective for bit-wise noise \((p,\frac{1}{n})\) and one-bit noise, while it fails for bit-wise noise (1, q) when \(q=1/2-1/n^{\omega (1)}\) or \(q\ge 1/2\). For two solutions x and y with \(f(x) > f(y)\), if under bit-wise noise \((p,\frac{1}{n})\) and one-bit noise, the noisy fitness \(f^n(x)\) is larger than \(f^n(y)\) in expectation, and using sampling will increase this trend and make the probability of accepting the true worse solution y sufficiently small. If under bit-wise noise (1, q), when \(q=1/2-1/n^{\omega (1)}\), although the noisy fitness \(f^n(x)\) is still larger in expectation, the gap is very small (in the order of \(1/n^{\omega (1)}\)) and a polynomial sample size is not sufficient to make the probability of accepting the true worse solution y small enough; when \(q\ge 1/2\), the noisy fitness \(f^n(x)\) is smaller in expectation, and using sampling will increase this trend and it obviously does not work.

5.2 The LeadingOnes Problem

The bit-wise noise \((p,\frac{1}{n})\) model is first considered. We prove in Theorem 19 that the (\(1+1\))-EA using sampling can solve the LeadingOnes problem in polynomial time, regardless of the value of p. The proof idea is similar to that of Theorem 8. The main difference is the probability of accepting the offspring solution \(x'\), which is changed from \(\mathrm {P}(f^n(x')\ge f^n(x))\) to \(\mathrm {P}(\hat{f}(x')\ge \hat{f}(x))\) due to sampling. Lemma 4 gives some bounds on this probability, which will be used in the proof of Theorem 19.

Lemma 4

For the LeadingOnes problem under bit-wise noise \((p,\frac{1}{n})\), if using sampling with \(m=144n^6\), it holds that

-

(1)

for any x with \({\mathrm {LO}}(x)=i<n\) and y with \({\mathrm {LO}}(y) \le i-2\) or \({\mathrm {LO}}(y)=i-1 \wedge y_{i+1}=0\), \(\mathrm {P}(\hat{f}(x) \le \hat{f}(y))\le 1/n^2\).

-

(2)

for any y with \({\mathrm {LO}}(y) <n\), \(\mathrm {P}(\hat{f}(1^n) \le \hat{f}(y))\le 1/(4n^4)\).

Proof

The proof is finished by deriving a lower bound on the expectation of \(f^n(x)-f^n(y)\) (which is equal to the expectation of \(\hat{f}(x)-\hat{f}(y)\)) and then applying Chebyshev’s inequality. We first consider case (1). For any x with \({\mathrm {LO}}(x)=i<n\),

Note that when flipping the first 0-bit of x and keeping the i leading 1-bits unchanged, the fitness is at least \(i+1\) and at most n. Then for any \(1\le i<n\), we have

Thus, for any x with \({\mathrm {LO}}(x)=i\) and y with \({\mathrm {LO}}(y) \le i-2\) (where \(2 \le i<n\)), letting z be any solution with \({\mathrm {LO}}(z)={\mathrm {LO}}(y)+1\), we have

where the first inequality is by repeatedly applying Eq. (38), the second inequality is by \({\mathrm {LO}}(y)\le i-2\), and the last holds with \(n \ge 12\).

When \({\mathrm {LO}}(y)=i-1 \wedge y_{i+1}=0\), we have to re-analyze Eq. (38) to derive a tighter lower bound, because applying Eq. (38) directly will lead to a negative lower bound for \(i=1\). We first derive a tighter upper bound on \(\mathrm {E}(f^n(y))\). When flipping the i-th bit of y and keeping the \(i-1\) leading 1-bits unchanged, we can now further consider the flipping of the \((i+1)\)-th bit since we know that \(y_{i+1}=0\), rather than directly using a trivial upper bound n on the noisy fitness. If \(y_{i+1}\) is not flipped, \(f^n(y)= i\); otherwise, \(f^n(y)\le n\). Thus, we get

By combining this inequality and the lower bound in Eq. (37), we have that, for any x with \({\mathrm {LO}}(x)=i\) and y with \({\mathrm {LO}}(y) = i-1 \wedge y_{i+1}=0\) (where \(1 \le i<n\)),

where the second inequality holds with \(n \ge 4\).

According to Eqs. (39) and (40), we have a unified lower bound 1 / (12n) on \(\mathrm {E}(f^n(x)-f^n(y))\). Denote \(\mathrm {E}(f^n(x)-f^n(y))\) as \(\mu \) and \(\mathrm {Var}(f^n(x)-f^n(y))\) as \(\sigma ^2\). We have \(\mu \ge 1/(12n)\) and \(\sigma ^2 \le n^2\) since \(|f^n(x)-f^n(y)| \le n\). As \(\hat{f}(x)\) is the average of \(m=144n^6\) independent evaluations, it is easy to verify that \(\mathrm {E}(\hat{f}(x)-\hat{f}(y))=\mu \) and \(\mathrm {Var}(\hat{f}(x)-\hat{f}(y))=\sigma ^2/m\). By Chebyshev’s inequality,

Thus, case (1) holds.

For case (2), we first analyze \(\mathrm {E}(f^n(1^n)-f^n(1^{n-1}0))\). The expectation on \(f^n(1^n)\) can be easily calculated as follows:

Combining this equality with the upper bound in Eq. (37), we get

Then, for any y with \({\mathrm {LO}}(y)<n\), we have

where \(z^{n-1}=1^{n-1}0\), \(z^k \; ({\mathrm {LO}}(y)<k<n-1)\) denotes one solution z with \({\mathrm {LO}}(z)=k\), \(z^{{\mathrm {LO}}(y)}=y\), and the inequality is by applying Eqs. (42) and (38). As the analysis for \(\mathrm {P}(\hat{f}(x)\le \hat{f}(y))\) in case (1) (i.e., Eq. (41)), we can similarly use Chebyshev’s inequality to derive that, noting that \(\mu \ge 1/6\) here,

Thus, case (2) holds. \(\square \)

The following theorem shows that for bit-wise noise \((p,\frac{1}{n})\), using sampling with \(m=144n^6\) is sufficient to make the (\(1+1\))-EA solve LeadingOnes in polynomial time.

Theorem 19

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise \((p,\frac{1}{n})\),if using sampling with \(m=144n^6\), the expected running time is polynomial.

Proof

We use Theorem 1 to prove it. We first construct a distance function V(x) as, for any x with \({\mathrm {LO}}(x)=i\),

where \(c=13\). Then, we investigate \(\mathrm {E}(V(\xi _t)-V(\xi _{t+1}) \mid \xi _t=x)\) for any x with \({\mathrm {LO}}(x)<n\). Assume that currently \({\mathrm {LO}}(x)=i\), where \(0\le i \le n-1\). We divide the drift into two parts: positive \(\mathrm {E}^+\) and negative \(\mathrm {E}^-\). That is,

The positive drift \(\mathrm {E}^+\) can be expressed as Eq. (4), except that \(\mathrm {P}(f^n(x')\ge f^n(x))\) changes to \(\mathrm {P}(\hat{f}(x')\ge \hat{f}(x))\) due to sampling. To derive a lower bound on \(\mathrm {E}^+\), we only consider that the \((i+1)\)-th bit of x is flipped and the other bits keep unchanged, the probability of which is \(\frac{1}{n}(1-\frac{1}{n})^{n-1}\). The only difference between \(x'\) and x is the \((i+1)\)-th bit and \({\mathrm {LO}}(x')>{\mathrm {LO}}(x)=i\). If \({\mathrm {LO}}(x')=n\), \(\mathrm {P}(\hat{f}(x') \le \hat{f}(x)) \le 1/(4n^4)\) by case (2) of Lemma 4, and then \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x)) \ge 1-1/(4n^4)\). If \({\mathrm {LO}}(x')<n\), it must hold that \({\mathrm {LO}}(x)\le {\mathrm {LO}}(x')-1 \wedge x_{{\mathrm {LO}}(x')+1}=x'_{{\mathrm {LO}}(x')+1}=0\). By case (1) of Lemma 4, \(\mathrm {P}(\hat{f}(x') \le \hat{f}(x)) \le 1/n^2\), and then \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x)) \ge 1-1/n^2\). Thus, the probability of accepting the offspring solution \(x'\) is at least 1 / 2. Since \({\mathrm {LO}}(x')>i\), \(V(x)-V(x') \ge (1+\frac{c}{n})^{i+1}-(1+\frac{c}{n})^i=\frac{c}{n}(1+\frac{c}{n})^i\). Then, \(\mathrm {E}^+\) can be lower bounded as follows:

For the negative drift \(\mathrm {E}^{-}\), we need to consider \({\mathrm {LO}}(x')<{\mathrm {LO}}(x)=i\). Since \(V(x')-V(x) \le V(0^n)-V(x)= (1+\frac{c}{n})^i-1\), Eq. (5) becomes

We further divide \({\mathrm {LO}}(x')<i\) into two cases. If \({\mathrm {LO}}(x')\le i-2\) or \({\mathrm {LO}}(x')= i-1 \wedge x'_{i+1}=0\), then \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x)) \le 1/n^2\) by case (1) of Lemma 4. If \({\mathrm {LO}}(x')= i-1 \wedge x'_{i+1}=1\), then \(\sum _{x': {\mathrm {LO}}(x')= i-1 \wedge x'_{i+1}=1}\mathrm {P}_{mut}(x,x') \le 1/n^2\) since it is necessary to flip the i-th and the \((i+1)\)-th bits of x in mutation. Then, we get

By subtracting \(\mathrm {E}^-\) from \(\mathrm {E}^+\), we have, noting that \(c=13\),

Since \(V(x) \le (1+\frac{13}{n})^n \le e^{13}=O(1)\), we have \(\mathrm {E}(\tau \mid \xi _0)=O(n^{2})\) by Theorem 1. Each iteration of the (\(1+1\))-EA using sampling takes \(2m=288n^6\) number of fitness evaluations, thus the expected running time is polynomial. \(\square \)

For bit-wise noise (1, q), we prove in Theorems 20 and 21 that the expected running time is polynomial if and only if \(q = O(\log n/n)\). The proof of Theorem 20 is similar to that of Theorem 19, which considers bit-wise noise \((p,\frac{1}{n})\). The main difference is the probability of accepting the offspring solution \(x'\) (i.e., \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x))\)), due to the change of noise. Lemma 5 gives some bounds on this probability, which will be used in the proof of Theorem 20.

Lemma 5

For the LeadingOnes problem under bit-wise noise (1, q) with \(q\le c_0\log n/n\) (where \(c_0\) is a positive constant), if using sampling with \(m=36n^{2c_0+4}\), it holds that

-

(1)

for any x with \({\mathrm {LO}}(x)=i<n\) and y with \({\mathrm {LO}}(y) \le i-5c_0\log n\) or \(i-5c_0\log n<{\mathrm {LO}}(y)\le i-1 \wedge y_{i+1}=0\), \(\mathrm {P}(\hat{f}(x) \le \hat{f}(y))\le 1/n^2\).

-

(2)

for any y with \({\mathrm {LO}}(y) <n\), \(\mathrm {P}(\hat{f}(1^n) \le \hat{f}(y))\le 1/n^2\).

Proof

The proof is finished by deriving a lower bound on the expectation of \(f^n(x)-f^n(y)\) and then applying Chebyshev’s inequality. We first consider case (1). For any x with \({\mathrm {LO}}(x)=i<n\),

By applying these two inequalities, we get, for any \(k<i<n\),

Thus, for any x with \({\mathrm {LO}}(x)=i\) and y with \({\mathrm {LO}}(y)=k\le i-5c_0\log n\) (where \(5c_0\log n\le i<n\)),

where the third inequality is by \(e^x\ge 1+x\), the fourth is by \(5c_0q \log n \le 5(c_0\log n)^2/n \le 1\) for sufficiently large n, the fifth is by \(q \le c_0\log n /n\), and the last is by

When \(i-5c_0\log n<{\mathrm {LO}}(y)\le i-1 \wedge y_{i+1}=0\) (where \(i\ge 1\)), we calculate \(\mathrm {E}(f^n(x)-f^n(y))\) by

where \(z^i=x\), \(z^k \; ({\mathrm {LO}}(y)<k<i)\) denotes one solution z with \({\mathrm {LO}}(z)=k \wedge z_{i+1}=0\), and \(z^{{\mathrm {LO}}(y)}=y\). We then give a lower bound on \(\mathrm {E}(f^n(z^k)-f^n(z^{k-1}))\), where \({\mathrm {LO}}(y)+1\le k\le i\). For \(\mathrm {E}(f^n(z^k))\), we directly use the lower bound in Eq. (43) to get that

For \(\mathrm {E}(f^n(z^{k-1}))\), instead of directly using the upper bound in Eq. (43), we derive a tighter one:

Note that the inequality is because when the \(k-1\) leading 1-bits of \(z^{k-1}\) keep unchanged and its k-th bit (which must be 0) is flipped, if the \((i+1)\)-th bit (which is 0) is flipped, \(f^n(z^{k-1})\le n\); otherwise, \(f^n(z^{k-1})\le i\). By combining the above two inequalities, we get

where the equality by \(k \ge {\mathrm {LO}}(y)+1 >i-5c_0\log n+1\) and \(q =O(\log n/n)\), and the last is by Eq. (46). Thus, we have

For case (1), by combining Eqs. (45) and (47), we get a unified lower bound \(1/(2en^{c_0})\) on \(\mathrm {E}(f^n(x)-f^n(y))\). As the analysis for \(\mathrm {P}(\hat{f}(x) \le \hat{f}(y))\) (i.e., Eq. (41)) in the proof of Lemma 4, we can similarly use Chebyshev’s inequality to derive that, noting that \(m=36n^{2c_0+4}\) here,

where \(\mu \) and \(\sigma ^2\) are the expectation and variance of \(f^n(x)-f^n(y)\), respectively. Thus, case (1) holds.

Then, we consider case (2), that is, we are to analyze \(\mathrm {P}(\hat{f}(1^n)\le \hat{f}(y))\) with \({\mathrm {LO}}(y)<n\). We calculate \(\mathrm {E}(f^n(1^n)-f^n(y))\) as follows:

It is easy to derive that

Then, we have

where the inequality is by Eq. (46) and \(q=O(\log n/n)\). If \({\mathrm {LO}}(y)\le n-1-5c_0\log n\), by Eq. (45), we directly have \(\mathrm {E}(f^n(1^{n-1}0)-f^n(y))\ge \frac{c_0\log n}{en^{c_0}} \ge 0\). If \({\mathrm {LO}}(y)\ge n-5c_0\log n\), \(\mathrm {E}(f^n(1^{n-1}0)-f^n(y))\) is calculated as follows:

where \(z^{n-1}=1^{n-1}0\), \(z^k \; ({\mathrm {LO}}(y)<k<n-1)\) denotes one solution z with \({\mathrm {LO}}(z)=k\), and \(z^{\mathrm {LO(y)}}=y\). By Eq. (44), we have, for any \({\mathrm {LO}}(y)+1 \le k<n\),

which implies that \(\mathrm {E}(f^n(1^{n-1}0)-f^n(y))\ge 0\). Then, we get

As the analysis in case (1) (i.e., Eq. (48)), we can get that

Thus, case (2) holds. \(\square \)

The following theorem shows that by using sampling, the (\(1+1\))-EA can solve LeadingOnes under bit-wise noise (1, q) in polynomial time when q is in the range of \(O(\log n/n)\).

Theorem 20

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise (1, q) with \(q=O(\log n/n)\), if using sampling, there exists some \(m= O(poly(n))\) such that the expected running time is polynomial.

Proof

Since \(q=O(\log n/n)\), there exists a positive constant \(c_0\) such that for all n large enough, \(q\le c_0\log n/n\). We prove that if using sampling with \(m=36n^{2c_0+4}\), the expected running time is polynomial.

The proof is similar to that of Theorem 19. The distance function V(x) is defined as, for any x with \({\mathrm {LO}}(x)=i\), \(V(x)=\left( 1+\frac{c}{n}\right) ^n-\left( 1+\frac{c}{n}\right) ^i\), where \(c=30c_0\log n+7\). Assume that currently \({\mathrm {LO}}(x)=i\), where \(0\le i \le n-1\). For the positive drift \(\mathrm {E}^+\), we consider that only the \((i+1)\)-th bit (i.e., the first 0-bit) of x is flipped in mutation. If \({\mathrm {LO}}(x')=n\), \(\mathrm {P}(\hat{f}(x') \le \hat{f}(x)) \le 1/n^2\) by case (2) of Lemma 5. If \({\mathrm {LO}}(x')<n\), it must hold that \({\mathrm {LO}}(x)\le {\mathrm {LO}}(x')-1 \wedge x_{{\mathrm {LO}}(x')+1}=x'_{{\mathrm {LO}}(x')+1}=0\), since x and \(x'\) are the same except the \(({\mathrm {LO}}(x)+1)\)-th bit. By case (1) of Lemma 5, \(\mathrm {P}(\hat{f}(x') \le \hat{f}(x)) \le 1/n^2\). Thus, the probability of accepting the offspring solution \(x'\) is \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x)) \ge 1-1/n^2 \ge 1/2\). The positive drift then can be lower bounded by

For the negative drift \(\mathrm {E}^{-}\), we need to consider \({\mathrm {LO}}(x')<{\mathrm {LO}}(x)=i\). We further divide \({\mathrm {LO}}(x')<i\) into two cases. If \({\mathrm {LO}}(x')\le i-5c_0\log n\) or \(i-5c_0\log n<{\mathrm {LO}}(x')\le i-1 \wedge x'_{i+1}=0\), then \(\mathrm {P}(\hat{f}(x') \ge \hat{f}(x)) \le 1/n^2\) by case (1) of Lemma 5. If \(i-5c_0\log n<{\mathrm {LO}}(x')\le i-1 \wedge x'_{i+1}=1\), we consider the probability of generating \(x'\) by mutation on x. Since it is necessary to flip the \((i+1)\)-th bit of x and at least one 1-bit in positions \(i-5c_0\log n+2\) to i simultaneously,

Then, we get

By subtracting \(\mathrm {E}^-\) from \(\mathrm {E}^+\), we have, noting that \(c=30c_0\log n+7\),

Note that \(V(x) \le (1+\frac{c}{n})^n \le e^c = e^{30c_0\log n+7}= n^{O(1)}\). By Theorem 1, we have \(\mathrm {E}(\tau \mid \xi _0)\le 6n^2\cdot n^{O(1)}\). Since each iteration of the (\(1+1\))-EA using sampling takes \(2m=72n^{2c_0+4}\) number of fitness evaluations, the expected running time is polynomial. \(\square \)

For bit-wise noise (1, q) with \(q=\omega (\log n/n)\), we apply the negative drift theorem (i.e., Theorem 2) to prove that using sampling still cannot guarantee a polynomial running time.

Theorem 21

For the (\(1+1\))-EA on LeadingOnes under bit-wise noise (1, q) with \(q=\omega (\log n/n)\), if using sampling with any \(m= O(poly(n))\), the expected running time is exponential.

Proof

We use Theorem 2 to prove it. Let \(X_t=|x|_0\) be the number of 0-bits of the solution x after t iterations of the algorithm. We consider the interval [0, n / 50], that is, the parameters \(a=0\) and \(b=n/50\) in Theorem 2. Then, we analyze the drift \(\mathrm {E}(X_t-X_{t+1}\mid X_t=i)\) for \(1\le i<n/50\). As in the proof of Theorem 9, we divide the drift into two parts: positive \(\mathrm {E}^+\) and negative \(\mathrm {E}^-\). That is,

For the positive drift, we can use the same analysis as that (i,e., Eq. (17)) in the proof of Theorem 9 to derive that \(\mathrm {E}^+\le i/n<1/50\). This is because the offspring solution \(x'\) is optimistically assumed to be always accepted in the analysis of Eq. (17), and thus the change of noise and the use of sampling will not affect the analysis.

For the negative drift, we need to consider that the number of 0-bits is increased. To derive a lower bound on \(\mathrm {E}^-\), we only consider the \(n-i\) cases where only one 1-bit of x is flipped, which happens with probability \(\frac{1}{n}(1-\frac{1}{n})^{n-1}\). Let \(x^j\) denote the solution that is generated by flipping only the j-th bit of x. Then, we have