Abstract

We introduce an exactly-solvable model of random walk in random environment that we call the Beta RWRE. This is a random walk in \(\mathbb {Z}\) which performs nearest neighbour jumps with transition probabilities drawn according to the Beta distribution. We also describe a related directed polymer model, which is a limit of the q-Hahn interacting particle system. Using a Fredholm determinant representation for the quenched probability distribution function of the walker’s position, we are able to prove second order cube-root scale corrections to the large deviation principle satisfied by the walker’s position, with convergence to the Tracy–Widom distribution. We also show that this limit theorem can be interpreted in terms of the maximum of strongly correlated random variables: the positions of independent walkers in the same environment. The zero-temperature counterpart of the Beta RWRE can be studied in a parallel way. We also prove a Tracy–Widom limit theorem for this model.


1 Introduction

We study an exactly solvable one-dimensional random walk in space–time i.i.d. random environment. It is a random walk on \(\mathbb {Z}\) which performs nearest neighbour steps, according to transition probabilities following the Beta distribution and drawn independently at each time and each location. We call this model the Beta RWRE. Using methods of integrable probability, we find an exact Fredholm determinantal formula for the Laplace transform of the quenched probability distribution of the walker’s position. An asymptotic analysis of this formula allows us to prove a very precise limit theorem. It was already known that such a random walk satisfies a quenched large deviation principle [34]. We show that for the Beta RWRE, the second order correction to the large deviation principle fluctuates on the cube-root scale with Tracy–Widom statistics. This brings the scope of KPZ universality to random walks in dynamic random environment, and the Beta RWRE is the first RWRE for which such a limit theorem has been proved. Moreover, our result translates in terms of the maximum of the locations of independent walkers in the same environment. Hence, the Beta RWRE can also be considered as a toy model for studying maxima of strongly correlated random variables.

Our route to discover the exact solvability of the Beta RWRE was through an equivalent directed polymer model with Beta weights, which is itself a limit of the q-Hahn TASEP (introduced in [30] and further studied in [15]). However, we show that the RWRE/polymer model can be analysed independently of its interacting particle system origin, via a rigorous variant of the replica method.

Our work generalizes a study of similar spirit, where a limit of the discrete-time geometric q-TASEP [3] was related to the strict-weak lattice polymer [17] (see also [29]). It should be emphasized that this procedure of translating the algebraic structure of interacting particle systems to directed polymer models was already fruitful in [4], where formulas for the q-TASEP made it possible to study the law of continuous directed polymers related to the KPZ equation.

2 Definitions and main results

2.1 Random walk in space–time i.i.d. Beta environment

Definition 2.1

Let \((B_{x, t})_{x\in \mathbb {Z}, t\in \mathbb {Z}_{\geqslant 0}}\) be a collection of independent random variables following the Beta distribution, with parameters \(\alpha \) and \(\beta \). We call this collection of random variables the environment of the walk. Recall that if a random variable B is drawn according to the \(Beta(\alpha , \beta )\) distribution, then for \(0\leqslant r \leqslant 1\),

\[\mathbb {P}(B\leqslant r)=\frac{\Gamma (\alpha +\beta )}{\Gamma (\alpha )\Gamma (\beta )}\int _0^r x^{\alpha -1}(1-x)^{\beta -1}\,\mathrm {d}x.\]

In this environment, we define the random walk in space–time Beta environment (abbreviated Beta-RWRE) as a random walk \((X_t)_{t\in \mathbb {Z}_{\geqslant 0}}\) in \(\mathbb {Z}\), starting from 0 and such that

-

\(X_{t+1}=X_t +1\) with probability \(B_{X_t, t}\) and

-

\(X_{t+1}=X_t -1\) with probability \(1- B_{X_t, t}\).

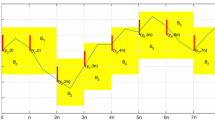

A sample path is depicted in Fig. 1. We denote by \(\mathsf {P}\) and \(\mathsf {E}\) (resp. \(\mathbb {P}\) and \(\mathbb {E}\)) the measure and expectation associated to the random walk (resp. to the environment).

Let \(P(t, x)=\mathsf {P}(X_t\geqslant x)\). This is a random variable with respect to \(\mathbb {P}\). Our first aim is to show that the Beta RWRE model is exactly solvable, in the sense that we are able to find the distribution of P(t, x), by exploiting an exact formula for the Laplace transform of P(t, x).
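For a single sampled environment, P(t, x) can be computed exactly by pushing the walker's probability mass forward one time step at a time. The following is a minimal Python sketch, not code from the paper: the helper name `quenched_tail` and the array layout `B[s, x + t]` \(=B_{x,s}\) are our own hypothetical choices, with positions shifted by t so that they index an array of length \(2t+1\).

```python
import numpy as np

def quenched_tail(B, t):
    """Quenched tail P(t, x) = P(X_t >= x), x = -t, ..., t, in one fixed environment.

    B has shape (t, 2t + 1) and B[s, x + t] stores B_{x,s} (hypothetical layout),
    the probability of stepping from x to x + 1 at time s.
    """
    p = np.zeros(2 * t + 1)        # p[x + t] = P(X_s = x)
    p[t] = 1.0                     # X_0 = 0
    for s in range(t):
        new = np.zeros_like(p)
        new[1:] += p[:-1] * B[s, :-1]          # up:   x -> x + 1 w.p. B_{x,s}
        new[:-1] += p[1:] * (1.0 - B[s, 1:])   # down: x -> x - 1 w.p. 1 - B_{x,s}
        p = new
    return np.cumsum(p[::-1])[::-1]            # tail sums over y >= x
```

For example, `quenched_tail(np.random.default_rng(0).beta(1.0, 1.0, size=(10, 21)), 10)` returns the ten-step tail probabilities in one uniform environment. In the constant environment \(B\equiv 1/2\) the function reduces to binomial tails, which gives a quick sanity check.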

Remark 2.2

The random walk \((\mathbf {X}_t)_t\) in \(\mathbb {Z}^2\), where \(\mathbf {X}_t:=(t, X_t)\) is a random walk in random environment in the classical sense, i.e. the environment is not dynamic (see Fig. 1). It is a very particular case of random walk in Dirichlet random environment [21]. Dirichlet RWREs have generated some interest because it can be shown using connections between Dirichlet law and Pólya urn scheme that the annealed law of such random walks is the same as that of oriented-edge-reinforced random walks [20]. However, since the random walk \((\mathbf {X}_t)\) can go through a given edge of \(\mathbb {Z}^2\) at most once, the connection to self-reinforced random walks is irrelevant for the Beta RWRE.

Remark 2.3

-

The Beta distribution with parameters (1, 1) is the uniform distribution on (0, 1).

-

For B a random variable with \(Beta(\alpha , \beta )\) distribution, \(1-B\) is distributed according to a Beta distribution with parameters \((\beta , \alpha )\). Consequently, exchanging the parameters \(\alpha \) and \(\beta \) of the Beta RWRE corresponds to applying a symmetry with respect to the time axis.

Fig. 2. The thick line represents a possible polymer path in the point-to-point Beta polymer model. The dotted thick part represents a modification of the polymer path that is admissible if one considers the half-line-to-point polymer (see Sect. 2.2.2). The partition function \(\tilde{Z}(s,k)\) of the half-line-to-point model at the point (s, k) shown in gray equals 1.

2.2 Definition of the Beta polymer

2.2.1 Point to point Beta polymer

Definition 2.4

A point-to-point Beta polymer is a measure \(Q_{t,n}\) on lattice paths \(\pi \) between (0, 1) and (t, n). At each site (s, k) the path is allowed to

-

jump horizontally to the right from (s, k) to \((s+1,k)\),

-

or jump diagonally to the upright from (s, k) to \((s+1,k+1)\).

An admissible path is shown in Fig. 2. Let \(B_{i,j}\) be independent random variables distributed according to the Beta distribution with parameters \(\mu \) and \(\nu -\mu \), where \(0<\mu <\nu \). The measure \(Q_{t,n}\) is defined by

\[Q_{t,n}(\pi )=\frac{1}{Z(t,n)}\prod _{e\in \pi }w_e,\]

where the product is taken over the edges of \(\pi \) and the weights \(w_e\) are defined by

and Z(t, n) is a normalisation constant called the partition function,

\[Z(t,n)=\sum _{\pi :(0,1)\rightarrow (t,n)}\ \prod _{e\in \pi }w_e.\]

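The free energy of the Beta polymer is \(\log Z(t,n)\). The partition function of the Beta polymer satisfies the recurrence

\[Z(t,n)=Z(t-1,n)\,B_{t,n}+Z(t-1,n-1)\,(1-B_{t,n}),\qquad 1\leqslant n\leqslant t,\]

with the initial data

\[Z(t,t+1)=1,\qquad Z(t,0)=0\qquad (t\geqslant 0).\]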

Remark 2.5

One recovers the strict-weak lattice polymer described in [17, 29] in the limit \(\nu \rightarrow \infty \). As \(\nu \) goes to infinity,

\[\nu \, Beta(\mu , \nu -\mu )\Rightarrow Gamma(\mu )\]

and \(1-Beta(\mu , \nu -\mu )\Rightarrow 1\). There are \(t-n+1\) horizontal edges in any admissible lattice path from (0, 1) to (t, n), and thus

\[\nu ^{t-n+1}Z(t,n)\Rightarrow Z^{\mathrm {SW}}(t,n),\]

where \(Z^{\mathrm {SW}}(t,n)\) is the partition function of the strict-weak polymer. Indeed, in the strict-weak polymer, the horizontal edges have weights \(Gamma(\mu )\) whereas diagonal edges have weight 1.

2.2.2 Half-line to point Beta polymer

Another (equivalent) possible interpretation of the same quantity Z(t, n) is the partition function of an ensemble of polymer paths starting from the “half-line” \(\lbrace (0,n) :n>0\rbrace \). Fix \(t\geqslant 0\) and \(n>0\). One considers paths starting from any point (0, m) for \(0<m\leqslant n\) and ending at (t, n). As for the point-to-point Beta polymer, paths are allowed to make right and diagonal steps. The weight of any path is the product of the weights of each edge along the path, and the weight \(\tilde{w}_e\) of the edge e is now defined by

Let us denote by \(\tilde{Z}(t,n)\) the partition function in the half-line to point model. It is characterized by the recurrence

\[\tilde{Z}(t,n)=\tilde{Z}(t-1,n)\,B_{t,n}+\tilde{Z}(t-1,n-1)\,(1-B_{t,n})\]

for all \(t,n>0\), with the convention \(\tilde{Z}(t,0)=0\), and the initial condition \(\tilde{Z}(0,n)=1\) for \(n>0\). With the above definition of weights, we can see by induction that for any \(t\geqslant 0\) and \(n>t\), \(\tilde{Z}(t, n)=1\). For example, in Fig. 2, the possible paths leading to (s, k) are shown in gray. In the figure, one has

Consequently, the partition functions of the half-line-to-point and the point-to-point models coincide for \(t+1\geqslant n\). In the following, we drop the tilde above Z, even when considering the half-line-to-point model, since the models are equivalent.
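Assuming the recurrence \(\tilde{Z}(t,n)=\tilde{Z}(t-1,n)B_{t,n}+\tilde{Z}(t-1,n-1)(1-B_{t,n})\) (horizontal edges into (t, n) weighted by \(B_{t,n}\), diagonal ones by \(1-B_{t,n}\)), with \(\tilde{Z}(0,n)=1\) for \(n>0\) and \(\tilde{Z}(t,0)=0\), the half-line-to-point partition function can be tabulated directly for one sampled environment. The sketch below uses a hypothetical helper `beta_polymer_Z`. It makes the induction \(\tilde{Z}(t,n)=1\) for \(n>t\) visible numerically: the two incoming weights at every site sum to 1.

```python
import numpy as np

def beta_polymer_Z(B, t_max, n_max):
    """Half-line-to-point partition functions Z(t, n) for 0 <= t <= t_max, 0 <= n <= n_max.

    B[t, n] holds B_{t,n} ~ Beta(mu, nu - mu); row t = 0 and column n = 0 are unused.
    """
    Z = np.zeros((t_max + 1, n_max + 1))
    Z[0, 1:] = 1.0                     # initial condition Z(0, n) = 1 for n > 0
    for t in range(1, t_max + 1):
        for n in range(1, n_max + 1):
            # horizontal edge weighted B_{t,n}, diagonal edge weighted 1 - B_{t,n};
            # Z(t - 1, 0) = 0 because no path starts at height 0
            Z[t, n] = Z[t - 1, n] * B[t, n] + Z[t - 1, n - 1] * (1.0 - B[t, n])
    return Z
```

Along the bottom row only horizontal edges are available, so \(Z(t,1)\) is simply the product \(B_{1,1}\cdots B_{t,1}\).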

By deforming the lattice so that admissible paths are up/right, and reversing the orientation of the paths, one sees that the Beta polymer and the Beta-RWRE are closely related models, in the sense of Proposition 2.6. This proposition is proved in Sect. 3.3.

Proposition 2.6

Consider the Beta-RWRE with parameters \(\alpha , \beta >0\) and the Beta polymer with parameters \(\mu =\alpha \) and \(\nu =\alpha +\beta \). For any fixed \(t, n\in \mathbb {Z}_{\geqslant 0}\) such that \(t+1\geqslant n\), we have the equality in law

\[Z(t,n)\overset{(d)}{=}P(t,t-2n+2).\]

Moreover, conditioning on the environment of the Beta polymer corresponds to conditioning on the environment of the Beta RWRE.
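A cheap sanity check of Proposition 2.6 is to compare first moments. Averaged over the environment, the Beta RWRE is a simple random walk with up-probability \(\alpha /(\alpha +\beta )\), because the walk never visits the same space–time site twice. On the polymer side, \(\mathbb {E}[B_{t,n}]=\mu /\nu \) and \(B_{t,n}\) is independent of \(Z(t-1,\cdot )\), so the recurrence for Z can be averaged term by term. Here we assume the half-line form of the recurrence, with \(Z(0,n)=1\) for \(n\geqslant 1\) and \(Z(t,0)=0\). The sketch below (hypothetical helpers `mean_Z` and `mean_P`) checks \(\mathbb {E}[Z(t,n)]=\mathbb {E}[P(t,t-2n+2)]\) for \(\mu =\alpha \), \(\nu =\alpha +\beta \). It tests only the means, not the full equality in law.

```python
from math import comb

def mean_Z(t, n, mu, nu):
    """E[Z(t, n)] obtained by averaging the polymer recurrence (B_{t,n} indep. of Z(t-1, .))."""
    p = mu / nu                              # E[B] for B ~ Beta(mu, nu - mu)
    e = [0.0] + [1.0] * (n + 1)              # E[Z(0, 0)] = 0, E[Z(0, m)] = 1 for m >= 1
    for _ in range(t):
        e = [0.0] + [p * e[m] + (1 - p) * e[m - 1] for m in range(1, len(e))]
    return e[n]

def mean_P(t, x, alpha, beta):
    """E[P(t, x)]: the annealed walk is a simple random walk with up-prob alpha/(alpha+beta)."""
    p = alpha / (alpha + beta)
    # k up steps out of t put the walker at 2k - t
    return sum(comb(t, k) * p**k * (1 - p)**(t - k)
               for k in range(t + 1) if 2 * k - t >= x)
```

Both sides satisfy the same recursion in (t, n), which is why they agree up to rounding.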

2.3 Bernoulli-Exponential directed first passage percolation

Let us introduce the “zero-temperature” counterpart of the Beta RWRE.

Definition 2.7

Let \((E_e)\) be a family of independent exponential random variables indexed by the horizontal and vertical edges e in the lattice \(\mathbb {Z}^2\), such that \(E_e\) is distributed according to the exponential law with parameter a (i.e. with mean \(1/a\)) if e is a vertical edge and \(E_e\) is distributed according to the exponential law with parameter b if e is a horizontal edge. Let \((\xi _{i,j})\) be a family of independent Bernoulli random variables with parameter \( b/(a+b)\). For an edge e of the lattice \(\mathbb {Z}^2\), we define the passage time \(t_e\) by

\[t_e=\begin{cases} \xi _{i,j}\,E_e &{} \text {if } e=(i,j)\rightarrow (i,j+1),\\ (1-\xi _{i,j})\,E_e &{} \text {if } e=(i,j)\rightarrow (i+1,j).\end{cases}\]

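The first passage time T(n, m) in the Bernoulli-Exponential first passage percolation model is given by

\[T(n,m)=\min _{\pi :(0,0)\rightarrow D_{n,m}}\ \sum _{e\in \pi }t_e,\]

where the minimum is taken over all up/right paths \(\pi \) from (0, 0) to \(D_{n, m}\), which is the set of points

\[D_{n,m}=\lbrace (i,n+m-i) :0\leqslant i\leqslant n\rbrace .\]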

Fig. 3. Percolation cluster for the Bernoulli-Exponential model with parameters \(a=b=1\) in a grid of size \(100\times 100\). The different shades of gray correspond to different times: the black line corresponds to the percolation cluster at time 0, and the other shades of gray correspond to times 0.2, 0.5 and 1.2. This implies that, for n and m chosen as in the figure, \( 0.2\leqslant T(n,m)\leqslant 0.5\).

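Although the quantity that we are able to study fully is T(n, m), which is a point-to-half-line passage time, it is also natural to introduce the point-to-point passage time \(T^{pp}(n,m)\) defined by

\[T^{pp}(n,m)=\min _{\pi :(0,0)\rightarrow (n,m)}\ \sum _{e\in \pi }t_e,\]

where the minimum is taken over up/right paths between the points (0, 0) and (n, m). We define the percolation cluster C(t) by

\[C(t)=\lbrace (n,m)\in \mathbb {Z}_{\geqslant 0}^2 :T^{pp}(n,m)\leqslant t\rbrace .\]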

It can be constructed in a dynamic way (see Fig. 3). At each time t, C(t) is the union of points visited by (portions of) several directed up/right random walks in the quarter plane \(\mathbb {Z}_{\geqslant 0}^2\). The evolution is as follows:

-

At time 0, the percolation cluster contains the points of the path of a directed random walk starting from (0, 0). Indeed, since for any i, j, \(\xi _{i,j}\) is a Bernoulli random variable in \(\lbrace 0,1\rbrace \), either the passage time from (i, j) to \((i+1,j)\) is zero, or the passage time from (i, j) to \((i,j+1)\) is zero. This implies that there exists a unique infinite up-right path starting from (0, 0) with zero passage-time. This path is distributed as a directed random walk.

-

At time t, from each point on the boundary of the percolation cluster where a random walk can branch off, the path of that random walk is added to the percolation cluster after an exponentially distributed waiting time. Paths starting with a vertical (resp. horizontal) edge are added at rate a (resp. b). This random walk almost surely crosses the percolation cluster somewhere, and we add to the percolation cluster only the points of the walk path up to the first hitting point. Indeed, any edge \(e=(x,y)\) from a point x inside C(t) to a point y outside C(t) has a positive passage time. Hence, one adds the point y to the percolation cluster after an exponentially distributed waiting time \(t_e\). Once the point y is added, one immediately adds to C(t) all the points that can be reached from y with zero passage time. These points form a portion of random walk that will almost surely coalesce with the initial random walk path C(0).

Fig. 4. An admissible path for the Bernoulli-Exponential FPP model is shown in the figure. T(n, m) is the passage time between (0, 0) and \(D_{n,m}\) (thick gray line). Note that the first passage time to \(D_{n,m}\) is also the first passage time to \(\tilde{D}_{n,m}\), depicted in dotted gray in the figure (cf. Remark 2.8).

Remark 2.8

Denote by \(\tilde{D}_{n,m}\) the set of points \(\lbrace (i, m) :0\leqslant i\leqslant n\rbrace \) (see Fig. 4). Any path going from (0, 0) to \(D_{n,m}\) has to go through a point of \(\tilde{D}_{n,m}\). Moreover, the first passage time from any point of \(\tilde{D}_{n,m}\) to the set \(D_{n,m}\) is zero. Hence the first passage time from (0, 0) to \(\tilde{D}_{n,m}\) is also T(n, m).
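Both \(T^{pp}\) and T(n, m) can be computed in a finite box by a standard min-plus dynamic program over up/right paths. This also gives a direct numerical check of Remark 2.8. The Python sketch below makes the following convention an explicit assumption: at each site (i, j), the vertical edge \((i,j)\rightarrow (i,j+1)\) has passage time \(\xi _{i,j}E\), with E exponential of parameter a, and the horizontal edge \((i,j)\rightarrow (i+1,j)\) has passage time \((1-\xi _{i,j})E'\), with \(E'\) exponential of parameter b. Under this convention, exactly one of the two outgoing edges has zero passage time. All helper names are hypothetical, and the first array coordinate is the horizontal one.

```python
import numpy as np

def passage_times(a, b, size, rng):
    """Sample t_e for the edges leaving each site of {0..size}^2 (convention assumed above)."""
    shape = (size + 1, size + 1)
    xi = rng.random(shape) < b / (a + b)                               # Bernoulli(b/(a+b))
    t_up = np.where(xi, rng.exponential(1.0 / a, shape), 0.0)         # (i,j) -> (i,j+1)
    t_right = np.where(xi, 0.0, rng.exponential(1.0 / b, shape))      # (i,j) -> (i+1,j)
    return t_up, t_right

def point_to_point(t_up, t_right):
    """T^pp(i, j) for all sites of the box, by dynamic programming from (0, 0)."""
    T = np.full(t_up.shape, np.inf)
    T[0, 0] = 0.0
    for i in range(T.shape[0]):
        for j in range(T.shape[1]):
            if i > 0:
                T[i, j] = min(T[i, j], T[i - 1, j] + t_right[i - 1, j])
            if j > 0:
                T[i, j] = min(T[i, j], T[i, j - 1] + t_up[i, j - 1])
    return T

def T_half_line(T, n, m):
    """T(n, m): minimum of T^pp over D_{n,m} = {(i, n + m - i) : 0 <= i <= n}."""
    return min(T[i, n + m - i] for i in range(n + 1))

def cluster_size(T, time):
    """Number of sites of the box in the percolation cluster C(time) = {T^pp <= time}."""
    return int(np.count_nonzero(T <= time))
```

Since `point_to_point` returns the whole array, the cluster C(t) restricted to the box is the boolean mask `T <= t`. Remark 2.8 then says that `T_half_line(T, n, m)` equals the minimum of `T[i, m]` over \(0\leqslant i\leqslant n\).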

Remark 2.9

When b tends to infinity, \(E_e\) tends to 0 for all horizontal edges, and one recovers the first passage percolation model introduced in [28], which is the zero temperature limit of the strict-weak lattice polymer as explained in [17, 29].

Let us show how the Bernoulli-Exponential first passage percolation model is a limit of the Beta RWRE.

Proposition 2.10

Let \(\alpha _{\epsilon }=\epsilon a\) and \(\beta _{\epsilon } = \epsilon b\). Let \(P_{\epsilon }(t,x)\) be the probability distribution function of the Beta-RWRE with parameters \(\alpha _{\epsilon }\) and \(\beta _{\epsilon }\) and T(n, m) the first-passage time in the Bernoulli-Exponential FPP model with parameters a, b. Then, for all \( n, m\geqslant 0\), \(-\epsilon \log (P_{\epsilon }(n+m,m-n))\) weakly converges as \(\epsilon \) goes to zero to T(n, m), the first passage time from (0, 0) to \(D_{n,m}\) in the Bernoulli-Exponential FPP model.

Proposition 2.10 is proved in Sect. 5.
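The mechanism behind Proposition 2.10 is already visible on a single step. A short computation with the Beta density suggests the following. For \(B\sim Beta(\epsilon a,\epsilon b)\) and \(\epsilon \rightarrow 0\), \(-\epsilon \log B\) is approximately \(\xi E\), where \(\xi \) is Bernoulli with parameter \(b/(a+b)\) and E is exponential with parameter a. Symmetrically, \(-\epsilon \log (1-B)\) plays the same role with parameter b. This is how the up and down steps of the walk turn into the vertical and horizontal edges of the FPP model. Such Beta variables underflow to 0 in double precision, so the Monte Carlo sketch below (hypothetical helper `minus_eps_log_beta`) samples \(\log B\) directly. It uses the identity in law \(Gamma(s)=Gamma(s+1)\,U^{1/s}\), with U uniform on (0, 1] and independent of the Gamma variable.

```python
import numpy as np

def minus_eps_log_beta(eps, a, b, size, rng):
    """Samples of -eps * log(B) for B ~ Beta(eps * a, eps * b), computed in log space.

    Gamma(s) = Gamma(s + 1) * U**(1/s) in law, so log G stays finite even when G
    itself would underflow; then B = G1 / (G1 + G2) with independent G1, G2.
    """
    def log_gamma_sample(shape):
        u = 1.0 - rng.random(size)          # uniform on (0, 1], so log(u) is finite
        return np.log(rng.gamma(shape + 1.0, size=size)) + np.log(u) / shape

    lg1 = log_gamma_sample(eps * a)
    lg2 = log_gamma_sample(eps * b)
    return -eps * (lg1 - np.logaddexp(lg1, lg2))   # -eps * log(G1 / (G1 + G2))
```

With, say, a = 1, b = 2 and eps = 0.01, about one third of the samples are essentially 0 (the event \(B\approx 1\)). The proportion of samples exceeding s should be close to \(\frac{b}{a+b}e^{-as}\).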

2.4 Exact formulas

Our first result is an exact formula for the mixed moments of the polymer partition function \(\mathbb {E}[Z(t,n_1) \cdots Z(t,n_k) ]\). In light of Proposition 3.1, this result can be seen as a limit when q goes to 1 of the formula from Theorem 1.8 in [15]. Even so, we prove it independently in Sect. 4 via a rigorous version of the polymer replica trick (see Proposition 4.4).

Proposition 2.11

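For \(n_1 \geqslant n_2\geqslant \dots \geqslant n_k\geqslant 1\), one has the following moment formula,

\[\mathbb {E}\left[ Z(t,n_1)\cdots Z(t,n_k)\right] =\frac{1}{(2i\pi )^k}\oint \cdots \oint \prod _{1\leqslant A<B\leqslant k}\frac{z_A-z_B}{z_A-z_B-1}\prod _{j=1}^{k}\left( \frac{\nu +z_j}{z_j}\right) ^{n_j}\left( \frac{\mu +z_j}{\nu +z_j}\right) ^{t}\frac{\mathrm {d}z_j}{\nu +z_j},\]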

where the contour for \(z_k\) is a small circle around the origin, and the contour for \(z_j\) contains the contour for \(z_{j+1} + 1\) for all \(j=1, \dots , k-1\), as well as the origin, but all contours exclude \(-\nu \).
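For k = 1, and assuming that the integrand of the moment formula takes the form \(((\nu +z)/z)^{n}((\mu +z)/(\nu +z))^{t}/(\nu +z)\), the contour integral can be evaluated numerically. It can then be compared with \(\mathbb {E}[Z(t,n)]\) obtained by averaging the polymer recurrence, which is legitimate because \(B_{t,n}\) is independent of \(Z(t-1,\cdot )\). The sketch below uses the trapezoidal rule on a circle \(|z|=r\) with \(r<\nu \); this rule converges geometrically for such integrands. The helper names are hypothetical.

```python
import numpy as np

def moment_k1_contour(t, n, mu, nu, radius=1.0, points=4096):
    """Trapezoidal value of (1/(2 pi i)) times the contour integral over |z| = radius of
    ((nu + z)/z)^n ((mu + z)/(nu + z))^t / (nu + z) dz.

    Assumes 0 < radius < nu, so that the circle encloses 0 but not -nu.
    """
    theta = 2 * np.pi * np.arange(points) / points
    z = radius * np.exp(1j * theta)
    f = ((nu + z) / z) ** n * ((mu + z) / (nu + z)) ** t / (nu + z)
    # dz = i z d(theta), so the normalized contour integral is the average of f(z) z
    return float(np.mean(f * z).real)

def mean_Z_recurrence(t, n, mu, nu):
    """E[Z(t, n)] from E[Z(t,n)] = p E[Z(t-1,n)] + (1-p) E[Z(t-1,n-1)], with p = mu/nu."""
    p = mu / nu
    e = [0.0] + [1.0] * (n + 1)          # E[Z(0, 0)] = 0, E[Z(0, m)] = 1 for m >= 1
    for _ in range(t):
        e = [0.0] + [p * e[m] + (1 - p) * e[m - 1] for m in range(1, len(e))]
    return e[n]
```

For n = 1 both functions return \((\mu /\nu )^t\), the residue at \(z=0\).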

The previous proposition provides a formula for the moments of the partition function Z(t, n). Using tools developed in the study of Macdonald processes [4] (see also [13, 18]), one is able to take the moment generating series, which yields a Fredholm determinant representation for the Laplace transform of Z(t, n). We refer to [4, Section 3.2.2] for background about Fredholm determinants.

Theorem 2.12

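For \(u\in \mathbb {C} {\setminus } \mathbb {R}_{>0}\), fix \(n,t\geqslant 0\) with \(n\leqslant t+1\) and \(\nu >\mu >0 \). Then one has

\[\mathbb {E}\left[ e^{uZ(t,n)}\right] =\det \left( I+K^{\mathrm {BP}}_u\right) _{\mathbb {L}^2(C_0)},\]

where \(C_0\) is a small positively oriented circle containing 0 but not \(-\nu \) nor \(-1\), and \(K^{\mathrm {BP}}_u :\mathbb {L}^2(C_0)\rightarrow \mathbb {L}^2(C_0)\) is defined by its integral kernel

\[K^{\mathrm {BP}}_u(v,v')=\frac{1}{2i\pi }\int _{1/2-i\infty }^{1/2+i\infty }\frac{\pi }{\sin (-\pi s)}(-u)^s\frac{g^{\mathrm {BP}}(v)}{g^{\mathrm {BP}}(v+s)}\frac{\mathrm {d}s}{s+v-v'},\]

where

\[g^{\mathrm {BP}}(v)=\left( \frac{\Gamma (v)}{\Gamma (\nu +v)}\right) ^{n}\left( \frac{\Gamma (\nu +v)}{\Gamma (\mu +v)}\right) ^{t}\Gamma (\nu +v).\]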

In light of the relation between the Beta RWRE and the Beta polymer given in Proposition 2.6, we have a similar Fredholm determinant representation for the Laplace transform of P(t, x).

Theorem 2.13

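For \(u\in \mathbb {C} {\setminus } \mathbb {R}_{>0}\), fix \(t\in \mathbb {Z}_{\geqslant 0}\), \(x\in \lbrace -t, \dots , t\rbrace \) with the same parity, and \(\alpha , \beta >0 \). Then one has

\[\mathbb {E}\left[ e^{uP(t,x)}\right] =\det \left( I+K^{\mathrm {RW}}_u\right) _{\mathbb {L}^2(C_0)},\]

where \(C_0\) is a small positively oriented circle containing 0 but not \(-\alpha -\beta \) nor \(-1\), and \(K^{\mathrm {RW}}_u :\mathbb {L}^2(C_0)\rightarrow \mathbb {L}^2(C_0)\) is defined by its integral kernel

\[K^{\mathrm {RW}}_u(v,v')=\frac{1}{2i\pi }\int _{1/2-i\infty }^{1/2+i\infty }\frac{\pi }{\sin (-\pi s)}(-u)^s\frac{g^{\mathrm {RW}}(v)}{g^{\mathrm {RW}}(v+s)}\frac{\mathrm {d}s}{s+v-v'},\]

where

\[g^{\mathrm {RW}}(v)=\left( \frac{\Gamma (v)}{\Gamma (\alpha +v)}\right) ^{(t-x)/2}\left( \frac{\Gamma (\alpha +\beta +v)}{\Gamma (\alpha +v)}\right) ^{(t+x)/2}\Gamma (v).\]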

2.5 Limit theorem for the random walk

A quenched large deviation principle is proved in [34, Section 4] for a wide class of random walks in random environment that includes the Beta-RWRE model. More precisely, the setting of [34] applies to the random walk \(\mathbf {X}_t=(t, X_t)\) (see Remark 2.2). The condition that one has to check is that the logarithm of the probability of each possible step has nice properties with respect to the environment (the random variables must belong to the class \(\mathcal {L}\) defined in [34, Definition 2.1]). Using the fact that if B is a \(Beta(\alpha , \beta )\) random variable, \(\log (B)\) and \(\log (1-B)\) have finite moments of all orders, Ref. [34, Lemma A.4] ensures that the condition is satisfied. The limit

\[\lambda (z)=\lim _{t\rightarrow \infty }\frac{1}{t}\log \mathsf {E}\left[ e^{zX_t}\right] \]

exists \(\mathbb {P}\)-almost surely. Let I be the Legendre transform of \(\lambda \). Then, we have [34, Section 4] that for \(x>(\alpha -\beta )/(\alpha +\beta )\),

\[\lim _{t\rightarrow \infty }\frac{1}{t}\log P(t,xt)=-I(x).\]

Remark 2.14

In the language of polymers, the limit (7) states the existence of the quenched free energy. Theorem 4.3 in [33] states that for such random walks in random environment, we have that

In other terms, the point-to-point and the point-to-half-line free energies are equal.

In [34, Theorem 3.1], a formula is given for I in terms of a variational problem over a space of measures. We provide a closed formula in the present case. It would be interesting to see how the variational problem recovers the formulas that we now present.

For the Beta-RWRE, critical point Fredholm determinant asymptotics shows that the function I is implicitly defined by

and

where \(\Psi \) is the digamma function (\(\Psi (z)= \Gamma '(z)/\Gamma (z)\)) and \(\Psi _1\) is the trigamma function (\(\Psi _1(z)=\Psi '(z)\)). The parameter \(\theta \) does not seem natural at first sight. It is convenient to use it because it turns out to be the position of the critical point in the asymptotic analysis. When \(\theta \) ranges from 0 to \(+\infty \), \(x(\theta )\) ranges from 1 to \((\alpha -\beta )/(\alpha +\beta )\). This covers the whole range of interesting large deviation events, since \((\alpha -\beta )/(\alpha +\beta )\) is the expected drift of the random walk, and we know that \(\mathsf {P}(X_t>x t)=0\) for \(x>1\).

Moreover, we define \(\sigma (\theta )>0\) such that

In the case \(\alpha =\beta =1\), that is when the \(B_{x,t}\) variables are distributed uniformly on (0, 1), the expressions for \(x(\theta )\) and \(I(x(\theta ))\) simplify. We find that

\[x(\theta )=\frac{1+2\theta }{1+2\theta +2\theta ^2}\]

and

\[I(x(\theta ))=\frac{1}{1+2\theta +2\theta ^2},\]

so that the rate function I is simply the function \(I:x \mapsto 1-\sqrt{1-x^2}\).
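For \(\alpha =\beta =1\) the rate function is explicit, so the large deviation statement can be probed numerically on one sampled environment. Because \(P(t,xt)\) is of order \(e^{-I(x)t}\) (about \(e^{-200}\) for \(x=0.6\) and \(t=1000\)), the forward recursion has to be run on log-probabilities. The sketch below (hypothetical helper `log_quenched_tail`) does this with `numpy.logaddexp`. At finite t one should expect deviations from \(-I(x)\) of order \(t^{-2/3}\), so the comparison is only approximate.

```python
import numpy as np

def log_quenched_tail(t, x, alpha, beta, rng):
    """log P(X_t >= x) for one freshly sampled Beta(alpha, beta) environment.

    Positions -t..t are stored at indices 0..2t; everything is kept in log space.
    """
    width = 2 * t + 1
    logp = np.full(width, -np.inf)
    logp[t] = 0.0                                   # X_0 = 0 with probability 1
    with np.errstate(divide="ignore"):              # log(0) = -inf is harmless here
        for s in range(t):
            B = rng.beta(alpha, beta, size=width)   # B_{x,s} for every site x at time s
            up = logp[:-1] + np.log(B[:-1])         # x -> x + 1
            down = logp[1:] + np.log1p(-B[1:])      # x -> x - 1
            new = np.full(width, -np.inf)
            new[1:] = up
            new[:-1] = np.logaddexp(new[:-1], down)
            logp = new
    return float(np.logaddexp.reduce(logp[x + t:]))
```

For instance, `log_quenched_tail(1000, 600, 1.0, 1.0, np.random.default_rng(7)) / 1000` should be close to \(-I(0.6)=-0.2\).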

The following theorem gives a second order correction to the large deviation principle satisfied by the position of the walker at time t.

Theorem 2.15

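For \(0<\theta <1/2\) and \(\alpha =\beta =1\), we have that

\[\lim _{t\rightarrow \infty }\mathbb {P}\left( \frac{\log P(t,x(\theta )t)+I(x(\theta ))t}{t^{1/3}\sigma (\theta )}\leqslant y\right) =F_{\mathrm {GUE}}(y),\]

where \(F_{\mathrm {GUE}}\) is the GUE Tracy–Widom distribution function.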

Remark 2.16

As we explain in Sect. 6, we expect Theorem 2.15 to hold more generally for arbitrary parameters \(\alpha , \beta >0\) and \(\theta >0\). The assumption \(\alpha =\beta \) is made to simplify the computations, whereas the assumption \(\theta <1/2\) is present because certain deformations of contours are justified only for \(\theta <\min \lbrace 1/2, \alpha +\beta \rbrace \). The condition \(\theta >0\) is natural: it corresponds to looking at \(x(\theta )<1\). Recall that \(P(t, xt)=0\) for \(x>1\).

In the case \(\alpha =\beta =1\), the condition \(\theta <1/2\) corresponds to \(x(\theta )>4/5\).

Remark 2.17

The Tracy–Widom limit theorem from Theorem 2.15 should be understood as an analogue of limit theorems for the free energy fluctuations of exactly-solvable random directed polymers. Similar results are proved in [1, 6] for the continuum polymer, in [4, 6] for the O’Connell-Yor semi-discrete polymer, in [9] for the log-gamma polymer, and in [17, 29] for the strict-weak lattice polymer.

In light of KPZ universality for directed polymers, we expect the conclusion of Theorem 2.15 to hold for more general weight distributions, but the Beta RWRE is the first RWRE for which it has been verified.

In Sect. 6, we also provide an interesting corollary of Theorem 2.15. Corollary 6.8 states that if one considers an exponential number of Beta RWRE drawn in the same environment, then the maximum of the endpoints satisfies a Tracy–Widom limit theorem. It turns out that even if the rescaled endpoint of a random walk converges in distribution to a Gaussian random variable for large t, the limit theorem that we get is quite different from the one verified by Gaussian random variables having the same dependence structure.
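Conditionally on the environment, N walkers started at 0 are independent, so \(\mathsf {P}(\max _{i\leqslant N}X^{(i)}_t\geqslant x)=1-(1-P(t,x))^N\). This quenched identity is what links Theorem 2.15 to the maximum of the endpoints. The Monte Carlo sketch below checks it for one fixed environment. The helpers `quenched_tail` and `max_of_walkers` and the layout `B[s, x + t]` \(=B_{x,s}\) are our own hypothetical choices.

```python
import numpy as np

def quenched_tail(B, t):
    """P(X_t >= x) for x = -t..t in one environment, with B[s, x + t] = B_{x,s}."""
    p = np.zeros(2 * t + 1)
    p[t] = 1.0                                   # X_0 = 0
    for s in range(t):
        new = np.zeros_like(p)
        new[1:] += p[:-1] * B[s, :-1]            # up steps x -> x + 1
        new[:-1] += p[1:] * (1.0 - B[s, 1:])     # down steps x -> x - 1
        p = new
    return np.cumsum(p[::-1])[::-1]

def max_of_walkers(B, t, n_walkers, n_reps, rng):
    """Max of X_t over n_walkers independent walkers in the fixed environment B,
    repeated n_reps times (the environment is not resampled between repetitions)."""
    x = np.zeros((n_reps, n_walkers), dtype=int)
    for s in range(t):
        up = rng.random(x.shape) < B[s, x + t]   # each walker reads B at its own site
        x = np.where(up, x + 1, x - 1)
    return x.max(axis=1)
```

The walkers are correlated only through the shared array `B`. Resampling `B` for each repetition would instead produce the annealed law of the maximum.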

2.6 Localization of the paths

The localization properties of random walks in random environment are quite different from localization properties of random directed polymers in \(1+1\) dimensions. For instance, in the log-gamma polymer model, the endpoint of a polymer path of size n fluctuates on the scale \(n^{2/3}\) [35], and localizes in a region of size \(\mathcal {O}(1)\) when one conditions on the environment [14]. For random walks in random environment, it is clear by the central limit theorem that the endpoint of a path of size n fluctuates on the scale \(\sqrt{n}\).

Remarkably, the central limit theorem also holds if one conditions on the environment. A general quenched central limit theorem is proved in [31] for space–time i.i.d. random walks in \(\mathbb {Z}^d\). The only hypotheses are that the environment is not deterministic, and that the expectation over the environment of the variance of an elementary increment is finite. These two conditions are clearly satisfied by the Beta-RWRE model. In the particular case of one-dimensional random walks, and when transition probabilities have mean 1 / 2, the result was also proved in [12]. However, most of the other papers proving a quenched central limit theorem for similar RW models assume a strict ellipticity condition, which is not satisfied by the Beta-RWRE. See also [11, 32] for similar results about random walks in random environment under weaker conditions.

In any case, if we let the environment vary, the fluctuations of the endpoints at time t in the Beta RWRE live on the \(\sqrt{t}\) scale. For the Beta-RWRE, Proposition 6.13 shows that the expected overlap between two random walks drawn independently in a common environment, that is, the expected number of times up to time t at which they occupy the same site, is of order \(\sqrt{t}\). The \(\sqrt{t}\) order of magnitude has already been proved in [31, Lemma 2] based on results from [22], and our Proposition 6.13 provides the precise asymptotic equivalent.
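Given the environment, two independent walkers are at the same site at time s with probability \(\sum _x \mathsf {P}(X_s=x)^2\). Their quenched expected overlap up to time t is therefore a sum of squared quenched probabilities. The sketch below computes it, with hypothetical helper names and the assumed layout `B[s, x + t]` \(=B_{x,s}\). In the constant environment \(B\equiv 1/2\) it reduces to \(\sum _{s\leqslant t}\binom{2s}{s}4^{-s}\), which grows like \(2\sqrt{t/\pi }\). Averaging `expected_overlap` over sampled environments gives a numerical view of the \(\sqrt{t}\) growth, without reproducing the constant of Proposition 6.13.

```python
import numpy as np
from math import comb

def expected_overlap(B, t):
    """Quenched E[#{0 <= s <= t : X_s = Y_s}] for two independent walkers in environment B.

    Layout (assumed): B has shape (t, 2t + 1) and B[s, x + t] = B_{x,s}.
    """
    p = np.zeros(2 * t + 1)
    p[t] = 1.0
    total = 1.0                                  # s = 0: both walkers are at 0
    for s in range(t):
        new = np.zeros_like(p)
        new[1:] += p[:-1] * B[s, :-1]
        new[:-1] += p[1:] * (1.0 - B[s, 1:])
        p = new
        total += float(np.sum(p ** 2))           # P(X_{s+1} = Y_{s+1}) given the environment
    return total

def overlap_constant_environment(t):
    """Same quantity for B = 1/2 everywhere: sum over s <= t of C(2s, s) / 4^s."""
    return sum(comb(2 * s, s) / 4**s for s in range(t + 1))
```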

Let us give an intuitive argument explaining the difference of behaviour between polymers and random walks. Assume that the environment of the random walk (resp. the polymer) has been drawn, and consider a random walk starting from the point 0 (resp. a point-to-point polymer starting from 0). The quenched probability that the random walk performs a first step upward depends only on the environment at the point 0 (i.e. the random variable \(B_{0,0}\) in the case of the Beta RWRE). However, the probability for the polymer path to start with a step upward depends on the global environment. For instance, if the weight on some edge is very high, this will influence the probability that the first step of the polymer path is upward or downward, so as to enable the polymer path to go through the edge with high weight. This explains why two independent paths in the same environment have more tendency to overlap in the polymer model.

In [27], a random walk in dynamic random environment is associated to a random directed polymer in \(1+1\) dimensions, under a condition called north-east induction on the edge-weights. For the log-gamma polymer, it turns out that the associated random walk has Beta distributed transition probabilities. However, the environment is correlated, so that this RWRE is very different from the Beta RWRE. The random walk considered in [27] defines a measure on lattice paths which can be seen as a limit of point-to-point polymer measures. Hence, as pointed out in [27, Remark 8.3], its localization properties are very different from those of random walks in space–time i.i.d. random environment, which we consider in the present paper.

2.7 Limit theorem at zero-temperature

Turning to the zero-temperature limit, Theorem 2.13 degenerates to the following for the Bernoulli-Exponential FPP model:

Theorem 2.18

For \(r\in \mathbb {R}_{>0}\), fix \(n,m\geqslant 0\) and consider T(n, m) the first passage time to the set \(D_{n,m}\) in the Bernoulli-Exponential FPP model with parameters \(a,b>0\). Then, one has

where \(C'_0\) is a small positively oriented circle containing 0 but not \(-a-b\), and \(K^{\mathrm {FPP}}_r :\mathbb {L}^2(C'_0)\rightarrow \mathbb {L}^2(C'_0)\) is defined by its integral kernel

where

The integral in (12) is an improper oscillatory integral if one integrates on the vertical line \(1/2+i\mathbb {R}\). One could justify a deformation of the integration contour (so that the tails go to \(\infty e^{\pm i2\pi /3}\) for instance) in order to have an absolutely convergent integral, but it happens that the vertical contour is more practical for analyzing the asymptotic behaviour of \(\det (I+K^{\mathrm {FPP}}_r)\) in Sect. 7.

One has a Tracy–Widom limit theorem for the fluctuations of the first passage time \(T(n,\kappa n)\) as n goes to infinity, for any slope \(\kappa >\frac{a}{b}\). Theorem 2.19 is proved as Theorem 7.1 in Sect. 7.

Theorem 2.19

We have that for any \(\theta >0\) and parameters \(a,b>0\),

where \(\kappa (\theta ), \tau (\theta )\) and \(\rho (\theta )\) are explicit constants (see Sect. 7) such that when \(\theta \) ranges from 0 to infinity, \(\kappa (\theta )\) ranges from \(+\infty \) to a / b.

Notice that in Theorem 2.19, we do not have any restriction on the range of the parameters a, b and \(\theta \).

Another direction of study for the Bernoulli-Exponential FPP model is to compute the asymptotic shape of the percolation cluster C(t) for a fixed time t (but looking very far from the origin). In Sect. 7.3 we explain, based on a degeneration of the results of Theorem 2.19, what the limit shape of the convex envelope of the percolation cluster should be, and we guess the scale of the fluctuations. However, these arguments rely on a non-rigorous interchange of limits, and we leave a rigorous proof for future consideration.

Outline of the paper. In Sect. 3, we introduce the q-Hahn TASEP [15, 30] and explain how some observables of the q-Hahn TASEP converge to the partition function of the Beta polymer (and likewise to the endpoint distribution of the Beta RWRE). This already leads to a proof of the Fredholm determinant formulas in Theorems 2.12 and 2.13, using results on the q-Hahn TASEP. We do not write here the necessary technical details to make this approach rigorous. Rather, in Sect. 4, we give a direct proof of Theorems 2.12 and 2.13 using an approach which can be seen as a rigorous instance of the replica method. In Sect. 5, we show that the Beta RWRE converges to the Bernoulli-Exponential FPP, and prove the Fredholm determinant formula of Theorem 2.18. In Sect. 6 we perform an asymptotic analysis of the Fredholm determinant from Theorem 2.13 to prove Theorem 2.15. We also discuss Corollary 6.8, which is about the maximum of the endpoints of several Beta RWRE drawn in a common environment, and we relate this result to extreme value theory. In Sect. 7, we perform an asymptotic analysis of the Bernoulli-Exponential FPP model to prove Theorem 2.19.

3 From the q-Hahn TASEP to the Beta RWRE

In this section, we explain how the Beta-RWRE and the Beta polymer arise as limits of the q-Hahn TASEP introduced in [30] (see also [15]). We first show that some observables of the q-Hahn TASEP converge to the partition function of the polymer model (Proposition 3.1). Discarding technical details (which are written in full detail in the arXiv version of this paper), this leads to a first proof of Theorem 2.12. Then we prove that the Beta RWRE and the Beta polymer model are equivalent models in the sense of Proposition 2.6.

3.1 The q-Hahn TASEP

Let us recall the definition of the q-Hahn TASEP: this is a discrete-time interacting particle system on the one-dimensional integer lattice. Fix \(0<q<1\) and \(0\leqslant \bar{\nu } \leqslant \bar{\mu }<1\). Then the N-particle q-Hahn TASEP is a discrete-time Markov chain \(\vec {x}(t) = \lbrace x_n(t) \rbrace _{n=0}^{N} \in \mathbb {X}_N\) where the state space \(\mathbb {X}_N\) is

\[\mathbb {X}_N=\left\lbrace \vec {x}=(x_0,x_1,\dots ,x_N) :+\infty =x_0>x_1>\dots >x_N,\ x_n\in \mathbb {Z}\right\rbrace .\]

At time \(t+1\), each coordinate \(x_n(t)\) is updated independently and in parallel to \(x_n(t+1) = x_n(t)+j_n\) where \(0\leqslant j_n \leqslant x_{n-1}(t)-x_n(t)-1\) is drawn according to the q-Hahn probability distribution \(\varphi _{q, \bar{\mu }, \bar{\nu }}(j_n\vert x_{n-1}(t)-x_n(t)-1) \). The q-Hahn probability distribution on \(j\in \lbrace 0, 1, \dots ,m\rbrace \) is defined by the probabilities

\[\varphi _{q,\bar{\mu },\bar{\nu }}(j\vert m)=\bar{\mu }^j\,\frac{(\bar{\nu }/\bar{\mu };q)_j\,(\bar{\mu };q)_{m-j}}{(\bar{\nu };q)_m}\,\frac{(q;q)_m}{(q;q)_j\,(q;q)_{m-j}},\]

where for \(a\in \mathbb {C}\) and \(n\in \mathbb {Z}_{\geqslant 0} \cup \lbrace +\infty \rbrace \), \((a;q)_n\) is the q-Pochhammer symbol

\[(a;q)_n=(1-a)(1-aq)\cdots (1-aq^{n-1}),\qquad (a;q)_{\infty }=\prod _{i=0}^{\infty }(1-aq^i).\]
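The q-Hahn weights are easy to evaluate numerically. The sketch below, with hypothetical helper names, assumes the standard form \(\varphi _{q,\bar{\mu },\bar{\nu }}(j\vert m)=\bar{\mu }^j\frac{(\bar{\nu }/\bar{\mu };q)_j(\bar{\mu };q)_{m-j}}{(\bar{\nu };q)_m}\frac{(q;q)_m}{(q;q)_j(q;q)_{m-j}}\) of the distribution. It checks that the weights sum to 1. It also checks that, with \(\bar{\mu }=q^{\mu }\), \(\bar{\nu }=q^{\nu }\) and q close to 1, they approach the Beta-binomial law with parameters \((\nu -\mu ,\mu )\), which is the q-analogue interpretation discussed in Sect. 3.2.

```python
from math import comb, prod

def qpoch(a, q, n):
    """Finite q-Pochhammer symbol (a; q)_n = (1 - a)(1 - a q)...(1 - a q^{n-1})."""
    return prod(1.0 - a * q**i for i in range(n))

def qhahn_pmf(j, m, q, mu_bar, nu_bar):
    """q-Hahn weight phi_{q, mu_bar, nu_bar}(j | m) for 0 <= j <= m (standard form, assumed)."""
    return (mu_bar**j * qpoch(nu_bar / mu_bar, q, j) * qpoch(mu_bar, q, m - j)
            / qpoch(nu_bar, q, m)
            * qpoch(q, q, m) / (qpoch(q, q, j) * qpoch(q, q, m - j)))

def rising(x, k):
    """Rising factorial x (x + 1) ... (x + k - 1)."""
    return prod(x + i for i in range(k))

def beta_binomial_pmf(j, m, a, b):
    """Beta-binomial(m; a, b) probability of j."""
    return comb(m, j) * rising(a, j) * rising(b, m - j) / rising(a + b, m)
```

For m = 1 the weights reduce to \((1-\bar{\mu })/(1-\bar{\nu })\) and \((\bar{\mu }-\bar{\nu })/(1-\bar{\nu })\), which are nonnegative exactly when \(\bar{\nu }\leqslant \bar{\mu }\).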

3.2 Convergence of the q-Hahn TASEP to the Beta polymer

An interesting interpretation of the q-Hahn distribution is provided in Section 4 of [26]. The authors define a q-analogue of the Pólya urn process: One considers two urns, initially empty, in which one sequentially adds balls. When the first urn contains k balls, and the second urn contains \(n-k\) balls, one adds a ball to the first urn with probability \( [\nu -\mu +n-k]_q/[\nu +n]_q \), where for any integer m, \([m]_q=(1-q^m)/(1-q)\) denotes the q-deformed integer, and we set \(\bar{\mu } = q^{\mu }\) and \(\bar{\nu } = q^{\nu }\). One adds a ball to the second urn with the complementary probability. Then \(\varphi _{q, \bar{\mu }, \bar{\nu }}(j\vert m)\) is the probability that after m steps, the first urn contains j balls. When q goes to 1, one recovers the classical Pólya urn process.

For the classical Pólya urn, it is known that after n steps, the number of balls in the first urn is distributed according to the Beta-Binomial distribution. Further, the proportion of balls in the first urn converges in distribution to the Beta distribution when the number of added balls tends to infinity. Thus, it is natural to consider the q-Hahn distribution as a q-analogue of the Beta-Binomial distribution. Further, we expect that if X is a random variable drawn according to the q-Hahn distribution on \(\lbrace 0, \dots , m\rbrace \) with parameters \((q, \bar{\mu }, \bar{\nu })\), the q-deformed proportion \([X]_q /[m]_q\) converges as m goes to infinity to a q-analogue of the Beta distribution, which converges as q goes to 1 to the Beta distribution with parameters \((\nu -\mu , \mu )\).
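The classical statement that the number of balls in the first urn is Beta-binomial can be verified exactly by propagating the law of the urn one step at a time. This sketch uses the standard Pólya rule: a ball goes to the first urn with probability \((w_1+k)/(w_1+w_2+n)\) when the first urn has received k of the n balls added so far. The initial weights \(w_1=\nu -\mu \) and \(w_2=\mu \) are an assumption, chosen to match the limiting \(Beta(\nu -\mu ,\mu )\) law mentioned above. The helper names are hypothetical.

```python
from math import comb, prod

def rising(x, k):
    """Rising factorial x (x + 1) ... (x + k - 1)."""
    return prod(x + i for i in range(k))

def polya_distribution(m, w1, w2):
    """Exact law of the number of balls in the first urn after m steps of the classical urn."""
    dist = [1.0]                                   # before any step the first urn is empty
    for n in range(m):
        new = [0.0] * (n + 2)
        for k, pk in enumerate(dist):
            p_first = (w1 + k) / (w1 + w2 + n)     # reinforcement by the current content
            new[k + 1] += pk * p_first
            new[k] += pk * (1.0 - p_first)
        dist = new
    return dist

def beta_binomial_pmf(k, m, a, b):
    """Beta-binomial(m; a, b) probability of k."""
    return comb(m, k) * rising(a, k) * rising(b, m - k) / rising(a + b, m)
```

The mean proportion \(k/m\) equals \(w_1/(w_1+w_2)=(\nu -\mu )/\nu \) for every m, which is also the mean of the limiting Beta law.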

This interpretation of the q-Hahn distribution as a q-analogue of the Beta-Binomial distribution explains why the partition function of the Beta polymer is a limit of observables of the q-Hahn TASEP. Let \(Z^{\epsilon }(t,n)\) be the rescaled quantity

\[Z^{\epsilon }(t,n)=q^{x_n(t)+n},\]

where \(x_n(t)\) is the location of the \(n^\mathrm{th}\) particle in q-Hahn TASEP and we set \(q=e^{-\epsilon }, \bar{\mu } = q^{ \mu }\) and \( \bar{\nu } = q^{ \nu }\).

Proposition 3.1

For \(t\geqslant 0\) and \(n\geqslant 1\) such that \(n\leqslant t+1\), the sequence of random variables \(\left( Z^{\epsilon }(t,n) \right) _{\epsilon }\) converges in distribution as \(\epsilon \rightarrow 0\) to a limit Z(t, n), and one has

\[Z(t,n)=Z(t-1,n)\,B_{t,n}+Z(t-1,n-1)\,(1-B_{t,n}),\]

where \(B_{t,n}\) are i.i.d. Beta distributed random variables with parameters \((\mu , \nu -\mu )\). Additionally, we have the weak convergence of processes

where Z(t, n) is the partition function of the Beta polymer.

Proof

See the arXiv version of this paper for a detailed proof. \(\square \)

One has the following Fredholm determinant representation for the \(e_q\)-Laplace transform of \(x_n(t)\).

Theorem 3.2

(Theorem 1.10 in [15]) Consider q-Hahn TASEP started from step initial data \(x_n(0)=-n \ \forall n \geqslant 1\). Then for all \(\zeta \in \mathbb {C}{\setminus } \mathbb {R}_{>0}\),

where \(C_1\) is a small positively oriented circle containing 1 but none of \(1/\bar{\nu }, 1/q\) and 0, and \(K^{\mathrm {qHahn}}_{\zeta } :\mathbb {L}^2(C_1)\rightarrow \mathbb {L}^2(C_1)\) is defined by its integral kernel

with

Let us scale the parameter \(\zeta \) as

and scale the other parameters as previously: \(q=e^{-\epsilon }, \bar{\mu } = q^{\mu }, \bar{\nu } = q^{\nu }\). Then we have

where

is the \(e_q\)-exponential function. Since \(e_q(x)\rightarrow e^x\) uniformly for x in a compact set, we have, using the convergence of processes (16) and the fact that \(Z^{\epsilon }(t,n)\) are uniformly bounded by 1, that

Hence, in order to prove Theorem 2.12, one could take the limit as \(\epsilon \) goes to zero of the Fredholm determinant in the right-hand side of (17). This is indeed possible, but requires good control of the integrand of the kernel as q goes to 1. Since we provide in Sect. 4 another proof of Theorem 2.12 that is independent of the q-Hahn TASEP, we do not write the required estimates here, and refer instead to the arXiv version of this paper, where a complete proof is written.
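For intuition about the convergence \(e_q(x)\rightarrow e^x\) used above, here is a small numerical sketch. Since the defining display is not reproduced in this version, it assumes the convention \(e_q(x)=1/((1-q)x;q)_{\infty }\) that is standard in the q-TASEP literature; by the q-binomial theorem this equals \(\sum _{n\geqslant 0} x^n/[n]_q!\) for \(\vert (1-q)x\vert <1\), with \([n]_q!=[1]_q\cdots [n]_q\). The code evaluates both forms and compares them with exp(x) for q close to 1.

```python
import math


def q_int(m, q):
    """q-deformed integer [m]_q = (1 - q**m) / (1 - q)."""
    return (1 - q**m) / (1 - q)


def e_q_product(x, q, tol=1e-18):
    """Assumed convention e_q(x) = 1 / ((1-q) x; q)_infinity, with the infinite product
    truncated once its factors differ from 1 by less than `tol` (requires 0 < q < 1)."""
    prod, qk = 1.0, 1.0
    while abs((1 - q) * x * qk) > tol:
        prod *= 1 - (1 - q) * x * qk
        qk *= q
    return 1 / prod


def e_q_series(x, q, terms=400):
    """Same function via sum_{n>=0} x**n / [n]_q!, valid when |(1-q) x| < 1."""
    total, term = 0.0, 1.0
    for n in range(terms):
        total += term
        term *= x / q_int(n + 1, q)        # next term: x**(n+1) / [n+1]_q!
    return total


for q in (0.5, 0.9, 0.99, 0.999):
    print(q, e_q_product(-1.0, q), e_q_series(-1.0, q), math.exp(-1.0))
```

For q = 0.999 and x = -1 the product and exp(x) differ by an amount of order \(10^{-4}\), consistent with the first correction \((1-q)x^2/(2(1+q))\) to \(\log e_q(x)\).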

More precisely, we have the following.

Proposition 3.3

where \(C_0\) is a small positively oriented circle containing 0 but neither \(-\nu \) nor \(-1\), and \(K^{\mathrm {BP}}_u :\mathbb {L}^2(C_0)\rightarrow \mathbb {L}^2(C_0)\) is defined by its integral kernel

where

Proof

See the arXiv version of this paper. \(\square \)

Proposition 3.3 combined with (18) yields the Fredholm determinant formula for the Laplace transform of Z(t, n) given in Theorem 2.12. In order to deduce Theorem 2.13, we use the equivalence between the Beta polymer and the Beta-RWRE from Proposition 2.6, proved in Sect. 3.3.

3.3 Equivalence Beta-RWRE and Beta polymer

We show that the Beta RWRE and the Beta polymer are equivalent models in the sense that if the parameters \(\alpha , \beta \) of the random walk and the parameters \(\mu , \nu \) of the polymer are such that \(\mu =\alpha \) and \(\nu =\alpha +\beta \), we have the equality in law

The equality in law is true for fixed t and n. However, as families of random variables, \(\left( Z(t,n) \right) \) and \(\left( P(t,t-2n+2) \right) \) for \(t+1\geqslant n\geqslant 1\) have different laws.

Proof of Proposition 2.6

Let us first notice that since \(\mu =\alpha \) and \(\nu =\alpha +\beta \), the i.i.d. collection of Beta random variables defining the environment for the Beta polymer, and the i.i.d. collection of r.v. defining the environment of the Beta RWRE, have the same law.

Also, as already pointed out in Sect. 2.2.2, the point-to-point Beta polymer is equivalent to a half-line to point Beta polymer.

Let t and n have the same parity. The random variable \(P(t, t-2n+2)\) is the probability for the Beta RWRE to arrive above (or exactly at) \(t-2n+2\). This is also the probability for the Beta RWRE to make at most \(n-1\) downward steps up to time t. Let us imagine that we deform the underlying lattice of the Beta polymer so that Beta polymer paths are actually up-right paths, and let us also consider the path from (t, n) to its initial point. Then the polymer path is the trajectory of a random walk, and one can interpret the weight of this polymer path as the quenched probability of the corresponding random walk trajectory (compare the polymer path depicted in Fig. 2 with the RWRE path depicted in Fig. 5, using the correspondence shown in Fig. 6). Moreover, the event that the random walk performs at most \(n-1\) downward steps is equivalent to the event that the polymer path starts with positive n-coordinate. Both events correspond to the path intersecting the thick gray half-lines in Figs. 2 and 5.

Fig. 5: A possible path for the Beta-RWRE. It corresponds to the half-line to point polymer path in Fig. 2. P(t, x) is the (quenched) probability that the random walk ends at time t in the gray region.

Fig. 6: Illustration of the deformation of the underlying lattice for the Beta polymer. The left picture corresponds to the Beta polymer, whereas the right picture corresponds to the RWRE. Black arrows represent possible steps for the polymer path (resp. the RWRE) with their associated weight (resp. probability).

Finally, for any fixed \(t, n\in \mathbb {Z}_{\geqslant 0}\) such that \(t+1\geqslant n\), if we set \(x=t-2n+2\), then P(t, x) and Z(t, n) have the same probability law. Moreover, conditioning on the environment of the Beta polymer corresponds to conditioning on the probability of each step for the Beta RWRE.
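Proposition 2.6 can be sanity-checked by simulation. The sketch below assumes that recurrence (1), which is not reproduced in this section, reads \(Z(t+1,n)=B_{t+1,n}Z(t,n)+(1-B_{t+1,n})Z(t,n-1)\) with \(Z(0,n)=\mathbbm {1}_{n\geqslant 1}\); this is the form consistent with the factor \((\mu +z)/(\nu +z)\) in the proof of Proposition 4.4. It samples Z(t, n) and \(P(t, t-2n+2)\) in independent environments with \(\mu =\alpha \), \(\nu =\alpha +\beta \), and compares empirical first and second moments. Both first moments should equal the probability that t independent steps, each downward with probability \(\beta /(\alpha +\beta )\), contain at most \(n-1\) downward steps.

```python
import random
from math import comb


def beta_polymer_Z(t, n, mu, nu, rng):
    """Half-line to point Beta polymer partition function Z(t, n), using the assumed
    recurrence Z(s+1, m) = B Z(s, m) + (1 - B) Z(s, m-1), B ~ Beta(mu, nu - mu) i.i.d.,
    Z(0, m) = 1 for m >= 1, and Z(s, m) = 0 for m < 1."""
    Z = [0.0] + [1.0] * n                  # Z[m] for m = 0, ..., n at time 0
    for _ in range(t):
        new = [0.0] * (n + 1)              # new[0] stays 0 (convention Z = 0 for m < 1)
        for m in range(1, n + 1):
            B = rng.betavariate(mu, nu - mu)
            new[m] = B * Z[m] + (1 - B) * Z[m - 1]
        Z = new
    return Z[n]


def beta_rwre_P(t, x, alpha, beta, rng):
    """Quenched P(X_t >= x) for the Beta RWRE started at 0: a fresh B ~ Beta(alpha, beta)
    at each (site, time) is the probability of a +1 step, 1 - B that of a -1 step."""
    law = {0: 1.0}
    for _ in range(t):
        new = {}
        for y, p in law.items():
            B = rng.betavariate(alpha, beta)
            new[y + 1] = new.get(y + 1, 0.0) + p * B
            new[y - 1] = new.get(y - 1, 0.0) + p * (1 - B)
        law = new
    return sum(p for y, p in law.items() if y >= x)


def common_mean(t, n, alpha, beta):
    """Annealed value of E Z(t, n) = E P(t, t-2n+2): at most n-1 down-steps among t."""
    p_down = beta / (alpha + beta)
    return sum(comb(t, j) * p_down**j * (1 - p_down)**(t - j) for j in range(min(n, t + 1)))


rng = random.Random(2024)
alpha, beta, t, n = 1.5, 2.0, 6, 3
x = t - 2 * n + 2
Zs = [beta_polymer_Z(t, n, alpha, alpha + beta, rng) for _ in range(10000)]
Ps = [beta_rwre_P(t, x, alpha, beta, rng) for _ in range(10000)]
print(sum(Zs) / len(Zs), sum(Ps) / len(Ps), common_mean(t, n, alpha, beta))
```

Matching two moments is of course much weaker than equality in law; the point is only to make the correspondence between the two models concrete.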

4 Rigorous replica method for the Beta polymer

4.1 Moment formulas

Let \(\mathbb {W}^{k}\) be the Weyl chamber
\[\mathbb {W}^{k}=\left\lbrace \vec {n}=(n_1, \dots , n_k)\in \mathbb {Z}^k : n_1\geqslant n_2\geqslant \dots \geqslant n_k\right\rbrace .\]

For \(\vec {n}\in \mathbb {W}^{k}\), let us define
\[u(t,\vec {n})=\mathbb {E}\left[ Z(t,n_1)Z(t,n_2)\cdots Z(t,n_k)\right] , \qquad (20)\]
with the convention that \(Z(t,n)=0\) for \(n<1\). The recurrence relation (1) implies a recurrence relation for \(u(t, \vec {n})\). We are going to solve this recurrence to find a closed formula for \(u(t, \vec {n})\), using a variant of the Bethe ansatz. This is the analogue of Section 5 in [17]. Besides the strict weak polymer [17], such “replica method” calculations have been performed to study moments of the partition function for the continuum polymer [4, 13, 18], the semi-discrete polymer [4, 10], and the log-gamma polymer [4, 36]. However, in those models, the moment problems are ill-posed and one cannot rigorously recover the distribution from them. In the present case, since \(Z(t,n) \in [0,1]\), the moments do determine the distribution, as explained in Sect. 4.2.

Using the recurrence relation (1),

Let us first simplify this expression when \(k=c\) and \(\vec {n} = (n, \dots , n)\) is a vector of length c with all components equal. In this case, setting \(B=B_{t+1, n}\) to simplify notation, we have

The recurrence relation can be further simplified using the next lemma.

Lemma 4.1

Let B be a random variable following the \(Beta(\mu , \nu -\mu )\) distribution. Then for integers \(0\leqslant j\leqslant c\),

where \((a)_k\) is the Pochhammer symbol \( (a)_k= a (a+1) \dots (a+k-1)\) and \((a)_0 =1 \).

Proof

By the definition of the Beta law, we have

\(\square \)
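The display of Lemma 4.1 is not reproduced in this version. The standard identity behind it is \(\mathbb {E}[B^j(1-B)^{c-j}] = (\mu )_j(\nu -\mu )_{c-j}/(\nu )_c\) for \(B\sim Beta(\mu ,\nu -\mu )\), which follows from \(\mathbb {E}[B^j(1-B)^{c-j}]=\mathrm {B}(\mu +j,\nu -\mu +c-j)/\mathrm {B}(\mu ,\nu -\mu )\), with \(\mathrm {B}(x,y)\) the Beta function, and from \(\Gamma (a+k)=(a)_k\Gamma (a)\). The sketch checks it against the Beta-function form and a Monte Carlo estimate, and also checks that the weights \(\binom{c}{j}\mathbb {E}[B^j(1-B)^{c-j}]\) sum to 1, as they must for the Beta-Binomial interpretation in Remark 4.3.

```python
import math
import random
from math import comb


def pochhammer(a, k):
    """(a)_k = a (a+1) ... (a+k-1), with (a)_0 = 1."""
    out = 1.0
    for i in range(k):
        out *= a + i
    return out


def mixed_moment(mu, nu, j, c):
    """E[B^j (1-B)^(c-j)] for B ~ Beta(mu, nu - mu), via Pochhammer symbols."""
    return pochhammer(mu, j) * pochhammer(nu - mu, c - j) / pochhammer(nu, c)


def mixed_moment_beta_fn(mu, nu, j, c):
    """The same quantity written as B(mu + j, nu - mu + c - j) / B(mu, nu - mu)."""
    lg = math.lgamma
    return math.exp(lg(mu + j) + lg(nu - mu + c - j) - lg(nu + c)
                    - lg(mu) - lg(nu - mu) + lg(nu))


def mixed_moment_monte_carlo(mu, nu, j, c, samples, rng):
    """Empirical average of B^j (1-B)^(c-j) over `samples` Beta draws."""
    total = 0.0
    for _ in range(samples):
        B = rng.betavariate(mu, nu - mu)
        total += B**j * (1 - B)**(c - j)
    return total / samples


mu, nu, c = 0.7, 2.5, 4
for j in range(c + 1):
    print(j, mixed_moment(mu, nu, j, c), mixed_moment_beta_fn(mu, nu, j, c))
```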

In order to write the general case, we need a little more notation. For \(\vec {n}\in \mathbb {W}^{k}\), we denote by \(c_1, c_2, \dots , c_\ell \) the sizes of clusters of equal components in \(\vec {n}\). More precisely, \(c_1, c_2, \dots , c_{\ell }\) are positive integers such that \(\sum c_i = k\) and

Define also the operator \(\tau ^{(i)}\) acting on a function \(f:\mathbb {W}^{k}\rightarrow \mathbb {R}\) by

Using Lemma 4.1, we have that

In words, for each \(\ell \)-tuple \(j_1, \dots , j_{\ell }\) such that \(0\leqslant j_i \leqslant c_i\), we decrease by 1 the last \(j_i\) coordinates of cluster i in \(\vec {n}\), simultaneously for each cluster, and multiply by

Lemma 4.2

Let X, Y generate an associative algebra such that

Then we have the following non-commutative binomial identity:

where \(p=\frac{\nu -\mu }{\nu }\).

Proof

It is shown in [30, Theorem 1] that if X and Y satisfy the quadratic homogeneous relation

with

and

then

where \(\varphi _{q, \bar{\mu }, \bar{\nu }}(j\vert n)\) are the q-Hahn weights defined in (14). Our lemma is the \(q\rightarrow 1\) degeneration of this result. \(\square \)

Let \( \mathcal {L}^{\mathrm {cluster}}_{c}\) denote the operator

which appears in the R.H.S. of (22), and \(\mathcal {L}^{free}_{c}\) the operator

where \(\nabla _i = p\tau ^{(i)} + (1-p)\). It is worth noticing that for \(c=1\), \(\mathcal {L}^{\mathrm {cluster}}_{c} = \mathcal {L}^{free}_{c}\).

For a function \(f:\mathbb {Z}^c\rightarrow \mathbb {C}\), we formally identify monomials \(X_1 X_2 \dots X_c\), where \(X_i\in \lbrace X,Y\rbrace \), with terms \(f(\vec {n})\) where, for all \(1\leqslant i \leqslant c\), \(n_{c+1-i}=n-1\) if \(X_i=X\) and \(n_{c+1-i}=n\) if \(X_i=Y\). Using this identification, the binomial formula from Lemma 4.2 says that the operators \(\mathcal {L}^{free}_{c}\) and \(\mathcal {L}^{\mathrm {cluster}}_{c}\) act identically on functions f satisfying the condition

One notices that the operator involved in (22) acts independently on each cluster of equal components, as \(\mathcal {L}^{\mathrm {cluster}}_{c_i}\) on the i-th cluster. It follows that if a function \(u :\mathbb {Z}_{\geqslant 0}\times \mathbb {Z}^k \rightarrow \mathbb {C}\) satisfies the boundary condition

for all \(\vec {n}\) such that \(n_i=n_{i+1}\) for some \(1\leqslant i < k\), and satisfies the free evolution equation

for all \(\vec {n}\in \mathbb {Z}^k\), then the restriction of \(u(t,\vec {n})\) to \(\mathbb {W}^{k}\) satisfies the true evolution equation (22).

Remark 4.3

The coefficients \(\left( {\begin{array}{c}c\\ j\end{array}}\right) \frac{(\nu -\mu )_j(\mu )_{c-j}}{(\nu )_c}\) that appear in the true evolution equation (22) are probabilities of the Beta-binomial distribution with parameters \(c, \mu , \nu -\mu \). Hence, the true evolution equation could be interpreted as the “evolution equation” for a series of urns where each urn evolves according to the Pólya urn scheme. Such dynamics could be interpreted as the \(q\rightarrow 1\) degeneration of the q-Hahn Boson, which is dual to the q-Hahn TASEP [15].

Proposition 4.4

For \(n_1 \geqslant n_2\geqslant \dots \geqslant n_k\geqslant 1\), one has the following moment formula,

where the contour for \(z_k\) is a small circle around the origin, and the contour for \(z_j\) contains the contour for \(z_{j+1}\) shifted by \(+1\) for all \(j=1, \dots , k-1\), as well as the origin, but all contours exclude \(-\nu \).

Proof

We show that the right-hand side of (26) satisfies the free evolution equation, the boundary condition and the initial condition for \(u(0,\vec {n})\) for \(\vec {n}\in \mathbb {W}^{k}\) (the initial condition outside \(\mathbb {W}^{k}\) is inconsequential). The above discussion shows that the restriction to \(\vec {n}\in \mathbb {W}^{k}\) then solves the true evolution equation (22). By the definition of the function u in (20) and the initial condition for the half-line to point polymer, \(u(0,\vec {n}) = \prod _{i=1}^k \mathbbm {1}_{n_i\geqslant 1}=\mathbbm {1}_{n_k\geqslant 1}\) (the second equality holds because the \(n_i\)’s are ordered). Let us consider the right-hand side of (26) when \(t=0\). If \(n_k\leqslant 0\), there is no pole at zero in the variable \(z_k\), so one can shrink the \(z_k\) contour to zero, and consequently \(u(0,\vec {n})=0\). When \(n_k>0\) (and consequently all \(n_i\)’s are positive), there is no pole at \(-\nu \) for \(t=0\), so that one can successively send to infinity the contours for the variables \(z_1\), \(z_{2}, \dots \), starting from the outermost contour so that no other pole is crossed. Since the residue at infinity is one for each variable, \(u(0,\vec {n})=1\). Hence, the initial condition is satisfied.

In order to show that the boundary condition is satisfied, we assume that \(n_i=n_{i+1}\) for some i. Let us apply the operator

inside the integrand. This brings into the integrand a factor

Since it cancels the pole for \(z_i=z_{i+1}+1\), one can use the same contour for both variables, and since the integrand is now antisymmetric in the variables \((z_i, z_{i+1})\) the integral is zero as desired.

In order to show that the free evolution equation is satisfied, it is enough to show that applying the operator \(p\tau ^{(i)} + (1-p)\) for i from 1 to k inside the integrand brings an extra factor \(\prod _{j=1}^{k}\frac{\mu +z_j}{\nu +z_j}\). This is clearly true since

\(\square \)
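As a concrete check of the moment formula in its simplest case, take k = 1. We assume here that (26) then reads \(\mathbb {E}[Z(t,n)]=\frac{1}{2i\pi }\oint \big (\frac{\nu +z}{z}\big )^{n}\big (\frac{\mu +z}{\nu +z}\big )^{t}\frac{\mathrm {d}z}{\nu +z}\) over a small circle around 0 excluding \(-\nu \); this is our reconstruction, chosen so that the free evolution equation and the initial condition verified in the proof above hold. On the other hand, if recurrence (1) has the form \(Z(t+1,n)=BZ(t,n)+(1-B)Z(t,n-1)\) with \(\mathbb {E}[B]=\mu /\nu \) (again an assumption here), taking expectations gives \(\mathbb {E}[Z(t,n)]=\mathbb {P}(\mathrm {Bin}(t,p)\leqslant n-1)\) with \(p=(\nu -\mu )/\nu \). The sketch evaluates the contour integral with the trapezoidal rule on the circle \(\vert z\vert =\nu /2\) and compares.

```python
import cmath
from math import comb


def first_moment_contour(t, n, mu, nu, nodes=512):
    """Trapezoidal rule for (1/2 pi i) * integral over |z| = nu/2 of
    ((nu+z)/z)**n * ((mu+z)/(nu+z))**t / (nu+z) dz  (assumed k = 1 case of (26))."""
    radius = nu / 2                        # encloses 0, excludes the pole at -nu
    total = 0j
    for m in range(nodes):
        z = radius * cmath.exp(2j * cmath.pi * m / nodes)
        f = ((nu + z) / z) ** n * ((mu + z) / (nu + z)) ** t / (nu + z)
        total += f * z                     # dz / (2 pi i) = z d(theta) / (2 pi)
    return (total / nodes).real


def first_moment_binomial(t, n, mu, nu):
    """P(Binomial(t, p) <= n - 1) with p = (nu - mu) / nu."""
    p = (nu - mu) / nu
    return sum(comb(t, j) * p**j * (1 - p)**(t - j) for j in range(max(0, min(n, t + 1))))


for t, n in [(0, 1), (3, 2), (5, 3), (6, 1)]:
    print(t, n, first_moment_contour(t, n, 0.8, 2.3), first_moment_binomial(t, n, 0.8, 2.3))
```

The trapezoidal rule converges geometrically here because the integrand is analytic in an annulus around the circle, so a few hundred nodes reach machine precision.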

Remark 4.5

It is possible to prove a generalization of Proposition 4.4 where the parameter \(\mu \) depends on t. In this generalization, an edge starting from a point (s, n), for any n, would have weight B or \(1-B\) (depending on the direction of the edge), where B is a random variable distributed according to the Beta distribution with parameters \((\mu _s, \nu -\mu _s)\). In the formula (26), the factor \(\left( \frac{\mu + z_j}{\nu +z_j}\right) ^t\) would be replaced by

Such moment formulas with time-inhomogeneous parameters have been proved for the discrete-time q-TASEP [3] and for the q-Hahn TASEP in [15, Section 2.4] (see also the discussion in [16, Section 5.7], which deals with a generalization of the q-Hahn TASEP). In all these cases, this makes it possible to prove Fredholm determinant formulas with time-dependent parameters, using the same method as in the homogeneous case. It is not clear, however, whether one can find moment formulas with a parameter inhomogeneity depending on n (e.g. where the parameter \(\nu \) would depend on n).

Proposition 4.4 provides an integral formula for the moments of Z(t, n). In order to form the generating series, it is convenient to transform the formula so that all integrations are on the same contour.

Proposition 4.6

For all \(n,t \geqslant 0\), we have

where

where \(g^{\mathrm {BP}}\) is defined in (5) and the integration contour is a small circle around 0 excluding \(-\nu \). For a partition \(\lambda \vdash k\) (i.e. \(\sum _i \lambda _i =k\)) we write \(\lambda =1^{m_1} 2^{m_2}\dots \), meaning that \(m_j\) is the number of indices i such that \(\lambda _i=j\), and \(\ell (\lambda )=\sum _j m_j\) is the number of non-zero parts of \(\lambda \).
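The multiplicity notation can be made concrete with a few lines of code. The helper below (ours, purely illustrative) enumerates the partitions \(\lambda \vdash k\) as non-increasing tuples and reads off \(m_j\) and \(\ell (\lambda )\); it does not evaluate the integrand of Proposition 4.6, whose display is not reproduced here.

```python
from collections import Counter


def partitions(k, largest=None):
    """Yield the partitions of k as non-increasing tuples, largest part first."""
    if largest is None:
        largest = k
    if k == 0:
        yield ()
        return
    for first in range(min(k, largest), 0, -1):
        for rest in partitions(k - first, first):
            yield (first,) + rest


def multiplicities(lam):
    """{j: m_j}, so that lam = 1^{m_1} 2^{m_2} ... in multiplicity notation."""
    return dict(sorted(Counter(lam).items()))


def length(lam):
    """ell(lam): number of non-zero parts, i.e. the sum of the multiplicities m_j."""
    return sum(multiplicities(lam).values())


for lam in partitions(4):
    print(lam, multiplicities(lam), length(lam))
```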

Proof

This type of deduction, called the contour shift argument, has already occurred in the context of the q-Whittaker process in [4, Section 3.2.1]. See [8], in particular Proposition 7.4, and references therein for more background on the contour shift argument. The present formulation corresponds to a degeneration when \(q\rightarrow 1\) of Proposition 3.2.1 in [4].

One starts with the moment formula given by Proposition 4.4:

We need to shrink all contours to a small circle around 0. During the deformation of contours, one encounters all poles of the product \(\prod _{A<B} \frac{z_A-z_B}{z_A-z_B-1}\). Thus, a direct proof would amount to carefully keeping track of the residues. Although one could adapt the proof of [8, Proposition 7.4] to the present setting, we refer to Proposition 6.2.7 in [4], which provides a very similar formula. The only modification is that the function f that we consider has a pole at \(-\nu \), but this does not play any role in the deformation of contours.

It is also worth remarking that applying Proposition 3.2.1 in [4] to q-Hahn moment formula [15, Theorem 1.8] and taking a suitable limit yields the statement of Proposition 4.6. \(\square \)

4.2 Proof of Theorem 2.12

Thanks to Proposition 4.6, the moments of Z(t, n) have a suitable form for taking the generating series. Let us denote \(\mu _k = \mathbb {E}\left[ Z(t,n)^k\right] \). A degeneration when q goes to 1 of Proposition 3.2.8 in [4] shows that

where \(\det (I+K)\) is the formal Fredholm determinant expansion of the operator K defined by the integral kernel

and \(C_0\) is a positively oriented circular contour around 0 excluding \(-\nu \). Since \(f(v+n_1)\) is uniformly bounded for \(v\in C_0\) and \(n_1\geqslant 1\), and \(v_1+n_1-v_2\) is uniformly bounded away from 0 for \(v_1, v_2 \in C_0, n_1\geqslant 1\), the identity also holds numerically. Since \(\vert Z(t, n)\vert \leqslant 1\), one can exchange summation and expectation so that for any \(u\in \mathbb {C}\)

It is useful to notice that

Next, we want to rewrite \(\det (I+K)\) as the Fredholm determinant of an operator acting on a single contour. For that purpose we use the following Mellin–Barnes integral formula:

Lemma 4.7

For \(u\in \mathbb {C}{\setminus } \mathbb {R}_{>0}\) with \(\vert u\vert <1\),

where \(z^s\) is defined with respect to a branch cut along \(z\in \mathbb {R}_{\leqslant 0}\).

Proof

The statement of the Lemma is very similar to [4, Lemma 3.2.13].

Since \(\mathrm{Res}_{s=k} \left( \Gamma (-s)\Gamma (1+s)\right) = (-1)^{k+1}\), we have that

where \(\mathcal {H}\) is a negatively oriented integration contour enclosing all positive integers. For the identity to be valid, the L.H.S. of (29) must converge, and the contour must be approximated by a sequence of contours \(\mathcal {H}_k\) enclosing the integers \(1, \dots , k\) such that the integral along the symmetric difference \(\mathcal {H}{\setminus } \mathcal {H}_k\) goes to zero.

The following estimates show that one can choose the contour \(\mathcal {H}_k\) as a rectangular contour connecting the points \(1/2+i\), \(k+1/2+i\), \(k+1/2-i\) and \(1/2-i\), and the contour \(\mathcal {H}\) as the infinite contour from \(\infty -i\) to \(1/2-i\) to \(1/2+i\) to \(\infty +i\).

We first need an estimate for the Gamma function [19, Chapter 1, 1.18 (2)]: for any \(\delta >0\)

Then recall that

Using (30),

It implies that for s going to \(\infty e^{i\phi }\) with \(\phi \in [-\pi /2, \pi /2]\), \(1/g^{\mathrm {BP}}(v+s)\) has exponential decay in \(\vert s\vert \). Moreover, for s going to \(\infty e^{i\phi }\) with \(\phi \in [-\pi /2, \pi /2]\) and \(\phi \ne 0\),

is bounded. Thus, one can freely deform the integration contour \(\mathcal {H}\) in (29) to become the straight line from \(1/2 -i\infty \) to \(1/2+i\infty \). \(\square \)
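A toy instance of the Mellin–Barnes mechanism may help. Replacing the function of k that multiplies \(u^k\) in Lemma 4.7 by the constant 1 (our simplification), the integral of \(\Gamma (-s)\Gamma (1+s)(-u)^s\) over \(1/2+i\mathbb {R}\), divided by \(2i\pi \), must reproduce \(\sum _{k\geqslant 1}u^k=u/(1-u)\) for \(-1<u<0\): closing the contour to the right picks up the residues \((-1)^{k+1}(-u)^k=-u^k\), with an extra minus sign from the negative orientation. The sketch checks this and the residue value numerically; because math.gamma accepts only real arguments, on the line it uses the reflection formula \(\Gamma (-s)\Gamma (1+s)=-\pi /\sin (\pi s)\).

```python
import cmath
import math


def gamma_product(s):
    """Gamma(-s) * Gamma(1+s) = -pi / sin(pi s), by the reflection formula.
    (math.gamma is real-only, so on the line 1/2 + iR we use this closed form.)"""
    return -math.pi / cmath.sin(math.pi * s)


def mellin_barnes_geometric(u, half_width=40.0, steps=4001):
    """Trapezoidal value of (1/2 pi i) * integral over s in 1/2 + iR of
    Gamma(-s) Gamma(1+s) (-u)**s ds, for real u in (-1, 0)."""
    h = 2 * half_width / (steps - 1)
    log_minus_u = math.log(-u)                 # -u > 0, so (-u)**s = exp(s log(-u))
    total = 0j
    for m in range(steps):
        s = 0.5 + 1j * (-half_width + m * h)
        total += gamma_product(s) * cmath.exp(s * log_minus_u)
    return (total * h / (2 * math.pi)).real    # ds = i dy, so ds / (2 pi i) = dy / (2 pi)


def residue_at(k, eps=1e-7):
    """Numerical residue of Gamma(-s) Gamma(1+s) at the integer s = k >= 1."""
    s = k + eps
    return eps * math.gamma(-s) * math.gamma(1 + s)


for u in (-0.1, -0.5, -0.9):
    print(u, mellin_barnes_geometric(u), u / (1 - u))
```

The integrand decays like \(e^{-\pi \vert y\vert }\) on the line, which is why truncating at \(\vert y\vert =40\) is harmless.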

This shows that for any \(u\in \mathbb {C}{\setminus } \mathbb {R}_{>0}\) with \(\vert u\vert <1\), one has that

where the kernel \(K^{\mathrm {BP}}_u\) is defined in the statement of Theorem 2.12. One extends the result to any \(u\in \mathbb {C}{\setminus } \mathbb {R}_{>0}\) by analytic continuation. The left-hand side in (31) is analytic since \(\vert Z(t,n)\vert \leqslant 1\). The right-hand side is analytic because the Fredholm determinant expansion is absolutely summable and integrable. Indeed, first notice that since the contour \(C_0\) is compact, it is clear that \(K^{\mathrm {BP}}_{u}(v, v')\) is uniformly bounded for \(v,v'\in C_0\). Moreover, each term in the Fredholm determinant expansion

can be bounded using Hadamard’s bound, so that the sum absolutely converges.
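For completeness, here is the standard estimate behind the last sentence, written with our own notation: M denotes a uniform bound on \(\vert K^{\mathrm {BP}}_u\vert \) over \(C_0\times C_0\) and \(\vert C_0\vert \) the length of \(C_0\). Hadamard's inequality bounds an \(n\times n\) determinant by the product of the Euclidean norms of its columns, each of which is at most \(\sqrt{n}M\), and \(n!\geqslant (n/e)^n\), so that

\[
\frac{1}{n!}\int _{C_0^n}\Big \vert \det \big [K^{\mathrm {BP}}_u(v_i,v_j)\big ]_{i,j=1}^{n}\Big \vert \prod _{i=1}^{n}\vert \mathrm {d}v_i\vert \;\leqslant \; \frac{n^{n/2}\,(M\vert C_0\vert )^{n}}{n!}\;\leqslant \;\Big (\frac{e\,M\vert C_0\vert }{\sqrt{n}}\Big )^{n},
\]

which is summable in n.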

5 Zero-temperature limit

5.1 Proof of Proposition 2.10

In this section, we prove that the Bernoulli-Exponential first passage percolation model is the zero-temperature limit of the Beta-RWRE. The zero temperature limit corresponds to sending the parameters \(\alpha , \beta \) of the Beta RWRE to zero.

Proof

We first show how the transition probabilities for the Beta RWRE degenerate in the zero temperature limit.

Lemma 5.1

Fix \(a,b >0\). For \(\epsilon >0\), let \(B_{\epsilon }\) be a Beta distributed random variable with parameters \((\epsilon a, \epsilon b)\). We have the convergence in distribution

as \(\epsilon \) goes to zero, where \(\xi \) is a Bernoulli random variable with parameter \(b/(a+b)\) and \((E_a, E_b)\) are exponential random variables with parameters a and b, independent of \(\xi \).

Proof

Let \(f, g :\mathbb {R}\rightarrow \mathbb {R}\) be continuous bounded functions.

In order to compute the limit of (32), we evaluate separately the contributions of the integral between 0 and 1/2 and between 1/2 and 1. By making the change of variable \(z=-\epsilon \log (x)\), we have that

Since

the limit of the right-hand-side in (33) is

The contribution of the integral in (32) between 1/2 and 1 is computed in the same way, and we find that

which proves the claim. \(\square \)

Remark 5.2

Whether or not \(E_a\) and \(E_b\) are independent is irrelevant. However, it is important that \(E_a\) and \(E_b\) are independent of the Bernoulli random variable \(\xi \).
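Lemma 5.1 is easy to visualise numerically. The sketch below samples \(B\sim Beta(\epsilon a,\epsilon b)\) through two Gamma variables, \(B=X/(X+Y)\), and takes logarithms of X and Y directly, because in floating point \(1-B\) rounds to 0 for a sizeable fraction of samples when \(\epsilon \) is small. For small \(\epsilon \) one should see that \(-\epsilon \log B\) exceeds \(-\epsilon \log (1-B)\) with frequency close to \(b/(a+b)\), that the smaller of the two coordinates is close to 0, and that \(\mathbb {E}[-\epsilon \log B]\) is close to \(\mathbb {E}[\xi E_a]=b/(a(a+b))\). The values \(\epsilon =0.05\), a = 1, b = 2 are arbitrary choices.

```python
import math
import random


def zero_temperature_pair(eps, a, b, rng):
    """Sample (-eps log B, -eps log(1 - B)) for B ~ Beta(eps*a, eps*b), via B = X/(X+Y)
    with X ~ Gamma(eps*a), Y ~ Gamma(eps*b); the logs are taken on X and Y directly."""
    while True:
        x = rng.gammavariate(eps * a, 1.0)
        y = rng.gammavariate(eps * b, 1.0)
        if x > 0.0 and y > 0.0:               # resample on (very rare) underflow to 0.0
            log_total = math.log(x + y)
            return -eps * (math.log(x) - log_total), -eps * (math.log(y) - log_total)


rng = random.Random(7)
eps, a, b = 0.05, 1.0, 2.0
pairs = [zero_temperature_pair(eps, a, b, rng) for _ in range(40000)]
frac_first_larger = sum(1 for p, q in pairs if p > q) / len(pairs)   # close to b/(a+b)
mean_first = sum(p for p, _ in pairs) / len(pairs)                    # close to b/(a(a+b))
mean_smaller = sum(min(p, q) for p, q in pairs) / len(pairs)          # close to 0
print(frac_first_larger, mean_first, mean_smaller)
```

Since one of B and \(1-B\) is always at least 1/2, the smaller coordinate never exceeds \(\epsilon \log 2\), which makes the last printed value small for any seed.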

Let \(\alpha _{\epsilon }=\epsilon a, \beta _{\epsilon } = \epsilon b\) and let \(P_{\epsilon }(t,x)\) be the (quenched) distribution function of the endpoint at time t for the Beta random walk with parameters \(\alpha _{\epsilon }\) and \(\beta _{\epsilon }\). Let T(n, m) be the first-passage time in the Bernoulli-Exponential model with parameters a, b.

It is convenient to define the analogue of the set of weights \(w_e\) of the Beta polymer in the context of the Beta RWRE. For an edge e in \((\mathbb {Z}_{\geqslant 0})^2 \) we define \(p_e\) by

where the variables \(B_{\cdot , \cdot }\) define the environment of the random walk. Lemma 5.1 implies that as \(\epsilon \) goes to zero, we have the weak convergence

where the minimum is taken over up-right paths, and the passage times \(t_e\) are defined in (3).

Since the times \(t_e\) in the FPP model are either zero or exponential, and there is at most one path with zero passage time, the minimum over paths of \(\sum _{e\in \pi } t_e\) is attained for a unique path with probability one. By the principle of the largest term, as \(\epsilon \rightarrow 0\),

has the same limit as

Since the family of rescaled weights \((-\epsilon \log (p_e))_e\) weakly converges to \((t_e)_e\), then

Hence, for any \(n, m\geqslant 0\), \(-\epsilon \log P_{\epsilon }(n+m, m-n)\) converges weakly to T(n, m) as \(\epsilon \) goes to zero. \(\square \)
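The principle of the largest term used above rests on the elementary bound \(\min _{\pi }S_{\pi }-\epsilon \log N\leqslant -\epsilon \log \sum _{\pi }e^{-S_{\pi }/\epsilon }\leqslant \min _{\pi }S_{\pi }\), where N is the number of paths. The sketch below computes both the minimum and this soft minimum over up-right lattice paths by dynamic programming (min-plus and log-sum-exp recursions). The edge weights mimic the Bernoulli-Exponential environment suggested by Lemma 5.1, but the lattice orientation and the assignment of \(\xi E_a\) and \((1-\xi )E_b\) to the right and up edges are hypothetical choices of ours, not the convention of (3).

```python
import math
import random


def hypothetical_weights(n, m, a, b, rng):
    """Edge weights in the spirit of Lemma 5.1 (orientation is our assumption): at each
    vertex, with probability b/(a+b) the 'right' edge gets Exp(a) and 'up' gets 0;
    otherwise 'right' gets 0 and 'up' gets Exp(b)."""
    weights = {}
    for i in range(n + 1):
        for j in range(m + 1):
            if rng.random() < b / (a + b):
                weights[(i, j, "right")], weights[(i, j, "up")] = rng.expovariate(a), 0.0
            else:
                weights[(i, j, "right")], weights[(i, j, "up")] = 0.0, rng.expovariate(b)
    return weights


def passage_and_soft_passage(weights, n, m, eps):
    """(min over up-right paths (0,0) -> (n,m) of the path weight,
    -eps * log of the sum over the same paths of exp(-weight / eps))."""
    best, soft = {(0, 0): 0.0}, {(0, 0): 0.0}
    for i in range(n + 1):
        for j in range(m + 1):
            if (i, j) == (0, 0):
                continue
            hard, smooth = [], []
            if i > 0:                                  # arrive through a 'right' edge
                w = weights[(i - 1, j, "right")]
                hard.append(best[(i - 1, j)] + w)
                smooth.append(soft[(i - 1, j)] + w)
            if j > 0:                                  # arrive through an 'up' edge
                w = weights[(i, j - 1, "up")]
                hard.append(best[(i, j - 1)] + w)
                smooth.append(soft[(i, j - 1)] + w)
            best[(i, j)] = min(hard)
            low = min(smooth)                          # numerically stable log-sum-exp
            soft[(i, j)] = low - eps * math.log(sum(math.exp(-(c - low) / eps) for c in smooth))
    return best[(n, m)], soft[(n, m)]


rng = random.Random(11)
n, m = 6, 5
weights = hypothetical_weights(n, m, 1.0, 2.0, rng)
for eps in (1.0, 0.1, 0.01):
    T, S = passage_and_soft_passage(weights, n, m, eps)
    print(f"eps={eps}: min = {T:.4f}, soft min = {S:.4f}")
```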

5.2 Proof of Theorem 2.18

Theorem 2.18 states that for \(r\in \mathbb {R}_{>0}\), one has

where \(C'_0\) is a small positively oriented circle containing 0 but not \(-\nu \), and \(K^{\mathrm {FPP}}_r :\mathbb {L}^2(C'_0)\rightarrow \mathbb {L}^2(C'_0)\) is defined by its integral kernel

where

Proof

The proof splits into two pieces. We first show that under appropriate scalings, the Laplace transform \(\mathbb {E}[ e^{u P_{\epsilon }(n+m, m-n)} ]\) converges to \(\mathbb {P}(T(n,m)\geqslant r)\). Then we show that the Fredholm determinant \(\det (I+K^{\mathrm {BP}}_u)\) from Theorem 2.13 converges to \(\det (I+K^{\mathrm {FPP}}_r)_{\mathbb {L}^2(C'_0)}\).

First step. We have an exact formula for \(\mathbb {E}[ e^{u P_{\epsilon }(n+m, m-n)} ]\). Let us scale u as \(u=-\exp (\epsilon ^{-1}r)\) so that

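If \(f_{\epsilon }(x):= \exp (-e^{-\epsilon ^{-1}x})\), then each function \(f_{\epsilon }\) maps \(\mathbb {R}\) to (0, 1) and is strictly increasing, with limit 1 at \(+\infty \) and 0 at \(-\infty \), and for each \(\delta >0\) the sequence \(\lbrace f_{\epsilon } \rbrace \) converges uniformly on \(\mathbb {R}{\setminus } [-\delta , \delta ]\) to \(\mathbbm {1}_{x>0}\). We define the r-shift of \(f_{\epsilon }\) as \(f_{\epsilon }^r(x) = f_{\epsilon }(x-r)\). Then \(\mathbb {E}[ e^{u P_{\epsilon }(n+m, m-n)} ] = \mathbb {E}[ f_{\epsilon }^r(-\epsilon \log P_{\epsilon }(n+m, m-n)) ]\), since for every \(P>0\), \(e^{uP}=\exp \big (-e^{\epsilon ^{-1}(r+\epsilon \log P)}\big )=f_{\epsilon }(-\epsilon \log P-r)\).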

Since the variable T(n, m) has an atom at zero, we are not exactly in the situation of Lemma 4.1.38 in [4], but we can adapt the proof. Let \(s<r<w\) (we write w rather than u to avoid a clash with the Laplace variable u). By the properties of the functions \(f_{\epsilon }\) mentioned above, we have that for any \( \eta >0\), there exists an \(\epsilon _0\) such that for any \(\epsilon <\epsilon _0\),

Since we have established the weak convergence of \(-\epsilon \log (P_{\epsilon }(n+m, m-n))\), one can take limits as \(\epsilon \) goes to zero in the probabilities and we find that

Now we let s and w tend to r and notice that T(n, m) can be decomposed as an atom at zero and an absolutely continuous part. Thus, for any \(r>0\),

Second step. We shall prove that the limit when \(\epsilon \) goes to zero of \(\mathbb {E}[ e^{u P_{\epsilon }(n+m, m-n)} ]\) is \(\det (I+K^{\mathrm {FPP}}_r)_{\mathbb {L}^2(C_0)}\) where \(K_r^{\mathrm {FPP}}\) is defined as in Theorem 2.18. For that, we take the limit of the Fredholm determinant of \(K^{RW}\) from Theorem 2.13. Let us use the change of variables

Assuming that the limit of the Fredholm determinant is the Fredholm determinant of the limit, which we prove below, we have to take the limit of \(\epsilon K^{RW}(\epsilon \tilde{v}, \epsilon \tilde{v}')\). The factor \(\epsilon \) in front of \(K^{RW}\) comes from the Jacobian of the change of variables \(v=\epsilon \tilde{v}\) and \(v'=\epsilon \tilde{v}'\). For any \(1> \epsilon >0\) the kernel \(K^{RW}(v,v')\) can be written as an integral over \(\frac{1}{2} \epsilon + i\mathbb {R}\) instead of an integral over \(\frac{1}{2}+i\mathbb {R}\), since we do not cross any singularity of the integrand during the contour deformation, and the integrand has exponential decay. Thus, one can write

With \(u=-\exp \left( \epsilon ^{-1}r\right) \), we have that \((-u)^{\epsilon \tilde{s}} = e^{\tilde{s}r}\). Moreover, since

we have that

where \(g^{\mathrm {FPP}}\) is defined in (35), and

Because the integrand in (34) is not absolutely integrable, one cannot apply dominated convergence directly. Instead, we split the integral (36) into two pieces: the integral over s when \({{\mathrm {Im}}}[\epsilon s ]<1/4\) and the integral over s when \({{\mathrm {Im}}}[\epsilon s ] \geqslant 1/4\). Let us begin with some estimates. Since the function \(z\mapsto z/\sin (z)\) is holomorphic on a neighbourhood of the closed disk of radius 1/2 around zero, there exists a constant \(C>0\) such that for \(s\in 1/2+i\mathbb {R}\) and \(\epsilon >0\) such that \(\vert \epsilon s \vert <1/2\), we have

To lighten notation, we denote

The variables \(\tilde{v}\) and \(\tilde{v}'\) are fixed for the moment. We know that \(G(\epsilon , \tilde{s})\) is bounded for \(\epsilon \) close to zero and \( \tilde{s}\in 1/2+i\mathbb {R}\). Moreover, there exists a constant \(C'>0\) such that for \(\vert \epsilon s \vert <1/2\),

We have the decomposition

The first integral in the R.H.S of (37) can be bounded by

which is \(\mathcal {O}(\epsilon )\). The second integral in the R.H.S of (37) can be bounded by

which is \(\mathcal {O}(\epsilon \log (\epsilon ^{-1}))\). The third integral in the R.H.S of (37) converges to a limit as \(\epsilon \) goes to zero, even if the integrand is not absolutely integrable. The limit is the improper integral

It remains to show that we have made a negligible error when cutting the tails of the integral. We have

The first integral in the R.H.S of (38) goes to zero by dominated convergence, and the second integral in the R.H.S of (38) goes to zero by the Riemann-Lebesgue lemma. At this point we have shown that for any \(\tilde{v}, \tilde{v}'\in C_0\),

Observe now that the kernel \(K^{\mathrm {FPP}}_r(\tilde{v},\tilde{v}')\) is bounded as \(\tilde{v}, \tilde{v}'\) vary along their contour. Using Hadamard’s bound, one can bound the Fredholm series expansion of \(K^{\mathrm {FPP}}_r\) by an absolutely convergent series of integrals, and conclude by dominated convergence that under the scalings above

\(\square \)

6 Asymptotic analysis of the Beta RWRE

Let us first define the Tracy–Widom distribution governing the fluctuations of extreme eigenvalues of Gaussian Hermitian random matrices. We refer to [4, Section 3.2.2] for an introduction to Fredholm determinants.

Definition 6.1

The distribution function \(F_\mathrm{GUE}(x)\) of the GUE Tracy–Widom distribution is defined by \(F_\mathrm{GUE}(x)=\det (I-K_\mathrm{Ai})_{\mathbb {L}^2(x,+\infty )}\) where \(K_\mathrm{Ai}\) is the Airy kernel,

where the contours for z and w do not intersect. There is some freedom in the choice of contours. For instance, one can choose the contour for z (resp. w) to be the union of two infinite rays departing from 1 (resp. 0) in directions \(\pi /3\) and \(-\pi /3\) (resp. \(2\pi /3\) and \(-2\pi /3\)).
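Fredholm determinants on contours, such as \(F_\mathrm{GUE}\) above or \(\det (I+K^{\mathrm {BP}}_u)_{\mathbb {L}^2(C_0)}\), are in practice evaluated by Nyström-type quadrature (Bornemann's method). This is a standard numerical technique brought in here for illustration only; it is not used in the proofs. The sketch approximates \(\det (I+K)\) on \(\mathbb {L}^2\) of the circle \(\vert v\vert =r\), with the convention \((Kf)(v)=\int K(v,w)f(w)\,\mathrm {d}w\), using the trapezoidal rule, and checks it on the kernel \(t/(2i\pi (w-tv))\), which acts as \(f(v)\mapsto tf(tv)\) by Cauchy's formula and hence has determinant \(\prod _{k\geqslant 0}(1+t^{k+1})\).

```python
import cmath


def determinant(matrix):
    """Determinant of a complex square matrix by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    size = len(a)
    result = 1 + 0j
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0j
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return result


def fredholm_det_circle(kernel, radius=1.0, nodes=64):
    """Nystrom approximation of det(I + K) on L^2 of the positively oriented circle
    |v| = radius, where (K f)(v) = integral of K(v, w) f(w) dw over the circle."""
    points = [radius * cmath.exp(2j * cmath.pi * m / nodes) for m in range(nodes)]
    weights = [2j * cmath.pi * w / nodes for w in points]   # trapezoid rule for dw = i w dtheta
    matrix = [[(1.0 if i == j else 0.0) + kernel(points[i], points[j]) * weights[j]
               for j in range(nodes)] for i in range(nodes)]
    return determinant(matrix)


t_param = 0.5
approx = fredholm_det_circle(lambda v, w: t_param / (w - t_param * v) / (2j * cmath.pi))
print(approx)
```

The Airy kernel itself is not implemented here, since the Python standard library has no Airy function.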

6.1 Fredholm determinant asymptotics

We consider a Beta RWRE \((X_t)_{t\geqslant 0}\) with parameters \(\alpha , \beta >0\). For a parameter \(\theta >0\), we define the quantity

and the function \(I:\big (\frac{\alpha -\beta }{\alpha +\beta }, 1\big ) \rightarrow \mathbb {R}_{>0}\) such that

where \(\Psi \) is the digamma function (\(\Psi (z)= \Gamma '(z)/\Gamma (z)\)) and \(\Psi _1\) is the trigamma function (\(\Psi _1(z)=\Psi '(z)\)). Moreover, we define a real-valued \(\sigma (\theta )>0\) such that

The fact that we can choose \(\sigma (\theta )>0\) is proved in Lemma 6.3. We will see that a critical point asymptotic analysis of the Fredholm determinant indicates that for all \(\theta >0\) and \(\alpha , \beta >0\),

However, due to increased technical challenges in the general parameter case, we presently prove rigorously only the case of Theorem 6.2, which deals with \(\alpha =\beta =1\) (i.e. when the \(B_{x,t}\) variables are distributed uniformly on (0, 1)).

When \(\alpha =\beta \) the expressions for \(x(\theta )\) and \(I(x(\theta ))\) simplify. We find that

and

so that the rate function I is simply the function \(I:x \mapsto 1-\sqrt{1-x^2}\). We also find that for \(\alpha =\beta =1\),

where \(x=x(\theta )\).
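As a sanity check on \(I(x)=1-\sqrt{1-x^2}\): for \(\alpha =\beta \), the annealed walk is the simple symmetric random walk (each step is \(+1\) with probability \(\mathbb {E}[B]=1/2\), independently, because the environment is i.i.d. in space and time), so Jensen's inequality \(\mathbb {E}[\log P]\leqslant \log \mathbb {E}[P]\) suggests that the quenched rate function should dominate the annealed Cramér rate function \(\frac{1}{2}\big ((1+x)\log (1+x)+(1-x)\log (1-x)\big )\). The short check below (ours, not part of the proof) confirms this on a grid of \(x\in (0,1)\), and that both functions behave like \(x^2/2\) near 0.

```python
import math


def quenched_rate(x):
    """Rate function I(x) = 1 - sqrt(1 - x^2) of the Beta RWRE for alpha = beta = 1."""
    return 1 - math.sqrt(1 - x * x)


def annealed_rate(x):
    """Cramer rate function of the simple symmetric random walk, for 0 <= x < 1."""
    return 0.5 * ((1 + x) * math.log1p(x) + (1 - x) * math.log1p(-x))


for x in (0.1, 0.5, 0.9):
    print(x, quenched_rate(x), annealed_rate(x))
```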

Theorem 6.2

For \(0<\theta <1/2\) and \(\alpha =\beta =1\), we have that

The rest of this section is devoted to the proof of Theorem 6.2. Most arguments in the proof apply equally to any parameters \(\alpha , \beta \), except the deformation of contours, which is valid only for small \(\theta \), and Lemma 6.5, which is only valid for \(\alpha =\beta =1\). We expect the result to hold for general \(\alpha , \beta , \theta \), but do not attempt to extend the proof to that case.

We first observe that we do not need to invert the Laplace transform of \(P(t, x(\theta )t)\). Setting \(u=-e^{t I(x(\theta )) - t^{1/3} \sigma (\theta ) y}\), one has that

This convergence is justified by Lemma 4.1.39 in [4], provided that the limit is a continuous probability distribution function, and we see later that this is the case. Hence, in order to prove Theorem 6.2, one has to take the \(t\rightarrow \infty \) limit of the Fredholm determinant (6) in the statement of Theorem 2.13.

The asymptotic analysis of this Fredholm determinant proceeds by steepest descent analysis, and is very close to the analysis presented in the recent papers [2, 5, 6, 17, 24, 38], which deal with similar kernels. Let us assume for the moment that the contour \(C_0\) is a circle around 0 with very small radius. One can make the change of variables \(v+s=z\) in the kernel \(K_u^{\mathrm {RW}}\) so that, with the value of u that we choose,

and the contour for z can be chosen as \(1/2+i\mathbb {R}\). The kernel can be rewritten

where

The function h governs the asymptotic behaviour of the Fredholm determinant of \(K_u^{\mathrm {RW}}\). The principle of the steepest-descent method is to deform the integration contours (both the contour in the definition of \(K_u^{\mathrm {RW}}\) and the \(\mathbb {L}^2\) contour) so that they pass through a critical point of the function h. Then one needs to prove that only the integration around the critical point contributes in the limit, and that all terms can be approximated by their Taylor expansions close to the critical point.

The first derivatives of h are

and

One readily sees that the expressions for \(x(\theta )\) and \(I(x(\theta ))\) in (39) and (40) are precisely chosen so that \(h'(\theta )=h''(\theta )=0\). Let us give an expression of \(h'\) in terms of \(\theta \):

Expressions are much simpler in the case \(\alpha =\beta =1\). In that case we have

In order to understand the behaviour of \({{\mathrm {Re}}}[h]\) around the critical point \(\theta \), we also need the sign of the third derivative of h.

Lemma 6.3

For any \(\alpha , \beta , \theta >0\), we have that \(h'''(\theta )>0 \).

Lemma 6.3 is proved in Sect. 6.2.

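By the definition of \(\sigma (\theta )\) in (41), \(\sigma (\theta )=\left( \frac{h'''(\theta )}{2}\right) ^{1/3}\). Then, since \(h'(\theta )=h''(\theta )=0\), a Taylor expansion gives, for z in a neighbourhood of \(\theta \),
\[h(z) = h(\theta ) + \frac{\sigma (\theta )^3}{3}(z-\theta )^3 + \mathcal {O}\big ((z-\theta )^4\big ).\]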

We now deform the integration contour in (46) and the Fredholm determinant contour, which was initially a small circle around 0. Let \(\mathcal {D}_{\theta }\) be the vertical line \(\mathcal {D}_{\theta } = \lbrace \theta + iy :y\in \mathbb {R}\rbrace \), and \(\mathcal {C}_{\theta }\) be the circle centred at 0 with radius \(\theta \). This deformation of contours leaves the Fredholm determinant \(\det (I+K_u^{\mathrm {RW}})\) unchanged provided that:

- All the poles of the inverse of the sine function in (46) that correspond to \(z-w\in \mathbb {Z}_{>0}\) stay to the right of \(\mathcal {D}_{\theta }\).
- We do not cross the pole of h at \(-\alpha -\beta \) when deforming the \(\mathbb {L}^2\) contour.

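Since \({{\mathrm {Re}}}[w]\geqslant -\theta \) for \(w\in \mathcal {C}_{\theta }\), the poles \(z=w+n\) with \(n\in \mathbb {Z}_{>0}\) satisfy \({{\mathrm {Re}}}[z]\geqslant 1-\theta \), which exceeds \(\theta \) as soon as \(\theta <1/2\). Moreover, the circle \(\mathcal {C}_{\theta }\) does not reach \(-\alpha -\beta \) as long as \(\theta <\alpha +\beta \). Hence, we assume that \(\theta <\min (\alpha +\beta , \frac{1}{2})\), so that both conditions above are satisfied.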
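The two conditions can also be checked mechanically. The sketch below samples \(w=\theta e^{i\phi }\) on \(\mathcal {C}_{\theta }\) and uses that \({{\mathrm {Re}}}[z-w]=\theta (1-\cos \phi )\) for \(z\in \mathcal {D}_{\theta }\). The function name and the sampling resolution are illustrative choices, not part of the paper.

```python
import math


def contours_admissible(theta: float, alpha: float, beta: float, n_phi: int = 2001) -> bool:
    """Check the two conditions for deforming to D_theta and C_theta.

    For z = theta + iy on D_theta and w = theta e^{i phi} on C_theta,
    Re(z - w) = theta (1 - cos phi) lies in [0, 2 theta], so z - w can never be a
    positive integer when 2 theta < 1. The disc of radius theta swept by the
    deformation avoids the point -alpha-beta when theta < alpha + beta.
    """
    if theta <= 0:
        return False
    max_re = max(theta * (1.0 - math.cos(2.0 * math.pi * k / (n_phi - 1)))
                 for k in range(n_phi))
    no_sine_pole = max_re < 1.0
    avoids_h_pole = theta < alpha + beta
    return no_sine_pole and avoids_h_pole


if __name__ == "__main__":
    print(contours_admissible(0.4, 1.0, 1.0), contours_admissible(0.5, 1.0, 1.0))
```

At \(\theta =1/2\) the point \(w=-\theta \) gives \(z-w=1\) for \(y=0\). The first condition therefore fails exactly at the boundary of the assumed range.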

Lemma 6.4

For any parameters \(\alpha , \beta >0\), and \(\theta >0\), the contour \(\mathcal {D}_{\theta }\) is steep-descent for the function \({{\mathrm {Re}}}[h]\) in the sense that \(y\mapsto {{\mathrm {Re}}}[h(\theta +iy)]\) is decreasing for y positive and increasing for y negative.

Lemma 6.4 is proved in Sect. 6.2. What prevents us from proving Theorem 6.2 for all parameters \(\alpha , \beta >0\) is the steep-descent property of the contour \(\mathcal {C}_{\theta }\).

Lemma 6.5

Assume \(\alpha =\beta =1\). Then the contour \(\mathcal {C}_{\theta }\) is steep-descent for the function \(-{{\mathrm {Re}}}[h]\), in the sense that \(\phi \mapsto {{\mathrm {Re}}}[h(\theta e^{i\phi })]\) is increasing for \(\phi \in (0, \pi )\) and decreasing for \(\phi \in (-\pi , 0)\).

Lemma 6.5 is proved in Sect. 6.2. Proving Lemma 6.5 for arbitrary parameters \(\alpha , \beta \) turns out to be computationally difficult, and we do not pursue that here.

In the rest of this section, although the arguments are quite general and do not depend on the values of the parameters, we assume that \(\alpha =\beta =1\) so that we can use Lemma 6.5. Let us show that the only part of the contours that contributes to the limit of the Fredholm determinant as t tends to infinity is a neighbourhood of the critical point \(\theta \).

Proposition 6.6

Let \(B(\theta , \epsilon )\) be the ball of radius \(\epsilon \) centred at \(\theta \). We denote by \(\mathcal {C}_{\theta }^{\epsilon }\) (resp. \(\mathcal {D}_{\theta }^{\epsilon }\)) the part of the contour \(\mathcal {C}_{\theta }\) (resp. \(\mathcal {D}_{\theta }\)) inside the ball \(B(\theta , \epsilon )\). Then, for any \(\epsilon >0\),

where \(K^{\mathrm {RW}}_{y, \epsilon }\) is defined by the integral kernel

Proof

By Lemmas 6.4 and 6.5, there exists a constant \(C>0\) such that if \(v\in \mathcal {C}_{\theta }\) and \(z\in \mathcal {D}_{\theta }{\setminus } \mathcal {D}_{\theta }^{\epsilon }\), then

and consequently

Since \(\frac{\pi }{\sin (\pi (z-w))\Gamma (z)}\) has exponential decay in the imaginary part of z, the contribution of the integration over \(\mathcal {D}_{\theta }{\setminus } \mathcal {D}_{\theta }^{\epsilon }\) is negligible (by dominated convergence). Thus, \(K^{\mathrm {RW}}_{y}(v,v')\) and \(K^{\mathrm {RW}}_{y, \epsilon }(v,v')\) have the same limit when t goes to infinity.

By Lemmas 6.4 and 6.5, there exists another constant \(C'>0\) such that if \(v\in \mathcal {C}_{\theta }{\setminus }\mathcal {C}_{\theta }^{\epsilon }\) and \(z\in \mathcal {D}_{\theta }\), then

Consider the Fredholm determinant expansion

The \(k\)th term can be decomposed as the sum of the integral over \((\mathcal {C}_{\theta }^{\epsilon })^k\) and the integral over \((\mathcal {C}_{\theta })^k{\setminus }(\mathcal {C}_{\theta }^{\epsilon })^k\). The second contribution goes to zero, since a factor \(e^{-C't}\) can be factored out of its integrand. Finally, the proposition follows by applying dominated convergence again to the Fredholm series expansion, which is absolutely summable by Hadamard’s bound. \(\square \)
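Hadamard’s bound is what makes the Fredholm series absolutely summable, both here and in the proof of Proposition 6.7. If all entries of a \(k\times k\) matrix are bounded by M, then \(\vert \det \vert \leqslant k^{k/2}M^k\). The \(k\)th term of the series is therefore at most \(\ell ^k k^{k/2}M^k/k!\), where \(\ell \) bounds the total mass of the integration measure, and by Stirling’s formula this decays super-exponentially. The sketch below checks the inequality on random matrices and evaluates the logarithm of that bound. Here M and \(\ell \) are generic placeholders, not constants from the proof.

```python
import math
import random


def det(a):
    """Determinant by Gaussian elimination with partial pivoting (a: list of rows)."""
    m = [list(row) for row in a]
    n = len(m)
    result = 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[piv][col] == 0.0:
            return 0.0
        if piv != col:
            m[col], m[piv] = m[piv], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for j in range(col, n):
                m[r][j] -= factor * m[col][j]
    return result


def hadamard_bound(k: int, M: float) -> float:
    """Hadamard: |det A| <= k^(k/2) M^k for a k x k matrix with |A_ij| <= M."""
    return k ** (k / 2) * M ** k


def log_fredholm_term_bound(k: int, M: float, length: float) -> float:
    """log of length^k k^(k/2) M^k / k!, a bound on the k-th Fredholm term
    when |K| <= M and the integration measure has total mass `length`."""
    return k * math.log(length * M) + 0.5 * k * math.log(k) - math.lgamma(k + 1)


if __name__ == "__main__":
    rng = random.Random(0)
    a = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(6)]
    print(abs(det(a)), "<=", hadamard_bound(6, 1.0))
    # The log-bound first grows, then decreases without bound: the series converges.
    print([round(log_fredholm_term_bound(k, 2.0, 3.0), 1) for k in (10, 100, 400)])
```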

Let us rescale the variables around \(\theta \) by the change of variables

The Fredholm determinant of \(K^{\mathrm {RW}}_{y, \epsilon }\) on the contour \(\mathcal {C}_{\theta }^{\epsilon }\) equals the Fredholm determinant of the rescaled kernel

acting on the contour \(\mathcal {C}_{\theta }^{t^{1/3}\epsilon }\).

It is more convenient to change the contours once more. For \(L\in \mathbb {R}_{>0}\), define the contour

where \(\phi \) is some angle \(\phi \in (\pi /6, \pi /2)\) to be chosen later. We also set

The contour \(\mathcal {C}_{\theta }^{\epsilon }\) is an arc of a circle and crosses \(\theta \) vertically. For \(\epsilon \) small enough, one can replace the contour \(\mathcal {C}_{\theta }^{\epsilon }\) by \(\mathcal {C}^L\) without changing the Fredholm determinants. The values of L and \(\phi \) have to be chosen so that the endpoints of the contours coincide.

We define the rescaled contour for the variable \(\tilde{z}\) by

and we set \(\mathcal {D}:=i\mathbb {R}\).

Proposition 6.7

We have that

where \(K_y\) is defined by its integral kernel

where the contour for z is a wedge-shaped contour consisting of two rays going to infinity in the directions \(e^{-i\pi /3}\) and \(e^{i\pi /3}\), chosen so that it does not intersect \(\mathcal {C}\).

The proof of Proposition 6.7 follows the lines of [24, Proposition 6.4] (see also [5, Proposition 6.13]).

Proof

We take the limit of the Fredholm determinant \(\det (I+K^t_{y, \epsilon })\) of the rescaled kernel. Let us first examine pointwise convergence. Under the scalings above,

Now we justify that one can take the pointwise limit. We take \(\mathcal {D}^{\epsilon t^{1/3}}\) as the integration contour for the \(\tilde{z}\) variable. Since \(\tilde{z}\) is pure imaginary, \(\exp (\tilde{z}^3/3 - \tilde{z} y \sigma (\theta ))\) has modulus one. Moreover for fixed \(\tilde{v}\) and \(\tilde{v}'\), we can find a constant \(C'''>0\) such that

This means that the integrand of \(K^t_{y, \epsilon }(\tilde{v}, \tilde{v}')\) has quadratic decay, which is enough to apply dominated convergence. It follows that

Now we need to prove that one can exchange the limit with the Fredholm determinant. By Taylor expansion, there exists a constant \(C>0\) such that for \(\vert v-\theta \vert <\epsilon \),

Since \(\vert v-\theta \vert <\epsilon \), we have that \(Ct\vert v-\theta \vert ^4<C\epsilon \vert \tilde{v}\vert ^3\). Hence, for \(\epsilon \) small enough, one can factor out \(\exp (-C'\tilde{v}^3/3)\) for some \(C'>0\). Using the same bound as before for the factors in the integrand of \(K^t_{y, \epsilon }\), we obtain constants \(C', C''>0\) such that

Since \(\exp (- \tilde{v}^3)\) decays exponentially in the directions \(\infty e^{\pm i\phi }\) for \(\phi \in (\pi /2, 5\pi /6)\), for \(\epsilon \) small enough the integrand of the rescaled kernel decays exponentially and we can apply dominated convergence. Now recall that we can take \(\epsilon \) arbitrarily small in Proposition 6.6. Thus, the Fredholm expansion of \(K^t\) is integrable and summable (using Hadamard’s bound), and dominated convergence implies that the limit of \( \det (I+K^{\mathrm {RW}}_{y, \epsilon })_{\mathbb {L}^2(\mathcal {C}_{\theta }^{\epsilon })}\) is the Fredholm determinant of an operator \(\tilde{K}_y\) acting on \(\mathcal {C}\), defined by the integral kernel

Since the integrand of \(\tilde{K}_y\) has quadratic decay on the tails of the contour \(\mathcal {D}^{\infty }\), one can freely deform this contour so that it goes from \(\infty e^{-i\pi /3}\) to \(\infty e^{i\pi /3}\) without intersecting \(\mathcal {C}^{\infty }\). Finally, another change of variables eliminates the dependence on \(\sigma (\theta )\) in the integrand, and one recovers the Fredholm determinant of \(K_y\) as claimed. \(\square \)
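Both decay statements used in this proof reduce to the sign of \(\cos (3\psi )\), since along the ray \(re^{i\psi }\) one has \({{\mathrm {Re}}}[(re^{i\psi })^3]=r^3\cos (3\psi )\). The following check confirms two facts. First, \(\exp (z^3/3)\) decays along \(e^{\pm i\pi /3}\). Second, \(\exp (-\tilde{v}^3)\) decays along \(e^{\pm i\psi }\) for \(\psi \in (\pi /2,5\pi /6)\). The sampling grid is an arbitrary choice.

```python
import cmath
import math


def re_cube(psi: float, r: float = 1.0) -> float:
    """Re[(r e^{i psi})^3], which equals r^3 cos(3 psi)."""
    return ((r * cmath.exp(1j * psi)) ** 3).real


# Wedge contour for z: along e^{+-i pi/3}, z^3 = -r^3, so |exp(z^3/3)| = exp(-r^3/3).
wedge_ok = all(re_cube(s * math.pi / 3) < 0 for s in (1, -1))

# Directions for v-tilde: for psi in (pi/2, 5 pi/6) and its mirror image -psi,
# cos(3 psi) > 0, so |exp(-v^3)| = exp(-r^3 cos(3 psi)) tends to 0 along the ray.
grid = [math.pi / 2 + (math.pi / 3) * (j + 0.5) / 100 for j in range(100)]
v_ok = all(re_cube(s * psi) > 0 for psi in grid for s in (1, -1))

if __name__ == "__main__":
    print(wedge_ok, v_ok)
```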

Using the \(\det (I+AB)=\det (I+BA)\) trick, one can reformulate the Fredholm determinant of \(K_y\) as the Fredholm determinant of an operator on \(\mathbb {L}^2(y, \infty )\) (see e.g. [6, Lemma 8.6]). It turns out that

and this concludes the proof of Theorem 6.2.
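The Tracy–Widom GUE distribution function has the standard representation \(F_{\mathrm {GUE}}(y)=\det (I-K_{\mathrm {Ai}})_{\mathbb {L}^2(y,\infty )}\), where \(K_{\mathrm {Ai}}(x,x')=\frac{\mathrm {Ai}(x)\mathrm {Ai}'(x')-\mathrm {Ai}'(x)\mathrm {Ai}(x')}{x-x'}\) is the Airy kernel. A minimal pure-Python sketch evaluates it numerically with Bornemann’s quadrature (Nyström-type) method. The method truncates \((y,\infty )\) to \((y,8)\), discretizes with Gauss–Legendre nodes, and takes an ordinary determinant. The Airy function is computed from its Maclaurin series, which we assume is accurate enough on \([-10,8]\). The truncation point 8 and the choice of 60 nodes are ours, not the paper’s.

```python
import math

AI0 = 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))    # Ai(0)
AIP0 = -1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))   # Ai'(0)


def airy(x):
    """(Ai(x), Ai'(x)) from the Maclaurin series; assumed adequate for -10 <= x <= 8."""
    x3 = x ** 3
    a, b, c, d = 1.0, x, x * x / 2.0, 1.0   # current terms of f, g, f', g'
    f, g, fp, gp = a, b, c, d               # Ai = Ai(0) f + Ai'(0) g
    for k in range(1, 120):
        a *= x3 / ((3 * k - 1) * (3 * k))
        b *= x3 / ((3 * k) * (3 * k + 1))
        d *= x3 / ((3 * k) * (3 * k - 2))
        f, g, gp = f + a, g + b, gp + d
        if k >= 2:
            c *= x3 / ((3 * k - 1) * (3 * k - 3))
            fp += c
    return AI0 * f + AIP0 * g, AI0 * fp + AIP0 * gp


def gauss_legendre(n):
    """n-point Gauss-Legendre nodes and weights on [-1, 1] via Newton's method."""
    nodes, weights = [], []
    for i in range(1, n + 1):
        x = math.cos(math.pi * (i - 0.25) / (n + 0.5))  # initial guess for the i-th root
        for _ in range(100):
            p_prev, p = 1.0, x                          # P_0(x), P_1(x)
            for k in range(2, n + 1):
                p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
            dp = n * (x * p - p_prev) / (x * x - 1.0)   # P_n'(x)
            step = p / dp
            x -= step
            if abs(step) < 1e-15:
                break
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights


def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [list(row) for row in m]
    n, result = len(m), 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[piv][col] == 0.0:
            return 0.0
        if piv != col:
            m[col], m[piv] = m[piv], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for j in range(col, n):
                m[r][j] -= factor * m[col][j]
    return result


def tracy_widom_gue(s, n=60, upper=8.0):
    """F_GUE(s) = det(I - K_Ai) on L^2(s, inf), truncated to (s, upper) (Bornemann's method)."""
    if s >= upper:
        return 1.0
    xs, ws = gauss_legendre(n)
    half = (upper - s) / 2.0
    pts = [s + half * (x + 1.0) for x in xs]   # nodes mapped to (s, upper)
    sq = [math.sqrt(half * w) for w in ws]     # square roots of the mapped weights
    ai = [airy(p) for p in pts]
    mat = []
    for i in range(n):
        row = []
        for j in range(n):
            if i == j:  # diagonal of the Airy kernel: Ai'(x)^2 - x Ai(x)^2
                k_ij = ai[i][1] ** 2 - pts[i] * ai[i][0] ** 2
            else:
                k_ij = (ai[i][0] * ai[j][1] - ai[i][1] * ai[j][0]) / (pts[i] - pts[j])
            row.append((1.0 if i == j else 0.0) - sq[i] * k_ij * sq[j])
        mat.append(row)
    return det(mat)


if __name__ == "__main__":
    for s in (-3.0, -2.0, -1.0, 0.0, 1.0):
        print(s, tracy_widom_gue(s))
```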

6.2 Precise estimates and steep-descent properties

The following series representations will be useful:

is valid for z and w away from the negative integers. We also use

Proof of Lemma 6.3

Given the expression (47) for the first derivative of h, we have

where \(\Psi _2\) is the second polygamma function (\(\Psi _2(z)=\frac{\mathrm {d}}{\mathrm {d}z}\Psi _1(z))\). Hence \(h'''(\theta )>0\) is equivalent to

which is equivalent to

The trigamma function \(\Psi _1\) is positive and decreasing on \(\mathbb {R}_{>0}\), and \(\Psi _2\) is negative and increasing. One recognizes in (57) difference quotients of the function \(\Psi _2 \circ \Psi _1^{-1}\). Thus, it is enough to prove that \( \Psi _2 \circ \Psi _1^{-1}\) is strictly concave. The derivative of \( \Psi _2 \circ \Psi _1^{-1}\) is \( \Psi _3\circ \Psi _1^{-1} / \Psi _2\circ \Psi _1^{-1}\). Since \(\Psi _1\), and hence \(\Psi _1^{-1}\), is decreasing, it is enough to show that \(\Psi _3/\Psi _2\) is increasing. As \((\Psi _3/\Psi _2)'=(\Psi _4\Psi _2-\Psi _3^2)/\Psi _2^2\), this is equivalent to \(\Psi _4 \Psi _2 >\Psi _3 \Psi _3\).
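The reduction above can be sanity-checked numerically; this is not a proof. The sketch assumes the standard series \(\Psi _n(x)=(-1)^{n+1}n!\sum _{k\geqslant 0}(x+k)^{-n-1}\), valid for \(n\geqslant 1\) and \(x>0\). The series is truncated after 100 terms, and the tail is estimated by Euler–Maclaurin. The grid of x values is arbitrary.

```python
import math


def hurwitz_sum(m: int, x: float, k_direct: int = 100) -> float:
    """sum_{k>=0} (x + k)^(-m) for m >= 2 and x > 0.

    Direct summation of the first k_direct terms plus an Euler-Maclaurin estimate
    (integral, half end term, first Bernoulli correction) for the tail.
    """
    s = sum((x + k) ** (-m) for k in range(k_direct))
    u = x + k_direct
    return s + u ** (1 - m) / (m - 1) + 0.5 * u ** (-m) + m / 12.0 * u ** (-m - 1)


def polygamma(n: int, x: float) -> float:
    """Psi_n(x) = (-1)^(n+1) n! sum_{k>=0} (x + k)^(-(n+1)), for n >= 1 and x > 0."""
    return (-1) ** (n + 1) * math.factorial(n) * hurwitz_sum(n + 1, x)


if __name__ == "__main__":
    grid = [0.05 * j for j in range(1, 401)]  # x in (0, 20]
    p1 = [polygamma(1, x) for x in grid]
    p2 = [polygamma(2, x) for x in grid]
    print("Psi_1 > 0, decreasing:", all(v > 0 for v in p1) and all(a > b for a, b in zip(p1, p1[1:])))
    print("Psi_2 < 0, increasing:", all(v < 0 for v in p2) and all(a < b for a, b in zip(p2, p2[1:])))
    print("Psi_4 Psi_2 > Psi_3^2:", all(polygamma(4, x) * p > polygamma(3, x) ** 2 for x, p in zip(grid, p2)))
```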

For all \(n\geqslant 1\), one has the integral representation

Thus for \(x>0\), \(\Psi _4(x) \Psi _2(x) >\Psi _3(x) \Psi _3(x)\) is equivalent to

By symmetrizing the right-hand side, the inequality is equivalent to

which is true for all \(x>0\).
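Assume the integral representation used at this step is the standard one, \(\Psi _n(x)=(-1)^{n+1}\int _0^{\infty }\frac{t^n e^{-xt}}{1-e^{-t}}\,\mathrm {d}t\) for \(x>0\) and \(n\geqslant 1\). Write \(w_x(t)=e^{-xt}/(1-e^{-t})>0\) for the positive weight. The symmetrization can then be spelled out as follows:

```latex
\begin{align*}
\Psi_4(x)\Psi_2(x)-\Psi_3(x)^2
 &= \int_0^\infty\!\!\int_0^\infty \left(t^4 s^2 - t^3 s^3\right) w_x(t)\, w_x(s)\,\mathrm{d}t\,\mathrm{d}s\\
 &= \frac{1}{2}\int_0^\infty\!\!\int_0^\infty \left(t^4 s^2 + t^2 s^4 - 2t^3 s^3\right) w_x(t)\, w_x(s)\,\mathrm{d}t\,\mathrm{d}s\\
 &= \frac{1}{2}\int_0^\infty\!\!\int_0^\infty t^2 s^2 (t-s)^2\, w_x(t)\, w_x(s)\,\mathrm{d}t\,\mathrm{d}s \;>\; 0 .
\end{align*}
```

The signs in the first line come from \((-1)^{5}(-1)^{3}=1\) for \(\Psi _4\Psi _2\) and \(((-1)^{4})^2=1\) for \(\Psi _3^2\). The second line uses the symmetry \(t\leftrightarrow s\). The final integrand is non-negative and positive off the diagonal \(t=s\).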

Proof of Lemma 6.4

By symmetry, it is enough to treat only the case \(y>0\). Hence we show that if \(y>0\), then \({{\mathrm {Im}}}[h'(\theta +iy)]>0.\) Using (47), \({{\mathrm {Im}}}[h'(\theta +iy)]>0\) is equivalent to

Using the series representations (54), Eq. (59) is equivalent to

We have that

and

It yields that (60) can be rewritten as

where

The inequality (61) is equivalent to

Using Cauchy’s mean value theorem, there exist \(\theta _1\in (\theta , \theta +\alpha )\) and \(\theta _2\in (\theta +\alpha , \theta +\alpha +\beta )\) such that (62) is equivalent to

Finally, this last inequality is always true for \(\theta _1<\theta _2\) since we have the series of equivalences

The inequality (63) is satisfied because \(\theta _1<\theta _2\).

Proof of Lemma 6.5 in the case \(\alpha =\beta =1\)

We have that

Using formula (48), we have

We have to show that for any \(\phi \in (0, \pi )\), \({{\mathrm {Re}}}[i\theta e^{i\phi } h'(\theta e^{i\phi })]>0\). We can drop the factor \(\theta /((\theta +1)^2+\theta ^2)\), which is positive. Thus, we have to show that

One can see that the inequality is equivalent to

which is always true for \(\phi \in (0,\pi )\).

6.3 Relation to extreme value theory

Let us now state a corollary of Theorem 6.2. Let \((X^{(1)}_t)_{t\in \mathbb {Z}_{\geqslant 0}}, \dots , (X^{(N)}_t)_{t\in \mathbb {Z}_{\geqslant 0}}\) be N independent random walks drawn in the same Beta environment (Definition 2.1). We denote by \(\mathcal {P}\) and \(\mathcal {E}\) the measure and expectation associated with the product of the environment probability space and the probability space of the N random walks. For a function f of the environment and of the N random walk paths, we have \(\mathcal {E}[ f] = \mathbb {E}[ \mathsf {E}^{\otimes N}[f] ]\) and \(\mathcal {P}(A) = \mathcal {E}[\mathbbm {1}_{A}]\).
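To make the product measure \(\mathcal {P}\) concrete, the sketch below first samples an environment, then computes the quenched law of \(X_t\) exactly by forward recursion, and finally runs N walkers in that same environment. Definition 2.1 is not reproduced in this section. We assume the convention that a walker at site y at time s jumps to \(y+1\) with probability \(B_{s,y}\) and to \(y-1\) otherwise, where the \(B_{s,y}\) are i.i.d. Beta\((\alpha ,\beta )\). All function names are hypothetical.

```python
import random


def sample_environment(t: int, alpha: float, beta: float, rng: random.Random) -> dict:
    """B[(s, y)] ~ Beta(alpha, beta), i.i.d. over the space-time sites reachable
    from 0 before time t (assumed convention: step +1 w.p. B, -1 otherwise)."""
    return {(s, y): rng.betavariate(alpha, beta)
            for s in range(t) for y in range(-s, s + 1, 2)}


def quenched_law(env: dict, t: int) -> dict:
    """Quenched law P^omega(X_t = y) of a walker started at 0, by forward recursion."""
    law = {0: 1.0}
    for s in range(t):
        nxt = {}
        for y, mass in law.items():
            b = env[(s, y)]
            nxt[y + 1] = nxt.get(y + 1, 0.0) + mass * b
            nxt[y - 1] = nxt.get(y - 1, 0.0) + mass * (1.0 - b)
        law = nxt
    return law


def walkers_in_same_environment(env: dict, t: int, n: int, rng: random.Random) -> list:
    """Positions at time t of n walkers, independent given the common environment."""
    finals = []
    for _ in range(n):
        y = 0
        for s in range(t):
            y += 1 if rng.random() < env[(s, y)] else -1
        finals.append(y)
    return finals


if __name__ == "__main__":
    rng = random.Random(2024)
    t, x = 60, 0.5
    env = sample_environment(t, 1.0, 1.0, rng)
    law = quenched_law(env, t)
    tail = sum(p for y, p in law.items() if y >= x * t)  # quenched P(X_t >= x t)
    finals = walkers_in_same_environment(env, t, 2000, rng)
    print(tail, sum(f >= x * t for f in finals) / len(finals), max(finals))
```

The empirical fraction of walkers beyond \(xt\) estimates the quenched tail probability in that one environment. The maximum of the walkers' positions is the quantity studied in Corollary 6.8.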

Corollary 6.8

Assume \(\alpha =\beta =1\). We set \(N=\lfloor e^{ct}\rfloor \) for some \(c\in \left( \frac{2}{5},1\right) \), and \(x_0 = I^{-1}(c) = \sqrt{1-(1-c)^2}\). Then we have

where

Remark 6.9

The condition \(c>2/5\) is equivalent to \(x_0>4/5\). It is also equivalent to the condition \(\theta <1/2\) in Theorem 6.2. Hence, it is most likely purely technical.
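For \(\alpha =\beta =1\), inverting the formula \(x_0=I^{-1}(c)=\sqrt{1-(1-c)^2}\) of Corollary 6.8 gives \(I(x)=1-\sqrt{1-x^2}\) for \(x\in [0,1]\). The short sketch below checks the threshold of Remark 6.9 (\(c=2/5\) corresponds to \(x_0=4/5\)) and the value \(I(1)=1\) used in Remark 6.11.

```python
import math


def rate_function(x: float) -> float:
    """I(x) = 1 - sqrt(1 - x^2) for alpha = beta = 1 (inverse of x_0 = sqrt(1 - (1 - c)^2))."""
    return 1.0 - math.sqrt(1.0 - x * x)


def x0_of_c(c: float) -> float:
    """x_0 = I^{-1}(c) = sqrt(1 - (1 - c)^2), as in Corollary 6.8."""
    return math.sqrt(1.0 - (1.0 - c) ** 2)


if __name__ == "__main__":
    print(x0_of_c(0.4))         # threshold value of x_0 in Remark 6.9
    print(rate_function(1.0))   # upper end I(1) of the range of c in Remark 6.11
```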

Remark 6.10

We expect that Corollary 6.8 holds more generally for arbitrary parameters \(\alpha , \beta >0\). One would have the following result:

Let \(N=\lfloor e^{ct}\rfloor \) such that there exists \(x_0 >\frac{\alpha -\beta }{\alpha +\beta }\) and \(\theta _0>0\) with \(x(\theta _0)=x_0\) and \(I(x(\theta _0)) = c\). Then

where \(I'(x)= \frac{\mathrm {d}}{\mathrm {d} x}I(x)\).

Remark 6.11

The range of the parameter c in Corollary 6.8 is a priori \(c\in (0,1)\). The upper bound is precisely 1 because we are in the case \(\alpha =\beta =1\); in general, the upper bound is I(1), which is always finite. It is natural that c is bounded. Indeed, for all i we have \(X_t^{(i)}\leqslant t\) (because the random walk performs \(\pm 1\) steps), and for c very large, with high probability there exists some i such that \(X_t^{(i)}=t\). Hence, one expects that for c large enough, the maximum \(\max _{i=1, \dots , \lfloor e^{ct}\rfloor }\lbrace X^{(i)}_t \rbrace \) is exactly t with probability going to 1 as t goes to infinity, and there cannot be random fluctuations in that case.

If one considers N simple symmetric random walks (corresponding to the annealed model), the threshold is \(\log (2)\) (i.e. for \(c>\log (2)\), \((1-(1/2)^t)^N\rightarrow 0\) and for \(c<\log (2)\), \((1-(1/2)^t)^N\rightarrow 1\)). One can calculate the large deviations rate function \(I^{a}\) for the simple random walk (see Footnote 1) and check that \(I^a(1)=\log (2)\).
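The annealed threshold comes from \(P(X_t=t)=2^{-t}\) for a single simple symmetric walk. The probability that none of \(N=\lfloor e^{ct}\rfloor \) independent walks reaches t is therefore \((1-2^{-t})^N\approx \exp (-e^{(c-\log 2)t})\). The sketch below evaluates this in log space. It also evaluates the standard Cramér rate function of the \(\pm 1\) walk, \(I^a(x)=\frac{1+x}{2}\log (1+x)+\frac{1-x}{2}\log (1-x)\); we assume this is the function meant in the footnote, whose text is not reproduced here.

```python
import math


def prob_no_walker_reaches_t(c: float, t: int) -> float:
    """(1 - 2^{-t})^N with N = floor(e^{ct}): probability that none of N independent
    simple symmetric walks satisfies X_t = t. Computed in log space to avoid underflow."""
    n_walkers = math.floor(math.exp(c * t))
    return math.exp(n_walkers * math.log1p(-(2.0 ** -t)))


def annealed_rate(x: float) -> float:
    """Cramer rate function of the simple symmetric walk:
    ((1+x)/2) log(1+x) + ((1-x)/2) log(1-x), with the convention 0 log 0 = 0."""
    def plogq(p: float, q: float) -> float:
        return 0.0 if p == 0.0 else p * math.log(q)
    return plogq((1 + x) / 2, 1 + x) + plogq((1 - x) / 2, 1 - x)


if __name__ == "__main__":
    t = 200
    for c in (0.6, 0.8):  # log(2) ~ 0.693 lies in between
        print(c, prob_no_walker_reaches_t(c, t))
    print(annealed_rate(1.0), math.log(2))
```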

Proof of Corollary 6.8

This proof relies on Theorem 6.2, which deals only with \(\alpha =\beta =1\). However, the same deduction would hold for general parameters, so we write the proof using the general expressions. From Theorem 6.2, we have that, writing

then \(\chi _t\) weakly converges to the Tracy–Widom GUE distribution, provided x can be written \(x=x(\theta )\) with \(0<\theta <1/2\). For any realization of the environment, we have on the one hand

On the other hand, setting \(x = x_0 + \frac{t^{-2/3}\sigma (x_0) y}{I'(x_0)}\), we have that

By Taylor expansion, we have as t goes to infinity

and

Hence, the R.H.S. of (66) is approximated by

Choosing \(x_0\) such that \(I(x_0)= c\), we have

The second equality relies on a Taylor expansion of the logarithm around 1. The third equality is a consequence of (66) and (68). Since \(\chi _t\) converges in distribution, \(t^{-1/3}(1+\chi _t)\) converges in probability to zero by Slutsky’s theorem. Hence, the term \(\mathcal {O}(t^{-1/3}(1+\chi _t))\) inside the exponential converges in probability to zero. Recalling that \(I(x_0)=c\), we have