Abstract

We introduce the strict-weak polymer model, and show the KPZ universality of the free energy fluctuation of this model for a certain range of parameters. Our proof relies on the observation that the discrete time geometric \(q\)-TASEP model, studied earlier by Borodin and Corwin, scales to this polymer model in the limit \(q\rightarrow 1\). This allows us to exploit the exact results for geometric \(q\)-TASEP to derive a Fredholm determinant formula for the strict-weak polymer, and in turn perform rigorous asymptotic analysis to show KPZ scaling and GUE Tracy–Widom limit for the free energy fluctuations. We also derive moments formulae for the polymer partition function directly by Bethe ansatz, and identify the limit of the free energy using a stationary version of the polymer model.

1 Introduction and Results

In this paper we introduce the exactly solvable strict-weak polymer model on the two-dimensional square lattice, and investigate some of its features. This brings the number of known exactly solvable directed lattice polymer models to two. (Subsequent to this paper, a generalization of this model called the Beta Polymer was discovered and analyzed in [2].) The strict-weak model introduced here differs from the earlier studied log-gamma polymer [9, 10, 15] in the definition of the admissible polymer paths. The strict-weak model uses gamma-distributed weights on the edges (or vertices, depending on the formulation chosen) while the log-gamma polymer uses inverse gamma weights.

We show that the strict-weak model belongs to the Kardar–Parisi–Zhang (KPZ) universality class by deriving the Tracy–Widom GUE limit distribution for the fluctuations of the free energy. This result is based on the fact that, under an appropriate scaling of parameters and scaling and centering of the variables, the geometric \(q\)-TASEP particle system converges to the strict-weak polymer. This allows us to write a Fredholm determinant formula for the Laplace transform of the strict-weak polymer partition function.

We also derive an integral formula for the moments of the partition function via the rigorous replica method. Finally, we show that this model has a stationary version where the ratios of nearest-neighbor pairs of partition functions are gamma-distributed. We use the stationary model to give an alternative derivation of the explicit limiting free energy density, which also arises in the proof of the free energy fluctuations.

The Tracy–Widom limit of the strict-weak polymer model was proved independently and concurrently by O’Connell and Ortmann [14]. They derived the Fredholm determinant formula (our Theorem 1.7) in a different way that complements our work. They use previous work of [10] on the geometric RSK correspondence to relate the strict-weak polymer to a particular Whittaker process. Then, using an identity from [10] and a variant of an argument from [9], they arrive at the result of Theorem 1.7.

We turn to the definition of the model and the main results. Our convention is to define the model on a two-dimensional \((t,n)\) lattice. The variable \(t\) represents discrete time, with the axis pointing to the right. The variable \(n\) is a discrete space variable, with the axis pointing upward.

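Recall that a nonnegative random variable \(X\) has a Gamma distribution with shape parameter \(k>0\) and scale parameter \(\theta >0\), and write \(X\sim \text{ Gamma }(k,\theta )\), if

\[ \mathbb {P}(X\in dx)=\frac{1}{\Gamma (k)\theta ^{k}}\,x^{k-1}e^{-x/\theta }\,dx, \qquad x>0. \]

The Laplace transform of a Gamma distributed variable \(X\) is given by

\[ \mathbb {E}\big [e^{-uX}\big ]=(1+\theta u)^{-k}, \qquad u\ge 0. \]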

When \(k=1\), the Gamma distribution specializes to the exponential distribution.

Definition 1.1

A strict-weak polymer path \(\pi \) is a lattice path which at each lattice site \((t,n)\) is allowed to

- jump horizontally to the right from \((t,n)\) to \((t+1,n)\);
- or jump diagonally up and to the right from \((t,n)\) to \((t+1,n+1)\).

The partition function with parameters \(k,\theta >0\) for the ensemble of strict-weak polymers from \((0,1)\) to \((t,n)\) is given by

\[ Z(t,n)=\sum _{\pi :(0,1)\rightarrow (t,n)}\ \prod _{e\in \pi }d_{e}, \]

where the product is over all the horizontal and diagonal unit segments in the path \(\pi \), and

- \(d_{e}=1\) if \(e\) is a diagonal unit segment;
- \(d_{e}\) is an independent \(\text{ Gamma }(k,\theta )\) distributed random variable if \(e\) is a horizontal unit segment.

The free energy of the strict-weak polymer model is \(\log Z(t,n)\).
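To make Definition 1.1 concrete, the following Python sketch evaluates the sum over admissible paths by brute force. It is only an illustration: the function name `partition_function_by_paths` and the callback `weight(s, m)`, which returns the weight of the horizontal edge from \((s,m)\) to \((s+1,m)\), are hypothetical choices of ours and not notation from the paper. A path from \((0,1)\) to \((t,n)\) is determined by which of its \(t\) steps are diagonal, so with unit weights the sum simply counts \(\binom{t}{n-1}\) paths.

```python
from itertools import combinations
from math import comb


def partition_function_by_paths(t, n, weight):
    """Sum over strict-weak paths from (0,1) to (t,n) of the product of edge weights.

    weight(s, m) is the weight of the horizontal edge from (s, m) to (s+1, m);
    diagonal edges carry weight 1. (Illustrative helper, not from the paper.)
    """
    diagonals_needed = n - 1
    if diagonals_needed < 0 or diagonals_needed > t:
        return 0.0  # no admissible path reaches (t, n)
    total = 0.0
    # A path is fixed by the set of time steps at which it jumps diagonally.
    for diag_steps in combinations(range(t), diagonals_needed):
        diag = set(diag_steps)
        height, prod = 1, 1.0
        for s in range(t):
            if s in diag:
                height += 1                  # diagonal step, weight 1
            else:
                prod *= weight(s, height)    # horizontal step leaving (s, height)
        total += prod
    return total


# With all horizontal weights equal to 1 the partition function counts paths.
print(partition_function_by_paths(5, 3, lambda s, m: 1.0), comb(5, 2))
```

The enumeration is exponential in \(t\) and is meant only for checking small cases by hand.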

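The partition functions of the strict-weak polymer system satisfy the recursive relation

\[ Z(t,n)=Y(t,n)\,Z(t-1,n)+Z(t-1,n-1), \tag{1.2} \]

where \(Y(t,n)\) are i.i.d. \(\text{ Gamma }(k,\theta )\) random variables. This relation can be easily derived by splitting the paths ending at \((t+1,n)\) according to their last step and observing that

\[ Z(t+1,n)=d_{f}\sum _{\pi :(0,1)\rightarrow (t,n)}\ \prod _{e\in \pi }d_{e}+\sum _{\pi :(0,1)\rightarrow (t,n-1)}\ \prod _{e\in \pi }d_{e}, \]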

where \(f\) is the horizontal edge from \((t,n)\) to \((t+1,n)\), and therefore from the definition \(d_{f}\sim \text{ Gamma }(k,\theta )\).

The requirement that the polymer paths all start from \((0,1)\) means that we consider the delta initial data

\[ Z(0,n)=\mathbf {1}_{n=1}. \tag{1.3} \]

Furthermore, for any point \((t,1)\) with \(t\ge 0\), there is only one admissible polymer path (the straight path) from \((0,1)\) to \((t,1)\), and the total weight it collects is the product of \(t\) i.i.d. \(\text{ Gamma }(k,\theta )\) random variables, namely

\[ Z(t,1)=\prod _{s=1}^{t}Y(s,1). \tag{1.4} \]

The recursive relation (1.2), the initial condition (1.3), and the boundary condition (1.4) together determine the partition function \(Z(t,n)\) for any \(t>0\) and \(n>1\). As an example, one can easily see, either from the definition or from this recursive relation, that \(Z(2,2)\) is a sum of two i.i.d. \(\text{ Gamma }(k,\theta )\) random variables, which by the additivity property of the Gamma distribution implies that \(Z(2,2)\sim \text{ Gamma }(2k,\theta )\).
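The recursion can be run directly on a computer. The sketch below assumes that (1.2) has the form \(Z(t,n)=Y(t,n)Z(t-1,n)+Z(t-1,n-1)\), which is the form implied by the variables \(Y_\varepsilon (t,n)\) discussed after Theorem 1.4, together with \(Z(0,n)=\mathbf {1}_{n=1}\) and the convention \(Z(t,0)=0\). The function name and the dictionary layout are our own illustrative choices. It uses `random.gammavariate(alpha, beta)` from the Python standard library, whose second argument is the scale parameter.

```python
import random


def simulate_partition_functions(t_max, n_max, k, theta, seed=0):
    """Sample Z(t,n) for 0 <= t <= t_max, 0 <= n <= n_max via the recursion.

    Returns (Z, Y): Z[(t, n)] are partition functions, Y[(t, n)] the
    Gamma(k, theta) weights used at each step. (Illustrative helper.)
    """
    rng = random.Random(seed)
    Y = {}
    # Delta initial data: only the starting site (0, 1) carries mass.
    Z = {(0, n): (1.0 if n == 1 else 0.0) for n in range(n_max + 1)}
    for t in range(1, t_max + 1):
        Z[(t, 0)] = 0.0  # no paths go below height 1
        for n in range(1, n_max + 1):
            Y[(t, n)] = rng.gammavariate(k, theta)  # shape k, scale theta
            Z[(t, n)] = Y[(t, n)] * Z[(t - 1, n)] + Z[(t - 1, n - 1)]
    return Z, Y


Z, Y = simulate_partition_functions(3, 3, k=2.0, theta=0.5, seed=1)
# Z(2,2) is the sum of the two horizontal weights met by the two admissible paths.
print(Z[(2, 2)], Y[(1, 1)] + Y[(2, 2)])
```

In this sketch \(Z(2,2)=Y(1,1)+Y(2,2)\), which is the sum of two independent Gamma weights described in the text.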

The main result of this paper is the KPZ universality for the strict-weak polymer model, for sufficiently large \(\kappa \) where \(t=\kappa n\). The largeness of \(\kappa \) seems to be only a technical requirement to simplify the asymptotic analysis.

Definition 1.2

Recall the digamma function \(\Psi (x) := [\log \Gamma ]'(x)\). Given parameters \(k>0\) and \(\kappa \ge 1\) such that there exists a unique solution \(\bar{t} \in (0,1/2)\) to the equation

\[ \Psi '(\bar{t})-\kappa \Psi '(k+\bar{t})=0, \]

we define numbers

Lemma 4.1 ensures that if \(\kappa \) is sufficiently large, the solution \(\bar{t} \in (0,1/2)\) exists and is unique. Note that though \(\bar{f}_{k,\theta ,\kappa }\) depends on \(\theta \), \(\bar{g}_{k,\kappa }\) does not depend on \(\theta \) (as the notation indicates).

Theorem 1.3

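There exists \(\kappa ^{*}=\kappa ^{*}(k)>0\) such that the strict-weak polymer free energy with parameters \(k,\theta >0\) and \(\kappa >\kappa ^{*}\) has limiting fluctuation distribution given by

\[ \lim _{n\rightarrow \infty }\mathbb {P}\left( \frac{\log Z(\kappa n,n)-n\bar{f}_{k,\theta ,\kappa }}{n^{1/3}}\le r\right) =F_{GUE}\Big (\big (\tfrac{\bar{g}_{k,\kappa }}{2}\big )^{-1/3}\, r\Big ), \]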

where \(\bar{f}_{k,\theta ,\kappa }\) and \(\bar{g}_{k,\kappa }\) are defined in Definition 1.2, and \(F_{GUE}\) is the GUE Tracy–Widom distribution function.

The proof is given in Sect. 4. Besides describing the fluctuations of the free energy, this theorem also proves that (in the parameter range considered) \(\bar{f}_{k,\theta ,\kappa }\) represents the free energy law of large numbers. In Sect. 7 we provide a different means (applicable for all parameter choices) to identify the free energy law of large numbers as

Though this appears different from the earlier expression for \(\bar{f}_{k,\theta ,\kappa }\) in Definition 1.2, it is readily confirmed that they are, in fact, the same.

The main observation behind the above theorem is a connection between the strict-weak polymer and the discrete time geometric \(q\)-TASEP introduced and studied in [3]. Under suitable centering and scaling, the fluctuations of geometric \(q\)-TASEP particle positions converge weakly to the strict-weak polymer free energies, as \(q\rightarrow 1\).

Recall that the \(N\)-particle discrete time geometric \(q\)-TASEP with jump parameter \(\alpha \in (0,1)\) is an interacting particle system with particle locations on \(\mathbb {Z}\) labeled by

\[ X_{N}(t)<X_{N-1}(t)<\cdots <X_{1}(t). \]

In discrete time \(t\in \mathbb {Z}_{\ge 0}\), particles jump according to the parallel update rule:

Here \(\text{ gap }_{n}(t):=X_{n-1}(t)-X_{n}(t)-1\) for \(n>1\), and \(\text{ gap }_{1}(t):=\infty \). The jump rates are given by

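where the \(q\)-Pochhammer symbols are defined as

\[ (a;q)_{n}:=\prod _{i=0}^{n-1}(1-aq^{i}),\qquad (a;q)_{\infty }:=\prod _{i=0}^{\infty }(1-aq^{i}). \]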

We will consider the step initial condition, where, for \(n\ge 1\),

\[ X_{n}(0)=-n. \tag{1.6} \]

We study a particular scaling limit of the fluctuations of \(X_{n}(t)\), namely the function \(F^{\varepsilon }(t,n)\) defined via

under the scaling where

There are two ways (that we know of) to motivate this scaling. The first, which is most in line with the approach we pursue herein, is that under this scaling one readily sees that the moment formulas for geometric \(q\)-TASEP converge to those of the strict-weak polymer (cf. the end of Sect. 5). The second motivation requires a little more explanation, which we briefly describe here. Macdonald processes [4] are measures on interlacing partitions which enjoy a number of exact formulas owing to the integrable structure of the Macdonald symmetric functions. A special case (corresponding to setting the Macdonald \(t\) parameter to zero) yields \(q\)-Whittaker processes. There exist Markovian dynamics on these interlacing partitions which preserve the class of \(q\)-Whittaker processes, leading to a deterministic evolution on the parameters describing the fixed time marginals of the dynamics. In [4] a continuous time dynamic related to the so-called Plancherel specialization is introduced, and continuous time \(q\)-TASEP arises as a marginal on the smallest parts of the partitions. As \(q\rightarrow 1\), [4] shows that the Plancherel specialized \(q\)-Whittaker process converges to the Plancherel Whittaker process of [13] and \(q\)-TASEP converges to the free energy evolution for the O’Connell-Yor semi-discrete directed polymer. The pure alpha specialization of the \(q\)-Whittaker process is likewise preserved by discrete time Markov dynamics [11] and has discrete time geometric \(q\)-TASEP as its marginal on the smallest parts. The pure alpha specialized \(q\)-Whittaker process converges [4, 6] (under scalings related to those above) to the alpha specialized Whittaker process of [10]. As explained in [14], the analog of the smallest part for the pure alpha Whittaker process is related to the strict-weak polymer free energy.

Methods coming from Whittaker processes [10] provide a route to write down a Laplace transform formula for the strict-weak polymer partition function which can be turned (using identities similar to those of [9]) into the Fredholm determinant formula presented herein. This is the approach taken in [14]. We do not rely upon the connection to these Macdonald/\(q\)-Whittaker/Whittaker processes in the approach we utilize here, though certainly this was an important motivation in our pursuit.

The following result demonstrates that the limit as \(\varepsilon \rightarrow 0\) of \(e^{F^{\varepsilon }(t,n)}\) satisfies the same recursive relation as \(Z(t,n)\) where the parameter \(k\) is related to \(m_1\) via \(k=m_{1}/\theta \). The proof is given in Sect. 2, though it is also briefly sketched below.

Theorem 1.4

For \(t\ge 0\) and \(n\ge 1\), the sequence of random variables \(F^{\varepsilon }(t,n)\) converges weakly to a limit as \(\varepsilon \rightarrow 0\), denoted as \(F(t,n)\), and one has the recursive relation

for every \(t\ge 0\) and \(n\ge 1\), where \(Y(t,n)\) are i.i.d. Gamma distributed random variables with shape parameter \(k=m_{1}/\theta \) and scale parameter \(\theta \).

Thus we see that \(e^{F(t,n)}\) satisfies the same recursive relation as the polymer partition function (1.2). When \(t=0\), by the step initial condition (1.6), we have \(F^{\varepsilon }(0,n)=(1-n)\log \varepsilon ^{-1}\), therefore \(e^{F(0,n)}=\lim _{\varepsilon \rightarrow 0}e^{(1-n)\log \varepsilon ^{-1}}= \mathbf {1}_{n=1}\), which coincides with the initial condition (1.3) for the polymer partition function. Also, one can show (see Lemma 2.1) that \(e^{F^\varepsilon (1,1)}\) converges to a Gamma\((k,\theta )\) random variable. Since the first particle jumps independently at each step, \(e^{F^\varepsilon (t,1)}\) converges to a product of \(t\) i.i.d. Gamma\((k,\theta )\) random variables, so it also coincides with the boundary condition (1.4).

Therefore, as a consequence of the above theorem, we obtain the convergence of the fluctuation of the geometric \(q\)-TASEP to the polymer free energy. In fact, the convergence of the process, or joint convergence, follows readily from the above theorem and the independence of each jump. The independence of jumps implies independence of the random variables \(Y_\varepsilon (t,n):=(e^{F^\varepsilon (t,n)}-e^{F^\varepsilon (t-1,n-1)})/e^{F^\varepsilon (t-1,n)}\), as well as independence of their limits \(Y(t,n)\). Since the recursive relation is linear in these \(Y_\varepsilon (t,n)\) or \(Y(t,n)\) random variables, each of the variables \(e^{F^\varepsilon (t,n)}\) or \(e^{F(t,n)}=Z(t,n)\) can be written as a sum of products of different \(Y_\varepsilon \)’s or \(Y\)’s. Consequently, weak convergence of \(\{Y_\varepsilon (t,n)\}_{t\ge 0,n>0} \rightarrow \{Y(t,n)\}_{t\ge 0,n>0}\) implies that of the process \(\{e^{F^\varepsilon (t,n)}\}_{t\ge 0,n>0} \rightarrow \{Z(t,n)\}_{t\ge 0,n>0}\) (as can be seen, for instance, from considering characteristic functions). Summarizing, we have the following result.

Corollary 1.5

As \(\varepsilon \rightarrow 0\), the processes \(\{e^{F^\varepsilon (t,n)}\}_{t\ge 0,n>0}\) converge in distribution to the process \(\{Z(t,n)\}_{t\ge 0,n>0}\) of strict-weak polymer partition functions.

Given this convergence result, we can apply the exact formula for the \(e_q\)-Laplace transform of the particle location fluctuations of the geometric \(q\)-TASEP to obtain an exact formula for the strict-weak polymer. The following Fredholm determinant formula for the geometric \(q\)-TASEP is from [3, Theorem 2.4].

Theorem 1.6

For every \(\zeta \in \mathbb {C}\backslash \mathbb {R}_{+}\),

where \(C_{1}\) is a small positively oriented circle containing \(1\) and \(K_{\zeta }:L^{2}(C_{1})\rightarrow L^{2}(C_{1})\) is given by its integral kernel

From the above formula, we take the \(q\rightarrow 1\) limit according to the scaling (1.7) and (1.8) and obtain the following Fredholm determinant formula for strict-weak polymers; the proof of the following formula is given in Sect. 3.

Theorem 1.7

For \(u\in \mathbb {C}\) such that \(Re(u)>0\), let \(t=\kappa n\) for parameter \(\kappa \ge 1\). Then one has

where \(C_{0}\) is a small positively oriented circle containing \(0\) and \(K_{u}:L^{2}(C_{0})\rightarrow L^{2}(C_{0})\) has kernel

We use this Fredholm determinant formula to prove Theorem 1.3.

We remark that there is a zero-temperature limit of our model as \(k\rightarrow 0\) previously studied in [12]. In fact as \(k\rightarrow 0\), the family of random variables \(-k\log d_e\) converge to a family of independent exponential random variables, and the model converges weakly to a directed first passage percolation model (i.e. a problem of minimizing the total weights along paths).
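The appearance of the exponential law can be checked by a short computation, sketched here for fixed \(s>0\) and using only the small-argument behavior of the Gamma distribution function. For \(X\sim \text{ Gamma }(k,\theta )\),

\[ \mathbb {P}(-k\log X>s)=\mathbb {P}\big (X<e^{-s/k}\big )=\frac{1}{\Gamma (k)}\int _{0}^{e^{-s/k}/\theta }y^{k-1}e^{-y}\,dy. \]

On the range of integration \(e^{-y}=1+o(1)\) as \(k\rightarrow 0\), since the upper limit \(e^{-s/k}/\theta \) tends to zero. Hence the integral equals \(\frac{1}{k}\big (e^{-s/k}/\theta \big )^{k}(1+o(1))\), and

\[ \mathbb {P}(-k\log X>s)=\frac{e^{-s}\,\theta ^{-k}}{\Gamma (k+1)}\,(1+o(1))\longrightarrow e^{-s}\qquad (k\rightarrow 0), \]

which is the tail of a rate-one exponential random variable. The normalization to rate one is a consequence of this particular computation and is not stated explicitly in the text above.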

1.1 Outline

Section 2 contains the proof of Theorem 1.4. In Sect. 3 we prove Theorem 1.7. In Sect. 4 we carry out rigorous asymptotic analysis based on the formula in Theorem 1.7 and prove Theorem 1.3. In Sect. 5 we apply the replica method to derive moment formulas for the polymer partition function. Finally, in Sect. 6 we introduce a stationary version of the polymer model and in Sect. 7 we identify the free energy law of large numbers using this stationary model.

2 Recursive Relation: Proof of Theorem 1.4

The proof of Theorem 1.4 follows from the definition of the discrete time geometric \(q\)-TASEP and certain known limits of \(q\)-deformed functions. We will first demonstrate the limit of the fluctuation of the first particle.

Lemma 2.1

The sequence of random variables \(\exp (F^{\varepsilon }(1,1))\) converges in distribution as \(\varepsilon \rightarrow 0\) to a Gamma distributed random variable with shape parameter \(k=m_{1}/\theta \) and scale parameter \(\theta \).

Proof

By the definition (1.7) of the quantity \(F^{\varepsilon }(1,1)\), for any positive real number \(r\), one has \(e^{F^{\varepsilon }(1,1)} =r\) if and only if

Since the left side above is always a non-negative integer, \(F^{\varepsilon }(1,1)\) can only take values \(r\) in a discrete set such that the right side above is also a non-negative integer, namely \(\log r\in \log \varepsilon ^{-1} -\varepsilon \theta \,\mathbb {Z}_+\). For every such \(r\), by the definition of the discrete time geometric \(q\)-TASEP,

where \(\mathbf {p}_{\alpha }\) is defined in (1.5). The exponential factor

By [4, Corollary 4.1.10], if we define

then for any \(\delta >0\), there exists \(\varepsilon _{0}>0\) such that if \(\varepsilon <\varepsilon _{0}\) one has

where \(\mathcal {A}(\varepsilon )\) is an \(\varepsilon \) dependent constant (whose value is not important since in our case it will cancel out). Note that in our case,

Therefore for any \(\delta >0\), if \(\varepsilon \) is sufficiently small

As for the \(r\)-independent factor in the numerator (which will be a normalization factor), by the definition of the \(q\)-Gamma function

\[ \Gamma _{q}(x):=(1-q)^{1-x}\,\frac{(q;q)_{\infty }}{(q^{x};q)_{\infty }}, \tag{2.5} \]

we can take \(x=m_{1}/\theta \), so that

And one has

and therefore for \(\varepsilon \) sufficiently small

Substitute (2.4) and (2.6) into (2.2), and we obtain that the quantity (2.2) is arbitrarily close to

for \(\varepsilon \) sufficiently small.

In general, if \(F^\varepsilon (1,1)\) is a random variable valued in \(a_\varepsilon + \varepsilon \theta \mathbb {Z}\), where \(a_\varepsilon \) is an \(\varepsilon \) dependent shift, and for any \(s\in \mathbb {R}\), one has \((\varepsilon \theta )^{-1}\mathbb P(F^\varepsilon (1,1)=\hat{s})\rightarrow f(s)\) as \(\varepsilon \rightarrow 0\) where \(\hat{s} =\max \{s'\le s| s'\in a_\varepsilon + \varepsilon \theta \mathbb {Z}\}\), then \(F^\varepsilon (1,1)\) converges weakly as \(\varepsilon \rightarrow 0\) to a limit \(F\), which takes values in the continuum and has \(f\) as its density function. This can be proved, for instance, via approximating \(\mathbb P(F^\varepsilon (1,1)>t)\) by \(\int _t^{\infty } (\theta \varepsilon )^{-1} \mathbb P(F^\varepsilon (1,1)=\hat{s})\,ds\) up to a small error which goes to \(0\) as \(\varepsilon \rightarrow 0\). This integral converges to \(\int _t^\infty f(s)\,ds\) by point-wise convergence and applying Fatou’s lemma on both \([t,\infty )\) and \((-\infty ,t]\), and the fact that a density function integrates to \(1\) over \((-\infty ,\infty )\).

In our case, note that \((1-e^{-\varepsilon \theta })/(\varepsilon \theta )\rightarrow 1\) as \(\varepsilon \rightarrow 0\), and that \(k=m_1/\theta \). Therefore for any positive real number \(r\), letting \(s=\log r\),

So \(F^\varepsilon (1,1)\) converges to a limiting random variable \(F\) and its density function \(\mathbb P(F\in [s,s+ds))\) is equal to \(f(s)\,ds\) with \(f\) defined above. Since \(ds=\frac{1}{r}dr\), one concludes that \(e^{F^\varepsilon (1,1)}\) converges weakly to \(e^{F(1,1)}\) which is a Gamma\((k,\theta )\) distributed random variable. \(\square \)
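As a numerical sanity check on Lemma 2.1, the following Python sketch computes the law of the first particle's jump at small \(\varepsilon \) and compares the first two moments of \(e^{F^\varepsilon (1,1)}\) with those of a \(\text{ Gamma }(k,\theta )\) variable. Everything specific here is an assumption on our part rather than a formula quoted from the text: we take the first particle's jump law to be \(\alpha ^{j}(\alpha ;q)_{\infty }/(q;q)_{j}\) as in [3], the scaling to be \(q=e^{-\varepsilon \theta }\), \(\alpha =e^{-\varepsilon m_{1}}\), and \(e^{F^\varepsilon (1,1)}=\varepsilon ^{-1}q^{j}\) when the particle jumps by \(j\), which is consistent with the lattice \(\log \varepsilon ^{-1}-\varepsilon \theta \,\mathbb {Z}_+\) appearing in the proof. The function name is hypothetical.

```python
import math


def first_particle_pmf(eps, theta, m1, j_max):
    """Jump law of the first particle, assuming p(j) = alpha^j (alpha;q)_inf / (q;q)_j.

    Assumed scaling (our reading, not quoted from the paper):
    q = exp(-eps*theta), alpha = exp(-eps*m1).
    """
    q = math.exp(-eps * theta)
    alpha = math.exp(-eps * m1)
    # (alpha; q)_infinity, truncated once the factors are numerically equal to 1.
    # For much smaller eps this product underflows and should be done in log space.
    poch_inf, term = 1.0, alpha
    while term > 1e-18:
        poch_inf *= 1.0 - term
        term *= q
    pmf = []
    weight = poch_inf  # value of alpha^j (alpha;q)_inf / (q;q)_j at j = 0
    for j in range(j_max + 1):
        pmf.append(weight)
        weight *= alpha / (1.0 - q ** (j + 1))  # step from j to j + 1
    return q, alpha, pmf


eps, theta, m1 = 0.01, 1.0, 2.0          # so k = m1 / theta = 2
q, alpha, pmf = first_particle_pmf(eps, theta, m1, j_max=4000)
total = sum(pmf)
# Moments of e^{F} = q^j / eps under the assumed scaling.
mean = sum(p * q ** j / eps for j, p in enumerate(pmf))
second = sum(p * (q ** j / eps) ** 2 for j, p in enumerate(pmf))
print(total, mean, second)
# Gamma(k, theta) has mean k*theta = m1 and second moment k(k+1)theta^2 = m1(m1+theta).
```

Under these assumptions the \(q\)-binomial theorem gives the exact mean \(\varepsilon ^{-1}(1-\alpha )\), which tends to \(m_{1}=k\theta \) as \(\varepsilon \rightarrow 0\), in agreement with the lemma.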

Since the geometric \(q\)-TASEP is defined in terms of the probability of the distance that the \(n\)-th particle jumps forward from time \(t-1\) to time \(t\), given the gap between the \(n\)-th particle and the \((n-1)\)-st particle at time \(t-1\), it is natural to consider the distribution of \(F^{\varepsilon }(t,n)\) given the values of \(F^{\varepsilon }(t-1,n)\) and \(F^{\varepsilon }(t-1,n-1)\). This motivates the following proof.

Proof of Theorem 1.4

We compute the probability that \(e^{F^{\varepsilon }(t,n)}=r\) conditioned on \(e^{F^{\varepsilon }(t-1,n)}=v\) and \(e^{F^{\varepsilon }(t-1,n-1)}=u\). Observe that we seek to study the probability that

conditioned on

where \(r\), \(u\) and \(v\) take discrete values such that the right hand sides of the above identities are integers. This means that at time \(t-1\), the gap between the \((n-1)\)-st and \(n\)-th particle is given by

and one asks for the probability that the \(n\)-th particle jumps by the distance

Therefore, the conditional probability

is equal to (the jump rates \(\mathbf {p}_{\alpha }\) for \(q\)-TASEP are defined in (1.5)):

For the exponential factor, one has (recall that \(k=m_{1}/\theta \))

As in the proof of Lemma 2.1, by [4, Corollary 4.1.10], if we define

then for any \(\delta >0\), if \(\varepsilon \) is sufficiently small then one has (2.3). Using this fact, some of the factors in (2.8) can be written as

Therefore for any \(\delta >0\), if \(\varepsilon \) is sufficiently small

Similarly, one has (now with \(y=-\log (u/v)-\log \theta ^{-1} +\varepsilon \theta \))

For the other two factors in (2.8), one has

The factor \((e^{-\varepsilon \theta },e^{-\varepsilon \theta })_{m_{1}/\theta -1}\) in the denominator will only contribute as a normalization factor. To compute it, we use the \(q\)-Gamma function (2.5). With \(q=e^{-\varepsilon \theta }\) and \(x=m_{1}/\theta \), we have

Therefore,

By definition, the ratio of the other two factors in (2.9) is

Note that the set of admissible values of the conditioning variables \(u,v\) also depends on \(\varepsilon \), that is, \(\log u,\log v\in (t-(n-1))\log \varepsilon ^{-1}-\theta \varepsilon \mathbb {Z}_+\). In the interval \([u,ue^{\theta \varepsilon })\) there is only one admissible value of \(u\), and similarly for \(v\). This implies (combining the above analysis together)

Now we follow the same argument as in the proof of Lemma 2.1 about convergence of discrete valued random variables \(F^\varepsilon \) to continuum valued random variable \(F\). This gives the conditional probability of \(F(t,n)=s:=\log r\), conditioned on \(F(t-1,n-1)=\log u\) and \(F(t-1,n)=\log v\). Let \(w=(e^s-u)/v\), then \(ds=\frac{v}{r}dw\). Note that this factor \(\frac{v}{r}\) cancels with the factor \(\frac{r}{v}\) in the last line of (2.10). Therefore, we have that \(F^{\varepsilon }(t,n)\rightarrow F(t,n)\) and that \((e^{F(t,n)}-e^{F(t-1,n-1)})/e^{F(t-1,n)}\) are \(\text{ Gamma }(k,\theta )\) distributed. The recursive relation follows immediately. \(\square \)

3 Strict-Weak Fredholm Determinant Formula: Proof of Theorem 1.7

We prove the Fredholm determinant formula in Theorem 1.7 for the Laplace transform of the polymer partition function. Firstly, we show that under proper scalings, the left hand side of (1.9) goes to the Laplace transform of \(Z(t,n)=e^{F(t,n)}\). We scale the parameter as

and scale other parameters as in (1.7) and (1.8). Then we have

where

\[ e_{q}(x):=\frac{1}{\big ((1-q)x;q\big )_{\infty }} \]

is the \(q\)-exponential, and

Therefore noticing that \(e_{q}(x)\rightarrow e^{x}\) uniformly and \(\frac{\varepsilon \theta }{1-q}\rightarrow 1\) as \(\varepsilon \rightarrow 0\) and \(q\rightarrow 1\) under the scaling (1.8), we have, by Lemma 4.140 of [4] along with the convergence result of Corollary 1.5, that

As the next step, we study the limit of \(K_{\zeta }\) from (1.9) with

At first, we will not take care of describing contours and will only discuss pointwise convergence of the integrand.

Recalling the \(q\)-Gamma function from (2.5), we can write

Combining the above expressions, as well as noting the Jacobian factor \(\tfrac{dw}{dv} = q^v \log q\), we find that \(\det (1+K_{\zeta })_{L^2(C_1)} = \det (1+\tilde{K}_{\zeta })_{L^2(C_0)}\) where \(C_0\) is a small circle around the origin and the kernel \(\tilde{K}_{\zeta }\) is defined as

where

As \(\varepsilon \rightarrow 0\), observe that

Letting \(\tilde{z}=s+v\), and \(t=\kappa n\), the above considerations suggest that \(\tilde{K}_{\zeta }(v,v')\) converges to

where

If we can suitably strengthen the above pointwise convergence of the integrand of the kernel then we can deduce the convergence of the associated Fredholm determinants \(\det (1+K_{\zeta })\) to \(\det (1+K_{u})\). The proof of this convergence which we provide now is analogous to that in [4]. First of all, note that for any fixed compact subset \(D\) of \(-\frac{1}{2}+\mathrm{i}\mathbb {R}\), the convergence of the integrand of \(\tilde{K}_{\zeta }\) is uniform over \(s\in D\). This is due to the fact that the \(\Gamma _{q}\) function converges uniformly to the \(\Gamma \) function on compact domains away from poles (the remaining terms are easily seen to satisfy uniform convergence as well).
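The convergence \(\Gamma _{q}\rightarrow \Gamma \) is easy to observe numerically. The sketch below assumes the standard definition \(\Gamma _{q}(x)=(1-q)^{1-x}(q;q)_{\infty }/(q^{x};q)_{\infty }\) for real \(x>0\) and evaluates it in log space, since the infinite products themselves underflow in floating point when \(q\) is close to \(1\). The helper names are ours, and the check is restricted to real arguments, whereas the proof needs complex ones.

```python
import math


def log_q_gamma(x, q, tol=1e-18):
    """log Gamma_q(x) for real x > 0 and 0 < q < 1, using the product formula.

    The two infinite products are combined term by term and truncated once
    q^i < tol, where the remaining factors are numerically equal to 1.
    """
    total = (1.0 - x) * math.log(1.0 - q)
    qx = q ** x
    qi = 1.0  # current power q^i
    while qi > tol:
        # i-th factor of (q;q)_inf divided by i-th factor of (q^x;q)_inf
        total += math.log1p(-q * qi) - math.log1p(-qx * qi)
        qi *= q
    return total


def q_gamma(x, q):
    return math.exp(log_q_gamma(x, q))


for q in (0.9, 0.99, 0.999):
    print(q, q_gamma(2.5, q), math.gamma(2.5))
```

At integer arguments the product telescopes, for example \(\Gamma _{q}(3)=1+q\), which gives an exact check of the implementation.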

The following tail bound shows that the integrals in the \(s\) variables in the Fredholm expansion can be restricted to compact sets, as the contribution to the integrals from outside these compact sets can be bounded (uniformly in \(q\) near 1) arbitrarily close to zero by choosing large enough compact sets.

Lemma 3.1

Let \(D\subset \mathbb {C}\) be an arbitrary compact set containing the unit disk \(\{z:|z|<1\}\). For all \(\kappa \ge 1\), one has the following tail bound of \(h^{q}(s)\): there exist positive constants \(C,c\) such that for all \(s\in (-\frac{1}{2}+\mathrm{i}\mathbb {R})\backslash D\), all \(q\in (1/2,1)\), and all \(v,v'\in C_{0}\), the following bound holds

Proof

The factor \(\left| \frac{\pi }{\sin (-\pi s)}\right| \) decays exponentially in \(|Im(s)|\). The factors \((-\zeta )^{s},\Gamma _{q}(v),(1-q)^{\pm s},\Gamma _{q}(\frac{m_{1}}{\theta }+v)^{-1}\), \(\frac{q^v \log q}{q^{s}q^{v}-q^{v'}}\), and \(\Gamma _{q}(\frac{m_{1}}{\theta }+s+v)^{\kappa -1}\) can all be bounded by constants independent of \(q\) and \(|Im(s)|\). Therefore we only need to bound the quantity

Using the assumption \(\kappa \ge 1\), one has

for a constant \(C'\) independent of \(q\) and \(\big |Im(s)\big |\). Furthermore, writing \(s=-\frac{1}{2}+iy\) and using \(\Gamma _q (x+1)=[x]_q \Gamma _q(x)\) where \([x]_q\) is the \(q\)-number, one has

For \(s\in (-\frac{1}{2}+\mathrm{i}\mathbb {R})\backslash D\), the norm of the ratio of the two \(q\)-numbers is bounded by a constant uniformly in \(q \in (1/2,1)\). Furthermore, since \(v\in C_0\) and \(C_0\) is a sufficiently small circle around the origin, \(\frac{m_{1}}{\theta }+\frac{1}{2}+Re(v)\) and \(\frac{1}{2}+Re(v)\) are positive. Therefore we can apply [2, Lemma 2.7], which states that there exists a constant \(C''>0\) such that for all \(s\in (-\frac{1}{2}+\mathrm{i}\mathbb {R})\backslash D\),

Since this polynomially growing bound is dominated by the exponential decaying factor mentioned in the beginning of the proof, the desired tail bound holds. \(\square \)

The condition \(\kappa \ge 1\) of the previous lemma is only a very mild restriction. In fact, the partition function is zero for \(t<n-1\) by definition of the allowed polymer paths.

The following result together with Hadamard’s bound shows that it suffices to consider only a finite number of terms in the Fredholm expansion, as the contribution of the later terms can be bounded (uniformly in \(q\) near 1) arbitrarily close to zero by going out far enough in the expansion.

Lemma 3.2

There exists a constant \(C>0\) such that for all \(q\in (1/2,1)\), and all \(v,v'\in C_{0}\) one has \(|\tilde{K}_{\zeta }(v,v')|<C\).

Proof

For any compact domain \(D\), by Lemma 3.1 the integration over \(s\) outside \(D\) can be bounded uniformly in \(q,v,v'\). Inside \(D\) we use the uniform convergence and the fact that \(h^q(s)\) is bounded uniformly in \(q\) and \(v,v'\in C_0\). \(\square \)

It is now standard to combine the above estimates to show convergence of the Fredholm determinant expansions. The boundedness of \(\tilde{K}_{\zeta }\) (as well as \(K_{u}\)), compactness of the contour \(C_0\), and Hadamard’s inequality enable us to cut off the Fredholm determinant expansions after a finite number of terms with small error (going to zero as the number of terms increases). Then, using the exponential decay of \(h^q(s)\) as well as its uniform convergence to its pointwise limit, we arrive at the convergence of these finite Fredholm expansion terms to their limiting analogs, thus completing the proof of the theorem.

4 Asymptotic Analysis: Proof of Theorem 1.3

We start by observing that a suitable limit of the Laplace transform of \(Z(\kappa n,n)\) will give the asymptotic probability distribution of \(\log Z(\kappa n,n)\), centered and scaled. We then apply the same limit to the Fredholm determinant formula proved earlier for \(Z(\kappa n,n)\). Overall, the proof of Theorem 1.3 follows a similar line as in [9].

Let

where \(\bar{f}_{k,\theta ,\kappa }\) will be specified later. If for each \(r\in \mathbb {R}\) we have

where \(p_{k,\theta ,\kappa }(r)\) is a continuous probability distribution function, then, by Lemma 4.1.39 of [4],

On account of this, in order to prove Theorem 1.3 it suffices to show that for \(\bar{f}_{k,\theta ,\kappa }\) from (4.3) and \(\bar{g}_{k,\kappa }\) from (4.4),

In order to prove the limit in (4.1) we utilize the Fredholm determinant formula from Theorem 1.7. Towards this end, define

Then we can rewrite (3.6) as (recall the relation \(k=m_1/\theta \))

The derivatives of \(G\) are given by

Lemma 4.1

Given the parameters \(k>0\), for every \(\bar{z}>0\), provided that \(\kappa \) is sufficiently large, there exists \(\bar{t}\in (0,\bar{z})\) such that \(\Psi '(\bar{t})-\kappa \Psi '(k+\bar{t})=0\).

The value \(\bar{t}\) depends on \(k\) and \(\kappa \). We do not write this dependence explicitly, for simplicity of notation.

Proof

The function \(F(z)=\Psi '(z)-\kappa \Psi '(k+z)\) is continuous on \(z\in (0,\infty )\). If \(z\rightarrow 0\), then \(F(z)\rightarrow \infty \). On the other hand, as \(z\rightarrow \min (1/4,\bar{z})\), the quantity \(\Psi '(z)\) stays bounded while \(\kappa \Psi '(k+z)\) is bounded below by \(\kappa \) times a positive constant (depending on \(k\)), so \(F(z)\) is negative as long as \(\kappa \) is sufficiently large. Therefore, by continuity, there exists \(\bar{t}\in (0,\bar{z})\) such that \(F(\bar{t})=0\). \(\square \)
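The sign-change argument in this proof translates directly into a bisection search for \(\bar{t}\). The following Python sketch is one possible way to locate the root of \(\Psi '(z)-\kappa \Psi '(k+z)\) on \((0,1/2)\). Since the Python standard library has no trigamma function, we use a hand-written helper based on the recurrence \(\Psi '(x)=\Psi '(x+1)+1/x^{2}\) and the usual asymptotic series. Both function names and the sample values \(k=1\), \(\kappa =10\) are our own illustrative choices.

```python
import math


def trigamma(x):
    """Psi'(x) for real x > 0: shift x upward, then use the asymptotic series."""
    acc = 0.0
    while x < 10.0:
        acc += 1.0 / (x * x)   # Psi'(x) = Psi'(x + 1) + 1/x^2
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    # 1/x + 1/(2x^2) + 1/(6x^3) - 1/(30x^5) + 1/(42x^7) - 1/(30x^9)
    series = inv + 0.5 * inv2 + inv * inv2 * (
        1.0 / 6 - inv2 * (1.0 / 30 - inv2 * (1.0 / 42 - inv2 / 30)))
    return acc + series


def critical_point(k, kappa, upper=0.5, iters=200):
    """Bisection for a root of Psi'(z) - kappa * Psi'(k + z) on (0, upper)."""
    F = lambda z: trigamma(z) - kappa * trigamma(k + z)
    lo, hi = 1e-12, upper          # F(lo) > 0 because Psi'(z) ~ 1/z^2 near 0
    if F(hi) >= 0:
        raise ValueError("kappa too small: no sign change on (0, upper)")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if F(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


t_bar = critical_point(k=1.0, kappa=10.0)
print(t_bar, trigamma(t_bar) - 10.0 * trigamma(1.0 + t_bar))
```

Bisection only finds a root inside the bracket. It does not by itself establish the uniqueness that Definition 1.2 requires.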

Given a sufficiently large \(\kappa \), let \(\bar{t}=\bar{t}(k,\kappa )\) be such that \(G''(\bar{t})=0\). Lemma 4.1 guarantees that \(\bar{t}\) is small if we assume \(\kappa \) large. One can then choose

so as to make \(G'(\bar{t})=0\) as well. Let

then formally,

where \(h.o.t.\) stands for higher order terms. Substitute this into (4.2), and make changes of variables

then formally (and for the moment neglecting a discussion of contours), one has (note that \( \theta ^{\tilde{z}-v} \rightarrow 1\) as \(n\rightarrow \infty \))

where

The last Fredholm determinant formula (on suitable contours as defined below) is a well-known formula for the Tracy–Widom distribution \(F_{GUE}\Big (\big (\tfrac{\bar{g}_{k,\kappa }}{2}\big )^{-1/3}\, r\Big )\).

These discussions have formally demonstrated Theorem 1.3. In the following, we make this derivation rigorous. Note that in the above formal discussions we did not specify the contours. We start with precise definitions of the contours.

Definition 4.2

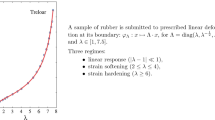

Define a contour \(\mathcal C_{v}\) leaving \(\bar{t}\) at an angle \(2\pi /3\) as a straight line segment from \(\bar{t}\) to \(\sqrt{3}\bar{t}i\), followed by a counterclockwise circular arc (centered at the origin) until \(-\sqrt{3}\bar{t}i\), then a straight line segment back to \(\bar{t}\). The contour \(\mathcal C_{v}\) is oriented counterclockwise. We also define a contour \(\mathcal C_{\tilde{z}}\) which consists of two rays, symmetric to each other about the real axis, leaving \(\bar{t}+n^{-1/3}\) at angles \(\pm \pi /3\). The contour \(\mathcal C_{\tilde{z}}\) is oriented so as to have increasing imaginary part. See Fig. 1.

We shift the contours for \(v,v'\) to the contour \(\mathcal C_v\) and shift the contour for \(\tilde{z}\) to the contour \(\mathcal C_{\tilde{z}}\). Provided that \(\kappa \) is sufficiently large so that \(\bar{t}\) is sufficiently small, these shifts do not cross the poles. More precisely, the integrand of the kernel \(K_u(v,v')\) contains in its denominator the factor \(\sin (\pi (v-\tilde{z}))\) which vanishes if \(v-\tilde{z}\in \mathbb {Z}\), and \(\Gamma (\tilde{z})\) which is zero at \(\tilde{z}=0,-1,-2,\ldots \), and \(\Gamma (k+v)\) which vanishes at \(v=-k,-k-1,-k-2,\ldots \), and finally the factor \(\tilde{z}-v'\). So as long as \(\bar{t}\) is small so that the contour \(\mathcal C_v\) is sufficiently small, and \(\mathcal C_v \) does not intersect \(\mathcal C_{\tilde{z}}\), these points are all avoided.

We will follow the idea from [5] to parametrize all other parameters (\(\kappa \), \( \bar{f}_{k,\theta ,\kappa }\) and \(\bar{g}_{k,\kappa }\)) by the value of the critical point \(\bar{t}\), and therefore write them as \(\kappa _{\bar{t}}\), \(\bar{f}_{\bar{t}}\) etc.

Lemma 4.3

Suppose that \(\kappa \) is sufficiently large. There exist constants \(c>0,\tilde{c}>0\) depending only on \(\kappa \) such that for all \(v\in \mathcal {C}_{v}\) satisfying \(|v-\bar{t}|<c\),

Furthermore, along the part of \(\mathcal {C}_{v}\) with \(|v-\bar{t}|\ge c\), one has \(Re(G(v)-G(\bar{t}))<c'\) for a strictly negative constant \(c'\).

Proof

By Taylor’s theorem and the fact that \(G'(\bar{t})=G''(\bar{t})=0\) and \(G'''(\bar{t})=-\bar{g}_{k, \kappa }\), one has

$$\begin{aligned} G(v)-G(\bar{t})=-\frac{\bar{g}_{k,\kappa }}{6}(v-\bar{t})^3+O\big (|v-\bar{t}|^4\big ), \end{aligned}$$

so there exist \(c>0,\tilde{c}>0\) depending only on \(\bar{t}\) (or equivalently on \(\kappa \)) such that within the small neighborhood \(|v-\bar{t}|<c\), the bound (4.5) holds.

To show the lemma for \(v\) on the other part of \(\mathcal C_v\), let \(\gamma _{\mathrm{E}}=-\Psi (1)=0.577\ldots \) be the Euler–Mascheroni constant. For \(v\) small, one has (see [9, Section 2])

Utilizing the expansions (4.7), and by the choices of \(\bar{t}\) and \(\bar{f}_{\bar{t}}=\bar{f}_{k,\theta ,\kappa }\) above,

We then first show that for \(\bar{t}\) small enough, there exists a constant \(c_1 \in (0,1)\) independent of \(\bar{t}\), such that if \(c\le |v-\bar{t}|<c_1\bar{t}\) then \(Re(G(v)-G(\bar{t}))\) is bounded by a strictly negative constant. In fact, one has

and if \(\bar{t} \rightarrow 0\) then

which is a negative constant, call it \(-b_1\); and for \(\bar{t}\) small enough, one has \(Re(\Psi ''(v))<0\) and its absolute value is much larger than \(b_1\Psi '(\bar{t})\). So one can choose a universal constant \(c_1\) (for instance \(c_1=1/2\)) such that \(Re(G'''(v))<-b_2\) whenever \( |v-\bar{t}|<c_1\bar{t}\), for some \(b_2>0\). Therefore by the integral form of the remainder in Taylor’s theorem and \(G'(\bar{t})=G''(\bar{t})=0\),

Noting that \((v-\bar{t})^3>0\) one obtains the claimed bound.

For the region \(|v-\bar{t}|\ge c_1\bar{t}\), expanding

and expanding \(\log \Gamma (k+\bar{t})\) around \(\bar{t}=0\) similarly, one has by the definition of \(G\)

where all the error terms \(O(v^n)\) have been replaced by \(O(\bar{t}^n)\) since every point \(v\in \mathcal C_v\) is of order \(\bar{t}\).
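The small-argument behaviour of \(\log \Gamma \) and \(\Psi \) behind these estimates can be checked numerically. The sketch below assumes the standard expansions \(\log \Gamma (v)=-\log v-\gamma _{\mathrm{E}}v+O(v^2)\) and \(\Psi (v)=-1/v-\gamma _{\mathrm{E}}+O(v)\), which may be written differently in (4.7). The helper `digamma` is an illustrative implementation (recurrence plus asymptotic series), not a library routine.

```python
import math

EULER_GAMMA = 0.5772156649015329


def digamma(x):
    """Psi(x) for x > 0: shift upward with Psi(x) = Psi(x+1) - 1/x,
    then apply the large-argument asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += math.log(x) - 0.5 / x
    result -= inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result


def expansion_errors(v):
    """Remainders of the two leading-order expansions at a small v > 0."""
    err_lgamma = math.lgamma(v) + math.log(v) + EULER_GAMMA * v
    err_psi = digamma(v) + 1.0 / v + EULER_GAMMA
    return err_lgamma, err_psi


for v in (0.1, 0.01, 0.001):
    err_lgamma, err_psi = expansion_errors(v)
    # The remainders should shrink like v**2 and v respectively.
    print(v, err_lgamma, err_psi)
```

The printed remainders decrease roughly like \(v^2\) and \(v\), consistent with the orders of the error terms used in the text.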

By the expressions for \(\kappa _{\bar{t}}\) and \(\bar{f}_{\bar{t}}\) in (4.8) and (4.9), one has

and

with \(|c_2-1|\) arbitrarily small for \(\bar{t}\) sufficiently small. Substituting the above two identities into (4.10), and noting that there exists \(c_3>0\) such that \(2\bar{t}^{-1}+c_2\bar{t}^{-2}(v+\bar{t})<c_3\bar{t}^{-1}\), we find

Along the part of \(\mathcal {C}_{v}\) with \(|v-\bar{t}|\ge c_1\bar{t}\), the \(O(\bar{t})\) error is dominated by the other two terms, which are both \(O(1)\), and \(|v|>|\bar{t}|\) so \(\log (v/\bar{t})>0\). Therefore \(Re(G(v)-G(\bar{t}))\) is bounded by a strictly negative constant. \(\square \)

Lemma 4.4

Suppose that \(\kappa \) is sufficiently large. There exist constants \(c>0,\tilde{c}>0\) such that for all \(z\in \mathcal {C}_{\tilde{z}}\) satisfying \(|z-\bar{t}|<c\),

Along the part of \(\mathcal {C}_{\tilde{z}}\) such that \(|z-\bar{t}|\ge c\), one has

for some constant \(c'>0\).

Proof

For \(z\in \mathcal {C}_{\tilde{z}}\) in a sufficiently small neighborhood of \(\bar{t}\), namely \(|z-\bar{t}|<c\) for a constant \(c>0\), the bound (4.12) follows from Taylor’s theorem in the same way as (4.6) in the proof of Lemma 4.3; note that \((z-\bar{t})^3\) is now negative for \(z\in \mathcal C_{\tilde{z}}\).

For \(z\) outside this small neighborhood but within \(O(\bar{t}^{1/2})\) distance from \(\bar{t}\), the argument is the same as in the case of \(|z-\bar{t}|<O(\bar{t})\) in the proof of Lemma 4.3, namely we can show that in this region \(G'''(z)<0\) and note that now \((z-\bar{t})^3<0\) for \(z\in \mathcal C_{\tilde{z}}\).

For all \(z\) outside this \(O(\bar{t}^{1/2})\) neighborhood the proof is as follows. From [5, Section 5.2]

Write \(z=x+iy\). Assume that \(y>0\); we will show that the derivative with respect to \(y\) of the left side of (4.13) is bounded below by a positive number, which immediately yields (4.13). The proof for \(y<0\) follows in the same way since \(Re\, G(x+iy)\) is even in \(y\). Taking the derivative, one has

Define a constant \(C(k,x,y,j)=\big ((x+k+j)^2+y^2 \big ) / \big ((x+j)^2+y^2\big )\). There exists a constant \(C'\) (depending on the fixed shape parameter \(k\) of the Gamma distribution) such that:

- if \(\max (x,|y|,j)>C'\) then \(C(k,x,y,j)<2\);

- within the compact domain

$$\begin{aligned} \{(x,y,j): j \ge 1 \text{ and } \max (x,|y|,j)\le C' \}, \end{aligned}$$

the continuous function \(C(k,x,y,j)\) (regarding \(j\) as a real number) is bounded by a constant, and within the compact domain

$$\begin{aligned} \{(x,y,j): j=0 \text{ and } |z-\bar{t}|\ge 1 \text{ and } \max (x,|y|)\le C'\} \end{aligned}$$

since \((x+j)^2+y^2\) is bounded away from zero, the continuous function \(C(k,x,y,0)\) is again bounded by a constant independent of \(x,y,j\);

- and finally for \(j=0\) and \(O(\bar{t}^{1/2})<|z-\bar{t}| \le 1\), since we have shown in (4.8) that \(\kappa =O(\bar{t}^{-2})\), the second term on the right of (4.14) is \(O(\bar{t}^{-3/2})\) which dominates over the first term there which is \(O(\bar{t}^{-1/2})\).

Therefore if \(\kappa \) is sufficiently large, every summand on the right of (4.14) is positive.

We show that summing over sufficiently many (positive) terms on the right of (4.14) will give a quantity bounded below by a positive constant independent of \(x,y\). In fact, within a compact domain the right side of (4.14) is bounded below by a positive constant. And outside this compact domain, the right side of (4.14) is bounded below by \((\frac{\kappa }{2}-1)y \sum _{j} \frac{1}{(x+j)^{2}+y^{2}}\). The sum over \(j\) from \(0\) to \(J\) is estimated by \(\frac{1}{y} \Big (\arctan \frac{J+x}{y}-\arctan \frac{x}{y}\Big )\). The factor \(\frac{1}{y} \) cancels with the factor \(y\) outside the sum and we obtain a strictly positive number. Therefore the desired bound holds. \(\square \)
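The comparison between the sum over \(j\) and the arctangent expression in the last step can be illustrated numerically. This is a minimal sketch under the assumption \(x\ge 0\) and \(y>0\), so that the summand is decreasing in \(j\); the function names and sample values are illustrative.

```python
import math


def lattice_sum(x, y, J):
    """Sum of 1 / ((x + j)^2 + y^2) over j = 0, ..., J."""
    return sum(1.0 / ((x + j) ** 2 + y ** 2) for j in range(J + 1))


def arctan_estimate(x, y, J):
    """Integral of 1 / ((x + s)^2 + y^2) over s in [0, J]."""
    return (math.atan((J + x) / y) - math.atan(x / y)) / y


x, y, J = 0.5, 2.0, 1000
s = lattice_sum(x, y, J)
est = arctan_estimate(x, y, J)
# For a decreasing summand, the integral bounds the sum from below,
# and the sum exceeds it by at most the j = 0 term.
print("sum:", s, "arctan estimate:", est)
print("y * sum:", y * s)
```

After multiplying by the prefactor \(y\), the lower bound becomes a difference of arctangents, which stays strictly positive as long as \(J\ge 1\).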

Proof of Theorem 1.3

Given Lemma 4.3 and Lemma 4.4 the proof of the theorem is standard (see for instance [1], [5] or [9]). Indeed the above two lemmas show that for any \(\varepsilon >0\), one can restrict to a finite number of leading terms in the Fredholm series expansion, and localize the integrals in these leading terms to a window of size \(n^{-1/3}\) around the critical point, both approximations causing errors that are bounded by \(\varepsilon /3\) uniformly in \(n\). Rescaling the window by \(n^{1/3}\), the integrals from \(K_u\) then converge to the integrals from \(K_r\), which is essentially shown at the beginning of the section. \(\square \)

5 Replica Method for Strict–Weak Polymer

Given \(\overrightarrow{n}=(n_{1}\ge \ldots \ge n_{k})\), consider the moments

In this section we will show an explicit formula for \(u(t,\overrightarrow{n})\), which is stated in Theorem 5.3 below. Define an operator

Lemma 5.1

\(u(t,\overrightarrow{n})\) solves the following evolution equation

where \(\overrightarrow{n}\) is such that

for some positive integers \(c_{1},\ldots ,c_{\ell }\) so that \(\sum _{i=1}^{\ell }c_i=k\), and

where \(\#\) means the number of elements in a set, and finally \(m_{i}\) is the \(i\)-th moment of a Gamma random variable with shape parameter \(k\) and scale parameter \(\theta \) (with the convention \(m_0=1\)).

Proof

By the recursive relation (1.2),

Taking expectations on both sides, and noting that \(Z(t,-)\) is independent of \(Y(t,-)\) and \(Y(t,n)\) are i.i.d. for different \(n\), one has

Note that the last expectation can be written in terms of \(u\) by rearranging the \(n\) variables into non-increasing order (see the definition (5.1) of \(u\)). Within each “cluster” consisting of \(c_{i}\) identical variables

the variables \(n_{i}\) with \(i\notin A\) are decreased by \(1\), so they must be rearranged to the right of the other \(n_{i}\) with \(i\in A\), resulting in

Therefore we obtain (5.2). \(\square \)

By the Laplace transform of the Gamma distribution given in (1.1), one has \(m_{i}=\theta ^{i}\prod _{j=0}^{i-1}(k+j)\).
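As a quick check of this moment formula, the sketch below evaluates \(m_i\) with exact rational arithmetic and compares it with the closed form \(\theta ^i\,\Gamma (k+i)/\Gamma (k)\). The name `gamma_moment` and the sample parameter values are illustrative; `shape` plays the role of the Gamma shape parameter \(k\).

```python
from fractions import Fraction
import math


def gamma_moment(i, shape, theta):
    """i-th moment theta^i * prod_{j=0}^{i-1} (shape + j) of Gamma(shape, theta)."""
    m = Fraction(1)
    for j in range(i):
        m *= theta * (shape + j)
    return m


shape, theta = Fraction(5, 2), Fraction(3, 4)
m1 = gamma_moment(1, shape, theta)
m2 = gamma_moment(2, shape, theta)
print("m1 =", m1, " m2 =", m2)
# The ratio (m2 - m1^2) / m1 is the shift appearing in the moment formula.
print("(m2 - m1^2) / m1 =", (m2 - m1 ** 2) / m1)

# Cross-check against theta^i * Gamma(shape + i) / Gamma(shape) in floats.
for i in range(6):
    closed = float(theta) ** i * math.gamma(float(shape) + i) / math.gamma(float(shape))
    print(i, float(gamma_moment(i, shape, theta)), closed)
```

In particular \(m_1=\theta k\), \(m_2=\theta ^2k(k+1)\), and \((m_2-m_1^2)/m_1=\theta \), which are the combinations used in the two-body boundary conditions.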

Let us momentarily consider the true evolution equation in Lemma 5.1 when \(k=1\) and \(2\). For \(k=1\) we necessarily have \(\ell =1\) and \(c_1=1\). If \(A=\emptyset \), then \(m_{c_1^A}=m_0=1\), and the product \(\prod _j \tau ^{(j)}\) is simply \(\tau ^{(1)}\). If \(A=\{1\}\), then \(m_{c_1^A}=m_1\), and no factor contributes to the product \(\prod _j \tau ^{(j)}\). Therefore

For \(k=2\) and when \(n_1<n_2\), we have \(\ell =2\) and \(c_1=c_2=1\). It is straightforward to check that the cases \(A=\emptyset \), \(\{1\}\), \(\{2\}\) and \(\{1,2\}\) give the four terms on the right side below

And for \(k=2\) and when \(n_1=n_2\), we have \(\ell =1\) and \(c_1=2\). One can check that the cases \(A=\emptyset \), \(\{1\}\), \(\{2\}\) and \(\{1,2\}\) give the four terms on the right side below

Note that for general \(k\) and \(n_{1}>\ldots >n_{k}\), one has

which can be derived either from (5.2) or by taking expectations in (5.3). We call (5.4) the free evolution equation, and (5.2) the true evolution equation. Using the reduction of the true evolution to the free evolution given below, it is possible to diagonalize the true evolution equation via coordinate Bethe ansatz. We do not pursue this further here, but refer the reader to, for example, [7, 8, 16].

Lemma 5.2

Suppose that \(u(t,\overrightarrow{n})\) solves the free evolution equation (5.4) for all \(\overrightarrow{n}=(n_{1}\ge \ldots \ge n_{k})\), and satisfies the following two-body boundary conditions

Then \(u(t,\overrightarrow{n})\) solves the true evolution equation (5.2).

Proof

Since \(m_{1}=\theta k\), \(m_{2}=\theta ^{2}k(k+1)\), the two-body boundary conditions can be re-written as

It suffices to show that for a “cluster”

one has

Applying the moment formula for Gamma random variables and the boundary conditions, the above equation can be written as

Observe that each summand on the right hand side only depends on \(A\) via \(|A|\). So the above identity is equivalent to

This identity can now be proved by induction. For \(c=1\), both sides are \(\theta k+\tau ^{(c)}\). Suppose that it holds for \(c\); we show that it holds for \(c+1\), namely that the right hand side multiplied by \(\theta k+\tau ^{(c)}+c\theta \) is equal to the right hand side with \(c\) replaced by \(c+1\).

In fact, writing \(\theta k+\tau ^{(c)}+c\theta =(\theta k+a\theta )+(\tau ^{(c)}+(c-a)\theta )\), one has

Summing over \(a\) from \(0\) to \(c\), and combining pairs of same terms, one has

Therefore the identity holds by using \(\binom{c}{a}+\binom{c}{a+1}=\binom{c+1}{a+1}\). \(\square \)
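The induction above has the structure of a Chu–Vandermonde identity for rising factorials with step \(\theta \). The following sketch checks such an identity with exact rational arithmetic, under the assumption that the identity being proved takes the form \(\prod _{i=0}^{c-1}(x+y+i\theta )=\sum _{a=0}^{c}\binom{c}{a}\prod _{j=0}^{a-1}(x+j\theta )\prod _{j=0}^{c-a-1}(y+j\theta )\), with \(x\) standing for \(\theta k\) and an ordinary number \(y\) standing in for the operator \(\tau ^{(c)}\). The function names are illustrative.

```python
from fractions import Fraction
from math import comb


def rising(base, steps, theta):
    """Product of (base + j * theta) over j = 0, ..., steps - 1."""
    out = Fraction(1)
    for j in range(steps):
        out *= base + j * theta
    return out


def lhs(c, x, y, theta):
    """Rising factorial of x + y with c factors."""
    return rising(x + y, c, theta)


def rhs(c, x, y, theta):
    """Binomial sum over the split of c factors between x and y."""
    return sum(comb(c, a) * rising(x, a, theta) * rising(y, c - a, theta)
               for a in range(c + 1))


theta = Fraction(3, 4)
x = theta * Fraction(5, 2)   # plays the role of theta * k
y = Fraction(-7, 3)          # numerical stand-in for the shift operator
for c in range(7):
    print(c, lhs(c, x, y, theta), rhs(c, x, y, theta))
```

The inductive step in the proof corresponds to splitting the new factor as \((x+a\theta )+(y+(c-a)\theta )\) in the term indexed by \(a\) and then applying Pascal's rule.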

Theorem 5.3

For \(n_1\ge n_2\ge \cdots \ge n_k\), one has the following moment formula

where the contour for \(z_k\) is a small circle around the origin, and the contour for \(z_j\) contains the contour for \(z_{j+1}+\theta \) for all \(j=1,\ldots ,k-1\), as well as the origin.

The following picture illustrates the choices of contours in the above integrals. The solid lines are contours for \(z_j\) (\(j=1,\ldots ,k\)). The smallest dashed contour is for \(z_k+\theta \), and is contained in the contour for \(z_{k-1}\). The slightly larger dashed contour is for \(z_{k-1}+\theta \), and is contained in the contour for \(z_{k-2}\), etc. These choices avoid the poles of the integrand.

Proof

Since

we immediately obtain that \(u(t,\overrightarrow{n})\) satisfies the free evolution equation.

To show that the boundary conditions are satisfied, note that applying \(m_{1}^{2}-m_{2}+m_{1}(\tau ^{(i)}-\tau ^{(i+1)})\) to the right hand side (with \(n_{i}=n_{i+1}\)) yields a factor \(m_{1}^{2}-m_{2}+m_{1}(z_{i}-z_{i+1})\), which cancels (up to a factor of \(m_1\)) the factor \(z_i - z_{i+1}-\theta =z_i - z_{i+1}-\frac{m_2-m_1^2}{m_1}\) in the denominator. Thus we can deform the contours for \(z_{i}\) and \(z_{i+1}\) together, and the factor \(z_{i}-z_{i+1}\) in the numerator shows that the integral is zero.

For the initial condition at \(t=0\), observe that if \(n_{1}>1\) there is no pole at \(z_{1}=\infty \) so the integral is zero; if \(n_{k}<1\) there is no pole at \(z_{k}=0\) so the integral is again zero. Since \(n_{1}\ge \ldots \ge n_{k}\), for the integral to be nonzero one must have \(n_{1}=\ldots =n_{k}=1\), in which case

We integrate \(z_{k},\ldots ,z_{1}\) one by one. Using residue formula at \(z_{k}=0\), \(z_{k-1}=\theta \), \(z_{k-2}=2\theta \) etc. one obtains \(u(t,\overrightarrow{n})=1\) for \(t=0\) and \(\overrightarrow{n}=(1,\ldots ,1)\). \(\square \)

These moments may grow too quickly to recover the Laplace transform of the polymer free energy. This is why we show the convergence of geometric \(q\)-TASEP to our polymer model and apply the \(e_q\)-Laplace transform formula for geometric \(q\)-TASEP in the previous sections.

Let us observe a \(q\)-deformation of the above moment formula:

The contour of \(z_i\) contains the contour of \(q \,z_{i+1}\) and \(1\).

In fact if we scale

then as \(\varepsilon \rightarrow 0\),

which shows that

Note that the formula (5.5) has appeared in [3, Theorem 2.1, case (2)], as describing moments for geometric \(q\)-TASEP (take \(a_i=1\) and \(\alpha _s=\alpha \) there):

The scalings above are consistent with those of Theorem 1.4.

6 Stationary Polymer

In this section we introduce a stationary version of the strict-weak polymer model. Our notation is that \(\mathbb {N}=\{1,2,3,\cdots \}\) and \(\mathbb {Z}_+=\{0,1,2,\cdots \}\). For any \(x=(t,n)\in \mathbb {Z}\times \mathbb {Z}\), we will sometimes write functions such as \(Z(t,n)\) in the form \(Z_x\) for simplicity of notation. If \(x\) and \(y\) are nearest neighbor points both in \(\mathbb {Z}\times \mathbb {Z}\), then we denote by \((x,y)\) the edge between them. We denote by \(e_1,e_2\) the unit vectors in the \(t\) and \(n\) directions, respectively.

Definition 6.1

The stationary process \(\{Z_x^*=Z^*(t,n)\}_{x=(t, n)\in \mathbb {Z}_+\times \mathbb {N}}\) of partition functions is parametrized by \(0<\beta ,k,\theta <\infty \) and defined as follows. Admissible paths \(\pi ^*\) emanate from the point \((0,1)\) and are allowed three types of edges: horizontal edges \((x-e_1,x)\) and diagonal edges \((x-e_1-e_2,x)\) as in Definition 1.1, as well as vertical edges along the vertical axis \(t=0\): \(e=((0,j), (0,j+1))\) for \(j\in \mathbb {N}\). The weights on these edges are:

where \(d_e\) is defined in Definition 1.1, and \(\{\tau _{((i,1),(i+1,1))}\}_{i\in \mathbb {Z}_+}\) and \(\{\tau _{((0,j),(0,j+1))}\}_{j\in \mathbb {N}}\) are given edge weights on the boundary, and independent of \(d_e\). The distributions of these weights are

To paraphrase, admissible paths use weights \(d_e\) in the bulk (\(t\ge 1\) and \(n\ge 2\)) and weights \(\tau _e\) on the boundary (\(t=0\) or \(n=1\)). The partition function \(Z^*(t,n)\) is then defined by

Note that we still have \(Z^*(0,1)=1\), but \(Z^*(0,n)\ne 0\) for \(n>1\). These partition functions still satisfy the same recursive relation as (1.2) for \(t\ge 1\) and \(n\ge 2\)

where \(Y_x := Y_{(x-e_1,x)}\) are i.i.d. Gamma\((k,\theta )\) random variables as in the previous sections. Superscript \(^*\) is used to distinguish this partition function from Definition 1.1. Extend the definition of the variables \(\tau _e\) to the “bulk” by defining

This is true also on the boundary, by definition of \(Z^*_x\).

The edge weights \(\tau _e\) for edges \(e\) in the bulk of \(\mathbb {Z}_+\times \mathbb {N}\) can also be defined inductively. Begin with the given initial weights

and apply repeatedly the formulas

Using the recursive relation (6.4) one shows inductively that equations (6.5) and (6.6) are equivalent.

Proposition 6.2

The distribution of the process \(\big \{\tau _{(x,y)}: x\in \mathbb {Z}_+\times \mathbb {N}, y\in \{x+e_1, x+e_2\} \big \}\) is invariant under lattice shifts. In particular, the distribution of the process \(\big \{\tau _{(a+x,\,a+y)}: x\in \mathbb {Z}_+\times \mathbb {N}, y\in \{x+e_1, x+e_2\} \big \}\) is the same for all \(a\in \mathbb {Z}_+^2\).

The stationarity is a consequence of the inductive definition (6.6) of the weights and the next fact about gamma distributions. In conjunction with (6.6) the next lemma is applied to \((U,V,Y)= ( \tau _{(x-e_1-e_2,\, x-e_2)} , \tau _{(x-e_1-e_2,\, x-e_1)}, Y_x)\) and \((U',V')= ( \tau _{(x-e_1,\,x)}, \tau _{(x-e_2,\,x)})\). The statement for \(Y'\) is included in the lemma for the sake of completeness but not needed for our present purposes.

Lemma 6.3

Fix \(0<\beta , k,\theta <\infty \). Let \((U,V,Y)\) be independent random variables with distributions

Define \((U',V',Y')\) by

Then the vectors \((U',V',Y')\) and \((U,V,Y)\) are equal in distribution.

Proof

Rewrite the formulas as

The lemma follows from two basic facts about the beta-gamma algebra. First, if \(X\sim \) Gamma(\(\mu ,\theta \)) and \(Y\sim \) Gamma(\(\nu ,\theta \)) are independent, then \(X+Y\) is independent of the pair \(( \frac{X}{X+Y}, \frac{Y}{X+Y})\), and

Second, if \(X\sim \) Gamma(\(\mu +\nu ,\theta \)) and \(Z\sim \) Beta(\(\mu , \nu \)) are independent, then \(ZX\) and \((1-Z)X\) are independent with distributions \(ZX\sim \) Gamma(\(\mu ,\theta \)) and \((1-Z)X\sim \) Gamma(\(\nu ,\theta \)). \(\square \)
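The second fact can be checked at the level of mixed moments. The sketch below is a consistency check rather than a proof: it verifies that \(\mathbb {E}[(ZX)^a((1-Z)X)^b]\) factorizes as the product of the \(a\)-th moment of Gamma(\(\mu ,\theta \)) and the \(b\)-th moment of Gamma(\(\nu ,\theta \)), using the standard moment formulas for Beta and Gamma variables. All function names and parameter values are illustrative.

```python
import math


def log_beta(p, q):
    """Logarithm of the Beta function B(p, q)."""
    return math.lgamma(p) + math.lgamma(q) - math.lgamma(p + q)


def gamma_moment(s, shape, theta):
    """E[X^s] for X ~ Gamma(shape, scale theta)."""
    return math.exp(s * math.log(theta) + math.lgamma(shape + s) - math.lgamma(shape))


def mixed_moment(a, b, mu, nu, theta):
    """E[(ZX)^a ((1-Z)X)^b] for independent Z ~ Beta(mu, nu), X ~ Gamma(mu+nu, theta)."""
    beta_part = math.exp(log_beta(mu + a, nu + b) - log_beta(mu, nu))
    return beta_part * gamma_moment(a + b, mu + nu, theta)


mu, nu, theta = 1.3, 2.1, 0.7
for a in range(4):
    for b in range(4):
        left = mixed_moment(a, b, mu, nu, theta)
        right = gamma_moment(a, mu, theta) * gamma_moment(b, nu, theta)
        print(a, b, left, right)
```

Agreement of all mixed moments is what one expects if \(ZX\) and \((1-Z)X\) are independent with the stated Gamma marginals.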

7 Free Energy Law of Large Numbers

It is a consequence of Theorem 1.3 that, for \(\kappa \) large enough, the law of large numbers limit for the free energy of the strict-weak polymer model is given by \(\bar{f}_{k,\theta ,\kappa }\) of Definition 1.2. In this section we explain another approach to identify (and with a little more work, prove) the free energy law of large numbers

and

where in general the subscript \(x\) in \(Z_x(t,n)\) stands for the partition function of polymers emanating from \(x\).

Evaluating \(g^*\) is immediate from the law of large numbers. By following ratios (6.5) from \((0,1)\) to \((\lfloor {Nt}\rfloor ,\lfloor {Nn}\rfloor )\),

where we have used the fact that if \(X\) is \(\text{ Gamma }(k,\theta )\) distributed then \(\mathbb {E}(\log X)=\Psi (k)+\log (\theta )\). The two sums are sums of i.i.d. random variables, though the sums themselves are correlated with each other.
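The identity \(\mathbb {E}(\log X)=\Psi (k)+\log (\theta )\) can be illustrated by simulation. This is a minimal Monte Carlo sketch with a fixed seed; the helper `digamma` is an illustrative implementation (recurrence plus asymptotic series), and the parameter values and sample size are arbitrary choices.

```python
import math
import random


def digamma(x):
    """Psi(x) for x > 0: shift upward with Psi(x) = Psi(x+1) - 1/x,
    then apply the large-argument asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += math.log(x) - 0.5 / x
    result -= inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result


def mean_log_gamma(shape, scale, samples=200_000, seed=0):
    """Empirical mean of log X for X ~ Gamma(shape, scale)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        # random.gammavariate takes (shape, scale) as (alpha, beta).
        total += math.log(rng.gammavariate(shape, scale))
    return total / samples


shape, scale = 2.5, 0.7
empirical = mean_log_gamma(shape, scale)
exact = digamma(shape) + math.log(scale)
print("empirical:", empirical, "Psi(k) + log(theta):", exact)
```

With this sample size the statistical error is of order \(10^{-3}\), so the two printed values should agree to about two decimal places.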

To compute \(g\), the starting point is the decomposition

To be specific, the boundary \(Z\)-values in the decomposition are simply the products

Take \(t=n=1\), in which case the first sum on the right vanishes. Applying \(N^{-1}\log \) and taking the limit \(N\rightarrow \infty \) converts sums into maxima. Scale the summation index as \(\ell =\lfloor {Ns}\rfloor \) to arrive at the following equation:

The estimation needed for making these steps rigorous is left to the reader. Let \(t=1-s\) and change variables to \(y=\Psi (\beta )+\log \theta \) to get

Extend the convex function \(f(t)=-g(1,t)\) to \(\mathbb {R}\) by setting \(f(t)=\infty \) for \(t\notin [0,1]\). Rewrite the equation above as

This extended \(f\) is convex and lower semicontinuous, and hence by convex duality, for \(0\le t\le 1\),

Limit (7.2) implies homogeneity \(g(t,1)=tg(1,t^{-1})\) for \(t\ge 1\), and consequently we also have

Note that \(g(\kappa ,1)\) is equal to \(\bar{f}_{k,\theta ,\kappa }\) defined in Definition 1.2 since \(\bar{t}\) is defined to be the critical value of \(\beta \) where the infimum is attained.
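The convex duality step used in this section, namely that a convex lower semicontinuous function equals its double Legendre–Fenchel transform, can be illustrated on a grid. The sketch below uses a hypothetical stand-in \(f(t)=(t-0.3)^2\) on \([0,1]\) (extended by \(\infty \) outside), not the polymer function \(f(t)=-g(1,t)\); the grids and names are illustrative.

```python
def conjugate(values, points, slopes):
    """Discrete Legendre-Fenchel transform:
    for each slope s, the maximum over points p of (p * s - value(p))."""
    return [max(p * s - v for p, v in zip(points, values)) for s in slopes]


# Grid on [0, 1] for t and a grid of dual variables y covering all slopes of f.
ts = [i / 200 for i in range(201)]
ys = [-3 + j / 100 for j in range(601)]

f = [(t - 0.3) ** 2 for t in ts]      # stand-in convex function on [0, 1]
f_star = conjugate(f, ts, ys)         # f*(y)  = sup_t (t*y - f(t))
f_bistar = conjugate(f_star, ys, ts)  # f**(t) = sup_y (t*y - f*(y))

gap = max(abs(a - b) for a, b in zip(f, f_bistar))
print("max |f - f**| on the grid:", gap)
```

The double transform recovers the stand-in function up to discretization error, which is the mechanism that lets one invert the variational equation for \(f\) in the text.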

References

Amir, G., Corwin, I., Quastel, J.: Probability distribution of the free energy of the continuum directed random polymer in \(1+1\) dimensions. Commun. Pure Appl. Math. 64(4), 466–537 (2011)

Barraquand, G., Corwin, I.: Random-walk in beta-distributed random environment. arXiv:1503.04117 (2015)

Borodin, A., Corwin, I.: Discrete time q-TASEPs. Int. Math. Res. Notices (2013). doi:10.1093/imrn/rnt206

Borodin, A., Corwin, I.: Macdonald processes. Probab. Theory Relat. Fields 158(1–2), 225–400 (2014)

Borodin, A., Corwin, I., Ferrari, P.: Free energy fluctuations for directed polymers in random media in \(1+1\) dimension. Commun. Pure Appl. Math. 67(7), 1129–1214 (2014)

Borodin, A., Corwin, I., Ferrari, P., Veto, B.: Height fluctuations for the stationary KPZ equation. arXiv:1407.6977 (2014)

Borodin, A., Corwin, I., Petrov, L., Sasamoto, T.: Spectral theory for the q-boson particle system. Compos. Math. arXiv:1308.3475 (2013)

Borodin, A., Corwin, I., Petrov, L., Sasamoto, T.: Spectral theory for interacting particle systems solvable by coordinate Bethe ansatz. arXiv:1407.8534 (2014)

Borodin, A., Corwin, I., Remenik, D.: Log-gamma polymer free energy fluctuations via a Fredholm determinant identity. Commun. Math. Phys. 324(1), 215–232 (2013)

Corwin, I., O’Connell, N., Seppäläinen, T., Zygouras, N.: Tropical combinatorics and Whittaker functions. Duke Math. J. 163(3), 513–563 (2014)

Matveev, K., Petrov, L.: \(q\)-Randomized Robinson–Schensted–Knuth correspondences and random polymers. arXiv:1504.00666 (2015)

O’Connell, N.: Directed percolation and tandem queues. Technical Report STP-99-12, DIAS (1999)

O’Connell, N.: Directed polymers and the quantum Toda lattice. Ann. Probab. 40(2), 437–458 (2012)

O’Connell, N., Ortmann, J.: Tracy–Widom asymptotics for a random polymer model with gamma-distributed weights. arXiv:1408.5326 (2014)

Seppäläinen, T.: Scaling for a one-dimensional directed polymer with boundary conditions. Ann. Probab. 40(1), 19–73 (2012)

Thiery, T., Le Doussal, P.: Log-gamma directed polymer with fixed endpoints via the replica Bethe ansatz. arXiv:1406.5963 (2014)

Acknowledgments

I. Corwin was partially supported by the NSF grant DMS-1208998 as well as by Microsoft Research and MIT through the Schramm Memorial Fellowship, by the Clay Mathematics Institute through the Clay Research Fellowship and by the Institut Henri Poincaré through the Poincaré Chair. H. Shen would like to thank Prof. Martin Hairer for his support on a visit to MSRI in July 2014 where part of this work was done. T. Seppäläinen was partially supported by NSF grant DMS-1306777 and by the Wisconsin Alumni Research Foundation.

Cite this article

Corwin, I., Seppäläinen, T. & Shen, H. The Strict-Weak Lattice Polymer. J Stat Phys 160, 1027–1053 (2015). https://doi.org/10.1007/s10955-015-1267-0

DOI: https://doi.org/10.1007/s10955-015-1267-0