Abstract

Since the cell assembly (CA) was hypothesised, it has gained substantial support and is believed to be the neural basis of psychological concepts. A CA is a relatively small set of connected neurons that, through neural firing, can sustain activation without stimulus from outside the CA, and is formed by learning. Extensive evidence from multiple single unit recording and other techniques supports the existence of CAs that have these properties, and shows that their neurons also spike with some degree of synchrony. Since the evidence is so broad and deep, the review concludes that the existence of CAs is all but certain. A model of CAs is introduced that is informal, but is broad enough to include, e.g. synfire chains, without including, e.g. holographic reduced representation. CAs are found in most cortical areas and in some sub-cortical areas; they are involved in psychological tasks including categorisation, short-term memory and long-term memory, and are central to other tasks including working memory. There is currently insufficient evidence to conclude that CAs are the neural basis of all concepts. A range of models have been used to simulate CA behaviour, including associative memory and more process-oriented tasks such as natural language parsing. Questions involving CAs, e.g. memory persistence, CAs’ complex interactions with brain waves, and learning, remain unanswered. CA research involves a wide range of disciplines including biology and psychology, and this paper reviews literature directly related to the CA, providing a basis of discussion for this interdisciplinary community on this important topic. Hopefully, this discussion will lead to more formal and accurate models of CAs that are better linked to neuropsychological data.

1 Introduction

The cell assembly (CA) hypothesis states that a CA is the neural representation of a concept (Hebb 1949). The CA hypothesis (and indeed Hebb’s related learning rule) has been increasingly supported by biological, theoretical, and simulation data since it was proposed. The CA can act as a categoriser of sensory stimuli, so the presentation of an object (for example, an orange) to an individual may cause a particular CA to become active, allowing the individual to identify the object as an orange. Similarly, CAs can be activated without direct sensory stimuli, so a person’s CA for orange will ignite when they think about an orange.

CAs are composed of neurons, so they are intermediate structures: smaller than the entire brain, yet larger than individual neurons. CAs provide an organising principle for the study of mind and brain, though certainly not the only one. Moreover, CAs cross the boundary between psychology and neurobiology, because psychological concepts emerge from the behaviour of neurons.

As the neural representation of a concept, the CA is extremely important, but there is no general agreement on its definition, and the scientific community’s understanding of CAs is incomplete. Many in the community support the idea of CAs, but others are not confident that CAs exist. Perhaps this is due to the range of disciplines that make use of the concept. This paper brings together the community’s current understanding of this important concept.

This paper reviews evidence supporting the CA hypothesis, and evidence that further explains CA behaviour, function, and structure. While our conclusion does ultimately rely on converging evidence, we have read a significant fraction of the research literature and have actively sought evidence against our conclusion that CAs exist in mammalian brains. A range of psychological, biological and modelling evidence is used to develop a picture of current understanding of CAs. While there is a rich knowledge of CAs, there are many unanswered questions involving CAs, and this paper discusses some of them.

1.1 Hebb’s cell assembly hypothesis

Hebb’s CA hypothesis (Hebb 1949) states that a CA is a collection of neurons that is the brain’s mechanism for representing a concept. This hypothesis ties neurobiology to psychology in that the collection of neurons is a neurobiological entity, and the concept that they support is a psychological entity. A simple example would be that a person’s concept of dog is neurally implemented by a set of their neurons, their dog CA. CAs encode elements of higher cognitive processes like words, mental images and other types of concepts.

The standard model, derived directly from Hebb (1949), is that these neurons have high mutual synaptic strength. When a sufficient number of them fire, due to, for example, mention of the word dog, they cause other neurons in the CA to fire, which in turn cause other neurons in the CA to fire, leading to a cascade of neural firing. This neural circuit can persist long after the initial stimulus has ceased. The psychological correlate of this persistent firing of the neurons in the CA is a short-term memory (STM); without further support, this reverberation can persist on the order of seconds.

The high mutual synaptic strength is a result of Hebbian learning. The neurons have co-fired in response to earlier stimuli, and this has caused the strength of the synapses between them to increase. The CA is therefore typically formed by repeated presentation of similar stimuli, and so represents a long-term memory (LTM).
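
The standard model described above can be illustrated with a minimal binary-network sketch. It assumes a simple co-occurrence form of Hebbian learning (each presentation adds a fixed amount to the weight between every pair of co-active neurons) and a fixed firing threshold; the network size, the two overlapping 20-neuron CAs, the threshold, and the function names `train_hebbian` and `simulate` are illustrative choices, not values from the paper. Under these assumptions, a sufficiently large cue ignites the whole CA, which keeps firing after the cue is removed, while a cue that is too small dies out.

```python
# Minimal sketch (illustrative parameters): Hebbian formation, ignition and
# reverberation of cell assemblies in a binary threshold network.


def train_hebbian(n, assemblies, presentations=5, eta=1.0):
    """Repeatedly present each assembly; strengthen every pair of co-active neurons."""
    w = [[0.0] * n for _ in range(n)]
    for _ in range(presentations):
        for pattern in assemblies:
            for i in pattern:
                for j in pattern:
                    if i != j:
                        w[i][j] += eta
    return w


def simulate(w, cue, theta=30.0, stim_steps=2, total_steps=10):
    """Synchronous threshold dynamics; cue neurons get external drive for stim_steps."""
    n = len(w)
    state = [0] * n
    history = []
    for t in range(total_steps):
        external = theta if t < stim_steps else 0.0  # the cue is switched off after stim_steps
        new_state = []
        for i in range(n):
            drive = sum(w[i][j] * state[j] for j in range(n))
            if i in cue:
                drive += external
            new_state.append(1 if drive >= theta else 0)
        state = new_state
        history.append({i for i in range(n) if state[i]})
    return history


N = 60
CA_A = set(range(0, 20))   # hypothetical CA for one concept
CA_B = set(range(15, 35))  # hypothetical CA sharing neurons 15-19 with CA_A
w = train_hebbian(N, [CA_A, CA_B])

strong = simulate(w, cue=set(range(0, 8)))  # 8 cue neurons: enough to ignite CA_A
weak = simulate(w, cue=set(range(0, 3)))    # 3 cue neurons: too few, activity dies out
print(strong[-1] == CA_A)  # the whole CA is still active long after the cue has gone
print(weak[-1])
```

Because `CA_A` and `CA_B` share five neurons, the sketch also shows, for these particular weights and this threshold, a neuron belonging to more than one CA without one CA igniting the other.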

Knowledge of brain physiology and pharmacology has advanced greatly since Hebb’s day, but, while taking account of more modern knowledge, the CA hypothesis still rests on three theoretical “principles” about CAs being organized collections of neurons. These principles are:

1. A CA is a relatively small set of neurons that encodes a concept. With an estimated \(10^{11}\) neurons in the human brain (Smith 2010), the authors suggest that a CA is likely to contain between \(10^3\) and \(10^7\) neurons. At the lower end of the range, we suggest CAs are more likely to be atomic (see Footnote 1), whereas at the upper end of the range we imagine super-CAs composed of many sub-CAs at many levels; e.g. one might consider a cognitive map (Buonomano and Merzenich 1998; McNaughton et al. 2006) as such a super-CA. Note also that, at the individual neuron level, it is assumed that a neuron may be a member of different CAs.

2. The neurons of a CA can show self-sustaining persistent activity. This persistent activity is often called reverberation. The basic idea is that the neurons of a CA fire at an elevated rate after the initial stimulation that ignited the CA. (Please note, we consider that when substantially activated, neurons emit a spike or “fire”, whereas activated CAs are said to “ignite” (Braitenberg 1978), and potentially remain ignited and active for seconds.)

3. A CA’s set of neurons, which represents a particular concept, is learned. Typically, this learning is assumed to be through synaptic weight change. However, neural death, neural growth, synaptic death, and synaptic growth may all play a part in the growth of the CA, all within a Hebbian learning framework.
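
As a rough check on how small these CAs are relative to the whole brain, dividing the suggested size range by the estimated neuron count gives the fraction of the brain's neurons that a single CA would involve:

\[
\frac{10^{3}}{10^{11}} = 10^{-8}
\qquad\text{to}\qquad
\frac{10^{7}}{10^{11}} = 10^{-4},
\]

that is, between one neuron in a hundred million and one in ten thousand. This arithmetic uses only the two estimates quoted in principle 1.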

These principles are the basis of the standard model, which is further discussed, along with some of its variants, in Sect. 2.6. Important activities occur in the brain at a range of time scales, and the CA provides coherence on at least two of these scales, seconds for STM, and years for LTM.

1.2 What’s in the rest of the paper

Section 2 provides a relatively brief summary of the neural data that supports the existence of CAs in human and other mammalian brains. This section is driven by neural behavioural data linked to psychological behavioural data, and these data provide compelling evidence that CAs do exist in mammalian brains. Though the CA model is informal, this section also introduces a standard model and some of its variants, while discussing one model that is clearly not a CA.

The existence of CAs leads to a series of questions about their location, function, and form. Section 3 reviews current understanding of these questions and their tentative answers; this and later sections are necessarily more speculative. One psychological system of particular interest is working memory, and Sect. 4 examines its relations to CAs in some depth. These questions are more complex than the existence question, and thus their answers depend more on analysis of psychological behaviour, arguments from theory, and simulation. These complex questions, combined with the difficulty of analysing the behaviour of CAs composed of thousands or millions of neurons, currently leave modelling as the best method of addressing them. Section 5 reviews models of CAs, and computational systems that use them.

CAs do not provide all the answers to understanding the brain, nor are they fully understood. In the hope of advancing the understanding of CAs, Sect. 6 discusses some current questions about CAs, particularly some questions involving learning. It also notes that CAs may vary from concept to concept, and within one concept over time. Section 7 concludes this paper.

The primary concern of this paper is the functioning of humans both psychologically and neurally. However, this functioning is similar to that of other animals, in particular, other primates and other mammals. Consequently, much of the evidence is drawn from animal studies.

Before turning to evidence of CAs in the brain, a final problem we faced in building this review is that not all the potentially relevant papers actually mention CAs, or near synonyms such as neuronal ensembles. In this review, we tend to cite papers that are explicitly about CAs; we can only cite a sample of the papers we have looked at, and these, realistically, are only a fraction of the published literature. The papers that were included were highly cited, seminal, or central to our arguments, and usually some combination of the three. We also frequently omitted second papers from the same authors, as the first provided the link to the body of work.

2 Existence of cell assemblies

Hebb proposed CAs as a solution consistent with the physiological and psychological data available in the 1940s. Since that proposal, a vast amount of scientific evidence has been uncovered supporting his hypothesis that the neural basis of a concept is a set of neurons that remains persistently active and is formed by some sort of Hebbian learning mechanism.

The basic structure of the brain is a loosely coupled net of neurons where neurons are firing constantly at a low rate. Other cells, such as glia, are widely considered to have little cognitive effect at the level of individual concepts. This neural structure is capable of supporting CAs with the CA igniting when only a small subset of its neurons initially fire (see Sect. 2.1).

CAs require population coding (principle 1), which is also called ensemble coding. A relatively small set of neurons is the basis of each CA, with neurons able to participate in multiple CAs. The evidence supports the use of population coding throughout the brain (Averbeck et al. 2006a, and see Sect. 2.2): a review article shows that population coding accurately correlates with a range of cognitive states (Schoenbaum 1998), and a vast range of specific studies support different aspects of population coding.

There is a large body of evidence for persistent activity (principle 2) (see Sect. 2.3) and for a fourth principle, synchronous neural firing correlated with cognitive states (see Sect. 2.4). This evidence provides very solid support for the existence of CAs. The data related to learning (principle 3) provide further support for the existence of CAs (see Sect. 2.5). A set of neurons that adheres to these four principles is a CA.

Several articles provide reviews of different sorts of evidence for CAs. A wide range of data are presented showing that neural firing behaviour supports a CA model (Harris 2005). Spike trains show structure that is not present in the stimulus, and they are not strictly controlled by sensory input; spikes are coordinated, and firing behaviour correlates with internal cognitive states. A range of data, including imaging data such as PET and MEG, but also behavioural, lesioning and event-related potential data, suggests that CAs for words are distributed in brain areas that are semantically specific (Pulvermuller 1999). For instance, the CAs for action words include neurons in motor cortices. Some of the evidence from the sources in these reviews is recounted in the remainder of this section.

2.1 Anatomical data

When developing his hypothesis, Hebb considered several anatomical theories that still have substantial scientific support. Two in particular are that neurons are constantly active throughout the brain, and that large amounts of cortex can be removed with apparently little effect. Since 1949, there have been many advances in the understanding of neuroanatomy; for instance, more modern evidence shows that each neuron tends to fire about once a second (Bevan and Wilson 1999; Bennett et al. 2000), providing a substantial level of background activity.

When a neuron fires, it sends activation to the neurons to which it is connected, and neurons that have received a great deal of activation fire (Churchland and Sejnowski 1999). Particular sets of neurons are driven at particular times, leading to firing rates as high as one spike every 5 ms, i.e. 200 Hz (Ylinen et al. 1995). Most neurons lose their activation when they fire, and need more activation to fire again (Krahe and Gabbiani 2004). Almost all of the synapses of a neuron connect to different target neurons, and there are rarely as many as three synapses onto one neuron from a given neuron (Braitenberg 1989). Several neurons need to fire as input to a neuron to make it fire (Churchland and Sejnowski 1999; Bruno and Sakmann 2006).

Since many neurons are required to make another neuron fire, and there is a large amount of background activity, a large number of neurons need to fire to make another neuron reliably fire. However, many neurons firing together can make many other neurons fire, so groups of neurons will tend to fire together. Sparse connectivity supports population coding (see Sect. 2.2). Furthermore, since neurons can be removed with little cognitive impact, the cognitive elements must depend on a large distributed set of neurons.
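
The argument above can be made concrete with a back-of-the-envelope binomial calculation. It assumes, hypothetically, that a neuron has 1,000 presynaptic partners, each firing independently at the background rate of about once a second, that it integrates input over a 10 ms window, and that it fires only if at least 30 inputs arrive within that window; these numbers are illustrative, not measurements. Under these assumptions, background activity almost never drives the neuron, whereas a group of 25 inputs firing together makes it fire with high probability.

```python
from math import comb


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p): chance that at least k of n inputs spike in one window."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


N_INPUTS = 1000         # hypothetical number of presynaptic neurons
RATE_HZ = 1.0           # background rate: roughly one spike per second per neuron
WINDOW_S = 0.010        # hypothetical integration window (10 ms)
THRESHOLD = 30          # hypothetical number of coincident inputs needed to fire
p = RATE_HZ * WINDOW_S  # probability that one input spikes within the window

# Background only: every input fires independently at the background rate.
p_background = prob_at_least(THRESHOLD, N_INPUTS, p)

# A group of 25 inputs fires together; the remaining inputs supply background spikes.
GROUP = 25
p_group = prob_at_least(THRESHOLD - GROUP, N_INPUTS - GROUP, p)

print(f"background only: {p_background:.2e}")
print(f"with a synchronous group of {GROUP}: {p_group:.3f}")
```

The same arithmetic shows why groups of neurons tend to fire together: once a group is active, its targets need only a few extra background spikes to reach threshold.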

Similarly, connectivity and firing support persistent activity (Sect. 2.3), because an initially firing set of neurons can cause a second set to fire, which can in turn cause the first set to fire. Of course, this behaviour need not be limited to two non-overlapping sets of neurons; the sets may overlap, and in either case the neurons in both sets can continue to fire at an elevated rate.

Broadly speaking, the cortex is an enormous collection of neurons that is not easily separable into smaller structures (Braitenberg 1989). At a coarse grain, the cortex has a laminar architecture, that is, a roughly 2,000 cm\(^2\) sheet with six thin layers (Martini 2001), and this paper mostly considers cortical CAs (but see Sect. 3.1.4). Neuroscientists have divided the cortex into areas (e.g. Brodmann areas), and when there are feedforward connections from one area of the brain to another, there are almost always connections back from the second to the first (Lamme et al. 1998). These recurrent connections could support CAs that cross brain areas and thus integrate features over a range of complexities and modalities.

2.2 Cell assemblies and population coding

The first principle of the CA hypothesis is that each concept is represented by a set of neurons. With population coding, a particular concept is coded by a set of neurons that fire at an elevated rate when the concept is perceived or is in STM.

An alternative to population coding is that a single cell represents a concept, commonly known as the grandmother cell (Barlow 1972). However, a single neuron cannot represent a concept because neurons die, and one would lose the concept of one’s grandmother if that grandmother neuron died. Evidence available to Hebb also eliminates this possibility; the loss of large areas of cortex would remove many concepts, and background neural activity would cause the concepts to pop on and off. More recently, the growing understanding of the prevalence of neural death (Morrison and Hof 1997) shows that the grandmother cell hypothesis is untenable.

The other extreme alternative to population coding is that all of the neurons code for each concept, and particular firing behaviour determines which concept is active. Hebb argued against this sensory equipotentiality using the effects of learning (see Sect. 2.5). Subsequently, substantial direct evidence has supported population coding over equipotentiality, e.g. a review shows population coding is used in a wide range of brain areas (Schoenbaum 1998).

Much of the evidence for population coding, and other phenomena, is derived from the widely used technique of placing electrodes in or near a neuron to measure its electrical potential. The electrodes can measure depolarisations and thus neural spiking. Direct recording of neural activation levels and spikes is, to a large degree, an ideal way of understanding the behaviour of a neuron because it shows when neurons spike, and it is widely believed that spiking is how most information is passed between neurons. Electrodes have been used for neural recordings for quite some time (e.g. Hubel and Wiesel 1962; Fuster and Alexander 1971), and electrode and other single unit recording technology has improved and continues to improve. Still, with current technology, it is only possible to record about 1,000 neurons simultaneously. Newer techniques, for instance optical techniques (see Wallace and Kerr 2010 for a review), are expanding the number of neurons that can be recorded at once. These recording techniques can work in vitro or in vivo, enabling measurement of neurons in behaving animals, and are leading to a better understanding of CA dynamics. Unfortunately, these measurements are invasive, so only in rare cases can single unit recording be used to measure neurons in vivo in humans performing a task. Moreover, because relatively few neurons are recorded, it is difficult to capture the precise dynamics of a large number of neurons in a living animal.

One piece of evidence for population coding using single unit recording involves neurons in the macaque visual cortex that show population coding of shape (Pasupathy and Connor 2002) with specific neurons spiking rapidly when specific curves are present at particular angles from the centre of the object. Note that, in this case, firing behaviour is directly linked to environmental stimuli. Several neurons respond to the same feature, but the sampled neurons collectively allow a vast number of objects to be uniquely identified. Population coding allows neurons to respond differentially to a range of stimuli, and collectively they can accurately represent a range of stimuli despite the noise of neural response (Averbeck et al. 2006a).

Population coding supports a diverse range of group behaviour. The simplest behaviour is that a set of neurons all respond to a particular stimulus; this is a group of grandmother cells. In a more complex form, two groups of cells can code for specific features, say lines at two given angles; activating varying numbers of neurons in each set, or activating each set to varying degrees, can represent an intermediate feature (Hubel and Wiesel 1962). The degree of overlap is a topological issue (see Sect. 3.2) that enables a range of behaviour including continuous valued categories (see Sect. 3.3).
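
How a population can represent a stimulus accurately despite noisy individual responses, including stimulus values that fall between the preferred stimuli of individual neurons, can be sketched with population-vector decoding. This is a standard textbook decoder swapped in for illustration, not the analysis used in the studies cited above; the 64 neurons, the rectified-cosine tuning curves, the Gaussian noise level and the function names are all assumptions. Each neuron's preferred direction is weighted by its rate above baseline, and the summed vector points approximately at the stimulus.

```python
import math
import random

N_NEURONS = 64  # hypothetical population size
PREFERRED = [2 * math.pi * k / N_NEURONS for k in range(N_NEURONS)]  # evenly spaced preferences


def responses(stimulus, rng, baseline=5.0, gain=20.0, noise_sd=3.0):
    """Noisy firing rates (Hz) of neurons with rectified-cosine tuning curves."""
    return [baseline + gain * max(0.0, math.cos(stimulus - p)) + rng.gauss(0.0, noise_sd)
            for p in PREFERRED]


def decode(rates, baseline=5.0):
    """Population vector: preferred directions weighted by rate above baseline, then summed."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, PREFERRED))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, PREFERRED))
    return math.atan2(y, x)


def angular_error(a, b):
    """Absolute difference between two angles, wrapped into [0, pi]."""
    return abs(math.atan2(math.sin(a - b), math.cos(a - b)))


rng = random.Random(0)
stimuli = [0.1, 1.0, 2.5, 4.0, 5.9]  # radians; most lie between two neurons' preferences
errors = [angular_error(decode(responses(s, rng)), s) for s in stimuli]
print([round(e, 3) for e in errors])
```

No single noisy neuron in this sketch identifies the stimulus on its own, but the weighted sum over the population recovers it to within a small error.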

There is a vast amount of evidence of population coding. Many of the papers described below show population coding (e.g. Sakurai et al. 2004; Nicolelis et al. 1995; Sigala et al. 2008), as do many papers mentioned in Sect. 3.1. These are just some of the thousands of experiments showing sets of neurons with elevated spike rates during specific cognitive events.

While a range of neurons may respond to a particular stimulus, it is not entirely clear if all responding neurons contribute to the perception. There is evidence (Purushothaman and Bradley 2005) that only the most active neurons participate. If only some of the firing neurons are involved in the perception, the population coding uses fewer neurons.

Population coding does exist without CAs. For example, neurons in the primary visual cortex use population coding, but their elevated activity does not persist when the environmental stimulus ceases (Hubel and Wiesel 1962, though see Sect. 3.1.2). The connections between these visual cortical neurons may be insufficiently strong to support reverberation. There does seem to be some confusion in the literature about this issue, with the term cell assembly in some cases referring to population coding without reverberation (e.g. Liebenthal et al. 1994). In such cases, the authors would prefer the term neuronal ensemble, ensemble coding, or indeed population coding, all of which refer to both reverberating and non-reverberating sets of neurons.
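
Whether elevated activity outlasts its stimulus can depend on how strongly the neurons excite one another. A minimal sketch, assuming a single-population firing-rate model with a sigmoidal response (a common modelling abstraction, not a model from any cited study; the recurrent weights, sigmoid parameters and step size are illustrative), shows this: with a strong recurrent weight the population stays active after a brief input, as a reverberating CA would, while with a weak recurrent weight the activity decays once the input stops, like the non-persistent population coding described above.

```python
import math


def sigmoid(x, beta=10.0, h=0.5):
    """Population response (0..1) to total input x; beta sets steepness, h the half-point."""
    return 1.0 / (1.0 + math.exp(-beta * (x - h)))


def simulate_rate(w, stim=1.0, stim_steps=50, total_steps=300, dt_over_tau=0.1):
    """Euler steps of dr/dt = (-r + f(w*r + input)) / tau, with input on for stim_steps."""
    r = 0.0
    trace = []
    for t in range(total_steps):
        inp = stim if t < stim_steps else 0.0
        r += dt_over_tau * (-r + sigmoid(w * r + inp))
        trace.append(r)
    return trace


strong = simulate_rate(w=1.0)           # strong recurrent excitation: persists after input stops
weak = simulate_rate(w=0.4)             # weak recurrent excitation: decays after input stops
quiet = simulate_rate(w=1.0, stim=0.0)  # strong weights but no input: never ignites
print(round(strong[-1], 3), round(weak[-1], 3), round(quiet[-1], 3))
```

With the strong weight the model has two stable states, low and high, and the brief input switches it from one to the other; with the weak weight only the low state exists, so nothing is left to sustain the activity.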

2.3 Persistent activity

The second principle of the CA hypothesis is persistent activity; the CA reverberates (Sakurai 1998b). A CA can remain active after the environmental stimulus ceases, and this reverberation enables the CA to act as an STM (see Sect. 4). Figure 1 reproduces Hebb’s illustration of this reverberation. In the figure, the nodes represent neurons and the numbers represent the order of neural firing; the figure is meant only as an illustration. Compared with measured CAs, it is an extreme simplification: hundreds of neurons may spike in any given millisecond, each neuron may spike many times in a second, and any two neurons need not spike in the same order on subsequent spikes.

A reverberating circuit (reproduced from Hebb 1949)

The standard method for showing persistence is to measure neural firing by single unit recording, and there is extensive evidence of persistence. One review shows sustained activation in a range of brain areas including premotor and inferior temporal neurons (Funahashi 2001). For example, prefrontal neurons of monkeys showed elevated firing, associated with particular tasks, which persisted during delay portions of those tasks (Assad et al. 2000). Persistent activity that varies in task-specific ways has also been shown in rat motor cortex (Isomura et al. 2009). Rhesus monkeys display persistent neural firing that correlates with duration (Sakurai et al. 2004), so specific sets of task-general neurons maintain specific durations.

Firing behaviour has been linked to cognitive states. For example, rhesus monkeys show elevated neural spiking in the prefrontal cortex and the medialis dorsalis for up to a minute while attending to and recalling a place for reward (Fuster and Alexander 1971). One set of neurons responds to the cue, another set responds during the delay, and a third set responds during both cue and delay. As this activity is not specific to the hand or the item, it suggests sets of neurons that support rehearsal.

There is a range of neuron types, though a discussion is beyond the scope of this paper (but see Klausberger et al. 2003 for example). While typical neurons (e.g. pyramidal) fire relatively regularly given a constant input, other neurons are bursty, going through phases when they fire frequently, and then not at all. Single unit recording shows that bursty neurons oscillate at less than once per second, and this type of persistent activity occurs throughout sensory, motor and association cortices (Steriade et al. 1993). In vitro recordings of rat cortical cells showed persistent firing of stimulus-specific cells lasting on the order of 10 s (Larimer and Strowbridge 2009). Many cortical and subcortical regions exhibit this type of behaviour (Seamans et al. 2003). Similarly, spiny neurons switch between up and down states at a similar rate, and particular neurons switch in a correlated way (Stern et al. 1998).

There is a wide range of techniques that, unlike single unit recording, are non-invasive; for example, fMRI, MEG, and EEG are all widely used. Unfortunately, none directly measures the behaviour of single neurons; instead they measure the behaviour of a large number of neurons. For example, fMRI measures changes in blood flow and oxygenation in a region of the brain, which correspond to elevated neural firing in that region and so reflect the aggregate behaviour of many thousands or millions of neurons. As these techniques are non-invasive, humans can be measured relatively easily. fMRI has been used to show persistent activity in human prefrontal cortex that corresponds with the duration of a working memory (Curtis and D’Esposito 2003). fMRI also indicates persistent firing when people locate the source of a sound (Tark and Curtis 2009). This occurs in the frontal eye field even though the location is behind the subject, so there is no direct sensory stimulus.

Transcranial magnetic stimulation is a non-invasive procedure that raises activity (neural firing) in specific areas of the brain. If it is applied before a stimulus, the stimulus is recognized faster, but if it is applied during processing, processing is interrupted (Silvanto and Muggleton 2008). The effect before the stimulus is consistent with raising all neural activity in the area, which speeds recognition by helping the winning CA ignite faster. The effect during processing is consistent with the increased activity causing competing CAs to ignite and inhibit already ignited CAs.

2.4 Correlated activity and functional connectivity

There is evidence that neurons that react to the same stimulus or cause the same action fire synchronously. Modellers have used the saying “neurons that wire together, fire together”. If two isolated neurons fire at an elevated rate, there is no particular reason for a relation between the firing times, but as neurons that are directly connected affect each other’s firing, their mutual firing timings may be correlated. If a large number of neurons are responding to a particular stimulus, they should have correlated firing. Simulation evidence shows that these synchronously firing sets of neurons can be learned using Hebbian learning (Levy et al. 2001).
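
The expectation that neurons responding to the same stimulus should have correlated firing can be illustrated with simulated spike trains. In this sketch, which assumes binary spike trains in discrete time bins and illustrative firing probabilities, two neurons share a common drive (standing in, hypothetically, for a shared stimulus or common presynaptic partners) while two others fire independently at a similar rate. The Pearson correlation of the binned spike trains is high for the first pair and near zero for the second.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length binned spike trains."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def driven_train(drive, rng, p_follow=0.8, p_own=0.02):
    """Spike when the shared drive is present (prob. p_follow) or spontaneously (prob. p_own)."""
    return [1 if (d and rng.random() < p_follow) or rng.random() < p_own else 0 for d in drive]


def independent_train(n_bins, rng, p=0.06):
    """Spike in each bin with a fixed probability, ignoring everything else."""
    return [1 if rng.random() < p else 0 for _ in range(n_bins)]


rng = random.Random(1)
T = 20000  # number of time bins
drive = [1 if rng.random() < 0.05 else 0 for _ in range(T)]  # hypothetical shared input

pair_shared = (driven_train(drive, rng), driven_train(drive, rng))
pair_indep = (independent_train(T, rng), independent_train(T, rng))

print(f"shared drive: r = {pearson(*pair_shared):.2f}")
print(f"independent:  r = {pearson(*pair_indep):.2f}")
```

Both pairs fire at roughly the same mean rate, so the difference in correlation comes from the shared input rather than from elevated firing as such, which matches the point made above about two isolated neurons.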

Correlated activity is not one of Hebb’s principles. von der Malsburg (1981) introduced correlated activity as an extension of the basic CA theory of the day, proposing it as a solution to the binding problem (see Sect. 5.2.2). Extensive mathematical, simulation, and biological evidence indicates that neurons involved in processing the same percept have correlated activity. This enables synchrony to act as evidence that a particular set of neurons population-codes a concept (e.g. Bressler 1995; Sigala et al. 2008); if the activity is persistent, and the concept has been learned, synchrony is evidence that particular neurons are firing in support of the same cognitive event.

A review article indicates that correlated firing occurs in virtually all brain areas, and can be used to coordinate activity across areas (Bressler 1995). For example, it has been shown that frontal cortical neurons in monkeys fire synchronously in response to a particular object (Abeles et al. 1993). This synchrony is statistically significant, but not precise to the millisecond level.

Another example shows neurons in the monkey prefrontal cortex coding for task order. Neurons had highly correlated elevated firing rates for particular events in a sequence, and different elements had an orthogonal set of neurons (Sigala et al. 2008). Similarly, there is highly correlated activity in neurons in the prefrontal cortex showing associations between sensory modalities (Fuster et al. 2000). Neural firing in the rat anterior cingulate cortex was measured using multiple single unit recordings, and the neurons tracked each aspect of the task by entering collectively distinct states. Neurons were correlated with specific other neurons in a task and state-dependent manner. This indicated that the correlated neurons were members of particular CAs (Lapish et al. 2008), because they population coded for a concept, persisted, and were learned; that is, CAs were involved in higher order cognitive processing.

Externally measured neural firing in the rat hippocampus can be used to determine the location of the rat, and there is synchronous firing of neurons associated with a particular location. However, firing synchrony is statistically more significant than the correlation of single neurons with location, so the best determinant of a neuron firing is not location but the firing of particular other neurons (Harris 2005).

The evidence for synchronous firing is so significant that such synchrony has been called functional connectivity (e.g. Gazzaley et al. 2004). Though the synapses between two neurons cannot easily be traced, the neurons are functionally connected because they fire synchronously. Synchronous firing has been called a signature of assemblies of cells as components of a coherent code (Singer et al. 1997).

Another link between CAs and synchrony is that percepts can be induced by synchronous presentation (Usher and Donnelly 1998). When an ambiguous stimulus is presented, flashing a subset of the stimulus at 16 ms intervals leads the subject to label the stimulus as an element of the category consistent with the subset. The subjects do not notice the flash and report a constant, ambiguous stimulus. Usher and Donnelly (1998) claim that their visual psychophysics experiments demonstrate that a global, cortically based, visual binding and segmentation mechanism is sensitive to stimulus asynchronies as low as 16 ms. They attribute the effect to synchronous neural activation representing spatially separated stimulus elements. Usher and Donnelly admit that further research is “required to test and reveal the nature of the synchrony-binding mechanism”, and they do not discuss CAs. Nevertheless, if their mechanism is CA based, asynchronies of such short duration suggest that a CA architecture that can comfortably handle differences of a few milliseconds is necessary, and the obvious candidate mechanism is the synchronous neural firing of CAs that maintains the genuine asynchrony in the laboratory stimulus.

A third link between synchrony and CAs is shown using voltage-sensitive dyes. These dyes respond to the voltage of cell membranes and thus can show the activity of neurons; they are imaged using sensitive fast cameras, providing a picture of the membrane potential of many neurons in an area a few millimetres square, evolving rapidly (\(<0.1\) ms) (Shoham et al. 1999). Experiments using these dyes showed neurons in early visual areas firing spontaneously and synchronously in the absence of visual stimulus; this firing is correlated with the firing of stimulus-specific responses (Grinvald et al. 2003). Note that synchrony in this case is linked not with persistence, but with population coding.

Synchrony can also support interactions between CAs. Evidence for CAs bound (see Sect. 5.2.2) by temporal synchrony has been reviewed (Freiwald et al. 2001); for example, neurons responding to a single moving bar respond in synchrony as a gamma wave (Gray and Singer 1989). It has been shown that neurons across different brain areas collaborating in a task are synchronized, but are unsynchronized after the task (Roelfsema et al. 1997).

Since binding can induce synchrony, the fact that two neurons, or sets of neurons, are firing synchronously does not necessarily mean they are part of the same CA. It does, however, imply they are in some sense working together. Moreover, the more circumstances in which the neurons fire synchronously, the more likely they are to be part of the same CA.

Brain waves come in different forms (e.g. theta waves) and emerge from populations of neurons firing roughly synchronously. These oscillations are related to a long-standing theory of brain wave propagation (Beurle 1956). A review describes how different frequencies have different cognitive effects (Buzsáki and Draguhn 2004), with slower frequencies used to recruit larger groups of neurons. Theta waves are slower than gamma waves, and it has been proposed that they are used in working memory operations (Auseng et al. 2010). Theta waves also support internal state consistency: rat hippocampal ensembles that represent location switch from the representation of one location to another on the theta wave (Jezek et al. 2011).

One view of CAs is that all of their neurons contribute relatively independently. Another is that waves of firing create a form of short-term memory; this dynamical CA hypothesis (Fujii et al. 1996) fits in with a synchrony theory (von der Malsburg 1981).

The variety of waves provides different mechanisms for supporting individual CAs and for linking CAs. These linking mechanisms are not well understood, but it seems these waves are a measurable property of interactions between CAs.

2.5 Learning data

The third principle of the CA hypothesis is that CAs are learned. Hebb derived this principle from the prevalence of learned responses in decision making. The emphasis on learning fits with the long-standing recognition of its importance to the functioning of a cognitive being (James 1892). Hebb’s famous learning rule was introduced in this context, and substantial physical evidence that this type of learning occurs has subsequently been found.
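Hebb stated his rule verbally. A common textbook formalisation, assumed here rather than taken from Hebb (1949), increases the weight between two units in proportion to the product of their activities, Δw_ij = η·x_i·x_j. The sketch below applies that rule repeatedly to one activity pattern in a small, hypothetical network of binary units, showing that only connections among co-active units are strengthened, which is how a CA could be carved out by learning. The learning rate `rate` is an arbitrary choice.

```python
from typing import List

Matrix = List[List[float]]


def hebbian_update(weights: Matrix, activity: List[int], rate: float = 0.1) -> None:
    """One step of a plain Hebbian rule, applied in place.

    For every pair of distinct units i and j, the weight w[i][j] grows by
    rate * x_i * x_j, so it changes only when both units are active.
    Self-connections are left at zero.
    """
    n = len(activity)
    for i in range(n):
        for j in range(n):
            if i != j:
                weights[i][j] += rate * activity[i] * activity[j]


n_units = 4
weights: Matrix = [[0.0] * n_units for _ in range(n_units)]
pattern = [1, 1, 0, 0]  # units 0 and 1 are repeatedly co-active

for _ in range(5):
    hebbian_update(weights, pattern)

# weights[0][1] and weights[1][0] have grown; every other weight is still 0.0.
```

Without a decay or normalisation term, weights under this rule grow without bound; many CA models add such a term, which is omitted here for brevity.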

Hebb noted the importance of learned behaviour. For example, blinking at a moving object is a learned response, even though it is practically immune to extinction (Riesen 1947). Later learning builds on earlier learning, and early learning tends to be permanent. More recently, neurophysiological evidence of neurons becoming tuned to specific categories has been found (see Keri 2003 for a review).

There is substantial evidence of changes in neural response in adult animals that are correlated with cognitive learning. For example, recordings from two areas of rat motor cortex showed increasing correlation between particular neurons as a task was learned (Laubach et al. 2000). This made it possible to predict whether the animal would succeed or fail on the task from the behaviour of the recorded neurons alone. Another study of adult monkeys showed temporal cortical neurons becoming tuned to category-relevant features while the monkeys learned categories, and humans behaved similarly on the tasks (Sigala et al. 2002). Similarly, gerbil primary auditory cortical neurons become tuned to rising or falling frequency-modulated tones (Ohl et al. 2001).

Another type of evidence comes from simultaneously recorded activity: in rats that have learned particular tasks, learning-induced functional connectivity persists for at least days (Baeg et al. 2007). This connectivity increases rapidly during the early phases of learning and then approaches an asymptote.

There is evidence that the hippocampus plays a special role in the consolidation of memories (Sutherland and McNaughton 2000). In addition, stimulation of hippocampal cells in vitro creates temporally separated CAs (Olufsen et al. 2003). This implies that many CAs must include neurons in several cortical areas, including (at least in the early stages of learning) the hippocampus.

Items learned under high arousal persist longer, but are more difficult to access initially. This is consistent with a CA being formed: the CA formed under high arousal is difficult to access initially because many of its neurons are in a refractory state (Kleinsmith and Kaplan 1963). A CA-based computational simulation accounts for this effect (Kaplan et al. 1991).

While even a brief review of the literature on sleep is beyond the scope of this paper, sleep does play a role in learning. For example, rat hippocampal place cells that are highly active during exploration show higher firing rates during the next sleep cycle (O’Neill et al. 2008). Neurons that co-fire in prefrontal cortex and hippocampus during activity co-fire again in subsequent sleep and rest periods (Nitz and Cowen 2008; Maquet 2001; Sutherland and McNaughton 2000).

There is a great deal of evidence of learning in adults, but less on earlier learning. Behavioural research suggests that the ability to recognize objects improves throughout childhood and adolescence (Nishimura et al. 2009).

Neural evidence from fMRI shows that early visual areas complete their development of retinotopic mapping and contrast sensitivity by age seven, while later areas for place, face and object selectivity take longer to develop (Grill-Spector et al. 2008). One fMRI study shows that the area of cortex devoted to faces and places grows from childhood through adolescence to adulthood, as does the corresponding recognition ability (Golarai et al. 2007). This does not occur for object recognition, implying that 7-year-old children have fully or nearly fully developed object recognition capacity.

Developmental studies face a problem of time: they take longer to run than relatively brief studies of learning a particular object or task. Overcoming this problem, one study of visual object recognition in children up to one year old used event-related potentials measured by EEG. The study examined brain activity when novel and familiar toys and faces were presented. Statistically significant changes occurred throughout the year, though some types of activity plateaued; e.g. the mid-latency negative component (assumed to be an obligatory attentional response) plateaus at 8 months (Webb et al. 2005). This indicates that some early brain changes stabilise.

Up to this point, this section has used a converging-evidence approach, with neural studies showing that some sets of neurons use population coding, some persist, and some are learned. One study shows a set of neurons that does all three (Freedman and Assad 2006). Recordings of macaque middle temporal and intraparietal neurons showed that they responded with population coding to object motion in two particular sets of directions. These neurons fired persistently during a delay. When the task changed so that the monkeys had to respond to new sets of directions, the population of neurons reorganised so that it responded to the new categories. Similarly, primate prefrontal and caudate nucleus neurons population code for expected reward or punishment (Histed et al. 2009). Many fire persistently throughout the delay period, and as the task changes, the neurons’ behaviours change.

2.6 The standard model and variants

The authors consider the category of CAs to be a natural kind, not one defined by necessary and sufficient conditions. Thus, a model can be used to indicate the features that are central to CAs. There is no single agreed standard CA model, but Hebb’s original idea is a good starting point. CAs are typically considered to consist of neurons that maintain persistent activity through strong connections, with all neurons being roughly similar, largely as described by Hebb (1949).

A first modification of the model was the introduction of inhibition (Milner 1957). Hebb did not have solid evidence of inhibitory neurons or synapses, so he left inhibition out of the model. Once inhibition gained biological support, it was added to the model, where it supports competition between neurons or CAs.

Neurons fire constantly, and Hebb’s standard theory accounts for this background activity. Background activity is not usually sufficient to ignite a CA, but once sufficient additional firing has begun, activity in the CA passes a critical threshold, and the CA ignites and can then persist.
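Ignition can be illustrated with a toy assembly. The sketch below assumes binary neurons, all-to-all excitatory connections of equal weight within the CA, synchronous discrete time steps, and no inhibition or fatigue; none of these parameters come from the text. A neuron fires on the next step if the input it receives from other active CA neurons reaches a threshold, so a small amount of background firing dies out, while firing above the critical level spreads to the whole assembly and then persists with no external input.

```python
from typing import Set


def step(active: Set[int], n: int, weight: int, threshold: int) -> Set[int]:
    """One synchronous update of an n-neuron assembly with no external input.

    Each neuron receives `weight` from every other currently active neuron
    and fires on the next step if that total reaches `threshold`.
    """
    return {i for i in range(n) if weight * len(active - {i}) >= threshold}


def run(initial: Set[int], n: int = 10, weight: int = 1,
        threshold: int = 3, steps: int = 20) -> Set[int]:
    """Start from `initial` (e.g. background or stimulus-driven firing), then
    let the assembly evolve on its own for `steps` updates."""
    active = set(initial)
    for _ in range(steps):
        active = step(active, n, weight, threshold)
    return active


background = run({0, 1})    # too little firing: activity dies out
ignited = run({0, 1, 2})    # crosses the critical level: the whole CA fires
```

With these hypothetical values, two initially active neurons give every neuron at most two units of input, below the threshold of three, so activity stops; three initially active neurons recruit the other seven, and from then on all ten keep firing. Because the sketch has no fatigue, it cannot show a CA shutting off; fatigue or inhibition would have to be added for that.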

The authors also consider synchronous firing to be part of the standard theory. Although it was not part of Hebb’s original theory, there is broad evidence for synchronous firing in CAs, and its occurrence is widely accepted in the scientific community. However, there is no wide agreement on the degree to which synchronous firing is used to dynamically bind CAs.

A neuron that never co-fires with the neurons in a CA is not part of that CA. Similarly, a neuron that is always out of synchrony with the CA is not part of it.

In addition to the widely agreed standard model, there are several extensions. There is biological evidence for three other mechanisms for sustaining persistent activity: synfire chains, bistable neurons (Durstewitz et al. 2000), and short-term potentiation (STP). In synfire chains, one set of neurons activates another, the second set activates a third, and so on, until the initial set is reactivated. The neurons within each set fire synchronously, leading to a chain of synchronously firing neurons (Ikegaya et al. 2004). This differs from the standard model because the CA is broken into independently firing subsets.
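The synfire chain described above can be sketched as a ring of neuron groups, each projecting to every neuron in the next group. The group sizes, the firing threshold, and the all-to-all projection between consecutive groups are assumptions for illustration. A neuron fires when it receives enough coincident input from the group that fired on the previous step, so a full synchronous volley propagates around the ring and returns to its starting group, whereas a partial volley fails to propagate.

```python
from typing import Dict, List, Set


def build_chain(num_groups: int, group_size: int) -> Dict[int, List[int]]:
    """Map each neuron to the neurons it excites.

    Neurons are numbered consecutively by group; every neuron in group g
    projects to every neuron in group (g + 1) % num_groups, closing the loop.
    """
    targets: Dict[int, List[int]] = {}
    for g in range(num_groups):
        nxt = (g + 1) % num_groups
        next_members = list(range(nxt * group_size, (nxt + 1) * group_size))
        for neuron in range(g * group_size, (g + 1) * group_size):
            targets[neuron] = next_members
    return targets


def simulate(targets: Dict[int, List[int]], initial: Set[int],
             threshold: int, steps: int) -> List[Set[int]]:
    """Return the set of neurons firing at each step, starting with `initial`.

    A neuron fires on the next step only if at least `threshold` of its
    inputs fired together on the current step.
    """
    active = set(initial)
    history = [active]
    for _ in range(steps):
        counts: Dict[int, int] = {}
        for pre in active:
            for post in targets[pre]:
                counts[post] = counts.get(post, 0) + 1
        active = {post for post, c in counts.items() if c >= threshold}
        history.append(active)
    return history


chain = build_chain(num_groups=3, group_size=4)
full = simulate(chain, initial={0, 1, 2, 3}, threshold=3, steps=3)
partial = simulate(chain, initial={0, 1}, threshold=3, steps=3)
```

In `full`, the groups {0..3}, {4..7} and {8..11} fire in turn and the first group fires again on step 3; in `partial`, two synchronous spikes are too few to drive the next group, so activity stops after the first step.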

Bistable neurons are another, less traditional, form of persistence. As mentioned in Sect. 2.3, some neurons move between states of rapid firing (up states) and states of no firing (Stern et al. 1998; Steriade et al. 1993). In another study, unstimulated neurons moved to up states spontaneously and synchronously (Cossart et al. 2003); this behaviour is consistent with attractor basins (see footnote 2) that can be used for STM. This bistability differs from the standard model because neurons move into states where they fire repeatedly without input, whereas in the standard model neurons require input to fire. Rested bistable neurons will shift to an up state with sufficient input.
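The difference between a bistable neuron and a standard-model neuron can be shown with a two-threshold (hysteresis) rule. The sketch below is a deliberately abstract assumption, not a biophysical model of up and down states: a down-state unit switches up when its input reaches an upper threshold, and an up-state unit stays up until its input falls to a lower, negative threshold (standing in for inhibition). The standard-model unit, by contrast, fires only while its input is above threshold.

```python
from typing import List


def bistable_trace(inputs: List[float], up_threshold: float = 1.0,
                   down_threshold: float = -1.0) -> List[bool]:
    """Up (True) / down (False) state of a bistable unit after each input.

    Down -> up when input >= up_threshold; up -> down when input <= down_threshold.
    Between the two thresholds the unit keeps its current state.
    """
    up = False
    states = []
    for x in inputs:
        if not up and x >= up_threshold:
            up = True
        elif up and x <= down_threshold:
            up = False
        states.append(up)
    return states


def standard_trace(inputs: List[float], threshold: float = 1.0) -> List[bool]:
    """A standard-model unit: fires only on steps where input reaches threshold."""
    return [x >= threshold for x in inputs]


pulse = [0.0, 1.5, 0.0, 0.0, -2.0, 0.0]  # brief excitation, later inhibition
bistable = bistable_trace(pulse)  # [False, True, True, True, False, False]
standard = standard_trace(pulse)  # [False, True, False, False, False, False]
```

The bistable unit keeps firing after the brief pulse ends, with no further input, until inhibition pushes it down; the standard unit stops as soon as the input is removed.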

A third alternative to CAs as STM items is storage via STP, a rapid synaptic modification that decays (Mongillo et al. 2008; Fusi 2008). The memory persists as long as the synaptic changes are large enough to support subsequent reactivation.
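The persistence condition for STP-based memory can be written down under a simple assumption: that the learned increase in synaptic efficacy, Δu, decays exponentially with time constant τ, so that Δu(t) = Δu₀·e^(−t/τ), and that the item can be reactivated only while Δu(t) stays at or above some minimum Δu_min. The item then survives for t* = τ·ln(Δu₀/Δu_min). The exponential form and all parameter values below are illustrative assumptions; the cited models (e.g. Mongillo et al. 2008) use richer synaptic dynamics.

```python
import math


def efficacy_boost(t: float, boost0: float, tau: float) -> float:
    """Remaining synaptic boost t seconds after it was induced (exponential decay)."""
    return boost0 * math.exp(-t / tau)


def memory_lifetime(boost0: float, min_boost: float, tau: float) -> float:
    """Time until the boost falls to the minimum needed for reactivation."""
    if boost0 <= min_boost:
        return 0.0
    return tau * math.log(boost0 / min_boost)


def retrievable(t: float, boost0: float, min_boost: float, tau: float) -> bool:
    """True while the decaying boost can still support reactivation."""
    return efficacy_boost(t, boost0, tau) >= min_boost


# Hypothetical values: a boost of 0.8 that must stay above 0.2, decaying with tau = 1.5 s.
lifetime = memory_lifetime(boost0=0.8, min_boost=0.2, tau=1.5)  # 1.5 * ln 4, about 2.08 s
```

Under the same assumption, reactivating the item before t* and resetting the boost to Δu₀ would extend its lifetime.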

To a large extent, synfire chains, bistable neurons, and STP fall within a loose definition of CAs. All use a small set of neurons for a concept, support persistent activity, and can be learned. Of course, each adds a different flavour to CAs. A CA based on synfire chains would have more cyclic, wave-like behaviour; bistable neurons require less input to maintain persistence; and STP allows CAs to form more rapidly than in the standard model.

There is an alternative to CAs that has some support in the academic community. This model uses a holographic reduced representation so the same active concept can be represented by two entirely separate sets of neurons. A set of neurons represents a concept by its firing pattern (Plate 1995). This is consistent with population coding, but the firing can pass to another set of neurons, and may never return to the original set. This passing of firing is not entirely consistent with self-sustaining persistence; however, it is related to synfire chains.

Proponents of this mechanism (e.g. Eliasmith and Thagard 2001) show that representations of different concepts can be combined, thus solving the binding problem (see Sect. 5.2.2). Simulated neurons can be used to represent concepts, and the concepts can be bound using these mechanisms. However, the authors are unaware of any biological evidence supporting this type of binding.
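In Plate’s holographic reduced representations, two concept vectors are bound by circular convolution, and a bound component is approximately recovered by circular correlation followed by a clean-up step that picks the nearest known vector. The sketch below illustrates this binding on random vectors; the vector length, the role and filler names, and the nearest-neighbour clean-up over a small list are assumptions for illustration. It uses direct O(n²) loops rather than the FFT for clarity.

```python
import math
import random
from typing import Dict, List

Vector = List[float]


def random_vector(n: int, rng: random.Random) -> Vector:
    """Random concept vector with elements drawn from N(0, 1/n)."""
    sd = 1.0 / math.sqrt(n)
    return [rng.gauss(0.0, sd) for _ in range(n)]


def bind(a: Vector, b: Vector) -> Vector:
    """Circular convolution: c[j] = sum_k a[k] * b[(j - k) mod n]."""
    n = len(a)
    return [sum(a[k] * b[(j - k) % n] for k in range(n)) for j in range(n)]


def unbind(c: Vector, a: Vector) -> Vector:
    """Circular correlation: y[j] = sum_k a[k] * c[(j + k) mod n], approximately
    undoing bind(a, b) to give a noisy version of b."""
    n = len(a)
    return [sum(a[k] * c[(j + k) % n] for k in range(n)) for j in range(n)]


def add(a: Vector, b: Vector) -> Vector:
    """Superpose two vectors element by element."""
    return [x + y for x, y in zip(a, b)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def clean_up(noisy: Vector, memory: Dict[str, Vector]) -> str:
    """Return the name of the stored vector most similar to `noisy`."""
    return max(memory, key=lambda name: cosine(noisy, memory[name]))


rng = random.Random(0)
n = 512
memory = {name: random_vector(n, rng) for name in ["agent", "patient", "dog", "cat"]}

# "The dog chases the cat": bind each role to its filler and superpose the pairs.
sentence = add(bind(memory["agent"], memory["dog"]),
               bind(memory["patient"], memory["cat"]))

who_acted = clean_up(unbind(sentence, memory["agent"]), memory)  # expected "dog"
```

Note that the sketch starts from arbitrary random vectors; it does not show how such vectors would be learned or grounded.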

A further problem with holographic representations is symbol grounding (Harnad 1990); how does the neural firing pattern come to represent the particular concept? With CAs, the neurons have been grounded by interaction with the environment (see Sect. 6). With holographic representations, different sets of neurons need to represent the same concept, and it is not clear how all of these sets might come to represent the same concept. Moreover, if one concept is, for example, associated with a second concept, all of the different sets of neurons in the holographic representation will need to be associated with all the sets of neurons that represent the second concept. In short, learning is the main difference between CAs and holographic representations. It is not clear how holographic representations could be learned.

Holographic representation and associated binding may be compatible with individual neural behaviour. However, the authors feel that it is at best a complement to the CA model rather than a replacement; it might, for example, be used as a mechanism to create firing patterns of bound CAs in language processing.

2.7 Cell assemblies are all but certain

The evidence referenced in this section strongly suggests that CAs exist in the brain. The prevalence of population coding throughout the brain has been verified; voltage-sensitive dye techniques show this population coding, as do single cell recordings. Evidence of persistence from single cell recordings includes both persistence of regular spiking and persistence of bursty up states; other imaging techniques show persistent firing in areas, and transcranial magnetic stimulation can disrupt cognitive behaviour by dynamically modifying neural behaviour in specific small brain regions. Functional connectivity indicates that CAs exist by showing that particular neurons behave together. Finally, though learning data are more difficult to analyse, they show that the firing of neurons in response to particular cognitive states becomes more correlated over time.

The evidence for CAs is compelling, and many readers will find the support for CAs unsurprising. In many fields, it is already assumed. For example, persistent neural activity is currently being used to indicate working memory (see Sect. 4).

Nonetheless, the scientific community will be unable to fully confirm the existence of CAs until all the neurons in a functioning brain can be monitored continuously. Even then it would take years to understand developmental behaviour. Consequently, it remains possible to argue that CAs do not exist.

Even though there is substantial evidence for the existence of CAs, the extent and behaviour of CAs are still not well understood. The next section shows the broad extent of CAs and some of their functions.

3 Cell assembly details

The existence of CAs does not show that CAs are the basis of all concepts. Of course, the term concept itself is ill defined, roughly meaning a mental unit. Typical concepts include things represented by concrete noun symbols such as dog, but the authors would expect animals that do not use symbols but are familiar with dogs (e.g. a macaque or a pre-verbal infant) to have a category for dog. Similarly, abstract nouns (like hope) and verbs (like run) also label categories. Instances of categories (e.g. Dr Hebb) and episodes (e.g. today’s lunch) are also concepts. Procedural tasks (e.g. hitting a tennis ball) might not be concepts, though once labelled, they probably do become concepts, with the explicit concept associated with the procedure.

Many questions about CAs remain unanswered, and several are addressed here. The location of CAs in the brain is addressed in Sect. 3.1. The size and shape of a CA are discussed in Sect. 3.2. CAs have three primary and complementary functions: they categorise input, they are LTMs, and they are STMs. CAs are also involved in a range of other processes, some of which are discussed in Sects. 3.3 and 4.

3.1 Location of CAs

Population coding is common throughout the brain, but persistence is not. That is, neurons may stop firing once the stimulus ceases. However, in higher order areas (most of the cortex), persistence allows cognitive states to persist. Once information is in the brain, it can remain active via CA reverberation.

The cortex can be divided into areas, and one commonly used division is the Brodmann areas (Figs. 2, 3); evidence is provided below regarding the presence of CAs in different cortical areas. In almost all cortical areas, there are neurons that population code for specific features, and that persist without external stimulus, implying that there are CAs in all cortical areas except perhaps primary visual cortex. It is less clear whether there are CAs in subcortical areas, but there is evidence for CAs in some.

3.1.1 Frontal and temporal lobes

Much of the frontal lobe is topologically distant from the sensory and motor interfaces: a chain of several intermediate layers of neurons must fire before the environment can cause frontal neurons to fire. Among a range of other functions, the frontal lobe is involved in working memory tasks (see Sect. 4). There is substantial evidence for CAs in all frontal areas. For example, the prefrontal cortex generates persistent activity that outlasts the stimulus (Wang et al. 2006); single cell recordings of ferret medial prefrontal cortical neurons show persistent activity. Comparisons with visual cortex indicate that the cellular features, synaptic properties, and connectivity of these prefrontal neurons favour persistent activity.

There are CAs in primary motor cortex (Brodmann area 4, BA4). Electrode recordings of owl monkeys’ primary motor cortical neurons (BA4) and dorsal premotor cortical neurons (BA6) were used to guide a robotic arm (Wessberg et al. 2000); the activity of these neurons persisted throughout the action. Recordings of neurons in motor areas of rats learning a reaction time task show elevated activity during the task, with most of the recorded neurons active (Laubach et al. 2000); analysis of their firing can predict success or failure on particular instances of the task, and the behaviour is learned. A human who had been unable to move or sense his limbs for several years had electrodes implanted into his motor cortex. The monitored activity of the neural ensembles enabled him to control computer devices and, through them, external devices (Hochberg et al. 2006). Individual monitored neurons behaved quite differently at different times, implying that they participate in multiple CAs. Rhesus monkey motor cortical neurons are broadly tuned to the direction of arm movement, with many neurons participating in a given action; when the action is delayed, the same neurons remain active (Georgopoulos et al. 1986). This shows that there is sound evidence for CAs in BA4, which is close to the motor interface, and that these CAs need to persist to complete the appropriate action.
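The broad directional tuning reported by Georgopoulos et al. (1986) is commonly modelled with cosine tuning and read out with a population vector: each neuron votes for its preferred direction, weighted by how far its firing rate departs from baseline, and the direction of the vector sum estimates the movement direction. The sketch below assumes noise-free cosine tuning, evenly spaced preferred directions, and hypothetical baseline and modulation values; it shows how a direction can be recovered from many broadly tuned neurons, none of which identifies it alone.

```python
import math
from typing import List


def cosine_rate(direction: float, preferred: float,
                baseline: float = 20.0, depth: float = 15.0) -> float:
    """Firing rate (spikes/s) of a cosine-tuned neuron; values are hypothetical."""
    return baseline + depth * math.cos(direction - preferred)


def population_vector(rates: List[float], preferred: List[float],
                      baseline: float = 20.0) -> float:
    """Decode a direction (radians) as the angle of the rate-weighted vector sum."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x)


n_neurons = 16
preferred = [2 * math.pi * i / n_neurons for i in range(n_neurons)]
movement = math.radians(70)
rates = [cosine_rate(movement, p) for p in preferred]
decoded_deg = math.degrees(population_vector(rates, preferred))  # about 70 degrees
```

With noisy rates or unevenly spaced preferred directions the estimate is only approximate; the exact recovery here follows from the idealised assumptions.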

A learned response of monkey frontal eye field (BA8) neurons was associated with the visual features of a gaze target (Bichot and Schall 1999). These neurons fired persistently while the monkey searched for the associated target. Monkey prefrontal neurons (BA9) showed task-specific firing during the execution and delay portions of tasks (Asaad et al. 2000). Rhesus monkey prefrontal cortical neurons stored action sequences in CAs (Averbeck et al. 2006b). Prefrontal cortical neurons (BA9 and BA10) also fire during the delay portion of a task, with specific neurons correlated with specific visual cues (Quintana and Fuster 1999). PET scans showed persistent elevated activity in premotor cortex (BA6) and frontal cortex (BA10) when subjects had learned to associate a visual stimulus with an auditory stimulus (McIntosh et al. 1998).

Rat orbitofrontal neurons (BA11 and BA12), measured by single unit recordings, exhibit persistent firing specific to reward or punishment (Gutierrez et al. 2006). Moreover, they fire in anticipation of the reward or punishment. The macaque ventrolateral prefrontal cortex corresponds to the inferior frontal gyrus in humans; neurons in this macaque area population code for, and respond persistently to, semantically specific macaque calls (Romanski et al. 2004). Macaque rostral inferior neurons (a homologue of human BA44) fired in a delay period before the macaque grasped specific objects, with neurons population coding specific actions (Gallese et al. 1996). Dorsolateral prefrontal cortical neurons (including BA46) and ventrolateral prefrontal cortical neurons (including BA45 and BA47) of monkeys responded persistently in a cue task, with population coding for abstract rules (Wallis et al. 2001).

Like the frontal lobe, the temporal lobe is topologically distant from the sensory and motor interfaces. Though many of its areas contain CAs that persist, CAs in this lobe are, in general, driven more by environmental stimuli than those in the frontal lobe. Nonetheless, persistence after external stimulus has ceased is evident throughout.

There is strong evidence from single neuron recordings that inferior temporal cortex (BA20) contains CAs. One study of this area showed that neurons population code for specific images and specific features (Desimone et al. 1984). Face-specific neurons were found in the fusiform gyrus (BA37); these neurons responded persistently, although the image remained present. In macaques performing a shape matching task, inferior temporal neurons population code for specific shapes, and their spiking persists (Gochin et al. 1994). Similarly, shape-specific neurons fire during the delay in a paired-association task (Naya et al. 1996).

Single cell recordings of middle temporal neurons (BA21) show that they collectively encode direction of motion of visual stimuli. Moreover, during a delay task, neurons have elevated activity showing persistence (Bisley et al. 2004). This area is also called V5 (a vision area) and it responds to visual stimuli, such as direction of motion, with population coding (Maunsell and Van Essen 1983). In macaques, these neurons are broadly tuned to moving visual stimulus, and fire persistently and synchronously with neurons responding to different stimuli firing asynchronously (Kreiter and Singer 1996). More recently, persistence beyond environmental stimulus has been noticed (Ranganath et al. 2005).

Responses from human neurons of the superior temporal gyrus (BA22) and nearby areas were explored before surgery for epilepsy. These neurons showed persistent activity for up to 2 s in response to auditory clicks (Howard et al. 2000). This is a relatively rare example of a single unit study of the human brain, and is strong evidence for human CAs.

Rat piriform cortical neurons (BA27), measured by single unit recording, fired persistently with population coding in response to particular odours (Rennaker et al. 2007). In the piriform cortex (BA27), Zelano et al. (2009), using fMRI, found sustained activity associated with olfactory working memory. Similarly, single unit recordings show that entorhinal (BA28 and BA34) and hippocampal neurons of behaving rats fire persistently and synchronously (Chrobak and Buzsáki 1998). Further studies showed that groups of neurons in this area fired with graded responses (Egorov et al. 2002).

Neurons from the parahippocampal gyrus (BA35 and BA36), hippocampal formation, rhinal cortex and inferior temporal cortex (BA20) of monkeys performing a delay task showed population coding and persistent firing during the delay period (Riches et al. 1991). Hippocampal place cells (BA35) can be used to predict the location a rat will move to in the next theta cycle (Dragoi and Buzsáki 2006). Macaque temporal pole neurons (BA38) show population coding for a complex stimulus, including presentation of opposed halves of that stimulus, persisting for seconds after the stimulus (Nakamura et al. 1994).

Marmoset auditory cortical neurons (BA41 and BA42) population code for tones, and fire persistently (Bendor and Wang 2008). Postsubicular rat neurons (BA48) population code for head direction. Moreover, persistent firing has been induced by direct current injection (Yoshida and Hasselmo 2009).

3.1.2 Occipital and parietal lobes

The occipital and parietal lobes contain primary and higher sensory areas. While some researchers feel that even neurons in primary sensory cortices are parts of CAs (Harris 2005), there is evidence of persistence in higher sensory areas, but less in primary sensory areas.

The occipital lobe consists of areas for early visual processing. There is evidence for population coding in primary (BA17) (e.g. Hubel and Wiesel 1962), secondary (BA18) (e.g. Poggio and Fischer 1977) and association (BA19) visual cortices (Fischer et al. 1981), though there has been little evidence of persistence from single unit recording. However, fMRI shows increased activation in BA17 when subjects recall visual stimuli (LeBihan et al. 1993), and functional connections in visual cortices (BA18 and BA19) during delay tasks (Gazzaley et al. 2004). Similarly, neurons in the primary visual cortex of blind subjects are activated during Braille reading (Sadato et al. 1996). Such studies suggest that higher areas provide input to the lower visual cortical areas, and that this input is able to fire neurons in these areas.

In the parietal lobe, there is evidence for persistence in all areas. Somatosensory neurons (BA5 and BA7) of rhesus monkeys fired persistently in expectation of executing a task (Quintana and Fuster 1999). Owl monkey posterior parietal neurons (BA7) were used to guide a robotic arm (Wessberg et al. 2000); these neurons persisted throughout the action. Parietal neurons (BA7) of rhesus monkeys fired while they considered and performed viewer and object centred tasks (Crowe et al. 2008); in all cases, state was population coded, and these neurons fired persistently. Single unit recordings of neurons from the supramarginal gyrus (BA40) of macaques show population coding and persistence associated with visual saccades (Nakamura et al. 1999).

fMRI studies show elevated activity in the angular gyrus (BA39) in response to linguistic tasks (Booth et al. 2004). Single unit recordings showed elevated firing rates in the parietal reach region during reach planning, population coded for direction (Scherberger et al. 2005). Similarly, fMRI showed elevated activity in the parietal operculum (BA43) in visual tasks modulated by touch (Macaluso et al. 2000).

Suites of rat primary somatosensory cortical neurons (BA1-3) respond to whisker stimulus with latencies increasing in deeper cortical areas (Moore and Nelson 1998). While these neurons did not fire persistently after the stimulus, anterior parietal cortical neurons (BA1-3) of rhesus monkeys that showed elevated activity during tactile tasks showed elevated activity during delay tasks (Zhou and Fuster 1996). These single cell recordings show a form of short-term haptic memory in early sensory processing.

The emerging view is that sensory cortex involved in relatively early processing is also used for short-term storage (Pasternak and Greenlee 2005). Thus CAs involve neurons in early sensory areas in parietal and occipital lobes.

3.1.3 Limbic and insular lobes

All Brodmann areas in the limbic lobe exhibit population coding and persistent neural firing. Suites of macaque anterior cingulate cortical neurons (BA24) responded persistently and correlated with cognitive phenomena, such as search, order, and reward anticipation in a sequence learning task (Procyk et al. 2000). Anterior cingulate cortical neurons (BA33) population coded for stimuli during the delay period of a classical conditioning experiment (Kuo et al. 2009).

Rat anterior cingulate cortical neurons fire persistently during a delay task (Lapish et al. 2008). Monkey cingulate motor neurons (BA23 and BA6) exhibit persistent firing during the delay period of a delay task (Crutcher et al. 2004). Rabbit posterior cingulate cortical neurons (BA29) fired persistently during a delay period in a conditioned stimulus task (Talk et al. 2004). Rat retrosplenial cells (BA26, BA29 and BA30) fired in relation to one or more of the variables location, direction, running speed, or movement. Some fired in anticipation, implying ignition before external stimulus (Cho and Sharp 2001).

Macaque posterior cingulate cortical neurons (BA31) population code for direction of eye saccade, and firing persists beyond the movement (McCoy and Platt 2005). Macaque pregenual cingulate cortical neurons (BA32) fired persistently with population coding for specific tastes (Rolls 2008).

Using fMRI, Siegel et al. (2006) noted varying levels of sustained reactivity to emotional stimuli in the subgenual cingulate cortex (BA25) and amygdala. Single cell recordings showed elevated firing rates in many neurons in BA25 when a macaque went to sleep (Rolls et al. 2003). Other neurons had elevated firing for novel visual stimulus, which decreased as the stimulus was repeated. As these elevated rates were less than 5 spikes per second, this is merely suggestive evidence for CAs in this area.

The insular lobe is small, with only three Brodmann areas. Population coding is shown in all three, but persistence is less evident. Rat gustatory cortical neurons (BA13 and BA14) form distinct, population-coded CAs that respond to particular stimuli by undergoing coupled changes in firing rates over several hundred milliseconds (Katz et al. 2002). Parainsular auditory cortical neurons (BA52) of squirrel monkeys responded to specific tones, but there was insufficient evidence to determine whether they persist for a significant time after the stimulus (Bieser and Muller-Preuss 1996).

This paper’s use of Brodmann areas to divide the cortex is intended to show that CAs are prevalent throughout the cortex, except perhaps BA17. Clearly, Brodmann areas can be subdivided, and other systems for partitioning the brain exist. Moreover, in most cases, homologues of other animals have been used to infer neural firing in the human brain. In some cases, e.g. premotor areas, these areas are quite similar, but in language areas for example, the function has changed. However, these invasive studies can be aligned with imaging studies, such as fMRI, and other techniques.

Most of the evidence of CAs in cortical areas presented here is based on population coding and persistence; the evidence does not show learning. Methodologically, it is more difficult to detect learning in particular neurons than to record spikes: single unit recording devices need to be placed before the learning is done, and the learning then has to affect the neurons being measured. There is evidence of learning (see Sect. 2.5), but this paper does not provide references to learning in each Brodmann area, assuming instead that the behaviour has been learned at some time.

The existence of CAs in all or almost all higher cortical areas aligns well with Hebb’s idea of CAs as the basis of concepts. When a concept persists in the brain after the stimulus ceases, an active CA supports this STM. It is possible that some concepts are represented by a neural mechanism other than CAs; however, the authors are unaware of any sound evidence for such a mechanism-concept pair.

3.1.4 Subcortical areas

While it is unlikely that CAs exist in every area of the brain and the nervous system, there is evidence of CAs in some subcortical areas. However, as a rough guide, CAs have less prevalence as areas get further from the cortex.

There is evidence for CAs in noncortical forebrain areas. Spiny neurons in the striatum exhibit loosely synchronised up and down state dynamics that indicate they are parts of CAs that include cortical neurons (Stern et al. 1998). Neurons in the nucleus accumbens exhibited persistent states (O’Donnell and Grace 1995). Neurons in the rat amygdala fired persistently during fear (Repa et al. 2001); moreover, this response was learned over a series of several trials.

There is population coding in the thalamus. Neurons in the cat lateral geniculate nucleus respond reliably to a particular stimulus (Reinagel and Reid 2000). Similarly, rat posterior medial neurons fired robustly in response to whisker stimulation with neurons responding to multiple whiskers and multiple neurons responding to particular whiskers (Nicolelis et al. 1993). While head direction neurons in the thalamus fire persistently (Stackman and Taube 1998), there is little evidence of persistent firing in other parts of the thalamus.

The cerebellum is involved in eye blink conditioning. Classical conditioning experiments show that cerebellar neurons learn to respond to conditioned stimuli, and persist during a delay period while conditioning (Christian and Thompson 2003). There is some evidence of CAs in the hindbrain. In this case, reverberatory activity is maintained without sensory or other external stimulus. However, reverberation can also be maintained via sensory stimulus, as is usually the case in primary visual cortex (BA17) and with thalamic head direction neurons. It is also possible that reverberatory activity is maintained by non-sensory input to the nervous system, as may be the case in some areas involved in emotion processing.

While this section has shown evidence that CAs are in many areas of the brain, it has not shown the extent of CA involvement in those areas. It is not clear that all neurons in, for example, the forebrain are part of CAs.

3.2 Topology of a cell assembly

Some aspects of the topology of a CA have extensive empirical evidence. CAs overlap, with particular neurons participating in multiple CAs (e.g. Georgopoulos et al. 1986). CAs also cross brain areas, so that some CAs are made of neurons in multiple brain areas (e.g. Pulvermuller 1999).

The theoretical structure of CAs has been discussed at length (e.g. Wickelgren 1999). Central to this is overlapping coding: the idea that some, perhaps most, neurons participate in multiple CAs. A new concept composed of pieces of old concepts will contain some new neurons, but it will also contain some of the neurons from the base concepts.

Overlapping encoding relates to population coding (see Sect. 2.2), in which a concept is encoded by a large set of neurons, though not by a majority of them. There is extensive evidence for overlapping encoding (e.g. Tudusciuc and Nieder 2007; Georgopoulos et al. 1986). The degree of overlap varies from CA to CA. For example, there is evidence that task-specific CAs are largely orthogonal in frontal areas (Sigala et al. 2008), while CAs in motor areas overlap extensively (Georgopoulos et al. 1986). Overlap allows more CAs to be encoded, and it also supports the sharing of information between CAs: if two CAs share many neurons, they are probably closely related. An interesting mathematical model includes overlap in CAs for optimal decisions (Bogacz 2007), while related information-theoretic work suggests that less overlap is better in sensory systems (Field 1994). Note that the earlier use of atomic assemblies does not imply that a CA is orthogonal to other CAs, merely that it is not composed of other CAs.
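A back-of-the-envelope calculation illustrates why overlap allows more CAs. The sketch below assumes, purely for illustration, a pool of 1000 neurons and CAs of 100 neurons each (hypothetical figures, not estimates from the literature). Strictly orthogonal CAs can number at most `n // k`, whereas overlapping CAs may in principle be any of the `comb(n, k)` subsets. The helper `overlap_fraction` (a hypothetical name) measures overlap between two CAs represented as sets of neuron indices, using the Jaccard index as one possible measure.

```python
from math import comb


def max_orthogonal_cas(n_neurons: int, ca_size: int) -> int:
    """Upper bound on how many non-overlapping CAs of `ca_size` fit in the pool."""
    return n_neurons // ca_size


def possible_overlapping_cas(n_neurons: int, ca_size: int) -> int:
    """Number of distinct neuron subsets of `ca_size` when overlap is allowed."""
    return comb(n_neurons, ca_size)


def overlap_fraction(ca_a: set[int], ca_b: set[int]) -> float:
    """Shared neurons as a fraction of all neurons in either CA (Jaccard index)."""
    union = ca_a | ca_b
    return len(ca_a & ca_b) / len(union) if union else 0.0


if __name__ == "__main__":
    n, k = 1000, 100  # hypothetical pool size and CA size
    print(max_orthogonal_cas(n, k))                  # 10
    print(possible_overlapping_cas(n, k) > 10**100)  # True
    dog = set(range(0, 100))    # hypothetical CA: neurons 0..99
    wolf = set(range(60, 160))  # hypothetical related CA sharing neurons 60..99
    print(overlap_fraction(dog, wolf))               # 0.25
```

The raw combinatorial count greatly overstates what a real network can store, because crosstalk between heavily overlapping CAs limits reliable recall; the calculation only shows the direction of the effect.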

A range of imaging and non-imaging data suggests that CAs for words are distributed in brain areas that are semantically specific (Pulvermuller 1999). For instance, the CAs for nouns with strong visual associations include neurons in visual cortices. Similarly, concrete nouns can be predicted via fMRI from the brain areas active when the subject thinks of them (Just et al. 2010). Synchronous activity of neurons in behaving rats (Nicolelis et al. 1995) indicates that neural ensembles across several brain areas collaborate to integrate sensory information and determine action. However, it is not entirely clear when CAs cross brain areas. Moreover, the nature of CA processing and formation across laminar levels and brain areas is poorly understood (but see Douglas and Martin 2004 for a proposal).

Orthogonality and CAs spanning multiple brain areas raise the question of how many neurons are in a CA. There is less evidence on this point, but single unit recordings show hundreds of neurons firing at elevated levels for a remembered concept (Histed et al. 2009). This is close to the lower end of the range suggested by the authors when introducing principle 1, \(10^3\) neurons per CA. The answer also raises a further question: are there different types of CAs? As proposed in Sect. 1.1, smaller CAs are more likely to be atomic; that is, they cannot be decomposed into other CAs. Larger CAs are likely to be composed of other CAs, and can support composite structures such as cognitive maps.

It also seems likely that some neurons are more central to a particular CA than others. These central neurons may fire more frequently than less central ones, and may have closer links to other neurons in the CA, so membership of a neuron in a CA can be thought of as graded instead of binary.
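Graded membership can be made concrete with a simple score. The sketch below is a hypothetical measure, not one proposed in the literature cited here: it captures only the connectivity half of the idea (how strongly a neuron links to the other members) and ignores firing frequency. The function name `graded_membership` and the weight values are illustrative assumptions.

```python
def graded_membership(weights, members):
    """Hypothetical graded-membership score for each neuron in `members`.

    A neuron's raw score is the mean synaptic weight it sends to the other
    members; scores are then scaled so the most central neuron gets 1.0.
    weights[i][j] is the assumed weight of the synapse from neuron i to j.
    """
    raw = {}
    for i in members:
        others = [j for j in members if j != i]
        raw[i] = sum(weights[i][j] for j in others) / len(others) if others else 0.0
    top = max(raw.values(), default=0.0)
    return {i: (r / top if top > 0 else 0.0) for i, r in raw.items()}


# Hypothetical 4-neuron CA: neuron 0 is strongly linked, neuron 3 only weakly.
W = [
    [0.0, 0.9, 0.9, 0.3],
    [0.9, 0.0, 0.7, 0.2],
    [0.9, 0.7, 0.0, 0.0],
    [0.3, 0.2, 0.0, 0.0],
]
scores = graded_membership(W, [0, 1, 2, 3])
print(scores[0])                                      # 1.0
print(scores[3] < scores[2] < scores[1] < scores[0])  # True
```

A fuller measure might combine this connectivity score with each neuron's relative firing rate during ignition.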

Other topological issues, such as neuron type, involvement across cortical layers, and precise neural dynamics, are poorly understood. However, topological parameters are likely to vary between CAs, and within a CA during its lifetime.

3.3 Function of cell assemblies

From a cognitive perspective, CAs fulfil a wide range of roles. As Hebb stated, CAs are both LTMs and STMs. Persistent firing beyond sensory stimulus is an STM. The formation, via Hebbian learning, of a set of neurons that can fire persistently is an LTM. CAs vary in the degree to which they are stimulated by the environment or by other parts of the brain. As commonly understood, CAs are ignited, in appropriate circumstances, by sensory stimulus, and categorise that stimulus. These prototypical CAs can also serve as STMs that are ignited by internal processes such as working memory. In current thinking, STM, LTM and categorisation are the primary functions of CAs.
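The split between LTM and STM can be illustrated with a toy network of binary threshold neurons. This is a minimal sketch under stated assumptions, not a model from the literature reviewed here: the network size, threshold, learning rate and the capped Hebbian increment are all hypothetical. The learned weights play the role of the LTM; the self-sustaining firing that continues after the cue is removed plays the role of the STM. Fatigue and inhibition are omitted, so once ignited the activity never stops.

```python
N = 20                 # hypothetical number of neurons
CA = set(range(8))     # hypothetical concept: neurons 0..7
THRESHOLD = 3.0        # assumed firing threshold
RATE = 0.25            # assumed Hebbian learning rate


def hebbian_learn(weights, pattern, rate=RATE, w_max=1.0):
    """Strengthen synapses between co-active neurons (the LTM trace)."""
    for i in pattern:
        for j in pattern:
            if i != j:
                weights[i][j] = min(w_max, weights[i][j] + rate)


def step(weights, active, external=frozenset(), threshold=THRESHOLD):
    """One synchronous update: a neuron fires if externally driven or if
    its summed input from currently firing neurons reaches threshold."""
    nxt = set(external)
    for j in range(len(weights)):
        if sum(weights[i][j] for i in active) >= threshold:
            nxt.add(j)
    return nxt


def run(weights, cue, steps_after=5):
    """Present `cue` for one step, remove it, and record the activity
    after each subsequent stimulus-free step (the STM, if any)."""
    active = step(weights, set(), external=cue)
    history = []
    for _ in range(steps_after):
        active = step(weights, active)
        history.append(active)
    return history


weights = [[0.0] * N for _ in range(N)]
cue = {0, 1, 2, 3}     # partial sensory stimulus

before = run(weights, cue)
for _ in range(4):     # four presentations of the full pattern
    hebbian_learn(weights, CA)
after = run(weights, cue)

print(before[-1])      # set(): nothing learned, activity dies out
print(after[-1] == CA) # True: the CA ignites and reverberates
```

Before learning, the cue produces no lasting activity; after learning, the same partial cue ignites the whole assembly, which then persists without further stimulus.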

Neurons in CAs can also function without self-sustained persistence, firing persistently or transiently while driven by sensory stimulus. By the principles introduced in Sect. 1.1, if a set of neurons can persist, or occasionally persists, without a sensory stimulus, it is a CA. Nonetheless, the CA concept is more continuous than binary. Sets of neurons that are population coded and stimulus responsive, but rarely ignite due to internal processes, are CAs to a lesser degree; for example, neurons in early visual areas rarely persist without sensory stimulus, and thus are less prototypical examples of CAs. By contrast, prefrontal neurons associating a tone with a colour (Fuster et al. 2000) are members of a prototypical CA: they persist without stimulus for seconds during delay tasks, are quickly learned, and are population coded. Beyond these factors, CAs vary in their ignition speed, duration of persistence, size, and learning properties.

As categorisers, CAs take a vast range of possible inputs and categorise them as the same thing; e.g. pictures of different dogs, a dog’s bark, the word “dog”, or even a dog’s smell can activate one’s dog CA. An example from the literature involves inferior temporal neurons that respond to particular shapes across a range of similar rotations (Logothetis et al. 1995). Similar work shows that medial temporal neurons respond to particular objects from different angles and even in different pictures (Quiroga et al. 2005). Beyond specific objects, specific neurons also fire for specific tasks (Sakurai 1998a). Note that categories need not be discrete, as bump attractors have been used to model continuous categories (Wang 2001). Also, categorisation may involve systems beyond the CA, which provide continued and directed input from the senses, and contextual evidence and control from higher areas.
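Categorisation as convergence of many different inputs onto one CA can be sketched with a Hopfield-style attractor network. This is a standard stand-in chosen for illustration, not the model the review has in mind: units are ±1 rather than spiking neurons, and the two stored patterns (labelled "dog" and "cup") are hypothetical. Several differently corrupted versions of the "dog" pattern, standing in for different pictures of dogs, all settle into the same stored state.

```python
def train(patterns):
    """Hebbian outer-product weights with zero self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, x, max_steps=10):
    """Synchronous sign updates until the state stops changing."""
    x = list(x)
    for _ in range(max_steps):
        h = [sum(w[i][j] * x[j] for j in range(len(x))) for i in range(len(x))]
        new = [1 if hi >= 0 else -1 for hi in h]
        if new == x:
            break
        x = new
    return x


def corrupt(p, flips):
    """Flip the units whose indices are in `flips` (a noisy or partial input)."""
    return [-v if i in flips else v for i, v in enumerate(p)]


DOG = [1] * 8 + [-1] * 8   # hypothetical "dog" CA pattern
CUP = [1, -1] * 8          # hypothetical "cup" CA pattern (orthogonal to DOG)
W = train([DOG, CUP])

# Different, partly mismatching inputs all fall into the same category.
inputs = [corrupt(DOG, {0, 9, 15}), corrupt(DOG, {3, 4}), corrupt(DOG, {12})]
print(all(recall(W, x) == DOG for x in inputs))  # True
```

With only two orthogonal 16-unit patterns stored, up to three flipped units are corrected in a single update; heavier corruption or more stored patterns would eventually produce errors.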

There is evidence that episodes are encoded by CAs (Dragoi and Buzsáki 2006). For example, rat hippocampal place neurons (O’Neill et al. 2008) fire at an elevated rate during sleep after the associated places are visited, and neurons for places that were visited in sequence co-fire. Moreover, particular rat hippocampal neurons fired when the rat was at particular locations in a maze, and fired in a similar order during cognitive rehearsal of running the maze (Pastalkova et al. 2008). These internally generated CA sequences also predicted errors in task performance. CAs involving mouse hippocampal place cells could even predict future paths (Dragoi and Tonegawa 2011).

Top-down and bottom-up evidence (Engel et al. 2001) can be combined to ignite a CA. Anticipation can prime a CA, which in turn leads to more rapid recognition or disambiguation when a sensory stimulus is provided. When ambiguous sensory information supports the ignition of several different CAs, top-down information can support one of them, so that it alone ignites. Synapses connect sensory areas to higher areas and vice versa, so information flows in both directions, supporting the combination of evidence (Lamme et al. 1998).
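The disambiguation described above can be sketched as a competition in which each candidate CA sums its bottom-up and top-down evidence. This is a hypothetical abstraction: the function `resolve`, the threshold value, and the duck/rabbit labels are illustrative assumptions, and a real network would resolve the competition over time through inhibition rather than by taking a maximum.

```python
def resolve(bottom_up, top_down, threshold=1.0):
    """Hypothetical competition between candidate CAs.

    Each candidate's drive is its bottom-up (sensory) evidence plus its
    top-down (contextual) evidence. Only a unique strongest candidate whose
    drive reaches `threshold` ignites; otherwise nothing ignites (None).
    """
    candidates = set(bottom_up) | set(top_down)
    drive = {ca: bottom_up.get(ca, 0.0) + top_down.get(ca, 0.0) for ca in candidates}
    if not drive:
        return None
    best = max(drive.values())
    winners = [ca for ca, d in drive.items() if d == best]
    if best >= threshold and len(winners) == 1:
        return winners[0]
    return None


ambiguous = {"duck": 0.8, "rabbit": 0.8}    # hypothetical ambiguous figure
print(resolve(ambiguous, {}))               # None: tied and below threshold
print(resolve(ambiguous, {"rabbit": 0.3}))  # rabbit: context tips the balance
```

The same mechanism covers priming: top-down support lowers the sensory evidence a CA needs before it ignites.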

Beyond categorisation, STM and LTM, CAs support a range of other activities, including working memory (see Sect. 4), associative memory, figure-ground separation, and imagery. For imagery, single unit recordings of human neurons showed responses both to the presentation of pictures and to the task of imagining them (Kreiman et al. 2000).

CAs support associative memory (Diester and Nieder 2007). Individual CAs are associated with other CAs via excitatory synapses from neurons in the first CA to neurons in the second, and via shared neurons (Sigala et al. 2008). Evidence comes from monkeys trained to associate pairs of pictures. Recordings of neurons in the anterior temporal cortex showed one set that responded to both pictures of a pair, and a second that responded to only one; the second type had elevated firing during the delay period when the cue presented was the associate of its preferred picture (Sakai and Miyashita 1991). There is also extensive simulation of CAs supporting associative memory (see Sect. 5.2.1). Association also leads to priming: there is evidence that semantic priming of action words by pictures affects error rates (Setola and Reilly 2005). “This suggests the possibility of a broader account of priming effects in terms of CAs.” That is, priming and the closely related concept of associative memory emerge naturally from a network of CAs.
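Both association routes mentioned above, synapses from one CA to another and shared neurons, can be shown in a small threshold network. The sketch below is hypothetical throughout: CA "A" and CA "B" share neuron 4, within-CA weights are fixed at 1.0, and the A-to-B association strength is a free parameter. Igniting A either ignites B (recall), leaves B's neurons with sub-threshold input (priming), or, if extra sensory drive arrives, pushes a primed B over threshold.

```python
THRESHOLD = 3.0  # assumed firing threshold

CAS = {"A": {0, 1, 2, 3, 4}, "B": {4, 5, 6, 7, 8}}  # neuron 4 is shared


def build_weights(n, cas, assoc):
    """Within-CA weights of 1.0; `assoc` maps (src, dst) to an assumed weight
    for synapses from src-only neurons to dst-only neurons."""
    w = [[0.0] * n for _ in range(n)]
    for ca in cas.values():
        for i in ca:
            for j in ca:
                if i != j:
                    w[i][j] = 1.0
    for (src, dst), strength in assoc.items():
        for i in cas[src] - cas[dst]:
            for j in cas[dst] - cas[src]:
                w[i][j] = max(w[i][j], strength)
    return w


def drive(w, active):
    """Summed synaptic input to every neuron from the active set."""
    return [sum(w[i][j] for i in active) for j in range(len(w))]


def response_of_b(strength, sensory=0.0):
    """Ignite A, then classify B as 'ignited', 'primed' or 'silent'.
    `sensory` is extra (hypothetical) external drive to B's own neurons."""
    w = build_weights(9, CAS, {("A", "B"): strength})
    d = drive(w, CAS["A"])
    b_only = CAS["B"] - CAS["A"]
    if all(d[j] + sensory >= THRESHOLD for j in b_only):
        return "ignited"
    if any(d[j] > 0 for j in b_only):
        return "primed"
    return "silent"


print(response_of_b(0.5))               # ignited: strong association recalls B
print(response_of_b(0.25))              # primed: input to B is sub-threshold
print(response_of_b(0.25, sensory=1.0)) # ignited: priming plus weak evidence
print(response_of_b(0.0))               # primed: the shared neuron alone primes B
```

The last case shows that shared neurons by themselves produce priming, even with no direct synapses between the two CAs.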

It is well known that primates can make use of cardinality, and this ability is supported by CAs. Monkeys were trained to associate particular pictures with particular cardinalities, providing a label. Neurons recorded in the prefrontal cortex responded in much the same way to the label and to the corresponding cardinal category (Diester and Nieder 2007). This type of association may provide a basis for symbol grounding in humans. Symbol grounding is particularly important because it explains how symbols gain their meaning (Taddeo and Floridi 2005), and symbols are very important both in modern society and in artificial intelligence. Indeed, there is growing support in the wider cognitive science community for a neural basis of symbols (e.g. Gallese and Lakoff 2005).

Hebb discusses figure-ground separation as one of the benefits of CAs. The figure is activated by CA ignition, and this causes it to pop out psychologically. Some evidence shows that edge-detecting neurons in BA18 respond when the edge is part of the figure but not when it is part of the ground (Qiu and von der Heydt 2005). This is consistent with higher-order information (e.g. from V3) boosting, at a lower level, the activity of neurons that belong to the figure.

One very important aspect of CAs is that they are active. CAs that encode symbols are active symbols (Hofstadter 1979; Kaplan et al. 1990). When a CA is active, it influences other CAs, by spreading activation or inhibition to them. This enables one CA to prime many others, inhibit many others, and be involved in other types of processing simultaneously. This active processing can also involve dynamic binding (see Sect. 5.2.2).

It appears that motion is driven by central pattern generators (CPGs) (Butt et al. 2002). CPGs consist of neurons, and they must be triggered. Neuron firing in the motor cortex also causes actions (Brecht et al. 2004), so neurons in the motor cortex must interact with CPGs. For example, CPGs are involved in walking, but it is not clear whether the neurons in those CPGs are part of the walk CA. On the continuum view of CAs, however, those neurons are at most weakly part of the CA. The neurons in the motor cortex may or may not persist; those that do not are weakly part of the CA, while those that do (Laubach et al. 2000) are clearly part of it. Moreover, CAs, like CPGs, persist, so CAs can themselves be considered CPGs (Yuste et al. 2005).

CAs are involved in a wide range of cognitive processes, but it is not clear when and how they collaborate. There is some evidence about their collaboration in working memory tasks (see Sect. 4), and evidence from simulations (see Sect. 5).

4 Short term and working memory

While it is simple to say that CAs are involved in complex psychological systems, for example associative memory, and to provide evidence that they are, building an accurate simulated neural model of associative memory is extremely difficult, and one can argue that associative memory cannot be truly understood without such a simulation. A good simulation provides explicit details of basic processes, and shows how more complex behaviour emerges from them. Progress is being made on a range of cognitive tasks, but the neural implementation of these tasks, like that of associative memory, is not well understood. This section gives a speculative description of one complex psychological system, the working memory system, and its task-specific use.

One of the basic assumptions of Hebb’s CA hypothesis is that an active CA is the neural basis of an STM. In the 60 years since, a related form of memory, working memory, has been studied extensively. Working memory combines short-term buffers and central executive processes (Jonides et al. 1993). There is psychological evidence that working memory and STM are separate systems, with STM supporting working memory (Engel et al. 1999).

Central executive processing is largely based in the frontal cortex, and working memory leads to behaviour that is more complex than simple STM, while retaining access to a wide range of memories stored outside the frontal cortex (see Constantinidis and Procyk 2004 for a review of region-specific involvement). Executive processing is under voluntary control, and its mechanisms are an active area of research (Miyake et al. 2000).

An active CA is an STM, as is evident during sensing, when CAs are ignited by the environment with little input from frontal areas. However, frontal areas can also access these areas during sophisticated processes such as memory retrieval (Buckner and Wheeler 2001). During memory retrieval, frontal areas may begin with, for example, a goal-directed attempt to remember. This attempt, in essence a query, is sent to other brain areas to retrieve the memory. For example, parts of the medial temporal lobe are involved in storing events. If the memory is retrieved, there is enhanced firing in the medial temporal lobe (Henson et al. 1999); that is, the CA ignites.

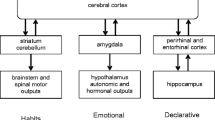

There is no universal agreement on the psychological basis of working memory, but one popular model uses the central executive, the visuospatial sketchpad, and the phonological loop (Baddeley 2003). The sketchpad and the loop act as limited-capacity buffers, and the central executive makes control decisions.
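The division of labour in this model can be sketched as a data structure: two bounded buffers plus an executive that routes items between them. This is a toy illustration only, not Baddeley’s formal account and not a neural model. The class name, the capacities, and the rule that a full buffer displaces its oldest item are all hypothetical simplifications.

```python
from collections import deque


class WorkingMemory:
    """Toy multicomponent working memory: two limited-capacity buffers and
    an executive that routes items to them. Capacities are assumptions."""

    def __init__(self, loop_capacity=4, sketchpad_capacity=3):
        # deque(maxlen=...) silently drops the oldest item when full,
        # a crude stand-in for displacement from a limited-capacity store.
        self.phonological_loop = deque(maxlen=loop_capacity)
        self.visuospatial_sketchpad = deque(maxlen=sketchpad_capacity)

    def executive_store(self, item, modality):
        """Central executive: decide which buffer receives the item."""
        if modality == "verbal":
            self.phonological_loop.append(item)
        elif modality == "visual":
            self.visuospatial_sketchpad.append(item)
        else:
            raise ValueError(f"unknown modality: {modality}")


wm = WorkingMemory()
for digit in "123456":               # six spoken digits exceed the loop's capacity
    wm.executive_store(digit, "verbal")
wm.executive_store("red square", "visual")
print(list(wm.phonological_loop))    # ['3', '4', '5', '6']
print(list(wm.visuospatial_sketchpad))  # ['red square']
```

In CA terms, each buffered item would correspond to an active CA, and the executive’s routing decisions to frontal control over which CAs are kept ignited.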