Abstract

Climate downscaling using regional climate models (RCMs) has been widely used to generate local climate change information needed for climate change impact assessments and other applications. Six-hourly data from individual simulations by global climate models (GCMs) are often used as the lateral forcing for the RCMs. However, such forcing often contains both internal variations and externally-forced changes, which complicate the interpretation of the downscaled changes. Here, we describe a new approach to construct representative forcing for RCM-based climate downscaling and discuss some related issues. The new approach combines the transient weather signal from one GCM simulation with the monthly mean climate states from the multi-model ensemble mean for the present and future periods, together with a bias correction term. It ensures that the mean climate differences in the forcing data between the present and future periods represent externally-forced changes only and are representative of the multi-model ensemble mean, while changes in transient weather patterns are also considered based on one select GCM simulation. The adjustments through the monthly fields are comparable in magnitude to the bias correction term and are small compared with the variations in the 6-hourly data. Any inconsistency among the independently adjusted forcing fields is therefore likely to be small and to have little impact. For quantifying the mean response to future external forcing, this approach avoids the need to perform large ensembles of RCM simulations forced by different GCM outputs, which can be very expensive. It also allows changes in transient weather patterns to be included in the lateral forcing, in contrast to the Pseudo Global Warming (PGW) approach, in which only the mean climate change is considered. However, it does not address the uncertainty associated with internal variability or inter-model spread, and the simulated transient weather changes may be unrepresentative of other models. This new approach has been applied to construct the forcing data for the second phase of the WRF-based downscaling over much of North America with 4 km grid spacing.

1 Introduction

To quantify regional climate change and assess its impacts, detailed climate change information at fine resolution (e.g., on grids of 1–5 km) is often needed (Giorgi and Mearns 1991; Wilby and Wigley 1997; Giorgi et al. 2006). Current global climate models (GCMs) can only produce projections on grids of 50–200 km (Collins et al. 2013), which is insufficient for quantifying regional and local climate change and its impacts. To meet this need, dynamic downscaling using regional climate models (RCMs) (e.g., Giorgi and Mearns 1991; Giorgi and Lionello 2008; Mearns et al. 2009; Rasmussen et al. 2011, 2014; Jacob et al. 2014; Vautard et al. 2014; Wang and Kotamarthi 2015; Liu et al. 2016), statistical downscaling (Wilby and Wigley 1997), and other methods (Maraun et al. 2010; Themeßl et al. 2011; Walton et al. 2015) have been widely used to downscale future climate projections from GCMs to finer grids. To force the RCMs, 6-hourly data from individual GCM simulations (with or without a bias correction; Bruyère et al. 2014) have traditionally been used to provide the forcing at the lateral boundaries for both a current and a future period of 10–30 years. The difference in the mean fields from the RCM simulations between the current and future periods is often interpreted as the response to future changes in greenhouse gases (GHGs) and other external climate drivers. However, internal climate variability (ICV) is known to generate large variations in precipitation and other atmospheric fields on decadal to multi-decadal time scales over regional to continental scales within a GCM (Deser et al. 2012a, b, 2014; Wallace et al. 2016; Dai and Bloecker 2017a). Thus, the lateral forcing and the resultant downscaled changes within the RCM domain based on individual GCM simulations contain both GHG-forced long-term changes and ICV-induced decadal to multi-decadal variations, which can affect the mean fields averaged over a period of 10–30 years.
Furthermore, the GHG-forced response from one model may differ from another (Knutti et al. 2010; Collins et al. 2013). These issues complicate the interpretation of the downscaled changes as they consist of both model-dependent GHG-forced and ICV-induced changes.

The ICV for a given period depends on the starting initial conditions in a coupled GCM or the real world. Current GCM simulations of future climates start from random initial conditions as observations are insufficient to quantify past states of the climate system; thus they are meant to capture the forced response to future GHG changes (after averaging over a number of simulations), but not to simulate future ICV. Therefore, RCM-based downscaling should also focus on GHG-forced changes. For this purpose, the ICV-induced changes in the downscaled fields should be eliminated, or be separated and used as a measure of ICV-induced uncertainty when possible. One way to do that is to perform a large ensemble of RCM simulations forced by different GCM simulations (e.g., Mearns et al. 2009; Jacob et al. 2014; Vautard et al. 2014), and then average the RCM simulations over the ensemble members to smooth out the random ICV, while the spread among the ensemble members can be used as a partial measure of the ICV. However, performing such a large ensemble of RCM simulations is very expensive as they require large computational and human resources, especially for high-resolution (with grid spacing <10 km) simulations over large domains (e.g., Prein et al. 2015; Liu et al. 2016; Kendon et al. 2017).

Another approach to downscaling forced future climate change is the so-called Pseudo-Global Warming (PGW) method (e.g., Schär et al. 1996; Hara et al. 2008; Kawase et al. 2009; Rasmussen et al. 2011, 2014; Liu et al. 2016), in which a mean perturbation from a GCM multi-model ensemble of projections is added to 6-hourly forcing data from a reanalysis product (thus no bias correction is needed). The PGW forcing captures the mean response to future GHG changes representative of multi-model ensemble projections, and ensures that the changes in the forcing data are purely due to GHG forcing rather than ICV. However, it does not include changes in transient weather activity, which may limit the RCM's ability to simulate the response of extreme events such as severe storms, intense precipitation (Dai et al. 2017b) and daily temperature extremes. This is a potential shortcoming for analyzing changes in extreme events (Prein et al. 2017), which is a common application of RCM-downscaled data.
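
The PGW construction amounts to adding a monthly mean perturbation to reanalysis data. A minimal sketch for one grid point and one variable, assuming the reanalysis forcing is held as a list of 6-hourly values with their calendar months and the ensemble-mean climatologies as dicts keyed by month (the function name and layout are hypothetical, and no interpolation between months is attempted):

```python
def pgw_forcing(reanalysis_6h, months, x_ec, x_ef):
    """Hypothetical PGW-style future forcing for one grid point and variable.

    reanalysis_6h: 6-hourly reanalysis values for the present-day period.
    months: calendar month (1-12) of each 6-hourly value.
    x_ec, x_ef: multi-model ensemble-mean monthly climatologies (dict month -> value)
        for the current and future climatological periods.
    """
    # The same monthly mean change is added to every 6-hourly value in that month;
    # the sequence of weather events itself is left untouched.
    return [z + (x_ef[m] - x_ec[m]) for z, m in zip(reanalysis_6h, months)]


# Illustrative temperatures (K), one value each in January and February.
future = pgw_forcing([268.0, 270.0], [1, 2], {1: 271.0, 2: 272.0}, {1: 275.0, 2: 275.5})
print(future)  # [272.0, 273.5]
```

Because the perturbation is constant within each month, the deviations of the PGW forcing from its monthly means are identical to those of the reanalysis, which is why this method cannot represent changes in transient weather activity.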

To overcome the shortcomings of the traditional RCM-based climate downscaling methods, we propose a new approach to construct representative forcing data for downscaling GCM projections of externally-forced future climate change. In principle, this approach can be applied to both RCM-based dynamic downscaling (Giorgi and Mearns 1991) and statistical downscaling (Wilby and Wigley 1997), although for the latter one can easily perform an ensemble of downscaling runs to derive the forced change and the ICV. The new approach combines the transient weather signal from one GCM simulation with the monthly mean climate states derived from the multi-model ensemble mean for the present and future periods, together with a bias correction term. It ensures that the mean differences in the forcing data between the present and future periods represent GHG-forced changes only and are representative of the multi-model ensemble mean, while changes in transient weather activity are also considered based on one select GCM simulation. We emphasize that the new approach focuses only on externally-forced climate change. Uncertainties associated with ICV or inter-model spread in the simulated response will need to be addressed using other methods, such as a combination of statistical and dynamic downscaling (Walton et al. 2015). We show that the adjustments through the monthly fields are comparable in magnitude to mean bias corrections and are small compared with the variations in 6-hourly data. This suggests that any inconsistency among the independently adjusted forcing fields is likely to be small. This approach allows the downscaled regional climate change to be representative of the response to future GHG forcing without the need to perform an ensemble of RCM simulations, which can be very expensive and impractical for convection-permitting downscaling (Prein et al. 2015; Kendon et al. 2017) over a large domain.
This new approach has been used to construct the forcing data for the second phase of the WRF-based downscaling over much of North America with 4 km grid spacing (Liu et al. 2016). Here we describe the approach and address some of the potential issues it may encounter using the planned WRF-based downscaling as an example.

2 Description of the new approach

Future climate changes are often quantified by examining differences in the mean fields averaged over a current and a future period of 10–30 years (e.g., 1980–1999 vs. 2080–2099; Collins et al. 2013). To achieve that, climate downscaling is often carried out over two such periods. Ideally, periods of ~30 years should be used for defining a stable climate (i.e., to average out most of the ICV-induced variations); however, for high-resolution simulations over a large domain (e.g., over most of North America; Liu et al. 2016), periods of 10–20 years are more practical because of their high computational demands. For such short periods, the influence of ICV can be very large over many regions (Dai 2013; Dong and Dai 2015) and even for global averages (Dai et al. 2015). Thus, minimizing the influence of ICV over such a short period is even more critical for such downscaling, both in the forcing data and in the resultant downscaled fields. The forcing fields include 3-dimensional horizontal winds (u, v), air temperature (T), specific or relative humidity (q or RH) and geopotential height (Z), and 2-dimensional sea-surface temperature (SST) and sea-level pressure (SLP).

Traditionally, 6-hourly forcing data (YC) with a bias correction term over the current simulation period can be expressed as (e.g., Bruyère et al. 2014):
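
\[ {\rm Y}_{\rm C} = {\rm X}_{\rm MC} + {\rm X}_{\rm C}^{\prime} - \left( {\rm X}_{\rm MC} - {\rm Z}_{\rm M} \right) \tag{1} \]
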
where XC = 6-hourly data for variable X from a GCM run for the current period; XMC = monthly climatology of X over a current climatological period (TC) from the GCM run; \({\rm X}_{\rm C}^{\prime}\) = XC − XMC is the current weather and inter-annual variation in the GCM run; and ZM = monthly climatology from a reanalysis over TC.

Note that the current downscaling period (e.g., 1996–2005) may be too short for defining a stable mean bias; instead, a longer period (e.g., TC = 1976–2005) may be used for computing the climatology (i.e., XMC and ZM). In this case, \({\rm X}_{\rm C}^{\prime}\) represents the transient variations relative to the mean over TC rather than over the current simulation period.
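
The decomposition into a monthly climatology and a transient term can be sketched for a single grid point. This is a minimal illustration, not the processing code used for the WRF forcing: it assumes the GCM output is available as (year, month, value) records and assigns each 6-hourly value the climatology of its calendar month without interpolating between months; the function names are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def monthly_climatology(records, clim_years):
    """Mean of X for each calendar month over a climatological period such as TC.

    records: iterable of (year, month, value) tuples for one grid point and variable.
    clim_years: container of the years that define the climatological period.
    """
    by_month = defaultdict(list)
    for year, month, value in records:
        if year in clim_years:
            by_month[month].append(value)
    return {month: fmean(values) for month, values in by_month.items()}


def transient_anomalies(records, clim, sim_years):
    """Transient term X' = X - X_M for every 6-hourly value in the simulation period."""
    return [(year, month, value - clim[month])
            for year, month, value in records if year in sim_years]


# Synthetic data: a seasonal cycle plus a linear trend, four values per month.
records = [(y, m, 10.0 * m + (y - 1990)) for y in range(1976, 2006)
           for m in range(1, 13) for _ in range(4)]
clim = monthly_climatology(records, range(1976, 2006))         # TC = 1976-2005
anoms = transient_anomalies(records, clim, range(1996, 2006))  # simulation period 1996-2005
print(clim[1], fmean(a for _, _, a in anoms))  # 10.5 10.0
```

The non-zero mean of the anomalies over 1996–2005 illustrates the point above: when TC is longer than the simulation period, \({\rm X}_{\rm C}^{\prime}\) is measured relative to the TC mean, so in this synthetic case it also carries the trend between the centre of TC and the simulation period.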

For the future simulation period (e.g., 2091–2100), the traditional method would construct the 6-hourly forcing data as:
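
\[ {\rm Y}_{\rm F} = {\rm X}_{\rm MF} + {\rm X}_{\rm F}^{\prime} - \left( {\rm X}_{\rm MC} - {\rm Z}_{\rm M} \right) \tag{2} \]
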
where XF = 6-hourly data for variable X from the same GCM run for the future period; XMF = monthly climatology of X over a future climatological period (TF, e.g., TF = 2071–2100) from the GCM run; \({\rm X}_{\rm F}^{\prime}\) = XF − XMF (future weather and inter-annual variations from the same GCM run).
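
Eqs. (1) and (2) can be written as two small functions that place the transient term on a bias-corrected climatology. A sketch under the same assumptions as above (one grid point, climatologies as dicts keyed by calendar month, hypothetical function names, illustrative values):

```python
def traditional_current(anoms_c, months, x_mc, z_m):
    """Eq. (1): Y_C = X_MC + X'_C - (X_MC - Z_M), evaluated value by value."""
    return [x_mc[m] + a - (x_mc[m] - z_m[m]) for a, m in zip(anoms_c, months)]


def traditional_future(anoms_f, months, x_mf, x_mc, z_m):
    """Eq. (2): Y_F = X_MF + X'_F - (X_MC - Z_M); the current-period bias is reused."""
    return [x_mf[m] + a - (x_mc[m] - z_m[m]) for a, m in zip(anoms_f, months)]


# Illustrative January values (K): the GCM is 2 K too warm and warms by 5 K.
x_mc, x_mf, z_m = {1: 270.0}, {1: 275.0}, {1: 268.0}
print(traditional_current([1.0, -2.0], [1, 1], x_mc, z_m))      # [269.0, 266.0]
print(traditional_future([1.0, -2.0], [1, 1], x_mf, x_mc, z_m))  # [274.0, 271.0]
```

In this traditional form the change in the monthly mean forcing is XMF − XMC (5 K here), i.e. the change simulated by the single GCM run, ICV included.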

The new approach uses equations similar to Eqs. (1–2), except that a multi-model ensemble mean is used for defining the monthly climatology and the mean model bias, and the forcing for the current period is exactly the same as in the traditional method after the bias correction:
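
\[ {\rm Y}_{\rm C} = {\rm X}_{\rm EC} + {\rm X}_{\rm C}^{\prime} - \left( {\rm X}_{\rm EC} - {\rm Z}_{\rm M} \right) \tag{3} \]
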
where XEC = monthly climatology of X over a current climatological period (TC) from a multi-model ensemble mean; \({\rm X}_{\rm C}^{\prime}\) = XC − XMC (current weather and inter-annual variations) from one GCM run. The \({\rm X}_{\rm C}^{\prime}\), XC and XMC are the same as for Eq. (1); ZM = monthly climatology from a reanalysis over TC, same as for Eq. (1).

Thus, the forcing from Eq. (3) is a combination of the multi-model ensemble-mean monthly climatology and the transient weather and inter-annual variations from one select model simulation, plus a bias correction term. The only difference between Eqs. (3) and (1) is the replacement of XMC (climatology from the select model run) by XEC (the climatology from the multi-model ensemble mean) in defining the current climatology and the model mean bias. However, the final forcing data for the current period are the same (ZM + \({\rm X}_{\rm C}^{\prime}\)) for both methods. The difference is in the interpretation.
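
Expanding both expressions makes the cancellation explicit: whichever climatology is used (that of the select model or that of the ensemble mean), it enters once directly and once with the opposite sign inside the bias term. The superscripts below simply mark the equation being expanded.

\[
\begin{aligned}
{\rm Y}_{\rm C}^{(1)} &= {\rm X}_{\rm MC} + {\rm X}_{\rm C}^{\prime} - \left( {\rm X}_{\rm MC} - {\rm Z}_{\rm M} \right) = {\rm Z}_{\rm M} + {\rm X}_{\rm C}^{\prime},\\
{\rm Y}_{\rm C}^{(3)} &= {\rm X}_{\rm EC} + {\rm X}_{\rm C}^{\prime} - \left( {\rm X}_{\rm EC} - {\rm Z}_{\rm M} \right) = {\rm Z}_{\rm M} + {\rm X}_{\rm C}^{\prime}.
\end{aligned}
\]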

Similarly, for the future period, the new approach uses
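
\[ {\rm Y}_{\rm F} = {\rm X}_{\rm EF} + {\rm X}_{\rm F}^{\prime} - \left( {\rm X}_{\rm EC} - {\rm Z}_{\rm M} \right) \tag{4} \]
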
where XEF = monthly climatology of X over a future climatological period (TF) from the same multi-model ensemble mean; \({\rm X}_{\rm F}^{\prime}\) = XF − XMF (future weather and inter-annual variations from the same GCM run as for \({\rm X}_{\rm C}^{\prime}\)). The \({\rm X}_{\rm F}^{\prime}\), XF and XMF are the same as for Eq. (2).

Equation (4) can be rearranged to
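
\[ {\rm Y}_{\rm F} = \left[ {\rm X}_{\rm MF} + {\rm X}_{\rm F}^{\prime} - \left( {\rm X}_{\rm MC} - {\rm Z}_{\rm M} \right) \right] - \left[ \left( {\rm X}_{\rm MF} - {\rm X}_{\rm MC} \right) - \left( {\rm X}_{\rm EF} - {\rm X}_{\rm EC} \right) \right] \tag{5} \]
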
The first term on the right-hand side of Eq. (5) is the future forcing from the traditional method (Eq. 2), and the second term is the difference in the simulated future mean change between the select GCM and the multi-model ensemble. Thus, Eq. (4) can be considered as Eq. (2) plus a mean bias term that corrects the future change simulated by the select GCM toward the multi-model ensemble mean. This term should be comparable in magnitude to the mean bias term (XMC − ZM). Consequently, the adjustments through monthly climatological fields in the new method are not very different from those for the bias correction in the traditional method.
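
The rearrangement from Eq. (4) to Eq. (5) can be checked numerically. The sketch below uses hypothetical function names and illustrative single-point values (K); it evaluates Eq. (4) directly and via the two bracketed terms of Eq. (5):

```python
def eq4_value(anom_f, x_ef, x_ec, z_m):
    """Eq. (4) for one value: Y_F = X_EF + X'_F - (X_EC - Z_M)."""
    return x_ef + anom_f - (x_ec - z_m)


def eq5_terms(anom_f, x_mf, x_mc, x_ef, x_ec, z_m):
    """The two bracketed terms of Eq. (5): the traditional future forcing (Eq. 2)
    and the correction that is subtracted from it."""
    traditional = x_mf + anom_f - (x_mc - z_m)
    correction = (x_mf - x_mc) - (x_ef - x_ec)
    return traditional, correction


# Illustrative values (K): the select GCM warms by 6 K, the ensemble mean by 4 K.
trad, corr = eq5_terms(1.5, x_mf=276.0, x_mc=270.0, x_ef=275.0, x_ec=271.0, z_m=268.0)
print(trad, corr, trad - corr, eq4_value(1.5, x_ef=275.0, x_ec=271.0, z_m=268.0))
# 275.5 2.0 273.5 273.5
```

The correction removes the part of the select model's warming (6 K) that exceeds the ensemble-mean warming (4 K), so both routes give the same forcing value.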

The mean change between the current and future forcing data in this case is XEF − XEC, which represents the change from TC to TF (e.g., from 1976–2005 to 2071–2100, a 95-year interval) based on the multi-model ensemble mean, in contrast to the change simulated by the select GCM in the traditional method. This ensures that the mean forcing change is representative of the multi-model ensemble simulations, which likely represent our best projection of future climate change (Knutti et al. 2010), and that the time-averaged forcing change is due primarily to future GHG and other anthropogenic changes, not to ICV. However, the transient variations in our forcing are still affected by ICV. In addition, the inclusion of the transient terms (\({\rm X}_{\rm C}^{\prime}\) and \({\rm X}_{\rm F}^{\prime}\)) from one select model in the forcing from the new approach allows the downscaling to include temporal and spatial changes in transient weather activity. This is important for studying changes in daily extreme events. Thus, the new approach overcomes the major shortcomings of both the traditional approach and the PGW method.
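
That the mean forcing change reduces to XEF − XEC can be illustrated for a single calendar month. A sketch with hypothetical function names and illustrative values, assuming the transient terms average to zero over their respective climatological periods:

```python
from statistics import fmean


def new_forcing_current(anoms_c, x_ec, z_m):
    """Eq. (3) for one calendar month: X_EC + X'_C - (X_EC - Z_M)."""
    return [x_ec + a - (x_ec - z_m) for a in anoms_c]


def new_forcing_future(anoms_f, x_ef, x_ec, z_m):
    """Eq. (4) for one calendar month: X_EF + X'_F - (X_EC - Z_M)."""
    return [x_ef + a - (x_ec - z_m) for a in anoms_f]


anoms_c = [2.0, -1.0, -1.0]  # transient term from the select GCM, current period (zero mean)
anoms_f = [3.0, -3.0]        # transient term, future period (zero mean, larger spread)
y_c = new_forcing_current(anoms_c, x_ec=271.0, z_m=268.0)
y_f = new_forcing_future(anoms_f, x_ef=275.0, x_ec=271.0, z_m=268.0)
print(fmean(y_f) - fmean(y_c))  # 4.0, i.e. X_EF - X_EC
```

The select model's own climatologies (XMC, XMF) do not appear, so ICV in its monthly means cannot enter the mean change, while the larger spread of the future transient term passes through to the forcing unchanged. If the transient terms are computed only over simulation periods shorter than TC and TF, their time means need not be zero, and the simulated mean change then also includes the difference between those means.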

In the above, the mean forcing change is between TC and TF (e.g., 1976–2005 to 2071–2100, a 95-year interval), not between the two simulation periods (e.g., 1996–2005 and 2091–2100, also a 95-year interval), which are relevant only for defining the transient terms (\({\rm X}_{\rm C}^{\prime}\) and \({\rm X}_{\rm F}^{\prime}\)). In general, the shorter simulation periods may cover only the later part of the climatological periods (TC and TF); this keeps the simulations feasible while keeping the time interval between them similar to that between TC and TF. Note that simulations over a relatively short period (e.g., 10 years) may not provide enough sampling of very extreme events, and a longer simulation period (e.g., 20–30 years) is recommended for studying changes in extremes.
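
The period bookkeeping in this paragraph is simple arithmetic on period centres. A small sketch (hypothetical helper names) using the example periods from the text:

```python
def period_centre(first_year, last_year):
    """Centre of an inclusive span of year labels, e.g. (1976, 2005) -> 1990.5."""
    return (first_year + last_year) / 2


def interval_years(current, future):
    """Years between the centres of a current and a future (first, last) period."""
    return period_centre(*future) - period_centre(*current)


def lies_within(sim, clim):
    """True if the simulation period is contained in the climatological period."""
    return clim[0] <= sim[0] and sim[1] <= clim[1]


t_c, t_f = (1976, 2005), (2071, 2100)      # climatological periods TC and TF
sim_c, sim_f = (1996, 2005), (2091, 2100)  # simulation periods
print(interval_years(t_c, t_f), interval_years(sim_c, sim_f))  # 95.0 95.0
print(lies_within(sim_c, t_c), lies_within(sim_f, t_f))        # True True
```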

3 Potential inconsistency

The adjustments through the monthly climatology are done separately for each of the forcing variables, which usually include u, v, T, q or RH, Z, SST, and SLP. This could induce some internal inconsistency among these fields because of the nonlinear relationships among them. That is, the physically consistent 6-hourly fields from the select GCM simulation may become mutually inconsistent because of the adjustments through the monthly climatology and the bias correction. However, we argue below that any such inconsistency should be small and have minor impacts on the downscaled fields away from the lateral boundaries.
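
A concrete example of such nonlinearity is the link between temperature, specific humidity and relative humidity. The sketch below is illustrative only: it uses a common Magnus-type approximation for saturation vapour pressure over water, and the 850 hPa level, the 95 % starting humidity and the −2 °C temperature-only adjustment are hypothetical values chosen to show the effect, not numbers from this study.

```python
import math


def saturation_vapour_pressure(t_c):
    """Magnus-type approximation of saturation vapour pressure over water (hPa); t_c in degC."""
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))


def relative_humidity(t_c, q, p_hpa):
    """Relative humidity (fraction) from T (degC), specific humidity q (kg/kg) and pressure (hPa)."""
    e = q * p_hpa / (0.622 + 0.378 * q)  # vapour pressure (hPa)
    return e / saturation_vapour_pressure(t_c)


def specific_humidity(t_c, rh, p_hpa):
    """Inverse of relative_humidity: q (kg/kg) for a given RH fraction."""
    e = rh * saturation_vapour_pressure(t_c)
    return 0.622 * e / (p_hpa - 0.378 * e)


p, t, rh = 850.0, 10.0, 0.95
q = specific_humidity(t, rh, p)
# Hypothetical independent adjustments: T is lowered by 2 degC, q is left unchanged.
rh_adjusted = relative_humidity(t - 2.0, q, p)
print(f"RH before: {rh:.2f}, after T-only adjustment: {rh_adjusted:.2f}")
```

In this example the adjusted state is supersaturated (RH above 1). Because saturation vapour pressure depends exponentially on temperature, the same additive adjustment to T changes RH by different amounts in different weather states, and near saturation it can push independently adjusted fields out of physical balance; the arguments in the following paragraphs address why such effects are expected to be minor in practice.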

We first note that the adjustment and forcing data are the same for the current period in our new and traditional approaches (cf. Eqs. (1), (3)), and that in both approaches the adjustment replaces the model climatology with the reanalysis climatology (ZM). This has been done previously in dynamic downscaling (e.g., Bruyère et al. 2014; Xu and Yang 2015) without noticeable inconsistency. There are likely several reasons for this. First, the difference in the climatology between a model and a reanalysis (on the order of 1–5 °C for T; Liu et al. 2016) is considerably smaller at most locations than the transient variations in the 6-hourly data (on the order of 10–20 °C for T), as illustrated by Fig. 1 for the daily temperature anomalies from Lindenberg, Germany. Because of this, any inconsistency in the 6-hourly data from the adjustments in the monthly climatology is likely to be small. Second, any such inconsistency will be smoothed out in the buffer zone around the lateral boundaries by the regional model dynamics, leading to negligible impacts on the interior of the regional domain. Third, because the adjustment is applied to all the dynamic fields, the adjusted fields should be internally consistent if the relationships among them are linear, or if the adjustment is small compared with the total variance so that the dynamical equations can be linearized. Finally, the WRF initialization process attempts to remove internal inconsistency among the forcing fields, thus minimizing any inconsistency in the final forcing data used by the model.
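
The first argument compares the size of a monthly adjustment with the spread of the 6-hourly transient variations. A minimal diagnostic for one grid point and calendar month, assuming the standard deviation of \({\rm X}^{\prime}\) is used as the measure of spread (the text quotes ranges rather than a specific statistic); the function name and the numbers are hypothetical:

```python
from statistics import pstdev


def adjustment_ratio(adjustment, anomalies):
    """Size of a monthly mean adjustment relative to the spread of the 6-hourly anomalies X'.

    adjustment: e.g. the mean bias X_MC - Z_M for one calendar month.
    anomalies: 6-hourly transient values X' for the same calendar month.
    """
    return abs(adjustment) / pstdev(anomalies)


# Illustrative temperature anomalies (degC) and a 3 degC mean bias.
anomalies = [-12.0, -6.0, -1.0, 0.0, 2.0, 5.0, 12.0]
print(round(adjustment_ratio(3.0, anomalies), 2))
```

A ratio well below 1 is the situation the argument relies on; values approaching 1 would flag locations or months where nonlinear effects deserve a closer look.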

The adjustments to the future forcing (Eqs. 4, 5) consist of the mean bias correction (XMC − ZM, discussed above) and the correction term [(XMF − XMC) − (XEF − XEC)], which should be comparable to, if not smaller than, the multi-model projected change (XEF − XEC) that has been added to reanalysis forcing data in the PGW method (e.g., Liu et al. 2016). No internal inconsistency was found in previous PGW-based downscaling simulations (e.g., Rasmussen et al. 2011, 2014; Liu et al. 2016). This suggests that an adjustment through the monthly mean fields of the size of (XEF − XEC) is unlikely to cause significant inconsistency in the 6-hourly data, presumably for reasons similar to those discussed above.

In summary, the adjustments through monthly mean fields in the new approach are comparable to the mean bias correction used in the traditional approach and to the mean climate change perturbation used in the PGW method. These mean adjustments have not caused any noticeable inconsistency in previous downscaling studies, likely because (1) the adjustments are small compared with the total variations in the forcing data, so that any nonlinear effects are negligible, and (2) the buffer zone around the lateral boundaries and the WRF initialization system can smooth out such inconsistency, preventing it from affecting the interior of the downscaling domain. Thus, internal inconsistency should not be a significant issue for 6-hourly data constructed by the new approach.

4 Selecting a model for the transient term

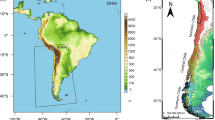

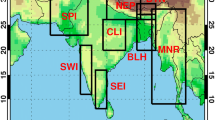

The transient terms \({\rm X}_{\rm C}^{\prime}\) and \({\rm X}_{\rm F}^{\prime}\) need to be derived from a single GCM simulation, preferably with relatively high spatial resolution. This model should show good performance in simulating the current variability on 6-hourly to inter-annual time scales around the lateral boundaries of the regional domain for downscaling. For our WRF-based downscaling with 4 km grid spacing over most of North America (Fig. 2; Liu et al. 2016), we evaluated five CMIP5 models that had a grid spacing of less than 1.5° and also provided 6-hourly data for the present and future climate under the RCP8.5 scenario. All the data were re-gridded onto a common 1° grid for the comparison. The mean bias (XMC − ZM) and the model-to-reanalysis ratio of the variance of \({\rm X}_{\rm C}^{\prime}\) for 1979–2005, relative to the ERA-Interim reanalysis (Dee et al. 2011), are examined below for the contiguous US (CONUS) and the upstream western and southern zones (Fig. 2).
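
The two diagnostics used in this comparison can be written down directly. A sketch for one field and season, assuming the climatologies are dicts keyed by month, the transient series are plain lists already restricted to 1979–2005 and the chosen season, and that grid-point results are combined into regional averages with cos(latitude) area weights (an assumption; the text does not state the weighting). All names and numbers are hypothetical.

```python
import math
from statistics import fmean, pvariance


def mean_bias(model_clim, reanalysis_clim):
    """Mean of the monthly bias X_MC - Z_M over the months present in model_clim."""
    return fmean(model_clim[m] - reanalysis_clim[m] for m in model_clim)


def normalized_variance(model_anoms, reanalysis_anoms):
    """Model-to-reanalysis ratio of the variance of the transient term X'_C."""
    return pvariance(model_anoms) / pvariance(reanalysis_anoms)


def area_weighted_mean(values, lats_deg):
    """Regional average of grid-point values, weighting each cell by cos(latitude)."""
    weights = [math.cos(math.radians(lat)) for lat in lats_deg]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Illustrative numbers for two grid cells (not results from this study).
ratios = [normalized_variance([-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]),  # model variance 4x too large
          normalized_variance([-1.0, 1.0], [-1.0, 1.0])]            # variance matches reanalysis
print(area_weighted_mean(ratios, [30.0, 50.0]))
print(mean_bias({1: 272.0, 7: 295.0}, {1: 270.0, 7: 294.0}))  # 1.5
```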

Fig. 2 The CCSM4 versus ERA-Interim ratio of the standard deviation of JJA 500-hPa specific humidity during 1979–2005 for the transient variations (\({\rm X}_{\rm C}^{\prime}\)) from daily to decadal time scales over the North American domain. The red and blue dashed lines outline the upstream western and southern boundary zones, which include the WRF model western and southern boundaries where the CCSM4 model forcing will be used to drive the regional model.

Figure 3 compares the normalized variance versus mean bias averaged over the whole CONUS for eight select fields (850 hPa T, u, v and q, 500 hPa u, v and Z, and SLP) from the five CMIP5 models. Similar plots are shown in Figs. 4 and 5 for the upstream western and southern boundary zones, which have the largest impact on the interior of the CONUS. Figures 3, 4 and 5 show that the variance of the transient variations (\({\rm X}_{\rm C}^{\prime}\)) from the CCSM4 compares favorably with that of the ERA-Interim among the five models. The mean biases for some of the fields (e.g., SLP and 500 hPa Z) are relatively large for the CCSM4; however, these mean biases do not matter here because the mean climatology from this model is not used to construct the forcing [cf. Eqs. (3–4)]. The CCSM4 also has a relatively high resolution with 0.94° lat × 1.25° lon spacing. Given these considerations, the CCSM4 was chosen to provide the transient forcing data (\({\rm X}_{\rm C}^{\prime}\) and \({\rm X}_{\rm F}^{\prime}\)) using one of its ensemble simulations.
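
The selection described above is a qualitative judgement. One hypothetical way to make the "normalized variance close to one" criterion explicit is to score each model by the mean absolute log-ratio over fields and seasons, which treats a variance that is half and one that is double the reanalysis value as equally poor; the mean bias is ignored because it is removed by the bias correction term. The score, the model labels and the numbers are illustrative assumptions, not the procedure or results of this study.

```python
import math
from statistics import fmean


def variance_score(ratios):
    """Hypothetical score: mean |ln(normalized variance)| over fields/seasons; 0 is perfect."""
    return fmean(abs(math.log(r)) for r in ratios)


def rank_models(ratios_by_model):
    """Model names ordered from best (lowest score) to worst."""
    return sorted(ratios_by_model, key=lambda name: variance_score(ratios_by_model[name]))


# Illustrative normalized variances for eight fields in one season (not real model results).
example = {
    "model_a": [0.95, 1.05, 1.10, 0.90, 1.00, 0.98, 1.02, 1.08],
    "model_b": [0.60, 1.50, 0.70, 1.40, 0.80, 1.30, 0.75, 1.25],
}
print(rank_models(example))  # ['model_a', 'model_b']
```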

Fig. 3 Scatter plots of regionally-averaged normalized variance (i.e., model-to-ERA-Interim ratio of the variance) of the transient variations (\({\rm X}_{\rm C}^{\prime}\)) vs. mean bias (XMC − ZM) for eight different fields for the whole CONUS for five CMIP5 models with relatively high resolution. Each color represents one model (e.g., red = CCSM4) and each symbol is for one season or the annual mean (e.g., circles for DJF). Models with normalized variance close to one perform best in simulating \({\rm X}_{\rm C}^{\prime}\). Note that the mean bias will be corrected through the bias correction term in Eqs. (3–4).

5 Summary and final remarks

In this short contribution, we discussed some of the problems in the existing approaches to constructing 6-hourly forcing data for dynamic downscaling and proposed a new approach to overcome them. These problems include the impact of realization-dependent ICV in the forcing and the lack of representativeness of the GHG-forced changes in the traditional method, and the lack of transient weather response in the PGW method. The new approach makes use of the multi-model ensemble mean for estimating the mean model bias and future climate change, and combines the future climate from the multi-model ensemble mean with the transient variations from one select model to generate the future forcing. For the current simulation period, the forcing data from the new approach are the same as those from the traditional approach with a mean bias correction (Bruyère et al. 2014). Thus, the new approach ensures that the mean changes in the forcing data between the current and future periods are primarily due to external climate forcing with little contribution from ICV, and are representative of the multi-model ensemble-mean projections. It also includes forced changes in transient weather and inter-annual variations based on one select model simulation.

In theory, the adjustments through monthly climatology for the bias correction and for the use of the multi-model ensemble mean could generate some inconsistency among the different fields in the adjusted 6-hourly data because of the nonlinear relationships among these fields. In practice, however, such adjustments have not produced noticeable inconsistency in previous downscaling simulations (e.g., Bruyère et al. 2014; Rasmussen et al. 2011, 2014; Liu et al. 2016). We argued that this may be because (1) the mean adjustments are generally small compared with the total variations in 6-hourly data, so that any nonlinear effects are likely to be small, and (2) the buffer zone in the regional model around the lateral boundaries can smooth out any such inconsistency. Thus, inconsistency among the modified fields is unlikely to be an issue in the forcing data generated by our new approach.

In selecting the global model for providing the transient variations (\({\rm X}_{\rm C}^{\prime}\) and \({\rm X}_{\rm F}^{\prime}\)), one needs to consider how well a model simulates the variance of \({\rm X}_{\rm C}^{\prime}\) in comparison with a good reanalysis product over the simulation domain, especially around the upstream boundaries. Another factor is the resolution of the global model. When the regional model is forced directly, without domain nesting (which complicates the downscaling work and decouples the interior domains from the forcing constraint of the GCM), the global model should have a relatively high resolution for convection-permitting downscaling, to reduce the large resolution jump between the RCM and the GCM.

For quantifying the forced climate change, the new approach is better than using output from one or a few individual GCM simulations, which often contain realization-dependent ICV and may also be unrepresentative of the response to future GHG forcing simulated by other models. However, the new approach does not address the uncertainties associated with ICV or inter-model spread in the simulated response. Furthermore, the simulated changes in transient weather patterns are based on one select GCM simulation, which may not be representative of other models. A large ensemble of downscaling simulations is still needed to quantify these uncertainties, possibly through efficient statistical downscaling (Themeßl et al. 2011; Walton et al. 2015). Nevertheless, for those who can afford only a few RCM simulations, our new approach provides an efficient way to quantify the most likely response to future GHG increases.

A final remark is on spectral nudging within the entire downscaling domain (in contrast to the boundary forcing constraint discussed above) to reanalysis fields for large-scale (>1000–2000 km) variations in the free troposphere. This technique is available within the WRF model (for nudging u, v, T, and Z) and has been commonly used to reduce the mean biases over a relatively large domain in present-day simulations, often with good success but at the price of considerable additional computational cost (e.g., Xu and Yang 2015; Liu et al. 2016). However, such nudging puts a strong constraint on the large-scale downscaled fields, as it essentially brings the large-scale fields back to a pre-defined condition, which is the reanalysis field for the present-day run and the reanalysis plus GCM-derived perturbation for the PGW-based future simulation (e.g., Liu et al. 2016). Thus, the downscaled large-scale changes in the free troposphere (which also affect surface fields) are likely to resemble those from the global models, so that only meso- and small-scale (<~1000 km) changes are captured in this type of downscaling. The PGW-based downscaling focuses on thermodynamic impacts of climate change, and the nudging keeps the same weather events (such as hurricanes and other extreme events) in both the current and future simulations. This makes it possible to study the impacts of future warming on recent extreme events if they were to occur in the future warmer climate. For non-PGW-based downscaling, one does not have a standard to nudge to for the future climate, since one cannot consider the GCM fields more reliable than the regional model simulations. For these reasons, we do not recommend spectral nudging in non-PGW-based dynamic downscaling of future climate changes.
One exception is simulations of the present-day conditions, in which spectral nudging can further constrain the large-scale weather patterns to a reanalysis within the domain and thus greatly improve the simulations in comparison with observations. Without the nudging, the interior of a large domain is only weakly constrained by the boundary forcing and thus is allowed to deviate from the real world. This would make it incompatible with observations and thus difficult to evaluate the model’s performance.
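
Spectral nudging acts only on the large-scale part of the difference between the model and the reference fields. As a rough illustration of that scale separation (not WRF's implementation, which works on 2-D fields with its own wavenumber settings), the sketch below low-pass filters a periodic 1-D field with a discrete Fourier transform, keeping only wavelengths longer than a cutoff, and turns the filtered difference into a relaxation tendency with a hypothetical time scale `tau_hours`. Function names and parameters are assumptions.

```python
import cmath
import math


def large_scale_part(values, domain_km, cutoff_km):
    """Keep Fourier components of a periodic 1-D field whose wavelength exceeds cutoff_km."""
    n = len(values)
    coeffs = [sum(v * cmath.exp(-2j * math.pi * k * j / n) for j, v in enumerate(values)) / n
              for k in range(n)]
    kept = []
    for k, c in enumerate(coeffs):
        wavenumber = min(k, n - k)  # number of waves across the domain
        kept.append(c if wavenumber == 0 or domain_km / wavenumber > cutoff_km else 0)
    return [sum(c * cmath.exp(2j * math.pi * k * j / n) for k, c in enumerate(kept)).real
            for j in range(n)]


def nudging_tendency(model, reference, domain_km, cutoff_km, tau_hours):
    """Relaxation tendency (units per hour) toward the large-scale part of reference - model."""
    diff = [r - m for r, m in zip(reference, model)]
    return [d / tau_hours for d in large_scale_part(diff, domain_km, cutoff_km)]


n, domain = 16, 4000.0
# One wave across the domain (4000 km wavelength) plus four waves (1000 km wavelength).
field = [math.cos(2 * math.pi * j / n) + math.cos(2 * math.pi * 4 * j / n) for j in range(n)]
smooth = large_scale_part(field, domain, cutoff_km=1500.0)
print(max(abs(s - math.cos(2 * math.pi * j / n)) for j, s in enumerate(smooth)))
```

With a 1500 km cutoff the 1000 km wave is removed and only the domain-scale wave remains, so the printed residual is at round-off level; only that large-scale part of the difference would be relaxed toward the reference.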

References

Bruyère CL, Done JM, Holland GJ, Fredrick S (2014) Bias corrections of global models for regional climate simulations of high-impact weather. Clim Dyn 43:1847–1856. doi:10.1007/s00382-013-2011-6

Collins M et al (2013) Long-term climate change: Projections, commitments and irreversibility. In: Stocker TF et al (eds) Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, pp 1029–1136

Dai A (2013) The influence of the Inter-decadal Pacific Oscillation on U.S. precipitation during 1923–2010. Clim Dyn 41:633–646. doi:10.1007/s00382-012-1446-5

Dai A, Bloecker CE (2017a) Impacts of internal variability on temperature and precipitation trends in large ensemble simulations by two climate models. Clim Dyn (submitted)

Dai A, Fyfe JC, Xie S-P, Dai X (2015) Decadal modulation of global surface temperature by internal climate variability. Nat Clim Change 5:555–559. doi:10.1038/nclimate2605

Dai A, Rasmussen RM, Liu C, Ikeda K, Prein AF (2017b) Changes in precipitation characteristics over North America by the late 21st century simulated by a convection-permitting model. Clim Dyn (revised)

Dee DP et al (2011) The ERA-Interim reanalysis: configuration and performance of the data assimilation system. Quart J R Meteorol Soc 137:553–597. doi:10.1002/qj.828

Deser C, Knutti R, Solomon S, Phillips AS (2012a) Communication of the role of natural variability in future North American climate. Nat Clim Change 2:775–779. doi:10.1038/nclimate1562

Deser C, Phillips AS, Bourdette V, Teng H (2012b) Uncertainty in climate change projections: the role of internal variability. Clim Dyn 38:527–546. doi:10.1007/s00382-010-0977-x

Deser C, Phillips AS, Alexander MA, Smoliak BV (2014) Projecting North American climate over the next 50 years: uncertainty due to internal variability. J Clim 27:2271–2296. doi:10.1175/JCLI-D-13-00451.1

Dong B, Dai A (2015) The influence of the Inter-decadal Pacific Oscillation on temperature and precipitation over the globe. Clim Dyn 45:2667–2681. doi:10.1007/s00382-015-2500-x

Giorgi F, Mearns LO (1991) Approaches to the simulation of regional climate change: a review. Rev Geophys 29:191–216

Giorgi F, Lionello P (2008) Climate change projections for the Mediterranean region. Global Planet Change 63:90–104

Giorgi F, Jones C, Asrar GR (2006) Addressing climate information needs at the regional level: the CORDEX framework. Bull World Meteorol Organ 58:175–183

Hara M, Yoshikane T, Kawase H, Kimura F (2008) Estimation of the impact of global warming on snow depth in Japan by the pseudo-global warming method. Hydrol Res Lett 2:61–64

Jacob D et al (2014) EURO-CORDEX: new high-resolution climate change projections for European impact research. Reg Environ Change 14:563–578. doi:10.1007/s10113-013-0499-2

Kawase H, Yoshikane T, Hara M, Kimura F, Yasunari T, Ailikun B, Ueda H, Inoue T (2009) Intermodel variability of future changes in the Baiu rainband estimated by the pseudo global warming downscaling method. J Geophys Res 114:D24110. doi:10.1029/2009JD011803

Kendon E, Ban N, Roberts N, Fowler H, Roberts M, Chan S, Evans J, Fosser G, Wilkinson J (2017) Do convection-permitting regional climate models improve projections of future precipitation change? Bull Am Meteorol Soc 98:79–93. doi:10.1175/BAMS-D-15-0004.1

Knutti R, Furrer R, Tebaldi C, Cermak J, Meehl GA (2010) Challenges in combining projections from multiple climate models. J Clim 23:2739–2758

Liu C et al (2016) Continental-scale convection-permitting modeling of the current and future climate of North America. Clim Dyn. doi:10.1007/s00382-016-3327-9 (in press)

Maraun D et al (2010) Precipitation downscaling under climate change: recent developments to bridge the gap between dynamical models and the end user. Rev Geophys 48:RG3003. doi:10.1029/2009RG000314

Mearns LO, Gutowski WJ, Jones R, Leung L-Y, McGinnis S, Nunes AMB, Qian Y (2009) A regional climate change assessment program for North America. EOS 90:311–312

Prein AF et al (2015) A review on regional convection-permitting climate modeling: demonstrations, prospects, and challenges. Rev Geophys 53:323–361. doi:10.1002/2014RG000475

Prein AF, Rasmussen RM, Ikeda K, Liu C, Clark MP, Holland GJ (2017) The future intensification of hourly precipitation extremes. Nat Clim Change 7:48–52. doi:10.1038/NCLIMATE3168

Rasmussen RM et al (2011) High-resolution coupled climate runoff simulations of seasonal snowfall over Colorado: a process study of current and warmer climate. J Clim 24:3015–3048

Rasmussen RM, Ikeda K, Liu C, Gochis D, Clark M, Dai A, Gutmann E, Dudhia J, Chen F, Barlage M, Yates D (2014) Climate change impacts on the water balance of the Colorado Headwaters: high-resolution regional climate model simulations. J Hydrometeorol 15:1091–1116

Schär C, Frei C, Lüthi D, Davies HC (1996) Surrogate climate-change scenarios for regional climate models. Geophys Res Lett 23:669–672

Themeßl MJ, Gobiet A, Leuprecht A (2010) Empirical-statistical downscaling and error correction of daily precipitation from regional climate models. Int J Climatol 31:1530–1544

Vautard R et al (2014) The European climate under a 2 °C global warming. Environ Res Lett 9:034006. doi:10.1088/1748-9326/9/3/034006

Wallace JM, Deser C, Smoliak BV, Phillips AS (2016) Attribution of climate change in the presence of internal variability. In: Chang CP et al (eds) Climate Change: Multidecadal and Beyond. World Scientific Series on Asia-Pacific Weather and Climate, vol 6, World Scientific, pp 1–29

Walton DB, Sun F, Hall A, Capps S (2015) A hybrid dynamical-statistical downscaling technique. Part I: Development and validation of the technique. J Clim 28:4597–4617

Wang J, Kotamarthi VR (2015) High-resolution dynamically downscaled projections of precipitation in the mid and late 21st century over North America. Earth’s Future 3:268–288. doi:10.1002/2015EF000304

Wilby RL, Wigley TML (1997) Downscaling general circulation model output: a review of methods and limitations. Progr Phys Geogr 21:530–548

Xu Z, Yang Z-L (2015) A new dynamical downscaling approach with GCM bias corrections and spectral nudging. J Geophys Res Atmos 120:3063–3084. doi:10.1002/2014JD022958

Acknowledgements

A. Dai acknowledges support from the U.S. National Science Foundation (Grant #AGS–1353740), the U.S. Department of Energy’s Office of Science (Award #DE–SC0012602), and the U.S. National Oceanic and Atmospheric Administration (Award #NA15OAR4310086). NCAR is funded by the National Science Foundation. Computer resources were provided by the Computational and Information Systems Laboratory of NCAR.

Additional information

This paper is a contribution to the special issue on Advances in Convection-Permitting Climate Modeling, consisting of papers that focus on the evaluation, climate change assessment, and feedback processes in kilometer-scale simulations and observations. The special issue is coordinated by Christopher L. Castro, Justin R. Minder, and Andreas F. Prein.

Cite this article

Dai, A., Rasmussen, R.M., Ikeda, K. et al. A new approach to construct representative future forcing data for dynamic downscaling. Clim Dyn 55, 315–323 (2020). https://doi.org/10.1007/s00382-017-3708-8

DOI: https://doi.org/10.1007/s00382-017-3708-8