Abstract

The intensity of European heatwaves is connected to specific synoptic atmospheric circulation. Given the relatively small number of observations, estimates of the connection between the circulation and temperature require ad hoc statistical methods. This can be achieved through the use of analogue methods, which allow to determine a distribution of temperature conditioned to the circulation. The computation of analogues depends on a few parameters. In this article, we evaluate the influence of the variable representing the circulation, the size of the domain of computation, the length of the dataset, and the number of analogues on the reconstituted temperature anomalies. We tested the sensitivity of the reconstitution of temperature to these parameters for four emblematic recent heatwaves: June 2003, August 2003, July 2006 and July 2015. The paper provides general guidelines for the use of flow analogues to investigate European summer heatwaves. We found that Z500 is better suited than SLP to simulate temperature anomalies, and that rather small domains lead to better reconstitutions. The dataset length has an important influence on the uncertainty. We conclude by a set of recommendations for an optimal use of analogues to probe European heatwaves.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

There have been many studies showing that heatwaves are bound to become more intense and more frequent under climate change (Field and Intergovernmental Panel on Climate Change 2012). The evolution of the probabilities of those events and of their properties, such as intensity, duration and extent, is a key question for adaptation due to their impacts, including on crop yields (Ciais et al. 2005) and human health (Peng et al. 2011; Fouillet et al. 2006). A first step is to understand the physical processes at play during heatwaves, such as the influence of soil moisture (Seneviratne et al. 2010), or SST (Feudale and Shukla 2007). Yiou and Nogaj (2004) studied the relation between the atmospheric circulation and extreme events over the North Atlantic and Horton et al. (2015) linked the increase of heatwaves to the increase of the frequency of mainly anticyclonic weather types. In this paper, we aim at quantifying the role of the atmospheric circulation during spells of high temperatures, that occurred in major European heatwaves. In particular, we want to understand which proportion of the heatwave intensities can be explained solely based on the associated atmospheric circulation, in an effort to disentangle its contribution compared to other factors such as global warming or land surface feedbacks (Shepherd 2015).

Our methodology is based on flow analogues (e.g. Yiou et al. 2014). Historically, analogues were used in weather forecasting (e.g. Lorenz 1969; Duband 1981; Toth 1991; Chardon et al. 2016; Ben Daoud et al. 2016). They have been used in empirical downscaling (e.g. Chardon et al. 2014; Zorita and von Storch 1999), circulation dependent bias correction (e.g. Turco et al. 2011; Hamill and Whitaker 2006; Hamill et al. 2015; Djalalova et al. 2015), in combination with ensemble data assimilation (Tandeo et al. 2015), in probabilistic wind energy potential estimation (Vanvyve et al. 2015), and paleo climate reconstruction (Schenk and Zorita 2012; Gómez-Navarro et al. 2014).

Here, the analogues are defined as days with an atmospheric circulation similar to the day of interest. The underlying assumption is that the circulation has an influence on more local climate variables such as temperature and that therefore the temperature in a specific region given a certain type of circulation has a more narrow distribution than the unconditioned temperature in the same region. To isolate the influence of certain types of circulation on the temperature, we compare the probability density functions of temperature anomalies reconstructed for both randomly picked days and days picked among analogues. The analogues depend on many parameters, including the size of the domain of computation, or the length of the dataset. The goal of this paper is to provide general guidelines to choose those parameters to get flow analogues adapted to the study of European summer heatwaves. Those guidelines are obtained from four emblematic cases of heatwaves. Our paper explores physical parameters on which the analogues are computed, and focuses on temperature reconstructions.

Section 2 details the methodology used in this study. Section 3 tests the sensitivity of several physical and statistical parameters on which the methodology is based. A part of this section is devoted to a qualitative evalutation of the uncertainty related to the limited size of the datasets. Section 4 focuses on the role played by the circulation in each of the chosen case studies. The results are discussed in Sect. 5 and conclusions appear in Sect. 6.

2 Methodology

2.1 Heatwave selection

We focus on heatwaves occurring during the summer months (June–July–August: JJA), knowing that the processes involved in the development of a heatwave vary from one season to the other. We chose heatwaves that stroke Europe since 2000: June and August 2003 (e.g. Beniston and Diaz 2004; Fischer et al. 2007; Cassou et al. 2005) in Western Europe (WE), July 2006 (Rebetez et al. 2009) in Northern Europe (NE), and July 2015 (Russo et al. 2015) in Southern Europe (SE). We chose to study June and August 2003 and not the whole summer for consistency in the length of the studied heatwaves. Furthermore, both heatwaves have been studied separately by Stéfanon et al. (2012). We use the NCEP reanalysis I dataset (Kalnay et al. 1996), which provides us with 69 years of data from 1948 to 2016. The advantage of this dataset is that it is updated near real time (with a 3 days delay), so that the methodology could give results already a few days after a given event. Longer datasets like ERA20C (Poli et al. 2016) or the NCEP 20th Century Reanalysis (Compo et al. 2011) are less frequently updated or do not include 2015, and were therefore not retained.

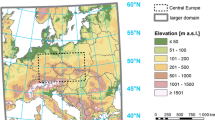

The peak temperatures occurred in different regions for each heatwave. These regions correspond to the black boxes in Fig. 1. They are centered on the region of highest temperature anomaly. The size of the boxes was defined such that the monthly temperature anomalies averaged over them are records (see Fig. 2). Hence we identify two heatwaves in 2003, in June and August, which is consistent with Stéfanon et al. (2012). Choosing a slightly larger box does not alter the results or the methodology.

We observe a significant linear temperature trend (p-value <0.05), related to climate change, for each month and region studied (red lines in Fig. 2): 0.23 \(^{\circ }\rm{C}\) per decade for June (WE), 0.24 °C for July (NE and SE) and 0.25 °C for August(WE). For the rest of the study we calculate detrended temperatures using a non-linear trend, calculated with a cubic smoothing spline (green lines in Fig. 2). The reason is to extract the role of circulation in high temperature extremes, regardless of the state of the background climate, the evolution of which is non-linear.

Evolution of the monthly temperature anomalies averaged over the regions defined in Fig. 1. The red line corresponds to the linear trend, which is significant (p-value <0.05) in all cases. The green line corresponds to a non linear trend calculated with a cubic smoothing spline

2.2 Flow analogues

We used flow analogues to extract the contribution of atmospheric circulation to the chosen heatwave events comparing their temperature anomalies to those of analogues. Analogues were defined as the N days with the most similar detrended sea level pressure (SLP) or geopotential height at 500 hPa (Z500) anomaly fields. The similarity was measured with the Euclidean distance between two maps (Yiou 2014). We only considered the days within a 61 calendar days (30 days before and 30 days after) window centered on the day of interest because of the seasonal cycle of both circulation and temperature (Yiou et al. 2012). We further exclude the days coming from the same year as the event from the 1948–2015 NCEP dataset, because of the persistence of the circulation. The program used to compute analogues CASTf90 is available online (https://a2c2.lsce.ipsl.fr/index.php/licences/file/castf90?id=3). Once the analogues were selected, we came back to the observable of interest (the detrended temperature anomalies) on those selected days. The whole process is summarized in Fig. 3.

A day with an extreme temperature anomaly (map on the top left) has a corresponding circulation, represented by the geopotential height at 500 hPa (map on the bottom left). Flow analogues are days within the database which have a similar circulation to the day of interest (maps on the bottom right). The temperature anomalies of the analogues (maps on the top right) are then compared to the temperature anomalies of the day of interest (map on the top left)

2.3 Reconstruction of temperature distributions

Our goal is to reconstruct the probability distribution of detrended temperature anomalies conditional to the atmospheric circulation. For this, we consider a day i, with a temperature \(T_i\) and a circulation \(C_i\) with N analogues \(C_i^1\ldots ,C_i^N\). The circulation analogues \(\text {ana}_i^1\ldots \text {ana}_i^N\) provide N copies of detrended temperature anomalies. Hence, we can recreate a sequence of daily temperature anomalies over a month by randomly picking one of the N best analogues for each day. The resulting monthly mean temperature anomaly is called uchronic, because it is a temperature anomaly that might have occurred for a given circulation pattern sequence. By reiterating this process, we recreated probability distributions of uchronic monthly detrended temperature anomalies conditional to the atmospheric circulation. We then compared this distribution to a distribution built from random days instead of analogues. In the rest of the article, we set the number of random iterations to 1000. This procedure is a simplified version of the stochastic weather generator of Yiou (2014), who also used weights based on the distances of the analogues. Table 1 illustrates this process for the July 2015 case.

3 Parameter sensitivity tests

The presented method depends on a few parameters. Their choice has an influence on both the results and their robustness. The following section explores the role of those parameters and how tuning them may give us further information on the relationship between circulation patterns and extreme temperature anomalies. We also want to know whether those parameters should depend on the specific event or not. This determines how general the approach can be and therefore its potential application to future events and other extra-tropical regions. In particular, we studied the role played by physical parameters: the variable on which the analogues are computed (SLP or Z500), the choice of the size of the domain on which the analogues are computed, and the length of the dataset, and a statistical parameter: the number N of analogues we kept.

3.1 Variable representing the circulation

The probability density of uchronic temperature anomalies from circulation analogues generated using detrended SLP (left boxplot of each subfigure) or detrended geopotential height at 500 hPa (right boxplot of each subfigure) for each case study: June 2003 (a), August 2003 (b), July 2006 (c), July 2015 (d). The red line represents the observed detrended temperature anomaly of the event. The three lines composing the boxplot are respectively from bottom to top, the 25th (q25), median (q50) and 75th quantiles (q75). The value of the upper whiskers is \(min(1.5\times (q75-q25)+q50,\,max(\)temperature anomaly)). The value of the lower whiskers is its conjugate

SLP (e.g. Cassou and Cattiaux 2016; Sutton and Hodson 2005; Della-Marta et al. 2007) and Z500 (e.g. Horton et al. 2015; Quesada et al. 2012; Dole et al. 2011) are the most commonly used variables to study the atmospheric circulation. We calculated analogues using either the detrended SLP or the detrended Z500. The detrending was needed due to the dependence of Z500 on lower tropospheric temperatures, which are increasing due to anthropogenic climate change. We also detrended SLP since we found a small significant positive trend of mean monthly SLP over the North Atlantic domain for the 1948–2015 period.

The detrending of SLP and Z500 was done by computing a monthly spatial average of those fields. Then a non-linear trend was calculated with a cubic smoothing spline (Green and Silverman 1994), in order to take into account the non linearity of climate change. This trend was removed to daily fields, which preserves the circulation patterns. We calculated the trends for both the North Atlantic region and the smaller regions on which the analogues are calculated. The differences between the trends for both regions were small. We did the detrending on the North Atlantic region in this study because the uncertainties on circulation patterns are amplified for smaller regions, especially as the NCEP reanalysis I grid is coarse (with a resolution of about 210 km).

The uchronic detrended temperature anomalies for each event that were calculated using analogues of detrended SLP or detrended Z500 are shown in Fig. 4. The analogues computed using Z500 give uchronic temperature anomalies closer to the observed detrended temperature anomaly of the event than those computed using SLP. For the July 2015 case with an observed detrended temperature anomaly of 2.06 \(^{\circ }\)C for example the mean of uchronic temperature anomalies calculated using SLP is 0.73 \(^{\circ }\)C while the mean uchronic temperature anomaly calculated using Z500 is 1.76 \(^{\circ }\)C. The results are qualitatively similar for the other cases. The better performance of the Z500 analogues compared to the SLP analogues is probably related to the heat low process (e.g. Portela and Castro 1996). Warm anomalies of surface temperature lead to convection. The elevation of warm air masses creates a local depression, which adds on top of an anticyclonic anomaly a cyclonic anomaly. This flattens the SLP patterns and blurs the signal, which does not happen with Z500. By using Z500 we also avoid any influence of the relief. Hence, we kept the detrended Z500 to compute the analogues for the rest of the study.

3.2 Size of the domain

The scale on which we compare circulation patterns plays a key role in the computation of the analogues. If the domain is too large, the system becomes too complicated, with too many degrees of freedom. The analogues could consequently only extract a low frequency signal, like the seasonal cycle. Van den Dool (1994) evaluates that it would take \(10^{30}\) years of data to find two matching observed flows for analogues computed over the Northern Hemisphere. If we choose too small a domain, then we cannot study the role of the synoptic circulation. So, on the one hand, it is no use to calculate analogues on whole hemispheres, and on the other hand, we do not want to select domains which are smaller than the typical scale of extra-tropical cyclones (1000 km approximately). Radanovics et al. (2013) investigated automatic algorithms to adjust the domain size of the analogues for precipitation. Here, we prefer to select a domain that yields an a priori physical relevance to account for the most important features of the flow that affects high temperatures in Europe.

The ideal size of the domain reveals the scale at which the processes are relevant and may very well vary from one event to the other. This especially applies for studies on other types of events such as heavy precipitation, droughts or storms. We compared three different domains shown in Fig. 5 (right hand side):

-

A large domain (the whole maps in Fig. 5), including the North Atlantic region, which corresponds to the domain usually used to calculate weather regimes (Vautard 1990; Michelangeli et al. 1995),

-

A medium domain (the golden rectangles in Fig. 5), centered on Europe, which is much smaller than the North Atlantic domain while being common to all events, and

-

A small domain tailored for each event (the purple rectangles in Fig. 5), depending on the circulation pattern of the specific summer.

Dependence of the probability density of uchronic detrended temperatures on the size of the domain. The maps on the right column represent the detrended Z500 monthly anomaly (m). The purple rectangles indicate the smallest zones of computation of flow analogues. The golden rectangles indicate the medium zone of computation of flow analogues. The large zone is the whole map. The boxplots of the left column display the distribution of the 1000 uchronic monthly detrented temperature constituted from randomly picked analogues. The color of the boxplot corresponds to the color of the rectangle delineating the region on which the analogues are computed. The red lines on the left hand side of the figure represent the observed detrended temperature of the case studies, from top to bottom: June 2003, August 2003, July 2006, and July 2015

The results are displayed on the left hand side of Fig. 5. The detrended temperature anomalies of the heatwaves of interest, shown by the red lines, are better reproduced using the smaller domains to calculate the circulation analogues for all four cases. This is because there are circulation patterns included in the North Atlantic domain which probably play no role in the establishment of a heatwave over Europe. For example in July 2015 we observe an important anticyclonic anomaly over Greenland. It adds a constraint on the analogues while supposedly playing no role on the lesser anticyclonic anomaly over the Northern Mediterranean region. The standard deviation of the uchronic detrended temperature anomalies also decreases with the size of the domain.

It is relevant to rely on standard domains for a first estimation of the role played by the circulation in the occurrence of a heatwave, for example by using the regions defined in Field and Intergovernmental Panel on Climate Change (2012). However, for a finer analysis focused on one specific heatwave, or a few given events, the choice of a tailored small domain gives better results. In the rest of the study, we hence kept the smaller domains.

3.3 Length of the dataset

The NCEP dataset contains 69 years. Although the recombination of analogues allows to recreate new events, the dataset is finite and hence does not cover the whole range of possible events. For example, if the circulation leading to a heatwave has a return period of more than the dataset length, there might not be similar circulation patterns in the dataset. In this situation, the computed analogues will not be a good proxy of the circulation of interest. Furthermore, even if there are close daily analogues to the daily circulation of the event, it might not account for other thermodynamical processes that may or may not happen simultaneously and lead to extreme temperatures. This shortcoming is called sampling uncertainty (Committee on Extreme Weather Events and Climate Change Attribution 2016, Chap. 3), related to the fact that the past is one occurrence of many realizations which could have happened for a given state of the climate.

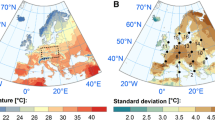

In order to get an order of magnitude of that uncertainty in the reconstruction of probability densities of temperature anomalies we used a 500 years long pre-industrial run from CMIP5 (Taylor et al. 2012). The model used is GFDL-ESM2M (Dunne et al. 2012, 2013). We chose this model because it was the model available on the IPSL data center with the longest run for both the temperature and the Z500. We selected one heatwave similar to July 2015, both in terms of temperature anomaly (compared to the detrended anomaly of July 2015) and circulation patterns (see Fig. 6). We assume that the internal variability of the model is similar to the internal variability of the reanalysis.

Analogues were computed for 60 different subsets of the 500 year dataset. The lengths of the subsets were 33, 68, 100 and 200 years (e.g. subsets of 68 consecutive years each, starting every 5 years of the data set). We then compared the means of the uchronic temperature anomaly distributions for the chosen July 2015-like month to one another for different subset lengths. The spread of the mean uchronic temperature anomalies calculated this way gives an estimation of the uncertainty related to the limited length of the dataset.

Figure 7 displays the results for subsets of 33, 68, 100 and 200 years. When the number of years of the subset decreases, the spread of the mean uchronic temperature anomalies increases, going up to approximately 0.71 \(^{\circ }\)C for the 33 years subsets, 0.62 \(^{\circ }\)C for 68 years, 0.36 \(^{\circ }\)C for 100 years, and 0.14 \(^{\circ }\)C for 200 years. This information is precious to determine in which measure smaller datasets are relevant for this methodology. It means for example that differences of up to 0.71 \(^{\circ }\)C in the mean uchronic temperatures calculated from 33 years long subsets can possibly occur due to internal variability without strictly needing additional forcing.

Sensitivity to interdecadal variability depending on the length of the dataset. Distributions of the mean uchronic temperature anomalies for 60 different subsets of varying sizes (33, 68, 100, or 200 years) from a 500 years long pre-industrial control run (model GFDL-ESM2M) for the small domain of analogues computation

The ability to find analogues close to the circulation of interest is related to both the size of the dataset and the size of the domain on which the analogues are computed (Van den Dool 1994). It means that the analogues method will get more and more accurate as the reanalysis dataset extends in the years to come.

3.4 Number of analogues

For the reconstruction of events by recombination of analogues, we kept the N best analogues. The choice of N has an influence on both the uchronic detrended temperature anomalies and the statistical robustness of the study. The best uchronic detrended temperature anomalies are closer to the observed detrended temperature anomalies of the actual events for all case studies (Fig. 8).

Dependence of the probability density of uchronic temperature anomalies on the number of analogues retained. Difference between uchronic temperature anomaly distributions calculated using different numbers of analogues for each case study: June 2003 (a), August 2003 (b), July 2007 (c), July 2015 (d). The red line represents the observed detrended temperature anomaly of the event

4 The role of circulation in heatwaves

With the parameters kept (Z500, small domains, 68 years reanalysis data, and 20 analogues) we simulated 1000 uchronic detrended monthly mean temperature anomalies for each of the four selected heatwave events (see the analogues boxplots in Fig. 9). The circulation contribution corresponds to the mean of the uchronic temperature anomaly distribution simulated using circulation analogues. The spread of the boxplots is due to the range of other processes which can, for a given circulation, lead to different temperature anomalies.

In order to measure the contribution of the circulation we compared the distribution of uchronic detrended temperature anomalies with a control distribution built using random days (Control-1 boxplots on Fig. 9). The control distribution is supposed to represent monthly detrended temperature anomalies for the given month and the given region without focusing on specific circulation patterns. However, the variability of random summers built that way is not realistic because the dependence between consecutive days is not accounted for. Analogues are by construction dependent from one another, because they are calculated using maps from consecutive (hence correlated) days, whereas randomly picked days are independent.

In order to create a more realistic distribution of temperature anomalies using random days, we also calculated detrended monthly mean temperature anomalies by using only one out of M days. M is a measure of the persistence of the circulation that is accounted for. We computed the autocorrelation of the detrended Z500 NCEP dataset for summer months (JJA) on each of the four small domains, for each grid point, with lags from 1 to 20 days (similar to Yiou et al. (2014)). For more than 10 days, the autocorrelations median tends to an asymptotic value of approximately 0.1. For 3 days, the median of the autocorrelation distribution is of approximately 0.65. For 4 days, it decreases to 0.45. Since the regions are small, the number of degrees of freedom is small too, which means that an autocorrelation of 0.45 is negligible. We hence arbitrarily decided to set \(M=3\) (Control-3 boxplots on Fig. 9). The circulation during heatwaves corresponds to a long-lasting blocking situation, hence the persistence is probably more than 3 days. This underestimation, combined with the limited length of the dataset explains why the studied events are all outside of the distributions calculated using random days subsampled every 3 days.

For every event, the circulation plays a significant role in the occurrence of the extreme. It only explains a part of it, more or less significant depending on the event. Indeed, it explains 38% of the anomaly for August 2003, 57% for June 2003, 81% for July 2015 and 92% for July 2006. Considering only the uchronic detrended temperature anomaly distribution, the observed heatwave is plausible given the large-scale trends and the circulation for both July 2006 and July 2015. Indeed the observed detrended temperature anomaly is within 2\(\sigma\) of the uchronic detrended temperature anomaly distribution. The circulation together with the subtracted large-scale trend could explain the observed temperature anomaly. This is not the case for June and August 2003 where the observed detrended temperature anomaly is respectively 6.1\(\sigma\) and 8.6\(\sigma\) above the mean of the uchronic detrended temperature distribution (see Table 2). The smaller standard deviation of the uchronic detrended temperature distribution compared to the random ones shows the effect of the analogues, that is to select a part of the distribution conditioned to the flow. Indeed the standard deviation of the uchronic detrended temperature anomaly distribution is approximately a third of the standard deviation of the temperature anomaly distribution using random days taking into account the persistence of the circulation (Control-3). Both standard deviations might be slightly underestimated due to persistence that was not accounted for. In the case of the uchronic temperature anomalies this can happen due to the random pick among the analogue days and for the Control-3 due to situations with more than 3 days of persistence that are not accounted for.

Probability distributions of uchronic detrended monthly temperature anomalies simulated using random days (left boxplot of each subfigure), random days subsampled every 3 days to correct for serial dependence (middle boxplot of each subfigure) and analogues (right boxplot of each subfigure) for each case study: June 2003 (a), August 2003 (b), July 2007 (c), July 2015 (d). The red line represents the observed detrended temperature anomaly of the event

In order to contextualize the four case studies, we reproduced the same kind of probability density function experiments for the same regions from 1948 to 2015 (Fig. 10). We calculated the uchronic detrended temperature anomaly distributions for the months of June from 1948 to 2015 on the regions (both the temperature and the circulation regions) defined for June 2003 (Fig. 10a). We did the same for the other three events. This type of recontextualisation can be interpreted as an estimation of how extreme an event really is, with respect to its atmospheric circulation.

Evolution of the detrended temperature distributions for all the months of June in Western Europe (a), August in Western Europe (b), July in Northern Europe (c) and July in Southern Europe (d). The regions are displayed in Fig. 1. The red dots correspond to the observed detrended temperature anomaly for each year

The observed monthly mean detrended temperature anomaly falls between the 10th and 90th percentiles of the uchronic detrended temperature anomaly distribution for more than half of the years between 1948 and 2015. It falls between the 1st and 99th percentiles for more than two thirds of the years, even though the uchronic temperature anomaly distribution has a small spread compared to the total distribution. The years with observed detrended temperature anomalies out of interval between the 1st and 99th percentile correspond mostly to large detrended temperature anomalies with absolute value \({>}{0.5}\) \(^{\circ }\)C. For less than a quarter of the years between 1948 and 2015 the mean of the uchronic detrended temperature anomaly distribution has a sign different from the observed detrended temperature anomaly. Those years correspond to low detrended temperature anomalies with absolute values \({<}{0.5}\) \(^{\circ }\)C.

5 Discussion

The median of the uchronic temperature anomaly distribution is generally different from the observed temperature anomaly. In some cases, the observed detrended temperature anomaly (red line on Fig. 9) is not even in the uchronic temperature anomaly distribution. On Fig. 9 for June and August 2003, and for some of the years on Fig. 10, this is the case (indeed, the monthly detrended temperature anomalies for both months are higher than 3 \(^{\circ }\rm{C}\)). This difference shows caveats in the methodology, and that some heatwave events cannot be explained only by their circulation.

Flow analogues are unable to reproduce the role played by the soil-moisture feedback. Indeed, the analogues do not take into account the history of the heatwave. Extreme heatwaves happen when the circulation causing the initial anomaly of temperature lasts more than a few days. As soil moisture becomes limited, the cooling of the atmosphere through evapotranspiration gets weaker, which exercises a positive feedback on the temperature. Seneviratne et al. (2010) isolates a dry and a wet regime, with a transition phase between both. The three temperature regions used here are prone to different evaporative regimes. In particular, the Northern Europe region is wetter than the other two. The role of soil moisture is thus less important (Seneviratne et al. 2006). On the other hand, several articles (Stéfanon et al. 2012; Fischer et al. 2007) showed the role of soil moisture in the exceptional temperature anomalies of summer 2003, especially for August. The analogues are picked without any condition on the previous days or soil moisture, and consequently they fail to reach the observed anomaly.

The main caveat of this methodology is the limited size of the dataset, which introduces an important sampling uncertainty, as seen in Sect. 3.3, and also affects the quality of the analogues. As a result, the analogues might not be good enough to accurately reproduce the dynamical contribution. Indeed, an extreme temperature can be related to a rare circulation, the like of which might not be found in a short dataset. The distances between the analogues and the event, as well as their correlations, are indices to evaluate the relevance of the analogues in each case. A better definition of what is a good analogue will require further studies. Depending on the magnitude of the studied event, it might not be possible to reconstruct a comparable month by resampling the days in the dataset. This is the case for both June and August 2003, which have temperature anomalies about one degree Celsius above all the other years, despite the detrending. If the event is too rare, it will not be possible to reconstitute uchronic temperature anomalies close to the observed ones.

Another limitation relates to the coherence of the uchronic summers computed using analogues. Due to the persistence of the circulation, the analogues we picked for each day are correlated to one another. Indeed, analogues of following -and thus correlated- days are not independent. In our case, we picked the 20 best analogues for each day. For each event we hence have an ensemble of 20 times the number of days of the month analogues. A proof of the correlation between analogues of following days is that only half of the analogues in this ensemble are unique. However, the persistence is still underestimated compared to real summers. Consequently, the spread of the computed uchronic temperature anomaly distributions is underestimated.

Lastly, this article only considers one month-long heatwaves, while some events as short as three consecutive days can be considered as heatwaves (Russo et al. 2015). We have tested how the length of heatwaves affects the uncertainties of the method using a test similar to the one used in Sect. 3.3, for events of different length (not shown here). The sampling uncertainty on the mean uchronic temperature anomaly decreases for longer events. It also seems that it can differ from one week-long event to the other. For week-long events, the probability to only have days with poor analogues is higher than for longer events, especially if we deal with unusual events in terms of atmospheric circulation. Since the reasons behind those differences relate to the quality of analogues, we intend to treat this more thoroughly in further studies. However, we recommend to accompany any study using analogues as presented in this article with an evaluation of the sampling uncertainty to validate the relevance of the methodology. This evaluation could be based on pre-industrial runs similar to what is displayed here in Sect. 3.3 or on large ensembles of simulations.

6 Conclusion

This paper proposes to quantify the role of the atmospheric circulation in the occurrence of an extreme monthly anomaly of temperature. The strength of our methodology is that it is easily adaptable to other regions, and to other events. The parameter sensitivity tests of section three provide general guidelines to choose flow analogues to investigate European summer heatwaves. It is best to use detrended Z500 as a proxy of circulation, and to compile the analogues on a small domain centered on the Z500 anomaly concomitant to the event. We also advise to use as long a dataset as possible.

The results on parameter sensitivities have potential implications for applications of the analogue method in a downscaling or reconstruction context as well. The questions of the predictor variable (or variables), that is the circulation proxy, is relevant in the downscaling context but may vary depending on the predictand variable. The question of domain size has been treated by several authors (e.g. Chardon et al. 2014; Radanovics et al. 2013; Beck et al. 2015) and the results are systematically in favor of relatively small domains, in line with our findings. Tests on archive lengths larger than typical reanalysis record lengths are rarely performed. The results are relevant since split-sample validation of downscaling methods is common practice and our results show that splitting the limited length reanalysis record leads to large uncertainties in the uchronic temperatures due to the limited sample size even using a relatively small domain.

The reconstitution of an ensemble of uchronic temperatures for a given circulation is a first step to refine the approach of Cattiaux et al. (2010) to extreme event attribution. Indeed, looking at changes for a given circulation should reduce the signal to noise ratio of climate change versus natural variability (Trenberth et al. 2015) in what Shepherd (2016) calls a “storyline approach” to extreme events attribution. There are two ways to compare two worlds with and without climate change. The first one is to use climate simulations with and without anthropogenic forcing. The second one is to compare observations of recent years to observations from further back in time. It is then possible to detect a change between two periods or two simulations outputs. One has to keep in mind that detecting a difference of temperature is not enough to attribute the difference between the two to climate change, rather than to natural variability. Indeed, the internal variability between the two periods could be of the same order of magnitude than the difference caused by climate change. We have shown in Sect. 3.3 that the longer the dataset, the more it reduces the impact of internal variability on the results.

Since among the tested parameters only the regions of the temperature anomaly and of the geopotential height field depend on the event, a diagnosis on heatwaves can be automatized and computed in less than a day once the data set is available.

References

Beck C, Philipp A, Jacobeit J (2015) Interannual drought index variations in Central Europe related to the large-scale atmospheric circulation—application and evaluation of statistical downscaling approaches based on circulation type classifications. Theor Appl Climatol 121(3):713–732. doi:10.1007/s00704-014-1267-z

Ben Daoud A, Sauquet E, Bontron G, Obled C, Lang M (2016) Daily quantitative precipitation forecasts based on the analogue method: Improvements and application to a French large river basin. Atmos Res 169:147–159. doi:10.1016/j.atmosres.2015.09.015

Beniston M, Diaz HF (2004) The 2003 heat wave as an example of summers in a greenhouse climate? Observations and climate model simulations for Basel, Switzerland. Glob Planet Change 44(1–4):73–81. doi:10.1016/j.gloplacha.2004.06.006

Cassou C, Cattiaux J (2016) Disruption of the European climate seasonal clock in a warming world. Nat Clim Change 6:589–594. doi:10.1038/nclimate2969

Cassou C, Terray L, Phillips AS (2005) Tropical Atlantic influence on European heat waves. J Clim 18(15):2805–2811. doi:10.1175/JCLI3506.1

Cattiaux J, Vautard R, Cassou C, Yiou P, Masson-Delmotte V, Codron F (2010) Winter 2010 in Europe: a cold extreme in a warming climate. Geophys Res Lett 37(20):1–6. doi:10.1029/2010GL044613

Chardon J, Hingray B, Favre AC, Autin P, Gailhard J, Zin I, Obled C (2014) Spatial similarity and transferability of analog dates for precipitation downscaling over France. J Clim 27(13):5056–5074. doi:10.1175/JCLI-D-13-00464.1

Chardon J, Favre AC, Hingray B (2016) Effects of spatial aggregation on the accuracy of statistically downscaled precipitation predictions. J Hydrometeorol 17(5):1561–1578. doi:10.1175/JHM-D-15-0031.1

Ciais P, Reichstein M, Viovy N, Granier A, Ogee J, Allard V, Aubinet M, Buchmann N, Bernhofer C, Carrara A, Chevallier F, De Noblet N, Friend AD, Friedlingstein P, Grunwald T, Heinesch B, Keronen P, Knohl A, Krinner G, Loustau D, Manca G, Matteucci G, Miglietta F, Ourcival JM, Papale D, Pilegaard K, Rambal S, Seufert G, Soussana JF, Sanz MJ, Schulze ED, Vesala T, Valentini R (2005) Europe-wide reduction in primary productivity caused by the heat and drought in 2003. Nature 437(7058):529–533. doi:10.1038/nature03972

Committee on Extreme Weather Events and Climate Change Attribution (2016) Attribution of extreme weather events in the context of climate change. doi:10.17226/21852.

Compo GP, Whitaker JS, Sardeshmukh PD, Matsui N, Allan RJ, Yin X, Gleason BE, Vose RS, Rutledge G, Bessemoulin P, Brönnimann S, Brunet M, Crouthamel RI, Grant AN, Groisman PY, Jones PD, Kruk M, Kruger AC, Marshall GJ, Maugeri M, Mok HY, Nordli O, Ross TF, Trigo RM, Wang XL, Woodruff SD, Worley SJ (2011) The twentieth century reanalysis project. Q J R Meteorol Soc 137:1–28. doi:10.1002/qj.776

Della-Marta PM, Luterbacher J, von Weissenfluh H, Xoplaki E, Brunet M, Wanner H (2007) Summer heat waves over western Europe 1880–2003, their relationship to large-scale forcings and predictability. Clim Dyn 29(2–3):251–275. doi:10.1007/s00382-007-0233-1

Djalalova I, Delle Monache L, Wilczak J (2015) PM2.5 analog forecast and Kalman filter post-processing for the Community Multiscale Air Quality (CMAQ) model. Atmos Environ 108:76–87. doi:10.1016/j.atmosenv.2015.02.021

Dole R, Hoerling M, Perlwitz J, Eischeid J, Pegion P, Zhang T, Quan XW, Xu T, Murray D (2011) Was there a basis for anticipating the 2010 Russian heat wave? Geophys Res Lett 38(6):1–5. doi:10.1029/2010GL046582

Van den Dool H (1994) Searching for analogues, how long must we wait? Tellus A 46(3):314–324

Duband D (1981) Prévision spatiale des hauteurs de précipitations journalières (A spatial forecast of daily precipitation heights). La Houille Blanche 7–8:497–512. doi:10.1051/lhb/1981046

Dunne JP, John JG, Shevliakova S, Stouffer RJ, Krasting JP, Malyshev SL, Milly PCD, Sentman LT, Adcroft AJ, Cooke W, Dunne KA, Griffies SM, Hallberg RW, Harrison MJ, Levy H, Wittenberg AT, Phillips PJ, Zadeh N (2012) GFDL’s ESM2 global coupled climate-carbon earth system models. Part I: physical formulation and baseline simulation characteristics. J Clim 25:6646–6665. doi:10.1175/JCLI-D-11-00560.1

Dunne JP, John JG, Shevliakova S, Stouffer RJ, Krasting JP, Malyshev SL, Milly PCD, Sentman LT, Adcroft AJ, Cooke W, Dunne KA, Griffies SM, Hallberg RW, Harrison MJ, Levy H, Wittenberg AT, Phillips PJ, Zadeh N (2013) GFDL’s ESM2 global coupled climate-carbon earth system models. Part II: carbon system formulation and baseline simulation characteristics. J Clim 26(7):2247–2267. doi:10.1175/JCLI-D-12-00150.1

Feudale L, Shukla J (2007) Role of Mediterranean SST in enhancing the European heat wave of summer 2003. Geophys Res Lett 34(3):L03811. doi:10.1029/2006GL027991

Field CB, Intergovernmental Panel on Climate Change (2012) Managing the risks of extreme events and disasters to advance climate change adaptation: special report of the Intergovernmental Panel on Climate Change. doi:10.1017/CBO9781139177245

Fischer EM, Seneviratne SI, Vidale PL, Lüthi D, Schär C (2007) Soil moisture-atmosphere interactions during the 2003 European summer heat wave. J Clim 20(20):5081–5099. doi:10.1175/JCLI4288.1

Fouillet A, Rey G, Laurent F, Pavillon G, Bellec S, Guihenneuc-Jouyaux C, Clavel J, Jougla E, Hémon D (2006) Excess mortality related to the August 2003 heat wave in France. Int Arch Occup Environ Health 80(1):16–24. doi:10.1007/s00420-006-0089-4

Gómez-Navarro JJ, Werner J, Wagner S, Luterbacher J, Zorita E (2014) Establishing the skill of climate field reconstruction techniques for precipitation with pseudoproxy experiments. Clim Dyn 45(5–6):1395–1413. doi:10.1007/s00382-014-2388-x

Green PJ, Silverman BW (1994) Nonparametric regression and generalized linear models : a roughness penalty approach, 1st edn. In: Green PJ, Silverman BW. Monographs on statistics and applied probability, vol 58, Chapman & Hall, London

Hamill TM, Whitaker JS (2006) Probabilistic quantitative precipitation forecasts based on reforecast analogs: theory and application. Mon Weather Rev 134(11):3209–3229. doi:10.1175/MWR3237.1

Hamill TM, Scheuerer M, Bates GT (2015) Analog probabilistic precipitation forecasts using GEFS reforecasts and climatology-calibrated precipitation analyses. Mon Weather Rev 143(8):3300–3309. doi:10.1175/MWR-D-15-0004.1

Horton DE, Johnson NC, Singh D, Swain DL, Rajaratnam B, Diffenbaugh NS (2015) Contribution of changes in atmospheric circulation patterns to extreme temperature trends. Nature 522(7557):465–469. doi:10.1038/nature14550

Kalnay E, Kanamitsu M, Kistler R, Collins W, Deaven D, Gandin L, Iredell M, Saha S, White G, Woollen J, Zhu Y, Chelliah M, Ebisuzaki W, Higgins W, Janowiak J, Mo KC, Ropelewski C, Wang J, Leetmaa A, Reynolds R, Jenne R, Joseph D (1996) The NCEP/NCAR 40-year reanalysis project. doi: 10.1175/1520-0477(1996)077<0437:TNYRP>2.0.CO;2, arXiv:1011.1669v3

Lorenz EN (1969) Atmospheric predictability as revealed by naturally occurring analogues. J Atmos Sci 26(4):636–646. doi:10.1175/1520-0469(1969)26<636:APARBN>2.0.CO;2

Michelangeli PA, Vautard R, Legras B (1995) Weather regimes: recurrence and quasi stationarity. doi:10.1175/1520-0469(1995)052<1237:WRRAQS>2.0.CO;2

Peng RD, Bobb JF, Tebaldi C, McDaniel L, Bell ML, Dominici F (2011) Toward a quantitative estimate of future heat wave mortality under global climate change. Environ Health Perspect. doi:10.1289/ehp.1002430

Poli P, Hersbach H, Dee DP, Berrisford P, Simmons AJ, Vitart F, Laloyaux P, Tan DGH, Peubey C, Thépaut JN, Trémolet Y, Hólm EV, Bonavita M, Isaksen L, Fisher M (2016) ERA-20C: an atmospheric reanalysis of the twentieth century. J Clim 29(11):4083–4097. doi:10.1175/JCLI-D-15-0556.1

Portela A, Castro M (1996) Summer thermal lows in the Iberian peninsula: a three-dimensional simulation. Q J Roy Meteorol Soc 122(1):1–22. doi:10.1002/qj.49712252902

Quesada B, Vautard R, Yiou P, Hirschi M, Seneviratne SI (2012) Asymmetric European summer heat predictability from wet and dry southern winters and springs. Nat Clim Change 2(10):736–741. doi:10.1038/nclimate1536

Radanovics S, Vidal JP, Sauquet E, Ben Daoud A, Bontron G (2013) Optimising predictor domains for spatially coherent precipitation downscaling. Hydrol Earth Sys Sc 17(10):4189–4208. doi:10.5194/hess-17-4189-2013

Rebetez M, Dupont O, Giroud M (2009) An analysis of the July 2006 heatwave extent in Europe compared to the record year of 2003. Theor Appl Climatol 95(1–2):1–7. doi:10.1007/s00704-007-0370-9

Russo S, Sillmann J, Fischer EM (2015) Top ten European heatwaves since 1950 and their occurrence in the future. Environ Res Lett 10(12):124003. doi:10.1088/1748-9326/10/12/124003

Schenk F, Zorita E (2012) Reconstruction of high resolution atmospheric fields for Northern Europe using analog-upscaling. Clim Past 8(5):1681–1703. doi:10.5194/cp-8-1681-2012

Seneviratne SI, Lüthi D, Litschi M, Schär C (2006) Land-atmosphere coupling and climate change in Europe. Nature 443(7108):205–209. doi:10.1038/nature05095

Seneviratne SI, Corti T, Davin EL, Hirschi M, Jaeger EB, Lehner I, Orlowsky B, Teuling AJ (2010) Investigating soil moisture-climate interactions in a changing climate: a review. Earth Sci Rev 99(3–4):125–161. doi:10.1016/j.earscirev.2010.02.004

Shepherd TG (2015) Climate science: the dynamics of temperature extremes. Nature 522(7557):425–427. doi:10.1038/522425a

Shepherd TG (2016) A common framework for approaches to extreme event attribution. Curr Clim Change Rep 2:28–38. doi:10.1007/s40641-016-0033-y

Stéfanon M, Drobinski P, D’Andrea F, De Noblet-Ducoudré N (2012) Effects of interactive vegetation phenology on the 2003 summer heat waves. J Geophys Res Atmos 117(24):1–15. doi:10.1029/2012JD018187

Sutton RT, Hodson DLR (2005) Atlantic Ocean forcing of North American and European summer climate. Science 309(5731):115–118. doi:10.1126/science.1109496

Tandeo P, Ailliot P, Ruiz J, Hannart A, Chapron B, Easton R, Fablet R (2015) Combining analog method and ensemble data assimilation: application to the Lorenz-63 chaotic system. Mach Learn Data Min Approach Clim Sci 3–12. doi:10.1007/978-3-319-17220-0_1

Taylor KE, Stouffer RJ, Meehl Ga (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93(4):485–498. doi:10.1175/BAMS-D-11-00094.1

Toth Z (1991) Estimation of atmospheric predictability by circulation analogs. Mon Weather Rev 119(1):65–72

Trenberth KE, Fasullo JT, Shepherd TG (2015) Attribution of climate extreme events. Nat Clim Change 5(August):725–730. doi:10.1038/nclimate2657

Turco M, Quintana Seguí P, Llasat MC, Herrera S, Gutiérrez JM (2011) Testing MOS precipitation downscaling for ENSEMBLES regional climate models over Spain. J Geophys Res 116(D18):109. doi:10.1029/2011JD016166

Vanvyve E, Monache LD, Monaghan AJ, Pinto JO (2015) Wind resource estimates with an analog ensemble approach. Renew Energy 74:761–773. doi:10.1016/j.renene.2014.08.060

Vautard R (1990) Multiple weather regimes over the North Atlantic: analysis of precursors and successors. Mon Weather Rev 118. doi:10.1175/1520-0493(1990)118<2056:MWROTN>2.0.CO;2

Yiou P (2014) AnaWEGE: a weather generator based on analogues of atmospheric circulation. Geosci Model Dev 7:531–543. doi:10.5194/gmd-7-531-2014

Yiou P, Nogaj M (2004) Extreme climatic events and weather regimes over the North Atlantic: when and where? Geophys Res Lett 31:1–4. doi:10.1029/2003GL019119

Yiou P, Salameh T, Drobinski P, Menut L, Vautard R, Vrac M (2012) Ensemble reconstruction of the atmospheric column from surface pressure using analogues. Clim Dyn 41(5–6):1333–1344. doi:10.1007/s00382-012-1626-3

Yiou P, Boichu M, Vautard R, Vrac M, Jourdain S, Garnier E, Fluteau F, Menut L (2014) Ensemble meteorological reconstruction using circulation analogues of 1781–1785. Clim Past 10(2):797–809. doi:10.5194/cp-10-797-2014

Zorita E, von Storch H (1999) The analog method as a simple statistical downscaling technique: comparison with more complicated methods. J Clim 12(8):2474–2489. doi:10.1175/1520-0442(1999)012<2474:TAMAAS>2.0.CO;2

Acknowledgements

NCEP Reanalysis data provided by the NOAA/OAR/ESRL PSD, Boulder, Colorado, USA, from their Web site at http://www.esrl.noaa.gov/psd/. Program to compute analogues available online https://a2c2.lsce.ipsl.fr/index.php/licences/file/castf90?id=3. PY and SR are supported by the ERC Grant A2C2 (No. 338965).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Jézéquel, A., Yiou, P. & Radanovics, S. Role of circulation in European heatwaves using flow analogues. Clim Dyn 50, 1145–1159 (2018). https://doi.org/10.1007/s00382-017-3667-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00382-017-3667-0