Abstract

Model error is a major obstacle to enhancing the forecast skill of El Niño-Southern Oscillation (ENSO). There are three kinds of model error sources: dynamical core misfitting, physical scheme approximation and model parameter errors. Of these, model parameter errors can be treated using observations. Based on the Zebiak-Cane model, an ensemble coupled data assimilation system is established to study the impact of parameter optimization (PO) on ENSO predictions within a biased twin experiment framework. "Observations" of sea surface temperature anomalies drawn from the "truth" model are assimilated into a biased prediction model whose parameters are erroneously set away from the "truth" values. The degree to which the assimilation and prediction, with or without PO, recover the "truth" measures the impact of PO. Results show that PO improves ENSO predictability. It enhances the seasonal-interannual forecast skill by about 18 %, extends the valid lead time by up to 33 % and ameliorates the spring predictability barrier. Although derived from idealized twin experiments, these results provide some insights into initializing a coupled general circulation model from the observing system.



1 Introduction

As the most prominent and predictable mode of the coupled ocean–atmosphere system, El Niño-Southern Oscillation (ENSO) significantly influences human society and ecosystems (McPhaden et al. 2006). Great progress has been made in ENSO prediction with statistical models (e.g., Penland and Magorian 1993) and dynamical models (e.g., Cane et al. 1986; Chen et al. 2004; Jin et al. 2008). Even so, ENSO predictability is constrained by model errors, initial errors and atmospheric stochastic "noise" (e.g., Moore and Kleeman 1999).

Studies have revealed that model errors generally play a more important role in climate prediction than initial condition errors (e.g., Stainforth et al. 2005). Statistical bias correction is widely used to improve ENSO forecasts (e.g., Chen et al. 2000, 2004). Dynamical methods are also pursued, in order to improve the understanding of ENSO mechanisms, reduce model errors and enhance forecast skill. Among the three model error sources (dynamical core misfitting, physical scheme approximation and model parameter errors; Zhang et al. 2012), model parameters can be optimized with observational information. Based on data assimilation theory (e.g., Jazwinski 1970), parameter optimization (also called parameter estimation in the literature; hereafter PO) can be realized by the state vector augmentation technique (e.g., Banks 1992a, b), which includes model parameters in the control variables of data assimilation. The augmentation technique can be implemented with most data assimilation methods. Three branches of PO methods exist:

(1) ensemble Kalman filter (EnKF; Evensen 1994, 2007) based (e.g., Anderson 2001),
(2) adjoint based (e.g., Zhu and Navon 1999), and
(3) particle filter based (e.g., Vossepoel and Van Leeuwen 2007).

PO has been widely applied in ocean models (e.g., Peng et al. 2013), atmosphere models (e.g., Laine et al. 2012) and coupled ocean–atmosphere models (e.g., Annan et al. 2004; Kondrashov et al. 2008; Liu et al. 2014). For ENSO prediction, some efforts have been made to explore the impact of model parameters on the modeling and predictability of ENSO. Zebiak and Cane (1987) demonstrated the strong sensitivity of ENSO simulations to model parameters. Yu et al. (2012) used a conditional nonlinear optimal perturbation method to evaluate the impact of model parameter errors on ENSO predictability. Our studies explore how PO can be applied to improve ENSO predictions.
As a first step, we set up experiments in a relatively idealized scenario in which erroneous model parameters are the only source of model error.

Because ENSO arises from strong ocean–atmosphere coupling, its prediction should be initialized by coupled data assimilation (CDA; Zhang et al. 2007; Chen and Cane 2008; Sugiura et al. 2008). CDA assimilates both oceanic and atmospheric observations into the coupled model. It has been widely applied in dynamical ENSO models, including intermediate coupled models (e.g., Chen et al. 1995; Lee et al. 2000; Karspeck et al. 2006; Karspeck and Anderson 2007) and general circulation models (e.g., Keenlyside et al. 2005). In this study, we implement ensemble coupled data assimilation (ECDA; Zhang et al. 2005, 2007) in an intermediate ENSO model (Zebiak and Cane 1987). Data assimilation experiments are conducted within a biased twin experiment framework. The degree to which the assimilation and prediction, with or without PO, recover the "truth" measures the impact of PO on ENSO predictions.

The remainder of this paper is organized as follows. Section 2 briefly introduces the Zebiak-Cane ENSO model and the EnKF-based PO algorithm. Section 3 configures the biased twin experiment. The impacts of PO on ENSO analysis and prediction are examined in Sects. 4 and 5, respectively. Finally, a summary and discussion are given in Sect. 6.

2 Methodology

2.1 The Zebiak-Cane ENSO model

The Zebiak-Cane (hereafter ZC) ENSO model is selected to study the impact of PO on ENSO analysis and prediction because of its intermediate complexity and its established use in ENSO simulation and prediction. The version used here was released in December 2013. It inherits the features of earlier versions, including the Holocene radiative perturbations (Mann et al. 2005) in the SST anomaly equation. The components of the ZC model are briefly described as follows.

**Atmosphere.** The atmospheric dynamics are governed by steady-state, linear shallow-water equations (Gill 1980). They are forced by a heating anomaly parameterized in terms of the SST anomaly and of moisture convergence; the moisture convergence is in turn parameterized by surface wind convergence. The atmosphere covers 0°–354.375°E and 79°S–79°N with a grid spacing of 5.625° (longitude) × 2° (latitude).

**Ocean dynamics.** The ocean component comprises dynamics and thermodynamics. The ocean dynamics are simulated by a reduced-gravity model forced by the wind stress anomaly from the atmospheric model. The meridional current anomaly is defined over 125°E–281°E and 28.75°S–28.75°N. The zonal current anomaly and upper-ocean depth anomaly are defined over 124°E–280°E and 28.5°S–29°N. All three use a grid spacing of 2° (longitude) × 0.5° (latitude).

**Ocean thermodynamics.** The ocean thermodynamics are modeled by a three-dimensional nonlinear equation for the SST anomaly. It covers 101.25°E–286.875°E and 29°S–29°N with the same grid spacing as the atmosphere. The valid SST domain is 129.375°E–275.625°E and 19°S–19°N; its four corner points are the (6, 6)th, (32, 6)th, (32, 25)th and (6, 25)th SST anomaly grid points.

The time step is 10 days. The radiative forcing in the SST anomaly equation is not activated in this study.

Among all parameters of the ZC model, we focus on the following twelve: 4 atmospheric parameters, 2 ocean dynamical parameters and 6 SST anomaly parameters. The first four columns of Table 1 list the notations, physical meanings, units and default values, respectively. More details of the ZC model can be found in Zebiak and Cane (1987).

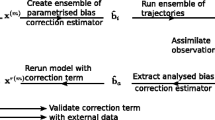

2.2 EAKF-based parameter optimization

We select the ensemble adjustment Kalman filter (EAKF; Anderson 2001) to perform state-parameter estimation, and it is briefly introduced here. Details of the ECDA implementation with the ZC model are given in Sect. 3.

Like other sequential filters, the EAKF can assimilate observations one at a time when the observational errors are assumed to be uncorrelated. This sequential implementation offers great computational convenience. In addition, the EAKF retains the nonlinearity of the background flow as much as possible (Anderson 2003; Zhang and Anderson 2003).

For a single observation, denoted as y, the EAKF consists of the following two steps. The first step computes the observational increment as

\[\Delta y_{i} = \frac{(\sigma_{y}^{\text{p}})^{2}\,y + r^{2}\,\bar{y}^{\text{p}}}{(\sigma_{y}^{\text{p}})^{2} + r^{2}} + \frac{r}{\sqrt{r^{2} + (\sigma_{y}^{\text{p}})^{2}}}\left(y_{i}^{\text{p}} - \bar{y}^{\text{p}}\right) - y_{i}^{\text{p}}, \qquad (1)\]

where \(\bar{y}^{\text{p}}\) is the prior ensemble mean of y, r is the standard deviation of the observational error, and \(\sigma_{y}^{\text{p}}\) is the prior (model-estimated) standard deviation of y. The ith prior ensemble member of y, \(y_{i}^{\text{p}}\), is usually obtained by linearly interpolating the prior ensemble of state variables to the observation location. The second step projects the observational increment onto related model variables using the linear regression

\[\Delta x_{i} = \frac{\mathrm{Cov}(x, y)}{(\sigma_{y}^{\text{p}})^{2}}\,\Delta y_{i}, \qquad (2)\]

where \(\Delta x_{i}\) is the contribution of y to the model variable x for the ith ensemble member. Cov(x, y) denotes the error covariance between the prior ensemble of x and the model-estimated ensemble of y.
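The two EAKF steps for one scalar observation can be sketched in NumPy as follows. The sketch assumes unbiased (N − 1) sample statistics. Localization and inflation are omitted, and the function names are hypothetical rather than taken from the authors' code.

```python
import numpy as np


def eakf_obs_increment(y_prior, y_obs, r):
    """Observation-space increments for one scalar observation (EAKF step 1).

    y_prior : (N,) prior ensemble of the model-estimated observation
    y_obs   : observed value
    r       : standard deviation of the observational error
    """
    y_mean = y_prior.mean()
    var_p = y_prior.var(ddof=1)  # prior variance (sigma_y^p)^2, N - 1 normalization
    # Posterior mean: precision-weighted blend of prior mean and observation
    y_mean_post = (var_p * y_obs + r**2 * y_mean) / (var_p + r**2)
    # Deterministic contraction of prior deviations (no perturbed observations)
    shrink = r / np.sqrt(r**2 + var_p)
    y_post = y_mean_post + shrink * (y_prior - y_mean)
    return y_post - y_prior


def regress_increment(x_prior, y_prior, dy):
    """Project observation increments onto one model variable x (EAKF step 2)."""
    cov_xy = np.cov(x_prior, y_prior)[0, 1]  # np.cov uses N - 1 by default
    return cov_xy / y_prior.var(ddof=1) * dy


# Usage: four-member ensemble, one observation
y_prior = np.array([0.0, 1.0, 2.0, 3.0])
dy = eakf_obs_increment(y_prior, y_obs=3.0, r=1.0)
dx = regress_increment(2.0 * y_prior + 1.0, y_prior, dy)  # x perfectly correlated with y
```

The posterior ensemble of y has exactly the Kalman posterior mean and variance. The regression passes the increment to x in proportion to Cov(x, y).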

The EAKF naturally produces multivariate increments when cross-variable or coupled (cross-component) error covariances (e.g., Han et al. 2013) are used in Eq. (2). Cross covariances can also be used to conduct PO through the state vector augmentation technique (e.g., Anderson 2001; Hansen and Penland 2007), which includes model parameters in the control variables of data assimilation. To estimate geographic-dependent parameters, we adopt the method of Wu et al. (2012), which is briefly described here.

At each analysis step, the observational increment is first computed by Eq. (1). It is then also linearly mapped to the increment of the spatially dependent parameter β (see footnote 1):

\[\Delta \beta_{i} = \frac{\mathrm{cov}(\beta, y)}{(\sigma_{y}^{\text{p}})^{2}}\,\Delta y_{i}, \qquad (3)\]

where cov(β, y) denotes the error covariance between the prior ensemble of β and the model-estimated ensemble of y. The quality of PO realized by an EnKF depends mainly on the accuracy of this parameter–observation error covariance. To improve the ensemble-estimated covariance, Zhang et al. (2012) proposed a coupled data assimilation scheme with enhancive parameter correction (DAEPC). DAEPC activates PO only after the state estimation has reached a "quasi-equilibrium", that is, once the uncertainty of the model states is sufficiently constrained by observations. The PO in this study also follows the DAEPC approach. In addition, we assume that the parameters remain constant between two adjacent analysis steps (e.g., Zupanski and Zupanski 2006).
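State vector augmentation can be sketched by appending the parameter values at a grid point as extra columns of the state ensemble. The same regression then updates states and parameters in one step. In this sketch, the `po_active` flag stands in for the DAEPC switch; Sect. 3.5.3 activates PO after five years of SE. Between analyses, the parameters would simply be carried forward unchanged, matching the persistence assumption above. All names are hypothetical, and localization is omitted.

```python
import numpy as np


def augmented_update(z_prior, y_prior, dy, n_state, po_active):
    """Regress observation increments onto an augmented ensemble [states | parameters].

    z_prior   : (N, M) ensemble; columns [0, n_state) are states, the rest are parameters
    y_prior   : (N,) model-estimated observation ensemble
    dy        : (N,) observation increments from the first EAKF step
    po_active : False before the DAEPC switch-on time, so parameters are left unchanged
    """
    n = y_prior.size
    y_anom = y_prior - y_prior.mean()
    cov_zy = (z_prior - z_prior.mean(axis=0)).T @ y_anom / (n - 1)  # (M,) covariances with y
    gain = cov_zy / y_prior.var(ddof=1)
    if not po_active:
        gain[n_state:] = 0.0  # state estimation only
    return z_prior + np.outer(dy, gain)


# Usage: one state column x and one parameter column beta, both linear in y
y_prior = np.array([0.0, 1.0, 2.0, 3.0])
dy = np.array([0.5, 0.2, -0.1, -0.4])
z_prior = np.column_stack([2.0 * y_prior + 1.0, 0.1 * y_prior + 5.0])
z_se = augmented_update(z_prior, y_prior, dy, n_state=1, po_active=False)
z_po = augmented_update(z_prior, y_prior, dy, n_state=1, po_active=True)
```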

3 Setup of a biased twin experiment

As a first step toward studying the impact of PO on ENSO predictability, we design a biased twin experiment to examine the validity of PO.

3.1 Model error

We assume that the model error arises solely from erroneously set parameter values. The model with the default parameter values (the 4th column of Table 1) serves as the "truth" model in the twin experiment.

To design a reasonable assimilation model, we first seek, for each parameter, the upper and lower bounds within which the model maintains the basic properties of ENSO (e.g., a 2–7-year oscillation, irregularity and asymmetry). The last column of Table 1 lists these bounds as a percentage of the default value. For example, a 5 % bound for parameter α means that its valid range is [3.09 × 10−2 × 0.95, 3.09 × 10−2 × 1.05]. For most parameters, the actual upper and lower limits are asymmetric about the default value; for simplicity, we set a symmetric bound for each parameter. In the assimilation/prediction model, each parameter is set to its default value multiplied by one plus its bound.

To check whether the combined biased parameters still preserve the fundamental nature of real ENSO, we compare two time series of the 5-month running mean NINO3.4 index (SST anomaly averaged over 5°S–5°N, 170°W–120°W) over model years 0–50. One is generated by the assimilation model and the other by the truth model (Fig. 1a; see footnote 2). Both models clearly reproduce the main features of real ENSO, and the amplitude of the NINO3.4 index is close to the observed value.
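The bound arithmetic above can be made explicit with a small sketch. The only numbers taken from the text are parameter α (default 3.09 × 10−2) and its 5 % bound; the other Table 1 entries are not reproduced here, and the helper names are hypothetical.

```python
def valid_range(default, bound_pct):
    """Symmetric valid range [default*(1 - b), default*(1 + b)] for a bound b given in percent."""
    b = bound_pct / 100.0
    return default * (1.0 - b), default * (1.0 + b)


def biased_value(default, bound_pct):
    """Parameter value used in the assimilation/prediction model: default * (1 + b)."""
    return default * (1.0 + bound_pct / 100.0)


# Worked example from the text: parameter alpha with default 3.09e-2 and a 5 % bound
lo, hi = valid_range(3.09e-2, 5.0)         # approximately (0.029355, 0.032445)
alpha_biased = biased_value(3.09e-2, 5.0)  # equals the upper end of the valid range
```

Because every parameter is pushed to the upper end of its own range, the assimilation model carries the largest bias that still keeps each parameter individually "valid".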

Fig. 1 a Time series of the 5-month running mean NINO3.4 index in the "truth" model (solid) and the assimilation model (dashed), starting from the same initial condition; b power spectra of the 5-month running mean NINO3.4 index over the first 100 model years for the "truth" (solid) and assimilation (dashed) models, with 95 % confidence limits

To examine the significant periods of ENSO simulated by the assimilation model, we compute the power spectrum of the 5-month running mean NINO3.4 index over the first 100 model years. Figure 1b depicts the power spectra (thick) and 95 % confidence upper limits (thin) of the NINO3.4 index for the truth (solid) and assimilation (dashed) models. Both models show significant periods of 3–5 years. However, the assimilation model shortens the dominant ENSO period from 4.2 years (truth model) to 3.9 years.
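Two steps are sketched here: applying the 5-month running mean, then finding the dominant period of a monthly index with a plain periodogram. The 95 % significance test is not reproduced, because the text does not state which null spectrum was used. The function names are hypothetical.

```python
import numpy as np


def running_mean(x, window=5):
    """Centered running mean over `window` months; incomplete windows at both ends are dropped."""
    kernel = np.ones(window) / window
    return np.convolve(x, kernel, mode="valid")


def dominant_period_years(monthly_index):
    """Period (years) of the largest periodogram peak, excluding the zero frequency."""
    x = monthly_index - monthly_index.mean()
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0)  # cycles per month
    k = np.argmax(power[1:]) + 1
    return 1.0 / freqs[k] / 12.0


# Usage: a synthetic 100-year monthly series with a 4.2-year (50.4-month) cycle
t = np.arange(1200)
series = np.sin(2.0 * np.pi * t / 50.4)
period = dominant_period_years(running_mean(series))  # close to 4.2, limited by frequency resolution
```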

3.2 Observing system

To partly mimic the real-world situation, in which only SST observations are available over long periods, we assume that only the SST anomaly is observed. Observations are placed at the same grid points as the Kaplan SST dataset (27.5°S–27.5°N, 122.5°E–92.5°W, 5° resolution in both longitude and latitude; Kaplan et al. 1998). Starting from the model state at the end of 100 model years, the truth model is integrated for another 200 years. On the 1st day of each month, the true SST anomaly is linearly interpolated from the model grid to the observation locations. It is then perturbed with Gaussian white noise to simulate the observational error. The Gaussian noise is added to the SST anomaly rather than to the SST. The mean and variance of the observational error are zero and 0.1 °C, respectively. The observing system in this study is therefore the same as that in Karspeck and Anderson (2007), except that the final "observation" values may differ.
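The observation generator can be sketched as follows. It uses the SST anomaly grid stated in Sect. 2.1 and the Kaplan-like observation grid above. "Linearly interpolated" is read here as bilinear interpolation, which is an assumption. The noise level is a free argument passed as a standard deviation (the text quotes 0.1 °C). The function names are hypothetical.

```python
import numpy as np

# SST anomaly model grid as stated in Sect. 2.1 (34 x 30 points)
MODEL_LON = 101.25 + 5.625 * np.arange(34)   # 101.25E ... 286.875E
MODEL_LAT = -29.0 + 2.0 * np.arange(30)      # 29S ... 29N
# Kaplan-like observation grid: 122.5E-267.5E (92.5W), 27.5S-27.5N, 5 deg spacing
OBS_LON = np.arange(122.5, 267.5 + 1e-9, 5.0)
OBS_LAT = np.arange(-27.5, 27.5 + 1e-9, 5.0)


def bilinear(field, lon, lat, lon_q, lat_q):
    """Bilinear interpolation of field[lat, lon] to query points (lat_q, lon_q)."""
    i = np.clip(np.searchsorted(lon, lon_q) - 1, 0, lon.size - 2)
    j = np.clip(np.searchsorted(lat, lat_q) - 1, 0, lat.size - 2)
    wx = (lon_q - lon[i]) / (lon[i + 1] - lon[i])
    wy = (lat_q - lat[j]) / (lat[j + 1] - lat[j])
    return ((1 - wx) * (1 - wy) * field[j, i] + wx * (1 - wy) * field[j, i + 1]
            + (1 - wx) * wy * field[j + 1, i] + wx * wy * field[j + 1, i + 1])


def make_observations(true_ssta, obs_err_std, rng):
    """Interpolate the true SST anomaly to the observation grid and add Gaussian noise."""
    lon_q, lat_q = np.meshgrid(OBS_LON, OBS_LAT)  # shape (12, 30)
    clean = bilinear(true_ssta, MODEL_LON, MODEL_LAT, lon_q, lat_q)
    return clean + rng.normal(0.0, obs_err_std, size=clean.shape)
```

A call such as `make_observations(true_ssta, obs_err_std, np.random.default_rng(0))` returns one month of synthetic observations on the 12 × 30 observation grid.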

3.3 Selection of parameters to be estimated

Before conducting PO, we must determine which parameter or parameters to estimate. Since only SST anomaly observations are available, the parameters to be optimized should be closely related to the SST anomaly. For the model state, the observations are allowed to update only the SST anomaly itself. Six parameters are directly related to the SST anomaly: θ, \(T_1\), \(T_2\), \(S_1\), \(S_2\) and γ.

To answer this question, we performed nine experiments:

(1) an ensemble control run without data assimilation (CTL);
(2) state estimation (SE), which uses observations of SST anomaly to adjust the SST anomaly;
(3)–(8) single-parameter optimizations (SPO), which use the SST anomaly observations to estimate, respectively, the geographic-dependent θ, \(T_1\), \(T_2\), \(S_1\), \(S_2\) and γ on top of SE;
(9) multiple-parameter optimization (PO), which estimates all six geographic-dependent parameters simultaneously on top of SE.

SPO and PO therefore include both state estimation and parameter optimization.

Figure 2 shows the time-averaged ensemble mean root-mean-square errors (RMSEs) of four prior variables: SST anomaly (Fig. 2a), zonal current anomaly in the mixed layer (Fig. 2b), mixed layer depth (Fig. 2c) and zonal wind anomaly (Fig. 2d). In each panel, the first bar is SE, the second to seventh bars are the SPOs for θ, \(T_1\), \(T_2\), \(S_1\), \(S_2\) and γ, and the last bar is PO. For each variable, the RMSEs of the eight data assimilation experiments are normalized by those of CTL. The CTL values are 0.87 °C for SST anomaly, 24.81 cm s−1 for zonal current anomaly, 22.12 m for mixed layer depth and 1.0 m s−1 for zonal wind anomaly. The time-averaged RMSE of the ensemble mean is calculated by

\[\text{RMSE} = \frac{1}{S}\sum_{s=1}^{S}\text{RMSE}_{s}, \qquad \text{RMSE}_{s} = \sqrt{\frac{1}{(i_{2}-i_{1}+1)(j_{2}-j_{1}+1)}\sum_{i=i_{1}}^{i_{2}}\sum_{j=j_{1}}^{j_{2}}\left(\bar{X}_{i,j,s}^{\text{prior}} - X_{i,j,s}^{\text{tru}}\right)^{2}}, \qquad (4)\]

where S is the number of analysis steps and s indexes the analysis step. X is the model state to be evaluated. \(i_1\) (\(j_1\)) and \(i_2\) (\(j_2\)) are the first and last zonal (meridional) indices of valid X. The ensemble mean of X is denoted by \(\bar{X}\). The superscripts "prior" and "tru" denote the prior (i.e., forecast background) and the truth values, respectively.
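Eq. (4) and the normalization used for Fig. 2 translate directly into array operations. This sketch assumes fields stored as (S, n_lat, n_lon) arrays. It takes the valid SST window of Sect. 2.1 (zonal points 6–32 and meridional points 6–25, 1-based) as zero-based slices. Other variables would need their own index ranges, and the helper names are hypothetical.

```python
import numpy as np

# Valid SST window of Sect. 2.1: zonal points 6..32 and meridional points 6..25 (1-based)
VALID_I = slice(5, 32)   # zonal (longitude) indices, zero-based, end-exclusive
VALID_J = slice(5, 25)   # meridional (latitude) indices


def rmse_per_step(prior_mean, truth, i_sl=VALID_I, j_sl=VALID_J):
    """RMSE_s for each analysis step; arrays are shaped (S, n_lat, n_lon)."""
    err = prior_mean[:, j_sl, i_sl] - truth[:, j_sl, i_sl]
    return np.sqrt(np.mean(err**2, axis=(1, 2)))


def time_averaged_rmse(prior_mean, truth, i_sl=VALID_I, j_sl=VALID_J):
    """Average of RMSE_s over the S analysis steps."""
    return float(rmse_per_step(prior_mean, truth, i_sl, j_sl).mean())


def normalized_rmse(rmse_exp, rmse_ctl):
    """Normalization used for Fig. 2: experiment RMSE divided by the CTL RMSE."""
    return rmse_exp / rmse_ctl
```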

Fig. 2 Time-averaged root-mean-square errors (RMSEs) of the ensemble mean of prior SST anomaly (a), zonal current anomaly (b), mixed layer depth (c) and zonal wind anomaly (d). Three schemes are compared: SE (state estimation), SPO (single-parameter optimization) for θ, \(T_1\), \(T_2\), \(S_1\), \(S_2\) and γ, and PO (multiple-parameter optimization). SE uses observations of SST anomaly to adjust SST anomaly. SPO optimizes a single geographic-dependent parameter with the SST anomaly observations on top of SE. PO simultaneously optimizes six geographic-dependent parameters with the SST anomaly observations on top of SE. For each variable, the RMSE shown is normalized by the corresponding RMSE of the ensemble control run without observational constraint

According to Fig. 2, simultaneously estimating all six parameters yields the best analysis of the model states. Because a univariate adjustment is used, SE improves the SST anomaly relative to CTL more than it improves the other variables (compare the first bars in the four panels of Fig. 2). In the rest of this study, we therefore use the EAKF to optimize the six SST anomaly parameters simultaneously.

3.4 Sensitivity study

Following Wu et al. (2012), the ensemble spread of the model state is used to represent sensitivity, and the ensemble size is set to 20. To obtain the biased initial conditions for the sensitivity study, the assimilation model is integrated for 100 model years from a zero initial condition. During the first 120 model days, it is forced by a constant zonal wind stress. This artificial wind stress is then switched off, and the model runs freely for 100 model years to produce the biased fields.

For each SST anomaly parameter β, all initial ensemble members of the model state are set to the above biased fields. The ensemble of β is formed by perturbing its biased value with Gaussian noise of zero mean and a standard deviation equal to 1 % of the biased value. The other 11 parameters keep their biased values. The assimilation model is then run freely for another 5 model years so that the parameter uncertainty perturbs the model states. For model variable X, its sensitivity with respect to parameter β is assessed by:

where (i, j) indexes the model grid point and t denotes model integration time; N is the ensemble size and the overbar denotes the ensemble mean.
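The parameter ensemble and the normalized, spatially averaged spread plotted in Fig. 3a can be sketched as follows. The spread uses the (N − 1) normalization by default. That choice is an assumption, since the exact form of Eq. (5) is not reproduced in this text. The function names are hypothetical.

```python
import numpy as np


def parameter_ensemble(biased_value, n_members=20, rng=None, rel_std=0.01):
    """Ensemble of one parameter: biased value plus Gaussian noise with std = 1 % of that value."""
    rng = np.random.default_rng() if rng is None else rng
    return biased_value + rng.normal(0.0, rel_std * abs(biased_value), size=n_members)


def normalized_mean_spread(members, clim_std, ddof=1):
    """Spatially averaged ensemble spread normalized by the climatological standard deviation.

    members  : (N, n_lat, n_lon) SST anomaly ensemble at one time
    clim_std : (n_lat, n_lon) climatological standard deviation of SST anomaly
    """
    spread = members.std(axis=0, ddof=ddof)  # ensemble spread at every grid point
    return float(np.mean(spread / clim_std))
```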

Since only SST anomaly observations are available for parameter estimation, we show only the sensitivities of the SST anomaly. Figure 3a plots time series (1st day of each month) of the spatially averaged sensitivities of SST anomaly for θ (black), \(T_1\) (blue), \(T_2\) (red), \(S_1\) (dashed), \(S_2\) (pink) and γ (green). The sensitivity has been normalized by the climatological standard deviation of SST anomaly at each SST grid point. θ (i.e., the damping timescale of SST anomaly) is the most sensitive parameter. Because geographic-dependent parameters are to be estimated, it is also necessary to examine the spatial distribution of the sensitivities (Fig. 3b). Here the spatial sensitivity is the time average of Eq. (5). The other five SST anomaly parameters give nearly the same results, so we show only the result for θ. The most sensitive areas are located in the central and eastern equatorial Pacific.

Fig. 3 a Time series of sensitivities (unit: °C) of SST anomaly in the sensitivity studies of parameters θ (black), \(T_1\) (blue), \(T_2\) (red), \(S_1\) (dashed), \(S_2\) (pink) and γ (green); b spatial distribution of the sensitivity (unit: °C) of SST anomaly with respect to θ. The sensitivity in a is the spatial average of the ensemble spread of SST anomaly, normalized by its climatological standard deviation. The sensitivity in b is the time mean of the ensemble spread of SST anomaly

3.5 Assimilation experiments

To obtain the true initial conditions for the assimilation experiments, the truth model is integrated for 100 model years from a zero initial condition. It is forced by the same wind stress as the assimilation model.

The 20 initial ensemble members of the model states are generated with the same method as in Karspeck and Anderson (2007). That is, they are taken at 5-model-year intervals from the assimilation model simulation between January 1 of year 5 and January 1 of year 100. For the parameters to be estimated, the initial ensembles are the same as in Sect. 3.4. For the parameters not estimated, all initial ensemble members take the biased values of Sect. 3.1. The results of PO may be sensitive to the standard deviation of the initial parameter ensembles. However, introducing a parameter inflation scheme transfers this sensitivity from the initial standard deviation to the parameter inflation factor, as described below.

3.5.1 Inflation

A finite ensemble can underestimate the background error variance. Consequently, the observations receive too little weight, and the ensemble of model states may converge to a state that departs from the observations (known as filter divergence). Variance inflation is an effective remedy. For SE, many sophisticated methods exist:

- the static additive method (e.g., Whitaker et al. 2008; Houtekamer et al. 2009),
- the static multiplicative method (e.g., Anderson and Anderson 1999), and
- adaptive schemes (e.g., Anderson 2008; Miyoshi 2011).

Because the observing system in this study is homogeneously distributed, we use the static multiplicative method. That is, before SE and PO are performed at each analysis step, the ensemble perturbations of SST anomaly are amplified by a constant inflation factor larger than 1. Extensive trials suggest an inflation factor of 1.03.
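Static multiplicative inflation scales each member's deviation from the ensemble mean by a constant factor, as in this sketch. The function name is hypothetical, and 1.03 is the value quoted above.

```python
import numpy as np


def inflate_multiplicative(ensemble, factor=1.03):
    """Scale deviations from the ensemble mean by a constant factor (static multiplicative inflation).

    ensemble : (N, ...) array of members; the ensemble mean is left unchanged.
    """
    mean = ensemble.mean(axis=0)
    return mean + factor * (ensemble - mean)
```

With a factor of 1.03, the ensemble spread grows by 3 % and the variance by about 6 % (1.03² ≈ 1.061), while the ensemble mean is untouched.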

For PO, artificial variance inflation is the only means of imparting spread to the parameter ensemble, since there is no dynamical error growth in the parameters. The typical inflation algorithm for PO (e.g., Wu et al. 2013; Zhang et al. 2012; Wu et al. 2012; Aksoy et al. 2006) inflates the ensemble spread of a parameter to a fixed value whenever the spread falls below that value:

\[{\tilde{\mathbf{\beta }}}_{i,j} = \begin{cases} \bar{\beta}_{i,j} + \dfrac{\alpha_{0}\sigma_{0}}{\sigma_{t}}\left(\mathbf{\beta}_{i,j} - \bar{\beta}_{i,j}\right), & \sigma_{t} < \alpha_{0}\sigma_{0}, \\[4pt] \mathbf{\beta}_{i,j}, & \sigma_{t} \ge \alpha_{0}\sigma_{0}. \end{cases} \qquad (6)\]

Here, \(\mathbf{\beta}_{i,j}\) and \({\tilde{\mathbf{\beta }}}_{i,j}\) represent the prior and the inflated ensembles of the parameter β at the (i, j)th model grid point, and \(\bar{\beta}_{i,j}\) is the prior ensemble mean. \(\sigma_t\) and \(\sigma_0\) denote the prior spreads of \(\mathbf{\beta}_{i,j}\) at time t and at the initial time, and \(\alpha_0\) is the parameter inflation factor. To facilitate examining the sensitivities of SST anomaly with respect to the six SST anomaly parameters, we adopt the same value of \(\alpha_0\) for all of them. Here \(\alpha_0\) can take values less than 1.0, although it is usually greater than 1.0. For \(0 < \alpha_0 < 1\), the target spread \(\alpha_0\sigma_0\) is smaller than the initial spread \(\sigma_0\). Whenever \(\sigma_t\) falls below \(\alpha_0\sigma_0\), the parameter ensemble is rescaled to a spread of \(\alpha_0\sigma_0\), which is still compressed relative to the initial ensemble.
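The spread-reset rule can be sketched per grid point. It assumes (N − 1)-normalized spreads and leaves a fully collapsed ensemble (zero spread) unchanged, because it cannot be rescaled. Both choices are ours, and the function name is hypothetical.

```python
import numpy as np


def inflate_parameter(beta, sigma0, alpha0, ddof=1):
    """Reset the parameter spread to alpha0 * sigma0 wherever it has fallen below that value.

    beta   : (N, ...) prior parameter ensemble (e.g., N members at every grid point)
    sigma0 : initial ensemble spread (scalar or array broadcastable to beta[0])
    alpha0 : parameter inflation factor
    """
    mean = beta.mean(axis=0)
    sigma_t = beta.std(axis=0, ddof=ddof)
    target = alpha0 * sigma0
    # Rescale only where the spread is too small and non-zero
    needs = (sigma_t < target) & (sigma_t > 0)
    scale = np.where(needs, target / np.where(sigma_t > 0, sigma_t, 1.0), 1.0)
    return mean + scale * (beta - mean)
```

With \(\alpha_0 = 0\) the rule never fires, so PO runs without parameter inflation. This matches the smallest value in the tuning series examined next.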

Here we examine how PO depends on the parameter inflation factor \(\alpha_0\), using a series of values (including 0.0, 0.1, 0.2, 0.5, 1.0, 1.5, 2.0, 5.0, 6.0, 7.0, 8.0, 9.0 and 10.0) to determine an optimal value. The dependence is assessed with the time-averaged ensemble mean RMSE of prior SST anomaly defined in Eq. (4) (Fig. 4). Overly small inflation factors (e.g., values less than 0.5) cause large SST anomaly errors. Overly large inflation factors (e.g., values larger than 6) make the quality of the SST anomaly analysis unstable. A mid-range value of 2.0 is therefore used in the PO experiments of this study.

In addition, to prevent extreme estimated values, the estimated parameters are bounded between 0.95 and 1.05 times their truth values.

3.5.2 Localization

Because of sampling error, an EnKF with a finite ensemble often produces spurious correlations between model states and remote observations. Covariance localization eases this issue. Many kinds of localization have been developed, such as static (e.g., Hamill et al. 2001), adaptive (e.g., Anderson 2007; Bishop and Hodyss 2007) and compensatory (e.g., Wu et al. 2014) approaches. In this study, the state estimation uses the widely used static localization function of Gaspari and Cohn (1999; GC function). Karspeck and Anderson (2007) applied this localization scheme to ensemble data assimilation. They found that isotropic localization in the ZC model could introduce noisy waves that could not be damped. To partly ease this issue, the halfwidth of the GC function is set to 1000 km × cos(lat), where lat is the latitude of the observation. This also reflects the latitudinal variation of the oceanic Rossby deformation radius. For PO, the same localization algorithm as in SE is used.
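The latitude-dependent localization weight can be sketched with the standard fifth-order Gaspari-Cohn function. The sketch assumes great-circle distances on a sphere of radius 6371 km, and it treats "halfwidth" in the usual convention where the weight reaches zero at twice the halfwidth. Function names are hypothetical.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0


def great_circle_km(lon1, lat1, lon2, lat2):
    """Haversine distance in km between points given in degrees."""
    lon1, lat1, lon2, lat2 = map(np.radians, (lon1, lat1, lon2, lat2))
    a = np.sin((lat2 - lat1) / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2
    return 2.0 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


def gaspari_cohn(dist, halfwidth):
    """Gaspari-Cohn (1999) fifth-order piecewise rational weight; zero beyond 2 * halfwidth."""
    r = np.abs(np.asarray(dist, dtype=float)) / halfwidth
    inner = (((-0.25 * r + 0.5) * r + 0.625) * r - 5.0 / 3.0) * r**2 + 1.0
    with np.errstate(divide="ignore"):  # the 1/r term is only used where 1 < r < 2
        outer = ((((r / 12.0 - 0.5) * r + 0.625) * r + 5.0 / 3.0) * r - 5.0) * r + 4.0 - 2.0 / (3.0 * r)
    return np.where(r <= 1.0, inner, np.where(r < 2.0, outer, 0.0))


def localization_weight(obs_lon, obs_lat, grid_lon, grid_lat, base_km=1000.0):
    """Weight between an observation and a grid point, with halfwidth base_km * cos(obs latitude)."""
    halfwidth = base_km * np.cos(np.radians(obs_lat))
    return gaspari_cohn(great_circle_km(obs_lon, obs_lat, grid_lon, grid_lat), halfwidth)
```

The weight multiplies the regression coefficient in the state and parameter updates. At the equator the halfwidth is 1000 km; at the 27.5° edge of the observation grid it shrinks to about 887 km.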

3.5.3 Experiments

With the above initial conditions of the assimilation model, the observing system, and the state and parameter adjustment schemes, three experiments are conducted to evaluate the two assimilation algorithms:

- an ensemble control run without observational constraint (CTL in Sect. 3.3),
- SE, and
- PO.

The three experiments use the same ensemble initial conditions, and the two data assimilation algorithms use the same observations. Following the observing system design, observations on the 1st day of each month are assimilated into the assimilation model, so the assimilation frequency is once a month. PO performs SE and parameter optimization simultaneously. The total data assimilation period is 200 model years, and the model time of the initial condition is reset to zero. Following DAEPC (Zhang et al. 2012), PO is activated after five years of SE to ensure a "quasi-equilibrium" state in SE. The results of the first 100 model years are discarded, and those of the last 100 model years are used for error analysis and statistics.

Two further points should be clarified. First, stochastic atmospheric forcing (such as synoptic-scale atmospheric processes, westerly wind bursts and the Madden-Julian oscillation) also influences ENSO predictability (e.g., Karspeck et al. 2006; Cheng et al. 2010). Here, however, we focus on model error, so no stochastic atmospheric (wind) forcing is imposed in the ENSO predictions of this study. Second, no bias correction of SST anomaly is performed, which may benefit PO (Cheng et al. 2010).

4 Impact of PO on ENSO analysis

Building on the sensitivity results of Sect. 3.4, we investigate the impact of PO on the ENSO analysis. Results of CTL are not shown because its RMSEs are much larger.

4.1 Model states

If the model parameters are reasonably estimated, the model error will be reduced and model states will be correspondingly improved. We first investigate the overall impacts of PO on the analysis of three key variables (i.e., SST anomaly, zonal wind stress anomaly and thermocline depth anomaly), and then analyze the impact of PO on the analysis of El Niño (warm) and La Niña (cold) events.

Figure 5 shows the time series of the RMSEs of prior (background/predicted) SST anomaly (Fig. 5a), zonal wind stress anomaly (Fig. 5b) and thermocline depth anomaly (Fig. 5c) for SE (black) and PO (blue). For clarity, only the results of the first 100 model years are displayed, and the RMSE here is \(\text{RMSE}_s\) in Eq. (4). Figure 5a shows that PO produces a better SST anomaly than SE at most analysis steps, with a few exceptions. Only the SST anomaly is observed and a univariate adjustment is used. Even so, the SST anomaly improved by PO also improves the other model variables (Fig. 5b, c).

Fig. 6 displays the spatial distributions of the RMSEs of the same variables as in Fig. 5: prior SST anomaly (Fig. 6a, b), zonal wind stress anomaly (Fig. 6c, d) and thermocline depth anomaly (Fig. 6e, f). Panels a, c and e show SE, and panels b, d and f show PO. Here, the RMSE at the (i, j)th SST anomaly grid point is computed by

\[\text{RMSE}_{i,j} = \sqrt{\frac{1}{S}\sum_{s=1}^{S}\left(\bar{X}_{i,j,s}^{\text{prior}} - X_{i,j,s}^{\text{tru}}\right)^{2}}, \qquad (7)\]

where S is the number of analysis steps, s indexes the analysis step, and the other symbols are as in Eq. (4). Compared to SE, PO markedly improves the SST anomaly in the central and eastern equatorial Pacific, which in turn reduces the errors in the other model variables. Combined with the sensitivity results (Sect. 3.4), this demonstrates that PO greatly improves the model states in the regions most sensitive to the parameters.

To assess the impact of PO on the ENSO analysis, Fig. 7 shows time series (model years 100–200) of the absolute errors of the prior ensemble mean NINO3.4 index for SE and PO. The absolute error is the absolute value of the difference between the prior value and the truth. In general, PO yields a more accurate NINO3.4 index than SE at most analysis steps, with some exceptions. Compared with the true evolution of the NINO3.4 index (Fig. 7a, c), PO outperforms SE for most relatively warm and cold events. The failure cases (i.e., PO not significantly better than SE) usually occur during quiet (neutral) periods.
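On the SST anomaly grid of Sect. 2.1, the NINO3.4 box (5°S–5°N, 170°W–120°W) contains 6 × 9 grid points. The sketch below computes the index and its absolute error. It uses an unweighted box mean, which is an assumption; within ±5° the cos(lat) area weights differ by less than 0.4 %. The helper names are hypothetical.

```python
import numpy as np

MODEL_LON = 101.25 + 5.625 * np.arange(34)   # SST anomaly grid of Sect. 2.1
MODEL_LAT = -29.0 + 2.0 * np.arange(30)
# NINO3.4 box: 5S-5N, 170W-120W (i.e., 190E-240E)
IN_LON = (MODEL_LON >= 190.0) & (MODEL_LON <= 240.0)
IN_LAT = (MODEL_LAT >= -5.0) & (MODEL_LAT <= 5.0)


def nino34(ssta):
    """Unweighted NINO3.4 box mean of an SST anomaly array shaped (..., n_lat, n_lon)."""
    box = ssta[..., IN_LAT, :][..., IN_LON]
    return box.mean(axis=(-2, -1))


def nino34_abs_error(prior_mean, truth):
    """Absolute error of the prior ensemble mean NINO3.4 index at each analysis step."""
    return np.abs(nino34(prior_mean) - nino34(truth))
```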

Fig. 7 Time series (model years 100–200) of the absolute error (b, d) (unit: °C) of the prior ensemble mean NINO3.4 index for state estimation (black) and parameter optimization (blue). The results are split evenly into two parts. Time series of the "true" NINO3.4 index (red curves in a and c) are also plotted for reference

To confirm the above conclusions, we first define a strong El Niño (La Niña) as a warm (cold) event in which the NINO3.4 index is greater (less) than 1 °C (−1 °C) (Chen et al. 2004). The composite strong El Niño and La Niña are then computed from the monthly posterior (analysis) results of SE and PO between January 1 of model year 101 and December 1 of model year 199. Figure 8b, c shows the absolute errors of the analysis composite El Niño produced by SE and PO, and the true composite El Niño is plotted in Fig. 8a. Compared to SE, PO improves the SST anomaly in the central and eastern equatorial Pacific during strong El Niño episodes. The same conclusion holds for La Niña events (Fig. 9b, c).

Longitude-time diagrams of the relative errors of SST anomaly, zonal wind stress anomaly and thermocline depth anomaly further demonstrate these points. The relative errors along the equator between PO and SE are computed as follows. For each analysis step and each SST anomaly longitude, the analysis ensemble means of the three variables produced by PO and SE are first averaged between 5°S and 5°N. The true counterpart is then subtracted to obtain their respective absolute errors. Finally, the relative error along the equator is calculated as:

\[\text{RE}_{i} = \left|\bar{X}_{i}^{\text{PO}} - \bar{X}_{i}^{\text{tru}}\right| - \left|\bar{X}_{i}^{\text{SE}} - \bar{X}_{i}^{\text{tru}}\right|, \qquad (8)\]

where i indexes the zonal grid point of SST anomaly. The overbar denotes the meridional arithmetic mean between 5°S and 5°N, taken of the analysis ensemble mean of X (for PO and SE) or of the truth of X. X stands for any of the three variables. A negative (positive) relative error means that PO is better (worse) than SE.

Figure 10d–f shows the longitude-time diagram for model years 180–190, with the truth of X plotted in Fig. 10a–c. PO is better than SE for the strong La Niña of year 182 and the strong El Niño of year 184. That is, during these periods the negative relative errors in Fig. 10d–f coincide with the extreme values in Fig. 10a–c. During neutral periods, PO shows no significant advantage over SE. PO is better than SE for most, but not all, strong events; for example, during the strong El Niño of year 180, PO is slightly worse than SE. Although only a 10-year interval is shown here, the same conclusion can be drawn from the whole assimilation period.
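Two calculations from this subsection are sketched here: the strong-event composites and the equatorial relative error. Two points are assumptions. First, strong events are selected with the true NINO3.4 index. Second, the "absolute error of the composite" is taken as the absolute difference between the analysis and true composites. All function names are hypothetical.

```python
import numpy as np


def strong_event_masks(nino34_index, threshold=1.0):
    """Masks of strong El Nino (index > threshold) and La Nina (index < -threshold) months."""
    return nino34_index > threshold, nino34_index < -threshold


def composite_abs_error(analysis, truth, mask):
    """|composite of analysis - composite of truth| over selected months; arrays are (T, n_lat, n_lon)."""
    return np.abs(analysis[mask].mean(axis=0) - truth[mask].mean(axis=0))


def equatorial_relative_error(x_po, x_se, x_true, lat, band=5.0):
    """Relative error along the equator at one analysis step; fields are (n_lat, n_lon).

    Negative values mean PO is closer to the truth than SE.
    """
    rows = np.abs(lat) <= band  # 5S-5N rows
    po, se, tru = (f[rows].mean(axis=0) for f in (x_po, x_se, x_true))
    return np.abs(po - tru) - np.abs(se - tru)
```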

Fig. 8 a Composite El Niño of the truth; b and c absolute errors of the analysis (posterior) composite El Niño produced by state estimation and parameter optimization, respectively; d and e absolute errors of the predicted composite El Niño at 6-month lead produced by state estimation and parameter optimization, respectively. The composite El Niño is computed from January 1 of model year 101 to December 1 of model year 199 at 1-month intervals. The criterion for El Niño is a NINO3.4 index greater than 1 °C. Panels b–e use the same shading scale

Fig. 9. Same as Fig. 8 but for the composite La Niña. A La Niña is defined here as a NINO3.4 index less than −1 °C

Fig. 10. Longitude-time diagrams of SST anomaly (a, d; unit: °C), zonal wind stress anomaly (b, e; unit: dyn cm⁻²) and thermocline depth anomaly (c, f; unit: m) for model years 180–190. The upper row shows the truth. The lower row shows the relative error, i.e., the absolute error of the model state produced by parameter optimization minus that produced by state estimation

4.2 Estimated parameters

Given the improvement in model states brought by PO, it is natural to examine how the estimated parameters vary.

We first check the spatial distributions of the estimated parameters. From the analysis ensemble means of the SST anomaly parameters over model years 100–200, we compute the time mean and the standard deviation in time at each SST anomaly grid point. The other five parameters give similar results, so only θ is shown (Fig. 11). Most of the changes and variability of θ occur in the central and eastern Pacific, where the SST anomaly is most sensitive (Fig. 3b).
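The per-grid-point statistics described above amount to a time mean and a temporal standard deviation along the analysis-step axis. A minimal NumPy sketch follows. The function name `parameter_time_stats`, the (time, lat, lon) layout, the validity mask and the choice of population standard deviation (`ddof=0`) are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def parameter_time_stats(p, valid):
    """Time mean and temporal standard deviation of one parameter field.

    p: shape (T, ny, nx), analysis ensemble mean at each analysis step.
    valid: shape (ny, nx), True at SST anomaly grid points.
    Points outside `valid` are set to NaN.
    """
    mean = np.where(valid, p.mean(axis=0), np.nan)
    std = np.where(valid, p.std(axis=0), np.nan)  # population std (ddof=0)
    return mean, std


theta = np.array([[[1.0, 2.0]], [[3.0, 2.0]]])  # two steps on a 1 x 2 grid
valid = np.array([[True, False]])
mean, std = parameter_time_stats(theta, valid)
print(mean, std)
```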

Next, we examine time series of the RMSEs of the analysis ensemble means of θ (Fig. 12a), \(T_1\) (Fig. 12b), \(T_2\) (Fig. 12c), \(S_1\) (Fig. 12d), \(S_2\) (Fig. 12e) and γ (Fig. 12f). Here the RMSE is defined as

\[ \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i,j}\left(\overline{P}_{i,j} - P^{\text{tru}}_{i,j}\right)^{2}} \]

where i and j index the valid SST anomaly grid points and N is the number of such points. \(\overline{P}_{i,j}\) is the analysis ensemble mean of a given parameter and \(P^{\text{tru}}_{i,j}\) is its true value. Other model errors remain, namely biases in parameters other than the six SST anomaly parameters. PO therefore tries to compensate for the total model error by optimizing the SST anomaly parameters alone, so the estimated parameters need not converge strictly to their true values. Figure 12 shows that during the assimilation period (model years 100–200), the RMSEs of all six parameters remain smaller than their initial errors. The most (least) stable parameter is \(T_2\) (\(S_2\)).
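The spatial RMSE of a parameter, evaluated at every analysis step, can be sketched with a boolean mask of valid SST anomaly points. This is a hedged illustration. The name `parameter_rmse`, the array shapes, and the assumption that the true parameter is a fixed (ny, nx) field are hypothetical.

```python
import numpy as np


def parameter_rmse(p_est, p_tru, valid):
    """Spatial RMSE of an estimated parameter at each analysis step.

    p_est: shape (T, ny, nx) analysis ensemble means.
    p_tru: shape (ny, nx) true values.
    valid: shape (ny, nx) boolean mask of valid SST anomaly points.
    Returns an array of shape (T,).
    """
    sq = (p_est - p_tru) ** 2
    # Boolean mask on the last two axes -> shape (T, n_valid).
    return np.sqrt(sq[..., valid].mean(axis=-1))


p_tru = np.ones((1, 3))
valid = np.array([[True, True, False]])  # third point is not a valid SSTA point
p_est = np.array([[[1.0, 1.0, 5.0]], [[1.3, 0.9, 5.0]]])
rmse = parameter_rmse(p_est, p_tru, valid)
print(rmse)
```

The masked third point (value 5.0) does not contribute, so the first step has zero error even though that point is far from the truth.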

5 Impact of PO on ENSO prediction

Building on the analysis in the last section, this section examines the impact of PO on ENSO prediction in three respects. First, we give an overall assessment of ENSO predictability. Second, we compare the roles of model parameters and initial conditions in ENSO prediction skill. Last, we explore the impact of PO on the seasonal forecast skill of ENSO. All evaluations are based on the following forecast experiments. Starting from the SE and PO analysis fields on the first day of each month, from January of model year 101 to December of model year 199, the ZC ENSO model is integrated for 5 model years. This gives 99 × 12 = 1188 forecasts for each of SE and PO. For PO, the forecasts use the geographically dependent parameters optimized at the last analysis step.
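The forecast bookkeeping can be checked with a short enumeration of start dates. It also shows why each start month contributes R = 99 cases to the skill scores defined next. The function name `forecast_start_dates` and the (model_year, month) tuple layout are illustrative assumptions.

```python
def forecast_start_dates(first_year=101, last_year=199):
    """(model_year, month) of each forecast start: day 1 of every month from
    January of `first_year` through December of `last_year`."""
    return [(year, month)
            for year in range(first_year, last_year + 1)
            for month in range(1, 13)]


starts = forecast_start_dates()
n_per_start_month = sum(1 for _, month in starts if month == 1)
forecast_length_months = 5 * 12  # each forecast runs for 5 model years
print(len(starts), n_per_start_month, forecast_length_months)  # 1188 99 60
```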

5.1 Predictability of ENSO

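The anomaly correlation coefficient (ACC) and RMSE of the predicted ensemble-mean NINO3.4 index relative to the truth are used to evaluate the overall predictability of ENSO. For the sth lead time and mth start month, they are defined as

\[ \mathrm{ACC}(s,m) = \frac{\sum_{r=1}^{R}\left(\overline{X}^{f}_{r} - \overline{\overline{X}}^{f}\right)\left(X^{\text{tru}}_{r} - \overline{X}^{\text{tru}}\right)}{\sqrt{\sum_{r=1}^{R}\left(\overline{X}^{f}_{r} - \overline{\overline{X}}^{f}\right)^{2}}\,\sqrt{\sum_{r=1}^{R}\left(X^{\text{tru}}_{r} - \overline{X}^{\text{tru}}\right)^{2}}} \]

and

\[ \mathrm{RMSE}(s,m) = \sqrt{\frac{1}{R}\sum_{r=1}^{R}\left(\overline{X}^{f}_{r} - X^{\text{tru}}_{r}\right)^{2}} \]

where R is the number of forecast cases (99 here) and r indexes the case. The superscript “f” denotes a forecast quantity. \(\overline{X}^{f}_{r}\) (\(X^{\text{tru}}_{r}\)) is the forecast ensemble mean (true value) of the NINO3.4 index for case r, with the seasonal cycle removed. In this study the climatology is computed from the truth model; in practice it should be computed from observations. \(\overline{\overline{X}}^{f}\) (\(\overline{X}^{\text{tru}}\)) is the mean of \(\overline{X}^{f}_{r}\) (\(X^{\text{tru}}_{r}\)) over the R cases.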
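For one lead time s and start month m, the two scores reduce to a centred correlation and a root-mean-square difference over the R cases. The NumPy sketch below assumes the inputs are already anomalies, with the seasonal cycle removed. The optional `clim_std` argument, a hypothetical addition, mimics normalizing the RMSE by a climatological standard deviation.

```python
import numpy as np


def acc_rmse(fcst, truth, clim_std=1.0):
    """ACC and RMSE over R forecast cases for one lead time s and start month m.

    fcst: ensemble-mean NINO3.4 anomalies (seasonal cycle removed), shape (R,).
    truth: true NINO3.4 anomalies for the same cases, shape (R,).
    clim_std: optional RMSE normalization (1.0 leaves it unnormalized).
    """
    f = np.asarray(fcst, dtype=float)
    t = np.asarray(truth, dtype=float)
    fa = f - f.mean()  # departure from the case mean of the forecasts
    ta = t - t.mean()  # departure from the case mean of the truth
    acc = np.sum(fa * ta) / np.sqrt(np.sum(fa ** 2) * np.sum(ta ** 2))
    rmse = np.sqrt(np.mean((f - t) ** 2)) / clim_std
    return float(acc), float(rmse)


# Forecast has the right phase but half the amplitude of the truth.
acc, rmse = acc_rmse([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(acc, rmse)
```

The toy case shows why both scores are used. An amplitude error leaves the ACC at 1.0 but gives a clearly nonzero RMSE. ACC therefore mainly measures phase skill, while RMSE also captures amplitude errors.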

Figure 13a–d shows ACC (Fig. 13a, c) and RMSE (Fig. 13b, d) of the predicted ensemble-mean NINO3.4 index as functions of lead time (in months) and start month for SE and PO, respectively. If an ACC of 0.6 is adopted as an ad hoc threshold for the valid lead time, PO extends the valid lead of ENSO from about 30 (24) model months for SE to about 36 (32) model months for summer and autumn (spring and winter). That is, PO extends the valid lead time by up to 20 % (33 %) for summer and autumn (spring and winter). The improvement in phase depends on season, but PO reduces the amplitude errors of SE systematically for all seasons. In addition, the RMSE results show that the spring predictability barrier (SPB) exists in both experiments, although it is weaker for PO than for SE. The SPB that remains in PO limits the improvement in the interannual predictability of the ENSO phase for boreal winter and spring.
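The valid-lead criterion and the quoted percentages can be reproduced with a small sketch. It assumes that `acc_by_lead[k]` holds the ACC at a lead of k + 1 months and that the valid lead is the number of consecutive leads, counted from the first, with ACC ≥ 0.6. Both conventions and the function names are hypothetical, not taken from the paper.

```python
import numpy as np


def valid_lead_time(acc_by_lead, threshold=0.6):
    """Number of consecutive lead months, starting at lead 1, with ACC >= threshold.

    acc_by_lead[k] is assumed to be the ACC at a lead of k + 1 months.
    """
    acc = np.asarray(acc_by_lead, dtype=float)
    below = np.flatnonzero(acc < threshold)
    return int(below[0]) if below.size else int(acc.size)


def percent_extension(lead_se, lead_po):
    """Relative extension of the valid lead time of PO over SE, in percent."""
    return 100.0 * (lead_po - lead_se) / lead_se


# Valid leads quoted in the text: summer/autumn 30 -> 36, spring/winter 24 -> 32.
print(percent_extension(30, 36), round(percent_extension(24, 32), 1))  # 20.0 33.3
```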

Fig. 13. ACC (a, c, e) and RMSE (b, d, f) of the predicted ensemble-mean NINO3.4 index as functions of lead time (in months) and start month. The panels show state estimation (a, b), parameter optimization (c, d), and a forecast experiment that combines the original biased first-guess parameters of state estimation with the initial states from parameter optimization (e, f). Solid curves in a, c and e (b, d and f) are the 0.6 ACC (1.0 RMSE) contours. RMSEs are normalized by the climatological standard deviation of the NINO3.4 index produced by the “truth” model, so a value of 1.0 equals that standard deviation

Note that ENSO predictability here is much higher than in the real world. The model errors are idealized, and both atmospheric stochastic forcing and radiative forcing are excluded. Even so, quantifying the advantage of PO over SE still yields some insight. Averaged roughly over lead times up to 4 model years and all 12 start months, PO raises the ACC from 0.66 for SE to 0.76 and reduces the RMSE from 0.80 to 0.64. Combining the ACC and RMSE results, ENSO predictability is enhanced by about 18 %.
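The text does not state how the ACC and RMSE changes are combined into "about 18 %". One reading reproduces the figure: average the relative ACC gain and the relative RMSE reduction with equal weights. The arithmetic below shows that reading, which is an assumption and not the authors' stated method.

```python
# Averaged scores quoted in the text (SE vs PO).
acc_se, acc_po = 0.66, 0.76
rmse_se, rmse_po = 0.80, 0.64

acc_gain = (acc_po - acc_se) / acc_se      # relative ACC increase, about 15 %
rmse_cut = (rmse_se - rmse_po) / rmse_se   # relative RMSE reduction, 20 %
combined = 0.5 * (acc_gain + rmse_cut)     # assumed equal-weight combination

print(f"{100 * acc_gain:.1f} {100 * rmse_cut:.1f} {100 * combined:.1f}")  # 15.2 20.0 17.6
```

Under this assumption, the combined improvement of about 17.6 % rounds to the 18 % quoted above.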

5.2 Roles of parameters and initial conditions

Reducing model errors also improves the initial conditions, so the improved ENSO predictability may be attributed both to the refined initial conditions and to the corrected SST anomaly parameters. It is important to determine which factor dominates. Previous studies in weather forecasting and climate prediction (e.g., Zhu and Navon 1999; Stainforth et al. 2005) found that model parameters matter more to prediction skill than initial conditions. The present experiments offer an opportunity to test whether this conclusion also holds for ENSO prediction or whether other possibilities arise.

To answer this question, an additional forecast experiment is conducted. It starts from the same 1188 initial states as PO but uses the original biased first-guess parameters of SE. Figure 13e, f displays its results. Comparing SE with this extra experiment shows that the improvements in ENSO phase and amplitude due to refined initial conditions are confined to very short lead times. Thus, long-lead ENSO prediction skill is mainly controlled by model errors rather than initial conditions, which is consistent with previous results.
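With the three experiments (SE, the extra experiment, PO), the skill gain of PO over SE can be split additively into an initial-condition part and a parameter part. The split compares the runs that differ in only one ingredient. The paper compares the panels qualitatively. The sketch below is one simple way to make that comparison quantitative. The function name and the toy skill values are hypothetical and are not results from the paper.

```python
import numpy as np


def attribute_skill(skill_se, skill_ic_only, skill_po):
    """Split the PO-minus-SE skill gain into two additive parts.

    skill_se:      SE initial states, biased parameters
    skill_ic_only: PO initial states, biased parameters (the extra experiment)
    skill_po:      PO initial states, optimized parameters
    Inputs may be scalars or arrays over lead time.
    """
    ic_part = np.asarray(skill_ic_only) - np.asarray(skill_se)
    param_part = np.asarray(skill_po) - np.asarray(skill_ic_only)
    return ic_part, param_part


# Toy ACC values at a short and a long lead (illustrative only).
ic, par = attribute_skill([0.5, 0.4], [0.6, 0.4], [0.7, 0.6])
print(ic, par)
```

The two parts always sum to the total PO-minus-SE difference. The order of comparison (initial conditions first, then parameters) is itself a choice, because with nonlinear interactions the reverse order could give a different split.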

5.3 Seasonal forecast skill of ENSO

Comparing Fig. 13a, b with Fig. 13c, d shows that PO markedly improves the seasonal forecast skill of ENSO. Taking the 6-month lead as an example, we compare the performance of PO and SE as follows.

Similar to Fig. 7, Fig. 14 shows the time series, on the first day of each model month, of the ensemble-mean NINO3.4 index (Fig. 14a, c) and its absolute error (Fig. 14b, d) at 6-month lead. SE is shown in black and PO in blue, with the truth (red) plotted as a reference. Relative to SE, PO better predicts the intensity of most warm and cold events. To confirm this, we also plot the absolute errors of the composite strong El Niño (Fig. 8d, e) and strong La Niña (Fig. 9d, e) at 6-month lead, as was done for the analysis results. Comparing Fig. 8b with Fig. 8d indicates that, on the seasonal forecast timescale, SE may amplify errors in the eastern equatorial Pacific and propagate them westward into the central equatorial Pacific. The large SST anomaly forecast errors of SE occur mostly in the regions where the SST anomaly is most sensitive (Fig. 3b). Because SE does not correct the SST anomaly parameters, the loss of ENSO predictability caused by parameter uncertainty may concentrate in these sensitive regions. In contrast, PO retains almost the same SST anomaly accuracy at 6-month lead as in its analysis (compare Fig. 8c with Fig. 8e). For the composite strong La Niña, SE noticeably amplifies the SST anomaly errors at 6-month lead in the western and eastern Pacific relative to its analysis (Fig. 9b). Interestingly, in one area of the central equatorial Pacific, the seasonal forecast skill of SE for La Niña does not degrade. Overall, SE rapidly loses ENSO forecast skill in the sensitive regions on the seasonal timescale. For PO, the loss of seasonal forecast skill for La Niña relative to its analysis occurs mainly in the western equatorial Pacific. In sum, PO gives better seasonal forecasts of strong El Niños and La Niñas than SE, and the improvement is larger for El Niño than for La Niña. This can be explained by El Niño events being both more frequent and larger in amplitude than La Niña events.

Fig. 14. Same as Fig. 7 but for the 6-month lead forecasts

6 Summary and discussions

Erroneously set model parameters are one component of the model errors that limit ENSO predictability, and they can be optimized using observations. As a first step toward studying the impact of parameter optimization on ENSO prediction skill, we use an intermediate (Zebiak-Cane) ENSO model. With it, we set up a biased twin experiment using an ensemble adjustment Kalman filter (EAKF; Anderson 2001, 2003). Six of the 12 SST anomaly parameters are optimized. Results show that parameter optimization improves ENSO predictability in three ways. It enhances the seasonal-interannual forecast skill by about 18 %. It extends the valid lead time by up to 20 % (33 %) for summer and autumn (winter and spring). It also noticeably weakens the spring predictability barrier. In addition, the cross experiment shows that, at long lead times, optimized parameters contribute more to ENSO prediction skill than improved initial conditions.
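Parameter optimization with an EAKF rests on state augmentation: parameters are appended to the model state, so an SST anomaly observation updates them through their ensemble covariance with the observed variable. Below is a minimal sketch of one serial, single-observation EAKF update on such an augmented vector. It follows the two-step form of Anderson (2003): first adjust the observed-variable ensemble, then regress the increments onto every element. The sketch omits covariance inflation and localization. It also omits whatever treatment of geographically dependent parameters the authors actually use. The function name, array layout and toy ensemble are hypothetical.

```python
import numpy as np


def eakf_update(ens, obs_index, y_obs, obs_var):
    """Assimilate one directly observed scalar into an augmented ensemble.

    ens: shape (K, n); row k is member k's vector [model state ..., parameters ...].
    obs_index: column of `ens` that the observation measures (e.g., one SSTA point).
    y_obs, obs_var: observed value and observation-error variance.
    """
    y = ens[:, obs_index]
    y_mean = y.mean()
    prior_var = y.var(ddof=1)

    # Step 1: posterior mean and variance of the observed variable (Gaussian
    # product), then a deterministic shift-and-contract of its ensemble.
    post_var = 1.0 / (1.0 / prior_var + 1.0 / obs_var)
    post_mean = post_var * (y_mean / prior_var + y_obs / obs_var)
    y_new = post_mean + np.sqrt(post_var / prior_var) * (y - y_mean)
    dy = y_new - y

    # Step 2: regress the increments onto every column. Parameters change only
    # through their sample covariance with the observed variable.
    anomalies = ens - ens.mean(axis=0)
    cov = anomalies.T @ (y - y_mean) / (len(y) - 1)
    return ens + np.outer(dy, cov / prior_var)


# Toy ensemble: column 0 is an observed SSTA value, column 1 a parameter.
y = np.array([1.0, 2.0, 3.0, 4.0])
ens = np.column_stack([y, 0.5 * y])
post = eakf_update(ens, obs_index=0, y_obs=4.0, obs_var=y.var(ddof=1))
print(post.mean(axis=0))
```

Here the parameter is perfectly correlated with the observed variable, so it moves by half of each observation-space increment. A parameter with no ensemble covariance with the observed variable would not be updated at all.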

Note that we restrict the estimated parameters to within 5 % error. This is a very restrictive bound, since the truth is in principle unknown. In reality, parameter errors could be as large as a factor of two or more. To examine whether parameter optimization remains effective in such cases, we perform an extreme experiment that imposes no bounds on the estimated parameters. In this study, unconstrained parameter optimization performs slightly better than constrained parameter optimization (not shown). However, without any constraint, an estimated parameter may take a physically meaningless value. Thus, some bound is usually imposed on the estimated parameters in practice.
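A bound like the 5 % limit above can be enforced by clipping the parameter part of each member after every analysis step. This is a hedged sketch. It assumes the bound is a ±5 % band around a reference value `p_ref`; in this twin setting that could plausibly be the true value, but the text does not specify. Simple clipping is also an assumed mechanism, not the authors' documented one. Passing `rel_bound=None` mimics the unconstrained experiment.

```python
import numpy as np


def bound_parameters(p, p_ref, rel_bound=0.05):
    """Clip estimated parameters to within +/- rel_bound of p_ref (elementwise).

    rel_bound=None returns p unchanged (the unconstrained case).
    np.minimum/np.maximum keep the band ordered when p_ref is negative.
    """
    if rel_bound is None:
        return np.asarray(p, dtype=float)
    lo = np.asarray(p_ref, dtype=float) * (1.0 - rel_bound)
    hi = np.asarray(p_ref, dtype=float) * (1.0 + rel_bound)
    return np.clip(p, np.minimum(lo, hi), np.maximum(lo, hi))


bounded = bound_parameters(np.array([0.90, 1.02, 1.20]), 1.0)
print(bounded)
```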

Although the parameter optimization results here are promising, many challenges remain before the approach can be applied to real ENSO prediction. First, the more complicated model errors of the real world may degrade the performance of parameter optimization. With the model-error configuration of this biased twin experiment, both state estimation and parameter optimization perform very well. This explains why parameter optimization improves only marginally on state estimation. Second, long-term real observations of SST anomaly (such as the Kaplan SST product) and other atmospheric and oceanic observations should be assimilated into the prediction model. This would improve both state estimation and parameter optimization. Third, follow-up studies should consider the impacts of atmospheric stochastic forcing and radiative forcing on ENSO prediction. Last, future work should compare the effects of parameter optimization and statistical bias correction. An optimal combination of the two approaches may significantly improve ENSO predictions.

Abbreviations

- EAKF: Ensemble adjustment Kalman filter
- SE: State estimation
- PO: Parameter optimization
- CTL: Model control run
- ENSO: El Niño-Southern Oscillation

References

Aksoy A, Zhang F, Nielsen-Gammon JW (2006) Ensemble-based simultaneous state and parameter estimation in a two-dimensional sea-breeze model. Mon Weather Rev 134:2951–2970

Anderson JL, Anderson SL (1999) A Monte Carlo implementation of the nonlinear filtering problem to produce ensemble assimilations and forecasts. Mon Weather Rev 127:2741–2758

Anderson JL (2001) An ensemble adjustment Kalman filter for data assimilation. Mon Weather Rev 129:2884–2903

Anderson JL (2003) A local least squares framework for ensemble filtering. Mon Weather Rev 131:634–642

Anderson JL (2007) Exploring the need for localization in ensemble data assimilation using a hierarchical ensemble filter. Phys D 230:99–111

Anderson JL (2008) Spatially and temporally varying adaptive covariance inflation for ensemble filters. Tellus 61A:72–83

Annan JD, Hargreaves JC, Edwards NR, Marsh R (2004) Parameter estimation in an intermediate complexity earth system model using an ensemble Kalman filter. Ocean Model 8:135–154

Banks HT (1992a) Control and estimation in distributed parameter systems. In: Frontiers in applied mathematics, vol 11. SIAM, Philadelphia

Banks HT (1992b) Computational issues in parameter estimation and feedback control problems for partial differential equation systems. Phys D 60:226–238

Bishop CH, Hodyss D (2007) Flow adaptive moderation of spurious ensemble correlations and its use in ensemble-based data assimilation. Q J R Meteorol Soc 133:2029–2044

Cane MA, Zebiak SE, Dolan SC (1986) Experimental forecasts of El Niño. Nature 321:827–832

Chen D, Cane MA (2008) El Niño prediction and predictability. J Comput Phys 227:3625–3640

Chen D, Zebiak SE, Busalacchi AJ, Cane MA (1995) An improved procedure for El Niño forecasting: implications for predictability. Science 269:1699–1702

Chen D, Cane MA, Zebiak SE, Canizares R, Kaplan A (2000) Bias correction of an ocean-atmosphere coupled model. Geophys Res Lett 27:2585–2588

Chen D, Cane MA, Kaplan A, Zebiak SE, Huang D (2004) Predictability of El Niño over the past 148 years. Nature 428:733–736

Cheng Y, Tang Y, Jackson P, Chen D, Deng Z (2010) Ensemble construction and verification of the probabilistic ENSO prediction in the LDEO5 model. J Clim 23:5476–5497

Evensen G (1994) Sequential data assimilation with a nonlinear quasi-geostrophic model using Monte Carlo methods to forecast error statistics. J Geophys Res 99:10143–10162

Evensen G (2007) Data assimilation: the ensemble Kalman filter. Springer, Berlin, p 187

Gaspari G, Cohn SE (1999) Construction of correlation functions in two and three dimensions. Q J R Meteorol Soc 125:723–757

Gill AE (1980) Some simple solutions for heat-induced tropical circulation. Q J R Meteorol Soc 106:447–462

Hamill TM, Whitaker JS, Snyder C (2001) Distance-dependent filtering of background error covariance estimates in an ensemble Kalman filter. Mon Weather Rev 129:2776–2790

Han G, Wu X, Zhang S, Liu Z, Li W (2013) Error covariance estimation for coupled data assimilation using a Lorenz atmosphere and a simple pycnocline ocean model. J Clim 26:10218–10231

Hansen J, Penland C (2007) On stochastic parameter estimation using data assimilation. Phys D 230:88–98

Houtekamer PL, Mitchell HK, Deng X (2009) Model error representation in an operational ensemble Kalman filter. Mon Weather Rev 137:2126–2143

Jazwinski AH (1970) Stochastic processes and filtering theory. Academic Press, New York

Jin EK, Kinter JL III, Wang B, co-authors (2008) Current status of ENSO prediction skill in coupled ocean-atmosphere models. Clim Dyn 31:647–664

Kaplan A, Cane MA, Kushnir Y, Clement AC, Blumenthal MB, Rajagopalan B (1998) Analyses of global sea surface temperature 1856-1991. J Geophys Res 103:18567–18589

Karspeck AR, Anderson JL (2007) Experimental implementation of an ensemble adjustment filter for an intermediate ENSO model. J Clim 20:4638–4658

Karspeck AR, Kaplan A, Cane MA (2006) Predictability loss in an intermediate ENSO model due to initial error and atmospheric noise. J Clim 19:3572–3588

Keenlyside N, Latif M, Botzet M, Jungclaus J, Schulzweida U (2005) A coupled method for initializing El Niño Southern Oscillation forecasts using sea surface temperature. Tellus 57A:340–356

Kondrashov D, Sun C, Ghil M (2008) Data assimilation for a coupled ocean–atmosphere model, part II: parameter estimation. Mon Weather Rev 136:5062–5076

Laine M, Solonen A, Haario H, Järvinen H (2012) Ensemble prediction and parameter estimation system: the method. Q J R Meteorol Soc 138:289–297

Lee T, Boulanger JP, Foo A, Fu LL, Giering R (2000) Data assimilation by an intermediate coupled ocean-atmosphere model: application to the 1997–1998 El Niño. J Geophys Res 105:26063–26087

Liu Y, Liu Z, Zhang S, Rong X, Jacob R, Wu S, Lu F (2014) Ensemble-based parameter estimation in a coupled GCM using the adaptive spatial average method. J Clim. doi:10.1175/JCLI-D-13-00091.1

Mann M, Cane MA, Zebiak SE, Clement A (2005) Volcanic and solar forcing of El Niño over the past 1000 years. J Clim 18:447–456

McPhaden MJ, Zebiak SE, Glantz MH (2006) ENSO as an integrating concept in earth science. Science 314:1740–1745

Miyoshi T (2011) The Gaussian approach to adaptive covariance inflation and its implementation with the local ensemble transform Kalman filter. Mon Weather Rev 139:1519–1535

Moore AM, Kleeman R (1999) Stochastic forcing of ENSO by the intraseasonal oscillation. J Clim 12:1199–1220

Peng SQ, Li YN, Xie L (2013) Adjusting the wind stress drag coefficient in storm surge forecasting using an adjoint technique. J Atmos Ocean Technol 30:590–608

Penland C, Magorian T (1993) Prediction of Niño 3 sea surface temperatures using linear inverse modeling. J Clim 6:1067–1076

Stainforth DA, Aina T, Christensen C, co-authors (2005) Uncertainty in predictions of the climate response to rising levels of greenhouse gases. Nature 433:403–406

Sugiura N, co-authors (2008) Development of a four-dimensional variational coupled data assimilation system for enhanced analysis and prediction of seasonal to interannual climate variations. J Geophys Res 113:C10017. doi:10.1029/2008JC004741

Vossepoel FC, Van Leeuwen PJ (2007) Parameter estimation using a particle method: inferring mixing coefficients from sea level observations. Mon Weather Rev 135:1006–1020

Whitaker JS, Hamill TM, Wei X, Song Y, Toth Z (2008) Ensemble data assimilation with the NCEP global forecast system. Mon Weather Rev 136:463–482

Wu X, Zhang S, Liu Z, Rosati A, Delworth T, Liu Y (2012) Impact of geographic dependent parameter optimization on climate estimation and prediction: simulation with an intermediate coupled model. Mon Weather Rev 140:3956–3971

Wu X, Zhang S, Liu Z, Rosati A, Delworth T (2013) A study of impact of the geographic dependent of observing system on parameter estimation with an intermediate coupled model. Clim Dyn 40(7–8):1789–1798

Wu X, Li W, Han G, Zhang S, Wang X (2014) A compensatory approach of the fixed localization in EnKF. Mon Weather Rev 142:3713–3733

Yu Y, Mu M, Duan W (2012) Does model parameter error cause a significant “Spring Predictability Barrier” for El Niño events in the Zebiak-Cane model? J Clim 25:1263–1277

Zebiak SE, Cane MA (1987) A model El Niño-Southern Oscillation. Mon Weather Rev 115:2262–2278

Zhang S, Anderson JL (2003) Impact of spatially and temporally varying estimates of error covariance on assimilation in a simple atmospheric model. Tellus 55A:126–147

Zhang S, Harrison MJ, Wittenberg AT, Rosati A, Anderson JL, Balaji V (2005) Initialization of an ENSO forecast system using a parallelized ensemble filter. Mon Weather Rev 133:3176–3201

Zhang S, Harrison JJ, Rosati A, Wittenberg AT (2007) System design and evaluation of coupled ensemble data assimilation for global oceanic climate studies. Mon Weather Rev 135:3541–3564

Zhang S, Liu Z, Rosati A, Delworth T (2012) A study of enhancive parameter correction with coupled data assimilation for climate estimation and prediction using a simple coupled model. Tellus 63A:10963. doi:10.3402/tellusa.v63i0.10963

Zhu Y, Navon IM (1999) Impact of parameter estimation on the performance of the FSU global spectral model using its full physics adjoint. Mon Weather Rev 127:1497–1517

Zupanski D, Zupanski M (2006) Model error estimation employing an ensemble data assimilation approach. Mon Weather Rev 134:1337–1354

Acknowledgments

The authors thank Mark A. Cane and Donna Lee for providing the codes of the ZC model. This research is co-sponsored by grants from the National Natural Science Foundation (41306006, 41376015, 41376013, 41176003, and 41206178), the National Basic Research Program (2013CB430304), the National High-Tech R&D Program (2013AA09A505), and the Global Change and Air–Sea Interaction (GASI-01-01-12) of China.

Cite this article

Wu, X., Han, G., Zhang, S. et al. A study of the impact of parameter optimization on ENSO predictability with an intermediate coupled model. Clim Dyn 46, 711–727 (2016). https://doi.org/10.1007/s00382-015-2608-z

DOI: https://doi.org/10.1007/s00382-015-2608-z