Abstract

We examine the spatial patterns of variability of annual-mean temperature in the control runs of eight coupled atmosphere–ocean general circulation models (AOGCMs) and of observations. We characterize the patterns of variability using empirical orthogonal functions (EOFs) and using a new technique based on what we call quasi-EOFs (qEOFs). The quasi-EOFs are computed based on the spatial pattern of the correlation between the temperature variation at a given grid point and the temperature defined over a pre-determined reference region, with a different region used for each quasi-EOF. For the first four quasi-EOFs, the reference regions are: the entire globe, the Niño3 region, Western Europe, and Siberia. Since the latter three regions are the centers of strong anomalies associated with the El Niño, North Atlantic, and Siberian oscillations, respectively, the spatial pattern of the covariance with temperature in these regions gives the structure of the model or observed El Niño, North Atlantic, and Siberian components of variability. When EOF analysis is applied to the model control runs, the patterns produced generally have no similarity to the EOF patterns produced from observational data. This is due in some cases to large NAO-like variability appearing as part of EOF1 along with ENSO-like variability, rather than as separate EOF modes. This is a disadvantage of EOF analysis. The fraction of the model time-space variation explained by these unrealistic modes of variability is generally greater than the fraction explained by the principal observed modes of variability. When qEOF analysis is applied to the model data, all three natural modes of variability are seen to a much greater extent. However, the fraction of global time-space variability that is accounted for by the model ENSO variability is, in our analysis, less than observed for all models except the HadCM2 model, but within 20% for another three models.
The space-time variation accounted for by the other modes is comparable to or somewhat larger than that observed in all models. As further teleconnection indicators, we examined both the Southern Oscillation Index (SOI) and its relation to tropical Pacific Ocean temperature variations (the qEOF2 amplitude), and the North Atlantic Oscillation Index (NAOI) and its relation to North Atlantic region temperatures (the qEOF3 amplitude). All models exhibit a relationship between these indices, and the qEOF amplitudes are comparable to those observed. Furthermore, the models show realistic spatial patterns in the correlation between local temperature variations and these indices.

1 Introduction

This is the first of two studies in which the interannual variability of the global fields of annual-mean temperature and precipitation as simulated by eight coupled, three-dimensional atmosphere–ocean general circulation models (AOGCMs) is examined and compared with observed variability. Climate model variability is of interest for a number of reasons. First, the ability of a model to successfully simulate key features of the observed variability of climate is an important test of the model processes, complementing its ability to simulate the observed mean climate state. Second, changes in climate at the regional level due to globally averaged warming resulting from the buildup of greenhouse gases (GHGs) might be expressed in part through a change in the amplitude, frequency, or nature of natural modes of climate variability (Hasselmann 1999; Corti et al. 1999; Monahan et al. 2000). If a model cannot successfully simulate these features of observed present-day variability, then confidence in the reliability of projected regional climatic changes must be diminished. Third, since model climate variability obscures the emergence of any anthropogenic signal in model simulations, it is important to properly characterize this variability.

A number of papers have examined various features of AOGCM variability using a variety of techniques. Key results from recent (1996 or later) studies of the control runs of AOGCMs are given in Table 1. Many studies have focused on the ability of AOGCMs to simulate observed patterns of variability associated with the El Niño-Southern Oscillation (ENSO); some have also focused on the wintertime Arctic Oscillation (AO) and North Atlantic Oscillation (NAO). In the three models where the AO has been examined (CCC, Fyfe et al. 1999; HadCM2, Osborn et al. 1999; HadCM2 and ECHAM4, Zorita and González-Rouco 2000), the simulated AO compares well with observations. Some models, however, fail to simulate a realistic ENSO, the most common problem being too small an amplitude of the variability (Mao and Robock 1998; Stouffer et al. 2000; Bell et al. 2000). Other, more recent, models simulate ENSO quite well (Yukimoto et al. 1996, 2000; Bacher et al. 1998; Collins 2000; Collins et al. 2001; Washington et al. 2000). A number of papers have separately analyzed tropical Pacific Ocean variability at sub-decadal and decadal time scales in AOGCMs, and have found the spatial pattern of decadal variability to be broadly similar to that of the sub-decadal (i.e., ENSO time scale) variability (e.g. CCSR, Kang 1996; Yukimoto et al. 1996, 2000; GFDL, Knutson and Manabe 1998; CSIRO, Walland et al. 2000). This is also true for observed temperature variations (Zhang et al. 1997). Some studies (e.g. Li and Hogan 1999, and the earlier work by Meehl et al. 1993) have shown that the nature and magnitude of tropical climate variability (both interannual and sub-seasonal) depend on the simulated climatic mean state, presumably reflecting the fact that the mean state influences the strength of the various feedback processes that give rise to variability.

Recently, the results of transient simulations of climatic change by AOGCMs have been made available through the Intergovernmental Panel on Climate Change (IPCC) Data Distribution Centre (DDC) website (http://ipcc-ddc.cru.uea.ac.uk/ ). These simulations begin in 1860, 1880, 1890 or 1900, and extend to 2100. Results are available for a control run and for one or more cases with GHG forcing only and with GHG plus direct sulfate aerosol forcing. In this study we examine the control-run surface-air temperature fields for eight models from the IPCC DDC website: the Canadian Climate Centre (Victoria, British Columbia) model (CCC); the Center for Climate System Research (University of Tokyo) model (CCSR); the Commonwealth Scientific and Industrial Research Organization (Aspendale, Australia) model (CSIRO); two versions of the European Centre for Medium Range Weather Forecasts-University of Hamburg model (ECHAM3 and ECHAM4); two model versions from the Hadley Centre for Climate Prediction and Research (Bracknell, UK), HadCM2 and HadCM3; and the NCAR Parallel Climate Model (PCM). Table 2 lists some of the properties of these models and the references in which the model simulation of the present-day climate is discussed. All of the models except HadCM3 and PCM use flux-adjustment in order to minimize climate drift and errors in the sea surface temperature climatology. ECHAM3 and ECHAM4 are coupled to two different ocean models, as explained in the references given in Table 2. We chose these particular models from among those available from the IPCC DDC because simulation results with GHG forcing through to 2100 (or thereabouts) are also available for them; these forced runs will be analyzed in a separate paper.

Empirical orthogonal functions (EOFs) have been widely used to analyze the space-time variability of both observed and model-generated climatic data. EOFs provide an objective method of identifying the most important modes of variability, with the first EOF accounting for (“explaining”) the largest fraction of the total space-time variability, and successive EOFs explaining progressively smaller fractions of the total variability. As shown later, when EOF analysis is applied to the model control runs, the patterns that are produced generally have little similarity to the EOF patterns produced from observational data. That is, the model-generated variability is apparently dominated by patterns (and processes) that are not dominant in nature. This is generally due to: (1) excessive winter-time variability in ice-marginal regions, which must be related to deficiencies in the sea-ice simulation or in ice-ocean–atmosphere interactions; and/or (2) excessive summertime variability over continents, which might be related to deficiencies in the land soil moisture scheme. However, this does not preclude the possibility that, embedded in the spurious model variability, there are modes of variability that correspond to observed modes of variability. In order to identify these modes of variability, we developed an alternative technique, dubbed quasi-EOF (qEOF) analysis, that specifically looks for pre-determined patterns of variability.

The qEOF modes of variability correspond to physically distinct, observed modes of variability. Two of these, the ENSO and NAO, correspond in nature to oscillations in the pressure difference between Tahiti and Darwin (the Southern Oscillation Index, or SOI) and between Gibraltar and Iceland (the North Atlantic Oscillation Index, or NAOI), respectively. We compare the correlation between these two qEOF amplitudes and the corresponding pressure indices in nature and in each of the models. To the extent that the pressure index and corresponding qEOF amplitude are correlated, maps of the correlation between local temperature variations and the SOI or NAOI should resemble the maps of the corresponding qEOF fields, so we complete our analysis by computing these maps for the observations and the models.

The qEOF analysis technique is explained in Sect. 2. Section 3 presents the results of the EOF and quasi-EOF analyses when applied to observed temperature data. Section 4 describes the model climatologies, followed by EOF and qEOF analysis in Sects. 5 and 6. Section 7 compares the leading qEOF amplitudes with key surface pressure indices. Precipitation data are analyzed in Part 2 (Harvey 2003).

2 Statistical techniques used

The EOF and quasi-EOF analysis will be applied to observed and model annual-mean and seasonal data. In the case of model data, we first compute the annual or seasonal means for the control-run data for each model. We then compute the linear trend at each grid point and subtract this trend from the yearly or seasonal grid-point data in order to remove the effects of model drift (assumed to be spurious and likely to have a spatial pattern that differs from the patterns of internally-generated variability). In the case of observations, we do not detrend the data because the observations contain a signal from anthropogenic forcing that has not varied linearly (e.g. Wigley et al. 1998), so that linear detrending would not entirely remove this signal. Rather, we believe that the EOF and qEOF analysis techniques themselves represent the best way to remove those parts of the anthropogenic signal that are not merely amplifications of the modes of internal variability, allowing us to compare the remaining variability with detrended model control run variability. Nevertheless, the question of how to best remove the anthropogenic component in the observations, and how to correctly compare the model and observed EOFs and qEOFs, is an important part of our investigation, and something to which we will return later.
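The grid-point detrending applied to the model control runs can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' code; it assumes the annual or seasonal means are stored in an array with time on the first axis (for example `(time, lat, lon)`) and contain no missing values. The function name `detrend_gridpoints` and the synthetic drift example are hypothetical.

```python
import numpy as np


def detrend_gridpoints(data):
    """Remove a least-squares linear trend from every grid point.

    data has time on axis 0 and is assumed to contain no missing values.
    The fitted intercept is removed too, so the result is anomalies about
    the trend line.
    """
    nt = data.shape[0]
    t = np.arange(nt, dtype=float)
    design = np.column_stack([np.ones(nt), t])            # intercept and slope columns
    flat = data.reshape(nt, -1)                            # (time, grid points)
    coef, *_ = np.linalg.lstsq(design, flat, rcond=None)   # one fit per grid point
    return (flat - design @ coef).reshape(data.shape)


# Illustrative control run: 0.02 °C/yr drift plus a 5-year oscillation
years = np.arange(100, dtype=float)
drift = 0.02 * years[:, None, None] * np.ones((1, 3, 4))
cycle = np.sin(2 * np.pi * years / 5.0)[:, None, None] * np.ones((1, 3, 4))
detrended = detrend_gridpoints(drift + cycle)
```

Solving all grid points in one `lstsq` call works because every point shares the same design matrix; only the right-hand side differs.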

2.1 Empirical orthogonal function analysis

We first decomposed the space-time variability of the annual-mean and seasonal-mean fields using empirical orthogonal functions (EOFs). For this purpose, we used an EOF analysis package kindly provided by Aiguo Dai and used, for example, in Dai and Wigley (2000). EOF analysis can be performed using the raw data (which involves computing a covariance matrix) or using the raw data normalized by the local standard deviation (which effectively involves computing a correlation matrix). Since the standard deviation tends to be larger at high latitudes than at low latitudes, the correlation matrix approach places more weight on the low-latitude variability. However, we have chosen to use the covariance matrix approach because amplified variability at high latitudes is an important part of real-world variability that we wish to retain, and because we also wish to be able to interpret the EOF patterns (or amplitudes) in terms of absolute temperatures. The grid-point data are multiplied by the square root of the cosine of latitude prior to computing the EOFs, in order to account for the smaller area represented by high-latitude grid points.
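A covariance-matrix EOF decomposition with square-root-of-cosine weighting can be sketched as follows. This is not the package provided by Aiguo Dai that was actually used; it is a minimal NumPy illustration, assuming gap-free anomalies in an array shaped `(time, lat, lon)` and no grid row exactly at a pole (where the weight would be zero). The function name `eof_covariance` and the synthetic test field are hypothetical. The sketch takes the singular value decomposition (SVD) of the weighted data matrix, whose right singular vectors are the eigenvectors of the covariance matrix, and divides the weights back out so the patterns are in unweighted units.

```python
import numpy as np


def eof_covariance(anom, lat, n_modes=4):
    """Covariance-matrix EOFs of anom[time, lat, lon] with sqrt(cos(lat)) weighting.

    Patterns are returned in unweighted units, so
    amplitudes[:, k, None, None] * patterns[k] is the field of mode k.
    """
    nt, nlat, nlon = anom.shape
    anom = anom - anom.mean(axis=0)                   # deviations from the time mean
    w = np.sqrt(np.cos(np.deg2rad(lat)))[:, None]     # (lat, 1) area weights
    X = (anom * w).reshape(nt, nlat * nlon)
    # Right singular vectors of X are eigenvectors of the weighted covariance
    # matrix, so the (points x points) matrix is never formed explicitly.
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    var_frac = s**2 / np.sum(s**2)                    # share of space-time variance
    patterns = Vt[:n_modes].reshape(n_modes, nlat, nlon) / w
    amplitudes = U[:, :n_modes] * s[:n_modes]         # principal-component series
    return patterns, amplitudes, var_frac[:n_modes]


# Synthetic 5° x 5° field: one dominant mode plus weak noise
lat = np.arange(-87.5, 90.0, 5.0)
lon = np.arange(2.5, 360.0, 5.0)
rng = np.random.default_rng(0)
pattern = np.cos(np.deg2rad(lat))[:, None] * np.cos(np.deg2rad(lon))[None, :]
series = rng.standard_normal(40)
field = series[:, None, None] * pattern[None] + 0.01 * rng.standard_normal((40, lat.size, lon.size))
pats, amps, frac = eof_covariance(field, lat, n_modes=2)
```

Working through the SVD rather than an explicit covariance matrix keeps memory modest on a 5° grid, which has 2592 points.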

Having computed the EOFs using the raw data, we divided the EOF fields by the maximum absolute value of the EOF field and multiplied the corresponding EOF amplitude coefficient by the same factor. The amplitude time series for each EOF therefore has units of (°C) and can be interpreted as the maximum temperature change associated with the corresponding EOF found anywhere at a given time. We use these EOF results below to identify particular modes of observed temperature variability, which we then search for in the model control-run data using quasi-EOF analysis.
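The rescaling that puts EOF amplitudes in °C can be illustrated with a small sketch. It is a hypothetical helper (`scale_eof`) written with NumPy, not code from the paper, and the toy pattern and amplitude values are made up for illustration.

```python
import numpy as np


def scale_eof(pattern, amplitude):
    """Normalize an EOF pattern by its largest absolute value.

    The amplitude is multiplied by the same factor, so the product
    amplitude[t] * pattern (the mode's temperature field) is unchanged and
    |amplitude[t]| equals the largest temperature change of the mode
    anywhere in year t.
    """
    factor = np.nanmax(np.abs(pattern))
    return pattern / factor, amplitude * factor


pattern = np.array([[0.02, -0.05], [0.01, 0.04]])   # EOF in arbitrary units
amplitude = np.array([10.0, -6.0, 2.0])            # matching amplitude series
p_scaled, a_scaled = scale_eof(pattern, amplitude)
```

In this toy case the scaled pattern peaks at -1.0 in the second grid box, so in the first year the field 10 × pattern has its largest change, -0.5, exactly where the scaled amplitude (0.5 in magnitude) says it should.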

2.2 Quasi-EOF analysis

A procedure analogous to our quasi-EOF analysis can be found in the work of Jones and Kelly (1983), Kang (1996), Tett et al. (1997), Mitchell et al. (1999), and van den Dool et al. (2000). In three of these papers, maps are presented showing the geographical pattern of the correlation or covariance between the grid point temperature anomaly (deviation from the mean) time series and the time series for the global-mean temperature anomaly. In our quasi-EOF analysis, we generate not one but a series of spatial covariance patterns. The first (which we call quasi-EOF1, or qEOF1) is based on the covariance (Eq. 1) between grid point variations and the variation of the global-mean temperature. The product of this spatial covariance pattern and the annual- and global-mean temperature anomaly (which is the qEOF1 amplitude) gives the pattern of temperature variation for that year associated with qEOF1.

This temperature variation pattern is then subtracted from the original annual (or seasonal) temperature variation pattern, and qEOF2 is computed based on the covariance between the residual temperature variation and the mean variation computed over some new, non-global reference region. The temperature averaged over the new reference region is the qEOF2 amplitude. The temperature variation associated with qEOF2 is then subtracted from the previous residual, and the process repeated in order to generate successive qEOFs, each time using a different reference region as the basis for computing the spatial correlation field. This gives a series of spatial patterns, each tied to the variability in a specific region. If the variability in that region, in turn, represents some specific mode of climate variability (such as Niño3 SSTs as an ENSO indicator), then the corresponding quasi-EOF defines the spatial character of that particular mode.

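The nth qEOF is given by

$$\mathrm{qEOF}_n(x, y) = 1.0 + \frac{\sum_i \left[\Delta T_n(x, y, t_i) - \Delta T_n(t_i)\right] \Delta T_n(t_i)}{\sum_i \Delta T_n^2(t_i)} \tag{1}$$
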
where the summation is over all years i; ΔTₙ(x, y, tᵢ) is the temperature deviation at grid point (x, y) and year tᵢ for qEOF order n; ΔTₙ(tᵢ) is the temperature change averaged over the domain used to define qEOFn (the domain is the whole globe for qEOF1). Both ΔTₙ(x, y, tᵢ) and ΔTₙ(tᵢ) are deviations from their time means. The normalization by ΔTₙ²(tᵢ) and the addition of 1.0 are for convenience of representation and interpretation. A qEOF value of 1.0 at a given grid point means that, on average, the temperature change at that grid point is the same as the temperature change over the reference region, while a qEOF value of 2.0 (for example) implies a temperature change that on average is twice the temperature change over the reference region.

The temperature change field associated with qEOFn is given by

$$qT_n(x, y, t_i) = \mathrm{qEOF}_n(x, y)\, \Delta T_n(t_i) \tag{2}$$

and the residual temperature change field, used for the next iteration, is given by

$$\Delta T_{n+1}(x, y, t_i) = \Delta T_n(x, y, t_i) - qT_n(x, y, t_i) \tag{3}$$

For calculating the first qEOF, ΔT₁(x, y, tᵢ) is the original temperature anomaly field (detrended in the case of model control-run data, as explained earlier), and ΔT₁(tᵢ) is the global average temperature anomaly in year i. Subtracting qT₁(x, y, t) in Eq. (3) essentially removes that part of the original field associated with changes in the global mean. In the next step, to obtain qEOF2, we use Eq. (1) to define that part of the residual (ΔT₂(x, y, tᵢ)) that is linked to variations over a new (sub-global) region. We then subtract this component, and successively identify variations associated with different regions.
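The iteration defined by Eqs. (1) to (3) can be sketched as below. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: anomalies are gap-free arrays shaped `(time, lat, lon)`, each reference region is supplied as a boolean `(lat, lon)` mask, and the regional mean ΔTₙ(tᵢ) is taken as a cos(latitude)-weighted average (the weighting of the regional mean is our assumption). The names `area_mean`, `qeof_step` and `qeof_analysis` are hypothetical.

```python
import numpy as np


def area_mean(field, lat, mask):
    """cos(lat)-weighted mean over the grid points where mask (lat, lon) is True."""
    w = np.cos(np.deg2rad(lat))[:, None] * mask
    return (field * w).sum(axis=(-2, -1)) / w.sum()


def qeof_step(dT, lat, mask):
    """One qEOF iteration for anomalies dT[time, lat, lon] and one reference mask.

    Returns qEOF_n(x, y) from Eq. (1), the amplitude series dT_n(t_i), and the
    residual dT_{n+1}(x, y, t_i) from Eq. (3) used for the next mode.
    """
    dT = dT - dT.mean(axis=0)                        # deviations from time means
    ref = area_mean(dT, lat, mask)                   # dT_n(t_i), the qEOF amplitude
    excess = dT - ref[:, None, None]                 # dT_n(x, y, t_i) - dT_n(t_i)
    q = 1.0 + np.tensordot(ref, excess, axes=(0, 0)) / np.sum(ref**2)   # Eq. (1)
    qT = ref[:, None, None] * q[None, :, :]          # Eq. (2)
    return q, ref, dT - qT                           # Eq. (3)


def qeof_analysis(dT, lat, masks):
    """Apply qeof_step once per reference mask, in the order given."""
    patterns, amplitudes = [], []
    for mask in masks:
        q, ref, dT = qeof_step(dT, lat, mask)
        patterns.append(q)
        amplitudes.append(ref)
    return patterns, amplitudes, dT


# Toy example: row 1 is the reference region, row 0 responds twice as strongly
lat = np.array([-10.0, 0.0, 10.0])
rng = np.random.default_rng(1)
a = rng.standard_normal(30)
dT = np.zeros((30, 3, 4))
dT[:, 1, :] = a[:, None]
dT[:, 0, :] = 2.0 * a[:, None]
dT[:, 2, :] = rng.standard_normal((30, 4))
mask = np.zeros((3, 4), dtype=bool)
mask[1, :] = True
q, ref, resid = qeof_step(dT, lat, mask)
```

In this toy case the qEOF equals 2.0 over the row that varies twice as strongly as the reference region and 1.0 inside the region itself, matching the interpretation given for Eq. (1). Because the weighted mean of the qEOF over its own reference region is exactly 1.0, the residual averages to zero there in every year, so later modes cannot pick up that region's variability again.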

For qEOF2 to qEOF4, the reference regions used for computing ΔTₙ(tᵢ) are the tropical eastern Pacific Ocean (5°S–5°N by 90–150°W, corresponding to the Niño3 region), Europe (40–70°N by 0–40°E), and Siberia (40–70°N by 70–130°E), respectively. These regions are the centers of strong anomalies associated with the El Niño, North Atlantic, and Siberian oscillations, respectively, as identified using a standard EOF analysis of the observed data (discussed later). The spatial pattern of the covariance with temperatures in these regions therefore gives the structure (i.e., teleconnection pattern) of the model or observed ENSO, North Atlantic, and Siberian components of variability.
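The three reference boxes can be turned into grid masks as in the sketch below. It assumes a regular 5° × 5° grid indexed by box centers and selects a box when its center lies inside the region; how partially covered boxes were treated is not stated, so this selection rule, the `REGIONS` dictionary and the `region_mask` helper are all hypothetical.

```python
import numpy as np

# Reference boxes from the text as ((south, north), (west, east)) in degrees,
# with longitudes in degrees east (90-150°W becomes 210-270°E).
# Boxes that straddle the prime meridian are not handled by this sketch.
REGIONS = {
    "nino3": ((-5.0, 5.0), (210.0, 270.0)),
    "europe": ((40.0, 70.0), (0.0, 40.0)),
    "siberia": ((40.0, 70.0), (70.0, 130.0)),
}


def region_mask(lat, lon, box):
    """Boolean (lat, lon) mask of grid-box centers that fall inside box."""
    (south, north), (west, east) = box
    in_lat = (lat >= south) & (lat <= north)
    lon_east = np.mod(lon, 360.0)               # accepts -180..180 longitudes too
    in_lon = (lon_east >= west) & (lon_east <= east)
    return in_lat[:, None] & in_lon[None, :]


# Assumed 5° x 5° grid with box centers at ±2.5°, ±7.5°, ...
lat = np.arange(-87.5, 90.0, 5.0)
lon = np.arange(2.5, 360.0, 5.0)
masks = {name: region_mask(lat, lon, box) for name, box in REGIONS.items()}
```

On this grid the Niño3 mask covers 2 × 12 boxes, Europe 6 × 8 and Siberia 6 × 12.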

The procedure described is similar to the “empirical orthogonal teleconnections” method recently devised and discussed by van den Dool et al. (2000). These authors propose searching for the single point that explains the maximum space-time variance of all points combined, removing that which is correlated with this point from the original space-time dataset, searching the residual data for the next most important point, and so on. Our procedure differs from that of van den Dool et al. (2000) in that we use regions rather than individual grid points as the basis for developing correlations to use in removing successive modes of variability (thereby reducing the statistical noise that might be associated with a single point), and in that we choose the correlation regions so as to coincide with observed physical modes of variability (an option also suggested by van den Dool et al. 2000).

In our analysis, we want qEOF1 to represent the spatial pattern of climatic change at decadal and longer time scales, unobscured by the possible effects of interannual ENSO, NAO, or Siberian oscillation variability, which might be partly transferred to qEOF1. For example, since ENSO causes short-term fluctuations in global-mean temperature (Wigley 2000), part of the variability associated with it could be transferred to qEOF1. To avoid or at least minimize this, we use smoothed ΔT₁(x, y, tᵢ) and ΔT₁(tᵢ) (using a 9-year running mean) prior to computing qEOF1 and qT₁(x, y, tᵢ). Thus, ΔT₂(x, y, tᵢ) is given by the original temperature deviations minus a qT₁(x, y, tᵢ) derived from smoothed data. All subsequent calculations are computed using unsmoothed ΔT₁(x, y, tᵢ). Subtracting qT₁(x, y, tᵢ) from the original data will therefore not noticeably alter the amplitude of year-to-year variations, so that qEOF2 to qEOF4 will pick up the spatial patterns associated with interannual modes of variability.
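The 9-year smoothing step can be sketched with a cumulative-sum running mean. This is an illustrative NumPy helper (`running_mean` is a hypothetical name), not the authors' code. It assumes an odd window and returns NaN for the four years at each end that lack a full window, because the text does not say how the ends of the record were treated.

```python
import numpy as np


def running_mean(x, window=9):
    """Centered running mean along axis 0 (window assumed odd).

    The (window - 1) / 2 values at each end have no full window and are
    returned as NaN (an assumption; the end treatment is not specified).
    """
    x = np.asarray(x, dtype=float)
    half = window // 2
    csum = np.concatenate([np.zeros_like(x[:1]), np.cumsum(x, axis=0)], axis=0)
    out = np.full_like(x, np.nan)
    out[half:x.shape[0] - half] = (csum[window:] - csum[:-window]) / window
    return out


# A linear trend plus a 3-year cycle: the cycle cancels over a 9-year window
t = np.arange(30, dtype=float)
trend = 0.01 * t
smooth = running_mean(trend + np.sin(2 * np.pi * t / 3.0))
```

Because the function works along the first axis, the same call smooths both the global-mean series ΔT₁(tᵢ) and the gridded field ΔT₁(x, y, tᵢ).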

We apply the qEOF analysis here to observed data and to control-run data from several AOGCMs. In a future paper we will apply the qEOF analysis, as described, to raw temperature data from the GHG and GHG + aerosol forcing runs of the same AOGCMs. For the control runs, we first detrend the data grid-point by grid-point (as noted). This removes any (presumably spurious) long-term trend (drift) in global-mean temperature and/or the spatial patterns of temperature change, which would otherwise appear in qEOF1. For this reason, we can omit qEOF1 in the analysis of model control-run data and avoid the possibility of some ENSO variability being transferred to qEOF1. Thus, in the first step with control-run data (the subject of this study), we compute the local covariances of the detrended data with the average in our El Niño region, using unsmoothed (but detrended) data (we still refer to this as qEOF2). We find that the spatial patterns for qEOFn (n ≥ 2) as computed with and without first computing qEOF1 are almost indistinguishable, but qEOF2 accounts for more of the space-time temperature variation if qEOF1 has not been first computed and subtracted from the data.

3 Application of EOF and qEOF analysis to observed temperature data

The qEOF analysis is designed to search for particular patterns of variability that are found in the observational data according to EOF analysis. Thus, a simple way to test the validity of the qEOF analysis as a means of identifying teleconnection patterns associated with particular modes of variability is to compare the observed qEOF patterns with the corresponding observed EOF patterns. For this purpose, we have used the observed temperature variation data on a 5° × 5° latitude–longitude grid for the period 1900–2000 that are available from the Climatic Research Unit (CRU) of the University of East Anglia (http://www.cru.uea.ac.uk ). In computing the EOF or qEOF fields, only those grid points with at least 30 years of data were used. We begin with an analysis of annual-mean data, then examine seasonal data.

Figure 1 compares the annual-mean EOF and qEOF pairs. Table 3 gives the square of the pattern correlations (or common variances) between corresponding EOFs and qEOFs, and the percent of the original space-time temperature variation that is accounted for by each mode of variability (the EOFs and qEOFs account for comparable amounts of variability). All spatial pattern correlations given here are computed by multiplying the grid-point data by the square root of the cosine of latitude in order to account for the smaller area represented by high-latitude grid points.
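The common variance (squared pattern correlation) between two maps can be sketched as follows. The sketch assumes a centered (Pearson) correlation computed after multiplying both fields by the square root of the cosine of latitude, and it skips grid points that are missing in either field; both choices are our assumptions, and `common_variance` is a hypothetical name.

```python
import numpy as np


def common_variance(a, b, lat):
    """Squared pattern correlation (R^2) between two (lat, lon) fields.

    Both fields are multiplied by sqrt(cos(lat)) before correlating; grid
    points that are NaN in either field are skipped.
    """
    w = np.sqrt(np.cos(np.deg2rad(lat)))[:, None]
    x, y = (a * w).ravel(), (b * w).ravel()
    ok = ~(np.isnan(x) | np.isnan(y))
    r = np.corrcoef(x[ok], y[ok])[0, 1]
    return r**2


lat = np.arange(-87.5, 90.0, 5.0)
rng = np.random.default_rng(2)
eof = rng.standard_normal((lat.size, 72))
qeof = -3.0 * eof                 # same pattern, opposite sign, different scale
qeof[0, :5] = np.nan              # a few missing grid points
```

Squaring the correlation makes the comparison insensitive to the arbitrary sign and scaling of EOF patterns, which is why an EOF and a sign-flipped qEOF of the same shape have a common variance of 1.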

Kelly et al. (1999) identified the first four EOFs of the CRU temperature dataset as corresponding to the global warming signal, ENSO, the NAO, and the Siberian Oscillation. We agree with this interpretation for at least the first three EOFs. However, EOF4, while having a maximum in Siberia, can also be viewed as an anti-phase NH–SH oscillation, with high-latitude warming over land in the NH (strongest over Siberia) associated with circum-Antarctic cooling in the SH (and vice versa).

The correlations between the observed EOF and qEOF patterns for the global warming signal and ENSO are quite high (R² values of 0.87 and 0.77, respectively; see Table 3), but drop off sharply for the NAO and Siberian Oscillation (to 0.12 and 0.05, respectively). In other words, the teleconnection pattern identified by the qEOF analysis for these two modes of variability differs markedly from that identified by a conventional EOF analysis. This reflects one or both of two constraints: first, the orthogonality imposed on the EOF patterns; and second, the selection of a single region to characterize each mode of variability in the qEOF analysis.

Figure 2 shows the seasonal EOF2 and EOF3 patterns for December–January–February (DJF), March–April–May (MAM), June–July–August (JJA), and September–October–November (SON). For EOF2, positive temperature deviations, characteristic of ENSO, occur in the eastern tropical Pacific Ocean and are least pronounced in MAM and most pronounced in SON. However, teleconnections with regions outside the tropical Pacific Ocean are weakest during JJA. There are pronounced negative deviations in the south-central US in MAM, in the northern USA and southern Canada in JJA, and in northern Russia in MAM and SON.

The classical NAO is a winter mode of atmospheric variability that produces a see-saw in temperature between western Europe and Greenland. Indeed, Slonosky and Yiou (2001) identify a quadrupole temperature structure (in-phase temperature variations in Western Europe and the eastern USA, out of phase in Greenland/eastern Canada and North Africa), and this pattern is evident in EOF3 for DJF (although the North African pole is rather weak). A similar quadrupole was identified by Stephenson and Pavan (2003) in 13 of the 17 models that they studied. MAM shows a weak Greenland/Western Europe see-saw. EOF3 for SON has a distinctly ENSO-like pattern (warm eastern equatorial Pacific, flanked by opposite anomalies in each hemisphere and in the western tropical Pacific). The main difference between EOFs 2 and 3 in SON is that positive temperature anomalies in the eastern equatorial Pacific are associated with positive temperature anomalies in European Russia for EOF3 but with negative anomalies for EOF2. Unlike EOF2, there are marked differences between the EOF3 patterns in different seasons, and none of the seasons shows a pattern as strong as the annual pattern. The annual-mean EOF pattern is a good indicator of the structure of seasonal temperature variability for EOF2 but not for EOF3.

The qEOFs are designed to pick up correlations associated with predefined centers of action. The eastern tropical Pacific Ocean was chosen as the reference region for determining qEOF2 because it is a center of important variability in the annual-mean observations, but it is not the most important center for every season. The seasonal qEOF2 fields (not shown) necessarily show strong positive deviations over the tropical Pacific Ocean, since the qEOF2 fields are determined by the correlations with temperature variations in the eastern tropical Pacific Ocean. However, the eastern tropical Pacific is not the strongest center of variability for EOF2 during MAM and is matched by other centers of variability during DJF. As a result, qEOF2 and EOF2 spatial pattern correlations are relatively low for these seasons (R² = 0.35), compared to JJA and SON (R² = 0.86 and 0.68, respectively).

Figure 3a shows the annual EOF scaling factors, while Fig. 3b shows the 5-year running means of the annual scaling factors (recall that the magnitude of the EOF scaling factor in a given year is equal to the largest temperature change anywhere associated with that EOF). EOF1, representing the global warming signal, shows an upward trend that matches the variation in global-mean temperature (R² = 0.84). EOF2, representing ENSO, shows considerable decadal-scale variability, as well as a weak downward trend from 1905 to the end of the record. There is no evidence of any unusual change during the last 20 years. The qEOF amplitude, which is numerically equal to the average temperature deviation in the eastern tropical Pacific Ocean, also fails to show any major difference between the past 20 years and the preceding record.

This would appear to contradict the work of Trenberth and Hoar (1997) and others, who found evidence of an increase in the intensity and duration of ENSO after the mid-1970s based on an analysis of the SOI. However, if we first linearly detrend the observations and then apply the EOF analysis, a distinctive upward shift in the amplitude of EOF1 (which now represents ENSO variability) is seen after the mid-1970s. This is an illustration of how decadal and longer-term trends in particular modes of temperature variability can depend on how the data are treated prior to analysis. Pre-treatment clearly affects the comparability of temperature indices (analyzed here) and pressure indices (analyzed by Trenberth and Hoar 1997). Even after detrending the temperature data, the temperature and pressure-based indices do not give entirely the same picture: the amplitude of the temperature ENSO EOF is, by the end of the record, no larger than previous peaks in the 1940s and late 1890s, whereas the SOI reported by Trenberth and Hoar (1997) shows a peak in 1940 that is about 20% weaker than the largest post-1970s peak (in 1983).

EOF3, representing warming in Western Europe and cooling in the North Atlantic Ocean and Canada in the annual mean (Fig. 1), shows weak evidence of an approximately 70-year oscillation (whose long-term significance cannot be judged because of the shortness of the record). A 70-year oscillation is consistent with the work of Schlesinger and Ramankutty (1992), who also find evidence of a 70-year oscillation in the North Atlantic region. Finally, EOF4, representing warming in northern Asia and cooling in parts of eastern Canada, shows a strong downward trend during the first half of the record, followed by an equally strong upward trend during the second half of the record. This implies that the rate of warming in northern Asia was considerably less than the global-mean rate of warming during the first half of the record but considerably greater during the second half, whereas eastern North America experienced minimal warming during the past 70 years. This is consistent with direct observations of temperature trends in these regions.

Figure 4 shows the latitudinal variation of the zonally averaged annual-mean EOF and qEOF fields. Results are plotted only for latitudes with data extending back at least 50 years over at least 50% of the grid squares. The qEOFs are calculated such that the mean value of each qEOF is 1.0 °C, averaged over the domain used to compute ΔTₙ(tᵢ). Since the reference domain for qEOF1 is the entire globe (exclusive of grid points with fewer than 30 years of data), the zonal-mean qEOF1 values shown in Fig. 4 represent the zonal-mean temperature changes scaled up to a global-mean warming of 1.0 °C. These show a minimum in warming at low latitudes, increasing with latitude to about twice the low-latitude value by 40°S and 50°N (the zonal means poleward of these latitudes have decreasing significance due to a decrease in the number of grid points with adequate data). This pattern, with an amplification of a factor of two toward high latitudes, is consistent with the transient response of the AOGCMs, as will be discussed in a subsequent study. The second qEOF, representing ENSO variability, has a peak zonal-mean amplitude of about 0.6 °C (near the equator) and minima of about –0.15 °C near 40°S and 40°N. qEOF3 and qEOF4 show zonal-mean peaks of 0.4 °C and 0.3 °C, respectively, around 60°N. Both qEOFs drop to near zero between 30°S and 30°N, then fall to minima of –0.3 °C (qEOF3) and –0.2 °C (qEOF4) near 60°S, implying inversely correlated Northern and Southern Hemisphere changes around 60° latitude. This anti-phase behavior is also seen in EOF4, as discussed already.

Fig. 4 Latitudinal variation in the zonal-mean value of (a) the first four EOFs and (b) the first four qEOFs, and (c) comparison of EOF1, qEOF1, and the linear trend (from 1900 to 2000) in zonal-mean temperature, all as computed from observed annual-mean temperature data for the period 1900–2000. In (c), EOF1 has been normalized to give a global-mean value of 1.0 °C, which is comparable to the global-mean value of the linear trend. The global-mean value of qEOF1 is 1.0 °C by definition.

Figure 4c directly compares the zonal mean of EOF1 and qEOF1, along with the 100-year temperature change computed from the linear trend over the period 1900–2000. EOF1 shows a hemispheric asymmetry (a greater mid-latitude peak in the NH), while for qEOF1 and the linear trend the NH and SH mid-latitude peaks are virtually the same.
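The coverage rule used for Fig. 4 (latitudes kept only where at least 50% of the grid squares have at least 50 years of data) can be sketched as follows. We read "data extending back at least 50 years" as at least 50 non-missing years per grid square; that reading, the NaN convention for missing observations, and the name `zonal_mean_with_coverage` are assumptions for illustration.

```python
import numpy as np


def zonal_mean_with_coverage(field, obs, min_years=50, min_frac=0.5):
    """Zonal mean of field[lat, lon], masked where observational coverage is poor.

    obs[time, lat, lon] holds the observations, with NaN for missing values.
    A latitude row is kept only if at least min_frac of its grid squares have
    at least min_years non-missing years.
    """
    years = np.sum(~np.isnan(obs), axis=0)                 # data years per square
    row_ok = np.mean(years >= min_years, axis=1) >= min_frac
    n = np.sum(~np.isnan(field), axis=1)                   # valid squares per row
    total = np.nansum(field, axis=1)
    zm = np.where(n > 0, total / np.maximum(n, 1), np.nan)
    return np.where(row_ok, zm, np.nan)


obs = np.full((60, 2, 4), np.nan)
obs[:, 0, :] = 0.0          # row 0: every square has 60 years of data
obs[:, 1, 0] = 0.0          # row 1: only 1 of 4 squares has data
field = np.array([[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]])
zm = zonal_mean_with_coverage(field, obs)
```

Within a single latitude row all grid squares have the same area, so a plain average over longitude suffices; no cosine weighting is needed for the zonal mean itself.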

3.1 Impact of prior detrending of the data

Many of the AOGCMs to be analyzed here have substantial regional trends in the control runs (particularly at high latitudes) in spite of the use of flux adjustment. We have assumed that the relatively large magnitudes of these regional trends preclude their being physically realistic manifestations of internal variability. For this reason, the EOF analyses of the model data are applied to temperatures that have been linearly detrended grid-point by grid-point. The EOFs computed from the detrended data thus contain no contribution from long-term (100 years and longer) temperature trends. The observed temperatures, on the other hand, do contain a long-term trend (the global warming signal) that is contained largely in the first EOF. Thus, we would expect the first model EOF to correspond to the second observed EOF (which represents ENSO variability), the second model EOF to correspond to the third observed EOF, and so on.

Alternatively, we could detrend the observed data in the same way that we detrend the model data, and directly compare the corresponding EOFs. However, local observed trends have not been uniform, and the forcing causing global warming has varied nonlinearly, with an acceleration during the last few decades. Thus, even after linearly detrending the observed data, there will be an important component of variability related to external forcing that will be represented in one or more of the first few observed EOFs.

To test the effect of linearly detrending the observed data on the EOFs, we computed the common variance between annual-mean EOFn of the detrended data and annual-mean EOFn+1 of the raw data for the first three pairs. If detrending largely removes the signal picked up by EOF1 of the raw data (which we identify here as the externally forced global warming signal), then detrending should have little effect on the patterns associated with the subsequent EOFs of the raw data. The common variances are as follows (with the first index referring to the detrended data): EOF1/2: 0.874, EOF2/3: 0.068, EOF3/4: 0.006. Detrending has little effect on the ENSO mode (EOF1 of the detrended data, EOF2 of the raw data), perhaps because it is the strongest of the natural modes. However, detrending significantly alters the other modes. This is because, in the detrended data, the global warming signal is no longer the strongest mode, having been supplanted by the ENSO mode (which is now EOF1). Moreover, the global warming signal is only crudely removed by detrending, and now appears as parts of EOF2 and EOF3. Hence, EOF2 and EOF3 of the detrended data cannot be expected to match EOF3 and EOF4 of the raw data. We therefore conclude that it is not appropriate to linearly detrend the observed data prior to performing an EOF analysis. Rather, we believe that EOF1 of the raw data is a more effective means of separating the anthropogenic global warming signal from the other modes, so that the appropriate comparisons are between observed EOFn+1 and control-run EOFn.

This is not to imply that EOF1 captures all of the anthropogenic signal. It is possible that there is some spill-over of the anthropogenic signal onto higher observed EOFs, and this could be a reason for some of the differences between model and observed EOFs, noted later.

In the case of the qEOFs, our procedure for calculating qEOF1 removes that portion of the local trend that is correlated with the trend in the global-mean temperature. As with the EOF case, this is not a perfect method for removing the anthropogenic signal, owing to the signal's nonlinearity and spatial heterogeneity. If the local temperature rises more (or less) strongly early (or late) in the time series than the average correlation at that grid point with the global-mean temperature would imply, then a local long-term trend will remain after qEOF1 is removed. In the case of CCSR (a model with substantial drift in the control run), we find that qEOF1 removes, on average, about half of the grid-point trend. Conversely, linearly detrending the data first, grid point by grid point, does not eliminate qEOF1: short-term fluctuations in the global-mean temperature remain, and these fluctuations have a spatial structure. This “residual” qEOF1 is modestly correlated with the “raw” qEOF1 (i.e., the qEOF1 based on the raw data): the common variance between the two is 0.290 for the observed temperature data. However, prior detrending has a negligible effect on qEOF2 and higher qEOFs. For observed temperature, the common variances between qEOFs computed from the raw data and the same qEOFs computed from detrended data are: qEOF2: 0.992, qEOF3: 0.983, qEOF4: 0.980. We therefore conclude that it is valid to compare model qEOFs (computed from detrended data) with observed qEOFs (computed from raw data) for qEOF2 and higher. This comparability is an additional advantage of qEOF analysis over EOF analysis.

4 Model climatology and variability

In order to provide a context for the presentation of the most important model EOF and qEOF fields, we briefly compare the model climatological temperatures and variability with the corresponding observations. Figure 5a compares the latitudinal variation of the zonally averaged surface air temperature for each model, averaged over all years of the linearly detrended control runs, with the observed (1961–1990) climatology (Jones et al. 1999). Overall, the models do well in simulating the observed zonal structure of annual-mean temperature. Table 4 lists the squared pattern correlation (R²) between the model and observed climatological annual-mean temperatures (the model data were interpolated to the observational latitude–longitude grid in order to compute the pattern correlations). The squared pattern correlations (with grid-point values weighted by the square root of the cosine of latitude) range from 0.88 to 0.91.

Figure 5b compares the model and observed standard deviations of the zonal-mean annual-mean temperature. In this case, the observed values are computed from the gridded time series data available from the Climatic Research Unit at the University of East Anglia (http://www.cru.uea.ac.uk) and documented by Jones et al. (1997, 2001). We have used data over the period 1900–2000. This temperature dataset is a composite of surface air temperature over land and sea surface temperature over the oceans, and so is not strictly equivalent to the model data over ocean areas. However, our main conclusion, that the models significantly and consistently overestimate the interannual variability of zonal-mean temperature in middle latitudes of the Northern Hemisphere, will not be altered by the small difference between sea surface and surface air temperature variability at the same grid point. We will see later that the interannual temperature variability in specific longitudinal sectors at middle latitudes is about right. The overestimation of zonal-mean variability by the models is due to an inadequate contrast between concurrent positive and negative temperature deviations in different longitudinal sectors, so that there is too little cancellation of the regional deviations when the zonal-mean deviation is computed.

Figure 6 compares the time series of detrended control-run global- and annual-mean temperatures for the eight models, while Table 4 compares the model and observed standard deviations. As seen from Figure 6 and Table 4, CCSR, HadCM2, and HadCM3 have the largest temperature variability.

5 EOF analysis of AOGCM temperature data

Figure 7 shows EOF1 and EOF2 for the detrended control-run annual-mean temperatures for the eight models. Figure 8 gives the percent of annual variance explained by the first three EOFs for all eight models, as well as for the corresponding EOFs (2 to 4) of the observed temperature data. Comparison of Fig. 7 with Fig. 1 shows that the leading model modes are not particularly realistic, while Fig. 8 shows that the fraction of the model temperature variation explained by these unrealistic modes is generally greater than the fraction of the observed temperature variance explained by the observed modes of variability.
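EOFs and the percentages of variance of the kind shown in Fig. 8 can be obtained from a singular value decomposition of the anomaly matrix. The sketch below is one standard way to do this, not necessarily the implementation used here; the square-root-of-cosine-latitude weighting is an assumption borrowed from the pattern-correlation procedure described in the text, and latitudes of exactly ±90° must be excluded because the weights are divided out again. The function name is hypothetical.

```python
import numpy as np

def eofs(anom, lat, n_modes=3):
    """Leading EOFs of an anomaly field via singular value decomposition.

    anom : array (n_years, n_lat, n_lon) with the time mean already removed
    lat  : array (n_lat,) in degrees (poles excluded)
    Returns (patterns, pcs, pct_var); pct_var is percent of total variance.
    """
    nt, ny, nx = anom.shape
    w = np.sqrt(np.cos(np.deg2rad(lat)))[:, None] * np.ones((ny, nx))  # assumed weighting
    X = (anom * w).reshape(nt, ny * nx)                 # time x space data matrix
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    pct_var = 100.0 * s**2 / np.sum(s**2)               # variance fraction of each mode
    patterns = Vt[:n_modes].reshape(n_modes, ny, nx) / w  # undo weighting for display
    pcs = U[:, :n_modes] * s[:n_modes]                  # principal-component time series
    return patterns, pcs, pct_var[:n_modes]
```

Summing `pcs[:, k]` times `patterns[k]` over all modes reconstructs the original anomalies, so the percentages refer to the weighted space-time variance.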

Many of the model EOF fields shown in Fig. 7 consist of strong, closely spaced “bulls-eyes” of alternating sign, generally in mid- or high-latitude regions. This suggests that the models are unable to simulate the spatial scales of teleconnections realistically. Similar bulls-eye patterns are seen in many of the EOF3 and EOF4 fields. The significance of the EOF3 and EOF4 patterns is doubtful: both EOFs explain similar amounts of variance, so the detailed fields are likely to be sensitive to minor (noise) variations in the original data (Preisendorfer 1988). Nevertheless, we include these EOFs in the analysis that follows.
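One common way to make the "similar amounts of variance" argument quantitative, not used in this study, is North's rule of thumb: an eigenvalue λ has an approximate sampling error of λ√(2/N) for N independent samples, and neighbouring EOFs whose error bars overlap are effectively degenerate. The sketch assumes N equals the number of years, which overstates the effective sample size for serially correlated data; the function name is hypothetical.

```python
import numpy as np

def degenerate_pairs(eigvals, n_samples):
    """Flag adjacent EOF pairs that North's rule of thumb treats as unresolved.

    eigvals   : eigenvalues (or percent variances) sorted in descending order
    n_samples : effective number of independent samples (assumed = years here)
    Element k of the result is True when the error bars of modes k and k+1 overlap.
    """
    lam = np.asarray(eigvals, dtype=float)
    err = lam * np.sqrt(2.0 / n_samples)   # approximate sampling error of each eigenvalue
    gaps = lam[:-1] - lam[1:]              # spacing between neighbouring eigenvalues
    return gaps < err[:-1] + err[1:]
```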

Table 5 gives the squared pattern correlations between observed EOFs 2 to 4 and the model EOF having the highest correlation with each observed EOF (generally, model EOFn is most closely correlated with observed EOFn+1, but exceptions occur and are listed in Table 5). To compute the pattern correlations, we first interpolated the model EOFs (or qEOFs) to the same 5° × 5° latitude–longitude grid as the observational data, then multiplied the grid-point data by the square root of the cosine of latitude. Pattern correlations are computed using only grid points with observed EOF values, which correspond to those grid points with at least 30 years of data. The results are rather disappointing: most of the pattern correlations correspond to common variances of less than 30%. Observed EOF2 (the ENSO pattern) is most closely correlated with the leading mode of model variability in five of the eight models, but in only two models (HadCM2 and PCM) is this mode at all similar (i.e., R² > 0.3) to the observed pattern of ENSO variability. The qEOF results (qEOF2), also given in Table 5, are better, with pattern R² values ranging from 0.29 (CCSR) to 0.53 (PCM).
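The pattern-correlation procedure just described can be sketched as follows, assuming both fields are already on the common 5° × 5° grid and that grid points lacking observed values are marked NaN. The text does not say whether spatial means are removed before correlating, so the use of the ordinary centred (Pearson) coefficient is an assumption; the function name is hypothetical.

```python
import numpy as np

def common_variance(model, obs, lat):
    """Squared pattern correlation (R^2) between two fields on the same lat-lon grid.

    model, obs : arrays (n_lat, n_lon); NaN in obs marks points with too few years
    lat        : array (n_lat,) in degrees
    """
    w = np.sqrt(np.cos(np.deg2rad(lat)))[:, None]   # sqrt(cos(latitude)) weighting
    a = (model * w).ravel()
    b = (obs * w).ravel()
    ok = ~np.isnan(a) & ~np.isnan(b)                # keep points valid in both fields
    r = np.corrcoef(a[ok], b[ok])[0, 1]             # centred Pearson correlation (assumed)
    return r**2
```

Because the value is squared, a model pattern of the opposite sign to the observed one still scores highly, which is appropriate for EOFs whose sign is arbitrary.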

For observed EOF3 (NAO), only one model (CCC) has an EOF that shares more than 30% common variance with the observed NAO pattern. However, rather than appearing in the model as the second most important mode of variability (i.e., as model EOF2), the NAO-like mode appears as EOF1 in CCC. Nevertheless, it is encouraging that most models show an EOF with an NAO-like structure straddling the North Atlantic, as either the first (CCC, CCSR) or third (ECHAM3, ECHAM4, HadCM2, HadCM3, PCM) EOF, and with either a dipole (CCC, ECHAM4, HadCM2, PCM) or quadrupole (CCSR, ECHAM3, HadCM3) structure.

For the third most important observed mode (EOF4 or qEOF4), the Siberian oscillation, only one model (HadCM2) has an EOF pattern that shares more than 20% common variance with the observed mode. In other words, when assessed using traditional EOFs, the observed Siberian oscillation is never a recognizable component of model internal variability. As noted earlier, in the observed EOF analysis this mode is more properly referred to as an inter-hemispheric mode of variability, so what we are seeing is a failure of the models to exhibit variability that is out of phase between the hemispheres. The situation is greatly improved when variability modes are identified using qEOFs (for which the terminology “Siberian oscillation” is more appropriate for qEOF4). For qEOF3 and qEOF4, the model modes share 41–50% and 43–56% common variance, respectively, with the corresponding observed modes.

5.1 Seasonal EOF patterns

We also examined the seasonal EOF1 patterns for each of the models, and found that: (1) there is no resemblance to the observed ENSO pattern (shown in Fig. 2) in any season for CCC, CSIRO, and ECHAM3; (2) for CCSR, there is a weak ENSO pattern in SON, but nothing close to the observed seasonal ENSO pattern in any other season; (3) for ECHAM4 and HadCM2, the most ENSO-like pattern occurs during NH spring; and (4) for HadCM3 and PCM, there is an ENSO pattern in NH spring and fall, with reasonable spatial teleconnections (a weak ENSO pattern is also evident during NH summer in PCM). The seasonal EOF1 patterns are shown for HadCM3 and PCM in Fig. 9. The negative regions over North America in DJF and MAM and over Siberia in NH fall in PCM match the observations well.

An NAO-like temperature variation structure is evident in EOF1 during DJF (and sometimes also during MAM) in all of the models except CSIRO. In the case of ECHAM3, ECHAM4, HadCM3, and PCM, a quadrupole structure occurs, as is evident from Fig. 9 for HadCM3 and PCM. In PCM, this structure persists into MAM, and appears along with the ENSO structure in the tropical Pacific.

The absence of an ENSO structure in EOF1 during DJF in all the models except HadCM2 is not necessarily due to the absence of this mode of oscillation in the models during this season; rather, it appears (except for CSIRO) to result from the NAO mode of variation being strongest during this season. As discussed later, this mode is too strong in five of the eight models, while the ENSO mode is too weak in all the models except HadCM2. Because of this, EOF1 picks up the NAO mode instead of the ENSO mode. Thus, the two most important observed modes of variability are present in EOF1 to some extent in most of the models, depending on the season, but are combined into a single mode of variability. This often makes the spatial correlation between the model and observed EOFs rather poor because, in the observations, the ENSO and NAO modes are more clearly separated into different EOFs.

Yuan and Martinson (2001) and Liu et al. (2002) discuss the “Antarctic dipole”, an out-of-phase oscillation seen in NCEP-NCAR reanalysis surface air temperature at 60°S, with one pole centered on the Antarctic Peninsula (at 60°W) and the other centered at 140°W. The Antarctic dipole is strongly linked to ENSO. Cai and Watterson (2002) also find an Antarctic dipole, in EOF3 of annual-mean and seasonal 500 mb heights in the Southern Hemisphere (also from NCEP-NCAR reanalysis). They found a similar oscillation in CSIRO pressure variations, and a similar structure is seen here in annual-mean EOF2 of surface temperature in CSIRO and HadCM2 (see Fig. 7). CCSR also shows an Antarctic dipole structure, with 100° of longitude separating the two poles, but shifted into the eastern hemisphere. In CCSR this dipole is strongest during JJA, when it appears in EOF1 rather than in EOF2 (as in the annual-mean data). In PCM the dipole does not appear in either annual-mean EOF1 or EOF2 (Fig. 7), but a very strong and correctly positioned dipole appears in JJA EOF1 (Fig. 9). CCC shows a weak Antarctic dipole during JJA. The other models do not show this dipole in either annual- or seasonal-mean EOFs.

5.2 Zonal mean of the annual EOF fields

The differences between the models and observations are highlighted in Fig. 10, which compares the latitudinal variation in the zonal means of observed EOFs 2 to 4 with the model EOF that has the highest spatial correlation with each of the observed EOFs. For observed EOF2, only HadCM2 and HadCM3 show any similarity with the observations, and even in these models the agreement breaks down north of 40°N. For observed EOF3, most of the models show the high-latitude peak in the NH, generally displaced to the south. For the SH, there are no model–observed similarities. For observed EOF4, four models show some similarities in the midlatitudes of the NH, but model–observed similarities are generally poor.

Fig. 10 Latitudinal variation in the zonal-mean value of observed EOFs 2 to 4, and of the model EOF having the highest pattern correlation with each of these three observed EOFs. The observed EOFs are computed from raw data, while the model EOFs were computed from linearly detrended control-run data. The model EOFs shown with each observed EOF are listed in Table 5.

6 Quasi-EOF analysis of AOGCM temperature data

EOF analysis asks the question, “What are the dominant modes of variability in the space-time data array?” The qEOF analysis asks the question, “To what extent are specific patterns of variability, defined a priori from observations, present in the model space-time data array?” Since the qEOF analysis has been constructed to seek the dominant patterns of variability that have been identified in the observations using EOF analysis, it is not surprising that the EOF and qEOF analyses give essentially the same results when applied to observed data. However, since the models examined here do not replicate or separate the observed modes of variability well, it is equally unsurprising that the qEOF and EOF analyses yield quite different results when applied to the model data. With model data, the advantage of qEOFs is that they allow us to determine how strongly observed modes of variability are represented in the model results.

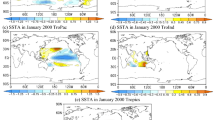

Figure 11 shows annual-mean qEOF patterns for qEOFs 2 to 4 for each model, corresponding to the ENSO, NAO, and Siberian Oscillation modes of variability. When these patterns are compared with the corresponding observed patterns, we can see how well the models simulate the observed teleconnection structures associated with particular modes of variability. Table 5 quantifies this comparison by giving the pattern correlations between the model and observed modes (although it should be noted that at least moderate R² values are guaranteed, because the patterns are constrained to be similar near the mode “focus” region). Figure 12 compares the latitudinal variation in the zonal-mean qEOF magnitude in the models with that of the observations.

All three natural modes of variability can be seen in the model control runs to some extent. This means, for example, that when the temperature is warmer than average in the eastern tropical Pacific Ocean (ENSO mode, qEOF2), there is a spatial pattern of positive and negative temperature deviations around the globe that partly resembles (R² = 0.29 to 0.53) the observed patterns of temperature variation associated with a warm tropical eastern Pacific Ocean. Five of the models (CCC, CCSR, CSIRO, ECHAM3, HadCM2) show much more extensive ENSO teleconnection patterns than seen in observed qEOF2. Except for HadCM2, this result is different from the conclusion that might be drawn from conventional EOF analysis.

For qEOF3 and qEOF4, the R² values between model and observations tend to be higher and more consistent than for qEOF2, perhaps because the observed qEOFs have a simpler (and easier to simulate) structure. Without exception, the correlation between model and observed qEOFs is higher (usually substantially so) than that between the corresponding model and observed EOFs. For qEOF3 (the NAO mode), the models uniformly show a stronger Greenland/Europe dipole than is observed, perhaps because this mode is more uniform across the seasons in the models than in the observations. Many of the models show a weak anti-phase relationship between warming in Europe and high southern latitude cooling, as seen in the observed qEOF3 (Fig. 1).

In contrast to the EOF results, the zonal means of the model qEOFs agree well with the observations; for qEOF2, both the models and observations show a maximum centered at the equator, flanked by minima at 35°S and 35°N. The maxima in the zonal-mean values are close to those observed in all of the models for qEOF3 and qEOF4, but significantly too large in many models for qEOF2. The latter results from an inadequate anti-phase equatorial temperature anomaly outside the eastern and central equatorial Pacific in most models.

Figure 13 compares the percentage of space-time temperature variation accounted for by these three qEOF patterns in the models and in the linearly detrended observations. (When the observations are detrended, qEOFs 2 to 4 account for a larger fraction of the space-time variation than when the observed data are not first detrended, and it is more appropriate to compare these larger fractions with the fractions accounted for by the model qEOFs.) In all of the models except HadCM2, the ENSO-like variability is too small (accounting for about 3% of the total variability in four of the models, compared to 8% in the observations). For the other two modes, the model variability is comparable to that observed.
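The fraction of space-time variation accounted for by one qEOF can be estimated by reconstructing the field as the qEOF pattern multiplied by its amplitude time series and comparing the residual variance with the total. This is a minimal sketch assuming cos(latitude) area weighting and anomalies with the time mean removed; the weighting actually used is not stated in this section, and the function name is hypothetical.

```python
import numpy as np

def fraction_explained(anom, pattern, amp, lat):
    """Fraction of area-weighted space-time variance captured by pattern(x) * amp(t).

    anom    : (n_years, n_lat, n_lon) anomalies with the time mean removed
    pattern : (n_lat, n_lon) qEOF pattern
    amp     : (n_years,) qEOF amplitude (reference-region mean anomaly)
    lat     : (n_lat,) latitudes in degrees
    """
    w = np.cos(np.deg2rad(lat))[:, None] * np.ones(anom.shape[1:])  # assumed area weights
    recon = amp[:, None, None] * pattern[None, :, :]                 # rank-one reconstruction
    total = np.sum(w * anom**2)
    resid = np.sum(w * (anom - recon)**2)
    return 1.0 - resid / total
```

For a regression-based pattern the residual at each grid point is uncorrelated with the amplitude, so this value equals the weighted variance of the reconstruction divided by the total variance.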

Finally, Fig. 14 compares the standard deviations of the interannual qEOF amplitudes for the eight models and for the observations on a seasonal basis (recall, the qEOF amplitude in a given year is simply the average temperature deviation in that year averaged over the appropriate reference region). Depending on the overall model variability, a given model could have a realistic qEOF amplitude variability but account for too small or too large a fraction of the total variability, the quantity shown in Fig. 13.
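Because each qEOF amplitude is the mean temperature deviation over its reference region, the amplitude series and its standard deviation follow from an area-weighted box average. The sketch uses the standard Niño3 box (5°S–5°N, 150°W–90°W) as an example; the rule for deciding which grid cells fall inside the box, the cos(latitude) weights, and the function name are assumptions.

```python
import numpy as np

def box_mean_series(temp, lat, lon, lat_range, lon_range):
    """Area-weighted mean anomaly over a latitude-longitude box for each time step.

    temp : (n_time, n_lat, n_lon); lat in degrees; lon in degrees east (0-360)
    A grid cell is counted if its centre lies inside the box (assumed rule).
    """
    anom = temp - temp.mean(axis=0)
    in_lat = (lat >= lat_range[0]) & (lat <= lat_range[1])
    in_lon = (lon >= lon_range[0]) & (lon <= lon_range[1])
    w = np.cos(np.deg2rad(lat))[:, None] * (in_lat[:, None] & in_lon[None, :])
    return (anom * w).sum(axis=(1, 2)) / w.sum()

# Nino3 box: 5S-5N, 150W-90W, i.e. 210E-270E in a 0-360 longitude convention
NINO3 = ((-5.0, 5.0), (210.0, 270.0))
```

Applying the function to seasonal-mean fields (for example DJF means) and taking `.std(ddof=1)` of the result gives seasonal amplitude standard deviations of the kind plotted in Fig. 14.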

The amplitude of qEOF2 is, by definition, the Niño 3 temperature anomaly, and in most models its variability is too small and lacks the correct seasonal structure (usually showing no seasonal structure at all). HadCM3 and PCM have the correct annual-mean variability but an incorrect seasonal cycle. These results are consistent with the findings for specific models, summarized in Table 1, by Flato et al. (2000), Timmermann et al. (1999), Bacher et al. (1998), Collins (2000), and Washington et al. (2000). For the NAO mode, temperature variability is too large in most models (especially in CCSR), but has the correct seasonality. The Siberian temperature variation tends to be too large in all models, especially in CCSR. In CCSR the maximum variability occurs in MAM rather than in DJF (as observed), while in HadCM2 the summer variability is too large relative to the winter variability. Thus, no single model simulates a realistic variability magnitude and seasonal structure for all three qEOF modes. The anti-phase behavior of high-latitude temperature fluctuations in the two hemispheres, seen in observed qEOFs 3 and 4, is either absent or very weak in the model qEOFs.

7 Comparison with pressure indices

We have identified temperature qEOF2 and qEOF3 with the ENSO and the NAO, respectively. As a further test of the model performance compared to observations, and as a further means of comparing different models, we have computed the time series of annual-mean Southern Oscillation Index (SOI) and North Atlantic Oscillation Index (NAOI) for each of the models. The SOI we employ is given by the surface pressure at Tahiti (17.5°S, 149.6°W) minus the surface pressure at Darwin (12.4°S, 130.9°E), while the NAOI is given by the pressure at Gibraltar (36°N, 5.5°W) minus the pressure at Reykjavik, Iceland (64°N, 22°W). (We choose not to normalize these pressure indices in order to provide a more stringent test of the models.) For the models we use the control-run surface pressures (which are available from the IPCC website) at the grid points closest to the four sites given, while for observations we use the monthly pressure data at these four sites for the period 1900–2000 that are available from the aforementioned Climatic Research Unit website.
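Selecting the model grid points closest to each site and forming the unnormalized pressure differences can be sketched as follows. Site longitudes are signed (west negative) as in the list above and are matched on a 0–360° model grid by angular distance; choosing the nearest latitude and the nearest longitude independently, rather than the nearest point by great-circle distance, is a simplifying assumption, and the function names are hypothetical.

```python
import numpy as np

def nearest_index(grid, value, periodic=False):
    """Index of the grid coordinate closest to value (longitudes wrap if periodic)."""
    d = np.asarray(grid, dtype=float) - value
    if periodic:
        d = (d + 180.0) % 360.0 - 180.0   # signed angular distance in [-180, 180)
    return int(np.argmin(np.abs(d)))

def station_difference(pres, lat, lon, site_a, site_b):
    """Pressure at the grid point nearest site_a minus that nearest site_b.

    pres : (n_time, n_lat, n_lon); sites given as (latitude, longitude).
    """
    ia, ja = nearest_index(lat, site_a[0]), nearest_index(lon, site_a[1], periodic=True)
    ib, jb = nearest_index(lat, site_b[0]), nearest_index(lon, site_b[1], periodic=True)
    return pres[:, ia, ja] - pres[:, ib, jb]

# Site coordinates from the text as (latitude, longitude); south and west are negative
TAHITI, DARWIN = (-17.5, -149.6), (-12.4, 130.9)
GIBRALTAR, REYKJAVIK = (36.0, -5.5), (64.0, -22.0)
```

With these definitions, `station_difference(p, lat, lon, TAHITI, DARWIN)` gives the SOI and `station_difference(p, lat, lon, GIBRALTAR, REYKJAVIK)` the NAOI.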

Table 6 gives the mean and standard deviation of the annual-mean SOI and NAOI for the eight models and for observations. For the SOI, the model pressure differences range from 70% too small to 2.4 times too large, with only HadCM2 having a result similar to observations. Thus, all models bar HadCM2 have serious errors in the trans-Pacific Ocean pressure gradient. In terms of inter-annual variability, all models underestimate this parameter. Even the most variable model (HadCM2) has an SOI standard deviation that is only three-quarters of that observed.

For the NAOI, the average model Gibraltar-minus-Reykjavik pressure difference ranges from less than half of that observed to almost twice that observed. For about half of the models, however, the standard deviation is within 10% of that observed. The ranking of the models by SOI standard deviation is not the same as their ranking by the standard deviation of Niño 3 temperature (shown in Fig. 14a). For example, ECHAM4 and PCM have a temperature variability close to that observed but an SOI variability less than half that observed. ECHAM3 has a relatively high NAOI variability, but relatively little western European temperature variability.

Also given in Table 6 are the squared correlations between the SOI time series and the corresponding reference-region temperature (qEOF2 amplitude) time series, for the models and for observations, along with the corresponding NAOI results. In the observed data, the squared SOI–qEOF2 time series correlation is 0.61. Two of the models, CCC and HadCM2, show an SOI–qEOF2 correlation that is slightly stronger than observed, but in the other models the correlation is as little as one third of that observed. These poor correlations, however, could be due to the centers of pressure variability in the models being located slightly differently from those in the observations. To test this hypothesis, we considered the 5 × 5 block of grid points (5 in longitude by 5 in latitude) centered on the grid cell containing Tahiti and the corresponding block centered on the cell containing Darwin (25 grid points each), computed all possible pressure differences between these two sets of points (a total of 625), and computed the correlation between each of these time series and the qEOF2 time series. The maximum correlation so obtained is given in brackets in Table 6. In every case, the maximum correlation is comparable to (and generally greater than) the observed correlation.
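The search over alternative pressure differences can be sketched as a brute-force loop over all pairs of cells drawn from the two 5 × 5 blocks. This is an illustrative implementation under stated assumptions (longitude indices wrap, latitude rows falling off the grid are dropped, and the squared correlation is maximized so that the sign of the relationship is ignored); the function name and interface are hypothetical.

```python
import numpy as np
from itertools import product

def max_index_correlation(pres, ia, ja, ib, jb, target, half_width=2):
    """Best squared correlation between a shifted pressure difference and target.

    Searches every pair of grid cells drawn from the (2*half_width + 1)^2 blocks
    centred on (ia, ja) and (ib, jb); half_width=2 gives 25 x 25 = 625 pairs.
    Returns (r_squared, ((i1, j1), (i2, j2))).
    """
    n_lat, n_lon = pres.shape[1:]
    offsets = range(-half_width, half_width + 1)

    def block(i0, j0):
        # Longitude wraps around; latitude indices outside the grid are skipped
        return [(i0 + di, (j0 + dj) % n_lon)
                for di, dj in product(offsets, offsets) if 0 <= i0 + di < n_lat]

    best_r2, best_cells = -1.0, None
    for cell_a, cell_b in product(block(ia, ja), block(ib, jb)):
        if cell_a == cell_b:          # identical cells give a zero series; skip
            continue
        diff = pres[:, cell_a[0], cell_a[1]] - pres[:, cell_b[0], cell_b[1]]
        r = np.corrcoef(diff, target)[0, 1]
        if r * r > best_r2:
            best_r2, best_cells = r * r, (cell_a, cell_b)
    return best_r2, best_cells
```

The same function serves for the 625 alternative NAO indices by centring the blocks on the cells containing Gibraltar and Reykjavik and passing the qEOF3 amplitude as `target`.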

One might suspect that the poor SOI–qEOF2 time series correlations in most models using the original SOI could be related to errors in the tropical pattern of sea surface temperature (SST). This does not appear to be the case. We have computed the deviations in model climatological surface air temperature (which will track SST) from the ocean-only 30°S–30°N mean, and compared these with the deviations in the Jones et al. (1999) observed climatology (where temperatures over ocean regions are SSTs). We find that the differences between the model and observed deviations are less than 1 K everywhere, or almost everywhere, in all of the flux-adjusted models. In the two models without flux adjustment (HadCM3 and PCM), the discrepancies between the model and observed deviation fields are less than 1 K everywhere except in upwelling regions, where discrepancies in excess of 4 K can occur. In spite of the poorer SST simulations in these models, however, their SOI–qEOF2 correlations are not unusual relative to those in the flux-adjusted models.

The NAOI–qEOF3 time series correlation in the observed data is much weaker (R² = 0.33) than the SOI–qEOF2 correlation (R² = 0.61), and this is echoed in the model results. A comparable NAOI–qEOF3 correlation is seen in CCC, CCSR, and PCM. After generating 625 alternative NAO indices for each model (in a manner analogous to that for the SOI), the maximum correlation is comparable to or greater than the observed correlation for all models except CSIRO and HadCM3, where the maximum correlations are about half the observed correlation. This suggests that, in these two models, processes other than North Atlantic pressure fluctuations are the cause of NAO-like temperature variability.

We also computed year-by-year time series of SOI and NAOI values for each season, and correlated these with the corresponding seasonal qEOF amplitudes. For the observations, the SOI–qEOF2 correlation is strongest for SON. In three models (CCSR, ECHAM4, and HadCM2) the correlation is strongest during SON, in two models (ECHAM3 and PCM) it is strongest during DJF, and in the remaining models it is strongest during MAM or JJA. In the observations and in six of the models, the NAOI–qEOF3 correlation is strongest during DJF, while in the other two models (CCC and PCM) it is strongest during MAM.

As a final comparison between model and observed ENSO and NAO variability, we prepared maps of the correlation between local temperature and the standard SOI and NAOI for each season. The observed seasonal correlation fields for the SOI and NAOI are shown in Figs. 15 and 16, respectively.

The seasonal SOI- or NAOI-temperature correlation maps resemble the seasonal qEOF2 and qEOF3 fields, respectively, which is not surprising given that the qEOF amplitudes are correlated with the corresponding pressure index (Table 6). For the models we show the spatial correlation fields for the SON (SOI) and DJF (NAOI) seasons only (as Figs. 17 and 18). We computed model correlation fields using both the standard SO and NAO indices and using the indices that maximize the correlation between the SOI or NAOI and the corresponding qEOF amplitude; model results are given in Figs. 17–18 for the standard indices. Use of the SOI that maximizes the correlation with the qEOF2 amplitude or of the NAOI that maximizes the correlation with the qEOF3 amplitude has little effect on the correlation patterns.
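Each correlation map is the Pearson correlation, computed grid point by grid point, between local temperature and a pressure index over the same years. A vectorized sketch, assuming the temperature field and the index are already seasonal (or annual) means on a common time axis; the function name is hypothetical.

```python
import numpy as np

def correlation_map(temp, index):
    """Pearson correlation between each grid-point series and an index series.

    temp  : (n_time, n_lat, n_lon) seasonal- or annual-mean temperatures
    index : (n_time,) pressure index (e.g. SOI or NAOI) for the same years
    """
    ta = temp - temp.mean(axis=0)             # temperature anomalies at each grid point
    xa = index - index.mean()                 # index anomalies
    num = np.tensordot(xa, ta, axes=(0, 0))   # covariance numerator at every grid point
    den = np.sqrt((ta**2).sum(axis=0) * (xa**2).sum())
    return num / den
```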

As seen from Fig. 17, most models display the main features of the observed pattern of correlation with the SOI: a broad region of strongly negative correlations in the central and eastern equatorial Pacific, flanked by two diagonal bands of positive correlation in the central and western Pacific that converge on a region of positive correlation centered on Indonesia and northern Australia. Figure 18 indicates that, to some extent, all models show the observed quadrupole structure in the correlation between temperature and the NAOI during DJF, a structure that is also seen in observed EOF3 and in some of the model EOFs, as noted already. Osborn et al. (2000) had previously shown that HadCM2 produces a quadrupole pattern in the temperature–NAOI correlation, and our results are indistinguishable from those given in their Fig. 5c.

8 Discussion and concluding comments

In order to detect anthropogenic and other externally-forced climatic change signals against the background of internally generated fluctuations, it is essential that these fluctuations be characterized reliably in climate models (Mitchell et al. 2001). Their reliable characterization is also important for estimating the details of future anthropogenic climatic change, since future changes may well involve changes in the relative magnitudes of the primary modes of natural variability (Hasselmann 1999; Corti et al. 1999).

The comparison of model EOFs with observed EOFs is, at face value, somewhat discouraging. Most of the models examined here have major difficulties simulating the observed structure and magnitude of the leading EOFs of the observational data. Of the eight models examined here, HadCM2 has the best pattern correlation between EOF1 and the corresponding observed EOF (R² is around 0.4). For many of the other models, the leading EOFs have squared correlations with the observed EOFs in the range 0.1–0.2. Furthermore, the variance associated with these modes in the models is often much larger than that associated with the observed modes of variability. It should be noted, however, that the poor spatial correlations between model and observed EOFs are related, at least in part, to the fact that the model EOFs often combine elements that appear in separate EOFs in the observations. In particular, an NAO-like temperature structure is often seen in model EOF1, along with or in place of (depending on the season) the ENSO structure.

The correlation between model and observed qEOFs is much better than between model and observed EOFs, thereby validating the use of qEOFs as a measure of model variability. The amplitude of the qEOFs is given by the average temperature in the reference regions used to compute each qEOF field. For CCSR, HadCM2, HadCM3, and PCM, the temperature variability in the eastern equatorial Pacific Ocean (related to qEOF2) is comparable to the observed variability, but it is too small in the other models. Conversely, most of the models have European and Siberian temperature variability comparable to or greater than observed.

We computed the correlations between the amplitude time series for qEOF2 and qEOF3 (corresponding to ENSO and NAO modes of variability) and pressure indices related to these modes of variability (the Southern Oscillation Index, SOI, and the North Atlantic Oscillation Index, NAOI, respectively). In general, model correlations are substantially less than those observed. If alternative pressure indices (using grid cells near to those normally used to compute the indices) are allowed, however, then all models have correlations comparable to those observed.

As a third measure of model and observed variability, we computed maps of the correlation between local temperature and the SOI and NAOI. These maps compare well with similar maps computed from observed data for seasons where the SO and NAO modes of variability have the strongest teleconnections in nature (SON and DJF, respectively). The SOI- and NAOI-temperature correlation maps also closely resemble the qEOF2 and qEOF3 fields, respectively, as one would expect given the correlation between the pressure indices and corresponding qEOF amplitude time series.

In Part 2 (Harvey 2003), the spatial fields of mean precipitation and precipitation variability simulated by the same eight models are examined. Surprisingly, although the models simulate the observed precipitation climatology less well than the observed temperature climatology, they generally do much better at simulating the observed patterns of precipitation variability (as given by EOF analysis) than the observed patterns of temperature variability.

References

Achuta Rao K, Sperber KR (2002) Simulation of the El Niño Southern Oscillation: results from the Coupled Model Intercomparison Project. Clim Dyn 19: 191–209

Arblaster JM, Meehl GA, Moore AM (2002) Interdecadal modulation of Australian rainfall. Clim Dyn 18: 519–531

Bacher A, Oberhuber JM, Roeckner E (1998) ENSO dynamics and seasonal cycle in the tropical Pacific as simulated by the ECHAM4/OPYC3 coupled general circulation model. Clim Dyn 14: 431–450

Barnett TP (1999) Comparison of near-surface air temperature variability in 11 coupled global climate models. J Clim 12: 511–518

Bell J, Duffy P, Covey C, Sloan L (2000) Comparison of temperature variability in observations and sixteen climate model simulations. Geophys Res Lett 27: 261–264

Cai W, Watterson IG (2002) Modes of interannual variability of the southern hemisphere circulation simulated by the CSIRO climate model. J Clim 15: 1159–1174

Collins M (2000) The El Niño-southern oscillation in the second Hadley Centre coupled model and its response to greenhouse warming. J Clim 13: 1299–1312

Collins M, Tett SFB, Cooper C (2001) The internal climate variability of HadCM3, a version of the Hadley Centre coupled model without flux adjustments. Clim Dyn 17: 61–81

Corti S, Molteni F, Palmer TN (1999) Signature of recent climate change in frequencies of natural atmospheric circulation regimes. Nature 398: 799–802

Dai A, Wigley TML (2000) Global patterns of ENSO-induced precipitation. Geophys Res Lett 27: 1283–1286

Delworth TL, Mann ME (2000) Observed and simulated multidecadal variability in the Northern Hemisphere. Clim Dyn 16: 661–676

Delworth TL, Mehta VM (1998) Simulated interannual to decadal variability in the tropical and sub-tropical North Atlantic. Geophys Res Lett 25: 2825–2828

Delworth TL, Stouffer RJ, Dixon KW, Spelman MJ, Knutson TR, Broccoli AJ, Kushner PJ, Wetherald RT (2002) Review of simulations of climate variability and change with the GFDL R30 coupled climate model. Clim Dyn 19: 555–574

Doherty R, Hulme M (2002) The relationship between the SOI and extended tropical precipitation in simulations of future climate change. Geophys Res Lett 29 (10): 113, DOI 10.1029/2001GL014601

Emori S, Nozawa T, Abe-Ouchi A, Numaguti A, Kimoto M, Nakajima T (1999) Coupled ocean–atmosphere model experiments of future climate change with an explicit representation of sulfate aerosol scattering. J Meteorol Soc Japan 77: 1299–1307

Flato GM, Boer GJ, Lee WG, McFarlane NA, Ramsden D, Reader MC, Weaver AJ (2000) The Canadian Centre for Climate Modelling and Analysis Global Coupled Model and its climate. Clim Dyn 16: 451–467

Fyfe JC, Boer GJ, Flato GM (1999) The Arctic and Antarctic oscillations and their projected changes under global warming. Geophys Res Lett 26: 1601–1604

Gordon C, Cooper C, Senior CA, Banks H, Gregory JM, Johns TC, Mitchell JFB, Wood RA (1999) The simulation of SST, sea ice extents and ocean heat transports in a version of the Hadley Centre coupled model without flux adjustments. Clim Dyn 16: 147–168

Gordon HB, O’Farrell SP (1997) Transient climate change in the CSIRO coupled model with dynamic sea ice. Mon Weather Rev 125: 875–907

Handorf D, Petoukhov VK, Dethloff K, Eliseev AV, Weisheimer A, Mokhov II (1999) Decadal climate variability in a coupled atmosphere–ocean climate model of moderate complexity. J Geophys Res 104: 27,253–27,275

Harvey LDD (2003) Characterizing and comparing control-run variability of eight coupled AOGCMs and of observations. Part 2: precipitation. Clim Dyn, DOI 10.1007/s00382-003-0358-9

Hasselmann K (1999) Linear and nonlinear signatures. Nature 398: 755–756

Hunt BG, Elliott TI (2003) Secular variability of ENSO events in a 1000-year climatic simulation. Clim Dyn 20: 689–703

Johns TC, Carnell RE, Crossley JF, Gregory JM, Mitchell JFB, Senior CA, Tett SFB, Wood RA (1997) The second Hadley Centre coupled ocean–atmosphere GCM: model description, spinup and validation. Clim Dyn 13: 103–134

Jones PD, Kelly PM (1983) The spatial and temporal characteristics of Northern Hemisphere surface air temperature variations. J Climatol 3: 243–252

Jones PD, Osborn TJ, Briffa KR (1997) Estimating sampling errors in large-scale temperature averages. J Clim 10: 2548–2568

Jones PD, New M, Parker DE, Martin S, Rigor IG (1999) Surface temperature and its changes over the last 150 years. Rev Geophys 37: 173–199

Jones PD, Osborn TJ, Briffa KR, Folland CK, Horton EB, Alexander LV, Parker DE, Rayner NA (2001) Adjusting for sampling density in grid box land and ocean surface temperature time series. J Geophys Res 106: 3371–3380

Kang IS (1996) Association of interannual and interdecadal variations of global mean temperature with tropical Pacific SST appearing in a model and observations. J Clim 9: 455–464

Kelly PM, Jones PD, Pengqun J (1999) Spatial patterns of variability in the global surface air temperature data set. J Geophys Res 104: 24,237–24,256

Knutson TR, Manabe S (1998) Model assessment of decadal variability and trends in the tropical Pacific Ocean. J Clim 11: 2273–2296

Knutson TR, Manabe S, Gu D (1997) Simulated ENSO in a global coupled ocean–atmosphere model: multidecadal amplitude modulation and CO2 sensitivity. J Clim 10: 138–161

Latif M et al (2001) ENSIP: the El Niño simulation intercomparison project. Clim Dyn 18: 255–276

Li T, Hogan TF (1999) The role of the annual-mean climate on seasonal and interannual variability of the tropical Pacific in a coupled GCM. J Clim 12: 780–792

Liu J, Yuan X, Rind D, Martinson DG (2002) Mechanism study of the ENSO and southern high latitude climate teleconnections. Geophys Res Lett 29, DOI 10.1029/2002GL015143

Meehl GA, Branstator GW, Washington WM (1993) Tropical Pacific interannual variability and CO2 climate change. J Clim 6: 42–63

Meehl GA, Arblaster JM, Strand WG (2000) Sea-ice effects on climate model sensitivity and low frequency variability. Clim Dyn 16: 257–271

Meehl GA, Gent P, Arblaster JM, Otto-Bliesner B, Brady E, Craig A (2001) Factors that affect amplitude of El Niño in global coupled climate models. Clim Dyn 17: 515–526

Mitchell JFB, Johns TC (1997) On modification of global warming by sulfate aerosols. J Clim 10: 245–267

Mitchell JFB et al (2001) Detection of climate change and attribution of causes. In: Houghton JT et al (eds) Climate change 2001: the scientific basis. Cambridge University Press, Cambridge, UK, pp 695–738

Monahan AH, Fyfe JC, Flato GM (2000) A regime view of Northern Hemisphere atmospheric variability and change under global warming. Geophys Res Lett 27: 1139–1142

Osborn TJ, Briffa KR, Tett SFB, Jones PD (1999) Evaluation of the North Atlantic oscillation as simulated by a coupled climate model. Clim Dyn 15: 685–702

Preisendorfer RW (1988) Principal component analysis in meteorology and oceanography. Elsevier, Amsterdam, pp 425

Raible CC, Luksch U, Fraedrich K, Voss R (2001) North Atlantic decadal regimes in a coupled GCM simulation. Clim Dyn 18: 321–330

Schlesinger ME, Ramankutty N (1992) An oscillation in the global climate system of period 65–70 years. Nature 367: 723–726

Schneider EK, Zhengxin Z, Giese BS, Huang B, Kirtman BP, Shukla J, Carton JA (1997) Annual cycle and ENSO in a coupled ocean–atmosphere general circulation model. Mon Weather Rev 125: 680–702

Slonosky VC, Yiou P (2001) The North Atlantic Oscillation and its relationship with near surface temperature. Geophys Res Lett 28: 807–810

Stephenson DB, Pavan V (2003) The North Atlantic Oscillation in coupled climate models: a CMIP1 evaluation. Clim Dyn 20: 381–399

Stouffer RJ, Hegerl G, Tett S (2000) A comparison of surface air temperature variability in three 1000-yr coupled ocean–atmosphere model integrations. J Clim 13: 513–537

Tett SFB, Johns TC, Mitchell JFB (1997) Global and regional variability in a coupled AOGCM. Clim Dyn 13: 303–323

Timmermann A, Latif M, Grötzner A (1998) Northern Hemisphere interdecadal variability: a coupled air-sea mode. J Clim 11: 1906–1931

Timmermann A, Latif M, Grötzner A, Voss R (1999) Modes of climate variability as simulated by a coupled general circulation model. Part I: ENSO-like climate variability and its low-frequency modulation. Clim Dyn 15: 605–618

Trenberth KE, Hoar TJ (1997) El Niño and climate change. Geophys Res Lett 24: 3057–3060

van den Dool HM, Saha S, Johansson Å (2000) Empirical orthogonal teleconnections. J Clim 13: 1421–1435

von Storch JS, Kharin VV, Cubasch U, Hegerl GC, Schriever D, von Storch H, Zorita E (1997) A description of a 1260-year control integration with the coupled ECHAM1/LSG general circulation model. J Clim 10: 1525–1543

Walland DJ, Power SB, Hirst AC (2000) Decadal climate variability simulated in a coupled general circulation model. Clim Dyn 16: 201–211

Washington WM, Weatherly JW, Meehl GA, Semtner AJ, Bettge TW, Craig AP, Strand WG, Arblaster JM, Wayland VB, James R, Zhang Y (2000) Parallel climate model (PCM) control and transient simulations. Clim Dyn 16: 755–774

Wigley TML (2000) ENSO, volcanoes and record-breaking temperatures. Geophys Res Lett 27: 4101–4104

Wigley TML, Jaumann PJ, Santer BD, Taylor KE (1998) Relative detectability of greenhouse gas and aerosol climate change signals. Clim Dyn 14: 781–790

Yuan X, Martinson DG (2001) The Antarctic dipole and its predictability. Geophys Res Lett 28: 3609–3612

Yukimoto S, Endoh M, Kitamura Y, Kitoh A, Motoi T, Noda A, Tokioka T (1996) Interannual and interdecadal variabilities in the Pacific in an MRI coupled GCM. Clim Dyn 12: 667–683

Yukimoto S, Endoh M, Kitamura Y, Kitoh A, Motoi T, Noda A (2000) ENSO-like interdecadal variability in the Pacific Ocean as simulated in a coupled general circulation model. J Geophys Res 105: 13,945–13,963

Zhang Y, Wallace JM, Battisti DS (1997) ENSO-like interdecadal variability: 1900–93. J Clim 10: 1004–1020

Zorita E, González-Rouco F (2000) Disagreement between predictions of the future behavior of the Arctic Oscillation as simulated in two different climate models: implications for global warming. Geophys Res Lett 27: 1755–1758

Acknowledgements.

This work was supported by NSERC research grant OPG0001413 (Harvey), by ACACIA (a joint NCAR-EPRI program), and by the NOAA Office of Global Programs (Climate Change Data and Detection) under grant NA87GP0105 (Wigley). The constructive comments of two reviewers on an earlier version of this study are appreciated.

Cite this article

Harvey, L.D.D., Wigley, T.M.L. Characterizing and comparing control-run variability of eight coupled AOGCMs and of observations. Part 1: temperature. Climate Dynamics 21, 619–646 (2003). https://doi.org/10.1007/s00382-003-0357-x

DOI: https://doi.org/10.1007/s00382-003-0357-x