Abstract

Mean value coordinates provide an efficient mechanism for the interpolation of scalar functions defined on orientable domains with a nonconvex boundary. They present several interesting features, including the simplicity and speed that yield from their closed-form expression. In several applications though, it is desirable to enforce additional constraints involving the partial derivatives of the interpolated function, as done in the case of the Green coordinates approximation scheme (Ben-Chen, Weber, Gotsman, ACM Trans. Graph.:1–11, 2009) for interactive 3D model deformation.

In this paper, we introduce the analytic expressions of the Jacobian and the Hessian of functions interpolated through mean value coordinates. We provide these expressions both for the 2D and 3D case. We also provide a thorough analysis of their degenerate configurations along with accurate approximations of the partial derivatives in these configurations. Extensive numerical experiments show the accuracy of our derivation. In particular, we illustrate the improvements of our formulae over a variety of finite differences schemes in terms of precision and usability. We demonstrate the utility of this derivation in several applications, including cage-based implicit 3D model deformations (i.e., variational MVC deformations). This technique allows for easy and interactive model deformations with sparse positional, rotational, and smoothness constraints. Moreover, the cages produced by the algorithm can be directly reused for further manipulations, which makes our framework directly compatible with existing software supporting mean value coordinates based deformations.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Boundary value interpolation is a common problem in computer-aided design, simulation, visualization, computer graphics, and geometry processing. Given a polygonal domain with prescribed scalar values on its boundary vertices, barycentric coordinate schemes enable to compute a smooth interpolation of the boundary values at any point of the Euclidean space located in the interior (and possibly the exterior) of the domain. Such interpolations are efficiently obtained through a linear combination involving a weight (or coordinate) for each boundary vertex. These weights can be obtained by solving a system of linear equations [16] or, more efficiently, through a closed-form expression [12, 17].

“Kicking Demon.” Red points indicate positional constraints; blue points indicate unknown rotational constraints. This pose was obtained by specifying only 14 positional constraints. The derivation of the Jacobian and Hessian of the MVC coordinates makes it possible to induce variational MVC deformations (implicit cage deformation based on sparse user constraints, with rotation and smoothness enforcement)

However, in several applications, it may be desirable to enforce additional constraints on the interpolation, in particular constraints involving the partial derivatives of the interpolated function. Derivative constraints have been shown to provide additional flexibility to the interpolation problem and many optimization tasks can benefit from them. In the context of approximation schemes based on Green coordinates [20], constraints on the Jacobian and the Hessian have been used for implicit cage-based deformations [2]. In such a setting, given some sparse user constraints, an optimization process automatically retrieves an embedding of the polygonal domain (the cage) such that the transformation of the interior space is locally close to a rotation.

In order to achieve acceptable precision and time efficiency, such an optimization process requires a closed-form expression of the Jacobian and the Hessian of the interpolated function. While such closed-form expressions are known for approximation schemes (in particular for Green coordinates [2, 26]), this is not the case for interpolation schemes. Moreover, these formulations are specific to the context of space deformation and it is not clear how to extend them to arbitrary functions.

In this paper, we bridge this gap by deriving the closed-form expressions of the Jacobians and the Hessians of functions interpolated with Mean Value Coordinates (MVC) [12, 17], both for the 2D and 3D case. We also provide a complete analysis of their degenerate configurations (on the support of the simplices of the cage [17]) along with accurate approximations of the derivatives for these configurations. We show the accuracy of this derivation with extensive numerical experiments.

We demonstrate the utility of this derivation for several applications, including cage-based implicit 3D model deformations (i.e., variational MVC deformations). This technique allows for easy and interactive model deformations with sparse positional, rotational, and smoothness constraints.

The cages produced by our algorithm can be directly reused for further manipulations, which makes our framework directly compatible with existing software supporting MVC-based deformations. In addition, we provide as supplemental material a lightweight C++ implementation of our derivation, enabling its usage in further optimization problems involving constrained interpolation.

1.1 Related work

Boundary value interpolation through barycentric coordinates has been widely studied in the case of convex domains, first in 2D [4, 5, 22], and more recently in higher dimensions [27, 28]. Several techniques have been proposed to extend these interpolants to nonconvex 2D domains [9–11, 14, 21]. In particular, Floater introduced a coordinate system motivated by the mean value theorem that smoothly interpolates function values defined on concave 2D polygons [11].

The generalization of these techniques to nonconvex 3D domains has been motivated mostly by computer graphics applications, in particular interactive shape deformation. In this setting, a 3D shape (in the form of a triangle soup, point cloud, or volumetric mesh) is enclosed by a closed triangle surface called a cage, from which barycentric coordinates are computed. A deformation of the cage yields a transformation of its embedding functions f x ,f y , and f z . These functions can be interpolated efficiently and smoothly in the interior space enclosed by the cage, providing a mean to interactively deform the interior 3D shape. Mean Value Coordinates (MVC) have been generalized independently to nonconvex 3D domains by Floater [12], Ju et al. [17], and further by Langer et al. [18], with applications to boundary value interpolation, volumetric texturing, shape deformation, and speed-up of deformations based on nonlinear systems [15]. To overcome artifacts occurring in highly concave portions of the cage, Joshi et al. [16] introduced the Harmonic Coordinates (HC). However, these coordinates do not admit a closed form expression and require a numerical solver for their computation. Lipman et al. [20] introduced the Green Coordinates (GC), which induce near-conformal (detail preserving) transformations and which admit a closed form expression. However, these coordinates define an approximation scheme, not an interpolation one.

Among the existing barycentric coordinate systems which support nonconvex 3D domains and which admit closed-form expressions (MVC and GC), the expressions of the Jacobian and the Hessian are only known for the Green coordinates [2, 26]. In particular, Ben Chen et al. [2] proposed an implicit cage-based deformation technique called Variational Harmonic Maps (VHM), where the target embedding of the cage is automatically optimized from sparse user-imposed positional constraints with rotational and smoothness constraints, respectively, involving the Jacobian and the Hessian of the deformation. As the 3D values attributed to the normals of the cage triangles are decorrelated from the 3D values attributed to its vertex positions in their optimization process, the cage cannot be manipulated in a post process for further detail editing. In this work, we introduce these expressions for functions interpolated with mean value coordinates. We also develop an application to implicit cage-based transformations similar to VHM [2]. In contrast to VHM, the solution of the optimization process does not involve the normals of the cage triangles, hence the cages generated by this technique cannot be exploited in a consistent manner for post-processing tasks. On the contrary, the cages produced by our algorithm can be directly reused for further manipulations, which makes our framework directly compatible with existing software supporting MVC-based deformations.

1.2 Contributions

This paper makes the following contributions:

-

1.

the closed-form expressions of the Jacobian and the Hessian of mean value coordinates, both in 2D and 3D—these expressions are more accurate than a variety of experimented finite differences schemes and they are not prone to numerical instability;

-

2.

a thorough analysis of the degenerate configurations of these expressions, along with accurate alternate approximations for these configurations;

-

3.

an implicit cage-based transformation technique using mean value coordinates, called variational MVC deformation, which interactively optimizes the target cage embedding given sparse user positional constraints, while respecting smoothness and rotational constraints;

1.3 Overview

We first review mean value coordinates in Sect. 2. The core contribution of our work, the derivation of the Jacobian and Hessian, is presented in Sects. 3 through 6. In particular, Sects. 4 and 5 provide the specific results for the 2D and 3D cases respectively.

As the derivation of the Jacobian and the Hessian is relatively involved, for the reader’s convenience, we highlighted the final expressions with  , whereas the final expressions for degenerate cases are highlighted with a

, whereas the final expressions for degenerate cases are highlighted with a  . Note that the derivation details are given in ESM (Electronic Supplementary Material).

. Note that the derivation details are given in ESM (Electronic Supplementary Material).

Experimental evidence of the accuracy of our derivation is presented in Sect. 7.

Finally, we present applications demonstrating the utility of our contributions in Sect. 8.

2 Background

In this section, we review the formulation of mean value coordinates in 2D and 3D [17].

2.1 Mean value coordinates

Similar to [17], we note p[x] a parameterization of a closed (d−1)-manifold mesh (the cage) M embedded in \(\mathbb{R}^{d}\), where x is a (d−1)-dimensional parameter, and n x the unit outward normal at x. Let η be a point in \(\mathbb{R}^{d}\) expressed as a linear combination of the positions p i of the vertices of the cage M:

where λ i is the barycentric coordinate of η with respect to the vertex i.

Let ϕ i [x] be the linear function on M, which maps the vertex i to 1 and all other vertices to 0.

The definition of the coordinates λ i should guarantee linear precision (i.e., η=∑ i λ i p i ).

Similar to [17], we note B η (M) the projection of the manifold M onto the unit sphere centered around η, and dS η (x) the infinitesimal element of surface on this sphere at the projected point (\(dS_{\eta}(x)=\frac{(p[x]-\eta)^{t}\cdot n_{x}}{|p[x]-\eta|^{3}}\,dx\) in 3D).

Since \(\int_{B_{\eta}(M)}{\frac{p[x] - \eta}{|p[x] - \eta|}\,dS_{\eta}(x)}=0\) (the integral of the unit outward normal onto the unit sphere is 0 in any dimension d≥2), the following equation holds:

By writing p[x]=∑ i ϕ i [x]p i ∀x, with ∑ i ϕ i [x]=1, we have:

The coordinates λ i are then given by

and the weights w i such that \(\lambda_{i} = \frac{w_{i}}{\sum_{i}{w_{i}}}\) are given by

This definition guarantees linear precision [17]. It also provides a linear interpolation of the function prescribed at the vertices of the cage onto its simplices and it smoothly extends it to the entire space. This construction of mean value coordinates is valid in any dimension d≥2. In the following, we present their computation in 2D and in 3D, as they were introduced in [17].

2.2 3D Mean value coordinates computation

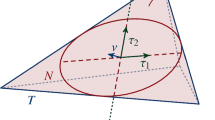

The support of the function ϕ i [x] is only composed of the adjacent triangles to the vertex i (noted N1(i)). Equation (1) can be rewritten as \(w_{i} = \sum_{T \in N1(i)}{w_{i}^{T}}\), with

Given a triangle T with vertices t 1,t 2,t 3, the following equation holds:

The latter integral is the integral of the unit outward normal on the spherical triangle \(\overline{T}=B_{\eta}(T)\) (see Fig. 2). By noting the unit normal as \(n_{i}^{T} = \frac{N_{i}^{T}}{|N_{i}^{T}|}\), with \(N_{i}^{T} \triangleq (p_{t_{i+1}}-\eta) \wedge (p_{t_{i+2}}-\eta)\) (see Fig. 2), m T is given by (since the integral of the unit normal on a closed surface is always 0):

As suggested in [17], the weights \(w_{t_{j}}^{T}\) can be obtained by noting A T the 3×3 matrix \(\{p_{t_{1}}-\eta,p_{t_{2}}-\eta,p_{t_{3}}-\eta\}\) (where t denotes the transpose):

Since \({N_{i}^{T}}^{t} \cdot (p_{t_{j}} - \eta) = 0\ \forall i \neq j\) and \({N_{i}^{T}}^{t} \cdot (p_{t_{i}} - \eta) = \det(A^{T})\ \forall i\), the final expression for the weights is given by:

where Support(T) denotes the support plane of T, i.e., \(\mathit{Support}(T)=\{\eta\in \mathbb {R}^{3} | \det(A^{T})(\eta) = 0\}\).

2.3 2D Mean value coordinates computation

Let I 2 be the 2×2 identity matrix and \(R_{\frac {\pi }{2}}\) the rotation matrix

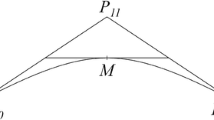

In 2D, the orientation of an edge E=e

0

e

1 of a closed polygon is defined by the normal n

E

:

It defines consistently the interior and the exterior of the closed polygon. Then, similarly to the 3D case,

with

Therefore,

Since \((p_{e_{j}}-\eta)^{t} \cdot N_{j}^{E} = 0\) (Fig. 2), we obtain \(w_{i}^{E}\) with

3 Derivation overview

In the following, we present the main contribution of the paper: the derivation of the Jacobians and the Hessians of mean value coordinates. In this section, we briefly give an overview of the derivation.

Let \(f : M \rightarrow \mathbb{R}^{d}\) be a piecewise linear field defined on M (in 2D, M is a closed polygon, in 3D, M is a closed triangular mesh). As reviewed in the previous section, f can be smoothly interpolated with mean value coordinates for any point η of the Euclidean space:

Then the Jacobian and the Hessian of f, respectively, noted Jf and Hf, are expressed as the linear tensor product of the values f(p i ) with the gradient \(\vec {\nabla }{\lambda_{i}}\) and the Hessian Hλ i of the coordinates, respectively:

Since \(\lambda_{i} = \frac{ w_{i} }{ \sum_{j}{ w_{j} } }\),

Then the Hessian can be obtained with the following equations:

The above expressions are general and valid for the 2D and 3D cases. Thus, in order to derive a closed-form expression of the gradient and the Hessian of the mean value coordinates λ i , one needs to derive the expressions of \(\vec {\nabla }{w_{i}}\) (Eq. (9)) and Hw i (Eq. (10)). The expressions of these terms are derived in Sect. 4.1 and Sect. 4.2, respectively, for the 2D case and in Sect. 5.1 and Sect. 5.2 for the 3D case.

3.1 Properties

Functions interpolated by means of mean value coordinates as previously described have the following properties:

-

1.

they are interpolant on M

-

2.

they are defined everywhere in \(\mathbb{R}^{d}\)

-

3.

they are C ∞ everywhere not on M

-

4.

they are C 0 on the edges (resp. vertices) of M in 3D (resp. in 2D).

Since these are interpolant of piecewise linear functions defined on a piecewise linear domain, they cannot be differentiable on the edges of the triangles (resp. the vertices of the edges) of the cage in 3D (resp. in 2D). Although, as they are continuous everywhere, they may admit in these cases directional derivatives like almost all continuous functions do. Recall that the directional derivative of the function f in the direction u is the value \(\partial f_{u}(\eta) = \lim_{\epsilon \rightarrow 0^{+}}{\frac{f(\eta + \epsilon \cdot u) - f(\eta)}{\epsilon}}\), with \(u \in \mathbb {R}^{3}, \|u\|=1, \epsilon \in \mathbb {R}\), which strongly depends on the orientation of the vector u where the limit is considered. These derivatives cannot be used to evaluate nor constrain the function around the point in general with a single gradient (or Jacobian if the function is multi-dimensional).

In this paper, we provide formulae for the first- and second-order derivatives of the mean value coordinates everywhere in space but on the cage.

4 MV-gradients and Hessians in 2D

For conciseness, the details of this derivation are given in the ESM (additional material) and only the final expressions are given here.

In the following, we note (pq) the line going through the points p and q, and [pq] the line segment between them.

4.1 Expression of the MV-gradients

Given an edge E=e 0 e 1, in the general case where \({(p_{e_{i}}-\eta)^{t} \cdot N_{i+1}^{E} \neq 0} {(\eta\notin (p_{e_{0}}p_{e_{1}}))}\), the gradient of the weights is given by the following expression:

with

Special case: \(\eta \in (p_{e_{0}}p_{e_{1}}), \notin [p_{e_{0}}p_{e_{1}}]\)

4.2 Expression of the MV-Hessians

We define  and

and  .

.

with

and

and

Special case: \(\eta \in (p_{e_{0}}p_{e_{1}}), \notin [p_{e_{0}}p_{e_{1}}]\)

with

5 MV-gradients and Hessians in 3D

For conciseness, the details of this derivation are given in the ESM (additional material) and only the final expressions are given here.

In the following, we note e

i

(x) a set of functions that are well-defined functions on ]0,π[ and admit well-controlled Taylor expansions around 0. These Taylor expansions are given in the ESM (additional material). Note that the functions e

i

(x) are not defined in 0 and that we make use of the Taylor expansions to estimate their values near 0 as well as in 0. The reason these terms appear in the final expressions is that we organized the terms in order to provide formulae whose evaluation converges everywhere, avoiding the typical 0/0 and +∞+−∞ cases, for example. To simplify the expressions,  we translate all 3D quantities to the origin (i.e., \({\hat {p}} := p - \eta\)). We also note \(u_{ij} = \overrightarrow{e_{i}}^{t} \cdot \overrightarrow{e_{j}}\) the dot product between edges i and j, \(m_{i} = (p_{t_{i+2}} + p_{t_{i+1}})/2\) the midpoint of the edge i of the triangle, and \(J_{ij} = {JN_{i}^{T}} \cdot N_{j}^{T}\) the vectorial product between edge i of the triangle and the normal of the triangle supported by the edge j (see inset). From the expression of \(N_{j}^{T}\), we obtain that its Jacobian equals

we translate all 3D quantities to the origin (i.e., \({\hat {p}} := p - \eta\)). We also note \(u_{ij} = \overrightarrow{e_{i}}^{t} \cdot \overrightarrow{e_{j}}\) the dot product between edges i and j, \(m_{i} = (p_{t_{i+2}} + p_{t_{i+1}})/2\) the midpoint of the edge i of the triangle, and \(J_{ij} = {JN_{i}^{T}} \cdot N_{j}^{T}\) the vectorial product between edge i of the triangle and the normal of the triangle supported by the edge j (see inset). From the expression of \(N_{j}^{T}\), we obtain that its Jacobian equals

where k [∧] is the skew 3×3 matrix (i.e., k [∧] t=−k [∧]) such that \(k_{[\wedge]} \cdot u = k \wedge u\ \forall k,u \in \mathbb{R}^{3}\).

5.1 Expression of the MV-gradients

with

where \(e_{1}(x) = \frac{\cos(x)\sin(x)-x}{\sin(x)^{3}}\) and \(e_{2}(x)=\frac{x}{\sin(x)}\).

Special case: η∈Support(T),∉T

where (noting c:=cos(x) and s:=sin(x)) e 3(x)=(3c(cx−s)+cs 3)/s 5, e 4(x)=(3(cx−s)+s 3)/s 3, e 5(x)=(s−xc)c/s 3, and e 6(x)=(s−xc)/s.

5.2 Expression of the MV-Hessians

We define

and

with

where (noting c:=cos(x) and s:=sin(x)) e 3(x)=(3c(cx−s)+cs 3)/s 5, e 4(x)=(3(cx−s)+s 3)/s 3, e 5(x)=(s−xc)c/s 3, and e 6(x)=(s−xc)/s.

Special case: η∈Support(T),∉T

with

where \({e_{7}(x)=\frac{2 \cos(x)\sin(x)^{3} + 3 (\sin(x)\cos(x) - x)}{\sin(x)^{5}}}\).

6 Continuity between the general case and the special case

We obtained the formulae for the gradient and the Hessian of \(w_{i}^{T}(\eta)\) in the general case, when the point of interest η does not lie on the triangle T, and in the special case when η lies on it.

As MVC are C ∞ everywhere not on M, these formulae are guaranteed to converge, since in particular, the gradient and the Hessian are continuous functions everywhere not on M.

The same holds in 2D where the distinction is made for the computation of \(w_{i}^{E}(\eta)\) whether η lies on the line supported by the edge E or not.

7 Experimental analysis

In this section, we present experimental evidence of the numerical accuracy of our derivation and provide computation timings.

7.1 Complexity

For each point η, computing the MVC, the MVC gradients and the MVC Hessians are linear in the number of vertices and edges (faces in 3D) of the cage.

7.2 C++ implementation

We implemented the computation of the mean value coordinates derivatives in C++, following the layout described in Sects. 4 and 5. This C++ implementation is provided as additional material [25]. As it only requires simple matrix-vector products, no third-party library is needed. To implement the applications discussed later in the paper, matrix singular value decomposition needs to be performed, for instance, to project Jacobian matrices onto the space of 2D/3D rotations. We used the GNU Scientific Library for that purpose.

7.3 Global validation with a manufactured solution

We first inspect the numerical accuracy of our derivation using the Method of Manufactured Solution (MMS), a popular technique in code verification [1, 6, 7]. Such a verification approach consists in designing an input configuration such that the resulting solution is known a priori. Then the actual verification procedure aims at assessing that the solution provided by the program conforms to the manufactured solution. In other words, MMS verification consists in designing exact ground-truths for accuracy measurement. However, note that this verification is not general, as it only assesses correctness for the set of manufactured solutions.

Manufactured solution

As mean value coordinates provide smooth interpolations, a global rigid transformation of the cage \(\overline{p_{i}} = T + R \cdot p_{i}\) should infer a global rigid transformation of the entire Euclidean space. In particular, the Jacobians of the embedding function f of the transformed cage (\(f : \mathbb{R}^{3} \rightarrow \mathbb{R}^{3}\), \(f(p_{i}) = \overline{p_{i}}\)) should be equal to R everywhere, and its Hessian should be exactly 0. Then our manufactured configuration is the space of global rigid transformations and our manufactured solution is defined by Jf=R and Hf=0.

Given this manufactured solution, we can derive correctness conditions for the Jacobian evaluation from the following expression:

Thus, to conform to the manufactured solution Jf=R, the following equations should be satisfied:

As for the Hessian evaluation, we can derive similar correctness conditions:

where T x ,T y , and T z are the first, second, and third coordinate of the vector T, respectively (similarly for R cd ).

Thus, to conform to the manufactured solution Hf=0, the following equations should be satisfied:

Note that both the Jacobian and Hessian correctness conditions (Eqs. (21) and (22)) are not functions of the rigid transformation parameters; they also correspond to the constant and linear precision properties of the MVC. These properties remain valid for arbitrary translations and rotations, and thus cover the entire group of rigid transformations.

Figure 3 shows numerical evaluations of these correctness conditions for different cages, at random points (in grey) of a 2D domain. In particular, the histograms plot the entries of the left-hand term (a vector or a matrix) of each of these equations, which should all be zero (for the second Jacobian condition, the entries of the matrix \(\sum_{i}{ p_{i} \cdot \vec {\nabla }{\lambda_{i}}^{t} } - I_{2}\) are shown). As shown in this experiment, the error induced by the violation of the correctness conditions is close to the actual precision of the data structure employed for real numbers (float or double). Also, the error slightly increases when the cage is denser. Indeed, with dense cages, it is more likely that the randomly selected samples lie in the vicinity of the support of the cage edges. These configurations correspond to the special cases discussed earlier and for which Taylor expansions are employed.

Validation based on a manufactured solution (group of rigid transformations) for the 2D case. The histograms show the violation of the correctness conditions associated with the manufactured solution (95 % most relevant samples). Top: simple floating point precision (output precision: 10−5). Bottom row: double precision (output precision: 10−14). Size of the diagonal of the domain: ∼1000

Figure 4 shows a similar experiment in 3D, with a coarse cage (black lines). Similarly, most of the errors are located on the tangent planes of the triangles (special cases). Note that an important part of the error yields from the samples which are located in the vicinity of the cage triangle, a configuration for which we do not provide a closed-form expression, as discussed in Sect. 3. Interestingly, the errors on the correctness conditions for the Jacobian and the Hessian are comparable to the errors induced by the actual computation of the mean value coordinates λ i . In the example shown in Fig. 4, the error range of the positional reconstruction on the grid (i.e., the violation of the linear precision property of the MVC) is [0,1.08×10−5], which is larger than the error ranges observed in two of the six correctness condition evaluations of the derivatives.

Validation based on a manufactured solution (group of rigid transformations) for the 3D case. The violation of the correctness conditions (from transparent blue, low values, to opaque red, high value) is measured on each vertex of a 1003 voxel grid. The full value range (FR) is given below each image while only the 99 % most significant samples are displayed (DI). Size of the diagonal of the domain: ∼600

7.4 Taylor approximations behavior

Validation based on manufactured solutions enables assessing the accuracy of a numerical computation on a subset of predefined configurations. However, in our setting, designing manufactured solutions corresponding to other configurations than rigid transformations is highly involved.

Thus, to extend our analysis to arbitrary configurations, we present in this paragraph an analysis of the Taylor approximations of MVC-functions based on our derivation.

In contrast to manufactured solutions, this analysis is not meant to validate our results, but simply analyze how functions expressed by MVC behave in the local neighborhood of a point.

For regular functions, function values in the neighborhood of a point can be approximated up to several orders of precision, using Taylor approximations:

In the following, we use these approximations to analyze the behavior of our derivation for arbitrary configurations. In particular, we evaluate the following errors:

As the evaluation neighborhood shrinks to a point, these errors should tend to zero, with a horizontal tangent. Figure 5 shows plots of these errors (logarithmic scale) on an arbitrary deformation function defined by user interactions:

-

On regular functions, the derivatives of the MVC characterize the interpolated function correctly: the maximum error is 7×10−3 (for a bounding diagonal of 316). Note that the radius r∈[0,1] of the evaluation neighborhood where they can be used to approximate the function is not too small. Therefore, our derivative formulation provides enough accuracy to enforce sparse derivative constraints on local neighborhoods such as those expressed in the applications described in Sect. 8.

-

The linear approximation can sometimes produce more accurate approximations in average than the quadratic approximation on a large neighborhood, while the quadratic approximation provides results, which are less direction-dependant.

-

The induced errors indeed tend to zero when the neighborhood shrinks to a point and the tangent of the curves in logarithmic scale illustrates that the error conforms to the expected form (O(∥dη∥2) for the linear approximation, O(∥dη∥3) for the quadratic approximation). Indeed, remember that if y=λ⋅x n, then log(y)=log(λ)+n⋅log(x).

Red curve: Linear approximation. Blue curve: Quadratic approximation. Plots show on a logarithmic scale the radial function defined as the average of the absolute error on the sphere of radius r (r∈[0,1]). The evaluation points η were taken on the skeleton shown in the original cage (here are displayed the evaluations for the points #0,#1,#2,#3,#4,#5, but these are representative of the curves we have for all locations). For each plot, the spheres on the top show the linear approximation error from two points of view (red box), the others show the quadratic approximation error from the same two points of view (blue box). From the tangent, we can assess the quadratic convergence of the linear approximation scheme and the cubic convergence of the quadratic approximation scheme

7.5 Comparison with finite differences schemes

In this section, we use finite differences schemes to derive the gradient and the Hessian of the MVC, to compare with the expressions we obtained.

A conventional scheme for approximations of first and second order derivatives at point (x,y,z) is the following:

This scheme requires 19 evaluations of the function in total. Results of convergence of finite differences (FD) of the mean value coordinates derivatives using this scheme are presented on an example in Fig. 6, using double precision and 256 bits precision (using mpfrc++, which is a c++ wrapper of the GNU multiple precision floating point library (mpfr)). The domain is the same as described previously in Fig. 5, and the plots correspond here to the evaluation made in point 0. The error functions that are plotted are \(\vec {\nabla }{\lambda}^{\mathrm{err}}(r) = \sqrt{ \sum_{i}{ \| \vec {\nabla }{\lambda_{i}} - \vec {\nabla }{\lambda_{i}}^{FD(r)} \|^{2} } }\) and \(H\lambda^{\mathrm{err}}(r) = \sqrt{ \sum_{i}{ \| H{\lambda_{i}} - H{\lambda_{i}}^{FD(r)} \|^{2} } }\). Note that these plots are representative of all the experiments we made (i.e., with other cages, at other locations, etc.).

Comparison with finite differences: the domain are the same as described previously in Fig. 5, and the evaluations are performed on point 0. x axis: size of the stencil for finite differences. y axis: difference between finite differences approximations of the derivatives and our formulae. Axes of the plots are in logarithmic scale. The functions that are plotted are \({\vec {\nabla }}{\lambda}^{\mathrm{err}}(r) =\sqrt{ \sum_{i}{ \| {\vec {\nabla }} {\lambda_{i}} -{\vec {\nabla }} {\lambda_{i}}^{FD(r)} \|^{2} } }\) and \(H\lambda^{\mathrm{err}}(r) = \sqrt{ \sum_{i}{ \| H{\lambda_{i}} - H{\lambda_{i}}^{FD(r)} \|^{2} } }\)

These results validate empirically our formulae, as the finite differences scheme converges to our formulae when the size of the stencil tends to 0 (Fig. 6, using 256 bits precision). It also indicates that finite differences schemes are not suited to evaluate MVC derivatives in real life applications (see Fig. 11 for example), as these schemes diverge near 0 when using double precision only (Fig. 6 blue and red curves). Note that this behavior is not typical of mean value coordinates, but rather of finite differences schemes. The choice of the size of the stencil is a typical difficulty in finite differences schemes. Choosing a size which is too small may introduce large rounding errors [8, 24]. Finding the smallest size which minimizes rounding error is both machine dependent and application dependent (in our case ≃0.01 on the example of Fig. 6). Moreover, its has been shown that all finite differences formulae are ill-conditioned [13] and suffer from this drawback. We used different schemes to approximate the derivatives using finite differences methods (9 points evaluation + linear system inversion, 19 points evaluation on a 3×3×3-stencil, tricubic interpolation on a 4×4×4-stencil), and they all diverge in the same manner when using double precision.

The error curves are also similar when looking at the deviation of the gradients and Hessians of the function itself that is interpolated (e.g., the deformation function in Fig. 5), instead of the gradients and Hessians of the weights themselves.

7.6 Comparison with Automatic Differentiation

Along with finite differences, Automatic Differentiation (AD) is also a popular class of techniques for the numerical evaluation of derivatives of functions expressed by a computer program. In this subsection, we compare for the 3D case our formulae to an evaluation provided by an AD software, the C++ library ADOL-C. As expected, in practice, the computation of the Jacobian and Hessian with AD is slower than the evaluation with our formulae (20 times slower in average). A more problematic drawback of AD is its numerical stability, especially in regions nearby the support of the cage triangles, where the MVC are forced to zero (they are only defined by continuity and Eq. (5) is not defined in these positions). To the best of our knowledge, this subtlety cannot be captured efficiently by AD tools. Figures 7(a, b) show histograms of errors (i.e., absolute difference between our formulae and the AD evaluation, \({|\vec {\nabla }{\lambda_{i}(\eta)} - \vec {\nabla }{}_{AD}{\lambda_{i}(\eta)}|}\) and |Hλ i (η)−H AD λ i (η)|), obtained on a set of 1000 points randomly distributed in the bounding box of the cage model (the cage is the Armadillo cage of Fig. 5). Figure 7(c) shows the convergence of the values computed by ADOL-C in the vicinity of the support of the cage triangles (i.e., \({|\vec {\nabla }{w_{t_{i}}^{T}(\eta)} - \vec {\nabla }_{AD}{w_{t_{i}}^{T}(\eta + \epsilon n_{T})}|}\) and \(|H{w_{t_{i}}^{T}(\eta)} - H_{AD}{w_{t_{i}}^{T}(\eta + \epsilon n_{T})}|\)). This plot shows that, as the evaluation point gets closer to the support plane; the AD evaluation diverges. Moreover, in practice, when it lies exactly on the support plane, the value returned by ADOL-C is undefined (NaN, Not a Number).

Comparison with Automatic Differentiation tools. (a) Histogram of errors (x axis is logarithmic) of the computation of the gradients of the weights by ADOL-C (on 1000 points randomly distributed in the model’s bounding box). (b) Histogram of errors (x axis is logarithmic) of the computation of the Hessians of the weights by ADOL-C on the same set. (c) Error of the computation of the derivatives by ADOL-C on the special case of the points lying on the support of the cage’s triangles (x axis represents the distance to the support plane)

7.7 Timings

Table 1 shows average computation times of the evaluation of the mean value coordinates and their derivatives for several input cages. As the cost of the evaluation depends on the occurrence of the special cases (point lying on the support plane of the triangles of the cage), we performed the computation on a set of 1000 points that were randomly distributed inside the bounding box of the model, and the average time is presented. As shown in this table, these computations take only a few milliseconds, which allows their usage in interactive contexts. Also, note that in the applications discussed in the following section, the derivatives are only evaluated on a very small set of points for constraint enforcement.

8 Applications

In this section, we review the applications presented in the original paper [17], and illustrate the utility of our contribution for all of them.

8.1 MVC derivatives visualization

In the context of function design/editing/visualization, the derivatives of the function can be of use to the user, as they have very often an intuitive meaning.

For example, in the context of 2D or 3D deformation, the Jacobian of the transformation J(η) can be put in the form J(η)=R(η)⋅B(η)⋅Σ(η)⋅B(η)t using singular value decomposition. These different matrices represent the scales of the transformation (Σ) in the basis given by B, and the rotation that is applied afterward (R)—which can be represented easily as a vector and an angle (see Fig. 8). In the context of color interpolation, the gradients of the different channels can be displayed. In general, the norm of the Hessian provides the information of how locally rigid the function is around the point of interest. Using our formulation, one can obtain this information at any scale with the same precision, to the contrary of what finite differences schemes would provide.

8.2 Flexible volumetric scalar field design

As shown in [17], mean value coordinates can be used to solve the boundary value interpolation problem for the definition of volumetric scalar fields, given an input field prescribed on a closed surface. Our derivation of the MVC gradients and Hessians enables to extend this application to more flexible volumetric scalar field designs, in particular by enforcing the gradient of the interpolated function. Such flexible scalar fields contribute to volumetric texturing [17] and meshing [23]. Figure 9 illustrates this application where the user sketched gradient constraints inside the volume. To compute a function, which satisfies these constraints, a linear system is solved, where the unknowns are the scalar field values on the cage. In particular, the following energy is minimized:

where \(\overline{V}\) is a set of points where hard constraints are applied on function values, Δf i denotes the cotangent Laplacian of the function at the vertex c i of the cage, and \(\overline{G}\) is the set of points where the gradient constraints are specified. Such an optimization procedure generates a smooth function on the cage (by minimizing its Laplacian) as well as in the interior volume (thanks to the MVC) with enforced gradient constraints. As shown in Fig. 9, the gradient constraints enable interacting with the shape and the velocity of the level sets of the designed function.

Flexible volumetric scalar field design with MVC gradient constraints. Left: Scalar constraints (spheres in the cage). Right: Gradient constraints (arrows in the cage). Blue and red colors respectively correspond to low and high value/gradient. In contrast to simple scalar constraints (left), gradient constraints (right) enable to intuitively interact with the shape and the velocity of the level lines

8.3 Implicit cage manipulation with variational MVC

As shown in [2], intuitive, low distortion, volumetric deformations can be obtained through a variational framework. In this context, the space of allowed transformations is explicitly described and the cage deformation is automatically optimized to satisfy positional constraints, while respecting the allowed transformations.

Since our derivation enables to express the Jacobian and Hessian of the transformation at any point in space as a linear combination of the cage vertices, the user can specify rigidity constraints (by minimizing the norm of the Hessian) or rotational constraints (by setting the Jacobian to the corresponding value).

The solution to this optimization problem is a 3D field \(f : \mathbb{R}^{3} \rightarrow \mathbb{R}^{3}\), defined everywhere in space using MVC, which interpolates the transformation of the cage vertices.

Let \(\overline{P}\) be the set of positional constraints of the transformation (\(\forall v_{i} \in \overline{P} , f(v_{i}) = \overline{v_{i}}\)), \(\overline{J}\) the set of Jacobian constraints (\(\forall v_{i} \in \overline{J} , Jf(v_{i}) = \overline{J_{i}}\)), and \(\overline{H}\) the set of Hessian constraints (\(\forall v_{i} \in \overline{H} , Hf_{x}(v_{i}) = Hf_{y}(v_{i}) = Hf_{z}(v_{i}) = 0_{3}\)). The solution is given by minimizing the following energy:

where w P,w J, and w H are weights for the positional, Jacobian, and Hessian constraints, respectively. Similarly to [2], the transformation can be constrained locally to be a pure rotation. Then, in prescribed locations, the following property should hold:

Note that the actual values of these Jacobian constraints are now unknowns, which can be obtained through an iterative optimization, as described in [2]. Due to the nonlocal nature of mean value coordinates (in comparison to Green coordinates), we constrain pure rotations to a subset of the enclosed surface vertices (blue spheres in Figs. 1 and 10) instead of constraining them to the medial axis.

Implicit cage manipulation with variational MVC. Red points indicate positional constraints; blue points indicate unknown rotational constraints. The quality of the produced cages allows the user to edit small details manually by switching from an implicit manipulation to an explicit manipulation. The same cannot be done with a Green coordinates solver

Figures 1 and 10 illustrate the algorithm, where the input surface is shown on the left in its enclosing cage. In these examples, rotational constraints have been distributed evenly on the surface (blue points) and only 14 positional constraints have been specified and edited by the user. As showcased in the accompanying video, the interactions required by our system are limited and intuitive and our resolution of this optimization process is fast enough to provide interactive feedback despite a CPU-only implementation.

Figure 11 shows a comparison with the results one can obtain using a finite differences scheme in the context of shape deformation. Using finite differences requires to tune the size of the stencil used for computation of the MVC derivatives case by case, and it can be difficult to set it up correctly, resulting in poor reconstructions.

9 Discussion

Other techniques have been proposed in the past for as-rigid-as-possible (ARAP) cage-driven shape deformations. For instance, Borosán et al. [3] presented a technique which solves for ARAP transformations on the cage, while interpolating the results in the interior with MVC. However, as discussed earlier, MVC coordinates exhibit a very global behavior. Thus, ARAP transformations on the cage do not necessarily imply ARAP transformations in the interior. Reciprocally, it is often necessary to generate non-ARAP transformations of the cage in order to yield ARAP transformations in the interior. Instead, our technique enforces ARAP constraints directly on the enclosed shape.

Variational Harmonic Maps (VHM) [2] use Green coordinates as the underlying machinery for deforming shapes in an implicit fashion. Note, however, that the triangle normals are unknowns of the system in VHM; they can therefore take values arbitrarily far from the actual normals dictated by the normalization of the Euclidean cross product of face edges. Hence, the cages generated by this technique cannot be exploited in a consistent manner for post-processing tasks. For instance, loading these cages in a modeling software supporting Green coordinates would fail to correctly reconstruct the enclosed shape if the traditional Euclidean normals were used. Even if the normal solutions provided by the VHM system were used for this initial reconstruction, it would be not clear how to update them consistently given some explicit user deformation of the cage. The same remark goes for other post-processing tasks, such as cage-driven shape interpolation, for animation generation based on key-frames provided by implicit cage manipulation.

On the contrary, our technique (variational MVC) does not suffer from this drawback as only cage vertex positions are unknowns. Thus, the cages produced by our algorithm can be manipulated and reused directly and consistently with existing software supporting MVC based deformations in various post-processing tasks. Also, as demonstrated in the accompanying video, our technique allows the user to switch at any time from implicit to explicit cage manipulation for small detail tuning, which cannot be done with a solver based on Green coordinates. Note, however, that it is not clear how to go from an explicit manipulation to an implicit manipulation, as the constraints enforced by our system are not respected when moving each cage’s vertex independently from the others. Thus, implicit manipulation of the cage using our system can only be done before an explicit manipulation, or this editing phase will be discarded by the system.

10 Conclusion and future work

In this paper, we have presented the closed form expressions of the derivatives of mean value coordinates for piecewise linear cages, both in 2D and 3D. To our knowledge, this is the first work that provides derivatives of interpolant barycentric coordinates, which can be used for interpolation of arbitrary functions prescribed on cage vertices. Similar formulae have been proposed for Green coordinates already, but they are limited to the context of shape deformation, and they are not an interpolant. A full numerical analysis of the derivation has been carried out and both its accuracy and reliability has been demonstrated experimentally. Furthermore, applications involving optimization problems benefiting from MVC derivative constraint enforcement have been presented and the utility of our contribution has been demonstrated.

In future work, we would like to investigate the possibility of expressing derivatives for Positive Mean Value Coordinates (PMVC) [19]. The possible negativity of MVC coordinates has often been discussed as a drawback in certain contexts. Note however, that this particular property makes them the only barycentric coordinates, which allow the definition of coordinates outside of the cage in a straightforward manner. Nevertheless, PMVC can overcome this possible drawback, by only taking into consideration the cage vertices, which are visible from the point under evaluation, which can be done very efficiently on the GPU, using the rasterization hardware machinery. However, these coordinates are not smooth since the visibility function is not smooth either. It would be interesting to study in practice how reliable the MVC derivatives can be when restricted to visibility dependant sub-cages, in order to mimic the behavior observed with PMVC.

References

Babuska, I., Oden, J.: Verification and validation in computational engineering and science: basic concepts. In: Computer Methods in Applied Mechanics and Engineering, pp. 4057–4066 (2004)

Ben-Chen, M., Weber, O., Gotsman, C.: Variational harmonic maps for space deformation. ACM Trans. Graph. (2009). doi:10.1145/153126.1531340, 1–11

Borosán, P., Howard, R., Zhang, S., Nealen, A.: Hybrid mesh editing. In: Proc. of Eurographics (2010)

Loop, C.T., DeRose, T.D.: A mulisided generalization of Bézier surfaces. ACM Trans. Graph. 8, 204–234 (1989)

Wachspress, E.L.: A Rational Finite Element Basis. Academic Press, New York (1975)

Etiene, T., Scheiddeger, C., Nonato, L., Kirby, R., Silva, C.: Verifiable visualization for isosurface extraction. IEEE Trans. Vis. Comput. Graph. 15, 1227–1234 (2009)

Etiene, T., Nonato, L., Scheiddeger, C., Tierny, J., Peters, T.J., Pascucci, V., Kirby, R., Silva, C.: Topology verification for isosurface extraction. IEEE Trans. Vis. Comput. Graph. (2011). doi:10.1109/TVCG.2011.109

Flannery, B.P., Press, W.H., Teukolsky, S.A., Vetterling, W.: Numerical Recipes in C. Press Syndicate of the University of Cambridge, New York (1992)

Floater, M.: Parameterization and smooth approximation of surface triangulations. Comput. Aided Geom. Des. 14, 231–250 (1997)

Floater, M.: Parametric tilings and scattered data approximation. Int. J. Shape Model. 4, 165–182 (1998)

Floater, M.: Mean value coordinates. Comput. Aided Geom. Des. 20, 19–27 (2003)

Floater, M.S., Kos, G., Reimers, M.: Mean value coordinates in 3D. Comput. Aided Geom. Des. 22, 623–631 (2005)

Fornberg, B.: Numerical differentiation of analytic functions. ACM Trans. Math. Softw. 7(4), 512–526 (1981)

Hormann, K., Floater, M.: Mean value coordinates for arbitrary planar polygons. ACM Trans. Graph. 25, 1424–1441 (2006)

Huang, J., Shi, X., Liu, X., Zhou, K., Wei, L.Y., Teng, S.H., Bao, H., Guo, B., Shum, H.Y.: Subspace gradient domain mesh deformation. ACM Trans. Graph. 25, 1126–1134 (2006)

Joshi, P., Meyer, M., DeRose, T., Green, B., Sanocki, T.: Harmonic coordinates for character articulation. ACM Trans. Graph. 26 (2007)

Ju, T., Schaefer, S., Warren, J.: Mean value coordinates for closed triangular meshes. ACM Trans. Graph. 24(3), 561–566 (2005)

Langer, T., Belyaev, A., Seidel, H.P.: Spherical barycentric coordinates. In: Proc. of Symposium on Geometry Processing, pp. 81–88 (2006)

Lipman, Y., Kopf, J., Cohen-Or, D., Levin, D.: Gpu-assisted positive mean value coordinates for mesh deformation. In: Symposium on Geometry Processing, pp. 117–123 (2007)

Lipman, Y., Levin, D., Cohen-Or, D.: Green coordinates. ACM Trans. Graph. 27(3), 1–10 (2008)

Malsch, E., Dasgupta, G.: Algebraic construction of smooth interpolants on polygonal domains. In: Proc. of International Mathematica Symposium (2003)

Meyer, M., Lee, H., Barr, A., Desbrun, M.: Generalized barycentric coordinates for irregular polygons. J. Graph. Tools 7, 13–22 (2002)

Nieser, M., Reitebuch, U., Polthier, K.: CubeCover—parameterization of 3D volumes. Comput. Graph. Forum 30, 1397–1406 (2011)

Squire, W., Trapp, G.: Using complex variables to estimate derivatives of real functions. SIAM Rev. 40(1), 110–112 (1998)

Thiery, J.M.: MVC derivatives C++ implementation. (2013). http://sourceforge.net/projects/meanvaluecoordinatesderivs/files/latest/download?source=files

Urago, M.: Analytical integrals of fundamental solution of three-dimensional Laplace equation and their gradients. Trans. Jpn. Soc. Mech. Eng. C 66, 254–261 (2000)

Warren, J.: Barycentric coordinates for convex polytopes. Adv. Comput. Math. 6, 97–108 (1996)

Warren, J., Schaefer, S., Hirani, A., Desbrun, M.: Barycentric coordinates for convex sets. Adv. Comput. Math. 27, 319–338 (2007)

Author information

Authors and Affiliations

Corresponding author

Electronic Supplementary Material

Rights and permissions

About this article

Cite this article

Thiery, JM., Tierny, J. & Boubekeur, T. Jacobians and Hessians of mean value coordinates for closed triangular meshes. Vis Comput 30, 981–995 (2014). https://doi.org/10.1007/s00371-013-0889-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-013-0889-y