Abstract

Evaporation is a key component of the hydrological cycle and one of the most critical parameters in hydrological, agricultural, and meteorological studies, especially in arid and semi-arid regions. Estimating evaporation contributes significantly to studies of water balance, the management and design of irrigation systems, the estimation of crop yield, and the management of water resources. In the present study, an artificial neural network (ANN) and its hybrids with Harris Hawks Optimization (HHO), the Whale Optimization Algorithm (WOA), and Particle Swarm Optimization (PSO) were used to estimate the daily evaporation from the reservoir of the Qaleh Chay Ajab Shir dam. For this purpose, the parameters that affect the evaporation process were introduced to each model in the form of different input patterns, and the evaporation from the reservoir was taken as the output parameter. The results showed that the best model for the ANN is the P3 model, which has three input parameters (minimum air temperature and the daily evaporation of the previous 1 and 2 days), with NASH of 0.89, RMSE of 1.5 mm/day, and MAE of 1.1 mm/day; this model was then optimized by applying the hybrid algorithms to train the neural network. All three hybrid models performed well in estimating the daily evaporation, with correlation coefficients in the range of 0.95–0.99. Based on the evaluation criteria, ANN–HHO performs better than the other two algorithms in estimating daily evaporation. The values of NASH, RMSE and MAE for the selected pattern on the test data are 0.943, 0.908 mm/day and 0.736 mm/day, respectively. For further analysis, a Taylor diagram is used (RMSD = 0.98, CC = 0.97, STD = 4 for ANN–HHO). This diagram also showed that the ANN–HHO model provides acceptable performance compared with the other models. Considering the promising results of the models in predicting the daily evaporation from the dam, it is suggested to use the present approach for assessing the groundwater balance and designing irrigation systems.

1 Introduction

Evaporation from land, soil, lakes and water reservoirs is one of the most important processes in meteorology and hydrology. Every year, millions of cubic meters of freshwater collected in dam reservoirs evaporate, and the salts left behind reduce the water quality [7]. Calculating the amount of water lost to evaporation is therefore important for the study and management of water resources at the agricultural, regional, and watershed scales. In many areas with limited water resources, evaporation calculations are necessary to plan and manage irrigation practices [10, 25, 52]. One of the standard methods of measuring evaporation is the class A evaporation pan, which is also used in meteorological stations. Installing an evaporation pan involves some limitations and practical problems that reduce the accuracy of its estimates. Hence, it is necessary to provide methods for estimating evaporation from other meteorological variables. According to previous research, there is a non-linear and relatively complex relationship between evaporation and meteorological parameters. Accordingly, without taking this non-linear relationship into account, it is not possible to obtain an appropriate approximation of evaporation. Models with strong problem-solving capabilities, such as machine learning models, are therefore required to estimate evaporation and to capture the relationship between the meteorological parameters and evaporation [35].

The rapid development of computing technologies since the 1980s has led researchers to adopt soft computing techniques to model time-series data in a variety of disciplines, and hydrological sciences are no exception. Regarding evaporation modeling, numerous studies have employed artificial intelligence (AI) methods, including artificial neural networks (ANNs), genetic algorithms (GA), support vector regression (SVR), and adaptive neuro-fuzzy inference systems (ANFISs), to forecast evaporation rates [21, 28, 33]. ANNs in particular have received extensive attention from researchers. AI methods have been successfully applied to evaporation modeling in recent decades [4, 8, 18, 24, 26, 29, 34, 36, 42, 43, 45, 49, 50, 55, 58, 63].

Bruton et al. [9] used artificial neural network (ANN) models to estimate daily pan evaporation (Ep). Weather data from Rome, Plains, and Watkinsville, Georgia, consisting of 2044 daily records from 1992 to 1996, were used to develop the pan evaporation models. Sudheer et al. [53] studied the prediction of Ep from minimum climatic data using the ANN method. The results revealed that Ep could be reasonably predicted by the ANN method from air temperature data only. Kisi [35] compared the accuracy of ANN and neuro-fuzzy (NF) methods in estimating Ep from various combinations of pressure, solar radiation, daily air temperature, humidity, and wind speed. He showed that the NF method predicts Ep better than the ANN when using the available climatic data. Dogan et al. [13] compared the accuracy of feed forward neural network (FFNN) and radial basis neural network (RBNN) techniques in estimating the daily pan evaporation of Lake Sapanca. The results showed that the FFNN model performed better than the RBNN. Piri et al. [46] applied an ANN model to estimate Ep in a hot and dry region and found that the ANN method yielded better results in the selected region. Kisi [36] used RBNN, multi-layer perceptron (MLP), and generalized regression neural network methods to predict daily Ep. Based on his results, the RBNN and MLP techniques could be successfully applied to model the Ep process from the available climatic data. Kim et al. [30] investigated the accuracy of cascade correlation neural networks (CCNN) and MLP in estimating daily evaporation for inland and coastal stations in the Republic of Korea. They found that the CCNN model performed better than the MLP during the test period for both homogeneous and nonhomogeneous weather stations. Malik and Kumar [38] compared the accuracy of multi-linear regression (MLR), ANN, and co-active adaptive neuro-fuzzy inference system (CANFIS) techniques in estimating daily evaporation at Pantnagar, located at the foothills of the Himalayas in the Uttarakhand State of India. They indicated that the performance of the ANN model was generally superior to that of the CANFIS and MLR techniques.

Wang et al. [59] investigated different methods of estimating the evaporation from a small high-elevation lake on the Tibetan Plateau (TP). Their results were evaluated against EC observation-based reference data sets. Wang et al. [60] evaluated the acceleration of global lake evaporation caused by changes in surface energy allocation in a warmer climate. They simulated lake surface fluxes with a numerical model driven by input data from a high-emission climate change scenario. The results showed that lake evaporation increased by 16% by the end of the century, despite little change in incoming solar radiation at the surface. Seifi and Riahi [51] applied the least square support vector machine (LS-SVM), ANN and ANFIS models to estimate daily evapotranspiration in Iran. The results showed that air temperature and wind speed were the most effective climatic variables, and that the LS-SVM model yielded better results than the ANN and ANFIS methods. Ashrafzadeh et al. [5] modeled the evaporation of free water in northern Iran using ANN and the ANN-krill herd optimization algorithm (ANN–KHA). They used the GT to select the effective input data and found that the ANN–KHA model performed better than the ANN model. Guo et al. [19] investigated the evaporation over Siling Co Lake on the Tibetan Plateau by applying a single-layer lake evaporation model and found that it could accurately simulate the daily evaporation. Nourani et al. [44] used different AI techniques, including Feed Forward Neural Network (FFNN), Adaptive Neuro Fuzzy Inference System (ANFIS), and Support Vector Regression (SVR), empirical models, including Hargreaves and Samani (HS), Modified Hargreaves and Samani (MHS), Makkink (MK), and Ritchie (RT), and conventional Multilinear Regression (MLR) to model Reference Evapotranspiration (ET0) at fourteen stations from several climatic regions in Turkey, Cyprus, Iraq, Iran and Libya. They showed that, although the empirical and MLR models could give useful results, the AI-based models were superior in performance to the other models, and that a promising improvement in ET0 modeling could be achieved by ensemble modeling.

However, it must be taken into account that MLR models are simpler and more cost-effective to use than machine learning models. Even though machine learning models gave better results than the MLR models, they require a specialist to run and are not easy to operate.

Optimization is an important subject with many applications, and optimization algorithms are diverse, with a wide range of successful uses [15]. Among these algorithms, modern metaheuristics are becoming increasingly popular, leading to a new branch of optimization called metaheuristic optimization [57]. Most metaheuristic algorithms are nature-inspired [14, 54, 61], from simulated annealing [32] to ant colony optimization [14], and from particle swarm optimization [11] to cuckoo search [62].

The positive performance of metaheuristic algorithms has led researchers to use them to improve the predictions of machine learning models. For example, Najafzadeh and Tafarojnoruz used the PSO algorithm to improve ANFIS and GMDH in predicting the longitudinal dispersion coefficient of rivers. Samadianfard et al. [47] applied the WOA algorithm to improve ANN in predicting wind speed, and Ghorbani et al. [17] used PSO to improve ANN in predicting pan evaporation. The HHO algorithm was used by Azar et al. [6] to improve ANFIS in predicting the longitudinal dispersion coefficient of rivers, and the HHO, PSO, and GWO algorithms were used by Milan et al. [39] to improve ANFIS in predicting optimal groundwater abstraction. Ghorbani et al. [17] used the Firefly Algorithm to improve ANN in predicting pan evaporation, and Allawi et al. [3] used the GA algorithm to improve SVR in monthly reservoir evaporation prediction. Tikhamarine et al. [56] applied the PSO and WOA algorithms to improve ANN in monthly reference evapotranspiration prediction, and Adib and Mahmoodi [1] used the GA algorithm to improve ANN in suspended sediment load prediction. These studies show that such algorithms can improve the accuracy of the ANN, ANFIS and SVR machine learning models. Therefore, in the present study, metaheuristic algorithms (HHO, PSO and WOA) have been used with the aim of overcoming the limitations of ANN. In addition, previous research with these three metaheuristic algorithms has shown that they are well able to train ANNs [12, 20, 27, 41, 48, 64].

In the study of Biazar et al. [7], the PA approach was applied to determine the evaporation rate from saline water, and the results were compared with those of the GT and EnT methods. The results revealed that ANN-GT was a powerful model for estimating evaporation; however, it failed to select the optimal input combinations with the lowest uncertainties.

Previous studies have revealed that, although black box models such as ANN can lead to acceptable results, it is well known that in problems with high uncertainty these models may give inconsistent results.

Given the errors that arise from uncertainty when AI-based models are used for prediction, hybrid models obtained by combining AI models with evolutionary algorithms can give higher accuracy in the final results. Makridakis et al. [37] showed that the use of hybrid models instead of single models improves the prediction results to a great extent. In fact, the concept behind hybrid models is to use the unique features of each model to extract the specific properties of the studied parameter [31]. Based on the reviewed studies, it was concluded that the combination of an AI model with these optimization algorithms has not been used to predict the evaporation. Hence, the neural network (ANN) was selected as the base model, and three optimization algorithms, namely particle swarm optimization (PSO), the whale optimization algorithm (WOA), and Harris hawks optimization (HHO), were used to optimize the parameters of the ANN model. The aim of this study was to predict the daily evaporation from the reservoir lake of the Qaleh Chay Ajabshir dam in East Azarbaijan province, Iran. First, the sensitivity of the models to environmental parameters such as temperature, humidity and pressure was evaluated. Then, after the most important parameters affecting the output were determined, the efficiency of the proposed models in predicting the daily evaporation was evaluated according to various criteria.

2 Materials and methods

2.1 Study area

Ajab Shir is located to the east of Lake Urmia, on the western slope of the Sahand Mountains in East Azerbaijan Province, at an average altitude of about 1300 m above sea level. The study area lies between longitudes 45° 49′ and 46° E and latitudes 37° 24′ and 37° 31′ N. The location of the study area is shown in Fig. 1. In this area, the Qaleh Chay dam, with a total volume of 40 million cubic meters, has a catchment area of 250 square kilometers. The Qaleh Chay network, served by one of the most important dams in northwestern Iran, covers about 7000 hectares of land in the region. In recent years, owing to the excessive use of groundwater resources, the aquifer in this area has suffered a severe decline, so experts have recommended the optimal combined use of surface and groundwater resources. However, before an integrated operation method is applied, it is necessary to determine the river inflow to the reservoir and the amount of water lost through evaporation, so that the necessary measures can be taken to reduce evaporation. Measuring evaporation is difficult because of the lack of dedicated devices and equipment, so predicting the evaporation parameter will help water management.

2.2 Used data

The most important point in using machine learning models is to select appropriate input parameters that affect the output and vary with time. For this purpose, to predict daily evaporation (EVD), this research used the maximum and minimum air temperature (Tmax, Tmin), the inflow to the dam reservoir (Qin), wind speed (WS), humidity (H), precipitation, and the evaporation of 1 and 2 days before (EVD(N-1), EVD(N-2)). The statistical characteristics of each input and output parameter are given in Table 1. According to Table 1, the evaporation varies in the range of 0–17 mm per day. The lowest evaporation occurs in the cold months of the year, such as January and February, and the highest in the hot months. Daily rainfall during the study period varies from zero to 37 mm per day, and when the rainfall during a day increases, the inflow into the reservoir naturally increases. The maximum wind speed is about 8.6 m/s, whereas the average daily wind speed is about 1.97 m/s.
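The lagged inputs EVD(N-1) and EVD(N-2) can be built directly from the evaporation series. The following is a minimal sketch in plain Python; the function name `make_lagged_samples` and the sample values are hypothetical and are not taken from the study.

```python
def make_lagged_samples(series, lags=(1, 2)):
    """Pair each value of a series with its values at the given lags.

    Returns (inputs, targets): inputs[k] holds [EVD(N-1), EVD(N-2)] for the
    day whose observed value is targets[k].
    """
    max_lag = max(lags)
    inputs, targets = [], []
    # The first max_lag days have no complete history, so they are skipped.
    for n in range(max_lag, len(series)):
        inputs.append([series[n - lag] for lag in lags])
        targets.append(series[n])
    return inputs, targets


# Hypothetical daily evaporation values in mm/day.
evd = [2.0, 3.0, 5.0, 4.0, 6.0]
X, y = make_lagged_samples(evd)
```

Each row of `X` then plays the role of one input pattern built from past evaporation only, and further columns (temperature, inflow, and so on) could be appended to it in the same way.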

The time series of the input and output parameters during the study period are shown in Fig. 2. The purpose of plotting these time series is to compare the changes in the input parameters with those in the output parameter. A parameter that behaves and changes in the same way as the output parameter is expected to have the greatest effect on the output value, so comparing the variations with the output parameter is useful. The changes in maximum and minimum air temperature and in the inflow are similar to the changes in evaporation. For example, when more evaporation occurred, the daily temperature and the inflow to the reservoir were at their maximum levels. In contrast, evaporation and humidity change in opposite directions: when there is more evaporation, the minimum humidity is recorded, and vice versa. Precipitation behaves only slightly like evaporation, and wind speed shows an almost constant trend. In general, the considered parameters vary with time, and their changes are similar to the evaporation changes, opposite to them, or independent of them. Combining these parameters as inputs can improve the accuracy of the model, so it is necessary to consider different combinations of input parameters.

In EVD models, the input and output variables are usually preprocessed by scaling them between 0 and 1 to remove their dimensions and to ensure that all variables receive equal attention during the training of the models. The most common method, a simple linear mapping of the variables, is [45]:

\[ r_{i} = \frac{R_{i} - R_{\min }}{R_{\max } - R_{\min }} \tag{1} \]

where \(r_{i}\) is the normalized value of the variable, \(R_{i}\) is the actual value, and \(R_{\min }\) and \(R_{\max }\) are the minimum and maximum of all the used values, respectively.

Normalizing the data before making EVD predictions has two primary advantages: it prevents attributes with larger numeric ranges from dominating those with smaller ranges, and it avoids numerical difficulties during calculation.
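As an illustration of this preprocessing step, the following sketch scales a series onto [0, 1] and maps it back to the original units. The function names and the sample values are assumptions made for the example; the handling of a constant series is a design choice of the sketch, not something stated in the study.

```python
def min_max_scale(values):
    """Linearly map a sequence of numbers onto the interval [0, 1]."""
    r_min, r_max = min(values), max(values)
    if r_max == r_min:
        # A constant series has no spread to scale; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - r_min) / (r_max - r_min) for v in values]


def min_max_unscale(scaled, r_min, r_max):
    """Invert the mapping to recover values in the original units."""
    return [s * (r_max - r_min) + r_min for s in scaled]


# Hypothetical daily evaporation values in mm/day.
evaporation = [0.0, 4.25, 8.5, 17.0]
scaled = min_max_scale(evaporation)
restored = min_max_unscale(scaled, min(evaporation), max(evaporation))
```

In practice the minimum and maximum would normally be taken from the training data only and reused for the test data, so that no information from the test period leaks into the scaling.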

2.3 Artificial neural network (ANN)

An ANN is a parallel distributed information-processing system that works similarly to biological neural networks [22]. The learning algorithm of the ANN is depicted in Fig. 3. According to the flowchart, after the model inputs are selected, the number of hidden and output layers and their size (the number of neurons) are determined first. The network evaluation criterion is then selected to calculate the error between the observed and predicted values. The ANN determines the weights and biases of the neurons from the training data using one of several training algorithms. This step is repeated until the error between the observed and predicted values falls below a threshold.

Fig. 3 Flowchart of the ANN learning algorithm [2]

2.4 Development of the ANN using evolutionary algorithms

The ANN has several major shortcomings, namely slow training convergence, weaknesses in its training algorithms, and failure to reach the global optimum when finding the weights and biases, especially in large search spaces. Therefore, hybrid methods, which use evolutionary algorithms to optimize the ANN parameters, are required to overcome these flaws.

In this study, several evolutionary algorithms were used alongside the ANN model to improve it. According to the literature, each of these algorithms has unique features that can significantly improve the traditional ANN model. The structure of the hybrid ANN–EAO models used in this study to predict the EVD is depicted in Fig. 4. First, scenarios with different variables (maximum and minimum air temperature, inflow to the dam reservoir, wind speed, humidity, precipitation, and the evaporation of 1 and 2 days before) are given to the model as input, and then the type of the fuzzy function and the ANN structure are determined. The ANN is then improved with the evolutionary algorithms to achieve better results. In this structure, the objective function is to minimize the error of the predicted values. Finally, the EVD is predicted by the optimized model.

ANN is trained by PSO, WOA, and HHO algorithms. Each of the abovementioned methods is described in this section.
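To make the coupling concrete: a metaheuristic treats all ANN weights and biases as one flat vector and scores each candidate vector by the prediction error it produces. The sketch below shows one way this objective could be written. The single tanh hidden layer, the parameter layout, and the names `n_params`, `predict` and `rmse_objective` are assumptions made for illustration; the study does not specify these details here.

```python
import math


def n_params(n_in, n_hidden):
    """Number of weights and biases in a one-hidden-layer network."""
    return n_in * n_hidden + n_hidden + n_hidden + 1


def predict(params, x, n_hidden):
    """Forward pass: tanh hidden layer followed by a linear output neuron."""
    n_in = len(x)
    idx = 0
    pre_activations = []
    for _ in range(n_hidden):
        weights = params[idx:idx + n_in]      # input weights of one neuron
        idx += n_in
        pre_activations.append(sum(w * xi for w, xi in zip(weights, x)))
    biases = params[idx:idx + n_hidden]       # hidden-layer biases
    idx += n_hidden
    hidden = [math.tanh(p + b) for p, b in zip(pre_activations, biases)]
    out_weights = params[idx:idx + n_hidden]  # output-layer weights
    idx += n_hidden
    return sum(w * h for w, h in zip(out_weights, hidden)) + params[idx]


def rmse_objective(params, inputs, targets, n_hidden):
    """Error that an optimizer such as PSO, WOA or HHO would minimize."""
    errors = [predict(params, x, n_hidden) - t for x, t in zip(inputs, targets)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical scaled inputs (two features) and targets.
inputs = [[0.1, 0.2], [0.4, 0.3]]
targets = [3.0, 4.0]
zero_vector = [0.0] * n_params(2, 3)
baseline_error = rmse_objective(zero_vector, inputs, targets, n_hidden=3)
```

Each particle, whale, or hawk position is then simply a list of `n_params(n_in, n_hidden)` numbers, and its fitness is the value returned by `rmse_objective`.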

2.5 Particle swarm optimization (PSO)

PSO is one of the nature-inspired optimization methods and was first introduced by Eberhart and Kennedy [16]. The PSO algorithm starts by creating a random population. Each member of the population (a particle) is a different set of decision variables whose optimal values are sought, and each particle represents a vector in the problem-solving space. In addition to the position vector, the algorithm includes a velocity vector, which makes the particles change their positions in the search space. The velocity is guided by two vectors called p and pg: p is the best position that a particle has ever reached, and pg is the best position that any particle in its neighborhood has ever reached. In this algorithm, each particle provides a solution in each iteration. In a D-dimensional search space, the position of particle i is represented by a D-dimensional vector Xi = (Xi1, Xi2, …, XiD), and its velocity by a D-dimensional vector Vi = (Vi1, Vi2, …, ViD). Finally, the population moves toward the optimum point using Eqs. (2) and (3):

\[ V_{i}^{N+1} = \omega V_{i}^{N} + c_{1} r_{1} \left( p_{i} - X_{i}^{N} \right) + c_{2} r_{2} \left( p_{g} - X_{i}^{N} \right) \tag{2} \]

\[ X_{i}^{N+1} = X_{i}^{N} + V_{i}^{N+1} \tag{3} \]

where ω is the shrinkage factor used to control the convergence rate, r1 and r2 are uniformly distributed random numbers between 0 and 1, N is the iteration number, and c1 and c2 are the acceleration coefficients that weight the attraction toward the best solution obtained by the particle itself and toward the best solution identified by the whole population, respectively. In this study, to train the ANN using the PSO algorithm, a set of random vectors with initial positions Xi was created. The ANN was then evaluated with the particle positions as its parameters, and the prediction error was taken as the PSO objective function. The particles were then moved to find better positions, and the new parameters were used to construct the ANN. This process was repeated until convergence to the least prediction error was achieved.
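The loop described above can be sketched as follows. This is a minimal global-best PSO written under stated assumptions: the whole population is treated as one neighborhood, and the values of `omega`, `c1`, `c2`, the bounds, and the function name `pso_minimize` are illustrative defaults rather than the settings used in the study. A simple sphere function stands in for the ANN error.

```python
import random


def pso_minimize(objective, dim, n_particles=30, n_iter=200,
                 omega=0.7, c1=1.5, c2=1.5, bounds=(-5.0, 5.0), seed=0):
    """Minimize `objective` over a box with a basic global-best PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # p: best position of each particle
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]  # pg: best position of the swarm

    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + pull toward p + pull toward pg.
                vel[i][d] = (omega * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Position update, kept inside the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


solution, error = pso_minimize(sphere, dim=3)
```

To train a network, `sphere` would be replaced by a function that builds the ANN from the particle position and returns its prediction error on the training data.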

2.6 Whale Optimization Algorithm (WOA)

WOA is a nature-inspired algorithm proposed by Mirjalili and Lewis [40] that mimics the bubble-net hunting strategy of humpback whales. Each whale releases air bubbles under the sea, which create walls of rising air in the water. The krill and small fish inside the bubble wall, fearing that they will be trapped, move toward the center of the bubble circle, where the whale hunts and eats a large number of them (Fig. 5).

Fig. 5 Whale optimization algorithm [40]

The whale can detect the position of the prey and thus surround it. However, because the optimal position in the search space is not known in advance, the algorithm assumes that the current best solution is the target prey or close to it. After this point is determined, the search for other optimal points and the position updates continue, as indicated by Eqs. (4) and (5):

\[ D = \left| C \cdot X^{*}(t) - X(t) \right| \tag{4} \]

\[ X(t+1) = X^{*}(t) - A \cdot D \tag{5} \]

where t is the current iteration, C and A are the coefficient vectors, X* is the best position vector found so far, and X is the position vector. The vectors A and C are calculated as follows [40]:

\[ A = 2a \cdot r - a \tag{6} \]

\[ C = 2r \tag{7} \]

where the vector a is used in both the exploration and exploitation phases and decreases linearly from 2 to 0 over the iterations, and r is a random vector in the range [0, 1].
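A minimal sketch of the encircling update for a single whale is given below, following the commonly published WOA form with A = 2a·r − a and C = 2r. The function names and the component-wise drawing of the random numbers are assumptions of the sketch; the spiral (bubble-net) update and the random-search branch of the full algorithm are omitted.

```python
import random


def a_schedule(t, t_max):
    """Linearly decrease the control parameter a from 2 to 0."""
    return 2.0 * (1.0 - t / t_max)


def woa_encircle_step(x, x_best, a, rng):
    """Move one whale toward (or around) the best position found so far."""
    new_x = []
    for xi, bi in zip(x, x_best):
        coef_a = 2.0 * a * rng.random() - a   # component of vector A
        coef_c = 2.0 * rng.random()           # component of vector C
        dist = abs(coef_c * bi - xi)          # component of D
        new_x.append(bi - coef_a * dist)
    return new_x


rng = random.Random(1)
whale = [1.0, -2.0]
best = [0.5, 0.5]
early = woa_encircle_step(whale, best, a_schedule(0, 10), rng)   # a = 2
final = woa_encircle_step(whale, best, a_schedule(10, 10), rng)  # a = 0
```

When a reaches 0, A vanishes and the whale lands exactly on the best position, which is why a shrinking a shifts the search from exploration to exploitation.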

2.7 Harris Hawks Optimization (HHO)

Like many other evolutionary algorithms, HHO is a nature-inspired and population-based algorithm, which is based on rabbit hunting by Harris hawks [23]. It includes the following steps:

2.7.1 Exploration phase

In the HHO method, the Harris hawks are the candidate solutions. They perch randomly at some locations and wait to detect prey based on two strategies. Their position is mathematically expressed as Eq. (8):

\[ X(t+1) = \begin{cases} X_{\mathrm{rand}}(t) - r_{1}\left| X_{\mathrm{rand}}(t) - 2 r_{2} X(t) \right|, & q \ge 0.5 \\ \left( X_{\mathrm{rabbit}}(t) - X_{m}(t) \right) - r_{3}\left( \mathrm{LB} + r_{4}\left( \mathrm{UB} - \mathrm{LB} \right) \right), & q < 0.5 \end{cases} \tag{8} \]

where X(t + 1) is the position vector of the hawks in the next iteration, Xrabbit(t) is the position of the rabbit, X(t) is the current position vector of the hawks, r1, r2, r3, r4, and q are random numbers between 0 and 1 that are updated in each iteration, LB and UB are the lower and upper bounds of the variables, Xrand(t) is a randomly selected hawk from the current population, and Xm is the average position of the current population of hawks. The average position of the hawks is obtained using the following:

\[ X_{m}(t) = \frac{1}{N} \sum_{i=1}^{N} X_{i}(t) \tag{9} \]

where Xi(t) indicates the location of each hawk in iteration t and N denotes the total number of hawks.

2.7.2 The transition from exploration to exploitation

The energy of the prey decreases considerably during the escape. The energy of the prey is modeled using Eq. (10):

\[ E = 2 E_{0} \left( 1 - \frac{t}{T} \right) \tag{10} \]

where E indicates the escaping energy of the prey, t is the current iteration, T is the maximum number of iterations, and E0 is the initial energy. E0 changes randomly inside the interval (−1, 1) at each iteration.

2.7.3 Exploitation phase

The hawks intensify the besiege process to catch the exhausted prey effortlessly. The E parameter is utilized to model this strategy and enable the HHO to switch between soft and hard besiege processes. In this regard, when |E|≥ 0.5, the soft besiege happens, and when |E|< 0.5, the hard besiege occurs.

2.7.4 Soft besiege

If r ≥ 0.5 and |E|≥ 0.5, the rabbit still has enough energy and tries to escape by some random misleading jumps, but finally, it cannot. During these attempts, the Harris hawks encircle it softly to make the rabbit more exhausted and then perform the surprise pounce. This behavior is modeled by Eqs. (14) and (15):

where ΔX(t) is the difference between the position vector of the rabbit and the current location in iteration t, r5 is a random number between 0 and 1, and Jrabbit represents the random jump strength of the rabbit throughout the escaping procedure.

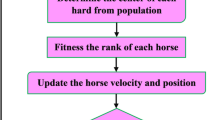

2.7.5 Hard besiege

If r ≥ 0.5 and |E| < 0.5, the prey is exhausted and has low escaping energy. In this case, the Harris hawks encircle the prey tightly and finally perform the surprise pounce (Fig. 6a). In this situation, the current positions are updated using the following equation:

\[ X(t+1) = X_{\mathrm{rabbit}}(t) - E \left| \Delta X(t) \right| \tag{13} \]

Fig. 6 (a) Besieging the prey by Harris hawks, (b) procedure of Harris hawks optimization [23]

2.7.6 Soft besiege with progressive rapid dives

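If r < 0.5 and |E| ≥ 0.5, the rabbit still has enough energy to escape, and a soft besiege is constructed before the surprise pounce. To perform a soft besiege, it is supposed that the hawks can evaluate (decide) their next move based on the following rule:

\[ Y = X_{\mathrm{rabbit}}(t) - E \left| J X_{\mathrm{rabbit}}(t) - X(t) \right| \tag{14} \]

It is further supposed that they dive following Levy flight (LF)-based patterns according to the following rule:

\[ Z = Y + S \times \mathrm{LF}(D) \tag{15} \]

where D is the dimension of the problem, S is a random vector of size 1 × D, and LF is the Levy flight function, which is calculated using Eq. (16):

\[ \mathrm{LF}(x) = 0.01 \times \frac{u \times \sigma }{\left| v \right|^{1/\beta }}, \quad \sigma = \left( \frac{\Gamma (1 + \beta ) \times \sin \left( \frac{\pi \beta }{2} \right)}{\Gamma \left( \frac{1 + \beta }{2} \right) \times \beta \times 2^{\frac{\beta - 1}{2}}} \right)^{1/\beta } \tag{16} \]

where u and v are random values between 0 and 1, and β is a default constant set to 1.5. Hence, Eq. (17) shows the final strategy for updating the positions of the hawks in the soft besiege phase:

\[ X(t+1) = \begin{cases} Y, & \text{if } F(Y) < F(X(t)) \\ Z, & \text{if } F(Z) < F(X(t)) \end{cases} \tag{17} \]

where Y and Z are obtained using Eqs. (14) and (15), respectively, and F is the objective (fitness) function.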
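The Levy flight term used in the dive can be sketched as below, assuming the commonly published HHO form LF = 0.01 × u × σ / |v|^(1/β), with u and v drawn between 0 and 1 as stated above and β = 1.5. The function names `levy_sigma` and `levy_step` are hypothetical.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale factor sigma of the Levy flight formula for a given beta."""
    numerator = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    denominator = (math.gamma((1.0 + beta) / 2.0) * beta
                   * 2.0 ** ((beta - 1.0) / 2.0))
    return (numerator / denominator) ** (1.0 / beta)


def levy_step(dim, beta=1.5, rng=None):
    """Draw one Levy-flight step vector of length `dim`."""
    rng = rng or random.Random()
    sigma = levy_sigma(beta)
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = rng.random()
        while v == 0.0:          # avoid division by zero
            v = rng.random()
        steps.append(0.01 * u * sigma / abs(v) ** (1.0 / beta))
    return steps


step = levy_step(4, rng=random.Random(0))
```

Most components of such a step are small, but an occasional small v produces a long jump, which is what lets the hawks make abrupt dives around the prey.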

2.7.7 Hard besiege with progressive rapid dives

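If r < 0.5 and |E| < 0.5, the rabbit does not have enough energy to escape, and a hard besiege is constructed before the surprise pounce to catch the prey (Fig. 6b). Therefore, the following rule is applied in the hard besiege condition:

\[ X(t+1) = \begin{cases} Y, & \text{if } F(Y) < F(X(t)) \\ Z, & \text{if } F(Z) < F(X(t)) \end{cases} \tag{18} \]

where Y and Z are obtained using the following rules:

\[ Y = X_{\mathrm{rabbit}}(t) - E \left| J X_{\mathrm{rabbit}}(t) - X_{m}(t) \right| \tag{19} \]

\[ Z = Y + S \times \mathrm{LF}(D) \tag{20} \]

where \({X}_{m}(t)\) is obtained using Eq. (9).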
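The switching between the HHO phases can be summarized in a few lines of code. The sketch below assumes the escaping energy E = 2E0(1 − t/T) and the thresholds on r and |E| given in the subsections above; the rule that |E| ≥ 1 triggers exploration comes from the original HHO description [23] and is not stated in this section, and the function names are hypothetical.

```python
def escaping_energy(e0, t, t_max):
    """Escaping energy of the prey at iteration t."""
    return 2.0 * e0 * (1.0 - t / t_max)


def select_strategy(energy, r):
    """Return the name of the HHO update rule for the given energy and r."""
    e = abs(energy)
    if e >= 1.0:
        # Assumption taken from the original HHO paper: high energy means
        # the hawks keep exploring.
        return "exploration"
    if r >= 0.5:
        return "soft besiege" if e >= 0.5 else "hard besiege"
    if e >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"


energy_mid_run = escaping_energy(0.5, 50, 100)
chosen = select_strategy(energy_mid_run, r=0.7)
```

Because the energy envelope shrinks as t approaches T, early iterations are dominated by exploration and late iterations by the besiege strategies.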

2.8 Performance evaluation criteria

To evaluate the accuracy of the proposed models, the root mean square error (RMSE), determination coefficient (R2), Nash Sutcliffe Index (NASH), and mean absolute error (MAE) were selected as evaluation criteria. RMSE is the sample standard deviation of the differences between the predicted and actual values. It is given by

\[ \mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( \mathrm{EV}_{o,i} - \mathrm{EV}_{e,i} \right)^{2}} \]

The mean absolute error (MAE) is the average, over the test sample, of the absolute differences between the predicted and actual values. It is one of a number of ways of comparing forecasts with their eventual outcomes and is defined as

\[ \mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| \mathrm{EV}_{o,i} - \mathrm{EV}_{e,i} \right| \]

The Nash and Sutcliffe (1970) criterion is widely used in hydrological modelling. The Nash–Sutcliffe efficiency (NSE) is calculated as one minus the ratio of the error variance of the modeled time series to the variance of the observed time series. For a perfect model with an estimation error variance equal to zero, the resulting efficiency equals 1 (NSE = 1). Conversely, a model that produces an estimation error variance equal to the variance of the observed time series results in an efficiency of 0 (NSE = 0). In other words, NSE = 0 indicates that the model has the same predictive skill as the mean of the time series in terms of the sum of the squared error:

\[ \mathrm{NSE} = 1 - \frac{\sum_{i=1}^{N} \left( \mathrm{EV}_{o,i} - \mathrm{EV}_{e,i} \right)^{2}}{\sum_{i=1}^{N} \left( \mathrm{EV}_{o,i} - \overline{\mathrm{EV}_{o}} \right)^{2}} \]

where N is the number of data points, \({\mathrm{EV}}_{o}\) is the observed (actual) evaporation value, \({\mathrm{EV}}_{e}\) is the model-simulated value, the subscript i indexes the data points, and \(\overline{{\mathrm{EV} }_{o}}\) and \(\overline{{\mathrm{EV} }_{e}}\) are the averages of all observed and predicted evaporation values, respectively.
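The three error criteria can be computed with a few lines of plain Python. The sketch below uses the standard definitions of RMSE, MAE and the Nash–Sutcliffe efficiency; the function names and the sample values are illustrative only.

```python
import math


def rmse(observed, simulated):
    """Root mean square error between observed and simulated values."""
    n = len(observed)
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(observed, simulated)) / n)


def mae(observed, simulated):
    """Mean absolute error between observed and simulated values."""
    n = len(observed)
    return sum(abs(o - s) for o, s in zip(observed, simulated)) / n


def nse(observed, simulated):
    """Nash-Sutcliffe efficiency: 1 is perfect, 0 matches the observed mean."""
    mean_obs = sum(observed) / len(observed)
    error_ss = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    total_ss = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - error_ss / total_ss


# Hypothetical observed evaporation in mm/day and two candidate predictions.
observed = [1.0, 2.0, 3.0, 4.0]
perfect = [1.0, 2.0, 3.0, 4.0]
mean_only = [2.5, 2.5, 2.5, 2.5]
```

Here `nse(observed, perfect)` gives 1 and `nse(observed, mean_only)` gives 0, which matches the interpretation of the two limiting cases described above.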

3 Results and discussion

3.1 Sensitivity analysis results

In the present study, the ANN algorithm and its hybrid algorithms, namely ANN–PSO, ANN–WOA, and ANN–HHO, were used to predict the daily evaporation from the reservoir of the Qaleh Chay dam. For this purpose, various models with different combinations of the parameters that affect evaporation were developed, and the correlation of each parameter with the evaporation value (output) was calculated. Table 2 presents the correlation coefficient of each parameter. The parameter with the highest correlation coefficient was used as the first model. The second model was developed by adding the parameter with the second-highest correlation coefficient to the first model, and the other models were developed in the same way.

3.2 Proposed scenarios

The input models were created according to the correlation coefficient between the input parameters and the output parameter. Based on Table 2, the highest correlation with the output, 0.96, belongs to the evaporation of 1 day before. Therefore, the first input model (P1) consists of the evaporation of 1 day before (Table 3). The evaporation of 2 days before, with a correlation coefficient of 0.94, is the second most effective parameter. Therefore, the second input pattern (P2) consists of the evaporation of 1 and 2 days before. Because the correlation coefficients of the minimum and maximum air temperature are close to each other, a separate input model was developed for each of them in combination with the second model. Similarly, based on the correlation coefficients between the inputs and the output, nine scenarios were developed, which are shown in Table 3.
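The idea of building input patterns from a correlation ranking can be sketched as follows. This simplified version ranks candidate inputs by the absolute value of their Pearson correlation with the target and then adds them one at a time; it does not reproduce the exact nine patterns of Table 3 (for instance, the separate patterns for minimum and maximum air temperature). All names and data values are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def ranked_patterns(candidates, target):
    """Build cumulative input patterns ordered by |correlation| with target."""
    ranked = sorted(candidates,
                    key=lambda name: abs(pearson(candidates[name], target)),
                    reverse=True)
    return [ranked[:k] for k in range(1, len(ranked) + 1)]


# Hypothetical series: today's evaporation and three candidate inputs.
target = [1.0, 2.0, 3.0, 4.0, 5.0]
candidates = {
    "EVD(N-1)": [1.1, 2.0, 3.2, 3.9, 5.1],   # strongly positive
    "H": [5.0, 3.0, 4.0, 2.0, 1.0],          # strongly negative
    "WS": [2.0, 1.0, 2.0, 1.0, 2.0],         # uncorrelated
}
patterns = ranked_patterns(candidates, target)
```

Using the absolute value keeps strongly negative predictors such as humidity near the top of the ranking, which is consistent with the inverse relationship between humidity and evaporation noted in Sect. 2.2.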

To fix the value of each algorithm parameter, the algorithm must be run several times with different parameter values, and the values that give the most desirable result are selected as the most appropriate for that algorithm. Table 4 shows the parameter values used in the structure of all three algorithms. The ANN–PSO and ANN–WOA algorithms use 3000 iterations, because there is no improvement in the simulation after 3000 iterations. Moreover, the population size differs between the algorithms: among the population sizes considered, 40, 30 and 30 individuals were selected for the HHO, PSO and WOA algorithms, respectively. It should be noted that increasing or decreasing the initial population in each algorithm beyond these values did not improve the results. The other values for each algorithm are presented in Table 4.

3.3 Evolutionary algorithms results

Evaluation criteria for four models are presented in Table 5 for training and test data. Accordingly, a model will be considered as the superior model in which the RMSE and MAE values are the lowest for both testing and training data sets and the Nash coefficient is the highest. According to Table 5, in the ANN algorithm, the optimal values of evaluation criteria are obtained in the P1 (evaporation of 1 day ago) in which the RMSE, MAE and Nash coefficient for test data is 1.58, 1.25 and 0.85, respectively. In the ANN–PSO algorithm, the P4 model with MAE of 0.75, RMSE of 1.084 and Nash coefficient of 0.93 is selected as the superior model. The values of evaluation indexes in P5 are close to the values of the P4 model; however, the P4 model it is selected as the superior model, because it requires fewer parameters than the P5 model. For the ANN–WOA algorithm, the RMSE, MAE and NASH values of the test data for the P2 model are 0.97, 0.8 and 0.93, respectively. The P7 model with RMSE of 1.1, MAE of 1.1, and Nash of 0.93 are close to the P2 model, but the model (P7) requires more parameters and the preparation of these parameters entails extra time and cost. Therefore, the appropriate model for estimating the evaporation parameter will be the P2 model. According to the results, ANN–WOA by having two parameters of evaporation in 1 and 2 days ago, estimated the amount of target parameter with high accuracy. On the other hand, comparing the results of the models for the ANN–HHO model shows that the P2 and P8 have acceptable performance compared to the other models, so they are able to estimate the evaporation parameter with high accuracy. Nevertheless, the P8 model needs more parameters to predict the evaporation, so model P2 which has RMSE, MAE and Nash criteria for test data of 0.99, 0.72 and 0.94 was selected as the best model for ANN–HHO algorithm. 
Comparisons between the models showed that the ANN alone predicts evaporation poorly compared to the other three models, because its training algorithm becomes trapped in local optima. To solve this problem and improve the results, the evolutionary algorithms PSO, WOA and HHO were used. All three hybrid models demonstrated better performance in predicting evaporation. Although the evolutionary algorithms improved the outcomes, some input patterns still do not produce acceptable results. For example, in the ANN–WOA P5 model, in which the minimum and maximum air temperatures and the evaporation 1 and 2 days ago are the inputs, the evaluation criteria are higher than 1, which indicates the weak performance of this model. Comparing this model with the P4 model shows that adding the minimum air temperature parameter (in P5) introduced more error and reduced the accuracy of the model. Comparison of the selected models showed that the ANN–HHO algorithm predicts evaporation more accurately than the other two algorithms and has the highest correlation with the observed evaporation.
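The three criteria used in Table 5, and the preference for the pattern with fewer inputs when two patterns score similarly, can be sketched as follows. This is an illustrative sketch rather than the authors' code: the function names are hypothetical, and the tolerance `tol` that decides when two RMSE values count as "close" is an assumption, since the text does not give a numerical threshold.

```python
import math


def rmse(obs, est):
    """Root mean square error between observed and estimated values."""
    return math.sqrt(sum((o - e) ** 2 for o, e in zip(obs, est)) / len(obs))


def mae(obs, est):
    """Mean absolute error."""
    return sum(abs(o - e) for o, e in zip(obs, est)) / len(obs)


def nash_sutcliffe(obs, est):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit, 0 matches the observed mean."""
    mean_obs = sum(obs) / len(obs)
    num = sum((o - e) ** 2 for o, e in zip(obs, est))
    den = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - num / den


def pick_parsimonious(candidates, tol=0.05):
    """candidates: list of (name, n_inputs, test_rmse).

    Among the patterns whose RMSE is within `tol` of the best one,
    return the name of the pattern with the fewest inputs.
    """
    best = min(c[2] for c in candidates)
    close = [c for c in candidates if c[2] - best <= tol]
    return min(close, key=lambda c: (c[1], c[2]))[0]


# Made-up numbers for illustration only (mm/day); not values from Table 5.
obs = [2.0, 4.0, 6.0, 8.0]
est = [2.5, 3.5, 6.5, 7.5]
print(rmse(obs, est), mae(obs, est), nash_sutcliffe(obs, est))
print(pick_parsimonious([("A", 2, 1.00), ("B", 4, 0.98), ("C", 3, 1.20)]))
```

With these helpers, a pattern with two inputs is preferred over a four-input pattern whose RMSE is only marginally lower, which is the reasoning applied above when P4 is chosen over P5 and P2 over P7 and P8.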

To compare the models, the observed and estimated values for the selected pattern of each model are plotted (Fig. 7). In these graphs, the data are shown on the vertical axis and the time steps (days) on the horizontal axis. Figure 7a shows that the estimation is poor at some steps, which is also visible in the results of Table 5. The observed and estimated data for the ANN–HHO algorithm are close to each other, and their difference is very small compared to the other two algorithms. Therefore, this method gives more precise evaporation predictions. Moreover, the ANN–WOA model has larger errors in predicting evaporation than the ANN–PSO model.

However, an important point to be drawn from the time series graphs is that, although the models detect the correct trend of evaporation changes, none of them estimated the magnitude of the high peaks well. This can have several causes, including the daily resolution of the data: human or instrument errors may have occurred in recording the daily values, so that the recorded evaporation rises sharply even though the input parameters have normal values. In general, however, the values estimated by the proposed models, especially ANN–HHO, are appropriate at many steps.

The scatter plot of the observed and estimated values is shown in Fig. 8. According to Fig. 8, the ANN model performs poorly in comparison with the other methods. In contrast, ANN–HHO demonstrates the highest accuracy among all models. Moreover, the data lie close to the bisector line for the ANN–HHO model, which reveals its precision.

For further evaluation, the Taylor diagram was used to examine the correlation and standard deviation of the estimated evaporation relative to the observed evaporation. In this diagram, close agreement between the observed and estimated outputs indicates that a model is valid. The correlation coefficient of all three models lies in the range of 0.95–0.99, which shows the efficiency of all three models in estimating evaporation. According to Fig. 9, ANN–HHO has a slightly higher correlation coefficient than the other two algorithms; the RMSE values for ANN–HHO and ANN–WOA lie on the arc of 1, while for ANN–PSO the RMSE value is slightly higher than 1. The diagram also shows that, although all three algorithms are highly capable of estimating evaporation, the model closest to the observed values is ANN–HHO. The selection of ANN–HHO by several evaluation criteria demonstrates that this method is able to optimize the ANN parameters and increase the performance of the model.
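The quantities summarized in a Taylor diagram can be computed directly from the two series. The sketch below assumes that the arcs in Fig. 9 are the centred root mean square difference, as is standard for Taylor diagrams; this quantity removes the mean bias and therefore can differ from the RMSE reported in Table 5. The function name and the sample numbers are hypothetical. The three statistics satisfy the identity E'^2 = s_o^2 + s_e^2 - 2 s_o s_e R, which is what allows them to be drawn on a single two-dimensional plot.

```python
import math


def taylor_stats(obs, est):
    """Standard deviations, correlation and centred RMS difference."""
    n = len(obs)
    mean_o = sum(obs) / n
    mean_e = sum(est) / n
    std_o = math.sqrt(sum((o - mean_o) ** 2 for o in obs) / n)
    std_e = math.sqrt(sum((e - mean_e) ** 2 for e in est) / n)
    cov = sum((o - mean_o) * (e - mean_e) for o, e in zip(obs, est)) / n
    corr = cov / (std_o * std_e)
    # Centred RMS difference: both series have their own mean removed first.
    crmsd = math.sqrt(
        sum(((e - mean_e) - (o - mean_o)) ** 2 for o, e in zip(obs, est)) / n
    )
    return {"std_obs": std_o, "std_est": std_e, "corr": corr, "crmsd": crmsd}


# Made-up values for illustration only (mm/day).
obs = [1.0, 2.0, 3.0, 4.0, 5.0]
est = [1.2, 1.9, 3.4, 3.8, 5.1]
s = taylor_stats(obs, est)

# Law-of-cosines identity behind the diagram geometry.
rhs = s["std_obs"] ** 2 + s["std_est"] ** 2 - 2 * s["std_obs"] * s["std_est"] * s["corr"]
print(s, abs(s["crmsd"] ** 2 - rhs) < 1e-12)
```

A model plots closer to the observation point when its correlation is high and its standard deviation is close to that of the observations, which is the sense in which ANN–HHO is described as the closest model.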

Figure 10 shows the receiver operating characteristic (ROC) curve for each of the models. The ROC curve shows the trade-off between sensitivity (the true positive rate, TPR) and specificity (1 – FPR, where FPR is the false positive rate). Curves closer to the top-left corner indicate better performance. Thus, the closer the area under the ROC curve (AUC) is to one, the better the prediction model. The highest AUC, 0.98, belongs to the ANN–HHO model, and the lowest was observed for the ANN model (AUC = 0.94). In general, the AUC shows that, like the other criteria, ANN–HHO is the most appropriate model for EVD prediction.
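The text does not state how the continuous evaporation values were converted into classes for the ROC analysis. One possible reading, assumed here purely for illustration, is that a day counts as positive when the observed evaporation exceeds a threshold, and the model estimate serves as the ranking score. Under that assumption the AUC equals the fraction of positive-negative pairs that the model ranks correctly, as in the sketch below. The function names, the threshold and the sample values are hypothetical.

```python
def auc_from_scores(labels, scores):
    """AUC as the probability that a positive case outranks a negative one.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for flag, s in zip(labels, scores) if flag]
    neg = [s for flag, s in zip(labels, scores) if not flag]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def evaporation_auc(obs, est, threshold):
    """Assumed binarization: a day is positive if observed evaporation > threshold."""
    labels = [o > threshold for o in obs]
    return auc_from_scores(labels, est)


# Made-up values for illustration only (mm/day).
obs = [1.0, 2.0, 6.0, 7.0]
est = [1.5, 5.5, 5.0, 7.2]
print(evaporation_auc(obs, est, threshold=4.0))
```

An AUC of one means that every high-evaporation day receives a higher estimate than every low-evaporation day; a value of 0.5 corresponds to random ranking.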

Figure 11 shows the RMSE at each iteration of the ANN–HHO algorithm. The algorithm starts from a random initial population of the chosen size. The selected model was evaluated with three different population sizes, 25, 30 and 50, to measure the sensitivity of the results to the initial population. According to the figure, the algorithm reduces the RMSE for all three populations within the first steps, so that after only three steps the error is significantly reduced in all three cases. The approach to the optimal answer is also fastest at the beginning of the run. The population of 30 gives the highest accuracy, while the other two populations have higher RMSE values. The error reached its final value at 1750 iterations and did not change afterwards. Therefore, choosing an appropriate initial population size for the optimization process can lead to the desired result.
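Reading a convergence plot of this kind amounts to two simple checks: at which iteration the best error last improved, and which population size ends with the lowest error. A minimal sketch of both checks follows. The function names, the improvement tolerance and the short error curves are assumptions for illustration and are not taken from Fig. 11.

```python
def last_improvement(history, tol=1e-6):
    """Index of the last iteration where the best error dropped by more than tol."""
    last = 0
    for i in range(1, len(history)):
        if history[i - 1] - history[i] > tol:
            last = i
    return last


def best_population(histories):
    """histories: {population_size: [best error per iteration]}.

    Returns the population size whose run ends with the lowest error.
    """
    return min(histories, key=lambda size: histories[size][-1])


# Made-up convergence curves for illustration only.
curves = {
    25: [4.0, 2.0, 1.2, 1.2],
    30: [4.0, 1.5, 0.9, 0.9],
    50: [4.0, 1.8, 1.1, 1.1],
}
print(best_population(curves))
print(last_improvement([5.0, 3.0, 2.0, 2.0, 1.5, 1.5, 1.5]))
```

Once the index returned by the first check stops changing as the run is extended, additional iterations no longer reduce the error, which is the situation described above after 1750 iterations.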

Finally, evaporation values were predicted for the whole data set with the selected scenario and model (Fig. 12). The selected model predicts the evaporation values with high precision from the chosen input parameters. At all steps, the estimated and observed values are close to each other, which indicates the high capability of the selected model. In addition, this method can be used for other time series.
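The selected pattern is described in the text as using the evaporation of 1 and 2 days ago as inputs. Assembling such an input pattern from a daily series can be sketched as below. The function name and the sample series are hypothetical, and the handling of the first days (which have no full history and are dropped) is an assumption.

```python
def make_lagged_pattern(series, lags=(1, 2)):
    """Build (inputs, target) pairs from a daily series.

    Each input row holds the values `lags` days before the target day;
    the first max(lags) days are skipped because their history is incomplete.
    """
    max_lag = max(lags)
    inputs, targets = [], []
    for t in range(max_lag, len(series)):
        inputs.append([series[t - k] for k in lags])
        targets.append(series[t])
    return inputs, targets


# Made-up daily evaporation values for illustration only (mm/day).
daily = [3.0, 4.0, 5.0, 6.0, 7.0]
X, y = make_lagged_pattern(daily)
print(X)
print(y)
```

The same helper extends to other time series by passing a different list, and to other lag combinations through the `lags` argument.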

4 Conclusion

In the present study, the daily evaporation from the Ajabshir reservoir was estimated using both the ANN method and the ANN–EAOM hybrid models. In the hybrid models, three algorithms, PSO, WOA and HHO, are used to optimize the parameters of the ANN. Four criteria are used to evaluate the results: root mean square error (RMSE), mean absolute error (MAE), Nash–Sutcliffe index (NASH), and coefficient of determination (R2). For this evaluation, nine scenarios based on various environmental parameters, such as humidity, air temperature and evaporation, have been considered. The evaporation estimated by the ANN method has an R2 of 0.88 against the observational data, which is the lowest value among the models. The highest R2, 0.96, belongs to the ANN–HHO method. In addition, in the ANN–HHO model the data are close to the regression line, which indicates the acceptable performance of ANN–HHO compared to the other models. The correlation coefficient of all three hybrid models is in the range of 0.95–0.99, which shows that all three perform well in estimating the daily evaporation. The correlation coefficient of the ANN–HHO model is slightly higher than that of the other two algorithms; the RMSE value is equal to 1 for ANN–HHO and ANN–WOA and slightly more than 1 for ANN–PSO. Furthermore, in terms of the Taylor diagram, the ANN–HHO model provides the values closest to the observed values. In general, the different evaluation criteria demonstrate that the ANN model is not able to predict evaporation with high accuracy, because its training algorithm becomes trapped in local optima, so the ANN performs poorly in estimating evaporation compared to the other three models. Utilizing the three evolutionary algorithms HHO, WOA and PSO improved the performance of the ANN model in estimating evaporation.
The ANN–HHO model performed better than all other models, using as inputs two parameters, evaporation 1 and 2 days ago, which are highly correlated with the output.

Cite this article

Arya Azar, N., Kardan, N. & Ghordoyee Milan, S. Developing the artificial neural network–evolutionary algorithms hybrid models (ANN–EA) to predict the daily evaporation from dam reservoirs. Engineering with Computers 39, 1375–1393 (2023). https://doi.org/10.1007/s00366-021-01523-3

DOI: https://doi.org/10.1007/s00366-021-01523-3