Abstract

In the global optimization process of the firefly algorithm (FA), there is a need to achieve a fast convergence rate while exploring the search space more effectively. We therefore carry out a modular analysis of the FA and propose a novel enhanced exploration firefly algorithm (EE-FA), which comprises an enhanced attractiveness term module and an enhanced random term module. The attractiveness term module strengthens the attraction between fireflies, which improves exploration efficiency and accelerates convergence. The random term module improves exploration efficiency by introducing a damped vibration distribution factor. The EE-FA uses multiple parameters to balance exploration efficiency and convergence rate, and these parameters strongly influence its performance; to obtain the best performance, the optimal value of each parameter is determined through simulation. Compared with multiple FA variants, the EE-FA achieves better exploration efficiency and faster convergence. Experimental results show that the EE-FA consistently outperforms the compared algorithms on 24 benchmark functions and 4 real design case studies in terms of both convergence rate and exploration efficiency.


1 Introduction

Global continuous optimization problems arise widely in scientific research, engineering technology and industrial design. Examples include image processing [1], path planning [2], complex structure design optimization [3, 4], electronic system design and gene recognition. In most engineering applications, global optimization problems are highly nonlinear with irregular multiple peaks, and many of them are NP-hard [5]. NP-hard problems typically entail huge computational complexity and poor computational stability, and it is difficult to find a suitable and practical algorithm that solves them in general. In fact, designing the best algorithm requires not only a wealth of experience but also a detailed understanding of the problem, and even then there is no guarantee that the best or even a suboptimal solution will be found.

For continuous problems, many researchers have invested a great deal of effort in achieving efficient global optimization, and many excellent algorithms have been proposed [6,7,8]. These algorithms fall into two categories: deterministic algorithms and random algorithms. Deterministic algorithms mainly include the hill climbing method [9], the Newton iteration method [10], the simplex method [11] and the least squares method [12, 13]. Their strength is high convergence efficiency on certain problems, where only a few iterations are needed to find the optimal solution; their weakness is that they easily fall into a local optimum. Owing to their built-in random mechanism, random algorithms are better able to jump out of local optima and reach the global optimum. Their weakness is that they can produce different solution sets under the same initial conditions, so their repeatability is poor [14].

Most random algorithms can be regarded as metaheuristics. Many recent metaheuristic algorithms are inspired by physical processes or biological intelligence in nature, such as particle swarm optimization (PSO) [15], the firefly algorithm (FA) [16], the flower pollination algorithm (FPA) [17], the grasshopper optimisation algorithm (GOA) [18], and various PSO variants (IPSO [19], YSPSO [20], BPSO [21], etc.). Their advantage is a good information-sharing mechanism among individuals, which can make the algorithms converge quickly under certain conditions. They have been used to solve difficult optimization problems encountered in engineering [22], and Gandomi [23] showed that metaheuristic algorithms are effective for the global optimization of engineering problems.

The firefly algorithm is a relatively new metaheuristic algorithm proposed by Yang [16]. Its advantages are a simple concept, ease of understanding, a clear process, few parameters and a strong random search ability. Lukasik and Żak found that the FA performs better than PSO on continuous optimization problems [24]. The FA has been used to solve economic dispatch problems in power system management [25]. Kavousi-Fard used the FA to determine the optimal parameters of a support vector regression model for short-term load forecasting [7]. Similarly, the FA has been applied to NP-hard scheduling problems and travelling salesman problems [26]. Sánchez applied the FA to intelligent recognition [27]. Yang and He discussed its performance in intelligent segmentation and multiple mode processing [28]. Frumen [29] proposed a new ant colony optimization method with dynamic parameter adaptation. Daniela [30] combined PSO and neural networks and applied the resulting method to human recognition. Frumen [31] used interval type-2 fuzzy logic to improve the convergence and diversity of the swarm in PSO. Frumen [32] used type-2 fuzzy logic to adjust the dynamic parameters of the gravitational search algorithm (GSA). Daniela [33] used the FA to optimize a neural network for face recognition.

For global continuous optimization problems, the key is to make the FA reach the global optimum quickly and reliably, which requires both good exploration efficiency and fast convergence. The convergence speed and exploration efficiency of the FA have therefore become a research focus. Many researchers have incorporated parameter adjustment mechanisms into the FA, such as a fuzzy controller that updates the algorithm's parameters according to the progress of the search [34, 35]. Yang introduced the Lévy flight strategy into the FA, yielding the Lévy-flight FA (LF-FA) [36]. In 2021, Shuhao adopted a personalized step strategy to improve the FA and proposed the PSSFA [37]. Ao proposed a sigmoid attractive firefly algorithm (IFA) and used it in IIR filter design [38]. Navid combined the FA with IFF to form a hybrid FA-IFF [39], which was used together with extreme learning machines to predict the thermal conductivity of soil.

In 2020, Jinran proposed an adaptive firefly algorithm that switches between an exploration mode and an exploitation mode (AD-IFA) [40]. The adaptive switching mechanism of the AD-IFA improves the thoroughness of local exploitation, but it substantially increases the computational complexity and cost of the algorithm.

The article has the following three innovative aspects:

1. An improved attractiveness term is proposed to enhance the attraction between fireflies.

2. Based on the characteristics of the damped vibration waveform, a new random term is constructed to improve the exploitation of the FA.

3. Based on feedback theory, the light absorption coefficient of the fireflies increases with the number of iterations of the FA.

The remainder of this paper is organized as follows. Related work on the FA, the Lévy-flight FA and the AD-IFA is reviewed in Sect. 2. Section 3 describes how the attractiveness term and the random term are improved and proposes the EE-FA. Section 4 gives the optimal parameter settings of the EE-FA, the benchmark functions, the performance comparison of the algorithms and the simulation results. Section 5 demonstrates the efficiency of the EE-FA on four engineering applications. Finally, Sect. 6 concludes the paper.

2 Related work

2.1 The standard firefly algorithm

In nature, fireflies use their own light as a signal to attract other individuals of the same kind. The FA is a metaheuristic algorithm that simulates this behaviour. In the FA, each individual in the group corresponds to a candidate solution of the problem, and the global optimization process is the process of moving each individual firefly. The FA follows three basic principles.

1. Fireflies are unisex: there is no gender distinction between individuals.

2. The attractiveness of each individual is related to its own brightness. Less bright individuals are attracted by brighter ones and move towards them, and the brightest individual moves randomly in the solution space.

3. The brightness of an individual is usually taken to be the objective function value of the corresponding feasible solution.

Two key issues must be addressed when constructing the FA: the relative brightness and the attractiveness between fireflies.

(1) The relative brightness between fireflies can be expressed as follows:

In the formula, r represents the distance between the fireflies, \(\gamma\) represents the light absorption coefficient, and \(I_{0}\) represents the brightness of the firefly at the source (r = 0).

(2) The attraction between fireflies can be expressed as follows:

where \(\beta_{0}\) is the attractiveness at r = 0.

(3) The distance between the fireflies can be expressed as follows:

In the formula, fireflies i and j are located at \(x_{i}\) and \(x_{j}\), respectively, \(r_{ij}\) denotes the Cartesian distance between them, and D is the dimension of the space.

The position update formula of the firefly algorithm is as follows:
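The equations of this subsection did not survive extraction. As a hedged illustration, the sketch below implements one sweep of the standard FA move in the modular form used later in Eqs. (12) and (13): an attractiveness term \(\beta_{0} e^{-\gamma r_{ij}^{2}}(x_{j} - x_{i})\) plus a uniform random term \(\alpha(\text{rand} - 0.5)\). It assumes minimization (a lower objective value means a brighter firefly); the names `fa_sweep`, `distance_sq` and `sphere` are illustrative and not taken from the authors' code.

```python
import math
import random
from typing import Callable, List, Optional

Vector = List[float]


def distance_sq(xi: Vector, xj: Vector) -> float:
    """Squared Cartesian distance r_ij^2 between fireflies i and j."""
    return sum((a - b) ** 2 for a, b in zip(xi, xj))


def fa_sweep(pop: List[Vector], f: Callable[[Vector], float],
             alpha: float = 0.2, beta0: float = 1.0, gamma: float = 1.0,
             rng: Optional[random.Random] = None) -> List[Vector]:
    """One sweep of the standard FA move for minimization (lower f = brighter)."""
    rng = rng or random.Random()
    pop = [list(x) for x in pop]  # work on a copy of the population
    for i in range(len(pop)):
        for j in range(len(pop)):
            if f(pop[j]) < f(pop[i]):  # j is brighter, so i moves towards j
                beta = beta0 * math.exp(-gamma * distance_sq(pop[i], pop[j]))
                pop[i] = [xi + beta * (xj - xi)           # attractiveness term
                          + alpha * (rng.random() - 0.5)  # uniform random term
                          for xi, xj in zip(pop[i], pop[j])]
    # In this sketch the current best firefly is not moved; the standard
    # description lets the brightest individual take a random step instead.
    return pop


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


# Usage: 10 fireflies on a 3-D sphere function for 50 sweeps.
rng = random.Random(42)
swarm = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(10)]
for _ in range(50):
    swarm = fa_sweep(swarm, sphere, rng=rng)
best = min(swarm, key=sphere)
```

Positions are updated in place during a sweep, so a firefly that has already moved is seen at its new position by later fireflies; this mirrors common reference implementations but is a design choice of the sketch.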

2.2 The Lévy-flight firefly algorithm

Like other metaheuristic algorithms, the FA can become trapped in a local optimum. To improve its ability to escape local optima, Lévy flight was introduced into the FA, yielding the LF-FA [36]. Compared with the FA, the LF-FA has better global exploration efficiency and faster convergence. Its main idea is to replace the traditional uniform random distribution with a Lévy distribution.

where \(\alpha\) is the coefficient of the random term, \(\otimes\) denotes the Hadamard (element-wise) product, sign(·) is the sign function, and Levy denotes a random step drawn from a Lévy distribution.

The formula of the Lévy random distribution is as follows:

where v and µ follow the standard normal distribution, and \(\varphi\) is calculated as follows:

where \(\Gamma ( \cdot )\) is the standard Gamma function, and η = 1.5.
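The Lévy-step formulas above were also lost in extraction. Since the text states that v and µ are standard normal and that \(\varphi\) involves the Gamma function with η = 1.5, the sketch below assumes the widely used Mantegna algorithm, Levy = \(\varphi \mu / |v|^{1/\eta}\); that it matches the paper's equations term by term is an assumption, and how the step enters the LF-FA update through sign(·) and \(\otimes\) is not reproduced. The function names are hypothetical.

```python
import math
import random
from typing import List, Optional


def mantegna_phi(eta: float = 1.5) -> float:
    """Scale factor phi of Mantegna's algorithm (assumed form of the paper's phi)."""
    num = math.gamma(1 + eta) * math.sin(math.pi * eta / 2)
    den = math.gamma((1 + eta) / 2) * eta * 2 ** ((eta - 1) / 2)
    return (num / den) ** (1 / eta)


def levy_step(dim: int, eta: float = 1.5,
              rng: Optional[random.Random] = None) -> List[float]:
    """Heavy-tailed step per dimension: phi * mu / |v|^(1/eta), mu, v ~ N(0, 1)."""
    rng = rng or random.Random()
    phi = mantegna_phi(eta)
    step = []
    for _ in range(dim):
        mu, v = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        step.append(phi * mu / abs(v) ** (1 / eta))
    return step


step = levy_step(5, rng=random.Random(1))
```

For η = 1.5 the scale factor is about 0.6966; most steps are small, but occasional very large ones (when |v| is near zero) are what let the LF-FA jump out of local optima.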

2.3 The adaptive logarithmic spiral-Lévy firefly algorithm

Although the LF-FA has better global exploration efficiency, it breaks the balance between exploration and exploitation. To restore this balance, Jinran [40] proposed an algorithm that adaptively switches between exploration and exploitation modes (AD-IFA). Its position update formula is as follows:

where u is a uniform random number in [0, 1], and \(R_{t}\) is calculated as follows:

where \(f_{t}^{*}\) is the best fitness value at the tth iteration, lg(·) = log10(·), and \(\lfloor \cdot \rfloor\) is the floor (lower bound) function. \(\theta\) is given by:

The AD-IFA introduces an adaptive switching mechanism, which strengthens the balance between exploration and exploitation during global optimization. However, the AD-IFA has the following three disadvantages:

1. The adaptive switching mechanism greatly increases the complexity of the AD-IFA.

2. The values of the random parameter \(\alpha\) and the light absorption coefficient \(\gamma\) are chosen arbitrarily and subjectively rather than systematically, although these two parameters directly affect the global exploration efficiency of the AD-IFA.

3. The added complexity of the AD-IFA neither effectively accelerates convergence nor reduces the number of iterations.

To address these deficiencies, this article improves the FA in the following four respects:

1. The complexity of the AD-IFA is reduced: the adaptive switching mechanism is removed and replaced by parameters related to the distance between fireflies.

2. The random term of the FA is modified: following the law of the damped vibration curve, a damped vibration factor is introduced into the uniform random distribution instead of using the Lévy random distribution.

3. Each parameter of the newly constructed algorithm is tuned by simulation to determine its optimal value.

4. To accelerate convergence, the light absorption coefficient \(\gamma\) increases with the number of iterations.

3 An enhanced exploration firefly algorithm

To facilitate the study of the FA's position update equation, the FA is divided into three modules: (1) the initial position module, (2) the attractiveness term module, and (3) the random term module. Formula (4) can then be written as

$$ x_{i,t + 1} = x_{i,t} + A_{i,j} + B_{i,j} , $$

where the three modules are as follows:

1. \(x_{i,t}\) is the initial position module.

2. The attractiveness term module is as follows:

$$ A_{i,j} = \beta_{0} \cdot {\text{e}}^{{ - \gamma \cdot r_{i,j}^{2} }} \cdot (x_{j,t} - x_{i,t} ), $$(12)

where \(A_{i,j}\) represents the attractiveness term module.

3. The random term module is as follows:

$$ B_{i,j} = \alpha \cdot ({\text{rand}} - 0.5). $$(13)

\(B_{i,j}\) is the random term module, and rand denotes a D-dimensional vector of uniform random numbers in \([0,1]^{D}\). \(\gamma\) is the light absorption coefficient, α is a parameter in [0, 1], and \(\beta_{0}\) is the attractiveness at r = 0. \(x_{j,t} - x_{i,t}\) is a D-dimensional vector.

3.1 Attractiveness term module

We analyze the attractiveness term module in one-dimensional space. Let \(\gamma = 1,\beta_{0} = 1\), and \(x_{j,t} - x_{i,t} = z\), where z represents the distance between two fireflies (so that \(r_{i,j}^{2} = z^{2}\)). The one-dimensional movement area of the firefly is [0, 10]. The attractiveness term then simplifies to

$$ A = z \cdot {\text{e}}^{ - z^{2} } . $$

The characteristic curve of the attractiveness term module is shown in Fig. 1.

The characteristic curve shows that the value of the attractiveness term first increases rapidly as the distance between the fireflies increases and then decreases rapidly as the distance continues to grow. When the distance exceeds 3, the attractiveness term becomes negligible, approaching zero; in other words, distant fireflies are almost not attracted at all. The position update is then driven almost entirely by the random term module, so distant fireflies move randomly and aimlessly, which greatly increases the number of wasted iterations of the FA.
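The shape described above follows from a one-line derivation: with \(A(z) = \beta_{0} z e^{-\gamma z^{2}}\), \(dA/dz = \beta_{0} e^{-\gamma z^{2}}(1 - 2\gamma z^{2})\), which vanishes at \(z = 1/\sqrt{2\gamma}\). For \(\gamma = \beta_{0} = 1\) the peak is at z ≈ 0.707 with A ≈ 0.429, while \(A(3) = 3e^{-9} \approx 3.7 \times 10^{-4}\). The sketch below tabulates this; the function names are illustrative.

```python
import math


def attractiveness_1d(z: float, beta0: float = 1.0, gamma: float = 1.0) -> float:
    """1-D attractiveness term A = beta0 * exp(-gamma * z**2) * z (Eq. (12) with r = z)."""
    return beta0 * math.exp(-gamma * z * z) * z


def peak_distance(gamma: float = 1.0) -> float:
    """Distance where dA/dz = beta0 * exp(-gamma z^2) * (1 - 2 gamma z^2) is zero."""
    return 1.0 / math.sqrt(2.0 * gamma)


# Tabulate A over part of the movement interval [0, 10] used in the text.
for z in (0.25, 0.5, peak_distance(), 1.0, 2.0, 3.0, 5.0):
    print(f"z = {z:5.3f}   A = {attractiveness_1d(z):.2e}")
```

Because the peak distance scales as \(1/\sqrt{2\gamma}\), a smaller \(\gamma\) widens the attractive range, which is one reason the choice of \(\gamma\) matters for long-distance fireflies.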

To overcome this drawback and make the attractiveness term module attract distant fireflies more strongly, we introduce an attractiveness enhancement factor into the module.

The attractiveness enhancement factor is as follows:

The strengthened attractiveness item module is:

where \(\gamma ,\beta ,b,h\) are undetermined constants, with default initial values of 0.03, 1, 10 and 100, respectively.

The characteristic curve of the enhanced attractiveness item module is shown in Fig. 2.

Figure 2 shows that the value of the enhanced attractiveness item module rises to a certain level as the distance between the fireflies increases and then decreases only slowly as the distance grows further. Compared with Fig. 1, the enhanced module in Fig. 2 exerts a stronger attraction on long-distance fireflies, so it can attract fireflies effectively and reduce the number of moves.

3.2 Random item module

The random item module is a distinctive part of metaheuristic algorithms: it helps prevent the FA from falling into a local optimum and improves its exploration efficiency. We reconstruct the random item module so that it depends on the distance between fireflies. When fireflies are far apart, the module should improve global search efficiency; as the distance between them decreases, it should strengthen local search.

Eq. (17) is a random term that does not depend on the distance between the fireflies: if α takes a fixed value, it is simply a scaled uniform random number. Whether the fireflies are far apart or close together, the random term therefore explores in almost the same way, which severely limits the exploration efficiency of the FA. For a damped vibration curve, the amplitude decreases rapidly as the number of oscillations increases, and the oscillation frequency can be adjusted. The damped vibration characteristic is therefore intended to dominate when the distance between fireflies is small and to play only a minor role when the distance is large.

The damping vibration function formula is as follows [41]:

To satisfy the condition y = 0 when t = 0, it is replaced by Eq. (19):

The damping vibration distribution equation is as follows:

Let A = 5, a1 = 1 and a2 = 2π. The curves corresponding to Eqs. (19) and (20) are shown in Fig. 3.

The right subfigure in Fig. 3 shows that the resulting curve combines the characteristics of damped vibration with those of a random distribution.
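Eqs. (18) to (20) were lost in extraction. The sketch below only illustrates the damped-vibration idea with the stated constants A = 5, a1 = 1 and a2 = 2π, under the assumption that Eq. (19) has the common exponentially damped sinusoid form \(y(t) = A e^{-a_{1} t}\sin(a_{2} t)\), which satisfies y(0) = 0. The random-distribution part of Eq. (20) is not reconstructed, and the function names are hypothetical.

```python
import math
from typing import List


def damped_vibration(t: float, A: float = 5.0, a1: float = 1.0,
                     a2: float = 2.0 * math.pi) -> float:
    """ASSUMED form y(t) = A * exp(-a1 * t) * sin(a2 * t); sin gives y(0) = 0."""
    return A * math.exp(-a1 * t) * math.sin(a2 * t)


def envelope(t: float, A: float = 5.0, a1: float = 1.0) -> float:
    """Exponential envelope A * exp(-a1 * t) that bounds |y(t)|."""
    return A * math.exp(-a1 * t)


ts: List[float] = [k / 100.0 for k in range(501)]  # t sampled on [0, 5]
ys: List[float] = [damped_vibration(t) for t in ts]
```

The envelope makes the qualitative point of the text explicit: the oscillation amplitude decays exponentially with t, while a2 sets how many oscillations occur per unit of t.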

Here A = 5 and α = 5 are set arbitrarily to illustrate the principle; the best values of A and α are determined in Sect. 4.

The left subfigure in Fig. 4 shows the following two characteristics:

1. Compared with Fig. 3, the amplitude is significantly larger.

2. As the distance between the fireflies increases, the random component becomes progressively smaller.

Therefore, the damped vibration distribution plays the major role when the distance between fireflies is small, and formula (17) plays the major role when the distance is large. Combining formula (17) and formula (21), the random term module is reconstructed to enhance the exploration efficiency of the FA.

The new random term module is as follows:

where k, f, and g are undetermined parameters. The role of k, f, and g is to balance exploration efficiency and convergence behaviour.

The light absorption coefficient γ is modified as follows:

where iter is the current number of iterations, itermax is the maximum number of iterations, and s is an undetermined parameter.

The location update formulas of the EE-FA are as follows:

4 Numerical simulations

The EE-FA uses multiple constants to balance exploration efficiency and convergence speed, and the best value of each constant is determined through simulation. The exploration efficiency and convergence speed of the FA, LF-FA, PSSFA, IFA, FA-IFF, AD-IFA and EE-FA are then compared. Finally, the benchmark functions are globally optimized, and the mean and standard deviation (std) of the best values obtained are recorded.

4.1 Determination of the best parameters of the EE-FA

At this stage, γ is held fixed. The EE-FA contains seven undetermined parameters: \(\beta ,\gamma ,b,h,k,f,g\). The best value of each of the seven independent parameters is determined by examining how that parameter affects the performance of the EE-FA. The sphere function \(f(x) = \sum\nolimits_{i = 1}^{d} {x_{i}^{2} }\) is selected as the benchmark function, the dimension d is set to 15, and each \(x_{i}\) ranges from 0 to 100.

To eliminate the influence of random errors, each simulation is executed 50 times, and the average value is calculated.

Using the control variable method, each undetermined parameter is varied individually while the others are held fixed, and its best value is fixed before the next parameter is tuned. The parameter values below were obtained after many simulations.
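The tuning procedure of this subsection (sweep one parameter, average over repeated runs, freeze the best value, move on) can be sketched as follows. `tune_one_at_a_time` and `toy_evaluate` are hypothetical names; `toy_evaluate` is a stand-in for "run the EE-FA on the sphere function with these parameters and return the best value found", with a made-up optimum at p = 2, q = 0.5 chosen only so the sketch is checkable.

```python
import random
import statistics
from typing import Callable, Dict, List

Params = Dict[str, float]


def tune_one_at_a_time(evaluate: Callable[[Params, int], float],
                       defaults: Params,
                       candidates: Dict[str, List[float]],
                       order: List[str],
                       n_runs: int = 50) -> Params:
    """Control-variable tuning: sweep one parameter, freeze its best value, move on."""
    params = dict(defaults)
    for name in order:
        best_value, best_score = params[name], float("inf")
        for value in candidates[name]:
            trial = {**params, name: value}
            # Average over n_runs seeded runs to damp random error (50 in the text).
            score = statistics.mean(evaluate(trial, seed) for seed in range(n_runs))
            if score < best_score:
                best_value, best_score = value, score
        params[name] = best_value  # freeze before tuning the next parameter
    return params


def toy_evaluate(params: Params, seed: int) -> float:
    """Hypothetical stand-in for one optimizer run; lower is better."""
    noise = random.Random(seed).gauss(0.0, 0.01)
    return (params["p"] - 2.0) ** 2 + (params["q"] - 0.5) ** 2 + noise


best = tune_one_at_a_time(toy_evaluate,
                          defaults={"p": 1.0, "q": 1.0},
                          candidates={"p": [1.0, 1.5, 2.0, 2.5], "q": [0.25, 0.5, 1.0]},
                          order=["p", "q"])
```

Reusing the same seeds for every candidate (common random numbers) makes the comparisons less noisy. Note that one-at-a-time tuning cannot capture interactions between parameters, so the result depends on the tuning order and the initial values.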

4.1.1 Impact of β on the exploration efficiency of the EE-FA

The other six parameter values are initialized as follows: \(\gamma = 2\), \({\text{b}} = 10\), \(h = 100\), \(k = 0.8\), \(f = 6\), and \(g = 0.04\). The effects of different values of β on the performance of the algorithm are shown in Fig. 5.

Figure 5 shows that when β = 5.8 and 5.9, the exploration efficiency of the algorithm is the lowest. When β = 5.721, the exploration efficiency is improved. When β = 5.7437, the exploration efficiency is higher than when β = 5.721. When β = 5.743889, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter β is 5.743889.

4.1.2 Impact of γ on the exploration efficiency of the EE-FA

The optimal parameter value obtained so far is β = 5.743889. The other five parameter values are then initialized as follows: b = 10, h = 100, k = 0.8, f = 6, and \(g = 0.04\). The effects of different values of γ on the performance of the algorithm are shown in Fig. 6.

Figure 6 shows that when γ = 2.27, the exploration efficiency of the algorithm is the lowest. When γ = 2.29 and 2.26, the exploration efficiency is improved. When γ = 2.33, the exploration efficiency is higher than when γ = 2.26. When γ = 2.28, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter γ is 2.28.

4.1.3 Impact of b on the exploration efficiency of the EE-FA

The optimal parameter values obtained so far are β = 5.743889 and γ = 2.28. The other four parameter values are initialized as follows: \(h = 100\), \(k = 0.8\), \(f = 6\), and \(g = 0.04\). The effect of different values of b on the performance of the algorithm is shown in Fig. 7.

Figure 7 shows that when b = 14.5, the exploration efficiency of the algorithm is the lowest. When b = 14.3, the exploration efficiency is improved. When b = 15.5 and 16.5, the exploration efficiency is higher than when b = 14.3. When b = 15.0, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter b is 15.0.

4.1.4 Impact of \(h\) on the exploration efficiency of the EE-FA

The optimal parameter values obtained so far are β = 5.743889, γ = 2.28, and b = 15. The other three parameter values are then initialized as follows: \(k = 0.8\), \(f = 6\), and \(g = 0.04\). The effects of different values of h on the performance of the algorithm are shown in Fig. 8.

Figure 8 shows that when h = 74.0, the exploration efficiency of the algorithm is the lowest. When h = 73.0 and 85.0, the exploration efficiency is improved. When h = 76.0, the exploration efficiency is higher than when h = 73.0. When h = 75.0, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter h is 75.0.

4.1.5 Impact of \(k\) on the exploration efficiency of the EE-FA

The optimal parameter values obtained so far are β = 5.743889, γ = 2.28, b = 15, and h = 75.0. The other two parameter values are then initialized as \(f = 6\) and \(g = 0.04\). The effects of different values of k on the performance of the algorithm are shown in Fig. 9.

Figure 9 shows that when k = 0.8 and 1.9, the exploration efficiency of the algorithm is the lowest. When k = 1.0, the exploration efficiency is improved. When k = 1.52, the exploration efficiency is higher than when k = 1.0. When k = 1.5, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter k is 1.500.

4.1.6 Impact of \(f\) on the exploration efficiency of the EE-FA

The optimal parameter values obtained so far are β = 5.743889, γ = 2.28, b = 15, h = 75.0, and k = 1.5. The last parameter is initialized as \(g = 0.04\). The effects of different values of f on the performance of the algorithm are shown in Fig. 10.

Figure 10 shows that when f = 10.0, the exploration efficiency of the algorithm is the lowest. When f = 11.0, the exploration efficiency is improved. When f = 9.0 and 6.0, the exploration efficiency is higher than when f = 11.0. When f = 7.0, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter f is 7.0.

4.1.7 Impact of g on the exploration efficiency of the EE-FA

The optimal parameter values obtained so far are β = 5.743889, γ = 2.28, b = 15, h = 75.0, k = 1.5, and f = 7.0. The effects of different values of g on the performance of the algorithm are shown in Fig. 11.

Figure 11 shows that when g = 0.01, the exploration efficiency of the algorithm is the lowest. When g = 0.02 and 0.03, the exploration efficiency is improved. When g = 0.004, the exploration efficiency is higher than when g = 0.02. When g = 0.015, the exploration efficiency is the best. Therefore, the optimal value of the undetermined parameter g is 0.015.

In summary, the optimal parameter values are β = 5.743889, γ = 2.28, b = 15, h = 75.0, k = 1.5, f = 7.0, and g = 0.015.

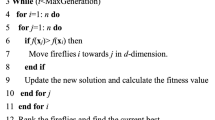

The EE-FA proposed in this article is as follows:

4.2 Improve the iteration speed of the EE-FA

We found that the light absorption coefficient γ also has a significant effect on the convergence speed of the FA. Within a certain range, the larger γ is, the faster the FA iterates, but its exploration efficiency is correspondingly weakened.

To improve the convergence speed without compromising the exploration ability, we propose Eq. (27), in which the light absorption coefficient increases with the number of iterations.

The iterative formula of the light absorption coefficient is as follows:

where itermax is the maximum number of iterations.
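Eq. (27) itself did not survive extraction, so its exact form is unknown here. The sketch below only illustrates the stated behaviour (γ grows with the iteration count) using a HYPOTHETICAL linear schedule \(\gamma(\text{iter}) = \gamma_{0}(1 + s \cdot \text{iter}/\text{iter}_{\max})\); it is not the paper's formula, and `gamma_schedule`, `gamma0` and this role of `s` are assumptions.

```python
import math
from typing import List


def gamma_schedule(iteration: int, iter_max: int, gamma0: float, s: float) -> float:
    """HYPOTHETICAL schedule (not Eq. (27)): gamma rises linearly from gamma0 to gamma0 * (1 + s)."""
    if not 0 <= iteration <= iter_max:
        raise ValueError("iteration must lie in [0, iter_max]")
    return gamma0 * (1.0 + s * iteration / iter_max)


values: List[float] = [gamma_schedule(t, 100, gamma0=1.0, s=1.0) for t in (0, 50, 100)]
# Attractiveness factor exp(-gamma * r^2) at unit distance for each gamma:
# a growing gamma makes attraction more local as the run progresses.
attraction_at_r1: List[float] = [math.exp(-g) for g in values]
```

Whatever the exact form of Eq. (27), any increasing schedule has this qualitative effect: early iterations keep a wide attractive range, and later iterations concentrate the moves near nearby bright fireflies.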

Therefore, the position update formulas of the EE-FA with the best parameter value are as follows:

4.3 Comparison of the time complexity of the EE-FA and AD-IFA

The time complexity of the FA and its variants depends on the population size (n), the dimension (d), the maximum number of evaluations (Max_iter) and the type of algorithm. Since n, d and Max_iter are the same for all algorithms, the large differences in time complexity among the FA and its variants arise from the algorithm types (Tables 1, 2).

The number of independent simulations for each benchmark function is 100. Then, we calculate the total running time of each variant algorithm. The other experimental conditions of the EE-FA and AD-IFA remained the same. Time complexity is measured based on the running time on the same computer in the same environment. F1–F9 are described in Table 4.
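The timing protocol (total wall-clock time of 100 independent runs on the same machine) can be sketched as below. `total_runtime` and `dummy_run` are hypothetical; `dummy_run` stands in for one complete run of the EE-FA or AD-IFA on a benchmark function.

```python
import time
from typing import Callable, List


def total_runtime(run_once: Callable[[int], object], n_runs: int = 100) -> float:
    """Total wall-clock seconds for n_runs independent runs, seeded 0..n_runs-1."""
    start = time.perf_counter()
    for seed in range(n_runs):
        run_once(seed)
    return time.perf_counter() - start


calls: List[int] = []


def dummy_run(seed: int) -> None:
    """Hypothetical stand-in for one full optimization run."""
    calls.append(seed)
    sum(i * i for i in range(10_000))  # placeholder workload


elapsed = total_runtime(dummy_run)
```

`time.perf_counter` is a monotonic high-resolution clock, which suits relative comparisons between algorithms run under the same conditions; absolute numbers will differ across machines.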

The data in Table 3 show that the total time for 100 independent runs of the AD-IFA under the same environment and conditions is much higher than that of the EE-FA, reflecting the AD-IFA's high time complexity, high computational cost and long running time. The EE-FA removes the adaptive switching mechanism of the AD-IFA, which is the source of this extra time complexity and computational cost.

In short, the EE-FA is also computationally cheaper than the AD-IFA.

4.4 Test function

For the simulation experiments, we choose benchmark functions of different shapes [42, 43], listed in Table 4. Most of them have multiple local minima or have bowl, plate or valley shapes.

4.5 Comparison with other swarm intelligence algorithms

We compared the performance of the EE-FA with PSO and its variants, the FPA and the GOA on benchmark functions F1–F9, with search space dimension D = 5 and 15 search agents; each algorithm was run 30 times independently.
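The reporting protocol (30 independent runs, then the mean and std of the best values) can be sketched as follows. Pure random search is used here only as a placeholder optimizer, not as any of the compared algorithms, and the sphere function with bounds [−100, 100] stands in for F1–F9, whose definitions are in Table 4; `random_search` and `trial_statistics` are hypothetical names.

```python
import random
import statistics
from typing import Callable, List, Tuple

Vector = List[float]


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


def random_search(f: Callable[[Vector], float], dim: int, lo: float, hi: float,
                  n_agents: int, n_iter: int, rng: random.Random) -> float:
    """Placeholder optimizer: best of n_agents uniform samples per iteration."""
    best = float("inf")
    for _ in range(n_iter):
        for _ in range(n_agents):
            best = min(best, f([rng.uniform(lo, hi) for _ in range(dim)]))
    return best


def trial_statistics(f: Callable[[Vector], float], n_trials: int = 30, dim: int = 5,
                     n_agents: int = 15, n_iter: int = 100,
                     lo: float = -100.0, hi: float = 100.0) -> Tuple[float, float]:
    """Mean and sample std of the best value over n_trials seeded runs."""
    bests = [random_search(f, dim, lo, hi, n_agents, n_iter, random.Random(seed))
             for seed in range(n_trials)]
    return statistics.mean(bests), statistics.stdev(bests)


mean_best, std_best = trial_statistics(sphere)
```

`statistics.stdev` returns the sample standard deviation; papers do not always state whether std is the sample or population version, so the choice here is an assumption.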

The comparison shows that the EE-FA attains the smallest mean and std among the compared algorithms, i.e. the highest exploration efficiency. The data in Table 5 indicate that the new random item module greatly improves the FA's exploration capability.

Figure 12 shows that the EE-FA has the fastest iteration speed and the best exploration capability. The improvement in the convergence speed is mainly attributed to the attractiveness item and the random item module. The enhancement of the attractiveness item speeds up convergence.

The improvement of the EE-FA's exploration efficiency is attributed to the reconstructed random term module.

4.6 Comparison of FA and its variant algorithms

The experimental result data are recorded in Table 6.

As shown in Table 6, for F1, F2, F3, F4, F5, F6, F9, F10, F14, F19 and F24, the minimum mean values of the EE-FA and the AD-IFA are significantly smaller than those of the FA, LF-FA, PSSFA, IFA and FA-IFF, and the EE-FA and AD-IFA have comparable exploration efficiency. For F8, F11, F13, F15, F16, F18, F20, F21 and F23, the EE-FA attains the smallest mean value and hence the highest exploration efficiency.

For the global continuous optimization problem of 24 benchmark functions, the EE-FA has the highest exploration efficiency, followed by the AD-IFA. Their exploration efficiency is significantly better than that of other algorithms.

Figure 13 shows that the EE-FA has the best exploration efficiency and convergence behaviour. The attractiveness item module enhances the attraction between long-distance fireflies and improves the convergence efficiency. The reconstructed random term has greatly improved the EE-FA's exploration efficiency.

F20, F21 and F24 show that the EE-FA has much better global optimization capability than the FA, LF-FA, PSSFA, IFA, FA-IFF and AD-IFA. For F11, F13 and F16, the EE-FA converges fastest. For F15 and F20, the other algorithms fall into local optima, whereas the EE-FA avoids them.

Overall, the EE-FA is superior to the FA, LF-FA, PSSFA, IFA, FA-IFF and AD-IFA in terms of both convergence speed and exploration efficiency. We attribute this to the seven tuned constants in the EE-FA: β, γ, b, h, k, f and g.

Finally, the proposed EE-FA enhances the exploration efficiency and speeds up convergence while avoiding the problem of falling into local optima.

5 Real application cases

In this section, the performance of seven algorithms (the FA, LF-FA, PSSFA, IFA, FA-IFF, AD-IFA and EE-FA) is evaluated on the following four real engineering applications: cantilever beam design [44], pressure vessel design [45], tension/compression spring design [46], and three-bar truss design [45].

For the FA, LF-FA, PSSFA, IFA, FA-IFF and AD-IFA, α, γ and β0 are set to 0.2, 1 and 1, respectively. The dimensions are d = 5 for cantilever beam design, d = 4 for pressure vessel design, d = 3 for tension/compression spring design and d = 2 for three-bar truss design, with corresponding maximum numbers of iterations of 1000, 1000, 200 and 200. Each case is repeated 50 times to evaluate the performance of the seven algorithms, and the results are recorded in the corresponding tables.

5.1 Cantilever beam design

This case is related to the weight optimization of a cantilever beam with a square cross section (Figs. 14, 15). The bound constraints are set as 0.01 ≤ xj ≤ 100. This problem can be expressed analytically as follows:

where \(0.01 \le x_{1} ,x_{2} ,x_{3} ,x_{4} ,x_{5} \le 100\).

| Case | Optimizer | Mean x1 | Mean x2 | Mean x3 | Mean x4 | Mean x5 | Mean of optimal value f(x) |
|---|---|---|---|---|---|---|---|
| Cantilever beam | FA | 26.5909 | 28.0631 | 30.3021 | 25.5186 | 30.3066 | 8.7848 |
| | LF-FA | 11.7234 | 14.147 | 14.2108 | 10.6045 | 12.1334 | 3.9199 |
| | PSSFA | 16.9475 | 18.2402 | 19.3348 | 14.8616 | 19.2409 | 5.5302 |
| | IFA | 19.9816 | 21.113 | 21.7019 | 19.0129 | 21.1807 | 6.4266 |
| | FA-IFF | 9.42642 | 12.044 | 12.2957 | 9.02233 | 9.6568 | 3.2714 |
| | AD-IFA | 6.4233 | 6.3218 | 5.6213 | 4.4494 | 2.6913 | 1.5916 |
| | EE-FA | 6.1428 | 5.6241 | 4.917 | 4.0009 | 2.7068 | 1.4596 |
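The analytic form of the cantilever problem was lost in extraction. The sketch below assumes the formulation commonly used in the metaheuristics literature: minimize the weight \(0.0624(x_{1} + x_{2} + x_{3} + x_{4} + x_{5})\) subject to \(61/x_{1}^{3} + 37/x_{2}^{3} + 19/x_{3}^{3} + 7/x_{4}^{3} + 1/x_{5}^{3} - 1 \le 0\). The objective is consistent with the table: because it is linear, evaluating it at the mean variables reproduces the mean optimal value, e.g. 0.0624 × (6.1428 + 5.6241 + 4.917 + 4.0009 + 2.7068) = 0.0624 × 23.3916 ≈ 1.4596 for the EE-FA. The constraint is the assumed standard one and is not confirmed by the text; the function names are hypothetical.

```python
from typing import Sequence


def cantilever_weight(x: Sequence[float]) -> float:
    """Assumed standard objective: 0.0624 * (x1 + x2 + x3 + x4 + x5)."""
    return 0.0624 * sum(x)


def cantilever_constraint(x: Sequence[float]) -> float:
    """Assumed standard constraint g(x) = 61/x1^3 + 37/x2^3 + 19/x3^3 + 7/x4^3 + 1/x5^3 - 1 <= 0."""
    coeffs = (61.0, 37.0, 19.0, 7.0, 1.0)
    return sum(c / xi ** 3 for c, xi in zip(coeffs, x)) - 1.0


# Mean variables of two rows of the table above.
ee_fa_mean = [6.1428, 5.6241, 4.917, 4.0009, 2.7068]
fa_mean = [26.5909, 28.0631, 30.3021, 25.5186, 30.3066]
ee_fa_weight = cantilever_weight(ee_fa_mean)
ee_fa_feasible = cantilever_constraint(ee_fa_mean) <= 0.0
```

A mean of feasible solutions need not itself be feasible for a nonlinear constraint, so the feasibility check on mean variables is only a sanity check, not evidence about individual runs.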

5.2 Pressure vessel design

To minimize the total cost of a cylindrical pressure vessel (PV), the shell thickness (Ts), the head thickness (Th), the inner radius (R) and the length of the cylindrical section (L) are optimized (Figs. 16, 17).

The optimization problem can then be expressed as follows:

where \(1 \times 0.0625 \le T_{s}, T_{h} \le 99 \times 0.0625\) and \(10 \le R, L \le 200\).
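The cost function and constraints are not reproduced in the text above. As an assumption, the sketch below uses the formulation most commonly stated for this benchmark: cost 0.6224·Ts·R·L + 1.7781·Th·R² + 3.1661·Ts²·L + 19.84·Ts²·R, subject to four constraints on the minimum shell thickness, minimum head thickness, minimum enclosed volume and maximum length. It also snaps Ts and Th to integer multiples of 0.0625, which is the usual reading of the bounds and likewise an assumption; `snap_thickness` is a hypothetical helper. Because this cost is nonlinear, evaluating it at averaged variables need not reproduce an averaged cost, so the two "mean" columns of the results table cannot be cross-checked against each other this way.

```python
import math

THICKNESS_STEP = 0.0625  # Ts and Th assumed to be integer multiples of this step


def snap_thickness(t):
    """Round a thickness to the nearest allowed value k * 0.0625 with 1 <= k <= 99."""
    k = min(max(round(t / THICKNESS_STEP), 1), 99)
    return k * THICKNESS_STEP


def pvd_cost(x):
    """Total cost for x = [Ts, Th, R, L] (commonly used coefficients)."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def pvd_constraints(x):
    """Return [g1, g2, g3, g4]; the design is feasible when every g_i <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,    # minimum shell thickness
        -th + 0.00954 * r,   # minimum head thickness
        -math.pi * r ** 2 * length - 4.0 / 3.0 * math.pi * r ** 3 + 1_296_000.0,  # volume
        length - 240.0,      # maximum length
    ]


if __name__ == "__main__":
    x = [snap_thickness(1.40), snap_thickness(0.63), 64.711, 13.54]
    print(x, pvd_cost(x), all(g <= 0.0 for g in pvd_constraints(x)))
```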

| Optimizer | Mean Ts | Mean Th | Mean R | Mean L | Mean optimal f(x) |
|---|---|---|---|---|---|
| FA | 2.6250 | 1.2500 | 60.478 | 104.87 | 23320.36 |
| LF-FA | 1.6875 | 0.5625 | 52.953 | 102.35 | 7386.577 |
| PSSFA | 2.1875 | 0.6875 | 54.104 | 103.90 | 11506.35 |
| IFA | 2.3750 | 0.8750 | 54.759 | 102.99 | 14664.36 |
| FA-IFF | 1.8750 | 0.5625 | 52.039 | 102.60 | 7683.466 |
| AD-IFA | 2.5625 | 0.5000 | 56.867 | 66.349 | 6540.617 |
| EE-FA | 1.3750 | 0.6250 | 64.711 | 13.540 | 5560.038 |

5.3 Tension/compression spring design (TCSD) problem

Another classic engineering problem is TCSD, in which the decision maker seeks to minimize the weight of a spring (Figs. 18, 19). The problem has three design variables: the wire diameter (d), the mean coil diameter (D) and the number of active coils (N).

The TCSD problem is formulated as follows.

Consider \( \, \overrightarrow {x} = [x_{1} ,x_{2} ,x_{3} ] = [d,D,N]\).

Minimize \( \, f(\overrightarrow {x} ) = (x_{3} + 2)x_{2} x_{1}^{2}\).

Subject to \(g_{1} (\overrightarrow {x} ) = 1 - \frac{{x_{2}^{3} x_{3} }}{{71785x_{1}^{4} }} \le 0\)

Variable ranges \(0.05 \le x_{1} \le 2.00,0.25 \le x_{2} \le 1.30,2.00 \le x_{3} \le 15.0.\)
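The tabulated optimal values are reproduced only if the wire diameter enters squared, f = (x3 + 2)·x2·x1², which is the usual form of this benchmark; with x1 to the first power, the EE-FA row would give 0.05 instead of 0.0025. The sketch below evaluates that objective at several rows of the TCSD results table and checks the stated variable ranges. The names `spring_weight`, `within_bounds` and `TABLE_ROWS` are hypothetical, and constraint handling is omitted.

```python
# Variable ranges of d, D and N as stated in the text.
SPRING_BOUNDS = [(0.05, 2.00), (0.25, 1.30), (2.00, 15.0)]

# Rows of the TCSD results table: mean [d, D, N] and the reported mean f(x).
TABLE_ROWS = {
    "FA": ([0.050, 0.25005, 4.7611], 0.0042266),
    "LF-FA": ([0.050, 0.25000, 2.3783], 0.0027365),
    "IFA": ([0.050, 0.25000, 2.9578], 0.0030986),
    "EE-FA": ([0.050, 0.25000, 2.0000], 0.0025000),
}


def spring_weight(x):
    """Spring weight f(x) = (N + 2) * D * d**2 for x = [d, D, N]."""
    d, coil_diameter, n_coils = x
    return (n_coils + 2.0) * coil_diameter * d ** 2


def within_bounds(x):
    """True when every variable lies inside its stated range."""
    return all(lo <= v <= hi for v, (lo, hi) in zip(x, SPRING_BOUNDS))


if __name__ == "__main__":
    for name, (x, reported) in TABLE_ROWS.items():
        print(name, spring_weight(x), reported, within_bounds(x))
```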

| Optimizer | Mean d | Mean D | Mean N | Mean optimal f(x) |
|---|---|---|---|---|
| FA | 0.050 | 0.25005 | 4.7611 | 0.0042266 |
| LF-FA | 0.050 | 0.25000 | 2.3783 | 0.0027365 |
| PSSFA | 0.050 | 0.25000 | 2.0000 | 0.0025000 |
| IFA | 0.050 | 0.25000 | 2.9578 | 0.0030986 |
| FA-IFF | 0.050 | 0.25000 | 2.0000 | 0.0025000 |
| AD-IFA | 0.050 | 0.25000 | 2.0000 | 0.0025000 |
| EE-FA | 0.050 | 0.25000 | 2.0000 | 0.0025000 |

5.4 Three-bar truss design

This problem was first presented by Nowacki in 1973. The volume of a statically loaded three-bar truss is to be minimized subject to stress (σ) constraints on each truss member (Figs. 20, 21). The objective is to determine the optimal cross-sectional areas (A1 and A2).

The mathematical formulation is given as follows:

with \(l = 100\;\text{cm}\), \(P = 2\;\text{kN/cm}^{2}\), \(\sigma = 2\;\text{kN/cm}^{2}\), and \(0 \le A_{1}, A_{2} \le 1\).
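The objective and constraints are not reproduced in the text above. Assuming the form in which this benchmark is usually stated, volume (2√2·A1 + A2)·l subject to three member-stress constraints, the sketch below evaluates it with the given l, P and σ. At A1 = 1, A2 = 0, the first and third stress constraints are active and the volume is 2√2·l; the tabulated 2.8284 equals 2√2, so the table appears to report the volume with the factor l omitted, which is an interpretation rather than something the text states. The function names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def truss_volume(a, length=100.0):
    """Volume (2*sqrt(2)*A1 + A2) * l of the three-bar truss."""
    a1, a2 = a
    return (2.0 * SQRT2 * a1 + a2) * length


def truss_constraints(a, load=2.0, sigma=2.0):
    """Stress constraints [g1, g2, g3]; the design is feasible when every g_i <= 0."""
    a1, a2 = a
    denom = SQRT2 * a1 ** 2 + 2.0 * a1 * a2
    return [
        (SQRT2 * a1 + a2) / denom * load - sigma,
        a2 / denom * load - sigma,
        1.0 / (a1 + SQRT2 * a2) * load - sigma,
    ]


if __name__ == "__main__":
    a = (1.0, 0.0)  # mean areas reported by most optimizers in the table
    print(truss_volume(a), truss_volume(a, length=1.0), truss_constraints(a))
```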

| Optimizer | Mean A1 | Mean A2 | Mean optimal f(x) |
|---|---|---|---|
| FA | 0.98238 | 0.026953 | 2.8300 |
| LF-FA | 1 | 0 | 2.8284 |
| PSSFA | 1 | 0 | 2.8284 |
| IFA | 1 | 0 | 2.8284 |
| FA-IFF | 1 | 0 | 2.8284 |
| AD-IFA | 1 | 0 | 2.8284 |
| EE-FA | 1 | 0 | 2.8284 |

6 Conclusions

Regarding global continuous optimization, the original FA suffers from low exploration efficiency and a slow convergence rate. Although the LF-FA improves exploration efficiency by introducing the Lévy distribution, it does so at the cost of a slower convergence rate. Building on the LF-FA, the AD-IFA introduces a logarithmic spiral path and adopts an adaptive switch mechanism, which effectively balances global exploration and local exploitation but greatly increases the computational cost. The PSSFA adopts a personalized step strategy to reduce the FA's tendency to become trapped in local optima, and the IFA uses a sigmoid attractiveness to improve convergence efficiency and convergence rate. Compared with the PSSFA, IFA and FA-IFF, the EE-FA achieves better exploration efficiency and convergence behaviour.

By enhancing the attraction between fireflies and introducing a damped vibration function, the EE-FA effectively improves exploration efficiency and accelerates convergence. Its computational cost is also lower than that of the AD-IFA.

In future work, we will further strengthen the attractiveness of fireflies and study the resulting exploration efficiency and convergence characteristics. We will also explore several application directions, including practical systems such as privacy-protection networks in social networking, and optimal parameter selection in machine learning model training.

References

Horng MH (2012) Vector quantization using the firefly algorithm for image compression. Expert Syst Appl 39(1):1078–1091

Montiel O, Sepúlveda R, Orozco-Rosas U (2015) Optimal path planning generation for mobile robots using parallel evolutionary artificial potential field. J Intell Robot Syst 79(2):237–257

Deb K (1995) Optimization for engineering design: algorithms and examples. Prentice-Hall, New Delhi

Yang X-S (2010) Firefly algorithm, stochastic test functions and design optimisation. arXiv:1003.1409

Maeda K, Fukano Y, Yamamichi S, Nitta D, Kurata H (2011) An integrative and practical evolutionary optimization for a complex, dynamic model of biological networks. Bioprocess Biosyst Eng 34(4):433–446

Horst R, Pardalos PM (1995) Handbook of global optimization. Springer Science & Business Media

Kavousi-Fard A, Samet H, Marzbani F (2014) A new hybrid modified firefly algorithm and support vector regression model for accurate short term load forecasting. Expert Syst Appl 41(13):6047–6056

Su CT, Lee CS (2003) Network reconfiguration of distribution systems using improved mixed-integer hybrid differential evolution. IEEE Trans Power Deliv 18(3):1022–1027

Goldfeld SM, Quandt RE, Trotter HF (1966) Maximization by quadratic hill-climbing. Econometrica 34(3):541–551

Abbasbandy S (2003) Improving Newton-Raphson method for nonlinear equations by modified adomian decomposition method. Appl Math Comput 145(2):887–893

Nelder JA, Mead R (1965) A simplex method for function minimization. Comput J 7(4):308–313

Widrow B, Stearns D (1985) Adaptive signal processing. Prentice Hall, Englewood Cliffs

Liu J, Wang F, Zhao H, Han G (2017) Filtering algorithm and application of fuze echo signal based on LMS principle. J Proj Rockets Missiles Guidance 37(06):45–47, 56

Yang X-S (2009) Firefly algorithms for multimodal optimization. In: International symposium on stochastic algorithms, pp 169–178

Chu X, Niu B, Liang JJ et al (2016) An orthogonal-design hybrid particle swarm optimiser with application to capacitated facility location problem. Int J Bio Inspired Comput 8(5):268–285

Yang X-S (2008) Nature-inspired Metaheuristic Algorithms. Luniver Press, Beckington

Yang X-S (2012) Flower pollination algorithm for global optimization. In: Durand-Lose J, Jonoska N (eds) Unconventional computation and natural computation, vol 7445. Springer, Berlin, pp 240–249

Saremi S, Mirjalili S, Lewis A (2017) Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47

Li W, Xue M, Jian-guo L (2011) Feature selection and target recognition based on improved particle swarm optimization algorithm. Comput Eng Des 32(11)

Zhen-long S, Xiao-ye L, Ying W (2015) Improved simple particle swarm optimization algorithm. Comput Sci 42(11A)

Zwe-Lee G (2003) Discrete Particle swarm optimization algorithm for unit commitment. In: IEEE power engineering society general meeting, vol 1, Ontario, Canada, pp 418–424

Gandomi AH, Alavi AH (2011) Multi-stage genetic programming: a new strategy to nonlinear system modelling. Inf Sci 181:5227–5239

Gandomi AH, Yang XS (2011) Benchmark problems in structural optimization. In: Koziel S, Yang XS (eds) Computational optimization, methods and algorithms, Chapter 12. Springer, Berlin, pp 267–291

Lukasik S, Żak S (2009) Firefly algorithm for continuous constrained optimization tasks. In: International conference on computational collective intelligence, pp 97–106

Yang X-S, Hosseini SSS, Gandomi AH (2012) Firefly algorithm for solving non-convex economic dispatch problems with valve loading effect. Appl Soft Comput 12(3):1180–1186

Jati GK et al (2011) Evolutionary discrete firefly algorithm for travelling salesman problem. In: Adaptive and intelligent systems. Springer, pp 393–403

Sánchez D, Melin P, Castillo O (2017) Optimization of modular granular neural networks using a firefly algorithm for human recognition. Eng Appl Artif Intell 64:172–186

Yang X-S, He X (2013) Firefly algorithm: recent advances and applications. arXiv:1308.3898

Olivas F, Valdez F, Castillo O, Gonzalez CI, Martinez G, Melin P (2017) Ant colony optimization with dynamic parameter adaptation based on interval type-2 fuzzy logic systems. Appl Soft Comput 53:74–87

Sánchez D, Melin P, Castillo O (2020) Comparison of particle swarm optimization variants with fuzzy dynamic parameter adaptation for modular granular neural networks for human recognition. J Intell Fuzzy Syst 38:3229–3252

Olivas F, Valdez F, Castillo O, Melin P (2016) Dynamic parameter adaptation in particle swarm optimization using interval type-2 fuzzy logic. Soft Comput 20:1057–1070

Olivas F, Valdez F, Melin P, Sombra A, Castillo O (2019) Interval type-2 fuzzy logic for dynamic parameter adaptation in a modified gravitational search algorithm. Inf Sci 476:159–175

Sánchez D, Melin P, Castillo O (2017) Optimization of modular granular neural networks using a firefly algorithm for human recognition. Eng Appl Artif Intell 64:172–186

Lagunes ML, Castillo O, Valdez F, Soria J, Melin P (2018) Parameter optimization for membership functions of type-2 fuzzy controllers for autonomous mobile robots using the firefly algorithm. In: North American fuzzy information processing society annual conference, pp 569–579

Castillo O, Soto C, Valdez F (2018) A review of fuzzy and mathematic methods for dynamic parameter adaptation in the firefly algorithm. In: Advances in data analysis with computational intelligence methods. Springer, pp 311–321

Yang X-S (2010) Firefly algorithm, Lévy flights and global optimization. In: Research and development in intelligent systems XXVI. Springer, pp 209–218

Shuhao Y, Xukun Z, Xianglin F, Zhengyu L, Mingjing P (2021) An improved firefly algorithm based on personalized step strategy. Computing. https://doi.org/10.1007/s00607-021-00919-9

Ao L, Li P, Deng X, Ren L (2021) A sigmoid attractiveness based improved firefly algorithm and its applications in IIR filter design. Connect Sci 33(1):1–25. https://doi.org/10.1080/09540091.2020.1742660

Navid K, Abidhan B, Pijush S, Majidreza N, Annan Z, Danial JA (2021) A novel technique based on the improved firefly algorithm coupled with extreme learning machine (ELM-IFF) for predicting the thermal conductivity of soil. Eng Comput. https://doi.org/10.1007/s00366-021-01329-3

Wu J, Wang Y-G, Burrage K, Tian Y-C, Lawson B, Ding Z (2020) An improved firefly algorithm for global continuous optimization problems. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2020.113340

Huang J, Cui X, Li D, Feng Y, Lu D (2004) Observation and data analysis in phase space for Pohl pendulum. Acta Sci Natur Univ Sunyatseni 43(Suppl):39–41

Surjanovic S, Bingham D (2018) Virtual library of simulation experiments: test functions and datasets. http://www.sfu.ca/~ssurjano. Accessed 3 Dec

Yelghi A, Köse C (2018) A modified firefly algorithm for global minimum optimization. Appl Soft Comput 62:29–44

Fleury C, Braibant V (1986) Structural optimization: a new dual method using mixed variables. Int J Numer Methods Eng 23(3):409–428

Gandomi AH, Yang X-S, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(1):17–35

Dhiman G, Kumar V (2017) Spotted hyena optimizer: a novel bio-inspired based metaheuristic technique for engineering applications. Adv Eng Softw 114:48–70

Acknowledgements

This work used resources and services provided by Southeast University. The computing platform was provided by the laboratory of Nanjing Institute of Technology. This work was supported by the National Natural Science Foundation of China (Grant No. 51705238).

Author information


Contributions

JL: conceptualization, investigation, methodology, validation, software, writing, revision, review and editing, visualization; JS: supervision, project administration, funding acquisition; FH: methodology, formal analysis, writing, revision; MD: software, revision, review, conceptualization, formal analysis; XZ: revision, review, analysis.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Liu, J., Shi, J., Hao, F. et al. A novel enhanced exploration firefly algorithm for global continuous optimization problems. Engineering with Computers 38 (Suppl 5), 4479–4500 (2022). https://doi.org/10.1007/s00366-021-01477-6


DOI: https://doi.org/10.1007/s00366-021-01477-6