Abstract

The butterfly optimization algorithm (BOA) is a new metaheuristic algorithm that is inspired from food foraging behavior of the butterflies. Because of its simplicity and effectiveness, the algorithm has been proved to be effective in solving global optimization problems and applied to practical problems. However, BOA is prone to local optimality and may lose its diversity, thus suffering losses of premature convergence. In this work, a hybrid metaheuristic algorithm using butterfly and flower pollination base on mutualism mechanism called MBFPA was proposed. Firstly, the flower pollination algorithm has good exploration ability and the hybrid butterfly optimization algorithm and the flower pollination algorithms greatly improve the exploration ability of the algorithm; secondly, the symbiosis organisms search has a strong exploitation capability in the mutualism phase. By introducing the mutualism phase, the algorithm's exploitation capability is effectively increased and the algorithm's convergence speed is accelerated. Finally, the adaptive switching probability is increased to increase the algorithm's balance in exploration and exploitation capabilities. In order to evaluate the effectiveness of the algorithm, in the 49 standard test functions, the proposed algorithm was compared with six basic metaheuristic algorithms and five hybrid metaheuristic algorithms. MBFPA has also been used to solve five classic engineering problems (three-bar truss design problem; multi-plate disc clutch brake design; welded beam design; pressure vessel design problem; and speed reducer design). The results show that the proposed method is feasible and has good application prospect and competitiveness.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In real life, optimization is everywhere. Optimization is a process of finding the optimal solution. At present, a large number of optimization methods have been used to deal with optimization problems. Most of the traditional optimization methods rely on gradient information to update the solution, and the position of the initial solution affects the quality of the final solution. Therefore, in many practical engineering design problems, it is difficult to get a satisfactory solution based on gradient optimization method. Recently, a nature inspired metaheuristic optimization method is becoming more and more popular with the field of optimization and is widely used to solve complex optimization problems of various fields. According to a survey, metaheuristic has solved the optimization problem with sufficient efficiency and reasonable calculation cost compared with the accurate method [1].

The term "metaheuristic" generally refers to the approximate algorithm used for optimization, which is not specifically expressed for a particular problem, some metaheuristics are inspired by natural processes such as evolution, while others are extensions of less complex algorithms such as greedy heuristics and local search [2]. Existing natural metaheuristic algorithms can be simply divided into the following four categories: swarm intelligence based, bio-inspired, physical/chemical inspired and human behaviors. Among the population-based algorithms, some of the more classical algorithms are particle swarm optimization (PSO) [3], ant colony optimization (ACO) [4], artificial bee colony (ABC) [5], cuckoo Search (CS) [6], flower pollination algorithm (FPA) [7], firefly algorithm (FA) [8]. In addition, in recent years, some novel and effective swarm algorithms have been proposed, including Grey wolf optimizer (GWO) [9], whale optimization algorithm (WOA) [10], Harris hawks optimization (HHO) [11], symbiotic organisms search (SOS) [12], butterfly optimization algorithm (BOA) [13], etc. Bio-inspired algorithm includes evolutionary algorithms and immune type algorithms: evolutionary algorithms imitate the reproduction, recombination, selection and mutation stages of biological evolution. Some common evolutionary algorithms include genetic algorithms (GA) [14] and differential evolution (DE) [15]; immune type algorithm is to propose optimization algorithm based on immune mechanism and comply with immunological principle. The most representative ones are immune genetic algorithm (IGA) [16], clonal selection algorithm (CSA) [17], negative selection algorithm (NSA) [18], b-cell algorithm (BCA) [19], etc. In the third physical/chemical inspired algorithm, search individuals are updated based on physical phenomena, rules or some chemical reaction rules. For example, simulated annealing (SA) [20], gravitational search algorithm (GSA) [21], lightning search algorithm (LSA) [22], multi-verse optimizer (MVO) [23], electromagnetic field optimization (EFO) [24], equilibrium optimizer (EO) [25], chemical reaction optimization (CRO) [26], artificial chemical reaction optimization algorithm (ACROA) [27], etc. In the last kind of algorithm based on human behavior, it is subdivided in paper [28] social ideologies, sports competitive behavior, social and cultural interaction and condensation. Among them, the representative ones are cultural evolution algorithm (CEA) [29], teaching learning-based optimization (TLBO) [30], imperial list competitive algorithm (ICA) [31], etc.

Butterfly optimization algorithm (BOA) is a metaheuristic algorithm proposed by Arora and Singh. BOA is based on food foraging behavior and information sharing strategy of butterflies [13]. Literature [13] shows that the performance of BOA is better than that of the generally accepted optimization algorithm. Since the BOA algorithm was proposed, in order to obtain better results in the exploitation and exploration capabilities of the algorithm, Arora et al. have made a series of improvements on BOA: literature [32] proposed an improved butterfly optimization algorithm, which adopts the variable sensing modal parameter strategy to improve the convergence speed of the algorithm; literature [33] proposed an improved butterfly optimization algorithm with chaos; the algorithm's exploration capability has been increased; literature [34] proposed a hybrid method BOA/DE by the ensemble of BOA and DE algorithm, which combines the advantages of BOA and DE to enable the algorithm to achieve between exploration and exploitation balance to produce efficient results; literature [35] proposed the hybrid method BOA/ABC by the ensemble of BOA and ABC algorithm, which is similar to BOA/DE; literature [36] introduced learning automata, which not only keeps the main characteristics of the basic BOA, but also accelerates the global convergence speed and achieves the real global optimization; literature [37] proposed an modified BOA (MBOA), which adds the modification operation to the optimal position, thereby increasing the algorithm exploitation capability. In addition, Singh et al. [38] proposed an adaptive butterfly optimization algorithm, which improved the convergence speed of the algorithm by changing the sensory mode of BOA; Li [39] proposed an improved butterfly optimization algorithm (BOA), by embedding the cross-entropy (CE) method into the BOA, and the results showed that the improved algorithm achieved a good balance in exploration and exploitation; Sharma and Sushmita [40] proposed a butterfly optimization algorithm enhanced with mutualism scheme which improves the exploitation ability of BOA. Sharma et al. [41] proposed an integrated algorithm of butterfly optimization algorithm and symbiotic biological search, called hBOSOS. The global search capability of BOA and the local search capability of SOS are combined to make the proposed hBOSOS robust and effective.

In addition to improving the BOA, researchers have carried out a wide range of applications of BOA. Arora et al. [42] applied BOA to feature selection. Jalali et al. [43] applied BOA to train artificial neural network. Wang et al. [44] used butterfly optimization algorithm to optimize the extreme learning machine technology and applied it to port throughput prediction. Kisi et al. [45] used BOA to forecast drought in a semi-arid environment. Priyadharshini et al. [46] used BOA to optimize capacitated vehicle routing problem (CVRP). Abdul-Rashid et al. [47] used BOA to optimize the parameters of a designed Lead-Lad Controller. El Hajjami et al. [48] used BOA optimal PID control of an autonomous vehicle. Sharma et al. [49] used BOA/ABC for node localization in acoustic sensor networks. Du et al. [44] used butterfly optimization algorithm to optimize the ELM technology for container throughput prediction. These successful applications are due to BOA's advantages over other optimization methods. That is to say, BOA has a few parameters to be instantiated, and no preprocessing stage is required before the main body of the BOA [40]. Moreover, the algorithm is simple and has strong expansibility, which attracts researchers to expand and improve it and apply it in more and more complex fields.

Another efficient metaheuristic algorithm is flower pollination algorithm (FPA), developed by Yang. FPA belongs to bio-inspired algorithms that simulate flower pollination behavior in nature [7]. In recent years, there have been a large number of improved versions of the pollination algorithm, and it has been applied to solve practical optimization problems. The improved FPA versions include: Wang et al. [50] proposed the flower pollination algorithm with dimension by dimension improvement; Zhao et al. [51] proposed a complex encoding flower pollination algorithm; Zhou et al. [52] proposed the elite opposition-based flower pollination algorithm. Nabil et al. [53] proposed a modified flower pollination algorithm. Singh et al. [54] proposed an extended version of flower pollination algorithm. Lei et al. [55] proposed wind-driven flower pollination algorithm. Pauline et al. [56] proposed an improved flower pollination algorithm with chaos theory. At the same time, FPA has been successfully applied to solve various practical optimization problems, mainly in the following areas: cloud computing [57], data clustering [58,59,60], wireless sensor networks (WSNs) optimization [61,62,63], graph coloring problem [64], neural networks training [65, 66], economic load dispatch problem [67,68,69,70], ratios optimization problems (ROPs) [71], vehicle path planning problem [72, 73] and medical image segmentation [74], etc.

BOA has been applied to many aspects, but in terms of the algorithm itself, BOA is easy to fall into the local optimal solution in the early search process; one of the reasons is that the optimal solution is not fully utilized. Moreover, it was observed that random selection of exploration and exploitation phases based on the selected value of switching probability sometimes causes the BOA to lose direction and move away from the global best solution [37]. Therefore, in order to effectively improve the algorithm in the exploration and exploitation capacity and better balance the algorithm in the two stages of switching, in this paper, a hybrid butterfly optimization algorithm and flower pollination base on mutualism mechanism were proposed, which is called MBFPA.

Mutualism is the most common and important inter-specific relationship in ecosystem, and ecosystem cannot exist without mutualism. In nature, flowers send out fragrance to attract butterflies to spread pollen, which makes flowers bear fruit. Butterflies can get nectar and habitat from flowers. The processes of butterfly foraging and flower pollination are a mutually beneficial process. Inspired by the mutualism of different species in nature, a hybrid metaheuristic algorithm using butterfly and flower pollination base on mutualism mechanism was proposed. At the same time, the adaptive switching probability is introduced so that the algorithm can effectively balance the exploration and exploitation stages in the process of operation.

The primary contributions of this paper are summarized as follows:

-

1.

A hybrid metaheuristic algorithm using Butterfly and Flower Pollination base on mutualism mechanism is proposed.

-

2.

The exploration capability of the BOA and FPA is retained, and the exploitation capability is increased through the mutualism mechanism; the dynamic switching probability balance exploitation and exploration ratio is introduced.

-

3.

To fully test the effectiveness of MBFPA, several performance aspects including accuracy, convergence and statistics are evaluated by using 49 complex benchmark functions.

-

4.

The proposed MBFPA algorithm has been utilized to solve six constrained engineering optimization problems, such as (a) three-bar truss design problem; (b) multi-plate disc clutch brake design; (c) pressure vessel design problem; (d) welded beam design problem and (e) speed reducer design.

The rest of this paper is organized as follows: Sect. 2 briefly introduces the BOA and FPA. Section 3 introduces a hybrid butterfly optimization algorithm and flower pollination algorithm base on mutualism mechanism (MBFPA). Section 4 describes the theoretical comparison with other algorithms. Section 5 describes simulation experiments and result analysis. Finally, our conclusions and ideas for future work are presented in Sect. 6.

2 Butterfly optimization algorithm and flower pollination algorithm

2.1 Butterfly optimization algorithm

The butterfly optimization algorithm [13] is a new metaheuristic algorithm. The algorithm is mainly based on the foraging strategy of butterflies, which utilize their sense of smell to determine the location of nectar or mating partner. In order to find the source of nectar, butterflies use sense receptors which are used to smell and these receptors are scattered over butterfly’s body parts like legs, palps, antennae, etc. [37]. In the BOA, it is assumed that each butterfly can release its own fragrance, which is related to its fitness value. The foraging of butterflies can be divided into two situations: when the butterfly can feel the fragrance of the best butterfly in the search space, it will move towards the best butterfly, which is called the global search stage of BOA. When the butterfly can't detect the smell of other butterflies, it will move forward randomly. This stage is called local search. The process of BOA algorithm is described by the following three rules [13]:

-

1.

The fragrance is formulated as a function of the physical intensity of stimulus as follows:

$$f_{i} = cI^{a}$$(1)where \(f_{i}\) is the perceived magnitude of fragrance, how stronger the fragrance is perceived by other butterflies, c is the sensory modality, I is the stimulus intensity and a is the power exponent depended on modality, which accounts the varying degree of absorption.

-

2.

In global search phase, the butterfly takes a step towards the fittest butterfly/solution g* which can be represented as:

$$X_{i}^{t + 1} = X_{i}^{t} + (r^{2} \times g^{*} - X_{i}^{t} ) \times f_{i}$$(2)Xit is the solution vector Xi of the ith butterfly in the t iteration. g* represents the best solution currently found. Fragrance of ith butterfly is represented by fi and r is a random number in [0,1].

-

3.

Local search phase can be represented as:

$$X_{i}^{t + 1} = X_{i}^{t} + (r^{2} \times X_{j}^{t} - X_{k}^{t} ) \times f_{i}$$(3)where Xjt and Xkt are the jth and kth butterflies randomly selected in the solution space. The switch probability p is used in BOA to switch between global search and local search. BOA is presented in Algorithm 1.

2.2 Flower pollination algorithm

Flower pollination algorithm (FPA) [7] is a new metaheuristic swarm intelligence optimization algorithm proposed by Xinshe Yang in 2012. This optimization algorithm simulates the pollination process of plant flowers in nature, mainly including cross-pollination and self-pollination of flowers. In the process of cross-pollination, the flight behavior of the propagator (butterfly, bee, etc.) obeys \(Levy\) flight distribution. In the process of self-pollination, the mature pollen of plants spreads to their own flowers or different flowers of the same type of plants. The process of FPA algorithm is described by the following four rules [7]:

-

1.

Flower constancy can be considered as the reproduction probability is proportional to the similarity of two flowers involved.

-

2.

In the implementation of the algorithm, the conversion between local and global pollination mechanisms is controlled by the value of conversion probability p ∈ [0,1].

-

3.

In the process of biological cross-pollination, the Propagator's flight obeys \(Levy\) flight for global pollination. The formula is described as follows:

$$X_{i}^{t + 1} = X_{i}^{t} + Levy(\lambda )(X_{i}^{t} - g^{*} )$$(4)where \(X_{i}^{t + 1}\) and \(X_{i}^{t}\) represent the position of pollen individual \(i\) in the \(t + 1\) and \(t\) generations, \(g^{*}\) represents the position of the optimal flower and/or a pollen gamete the current population and represents Levy the step factor obeying the Levy distribution, as shown in the following formula:

$$L\sim \frac{\lambda \Gamma (\lambda )\sin (\pi \lambda /2)}{\pi }\frac{1}{{S^{1 + \lambda } }},(S \ge S_{0} > 0)$$(5)where Γ(λ) is the standard gamma function, \(\lambda\) = 1.5.

-

4.

Abiotic self-pollination can be regarded as the local pollination stage in the algorithm, which is expressed as

$$X_{i}^{t + 1} = X_{i}^{t} + \varepsilon (X_{j}^{t} - X_{k}^{t} )$$(6)where \(X_{j}^{t}\) and \(X_{k}^{t}\) represent the position of two different pollens in the same kind of plants. They are randomly selected two individuals; \(\varepsilon\) is the variable of uniform distribution in [0, 1]. FPA is presented in Algorithm 2.

3 Hybrid butterfly optimization algorithm and flower pollination algorithm base on mutualism mechanism

As already discussed, since the butterfly optimization algorithm was proposed, researchers have made different attempts to better improve the algorithm's exploration and exploitation capabilities: Arora and Singh proposed BOA/DE [35], BOA/ABC [34], balanced the algorithm's ability to exploitation and exploration. Because the two different algorithms are mixed, the advantages of the original algorithm can be retained. In addition, the addition of two different renewal mechanisms will inevitably increase the diversity of the population. Sharma and Sushmita [40] effectively improved the algorithm exploitation ability by adding the SOS algorithm to the exploitation stage of the original butterfly optimization algorithm. A good algorithm should be able to take into account two aspects. When the exploitation phase of an algorithm is dominant, the early population of the algorithm will concentrate on the best individual attachments early, leading to the loss of population diversity. During the algorithm update process, If too much consideration is given to the exploration capability of the algorithm, the exploration with low accuracy may lose the optimal solution and slow convergence rate.

Therefore, how to balance the two aspects of the algorithm becomes the key.

Thus, the success of a metaheuristic method on a given optimization problem is defined by its ability to provide a good balance between the exploration and exploitation. The exploration defines the global search ability of the algorithm, whereas the exploitation is the ability to find the optimum around a near-optimal solution, which can also be considered as the local search ability [75].

Therefore, in the current study, in order to deal with this imbalance between exploration capability and exploitation capability, BOA and FPA were adopted to mix through mutualism. The combination of diverse metaheuristics can lead to new exciting approaches since the hybridization can be used to get the advantage of different metaheuristics [76]. Here, the first step is to divide the entire population into two subpopulations: butterflies and flowers. Independent evolution between subpopulations can gain the advantages of both algorithms while increasing the diversity of the entire population. At the same time, the dynamic switching probability is introduced to balance the reasonable distribution of exploration and exploitation. The second stage is called mutualism stage. In this stage, the individual butterfly should randomly select a flower for mutualism, and the flower should do the same, so as to increase the exploitation capability of the algorithm.

Mutualism refers to two kinds of organisms living together, which are mutually beneficial. After the two are separated, life will be greatly affected, even death. Among them, insects, birds, mammals and many other creatures serve for pollination and seed transmission of flowering plants. Plants provide them with nectar and fruits in return. Without symbiosis, most plants would not survive. An example of reciprocity is the relationship between the butterfly and the flower, and the butterfly looks for food/nectar in the flower cluster for the butterfly to maintain its own survival, which is also beneficial to the flower in this activity, because the butterfly's foraging distributes pollen in the process, which is beneficial to the pollination of plants. It's good for both sides of life.

In order to simulate the mutualism between butterfly and flower, the mutualism stage of symbiotic organisms search (SOS) is introduced. In 2014, Cheng and Prayogo [12] proposed SOS, which simulates the interaction between organisms in the ecosystem. In the search space, each individual of different species is regarded as a candidate solution. The algorithm randomly initializes n organisms and generates an ecosystem. On this basis, individuals are updated through mutualism, commensalism and parasitism. SOS has good exploitation capabilities with the processes of mutualism and commensalism. SOS uses best solution as a reference point that might help in exploiting the neighborhood solutions of the current best solution [77].

This paper introduces the mutualism phase in the SOS algorithm. The renewal formula of SOS mutualism stage is introduced as follows [12]:

where \(Mutual_{agent}\) expressed the relationship between the two organisms in the t generation \(x_{i}^{t}\) and \(X_{j}^{t}\). rand[0,1] is a vector of random numbers. \(g^{*}\) is the best organism in the population, and BF1 and BF2 are the interest factors of randomly generated 1 or 2. These factors represent the favorable degree of interaction between two organisms.

In addition, the BOA algorithm uses fixed switching probability in the whole search process. However, the reasonable process should be: search in the global scope in the early stage, and increase the mining intensity in the local scope in the later stage to increase the accuracy of the solution. Therefore, in order to effectively balance the two stages of exploration and exploitation, the dynamic switching probability is used, as shown in the following formula:

where \(Max\_iter\) is the maximum number of iterations and \(itrer\) is the current number of iterations.

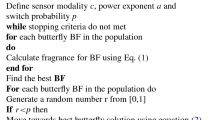

The flowchart of the proposed approach is described in Fig. 1, and pseudocode for the mutualism butterfly flower pollination algorithm is presented in “Algorithm 3”:

4 Theoretical comparison with other algorithm

In the BOA (Arora and Singh), the butterfly optimization algorithm is conceptually compared with other algorithms, namely ABC, CS, DE, FA, GA, MBO and PSO. Based on the comparison of the BOA, m-MBOA makes a comprehensive conceptual comparison among SOS, Jaya, improved BOA, chaotic BOA (CBOA), modified BOA, mutated BOA(BOA-C), BOA/DE and BOA/ABC.

The difference between the proposed algorithm and m-MBOA is that m-MBOA only introduces the symbiosis stage unilaterally, which increases the exploitation ability of the algorithm, and the symbiosis is based on the symbiosis between species. The introduction of the proposed algorithm in the symbiosis phase is not only between species, but also through the interaction between butterflies and flowers. Additionally, the introduction of dynamic switching probability can better balance the ratio of exploitation and exploration within the subpopulation.

TLBO is an algorithm based on human behavior, and its inspiration comes from the teaching and learning process in the classroom. The iterative evolution process of TLBO includes the teaching phase and the learning phase. To enhance the average knowledge level of the class, learners improve their knowledge levels by learning from the teacher in the teaching phase, and they also improve their knowledge levels by learning interactively from another learner selected randomly in learning phase [30]. MBFPA is a hybrid algorithm of butterfly optimization algorithm and flower pollination algorithm, inspired by the mutually beneficial relationship between butterflies and flowers in nature. Through the combination of the two algorithms, the exploration ability of butterfly algorithm and flower pollination algorithm is preserved. Across the introduction of SOS symbiosis stage, the exploitation ability of the algorithm can be enhanced and the probability of losing the optimal solution of the algorithm can be reduced.

GSA can be considered as physics-based metaheuristic search algorithm. At the beginning of the algorithm, each individual is given a mass, and the law of gravity between two objects is used to guide the motion optimization of each particle to search for the optimal solution. Superposition of the gravitational forces, dependency to the distance and the relation between mass values and fitnesses make this algorithm unique [78]. The proposed algorithm is a metaheuristic algorithm that simulates the mixed symbiotic relationship between two organisms in nature, mainly including two basic algorithms of BOA and FPA, which, respectively, simulate the foraging of butterfly and the pollination of flower, and the two subgroups switch through p for exploration and exploitation. At the same time, the increased mutualism stage enables the exchange of information between subgroups, reducing the possibility of entering the local optimal solution.

Hbosos is a hybrid algorithm of BOA and SOS. By combining BOA and SOS, the algorithm can retain the advantages of the two algorithms to the greatest extent. The proposed algorithm does not integrate the SOS algorithm, but improves the algorithm development ability by introducing the mutually beneficial symbiosis stage of SOS.

Li, Guocheng et al. proposed an improved butterfly optimization algorithm (BOA) using the cross-entropy method. The cross-entropy (CE) method was developed by Rubinstein [79] in 1997 to estimate the probability of rare events in complex random networks. This paper embeds the CE method into the BOA to obtain a good balance between exploration and exploitation and improve the BOA’s global search capability. The proposed algorithm is a hybrid algorithm based on two kinds of biological mutualism mechanisms in nature. It enhances the ability of algorithm development by adding mutualism mechanism. In the value of switching probability, adaptive dynamic switching probability is used.

Arora and Singh proposed Learning automata-based butterfly optimization algorithm. Learning automata have been embedded in BOA in which a learning automaton takes the role of configuring the behavior of a butterfly in order to create a proper balance between the process of global and local search [41], by introducing the adaptive dynamic switching probability, which is a simpler and more effective method.

5 Simulation experiments and result analysis

In order to verify the effectiveness of the proposed algorithm, as shown in Table 1, 49 different benchmark functions are tested, including function name, type, dimension, search scope, formulation and optimal value. Generally speaking, reference functions can be divided into two categories: unimodal function and multimodal function. Different types of test functions have different characteristics. Among them, the single-peak test function is used to verify the excavation ability. Multimodal test functions have many local optimal solutions, which is helpful to test the exploration ability of the algorithm and the ability to avoid the optimal value.

The algorithm is implemented in MATLAB R2018b. Experiments are performed on a PC with a 3.30 GHz, Intel(R) Core(TM) i5 CPU, System type 64 bit, Windows 10 operating system. To increase reliability and generate statistically significant results, each function was run 30 times in this validation test. The mean and standard deviation of the proposed algorithm and other algorithms for comparison were recorded.

5.1 Comparison with basic BOA and enhanced BOA

In order to evaluate the performance of the proposed algorithm compared with BOA [13] and enhanced BOA (m-MBOA) [40], it is worth noting that in the comparison experiment, the parameter Settings of the proposed algorithm are the same as those of the other two algorithms in the paper. In this comparison, the number of population is fixed at 50 and the maximum number of iterations is fixed at 10,000. The simulation results include average results and corresponding standard deviation. In the simulation of all reference functions, sensory modality (c) is 0.01 and power exponent (a) is increased from 0.1 to 0.3.

From the test results shown in Table 2, it can be seen that under the same test conditions, f5, f14, f20, f25 and f28 are superior to the other two algorithms, and other test functions can achieve the same accuracy as the other two algorithms. It can be concluded that the proposed algorithm can get high-precision results; in addition, it has better results in multiple functions.

5.2 Comparison with improve FPA

In order to compare the performance of the algorithm with other improved flower pollination algorithms, it is compared with dimensional evolution FPA (MFPA) [50] and bee FPA (BPFPA) [80]. In the comparison experiment, the population size n is set to 30 and the number of iterations is 500 generations. The switching probability of MFPA and BPFPA is set to 0.8, and the parameter setting of the proposed algorithm is consistent with the previous section.

From the test results shown in Table 3, the results compared in some test functions all reached the same accuracy. Except in f10, f25 was less effective than MFPA, and the rest of the test results were better than the comparison algorithm. Therefore, on the whole, the proposed algorithm has better results compared with the two improved FPA (Table 4).

5.3 Comparison with other basic metaheuristic methods

To verify the results of the proposed algorithm, the other six state-of-the-art metaheuristic algorithms are employed: differential evolution( DE), flower pollination algorithm (FPA), particle swarm optimization (PSO), gravitational search algorithm (GSA), symbiotic organisms search (SOS) and teaching learning-based optimization (TLBO). These algorithms have been widely used in various fields. In the current experiment, the population size and maximum iteration of all algorithms are set to 30 and 500, respectively. In 30 independent operations, Table 5 shows function results performance statistics, Table 6 shows the mean and standard deviation of the proposed algorithm and other algorithms in solving the benchmark function, and the bold numbers represent the relatively best values of the compared algorithms. The specific parameter values of the comparison algorithm are shown in Table 4.

From the statistical data, Table 6 shows that the proposed algorithm fails to get satisfactory solutions in functions f10, f14, f20, f26, f28, f43, f46, f48 and f49. The functions of f10, f14, f20, f28, f48 and f49 are far from the theoretical values. Compared with the results obtained by other algorithms in these functions, the DE gets relatively better results in f10 and f47. SOS gets relatively better solutions in f10, f20, f47, f48 and f49; the functions of f49 are far from the theoretical values. PSO get relatively better solutions in function f28. TLBO gets relatively better solutions in f39, f42, f47, but these values are still different from the theoretical values. From the overall perspective, among the 49 benchmark functions, the proposed algorithm can find the theoretical optimal value among the 27 test functions. However, the number of theoretical values of DE, FPA, PSO, GSA, SOS and TLBO is 16, 8, 6, 4, 15 and 15, respectively. Meanwhile, Table 5 shows the number of occasions where the mean performance of MBFPA is better than, equality and worse than other comparison algorithms. From this table, it can be observed that the proposed algorithm performs better than DE, FPA, PSO, GSA, SOS and TLBO in 25,39,40,44,22 and 25 benchmark functions, respectively, equality results are seen in 15, 8, 5,3,18 and 18, and worse results are obtained in 3, 2, 3, 1, 4 and 2. Through the comparison of the experimental results and data, we can see that in most of the test functions, the proposed algorithm can get a more satisfactory solution, and has a strong competitiveness with the compared algorithm.

In addition, the performance of the algorithm is statistically tested. Wilcoxon nonparametric statistical test [80] and Friedman rank test [81] were conducted in this paper. Wilcoxon’s non-parametric statistical test returns a parameter call p-value [80]. When p value is less than 0.05, there is a significant difference between the two algorithms in solving the problem; when p > 0.05, there is no significant difference between the two algorithms in solving the problem. From the p-value test results in Table 7, except for functions f2, f4, f6, f 9, f13, f15, f21,f22, f31,f36 and f44, most of the algorithms can converge to the theoretical value, resulting in no significant difference in the test results of these functions; for the remaining functions, in function f16 and f41 the proposed algorithm has no significant difference with SOS; the proposed algorithm has no significant difference with PSO in function f22, f37, f42 and f49; the proposed algorithm has no significant difference with TLBO in function f22,f26,f37,f39, f41, f48. There are significant differences in other functions. Therefore, from the p value test results, this further shows that the proposed algorithm has superior performance.

Table 8 presents the ranks obtained by Friedman rank test from the mean performances of the algorithms for each benchmark functions. As can be seen from Table 8, MBFPA has the smallest grade, which indicates that MBFPA 's performance is better than the comparison algorithm (Table 9).

In order to compare the advantages of the algorithm in terms of convergence speed, Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13 and 14 show the convergence curve of the proposed algorithm and DE, FPA, PSO, GSA, SOS, TLBO on 14 benchmark functions. Among all the fitness value convergence graphs, their convergence curves are based on the results of 30 times of independent operation of 7 algorithms, and all the convergence curves are drawn with the average value. As shown in Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15, f11, f14, f24, f30 and f33 can reach the fastest convergence speed and can find the global optimal solution. In f6 functions, the fastest convergence speed is not achieved, but still can have a better ranking and ultimately can find the global optimal solution; f1, f 3, f5, f7, f12, f19, f 23, function in the early convergence speed is not the first, but in the later search, it reaches the highest convergence rate and continues to converge to the highest accuracy. Although the convergence effect of some functions cannot reach the fastest convergence rate in the early stage, and the convergence rate of f6 is lower than that of SOS, overall speaking, the convergence rate of the proposed algorithm is better than that of most other comparison algorithms.

5.4 Compared with the hybrid metaheuristic algorithm

In addition to the comparison with the basic metaheuristic algorithm, the other five hybrid metaheuristic algorithms are employed: hybrid algorithm of particle swarm optimization and Grey wolf optimizer (PSOGWO) [82], sine cosine crow search algorithm (SCSCA) [83], hybrid firefly and particle swarm optimization algorithm (HFPSO) [82], modified flower pollination algorithm(MFPA) [53] and hybrid whale optimization algorithm based on modified differential evolution (MDE-WOA) [84].

In the current experiment, the population size and maximum iteration number of all algorithms are set to 30 and 500, respectively. Among the 30 independent operations, Table 10 shows the performance statistics of the results of the function, and Table 11 shows the mean and standard deviation of the proposed algorithm and other algorithms in solving the benchmark function. Bold numbers represent the relative optimal values of the compared algorithms. The specific parameter values of the comparison algorithm are shown in Table 9.

According to the performance statistics in Table 10, among the 49 test results of MBFPA, 48 test results were better than PSOGWO. In the SCCSA results, the test results of f10, f 14, f20, f 26, f 28 and f 34 are better than the proposed algorithm, but there is a large gap with the theoretical value. In other test functions, it can be seen from Table 10 that the test results of the proposed algorithm are better than those of SCCSA in 38 test functions and achieve the same effect in 5 test results. In the HFPSO results, the HFPSO test results are better than the proposed algorithm in the test functions f10, f20, f43 and f49, but f20 and f49 are far from the theoretical values. In the remaining test functions, the proposed algorithm is better than the HFPSO in 37 test results and gets the same value in eight test function results. In the test results of MFPA, the proposed algorithm is not better than MFPA in 11 test functions, but better than the comparison algorithm in 24 test function results, and achieves the same effect in 14 test functions. In the test results of MDE-WOA, the results of functions f14, f20, f28, f34, f43 and f46 are better than the proposed algorithm, but it can be seen from Table 10 that the proposed algorithm is better than MDE-WOA in the 38 test results. Therefore, through the comparison of experimental results and data, we can see that in most of the test functions, the algorithm proposed in this paper can get more satisfactory solutions and has a strong competitiveness compared with the comparison algorithm.

In addition, from the p-value test results in Table 12, there is no significant difference in the test results of these functions, except that the comparison algorithm reaches the theoretical value in individual test results; for most of the other test functions, p-value is less than 0.05. Table 13 presents the ranks obtained by Friedman rank test from the mean performances of the algorithms for each benchmark functions. It can be seen from Table 13 that MBFPA has the smallest grade, which indicates that MBFPA's performance is better than the hybrid metaheuristic algorithm compared.

Figures 16, 17, 18, 19, 20, 21, 22 and 23 illustrate the convergence of the fitness values of PSOGWO, SCSCA, MFPA, HFPSO, BPFPA and MDE-WOA. These convergence graphs are based on the results of 30 independent runs of the six algorithms. From these figures, it can be clearly seen that MBFPA obtains the global optimal value faster than the other four algorithms. These experimental results demonstrate that MBFPA, which reflects its strong global search capability.

5.5 Results analysis

Now, why is MBFPA so effective? It mainly includes the following three aspects:

-

1.

The introduction of dynamic switching probability perfectly balances the distribution of exploitation and exploration.

-

2.

Because of the mixture of BOA and FPA, the exploration capability of the two algorithms is well reserved.

-

3.

Through the introduction of mutualism phase, the mutualism of individuals enhances the exploitation ability of the algorithm.

In particular, the butterfly optimization algorithm controls the switch between exploitation and exploration by transforming probability p. Because every step of exploitation and exploration switch is judged by rand < p, it may cause the individual who has been in the vicinity of the optimal solution to turn to exploration and lose the optimal solution. In addition, the BOA does not directly use the best solution as the reference point, but through \(r^{2} \times g^{{_{*} }}\) as the reference point, where r is a random number and \(g^{{_{*} }}\) is the best solution in the population, which reduces the guiding role of the best solution. Although it can reduce the probability of falling into the local optimum prematurely, it has a great limit on the exploitation ability of the algorithm. In the improved algorithm, the dynamic switching probability is introduced to distribute the proportion of development and exploration more reasonably. In the increasing symbiosis mechanism, on the one hand, the optimal position is used as the reference update position, which increases the ability of individuals to find the optimal solution around the near optimal solution; on the other hand, because symbiosis is carried out between two individuals, the improvement in any one in the symbiosis stage will increase the convergence speed in the whole iterative process.

Although MBFPA is effective, it has some weaknesses. Compared with the original BOA and FPA algorithm, it is obvious that the algorithm becomes more complex, which will increase the running time of the algorithm; in addition, increasing the mutualism stage is more dangerous. Because of the mutualism of each individual, it may lead to the decline of the individual who has been in the local optimal position. Therefore, how to selectively carry out mutually beneficial symbiosis of individuals is also a place worthy of consideration. The advantages and disadvantages of the above MBFPA algorithm are listed in Table 14.

6 Engineering design problems

In order to verify the performance of the proposed algorithm in constrained optimization problems, five engineering optimization problems (three-bar truss design, multi-plate disc clutch brake, pressure vessel, welded beam and speed reducer) from the structural field are compared and the best results are compared with other algorithms. In order to obtain a better solution, different maximum numbers of iterations and different population numbers are used for each different problem according to the number of optimization variables and constraints in all engineering optimization problems. The specific characteristics of these problems are given in Table 15.

6.1 Three-bar truss design problem

Three-bar truss design problem (see “Appendix A.1”) is the most common constraint optimization test problem, which is a minimization problem. The goal is to minimize the weight of three-bar truss, where the constraints are stress, deflection and buckling. Figure 24 shows the shape of the truss and the associated forces on the structure.

In this problem, the fixed population number is 30 and the maximum number of iterations is 100 and the optimal fitness value obtained by the proposed algorithm after 30 independent runs is f(X) = 263.895843376, X = [0.788675132828, 0.408248295461]. The best results of the proposed algorithm will be compared with the optimal solution obtained in the previous literature in Table 16. The results show that the proposed algorithm can get a better solution in this problem and show good performance.

6.2 Multi-plate disc clutch brake

This problem requires the minimization of the weight of a multiple disc clutch brake (see “Appendix A.2”) by considering five discrete design variables: inner radius (r1), outer radius(r0), thickness of discs(t), actuating force(F), and number of friction surfaces(Z) [48]. Figure 25 shows a multiple disc clutch brake. The difficulty of the optimization problem increases, because the problem includes eight different constraints, which will result in only 70% of the feasible region in the solution space.

The fixed population number is 30 and the maximum number of iterations is 500, and the optimal fitness value obtained by the proposed algorithm after 30 independent runs is f(X) = 0.235242457900804, X = [70, 90, 1, 600, 2]. The results obtained by MBPFA were compared with the optimal results of HHO [11], TLBO [30], WCA [47] and PVS [48]. As shown in Table 17, we can see that the proposed algorithm obtained the best solution in solving the problem.

6.3 Pressure vessel design problem

As a classical constrained engineering optimization problem, the goal of optimization is to minimize the total cost of welding, materials and manufacturing. The manufacturing configuration of the problem is shown in Fig. 26. The problem consists of four optimization variables: Ts (thickness of the shell), Th (thickness of the head), r (inner radius), L (length of section without the head). At the same time, it contains four different constraints. The details are given in “Appendix A.3.”

The fixed population number is 20 and the maximum number of iterations is 200, and the optimal fitness value obtained by the proposed algorithm after 30 independent runs is f(X) = 5885.3327736, X = [0.778168641371 0.384649162626 40.3196187241 200.0]. The obtained optimal solution is compared with other algorithms. Table 18 shows that the proposed algorithm is the best to deal with this problem and can obtain the optimal result.

6.4 Welded beam design problem

This problem requires designing the most appropriate height of weld (× 1), length of weld (× 2), height of beam (× 3), and width of beam (× 4) to minimize welding costs. Constraints include: shear stress, bending stress, buckling load on the bar, end deflection and side constraints [48]. The schematic diagram of the problem is shown in Fig. 27; the details are given in “Appendix A.4.” In this example, the fixed population number is 30 and the maximum number of iterations is 500, and the optimal fitness value obtained by the proposed algorithm after 30 independent runs is f(X) = 1.72485185, X = [0.205730, 3.470473, 9.036623, 0.205729]. The obtained optimal solution is compared with other 8 algorithms. In Table 19, we can conclude that the proposed algorithm can find the most suitable parameters and get the minimum fitness value.

6.5 Speed reducer

Speed reducer (as shown in Fig. 28) is designed to minimize the weight of the reducer. There are mainly 7 variables to be optimized: the face width(× 1), module of teeth(× 2), number of teeth on pinion(× 3), length of the first shaft between bearings(× 4), length of the second shaft between bearings(× 5), diameter of the first shaft(× 6) and diameter of the first shaft(× 7) [90]. In this problem, there are as many as 11 constraints, which is a challenging optimization problem, mainly to minimize the weight under the condition of bending stress of the gear teeth, surface stress, transverse deflections of the shafts and stresses in the shafts [90]. The details are given in “Appendix A.5.”

Multi-plate disc clutch brake problem [30]

Pressure vessel design problem [92]

Welded beam design problem [90]

Speed reducer [90]

The optimal fitness value obtained by the proposed algorithm after 30 independent runs is f(X) = 2994.341315, X = [3.5 0.7 17 7.3 7.7153199122 3.35021466 5.28665446]. The optimal solutions obtained by this paper are compared with those obtained by the other five algorithms. Table 20 shows the detailed comparison. It can be concluded that the proposed algorithm has advantages in the optimization under complex constraints and can obtain better solutions.

7 Conclusions and future work

In recent years, butterfly optimization algorithm has been proposed as a new heuristic algorithm, which is widely used in global optimization problems. In BOA algorithm, individuals can find the most fragrant position by simulating the foraging process of butterflies and then find the optimal solution in the global optimization. The random number disturbance is added to the optimal position during the update of the butterfly optimization algorithm, which avoids individual crowding, but it brings a problem: it is difficult to make the most of the current the leading role of the optimal position leads to the possibility of missing the optimal position in actual application. This will cause the algorithm to have low accuracy and slow convergence. Therefore, this paper proposes a hybrid optimization algorithm for butterfly and flower pollination base on mutualism mechanism, which increases the speed and accuracy of algorithm exploitation through the mutualism mechanism. At the same time, because the flower pollination algorithm is mixed, the alienated pollination of flowers is propagated through Levy flight, which can increase the algorithm's exploration capability and introduce a dynamic switching probability equilibrium exploration and exploitation process. The results show that the butterfly optimization algorithm and the flower pollination algorithm can get good results through the appropriate mechanism, and in terms of nature, butterflies and flowers are two inseparable creatures. Compared with other metaheuristics, this algorithm can find better or equal function values in most benchmark problems. From the results of the five engineering examples, the proposed algorithm has great advantages in solving constrained optimization problems and can get better solutions.

In the future research, in order to verify the ability of the hybrid algorithm to solve the constrained optimization problem, we will focus on the application of the proposed algorithm to the solution of practical problems. Since the vehicle routing problem (VRP) was proposed in 1959, it has been widely concerned by researchers because of its wide application and great economic value, such as postal delivery problem, vehicle scheduling problem and computer network topology problem [107]. VRP is a NP hard problem, which is difficult to solve with accurate algorithm. At present, metaheuristics have become prominent approaches in tackling complex and multi-objective problems [108]. So, metaheuristic algorithm is the main method to solve VRP. However, the core of using metaheuristic algorithm to solve VRP problem is how to encode the individuals according to the background of the problem. The quality of coding will directly affect the complexity of algorithm discretization and the performance of the algorithm. In addition, how to discretize the proposed algorithm so that the algorithm can play a good effect in the discrete problem space is also an important issue. Therefore, the design of reasonable coding and discrete version of MBPFA has become the key to VRP path problems in the future. In addition, we have identified four areas that deserve further study in the future.

Hybrid metaheuristics combines the advantages of different metaheuristics to provide simpler, better and faster solutions for many complex problems [76]. A new metaheuristic algorithm is proposed, which actually increases the diversity of metaheuristic algorithm in the update strategy. Different metaheuristic algorithms let the population update according to a specific update strategy. However, how to combine these update strategies in an efficient and reasonable way will be an interesting and exciting thing. Therefore, by coupling the characteristics of different strategies, a more effective hybrid algorithm will be the focus of future algorithm research.

In the absence of gradient information, the metaheuristic algorithm is easier to implement than the precise search algorithm. In the actual performance test, the parameters of metaheuristic algorithm greatly affect the performance of the algorithm. Most of the given reasonable parameters are obtained through a large number of experiments. Therefore, it is necessary to develop a function with adaptive parameter adjustment. Or, we should pay more attention to the nonparametric metaheuristic algorithm, because it can increase our thinking on the algorithm itself, rather than focusing on the adjustment of algorithm parameters. However, developing parameterless metaheuristic algorithms is yet an open problem and needs to be thoroughly studied [77]. Therefore, we should pay more attention to the development of nonparametric meta heuristic algorithm in the future.

Artificial neural networks (ANNs) [109] are well-known techniques utilized in learning, approximating and investigating various classes of complex problems [110]. ANNs are used for prediction purposes in most cases, and it tries to close the gap among the predicted solution and the given target solution by frequently adjusting the values of weight functions [111]. There are two methods to adjust the weight: metaheuristic-based trainers and gradient-based trainers. Among them, the gradient-based trainer has better performance in local search [112], while the metaheuristic-based trainers has better performance in global search and can effectively avoid local optimization [110]. Some works use genetic algorithm (GA), differential evolution (DE) and evolutionary strategy (ES) to improve artificial neural network [113,114,115,116,117,118,119,120,121,122]. The results affirm the satisfactory results of these hybrid MLP structures [123].Although a lot of work has been done so far, searching for global results of hybrid MLP networks is still an open question [124, 125]. From No Free Lunch theorem [126], a new superior SMHA can still be designed to be integrated with MLP networks [123]. Therefore, metaheuristic algorithm is still worthy of optimizing ANN in the future.

In practical engineering applications, such as product design, wing design, investment allocation, urban planning and other issues, they have more than one single goal, and they need to meet a large number of constraints at the same time. In addition, these problems have many decision variables, which are regarded as large-scale optimization problems. Currently, most of the existing metaheuristic algorithms proposed either require thousands of expensive exact function evaluations to obtain acceptable solutions or focus on solving low-dimensional and expensive optimization problems [127]. This greatly limits the application space of metaheuristic algorithm and seriously affects the performance and efficiency of the algorithm. However, the rise of surrogate-assisted metaheuristic algorithms (SAEAs) offers possibilities to solve this problem. The main parts of SAEAs are the surrogates and the evolutionary optimizer. SAEAs use proxy surrogates to reduce the amount of computation brought by the fitness evaluation in the iterative process [128]. In the future development process of metaheuristic algorithms, surrogate-assisted metaheuristic algorithms will become more and more worthy of attention.

Finally, we hope that this paper will inspire researchers in metaheuristics and optimization field.

References

Hussain K et al (2019) Metaheuristic research: a comprehensive survey. Artif Intell Rev 52:2191–2233

Blum C et al (2011) Hybrid metaheuristics in combinatorial optimization: a survey. Appl Soft Comput 11:4135–4151

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of the 1995 IEEE international conference on neural networks, pp 1942–1948.

Dorigo M, Birattari M, Stutzle T (2006) Ant colony optimization. IEEE Comput Intell 1:28–39

Basturk B, Karaboga D (2006) An artificial bee colony (ABC) algorithm for numeric function optimization. In: Proceedings of the IEEE swarm intelligence symposium, pp 12–14.

Yang X-S, Deb S (2009) Cuckoo search via Lévy flights. In: Proceedings of the world congress on nature & biologically inspired computing, NaBIC 2009, pp 210–14

Yang XS (2012) Flower pollination algorithm for global optimization. In: Unconventional computation and natural computation, Lecture notes in computer science, 2012, 7445, pp 240–249

Yang X-S (2010) Firefly algorithm, stochastic test functions and design optimisation. Int J Bio-Inspired Comput 2:78–84

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Heidari AA et al (2019) Harris hawks optimization: algorithm and applications. Future Gener Comput Syst 97:849–872

Cheng M-Y, Prayogo D (2014) Symbiotic organisms search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112

Arora S, and S Singh. Butterfly optimization algorithm: a novel approach for global optimization. Soft Computing 23.3 (2019): 715–734.

Goldberg DE, Holland JH (1988) Genetic algorithms and machine learning. Mach Learn 3(2):95–99

Storn R, Price K (1997) Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Jiao L, Wang L (2000) A novel genetic algorithm based on immunity. IEEE Trans Syst Man Cybern A Syst Hum 30:552–561

De Castro LN, Von Zuben FJ (2000) The clonal selection algorithm with engineering applications. In: Proceedings of GECCO, vol 2000.

Dasgupta D et al (2004) Negative selection algorithm for aircraft fault detection. In: International conference on artificial immune systems. Springer, Berlin

Kelsey J, Timmis J (2003) Immune inspired somatic contiguous hypermutation for function optimisation. Genetic Evolut Comput Conf, Springer, Berlin

Van Laarhoven PJM, Aarts EHL (1987) Simulated annealing: theory and applications. Springer, Dordrecht, pp 7–15

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179:2232–2248

Shareef H, Ibrahim AA, Mutlag AH (2015) Lightning search algorithm. Appl Soft Comput 36:315–333

Mirjalili S, Mirjalili SM, Hatamlou A (2016) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27(2):495–513

Abedinpourshotorban H et al (2016) Electromagnetic field optimization: a physics-inspired metaheuristic optimization algorithm. Swarm Evolut Comput 26:8–22

Faramarzi A et al (2019) Equilibrium optimizer: a novel optimization algorithm. Knowl Based Syst 105190.

Lam AYS, Li VOK (2009) Chemical-reaction-inspired metaheuristic for optimization. IEEE Trans Evolut Comput 14(3):381–399

Alatas B (2011) ACROA: artificial chemical reaction optimization algorithm for global optimization. Expert Syst Appl 38:13170–13180

Kumar M, Kulkarni AJ, Satapathy SC (2018) Socio evolution & learning optimization algorithm: a socio-inspired optimization methodology. Future Gener Comput Syst 81:252–272

Kuo HC, Lin CH (2013) Cultural evolution algorithm for global optimizations and its applications. J Appl Res Technol 11:510–522

Rao RV, Savsani VJ, Vakharia DP (2011) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Design 43(3):303–315

Atashpaz-Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: 2007 IEEE congress on evolutionary computation. IEEE

Arora S, Singh S (2016) An improved butterfly optimization algorithm for global optimization. Advanced Science, Engineering and Medicine 8(9):711–717

Arora S, Singh S (2017) An improved butterfly optimization algorithm with chaos. J Intell Fuzzy Syst 32(1):1079–1088

Arora S, Singh S (2017) A hybrid optimisation algorithm based on butterfly optimisation algorithm and differential evolution. Int J Swarm Intell 3(2–3):152–169

Arora S, Singh S (2017) An effective hybrid butterfly optimization algorithm with artificial bee colony for numerical optimization. Int J Interact Multimed Artif Intell 4(4):14–21

Arora S, Anand P (2018) Learning automata-based butterfly optimization algorithm for engineering design problems. Int J Comput Mater Sci Eng 7(04):1850021

Arora S, Singh S, Yetilmezsoy K (2018) A modified butterfly optimization algorithm for mechanical design optimization problems. J Braz Soc Mech Sci Eng 40(1):21

Singh B, Anand P (2018) A novel adaptive butterfly optimization algorithm. Int J Comput Mater Sci Eng 7(04):1850026

Li G, Shuang F, Zhao P et al (2019) An improved butterfly optimization algorithm for engineering design problems using the cross-entropy method. Symmetry 11(8):1049

Sharma S, Saha AK (2020) m-MBOA: a novel butterfly optimization algorithm enhanced with mutualism scheme. Soft Comput 24:4809–4827

Sharma S, Saha AK, Ramasamy V et al (2020) hBOSOS: an ensemble of butterfly optimization algorithm and symbiosis organisms search for global optimization. In: Advanced computing and intelligent engineering. Springer, Singapore, vol. 1089, pp 579–588

Arora S, Anand P (2019) Binary butterfly optimization approaches for feature selection. Expert Syst Appl 116:147–160

Jalali SMJ, Ahmadian S, Kebria PM, et al (2019) Evolving artificial neural networks using butterfly optimization algorithm for data classification. In: International conference on neural information processing. Springer, Cham, pp 596–607.

Du P et al (2019) Container throughput forecasting using a novel hybrid learning method with error correction strategy. Knowl-Based Syst 182:104853

Kisi O et al (2019) Drought forecasting using novel heuristic methods in a semi-arid environment. J Hydrol 578:124053

Priyadharshini P, Prakashraj K, Padmapriya S (2019) Improved butterfly optimization algorithm using local search operator for capacitated vehicle routing problem. Inter Res J Eng Technol (IRJET) 6(4):983–988

Abdul-Rashid R, Alawode BO (2019) Robustness evaluation of the butterfly optimization algorithm on a control system. arXiv preprint arXiv:1912.00185

El Hajjami L, Mellouli EM, Berrada M (2019) Optimal PID control of an autonomous vehicle using butterfly optimization algorithm BOA. In: Proceedings of the 4th international conference on big data and internet of things, pp 1–5.

Arora S, Singh S (2017) Node localization in wireless sensor networks using butterfly optimization algorithm. Arab J Sci Eng 42(8):3325–3335

Wang R, Zhou Y (2014) Flower pollination algorithm with dimension by dimension improvement. Math Probl Eng 481791, 9 pages

Zhao C, Zhou Y (2016) A complex encoding flower pollination algorithm for global numerical optimization. In: International conference on intelligent computing. Springer, Cham, pp. 667–678.

Zhou Y, Wang R, Luo Q (2016) Elite opposition-based flower pollination algorithm. Neurocomputing 188:294–310

Nabil E (2016) A modified flower pollination algorithm for global optimization. Expert Syst Appl 57:192–203

Singh D, Singh U, Salgotra R (2018) An extended version of flower pollination algorithm. Arab J Sci Eng 43(12):7573–7603

Lei M, Zhou Y, Luo Q (2019) Enhanced metaheuristic optimization: wind-driven flower pollination algorithm. IEEE Access 7:111439–111465

Pauline O, Meng OK, Kiong SC (2017) An improved flower pollination algorithm with chaos theory for function optimization. AIP Conf Proc AIP Publ LLC 1870(1):050012

Pathak P, Mahajan K (2015) A pollination based optimization for load balancing task scheduling in cloud computing. Int J Adv Res Comput Sci 6(7).

Jensi R, Jiji GW (2015) Hybrid data clustering approach using k-means and flower pollination algorithm. arXiv preprint arXiv:1505.03236.

Agarwal P, Mehta S (2016) Enhanced flower pollination algorithm on data clustering. Int J Comput Appl 38(2–3):144–155

Wang R, Zhou Y, Qiao S et al (2016) Flower pollination algorithm with bee pollinator for cluster analysis. Inf Process Lett 116(1):1–14

Harikrishnan R, Jawahar Senthil Kumar V, Sridevi Ponmalar P (2015) Nature inspired flower pollen algorithm for WSN localization problem. ARPN J Eng. Appl Sci 10(5):2122–2125

Goyal S, Patterh MS (2015) Flower pollination algorithm based localization of wireless sensor network. In: 2015 2nd international conference on recent advances in engineering & computational sciences (RAECS). IEEE, pp 1–5.

Binh HTT, Hanh NT, Dey N (2018) Improved cuckoo search and chaotic flower pollination optimization algorithm for maximizing area coverage in wireless sensor networks. Neural Comput Appl 30(7):2305–2317

Bensouyad M, Saidouni DE (2015) A hybrid discrete flower pollination algorithm for graph coloring problem. In: Proceedings of the international conference on engineering & MIS 2015, pp 1–6.

Chakraborty D, Saha S, Maity S (2015) Training feedforward neural networks using hybrid flower pollination-gravitational search algorithm. In: 2015 International conference on futuristic trends on computational analysis and knowledge management (ABLAZE). IEEE, pp 261–266.

Chiroma H, Khan A, Abubakar AI et al (2016) A new approach for forecasting OPEC petroleum consumption based on neural network train by using flower pollination algorithm. Appl Soft Comput 48:50–58

Velamuri S, Sreejith S, Ponnambalam P (2016) Static economic dispatch incorporating wind farm using flower pollination algorithm. Perspect Sci 8:260–262

Gonidakis D (2016) Application of flower pollination algorithm to multi-objective environmental/economic dispatch. Int J Manag Sci Eng Manag 11(4):213–221

Putra PH, Saputra TA (2016) Modified flower pollination algorithm for nonsmooth and multiple fuel options economic dispatch. In: 2016 8th international conference on information technology and electrical engineering (ICITEE). IEEE, pp 1–5.

Shilaja C, Ravi K (2017) Optimization of emission/economic dispatch using euclidean affine flower pollination algorithm (eFPA) and binary FPA (BFPA) in solar photo voltaic generation. Renew Energy 107:550–566

Abdel-Baset M, Ibrahim MH (2015) An improved flower pollination algorithm for ratios optimization problems. Appl Math Inf Sci Lett Int J 3(2):83–91

Gautam U, Malmathanraj R, Srivastav C (2015) Simulation for path planning of autonomous underwater vehicle using flower pollination algorithm, genetic algorithm and Q-learning. In: 2015 international conference on cognitive computing and information processing (CCIP). IEEE, pp 1–5

Zhou Y, Wang R (2016) An improved flower pollination algorithm for optimal unmanned undersea vehicle path planning problem. Int J Pattern Recognit Artif Intell 30(04):1659010

Wang R, Zhou Y, Zhao C et al (2015) A hybrid flower pollination algorithm based modified randomized location for multi-threshold medical image segmentation. Bio Med Mater Eng 26(s1):S1345–S1351

Doğan B, Ölmez T (2015) A new metaheuristic for numerical function optimization: vortex search algorithm. Inf Sci 293:125–145

Dokeroglu T, Sevinc E, Kucukyilmaz T et al (2019) A survey on new generation metaheuristic algorithms. Comput Ind Eng 137:106040

Ezugwu AE, Prayogo D (2019) Symbiotic organisms search algorithm: theory, recent advances and applications. Expert Syst Appl 119:184–209

Rashedi E, Rashedi E, Nezamabadi-pour H (2018) A comprehensive survey on gravitational search algorithm. Swarm Evolut Comput 41:141–158

Rubinstein RY (1997) Optimization of computer simulation models with rare events. Eur J Oper Res 99(1):89–112

Ram JP, Babu TS, Dragicevic T et al (2017) A new hybrid bee pollinator flower pollination algorithm for solar PV parameter estimation. Energy Convers Manag 135:463–476

Zimmerman DW, Zumbo BD (1993) Relative power of the Wilcoxon test, the Friedman test, and repeated-measures ANOVA on ranks. J Exp Educ 62(1):75–86

Singh N, Singh SB (2017) Hybrid algorithm of particle swarm optimization and grey wolf optimizer for improving convergence performance. J Appl Math 2017:1–14

Khalilpourazari S, Pasandideh SHR (2019) Sine–cosine crow search algorithm: theory and applications. Neural Comput Appl 1–18. https://doi.org/10.1007/s00521-019-04530-0. in press

Luo J, Shi B (2019) A hybrid whale optimization algorithm based on modified differential evolution for global optimization problems. Appl Intell 49(5):1982–2000

Zhang M, Luo W, Wang X (2008) Differential evolution with dynamic stochastic selection for constrained optimization. Inform Sci 178:3043–3074

Saremi S, Mirjalili S, Lewis A (2017) "Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249

Liu H, Cai Z, Wang Y (2010) Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl Soft Comput 10:629–640

Mirjalili S et al (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Sadollah A et al (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13(5):2592–2612

Zheng YJ (2015) Water wave optimization: a new nature-inspired metaheuristic. Comput Oper Res 55:1–11

Savsani P, Savsani V (2016) Passing vehicle search (PVS): A novel metaheuristic algorithm. Appl Math Model 40:3951–3978

Deb K (1991) Optimal design of a welded beam via genetic algorithms. AIAA J 29(11):2013–2015

He Q, Wang L (2007) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186:1407–1422

Yao W (2014) Genetic quantum particle swarm optimization algorithm for solving traveling salesman problems. In: Fuzzy information & engineering and operations research & management. Springer, Berlin, pp 67–74

Kaveh A, Bakhshpoori T (2016) Water evaporation optimization: a novel physically inspired optimization algorithm. Comput Struct 167:69–85

Gandomi AH et al (2013) Bat algorithm for constrained optimization tasks. Neural Comput Appl 22:1239–1255

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20(1):89–99

Montemurro M, Vincenti A, Vannucci P (2013) The automatic dynamic penalisation method (ADP) for handling constraints with genetic algorithms. Comput Methods Appl Mech Eng 256:70–87

Mezura-Montes E et al (2007) Multiple trial vectors in differential evolution for engineering design. Eng Opt 39(5):567–589

Moosavi SHS, Bardsiri VK (2017) Satin bowerbird optimizer: a new optimization algorithm to optimize ANFIS for software development effort estimation. Eng Appl Artif Intell 60:1–15

Ragsdell KM, Phillips DT (1976) Optimal design of a class of welded structures using geometric programming, pp 1021–1025.

Lee KS, Geem ZW (2004) A new structural optimization method based on the harmony search algorithm. Comput Struct 82:781–798

Huang F, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186(1):340–356

Mezura-Montes E, Velázquez-Reyes J, Coello CAC (2006) Modified differential evolution for constrained optimization. In: 2006 IEEE international conference on evolutionary computation. IEEE, pp 25–32

Wang Y et al (2009) Constrained optimization based on hybrid evolutionary algorithm and adaptive constraint-handling technique. Struct Multidiscip Optim 37:395–413

Braekers K, Ramaekers K, Van Nieuwenhuyse I (2016) The vehicle routing problem: state of the art classification and review. Comput Ind Eng 99:300–313

Caceres-Cruz J, Arias P, Guimarans D et al (2014) Rich vehicle routing problem: Survey. ACM Comput Surv (CSUR) 47(2):1–28

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5(4):115–133

Aljarah I, Faris H, Mirjalili S (2018) Optimizing connection weights in neural networks using the whale optimization algorithm. Soft Comput 22(1):1–15

Zhang Y, Jin Z, Chen Y (2020) Hybrid teaching–learning-based optimization and neural network algorithm for engineering design optimization problems. Knowl Based Syst 187:104836

Zhang JR, Zhang J, Lok TM et al (2007) A hybrid particle swarm optimization–back-propagation algorithm for feedforward neural network training. Appl Math Comput 185(2):1026–1037

Alba E, Chicano JF (2004) Training neural networks with GA hybrid algorithms. In: Genetic and evolutionary computation conference. Springer, Berlin, pp 852–863.

Sexton RS, Dorsey RE, Johnson JD (1999) Optimization of neural networks: a comparative analysis of the genetic algorithm and simulated annealing. Eur J Oper Res 114(3):589–601

Sexton RS, Gupta JND (2000) Comparative evaluation of genetic algorithm and backpropagation for training neural networks. Inf Sci 129(1–4):45–59

Siddique MNH, Tokhi MO (2001) Training neural networks: backpropagation vs. genetic algorithms. In: IJCNN'01. International joint conference on neural networks. Proceedings (Cat. No. 01CH37222), vol 4. IEEE, pp 2673–2678.

Whitley D, Starkweather T, Bogart C (1990) Genetic algorithms and neural networks: Optimizing connections and connectivity. Parallel Comput 14(3):347–361

Hansen N, Müller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (cma-es). Evolut Comput 11(1):1–18

Ilonen J, Kamarainen JK, Lampinen J (2003) Differential evolution training algorithm for feed-forward neural networks. Neural Process Lett 17(1):93–105

Slowik A, Bialko M (2008) Training of artificial neural networks using differential evolution algorithm. In: 2008 conference on human system interactions. IEEE, pp 60–65

Wdaa ASI, Sttar A (2008) Differential evolution for neural networks learning enhancement. Universiti Teknologi Malaysia, Johor Bahru

Wienholt W (1993) Minimizing the system error in feedforward neural networks with evolution strategy. In: International conference on artificial neural networks. Springer, London, pp 490–493.

Heidari AA, Faris H, Mirjalili S et al (2020) Ant lion optimizer: theory, literature review, and application in multi-layer perceptron neural networks, Nature-Inspired Optimizers. Cham, Springer, pp 23–46

Derrac J, García S, Molina D et al (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evolut Comput 1(1):3–18

Faris H, Aljarah I, Mirjalili S (2016) Training feedforward neural networks using multi-verse optimizer for binary classification problems. Appl Intell 45(2):322–332

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82

Tian J, Sun C, Tan Y et al (2020) Granularity-based surrogate-assisted particle swarm optimization for high-dimensional expensive optimization. Knowl Based Syst 187:104815

Zheng Y, Fu X, Xuan Y (2019) Data-driven optimization based on random forest surrogate. In: 2019 6th international conference on systems and informatics (ICSAI). IEEE, pp 487–491

Acknowledgements

This work is supported by National Science Foundation of China under Grant No. 61563008, and by Project of Guangxi Natural Science Foundation under Grant No. 2018GXNSFAA138146.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Appendix A

1.1 A.1. Three-bar truss design

Minimize \(f(x) = (2\sqrt 2 x_{1} + x_{2} ) \times l\).

Subject to \(g_{1} (x) = \sqrt 2 x_{1} + x_{2} /\sqrt 2 x_{1}^{2} + 2x_{1} x_{2} P - \sigma \le 0;\)

Variable range \(0 \le x_{1} ,x_{2} \le 1\).

where \(l = 100\,{\hbox{cm}};\;\,P = 2\,{\hbox{kN}}/{\hbox{cm}}^{2} ;r = 2\,{\hbox{kN}}/{\hbox{cm}}^{2}\).

1.2 A.2. Multi-plate disc clutch brake design problem

Minimize \(f(x) = \pi (x_{2}^{2} - x_{1}^{2} )x_{3} (x_{5} + 1)\rho\).

Subject to:

\(g_{1} (x) = x_{2} - x_{1} - \Delta R \ge 0\), \(g_{2} (x) = L_{\max } - (x_{5} + 1)(x_{3} + \delta ) \ge 0\)

\(g_{3} (x) = P_{\max } - P_{rz} \ge 0\), \(g_{4} (x) = P_{\max } v_{sr\max } - P_{rz} v_{sr} \ge 0\)

\(g_{5} (x) = v_{sr\max } - v_{sr} \ge 0\), \(g_{6} (x) = T_{\max } - T \ge 0\)

\(g_{7} (x) = M_{h} - sM_{s} \ge 0\), \(g_{8} (x) = T \ge 0\)

where \(M_{h} = \frac{2}{3}\mu x4x5\frac{{x_{2}^{3} - x_{1}^{3} }}{{x_{2}^{2} - x_{1}^{2} }},\) \(w = \frac{\pi n}{{30}}rad/s\), \(A = \pi (x_{2}^{2} - x_{1}^{2} )\,{\hbox{mm}}^{2} ,\)

\(P_{rz} = \frac{{x_{4} }}{A}\,{\hbox{ N}}/{\hbox{mm}}^{2} ,\) \(V_{sr} = \frac{{\pi R_{sr} n}}{30}\,{\hbox{mm}}/{\hbox{s}}\), \(R_{sr} = \frac{2}{3}\frac{{x_{2}^{3} - x_{1}^{3} }}{{x_{2}^{2} x_{1}^{2} }}\,{\hbox{mm}}\)

\(T = \frac{{I_{z} \pi n}}{{30(M_{h} + M_{f} )}}\) mm, \(\Delta r = 20\,{\hbox{mm}},L\max = 30\,{\hbox{mm}},\mu = 0.6\)

\(T_{\max } = 15s,\mu = 0.5,s = 1.5,M_{s} = 40Nm,\)

\(p_{\max } = 1Mp_{a} ,\rho = 0.0000078\,{\hbox{kg}}/{\hbox{mm}}^{3}\), \(v_{sr\max } = 10\,{\hbox{m}}/{\hbox{s}},\delta = 0.5\,{\hbox{mm}},s = 1.5\)

\(T_{\max } = 15s,n = 250\,{\hbox{rpm}},\;I_{z} = 55{\hbox{kg}}\,{\hbox{m}}^{2}\), \(M_{s} = 40\,{\hbox{Nm}},\;M_{f} = 3\,{\hbox{Nm}}\)

\(60 \le x_{1} \le 80,90 \le x_{2} \le 110,1 \le x_{3} \le 3,\)

\(60 \le x_{4} \le 1000,2 \le x_{5} \le 9,\)\(i = 1,2,3,4,5\).

1.3 A.3. Pressure vessel design problem

Consider \(x = [x_{1} ,x_{2} ,x_{3} ,x_{4} ] = [T_{s} ,T_{h} ,R,L]\).

Minimize \(f(x) = 0.6224x_{1} x_{3} x_{4} + 1.7781x_{2} x_{2}^{3} + 3.1661x_{1}^{2} x_{4} + 19.84x_{1}^{2} x_{3}\).

Subject to:

\(g_{1} (x) = - x_{1} + 0.0193x_{3} \le 0\), \(g_{2} (x) = - x_{3} + 0.00954z_{3} \le 0\)

\(g_{3} (x) = - \pi x_{3}^{2} x_{4} - \frac{4}{3}\pi x_{3}^{3} + 1,296,000 \le 0\),\(g_{4} (x) = x_{4} - 240 \le 0\)

Variable range \(0 \le x_{1} ,x_{2} \le 99\); \(0 \le x_{3} ,x_{4} \le 200\).

1.4 A.4. Welded beam design problem

Consider \({\text{Z}} = [z_{1} ,z_{2} ,z_{3} ,z_{4} ] = [h,l,t,b]\).

Minimize \(f(Z) = 1.10471z_{1}^{2} z_{2} + 0.04811z_{3} z_{4} (14.0 + z_{2} )\).

Subject to

\(g_{1} (Z) = \tau ({\text{Z) - }}\tau_{{\max}} \le 0\),\(g_{2} (Z) = \sigma (Z) - \sigma_{\max } \le 0\),\(g_{3} (Z) = \delta (Z) - \delta_{\max } \le 0\)

\(g_{4} (Z) = z_{1} - z_{4} \le 0\),\(g_{5} (Z) = P - P_{c} (Z) \le 0\),\(g_{6} (Z) = 0.125 - z_{1} \le 0\)