Abstract

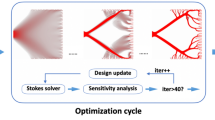

Topology optimization has proven to be viable for use in the preliminary phases of real world design problems. Ultimately, the restricting factor is the computational expense since a multitude of designs need to be considered. This is especially imperative in such fields as aerospace, automotive and biomedical, where the problems involve multiple physical models, typically fluids and structures, requiring excessive computational calculations. One possible solution to this is to implement codes on massively parallel computer architectures, such as graphics processing units (GPUs). The present work investigates the feasibility of a GPU-implemented lattice Boltzmann method for multi-physics topology optimization for the first time. Noticeable differences between the GPU implementation and a central processing unit (CPU) version of the code are observed and the challenges associated with finding feasible solutions in a computational efficient manner are discussed and solved here, for the first time on a multi-physics topology optimization problem. The main goal of this paper is to speed up the topology optimization process for multi-physics problems without restricting the design domain, or sacrificing considerable performance in the objectives. Examples are compared with both standard CPU and various levels of numerical precision GPU codes to better illustrate the advantages and disadvantages of this implementation. A structural and fluid objective topology optimization problem is solved to vary the dependence of the algorithm on the GPU, extending on the previous literature that has only considered structural objectives of non-design dependent load problems. The results of this work indicate some discrepancies between GPU and CPU implementations that have not been seen before in the literature and are imperative to the speed-up of multi-physics topology optimization algorithms using GPUs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Over the past two decades topology optimization has rapidly matured to a point where it can be used in real world design applications with minimal limitations [34]. However, one such limitation is the computational resources required for large scale problems [11]. For engineering problems, the design space being considered is large and the objective function typically involves multiple, complicated, physical phenomena. Therefore, this leads to computationally intensive problems. The aim of this paper is to determine the feasibility of using GPUs with multi-physics topology optimization algorithms for real world design problems. The increase in computational efficiency due to the GPU architecture and quality of the final solutions are compared with the same problem implemented on a CPU.

Recently, reductions in computational expense are achieved by increasing the level of parallelism, i.e. increasing the number of computational cores while maintaining the same clock frequency, in the code [67]. This has meant the development and use of many-core processors, which are processors that have evolved to a high-level of parallelism, for such tasks. GPUs are a class of many-core processors. GPUs have a different design approach compared with CPUs. CPUs are a general purpose multi-core processor containing many high level instructions, whereas GPUs are many-core processors that have a faster and smaller set of instructions, but are capable of handling a large number of concurrent threads. Therefore, GPUs can be used to drastically speed up computationally intensive problems and reduce the overall computational expense.

Topology optimization, generally speaking, aims to evolve an initial design towards an optimum one with regards to minimizing a given objective under several constraints [6]. Several approaches have been developed to guide the evolution of the topology towards the optimum [11, 34, 49]. These approaches can be divided into two main fields: continuous and discrete. Continuous methods, such as the solid isotropic material with penalization (SIMP) [4, 45], apply a relaxation on the design variables so that their values can be inside the entire range defined by [0, 1]. Discrete methods, such as evolutionary structural optimization (ESO) [63] and level-set (LS) [41], do not relax the problem and hence restrict the design variables to the boundaries of the range \(\left\{ 0, 1 \right\}\). While some effort using SIMP and LS methods have been solved with GPU architectures [3], only one recent study exists with ESO methods [30] and only with structural optimization.

Application of the SIMP method to large-scale problems, with millions of design variables, has proven to be computationally demanding and, therefore, requires a high level of parallelism [3]. As an example, the work of Mahdavi et al. [28] demonstrates a SIMP method for topology optimization with parallelization on a CPU. Further Vemaganti and Lawrence [58], look at three different parallel linear solvers for SIMP topology optimization showing speed-up and reduced effects of ill-conditioning in the finite element problems. However, GPUs as an alternative low-cost-high-performance system have also been tested for solving topology optimization problems with SIMP methods. Schmidt and Schulz [47] use SIMP on structured meshes with a matrix-free conjugate gradient solver, showing that it is faster than when a CPU with 48 cores shared memory is used. Such a strategy was also employed by Suresh [53] for solving of the system of equations of elasticity, achieving speedups of one order of magnitude. A GPU-implemented SIMP method with a preconditioned conjugate gradient solver applied to a 2D plate with a heat source yielded a speed up of 20 times compared with a single CPU and 3 times against a multi-threaded CPU [59]. This study was limited to single-precision format, due to the lack of a native double-precision support for early GPUs, which meant that convergence of the solver was not ensured due to round-off errors. Recently, a SIMP approach for unstructured meshes was implemented on a GPU by Zegard and Paulino [67], focusing on assembly of the stiffness matrix. Furthermore Wu et al. [62], used the geometric multi-grid preconditioning for the GPU instance of preconditioned conjugate gradient solvers to perform a reduced number of finite element analyses (FEA), and iterations per FEA, in the SIMP algorithm. This is achieved by reducing the tolerance of the iterative method to increase the GPU performance. However, using this configuration the solution is likely to arrive at a local optimum, meaning a more sound solution might exist [62]. This loss in accuracy was assumed by the authors for the sake of efficiency.

In topology optimization the LS method evolves the boundaries of the structure by minimizing a given objective. The boundary evolution is solved using a finite difference method, which acts on a reduced group of elements, making it suitable for efficient GPU processing [32]. The LS method was implemented on a GPU architecture by Herrero et al. [17]. Later, an inverse homogenization problem was solved with a GPU implementation of a LS method by Challis et al. [8], targeting high resolution topology optimization. They recorded an increasing speed-up with problem size, reaching 13 times speed-up for 3D problems containing over 4 million design variables.

Meta-heuristic optimization methods, where no gradients are taken, can also be used to solve real world problems of high-dimensionality [31]. These methods often use nature inspired algorithms, which are ideal for intelligently harnessing the capacity of GPUs. One such method, which uses a Tabu Search algorithm, has been implemented on a GPU architecture [56]. They showed that for problems of high dimensionality, defined as 270 variables or more, the GPU-implemented version of the code outperforms the CPU version. Up to a 12% speed up was recorded. Later, the same authors developed a GPU-implemented lattice Boltzmann method (LBM) and applied the TS algorithm to a micro fluidic device [57]. They noted that the most computationally intensive part of the process was the simulation of the flow via LBM. Therefore, the TS algorithm was employed on a CPU, since relatively small speed-ups were achieved [56], and the LBM was employed on a GPU. The TS combined with the GPU-LBM delivered results approximately 20 times faster compared to an earlier system that employed a CPU-based LBM code [10]. Recently Laniewski-Wollk and Rokicki [24], developed a discrete adjoint formulation for a wide class of LBMs. They implement their LBM on a GPU architecture for channel flow to design a free-topology mixer and heat exchanger using the method of moving asymptotes (MMA) and a simple descent algorithm, separately. While the method was not compared to a CPU implementation of the code, it was shown to be efficient by demonstrating that the code had nearly linear weak scaling.

The limited literature on GPU-implemented topology optimization shows that the solver is the most time consuming part of the optimization and hence should be the focus during the adaptation to GPU architecture [3]. Furthermore, the other procedures, such as the optimizer, are not computationally expensive and therefore not directly relevant for good acceleration through GPU. Hence, studies have focused on implementing the solver on GPU architectures without topology optimization. For purely structural topology optimization a finite element solver is used to determine the displacements of the structure under a given load. Cecka et al. [7] presented a GPU accelerated FEA code using an unstructured mesh, achieving a speed-up of 30 or more compared to an optimized double-precision single core implementation. For a review of the literature on the use of GPUs in FEA the reader is advised to seek the manuscript by Georgescu et al. [16]. Topology optimization of multi-physics problems is a much less researched topic, especially compared to structural topology optimization. This may be because computational fluid dynamics (CFD) is a much more computationally intensive task compared with FEA. Recent studies have shown the potential of LBM methods in multi-physics topology optimization [24, 29, 35, 36, 42, 43]. Further, since the LBM operates on a finite difference grid, is explicit in nature and requires only next neighbor interaction, it is very suitable for implementation on GPUs [55]. Tölke and Krafczyk [55] demonstrate a very efficient implementation of a LBM in 3D on a GPU. They obtain an efficiency gain of up to two orders of magnitude with respect to the performance on a CPU. Kuznik et al. [23] implement a general purpose LBM code, with all steps of the algorithm running on the GPU, achieving up to one billion lattice updates per second using single-precision floating points. Further, they show that single-precision floating point arithmetic is sufficient having a 3.8 times speed-up compared to double-precision. GPU implementation of LBMs have been used for real-time visualization of fluid–structure interactions (FSI) for two-dimensional problems [15]. The authors achieved a speed increase when using their GPU-LBM of 222 times compared with a one core and 78 times compared with a two core CPU. Schönherr et al. [48] compare two multi-thread based parallel implementations of the LBM on different hardware platforms: a multi-core CPU implementation and a GPU implementation. They show that the limiting factor for the speed of the lattice Boltzmann simulation is the memory bandwidth. More recently Obrecht et al. [40], present a multi-GPU LBM solver managing to run the solver on six GPUs in parallel. With this architecture, they observed up to \(2.15 \times 10^9\) node updates per second for the 2D lid-driven cavity test case. Such a performance is comparable to large high performance clusters or massively parallel super computers, showing the potential of GPU implementation in LBMs. Delbosc et al. [12] showed that real-time compute capability and satisfactory physical accuracy are achievable by combing a lattice Boltzmann model with the parallel computing power of a GPU. Along these lines Khan et al. [22], performed real-time simulations of indoor environments, demonstrating significant speed up when implementing a lattice Boltzmann method on a GPU compared with traditional CFD based large eddy simulations.

The aim of this article is to determine the feasibility of using a GPU-LBM code with a bi-directional evolutionary structural optimization (BESO) algorithm for real world multi-physics design problems. So far BESO algorithms have not been employed with GPU architectures [3]. Furthermore, GPU implementation in topology optimization is a very recent field of research and therefore more studies must be made to increase the application of topology optimization in real world design problems. To the best of the authors’ knowledge this is the first time a multi-physics topology optimization problem with an LBM-FEA code is implemented in a GPU architecture and compared with a CPU version of the code. The speed-up and optimization results are compared and discussed giving new insights into the difficulties involved with GPU-implemented topology optimization.

2 Methodology

In this section, the LBM for modeling the fluid dynamics is briefly introduced. This is followed by the mathematical definition of the topology optimization problem and the BESO method is then described. For further details on GPU computing, the LBM and BESO methods the reader should seek out the textbooks by Sanders and Kandrot [46], Succi [52] and Huang and Xie [20], respectively.

2.1 Lattice Boltzmann modelling

The ability to simulate flows through the use of CFD has progressed considerably, reducing test requirements at a lower cost and risk. Furthermore, CFD has been used to simulate real-world phenomena [25]. In the case when engineering applications require resolving fluid interactions with high accuracy, or involve low Mach number flow, mesoscopic flows and complex geometrical arrangements, LBM offers an alternative CFD method rather than using the Navier–Stokes (NS) equations [52]. Moreover, LBM has been applied to a wide range of applications from theoretical physics to real-world problems, and is expected to provide one of the next evolutions in the computational sciences [2, 60], notably for multi-scale simulation and optimization [26, 27]. The LBM is a memory-bound algorithm, which makes it suitable for the GPU architectures. GPU offers a computational environment with many processors. GPU-implemented LBM codes have been used on a variety of applications in the aerospace field [60], being competitive in both accuracy and execution speed. However, the LBM has not been used extensively in topology optimization algorithms [35]. Furthermore, to the best of the authors’ knowledge, a GPU-implemented LBM has not been coupled with and compared against CPU topology optimization codes. Therefore, this work couples a GPU-implemented LBM to a BESO algorithm and compares both the final design, in terms of objective, and the computational time to a CPU-implemented version of the code.

The LBM constructs kinetic models, based on Newton’s laws, incorporating the essential physics of microscopic processes, such that one can correctly model the macroscopic processes. A finite number of molecules, whose motion is governed by Newton’s laws of dynamics, are used to model the fluid. A discretized Boltzmann equation is solved by the LBM, which uses velocity distribution functions to represent macroscopic properties. Both collision, the interaction of two particles, and streaming, the movement of particles from one node to the nearest neighbor, are modeled by the discrete Boltzmann equation. The fundamental concept behind the LBM is to calculate the macroscopic quantities from the moments of the finite number of velocity distribution functions, which are obtained by solving the discrete Boltzmann equation. A D3Q19 lattice is used in this work, i.e. 3 dimensions and 18 moving particles per rest node. The total number of iterations used for the LBM simulations is 4000, since stability has been demonstrated and validated against NS simulations using a commercial code, ANSYS CFX [14], and experimental analysis [33]. For a more in-depth overview of the LBM, interested readers should seek out the textbook by Succi [52]. For details on how the LBM and FEA are coupled, the reader is advised to seek out the previous works by the authors on this topic [35,36,37].

2.2 Topology optimisation

The first optimization problem studied in this article is the compliance minimization, or stiffness maximization, of a micro fluidic mixer under fluid pressure loads with a structural volume constraint. Therefore, the objective is to find the distribution of a pre-defined amount of material such that a design with maximum stiffness is obtained. Hence, the topology optimization problem can be mathematically stated as:

where \(\mathbf{x }\) is the vector of design variables, \(x_i\), n is the total number of elements in the model and V is a predefined structural volume. Since the algorithm is discrete (Sect. 1) the design variables can only be equal to \(x_i = 1\), representing solid material, or \(x_i = 0\), representing fluid/void material.

The second optimization problem this article is concerned with is the vorticity maximization of micro fluidic mixers for a given Reynolds number and structural volume. Therefore, the objective is to find the topology of the mixer that gives the highest vorticity in the region of interest. Hence, the topology optimization problem for this case is mathematically formulated as follows:

where \(\overrightarrow{\omega }\) is the vorticity of the flow in the region of interest, Re is the Reynolds number of the flow, and \(Re_0\) represents a predefined Reynolds number. For this problem, a design variable of \(x_i = 1\) represents fluid elements, whereas \(x_i = 0\) represents solid elements.

2.2.1 Evolutionary structural optimisation

The original ESO algorithm is monotonic, i.e. elements can only be removed from the design domain [63]. These early methods are based on the successive elimination of inefficient material, gradually evolving the design towards the optimum [65]. Although the ESO method has been applied to a wide range of problems [51, 64], it is limited in two main ways. First, as already mentioned, structure can only be removed from the design domain, consequently the initial model must be significantly over designed. Second, if structure is prematurely removed it cannot be recovered [34]. Subsequent ESO methods, referred to as BESO, allow material to be re-admitted to the design domain [44]. Modern BESO algorithms are convergent and mesh independent [18], simultaneously removing and adding material from and to the design domain until all constraints and a convergence criterion are satisfied. More recently, a further improvement to BESO methods introduced the use of soft material to model the void elements in the FEA [19], known as soft-kill BESO with the former being hard-kill BESO. This article uses a soft-kill BESO method coupled to a GPU-implemented LBM. This work builds on a recent study by the authors [35], which implemented a CPU version of the code, aiming to drastically improve computational efficiency, bring high-fidelity methods forward to the preliminary design stage.

2.2.2 Sensitivity analysis

In this study, two different objectives are considered (Sect. 2.2). The first is minimum compliance or maximum stiffness, used for structural optimization. In FEA the removal of an element results in a reduction in the stiffness of the structure which is equal to the element strain energy [9]. This change is defined as the element sensitivity for the compliance minimization problem:

where c is the compliance, \(p=3\) is the penalization factor, the subscript e represents elemental values and superscript cmp and 0 represents a compliance objective and solid values, respectively. The element sensitivity (Eq. 3) takes advantage of the SIMP material model [5], where the Young’s modulus, E, is modeled using a power law penalization method, as follows:

In design-dependent load problems, as is the case here, changes in the structure lead to variations in the load vector. Therefore, this variation in the load vector must be considered in the sensitivity analysis [35]. Thus, from the definition of the optimization problem (Eq. 1) the sensitivity analysis for compliance minimization (Eq. 3) can be updated such that the variation in the load vector is considered, as follows [35, 37, 66]

where \(\varDelta \mathbf{f }_{e}\) is the change in the element load vector between optimization iterations. Taking the isoparametric bilinear elements used in this work, the change in the load vector of one element for a fluid pressure load is found by [35]

where \(P_i\) and \(A_i\) are the pressure load and elemental area, respectively. Equation 6 is applicable for cases where the flow travels in the x-direction and the structure is aligned perpendicular to the flow, as is the case in this study. If this is not the case then the vector defined in Eq. 6 must be updated to match the loading conditions. This study takes advantage of a two-domain approach, i.e. the structural and fluid dynamics are solved separately, during every optimization iteration. This method is more flexible than a monolithic approach, which solves an adjoint problem to update the structure and fluid solutions together. Furthermore, a monolithic approach is problem specific unlike a two-domain approach, which can be applied to all FSI problems. In a previous study by the authors [37], this approach was demonstrated to work well as both the structural and fluid dynamics were shown to converge, even allowing a relaxation of the coupling conditions.

The second objective considered in the paper is vorticity maximization (Sect. 2.2). Thus, the goal of this problem is, for a given Reynolds number, to increase the mixing of two fluid species. This is imperative for the operation of micro fluidic mixers, as their purpose is to efficiently mix two, or more, fluid species. Hence, since the flows have low Reynolds numbers, normally lower than 1000, vorticity is an accurate measure of the degree of mixing as shown in the works of Woodfield et al. [61] and Moghtaderi et al. [33]. The authors of this work recently developed a soft-kill BESO method for the vorticity maximization of fluids using the LBM [35]. The circulation method for vorticity [1] and the shape derivative given in Kasumba and Kunisch [21] are used to derive the sensitivity number to solve this optimization problem. Therefore, the sensitivity number for the vorticity maximization is determined by:

where \(\overrightarrow{\omega }\) is the vorticity of the flow, the superscript vrt represents the vorticity objective and \(\varDelta \gamma _{e}\) is the change of, \(\varDelta\), the element velocity vector defined as:

where \(\gamma _x\), \(\gamma _y\), \(\gamma _z\) are the spatial components and \(W_x\), \(W_y\) and \(W_z\) are the circulation components. For more information on the derivation of the sensitivity numbers (Eqs. 5 and 8) and for a validation against meta-heuristic algorithms the reader is advised to seek out the previous study by the authors [35], which outlines the method in more detail.

2.2.3 Mesh dependency and convergence

To guarantee that a solution to the topology optimization problem (Eqs. 1 and 2) exists, some restrictions on the design must be introduced [50]. The sensitivity numbers can become discontinuous across the element boundaries, resulting in mesh dependency or checkerboarding (the repetition of solid and void material). A filter scheme is used, to smooth the element sensitivity numbers across the entire domain, alleviating the problem of mesh dependency and checkerboarding. The filter scheme is similar to that presented by Sigmund and Petersson [50]; however, nodal sensitivity numbers are used when calculating the updated element sensitivity numbers based on the surrounding structure. The nodal sensitivity numbers are found by taking the average of all the element sensitivity numbers that are connected to the node, thus:

where M is the number of elements connected to the jth node and \(\alpha _{{e}_i}\) is the ith element sensitivity number (Eqs. 5 and 7). The weighting factor of the ith element, \(w_i\), is a function of the distance between the center of the ith element and the jth node, \(r_{ij}\), thus:

The nodal sensitivity numbers (Eq. 9) are then used in the mesh independency filter to find the smooth element sensitivities. A filter radius, \(r_{\text {min}}\), is defined to identify the nodes that will have an effect on the element sensitivity. The value of \(r_{\text {min}}\) must be large enough such that the associated sub-domain, \(\varOmega\), covers at least one element. Furthermore, this value must remain constant for all mesh sizes. Nodes that are located inside \(\varOmega\) contribute to the smoothing of the element sensitivity, by:

where N is the total number of nodes in the sub-domain, \(\varOmega\), and \(w(r_{ij})\) is the linear weighting factor, defined as:

The filter scheme effectively addresses the mesh-dependency and checkerboard problems. However, the objective function and corresponding topology may not be convergent. To overcome this problem, Huang and Xie [18] showed that when the sensitivity numbers (Eq. 11) are averaged with their previous values the solution becomes steadier, thus:

where itr is the current iteration number. Therefore, the updated sensitivity number includes the history of the sensitivity information from previous iterations.

2.2.4 Convergence criteria

For every iteration the BESO algorithm defines a target volume, found by:

where ER, the evolutionary ratio, is a percentage of the current structural volume, and increases or decreases \(V_{{\text {itr}}+1}\) towards the desired volume constraint, V, defined in Eq. 1. This, in turn, sets the threshold, \(\alpha _{\text {th}}\), of the sensitivity numbers. Thus, solid elements are removed from the design domain when:

and elements are added back to the design domain when:

A maximum addition ratio, \({\text {AR}}_{\text {max}}\), is set to restrict the amount by which the volume of the structure can increase between iterations. Once \({\text {AR}}> {\text {AR}}_{\text {max}}\), the elements with the highest sensitivity numbers only are added, such that \({\text {AR}} = {\text {AR}}_{\text {max}}\). Then, to satisfy the target volume \(V_{{\text {itr}}+1}\), the elements with the lowest sensitivity numbers are removed.

The iteration target volume remains constant at V once the volume constraint is satisfied. The topology evolves until a convergence criterion is satisfied. This is defined as:

where \(\delta\) is a predefined tolerance, O is the objective function and itr is the current iteration of the optimization algorithm. Equation 17 evaluates the change in the objective for the last ten solutions. Therefore, if the change in the objective is minimal the solution is said to be converged. For a more in-depth discussion on evolutionary structural optimization algorithms, one should consult the latest textbook [20] and review paper [34] on the subject.

3 Case study

A baffled micro-reactor is used in this study as depicted in Fig. 1. The model is made up of a main pipe which is fitted with a fuel inlet tube, running along the axis of the main pipe, and a multi-holed baffle, which is where the secondary flow is introduced to the main flow. The lay-out and initial topology of the baffle are shown in Fig. 1.

Baffled micro-reactor used in this study [57]

The fluid domain (Fig. 1c) is defined in LBM nodes, here the lattice used has dimensions \(680 \times 73 \times 73\) lattice units, with additional nodes used for the wall, in the x, y and z directions, respectively. The location of the baffle in the main pipe is 60 lattice units downstream of the flow inlet (Fig. 1c). The inlet boundary condition imposed is the velocity of the flow in the inlet tube and annulus area. The outlet boundary condition has a convective boundary condition, based on the velocity, applied. A no-slip condition is implemented along the walls by modeling them as full-way bounce-back. To mimic the experiments performed by Moghtaderi et al. [33], the difference in the mass flow rate between the inner tube and annulus area is set to \(5\%\).

In the FEA a clamped boundary condition is applied along the perimeter of the baffle. Non-designable material is designated for the central hole boundary, since this is determined by the fuel line and inlet conditions, which have been fixed in the flow domain (Fig. 1a) to be consistent with the previous studies [14, 33].

The CPU simulations are performed on an Intel(R) Core(TM) i7-2720QM CPU 2.20 GHz using 4 cores in parallel. The GPU simulations are performed on a Tesla M2070 with 5375 MB of total global memory, 448 available cores, 1150 MHz of stream processor rate and 1566 MHz of memory clock rate. However, the speed of a simulation on the GPU is affected by the number of threads, which are created in the GPUs. Therefore, the specification of the hardware automatically calculates the number of threads by using the interfacing features of CUDA to query the provided GPU. Here, the solver is instructed to use 512 threads per block/kernel for a single simulation, which is one of the fastest settings provided by the current GPU.

4 Compliance minimization

In this section, topology optimization (Sect. 2.2) is applied to the multi-holed baffle plate (Fig. 1b) to maximize its stiffness for a given volume fraction. First, the CPU results are presented. These results are used as the benchmark for the GPU-implemented solutions. This is followed by the results of the single-precision GPU implantation of the code. In the literature of GPU-implemented FEA codes, it has been shown that using single-precision can cause numerical issues which result in convergence issues [67]. Therefore, a double-precision version of the code is implemented and compared with the CPU and single-precision GPU results as well. However, it must be noted that while double-precision improves the numerical accuracy compared to single-precision, it only partially corrects these numerical issues as has been shown in [13, 54].

4.1 CPU implementation

The CPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters: evolutionary ratio, \({\text {ER}} = 0.02\), volume fraction, \(V = 0.58V_0\), maximum addition ratio, \({\text {AR}}_{\text {max}} = 0.02\), and tolerance, \(\delta = 0.001\); are defined before the BESO algorithm is applied. The CFD mesh has 21,742,320 degrees of freedom. The initial and final structure is shown in Fig. 2.

The final design obtained using the CPU code has been validated in previous numerical studies [35, 36]. The compliance of the initial structure is \(5.13 \times 10^9 \ {\text {Nm}}\), whereas the final structure has a compliance of \(2.281 \times 10^9 \ {\text {Nm}}\). Therefore, the CPU-implementation of the code is able to reduce the compliance by approximately \(56\%\). The convergence history for the CPU algorithm is shown in Fig. 3.

The algorithm takes 179 iterations to converge to the final solution (Fig. 3) when performed on a CPU. The computation time is approximately 7 days and 11 h to complete the optimization. This is mainly due to the computational burden of the LBM, which has to be run 179 times. Typically, at the preliminary design stage, hundreds of design variations are being considered. Thus, at this computational expense it would take the CPU-implementation years to run all the cases. Hence, this is not a viable option and could only be used for the last iterations later in the design process.

4.2 Single-precision GPU implementation

The most computational efficient version of the multi-physics topology optimization algorithm studied in this work makes use of the single-precision GPU-LBM. However, one finds in the literature, on GPU-implemented topology optimization algorithms, cases where single-precision results in a lack of convergence, due to the inherent round-off errors [30]. Thus far, GPU-implemented topology optimization has been confined to structural optimization, hence GPU-FEA codes are producing these errors (Sect. 1). Therefore, it is expected in this study to observe similar errors when a single-precision code is used, since this study extends the GPU implementation to multi-physics problems. Namely, GPU fluids and CPU structures.

The single-precision GPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters are identical to the previous case (Sect. 4.1). The final structure determined by CPU and single-precision GPU is shown in Fig. 4.

The final structure obtained using the single-precision GPU code is clearly not optimal (Fig. 4). First, the initial structure (Fig. 2a) has a symmetry about the x- and y-axis, which is lost with the use of the single-precision GPU code. Further, it is known that the optimal structure for this particular problem should be symmetric, since the physics of the problem does not contain any unsymmetrical behavior [35, 36]. Second, there is clear evidence of numerical errors in the topology (Fig. 4b), shown by the small holes that have formed, which are not present in the final topology of the CPU code (Fig. 4a). The compliance of the final structure found using the single-precision GPU code is \(3.912 \times 10^9 \ {\text {Nm}}\), which is \(71.5\%\) increase from the final compliance of the structure found by the CPU code. The convergence history of the single-precision GPU algorithm is shown in Fig. 5.

Clearly convergence is never achieved by the single-precision GPU algorithm, which stops after 336 iterations (Fig. 5). This equates to a computational time of approximately 13 h, 31 min and 48 s. However, while this is a significant improvement over the computational expense of the CPU algorithm, over 13 times faster, the final result is not a feasible optimum. One could take the best solution found by the algorithm, in this case at iteration 47 a solution was found having a compliance of around \(2.6\times 10^9 \ {\text {Nm}}\), but convergence is never achieved and thus it is unlikely this solution is an optimum. Alternatively the best solution found could be used as an initial structure for an algorithm that is known to converge, possibly speeding up the overall process. Therefore, the single-precision GPU-driven topology optimization code, of this work, cannot guarantee convergence for the multi-physics compliance minimization problem. This confirms the conclusions of previous studies, which have observed the same phenomena for GPU-implemented SIMP algorithms on structural optimization problems [30, 67]. Thus, double-precision must be used.

4.3 Double-precision GPU implementation

Double-precision GPU codes are not as computationally efficient as single-precision GPU codes; however, they have a lower round-off error due to a higher floating point capacity. This is also the cause of the reduction in computational efficiency, since more memory is needed to store a higher amount of floating points. The GPU used in this work has a 2.0 compute capability, meaning that the floating point computation abides by the IEEE 754 standard for floating point arithmetic. Hence, round-off errors should be kept to a minimum.

The double-precision GPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters are again identical to the benchmark case (Sect. 4.1). The final structure determined by the single-precision and double-precision GPU codes is shown in Fig. 6.

The final structure obtained using the double-precision GPU code shows a significant improvement over the single-precision GPU (Fig. 6). The structure is symmetric about both the x- and y-axis (Fig. 6b). Furthermore, no numerical errors are present in the structure, unlike the single-precision GPU code (Fig. 6a). Moreover, clear similarities between the final structure found using the CPU code (Fig. 2b) and the structure found using the double-precision GPU code are observed. The compliance of the final structure found using the double-precision GPU algorithm is \(2.577 \times 10^9 \ {\text {Nm}}\), which is a \(34\%\) reduction compared with the single-precision GPU code and only a \(12\%\) increase compared with the CPU code. This increase in compliance is significant; however, by observing the convergence history of the CPU algorithm (Fig. 3) it is clear that the double-precision GPU algorithm is converging to a local optimum. This is evident by comparing the final compliance found by the double-precision GPU algorithm with the multiple convergence cycles of the CPU algorithm (Fig. 3). Moreover, in a recent study by the authors [37], it was shown that several local optima for this problem exist. Therefore, it is well known that one cannot criticize a gradient based optimization method for finding a locally optimal solution. The convergence history of the double-precision GPU algorithm is shown in Fig. 7.

Unlike the single-precision GPU code, convergence is achieved for the double-precision GPU code (Fig. 7). Furthermore, the double-precision topology optimization algorithm only takes 129 iterations to converge, which is less than the 179 iterations required by the CPU algorithm (Sect. 4.1). Hence, the computational time required to achieve convergence is approximately 10 h, 1 min and 48 s. Hence, 1 optimization iteration takes approximately 280 s, which is about double the time the single-precision topology optimization code takes (145 s). Therefore, the double-precision GPU code is implemented efficiently. Moreover, the double-precision GPU code is about 18 times faster than the CPU code, with a \(12\%\) reduction in objective. Hence, Pareto’s principle of design is applicable here—\(80\%\) of the design comes from \(20\%\) of the time. Thus, this computational efficiency is more than beneficial at the preliminary design stages. Therefore, the double-precision GPU code could feasibly be used at the preliminary stages, whereas the CPU code could only be employed at the last stages in the design.

4.4 Difference between CPU and GPU implementation

The final analysis of this section is to quantify the difference between the CPU and GPU algorithms. Clearly, the CPU algorithm is able to find an optimal solution (Sect. 4.1), while the single-precision GPU is not (Sect. 4.2). The reason for this must lie in a discrepancy between the two algorithms calculated sensitivity functions, which drive the design updates. Therefore, to quantify this discrepancy, the percentage difference of the sensitivity functions for the initial baffle topology using the CPU and GPU algorithms, i.e. \(|\alpha _{\text {CPU}} - \alpha _{\text {GPU}}|/\alpha _{\text {CPU}}\), is illustrated in Fig. 8.

Clearly the discrepancies are kept to a minimum, having a maximum difference of \(0.48\%\) and an average difference of \(0.038\%\) (Fig. 8). However, one observation is that the distribution of the difference between the two sensitivity functions is not uniform and, more importantly, not symmetric. This explains why the GPU implemented algorithm becomes asymmetric, driving the solution to a non-optimal final design (Sect. 4.2). Therefore, even small variations, due to differences in computational architecture are able to perturb a system away from the optimum design. This is an important consideration when performing topology optimization on GPU-architectures.

5 Vorticity maximization

The second problem solved in this article applies the topology optimization algorithm (Sect. 2.2) to the multi-holed baffle plate (Fig. 2b) to maximize the amount of mixing between the two fluid species in the micro fluidic mixer. This problem is first solved using a CPU implementation of the code, to get a benchmark, which the GPU implementation can be compared against. A single-precision GPU-implemented code is then applied to the same problem. The previous section demonstrated how the lower floating point accuracy of single-precision GPU can lead to convergence errors in the topology optimization algorithm. Therefore, a double-precision GPU implementation is applied to the problem and compared against the CPU and single-precision GPU results.

5.1 CPU implementation

The CPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters are identical to that of the compliance minimization problem (Sect. 4). Furthermore, the CFD mesh is the same as for the compliance minimization problem, having 21,742,320 degrees of freedom. The initial and final structure is shown in Fig. 9.

The final design obtained using the CPU algorithm has been validated in previous numerical studies [35, 36]. The vorticity of the initial topology is \(4856 \ {\text {s}}^{-1}\), whereas the final topology has a vorticity of \(6060\ {\text {s}}^{-1}\). Therefore, the CPU implementation of the code increases the vorticity of the fluid by \(25\%\). The convergence history for the CPU algorithm is given in Fig. 10.

The algorithm takes 76 iterations to converge to the final solution (Fig. 10) when performed on a CPU. Therefore, the computation time is approximately 3 days and 4 h to complete the optimization. Hence, similarly to the first topology optimization problem (Sect. 3), at the preliminary design stages this computation time is not viable, making the method only feasible for use later in the design cycle.

5.2 Single-precision GPU implementation

The single-precision GPU implementation has the fastest speed-up compared to the other methods, but has already been shown to produce numerical issues in the topology optimization algorithm (Sect. 4.2). This can only be attributed to the round-off errors inherent in single-precision GPU, since double-precision implementations have been able to achieve convergence (Sect. 4.3). Thus far, GPU-implemented BESO topology optimization algorithms have only been applied to structural objectives, namely compliance minimization. This section deals with a fluid objective, i.e. vorticity. Therefore, it is expected that the single-precision GPU implementation will not be able to solve this topology optimization problem, since the round-off errors are present in the formulation of the objective, i.e. the fluid properties, rather than the load application.

The single-precision GPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters are the same as is defined in the compliance minimization problem (Sect. 4). The final structure determined by CPU and single-precision GPU is shown in Fig. 11.

As was expected, the final topology obtained by the single-precision GPU code is clearly not optimal (Fig. 11). Furthermore, the topology is not physically feasible, since there is structure which is suspended inside void material with no connection to the constraints. Moreover, in [35] it was demonstrated that the final topology had a symmetry about the \(\pm 45^{\circ }\) diagonals. This symmetry is observed in the CPU result (Fig. 11a), whereas the single-precision GPU produces a final topology that is almost symmetric about the x- and y-axis. The final vorticity found using the single-precision GPU code is \(5541 \ {\text {s}}^{-1}\), which is a \(9\%\) decrease compared to the final vorticity found by the CPU code. The convergence history of the single-precision GPU algorithm is shown in Fig. 12.

Unlike the compliance optimization problem (Sect. 4.2), convergence does seem to be achieved (Fig. 12). Furthermore, the algorithm only requires 25 iterations to achieve convergence, compared to 76 for the CPU implementation. This equates to a computational time of approximately 1 h, which is 76 times faster than when a CPU algorithm is used. However, this notable improvement in computational expense is fruitless, since the final result is not a feasible optimum; although, it expressed convergence. Therefore, alike the compliance minimization problem, the single-precision GPU-implemented topology optimization code, of this work, cannot guarantee convergence to a feasible design for the vorticity maximization problem. Therefore, in the next section a double-precision GPU implementation is applied to the same problem to determine if the increase in numerical accuracy can produce a feasible optimum for a minimal increase in computational expense.

5.3 Double-precision GPU implementation

For the compliance minimization problem, it was found that the convergence issues occurring in the single-precision GPU implementation are avoided when double-precision is used. However, for the problem of this section, the single-precision GPU implementation does not seem to have convergence issues, but does not converge to a similar design found by the CPU implementation, or even a feasible one. Therefore, the double-precision GPU code may not be able to improve on the result found by the single-precision code. Nevertheless, the double-precision GPU implementation is applied to determine if round-off errors are the cause of the infeasible final design.

The double-precision GPU-LBM code is coupled with the BESO algorithm (Sect. 2.2). The optimization parameters are defined as in the compliance minimization problem (Sect. 4). The final structure determined by the single-precision and double-precision GPU codes is shown in Fig. 13.

The final topology obtained using the double-precision GPU code (Fig. 13b) is very similar to that found using the single-precision code (Fig. 13a). Again, the topology is almost symmetric about the x- and y-axis. Further, the final design is still not physically feasible since structure is suspended in void material. Unlike the compliance minimization problem, implementing double-precision in the GPU does not produce a feasible optimum. The vorticity of the final topology found using the double-precision code is \(5553 \ {\text {s}}^{-1}\), which is a \(0.2\%\) increase compared to the single-precision code and still a \(8\%\) decrease compared to the CPU code. The convergence history of the double-precision GPU algorithm is given in Fig. 14.

As would be expected, since the final designs are similar (Fig. 13), so too are the convergence histories of the single- and double-precision GPU-implemented topology optimization algorithms (Figs. 12, 14). Again, the double-precision GPU code requires 25 iterations to achieve convergence. Therefore, the computational time required to achieve convergence is approximately 1 h, 56 min and 24 s, almost the double of what is required by the single-precision code. Hence, the double-precision code is about 39 times faster than the CPU implemented code. However, alike the single-precision GPU implementation the double-precision implementation fails to produce a feasible final design. Hence, unlike the compliance minimization problem (Sect. 4), this computational efficiency is not beneficial at the preliminary design stages, since infeasible designs are produced.

The results of this section indicate that there is an inherent difference between CPU and GPU architectures, which results in the optimizer converging to a different solution. This is troubling, since both CPU and GPU topology optimization codes started at the same initial design, had the same optimization parameters and both achieved convergence according to the same convergence criteria; however, they did not produce complementary results. This was not observed in the compliance minimization problem (Sect. 4), where the single-precision GPU code could not achieve convergence, but the double-precision code was able to converge to a design consistent with the CPU algorithm. One possibility is the difference in the reliance of the objectives, compliance and vorticity, on the GPU implementation. The objective, J, for compliance and vorticity is formulated as follows:

where \(\varOmega\) is the fluid domain, \(\gamma\) is the velocity field, \(\mathbf{u }\) is the displacement field and \([\mathbf{K }]\) is the stiffness matrix of the structure. Therefore, since it is assumed that the structure stays within the elastic limit, i.e. does not undergo any plastic deformation, the following must hold:

where \(\mathbf{F }\) is the force field applied to the structure. Hence, the compliance objective can be re-written as:

Therefore, by comparing Eqs. 19–21 it is noted that the reliance of the objectives on the GPU-LBM are different. For the compliance minimization problem the reliance is linear, since the applied force (\(\mathbf{F }\)) is determined by the LBM; whereas, for the vorticity objective it is more complicated, since the velocity field (\(\gamma\)) is determined by the LBM. The vorticity objective takes the square of the curl of this velocity field. Where the curl of a vector describes the infinitesimal rotation of the vector field. Therefore, it takes the difference of the partial differential in the three-spacial dimensions at every point in the vector field, mathematically this is described as:

where \(\mathbf{i }\), \(\mathbf{j }\) and \(\mathbf{k }\) are unit vectors for the x-, y- and z-axes. As pointed out in the CUDA programming guide [38, 39] CUDA implements division and square root operations that are not IEEE-compliant, i.e. their error in units in last place is non-zero. However, addition and multiplication are IEEE-compliant. Therefore, any discrepancies between the CPU and GPU are greater in the vorticity objective than in the compliance objective since division and square root operations are involved in the calculation of the objective and sensitivity functions.

6 Constrained topology optimization

It is sometimes possible, by looking at the physics of the topology optimization problem, to determine certain required conditions, which can be directly enforced as constraints on the optimizer. This reduces the design space of the optimization problem and can often assist the algorithm in finding an optimum solution, or reduce its computational expense. Therefore, in this section two different constraints are applied, separately, to both the single-precision and double-precision GPU implementations to try and improve the designs obtained. First, it was shown that for the compliance minimization problem (Sect. 5.2) a symmetry about the x- and y-axis is an inherent feature of any feasible optimum to this problem [35]. This is due to the lack of any unsymmetrical physical drivers in the system. Therefore, a symmetry constraint is implemented. Similarly, for the vorticity maximization problem, it was found that the CPU result has a symmetry about the \(\pm \ 45^{\circ }\) diagonals (Sect. 5.1); however, is not symmetric about the x- and y-axis. Therefore, a symmetry constraint is applied to this problem, ensuring that this symmetry is enforced. Finally, it was demonstrated that for the vorticity maximization problem, physically infeasible final designs are produced by the single- and double-precision GPU implementations. Therefore, the last analysis of this section derives a novel feasibility constraint and applies it to the vorticity maximization problem only, since the GPU implementations of the compliance minimization problem do not produce infeasible designs.

6.1 Symmetry constraint

6.1.1 Compliance minimization

First, a symmetry constraint is applied to the compliance minimization problem for the single-precision GPU implementation. This constraint simply takes advantage of the symmetry of the problem, by taking only the top left quarter of the structure, at the end of every optimization loop, and then reflecting it about the z and then y-axis (Fig. 1c) to create the bottom half and right side of the structure for the next optimization loop. The final structure determined by CPU without a symmetry constraint and single-precision GPU with a symmetry constraint is shown in Fig. 15.

The final structure obtained using the single-precision GPU code with a symmetry constraint is comparable to the CPU final design (Fig. 15). The symmetry constraint has forced the optimizer to only consider designs which are symmetric about the x- and y-axes. This restriction on the design domain is completely valid, since it is known before the optimizer is run that symmetry is a requirement of the final design [35]. Therefore, by adding the symmetry constraint we are not restricting the optimizer, rather ensuring that it only considers physically feasible designs. The compliance of the final design found using the single-precision GPU code with a symmetry constraint is \(2.416 \times 10^9 \ {\text {Nm}}\), which is only a \(6\%\) increase from the final compliance of the structure found using the CPU implementation (\(2.281 \times 10^9 \ {\text {Nm}}\)). Furthermore, the compliance is \(6\%\) less than the compliance of the final structure found using the double-precision GPU implementation. Hence, simply adding a symmetry constraint has produced a more optimum design than using a double-precision GPU code instead of a single-precision. The convergence history of the single-precision GPU algorithm with a symmetry constraint is shown in Fig. 16.

As is expected, since the final design is consistent with the CPU result, convergence is achieved for the single-precision GPU implementation with a symmetry constraint (Fig. 16). However, the single-precision GPU algorithm with a symmetry constraint takes 287 iterations to converge. Hence, the computational time required for convergence is approximately 11 h, 33 min and 36 s, which is over an hour and half longer than the double-precision code without the symmetry constraint, due to the significant increase in required iterations. Nevertheless, this is still over 15 times faster than the CPU implementation, with only a \(6\%\) reduction in objective. The double-precision had a speed-up of 18 times, but with a \(12\%\) forfeit in objective. Therefore, the double-precision implementation is still the most computationally efficient. A further observation is the large number of convergence cycles present in the convergence history (Fig. 16). This clearly indicates the presence of several local optima in the design space, the impact of this was discussed in the analysis of the results of the double-precision algorithm (Sect. 4.3). Therefore, it is no surprise that the different algorithms find different local optima. The symmetry constraint is not applied to the double-precision implementation for the compliance minimization problem since it produces a symmetric final design. Hence, symmetry is never broken and the constraint would never be violated. Therefore, applying the symmetry constraint will simply yield the same results as without the constraint.

6.1.2 Vorticity maximization

Next, the symmetry constraint is applied to the vorticity maximization problem for the single-precision GPU implementation. In this case, the initial structure of the problem is asymmetric about the x- and y-axis as this was found to be the case in the final design produced by the CPU implementation. Further, the symmetry constraint ensures that the structure is always symmetric about the \(\pm \ 45^{\circ }\) diagonals. This is done by taking only the structure on one side of the \(45^{\circ }\) diagonal, at the end of each optimization loop, and reflecting it over this axis to create the structure on the other-side of the \(45^{\circ }\) diagonal for the next optimization loop. The final structure determined by CPU without a symmetry constraint and single-precision GPU with a symmetry constraint is shown in Fig. 17.

The final structure obtained using the single-precision GPU code with a symmetry constraint is comparable to the CPU design (Fig. 17). The symmetry constraint has forced the optimizer to only consider designs that are symmetric about both the \(\pm \ 45^{\circ }\) diagonals and asymmetric about the x- and y-axis. Unlike the symmetry constraint on the compliance minimization problem, this restriction on the design is not physically valid as there is no physical reason to enforce it. Instead, we are using our knowledge of the CPU solution to assist the optimizer in finding a feasible design. The difference is, one would not have any reason to enforce this symmetry constraint without any prior knowledge of the solution. The final vorticity of the design found using the single-precision GPU code with a symmetry constraint is \(6077 \ {\text {s}}^{-1}\), which is an increase of \(0.3\%\) from the vorticity of the final design obtained using the CPU code (\(6060 \ {\text {s}}^{-1}\)). This small increase in objective is due to the algorithm being guided to the right solution, therefore, it is already in the neighborhood of the solution before the optimization begins. The convergence history of the single-precision GPU algorithm with a symmetry constraint is given in Fig. 18.

The solution only takes 18 iterations to converge to the final design (Fig. 18). This further emphasizes that the solution is put on the right track by the symmetry constraint. This equates to a computational time of approximately 43 min and 30 s. Therefore, while this method would not be possible if no prior knowledge of the solution is known, it could be used after the CPU implementation has revealed certain features of the design, from earlier solutions, to speed up the solution of other design alternatives.

Finally, since the double-precision GPU implementation could not produce feasible design for the vorticity maximization problem, the symmetry constraint is applied. The final structure determined by the single-precision and double-precision GPU code with a symmetry constraint is shown in Fig. 19.

As is expected the double-precision algorithm with a symmetry constraint produces an almost identical final topology to the single-precision algorithm with a symmetry constraint (Fig. 19). The final vorticity of the design found using the double-precision GPU algorithm with a symmetry constraint is \(6084 \ {\text {s}}^{-1}\), which is an increase of \(0.1\%\) from the vorticity of the final design using a single-precision algorithm with a symmetry constraint. The convergence history of the double-precision GPU algorithm with a symmetry constraint is shown in Fig. 20.

The solution takes 19 iterations to converge to the final design (Fig. 20). This equates to a computational time of approximately 1 h, 28 min and 48 s. This is just over double the time required by the single-precision GPU code with a symmetry constraint, with only a \(0.1\%\) improvement in objective. Therefore, in the case of a symmetry constraint the computational efficiency of the single-precision GPU code is superior to the increase in numerical accuracy of the double-precision GPU code.

6.2 Feasibility constraint

As mentioned earlier, the designs produced by the single- and double-precision GPU codes for the unconstrained vorticity maximization problem are not feasible. This is because the final topology contains structure suspended in void material. Therefore, in this section a feasibility constraint, which checks for structural islands is implemented to ensure feasible designs are produced. The feasibility constraint works by creating, what is termed here as, a connectivity matrix, \([\mathbf{A }]_{m \times n}\), where each entry, (m, n), in the matrix corresponds to an element on the baffle structure. Therefore, each entry contains a 1 if the corresponding element is solid and a 0 if the corresponding element is void. Hence, for each structure produced at the end of each optimization loop an \([\mathbf{A }]\) is calculated. The closed structural boundaries, inside the baffle, for each structure are determined. Finally, the feasibility constraint sums the number of closed boundaries, \(n_{\text {cb}}\) inside the structure. If the number of closed boundaries is less than or equal to two, \(n_{\text {cb}} \le 2\), the volume constraint for that iteration is reduced and a new design is found. This process is repeated until a feasible design is produced for that iteration.

The feasibility constraint is applied to the single-precision GPU algorithm for the vorticity maximization problem with the same initial topology and optimization parameters as outlined in Sect. 5. The final design for the single-precision GPU algorithm with and without the feasibility constraint is shown in Fig. 21.

The final structure produced by the single-precision GPU is only feasible when the feasibility constraint is preformed (Fig. 21). There are no longer any structural islands present in the final design. Furthermore, unlike the symmetry constraint applied to the vorticity maximization problem, the restriction on the design domain is physically valid, since a feasible structure is a requirement of the final design. However, the final design is still noticeably different from the design found by the CPU algorithm (Fig. 13). This is because the design found using the single-precision GPU code with a feasibility constraint is still symmetric about the x- and y-axis, similar to that found without a feasibility constraint. The final vorticity of the design found by the single-precision GPU algorithm with a feasibility constraint is \(5881 \ {\text {s}}^{-1}\). This is a \(3\%\) reduction compared to the vorticity of the topology determined by the CPU algorithm (\(6060\ {\text {s}}^{-1}\)). The convergence history of the single-precision GPU algorithm with a feasibility constraint is given in Fig. 22.

The solution takes 28 iterations to converge to the final design (Fig. 22). This equates to a computational time of approximately 1 h, 7 min and 41 s, which is a speed-up of about 67 times compared with the CPU code. Therefore, for such a large increase in computational efficiency and only a \(3\%\) reduction in the objective, this is beneficial in the preliminary design stages where the CPU code is not viable due to its large computation time (over 3 days). Therefore, adding the feasibility constraint to the single-precision GPU algorithm has made the topology optimization method a viable option for use in the preliminary design stages, bringing these tools forwards in the design process.

Finally, the feasibility constraint is applied to the double-precision GPU algorithm to determine if the increase in the numerical accuracy can reduce the drop in the objective from the CPU code. The vorticity maximization problem with the same initial topology and optimization parameters as outlined in Sect. 5 is solved. The final design for the single- and double-precision GPU algorithm with the feasibility constraint is shown in Fig. 23.

The final design produced, with a feasibility constraint, by the double-precision GPU algorithm is almost identical to the design produced by the single-precision algorithm (Fig. 23). Hence, the design is still symmetric about the x- and y-axis when a double-precision GPU algorithm with a feasibility constraint is used, as was the case without the feasibility constraint. The vorticity of the final design found by the double-precision algorithm with a feasibility constraint is \(5903\ {\text {s}}^{-1}\), which is only a \(0.4\%\) increase compared to the single-precision algorithm with a symmetry constraint (\(5881\ {\text {s}}^{-1}\)). The convergence history of the double-precision GPU algorithm with a feasibility constraint is given in Fig. 24.

The solution takes 27 iterations to converge to the final design (Fig. 24). This equates to a computational time of approximately 2 h and 6 min, which is just under double the time required by the single-precision GPU code with a feasibility constraint. Thus in the case of the feasibility constraint, having only a \(0.4\%\) improvement in objective with twice the computation cost, the benefit of the computational efficiency inherent in the single-precision GPU code is superior to the increase in numerical accuracy of the double-precision GPU algorithm.

7 Summary

In this section, a brief summary of the results of this work is given. The first problem solved was the compliance minimization problem (Sect. 4). It is demonstrated that the single-precision GPU implementation is unable to produce a feasible solution, having convergence issues. Therefore, a converged solution is never reached. The cause of this is the inherent round-off errors, which have also been observed in the literature for structural problems only [30]. However, it was shown that by implementing a double-precision GPU algorithm convergence could be achieved and a feasible optimum is found. Furthermore, the speed-up of the double-precision GPU algorithm is about 18 times compared with the CPU algorithm, having only a \(12\%\) reduction in objective. Therefore, it was concluded that the double-precision GPU implementation represent a feasible method that can be used at the preliminary design stages, whereas the CPU algorithm could not. Thus, for the compliance minimization problem, the implementation on a GPU has enabled these methods to be brought forward in the design cycle.

The next problem analyzed in this work was the vorticity maximization problem (Sect. 5). It is shown that neither the single- or double-precision GPU implementation could produce feasible solutions to this problem. However, unlike the compliance minimization problem, both implementations appear to converge. Thus, why implementing double-precision did not solve the problem. Moreover, it is demonstrated that the reliance of the topology optimization algorithm on the GPU numerics is greater in the vorticity maximization problem than in the compliance minimization problem, due to the different formulations of the objective functions.

In an effort to assist the GPU implementations additional constraints were formulated and added to the topology optimization problems. First, a symmetry constraint, which takes advantage of the symmetry of the physics of the compliance minimization problem, is implemented on the single-precision GPU algorithm. Since there are no physical asymmetric drivers, it is evident that the design of the baffle should be symmetric about the x- and y-axes [35]. Therefore, by enforcing this symmetry, through the symmetry constraint, the single-precision GPU implementation is able to achieve convergence and produce optimum designs. Furthermore, the speed-up when compared with the CPU algorithm is about 15 times, with only a \(6\%\) reduction in objective.

Similarly, a symmetry about the \(\pm \ 45^{\circ }\) diagonals and an asymmetry about the x- and y-axes was observed for the optimum solution to the vorticity maximization problem. Therefore, a symmetry constraint was employed, which enforced these conditions, in the single- and double-precision GPU implementations. This resulted in optimum final structures, similar to that found using the CPU algorithm, being produced. Furthermore, the computational efficiency is increased by 105 and 51 times compared with the CPU when using the single- and double-precision GPU algorithm, respectively. However, it was noted that this symmetry constraint was not valid, since the characteristics of the symmetry enforced were only known due to a prior knowledge of the solution. Thus, the symmetry constraint pushed the algorithm in the right direction. Nevertheless, this demonstrates how certain characteristics of the solution can be determined by running the slow CPU and then can be enforced in the fast GPU for quick optimization of other preliminary structures.

Finally, it was noted that the GPU implementations seemed to achieve convergence for the vorticity maximization problem; however, to infeasible designs. Therefore, a feasibility constraint was employed to ensure that the algorithms only considered feasible designs. By adding a feasibility constraint to the single- and double-precision GPU implementations for a vorticity objective, reasonable designs are produced without pushing the optimizer in the correct direction. Therefore, no pre-knowledge of the solution is required, making this a much more realizable solution compared to the symmetry constraint. Furthermore, the final design produces a similar final objective compared to the CPU result, having a reduction of \(3\%\) and \(2\%\) in objective for the single- and double-precision GPU implementation, respectively. However, this comes at a speed-up of 67 and 36 times for the single- and double-precision code, respectively. Thus, the small reduction in objective is worth the huge increase in computational efficiency. Again the double-precision code outperforms the single-precision. However, due to the increase in computational efficiency achieved by the single-precision code (about \(46\%\)) and the small improvement in objective by the double-precision code (about \(0.4\%\)) the single-precision code is more suited.

A quantitative comparison for all cases studied in this article is given in Table 1.

8 Conclusion

A multi-physics topology optimization algorithm has been presented here for use in the preliminary design phases. The aim of this study is to use HPC methods to reduce the computational time required, such that these methods are viable for use at the preliminary design stages. A BESO algorithm is coupled to a GPU-enabled LBM flow solver to optimize the structural and flow characteristics of a micro-reactor. Hence, the process takes advantage of the high computational efficiency of GPU, which carried out the most computationally intensive part of the process, namely, the simulation of the flow via LBM. The implementation on both CPU and single- and double-precision GPU are performed and compared, determining the speed-up gained and loss in objective when different architectures are used. It was found that, for both topology optimization problems, implementation on a GPU resulted in a significant gain in computational efficiency, with only a small reduction in objective. Therefore, bringing these methods forward in the design cycle, where implementation on a CPU is not viable.

First, a multi-physics compliance minimization problem with design-dependent pressure loads was solved. It was found that the single-precision GPU implementation had convergence issues, and thus, was unable to find a suitable optimum. This phenomena has been noted in the literature for GPU-enabled FEA topology optimization [30], but is a first here for multi-physics topology optimization. However, it is demonstrated that using a double-precision GPU implementation avoids these convergence issues, resulting in a speed-up of approximately 18 times, with only a \(12\%\) reduction in objective, compared to CPU.

Next, a vorticity maximization problem was solved on both single- and double-precision GPU. For this case, convergence is achieved; however, to infeasible designs, since structural islands are present for both single- and double-precision GPU. It was concluded that this discrepancy was due to the different reliance on the GPU numerics for the two different objectives.

Finally, a symmetry constraint and a feasibility constraint were added, separately, to the topology optimization problems to improve their convergence by eliminating infeasible designs from consideration. For the compliance minimization problem, adding a symmetry constraint, which takes advantage of the symmetrical physics, enabled the single-precision GPU implementation to converge to an optimum design. Similarly, for the vorticity maximization problem, adding a feasibility constraint forced only structurally feasible design to be considered by the optimizer, resulting in optimum designs being produced. Therefore, a speed-up of about 67 times was achieved for the vorticity maximization problem, with only a \(3\%\) reduction in objective. Hence, the main findings of this article can be summarized by the following points:

-

Single-precision GPU cannot be used without a symmetry constraint for the compliance minimization problem.

-

Double-precision GPU produces better, in terms of objective, designs compared with single-precision for all cases.

-

Single-precision is more computationally efficient for all cases, except compliance minimization problem.

-

For all cases, computational time is drastically reduced with GPU implementation compared to CPU results.

-

Adding a feasibility constraint to the vorticity maximization problem produces comparable designs for both the single- and double-precision GPU codes.

This study adds to the limited literature on GPU-accelerated topology optimization. New insights into the discrepancies between CPU and GPU numerics have been found, with reasonable methods developed to overcome these discrepancies. The work presented here brings high fidelity methods, such as lattice Boltzmann flow simulations, coupled with rewarding optimization algorithms, such as topology optimization, forward to the preliminary design stages. This type of analysis is key to the continued application of topology optimization to real world aerospace design problems.

References

Abrahamson S, Lonnes S (1995) Uncertainty in calculating vorticity from 2D velocity fields using circulation and least-squares approach. Exp Fluids 20:10–20

Aidun C, Clausen J (2010) Lattice-Boltzmann method for complex flows. Annu Rev Fluid Mech 42:439–472

Aissa M, Verstraete T, Vuik C (2014) Use of modern GPUs in design optimization. In: 10th ASMO-UK/ISSMO conference on engineering design optimization. Association for Structural and Multidisciplinary Optimization in the UK

Bendsøe M (1989) Optimal shape design as a material distribution problem. Struct Optim 1(4):193–202. https://doi.org/10.1007/BF01650949

Bendsøe M, Sigmund O (1999) Material interpolation schemes in topology optimization. Arch Appl Mech 69:635–654

Bendsøe M, Sigmund O (2003) Topology optimization—theory, methods and applications, 2nd edn. Springer, Berlin

Cecka C, Lew A, Darve E (2011) Assembly of finite element methods on graphics processors. Int J Numer Methods Eng 85:640–669

Challis V, Roberts A, Grotowski J (2014) High resolution topology optimization using graphics processing units (GPUs). Struct Multidiscip Optim 49(2):315–325

Chu D, Xie Y, Hira A, Steven G (1996) Evolutionary structural optimization for problems with stiffness constraints. Finite Elem Anal Des 21:239–251

D’Ammaro A, Kipouros T, Saddawi S, Savill A, Djenidi L (2010) Computational design for micro fluidic devices using Lattice Boltzmann and heuristic optimisation algorithms. In: Joint OCCAM/ICFD lattice Boltzmann workshop, OCCAM/ICFD

Deaton J, Grandhi R (2014) A survey of structural and multidisciplinary continuum topology optimization. Struct Multidiscip Optim 49:1–38. https://doi.org/10.1007/s00158-013-0956-z

Delbosc N, Khan J, Kapur N, Noakes C (2014) Optimized implementation of the lattice Boltzmann method on a graphics processing unit towards real-time fluid simulation. Comput Math Appl 67:462–475

Demmel J, Nguyen H (2015) Parallel reproducible summation. IEEE Trans Comput 64(7):2060–2070

Djenidi L, Moghtaderi B (2006) Numerical investigations of laminar mixing in a coaxial microreactor. J Fluid Mech 568:223–243

Garcia M, Gutierrez J, Rueda N (2011) Fluid-structure coupling using lattice-Boltzmann and fixed-grid FEM. Finite Elem Anal Des 47:906–912

Georgescu S, Chow P, Okuda H (2013) GPU acceleration for FEM-based structural analysis. Arch Comput Methods Eng 20(2):111–121

Herrero D, Martinez J, Marti P (2013) An implementation of level set based topology optimization using GPU. In: Proceedings of 10th World congress on structural and multidisciplinary optimization, WCSMO/ISSMO

Huang X, Xie Y (2007) Convergent and mesh-independent solutions for the bi-directional evolutionary structural optimization method. Finite Elem Anal Des 43:1039–1049

Huang X, Xie Y (2009) Bi-directional evolutionary topology optimization of continuum structures with one or multiple materials. Comput Mech 43:393–401

Huang X, Xie Y (2010) Topology optimization of continuum structures: methods and applications, 1st edn. Wiley, New York

Kasumba H, Kunisch K (2012) Vortex control in channel flows using translation invariant cost functionals. Comput Optim Appl 52:691–717

Khan M, Delbosc N, Noakes C, Summers J (2015) Real-time flow simulation of indoor environments using lattice Boltzmann method. Build Simul 8:405–414

Kuznik F, Obrecht C, Rusaouen G, Roux JJ (2010) LBM based flow simulations using GPU computing processor. Comput Math Appl 59:2380–2392

Laniewski-Wollk L, Rokicki J (2016) Adjoint lattice Boltzmann for topology optimization on multi-GPU architecture. Comput Math Appl 71:833–848

Li Q, Luo K (2014) Thermodynamic consistency of the pseudopotential lattice Boltzmann model for simulating liquid–vapor flows. Appl Therm Eng 72(1):56–61

Li Q, Luo K, Kang Q, He Y, Chen Q, Liu Q (2016) Lattice Boltzmann methods for multiphase flow and phase-change heat transfer. Prog Energy Combust Sci 52:62–105

Liu H, Kang Q, Leonardi C, Schmieschek S, Narváez A, Jones B, Williams J, Valocchi A, Harting J (2016) Multiphase lattice Boltzmann simulations for porous media applications. Comput Geosci 20:777–805

Mahdavi A, Balaji R, Frecker M, Mockensturm E (2006) Topology optimization of 2D continua for minimum compliance using parallel computing. Struct Multidiscio Optim 32(2):121–132

Makhija D, Pingen G, Yang R, Maute K (2012) Topology optimization of multi-component flows using a multi-relaxation time lattice Boltzmann method. Comput Fluids 67:104–114

Martinez-Frutos J, Herrero-Perez D (2017) GPU acceleration for evolutionary topology optimization of continuum structures using isosurfaces. Comput Struct 182:119–136

Martins J, Lambe A (2013) Multidisciplinary design optimization: a survey of architectures. AIAA J 59:2049–2075

Micikevicius P (2009) 3D finite difference computation on GPUs using CUDA. In: Proceedings of 2nd workshop on general purpose processing on graphics processing units. ACM

Moghtaderi B, Shames I, Djenidi L (2006) Microfluidic characteristics of a multi-holed baffle plate micro-reactor. Int J Heat Fluid Flow 27:1069–1077

Munk D, Vio G, Steven G (2015) Topology and shape optimization methods using evolutionary algorithms: a review. Struct Multidiscip Optim 52(3):613–631. https://doi.org/10.1007/s00158-015-1261-9

Munk D, Kipouros T, Vio G, Steven G, Parks G (2017) Topology optimization of micro fluidic mixers considering fluid–structure interactions with a coupled lattice Boltzmann algorithm. J Comput Phys 349:11–32