Abstract

Rockburst phenomenon is the extreme release of strain energy stored in surrounding rock mass which could lead to casualties, damage to underground structures and equipment and finally endanger the economic viability of the project. Considering the complex mechanism of rockburst and a large number of factors affecting it, the conventional criteria cannot be used generally and with high reliability. Hence, there is a need to develop new models with high accuracy and ease to use in practice. This study focuses on the applicability of three novel data mining techniques including emotional neural network (ENN), gene expression programming (GEP), and decision tree-based C4.5 algorithm along with five conventional criteria to predict the occurrence of rockburst in a binary condition. To do so, a total of 134 rockburst events were compiled from various case studies and the models were established based on training datasets and input parameters of maximum tangential stress, uniaxial tensile strength, uniaxial compressive strength, and elastic energy index. The prediction strength of the constructed models was evaluated by feeding the testing datasets to the models and measuring the indices of root mean squared error (RMSE) and percentage of the successful prediction (PSP). The results showed the high accuracy and applicability of all three new models; however, the GA-ENN and the GEP methods outperformed the C4.5 method. Besides, it was found that the criterion of elastic energy index (EEI) is more accurate among other conventional criteria and with the results similar to the C4.5 model, can be used easily in practical applications. Finally, a sensitivity analysis was carried out and the maximum tangential stress was identified as the most influential parameter, which could be a guide for rockburst prediction.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

One of the most important concerns in deep underground activities such as mining and civil projects is the occurrence of rockburst phenomenon. Rockburst is an unexpected and severe failure of a large volume of over-stressed rock caused by the instantaneous release of accumulated strain energy. This phenomenon usually is accompanied by other events such as spalling, slabbing, and throwing of rock fragments which could be led to injuries, deformation of supporting system, damage to equipment or even collapse of a large area of the underground excavation and finally cease the operation [1,2,3,4]. In deep underground activities, the induced seismicity has a great role in rockburst occurrence; therefore, the identification and localization of seismic events are essential in rockburst assessment [5,6,7,8]. Great number of theoretical and experimental studies have been performed since 1930 by many researchers on the mechanism, prediction, and control of rockburst [9, 10]. However, rockburst still remains an unsolved problem in deep mining [11]. Rockbursts can be classified using various criteria comprising potential damage, failure pattern, scale, and severity. From the viewpoint of damage, it classifies into four classes of none, light, moderate, and strong. Based on the failure pattern, there are four types of failures including slabby spalling, dome failure, in-cave collapse, and bending failure. In terms of scale, rockbursts can be introduced as sparse with the rockburst length lower than 10 m, large area with the rockburst length between 10 and 20 m and continuous rockburst with the length higher than 20 m. The severity of rockbursts can be assessed as a function of failure depth [12, 13]. According to the influence diagram developed by Sousa [14], many factors affect the occurrence of rockburst such as mechanical properties of rock, geological circumstances, construction method, and in situ stress state. Considering the great number of effective parameters and the vague mechanism of rockburst, prediction and control of this hazardous phenomenon are very difficult tasks. Rockburst can be predicted in short term and long term. In situ measurement techniques such as microseismic monitoring system and acoustic emission can be used to acquire the exact location and the specific time of rockburst occurrence at each stage of the project (i.e. in short term). However, these techniques are time consuming, costly, and require precise surveying strategies. On the other hand, rockburst prediction in long-term is mainly based on conventional criteria, numerical models, and data mining techniques. Compared to the short-term prediction technique, the long-term one can be served as a quick guide for engineers during the initial stages of the project and consequently, enable them to decide about the excavating and controlling methods [1, 3]. During the last three decades, various rockburst proneness indices have been developed based on strength parameters and rock strain energy (see Table 1) [15].

According to Table 1, the conventional criteria only consider very few input parameters; therefore, cannot take into account a wide range of parameters that may influence rock bursting. Data mining is a relatively new computational method with the aim of discovering latent patterns and relationships between raw datasets which combines different areas such as statistics, machine learning, and so on. Data mining techniques have the capability to deal with the datasets containing multiple input and output variables [15, 19]. Hence, they have been used extensively in different sciences [20,21,22,23]. As a first attempt, Feng and Wang [24] developed two artificial neural networks (ANNs) to predict and control the probable rockbursts. Their successful experience encouraged other scholars to investigate the applicability of novel data mining techniques in rockburst assessment [25,26,27,28]. Although the methods used by the scholars could consider more input parameters, most of them are black box, i.e. they cannot provide a clear and comprehensible relationship between the input and output parameters. Consequently, the developed models using such opaque techniques cannot easily be used in practice. On the other hand, the conventional criteria as reported in many studies could not predict rockburst with high accuracy. Therefore, there is still a need to develop transparent and easy to use rockburst models.

In the current study, the applicability of three robust data mining techniques including genetic algorithm-based emotional neural network (GA-ENN), C4.5, and gene expression programming (GEP) in rockburst prediction was evaluated. Although the GA-ENN is a subset of black-box techniques, it is a new version of the ANN with enhanced capacities. In this study, for the first time, this method is used for a mining and geoscience problem. C4.5 and GEP techniques, in spite of the ones used in the previous studies, can provide simple models to predict rockburst occurrence. For this purpose, after preparing a database and assessing it statistically, three new predictive models were developed based on the training dataset. In continuing, a group of unused data (testing data) was fed to the trained models to testify their forecasting accuracy using two performance indices. Then, to have a comprehensive comparison, the results of the developed models were compared with the results of five conventional criteria. In the end, a sensitivity analysis was carried out to evaluate the influence of input parameters on the corresponding output.

2 Database preparation

A database containing 134 rockburst events was prepared from the literature (see the “Appendix”). These rockburst events have been measured in diverse underground projects associated with coal and non-coal mines, powerhouse stations, hydropower stations, and so on [1, 2, 11, 15]. Each record of the database contains four parameters which are the maximum tangential stress of surrounding rock (\({\sigma _\theta }\)), the uniaxial tensile strength of rock (\({\sigma _t}\)), the uniaxial compressive strength of rock (\({\sigma _c}\)), and the elastic energy index (\({W_{{\text{et}}}}\)). Maximum tangential stress (\({\sigma _\theta }\)) around the excavation is a key factor that is affected by the rock stress, groundwater, shape, and diameter of excavation. Since it would not be possible to measure these four parameters in association with rockburst occurrence, maximum tangential stress can be regarded as a representative parameter of those parameters. Rock compressive strength \({\sigma _c}\) and tensile strength \({\sigma _t}\) are the parameters that represent the capability of rocks to store elastic strain energy as well as its tensile and shear failure characteristics. Many theories have been proposed to describe the rockburst mechanism; however, the most accepted one is “energy theory”. Hence, several energy-based indices have been proposed by the researchers and most of them are correlated with each other and similarly related to rockburst occurrence. Among them, \({W_{{\text{et}}}}\) (the ratio of stored strain energy to that dissipated energy during a cycle of loading–unloading under uniaxial compression) is the most common energy criterion which has a direct influence on rockburst in such a way that with the increase of \({W_{{\text{et}}}}\), the probability of rockburst occurrence and its intensity will increase [3, 15, 29, 30]. Therefore, in the current study, four parameters of \({\sigma _\theta }\), \({\sigma _t}\), \({\sigma _c}\), and \({W_{{\text{et}}}}\) were adopted as the input parameters. Table 2 shows the descriptive statistics of the relevant input parameters that are used to develop rockburst models. For convenience, the abbreviations of input parameters were considered for modeling instead of their symbols; they are characterized by MTS, UTS, UCS, and EEI for \({\sigma _\theta }\), \({\sigma _t}\), \({\sigma _c}\), and \({W_{{\text{et}}}}\), respectively. To understand more about the relationship between the input parameters, Pearson correlation coefficients were computed which results are listed in Table 3. According to this table, there are moderate correlations for the UTS–UCS and EEI–UCS if the categorizations proposed by Dancy and Reidy [31] are followed.

Prior to any modeling, the statistical analysis of original database has high importance. The presence of outliers in the database negatively affects the ability of algorithms to find a precise relationship between input and output parameters and consequently, decreases the reliability of the developed model. Additionally, outliers may create some natural groups with different behaviors in a single dataset and if this is the case, it is necessary to identify them and develop separate models [32, 33]. The box plot is a common and standardized method to display the distribution of data based on minimum, first quartile (Q1), median (Q2), third quartile (Q3), and maximum values. The measurements outside the range of \((Q1 - 3(Q3 - Q1),~Q3+3(Q3 - Q1))\) are defined as extreme outliers and should to be omitted from the database, while those which are in the range of \((Q1 - 1.5(Q3 - Q1),\,~Q3+1.5(Q3 - Q1))\) are known as suspected outliers which are common in a big database and could be considered in modeling [32]. Figure 1 shows the box plots of input parameters. According to this figure, the median line is not in the center of boxes which indicates that the input parameters do not have a symmetric distribution. Besides, with the exception of MTS, other input parameters have few suspected outliers.

As a second effort, a principal component analysis (PCA) was conducted to check the existence or non-existence of natural groups in the database. PCA is a dimension-reduction technique that enables the user to transform the initially correlated variables from an m-dimensional space to an n-dimensional one where n < m. The new uncorrelated variables are nominated as principal components (PCs) which are the linear combination of initial variables [34, 35]. To perform this analysis, first, the datasets were normalized between 0 and 1 using the min–max method to eliminate the effect of range. In the second step, the correlation matrix was created for input parameters. Then, the eigenvalues and eigenvectors corresponding to the previous correlation matrix were calculated for each PC as follows:

where X, \(\lambda\), and V are the matrix of datasets, eigenvalue, and eigenvectors, respectively.

Eventually, the PCs were obtained by multiplying the input parameters in related eigenvectors. Figure 2 shows the scree plot of eigenvalues against the number of components. According to this figure, 92.872% of the database variations can be explained just with three first PCs by projecting the observations on these axes (i.e. PC1, PC2, and PC3). The scatter plots of PC1–PC2 and PC1–PC3 are shown in Fig. 3. As can be seen, there is not any natural group, i.e. the concentration of observations in specific areas in the database. Besides, few suspected outliers mentioned in the previous analysis can be seen in this figure as well. As a result, it can be said that the prepared database is suitable for further analysis. The output parameter is the rockburst occurrence, if any, was nominated as “Yes” otherwise, was nominated as “No”. Since the output is a qualitative parameter, we transferred it to a binary parameter, i.e. 0 (No) and 1 (Yes).

3 Data mining techniques

3.1 Genetic algorithm-based emotional neural network (GA-ENN)

Artificial neural network (ANN), a brain-inspired approach, is a popular branch of soft computing techniques first invented by McCulloch and Pitts [36] and has been used extensively in different areas [37,38,39,40]. ANNs, however, suffer from a fundamental problem which is known generally as the curse of dimensionality, i.e. the number of learning parameters increases exponentially with increase in the number of neurons in input, hidden, and output layers that finally lead to high computational complexity (CC). Recently, a limbic-based emotional neural network (ENN) is developed by Lotfi and Akbarzadeh-T [41] based on the emotional process of the brain with a single-layer structure. Unlike ANNs that are formed based on a biological neuron, ENNs are based on the interaction of four neural areas of the emotional brain comprising thalamus, sensory cortex, orbitofrontal cortex (OFC), and amygdala. These four areas using the features of expanding, comparing, inhibiting, and exciting overcome the shortages related to the common ANNs and provide more precise solutions. Initial ENNs have a low CC during the learning process, but the number of patterns which can be stored is limited that makes a low information capacity (IC) for this method. Lotfi and Akbarzadeh-T [42], thanks to a winner-take-all approach (WTA), introduced a new version of ENN with the name of WTAENN which is able to increase the IC of the algorithm. The structure of WTAENN with n input, one output, and m = 1 competitive part is shown in Fig. 4. According to this figure, original input data (i.e. \(\vec {p}=[{p_1},~{p_2},~ \ldots ,~{p_n}]\)) first enter the thalamus part. In the thalamus, input data will expand by the following equation:

The structure of proposed WTAENN with the single competitive unit [42]

where FE is an expander function which can be a Gaussian or sinusoidal function or in general can be defined as:

Then, the expanded signals are sent to winner sensory cortex \({i^*}\) which is selected if only and only if:

where \({c_1},~{c_2},~ \ldots ,~{c_n}\) are the learning weights.

Afterwards, the signals propagate to the related OFC and amygdala and the weights of \({w_{1,i}},~{w_{2,i}},~ \ldots ,~{w_{n,i}}\) from the ith OFC and the weights of \({v_{1,i}},~{v_{2,i}},~ \ldots ,~{v_{n,i}}\) from ith amygdala are used during the learning process to determine the final output. During the learning process, amygdala receives the imprecise input of \({p_{n+1}}\) from the thalamus to determine the output signal of \({E_a}\). After that, amygdala receives an inhibiting signal from OFC (\({E_o}\)) which with applying the activation function (e.g. \({\text{purelin}}\), \({\text{tansig}}\), \({\text{hardlim}}\) and \({\text{logsig}}\) functions), the final emotional signal (predicted value) will be achieved. The final output can be calculated by the following equation:

where vj,i is the weight of ith amygdala, pj is the input pattern, wj,i is the weight which belongs to ith OFC and bi is the related bias.

Generally, in the process of WTAENN learning, the learning weights (i.e. competitive weights (\(c\)-weights), amygdala weights (\(v\)-weights) and OFC weights (\(w\)-weights)) should be adjusted [42].

The genetic algorithm is the most popular optimization algorithm which can minimize a cost function to achieve the best solution. The solutions in GA are known as chromosomes and each chromosome consists of one or more genes. In relation to WTAENN, a chromosome can be expressed as follows:

where

After generating the first population, the fitness of chromosomes will be evaluated by the following equation:

where Yk is the output of the winner part for kth input pattern, Tk is the related target and m is the number of training pattern targets. By minimizing the cost function, the best learning weights for WTAENN can be obtained [41,42,43].

3.1.1 Rockburst prediction using GA-ENN

In this study, for the first time, the applicability of ENNs was examined to predict rockburst occurrence as a geotechnical engineering problem. In GA-based ENN algorithm, it is necessary to determine the optimum values of its parameters, i.e. the number of competitive parts (m), number of generations, and the population size. The MatLab code was used to develop this model. Since the input parameters have different units and range of values, in soft computing techniques, it is better to normalize datasets on account of speeding up the modeling process, reducing errors, and more importantly preventing the over-fitting phenomenon. So, the input parameters were normalized between 0 and 1 using the following equation:

where Xi, Xmin, Xmax, and Xnorm are ith actual value, minimum value, maximum value and the normalized value of an input parameter, respectively.

In the following, the initial database was divided into three parts of training (70% of the database), validation (10% of the database), and testing (20% of the database) to conduct a series of sensitivity analysis and subsequently to find the best combination of parameters. In the first analysis, the parameters of m and activation function were fixed on 1 and “Hard–limit”, and the values of population size and the number of generations increased from 20 to 300. Figure 5 shows the variation of mean square error (MSE) as the fitness function in each run. According to this figure, after generation no. 100, the MSE value remained constant and no change was observed up to generation no. 300. So, the value of 100 was selected as the optimum value for the parameters of population size and generation number. An increase in MSE can be seen between generations 60–100, which may refer to the stochastic mechanism of ENN algorithm for searching and finding the best combination of training coefficients (i.e. c, v, and w weights) among all the possible solutions. Similarly, the second analysis with the aim of finding the optimum value of m was executed by varying its value from 1 to 40 and recording the corresponding MSE values. The m = 1 provided the minimum value of MSE. Eventually, the algorithm was executed for several times based on the obtained optimum values for parameters and the best model was identified. Table 4 indicates the characteristics of the best GA-ENN model.

3.2 C4.5 algorithm

One of the best-renowned data mining techniques is decision tree (DT). The decision tree is a nonparametric technique which benefits from simple and interpretable structure, low computational cost and the ability to represent graphically. DTs have proven their efficiency for various purposes such as classification, decision-making and as a tool to make a relationship between independent variables and the dependent one [20, 44,45,46]. The most important characteristic of a DT as a “white box” technique is its simple graphical structure which enables the user to clarify the relations between variables easier, while other machine learning techniques such as ANNs have a vague internal computational procedure, which means the results are difficult to interpret. In the case of having a problem with many variables which act in reciprocally and non-linear ways, finding a comprehensive model may be very difficult. In these circumstances, DT can be a suitable alternative which is able to break down (sub-divide) the initial space into smaller parts so that the interactions are easier to manage. A decision tree is a collection of nodes (root node, internal nodes, and terminal or leaf nodes) arranged as a binary tree. The root node and internal nodes belong to decision stage and represent specific input variables which are connected together based on a smaller range of values. The terminal nodes show the final classes [47,48,49,50]. There are various types of decision trees, including classification and regression tree (CART), Chi-squared automatic interaction detection (CHAID), C4.5, ID3, quick, unbiased, efficient statistical tree (QUEST). C4.5 proposed by Quinlan [51] is a powerful classification algorithm which is derived from the development of ID3 algorithm and is able to handle numeric attributes, missing values, and noisy data [52]. C4.5 identifies decision tree classifiers and using a divide-and-conquer method grows the decision tree. The C4.5 algorithm acts in two main stages: tree constructing and pruning. Tree constructing starts by calling the training dataset. All of the datasets first are concentrated in the root node and then divided into homogeneous sub-nodes based on a modified splitting criterion, called gain ratio. The attribute with the highest normalized information gain is chosen to make the decision [51]. This splitting will continue till the stopping condition is met, i.e. all instances in a node belong to the same class and this node is identified as a leaf node. The generated DT by training dataset often is prone to the over-fitting problem because of having a great number of branches and such DTs fail to classify the new unused data. To overcome this problem, there is a need to prune the tree. Pruning is the process of reducing decision tree size by eliminating parts of the tree which have little power for classifying and this process finally led to increasing the accuracy of the classifier and its reliability [51, 53, 54].

3.2.1 Rockburst prediction using C4.5

In this study, the C4.5 algorithm was applied to the training dataset using WEKA (Waikato Environment for Knowledge Analysis) software. There are two main parameters which should be adjusted to develop a high-performance C4.5 classifier including confidence factor (CF) and the minimum number of instances (MNI) (data samples) per leaf. The CF is used to compute a pessimistic upper bound on the error rate at a leaf/node. The smaller this value, the more pessimistic the estimated error is and generally the heavier the pruning. If a CF greater than 0.5 is chosen, then the pruning will be done on the basis of unchanged classification error on the training dataset and this is equivalent to turning off the pruning. The MNI affect the volume (i.e. the complexity) of the developed tree [55]. Hence, according to Bui et al. [55] and Ghasemi et al. [52], the CF and MNI varied from 0.1 to 0.5 and 1 to 20, respectively, and the corresponding accuracy values were recorded. Finally, the optimum values of 0.25 and 2 were determined for CF and MNI, respectively. After adjusting the C4.5 parameters in WEKA software, the model was executed and the corresponding tree was obtained. Figure 6 displays the results obtained by this algorithm which contains a root node, five internal nodes, and seven leaves. There are two numbers in the parentheses of leaf nodes, the first number belongs to the number of instances in that node and the second number shows the number of misclassified instances. The process of rockburst prediction using the developed tree model is very simple. For example, taking into account the values of 4.6, 3, 20, and 1.39 for MTS, UTS, UCS, and EEI, respectively, and passing through the path of \({\text{MTS}} \leq 25.7\), \({\text{UTS}} \leq 4.55\), \({\text{EEI}} \leq 2.04\) and \({\text{UCS}} \leq 30\), the leaf node \({\text{Yes~}}(2,0)\) can be achieved which shows the occurrence of rockburst.

3.3 Gene expression programming (GEP)

During the progress of evolutionary algorithms (EAs) since 1975, Ferreira [56] introduced a new powerful population-based algorithm called gene expression programming (GEP) that takes advantage of basic GA and genetic programming (GP) methods. The main goal of the GEP is to find a rational mathematical relationship between the independent variables and the corresponding dependent in such a way that the defined fitness function reaches its minimal value. In GEP, possible solutions are in the form of fixed-length coded chromosomes consisting of two groups of entities: terminals and functions. Terminals can be both of input variables and user-defined constant values. Functions are algebraic symbols, e.g. +, \(-\), \(\times\), \(/\), \(Ln\), \(Log\) and so on. The chromosomes can consist of one or more genes, and each gene comprises two parts of the head and tail so that the genetic operators create effective changes in these areas to produce better solutions. In contrast to multiple non-linear regression techniques, there is no need to consider a pre-defined mathematical framework (e.g. exponential, power, logarithmic, etc.) for GEP to develop a model. As a matter of fact, the GEP algorithm during its intelligent search is capable to find the optimum combination of terminals and functions to provide a predictive equation with enough accuracy. As shown in Fig. 7, the process of GEP modeling starts with the random generation of chromosomes in Karva language (a symbolic expression of GEP chromosomes) which are then expressed and executed as the tree and mathematical structures, respectively. Then, the generated chromosomes are evaluated according to the pre-defined fitness function. Bests of the first population are copied into the next generation, and the others are influenced by genetic operators, including selection and reproduction (i.e. mutation, inversion, transposition, and recombination). Finally, the modified chromosomes are transferred to the next generation and this process will continue until the stopping criteria (maximum generation number or reach to pre-defined fitness) are met [56,57,58,59,60]. The detailed information concerning genetic operators and their mechanisms can be found in [56].

3.3.1 Rockburst prediction using GEP

The GeneXproTools 5.0, an exceedingly flexible modeling tool designed for function finding, classification, time series prediction, and logic synthesis, was implemented to classify and predict rockburst events. This software classifies the value returned by the evolved model as “1” or “0” via the 0/1 rounding threshold. If the returned value by the evolved model is equal to or greater than the rounding threshold, then the record is classified as “1”, “0” otherwise. Similar to the GA-ENN and C4.5 modeling, 80% of the database was applied to the software as the training dataset to develop the model. In the first step, a fitness function for the algorithm should be defined. The sensitivity/specificity with the rounding threshold of 0.5 was used for this aim. The sensitivity/specificity (SSi) of a chromosome as a solution can be calculated by the following equation:

where SEi is the sensitivity and SPi is the specificity of the chromosome i, and are given by the following formulas:

where TPi, TNi, FPi, and FNi represent, respectively, the number of true positives, true negatives, false positives, and false negatives. TPi, TNi, FPi, and FNi are the four different possible outcomes of a single prediction for a two-class case with classes “1” (Yes) and “0” (No). A false positive is when the outcome is incorrectly classified as “Yes” (or positive) when it is in fact “No” (or negative). A false negative is when the outcome is incorrectly classified as “No” when it is in fact “Yes”. True positives and true negatives are obviously correct classifications. Keeping track of all these possible outcomes is such an error-prone activity that they are usually shown in what is called a confusion matrix. Thus, the fitness value of chromosome i is evaluated by the following equation:

which obviously ranges from 0 to 1000, with 1000 corresponding to the maximum prediction accuracy. In the second step, terminals and functions which are kernels of generated chromosomes should be assigned. Terminals are input parameters (i.e. MTS, UTS, UCS, and EEI). The most common arithmetic functions were selected as follows:

The goal of GEP modeling is to develop a rockburst index in the form of \({\text{RBI}}=f({\text{MTS}},~{\text{UTS}},~{\text{UCS}},~{\text{EEI}})\). The third step is to determine the structural parameters, i.e. the number of genes and head size. These two parameters affect the length of the generated chromosomes and subsequently the complexity of the proposed formula. By trial and error, the best values of 4 and 9 were obtained for the number of genes and head size, respectively. In the fourth step, the ratios of genetic operators (i.e. mutation, inversion, transposition, and recombination) as chromosome modifiers should be determined. A set of values has been recommended by the researchers for genetic operators that their validity has been confirmed in many engineering problems [57, 61,62,63]. So, these values were set for the operators in the current study as well (see Table 5). As the final step, since we face multi-genic chromosomes, we need to define a linking function to link genes to each other. Addition (+) is the most common linking function which was used for this aim. After adjusting the GEP parameters (Table 5), the model was executed in training mode for 2000 generations and the results were recorded. Equation (17) shows the developed rockburst index based on GEP algorithm. By feeding the input parameters to Eq. (17) and comparing the calculated value with Eq. (18), the rockburst occurrence can be determined.

4 Performance evaluation of the proposed models

In this section, the remaining testing datasets (27 cases) were applied to the developed models of GA-ENN, C4.5, and GEP to evaluate their prediction performance. For further evaluation, five conventional criteria mentioned in Table 1 were considered as well. Table 6 shows the obtained results from eight different models in testing stage. A confusion matrix is a useful tool to describe the performance of a classifier on a set of test data. Each row of the matrix represents the instances in an actual class while each column represents the instances in a predicted class (or vice versa). Table 7 shows the confusion matrices of the developed models. According to Tables 6 and 7, GA-ENN and GEP models have the equal number of misclassified cases (i.e. 4 cases), while this number is equal to 9 for stress coefficient and brittleness coefficient criteria. In the following, two indices of root mean squared error (RMSE) (an index to measure the deviation between the actual and predicted data) and the percentage of the successful prediction (PSP) (the percentile quotient of the number of correct predictions to the total number of testing data) were used to investigate the accuracy and capability of the models. Ideally, RMSE and PSP are equal to 0 and 100%, respectively. The results of performance indices are shown in Table 8. As can be seen in this table, all three new constructed models (i.e. GA-ENN, GEP, and C4.5) have higher accuracy and lower estimation error compared with five conventional criteria. Table 8 also shows that two models of GA-ENN and GEP with the similar results outperformed the C4.5. On the other hand, EEI criterion acted just like the C4.5 model which shows that this criterion with its simple formula can be used effectively to predict rockburst occurrence in engineering projects. Figure 8 compares the prediction performance of the developed models.

5 Sensitivity analysis

In this section, a sensitivity analysis is performed to evaluate the effects of input parameters on rockburst prediction models. To this end, the relevancy factor [68] was used which is calculated by the following equation:

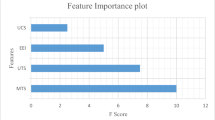

where \({I_{i,k}}\) and \({\bar {I}_k}\) are the ith and average values of the kth input parameter, respectively, \({P_i}\), and \(\bar {P}\) are the ith and average values of the predicted rockburst, respectively, and n is the number of rockburst events. The higher the r value the more influence the input has in predicting the output value. Figure 9 shows the r values. As can be seen in this figure, the maximum tangential stress (MTS) is the most influential parameter in rockburst prediction, and uniaxial compressive strength (UCS) has the lowest impact. These results are in agreement with those obtained by others in a recent study [3].

6 Discussion

A supplementary explanation regarding the proposed three models is contained in this section. As previously mentioned, this is the first attempt in the application of ENNs in earth sciences, and its results were promising. Accordingly, it is highly recommended to check the applicability of ENNs in combination with other meta-heuristic algorithms, as hybrid models, for different aims (e.g. classification, prediction, and minimization) for mining and geotechnical engineering applications. However, as a black-box method like ANN, GA-ENN neither can provide any equation nor a visual pattern for users. This may be considered as a disadvantage for this algorithm, but it is possible to overcome this issue using this technique to find some optimum coefficients of the multiple non-linear regressions in future studies. In contrast to GA-ENN, C4.5 has a very simple modeling mechanism. Its tree structure easily can be adopted as a guide by engineers in the projects to predict the rockburst occurrence just by tracking the values of inputs within the branches of the tree. In some cases, this algorithm may provide large and complex trees according to the defined controlling parameters, which finally decrease the applicability of the developed trees. Besides, C4.5 algorithm on account of its innate PCA characteristic may remove some input parameters during the training stage to increase the accuracy of the final output. Hence, the process of C4.5 modeling requires extensive modeling experiences. The common multiple non-linear regressions need a pre-defined mathematical structure, while the GEP algorithm is able to find the latent relationship between the input and output parameters without any presupposition. This can be introduced as the most important characteristic of GEP algorithm compared with the GA-ENN and C4.5 algorithms. In addition, GEP does not have the limitations of previous methods and is more practical. In the end, it is worth mentioning that the developed models are valid just in the defined ranges of values of inputs and for the new datasets out of these ranges, the models should be adjusted again.

7 Summary and conclusions

This study was intended to assess rockburst hazard in deep underground openings using three renowned data mining techniques including GA-ENN, C4.5, and GEP. A database including the maximum tangential stress of the surrounding rock, the uniaxial tensile strength of rock, the uniaxial compressive strength of rock and the elastic energy index of 134 rockburst experiences in various underground projects was compiled. After a statistical analysis, the GA-ENN, C4.5, and GEP models were developed based on training datasets. In the following, the prediction performance of the models was evaluated by applying unused testing datasets. The results of the new models were compared with five conventional rockburst prediction criteria via performance indices of root mean squared error (RMSE) and percentage of the successful prediction (PSP). Finally, a sensitivity analysis was conducted to know about the influence of input parameters on rockburst using relevancy factor. The following conclusions have been drawn:

-

1.

According to the results of statistical analysis, the original database neither has extreme outliers nor natural groups. So, it is suitable for further analysis.

-

2.

According to the performance indices values, the models of GA-ENN, GEP, and C4.5 have high accuracy in predicting rockburst occurrence, respectively, while the criteria of stress coefficient and brittleness coefficient with the same performance indices values have the lowest capability for predicting.

-

3.

Strain energy index (EEI) with the RMSE of 0.430 and PCP of 81.481% like C4.5 model, can be a beneficial tool to predict rockburst occurrence in practice.

-

4.

The maximum tangential stress (MTS) is the most influential parameter to predict rockburst occurrence. This parameter should be controlled during the design of underground excavations by optimizing their geometry.

References

Adoko AC, Gokceoglu C, Wu L, Zuo QJ (2013) Knowledge-based and data-driven fuzzy modeling for rockburst prediction. Int J Rock Mech Min Sci 61:86–95. https://doi.org/10.1016/j.ijrmms.2013.02.010

Dong L, Li X, Peng K (2013) Prediction of rockburst classification using Random Forest. Trans Nonferrous Met Soc China 23:472–477. https://doi.org/10.1016/S1003-6326(13)62487-5

Li N, Feng X, Jimenez R (2017) Predicting rock burst hazard with incomplete data using Bayesian networks. Tunn Undergr Sp Technol 61:61–70. https://doi.org/10.1016/j.tust.2016.09.010

Weng L, Li X, Taheri A et al (2018) Fracture evolution around a cavity in brittle rock under uniaxial compression and coupled static–dynamic loads. Rock Mech Rock Eng 51(2):531–545. https://doi.org/10.1007/s00603-017-1343-7

Dong LJ, Wesseloo J, Potvin Y, Li XB (2016) Discriminant models of blasts and seismic events in mine seismology. Int J Rock Mech Min Sci 86:282–291. https://doi.org/10.1016/j.ijrmms.2016.04.021

Dong L, Wesseloo J, Potvin Y, Li X (2016) Discrimination of mine seismic events and blasts using the Fisher classifier, naive Bayesian classifier and logistic regression. Rock Mech Rock Eng 49:183–211. https://doi.org/10.1007/s00603-015-0733-y

Dong L, Shu W, Li X et al (2017) Three dimensional comprehensive analytical solutions for locating sources of sensor networks in unknown velocity mining system. IEEE Access 5:11337–11351. https://doi.org/10.1109/ACCESS.2017.2710142

Dong L, Sun D, Li X, Du K (2017) Theoretical and experimental studies of localization methodology for AE and microseismic sources without pre-measured wave velocity in mines. IEEE Access 5:16818–16828. https://doi.org/10.1109/ACCESS.2017.2743115

Weng L, Huang L, Taheri A, Li X (2017) Rockburst characteristics and numerical simulation based on a strain energy density index: a case study of a roadway in Linglong gold mine, China. Tunn Undergr Sp Technol 69:223–232. https://doi.org/10.1016/j.tust.2017.05.011

Akdag S, Karakus M, Taheri A et al (2018) Effects of thermal damage on strain burst mechanism for brittle rocks under true-triaxial loading conditions. Rock Mech Rock Eng 51(6):1657–1682. https://doi.org/10.1007/s00603-018-1415-3

He M, e Sousa LR, Miranda T, Zhu G (2015) Rockburst laboratory tests database—application of data mining techniques. Eng Geol 185:116–130. https://doi.org/10.1016/j.enggeo.2014.12.008

He M, Xia H, Jia X et al (2012) Studies on classification, criteria and control of rockbursts. J Rock Mech Geotech Eng 4:97–114. https://doi.org/10.3724/SP.J.1235.2012.00097

Wang J, Zeng X, Zhou J (2012) Practices on rockburst prevention and control in headrace tunnels of Jinping II hydropower station. J Rock Mech Geotech Eng 4:258–268. https://doi.org/10.3724/SP.J.1235.2012.00258

Sousa R, Einstein HH (2007) Risk analysis for tunnelling projects using bayesian networks. In: 11th Congress of the International Society for Rock Mechanics, 9–13 July 2007, Lisbon, Portugal. Massachusetts Institute of Technology, pp 1301–1304

Jian Z, Xibing L, Xiuzhi S (2012) Long-term prediction model of rockburst in underground openings using heuristic algorithms and support vector machines. Saf Sci 50:629–644. https://doi.org/10.1016/j.ssci.2011.08.065

Russenes B (1974) Analysis of Rock Spalling for Tunnels in Steep Valley Sides. Master Thesis of Science, Norwegian Institute of Technology

Hoek E, Brown ET (1980) Underground excavations in rock. Institution of Mining and Metallurgy, London

Wang YH, Li WD, Lee PKK, Tham LG (1998) Method of fuzzy comprehensive evaluations for rockburst prediction. Chin J Rock Mech Eng 17(5):493–501 (in Chinese)

Berthold M, Hand D (2003) Intelligent data analysis: an introduction. 2nd edn. Springer Science & Business Media, New York

Torres-Jimenez J, Rodriguez-Cristerna A (2017) Metaheuristic post-optimization of the NIST repository of covering arrays. CAAI Trans Intell Technol 2:31–38. https://doi.org/10.1016/j.trit.2016.12.006

Khandelwal M, Shirani Faradonbeh R, Monjezi M et al (2017) Function development for appraising brittleness of intact rocks using genetic programming and non-linear multiple regression models. Eng Comput 33:13–21. https://doi.org/10.1007/s00366-016-0452-3

Aryafar A, Mikaeil R, Haghshenas SS, Haghshenas SS (2018) Application of metaheuristic algorithms to optimal clustering of sawing machine vibration. Meas J Int Meas Confed 124:20–31. https://doi.org/10.1016/j.measurement.2018.03.056

Mikaeil R, Haghshenas SS, Hoseinie SH (2018) Rock penetrability classification using artificial bee colony (ABC) algorithm and self-organizing map. Geotech Geol Eng 36:1309–1318. https://doi.org/10.1007/s10706-017-0394-6

Feng X, Wang L (1994) Rockburst prediction based on neural networks. Trans Nonferrous Met Soc China 4(1):7–14

Zhao HB (2005) Classification of rockburst using support vector machine. Rock Soil Mech 26(4):642–644 (in Chinese)

Gong FQ, Li XB (2007) A distance discriminant analysis method for prediction of possibility and classification of rockburst and its application. Chin J Rock Mech Eng 26(5):1012–1018 (in Chinese)

Shi XZ, Zhou J, Dong L et al (2010) Application of unascertained measurement model to prediction of classification of rockburst intensity. Chin J Rock Mech Eng 29:2720–2726

Zhou J, Shi XZ, Dong L et al (2010) Fisher discriminant analysis model and its application for prediction of classification of rockburst in deepburied long tunnel. J Coal Sci Eng 16(2):144–149

Palmstrom A (1995) Characterizing the strength of rock masses for use in design of underground structures. In: International conference in design and construction of underground structures, p 10

Liu Z, Shao J, Xu W, Meng Y (2013) Prediction of rock burst classification using the technique of cloud models with attribution weight. Nat Hazards 68:549–568. https://doi.org/10.1007/s11069-013-0635-9

Dancy C, Reidy J (2004) Statistics without maths for psychology. Pearson Education Limited, New York

Middleton GV (2000) Data analysis in the earth sciences using MATLAB®. Prentice Hall, Englewood Cliffs

Tiryaki B (2008) Predicting intact rock strength for mechanical excavation using multivariate statistics, artificial neural networks, and regression trees. Eng Geol 99:51–60. https://doi.org/10.1016/j.enggeo.2008.02.003

Yang H, Yu L (2017) Feature extraction of wood-hole defects using wavelet-based ultrasonic testing. J For Res 28:395–402. https://doi.org/10.1007/s11676-016-0297-z

Faradonbeh RS, Monjezi M (2017) Prediction and minimization of blast-induced ground vibration using two robust meta-heuristic algorithms. Eng Comput 33:835–851

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133. https://doi.org/10.1007/BF02478259

Tracewski L, Bastin L, Fonte CC (2017) Repurposing a deep learning network to filter and classify volunteered photographs for land cover and land use characterization. Geo-Spatial Inf Sci 20:252–268. https://doi.org/10.1080/10095020.2017.1373955

Guo K, Wu S, Xu Y (2017) Face recognition using both visible light image and near-infrared image and a deep network. CAAI Trans Intell Technol 2:39–47. https://doi.org/10.1016/j.trit.2017.03.001

Mohamad ET, Faradonbeh RS, Armaghani DJ et al (2016) An optimized ANN model based on genetic algorithm for predicting ripping production. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2359-8

Mikaeil R, Haghshenas SS, Ozcelik Y, Gharehgheshlagh HH (2018) Performance evaluation of adaptive neuro-fuzzy inference system and group method of data handling-type neural network for estimating wear rate of diamond wire saw. Geotech Geol Eng. https://doi.org/10.1007/s10706-018-0571-2

Lotfi E, Akbarzadeh-T MR (2014) Practical emotional neural networks. Neural Netw 59:61–72. https://doi.org/10.1016/j.neunet.2014.06.012

Lotfi E, Akbarzadeh- TMR (2016) A winner-take-all approach to emotional neural networks with universal approximation property. Inf Sci 346–347:369–388. https://doi.org/10.1016/j.ins.2016.01.055

Lotfi E, Khosravi A, Akbarzadeh-T MR, Nahavandi S (2014) Wind power forecasting using emotional neural networks. In: 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, pp 311–316

Breiman L, Friedman J, Stone CJ, Olshen RA (1984) Classification and regression trees. CRC press, Boca Raton

Salimi A, Faradonbeh RS, Monjezi M, Moormann C (2016) TBM performance estimation using a classification and regression tree (CART) technique. Bull Eng Geol Environ. https://doi.org/10.1007/s10064-016-0969-0

Hasanipanah M, Faradonbeh RS, Armaghani DJ et al (2017) Development of a precise model for prediction of blast-induced flyrock using regression tree technique. Environ Earth Sci. https://doi.org/10.1007/s12665-016-6335-5

Coimbra R, Rodriguez-Galiano V, Olóriz F, Chica-Olmo M (2014) Regression trees for modeling geochemical data: an application to Late Jurassic carbonates (Ammonitico Rosso). Comput Geosci 73:198–207. https://doi.org/10.1016/j.cageo.2014.09.007

Jahed Armaghani D, Mohd Amin MF, Yagiz S et al (2016) Prediction of the uniaxial compressive strength of sandstone using various modeling techniques. Int J Rock Mech Min Sci. https://doi.org/10.1016/j.ijrmms.2016.03.018

Liang M, Mohamad ET, Faradonbeh RS et al (2016) Rock strength assessment based on regression tree technique. Eng Comput. https://doi.org/10.1007/s00366-015-0429-7

Hasanipanah M, Faradonbeh RS, Amnieh HB et al (2017) Forecasting blast-induced ground vibration developing a CART model. Eng Comput. https://doi.org/10.1007/s00366-016-0475-9

Quinlan JR (1993) C4.5: Programs for machine learning. Elsevier, Amsterdam

Ghasemi E, Kalhori H, Bagherpour R (2017) Stability assessment of hard rock pillars using two intelligent classification techniques: a comparative study. Tunn Undergr Sp Technol 68:32–37. https://doi.org/10.1016/j.tust.2017.05.012

Hssina B, Merbouha A, Ezzikouri H, Erritali M (2014) A comparative study of decision tree ID3 and C4.5. Int J Adv Comput Sci Appl 4(2):13–19

Ture M, Tokatli F, Kurt I (2009) Using Kaplan-Meier analysis together with decision tree methods (C&RT, CHAID, QUEST, C4.5 and ID3) in determining recurrence-free survival of breast cancer patients. Expert Syst Appl 36:2017–2026. https://doi.org/10.1016/j.eswa.2007.12.002

Bui DT, Pradhan B, Lofman O, Revhaug I (2012) Landslide Susceptibility assessment in Vietnam using support vector machines, decision tree, and naive Bayes models. Math Probl Eng. https://doi.org/10.1155/2012/974638

Ferreira C (2002) Gene expression programming in problem solving. In: Roy R, Köppen M, Ovaska S et al (eds) Soft computing and industry: recent applications. Springer, London, pp 635–653

Güllü H (2012) Prediction of peak ground acceleration by genetic expression programming and regression: a comparison using likelihood-based measure. Eng Geol 141–142:92–113. https://doi.org/10.1016/j.enggeo.2012.05.010

Armaghani DJ, Faradonbeh RS, Rezaei H et al (2016) Settlement prediction of the rock-socketed piles through a new technique based on gene expression programming. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2618-8

Faradonbeh RS, Armaghani DJ, Amnieh HB, Mohamad ET (2016) Prediction and minimization of blast-induced flyrock using gene expression programming and firefly algorithm. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2537-8

Faradonbeh RS, Hasanipanah M, Amnieh HB et al (2018) Development of GP and GEP models to estimate an environmental issue induced by blasting operation. Environ Monit Assess. https://doi.org/10.1007/s10661-018-6719-y

Ferreira C (2006) Gene expression programming: mathematical modeling by an artificial intelligence. Springer, New York

Kayadelen C (2011) Soil liquefaction modeling by genetic expression programming and neuro-fuzzy. Expert Syst Appl 38:4080–4087. https://doi.org/10.1016/j.eswa.2010.09.071

Khandelwal M, Armaghani DJ, Faradonbeh RS et al (2016) A new model based on gene expression programming to estimate air flow in a single rock joint. Environ Earth Sci. https://doi.org/10.1007/s12665-016-5524-6

Zhang JF (2007) Study on Prediction by Stages and Control Technology of Rockburst Hazard of Daxiangling Highway Tunnel. M.Sc. Thesis, Southwest Jiaotong University, Chendu

Yang JL, Li XB, Zhou ZL, Lin Y (2010) A Fuzzy assessment method of rock-burst prediction based on rough set theory. Met Mine 6:26–29 (in Chinese)

Zhang LX, Li CH (2009) Study on tendency analysis of rockburst and comprehensive prediction of different types of surrounding rock. Tang CA (ed), Proc 13th Int Symp Rockburst Seism Mines Rint Press Dalian, pp 1451–1456

Yi YL, Cao P, Pu CZ (2010) Multi-factorial comprehensive estimation for jinchuan’s deep typical rockburst tendency. Sci Technol Rev 28:76–80

Kamari A, Arabloo M, Shokrollahi A et al (2015) Rapid method to estimate the minimum miscibility pressure (MMP) in live reservoir oil systems during CO2 flooding. Fuel 153:310–319. https://doi.org/10.1016/j.fuel.2015.02.087

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest or financial conflicts to disclose.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

About this article

Cite this article

Shirani Faradonbeh, R., Taheri, A. Long-term prediction of rockburst hazard in deep underground openings using three robust data mining techniques. Engineering with Computers 35, 659–675 (2019). https://doi.org/10.1007/s00366-018-0624-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-018-0624-4