Abstract

Metamodeling, or surrogate modeling, is becoming increasingly popular for product design optimization in manufacturing industries. In this paper, an extended Gaussian Kriging method is proposed to improve the prediction performance of the widely used ordinary Kriging in engineering design. Unlike existing approaches, the proposed method places a variance-varying Gaussian prior on the unknown regression coefficients in the mean model of Kriging and makes predictions at untried design points based on the principle of Bayesian maximum a posteriori (MAP) estimation. The resulting regression mean model is adaptive and therefore captures the overall trend of computer responses more effectively, leading to a more accurate metamodel. In particular, the regression coefficients in the mean model are estimated by a fast numerical algorithm, so that extended Gaussian Kriging can be implemented roughly as efficiently as ordinary Kriging. Experimental results on several examples show a remarkable improvement in prediction of extended Gaussian Kriging over ordinary Kriging and several other metamodeling methods.


1 Introduction

Computer modeling and experiments are becoming increasingly popular for product design optimization in manufacturing industries as a way to produce better and cheaper products more quickly. However, the cost of running computer simulation experiments remains nontrivial despite continued growth in computing power. Some computer experiments require 12 h or considerably longer to produce a single simulation response. For example, Ford Motor Company reportedly takes about 36–160 h to perform one crash simulation [1]. Another well-known example in the literature is the piston slap noise experiment, in which every computer run takes 24 h [2]. Over the past 20 years, metamodeling techniques have been developed to improve computational efficiency and reduce the cost of experiments by producing a metamodel or surrogate model, i.e., a “model of the model”, that approximates the complex and expensive computer code.

Existing metamodels in the literature include polynomial regressions [3, 30], radial basis functions [4, 31, 33, 35], multivariate adaptive regression splines [54], Gaussian stochastic process models [4, 6, 13, 14, 18], artificial neural networks [5, 55, 56], and support vector regression [57]. Among these methods, polynomial regressions are probably the most mature and the easiest to use, and they have been utilized extensively in combination with the Taguchi approach in engineering design [5]. In recent years, Gaussian stochastic process models, also known as Gaussian Kriging [6, 7], have also been studied extensively in the statistical community. Kriging is popular because computer experiments are often deterministic and Kriging provides an interpolating approximation model. Nevertheless, Kriging has found limited use in engineering design optimization compared with polynomial regressions. One reason is the lack of readily available software for fitting Kriging models in the early days [5]. The key reason, however, is that fitting a Kriging model is more complex than fitting a regression polynomial. The low robustness of the approach with respect to the sampled points is also an important factor. Still, when computer simulators in engineering are deterministic and, in particular, highly nonlinear in a large number of factors (more than 50 or even 100), polynomial regression modeling becomes insufficient and Kriging may be a better choice despite its added complexity [5, 8].

A basic Kriging model is universal Kriging, which consists of a Gaussian stochastic process model and a regression mean model. The basis functions, or important variables, in the regression mean are assumed to be known, an assumption that is rarely feasible in practical applications. As a result, ordinary Kriging has become the most commonly used Kriging method [8–14]. Ordinary Kriging uses a constant mean to capture the overall trend of computer simulation responses, which can lead to poor predictions at new design points when strong trends are present [14]. To date, Kriging has been used to approximate and analyze various computer experiments in engineering design. For example, [15] uses Kriging for the design of a High Speed Civil Transport aircraft, [16] for helicopter rotor structural design, and [17] for the design of a variable thickness piezoelectric bimorph actuator.

To improve the prediction accuracy of Kriging, several advanced Kriging metamodeling approaches have been proposed recently. For example, Joseph et al. [18] proposed a metamodel called blind Kriging, which achieves higher prediction accuracy than ordinary Kriging and universal Kriging. However, its estimation process involves a computationally intensive Bayesian forward selection scheme. In addition, the correlation parameters in blind Kriging have to be estimated twice, making the computational cost of blind Kriging at least twice that of ordinary Kriging. Linkletter et al. [19] also proposed a metamodeling approach that combines Bayesian variable selection with Kriging. Although their variable selection procedure is easy to implement, it is also computationally demanding because it involves Kriging. Moreover, Linkletter et al. [19] focused primarily on screening important design factors rather than on improving prediction. Joseph [20] derived a metamodel called limit Kriging, which may be viewed, to a certain degree, as a limit of ordinary Kriging; however, as reported, it achieves limited improvement in prediction and can be somewhat numerically unstable. Li and Sudjianto [2] proposed a penalized likelihood approach, smoothly clipped absolute deviation (SCAD) penalized Kriging, to improve ordinary Kriging. Their motivation is that the correlation parameters in ordinary Kriging are often obtained by maximum likelihood, and the likelihood function can be flat near the optimum, often leading to parameter estimates with quite large variance. Note, however, that the penalized likelihood approach is essentially rooted in ordinary Kriging, where the mean is just a constant.

In this paper, an extended Gaussian Kriging method is proposed to improve the prediction accuracy of Kriging metamodeling. Unlike the foregoing approaches, the new method imposes a variance-varying Gaussian prior on the unknown regression coefficients in the mean function of universal Kriging and makes predictions at untried design points based on the principle of Bayesian maximum a posteriori estimation. The resulting regression mean model is adaptive, so it can capture the overall trend of computer responses more effectively and lead to a more accurate metamodel. The underlying parameters in Kriging are estimated iteratively; in particular, the regression coefficients are estimated by a fast numerical algorithm, which keeps the computational cost of fitting extended Gaussian Kriging roughly the same as that of the commonly used ordinary Kriging. Experimental results on several examples show a remarkable improvement in prediction of extended Gaussian Kriging over ordinary Kriging and several other metamodeling methods.

We also note that the proposed method is particularly suited to highly complex computer experiments. In such situations, collecting a large sample may be very difficult, and obtaining an accurate approximation model from a small sample becomes crucial; extended Gaussian Kriging is one potential choice for this setting. However, as the number of computer runs increases, the efficiency of the proposed method, like that of ordinary Kriging, decreases because fitting Kriging involves inverting a large correlation matrix.

The remainder of the paper is organized as follows. Section 2 gives a brief review of metamodeling methods for computer experiments. In Sect. 3, a Bayesian Kriging metamodeling approach is proposed using Bayesian modeling and posterior inference. Section 4 describes a fast algorithm for estimating the regression coefficients, together with other computational aspects. Section 5 presents experimental results on several empirical applications that validate the performance of the proposed method. Finally, conclusions are given in Sect. 6.

2 Metamodeling methods for computer experiments in engineering design

This study concentrates on the deterministic computer experiments that arise in many engineering design fields; that is, the same input settings always yield the same computer responses. Let \( x = (x_{1}, x_{2}, \ldots, x_{p})^{\prime} \in D \subseteq R^{p} \) denote a vector of design variables, and let \( y = f(x) \) denote the true response of a computer analysis code. The general metamodeling problem can then be stated as follows: given n computer runs at design points \( x^{(1)}, x^{(2)}, \ldots, x^{(n)} \), with responses \( y_{i} = f(x^{(i)}) \) produced by the expensive computer code, find an accurate and computationally inexpensive surrogate model \( y = g(x) \) over a given design domain D that satisfies

\[ g(x^{(i)}) = f(x^{(i)}) = y_{i}, \quad i = 1, 2, \ldots, n. \qquad (1) \]

That is, the model \( y = g(x) \) is an interpolating global metamodel of the true deterministic computer code. In general, metamodeling involves two key issues: the experimental design and the metamodeling procedure.

An experimental design represents a sequence of experiments to be performed, expressed in terms of factors (design variables) set at specified levels (predefined values), and is represented by a matrix whose rows denote experimental runs and whose columns denote factor settings [5]. Experimental design techniques were originally developed for physical experiments and are now being applied to the design and analysis of computer experiments (DACE). Classical experimental designs for physical experiments include [1, 5] full factorial designs, fractional factorial designs, Plackett-Burman designs, central composite designs (CCD), Box-Behnken designs, and orthogonal arrays. As [1] wrote, “these classic methods tend to spread the sample points around boundaries of the design space and leave a few at the center of the design space”. Because deterministic computer experiments involve systematic error rather than the random noise of physical experiments, researchers advocate that experimental designs for computer experiments should be space filling [21]. Unlike the classical methods, space-filling methods tend to fill the design space evenly rather than concentrating points on the boundary. Koehler and Owen [22] described several Bayesian and frequentist space-filling designs, including maximum entropy designs, mean squared-error designs, minimax and maximin designs, Latin hypercube designs, randomized orthogonal arrays, and scrambled nets. Four types of space-filling designs are used most often in the literature: Latin hypercube designs [23, 24], uniform designs [25], Hammersley sequences [26], and orthogonal arrays [27, 28]. A comparison of these methods is given in [29]. In addition, sequential and adaptive sampling methods have recently gained popularity because it is difficult to know the “appropriate” sample size a priori. Jin et al. [21] compared several sampling methods and found that sequential sampling allows engineers to control the sampling process and is generally more efficient than one-stage sampling.
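
As a concrete illustration of the space-filling idea, the sketch below draws a basic random Latin hypercube sample on the unit cube: each of the p coordinates is split into n equal strata, and every stratum is used exactly once. This is only the simplest variant; the designs in [23, 24] may add optimization criteria (e.g., maximin distance) that the sketch omits, and the function name `latin_hypercube` is hypothetical.

```python
import numpy as np


def latin_hypercube(n, p, rng=None):
    """Draw an n-by-p random Latin hypercube sample on [0, 1)^p.

    Each column is split into n equal strata; every stratum holds exactly one
    point, and strata are paired across columns by independent permutations.
    (Hypothetical helper; no space-filling optimization such as maximin.)
    """
    rng = np.random.default_rng(rng)
    # One random permutation of the stratum indices 0..n-1 for each column.
    strata = np.column_stack([rng.permutation(n) for _ in range(p)])
    # Place each point uniformly at random inside its stratum.
    return (strata + rng.random((n, p))) / n


X = latin_hypercube(10, 3, rng=0)   # 10 runs of 3 design variables
```

To map the sample to a rectangular design domain with bounds \( [l_{j}, u_{j}] \), each column would be rescaled as \( l_{j} + (u_{j} - l_{j}) x_{j} \).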

Though many metamodeling approaches have been proposed for computer experiments in engineering design, we review here the three that are most prevalent in the literature: polynomial regressions, radial basis functions, and Kriging.

2.1 Polynomial regressions

In this article, PR refers to low-order polynomial regressions, namely first-order (linear) and second-order (quadratic) polynomials, which have been used successfully for prediction in many engineering design applications [30]. Polynomial regressions are probably the fastest and simplest metamodeling technique. For low curvature, a linear polynomial (LP) can be used as in (2); for significant curvature, a quadratic polynomial (QP) including all two-factor interactions can be used as in (3):
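
\[ \hat{y} = \beta_{0} + \sum_{i=1}^{p} \beta_{i} x_{i}, \qquad (2) \]
\[ \hat{y} = \beta_{0} + \sum_{i=1}^{p} \beta_{i} x_{i} + \sum_{i=1}^{p} \beta_{ii} x_{i}^{2} + \sum_{i=1}^{p-1} \sum_{j=i+1}^{p} \beta_{ij} x_{i} x_{j}. \qquad (3) \]
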
The parameters in (2) and (3) are unknown coefficients, usually determined by least squares. However, a PR produces only a regression fit rather than an interpolating fit satisfying (1), and it is not suitable for creating global metamodels of the highly nonlinear, multimodal, and high-dimensional response surfaces frequently encountered in engineering design [1]. To improve the prediction accuracy of PR, various local or successive methods have been developed over the past several decades to overcome the deficiencies of global PR, at the expense of additional sampling points and/or of representing only a small portion of the design space [31]. These methods, however, are not appropriate for computationally expensive computer simulators such as vehicle crash simulations [32]. For these reasons, global metamodels that achieve high accuracy with limited sampling points are highly desirable. A more complete discussion of response surfaces and least squares fitting is given in [30]. We use PR in the comparative study in Sect. 5 purely as a benchmark, given its popularity and widespread use in industry.
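
The least-squares fit of the quadratic model (3) can be sketched as follows. The helper names are hypothetical; the design matrix is ordered as intercept, linear terms, pure quadratic terms, then two-factor interactions, and `np.linalg.lstsq` computes the least-squares coefficients. The last lines illustrate the point made above: on a non-polynomial response the fit does not pass through the data, so (1) is not satisfied.

```python
from itertools import combinations

import numpy as np


def quadratic_features(X):
    """Design matrix of the full quadratic model (3): 1, x_i, x_i^2, x_i x_j (i < j)."""
    X = np.atleast_2d(X)
    n, p = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(p)]
    cols += [X[:, i] ** 2 for i in range(p)]
    cols += [X[:, i] * X[:, j] for i, j in combinations(range(p), 2)]
    return np.column_stack(cols)


def fit_pr(X, y):
    """Least-squares estimate of the coefficients of (3)."""
    coef, *_ = np.linalg.lstsq(quadratic_features(X), y, rcond=None)
    return coef


def predict_pr(coef, X):
    return quadratic_features(X) @ coef


rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(30, 2))
g = np.sin(3 * X[:, 0]) * np.cos(2 * X[:, 1])                       # non-quadratic response
max_train_error = np.max(np.abs(predict_pr(fit_pr(X, g), X) - g))   # > 0: not interpolating
```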

2.2 Radial basis functions

Radial basis functions (RBFs) are methods for exact interpolation of data in multidimensional space [33], originally developed for fitting irregular topographic contours of geographical data [34]. RBF denotes a family of models constructed by the same methodology but distinguished by the choice of a linear or nonlinear basis function. Specifically, a general form of the RBF approximation to the true computer code \( y = f(x) \) can be formulated as

\[ g(x) = \sum_{k=1}^{n} \beta_{k} \gamma\left( ||x - x^{(k)}|| \right), \qquad (4) \]

where \( ||x - x^{(k)}|| \) is the Euclidean norm, γ is a basis function, and \( \beta_{k} \) is the coefficient of the kth basis function. An RBF model is an interpolating approximation: the n coefficients in (4) are chosen so that (1) holds at the n design points. For a given set of data samples, the overall accuracy of an RBF depends essentially on the selected basis function. The most widely used basis functions include the linear, cubic, thin-plate spline (TPS), Gaussian, multiquadric (MQ), and inverse MQ functions [31]. In recent years, multiple comparative studies have shown that RBFs are particularly appropriate for highly nonlinear problems, for both large and small numbers of samples, and are in general more accurate than polynomial regressions [31, 35].
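
A minimal sketch of fitting (4): the coefficients \( \beta_{k} \) solve the n × n linear system \( \Upsilon\beta = Y \) with \( \Upsilon_{j,k} = \gamma(||x^{(j)} - x^{(k)}||) \), which is what makes the RBF model interpolating. We assume a Gaussian basis \( \gamma(r) = \exp(-(r/c)^{2}) \) with a hypothetical shape parameter c = 0.3; the other bases listed above could be swapped in, although some of them (e.g., cubic or thin-plate spline) generally need the polynomial augmentation discussed next to guarantee a solvable system. All function names are hypothetical.

```python
import numpy as np


def pairwise_dist(A, B):
    """Euclidean distances ||a_i - b_j|| between the rows of A and the rows of B."""
    return np.sqrt(((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1))


def gaussian_basis(r, c=0.3):
    """Gaussian basis gamma(r) = exp(-(r / c)^2); c is a hypothetical shape parameter."""
    return np.exp(-(r / c) ** 2)


def fit_rbf(X, y, basis=gaussian_basis):
    """Solve Upsilon beta = y, where Upsilon[j, k] = basis(||x_j - x_k||)."""
    return np.linalg.solve(basis(pairwise_dist(X, X)), y)


def predict_rbf(beta, X, Xnew, basis=gaussian_basis):
    """Evaluate (4) at the rows of Xnew."""
    return basis(pairwise_dist(Xnew, X)) @ beta
```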

RBFs work well for highly nonlinear responses; however, they have been shown to be inappropriate for linear responses [36]. An augmented RBF model has therefore been proposed that adds a linear polynomial [31, 36]:
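
\[ g(x) = \sum_{k=1}^{n} \beta_{k} \gamma\left( ||x - x^{(k)}|| \right) + \sum_{j=1}^{p} \lambda_{j} b_{j}(x), \qquad (5) \]
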
where \( b_{j}(x) \) is a linear polynomial function and \( \lambda_{j} \) is the corresponding unknown coefficient. Interpolation at the n design points gives only n equations for the n + p unknowns in (5), so the system is underdetermined; the orthogonality conditions (one for each \( j = 1, 2, \ldots, p \)) are therefore imposed so that
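
\[ \sum_{k=1}^{n} \beta_{k} b_{j}\left( x^{(k)} \right) = 0, \quad j = 1, 2, \ldots, p. \qquad (6) \]
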
Combining (5) and (6), coefficients \( \beta = [\beta_{1} ,\beta_{2} , \ldots ,\beta_{n} ]^{\prime } \) and \( \lambda = [\lambda_{1} ,\lambda_{2} , \ldots ,\lambda_{p} ]^{\prime} \) can be estimated by the following system of equations:
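
\[ \begin{bmatrix} \Upsilon & {\rm B}^{\prime} \\ {\rm B} & 0 \end{bmatrix} \begin{bmatrix} \beta \\ \lambda \end{bmatrix} = \begin{bmatrix} Y \\ 0 \end{bmatrix}, \qquad (7) \]
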
where \( Y = [y_{1}, y_{2}, \ldots, y_{n}]^{\prime} \), \( \Upsilon_{j,k} = \gamma\left( ||x^{(j)} - x^{(k)}|| \right) \), and \( {\rm B}_{j,k} = b_{j}\left( x^{(k)} \right) \). In addition, Mullur and Messac [37] recently developed an extended RBF model by adding extra terms to a regular RBF model. The extended RBF has been shown to be more flexible, in that it provides better smoothing properties and generally yields more accurate metamodels than the typical RBF [37, 38].
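
System (7) can be assembled and solved directly, as in the sketch below. Two choices are assumptions for illustration, not taken from the text: a Gaussian basis with shape parameter c = 0.3, and \( b_{j}(x) = x_{j} \) for \( j = 1, \ldots, p \) (many implementations also include a constant term). With a positive definite Υ and a full-rank B the block matrix is nonsingular, and its second block row enforces the orthogonality conditions (6). Function names are hypothetical.

```python
import numpy as np


def fit_augmented_rbf(X, y, c=0.3):
    """Solve system (7) for beta (length n) and lambda (length p).

    Assumes a Gaussian basis and linear terms b_j(x) = x_j (hypothetical choices).
    """
    n, p = X.shape
    r = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    ups = np.exp(-(r / c) ** 2)              # Upsilon[j, k] = gamma(||x_j - x_k||)
    B = X.T                                  # B[j, k] = b_j(x_k), shape (p, n)
    K = np.block([[ups, B.T], [B, np.zeros((p, p))]])
    rhs = np.concatenate([y, np.zeros(p)])
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n:]


def predict_augmented_rbf(beta, lam, X, Xnew, c=0.3):
    """Evaluate (5): RBF part plus the linear polynomial part."""
    r = np.sqrt(((Xnew[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    return np.exp(-(r / c) ** 2) @ beta + Xnew @ lam
```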

2.3 Kriging

Kriging is particularly recommended when the computer code under study is deterministic and highly nonlinear [5], because it can handle a large number of design factors (more than 50 or even 100) and provides an interpolating approximation model. More importantly, among the methods reviewed here, Kriging makes the fewest assumptions about the form of the function it can approximate. The general Kriging metamodel represents the true computer analysis code y = f(x) as the sum of a regression model and a stochastic departure:

\[ y = f(x) = \mu(x) + Z(x). \qquad (8) \]

In (8), μ(x) is a regression function modeling the drift of the process mean over the design space. In particular, when μ(x) is a linear combination of known functions of x, as in (9), the resulting Kriging model is called universal Kriging.

\[ \mu(x) = \sum_{k=0}^{J} \beta_{k} \psi_{k}(x). \qquad (9) \]

In (9), \( \{\psi_{k}(x)\}_{k=0}^{J} \) are known functions, e.g., low-order polynomial functions, and \( \{\beta_{k}\}_{k=0}^{J} \) are unknown parameters to be estimated.

The second term in (8), Z(x), is a stationary Gaussian stochastic process that models the residuals from the linear regression, with mean 0 and covariance
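
\[ {\rm Cov}\left( Z(x^{(l)}), Z(x^{(k)}) \right) = \sigma^{2} \varphi\left( x^{(l)} - x^{(k)} \right). \qquad (10) \]
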
In most cases, φ in (10) is a prespecified correlation function of the “distance” between \( x^{(l)} \) and \( x^{(k)} \); it controls the smoothness of the resulting Kriging model, the influence of nearby points, and the differentiability of the surface. Koehler and Owen [22] provided an insightful review of four correlation functions commonly used in Kriging to approximate deterministic computer models, and of the impact of parameter selection on their properties. In this paper, the correlation function is chosen to be the Gaussian function
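
\[ \varphi\left( x^{(l)} - x^{(k)} \right) = \exp\left( -\sum_{j=1}^{p} \theta_{j} \left( x_{j}^{(l)} - x_{j}^{(k)} \right)^{2} \right), \qquad (11) \]
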
where \( \{\theta_{j}\}_{j=1}^{p} \) are the correlation parameters.
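
The Gaussian correlation (11) vectorizes naturally. The hypothetical helper below returns the matrix of φ values between two sets of points, so `gaussian_corr(X, X, theta)` gives Φ(θ) and `gaussian_corr(Xnew, X, theta)` stacks the row vectors \( \varphi(x)^{\prime} \) used in the predictor. A larger \( \theta_{j} \) makes the correlation decay faster along coordinate j.

```python
import numpy as np


def gaussian_corr(A, B, theta):
    """phi(a - b) = exp(-sum_j theta_j (a_j - b_j)^2) for every row pair (a, b) of (A, B)."""
    theta = np.asarray(theta, dtype=float)
    d2 = (A[:, None, :] - B[None, :, :]) ** 2        # squared coordinate differences
    return np.exp(-(d2 * theta).sum(axis=-1))


rng = np.random.default_rng(0)
X = rng.random((8, 2))
Phi = gaussian_corr(X, X, [5.0, 5.0])                 # correlation matrix Phi(theta)
```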

The parameters \( \{\beta_{k}\}_{k=0}^{J} \), \( \{\theta_{j}\}_{j=1}^{p} \), and \( \sigma^{2} \) in (8) are frequently estimated by maximum likelihood [4, 6, 13, 14, 18]. The corresponding universal Kriging predictor can be formulated as
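
\[ \hat{y}(x) = \psi(x)^{\prime} \hat{\beta} + \varphi(x)^{\prime} \Phi(\theta)^{-1} \left( y - \Psi \hat{\beta} \right), \qquad (12) \]
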
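where \( \psi(x) = [\psi_{0}(x), \psi_{1}(x), \ldots, \psi_{J}(x)]^{\prime} \), \( \varphi(x) = [\varphi(x - x^{(1)}), \varphi(x - x^{(2)}), \ldots, \varphi(x - x^{(n)})]^{\prime} \), \( \Phi(\theta) \) is the correlation matrix with elements \( \Phi_{l,k} = \varphi(x^{(l)} - x^{(k)}) \), and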
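
\[ \hat{\beta} = \left( \Psi^{\prime} \Phi(\theta)^{-1} \Psi \right)^{-1} \Psi^{\prime} \Phi(\theta)^{-1} y, \quad \Psi = [\psi(x^{(1)}), \psi(x^{(2)}), \ldots, \psi(x^{(n)})]^{\prime}. \qquad (13) \]
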
Furthermore, given \( \{\theta_{j}\}_{j=1}^{p} \) and \( \sigma^{2} \), the variance of the predictor (12) can be obtained as
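
\[ {\rm Var}\left( \hat{y}(x) \right) = \sigma^{2} \left[ 1 - \varphi(x)^{\prime} \Phi(\theta)^{-1} \varphi(x) + u^{\prime} \left( \Psi^{\prime} \Phi(\theta)^{-1} \Psi \right)^{-1} u \right], \qquad (14) \]
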
where \( u = \Psi^{\prime} \Phi(\theta)^{-1} \varphi(x) - \psi(x) \).
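
For fixed correlation parameters θ, equations (12)–(14) translate into a few linear solves. The sketch below is one way to do this, not the implementation used in this paper: it assumes the Gaussian correlation (11), a hypothetical linear basis \( \psi(x) = [1, x_{1}, \ldots, x_{p}]^{\prime} \), and the usual maximum likelihood estimate \( \hat{\sigma}^{2} = (y - \Psi\hat{\beta})^{\prime}\Phi^{-1}(y - \Psi\hat{\beta})/n \). In practice θ would also be estimated, and a Cholesky factorization would replace the repeated `solve` calls.

```python
import numpy as np


def gaussian_corr(A, B, theta):
    d2 = (A[:, None, :] - B[None, :, :]) ** 2
    return np.exp(-(d2 * np.asarray(theta, dtype=float)).sum(axis=-1))


def linear_basis(X):
    """psi(x) = [1, x_1, ..., x_p]': a hypothetical choice of known functions."""
    return np.column_stack([np.ones(len(X)), X])


def universal_kriging(X, y, theta, basis=linear_basis):
    """Fit (13) for fixed theta and return a predictor for (12) and (14)."""
    Phi = gaussian_corr(X, X, theta)
    Psi = basis(X)
    Phi_inv_Psi = np.linalg.solve(Phi, Psi)
    G = Psi.T @ Phi_inv_Psi                                  # Psi' Phi^-1 Psi
    beta = np.linalg.solve(G, Phi_inv_Psi.T @ y)             # GLS estimate (13)
    w = np.linalg.solve(Phi, y - Psi @ beta)                 # Phi^-1 (y - Psi beta)
    sigma2 = (y - Psi @ beta) @ w / len(y)                   # ML estimate of sigma^2

    def predict(Xnew):
        phi = gaussian_corr(Xnew, X, theta)                  # row i is phi(x_i)'
        psi = basis(Xnew)
        mean = psi @ beta + phi @ w                          # predictor (12)
        Phi_inv_phi = np.linalg.solve(Phi, phi.T)            # columns Phi^-1 phi(x_i)
        u = Psi.T @ Phi_inv_phi - psi.T                      # u = Psi' Phi^-1 phi - psi
        var = sigma2 * (1.0 - np.sum(phi.T * Phi_inv_phi, axis=0)
                        + np.sum(u * np.linalg.solve(G, u), axis=0))   # variance (14)
        return mean, var

    return beta, sigma2, predict
```

At the design points the predictor reproduces y and the variance (14) vanishes, which is the interpolation property (1).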

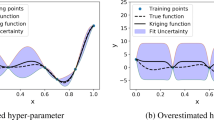

Universal Kriging thus uses known functions to capture the trend of a computer simulator. However, we seldom have a priori knowledge of the trends in the data, and specifying them with known functions may introduce inaccuracies. Therefore, ordinary Kriging, shown in (15), has become the most popular Kriging model; it uses only a constant to capture the overall trend:

\[ y = f(x) = \beta_{0} + Z(x). \qquad (15) \]

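The limitation of a constant mean shows up in a small hypothetical one-dimensional example: responses with a strong linear trend, predicted far outside the sampled range. There the correlation terms vanish, so ordinary Kriging falls back to its constant \( \hat{\beta}_{0} \), whereas universal Kriging with a linear basis follows the trend. The helper `kriging_mean` is ours and assumes the Gaussian correlation (11) with a fixed θ.

```python
import numpy as np


def kriging_mean(X, y, Xnew, theta, Psi, psi_new):
    """Predictor (12) with GLS coefficients (13) for a user-supplied trend basis."""
    def corr(A, B):
        return np.exp(-(((A[:, None, :] - B[None, :, :]) ** 2) * theta).sum(axis=-1))

    Phi = corr(X, X)
    Phi_inv_Psi = np.linalg.solve(Phi, Psi)
    beta = np.linalg.solve(Psi.T @ Phi_inv_Psi, Phi_inv_Psi.T @ y)
    w = np.linalg.solve(Phi, y - Psi @ beta)
    return psi_new @ beta + corr(Xnew, X) @ w, beta


x = np.linspace(0.0, 1.0, 8)[:, None]     # 8 equally spaced runs on [0, 1]
y = 5.0 * x[:, 0]                          # response with a strong linear trend
xnew = np.array([[3.0]])                   # far outside the sampled range
theta = np.array([20.0])

ok_pred, _ = kriging_mean(x, y, xnew, theta, np.ones((8, 1)), np.ones((1, 1)))
uk_pred, _ = kriging_mean(x, y, xnew, theta,
                          np.column_stack([np.ones(8), x]), np.array([[1.0, 3.0]]))
```

Here `ok_pred` is approximately 2.5 (the GLS estimate of the constant, which equals the midpoint of the responses by the symmetry of this design), whereas `uk_pred` is approximately 15, the value of the trend at x = 3.
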
However, ordinary Kriging leads to poor predictions at new design points when strong trends are present [14]. Thus, neither universal Kriging nor ordinary Kriging is satisfactory, which is why several improved Kriging metamodeling approaches have recently been reported in the literature [2, 18–20], as discussed in Sect. 1.

3 Extended Gaussian Kriging: Bayesian modeling and inference for Kriging

As shown above, neither universal Kriging nor ordinary Kriging captures the overall trend of computer responses satisfactorily. The basis functions in universal Kriging can be viewed as the variables that strongly affect the computer responses. The drawbacks of the two methods can then be summarized as follows: in practical applications, universal Kriging can seldom avoid including unimportant variables in its mean function, whereas ordinary Kriging ignores the important variables altogether. To improve the prediction accuracy of Kriging metamodeling, an extended Gaussian Kriging method is proposed that strikes a balance between ordinary Kriging and universal Kriging. In this section, low-order basis functions are still assumed in the mean model of extended Gaussian Kriging; however, they are no longer treated as equally important, as they are in universal Kriging. Specifically, unlike previous improved Kriging methods, the new method imposes a straightforward variance-varying Gaussian prior density on the unknown regression coefficients in the mean model, which allows the overall trend of computer responses to be captured adaptively and therefore leads to a more accurate Kriging metamodel.

3.1 Bayesian modeling for the adaptive mean function

In this subsection, we specify the prior p(β) on the basis of our prior knowledge of the regression coefficients. Ideally, p(β) should reflect our expectation that the important variables are chosen adaptively from \( \{\psi_{k}(x)\}_{k=0}^{J} \) to obtain a more accurate mean model. In other words, the estimated regression coefficients are expected to be sparse, i.e., p(β) should be a sparseness-promoting Bayesian prior. Commonly used sparse priors in the literature include the Student's t distribution and the double exponential distribution [39–41]. However, neither is convenient for the Bayesian inference in this paper. On the one hand, estimating the parameters of these distributions requires intensive computation: the Student's t distribution has three parameters to be estimated (the degrees of freedom, the location, and the scale), and the scale parameter of the double exponential distribution has no analytical estimate. On the other hand, neither distribution allows a tractable Bayesian analysis, since neither is conjugate to the Gaussian likelihood specified in the next subsection.

In this paper, we choose p(β) to be the prior used in the relevance vector machine, or sparse Bayesian learning [42]:
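
\[ p(\beta \mid \gamma) = \prod_{k=0}^{J} {\rm N}\left( \beta_{k} \mid 0, \gamma_{k}^{-1} \right). \qquad (16) \]
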
In (16), \( \gamma = (\gamma_{0}, \gamma_{1}, \ldots, \gamma_{J})^{\prime} \), and \( {\rm N}(\beta_{k} \mid 0, \gamma_{k}^{-1}) \) is a zero-mean Gaussian prior on \( \beta_{k} \) with its own inverse variance \( \gamma_{k} \). A similar prior has also been applied recently to compressed sensing of signals [43, 44]. The rationale for (16) is as follows. Each \( \gamma_{k} \) in (16) may be regarded as a random variable, and a Gamma prior can be imposed independently on each unknown inverse variance, giving

\[ p(\gamma \mid a, b) = \prod_{k=0}^{J} \Gamma\left( \gamma_{k} \mid a, b \right). \qquad (17) \]

In Bayesian statistics, p(γ|a, b) is called a hyperprior, and a, b are hyperparameters to be estimated. Marginalizing over the inverse variances γ, the overall prior on β is
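
\[ p(\beta \mid a, b) = \prod_{k=0}^{J} \int_{0}^{\infty} {\rm N}\left( \beta_{k} \mid 0, \gamma_{k}^{-1} \right) \Gamma\left( \gamma_{k} \mid a, b \right) d\gamma_{k}. \qquad (18) \]
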
If \( \beta_{k} \) is regarded as observed data and \( {\rm N}(\beta_{k} \mid 0, \gamma_{k}^{-1}) \) as a likelihood function, the hyperprior \( \Gamma(\gamma_{k} \mid a, b) \) is the conjugate prior for \( \gamma_{k} \); consequently, the integral in (18) can be evaluated analytically and corresponds to a Student's t distribution [42]. With appropriate choices of a and b, this Student's t distribution is sharply peaked at \( \beta_{k} = 0 \), so the prior in (18) favors most \( \beta_{k} \) being zero, i.e., it is a sparseness-promoting prior. Equation (16) is a simplified version of this two-level hierarchical model in which a uniform density is imposed on each inverse variance. This simplification frees us from having to estimate the hyperparameters a and b.
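
The statement that integrating out \( \gamma_{k} \) yields a Student's t density can be checked numerically. The sketch below assumes the shape-rate parameterization \( \Gamma(\gamma \mid a, b) = b^{a}\gamma^{a-1}e^{-b\gamma}/\Gamma(a) \), under which one factor of (18) has the closed form \( b^{a}\Gamma(a + 1/2)(2\pi)^{-1/2}\Gamma(a)^{-1}(b + \beta_{k}^{2}/2)^{-(a + 1/2)} \). The code compares it with trapezoidal integration and measures how sharply the density peaks at zero for small a and b. Function names are hypothetical.

```python
import math

import numpy as np


def marginal_closed_form(beta, a, b):
    """Closed form of one factor of (18): a Student's t density in beta
    (shape-rate Gamma hyperprior assumed)."""
    log_c = (a * math.log(b) + math.lgamma(a + 0.5) - math.lgamma(a)
             - 0.5 * math.log(2.0 * math.pi))
    return math.exp(log_c - (a + 0.5) * math.log(b + 0.5 * beta ** 2))


def marginal_numeric(beta, a, b, gmax=200.0, m=200_001):
    """Trapezoidal approximation of the integral of N(beta | 0, 1/g) * Gamma(g | a, b)
    over g, on a grid starting one step above 0 (where log g is undefined)."""
    g = np.linspace(0.0, gmax, m)[1:]
    gauss = np.sqrt(g / (2.0 * np.pi)) * np.exp(-0.5 * g * beta ** 2)
    gamma_pdf = np.exp(a * math.log(b) + (a - 1.0) * np.log(g) - b * g - math.lgamma(a))
    f = gauss * gamma_pdf
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(g)))


def peak_ratio(a, b):
    """p(beta = 0) / p(beta = 1): larger values mean a sharper peak at zero."""
    return marginal_closed_form(0.0, a, b) / marginal_closed_form(1.0, a, b)
```

With a = b = 10^{-3} the ratio p(0)/p(1) is roughly 22, versus about 1.8 for a = b = 1, which is the sparseness-promoting behavior described above.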

3.2 Prediction via Bayesian inference and analysis

Given computer outputs \( y = (y_{1}, \ldots, y_{n})^{\prime} \) collected at n design points \( \{x^{(1)}, \ldots, x^{(n)}\} \), the aim is to make a prediction at an untried design point \( x^{0} \). Specifically, the prediction can be formulated as
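
\[ \hat{y}(x^{0}) = \arg\max_{y^{0}} p\left( y^{0} \mid y, \beta, \theta, \sigma^{2} \right). \qquad (19) \]
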
Because \( \{y_{1}, \ldots, y_{n}\} \) are realizations of a stationary Gaussian stochastic process, \( p(y \mid \beta, \theta, \sigma^{2}) \) is the multivariate Gaussian likelihood function of the computer outputs \( y = (y_{1}, \ldots, y_{n})^{\prime} \). That is,
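
\[ p\left( y \mid \beta, \theta, \sigma^{2} \right) = \left( 2\pi\sigma^{2} \right)^{-n/2} \left| \Phi(\theta) \right|^{-1/2} \exp\left\{ -\frac{1}{2\sigma^{2}} \left( y - \Psi\beta \right)^{\prime} \Phi(\theta)^{-1} \left( y - \Psi\beta \right) \right\}. \qquad (20) \]
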
It follows that the joint distribution of \( y^{0} = g(x^{0}) \) and \( y = (y_{1}, \ldots, y_{n})^{\prime} \) is
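
\[ \begin{pmatrix} y^{0} \\ y \end{pmatrix} \sim {\rm N}\left( \begin{pmatrix} \psi(x^{0})^{\prime}\beta \\ \Psi\beta \end{pmatrix}, \; \sigma^{2} \begin{pmatrix} 1 & \varphi(x^{0})^{\prime} \\ \varphi(x^{0}) & \Phi(\theta) \end{pmatrix} \right), \qquad (21) \]
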
where \( \psi (x^{0} ) = [\psi_{0} (x^{0} ),\psi_{1} (x^{0} ), \ldots ,\psi_{J} (x^{0} )]^{\prime} \), and \( \varphi (x^{0} ) = [\varphi (x^{0} - x^{(1)} ),\varphi (x^{0} - x^{(2)} ), \ldots ,\varphi (x^{0} - x^{(n)} )]^{\prime} \). Then, based on the conditional distribution of the multivariate normal [4], \( p(y^{0} |y,\beta ,\theta ,\sigma^{2} ) \) can be formulated as follows:
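
\[ p\left( y^{0} \mid y, \beta, \theta, \sigma^{2} \right) = {\rm N}\left( \psi(x^{0})^{\prime}\beta + \varphi(x^{0})^{\prime}\Phi(\theta)^{-1}(y - \Psi\beta), \; \sigma^{2}\left[ 1 - \varphi(x^{0})^{\prime}\Phi(\theta)^{-1}\varphi(x^{0}) \right] \right). \qquad (22) \]
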
Hence, because the mode of a normal distribution coincides with its mean, the maximizer in (19) is simply the mean of the normal distribution in (22), that is,
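
\[ \hat{y}(x^{0}) = \psi(x^{0})^{\prime}\beta + \varphi(x^{0})^{\prime}\Phi(\theta)^{-1}\left( y - \Psi\beta \right). \qquad (23) \]
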
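Equations (21)–(23) are an instance of conditioning a joint Gaussian. The sketch below conditions the joint distribution (21) numerically with the generic Schur-complement formulas and checks that the result coincides with the closed form (22)–(23). The design, θ, β, σ², and the linear basis are hypothetical values chosen only for the check, and `condition_gaussian` is a hypothetical helper name.

```python
import numpy as np


def condition_gaussian(mean, cov, y):
    """Mean and variance of the first component of a jointly Gaussian vector
    (y0, y), given the remaining components y (Schur-complement formulas)."""
    m0, m = mean[0], mean[1:]
    c00, c0y, cyy = cov[0, 0], cov[0, 1:], cov[1:, 1:]
    k = np.linalg.solve(cyy, c0y)                  # Cov(y)^-1 Cov(y, y0)
    return m0 + k @ (y - m), c00 - c0y @ k


rng = np.random.default_rng(2)
X, x0 = rng.random((6, 2)), rng.random((1, 2))
theta = np.array([4.0, 4.0])


def corr(A, B):
    """Gaussian correlation (11)."""
    return np.exp(-(((A[:, None, :] - B[None, :, :]) ** 2) * theta).sum(axis=-1))


Psi = np.column_stack([np.ones(6), X])             # hypothetical linear basis
psi0 = np.concatenate([[1.0], x0[0]])
beta, sigma2 = np.array([1.0, -2.0, 0.5]), 0.3
y = Psi @ beta + rng.standard_normal(6)            # any observed vector will do
Phi, phi0 = corr(X, X), corr(X, x0)[:, 0]

# Joint distribution (21) of (y0, y).
mean = np.concatenate([[psi0 @ beta], Psi @ beta])
cov = sigma2 * np.block([[np.ones((1, 1)), phi0[None, :]], [phi0[:, None], Phi]])
mu0, var0 = condition_gaussian(mean, cov, y)

# Closed-form predictor (23); its variance is the one in (22).
pred23 = psi0 @ beta + phi0 @ np.linalg.solve(Phi, y - Psi @ beta)
```
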
As with (14) for universal Kriging, the prediction variance of the proposed predictor (23) can also be derived. This requires first formulating the density \( p(y^{0} \mid y, \theta, \sigma^{2}) \) and then computing its variance. Specifically, \( p(y^{0} \mid y, \theta, \sigma^{2}) \) can be written as

The predictor (23) depends on the correlation parameters θ and the regression coefficients β. Hence, the parameters β, θ, σ² must be estimated to obtain the final prediction in (23). Based on the principle of Bayesian maximum a posteriori (MAP) estimation, β, θ, σ² can be estimated by
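
\[ \left( \hat{\beta}, \hat{\theta}, \hat{\sigma}^{2} \right) = \arg\max_{\beta, \theta, \sigma^{2}} p\left( y \mid \beta, \theta, \sigma^{2} \right) p(\beta)\, p(\theta)\, p(\sigma^{2}). \qquad (25) \]
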
From the above, \( p(y \mid \beta, \theta, \sigma^{2}) \) is the multivariate Gaussian likelihood of the computer outputs y, and p(β) was chosen in Subsect. 3.1 as a multivariate normal distribution with varying variances. Thus, only p(θ) and \( p(\sigma^{2}) \) remain to be specified. For computational efficiency, these two priors should be parameter-free. Any reasonable noninformative prior may be imposed on the two parameters, such as the Jeffreys noninformative prior [40, 45] or a constant prior [39, 40]. In this paper, a constant prior is placed on each of the two parameters; that is,

\[ p(\theta) \propto 1, \qquad (26) \]
\[ p(\sigma^{2}) \propto 1. \qquad (27) \]

Substituting (16), (20), (26), and (27) into (25), the parameters β, θ, and σ² can then be estimated by

In addition, the inverse variances γ can be estimated by the following evidence maximization procedure:

\[ \hat{\gamma} = \arg\max_{\gamma} L(\gamma), \qquad (31) \]

where
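
\[ L(\gamma) = \log \int p\left( y \mid \beta, \theta, \sigma^{2} \right) p\left( \beta \mid \gamma \right) d\beta. \qquad (32) \]
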
4 Computational issues and fast algorithm

This section focuses on implementing the optimization problems formulated in Sect. 3. In particular, a fast suboptimal iterative algorithm is presented for extended Gaussian Kriging, and other computational issues are discussed.

4.1 Computation and optimization

First, we reformulate (20) to simplify the subsequent computation. Since the correlation function φ in Φ(θ) is Gaussian, Φ(θ) is symmetric and positive definite (for distinct design points), so Φ(θ) can be factorized using the Cholesky decomposition

where C(θ) is an upper triangular matrix called the Cholesky factor. Let \( z = (z_{1}, \ldots, z_{n})^{\prime} \) denote the realizations of the Gaussian stochastic process z(x) at the design points \( \{x^{(1)}, \ldots, x^{(n)}\} \). Multiplying both sides of \( y = \Psi\beta + z \) by \( C^{-1} \) gives

Denoting \( \tilde{y} = C^{-1} y \), \( \tilde{\Uppsi} = C^{-1} \Uppsi \), and \( \tilde{z} = C^{-1} z \), we obtain

Then \( \tilde{y} \) follows a multivariate normal distribution with covariance matrix \( \sigma^{2} I_{n \times n} \), and (20) can be reformulated as

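The whitening step can be sketched with NumPy's `np.linalg.cholesky`, which returns a lower-triangular factor L with \( \Phi = LL^{\prime} \); premultiplying by \( L^{-1} \) then plays the role of \( C^{-1} \) in the text (which triangular factor is used is a matter of convention). The check confirms that \( L^{-1}\Phi L^{-\prime} = I \), and that the two likelihoods differ only by the Jacobian term log|L|, which does not depend on β. Function names are hypothetical.

```python
import numpy as np


def whiten(Phi, y, Psi):
    """Return L^-1 y, L^-1 Psi and log|L| for the Cholesky factorization Phi = L L'."""
    L = np.linalg.cholesky(Phi)                   # lower triangular
    # np.linalg.solve ignores the triangular structure; a triangular solver is cheaper.
    return np.linalg.solve(L, y), np.linalg.solve(L, Psi), np.sum(np.log(np.diag(L)))


def loglik_original(y, Psi, beta, Phi, sigma2):
    """Gaussian log-likelihood (20) of the raw outputs."""
    r = y - Psi @ beta
    _, logdet = np.linalg.slogdet(Phi)
    return -0.5 * (len(y) * np.log(2 * np.pi * sigma2) + logdet
                   + r @ np.linalg.solve(Phi, r) / sigma2)


def loglik_whitened(y_t, Psi_t, beta, sigma2):
    """Log-likelihood of the whitened outputs, whose covariance is sigma^2 I."""
    r = y_t - Psi_t @ beta
    return -0.5 * (len(y_t) * np.log(2 * np.pi * sigma2) + r @ r / sigma2)
```
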
Now consider (28), the Bayesian MAP estimate of the regression coefficients β. For given β, θ, σ², the likelihoods (20) and (34) are equivalent, differing only by the constant Jacobian factor of the linear transformation, so (28) can be reformulated as

Because the prior density \( p(\beta \mid \gamma) \) is conjugate to the likelihood \( p(\tilde{y} \mid \beta, \theta, \sigma^{2}) \), the posterior of the regression coefficients is analytically a multivariate normal distribution, i.e.,

where

and \( \Uplambda = {\rm diag}(\gamma_{0}, \gamma_{1}, \ldots, \gamma_{J}) \). Hence, given the transformed outputs \( \tilde{y} \) and the parameters \( \theta, \gamma, \sigma^{2} \), the MAP estimate of the regression coefficients is

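The posterior moments can be computed as in the sketch below. We assume the standard sparse Bayesian learning forms [42] for the posterior covariance and mean, \( \Sigma = (\Uplambda + \sigma^{-2}\tilde{\Uppsi}^{\prime}\tilde{\Uppsi})^{-1} \) and \( \mu = \sigma^{-2}\Sigma\tilde{\Uppsi}^{\prime}\tilde{y} \), with μ serving as the MAP estimate of β. The check compares μ with the equivalent expression \( \Uplambda^{-1}\tilde{\Uppsi}^{\prime}\Upomega^{-1}\tilde{y} \), where \( \Upomega = \sigma^{2} I + \tilde{\Uppsi}\Uplambda^{-1}\tilde{\Uppsi}^{\prime} \), and with ordinary least squares in the limit γ → 0. The function name is hypothetical.

```python
import numpy as np


def coefficient_posterior(Psi_t, y_t, gamma, sigma2):
    """Posterior N(mu, Sigma) of beta given whitened data and inverse variances gamma.

    Assumes the standard sparse Bayesian learning forms:
    Sigma = (Lambda + Psi' Psi / sigma^2)^-1,  mu = Sigma Psi' y / sigma^2.
    """
    precision = np.diag(gamma) + Psi_t.T @ Psi_t / sigma2
    Sigma = np.linalg.inv(precision)
    mu = Sigma @ Psi_t.T @ y_t / sigma2           # posterior mean = MAP estimate of beta
    return mu, Sigma
```
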
For the process variance σ² and the correlation parameters θ, because constant priors are placed on them, the optimizations in (29) and (30) reduce to maximum likelihood estimation, as in universal Kriging. The MAP estimates of σ² and θ are therefore given by

There is no analytical solution to (37). Several numerical algorithms have been used in the literature to solve (37), e.g., the Newton–Raphson method [2], the quasi-Newton method [46], the Fisher scoring algorithm [2], adaptive simulated annealing [46], and the pattern search method [46, 47]. The first three methods use gradient information and yield local optimizers. The pattern search is not gradient-based but is still a local optimization method; it tends to be better suited to highly nonlinear or discontinuous functions. By comparison, adaptive simulated annealing is more robust in finding solutions for functions with multimodal and long near-optimal ridge features, such as (37), but it is computationally intensive because it is a Monte Carlo method [46]. Considering both computational efficiency and accuracy, the Fisher scoring algorithm and the pattern search are chosen as candidate optimization algorithms for solving (37).
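
As an illustration of the pattern search option, the sketch below minimizes −2 times the log-likelihood with σ² replaced by its closed-form maximizer \( r^{\prime}\Phi(\theta)^{-1}r/n \) for a fixed residual vector \( r = y - \Psi\beta \), using a basic compass search over log θ. The box constraint \( |\log\theta_{j}| \le 6 \), the small diagonal jitter, and the step-halving rule are assumptions made for numerical safety; this is not the specific pattern search of [46, 47], and the function names are hypothetical.

```python
import numpy as np


def profile_neg_loglik(log_theta, X, r, bound=6.0, jitter=1e-10):
    """n log(sigma2_hat) + log|Phi(theta)|: -2 x log-likelihood up to constants,
    with sigma^2 at its closed-form maximizer for the fixed residual r = y - Psi beta."""
    log_theta = np.asarray(log_theta, dtype=float)
    if np.any(np.abs(log_theta) > bound):          # box constraint (assumption)
        return np.inf
    d2 = (X[:, None, :] - X[None, :, :]) ** 2
    Phi = np.exp(-(d2 * np.exp(log_theta)).sum(axis=-1)) + jitter * np.eye(len(r))
    try:
        L = np.linalg.cholesky(Phi)
    except np.linalg.LinAlgError:                  # numerically singular: reject the point
        return np.inf
    z = np.linalg.solve(L, r)
    sigma2_hat = z @ z / len(r)                    # r' Phi^-1 r / n
    return len(r) * np.log(sigma2_hat) + 2.0 * np.sum(np.log(np.diag(L)))


def compass_search(f, x0, step=1.0, tol=1e-3, max_iter=500):
    """Basic compass (pattern) search: poll +/- step along each coordinate,
    accept any improvement, and halve the step when no poll point improves."""
    x = np.asarray(x0, dtype=float)
    fx = f(x)
    for _ in range(max_iter):
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = x.copy()
                trial[i] += delta
                f_trial = f(trial)
                if f_trial < fx:
                    x, fx, improved = trial, f_trial, True
        if not improved:
            step *= 0.5
            if step < tol:
                break
    return x, fx
```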

To estimate the inverse variances γ, one has to compute the function L(γ) in (32). Since \( p(\tilde{y} \mid \beta, \theta, \sigma^{2}) p(\beta \mid \gamma) \propto N(\mu, \Sigma) \) as a function of β, L(γ) can be written as

where \( I_{n \times n} \) is an identity matrix, \( \Upomega = \sigma^{2} I_{n \times n} + \tilde{\Uppsi }\Uplambda^{ - 1} \tilde{\Uppsi }^{\prime} \), and

Thus,

where \( \mu_{k} \) is the kth element of \( \mu \), and \( \Sigma_{kk} \) is the kth diagonal element of Σ.
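
A sketch of the evidence and the γ re-estimation follows. We assume that (38) takes the standard sparse Bayesian learning form [42], \( \gamma_{k}^{\rm new} = (1 - \gamma_{k}\Sigma_{kk})/\mu_{k}^{2} \), which uses exactly the quantities \( \mu_{k} \) and \( \Sigma_{kk} \) described above. The evidence is computed as \( L(\gamma) = -\frac{1}{2}[n\log 2\pi + \log|\Upomega| + \tilde{y}^{\prime}\Upomega^{-1}\tilde{y}] \), and the check verifies it against the equivalent expression in terms of μ and Σ. Function names are hypothetical.

```python
import numpy as np


def log_evidence(Psi_t, y_t, gamma, sigma2):
    """L(gamma) = -1/2 [n log(2 pi) + log|Omega| + y' Omega^-1 y],
    with Omega = sigma^2 I + Psi Lambda^-1 Psi'."""
    n = len(y_t)
    Omega = sigma2 * np.eye(n) + (Psi_t / gamma) @ Psi_t.T   # column k scaled by 1/gamma_k
    _, logdet = np.linalg.slogdet(Omega)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + y_t @ np.linalg.solve(Omega, y_t))


def update_gamma(Psi_t, y_t, gamma, sigma2):
    """One sweep of gamma_k <- (1 - gamma_k Sigma_kk) / mu_k^2 (assumed form of (38))."""
    Sigma = np.linalg.inv(np.diag(gamma) + Psi_t.T @ Psi_t / sigma2)
    mu = Sigma @ Psi_t.T @ y_t / sigma2
    return (1.0 - gamma * np.diag(Sigma)) / mu ** 2, mu, Sigma
```

Because the posterior variance \( \Sigma_{kk} \) never exceeds the prior variance \( 1/\gamma_{k} \), the numerator of the update is nonnegative; as \( \gamma_{k} \) grows without bound, the corresponding basis function is effectively pruned.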

Finally, using (35), (36), (37), and (38), an iterative algorithm for the proposed extended Gaussian Kriging is obtained by updating each of the parameters γ, β, σ², θ in every iteration.

4.2 Fast algorithm

In Subsect. 4.1, estimating the parameters γ, β, σ², θ requires iterative computation of (35), (36), (37), and (38). Note that σ² is easy to calculate using (36), and the correlation parameters θ can be calculated by the efficient Fisher scoring algorithm or the pattern search method. However, estimating β and γ is not cheap, because the matrix inversion in (35) and (38) requires \( O((J + 1)^{3}) \) computations. Therefore, the algorithm presented in Subsect. 4.1 cannot be applied directly to practical metamodeling tasks, but it serves as the starting point for the fast algorithm developed below. The key idea of the fast algorithm is to reduce the dimension of Σ in (35) and (38). Because the regression coefficients β are expected to be sparse, most of the inverse variances \( \{\gamma_{k}\}_{k=0}^{J} \) should be infinite (so that the corresponding \( \beta_{k} \) are zero and the corresponding basis functions are excluded), which leads to a lower-dimensional matrix Σ. The fast algorithm can therefore be implemented by starting from an empty matrix Ψ (that is, with no basis functions included) and iteratively and adaptively adding a single variable at a time to Ψ.

The fast algorithm is presented as follows: consider the function L(γ) (omitting a constant)

To estimate each inverse variance γ k while holding other inverse variances fixed, (39) is rewritten as

where \( \Upomega_{-k} \) denotes Ω with the contribution of the kth basis vector \( \tilde{\psi}_{k} \) of \( \tilde{\Uppsi} = [\tilde{\psi}_{0}, \tilde{\psi}_{1}, \ldots, \tilde{\psi}_{J}] \) removed, and

In addition, \( s_{k} \) and \( q_{k} \) in (40) are defined as \( s_{k} = \tilde{\psi}_{k}^{\prime} \Upomega_{-k}^{-1} \tilde{\psi}_{k} \) and \( q_{k} = \tilde{\psi}_{k}^{\prime} \Upomega_{-k}^{-1} \tilde{y} \). Because \( L(\gamma_{-k}) \) does not depend on \( \gamma_{k} \), \( \gamma_{k} \) can be estimated simply by maximizing \( l(\gamma_{k}) \). A straightforward calculation gives

When \( \gamma_{k} = \infty \), \( \psi_{k} \) is pruned from the matrix Ψ, and \( \beta_{k} \) is set to zero. Similar to [39, 48], \( s_{k} \) and \( q_{k} \) can be calculated efficiently by

where Σ and \( \tilde{\Uppsi } \) include only the columns that have already been added to the regression matrix \( \tilde{\Uppsi } \). Since the inverse variances γ are easily estimated by (41), β can be computed efficiently by (35) using the low-dimensional matrices Σ and \( \tilde{\Uppsi } \). The parameters \( \gamma ,\beta ,\sigma^{2} ,\theta \) of the fast algorithm are initialized as \( \gamma^{(0)} = 0 \), \( \beta^{(0)} = 0 \), \( \sigma^{2(0)} = 0 \), \( \theta^{(0)} = 0 \).
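The sequential procedure can be sketched in Python as follows. This is a minimal illustration under simplifying assumptions, not the authors' implementation: it treats \( \tilde{\Uppsi} \) and \( \tilde{y} \) as already whitened, so that the residual covariance is \( \sigma^{2} I \), holds σ² fixed, and omits the θ and σ² updates that the full procedure alternates with. At each step it applies the single add, re-estimate, or prune update that most increases the likelihood, as in Tipping and Faul [48]. For numerical convenience it starts from the one basis function best aligned with \( \tilde{y} \). The function names are hypothetical.

```python
import numpy as np


def _posterior(Psi, y, gamma, noise_prec):
    """Posterior covariance and mean of beta over the active (finite-gamma) columns."""
    active = np.isfinite(gamma)
    Phi = Psi[:, active]
    Sigma = np.linalg.inv(np.diag(gamma[active]) + noise_prec * Phi.T @ Phi)
    mu = noise_prec * Sigma @ (Phi.T @ y)
    return active, Phi, Sigma, mu


def _ell(g, s, q):
    """l(gamma_k) for each basis; equals 0 for pruned bases (gamma_k = inf)."""
    finite = np.isfinite(g)
    gs = np.where(finite, g, 1.0)        # placeholder avoids inf - inf for pruned entries
    val = 0.5 * (np.log(gs) - np.log(gs + s) + q ** 2 / (gs + s))
    return np.where(finite, val, 0.0)


def fast_sparse_bayes(Psi, y, sigma2, max_iter=500, tol=1e-8):
    """Sequential sparse Bayesian regression with fixed noise variance sigma2.

    Returns gamma (inverse prior variances, inf = pruned) and the posterior mean beta.
    """
    p = Psi.shape[1]
    noise_prec = 1.0 / sigma2
    norms2 = np.sum(Psi ** 2, axis=0)
    Pty = Psi.T @ y

    # Start from the single basis best aligned with y (all others pruned).
    gamma = np.full(p, np.inf)
    proj = Pty ** 2 / norms2
    k0 = int(np.argmax(proj))
    gamma[k0] = norms2[k0] / max(proj[k0] - sigma2, 1e-12)

    for _ in range(max_iter):
        active, Phi, Sigma, _ = _posterior(Psi, y, gamma, noise_prec)
        cross = Psi.T @ Phi                          # psi_k' Psi_active, shape (p, m)
        # Full-model S_k and Q_k via the Woodbury identity (only m x m matrices involved).
        S = noise_prec * norms2 - noise_prec ** 2 * np.sum((cross @ Sigma) * cross, axis=1)
        Q = noise_prec * Pty - noise_prec ** 2 * cross @ (Sigma @ (Phi.T @ y))
        # Leave-one-out quantities s_k, q_k (equal to S_k, Q_k for pruned bases).
        s, q = S.copy(), Q.copy()
        g = gamma[active]
        s[active] = g * S[active] / (g - S[active])
        q[active] = g * Q[active] / (g - S[active])

        # Maximiser of l(gamma_k): finite if q_k^2 > s_k, otherwise prune (inf).
        theta = q ** 2 - s
        g_new = np.full(p, np.inf)
        pos = theta > 0
        g_new[pos] = s[pos] ** 2 / theta[pos]

        # Apply the single add / re-estimate / prune step with the largest gain.
        gain = _ell(g_new, s, q) - _ell(gamma, s, q)
        k = int(np.argmax(gain))
        if gain[k] < tol:
            break
        if np.isinf(g_new[k]) and active[k] and active.sum() == 1:
            break                                    # keep at least one basis
        gamma[k] = g_new[k]

    active, _, _, mu = _posterior(Psi, y, gamma, noise_prec)
    beta = np.zeros(p)
    beta[active] = mu
    return gamma, beta
```

Each step inverts only an m × m matrix, so the cost per step depends on the size of the active set rather than on J + 1. Because every accepted step strictly increases the objective, the loop terminates.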

4.3 Discussion on computational complexity

Another important issue is the computational complexity of extended Gaussian Kriging as a whole. Its parameters are estimated iteratively, and in particular the regression coefficients are estimated adaptively by the fast algorithm of Subsect. 4.2. However, because the correlation parameters are estimated by the Fisher scoring algorithm or the pattern search method, as in ordinary Kriging, the computational efficiency of both extended Gaussian Kriging and ordinary Kriging decreases as the number of computer runs grows, owing to the inversion of the correlation matrix required when fitting Kriging. The same problem exists in other recent Kriging metamodeling methods, e.g., limit Kriging [20], SCAD penalized Kriging [2], and blind Kriging [18]. Nevertheless, we expect the prediction performance of extended Gaussian Kriging to remain superior to that of ordinary and universal Kriging, because extended Gaussian Kriging provides a more accurate mean model that adaptively captures the overall trend of the computer responses.
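To make this bottleneck concrete, the following sketch evaluates the concentrated (profile) negative log-likelihood of ordinary Kriging with an assumed Gaussian correlation function \( R_{ij} = \exp(-\sum_{l}\theta_{l}(x_{il} - x_{jl})^{2}) \). The Cholesky factorization of the n × n correlation matrix costs O(n³) and has to be repeated for every candidate θ visited by Fisher scoring or pattern search. The small nugget and the function names are illustrative assumptions.

```python
import numpy as np


def gaussian_corr(X, theta):
    """Gaussian correlation R_ij = exp(-sum_l theta_l (x_il - x_jl)^2)."""
    diff2 = (X[:, None, :] - X[None, :, :]) ** 2
    return np.exp(-np.einsum("ijl,l->ij", diff2, theta))


def ok_profile_nll(theta, X, y, nugget=1e-10):
    """Concentrated negative log-likelihood of ordinary Kriging (constants dropped).

    Returns (nll, mu_hat, sigma2_hat).
    """
    n = len(y)
    R = gaussian_corr(X, theta) + nugget * np.eye(n)
    L = np.linalg.cholesky(R)                  # the O(n^3) step for n computer runs

    def solve(b):                              # R^{-1} b via the Cholesky factor
        return np.linalg.solve(L.T, np.linalg.solve(L, b))

    ones = np.ones(n)
    Ri1, Riy = solve(ones), solve(y)
    mu = (ones @ Riy) / (ones @ Ri1)           # generalized least squares constant mean
    resid = y - mu
    sigma2 = (resid @ solve(resid)) / n
    logdet_R = 2.0 * np.sum(np.log(np.diag(L)))
    nll = 0.5 * (n * np.log(sigma2) + logdet_R)
    return nll, mu, sigma2
```

When θ is very large and the design points are distinct, R is close to the identity, so the estimates reduce to the sample mean and the (biased) sample variance of y.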

Extended Gaussian Kriging is particularly suited to complex computer experiments. In such situations, collecting a moderate or large sample may be very difficult, and an efficient and accurate approximation model built from a small sample becomes crucial. Specifically, extended Gaussian Kriging has computational efficiency comparable to that of ordinary Kriging, because a fast algorithm is used to estimate the regression coefficients in the mean model and the correlation parameters are estimated only once, as in ordinary Kriging. More importantly, the experimental results in Sect. 5 demonstrate that extended Gaussian Kriging achieves higher prediction accuracy. By contrast, the correlation parameters in blind Kriging have to be estimated twice [18], making the computational cost of blind Kriging at least twice that of ordinary Kriging; in addition, its estimation procedure involves a computationally intensive Bayesian forward variable selection scheme. Although limit Kriging [20] achieves computational efficiency similar to that of ordinary Kriging, its improvement in prediction accuracy is rather limited. SCAD penalized Kriging [2] improves on ordinary Kriging at the cost of estimating the tuning parameters in the penalized likelihood. As for the non-Kriging metamodeling methods in Sect. 2, model construction can be more efficient than for ordinary Kriging, because only the regression coefficients in PR and (augmented) RBF need to be estimated. Nevertheless, both their computational and predictive efficiency are expected to deteriorate when they are applied to more complex problems with high dimension and highly non-linear behavior [5].

5 Examples

5.1 Example 1: piston slap noise experiment

Automobile customer satisfaction depends heavily on how satisfied customers are with the vehicle's engine, and the noise, vibration, and harshness characteristics of an automobile and its engine are critical sources of customer dissatisfaction. This case study focuses on piston slap noise. Piston slap noise generally results from the secondary motion of the piston, which is caused by a combination of transient forces and moments acting on the piston during engine operation and the clearance between the piston and the cylinder liner. This combination produces both a rotation and a lateral movement of the piston, causing the piston to impact the cylinder wall at regular intervals. These impacts produce the objectionable engine noise known as piston slap. A detailed description of the experiment can be found in Hoffman et al. [49].

In this study, a computer experiment is performed by varying the input variables in order to minimize the piston slap noise. The piston slap noise (y) is the output variable, and the input variables are the clearance between the piston and the cylinder liner (\( x_{1} \)), the location of peak pressure (\( x_{2} \)), the skirt length (\( x_{3} \)), the skirt profile (\( x_{4} \)), the skirt ovality (\( x_{5} \)), and the pin offset (\( x_{6} \)). Note that the clearance (\( x_{1} \)) and the location of peak pressure (\( x_{2} \)) are noise factors. A uniform design [25] with 12 runs is used as the experimental design for the computer experiment, and the design inputs and outputs are given in Table 1. More details on the experimental setup can be found in Li and Sudjianto [2].

In the following, extended Gaussian Kriging is used to construct a metamodel that approximates the computationally expensive computer experiment. The candidate basis functions in the mean model are taken to be the linear and quadratic polynomial terms together with the two-factor interactions, that is, the intercept, the terms \( x_{j} \) and \( x_{j}^{2} \), and the products \( x_{i}x_{j} \) (i < j), as given in (42).

With six factors in the piston slap noise experiment, the regression mean model therefore contains 28 candidate variables in total (one intercept, six linear, six quadratic, and 15 two-factor interaction terms). Ordinary Kriging, universal Kriging, SCAD penalized Kriging [2], and blind Kriging [18] are also implemented for comparison. Universal Kriging is denoted universal Kriging-LP when linear polynomial functions are chosen as candidate variables, and universal Kriging-QP when the basis in (42) is chosen.
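The count of 28 candidate variables can be checked with a short sketch that assembles the candidate columns from a 12 × 6 design matrix. It assumes the basis consists of an intercept, the six linear terms, the six squares, and the 15 pairwise products; the column ordering of (42) in the paper may differ, `quadratic_basis` is a hypothetical helper, and the random matrix is only a placeholder for the actual design in Table 1.

```python
import numpy as np
from itertools import combinations


def quadratic_basis(X):
    """Candidate mean-model columns: 1, x_j, x_j^2 and x_i * x_j for i < j."""
    n, d = X.shape
    cols = [np.ones(n)]
    cols += [X[:, j] for j in range(d)]                                 # linear terms
    cols += [X[:, j] ** 2 for j in range(d)]                            # quadratic terms
    cols += [X[:, i] * X[:, j] for i, j in combinations(range(d), 2)]   # interactions
    return np.column_stack(cols)


X = np.random.default_rng(0).random((12, 6))   # placeholder for the 12-run design
print(quadratic_basis(X).shape)                 # (12, 28): 1 + 6 + 6 + 15 columns
```

With only 12 runs and 28 candidate columns, ordinary least squares on the full basis is underdetermined, which is one reason a sparsity-inducing prior on the coefficients is attractive here.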

For all the Kriging methods, the correlation parameters are estimated by the Fisher scoring algorithm [2]. Augmented radial basis functions (A-RBF) with several commonly used basis functions, namely linear, cubic, Gaussian, multi-quadric, and inverse multi-quadric functions [31], are also tried for this example, with the parameters of these basis functions tuned to their optimal values. In addition, PR-LP and PR-QP are implemented for comparison, given their popularity and widespread use in industry.
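An augmented RBF interpolant has the form \( \hat{y}(x) = \sum_{i} \lambda_{i}\phi(\lVert x - x^{(i)}\rVert) + b_{0} + \sum_{l} b_{l}x_{l} \), with coefficients obtained from a saddle-point system that adds the side condition \( P^{\prime}\lambda = 0 \). The sketch below assumes a linear polynomial tail and one common parameterization of the five radial functions with a shape parameter c; the forms and tuning used in [31] may differ, and the helper names are hypothetical.

```python
import numpy as np

# Radial functions phi(r); c is a shape parameter (assumed parameterisation).
KERNELS = {
    "linear": lambda r, c: r,
    "cubic": lambda r, c: r ** 3,
    "gaussian": lambda r, c: np.exp(-(r / c) ** 2),
    "multiquadric": lambda r, c: np.sqrt(r ** 2 + c ** 2),
    "inverse_multiquadric": lambda r, c: 1.0 / np.sqrt(r ** 2 + c ** 2),
}


def _dist(A, B):
    """Pairwise Euclidean distances between the rows of A and B."""
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)


def fit_arbf(X, y, kernel="gaussian", c=1.0):
    """Augmented RBF interpolant with a linear polynomial tail."""
    n = X.shape[0]
    Phi = KERNELS[kernel](_dist(X, X), c)
    P = np.column_stack([np.ones(n), X])
    m = P.shape[1]
    # Saddle-point system: interpolation conditions plus orthogonality P' lambda = 0.
    A = np.block([[Phi, P], [P.T, np.zeros((m, m))]])
    rhs = np.concatenate([y, np.zeros(m)])
    sol = np.linalg.solve(A, rhs)
    return {"X": X, "lam": sol[:n], "b": sol[n:], "kernel": kernel, "c": c}


def predict_arbf(model, Xnew):
    """Evaluate the fitted interpolant at the rows of Xnew."""
    Phi = KERNELS[model["kernel"]](_dist(Xnew, model["X"]), model["c"])
    P = np.column_stack([np.ones(len(Xnew)), Xnew])
    return Phi @ model["lam"] + P @ model["b"]
```

Because the system enforces the interpolation conditions exactly, the fitted model reproduces every sampled response, which is the property that distinguishes A-RBF from polynomial regression.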

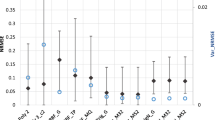

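To assess the performance of the different metamodeling approaches, an additional 100 runs from a separately designed computer experiment are used as test data. Two criteria measure the prediction accuracy: the root mean square error (RMSE) and the median of absolute errors (MAR), defined as

\[ \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\bigl(\hat{y}(x^{(i)}) - y(x^{(i)})\bigr)^{2}}, \qquad \mathrm{MAR} = \operatorname{median}\bigl\{\lvert\hat{y}(x^{(i)}) - y(x^{(i)})\rvert : i = 1, \ldots, N\bigr\}, \]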

where N is the total number of test points \( \{ x^{(1)} , \ldots ,x^{(N)} \} \), \( \hat{y}(x) \) is the predicted response at a design point x, and \( y(x) \) is the true response. The RMSE and MAR of the different metamodeling methods are listed in Tables 2 and 3, and the estimated regression coefficients of the different approaches and the correlation parameters of the Kriging methods are given in Tables 4 and 5.
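The two criteria can be computed directly from the predicted and true responses at the test points. The sketch below is a straightforward implementation; the toy arrays are illustrative only and are not data from this example.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean square error over the N test points."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


def mar(y_true, y_pred):
    """Median of the absolute errors over the N test points."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.median(np.abs(err)))


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.0, 4.0]
print(rmse(y_true, y_pred), mar(y_true, y_pred))  # RMSE = sqrt(0.3125) ≈ 0.559, MAR = 0.25
```

RMSE squares each error and therefore reacts strongly to a few large misses, whereas MAR reflects the typical error and is insensitive to outliers; reporting both gives a global and a local view of accuracy.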

For extended Gaussian Kriging, the RMSE is 1.1174, compared with 1.2807, 1.4813, 1.7307, 1.4864, and 4.5407 for blind Kriging, SCAD penalized Kriging, ordinary Kriging, universal Kriging-LP, and universal Kriging-QP, respectively. The MAR for extended Gaussian Kriging is 0.7852, compared with 0.8560, 1.0588, 1.3375, 1.1730, and 3.2739 for the same methods. Both RMSE and MAR show that extended Gaussian Kriging predicts better than the other Kriging methods. Although SCAD penalized Kriging estimates the correlation parameters more carefully, its improvement in prediction accuracy is limited because its mean function is only a constant. Extended Gaussian Kriging also performs slightly better than blind Kriging, even though blind Kriging specifically incorporates a Bayesian forward variable selection strategy. Universal Kriging-QP performs worst among the Kriging methods because its mean model includes many unimportant variables (Table 4), which degrade the prediction performance; this also explains why universal Kriging-LP outperforms universal Kriging-QP in this example.
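To put the reported RMSE values in relative terms, the percentage reduction achieved by extended Gaussian Kriging over each competitor can be computed with a few lines of Python; the numbers are copied from the paragraph above.

```python
# RMSE values reported for Example 1 (piston slap noise)
rmse_values = {
    "extended Gaussian Kriging": 1.1174,
    "blind Kriging": 1.2807,
    "SCAD penalized Kriging": 1.4813,
    "ordinary Kriging": 1.7307,
    "universal Kriging-LP": 1.4864,
    "universal Kriging-QP": 4.5407,
}


def relative_reduction(new, ref):
    """Percentage reduction of error `new` relative to reference error `ref`."""
    return 100.0 * (1.0 - new / ref)


egk = rmse_values["extended Gaussian Kriging"]
for name, value in rmse_values.items():
    if name != "extended Gaussian Kriging":
        print(f"{name:26s} {relative_reduction(egk, value):6.1f} %")
```

This corresponds to an RMSE reduction of roughly 35% relative to ordinary Kriging and roughly 13% relative to blind Kriging.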

For PR-LP, the RMSE is 2.6435 and the MAR is 2.1827; for PR-QP, the RMSE is 6.4818 and the MAR is 3.6618. PR-LP (QP) is clearly less accurate than universal Kriging-LP (QP), because universal Kriging-LP (QP) additionally models the residuals of the regression with a Gaussian stochastic process. Moreover, Kriging is interpolative, whereas PR is not. PR is also less accurate than A-RBF. The RMSE is 1.8027 for the linear basis function and 1.8656, 1.4856, 1.8090, and 1.4856 for the cubic, Gaussian, multi-quadric, and inverse multi-quadric basis functions, respectively; the corresponding MAR values are 1.2465, 1.3308, 1.1730, 1.2480, and 1.1730. The advantage of A-RBF over PR likewise stems from the fact that an RBF model passes exactly through all the sampling points, whereas PR does not. The Gaussian and inverse multi-quadric basis functions attain identical RMSE and MAR and perform slightly better than the other three basis functions.

Considering the RMSE and MAR of all the methods in this example, extended Gaussian Kriging clearly achieves the best performance, with both the lowest global error (RMSE) and the lowest local error (MAR). Table 4 further shows that the regression mean model of extended Gaussian Kriging contains many more zero coefficients than those of the other Kriging and non-Kriging methods. Extended Gaussian Kriging thus obtains a more adaptive regression mean, which leads to a more accurate metamodel of the expensive computer simulator.

Furthermore, Fig. 1 plots the sorted absolute residuals (AR) of universal Kriging-LP, SCAD penalized Kriging, blind Kriging, and extended Gaussian Kriging against the AR of ordinary Kriging. These plots show that SCAD penalized Kriging, blind Kriging, and extended Gaussian Kriging uniformly improve on ordinary Kriging, and that extended Gaussian Kriging improves on it more than SCAD penalized Kriging and blind Kriging do. Universal Kriging-LP performs similarly to SCAD penalized Kriging, whereas universal Kriging-QP fails completely in this example because unimportant effects are included in its mean model. Fig. 2 plots the true responses against the responses predicted by extended Gaussian Kriging and by A-RBF with different basis functions, showing that the predictions of extended Gaussian Kriging (Fig. 2f) are more accurate than those of the A-RBF methods.
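The comparison in Fig. 1 can be reproduced from the test predictions: sort the absolute residuals of each method and pair them by rank with those of the reference method. A method improves uniformly on the reference when every pair lies on or below the 45-degree line. The sketch below assumes both methods are evaluated on the same test points; the function name and toy data are hypothetical.

```python
import numpy as np


def sorted_abs_residuals(y_true, pred_ref, pred_new):
    """Sorted absolute residuals of a reference and a new method on the same test set.

    Returns both sorted arrays and whether the new method is uniformly better,
    i.e. every rank-paired point lies on or below the 45-degree line.
    """
    y_true = np.asarray(y_true, dtype=float)
    ar_ref = np.sort(np.abs(np.asarray(pred_ref, dtype=float) - y_true))
    ar_new = np.sort(np.abs(np.asarray(pred_new, dtype=float) - y_true))
    return ar_ref, ar_new, bool(np.all(ar_new <= ar_ref))


y = np.array([0.0, 1.0, 2.0, 3.0])
ref = np.array([0.4, 0.7, 2.2, 2.9])     # e.g. ordinary Kriging predictions
new = np.array([0.1, 0.8, 2.05, 3.0])    # e.g. predictions of an improved method
ar_ref, ar_new, uniform = sorted_abs_residuals(y, ref, new)
```

Plotting `ar_new` against `ar_ref` gives one panel of a figure like Fig. 1.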

5.2 Example 2: exhaust manifold sealing experiment

This case study considers the design of an exhaust manifold, an engine component that must endure harsh and rapid thermal cycling ranging from sub-zero temperatures to, in some cases, more than 1,000°C. To shorten the product development cycle, Hazime et al. [50] proposed a transient non-linear finite element method that simulates the inelastic deformation used to predict the thermo-mechanical fatigue life of cast exhaust manifolds. The exhaust manifold assembly includes the exhaust manifold component, fastener, gasket, and a portion of the cylinder head. In addition to fatigue life, the model predicts the dynamic sealing pressure on the gasket in order to identify potential exhaust gas leaks. To optimize the gasket design against leaks, a symmetric Latin hypercube (SLH) design with 17 runs and 5 factors is applied to the simulation model, as shown in Table 6. More details on the exhaust manifold sealing experiment can be found in [4, 50]. A separate test data set, listed in Table 7, is used to assess the performance of the different Kriging and non-Kriging metamodeling methods.
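A symmetric Latin hypercube pairs each run with its reflection through the center of the design space, so that for levels 1, …, n run i and run n + 1 − i satisfy \( x_{n+1-i} = n + 1 - x_{i} \) in every column. The sketch below generates a random SLH design with this property. It is only an illustration: the design in Table 6 was presumably selected by an optimality criterion, which this sketch does not apply, and the function name is hypothetical.

```python
import numpy as np


def symmetric_lhd(n, d, seed=None):
    """Random symmetric Latin hypercube design with levels 1..n (n odd).

    Run i and run n+1-i are mirror images: x_{n+1-i} = n + 1 - x_i,
    and the middle run sits at the centre level (n + 1) / 2.
    """
    if n % 2 == 0:
        raise ValueError("this sketch assumes an odd number of runs")
    rng = np.random.default_rng(seed)
    half, centre = n // 2, n // 2 + 1
    D = np.empty((n, d), dtype=int)
    for j in range(d):
        levels = rng.permutation(half) + 1                   # a permutation of 1..half
        flip = rng.integers(0, 2, size=half).astype(bool)
        levels = np.where(flip, n + 1 - levels, levels)      # use level l or its mirror
        D[:half, j] = levels
        D[half, j] = centre
        D[half + 1:, j] = n + 1 - levels[::-1]               # mirrored second half
    return D


design = symmetric_lhd(17, 5, seed=0)   # 17 runs, 5 factors
```

Each column is a permutation of 1, …, 17, so the design keeps the one-dimensional stratification of a Latin hypercube, while the mirror structure balances the runs around the center.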

Several Kriging methods, namely extended Gaussian Kriging, blind Kriging, SCAD penalized Kriging, universal Kriging-LP, universal Kriging-QP, and ordinary Kriging, as well as non-Kriging methods, i.e., augmented RBF with different basis functions, are applied to the exhaust manifold sealing experiment. In this example, the correlation parameters in extended Gaussian Kriging are estimated by the pattern search method. Table 8 compares the results in terms of RMSE and MAR.

For extended Gaussian Kriging, the RMSE is 1.2269, compared with 1.2323, 1.8772, 2.3697, 2.8792, and 4.0745 for blind Kriging, SCAD penalized Kriging, universal Kriging-QP, universal Kriging-LP, and ordinary Kriging, respectively. The MAR for extended Gaussian Kriging is 0.5762, and the corresponding MAR values for these methods are 0.5937, 0.6672, 1.5059, 2.0805, and 3.4323. Both criteria show that extended Gaussian Kriging is more accurate than SCAD penalized Kriging, universal Kriging, and ordinary Kriging. Ordinary Kriging gives the poorest prediction among the Kriging methods in this example, because a constant mean cannot capture the overall trend of the system responses. SCAD penalized Kriging, in turn, performs better than ordinary and universal Kriging but worse than extended Gaussian Kriging. Note that extended Gaussian Kriging performs similarly to blind Kriging in this example; however, blind Kriging is computationally expensive, whereas extended Gaussian Kriging has computational efficiency comparable to that of ordinary Kriging.

Augmented RBF methods are also applied for comparison. The RMSE is 2.5895 for the linear basis function and 2.2189, 1.4949, 2.2189, and 2.3391 for the cubic, Gaussian, multi-quadric, and inverse multi-quadric basis functions, respectively; the corresponding MAR values are 1.5540, 1.3687, 0.7432, 1.3687, and 1.5768. Among the five basis functions, A-RBF-Gaussian gives the best prediction in this example. Compared with SCAD penalized Kriging, A-RBF-Gaussian performs better in terms of RMSE (1.4949 versus 1.8772) but slightly worse in terms of MAR (0.7432 versus 0.6672). Extended Gaussian Kriging, however, outperforms both SCAD penalized Kriging and A-RBF-Gaussian in terms of both RMSE and MAR. This again indicates that the adaptive mean model helps to improve the prediction performance of Kriging metamodeling.

5.3 Example 3: Borehole model

The Borehole model is a classical computer experiment example that many authors have used to compare methods, for instance, Fang and Lin [51], Fang et al. [52], and Li [53]. The borehole function is defined as

where y is the response variable and rw, r, Tu, Tl, Hu, Hl, L, and Kw are the eight input variables, whose experimental domain is as follows:

The same uniform design as in Li [53] is used to generate an experimental design for the Borehole function. The resulting 30 design points and their outputs are listed in Table 9. For performance assessment, 10,000 test points are drawn from the uniform distribution over the experimental domain.
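The test set can be generated as sketched below. The sketch assumes the standard form of the borehole function and the input ranges commonly used in the literature,
\( y = 2\pi T_{u}(H_{u} - H_{l}) / \{\ln(r/r_{w})[1 + 2LT_{u}/(\ln(r/r_{w})\,r_{w}^{2}K_{w}) + T_{u}/T_{l}]\} \),
with the inputs ordered as in the text. The scaling or coding of inputs and outputs used in the paper may differ, and the names `BOUNDS`, `borehole`, and `uniform_test_set` are hypothetical.

```python
import numpy as np

# Commonly used input ranges, ordered rw, r, Tu, Tl, Hu, Hl, L, Kw (assumed here).
BOUNDS = np.array([
    [0.05, 0.15],          # rw
    [100.0, 50000.0],      # r
    [63070.0, 115600.0],   # Tu
    [63.1, 116.0],         # Tl
    [990.0, 1110.0],       # Hu
    [700.0, 820.0],        # Hl
    [1120.0, 1680.0],      # L
    [9855.0, 12045.0],     # Kw
])


def borehole(X):
    """Standard borehole function; X has shape (..., 8) in the order above."""
    rw, r, Tu, Tl, Hu, Hl, L, Kw = np.asarray(X, dtype=float).T
    lnr = np.log(r / rw)
    return 2 * np.pi * Tu * (Hu - Hl) / (
        lnr * (1 + 2 * L * Tu / (lnr * rw ** 2 * Kw) + Tu / Tl))


def uniform_test_set(n=10_000, seed=0):
    """Draw n test points uniformly over the box BOUNDS and evaluate the function."""
    rng = np.random.default_rng(seed)
    U = rng.random((n, BOUNDS.shape[0]))
    X = BOUNDS[:, 0] + U * (BOUNDS[:, 1] - BOUNDS[:, 0])
    return X, borehole(X)


X_test, y_test = uniform_test_set()
```

With 10,000 test points, the RMSE of a metamodel is estimated with small Monte Carlo error, so differences such as those reported in Table 10 are unlikely to be artifacts of the test sample.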

In this study, extended Gaussian Kriging is compared with several existing methods for the borehole model in terms of prediction accuracy; the RMSE results are listed in Table 10. In the literature, Fang and Lin [51] constructed a uniform design with 32 experiments and used a B-spline model to approximate the borehole function, achieving an RMSE of 2.1095. With the same uniform design, the quadratic regression model of Fang et al. [52] has an RMSE of 0.5077. Li [53] used a SCAD penalized quadratic spline model for metamodeling; this predictor was shown to be more accurate than the existing methods, with an RMSE of 0.3335. Since universal Kriging may include unimportant variables in the mean model, only the widely used ordinary Kriging, SCAD penalized Kriging, blind Kriging, and extended Gaussian Kriging are applied to the Borehole model, with the Fisher scoring algorithm used to estimate the correlation parameters. The RMSE is 0.6587 for ordinary Kriging, 0.7527 for SCAD penalized Kriging, and 0.0044 for extended Gaussian Kriging. The RMSE of extended Gaussian Kriging is thus much lower than those of Li's SCAD quadratic spline model, Fang and Lin's B-spline model, and Fang et al.'s quadratic regression model. Ordinary Kriging and SCAD penalized Kriging both perform poorly in this example because their constant mean models ignore the important variables that strongly affect the system response. In fact, the adaptive mean model obtained by extended Gaussian Kriging is \( \mu (x) = 10.3218 + 2.0270x_{1} - 0.0015x_{7} \), which implies that only \( x_{1} \) and \( x_{7} \) are important variables. This result clearly demonstrates the importance of incorporating variable selection, and hence an adaptive mean model, into Kriging metamodeling.

6 Conclusions

This paper focuses on constructing Kriging-based surrogate models as cheap alternatives to computer simulators. Although several improved Kriging approaches have recently been reported in the literature (e.g., SCAD penalized Kriging [2] and blind Kriging [18]), an extended Gaussian Kriging method is proposed here to further improve the prediction accuracy of Kriging metamodeling. Unlike the foregoing approaches, the new method places a variance-varying Gaussian prior on the unknown regression coefficients in the mean model of universal Kriging and predicts at new design points using Bayesian maximum a posteriori (MAP) inference. The resulting mean model is adaptive; it therefore captures the overall trend of the computer responses more effectively and leads to a more accurate metamodel. Experimental results on several case studies show a substantial improvement in prediction accuracy of extended Gaussian Kriging over several benchmark methods in the literature.

Future work on extended Gaussian Kriging includes considering non-Gaussian correlation functions for nonsmooth metamodeling tasks, placing appropriate Bayesian priors on the correlation parameters for robust parameter estimation, and extending Kriging metamodeling to stochastic computer experiments. One problem in particular deserves further research: improving the computational efficiency of estimating the correlation parameters in extended Gaussian Kriging when the number of computer runs is moderate or large. Research on these topics is ongoing and will be reported elsewhere.

References

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. ASME J Mech Des 129(4):370–380

Li R, Sudjianto A (2005) Analysis of computer experiments using penalized likelihood in Gaussian Kriging models. Technometrics 47:111–120

Fang KT, Li R, Sudjianto A (2006) Design and modeling for computer experiments. CRC Press, New York

Santner TJ, Williams BJ, Notz WI (2003) The design and analysis of computer experiments. Springer, Berlin

Simpson TW, Peplinski J, Koch PN, Allen JK (2001) Metamodels for computer-based engineering design: survey and recommendations. Eng Comput 17(2):129–150

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4(4):409–435

Sacks J, Schiller SB, Welch WJ (1989) Designs for computer experiments. Technometrics 31(1):41–47

Kleijnen JPC (2005) An overview of the design and analysis of simulation experiments for sensitivity analysis. Eur J Oper Res 164(2):287–300

Wackernagel H (2002) Multivariate geostatistics. Springer, New York

Currin C, Mitchell TJ, Morris MD, Ylvisaker D (1991) Bayesian prediction of deterministic functions, with applications to the design and analysis of computer experiments. J Am Stat Assoc 86:953–963

Welch WJ, Buck RJ, Sacks J, Wynn HP, Mitchell TJ, Morris MD (1992) Screening, predicting, and computer experiments. Technometrics 34:15–25

Simpson TW, Mauery TM, Korte JJ, Mistree F (2001) Kriging metamodels for global approximation in simulation-based multidisciplinary design optimization. AIAA J 39(12):2233–2241

Martin JD, Simpson TW (2004) On using Kriging models as probabilistic models in design. SAE Trans J Mater Manuf 5:129–139

Martin JD, Simpson TW (2005) On the use of Kriging models to approximate deterministic computer models. AIAA J 43:853–863

Giunta AA (1997) Aircraft multidisciplinary design optimization using design of experiments theory and response surface modeling. Dissertation, Virginia Polytechnic Institute and State University, Blacksburg

Booker AJ, Conn AR, Dennis JE, Frank PD, Serafini D, Torczon V, Trosset M (1996) Multi-level design optimization: a Boeing/IBM/Rice collaborative project. The Boeing Company, Seattle

Cappelleri DJ, Frecker MI, Simpson TW, Snyder A (2002) Design of a PZT bimorph actuator using a metamodel-based approach. ASME J Mech Des 124:354–357

Joseph VR, Hung Y, Sudjianto A (2008) Blind Kriging: a new method for developing metamodels. ASME J Mech Des 130:031102

Linkletter CD, Bingham D, Hengartner N, Higdon D, Ye KQ (2006) Variable selection for Gaussian process models in computer experiments. Technometrics 48:478–490

Joseph VR (2006) Limit Kriging. Technometrics 48:458–466

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidiscip Optim 23(1):1–13

Koehler JR, Owen AB (1996) Computer experiments. In: Ghosh S, Rao CR (eds) Handbook of Statistics, vol 13. Elsevier Science, New York, pp 261–308

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21(2):239–245

Park JS (1994) Optimal Latin-hypercube designs for computer experiments. J Stat Plan Inference 39:95–111

Fang KT, Lin DKJ, Winker P, Zhang Y (2000) Uniform design: theory and application. Technometrics 42(3):237–248

Kalagnanam JR, Diwekar UM (1997) An efficient sampling technique for off-line quality control. Technometrics 39(3):308–319

Owen A (1992) Orthogonal arrays for computer experiments, integration, and visualization. Statistica Sinica 2:439–452

Hedayat AS, Sloane NJA, Stufken J (1999) Orthogonal arrays: theory and applications. Springer, New York

Simpson TW, Lin DKJ, Chen W (2001) Sampling strategies for computer experiments: design and analysis. Int J Reliab Appl 2(3):209–240

Montgomery DC (2001) Design and analysis of experiments. Wiley, New York

Fang HB, Horstemeyer MF (2006) Global response approximation with radial basis functions. Eng Optim 38(4):407–424

Fang HB, Rais-Rohani M, Horstemeyer MF (2004) Multi-objective crashworthiness optimization with radial basis functions. In: Proceedings of the 10th AIAA/ISSMO Multidisciplinary Analysis and Optimization Conference, Paper No. AIAA-2004-4487, Albany

Powell MJD (1987) Radial basis functions for multivariate interpolation: a review. In: Mason JC, Cox MG (eds) Algorithms for approximation. Clarendon Press, Oxford, pp 143–167

Hardy RL (1971) Multiquadric equations of topography and other irregular surfaces. J Geophys Res 76:1905–1915

Hussain MF, Barton RR, Joshi SB (2002) Metamodeling: radial basis functions, versus polynomials. Eur J Oper Res 138(1):142–154

Krishnamurthy T (2003) Response surface approximation with augmented and compactly supported radial basis functions. In: Proceedings of the 44th AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference, Paper No. AIAA-2003-1748, Norfolk

Mullur AA, Messac A (2005) Extended radial basis functions: more flexible and effective metamodeling. AIAA J 43(6):1306–1315

Mullur AA, Messac A (2006) Metamodeling using extended radial basis functions: a comparative approach. Eng Comput 21:203–217

Babacan SD, Molina R, Katsaggelos AK (2010) Bayesian compressive sensing using Laplace priors. IEEE Trans Image Process 19(1):53–63

Yi NJ, Xu SZ (2008) Bayesian LASSO for quantitative trait loci mapping. Genetics 179:1045–1055

Park T, Casella G (2008) The Bayesian Lasso. J Am Stat Assoc 103(482):681–686

Tipping ME (2001) Sparse Bayesian learning and the relevance vector machine. J Mach Learn Res 1:211–244

Ji S, Xue Y, Carin L (2008) Bayesian compressive sensing. IEEE Trans Signal Process 56(6):2346–2356

Ji S, Dunson D, Carin L (2009) Multi-task compressive sensing. IEEE Trans Signal Process 57(1):92–106

Figueiredo M (2003) Adaptive sparseness for supervised learning. IEEE Trans Pattern Anal Mach Intell 25(9):1150–1159

Gano SE, Renaud JE, Martin JD, Simpson TW (2005) Update strategies for Kriging models for use in variable fidelity optimization. In: Proceedings of 1st AIAA Multidisciplinary Design Optimization Specialist Conference, AIAA, Austin

Lophaven SN, Nielsen HB, Søndergaard J (2002) DACE: a Matlab Kriging toolbox. Technical Report, IMM-REP-2002-12, Technical University of Denmark, Denmark

Tipping M, Faul A (2003) Fast marginal likelihood maximisation for sparse Bayesian models. In: Bishop C, Frey B (eds) Proceedings of the Ninth International Workshop on Artificial Intelligence and Statistics, Key West

Hoffman RM, Sudjianto A, Du X, Stout J (2003) Robust piston design and optimization using piston secondary motion analysis. SAE Transactions SAE Paper 2003-01-0148

Hazime RM, Dropps SH, Anderson DH, Ali MY (2003) Transient non-linear FEA and TMF life estimates of cast exhaust manifolds. Society of Automotive Engineers SAE 2003-01-0918

Fang KT, Lin DKJ (2003) Uniform designs and their application in industry. Handbook on Statistics in industry 22:131–170

Fang KT, Ho WM, Xu ZQ (2000) Case studies of computer experiments with uniform design, Technical Report. Hong Kong Baptist University, Hong Kong

Li R (2002) Model selection for analysis of uniform design and computer experiment. Int J Reliab Qual Saf Eng 9(4):367–382

Friedman JH (1991) Multivariate adaptive regression splines. Ann Stat 19(1):1–67

Hajela P, Berke L (1993) Neural networks in structure analysis and design: an overview. 4th AIAA/USAF/NASA/OAI Symposium on Multidisciplinary Analysis and Optimization, September 21–23. Cleveland, OH

Hong J, Dagli CH, Ragsdell KM (1994) Artificial neural network and the Taguchi method application for robust Wheatstone bridge design. In: Gilmore BJ, Hoeltzel DA et al (eds) Advances in Design Automation, Minneapolis Sept 11–14, 69(2):37–41

Clarke SM, Griebsch JH, Simpson TW (2005) Analysis of support vector regression for approximation of complex engineering analyses. Trans ASME J Mech Des 127(6):1077–1087

Acknowledgments

Many thanks are given to the two anonymous reviewers for their pertinent and helpful suggestions. The research of Ma was supported by the Natural Science Foundation of China under Grant 70931002, and the work of Wei was supported by the National High Technology Research and Development Plan of China under Grant 2007AA12E100.


Cite this article

Shao, W., Deng, H., Ma, Y. et al. Extended Gaussian Kriging for computer experiments in engineering design. Engineering with Computers 28, 161–178 (2012). https://doi.org/10.1007/s00366-011-0229-7
