Abstract

The statistical inference of multicomponent stress-strength reliability under the adaptive Type-II hybrid progressive censored samples for the Weibull distribution is considered. It is assumed that both stress and strength are two Weibull independent random variables. We study the problem in three cases. First assuming that the stress and strength have the same shape parameter and different scale parameters, the maximum likelihood estimation (MLE), approximate maximum likelihood estimation (AMLE) and two Bayes approximations, due to the lack of explicit forms, are derived. Also, the asymptotic confidence intervals, two bootstrap confidence intervals and highest posterior density (HPD) credible intervals are obtained. In the second case, when the shape parameter is known, MLE, exact Bayes estimation, uniformly minimum variance unbiased estimator (UMVUE) and different confidence intervals (asymptotic and HPD) are studied. Finally, assuming that the stress and strength have the different shape and scale parameters, ML, AML and Bayesian estimations on multicomponent reliability have been considered. The performances of different methods are compared using the Monte Carlo simulations and for illustrative aims, one data set is investigated.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Among various censoring schemes, Type-I and Type-II censoring schemes are two most effective schemes. Type-I scheme finished the test at the pre-chosen time and Type-II scheme finished the test at the pre-determined number of failures. The hybrid scheme which is a mixing of Type-I and Type-II schemes has been introduced by Epstein (1954). This censoring scheme finished the test at time \(T^*=\min \{X_{m:n},T\}\), where \(X_{m:n}\) is the m-th failure times from n items and \(T>0\). Because the above schemes cannot remove active units during the test, the progressive censoring scheme is introduced. In this scheme, the active units can be ejected during the test. Combining the Type-II and progressive schemes, the progressive Type-II censoring is obtained. Also, mixing the hybrid and progressive schemes, the progressive hybrid censoring is provided. In a progressive hybrid censoring scheme which was initiated by Kundu and Joarder (2006), N units are put on the experiment and \(X_{1:n:N}\le \cdots \le X_{n:n:N}\) come from a progressive censoring scheme, with the censoring scheme \((R_1,\ldots ,R_n)\) and halting time \(T^*=\min \{X_{n:n:N},T\}\), \(T>0\). If \(X_{n:n:N}<T\), the test finished at time \(X_{n:n:N}\) and n failures happen. Also, if \(X_{J:n:N}<T<X_{J+1:n:N}\), the test finished at time T and J failures happen. It is obvious that the sample size in progressive hybrid censoring is random. So, with a small number of samples, statistical inference is not applicable in practical situations. To solve this problem, Ng et al. (2009) have been provided the adaptive hybrid progressive scheme. In this paper, we work on the adaptive Type-II hybrid progressive censoring (AT-II HPC). In AT-II HPC scheme, let \(X_{1:n:N}\le \cdots \le X_{n:n:N}\) be a progressive censoring sample with scheme \((R_1,\ldots ,R_n)\) and \(T>0\) is fixed. If \(X_{n:n:N}<T\), the experiment proceeds with the progressive censoring scheme \((R_1,\ldots ,R_n)\) and stops at the \(X_{n:n:N}\) (see Fig. 1). Otherwise, once the experimental time passes time T but the number of observed failures has not reached n, we would want to terminate the experiment as soon as possible for fixed value of n, then we should leave as many surviving items on the test as possible. Suppose J is the number of failures observed before time T, i.e. \(X_{J:n:N}<T<X_{J+1:n:N}\), \(J=0,\cdots ,n\). After passed time T, we do not withdraw any items at all except for the time of the n-th failure where all remaining surviving items are removed. So, we set

In Fig. 2, the schematic representation of this situation is given. From now on, a AT-II HPC sample will be denoted with \(\{X_1,\ldots ,X_n\}\) under the scheme \(\{N,n,T,R_1,\ldots R_n\}\) such that \(X_{J}<T<X_{J+1}\). Getting the effective number of failures n is one of the most advantages of AT-II HPC scheme. Also, this scheme can be reduced to Type-II progressive censoring and Type-II censoring by setting \(T=0\) and \(T=\infty \), respectively. By this approach, the tester will be able to control the test. Very recently, Nassar and Abo-Kasem (2017) estimated the inverse Weibull parameters and AL Sobhi and Soliman (2016) studied the estimation of exponentiated Weibull model under this scheme.

In recent years much attention has been paid to the multicomponent stress-strength model. A multicomponent system is arranged of common stress and k independent and identical strengths component. This system is reliable when s (\(1\le s\le k\)) or more of k components simultaneously survive. This model corresponds to the G system: s-out-of-k. Some examples can be given that is consistent with the G system. For example, in a suspension bridge, the wind loading, heavy traffic, corrosion, etc. can be regarded as stresses and the k number of vertical cable pairs, which support the bridge deck, can be considered as strengths. The bridge will only survive if a minimum s number of vertical cable through the deck is not damaged. As another example of the G system: 4-out-of-8, we can point to a V-8 engine of an automobile. As a contractor, if at least 4 cylinders are firing then the automobile may be to derive.

Bhattacharyya and Johnson (1974) improved the multicomponent reliability as

when the strengths \((X_1, \dots , X_k )\) are independent and identically distributed random variables with cdf \(F_X(.)\) and the stress Y is a random variable with the cdf \(F_Y(.)\). Estimation of the multicomponent stress-strength parameter has been studied for different distributions like for example exponential (Hanagal 1999), bivariate Kumaraswamy (Kizilaslan and Nadar 2018) and Marshall-Olkin Bivariate Weibull (Nadar and Kizilaslan 2016).

The multicomponent stress-strength parameter, in the case of complete samples, has been estimated by many authors. But, in the case of censored samples, not many studies have been done for inference about multicomponent reliability, whereas, in some practical situations, we deal with different censoring schemes. For example, we consider the following situation. If the water capacity of a reservoir in a region on December of the previous year is less than the amount of water achieved on Aguste at least two years out of the next 5 years, we claim that there will be no excessive drought afterward. In this example, it is very likely, we observe censored samples rather than complete samples from both populations.

In reliability theory, the Weibull distribution is one of the most widely used lifetime distributions with respect to other distributions. The probability density, cumulative distribution and failure rate functions of the Weibull distribution with the shape and scale parameters \(\alpha \) and \(\theta \), respectively, are as follows:

respectively. Hereafter, we denote the Weibull distribution, with the probability distribution function in (1), by \(W(\alpha ,\theta )\). As we see, the failure rate function of \(W(\alpha ,\theta )\) is an increasing, constant or decreasing functions for \(\alpha >1\), \(\alpha =1\) and \(\alpha <1\), respectively. Because of this, many researchers may be used the Weibull distribution to analyze the data sets.

In this paper, on the basis of AT-II HPC samples, we study the statistical inferences for multicomponent stress-strength reliability model \(R_{s,k}=P[\text {at least}~ s ~\text {of} (X_1,\dots ,X_k) ~\text {exceed} ~Y]\). Therefore, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) and \(\{Y_{1},\ldots ,Y_{n}\}\) can be considered as \(n+1\) AT-II HPC samples from two different Weibull distributions, which one of them for stress and the other for strength. The censoring schemes for \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) and \(\{Y_{1},\ldots ,Y_{n}\}\) can be studied as \(\{K,k,T_2,R_{i1},\ldots R_{in}\},~i=1\ldots ,n\) and \(\{N,n,T_1,S_1,\ldots S_n\}\), respectively.

This paper is arranged as follows: In Sect. 2, we infer \(R_{s,k}\) when the stress and strength have the common and unknown shape parameter \(\alpha \). First, we obtain the ML estimation of \(R_{s,k}\). As we will see, because the different equations to obtain the MLE cannot be solved explicitly, we derive the AMLE’s of \(R_{s,k}\) which has explicit forms. Also, we provide the asymptotic and two bootstrap confidence intervals for the multicomponent stress-strength parameter. The Bayesian estimation is another estimate which we obtain for \(R_{s,k}\). Because the Bayesian estimation cannot be obtained in a closed form, we use two approximation method: Lindley approximation and MCMC method. By using the MCMC method, we derive the HPD credible intervals. In Sect. 3, when the stress and strength have the common and known shape parameter \(\alpha \), we derive the MLE, asymptotic confidence interval, exact Bayes estimate, HPD credible interval and UMVUE of \(R_{s,k}\). In Sect. 4, when all parameters are different and unknown, we infer on \(R_{s,k}\). In this section, we obtain the MLE, AMLE and Bayesian inference of multicomponent stress-strength reliability parameter. In Sect. 5, simulation results and data analysis are provided. Finally, we conclude the paper in Sect. 6.

2 Inference on \(R_{s,k}\) if \(\alpha \), \(\theta \) and \(\lambda \) are unknown

2.1 Maximum likelihood estimation of \(R_{s,k}\)

Suppose that \(X\sim W(\alpha ,\theta )\) and \(Y\sim W(\alpha ,\lambda )\) are two independent random variables with unknown common shape parameter \(\alpha \) and different scale parameters \(\theta \) and \(\lambda \). For Weibull distribution using (1) and (2), the multicomponent stress-strength reliability is given by

The MLE of \(R_{s,k}\) will be obtained by deriving the MLE of \(\theta \), \(\lambda \) and \(\alpha \). It is known that n systems are put on the life-testing experiment. So, to constructing the likelihood function, the samples can be shown as

where \(\{Y_{1},\ldots ,Y_n\}\) is a AT-II HPC sample from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n)\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) is a AT-II HPC sample from \(W(\alpha ,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_2+1}\). Therefore, the likelihood function of \(\theta \), \(\lambda \) and \(\alpha \) can be obtained as

Regrading the advantages of this likelihood function, this function is a general likelihood function. For example, in the case \(T_1=T_2=\infty \), the likelihood function for \(R_{s,k}\) in the progressive censored sample is obtained. When \(k=1\) the likelihood function for \(R=P(X<Y)\) in the adaptive hybrid progressive censored scheme (by making a few changes in symbols) is obtained. Also, when \(k=1\) and \(T_1=T_2=\infty \) the likelihood function for \(R=P(X<Y)\) in the progressive censored scheme (by making a few changes in symbols) is derived. Moreover, when \(T_1=T_2=\infty \) and \(R_{ij}=0,~S_i=0\) the likelihood function for \(R_{s,k}\) in complete sample is earned. Furthermore, when \(k=1\), \(T_1=T_2=\infty \) and \(R_{ij}=0,~S_i=0\), the likelihood function for \(R=P(X<Y)\) in complete sample is achieved.

Based on the observed data, the likelihood function is as

The log-likelihood function ignoring the additive constant, from (4), is

So, with solving the following equations, the MLE of \(\theta \), \(\lambda \) and \(\alpha \), say \(\widehat{\theta }\), \(\widehat{\lambda }\) and \(\widehat{\alpha }\), respectively, can be resulted

Obviously, we can obtain from (5) and (6)

To find the solution of Eq. (7), a simple iterative procedure, for example, the Newton-Raphson method, can be applied. So, once the value of \(\widehat{\alpha }\) should be derived and then \(\widehat{\theta }\) and \(\widehat{\lambda }\) can result. Consequently, the MLE of \(R_{s,k}\) becomes

2.2 Approximate maximum likelihood estimation

As we have seen in the previous section, based on AT-II HPC samples, the MLE of \(\theta \), \(\lambda \), \(\alpha \) and hence \(R_{s,k}\) cannot be obtained in a closed form. To solve this problem, we try to obtain AMLEs of the parameters.

Let \(Z''\) has Extreme value distribution, in symbols \(Z''\sim EV(\mu ,\sigma )\), if it has the pdf as follows:

It is obvious that if \(Z'\sim W(\alpha ,\theta )\) and \(Z''=\log (Z')\), then \(Z''\sim EV(\mu ,\sigma )\), where \(\mu =\frac{1}{\alpha }\log (\theta )\) and \(\sigma =\frac{1}{\alpha }\). Now, suppose that \(\{Y_{1},\ldots ,Y_n\}\) be a AT-II HPC sample from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) is a AT-II HPC sample from \(W(\alpha ,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_2+1}\). Moreover, let \(U_{ij}=\log (X_{ij})\) and \(V_i=\log (Y_i)\). So, \(U_{ij}\sim EV(\mu _1,\sigma )\) and \(V_i\sim EV(\mu _2,\sigma )\), where

So, with ignoring the constant value, based on the observed data \(\{U_{i1},\ldots ,U_{ik}\}\), \(i=1,\ldots ,n\) and \(\{V_{1},\ldots ,V_{n}\}\), the log-likelihood function can be obtained by

where \(\displaystyle t_{ij}=\frac{u_{ij}-\mu _1}{\sigma }\) and \(\displaystyle z_i=\frac{v_i-\mu _2}{\sigma }\). The usual equations, with taking derivatives of the above equation with respect to \(\mu _1\), \(\mu _2\) and \(\sigma \), can be derived by

We derive the AMLEs of \(\theta \), \(\lambda \), \(\alpha \) and \(R_{s,k}\), by expanding the functions \(e^{t_{ij}}\) and \(e^{z_i}\), in Taylor series around the points \(\nu _i=\log \big (-\log (1-q_i)\big )\) and \(\nu '_{ij}=\log \big (-\log (1-q'_{ij})\big )\), respectively, where

and

Then, we keep only the first-order derivatives in Taylor series and obtain the following results:

where

The AMLEs of \(\mu _1\), \(\mu _2\) and \(\sigma \), say \(\hat{\hat{\mu }}_1\), \(\hat{\hat{\mu }}_2\) and \(\hat{\hat{\sigma }}\), respectively, by applying the linear approximations, can be provided as

where

After deriving \(\hat{\hat{\mu }}_1\), \(\hat{\hat{\mu }}_2\) and \(\hat{\hat{\sigma }}\), the AMLEs of \(\theta \), \(\lambda \), \(\alpha \) and so \(R_{s,k}\), say \(\hat{\hat{\theta }}\), \(\hat{\hat{\lambda }}\), \(\hat{\hat{\alpha }}\) and \(\hat{\hat{R}}_{s,k}\), respectively, can be evaluated by \(\hat{\hat{\theta }}=e^{\frac{\hat{\hat{\mu }}_1}{\hat{\hat{\sigma }}}}\), \(\hat{\hat{\lambda }}=e^{\frac{\hat{\hat{\mu }}_2}{\hat{\hat{\sigma }}}}\), \(\hat{\hat{\alpha }}=\frac{1}{\hat{\hat{\sigma }}}\), and so

2.3 Confidence intervals

2.3.1 Asymptotic confidence interval

In this section, we derive the asymptotic confidence interval of \(R_{s,k}\). To reach this aim, by using the multivariate central limit theorem, we obtain the asymptotic distribution of unknown parameters, i.e. \(\alpha \), \(\theta \), \(\lambda \), and by applying the delta method, we provide the asymptotic distribution of \(R_{s,k}\).

We denote the expected Fisher information matrix of \(\Theta =(\theta _1,\theta _2,\theta _3)=(\alpha ,\theta ,\lambda )\) by \(J(\Theta ) = -E(I(\Theta ))\), where \(\displaystyle I(\Theta ) = [I_{ij}]=[{\partial ^2\ell }/{(\partial \theta _i\partial \theta _j)}]\), \(i, j = 1, 2, 3\), is the observed information matrix. In our case, the observed Fisher information matrix is obtained by

Lemma 1

Suppose that \(\{Z_{1},\ldots ,Z_{n}\}\) is a AT-II HPC sample from \(W(\alpha ,\theta )\) with censored scheme \(\{N,n,T,R_1,\ldots ,R_n\}\), then

-

(i)

\(\mathbb {E}(Z_i^\alpha )=\theta C_{i-1}\sum \limits _{d=1}^{i} \frac{a_{i,d}}{\eta ^2_{d}},\)

-

(ii)

\(\mathbb {E}(Z_i^\alpha \log (Z_i))=\frac{\theta C_{i-1}}{\alpha } \sum \limits _{d=1}^{i}\frac{a_{i,d}}{\eta ^2_{d}}[\psi (2) -\log (\frac{\eta _d}{\theta })]\),

-

(iii)

\(\mathbb {E}(Z_i^\alpha \log ^2(Z_i))=\frac{\theta C_{i-1}}{\alpha ^2} \sum \limits _{d=1}^{i}\frac{a_{i,d}}{\eta ^2_{d}}\{[\psi (2) -\log (\frac{\eta _d}{\theta })]^2+\zeta (2,2)\}\),

where \(\psi (x),~\zeta (x,y)\) are Euler’s psi and Riemann’s zeta functions, respectively. Also, \(\eta _d=N-d+1+\sum \nolimits _{l=1}^{d-1}R_l\), \(C_{i-1}=\prod \nolimits _{d=1}^i\eta _d\), \(a_{i,d}=\prod \nolimits _{{\mathop {l\ne i}\limits ^{l=1}}}^d\frac{1}{\eta _l-\eta _i}\).

Proof

As given in Ng et al. (2009), if we let \(\{Z_{1},\ldots ,Z_{n}\}\) be a AT-II HPC sample from \(W(\alpha ,\theta )\), then the pdf of \(Z_i\) is

-

(i)

A simple method is presented for proving this part.

-

(ii)

We use the table of the integrals from Gradshteyn and Ryzhik (1994) (formula 4.352(1)) and prove this part as

$$\begin{aligned} \mathbb {E}\big (Z_i^\alpha \log (Z_i)\big )&=\int _0^\infty z^\alpha \log (z)f_{Z_i}(z)dz\\&=\frac{\alpha }{\theta } C_{i-1}\sum _{d=1}^ia_{i,d}\int _{0}^\infty z^{2\alpha -1}\log (z)e^{-z^\alpha \frac{\eta _d}{\theta }}dz\\&=\frac{\theta C_{i-1}}{\alpha }\sum \limits _{d=1}^{i}\frac{a_{i,d}}{\eta ^2_{d}}\left[ \psi (2) -\log \left( \frac{\eta _d}{\theta }\right) \right] . \end{aligned}$$ -

(iii)

We use the table of the integrals from Gradshteyn and Ryzhik (1994) (formula 4.358(2)) and prove this part as

$$\begin{aligned} \mathbb {E}\big (Z_i^\alpha \log ^2(Z_i)\big )&=\int _0^\infty z^\alpha \log ^2(z)f_{Z_i}(z)dz\\&=\frac{\alpha }{\theta } C_{i-1}\sum _{d=1}^ia_{i,d}\int _{0}^\infty z^{2\alpha -1}\log ^2(z)e^{-z^\alpha \frac{\eta _d}{\theta }}dz\\&=\frac{\theta C_{i-1}}{\alpha ^2}\sum \limits _{d=1}^{i}\frac{a_{i,d}}{\eta ^2_{d}} \left\{ \left[ \psi (2)-\log \left( \frac{\eta _d}{\theta }\right) \right] ^2+\zeta (2,2)\right\} . \end{aligned}$$\(\square \)

By using Lemma 1,

By using the multivariate central limit theorem, \((\widehat{\alpha },\widehat{\theta },\widehat{\lambda }) \sim N_3((\alpha ,\theta ,\lambda ),\mathbf {J^{-1}}(\alpha ,\theta ,\lambda )),\) where \(\mathbf {J^{-1}}(\alpha ,\beta ,\lambda )\) is a symmetric matrix which has the following representation:

where

Also, by using the delta method, the asymptotic distribution of \(\widehat{R}_{s,k}\) can be resulted as \(\widehat{R}^{MLE}\sim N(R_{s,k},B)\) where \(B=\mathbf {b^TJ^{-1}}(\alpha ,\theta ,\lambda )\mathbf{b}\), in which

with

consequently,

Therefore, a \(100(1-\gamma )\%\) asymptotic confidence interval for \(R_{s,k}\) is constructed as

where \(z_{\gamma }\) is 100\(\gamma \)-th percentile of N(0, 1).

2.3.2 Bootstrap confidence interval

All we know with certainty is that the asymptotic confidence intervals, for small sample size, do not perform very well. So the asymptotic confidence intervals can be replaced by bootstrap confidence intervals as an alternative method. In this section, we propose two bootstrap parametric methods: (i) percentile bootstrap method which denoted by Boot-p, based on the original idea of Efron (1982) and (ii) bootstrap-t method which denoted by Boot-t, based on the idea of Hall (1988).

(i) Boot-p method

-

1.

Provide the AT-II HPC bootstrap sample \(\{y_1,\ldots ,y_{n}\}\) from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\), provide the AT-II HPC sample \(\{x_{i1},\ldots ,x_{ik}\}\), \(i=1,\ldots ,n\) from \(W(\alpha ,\theta )\) under the scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) and estimate \((\widehat{\alpha },\widehat{\theta },\widehat{\lambda })\).

-

2.

Provide the AT-II HPC bootstrap sample \(\{y_1^*,\ldots ,y_{n}^*\}\) from \(W(\widehat{\alpha },\widehat{\lambda })\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\) and provide the AT-II HPC sample \(\{x_{i1},\ldots ,x_{ik}\}\), \(i=1,\ldots ,n\) from \(W(\widehat{\alpha },\widehat{\theta })\) under the scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) and by them compute the bootstrap estimate \(\widehat{R}^{MLE*}_{s,k}\), using (8).

-

3.

Reiterate step 2 NBOOT times.

-

4.

Allow \(G^*(x) = P(\widehat{R}^{MLE*}_{s,k}\le x)\) be the cdf of \(\widehat{R}^{MLE*}_{s,k}\) and define \(\widehat{R}^{Bp}_{s,k}(x) = {G^{*}}^{-1}(x)\) for a given x. The \(100(1-\gamma )\%\) Boot-p confidence interval of \(R_{s,k}\) is provided by

$$\begin{aligned} \left( \widehat{R}^{Bp}_{s,k}\left( \frac{\gamma }{2}\right) ,\widehat{R}^{Bp}_{s,k} \left( 1-\frac{\gamma }{2}\right) \right) . \end{aligned}$$

(ii) Boot-t method

-

1.

Provide the AT-II HPC bootstrap sample \(\{y_1,\ldots ,y_{n}\}\) from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\), provide the AT-II HPC sample \(\{x_{i1},\ldots ,x_{ik}\}\), \(i=1,\ldots ,n\) from \(W(\alpha ,\theta )\) under the scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\), estimate \((\widehat{\alpha },\widehat{\theta },\widehat{\lambda })\) and compute \(\widehat{R}^{MLE}_{s,k}\).

-

2.

Provide the AT-II HPC bootstrap sample \(\{y_1^*,\ldots ,y_{n}^*\}\) from \(W(\widehat{\alpha },\widehat{\lambda })\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\), provide the AT-II HPC sample \(\{x_{i1},\ldots ,x_{ik}\}\), \(i=1,\ldots ,n\) from \(W(\widehat{\alpha },\widehat{\theta })\) under the scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\), compute the bootstrap estimate \(\widehat{R}^{MLE*}_{s,k}\) and the statistic:

$$\begin{aligned} T^*=\frac{(\widehat{R}^{MLE*}_{s,k}-\widehat{R}^{MLE}_{s,k})}{\sqrt{V(\widehat{R}^{MLE*}_{s,k})}}, \end{aligned}$$where \(V(\widehat{R}^{MLE*}_{s,k})\) is given in the previous section.

-

3.

Reiterate step 2 NBOOT times.

-

4.

Allow \(H(x)=P(T^*\le x)\) be the cdf of \(T^*\), and define \(\widehat{R}^{Bt}_{s,k}(x)= \widehat{R}^{MLE}_{s,k} + H^{-1}(x)\sqrt{V(\widehat{R}^{MLE}_{s,k} )}\), for a given x. The \(100(1-\gamma )\%\) Boot-t confidence interval of \(R_{s,k}\) is given by

$$\begin{aligned} \left( \widehat{R}^{Bt}_{s,k}\left( \frac{\gamma }{2}\right) ,\widehat{R}^{Bt}_{s,k}\left( 1-\frac{\gamma }{2}\right) \right) . \end{aligned}$$

2.4 Bayes estimation of \(R_{s,k}\)

In this section, when the unknown parameters \(\alpha \), \(\theta \) and \(\lambda \) are independent random variables, we improve the Bayesian inference about \(R_{s,k}\). In details, the Bayes estimate and the corresponding credible intervals of \(R_{s,k}\), under the squared error loss function, are derived. To reach this aim, we assume that \(\alpha \) has the gamma distribution with the parameters \((a_1,b_1)\), \(\theta \) and \(\lambda \) have the inverse gamma distributions with the parameters \((a_2,b_2)\) and \((a_3,b_3)\), respectively, such that we show in symbols, \(\alpha \sim \Gamma (a_1,b_1)\), \(\theta \sim {\text{ I }}\Gamma (a_2,b_2)\) and \(\lambda \sim {\text{ I }}\Gamma (a_3,b_3)\). The joint posterior density function of \(\alpha \), \(\theta \) and \(\lambda \) is proportion with the likelihood function based on the observed sample and the prior distributions of each unknown parameters, i.e.

where

From the Eq. (12), it is obvious that the Bayes estimates of \(\alpha \), \(\theta \), \(\lambda \) and \(R_{s,k}\) cannot be obtained in a closed form. Therefore, we propose two approximation methods to obtain them:

-

Lindley’s approximation,

-

MCMC method.

2.4.1 Lindley’s approximation

Lindley (1980) has proposed one of the most common numerical techniques to derive the Bayes estimate, in 1980. It is known that the Bayes estimate of \(U(\Theta )\) under the squared error loss function is the posterior expectation of \(U(\Theta )\), such that

where \(Q(\Theta )=\ell (\Theta )+\rho (\Theta )\), \(\ell (\Theta )\) and \(\rho (\Theta )\) are the logarithm of the likelihood function and logarithm of the prior density of \(\Theta \), respectively. Lindley suggested that \(\mathbb {E}(u(\Theta )|\text{ data })\) would be approximated by

where \(\Theta =(\theta _1,\dots ,\theta _m)\), \(i,j,k,p=1,\dots ,m\), \(\widehat{\Theta }\) is the MLE of \(\Theta \), \(u=u(\Theta )\), \(u_i=\partial u/\partial \theta _i\), \(u_{ij}=\partial ^2u/(\partial \theta _i\partial \theta _j)\), \(\ell _{ijk}=\partial ^3\ell /(\partial \theta _i\partial \theta _j\partial \theta _k)\), \(\rho _j=\partial \rho /\partial \theta _j\), and \(\sigma _{ij}=(i,j)\)th element in the inverse of matrix \([-\ell _{ij}]\) all evaluated at the MLE of the parameters. Therefore, in the case of three parameters \(\Theta =(\theta _1,\theta _2,\theta _3)\), after simplifying the above equation we get

which their elements are presented in detail in “Appendix A”. Therefore, the Bayes estimate of \(R_{s,k}\) is

It is notable that all parameters are computed at \((\widehat{\alpha },\widehat{\beta },\widehat{\lambda })\).

Because by applying the Lindley’s method, constructing the credible interval is not available, so, by using the Markov Chain Monte Carlo (MCMC) method, we obtain the Bayes estimate and the corresponding HPD credible interval.

2.4.2 MCMC method

After simplifying the Eq. (12), the posterior pdfs of \(\theta \), \(\lambda \) and \(\alpha \) can be easily obtained as

As we see above, generating samples from the posterior pdfs of \(\theta \) and \(\lambda \) can be easily done. On the other hand, because the posterior pdf of \(\alpha \) cannot be reduced analytically to a well-known distribution, generating a sample from \(\pi (\alpha |\theta ,\lambda ,\text {data})\) is not available, directly. Consequently, with normal proposal distribution, we use the Metropolis-Hastings method to generate a sample from \(\pi (\alpha |\theta ,\lambda ,\text {data})\). So, the Gibbs sampling algorithm is as follows:

-

1.

Begin with an initial conjecture \((\alpha _{(0)}\), \(\theta _{(0)}\), \(\lambda _{(0)})\).

-

2.

Set \(t=1\).

-

3.

Generate \(\alpha _{(t)}\) from \(\pi (\alpha |\theta _{(t-1)},\lambda _{(t-1)}, \text {data})\) with the \(N(\alpha _{(t-1)},1)\) as the proposal distribution, using Metropolis-Hastings method.

-

4.

Generate \(\theta _{(t)}\) from \(\text {I}\Gamma (nk+a_2, b_2+\sum \limits _{i=1}^{n}\sum \nolimits _{j=1}^kx_{ij}^{{\alpha _{(t)}}} +\sum \nolimits _{i=1}^{n}\sum \nolimits _{j=1}^{J_2}R_{ij}x_{ij}^{{\alpha _{(t)}}} +\sum \nolimits _{i=1}^{n}R_{ik}x_{ik}^{{\alpha _{(t)}}})\).

-

5.

Generate \(\lambda _{(t)}\) from \(\text {I}\Gamma (n+a_3, b_3+\sum \limits _{i=1}^{n}y_i^{{\alpha _{(t)}}}+\sum \nolimits _{i=1}^{J_1}S_iy_i^{{\alpha _{(t)}}}+S_ny_n^{{\alpha _{(t)}}})\).

-

6.

Evaluate \(R_{(t)s,k}=\displaystyle \sum _{p=s}^{k}\sum _{q=0}^{k-p}\left( {\begin{array}{c}k\\ p\end{array}}\right) \left( {\begin{array}{c}k-p\\ q\end{array}}\right) \frac{(-1)^q\theta _{(t)}}{\theta _{(t)}+\lambda _{(t)}(p+q)}.\)

-

7.

Set \(t = t +1\).

-

8.

Reiterate steps 3–7, T times.

Using this algorithm, the Bayes estimate of \(R_{s,k}\) is given by

Also, by applying the method of Chen and Shao (1999), the HPD \(100(1-\gamma )\%\) credible interval of \(R_{s,k}\) is provided.

3 Inference on \(R_{s,k}\) if \(\alpha \) is known, \(\theta \) and \(\lambda \) are unknown

3.1 Maximum likelihood estimation of \(R_{s,k}\)

Let \(\{Y_{1},\ldots ,Y_n\}\) is a AT-II HPC sample from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) is a AT-II HPC sample from \(W(\alpha ,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_2+1}\). Now, we consider the case that the common shape parameter \(\alpha \) is known. From Sect. 2.1, it can be easily obtained that the MLE of \(R_{s,k}\) is as follows:

By applying a similar method in Sect. 2.2, \((\widehat{R}^{MLE}_{s,k}-R_{s,k})\sim N(0,C)\), where

Consequently, a \(100(1-\gamma )\%\) asymptotic confidence interval for \(R_{s,k}\) is constructed as

where \(z_{\gamma }\) is 100\(\gamma \)-th percentile of N(0, 1).

3.2 Bayes estimation of \(R_{s,k}\)

In this section, when the unknown parameters \(\theta \) and \(\lambda \) are independent random variables, we improve the Bayesian inference about \(R_{s,k}\). In details, the Bayes estimate and the corresponding credible intervals of \(R_{s,k}\), under the squared error loss function, are derived. To reach this aim, we assume that \(\theta \) and \(\lambda \) have the inverse gamma distributions with the parameters \((a_2,b_2)\) and \((a_3,b_3)\), respectively. The joint posterior density function of \(\theta \) and \(\lambda \) is as follows:

where

The Bayes estimate of \(R_{s,k}\), under the squared error loss function, can be obtained by solving the double integral as follows:

Using the idea of Kizilaslan and Nadar (2018), the exact Bayes estimate is

where \(\displaystyle w=nk+n+a_2+a_3\), \(\displaystyle z=1-\frac{(U+b_3)(p+q)}{V+b_2}\) and

The function \(~_2F_1(\alpha ,\beta ;\gamma ,z)\) is known as the hypergeometric series and is quickly evaluated and readily available in standard software such as Matlab. Also, by applying the method of Chen and Shao (1999), the HPD \(100(1-\gamma )\%\) credible interval of \(R_{s,k}\) is provided.

3.3 UMVUE of \(R_{s,k}\)

Let \(\{Y_1,\cdots ,Y_n\}\) is a AT-II HPC sample from \(W(\alpha ,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\cdots ,S_n\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\cdots ,X_{ik}\}\), \(i=1,\cdots ,n\), is a AT-II HPC sample from \(W(\alpha ,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\cdots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_{2}+1}\). When common shape parameter \(\alpha \) is known, the likelihood function is as follows:

where V and U are given in (17). From Eq. (19), when \(\alpha \) is known, we conclude that V and U are the complete sufficient statistics for \(\theta \) and \(\lambda \), respectively. By transforming \(Y^*_{i}=Y_{i}^\alpha ,~i=1,\dots ,n\), we derive one AT-II HPC sample from the exponential distribution with mean \(\lambda \). Now, let

By Balakrishnan and Aggarwala (2000), we result that \(Z_1,\cdots ,Z_n\) are independent and identically random variables which come from an exponential distribution with mean \(\lambda \). So, \(U=\sum \nolimits _{i=1}^{n}Z_i\sim \Gamma (n,\frac{1}{\lambda })\).

Lemma 2

Let \(X^*_{ij}=X_{ij}^\alpha \), \(j=1,\ldots ,k,~i=1,\ldots ,n\). By this notation, the conditional pdfs of \(Y^*_{1}\) given \(U=u\) and \(X^*_{11}\) given \(V=v\) are respectively as follows:

Proof

By a similar method as in Kohansal (2017), we prove the lemma. \(\square \)

Theorem 1

\(\widehat{\psi }_U(\theta ,\lambda )\), UMVUE of \(\psi (\theta ,\lambda )=\frac{\theta }{\theta +\lambda (p+q)}\), based on the complete sufficient statistics V and U, for \(\theta \) and \(\lambda \), respectively, is as follows:

Proof

It is easy to see that \(Y^*_{1}\) and \(X^*_{11}\) are two random variables which come from the exponential distributions with means \(\frac{\lambda }{N}\) and \(\frac{\theta }{K}\), respectively. So,

is an unbiased estimate of \(\psi (\theta ,\lambda )\). Therefore,

where \({\mathcal{A}}=\{(x,y):0<x<v/K,0<y<u/N,N(p+q)y<Kx\},\) \(f_{X^*_{11}|V=v}(x)\) and \(f_{Y^*_{1}|U=u}(y)\) are defined in Lemma 2. For \(v<u(p+q)\), we have

Similarly, for \(v>u(p+q)\), we obtain \(\widehat{\psi }_U\left( \theta ,\lambda \right) =\displaystyle \sum \limits _{l=0}^{nk-1}(-1)^l \left( \frac{u(p+q)}{v}\right) ^l \frac{{{nk-1}\atopwithdelims ()l}}{{{n+l-1}\atopwithdelims ()l}}\). \(\square \)

Consequently, \(\widehat{R}^U_{s,k}\), UMVUE of \(R_{s,k}\), is

4 Inference on \(R_{s,k}\) if \(\alpha _1\), \(\alpha _2\), \(\theta \) and \(\lambda \) are unknown

4.1 Maximum likelihood estimation of \(R_{s,k}\)

Suppose that \(X\sim W(\alpha _1,\theta )\) and \(Y\sim W(\alpha _2,\lambda )\) are two independent random variables with different unknown parameters. For Weibull distribution using (1) and (2), the multicomponent stress-strength reliability is given by

This integral cannot be evaluated in closed form expression, so the numerical solution is required. Now, let \(\{Y_{1},\ldots ,Y_n\}\) is a AT-II HPC sample from \(W(\alpha _1,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) is a AT-II HPC sample from \(W(\alpha _2,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_2+1}\). The likelihood function based on observed data is

and the log-likelihood function is

Therefore, the MLEs of \(\theta \) and \(\lambda \) denoted by \(\widehat{\lambda }\) and \(\widehat{\theta }\) are obtained by

Also, the MLEs of \(\alpha _1\) and \(\alpha _2\) are the solution of the following equations, respectively:

Then, in view of the MLE’s invariance property, the MLE of \(R_{s,k}\) is given by

4.2 Approximate maximum likelihood estimation of \(R_{s,k}\)

In this section, we obtain the AMLEs of \(\theta \), \(\lambda \), \(\alpha _1\), \(\alpha _2\) and \(R_{s,k}\). Let \(\{Y_{1},\ldots ,Y_n\}\) is a AT-II HPC sample from \(W(\alpha _1,\lambda )\) under the scheme \(\{N,n,T_1,S_1,\ldots ,S_n\}\) such that \(Y_{J_1}<T_1<Y_{J_1+1}\). Also, \(\{X_{i1},\ldots ,X_{ik}\},~i=1,\ldots ,n,\) is a AT-II HPC sample from \(W(\alpha _2,\theta )\) under the AT-II HPC scheme \(\{K,k,T_2,R_{i1},\ldots ,R_{ik}\}\) such that \(X_{iJ_2}<T_2<X_{iJ_2+1}\). Moreover, let \(U_{ij}=\log (X_{ij})\) and \(V_i=\log (Y_i)\). So, \(U_{ij}\sim EV(\mu _1,\sigma _1)\) and \(V_i\sim EV(\mu _2,\sigma _2)\), where

So, with ignoring the constant value, based on the observed data \(\{U_{i1},\ldots ,U_{ik}\}\), \(i=1,\ldots ,n\) and \(\{V_{1},\ldots ,V_{n}\}\), the log-likelihood function can be obtained by

where \(\displaystyle t_{ij}=\frac{u_{ij}-\mu _1}{\sigma _1}\) and \(\displaystyle z_i=\frac{v_i-\mu _2}{\sigma _2}\). The usual equations, with taking derivatives of the above equation with respect to \(\mu _1\), \(\mu _2\), \(\sigma _1\) and \(\sigma _2\), can be derived by:

Quite similar to Sect. 2.2, we can obtain AMLEs of \(\mu _1\), \(\mu _2\), \(\sigma _1\) and \(\sigma _2\), say \(\hat{\hat{\mu }}_1\), \(\hat{\hat{\mu }}_2\), \(\hat{\hat{\sigma }}_1\) and \(\hat{\hat{\sigma }}_2\), respectively, by

where \(A_1\), \(A_2\), \(B_1\), \(B_2\), \(C_1\), \(C_2\), \(D_1\), \(D_2\), \(E_1\) and \(E_2\) are given in Sect. 2.2. After deriving \(\hat{\hat{\mu }}_1\), \(\hat{\hat{\mu }}_2\), \(\hat{\hat{\sigma }}_1\) and \(\hat{\hat{\sigma }}_2\), the values \(\hat{\hat{\theta }}\), \(\hat{\hat{\lambda }}\), \(\hat{\hat{\alpha }}_1\), \(\hat{\hat{\alpha }}_2\) and \(\hat{\hat{R}}_{s,k}\) can be evaluated by \(\hat{\hat{\theta }}=e^{\frac{\hat{\hat{\mu }}_1}{\hat{\hat{\sigma }}_1}}\), \(\hat{\hat{\lambda }}=e^{\frac{\hat{\hat{\mu }}_2}{\hat{\hat{\sigma }}_2}}\), \(\hat{\hat{\alpha }}_1=\frac{1}{\hat{\hat{\sigma }}_1}\), \(\hat{\hat{\alpha }}_2=\frac{1}{\hat{\hat{\sigma }}_2}\) and

4.3 Bayes estimation of \(R_{s,k}\)

In this section, we assume that the unknown parameters \(\alpha _1\), \(\alpha _2\) have the gamma distributions with the parameters \((a_1,b_1)\) and \((a_4,b_4)\) and \(\theta \) and \(\lambda \) have the inverse gamma distributions with the parameters \((a_2,b_2)\) and \((a_3,b_3)\), respectively. We improve the Bayesian inference about \(R_{s,k}\) in this section. The joint posterior density function of \(\alpha _1\), \(\alpha _2\), \(\theta \) and \(\lambda \) is proportion with the likelihood function based on the observed sample and the prior distributions of each unknown parameters, i.e.

From the Eq. (23), it is obvious that the Bayes estimates of \(\alpha _1\), \(\alpha _2\), \(\theta \), \(\lambda \) and \(R_{s,k}\) cannot be obtained in a closed form. Therefore, we utilize the MCMC method. The posterior pdfs of \(\theta \), \(\lambda \), \(\alpha _1\) and \(\alpha _2\) are respectively:

As we see above, generating samples from the posterior pdfs of \(\theta \) and \(\lambda \) can be easily done. On the other hand, because the posterior pdfs of \(\alpha _1\) and \(\alpha _2\) cannot be reduced analytically to a well-known distribution, generating a sample from \(\pi (\alpha _1|\theta ,\text {data})\) and \(\pi (\alpha _2|\lambda ,\text {data})\) are not available, directly. Consequently, with normal proposal distribution, we use the Metropolis-Hastings method to generate a sample from \(\pi (\alpha _1|\theta ,\text {data})\) and \(\pi (\alpha _2|\lambda ,\text {data})\). So, the Gibbs sampling algorithm is as follows:

-

1.

Begin with an initial conjecture \((\alpha _{1(0)}\), \(\alpha _{2(0)}\), \(\theta _{(0)}\), \(\lambda _{(0)})\).

-

2.

Set \(t=1\).

-

3.

Generate \(\alpha _{1(t)}\) from \(\pi (\alpha _1|\theta _{(t-1)}, \text {data})\) with the \(N(\alpha _{1(t-1)},1)\) as the proposal distribution, using Metropolis-Hastings method.

-

4.

Generate \(\alpha _{2(t)}\) from \(\pi (\alpha _2|\lambda _{(t-1)}, \text {data})\) with the \(N(\alpha _{2(t-1)},1)\) as the proposal distribution, using Metropolis-Hastings method.

-

5.

Generate \(\theta _{(t)}\) from \(\text {I}\Gamma \bigg (nk+a_2, b_2+\sum \limits _{i=1}^{n}\sum \limits _{j=1}^kx_{ij}^{{\alpha _{1(t)}}} +\sum \limits _{i=1}^{n}\sum \limits _{j=1}^{J_2}R_{ij}x_{ij}^{{\alpha _{1(t)}}} +\sum \limits _{i=1}^{n}R_{ik}x_{ik}^{{\alpha _{1(t)}}}\bigg )\).

-

6.

Generate \(\lambda _{(t)}\) from \(\text {I}\Gamma (n+a_3, b_3+\sum \limits _{i=1}^{n}y_i^{{\alpha _{2(t)}}}+\sum \limits _{i=1}^{J_1}S_iy_i^{{\alpha _{2(t)}}} +S_ny_n^{{\alpha _{2(t)}}})\).

-

7.

Evaluate \(R_{(t)s,k}=\sum \limits _{p=s}^{k}\left( {\begin{array}{c}k\\ p\end{array}}\right) \frac{\alpha _{2(t)}}{\lambda _{(t)}}\int _0^\infty y^{\alpha _{2(t)}-1}e^{-\frac{p}{\theta _{(t)}}y^{\alpha _{1(t)}}-\frac{1}{\lambda _{(t)}}y^{\alpha _{2(t)}}}(1-e^{-\frac{y^{\alpha _{1(t)}}}{\theta _{(t)}}})^{k-p}dy.\)

-

8.

Set \(t = t +1\).

-

9

Reiterate steps 3–8, T times.

Using this algorithm, the Bayes estimate of \(R_{s,k}\) is given by

Also, by applying the method of Chen and Shao (1999), the HPD \(100(1-\gamma )\%\) credible interval of \(R_{s,k}\) is provided.

5 Data analysis and comparison study

In this section, to compare the performance of the different methods which described in the preceding sections, we display some results based on Monte Carlo simulations and real data.

5.1 Numerical experiments and discussions

In this subsection, the performance of ML, AML and Bayes estimates under different adaptive hybrid progressive censoring schemes are investigated by the Monte Carlo simulation. For this purpose, the performances of the different estimates are compared in terms of biases and mean squares errors (MSEs). Also, the different confidence intervals, namely the asymptotic confidence intervals, two bootstrap confidence intervals and the HPD credible intervals are compared in terms of the average confidence lengths, and coverage percentages. The simulation results for the different parameter values, the different hyperparameters and different sampling schemes are calculated. The utilized censoring schemes are given in Table 1. Also, we provide \(T_1=T_2=2\), and we consider \(s = 2\) and \(s = 4\). All the results are based on 2000 replications.

In the simulation study, when the common shape parameter \(\alpha \) is unknown, the parameter value \(\Theta = (\theta ,\lambda ,\alpha )=(1,1,3)\) is used to compare the MLE, AMLE and different Bayes estimators, in all cases. Three priors are assumed for computing the Bayes estimators and HPD credible intervals as follows:

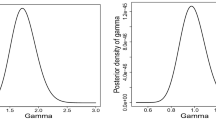

For different priors and different censoring schemes, the average biases, and MSEs of the MLE and AMLE using (8) and (9) and Bayes estimates of \(R_{s,k}\) via Lindley’s approximation and MCMC method using (14) and (15) are derived, respectively. The results are reported in Table 2. From Table 2, it is observed that the biases and MSEs of the ML and AML estimates are almost similar in most schemes. The Bayes estimates have less MSEs. Comparing the different Bayes estimates indicates that the best performance in term of MSEs belongs to the Bayes estimates based on Prior 3. Moreover, comparing two approximate Bayes methods shows that the biases and MSEs of the MCMC method are generally smaller than those obtained from the Lindley’s approximation. Actually, with increasing n, for fixed s and k, the biases and MSEs of the ML and Bayes estimates of \(R_{s,k}\) decrease in all cases. Perhaps one of the most important reasons is the fact that when n increases, some additional information is gathered and the number of observed failures increases.

Also, we calculate the \(95\%\) confidence intervals for \(R_{s,k}\), based on the asymptotic distribution of the MLE. Furthermore, the Boot-p, Boot-t confidence intervals and the HPD credible intervals are computed. In the simulation study, the bootstrap intervals are computed based on 350 re-sampling. Also, the Bayes estimates and the associated credible intervals are computed based on 3000 sampling, namely \(T_{bayes}=3000\). These results include the average confidence or credible lengths, and the corresponding coverage percentages and they are reported in Table 3. From Table 3, we show that the bootstrap confidence intervals have the widest average confidence lengths and the HPD intervals provide the smallest average credible lengths for different censoring schemes and different priors. Also, the asymptotic confidence intervals are the second best confidence intervals. Comparing the different confidence and credible intervals show that the best performance belongs to HPD credible interval based on the Prior 3 and it is evident that the HPD intervals provide the most coverage percentages in most considered cases. Also, with increasing n, for fixed s and k, the average confidence or credible lengths decrease and the associated coverage percentages increase in all cases.

Now let us consider the case when the common shape parameter \(\alpha \) is known, the parameter value \(\Theta = (\theta ,\lambda ,\alpha )=(2,2,5)\) is used mainly to compare the MLEs, different Bayes estimators and UMVUEs. Three priors are assumed for computing the Bayes estimators and HPD credible intervals as follows:

For different priors and different censoring schemes, the average biases, and MSEs of the MLE using (16), Bayes estimate via exact method using (18) and UMVUE of \(R_{s,k}\) using (20) are derived. We reported the results in Table 4. From Table 4, it is observed that the performances of UMVU and ML estimates are quite close to those of the Bayes estimates, in most cases. Comparing the different Bayes estimates indicates that the best performance in term of MSEs belongs to the Bayes estimates based on Prior 6.

Also, based on the asymptotic distribution of the MLE, we computed the \(95\%\) confidence intervals for \(R_{s,k}\). Furthermore, we computed the HPD credible intervals. The simulation results included the average confidence or credible lengths, and the corresponding coverage percentages are reported in Table 5. The nominal level for the confidence intervals or the credible intervals is 0.95 in each case. From Table 5, we observed that the HPD intervals provide the smallest average credible lengths and the asymptotic confidence intervals are the second best confidence intervals. Also, comparing the confidence and credible intervals show that the best performance belongs to HPD credible interval based on the Prior 6 and it is evident that the HPD intervals provide the most coverage percentages in most considered cases. Same as before, with increasing n, for fixed s and k, the average confidence or credible lengths decrease and the associated coverage percentages increase in all cases.

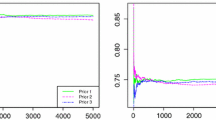

Also, we can compare Biases and MSEs of the estimators by plotting them for some censoring plans. For this aim, we consider \(\alpha =5\) and \((\lambda ,\theta )\)=(6,1), (6,2), (6,3), (6,4), (6,6), (6,8), (6,10), (6,12), (6,15), (6,18), (6,20), (6,22) and (6,24). The true values of reliability in multicomponent stress-strength with the given combinations for \((s,k)=(2,5)\) are 0.1876, 0.3324, 0.4459, 0.5359, 0.6667, 0.7540, 0.8144, 0.8571, 0.9005, 0.9286, 0.9419, 0.9522 and 0.9603. Also, we study Bayesian case with \(a_i=1\), \(b_i=2\), \(i=1\), 2, for hyperparameters. Figure 3 demonstrates the Biases and MSEs of \(\widehat{R}^{MLE}_{s,k}\), \(\widehat{R}^{B}_{s,k}\) and \(\widehat{R}^{U}_{s,k}\), for the different schemes. We observe that with increasing sample size, the Biases and MSEs of the estimates decrease, as expected. Moreover, the MSE is large when \(R_{s,k}\) is about 0.5 and it is small for the extreme values of \(R_{s,k}\). Furthermore, the MSEs of UMVUE are greater than that of MLE when \(R_{s,k}\) is about 0.5 and MSEs of UMVUE are small than that of MLE for the extreme values of \(R_{s,k}\).

In this part, when the shape parameters \(\alpha _1\) and \(\alpha _2\) are different, the parameter value \(\Theta = (\theta ,\lambda ,\alpha _1,\alpha _2)=(1,1,3,5)\) is used to compare the MLEs and different Bayes estimators, in all the cases. Three priors are assumed for computing the Bayes estimators as follows:

In this case, the average biases, and MSEs of the MLE using (21), AMLE using (22) and Bayes estimate of \(R_{s,k}\) using (24) are derived. We reported the results in Table 6. From Table 6, it is observed that the biases and MSEs of the ML estimates are quite close to those of the Bayes estimates, in most cases. Comparing the different Bayes estimates indicates that the best performance in term of MSEs belongs to the Bayes estimates based on Prior 9. Also, with increasing n, for fixed s and k, the biases and MSEs of the different estimates of \(R_{s,k}\) decrease in all cases.

5.2 Data analysis

For illustrative purposes, in this section, the analysis of a pair of real data sets is provided. In agriculture, it is very important that we all understand drought. Drought occurrence has a lot of damage to production. On the other hand, there are several ways to manage these damages. One of the most important is the use of drought-insurance products. In the following, one scenario concerning the excessive drought is constructed. If the water capacity of a reservoir in a region on Aguste at least two years out of next five years is more than the amount of water achieved on December of the previous year, we demand that there will be no excessive drought afterward. So, multicomponent reliability is the probability of non-occurrence drought. Estimation of this parameter is important in agriculture. We cannot ignore the effect of drought on farm production and livestock holdings. The most immediate consequence of drought is a fall in crop production, due to inadequate and poorly distributed rainfall. Low rainfall causes poor pasture growth and may also lead to a decline in fodder supplies from crop residues. In fact, by this probability, we can plan to prevent damage from drought, which included the safety of instruments that might be damaged. In this scenario, it is very likely that the researcher confronts the censored samples from both populations rather than complete samples. There are some reasons that we can face the censored data, in water capacity. These reasons may be natural disasters, such as flood, earthquake and storm or caused by human activities, such as financial pressures, unwanted errors and etc. Many authors have studied the application of censoring data in drought and environmental conditions, for more details see Antweiler (2015), Sürücü (2015) and Tate and Freeman (2000). The data which we study in this section is the monthly water capacity of the Shasta reservoir in California, USA. We consider the months of August and December from 1975 to 2016. These data sets can be found here http://cdec.water.ca.gov/cgi-progs/queryMonthly?SHA. Some authors have been studied these data previously, for more details see Kizilaslan and Nadar (2016, 2018) and Nadar et al. (2013).

When we confront complete data, \(X_{11},...,X_{15}\) are the capacities of August from 1976 to 1980, \(X_{21},...,X_{25}\) are the capacities of August from 1982 to 1986 and so on \(X_{71},...,X_{75}\) are the capacities of August from 2012 to 2016. Also, \(Y_1\) is the capacity of December 1975, \(Y_2\) is the capacity of December 1981 and so on \(Y_7\) is the capacity of December 2011. Just for simplify calculations, we divide all the data points by the total capacity of Shasta reservoir which is 4,552,000 acre-foot. This work will not have any effect on statistical inference.

To implement the theoretical methods, first, we fitted the Weibull distribution on two data sets, separately. Based on the results, for X, the estimated parameters are \(\alpha _1=3.5427\), \(\theta =0.1891\), the Kolmogorov-Smirnov distance and associated p-value are 0.1550 and 0.3345, respectively. Also, for Y, the estimated parameters are \(\alpha _2=11.0172\), \(\lambda =0.0341\), the Kolmogorov-Smirnov distance and associated p-value are 0.2833 and 0.4604, respectively. From the p-values, it is obvious that the Weibull distribution is an adequate fit for these data sets. For the X and Y data sets, we provided the empirical distribution functions and the PP-plots in Figs. 4 and 5, respectively. Because the scale parameters of two data sets are not same, we consider the general case.

To obtain the MLE of unknown parameters \(\alpha _1\) and \(\alpha _2\), we use the Newton-Raphson method. The initial value for this algorithm is obtained by maximizing of profile log-likelihood function. So, we plot the profile log-likelihood function of \(\alpha _1\) and \(\alpha _2\) in Fig. 6 and we see that the approximate initial values for \(\alpha _1\) and \(\alpha _2\) should be close 3 and 11, respectively.

For the complete data sets, the MLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.1891, 0.0341, 3.5427, 11.0172, the MLE of \(R_{1,5}\) is 0.6727 and the MLE of \(R_{2,5}\) is 0.3333. Also, the AMLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.1277, 0.0146, 3.0498, 11.6436, the AMLE of \(R_{1,5}\) is 0.4306 and the AMLE of \(R_{2,5}\) is 0.1347. Moreover, the Bayes estimate of \(R_{1,5}\) with non-informative priors assumption, via the MCMC method is 0.6795 and the 95\(\%\) credible interval is (0.3885,0.8954). Furthermore, the Bayes estimate of \(R_{2,5}\) with non-informative priors assumption, via the MCMC method is 0.5774 and the 95\(\%\) credible interval is (0.3136,0.8155).

For illustrative purposes, two different AT-II HPC samples have been generated from the above data sets:

To obtain the censored data from X and Y, we perform as follows. First, using the method which has been explained in Sect. 1, we censor some elements from the Y vector and for any data of Y which has been censored, we remove the same row of X matrix. In the remaining matrix of X, we apply the censoring scheme for each row.

Based on Scheme 1, the MLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.2449, 0.0892, 2.9631, 9.0491, the MLE of \(R_{1,4}\) is 0.5850 and the MLE of \(R_{2,4}\) is 0.2378. Also, the AMLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.2055, 0.0861, 2.8030, 8.8085, the AMLE of \(R_{1,4}\) is 0.4758 and the AMLE of \(R_{2,4}\) is 0.1615. Moreover, the Bayes estimate of \(R_{1,4}\) with non-informative priors assumption, via the MCMC method is 0.6348 and the 95\(\%\) credible interval is (0.3322,0.8813). Furthermore, the Bayes estimate of \(R_{2,4}\) with non-informative priors assumption, via the MCMC method is 0.4904 and the 95\(\%\) credible interval is (0.2249,0.7482).

Similarity, based on Scheme 2, the MLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.2168, 9.2e–5, 2.9518, 25.3593 and the MLE of \(R_{1,3}\) is 0.5432. Also, the AMLEs of \(\theta ,\lambda ,\alpha _1,\alpha _2\) are 0.1249, 5.9e-5, 2.9740, 26.1505 and the AMLE of \(R_{1,3}\) is 0.2355. Moreover, the Bayes estimate of \(R_{1,3}\) with non-informative priors assumption, via the MCMC method is 0.5792 and the 95\(\%\) credible interval is (2552,0.8520).

To see the effect of the hyperparameters on the Bayes estimators and also on credible intervals, we utilize the informative priors. By using the re-sampling method, the hyperparameters can be obtained as \(a_1 = 46.302, b_1 = 0.0792, a_2 = 33.7983, b_2 = 0.1665, a_3 = 0.0375, b_3 =0.0001, a_4=6.3566, b_4=2.3182\). Based on this, for complete data, Bayes estimator of \(R_{1,5}\) with MCMC method is 0.5373 and \(95\%\) credible interval is (0.2942,0.7623). Also, Bayes estimator of \(R_{2,5}\) with MCMC method is 0.4128 and \(95\%\) credible interval is (0.2073,0.6443). For Scheme 1, Bayes estimator of \(R_{1,4}\) with MCMC method is 0.4469 and \(95\%\) credible interval is (0.2162,0.6881). Moreover, Bayes estimator of \(R_{2,4}\) with MCMC method is 0.3466 and \(95\%\) credible interval is (0.1459,0.5971). For Scheme 2, Bayes estimator of \(R_{1,3}\) with MCMC method is 0.3605 and \(95\%\) credible interval is (0.1420,0.6140). As we see, by the above scenario, the estimation of non-occurrence probability of drought, in most cases, is less than 0.6. So, in this example, we can conclude that the rainfall is not very good and dehydration may occur. Therefore, in order to avoid possible damage, planning must be done in this regard.

Comparing the two schemes with informative and non-informative priors, it is obvious that in Scheme 1, estimators have smaller standard errors than Scheme 2, as expected. Also, comparing Bayes estimators, it is observed that they depend on hyperparameters. Because the HPD credible intervals based on informative priors are slightly smaller than the corresponding length of the HPD credible intervals based on non-informative priors, therefore, if prior information is available it should be used. Moreover, comparing the estimators in the complete data set or in Scheme 1, it is observed that, in \(s = 1\), estimators are smaller than \(s = 2\), as expected.

6 Conclusions

In this paper, the estimation of multicomponent reliability for Weibull distribution based on AT-II HPC schemes is studied. In fact, we solved the problem in three cases. Firstly, when the common shape parameter is unknown, we derived the MLEs of the unknown parameters and \(R_{s,k}\). Because the MLEs cannot be obtained in closed forms, we derived the approximate MLEs of the parameters and \(R_{s,k}\). Also, by earning the asymptotic distribution of the \(R_{s,k}\), we constructed the asymptotic confidence intervals. Moreover, two bootstrap confidence intervals are proposed which their performance are quite satisfactory. Furthermore, we approximated the Bayes estimate of \(R_{s,k}\) by applying two methods: Lindley’s approximation and MCMC method. We constructed the HPD credible intervals by using the MCMC method. Secondly, when the common shape parameter is known, the ML, exact Bayes and UMVU estimates are computed. Also, asymptotic and HPD credible intervals are constructed in this case. Finally, in general case, when two shape parameters are different and unknown, the statistical inferences about \(R_{s,k}\) such as ML, AML and Bayesian estimations are obtained and the HPD credible intervals are constructed.

From the simulation results, it is obvious that the ML and AML estimates are almost similar in most schemes. Also, comparing the Bayes estimators shows that the estimates based on informative priors perform better than the ones which obtained using the non-informative prior. Furthermore, the MCMC method approximates the Bayes estimate better than the Lindley method. Moreover, comparing the different confidence intervals indicates that HPD intervals provide the smallest credible lengths and the bootstrap intervals provide the widest lengths for different censoring schemes and different parameter values. Also, comparing two bootstrap confidence intervals shows that Boot-p intervals perform better than the Boot-t intervals.

References

Antweiler RC (2015) Evaluation of statistical treatments of left-censored environmental data using coincident uncensored data sets. II. group comparisons. Environ Sci Technol 49:13439–13446

AL Sobhi MM, Soliman AA (2016) Estimation for the exponentiated Weibull model with adaptive Type-II progressive censored schemes. Appl Math Model 40:1180–1192

Balakrishnan N, Aggarwala R (2000) Progressive censoring: theory, methods and applications. Birkhäuser, Boston

Bhattacharyya GK, Johnson RA (1974) Estimation of reliability in multicomponent stress-strength model. J Am Stat Assoc 69:966–970

Chen MH, Shao QM (1999) Monte Carlo estimation of Bayesian Credible and HPD intervals. J Comput Gr Stat 8:69–92

Efron B (1982) The jackknife, the bootstrap and other re-sampling plans. SIAM, CBMSNSF regional conference series in applied mathematics, No. 34, Philadelphia

Epstein B (1954) Truncated life tests in the exponential case. Ann Math Stat 25:555–564

Gradshteyn IS, Ryzhik IM (1994) Table of integrals, series, and products, 5th edn. Academic Press, Boston

Hall P (1988) Theoretical comparison of bootstrap confidence intervals. Ann Stat 16:927–953

Hanagal DD (1999) Estimation of system reliability. Stat Pap 40:99–106

Kizilaslan F, Nadar M (2018) Estimation of reliability in a multicomponent stress-strength model based on a bivariate Kumaraswamy distribution. Stat Pap 59:307–340

Kizilaslan F, Nadar M (2016) Estimation and prediction of the Kumaraswamy distribution based on record values and inter-record times. J Stat Comput Simul 86:2471–2493

Kohansal A (2017) On estimation of reliability in a multicomponent stress-strength model for a Kumaraswamy distribution based on progressively censored sample. Stat Pap. https://doi.org/10.1007/s00362-017-0916-6

Kundu D, Joarder A (2006) Analysis of type-II progressively hybrid censored data. Comput Stat Data Anal 50:2509–2528

Lindley DV (1980) Approximate Bayesian methods. Trab de Estad investig oper 3:281–288

Nadar M, Kizilaslan F (2016) Estimation of reliability in a multicomponent stress-strength model based on a Marshall-Olkin bivariate weibull Distribution. IEEE Trans Reliab 65:370–380

Nadar M, Papadopoulos A, Kizilaslan F (2013) Statistical analysis for Kumaraswamy’s distribution based on record data. Stat Pap 54:355–369

Nassar M, Abo-Kasem OE (2017) Estimation of the inverse Weibull parameters under adaptive type-II progressive hybrid censoring scheme. J Comput Appl Math 315:228–239

Ng HKT, Kundu D, Chan PS (2009) Statistical analysis of exponential lifetimes under an adaptive Type-II progressively censoring scheme. Nav Res Log 56:687–698

Sürücü B (2015) Testing for censored bivariate distributions with applications to environmental data. Environ Ecol Stat 22:637–649

Tate EL, Freeman SN (2000) Three modelling approaches for seasonal streamflow droughts in southern Africa: the use of censored data. Hydrol Sci J 45:27–42

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Appendix A

For three parameters case, we compute 13 at \(\widehat{\Theta }=(\widehat{\theta }_1,\widehat{\theta }_2,\widehat{\theta }_3)\), where

In our case, for \((\theta _1,\theta _2,\theta _3)\equiv (\alpha ,\theta ,\lambda )\) and \(u\equiv u(\alpha ,\theta ,\lambda )=R_{s,k}\) as provided in (3), we have

Also, by using \(\ell _{ij}, i,j = 1,2,3, \sigma _{ij}, i,j = 1,2,3\) can be obtained and

and other \(\ell _{ijk}=0\). In addition, \(u_1=\partial R_{s,k}/\partial \alpha =0\), \(u_{i1}=\partial ^2 R_{s,k}/(\partial \theta _i\partial \alpha )=0\), \(i=1,2,3\), and \(u_2\), \(u_3\) are given in (10) and (11), respectively. Also,

Hence,

Rights and permissions

About this article

Cite this article

Kohansal, A., Shoaee, S. Bayesian and classical estimation of reliability in a multicomponent stress-strength model under adaptive hybrid progressive censored data. Stat Papers 62, 309–359 (2021). https://doi.org/10.1007/s00362-019-01094-y

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-019-01094-y

Keywords

- Adaptive Type-II hybrid progressive censored

- Approximation maximum likelihood estimation

- MCMC method

- Multicomponent stress-strength reliability

- Weibull distribution