Abstract

Repeated measures ANOVA and mixed-model designs are the main classes of experimental designs used in psychology. The usual analysis relies on some parametric assumptions (typically Gaussianity). In this article, we propose methods to analyze the data when the parametric conditions do not hold. The permutation test, which is a non-parametric test, is suitable for hypothesis testing and can be applied to experimental designs. The application of permutation tests in simpler experimental designs, such as factorial ANOVA or ANOVA with only between-subject factors, has already been considered. The main purpose of this paper is to focus on more complex designs that include only within-subject factors (repeated measures) or both within-subject and between-subject factors (mixed-model designs). First, a general approximate permutation test (permutation of the residuals under the reduced model, or reduced residuals) is proposed for any repeated measures or mixed-model design, for any number of repetitions per cell, any number of subjects and factors, and for both balanced and unbalanced (all-cell-filled) designs. Next, a permutation test that uses residuals that are exchangeable up to the second moment is introduced for balanced cases in the same class of experimental designs. This permutation test is therefore exact for spherical data. Finally, we provide simulation results comparing the level and the power of the proposed methods.



1 Introduction

ANOVA is a technique used to test the equality of means from different treatment groups. Designs with only within-subject factors are usually called repeated measures ANOVA. Within-subject factors are factors for which measurements are obtained on the same subject over time or under all the conditions. These designs are extremely common in psychological and biomedical studies. Mixed-model designs, which contain both within-subject and between-subject factors, are another family of useful designs, especially in clinical research. Between-subject factors occur when each subject is measured at only one level of the factor. Mixed-model designs are also known as split-plot ANOVA (Keppel 1991), or univariate mixed-model designs with the subject and its interactions as random effects. Sometimes these designs are simply called repeated measures; for example, the IBM SPSS repeated measures menu treats split-plot designs as well (IBM Corp 2013).

These kinds of designs have some advantages over other (between-subject) ANOVA methods. For instance in clinical research, fewer subjects are required than for the between-subject ANOVA. Repeated measures designs make efficient use of subjects, both in the practical sense of using fewer subjects than between-subject designs, and in the statistical sense of having less error variance, i.e. more statistical power. The major disadvantage of this kind of design is that usually the models are more complex than for the non-repeated measures designs, since they need to take into account the associations between observations obtained from the same individuals.

For this kind of design, the parametric assumptions, such as the assumption that all the random parts are normally distributed, are quite likely to be violated. In that case, the results of methods based on parametric assumptions may not be reliable.

Permutation tests or randomization tests date back to Fisher (1935). These tests belong to the family of distribution-free techniques and are suitable for hypothesis testing. The only requirement for permutation tests is the exchangeability of the observations under the null hypothesis. For a set of continuous random variables \((z_{1},z_{2},\ldots ,z_{n})\), exchangeability holds if the joint density \(f\) satisfies \(f(z_{1},z_{2},\ldots ,z_{n})=f\left( z_{\Pi (1)},z_{\Pi (2)},\ldots ,z_{\Pi (n)}\right) \) for any permutation \(\Pi \) of the indices (Good 1994). Permutation tests can be categorized as either exact or approximate. A test is exact if, under the null hypothesis, its type I error rate equals the nominal level of the test (Good 1994; Anderson and Ter Braak 2003). Exchangeability of the permuted units is the requirement for an exact permutation test. Note that the permuted units can be the original observations, some form of residuals, or a restricted set thereof (Basso et al. 2007; Basso and Salmaso 2006).

For a review of the alternative permutation methods in ANOVA designs, see Anderson and Ter Braak (2003). For a complete account of the permutation of residuals, we refer to Kherad-Pajouh and Renaud (2010). In the following, we only mention the literature that deals with permutation tests for repeated measures ANOVA. Since in repeated measures ANOVA (or mixed-model designs) the observations within a given subject are correlated, the application of permutation tests is more involved. White and Harris (1978) proposed an approximate permutation test for experimental designs, together with a computer program that can run up to a four-way analysis of variance with any combination of between- and within-subject factors. Still and White (1981) also developed an alternative \(F\) test for ANOVA, using various forms of approximate permutation tests. Good (1994) introduced a form of restricted permutations for ANOVA with only between-subject factors, specifically to test the main effects. Later, Anderson and Ter Braak (2003) applied permutation techniques (permutation of the observations and permutation of the residuals) to fixed-effect ANOVA and nested designs. Finally, Mazzaro et al. (2001), Pesarin and Salmaso (2010) and Basso (2009) introduced some techniques for repeated measures designs and unbalanced repeated measures designs. However, both the model they considered and what they called “repeated measures” differ from what we present here: they considered only repetition over time. Pesarin (2001) also considered repetition over time in the MANOVA layout for response profiles, where the analysis can be extended to more complex designs and where repetitions are not necessarily time points. In their proposed techniques, repeated measurements, panel data and longitudinal data are synonymous.

The structure of the paper is as follows. In Sect. 2, a general model and its matrix formulation are given for balanced and unbalanced repeated measures and mixed-model designs. Section 3 presents the parametric approach for balanced repeated measures and mixed-model designs, and the corresponding F-statistics. In Sect. 4, we introduce an approximate permutation test for balanced repeated measures and mixed-model designs, based on permutation of the reduced residuals. We also introduce a technique for computing the residuals that is applicable to general repeated measures designs with any number of factors and subjects. The tests are carried out separately, much like traditional ANOVA \(F\)-tests. In Sect. 5, we present an extension of the permutation of the reduced residuals to unbalanced designs with unequal numbers of repetitions in each cell. A permutation test for balanced repeated measures and mixed-model designs is introduced in Sect. 6. This method is based on permutation of the residuals under the modified model, which removes the correlation between the reduced residuals. In Sect. 7, we show an application of the introduced methods to repeated measures designs with one and two within-subject factors. In Sect. 8, the application of the introduced method to mixed-model designs (one between-subject factor and one within-subject factor) is considered, and Sect. 9 is dedicated to the simulations performed to compare the level and the power of the proposed and existing methods.

2 General model for mixed-model designs and repeated measures ANOVA

Mixed-model designs, as their name suggests, combine between-subject and within-subject factors. Consider \(A\) as (one of) the between-subject factor(s), \(B\) as (one of) the within-subject factor(s), and \(S\) as the random factor corresponding to the subjects. The structural model for a mixed-model design can be written as

$$\begin{aligned} y_{ijkl}=\mu +\alpha _{l}+\eta _{i}+\pi _{j/l}+\alpha \eta _{li}+\pi \eta _{ji/l}+\epsilon _{ijkl}. \end{aligned}$$ (1)

In this model, \(\alpha _{l}\) are the effects of the between-subject factor, \(\eta _{i}\) are the effects of the within-subject factor and \(\pi _{j/l}\) are the effects of the subjects. The interaction terms are denoted by concatenating the symbols (\(\alpha \eta _{li}\) and \(\pi \eta _{ji/l}\)). \(N=\Sigma _{i}\Sigma _{j}\cdots \Sigma _{k}n_{ij\cdots k}\) is the total number of observations; in the balanced case, \(N=abs\cdots n\). Note that we include the interactions between the subjects and the within-subject factor(s). This is common and highly desirable in psychology, see e.g. Rouanet and Lépine (1970), and it is the model underlying the SPSS repeated measures procedure. For various reasons, these terms (interactions between subjects and within-subject factors) are often omitted in the mixed-effect/hierarchical/multilevel model literature as well as in the longitudinal analysis literature. As \(A\) is a between-subject factor, there is no interaction term such as \(\alpha \pi \) in the model. In our model, \(\alpha \) and \(\eta \) are fixed factors. Without loss of generality, we assume that they satisfy the sigma-restriction constraints, i.e. \(\sum ^{a}_{l=1}\alpha _{l}=0\) and \(\sum ^{b}_{i=1}\eta _{i}=0\). Concerning the random parts of the model, we assume that \(\pi \sim (0,\sigma ^{2}_{\pi })\) and \(\eta \pi \sim (0,\sigma ^{2}_{\eta \pi })\). Following the pigeon-hole model that characterizes the distinction between fixed and random effects (Cornfield and Tukey 1956), the interaction terms \((\eta \pi )\) of the subject (random effect, \(\pi \)) and the within-subject factor (fixed effect, \(\eta \)) satisfy the constraint \(\sum ^{b}_{i=1}\pi \eta _{ji/l}=0\) for \(j=1,\cdots ,s\). This introduces, for each subject, a dependence between the interaction terms at the different levels of the within-subject factor; for more details we refer to Sahai and Ageel (2000).
Note that the major dependence or correlation that will be brought out in the next sections is not due to this term but to the subject effects. The extension of our notation to more than one within-subject factor and/or more than one between-subject factor is straightforward. Finally, repeated measures designs are special cases of model (1) in which there are only within-subject factors; the conditions mentioned above hold in these designs as well. It is not difficult to see that model (1) can be written in matrix form as

$$\begin{aligned} y=X\beta +Z\gamma +\epsilon , \end{aligned}$$

where \(y\) contains all observations for all subjects, \(X_{N\times p}\) is the design matrix for the fixed part of the model and \(Z_{N\times q}\) is the design matrix for the random part of the model. \(\beta _{p\times 1}\) is the unknown vector of fixed-effect parameters, \(\gamma _{q\times 1}\) contains the random effects of the model and \(\epsilon _{N \times 1}\) is the random vector of error terms. The \(X\) and \(Z\) matrices and the corresponding parameters are based on the sigma-restricted (SR) parametrization, see e.g. Cardinal and Aitken (2006).

Often in practice, the normality conditions on the random parts and on the error term are not satisfied, and the traditional approaches are not suitable. In matrix form, we only suppose that the vector of random effects \(\gamma \) is distributed as \(\gamma \sim (0,G)\), where \(G\) is the covariance matrix of the random effects. The random error term \(\epsilon \) is distributed as \(\epsilon \sim (0,\sigma ^{2}_{\epsilon } \mathrm I _{N})\) and is uncorrelated with \(\gamma \). Therefore we can write \(y\sim (X\beta ,\Sigma )\), where \(\Sigma =ZGZ'+\sigma ^{2}_{\epsilon } I_{N}\).

Suppose we want to test one part of the parameter, say \(\beta _{2}\), where the vector of fixed parameters \(\beta \) is divided into two parts: \(\beta '=\big (\beta '_1,\beta '_2\big )\). The corresponding hypotheses are

$$\begin{aligned} H_{0}:\beta _{2}=0 \quad \text{versus}\quad H_{1}:\beta _{2}\neq 0. \end{aligned}$$ (2)

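The repeated measures or mixed-model design can then be written in partitioned matrix form as

$$\begin{aligned} y=X_{1}\beta _{1}+X_{2}\beta _{2}+Z_{1}\gamma _{1}+Z_{2}\gamma _{2}+\epsilon . \end{aligned}$$ (3)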

In this model, \((X_1)_{N \times p_{1}}\) is the part of the design matrix that corresponds to \(\beta _1\) and \((X_2)_{N \times p_{2}}\) the part that corresponds to \(\beta _2\). The matrix \(Z_2\) and its corresponding vector of parameters \(\gamma _2\) are the random-effect counterparts of \(X_2\) and \(\beta _{2}\). More precisely, for a within-subject effect they contain the interaction between the subject effect and the effect(s) to be tested, and for a between-subject effect they contain only the subject effect. The matrix \(Z_1\) and its corresponding vector of parameters \(\gamma _1\) contain all the other random effects.

3 Parametric approach for balanced designs

In the case of a balanced design, a genuine test for \(\beta _2\) is defined as

The \(\mathrm F \)-statistic for testing \(\beta _{2}\) (between-subject or within-subject factor) is based on two sums of squares: the one attributed to the effect of \(\beta _{2}\) and another one, taken from the random part, that plays the role of the denominator; see e.g. Myers and Well (2003). So \((Z_{1})_{N\times q_{1}}\) can be viewed as the part of the random design matrix that does not enter the denominator of the \(\mathrm F \)-statistic for testing \(\beta _{2}\), and \((Z_{2})_{N \times q_{2}}\) as the part that does.

This test is not the likelihood ratio test, but it can be shown to be exact under Gaussian and sphericity assumptions. It is the test used by the SPSS repeated measures menu and by the Error() term in the S-Plus/R function aov. Due to the orthogonality implied by balanced designs, the \(\mathrm F \)-statistic can also be written in terms of residual sums of squares (\(\mathsf {RSS}\)) as

where the estimates in parenthesis indicate which model is fitted to obtain the corresponding \(\mathsf {RSS}\).

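Using the same computation as for the numerator in Theorem 2 of Kherad-Pajouh and Renaud (2010), we can rewrite the numerator and the denominator of (5) in matrix form as

$$\begin{aligned} \mathrm F _{ss}=\frac{y'H_{[X_{2}]}y/p_{2}}{y'H_{[Z_{2}]}y/q_{2}}, \end{aligned}$$ (6)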

where \(H_{[X_{2}]}=X_{2}(X'_{2}X_{2})^{-1}X'_{2}\) is the projection matrix onto the column space of \(X_{2}\), the design matrix of the fixed part \(\beta _2\) tested in Eq. (3). Similarly, the projection matrix of the random part in the denominator of the \(\mathrm F \)-statistic is \(H_{[Z_{2}]}=Z_{2}(Z'_{2}Z_{2})^{-1}Z'_{2}\). Recall that \(X_{2}\) and \(Z_{2}\) are based on the sigma-restricted (SR) parametrization and have full column rank, so the inverses are always defined.
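As a minimal numerical sketch of this matrix form, assume the simplest balanced design of Sect. 7 (one within-subject factor \(B\) with \(b\) levels, \(s\) subjects, \(n\) repetitions per cell), observations ordered by subject, then level, then repetition, and orthonormal Helmert-type contrasts as the sigma-restricted coding. The helper names `helmert`, `proj`, `one_within_design` and `f_ss` are illustrative only. The check compares the projection form of the statistic with the classical ratio \(\mathsf {MS}_B/\mathsf {MS}_{BS}\) computed from cell means.

```python
import numpy as np


def helmert(k):
    """Orthonormal Helmert-type contrasts: a k x (k-1) matrix whose columns
    are orthonormal and sum to zero (a sigma-restricted coding)."""
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    return C


def proj(X):
    """Orthogonal projection onto the column space of X; equals
    X (X'X)^{-1} X' when X has full column rank."""
    return X @ np.linalg.pinv(X)


def ones_col(k):
    return np.ones((k, 1))


def one_within_design(s, b, n):
    """X2 (effect of B) and Z2 (B x S interaction); rows ordered by
    subject, then level of B, then repetition."""
    X2 = np.kron(np.kron(ones_col(s), helmert(b)), ones_col(n))
    Z2 = np.kron(np.kron(helmert(s), helmert(b)), ones_col(n))
    return X2, Z2


def f_ss(y, X2, Z2):
    """(y'H[X2]y / p2) / (y'H[Z2]y / q2)."""
    p2, q2 = np.linalg.matrix_rank(X2), np.linalg.matrix_rank(Z2)
    return (y @ proj(X2) @ y / p2) / (y @ proj(Z2) @ y / q2)


s, b, n = 6, 3, 2
X2, Z2 = one_within_design(s, b, n)
rng = np.random.default_rng(0)
y = rng.standard_normal(s * b * n)
F_matrix = f_ss(y, X2, Z2)

# Classical mean-square form MS_B / MS_BS computed from the cell means.
cell = y.reshape(s, b, n).mean(axis=2)    # s x b cell means
grand = cell.mean()
lev = cell.mean(axis=0)                   # means of the b levels of B
subj = cell.mean(axis=1)                  # subject means
ms_b = s * n * np.sum((lev - grand) ** 2) / (b - 1)
resid = cell - subj[:, None] - lev[None, :] + grand
ms_bs = n * np.sum(resid ** 2) / ((b - 1) * (s - 1))
print(F_matrix, ms_b / ms_bs)
```

Because the contrast columns are orthogonal, `X2` and `Z2` span orthogonal subspaces, which is what makes the two quadratic forms behave like the separate sums of squares of classical ANOVA.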

4 Permutation of the reduced residuals for balanced designs

We now propose an alternative testing procedure, extending the idea of permutation of the residuals under the reduced model, or reduced residuals, introduced by Still and White (1981) and Freedman and Lane (1983). For some designs, such as ANOVA with a single error term and nested ANOVA designs, Anderson and Ter Braak (2003) showed that this method is relatively more powerful than other permutation methods.

In Eq. (3), the random parts \(\gamma _1\) and \(\gamma _2\) and the error term \(\epsilon \) have zero expectation, so under the null hypothesis (2) the only part with non-zero expectation is the fixed part that is not tested, \(X_1\beta _1\). Hence the \(y_{ijkl}\)’s do not share the same expectation under the null hypothesis, and the observations are far from exchangeable.

The idea behind the reduced residuals is to obtain residuals that share the same expectation under the null hypothesis. This is achieved by removing the effect of the fixed parameters not tested. For mixed-model and repeated measures designs, the model also includes random effects. Since these have mean zero, we can choose whether to keep or to remove them in the reduced residuals. The question is then: what are the necessary and sufficient conditions to define the reduced residuals? Here is a more precise definition of the reduced residuals.

Definition 1

Consider the hypothesis on \(\beta _{2}\) as in (2). The reduced residuals are defined as \(y_{rr}=H_{p}y\), where \(H_p\) is a projection matrix which satisfies the following conditions:

(a) all elements in \(y_{rr}\) have zero expectation under the null hypothesis;

(b) the F-statistic of the reduced residuals, defined as

$$\begin{aligned} \mathrm F _{rr}=\frac{y'_{rr}H_{[X_{2}]}y_{rr}/p_{2}}{y'_{rr}H_{[Z_{2}]}y_{rr}/q_{2}}, \end{aligned}$$ (7)

is equal to \(\mathrm F _{ss}\) for the original observations, defined in (4).

The following lemma specifies a set of sufficient conditions on the projection matrix in order to have reduced residuals for repeated measures and mixed-model designs.

Lemma 1

Any projection matrix \(H_{p}\) satisfying (a) \(H_pX_1=0\), (b) \(H_pX_2=X_2\), and (c) \(H_pZ_2=Z_2\) generates a suitable reduced residual vector \(y_{rr}=H_p y\).

Due to space constraints, all the proofs are provided as supplementary material. In the following we present two specific examples of reduced residuals.

Example 1

Consider the projection matrix that removes only the fixed part that is not tested: \(H_p=(I-H_{[X_{1}]})\), where \(H_{[X_{1}]}=X_1(X_1'X_1)^{-1}X'_{1}\). It is easy to see that \(H_p X_1=0\). Moreover, in the balanced model \(X_1\), \(X_2\), \(Z_1\) and \(Z_2\) have mutually orthogonal columns and thus span mutually orthogonal subspaces, so \(X_2\), \(Z_1\) and \(Z_2\) remain unchanged when pre-multiplied by \(H_p\). Hence,

$$\begin{aligned} y^{(1)}_{rr}=H_{p}y=X_{2}\beta _{2}+Z_{1}\gamma _{1}+Z_{2}\gamma _{2}+\epsilon ^{(1)}_{rr}, \end{aligned}$$

where \(\epsilon ^{(1)}_{rr}=H_{p}\epsilon \).

Example 2

A more conservative choice removes all the parts except the ones in the numerator and denominator of the \(\mathrm F \)-ratio. We call it \(H_{nd}\), defined as \(H_{nd}=H_{[X_{2}]}+H_{[Z_{2}]}\). Again, the mutual orthogonality of the columns of \(X_1\), \(X_2\), \(Z_1\) and \(Z_2\) implies that pre-multiplying by \(H_{nd}\) removes every part except \(X_2\) and \(Z_2\). Therefore, for this example we get

$$\begin{aligned} y^{(2)}_{rr}=H_{nd}y=X_{2}\beta _{2}+Z_{2}\gamma _{2}+\epsilon ^{(2)}_{rr}, \end{aligned}$$ (8)

where \(\epsilon ^{(2)}_{rr}=H_{nd}\epsilon \).

In the following, we use \(y_{rr}\) to denote the reduced residuals in the general sense. Different projection matrices \(H_p\) may yield different residual values and different covariance matrices \(\Sigma _{rr}\); however, they all have zero expectation under the null hypothesis. Additionally, as required by Definition 1, the value of \(\mathrm F _{rr}\) is the same regardless of which projection matrix is used. Under the null hypothesis the distribution of \(y_{rr}\) is

$$\begin{aligned} y_{rr}\sim (0,\Sigma _{rr}), \end{aligned}$$ (9)

where \(\Sigma _{rr}=H_{p}\Sigma H_{p}\) and \(\Sigma \) is the covariance matrix of \(y\). Note that although the elements of \(y_{rr}\) have the same expectation, their (variance-)covariance matrix need not be compound-symmetric, so they are not necessarily exchangeable.
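The two examples can be checked numerically with a small sketch, assuming the balanced one-within-factor design of Sect. 7 with Helmert-type contrasts and hypothetical null data (a large intercept plus random subject effects). It verifies the sufficient conditions of Lemma 1 for both \(H_p=I-H_{[X_1]}\) and \(H_{nd}\), and shows that both reduced residual vectors reproduce the original F-ratio, while only \(H_{nd}\) also removes the subject part \(Z_1\). The helper names are illustrative.

```python
import numpy as np


def helmert(k):
    # Orthonormal Helmert-type contrasts (k x (k-1)), columns sum to zero.
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    return C


def proj(X):
    # Orthogonal projection onto the column space of X.
    return X @ np.linalg.pinv(X)


def ones_col(k):
    return np.ones((k, 1))


def f_stat(v, X2, Z2):
    # The F-ratio of Eq. (7) applied to an arbitrary vector v.
    p2, q2 = np.linalg.matrix_rank(X2), np.linalg.matrix_rank(Z2)
    return (v @ proj(X2) @ v / p2) / (v @ proj(Z2) @ v / q2)


s, b, n = 5, 4, 2
N = s * b * n
X1 = ones_col(N)                                              # intercept (not tested)
X2 = np.kron(np.kron(ones_col(s), helmert(b)), ones_col(n))   # effect of B (tested)
Z1 = np.kron(np.kron(helmert(s), ones_col(b)), ones_col(n))   # subject effect
Z2 = np.kron(np.kron(helmert(s), helmert(b)), ones_col(n))    # B x S interaction

# Hypothetical null data: rows are ordered by subject, so each subject
# effect is repeated b * n times.
rng = np.random.default_rng(1)
y = 10.0 + np.repeat(rng.normal(0.0, 2.0, s), b * n) + rng.normal(size=N)

H_p = np.eye(N) - proj(X1)          # Example 1
H_nd = proj(X2) + proj(Z2)          # Example 2
y_rr1, y_rr2 = H_p @ y, H_nd @ y

for H in (H_p, H_nd):
    # Sufficient conditions of Lemma 1: H X1 = 0, H X2 = X2, H Z2 = Z2.
    assert np.allclose(H @ X1, 0.0)
    assert np.allclose(H @ X2, X2) and np.allclose(H @ Z2, Z2)

print(f_stat(y, X2, Z2), f_stat(y_rr1, X2, Z2), f_stat(y_rr2, X2, Z2))
# Subject part: still present in y_rr1, removed (numerically zero) in y_rr2.
print(np.abs(proj(Z1) @ y_rr1).max(), np.abs(proj(Z1) @ y_rr2).max())
```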

After selecting the suitable reduced residuals, the next step is to perform a permutation test on these residuals in order to make inference about the parameter \(\beta _2\). The following algorithm shows the required steps.

Algorithm 1

- Choose a suitable statistic based on the reduced residuals \(y_{rr}\). We propose to use the statistic \(\mathrm F _{rr}\) introduced in (7).

- Freely permute the reduced residuals to obtain “new” residuals \(y^{*}_{rr}\).

- Calculate the permuted statistic for the “new” residuals \(y^{*}_{rr}\) as

$$\begin{aligned} \mathrm F ^{*}_{rr}=\frac{y^{*\prime }_{rr}H_{[X_{2}]}y^{*}_{rr}/p_{2}}{y^{*\prime }_{rr}H_{[Z_{2}]}y^{*}_{rr}/q_{2}}. \end{aligned}$$

- Repeat the two previous steps a large number of times (say \(M\) times). Define the \(p\)-value for the test on \(\beta _2\) as

$$\begin{aligned} {\mathrm {p-value}}=\frac{(\# \mathrm F ^{*}_{rr} \ge \mathrm F _{rr})}{M}, \end{aligned}$$ (10)

which is simply the proportion of \(\mathrm F ^{*}_{rr}\) larger than or equal to the original value of \(\mathrm F _{rr}\).
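The steps of Algorithm 1 can be sketched as follows, assuming the Example 1 reduced residuals \((I-H_{[X_1]})y\), the balanced one-within-factor design of Sect. 7, and hypothetical null data with skewed (centred exponential) errors. The function name `perm_test_reduced` and its arguments are illustrative, not an existing API.

```python
import numpy as np


def helmert(k):
    # Orthonormal Helmert-type contrasts (k x (k-1)), columns sum to zero.
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    return C


def proj(X):
    # Orthogonal projection onto the column space of X.
    return X @ np.linalg.pinv(X)


def ones_col(k):
    return np.ones((k, 1))


def perm_test_reduced(y, X1, X2, Z2, M=999, seed=None):
    """Algorithm 1 with the reduced residuals y_rr = (I - H[X1]) y."""
    rng = np.random.default_rng(seed)
    HX2, HZ2 = proj(X2), proj(Z2)
    p2, q2 = np.linalg.matrix_rank(X2), np.linalg.matrix_rank(Z2)

    def f_rr(v):
        # Statistic (7): each quadratic form divided by its degrees of freedom.
        return (v @ HX2 @ v / p2) / (v @ HZ2 @ v / q2)

    y_rr = y - proj(X1) @ y
    F_obs = f_rr(y_rr)
    # Free permutation of all N reduced residuals (not restricted to subjects).
    F_perm = np.array([f_rr(rng.permutation(y_rr)) for _ in range(M)])
    p_value = np.mean(F_perm >= F_obs)   # Eq. (10): share of F* >= F_rr
    return F_obs, p_value


# Hypothetical example: 10 subjects, 3 levels of B, one observation per cell.
s, b, n = 10, 3, 1
N = s * b * n
X1 = ones_col(N)
X2 = np.kron(np.kron(ones_col(s), helmert(b)), ones_col(n))
Z2 = np.kron(np.kron(helmert(s), helmert(b)), ones_col(n))
rng = np.random.default_rng(3)
y = 5.0 + np.repeat(rng.normal(0.0, 1.0, s), b * n) + rng.exponential(1.0, N) - 1.0
F_obs, p_value = perm_test_reduced(y, X1, X2, Z2, M=999, seed=4)
print(F_obs, p_value)
```

The sketch uses the p-value of Eq. (10) literally; a common variant counts the observed statistic as one of the permutations, giving \((1+\#\mathrm F ^{*}_{rr}\ge \mathrm F _{rr})/(M+1)\), which this code does not do.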

Note that this method does not follow the traditional approach in permutation tests: (the residuals of) the observations are permuted freely, instead of being permuted within the subjects (or blocks). A more traditional approach would be to split the design into strata (corresponding in traditional ANOVA to one between-subject and, depending on the number of within-subject factors, several within-subject sums of squares; Kirk 1994). We believe that it is worth going beyond the block approach for the following reasons. First, if desired, one could additionally restrict the above permutations to occur only within the subjects, leading to a restricted permutation method. Second, this splitting corresponds geometrically to applying several projections that are very similar to the above projection, so applying the additional restriction leads to a method that essentially recovers the splitting. Third, in the mixed-model literature this observation-level approach is well accepted, and several bootstrap or permutation methods are carried out this way (Field and Welsh 2007). Finally, and most importantly, the splitting approach relies heavily on the orthogonality of the subspaces, i.e. on the requirement that the design is fully balanced. With even a single missing value, the factors no longer belong to a single stratum. The splitting approach is therefore not suited to unbalanced data, which is our aim in the next section.

5 Permutation of the reduced residuals for unbalanced design

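When the design is unbalanced, the matrices \(X_1\), \(X_2\), \(Z_1\) and \(Z_2\) are no longer orthogonal, so the matrix form is slightly more complex. For example, if we use \(y^{(1)}_{rr}\) as the reduced residuals, then

$$\begin{aligned} y^{(1)}_{rr}=(I-H_{[X_{1}]})y=X_{2rr}\beta _{2}+Z_{1rr}\gamma _{1}+Z_{2rr}\gamma _{2}+\epsilon ^{(1)}_{rr}, \end{aligned}$$ (11)

where \(X_{2rr}=(I-H_{[X_{1}]})X_{2}\), \(Z_{1rr}=(I-H_{[X_{1}]})Z_1\), \(Z_{2rr}=(I-H_{[X_{1}]})Z_2\) and \(\epsilon ^{(1)}_{rr}=(I-H_{[X_{1}]})\epsilon \).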

In the case of unbalanced designs with random effects, there is no general exact F-statistic. When there are missing observations, many statistical packages, such as SPSS, automatically delete all the observations of any subject with at least one missing value. However, this may remove an important proportion of subjects and lead to a loss of power. Alternatively, some \(\mathrm F \)-statistics have been proposed for unbalanced designs with more than one random effect, e.g. a quasi-F statistic (Myers and Well 2003). Another technique is to use so-called weighted or unweighted means for unbalanced designs with random effects and only between-subject factors. For example, Hirotsu (1979) proposed an approximate \(F\)-test for the main effects and the interactions in unbalanced ANOVA with random effects, using the test statistics of the balanced case but with mean squares obtained by the unweighted means approach (Sahai and Ageel 2000). However, there is no consensus on the choice of the statistic for unbalanced mixed-model designs. We propose to use a statistic that is very close to the balanced case (compare with Eqs. (5) and (6)).

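where \(H_{[X_{2rr}]}=X_{2rr}(X'_{2rr}X_{2rr})^{-1}X'_{2rr}\) and \(H_{[Z_{2rr}]}=Z_{2rr}(Z'_{2rr}Z_{2rr})^{-1}Z'_{2rr}\). As in the balanced case, we define the reduced residuals and their corresponding statistic as

$$\begin{aligned} y_{rr}=(I-H_{[X_{1}]})y,\qquad \mathrm F _{rr}=\frac{y'_{rr}H_{[X_{2rr}]}y_{rr}/p_{2}}{y'_{rr}H_{[Z_{2rr}]}y_{rr}/q_{2}}. \end{aligned}$$ (13)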

Then, by freely permuting the elements of \(y_{rr}\) and substituting them into Eq. (13), as in Algorithm 1, we obtain the permuted statistics and the corresponding \(p\)-value for testing the parameter \(\beta _{2}\). Note that in the unbalanced case it still holds that, under the null hypothesis, \(y_{rr}\sim (0,\Sigma _{rr})\).
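A minimal sketch of the unbalanced procedure, assuming \(y^{(1)}_{rr}\) as the reduced residuals and the statistic built from \(H_{[X_{2rr}]}\) and \(H_{[Z_{2rr}]}\) as described in this section. The design, the data and the missingness pattern are hypothetical: starting from a balanced one-within-factor design with two repetitions per cell, the second repetition of three cells is dropped, so every subject-by-level cell remains filled. Degrees of freedom are taken as matrix ranks.

```python
import numpy as np


def helmert(k):
    # Orthonormal Helmert-type contrasts (k x (k-1)), columns sum to zero.
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    return C


def proj(X):
    # Orthogonal projection onto the column space of X.
    return X @ np.linalg.pinv(X)


def ones_col(k):
    return np.ones((k, 1))


s, b, n = 6, 3, 2
N = s * b * n
X2_full = np.kron(np.kron(ones_col(s), helmert(b)), ones_col(n))
Z2_full = np.kron(np.kron(helmert(s), helmert(b)), ones_col(n))
rng = np.random.default_rng(5)
y_full = 5.0 + np.repeat(rng.normal(0.0, 1.5, s), b * n) + rng.exponential(1.0, N) - 1.0

# Row index is (j * b + i) * n + k; drop k = 1 in three cells (all cells stay filled).
drop = [1, 7, 15]
keep = np.setdiff1d(np.arange(N), drop)
y, X2, Z2 = y_full[keep], X2_full[keep], Z2_full[keep]
X1 = ones_col(len(y))

R = np.eye(len(y)) - proj(X1)            # I - H[X1]
y_rr, X2rr, Z2rr = R @ y, R @ X2, R @ Z2
HX, HZ = proj(X2rr), proj(Z2rr)
p2, q2 = np.linalg.matrix_rank(X2rr), np.linalg.matrix_rank(Z2rr)


def f_rr(v):
    # Reduced-residual statistic with the unbalanced projections.
    return (v @ HX @ v / p2) / (v @ HZ @ v / q2)


F_obs = f_rr(y_rr)
M = 1999
F_perm = np.array([f_rr(rng.permutation(y_rr)) for _ in range(M)])
p_value = np.mean(F_perm >= F_obs)
print(len(y), p2, q2, F_obs, p_value)
```

Unlike the balanced case, `HX` and `HZ` are no longer complementary parts of a single orthogonal decomposition, which is why the projections are recomputed from the centred matrices `X2rr` and `Z2rr`.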

Theorem 1

For the original observations, the statistics defined in (13) and (12) are equivalent, i.e. \(\mathrm F _{rr}=\mathrm F _{ss}\).

The proof of the theorem is in the supplementary material. Note that this theorem does not state that the null distributions of these statistics are equal. Other forms of residuals may also be defined. For instance, \(y_{rr}=(I-H_{[X_{1},Z_{1},\epsilon ]})y\) with the corresponding \(X_{rr}=(I-H_{[X_{1},Z_{1},\epsilon ]})X_2\), or \(y_{rr}=(I-H_{[X_{1},Z_{1}]})y\) with the corresponding \(X_{rr}=(I-H_{[X_{1},Z_{1}]})X_2\), would still satisfy the above theorem.

6 Permutation of the modified residuals for balanced designs

This method was introduced by Jung et al. (2006) for balanced ANOVA, and then by Kherad-Pajouh and Renaud (2010) for general balanced and unbalanced ANOVA with a single error term and for regression settings. Here we extend this method to mixed-model and repeated measures ANOVA with more than one error term, in the balanced case.

The aim of introducing the residuals under the modified model, or modified residuals for short, is to remove the correlation between the reduced residuals, in order to obtain residuals that are exchangeable up to the second moment. It follows that if the original data have a spherical pdf, the test based on the modified residuals is exact; see Theorems 3 and 4 in Kherad-Pajouh and Renaud (2010). In other words, we want to transform the reduced residuals so that their covariance matrix \(\Sigma _{rr}\) becomes an identity matrix.

Consider the reduced residuals \(y^{(2)}_{rr}=H_{nd}y\) based on model (8). The covariance matrix of \(y_{rr}\) is \(\Sigma _{rr}=H_{nd}\Sigma H_{nd}\). Since \(H_{nd}\) is a projection matrix, \(\Sigma _{rr}\) is singular and its eigen-decomposition can be written as \( \Sigma _{rr}=U^0\Lambda _{0}{U^{0}}'+U\Lambda _{rr}U', \) where \(U^0\) contains orthonormal eigen-vectors corresponding to the zero eigen-value and \(\Lambda _{0}\) is the corresponding diagonal matrix of zero eigen-values, i.e. a zero matrix. Thus

$$\begin{aligned} \Sigma _{rr}=U\Lambda _{rr}U', \end{aligned}$$ (14)

where \(U\) contains orthonormal eigen-vectors corresponding to the non-zero eigen-values and \(\Lambda _{rr}\) is the diagonal matrix of the non-zero eigen-values. Pre-multiplying both sides of Eq. (14) by \(U'\), post-multiplying by \(U\) and using the orthonormality of the columns of \(U\), we get

$$\begin{aligned} U'\Sigma _{rr}U=\Lambda _{rr}. \end{aligned}$$ (15)

Let us construct the matrix \(V=U\Lambda _{rr}^{-\frac{1}{2}}\).

The matrix \(V\) has the same dimension \(N\times (p_{2}+q_{2})\) as the orthonormal matrix \(U\), whose number of columns equals the total geometric multiplicity of the non-zero eigen-values of \(\Sigma _{rr}\) (recall that \(p_2\) and \(q_2\) are the numbers of columns of \(X_2\) and \(Z_2\)). In the next two sections, for the special cases of repeated measures designs (with one or several within-subject factors) and of a mixed-model design (with one within-subject factor and one between-subject factor), we show that \(\Sigma _{rr}=\lambda H_{nd}\), where \(\lambda \) is the only non-zero eigen-value of \(\Sigma _{rr}\). We also compute the exact value of \(\lambda \) and show that its multiplicity equals \((p_2+q_2)\). The results can be extended to more general designs as well.

When the reduced residuals are based on \(H_{nd}\), the non-zero eigen-values, i.e. the diagonal elements of \(\Lambda _{rr}\), are thus all equal to a single value, say \(\lambda \). We propose to use \(y^{(2)}_{rr}\) because of this property; for other forms of \(y_{rr}\), the eigen-values may differ. We can thus write \(\Lambda _{rr}=\lambda I_{(p_{2}+q_{2})}\). In this case, the \(V\) matrix satisfies the following:

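The value of \(\lambda \) depends on the design and on several parameters; see the next two sections. To obtain the modified residuals, we pre-multiply both sides of Eq. (8) by \(V'\):

$$\begin{aligned} V'y_{rr}=V'X_{2}\beta _{2}+V'Z_{2}\gamma _{2}+V'\epsilon _{rr},\quad \text{i.e.}\quad y_{mr}=X_{mr}\beta _{2}+Z_{mr}\gamma _{2}+\epsilon _{mr}, \end{aligned}$$

where \(y_{mr}=V'y_{rr}\) are the residuals under the modified model, or modified residuals, with dimension \((p_{2}+q_{2})\), \(X_{mr}=V'X_{2}\), \(Z_{mr}=V'Z_{2}\) and \(\epsilon _{mr}=V'\epsilon _{rr}\). Using the definition of \(V\) and Eq. (15), the covariance matrix of \(y_{mr}\) is

$$\begin{aligned} {\mathrm {cov}}(y_{mr})=V'\Sigma _{rr}V=\Lambda _{rr}^{-\frac{1}{2}}U'\Sigma _{rr}U\Lambda _{rr}^{-\frac{1}{2}}=\Lambda _{rr}^{-\frac{1}{2}}\Lambda _{rr}\Lambda _{rr}^{-\frac{1}{2}}=I_{(p_{2}+q_{2})}, \end{aligned}$$

which implies that under the null hypothesis the distribution of \(y_{mr}\) is \( y_{mr}\sim (0, I_{(p_{2}+q_{2})}). \)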

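Based on the modified residuals, the \(\mathrm F \)-statistic for testing \(\beta _2\) with the original \(y_{mr}\) can be defined as

$$\begin{aligned} \mathrm F _{mr}=\frac{y'_{mr}H_{[X_{mr}]}y_{mr}/p_{2}}{y'_{mr}H_{[Z_{mr}]}y_{mr}/q_{2}}, \end{aligned}$$ (18)

where \(H_{[X_{mr}]}\) and \(H_{[Z_{mr}]}\) are the projection matrices onto the column spaces of \(X_{mr}\) and \(Z_{mr}\). Again, by freely permuting \(y_{mr}\), we get \(y^{*}_{mr}\) and compute its associated statistic

$$\begin{aligned} \mathrm F ^{*}_{mr}=\frac{y^{*\prime }_{mr}H_{[X_{mr}]}y^{*}_{mr}/p_{2}}{y^{*\prime }_{mr}H_{[Z_{mr}]}y^{*}_{mr}/q_{2}}. \end{aligned}$$ (19)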

We can use the same procedure as in Algorithm 1, with \(\mathrm F _{mr}\), to make inference about \(\beta _2\). The following theorem shows that, for the original modified residuals, the numerator and denominator of the F-statistic reproduce the original F-statistic, even though the dimensions of \(y_{mr}\) and \(X_{mr}\) are reduced.

Theorem 2

For the original observations \(y\), the statistics defined in Eqs. (4), (7) and (18) are equal.

The proof is in the supplementary material.
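A minimal numerical sketch of the modified-residual test, assuming the balanced one-within-factor design of Sect. 7 and an oracle covariance matrix with the structure of Sect. 7.1 and hypothetical variance components (in practice \(\lambda \) must be estimated, see Sect. 7.3). \(U\) is taken from `numpy.linalg.eigh` of \(\Sigma _{rr}\), keeping the eigen-vectors with non-zero eigen-values, and \(V=U\Lambda _{rr}^{-1/2}\). The sketch checks that \(V'\Sigma _{rr}V=I\), that \(\mathrm F _{mr}\) equals the original statistic (Theorem 2), and then runs the free permutation of \(y_{mr}\).

```python
import numpy as np


def helmert(k):
    # Orthonormal Helmert-type contrasts (k x (k-1)), columns sum to zero.
    C = np.zeros((k, k - 1))
    for j in range(1, k):
        C[:j, j - 1] = 1.0
        C[j, j - 1] = -float(j)
        C[:, j - 1] /= np.sqrt(j * (j + 1))
    return C


def proj(X):
    # Orthogonal projection onto the column space of X.
    return X @ np.linalg.pinv(X)


def ones_col(k):
    return np.ones((k, 1))


def quad_f(v, HX, HZ, p, q):
    # Ratio of the two quadratic forms, each divided by its degrees of freedom.
    return (v @ HX @ v / p) / (v @ HZ @ v / q)


s, b, n = 8, 3, 2
N = s * b * n
p2, q2 = b - 1, (b - 1) * (s - 1)
X2 = np.kron(np.kron(ones_col(s), helmert(b)), ones_col(n))
Z2 = np.kron(np.kron(helmert(s), helmert(b)), ones_col(n))

# Oracle covariance (hypothetical variance components).
sig_pi, sig_om, sig_eps = 1.5, 0.8, 0.5
Delta = np.eye(b) - np.ones((b, b)) / b
Sigma = (sig_pi * np.kron(np.eye(s), np.ones((b * n, b * n)))
         + sig_om * np.kron(np.kron(np.eye(s), Delta), np.ones((n, n)))
         + sig_eps * np.eye(N))

H_nd = proj(X2) + proj(Z2)
Sigma_rr = H_nd @ Sigma @ H_nd
evals, evecs = np.linalg.eigh(Sigma_rr)
nonzero = evals > 1e-8 * evals.max()
U, lam = evecs[:, nonzero], evals[nonzero]
V = U / np.sqrt(lam)                      # V = U Lambda_rr^{-1/2}

# Simulated null data: subject effects, centred interaction, errors.
rng = np.random.default_rng(6)
pi = rng.normal(0.0, np.sqrt(sig_pi), s)
omega = rng.normal(0.0, np.sqrt(sig_om), (s, b))
etapi = omega - omega.mean(axis=1, keepdims=True)
eps = rng.normal(0.0, np.sqrt(sig_eps), (s, b, n))
y = (2.0 + pi[:, None, None] + etapi[:, :, None] + eps).ravel()

y_mr = V.T @ (H_nd @ y)                   # modified residuals, length p2 + q2
HXm, HZm = proj(V.T @ X2), proj(V.T @ Z2)
F_mr = quad_f(y_mr, HXm, HZm, p2, q2)
F_ss = quad_f(y, proj(X2), proj(Z2), p2, q2)

M = 1999
F_star = np.array([quad_f(rng.permutation(y_mr), HXm, HZm, p2, q2) for _ in range(M)])
p_value = np.mean(F_star >= F_mr)
print(y_mr.shape, F_mr, F_ss, p_value)
```

Only \((b-1)s\) modified residuals are permuted here, compared with \(N=bsn\) reduced residuals in Algorithm 1.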

7 Application for repeated measures ANOVAs with one or more within-subject factor(s)

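First, we consider a simple case of model (1): a balanced design with only one within-subject factor. In this situation, the observations can be modelled as

$$\begin{aligned} y_{ijk}=\mu +\eta _{i}+\pi _{j}+(\eta \pi )_{ij}+\epsilon _{ijk},\quad i=1,\ldots ,b,\; j=1,\ldots ,s,\; k=1,\ldots ,n. \end{aligned}$$ (20)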

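Following the partition (3), this model can be expressed in matrix form as

$$\begin{aligned} y=\mathbb {1}_{{bsn}\times {1}}\mu +X_{\eta }\eta +X_{\pi }\pi +X_{\eta \pi }(\eta \pi )+\epsilon , \end{aligned}$$ (21)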

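where \(\mathbb {1}_{{n}\times {m}}\) is an \(n\times m\) matrix with all elements equal to \(1\). To test the effect of the within-subject factor \(B\), the hypotheses are

$$\begin{aligned} H_{0}:\eta _{1}=\cdots =\eta _{b}=0 \quad \text{versus}\quad H_{1}:\eta _{i}\neq 0 \text{ for at least one } i. \end{aligned}$$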

Therefore, the working matrices are \(X_{1}=\mathbb {1}\), \(X_{2}=X_{\eta }\) (effect of factor \(B\)), \(Z_{1}=X_{\pi }\) (subject effect \(S\)) and \(Z_{2}=X_{\eta \pi }\) (interaction effect of \(BS\)). More details on these matrices are given in the proof of Theorem 3. Note that \(y\) is distributed according to \(y \sim (\mathbb {1}\mu +X_{2}\eta , \Sigma )\), where \(\Sigma \) is the covariance matrix of \(y\). The random parts are based on \(\pi \sim (0,\sigma ^2_{\pi }I_{s})\), \((\eta \pi )\sim (0,{\mathrm {cov}}(\eta \pi ))\), and \(\epsilon \sim (0,\sigma _{\epsilon }^2I_{bsn})\).

The \(\mathrm F \)-statistic for testing the within-subject factor \(B\) can be written in the well-known form using mean squares as \(\mathrm F ^{B}_{ss}=\frac{\mathsf {MS}_B}{\mathsf {MS}_{BS}}\), or in matrix form as \(\mathrm F ^{B}_{ss}=\frac{y'H_{[X_{\eta }]}y/(b-1)}{y'H_{[X_{\eta \pi }]}y/((b-1)(s-1))}\), where \(H_{[X_{\eta }]}\) and \(H_{[X_{\eta \pi }]}\) are the projection matrices of the sigma-restricted design matrices \(X_{\eta }\) and \(X_{\eta \pi }\).

To apply the reduced residuals method, we can use either of the two kinds of reduced residuals introduced after Lemma 1, for example \(y^{(1)}_{rr}=(I-H_{[\mathbb {1}]})y\) or \(y^{(2)}_{rr}=(H_{[X_{\eta }]}+H_{[X_{\eta \pi }]})y\), and the corresponding \(\mathrm F \)-statistic based on either of these reduced residuals is

$$\begin{aligned} \mathrm F ^{B}_{rr}=\frac{y'_{rr}H_{[X_{\eta }]}y_{rr}/(b-1)}{y'_{rr}H_{[X_{\eta \pi }]}y_{rr}/((b-1)(s-1))}. \end{aligned}$$

In order to use the modified residuals method, we will only use \(y^{(2)}_{rr}\) and in the following section we will show how to construct the covariance matrix and to apply the permutation of the modified residuals method.

7.1 Construction of the covariance matrix of the observations

To use a permutation test based on the modified residuals, we need the eigen-values of \(\Sigma _{rr}=H_{nd}\Sigma H_{nd}\) in order to construct the \(V\) matrix. It is easy to construct \(H_{nd}\) explicitly, and a little more complex to find the matrix \(\Sigma \). To simplify the computation, the design matrices used in the projection matrices are based on orthonormal contrasts (e.g. Helmert contrasts), a special case of the sigma-restricted parametrization in which all columns are orthonormal. We explain these contrasts in detail in the proofs.

However, for the construction of covariance matrices, it is much easier to work with over-parametrized design matrices, which typically contain only the values \(0\) and \(1\). To distinguish between these two parametrizations, we use the notation \(X^{o}\) for over-parametrized design matrices. For more details about the differences between these two kinds of design matrices, we refer to Cardinal and Aitken (2006). The covariance matrix of \(y\), which is based on the random part of model (20), is constructed from the over-parametrized model and can be written as

$$\begin{aligned} \Sigma =\sigma ^{2}_{\pi }X^{o}_{\pi }X^{o\prime }_{\pi }+X^{o}_{\eta \pi }{\mathrm {cov}}(\eta \pi )X^{o\prime }_{\eta \pi }+\sigma ^{2}_{\epsilon }I_{bsn}, \end{aligned}$$ (22)

where \(X^{o\prime }_{\pi }=I_{s}\otimes \mathbb {1}_{{b}\times {1}}\otimes \mathbb {1}_{{n}\times {1}}\). Note that in repeated measures designs, the constraint \(\sum _{i}(\eta \pi )_{ij}=0\), \(\forall j\) implies a correlation between the interaction terms \((\eta \pi )_{ij}\). A natural way of keeping such dependency, is to write \((\eta \pi )_{ij}=\omega _{ij}-\frac{1}{b}\sum _{i=1}^{b}\omega _{ij}\). In this case \(\omega _{ij}\) are independent and identically distributed random variable with \((\omega _{ij})\sim (0,\sigma ^{2}_{\omega })\), where \(\sigma ^{2}_{\omega }=\frac{b}{b-1}\sigma ^{2}_{\eta \pi }\). This allows us to write \({\mathrm {cov}}(\eta \pi )=(I_{s}\otimes \Delta _{b}) \sigma ^{2}_{\omega }\) where \(\Delta _{b}=I_{b}-\frac{1}{b}\mathbb {1}_{{b}\times {b}}\). Finally, \(X^{o}_{\eta \pi }=I_{s}\otimes I_{b}\otimes \mathbb {1}_{{n}\times {1}}\) and \(X^{o\prime }_{\eta \pi }{\mathrm {cov}}(\eta \pi )X^{o}_{\eta \pi }=\sigma ^{2}_{\omega }(I_{s}\otimes \Delta _{b}\otimes \mathbb {1}_{{n}\times {n}})\).
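To make this construction concrete, the following Python/NumPy sketch (illustrative variances, observations ordered by subject, level and replication, and the three random parts assumed mutually independent) assembles \(\Sigma \) from the over-parametrized matrices, checks the Kronecker form of the interaction block, and verifies by simulation that \((\eta \pi )_{ij}=\omega _{ij}-\bar{\omega }_{\cdot j}\) sums to zero over the levels and has variance \(\sigma ^{2}_{\eta \pi }\) when \(\sigma ^{2}_{\omega }=\frac{b}{b-1}\sigma ^{2}_{\eta \pi }\).

```python
import numpy as np

s, b, n = 4, 3, 2
sig2_pi, sig2_etapi, sig2_eps = 0.2, 0.5, 1.0   # illustrative values
sig2_omega = b / (b - 1) * sig2_etapi           # sigma^2_omega = b/(b-1) sigma^2_{eta pi}
Delta_b = np.eye(b) - np.ones((b, b)) / b

# Over-parametrized (0/1) design matrices
X_pi = np.kron(np.kron(np.eye(s), np.ones((b, 1))), np.ones((n, 1)))
X_etapi = np.kron(np.kron(np.eye(s), np.eye(b)), np.ones((n, 1)))
cov_etapi = sig2_omega * np.kron(np.eye(s), Delta_b)

Sigma = (sig2_pi * X_pi @ X_pi.T
         + X_etapi @ cov_etapi @ X_etapi.T
         + sig2_eps * np.eye(s * b * n))

# Interaction block in Kronecker form: sigma^2_omega (I_s kron Delta_b kron 1_{n x n})
block = sig2_omega * np.kron(np.kron(np.eye(s), Delta_b), np.ones((n, n)))

# Monte Carlo check: each row plays the role of one subject's b interaction terms
rng = np.random.default_rng(0)
omega = rng.normal(0.0, np.sqrt(sig2_omega), size=(200_000, b))
etapi = omega - omega.mean(axis=1, keepdims=True)
```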

7.2 Eigen-values and Eigen-vectors of \(\Sigma _{rr}\)

Theorem 3

In the design with one within-subject factor and possible repetitions in each cell, let \(\Sigma _{rr}=H_{nd}\Sigma H_{nd}\) with \(H_{nd}=H_{[X_{\eta }]}+H_{[X_{\eta \pi }]}\), where \(\Sigma \) is defined in Eq. (22). Then \(\Sigma _{rr}=\lambda H_{nd}\), and the only non-zero eigen-value of \(\Sigma _{rr}\) is \(\lambda =n\sigma ^{2}_{\omega }+\sigma ^2_{\epsilon }\), with multiplicity \((b-1)s\).

The proof of the theorem is in the supplementary material.
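Theorem 3 can be checked numerically. The sketch below (Python/NumPy, arbitrary illustrative variances, observations ordered by subject, level and replication) writes \(\Sigma \) of Eq. (22) in Kronecker form, computes \(\Sigma _{rr}\), and confirms that it equals \(\lambda H_{nd}\) with \((b-1)s\) non-zero eigen-values. The helpers `delta`, `jbar` and `kron3` are our own.

```python
import numpy as np

def delta(m):
    return np.eye(m) - np.ones((m, m)) / m   # centering matrix

def jbar(m):
    return np.ones((m, m)) / m               # averaging matrix

def kron3(a, b, c):
    return np.kron(np.kron(a, b), c)

s, b, n = 5, 3, 2
sig2_pi, sig2_omega, sig2_eps = 0.2, 0.75, 1.0   # arbitrary illustrative values

# Sigma of Eq. (22): subject part, interaction part, error part
Sigma = (sig2_pi * kron3(np.eye(s), np.ones((b, b)), np.ones((n, n)))
         + sig2_omega * kron3(np.eye(s), delta(b), np.ones((n, n)))
         + sig2_eps * np.eye(s * b * n))

# H_nd = H_eta + H_etapi
H_nd = kron3(jbar(s), delta(b), jbar(n)) + kron3(delta(s), delta(b), jbar(n))
Sigma_rr = H_nd @ Sigma @ H_nd

lam = n * sig2_omega + sig2_eps
eigvals = np.linalg.eigvalsh(Sigma_rr)
nonzero = eigvals[eigvals > 1e-8]
print(np.allclose(Sigma_rr, lam * H_nd), len(nonzero) == (b - 1) * s)  # True True
```

The subject term disappears because \(\Delta _{b}\mathbb {1}_{{b}\times {b}}=0\), which is why \(\sigma ^{2}_{\pi }\) does not enter \(\lambda \).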

7.3 Estimation of the variance components and the eigen-values in non-oracle designs

To apply the method of permutation of the modified residuals, we need to construct \(y_{mr}=V'y_{rr}\), hence \(V\), and therefore we must estimate \(\lambda \). The value of \(\lambda \) given in Theorem 3 depends on \(\sigma ^{2}_{\omega }\) and \(\sigma ^{2}_{\epsilon }\). Theorem 3 also shows that the columns of \(H_{nd}\) are eigen-vectors of \(\Sigma _{rr}\); it follows that the columns of \(X_{\eta }\) and \(X_{\eta \pi }\) are also eigen-vectors of \(\Sigma _{rr}\). The following lemma proposes an estimator of \(\lambda \).

Lemma 2

The parameter \(\lambda \) in Theorem 3 can be estimated from

where

This estimator is unbiased, that is, \(\mathbb {E}[\hat{\lambda }]=\lambda \).
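As an illustration of why \(\lambda \) can be estimated without bias, the Monte Carlo sketch below (Python/NumPy, illustrative parameter values) shows that, under model (20), the interaction mean square \(\mathsf {MS}_{BS}\) has expectation \(n\sigma ^{2}_{\omega }+\sigma ^2_{\epsilon }=\lambda \), whatever the fixed effects. This is offered only as one simple unbiased candidate; it is not necessarily the estimator of Lemma 2.

```python
import numpy as np

rng = np.random.default_rng(0)
s, b, n, R = 10, 4, 2, 20_000          # subjects, levels, replications, Monte Carlo runs
sig2_pi, sig2_omega, sig2_eps = 0.2, 0.6, 1.0
eta = np.array([2.0, -2.0, 4.0, -4.0])  # fixed effects do not affect MS_BS
lam = n * sig2_omega + sig2_eps         # lambda of Theorem 3

pi = rng.normal(0.0, np.sqrt(sig2_pi), size=(R, s, 1, 1))
omega = rng.normal(0.0, np.sqrt(sig2_omega), size=(R, s, b, 1))
etapi = omega - omega.mean(axis=2, keepdims=True)    # sums to zero over levels
eps = rng.normal(0.0, np.sqrt(sig2_eps), size=(R, s, b, n))
y = 1.0 + eta[None, None, :, None] + pi + etapi + eps  # shape (R, s, b, n)

cell = y.mean(axis=3)                                   # (R, s, b) cell means
inter = (cell - cell.mean(axis=1, keepdims=True)        # remove level means
         - cell.mean(axis=2, keepdims=True)             # remove subject means
         + cell.mean(axis=(1, 2), keepdims=True))       # add back grand mean
ms_bs = n * (inter ** 2).sum(axis=(1, 2)) / ((b - 1) * (s - 1))
print(lam, ms_bs.mean())   # the two values should be close
```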

We present the proof of this lemma in the supplementary material.

7.4 Repeated measures with more than one within-subject factor

In the case with two within-subject factors, the model is

In this model, \(\eta \) and \(\gamma \) are the fixed effects of two within-subject factors, and \(\pi \) is the random effect due to the subjects. We construct the covariance matrix, and hence \(\Sigma _{rr}\), from the constraints on the random part of the model, namely \(\sum _{i}(\eta \pi )_{ij}=0\ \forall j\) and \(\sum _{l}(\gamma \pi )_{lj}=0\ \forall j\), and, for the three-way interactions, \(\sum _{i}(\eta \gamma \pi )_{ilj} =0\ \forall l,j\) and \(\sum _{l}(\eta \gamma \pi )_{ilj} =0\ \forall i,j\). The covariance matrix for this model can thus be written as

\(H_{nd}\) depends on the parameter being tested. We skip the details, as they are similar to the case with one within-subject factor. The decomposition of \(\Sigma _{rr}\) can be found in Kherad-Pajouh (2011), and the main result is given in the following theorem.

Theorem 4

In the design with two within-subject factors and possible repetitions in each cell, suppose we wish to test one of the within-subject factors, say \(\eta \). Let \(H_{nd}=H_{[X_{\eta }]}+H_{[X_{\eta \pi }]}\) and let \(\Sigma _{rr}\) be defined as in Eq. (23). Then \(\Sigma _{rr}=\lambda H_{nd}\), and the only non-zero eigen-value of \(\Sigma _{rr}\) is \(\lambda =na\sigma ^{2}_{\omega }+\sigma ^2_{\epsilon }\), with multiplicity \((b-1)s\).

The proof of the theorem is in the supplementary material. We skip the estimation of \(\lambda \) here, as it can be done in a similar way as in Lemma 2. Similarly, the proof of this theorem can be generalized to more than two within-subject factors, the only difficulty being a more complex notation.
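A numerical check of Theorem 4 can be sketched under explicit assumptions: as in the one-factor case, each constrained random interaction is built from i.i.d. terms centred over its constrained indices, so its covariance is a Kronecker product of \(\Delta \), identity and all-ones matrices. The covariance structure below, the variance values, the ordering (subject, level of \(\eta \), level of \(\gamma \), replication) and the reading of \(a\) as the number of levels of \(\gamma \) are our assumptions, not a transcription of Eq. (23).

```python
import numpy as np
from functools import reduce

def delta(m):
    return np.eye(m) - np.ones((m, m)) / m   # centering matrix

def jbar(m):
    return np.ones((m, m)) / m               # averaging matrix

def ones(m):
    return np.ones((m, m))

def kron(*mats):
    return reduce(np.kron, mats)

s, b, a, n = 4, 3, 2, 2   # subjects, levels of eta, levels of gamma, replications
# Hypothetical variances: pi, eta*pi (omega), gamma*pi, eta*gamma*pi, error
sig2_pi, sig2_omega, sig2_gp, sig2_egp, sig2_eps = 0.3, 0.6, 0.4, 0.2, 1.0

Sigma = (sig2_pi * kron(np.eye(s), ones(b), ones(a), ones(n))
         + sig2_omega * kron(np.eye(s), delta(b), ones(a), ones(n))
         + sig2_gp * kron(np.eye(s), ones(b), delta(a), ones(n))
         + sig2_egp * kron(np.eye(s), delta(b), delta(a), ones(n))
         + sig2_eps * np.eye(s * b * a * n))

# H_nd = H_eta + H_etapi for testing eta
H_nd = (kron(jbar(s), delta(b), jbar(a), jbar(n))
        + kron(delta(s), delta(b), jbar(a), jbar(n)))
Sigma_rr = H_nd @ Sigma @ H_nd
lam = n * a * sig2_omega + sig2_eps
print(np.allclose(Sigma_rr, lam * H_nd))   # True
```

Under these assumptions, the subject, \(\gamma \pi \) and \(\eta \gamma \pi \) terms are all annihilated by \(H_{nd}\), leaving \(\lambda =na\sigma ^{2}_{\omega }+\sigma ^2_{\epsilon }\).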

8 Application for mixed-model designs

In this section we consider the mixed-model design with one between-subject and one within-subject factor.

where \(\alpha \) is the between-subject factor, \(\eta \) is the within-subject factor, \(\pi \) is the random effect of the subjects, and \(s\) is the number of subjects in each level of the between-subject factor. Since each subject belongs to a single level of the between-subject factor, there is no interaction between the subject and the between-subject factor; \(\alpha \eta \) is the fixed interaction part and \(\pi \eta \) is the random interaction part. In the parametric approach, the \(\mathrm F \)-statistic for the within-subject factor is similar to the one for the repeated measures design, and the \(\mathrm F \)-statistic for the between-subject factor \(A\) can be written as

\[\mathrm F ^{A}_{ss}=\frac{y'H_{[X_{\alpha }]}y/(a-1)}{y'H_{[X_{\pi }]}y/(a(s-1))}.\]

The \(\mathrm F \)-statistic for the interaction of the within-subject factor and the between-subject factor can be written as

\[\mathrm F ^{AB}_{ss}=\frac{y'H_{[X_{\alpha \eta }]}y/((a-1)(b-1))}{y'H_{[X_{\eta \pi }]}y/(a(s-1)(b-1))}.\]

To obtain a permutation test based on the reduced residuals, the corresponding statistics \(F_{rr}\) should be used. They are obtained by replacing \(y\) by \(y_{rr}\) in the above formulae (in the unbalanced case, the design matrices must also be replaced by \(X_{\eta rr}\) and \(X_{\alpha \eta rr}\)). We skip the details for this method, since it is very similar to the previous cases.

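Concerning the modified residuals in mixed-model designs, we first construct the covariance matrix of \(y_{rr}\) and then find its eigen-structure. As mentioned before, the covariance matrix of \(y\) is based only on the random part of model (24). Using similar notation as in the previous sections, the covariance matrix of the observations can be written as

\[\Sigma =\sigma ^{2}_{\pi }(I_{as}\otimes \mathbb {1}_{{b}\times {b}}\otimes \mathbb {1}_{{n}\times {n}})+\sigma ^{2}_{\omega }(I_{as}\otimes \Delta _{b}\otimes \mathbb {1}_{{n}\times {n}})+\sigma ^{2}_{\epsilon }I_{asbn}, \tag{26}\]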

and the covariance matrix of \(y_{rr}\) is then \(\Sigma _{rr}=H_{nd} \Sigma H_{nd}\), where \(H_{nd}\) depends on the parameter being tested.

Theorem 5

In the mixed-model design with one within-subject and one between-subject factor, to test the between-subject factor we take \(H_{nd}=H_{[X_{\alpha }]}+H_{[X_{\pi }]}\), with \(\Sigma \) given by Eq. (26). Then \(\Sigma _{rr}=\lambda H_{nd}\), and the only non-zero eigen-value is \(\lambda =nb\sigma ^{2}_{\pi }+\sigma ^2_{\epsilon }\), with multiplicity \((a-1)+a(s-1)\).

Theorem 6

In the mixed-model design, corresponding to (24), in order to test the within-subject factor, we have \(H_{nd}=H_{[X_{\eta }]}+H_{[X_{\eta \pi }]}\) and \(\Sigma _{rr}=H_{nd}\Sigma H_{nd}\), where \(\Sigma \) is based on (26). Then \(\Sigma _{rr}=\lambda H_{nd}\) and the only non-zero eigen-value is \(\lambda =n\sigma ^{2}_{\omega }+\sigma ^2_{\epsilon }\), with multiplicity \(a(s-1)(b-1)+(b-1)\).

The proofs of the two theorems above can be found in the supplementary material. We skip the estimation of \(\lambda \), as it can be done in a similar way as in Lemma 2. The proofs of these theorems can be generalized to designs with more factors, the only difficulty being a more complex notation.
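Both theorems can be checked numerically. The sketch below (Python/NumPy) assumes that the covariance matrix of the mixed-model design has the same form as Eq. (22) with the \(as\) subjects in place of \(s\), since adding a between-subject factor does not change the random structure, and orders observations by group, subject, within-subject level and replication. Variance values and helper names are illustrative. The rank of each \(H_{nd}\) (its trace) gives the multiplicity of \(\lambda \).

```python
import numpy as np
from functools import reduce

def delta(m):
    return np.eye(m) - np.ones((m, m)) / m   # centering matrix

def jbar(m):
    return np.ones((m, m)) / m               # averaging matrix

def kron(*mats):
    return reduce(np.kron, mats)

a, s, b, n = 2, 3, 3, 2   # groups, subjects per group, within levels, replications
sig2_pi, sig2_omega, sig2_eps = 0.5, 0.3, 1.0
N = a * s * b * n

Sigma = (sig2_pi * kron(np.eye(a * s), np.ones((b, b)), np.ones((n, n)))
         + sig2_omega * kron(np.eye(a * s), delta(b), np.ones((n, n)))
         + sig2_eps * np.eye(N))

def check(H_nd, lam):
    """Is Sigma_rr = lam * H_nd, and what is the rank (trace) of H_nd?"""
    Sigma_rr = H_nd @ Sigma @ H_nd
    return np.allclose(Sigma_rr, lam * H_nd), int(round(np.trace(H_nd)))

# Theorem 5: between-subject factor, H_nd = H_alpha + H_pi (subjects within groups)
H_alpha = kron(delta(a), jbar(s), jbar(b), jbar(n))
H_pi = kron(np.eye(a), delta(s), jbar(b), jbar(n))
ok5, rank5 = check(H_alpha + H_pi, n * b * sig2_pi + sig2_eps)

# Theorem 6: within-subject factor, H_nd = H_eta + H_etapi
H_eta = kron(jbar(a), jbar(s), delta(b), jbar(n))
H_etapi = kron(np.eye(a), delta(s), delta(b), jbar(n))
ok6, rank6 = check(H_eta + H_etapi, n * sig2_omega + sig2_eps)

print(ok5, rank5, ok6, rank6)   # True 5 True 10
```

The rank for the between-subject test is \((a-1)+a(s-1)=as-1\), i.e. the \(a-1\) group degrees of freedom plus the \(a(s-1)\) subject-within-group degrees of freedom; for the within-subject test it is \((b-1)+a(s-1)(b-1)\).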

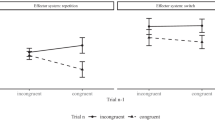

9 Simulation study

In this section, simulation studies are carried out to validate and compare the level and power of the four methods considered in this article (referred to below as \(Y_{mr}\), \(Y_{rr}\), \(Y\) and \(\mathrm F \)-test). The permutation of the reduced residuals, based on \(y^{(2)}_{rr}=H_{nd}y\), is named \(Y_{rr}\). We compare it to the permutation of raw data (\(Y\)), which freely permutes the (raw) data and uses the regular \(\mathrm F \)-statistic given in (6). We also report results for the parametric \(\mathrm F \)-test, which relies on the Gaussian assumption (and the split-plot approach for mixed-model designs) and uses the \(F\) distribution. This is of course not a permutation test, but it represents the classical approach. Finally, \(Y_{mr}\) denotes the permutation of the modified residuals \(y_{mr}=V'y_{rr}\). For this method, it is important to separate the intrinsic quality of the method from a possible loss of quality (both in level and in power) due to the estimation of the parameter \(\lambda \). We therefore consider two modified residuals procedures: an “oracle” case, \(Y^{or}_{mr}\), in which the population value of \(\lambda \) is assumed to be known, and a “non-oracle” case, \(Y^{n-or}_{mr}\), in which \(\lambda \) is estimated from the data. Although the oracle case is not a genuine method, it is an important benchmark, both for the non-oracle case and for the permutation of the reduced residuals (\(Y_{rr}\)). Indeed, if the latter has a level and power similar to those of the oracle \(Y_{mr}\), this would indicate that the reduced residuals are adequate and that there is no need to use the modified residuals or to improve the estimation of the unknown \(\lambda \).

9.1 Repeated measures ANOVA

To explain how the data are generated, consider first the repeated measures simulations, which are based on model (20). In this model, \(\mu \) and \(\eta \) are the fixed parts. We set \(\mu \) to a vector with all elements equal to 1 (this value has no influence on the results). The test concerns the fixed parameters \(\eta _i\). In order to simulate under the null hypothesis and under several alternatives, we consider the variation measure \(\theta =\big (\sum ^{b}_{i=1}(\eta _{i}^\text {orig})^2/b\big )^{1/2}\). In the simulations, the values of \(\eta _i\) are \((\eta _{i}^\text {orig})\cdot t/\theta \), where \(t\) is a constant that sets the amplitude of the effects, so that the root mean square of the \(\eta _i\) equals \(t\). For instance, to estimate the significance level, \(t\) is set to zero, which makes all the \(\eta _i\) equal to zero, and the power is obtained with values \(t>0\). The random parts of model (20) due to \(\pi \) and \(\eta \pi \) are generated from normal distributions with variances \(\sigma ^{2}_{\pi }=0.2\) and \(\sigma ^{2}_{\eta \pi }=0.5\).

Finally, the distribution of the error part is either \(\mathcal {N}(0,1)\), \(U(-\sqrt{3},\sqrt{3})\) or \(\exp (1)-1\), all of which have variance 1, i.e. \(\sigma ^{2}_{\epsilon }=1\). We also generated data sets from a Generalized Pareto distribution \(GP(0.4814,0.01,1)\), which is more heavy-tailed, with shape parameter \(0.4814\) and scale parameter \(0.01\), chosen so that the error terms have variance 1. Note that only the errors follow skewed or non-normal distributions; the random effects \(\pi \) and \(\eta \pi \) are always normal.
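The data-generating step for model (20) can be sketched as follows in Python/NumPy. The function names are hypothetical, the ordering of observations (subject, level, replication) is our choice, and the Generalized Pareto errors are left out because we do not assume a particular parametrization of \(GP(0.4814,0.01,1)\). The three error laws shown are centred and have unit variance.

```python
import numpy as np

def draw_errors(kind, size, rng):
    """Centred, unit-variance errors (hypothetical helper)."""
    if kind == "normal":
        return rng.normal(0.0, 1.0, size)
    if kind == "uniform":
        # variance (2*sqrt(3))^2 / 12 = 1
        return rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), size)
    if kind == "exp":
        # exp(1) has mean 1 and variance 1, so exp(1) - 1 is centred
        return rng.exponential(1.0, size) - 1.0
    raise ValueError(f"unknown error distribution: {kind}")

def simulate_rm(eta, s, n, kind, rng, sig2_pi=0.2, sig2_etapi=0.5, mu=1.0):
    """One data set from model (20), ordered by subject, level, replication."""
    b = len(eta)
    pi = rng.normal(0.0, np.sqrt(sig2_pi), size=(s, 1))
    omega = rng.normal(0.0, np.sqrt(b / (b - 1) * sig2_etapi), size=(s, b))
    etapi = omega - omega.mean(axis=1, keepdims=True)   # sums to zero over levels
    cell = mu + eta[None, :] + pi + etapi
    return (cell[:, :, None] + draw_errors(kind, (s, b, n), rng)).ravel()

rng = np.random.default_rng(0)
# t = 0: all eta_i are zero, i.e. data under the null hypothesis
y = simulate_rm(np.zeros(4), s=10, n=1, kind="exp", rng=rng)
```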

To construct the modified residuals in the oracle case (\(Y^{or}_{mr}\)), we computed the covariance matrix from the variances of the random parts of the model, based on Eq. (22). For the modified residuals in the non-oracle case (\(Y^{n-or}_{mr}\)), we additionally estimated \(\lambda \).

Table 1 uses a design that is typical for psychological data: ten subjects (\(s=10\)), one within-subject factor with four levels (\(b=4\)) and one observation per cell (\(n=1\)). The original values for \(\eta \) are \(\eta ^\text {orig}=[2,-2,4,-4]\), which satisfy the sigma-restriction constraint. Table 2 considers an unbalanced repeated measures design with \(s=3\) subjects. The null hypothesis concerns the effect of \(\eta \), with three levels (\(b=3\)) and original values \(\eta ^\text {orig}=[4,-2,-2]\). There are two repetitions in each cell except for the last cell, which has only one observation. Since the parametric \(F\)-test is not defined in this case and the permutation of the reduced residuals is the only proposed method that applies, we consider only this method in this simulation.

9.2 Mixed-model design

In Tables 3 and 4, the simulation study concerns a mixed-model design as in model (24), with one within-subject factor with three levels (\(b=3\)) and one between-subject factor with two levels (\(a=2\)). There are three subjects in each level of the between-subject factor and one repetition per cell. The null hypothesis concerning the within-subject factor, \(H_{0}:\eta _1=\eta _2=\eta _3\), is tested in Table 3, and the null hypothesis concerning the between-subject factor, \(H_0:\alpha _1=\alpha _2\), in Table 4.

The original values are \(\eta ^\text {orig}=[3,-2,-1]\) and \(\alpha ^\text {orig}=[1,-1]\). As in the previous case, we used the variation measure \(\theta \) to estimate the level and power of each method. The variances of the random parts of the model are \(\sigma ^{2}_{\pi }=0.5\), \(\sigma ^{2}_{\eta \pi }=0.2\) and \(\sigma ^{2}_{\epsilon }=1\). We used the same covariance structure for the random part as in the previous case: only the within-subject factor interacts with the random effect of the subjects, so adding a between-subject factor does not change the random structure.

In all simulations we used permutation tests based on 1000 permutations. For all settings, we used 5000 Monte-Carlo replications.
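The Monte Carlo estimation of the level can be sketched as follows for the reduced residuals test, in Python/NumPy. To keep the sketch fast it uses far fewer replications and permutations than the 5000 replications and 1000 permutations reported above; the settings mirror the repeated measures design of Table 1 with \(\exp (1)-1\) errors at \(t=0\), and all helper names are ours.

```python
import numpy as np

def delta(m):
    return np.eye(m) - np.ones((m, m)) / m   # centering matrix

def jbar(m):
    return np.ones((m, m)) / m               # averaging matrix

def kron3(a, b, c):
    return np.kron(np.kron(a, b), c)

def rr_pvalue(y, H_eta, H_etapi, df, n_perm, rng):
    """Reduced residuals permutation p-value, all permutations vectorized."""
    y_rr = (H_eta + H_etapi) @ y
    idx = np.argsort(rng.random((n_perm, y.size)), axis=1)  # n_perm random permutations
    Y = np.vstack([y_rr, y_rr[idx]])                        # row 0 = observed order
    num = np.einsum("pi,ij,pj->p", Y, H_eta, Y) / df[0]
    den = np.einsum("pi,ij,pj->p", Y, H_etapi, Y) / df[1]
    F = num / den
    return np.mean(F >= F[0])   # counts the observed statistic as one permutation

rng = np.random.default_rng(2024)
s, b, n = 10, 4, 1
H_eta = kron3(jbar(s), delta(b), jbar(n))
H_etapi = kron3(delta(s), delta(b), jbar(n))
df = (b - 1, (b - 1) * (s - 1))
n_rep, n_perm = 200, 199

rejections = 0
for _ in range(n_rep):
    pi = rng.normal(0.0, np.sqrt(0.2), size=(s, 1))
    omega = rng.normal(0.0, np.sqrt(0.5 * b / (b - 1)), size=(s, b))
    etapi = omega - omega.mean(axis=1, keepdims=True)
    # t = 0 (null hypothesis), exp(1) - 1 errors
    y = (1.0 + pi + etapi)[:, :, None] + rng.exponential(1.0, (s, b, n)) - 1.0
    if rr_pvalue(y.ravel(), H_eta, H_etapi, df, n_perm, rng) <= 0.05:
        rejections += 1
level = rejections / n_rep   # Monte Carlo estimate of the type I error
```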

10 Discussion

In all the settings of the present simulations, as expected, the type I error is very close to the nominal level of \(0.05\), and the power of \(Y^{or}_{mr}\) is somewhat higher than that of the other permutation approaches. As expected, the power of \(Y^{n-or}_{mr}\) is a little lower than that of \(Y^{or}_{mr}\). Note that already with a medium-sized sample, the modified residuals methods and the approximate test \(Y_{rr}\) are comparable with the permutation of raw data and the parametric \(F\)-test, especially in the non-Gaussian cases. These results also show that the permutation of the reduced residuals, although only an approximate test, has a level close to \(0.05\) and good power for all designs and factors tested.

This result contrasts with those of Kherad-Pajouh and Renaud (2010), who found that for very small sample sizes the modified residuals outperform the reduced residuals in ANOVA with a single error term, i.e. with no repeated measures. There are probably two reasons for this. First, the number of observations is almost necessarily larger in repeated measures designs, since each subject has to be measured at each level. Second, the correlation remaining in the reduced residuals is probably less serious in repeated measures designs than in designs with independent observations, since in the former the observations are correlated anyway through the subject effect.

With the heavy-tailed GP errors, all methods still have a level close to \(0.05\). The power of \(Y^{n-or}_{mr}\) is still lower than that of \(Y^{or}_{mr}\), and in general \(Y_{mr}\) has slightly less power than the reduced residuals method, due to the heavy-tailed error terms.

Given that the two residual methods give similar results, that the reduced residuals are simpler to obtain and have slightly higher power for heavy-tailed distributions, and, more importantly, that the reduced residuals also allow for unbalanced designs, we recommend the reduced residuals method.

Finally, as expected, the simulation results show that when all random parts are normally distributed, the parametric \(\text {F}\)-test is correct. It is however important to note that the proposed permutation methods are quite close to it in terms of level and power, even in these parametric settings. Moreover, the permutation methods outperform the parametric \(\text {F}\)-test quite consistently when the error term is not normal; see, e.g., Table 1, \(\exp (1)-1\) at \(t=0.6\), \(t=0.9\) and \(t=1.2\).

11 Conclusion

In this paper, we first introduced an approximate permutation test based on the reduced residuals for repeated measures and mixed-model designs. With this method, we can test any within-subject or between-subject factor, including the interactions of within- and between-subject factors. The method also extends to unbalanced designs with no empty cells. We also introduced a permutation test based on modified residuals that is exact up to the second moment. It modifies the previous approximate test so as to remove the correlations between the residuals. This method is introduced for balanced repeated measures and mixed-model designs, and can be used to test any within-subject or between-subject factor. We also provided three examples in which we went through the details of the two proposed methods. Finally, we ran simulations to compare the level and power of the proposed methods. The method based on the reduced residuals seems to be a good compromise between precision (close to the nominal level), power, and complexity. We therefore advocate it in practice, even as a diagnostic to check whether the classical parametric approach gives sensible results.

References

Anderson M, Ter Braak C (2003) Permutation tests for multi-factorial analysis of variance. J Stat Comput Simul 73(2):85–113

Basso D (2009) Permutation tests for stochastic ordering and ANOVA: theory and applications with R. Springer, New York

Basso D, Salmaso L (2006) A discussion of permutation tests conditional to observed responses in unreplicated \(2^m\) full factorial designs. Commun Stat Theory Methods 35(1):83–97. doi:10.1080/03610920500437277

Basso D, Chiarandini M, Salmaso L (2007) Synchronized permutation tests in replicated I×J designs. J Stat Plan Inference 137(8):2564–2578. doi:10.1016/j.jspi.2006.04.016

Cardinal R, Aitken M (2006) ANOVA for the behavioural sciences researcher. Lawrence Erlbaum Associates, New Jersey

Cornfield J, Tukey JW (1956) Average values of mean squares in factorials. Ann Math Stat 27(4):907–949. doi:10.1214/aoms/1177728067

Field CA, Welsh A (2007) Bootstrapping clustered data. J Roy Stat Soc B 69:369–390

Fisher R (1935) The design of experiments. Oliver & Boyd, Edinburgh

Freedman D, Lane D (1983) A nonstochastic interpretation of reported significance levels. J Bus Econ Stat 1(4):292–298

Good P (1994) Permutation tests: a practical guide to resampling methods for testing hypotheses. Springer, New York

Hirotsu C (1979) An F approximation and its application. Biometrika 66(3):577–584

IBM Corp (2013) IBM SPSS statistics for Windows, Version 22.0. Armonk, NY

Jung BC, Jhun M, Song SH (2006) A new random permutation test in ANOVA models. Stat Pap 48:47–62

Keppel G (1991) Design and analysis: a researcher’s handbook. Prentice-Hall, Englewood Cliffs

Kherad-Pajouh S (2011) Permutation tests for experimental designs, with extension to simultaneous EEG signal analysis. PhD thesis, University of Geneva. http://archive-ouverte.unige.ch/unige:15743

Kherad-Pajouh S, Renaud O (2010) An exact permutation method for testing any effect in balanced and unbalanced fixed effect ANOVA. Comput Stat Data Anal 54(7):1881–1893. doi:10.1016/j.csda.2010.02.015

Kirk R (1994) Experimental design: procedures for the behavioral sciences. Wadsworth Publishing Co Inc., Belmont

Mazzaro D, Pesarin F, Salmaso L (2001) Permutation tests for effects in unbalanced repeated measures factorial designs. In: Advances in model-oriented design and analysis: proceedings of the 6th international workshop on model-oriented data analysis. Springer, Berlin, p 183

Myers J, Well A (2003) Research design and statistical analysis. Lawrence Erlbaum, New Jersey

Pesarin F (2001) Multivariate permutation tests: with applications in biostatistics. Wiley, Chichester

Pesarin F, Salmaso L (2010) Permutation tests for complex data: theory, applications and software. Wiley, New York

Rouanet H, Lépine D (1970) Comparison between treatments in a repeated-measurement design: ANOVA and multivariate methods. Br J Math Stat Psychol 23:147–163

Sahai H, Ageel M (2000) The analysis of variance: fixed, random, and mixed models. Birkhauser, Boston

Still A, White A (1981) The approximate randomization test as an alternative to the \(F\) test in analysis of variance. Br J Math Stat Psychol 34(2):243–252

White A, Harris P (1978) RANOVA: a fortran IV program for four-way Monte Carlo analysis of variance. Behav Res Methods 10(5):732

Acknowledgments

The authors thank the editor and referees for their helpful comments. This work was supported by the Swiss National Foundation under grant numbers 105211-112465/1 and 105214-116269/1 and by the Ernst et Lucie Schmidheiny Foundation.


Electronic supplementary material

Below is the link to the electronic supplementary material.


Cite this article

Kherad-Pajouh, S., Renaud, O. A general permutation approach for analyzing repeated measures ANOVA and mixed-model designs. Stat Papers 56, 947–967 (2015). https://doi.org/10.1007/s00362-014-0617-3


DOI: https://doi.org/10.1007/s00362-014-0617-3