Abstract

A particle tracking velocimetry apparatus is presented that can measure three-dimensional particle trajectories across large volumes, of the order of several meters, during natural snowfall events. Field experiments aimed at understanding snow settling kinematics in atmospheric flows were conducted with this apparatus during the 2021/2022 winter season, and we show preliminary results from them. We give an overview of the methodology, which relies on a UAV-based calibration. We then analyze a selected dataset to demonstrate the capabilities of the system for studying inertial particle dynamics in atmospheric flows. The system uses a modular camera array designed specifically for the challenges of field deployment during snowfall. The imaging system is calibrated by synchronized imaging of a UAV-carried target. This enables measurements within an approximately 4 m \(\times\) 4 m \(\times\) 6 m volume centered 10 m above the ground. Using the measured Lagrangian particle tracks, we present statistics of 3D trajectory curvature and acceleration, as well as clustering behavior characterized by Voronoï analysis. We discuss the limitations of such a system and potential future developments in the context of applications to other inertial particles.



1 Introduction

A wide variety of geophysical processes involve atmospheric transport of inertial particles, such as wind-blown sand and dust, sea sprays, and snowfall. In these processes, the details of particle kinematics can have far-reaching implications for society in terms of the environment, climate, and weather. Aeolian transport of sand drives major changes in landscape, not only on Earth but also on other planets (Lapotre et al. 2016). The related suspension of mineral dust aerosols in the atmosphere, and their subsequent settling, may greatly influence global climate (Kok et al. 2018). Similarly, sea sprays, which can broadly be characterized as inertial particles in atmospheric turbulence, play an important role in the momentum and thermal fluxes at the air–sea interface. They must therefore be modeled appropriately for the forecasting of tropical cyclones (Emanuel 2018). Snow particles, the primary focus of the study herein, are particularly complex inertial particles. Their intricate morphologies cause different tumbling behaviors and turbulence interactions, which modulate their fall speed and thereby the heterogeneity of the resulting ground accumulation (Garrett and Yuter 2014; Wang and Huang 2017; Vionnet et al. 2017; Zeugin et al. 2020; Li et al. 2021b). Coherent structures affect their settling characteristics (Aksamit and Pomeroy 2018), and saltation dynamics and near-surface winds also affect their transport (Aksamit and Pomeroy 2016). For these reasons, it is critical to better understand the kinematics of atmospheric inertial particles and their interaction with the surrounding flow.

Such atmospheric flow, however, is naturally characterized by a broad range of turbulence scales, with Reynolds numbers typically on the order of \(10^6\). The largest motions, which drive bulk transport, can be captured by remote sensing techniques. Examples include satellite-based time-of-flight light detection and ranging (LiDAR) for tracking aerosol movements (Huang et al. 2015), and Doppler LiDAR for wind measurements in the atmospheric boundary layer (ABL) (Lundquist et al. 2017). However, the small-scale motions govern the dynamics of individual particles and thus influence parameters important for modeling, such as fall speed. Resolving them requires alternative approaches, such as imaging, for particle-level tracking.

Imaging systems for particle tracking and velocimetry are commonplace in the laboratory, implemented in both 2D and 3D, the latter typically with multi-camera arrays [for an overview, see Discetti and Coletti (2018)]. The 3D approach is more challenging for two reasons. First, the camera system must be calibrated precisely to triangulate or otherwise reconstruct particle positions accurately. Second, it is difficult to subsequently link these particles across time steps to form the long Lagrangian trajectories needed to study inertial particle kinematics. Major advances have been made on both fronts, such as iterative particle reconstruction (IPR; Wieneke 2012) and predictive tracking (e.g., Shake-the-Box; Schanz et al. 2016a; Novara et al. 2019). Both help to obtain long Lagrangian trajectories from dense particle images. Hou et al. (2021) introduced a novel method for large-scale PTV (demonstrated within a 4 m \(\times\) 1.5 m \(\times\) 1.5 m measurement volume) using a single camera. Their method relies on glare points created by illuminating cm-scale bubbles seeded into the flow as tracers. Apart from this recent addition, traditional methods have generally been limited to measurement domains of \(\sim\)1 m (Schanz et al. 2016b; Terra et al. 2019), due to the combined challenges of tracer seeding, illumination, and calibration. For these reasons, imaging-based particle tracking has seen very limited implementation in the field.

That being said, notable examples of particle imaging-based flow measurement in the field have been conducted. These include particle image velocimetry (PIV) or particle tracking velocimetry (PTV) in the atmospheric surface layer (ASL) for both wind energy research (Hong et al. 2014; Abraham and Hong 2020; Wei et al. 2021) and boundary-layer turbulence studies (Hommema and Adrian 2003; Morris et al. 2007; Toloui et al. 2014; Rosi et al. 2014; Heisel et al. 2018), PTV for snow settling in the ASL (Nemes et al. 2017; Li et al. 2021a, b), as well as water surface velocimetry (Perks et al. 2020). In the case of the ASL, both artificial tracers (smoke, fog) and natural tracers (snow) have been used, while in the latter case for water surface velocimetry the patterns of surface reflections from the water itself may be used, or else artificial tracers introduced. However, except for Rosi et al. (2014) and Wei et al. (2021), all the cited approaches are two-dimensional, either in a light sheet or on a surface.

For the case of snow settling, such 2D studies have provided valuable insights into the enhancement of settling velocities due to turbulence (Nemes et al. 2017), clustering behavior influencing settling (Li et al. 2021a), and preferential sampling of sweeping motions from vortices (Li et al. 2021b). Planar PTV has also been used to characterize the interactions of near-surface snow transport with turbulence and coherent structures (Aksamit and Pomeroy 2016, 2018). Nevertheless, three-dimensional measurements of snow particle motions are necessary to fully resolve particle kinematics. In particular, both trajectory curvature and particle clustering may be biased when only two dimensions are resolved. Therefore, 3D measurements of snow particle transport are needed.

Three-dimensional particle tracking has been adapted to the field even more rarely than the 2D measurements mentioned above, owing to limitations in calibration procedures, particle seeding, and illumination. Rosi et al. (2014) studied ASL turbulence within the first few meters above the surface, using bubbles as tracer particles imaged with a multi-camera system. Due to artificial seeding constraints over the 4 m \(\times\) 2 m \(\times\) 2 m volume, bubble concentrations were relatively low, with a spacing of 0.5 m. Wei et al. (2021) gave another noteworthy implementation of 3D PTV in the field. They used a multi-camera system to measure the time-averaged flow structure and vorticity in the wake of a full-scale vertical axis wind turbine. They achieved measurements in a larger volume (10 m \(\times\) 7 m \(\times\) 7 m). However, as in Rosi et al. (2014), the particle concentration was low, because artificial snow had to be introduced upstream as tracers. The entire domain could not be sampled simultaneously. Multiple runs therefore had to be performed, and data points spatially binned over the ensemble, to obtain a single time-averaged flow field. Thus, turbulent fluctuations could not be captured.

Other related work focused on obtaining Lagrangian trajectories in large-scale measurements has been conducted in the field of bio-locomotion [e.g., Theriault et al. (2014); Muller et al. (2020)], tracking the motions of birds and of fish. These studies generally involve sparse objects, as usually only a few points of interest within the field of view are to be tracked, but have involved innovative calibration approaches to enable tracking in large volumes. The study herein builds upon these calibration approaches, as will be discussed in Sect. 2.2.

The aim of the present work was to track the complex 3D motions of snow particles as they settle in atmospheric turbulence. In particular, we sought to obtain enough Lagrangian trajectories to estimate acceleration, curvature, and higher-order statistics. Here, we seek to overcome challenges inherent to the snow settling case: measuring in a large volume 10 m above the ground while also dealing with high particle concentrations in the camera images. In the following, we first describe our methodology for achieving these aims through the development of a field-scale 3D imaging system (Sects. 2.1–2.3), including preliminary validation experiments (Sect. 2.4). We then show results from a field deployment in April 2022 (Sect. 3) to demonstrate the new data and analysis afforded by such measurements (Sect. 4). This work is conducted as part of the Grand-scale Atmospheric Imaging Apparatus project, hereafter referred to as GAIA.

2 Methods

2.1 System description

The principal components of the field-scale 3D imaging system developed under the GAIA project, hereafter referred to as GAIA-PTV, and the associated workflow, are depicted in Fig. 1. In the following, we provide a brief overview followed by a more detailed description. Firstly, GAIA-PTV involves a modular, multi-camera imaging array that, once calibrated, enables the desired 3D particle triangulation and tracking. To obtain the calibration of the camera array, an unmanned aerial vehicle (UAV), equipped with its own lighting, is flown within the measurement volume while being imaged by all cameras. Images collected during the calibration procedure as well as the particle images taken during the experiment are then processed as will be described in Sects. 2.2 and 2.3.

This system was designed with a few key criteria in mind. Due to the difficult working conditions in the field during snowfall, GAIA-PTV needed to be easy to use and capable of rapid deployment. Furthermore, deployment configurations needed to be flexible and to allow large distances between cameras. This flexibility is needed because snow concentration, and thus particle image density, is outside our control in the field. Instead, the particle image density in each camera may be adjusted by changing the camera separation distances. Lastly, we needed to measure snow settling dynamics in a volume of at least several meters in each dimension, centered high enough above the ground to avoid surface effects on the flow.

To meet these demands, GAIA-PTV implements a modular approach. Each camera has a dedicated single-board computer (SBC) and communicates wirelessly over a local network through a COMFAST Outdoor WIFI Antenna. This local network can also be used for wireless camera synchronization at frame rates below 100 Hz. Thus, each camera is a stand-alone unit that can be moved and set up easily. Cables between cameras are needed only for synchronization above 100 Hz. In that case, the GPIO connectors of the cameras are wired together, and one camera is set as “primary”. When its exposure begins, it outputs a trigger signal that causes the remaining “secondary” cameras to begin capture simultaneously. Dedicated SBCs also save cost, because they remove the need for a more expensive central computer capable of handling the bandwidth from multiple cameras. They also make it easy to extend the system in the future to more than four cameras. This was achieved using NVIDIA Jetson Nano SBCs, each equipped with a wireless antenna for communication and a solid-state drive (SSD) for rapid data storage. As a result, images do not need to be buffered on the camera and can be recorded continuously at a sufficiently high frame rate (e.g., 200 Hz). NVIDIA’s Jetson platform provides a full Linux operating system with enough I/O for all auxiliary devices. It needs only a 5 V, 10 W power supply, easily provided by a battery pack.
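
The bandwidth that motivates a dedicated SBC and SSD per camera can be estimated with a short calculation of the raw data rate of one camera. This sketch assumes uncompressed 8-bit raw (Bayer) pixels, i.e., 1 byte per pixel. The actual pixel format and any compression used in GAIA-PTV are not stated here, so the numbers are indicative only.

```python
def raw_data_rate(width, height, fps, bytes_per_pixel=1):
    """Uncompressed data rate in bytes per second for one camera."""
    return width * height * fps * bytes_per_pixel

# Decimated mode used in the field (732 x 968 pixels at 200 fps), assuming 1 byte/pixel.
per_camera = raw_data_rate(732, 968, 200)
sequence_bytes = per_camera * 50       # one 50 s sequence (10,000 frames)
array_rate = 4 * per_camera            # what a single central computer would have to ingest

print(f"per camera: {per_camera / 1e6:.1f} MB/s")
print(f"per 50 s sequence: {sequence_bytes / 1e9:.2f} GB")
print(f"four-camera array: {array_rate / 1e6:.1f} MB/s")
```

Under this assumption, each camera produces roughly 142 MB/s, or about 7 GB per 50 s sequence. A single machine serving all four cameras would need to sustain about 0.57 GB/s.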

The cameras are FLIR Blackfly S U3-27S5C color units with the Sony IMX429 sensor (4.5 \(\mu\)m pixels). They are capable of 95 fps continuous recording at 2.8 MP (1464 \(\times\) 1936 pixels), or up to 200 fps at 0.7 MP using decimation. Each camera is paired with a 16 mm Fujinon lens rated for a 3.45 \(\mu\)m pixel pitch, whose aspherical design minimizes radial distortion.

As stated above, an additional requirement for the system was that it be able to image snow particles in a measurement region high above the ground, approximately 10 m. This height was chosen for four reasons. It avoids the flow effects of local surface “roughness” elements (e.g., bushes, small topographic changes) on snow settling. It ensures a more uniform light background in the images of all four cameras. It avoids contamination from re-suspended snow particles. Lastly, it matches previous experiments involving 2D tracking that were designed under similar considerations (Nemes et al. 2017; Li et al. 2021a, b). This design criterion introduced two challenges for the system. First, the lighting system illuminating the snow particles needed to be strong enough for those distances. Second, the camera calibration would need to be done far above the ground.

The sampling volume to be imaged typically measures around 4 m \(\times\) 4 m \(\times\) 6 m (x, y, z). To illuminate the snowflakes throughout such a large volume centered at a height of 10 m, a 5 kW spotlight is used, similar to that used by Hong et al. (2014) and Toloui et al. (2014). After reflecting off a 45-degree polished aluminum mirror, the beam is expanded at an 18-degree divergence half angle, so that it spreads over a 4 m wide region in the measurement volume. The conical illumination region can be further modified by adjusting the curvature of the mirror, so that the volume is elongated in the desired direction (e.g., downstream).

2.2 Calibration

The camera calibration cannot follow traditional approaches taken in the laboratory and instead is implemented using a UAV equipped with two rigidly connected lights that is flown throughout the measurement domain. While being imaged by the camera array, the UAV samples the entire measurement volume from edge to edge. The basis of this approach, which follows the method by Theriault et al. (2014), is to move an “object” of known dimensions through the measurement volume while identifying image coordinates of the object keypoints in each camera. Here, our object consists of two LEDs, one green and one red, held at a fixed separation of 34.5 cm by a carbon fiber tube, with the object “keypoints” being the LEDs themselves. This apparatus is attached to the UAV, which needs to be flown carefully throughout the measurement domain.
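
A pose estimate obtained from image correspondences alone (such as the eight-point algorithm used later in this section) is defined only up to an overall scale. The known LED separation is what fixes metric units. The following is a minimal sketch of that rescaling step. It assumes that an up-to-scale reconstruction of both LEDs in every frame is already available. The function and variable names are hypothetical, not those of easyWand. A full wand calibration may instead estimate the scale jointly during bundle adjustment.

```python
import numpy as np

def fix_scale(x_green, x_red, cam_centers, wand_length=0.345):
    """Rescale an up-to-scale reconstruction so that the median LED separation
    equals the known wand length (34.5 cm). Scaling is about the origin of the
    reconstruction frame, so relative geometry is preserved."""
    x_green = np.asarray(x_green, float)
    x_red = np.asarray(x_red, float)
    sep = np.linalg.norm(x_green - x_red, axis=1)   # per-frame separation, arbitrary units
    s = wand_length / np.median(sep)                 # median is robust to a few bad frames
    return s * x_green, s * x_red, s * np.asarray(cam_centers, float), s

# Toy example: a reconstruction in which the wand appears 2 units long.
g = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
r = np.array([[2.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
g_m, r_m, cams_m, s = fix_scale(g, r, [[0.0, 0.0, -10.0]])
```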

Snow tracking deployments, including those described in Sect. 3, are conducted at night, with artificial illumination. In such a case, the extent of the measurement volume in the horizontal plane, and the UAV within it, is visually apparent to the flight operator. Therefore, the UAV flight plan can be implemented manually, keeping within this x–y domain while monitoring the UAV altitude to stay within the desired region. A Holybro S500 V2 quadcopter UAV, with 480 mm wheelbase, is used for this purpose, with a Pixhawk flight controller operating on the open-source ArduPilot platform wherein the Mission Planner software can be used for monitoring the UAV.

However, GAIA-PTV was also designed with the future goal of performing other types of 3D PTV measurements in daylight, without artificial lighting. In that case, a precise, automated flight plan may need to be charted with GPS coordinates. Mission Planner offers many flight-planning features that are useful in this regard. These include tools for automated scanning of regions at various altitudes and the ability to inject real-time kinematic (RTK) positioning corrections into the GPS. The UAV is therefore equipped with an RTK-capable Ublox M8P GPS unit. When integrated with the NTRIP data stream from a local Minnesota Department of Transportation (MNDOT) base station, this unit can position the UAV with \(\approx\)cm-scale accuracy. Regular GPS units are typically only \(\approx\)1 m accurate, at best.

The UAV flight for calibration typically lasts about 5 min, while the cameras capture images at 30 fps in order to obtain several thousand positions at which the UAV LEDs are seen by all cameras. All images are processed using Python with in-house code to find all potential green and red LED candidates. A color filter is used in image processing, selecting the green and red bands in the RGB images separately, and an intensity threshold is applied. Poor candidates are further filtered using a temporal smoothness constraint to the LED positions in the 2D images.
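
The LED detection step can be sketched as follows. This is a minimal illustration, not the authors' in-house code. It assumes a single LED of each color per image, so one intensity-weighted centroid suffices; real code would label separate blobs. The thresholds `min_intensity`, `dominance` and `max_jump`, and the median-window check, are hypothetical choices standing in for the temporal smoothness constraint described above.

```python
import numpy as np

RED, GREEN = 0, 1  # channel indices in an (H, W, 3) RGB array

def led_centroid(rgb, channel, min_intensity=200, dominance=1.5):
    """Intensity-weighted centroid (row, col) of pixels that are bright in `channel`
    and dominate the other two channels; None if no pixel passes."""
    img = rgb.astype(float)
    target = img[..., channel]
    others = np.delete(img, channel, axis=2).max(axis=2)
    mask = (target >= min_intensity) & (target >= dominance * (others + 1.0))
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    w = target[rows, cols]
    return np.array([np.average(rows, weights=w), np.average(cols, weights=w)])

def temporal_filter(track, max_jump=5.0, half_window=2):
    """Flag 2D positions (N, 2; NaN where undetected) as valid unless they deviate
    by more than `max_jump` pixels from the median of a short centred window."""
    track = np.asarray(track, float)
    keep = np.zeros(len(track), dtype=bool)
    for i in range(len(track)):
        if np.isnan(track[i]).any():
            continue                                   # no detection in this frame
        window = track[max(0, i - half_window): i + half_window + 1]
        ref = np.nanmedian(window, axis=0)             # robust local reference position
        keep[i] = np.linalg.norm(track[i] - ref) <= max_jump
    return keep
```

A median window is used here, rather than the mean of the two neighbors, so that a single spurious detection does not also reject its neighbors.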

To obtain a calibration mapping from image to object space, the eight-point algorithm (Hartley and Zisserman 2003) is first used to estimate the camera poses (i.e., positions and orientations) based on a pinhole model. This estimate is then refined using sparse bundle adjustment (SBA) (Lourakis and Argyros 2009), as implemented in “easyWand” by Theriault et al. (2014). The uncertainty of the calibration obtained after SBA is assessed with two metrics. The first is the reconstructed LED separation in 3D space, which varies from frame to frame. The second is the reprojection error, given as the r.m.s. distance between the original and reprojected keypoints. If the mean reprojection error is too large, i.e., > 0.5 pixels, the data points with the largest reprojection errors are iteratively removed and the calibration is recalculated. Alternatively, more calibration points could be collected with further flights to reduce the error. Calibration flights are typically conducted both before and after the particle image datasets are collected.
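
The two uncertainty metrics can be illustrated with a short sketch. It assumes that each camera is described by a 3 \(\times\) 4 pinhole projection matrix. Linear (DLT) triangulation stands in for whatever reconstruction easyWand performs internally, and the helper names are hypothetical. The sketch returns the per-frame deviation of the reconstructed LED separation from 34.5 cm and a single r.m.s. reprojection error pooled over all cameras. A per-camera value would simply group the squared residuals by camera.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X (3,) with a 3x4 camera matrix P to pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(Ps, uvs):
    """Linear (DLT) triangulation of one point observed at pixels `uvs` by cameras `Ps`."""
    rows = []
    for P, (u, v) in zip(Ps, uvs):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    Xh = vt[-1]                      # right singular vector of smallest singular value
    return Xh[:3] / Xh[3]            # homogeneous -> Euclidean

def calibration_metrics(Ps, uv_green, uv_red, wand_length=0.345):
    """uv_green, uv_red: (n_frames, n_cams, 2) pixel positions of each LED.
    Returns per-frame separation error (m) and pooled r.m.s. reprojection error (px)."""
    sep_err, sq_res = [], []
    for g_obs, r_obs in zip(uv_green, uv_red):
        Xg, Xr = triangulate(Ps, g_obs), triangulate(Ps, r_obs)
        sep_err.append(np.linalg.norm(Xg - Xr) - wand_length)
        for P, g, r in zip(Ps, g_obs, r_obs):
            sq_res.append(np.sum((project(P, Xg) - g) ** 2))
            sq_res.append(np.sum((project(P, Xr) - r) ** 2))
    return np.array(sep_err), float(np.sqrt(np.mean(sq_res)))
```

With noise-free synthetic projections, both metrics vanish. With real detections, frames with large separation error or residual would be the candidates for the iterative removal described above.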

2.3 Particle tracking

After collecting particle images and obtaining a camera calibration, Lagrangian trajectories are extracted using predictive 3D PTV, following the implementation of Tan et al. (2020) in OpenLPT. This open-source implementation of 3D PTV is based on the Shake-the-Box (StB) algorithm of Schanz et al. (2016a), with additional improvements for ghost particle rejection. StB has been shown to be a robust predictive tracking approach. It iteratively and simultaneously solves the problems of triangulation and of matching/linking across frames, using the expected particle positions to handle dense particle images (e.g., 0.1 particles per pixel, ppp). In OpenLPT, trajectories used to predict subsequent particle positions need at least four time steps to initialize. This follows Schanz et al. (2016a), who found four to be adequate based on experience. Other initialization lengths may be feasible, but it is generally desirable to require fewer initialization frames. After initialization, lists of active, inactive, and exited tracks are maintained at each frame. The active tracks comprise short trajectories that have not yet reached the required initialization length and long tracks that have been successfully predicted. Once an active track is lost, it is moved to the inactive list. If the particle leaves the specified measurement domain, its track is moved to the exited list.
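
The following sketch makes the bookkeeping of active, inactive, and exited tracks concrete with a deliberately simplified predictive tracker. It links already-triangulated 3D points by greedy nearest-neighbour search around a predicted position. It is not Shake-the-Box or OpenLPT: there is no image-space “shaking” and no ghost rejection. The search radius, the constant-velocity guess for short tracks, and the quadratic extrapolation over the last four points are assumptions chosen for illustration.

```python
import numpy as np

INIT_LEN = 4  # frames required before a track is predicted with a polynomial fit

def predict(track):
    """Predict the next 3D position of a track (list of (3,) arrays)."""
    pts = np.asarray(track, float)
    if len(pts) == 1:
        return pts[-1]                                   # no motion information yet
    if len(pts) < INIT_LEN:
        return pts[-1] + (pts[-1] - pts[-2])             # constant-velocity guess
    t = np.arange(INIT_LEN, dtype=float)
    c = np.polyfit(t, pts[-INIT_LEN:], 2)                # (3, 3): quadratic per coordinate
    x = float(INIT_LEN)                                  # next time step
    return c[0] * x**2 + c[1] * x + c[2]

def step(active, inactive, exited, points, in_domain, search_radius):
    """Link one frame of triangulated `points` (M, 3) to the active tracks and
    return the new active list; lost tracks go to `inactive`, leaving ones to `exited`."""
    unused = list(range(len(points)))
    still_active = []
    for track in active:
        guess = predict(track)
        if not in_domain(guess):
            exited.append(track)          # predicted to leave the measurement domain
            continue
        if unused:
            d = np.linalg.norm(points[unused] - guess, axis=1)
            k = int(np.argmin(d))
            if d[k] <= search_radius:
                track.append(points[unused.pop(k)])
                still_active.append(track)
                continue
        inactive.append(track)            # no candidate close enough: track is lost
    # Points not claimed by any track start new (short) tracks.
    still_active.extend([[points[i]] for i in unused])
    return still_active
```

Calling `step` once per frame grows the tracks. Tracks with at least `INIT_LEN` points are the analogue of the “long” tracks above.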

Once trajectories of particles are obtained, their paths must be smoothed before computing higher-order quantities such as acceleration and curvature. A Gaussian filter kernel is convolved with the particle position vector in each component, with an optimal kernel size determined following the approach of Nemes et al. (2017). If the kernel is too small, acceleration data are contaminated by high-frequency noise, whereas if it is excessively large, fine-scale motions may be overly attenuated. In the results presented in Sect. 4, this filter length was 0.26 s, or \(\sim 3\tau _{\eta }\), where \(\tau _{\eta }\) is the Kolmogorov timescale, which is similar to that used in prior studies (Nemes et al. 2017; Li et al. 2021a).
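
The filtering step can be sketched as follows. The sketch assumes a Gaussian kernel truncated at `half_width` samples on either side, with analytic derivative kernels for velocity and acceleration. This is one common way to differentiate while filtering; whether Nemes et al. (2017) normalize their kernels this way is not stated here. The kernels are normalized to return exact derivatives for polynomials up to second order. Samples within `half_width` of the track ends are discarded rather than padded. How the reported 0.26 s filter length maps onto `sigma` and `half_width` is left as an assumption; at 200 fps, 0.26 s spans 52 frames.

```python
import numpy as np

def gaussian_kernels(sigma, half_width, dt):
    """Smoothing, first- and second-derivative kernels built from a truncated Gaussian,
    normalized to reproduce value, slope and curvature of quadratics exactly."""
    j = np.arange(-half_width, half_width + 1, dtype=float)
    g = np.exp(-j**2 / (2.0 * sigma**2))
    k0 = g / g.sum()                                   # unit gain
    k1 = j * g                                         # antisymmetric, sums to zero
    k1 = k1 / (dt * np.sum(j * k1))                    # sum(k1 * j * dt) = 1
    k2 = (j**2 / sigma**2 - 1.0) * g                   # shape of the Gaussian's 2nd derivative
    k2 = k2 - k2.mean()                                # zero response to a constant
    k2 = k2 * 2.0 / (dt**2 * np.sum(j**2 * k2))        # sum(k2 * (j*dt)**2 / 2) = 1
    return k0, k1, k2

def filter_track(x, dt, sigma, half_width):
    """x: (N, d) positions sampled every dt. Returns filtered position, velocity and
    acceleration for the N - 2*half_width interior samples (track ends are dropped)."""
    x = np.asarray(x, float)
    out = []
    for k in gaussian_kernels(sigma, half_width, dt):
        # np.correlate(..., 'valid')[n] = sum_m x[n + m] * k[m], centred on sample n + half_width
        out.append(np.stack([np.correlate(x[:, c], k, mode="valid")
                             for c in range(x.shape[1])], axis=1))
    return tuple(out)
```

A larger `sigma` suppresses more noise in the acceleration but also attenuates fine-scale motions. This is the trade-off that the optimal kernel size is chosen to balance.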

2.4 Method validation and demonstration

Prior to the deployment of GAIA-PTV in the snow, two experiments were undertaken to validate the approach. First, the UAV-based calibration method was tested at scale to determine whether it could provide sufficiently accurate camera pose estimates. Figure 2 shows a spatial map of the path taken by the UAV. Markers denote the reconstructed positions of the UAV lights, colored by the deviation of their reconstructed separation from the true distance between the lights, D. For this case, the UAV’s flight was automated with the onboard RTK GPS unit to scan a volume of approximately 4 m \(\times\) 4 m \(\times\) 4 m. Reconstruction errors are largest near the edges of the calibration volume, where they are at most \(\approx\)1 cm, and much smaller throughout the core of the volume. A separate scale shows this deviation normalized by the measured light separation of 34.5 cm. On that scale, relative errors are below 3%.

A further experiment was performed under controlled conditions to test the tracking capabilities provided by the calibration when used as input to the OpenLPT software. For this experiment, standard hole-punch confetti paper particles (6 mm diameter) were dropped manually in still air in a large warehouse. It was not feasible to densely fill an entire measurement volume with such particles. However, they mimicked many of the conditions expected in the field and allowed some ground-truthing of their fall speed. In particular, the disk-shaped confetti were expected to follow complex trajectories, with tumbling motions similar to those of some snowflakes, such as dendritic ones. Cameras were positioned in a fan array at an 11 m radial distance from the center of the measurement volume, to reproduce a scale similar to that of the field measurements. Because the experiment was indoors, the calibration was not performed with a UAV. Instead, the LEDs and carbon fiber rod were held by hand and moved manually through the measurement volume. A sample confetti image (after enhancement) and the resulting trajectories are shown in Fig. 3, where it can be seen that long trajectories are captured. Furthermore, approximately 93% of the confetti particles were estimated to be tracked. This estimate compares two quantities. The first is the number of particles identified in each image, after filtering out double-counted particles whose centroids were within 1 pixel of each other. The second is the average number of particles tracked per frame, obtained by dividing the summed durations of all particle tracks (in frames) by the number of frames.
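
The yield estimate described above can be sketched as follows. The sketch assumes that the identified particle centroids come from one camera image per frame. The text does not specify how per-image counts were combined across cameras. `dedupe` and `tracking_yield` are hypothetical helper names.

```python
import numpy as np

def dedupe(centroids, tol=1.0):
    """Drop centroids within `tol` pixels of an already kept centroid (double counts)."""
    kept = []
    for c in np.asarray(centroids, float):
        if all(np.linalg.norm(c - k) > tol for k in kept):
            kept.append(c)
    return kept

def tracking_yield(centroids_per_frame, track_lengths):
    """Mean number of tracked particles per frame divided by the mean number of
    (de-duplicated) particles identified per image."""
    n_frames = len(centroids_per_frame)
    identified = np.mean([len(dedupe(c)) for c in centroids_per_frame])
    tracked_per_frame = np.sum(track_lengths) / n_frames   # summed track durations in frames
    return float(tracked_per_frame / identified)
```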

Looking closer at individual trajectories, as in Fig. 4a, it can be seen that the fine-scale motions of the confetti disks can be resolved, with an oscillation in the trajectory as the disk tumbles end-over-end. The average fall speed of individual confetti particles was also measured in independent experiments and compared with the distribution of particle fall speed measured with PTV, which show close agreement (Fig. 4b).

3 Experiment

3.1 Field site

A series of experiments measuring natural snowfall was undertaken during the 2021/2022 winter season. All measurements were performed at the University of Minnesota Outreach, Research and Education (UMore) Park, in Rosemount, Minnesota, near the EOLOS wind energy research station. The region surrounding the deployment location of the GAIA-PTV system is depicted in Fig. 5. It consists of relatively flat farmland, apart from the nearby meteorological (met) tower. The met tower is equipped with Campbell Scientific CSAT3 sonic anemometers sampling at 20 Hz, at elevations of 10, 30, 80, and 129 m above ground. It also carries cup-and-vane anemometers at elevations of 7, 27, 52, 77, 102, and 126 m above ground. The met tower is located approximately 50 m from the GAIA-PTV system; further details are provided in Toloui et al. (2014) and Heisel et al. (2018).

The data analyzed herein were collected on April 17, 2022, between 20:39 and 23:00 CST. Multiple datasets were collected during this period; here we present an exemplary subset of data from 20:59 to 21:00. Wind conditions and air temperature during this period are shown in Fig. 6a–c. The wind speed measured by the 10 m sonic anemometer was approximately 1.7 m/s, steady within ± 0.3 m/s.

To characterize the properties of the snow particles that fell during the deployment period, independent measurements were conducted with a digital inline holography system. This system is similar to that used by Nemes et al. (2017) and is described in more detail by Li et al. (2022). Snow particles can exhibit diverse physical characteristics (Garrett et al. 2015; Grazioli et al. 2022), so it is important to measure them during each experiment. The holographic apparatus was mounted on a tripod approximately 10 m from the camera system and captured data between 20:13 and 21:58 CST. The morphological characteristics of the particles are described in Fig. 6d, e; particles typically measured less than 5 mm in diameter. The observed morphological types were mostly single or aggregated dendrites. Note that this p.d.f. differs from those shown by Nemes et al. (2017) and Li et al. (2021a). In those studies, a distinct peak in the distribution could be identified, whereas here the p.d.f. increases toward zero diameter. Two effects contribute to this difference. The first is the variability of snow particle shape and size observed during the deployment. The second is the improved accuracy of particle identification, thanks to a machine-learning algorithm able to identify snow particles over a larger range of sizes (Li et al. 2022).

The field site and equipment are depicted in Fig. 5a. It shows the layout of the spotlight illumination, met tower, camera system, and UAV, together with an example of the raw particle images obtained. The layout is also illustrated schematically in the inset of Fig. 1. Cameras were mounted on tripods in a “fan” array spanning 90 degrees of a circular arc with a 5.5 m radius. All were tilted upward at 58 degrees from horizontal, which produces the varying magnification across the field of view seen in Fig. 7b. The resulting measurement volume was approximately 4 m \(\times\) 4 m \(\times\) 6 m in x, y, and z, the streamwise, spanwise, and wall-normal directions, respectively. Note that the linear size of the field of view is of the same order of magnitude as the integral scale of the flow at that height, \(\simeq k_v z \simeq 4\) m. This estimate uses the von Kármán constant \(k_v\) and the mixing length assumption in turbulent boundary layers. Cameras recorded at 200 fps in decimated mode with 732 \(\times\) 968 pixels, providing a spatial resolution of \(\approx\)6.3 mm per pixel at the center of the FOV. For each dataset, 10,000 frames were recorded by each camera, providing 50 s sequences.
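
As a rough consistency check on the quoted resolution, the object-space size of one pixel can be estimated with a pinhole model: footprint = pitch \(\times\) decimation \(\times\) distance / focal length. The sketch makes two assumptions. First, the 2\(\times\) decimation (1464 \(\times\) 1936 to 732 \(\times\) 968 pixels) doubles the effective pixel pitch. Second, the volume center lies on the optical axis of a camera tilted 58 degrees over a 5.5 m horizontal offset. The camera mounting height and exact aim point are not given here.

```python
import math

def pixel_footprint(pixel_pitch, decimation, focal_length, distance):
    """Object-space size (m) of one effective pixel, pinhole approximation."""
    return pixel_pitch * decimation * distance / focal_length

radius = 5.5                      # m, horizontal distance from camera to arc centre (from text)
tilt = math.radians(58.0)         # camera elevation angle (from text)
slant = radius / math.cos(tilt)   # m, line-of-sight distance to a point above the arc centre
rise = radius * math.tan(tilt)    # m, height gained along that line of sight
footprint = pixel_footprint(4.5e-6, 2, 16e-3, slant)

print(f"slant distance {slant:.2f} m, rise {rise:.2f} m, footprint {footprint * 1e3:.2f} mm/pixel")
```

Under these assumptions, the slant distance is about 10.4 m and the footprint about 5.8 mm per pixel, close to the quoted \(\approx\)6.3 mm. The rise of about 8.8 m, plus an assumed tripod height of order 1 m, is consistent with a volume centered near 10 m. The same pixel-to-length conversion relates the 0.275 pixel reprojection error to the \(\approx\)1.73 mm reported in Sect. 3.2 (1.73 mm / 0.275 pixels \(\approx\) 6.3 mm per pixel).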

3.2 Calibration

A measurement volume of approximately 4 m \(\times\) 4 m \(\times\) 6 m was calibrated (after the particle image datasets were collected), as depicted in the inset of Fig. 1. There, red and green markers indicate the reconstructed trajectory of the UAV as it flew through the measurement region. Cameras recorded the UAV lights at 30 fps during this sequence. This yielded 4063 frames in which both lights were seen by all four cameras and successfully identified. The resulting mean reprojection error was 0.275 pixels across the four cameras, or approximately 1.73 mm at the center of the measurement domain. This is an improvement over the validation experiment, likely because the longer calibration sequence captured more calibration points. Note that 1.73 mm is of the same order as \(d_s\), the mean snow particle diameter, and \(<1\%\) of \(I_d\), the mean inter-particle distance (see Sect. 4.3).

4 Results

4.1 Particle trajectories

Having obtained this camera calibration, snow particle images could be processed using 3D PTV. In the following, the coordinate system is defined such that x is the streamwise direction, y is spanwise, and z is vertical.

The distribution of snow particle trajectory durations, obtained from 250,619 particles tracked over 10,000 frames, is shown in Fig. 8a. Because tracking particle motions in 3D is challenging, durations are skewed toward lower values and taper off at the long end at approximately 400 frames, or 2 s. The distribution excludes trajectories shorter than 5 frames, following the approach of Tan et al. (2020) and Schanz et al. (2016a). Such trajectories have not passed the initialization phase or developed sufficient duration, so they are not considered valid for studying particle kinematics. Approximately 1800 “long” particle trajectories (\(>4\) frames) and 7000 “short” trajectories are obtained at each frame. The long trajectories are tracked continuously until they either exit the domain or become “inactive” (i.e., lost). On average, \(\approx\)11,000 snow particles are present in the measurement volume, based on the number of particles identified in all four camera frames. Thus, the yield of reconstructed particle positions, out of all particles physically in the measurement domain, is estimated at 80%. The yield of long trajectories is approximately 20%. This is lower than in the confetti validation experiment. The most likely causes are imperfect overlap of the camera views at the edges of the FOV and poorer image quality in the upper region of the measurement volume. The latter effect is shown in Fig. 8b, where the distribution of long trajectories is projected onto the x–z plane. Trajectories are found throughout the volume, but their concentration is highest in the lower part of the measurement domain, where illumination and magnification are also better.

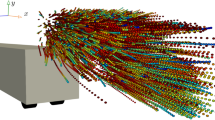

A sample set of snow particle trajectories, from approximately 1 s of recording, is shown in Fig. 9 from two different vantage points. Note that the x and y axes have been rotated such that the x-axis is aligned with the mean streamwise direction of the particle trajectories. When viewed from a vantage point nearly perpendicular to the x-axis (Fig. 9a), the trajectories appear relatively linear and elongated in the streamwise direction, similar to what would be captured by a 2D imaging method. Closer inspection from a vantage point more nearly parallel to the x-axis (Fig. 9b) reveals more complex particle kinematics, with subtle oscillations in trajectory curvature. These oscillations are emphasized by the colormap applied to the trajectories, which shows the lateral acceleration component, \(a_y\); this component is chosen because it highlights the three-dimensionality of the particle motion that cannot be captured with planar or 2D imaging methods. The particles along these trajectories accelerate back and forth, suggesting non-negligible interactions with the turbulent flow and its different scales of vortical motion (Li et al. 2021b). Not all neighboring particles exhibit this behavior, however. Some, such as those in the inset of Fig. 9b, translate relatively linearly along the primary flow direction as they settle, as indicated by their uniform, low-magnitude \(a_y\) coloring, while others display relatively strong changes in \(a_y\). We speculate that this results from differences in the size and morphology of the snow particles, which affect their inertial properties and their ability to follow different scales of the flow, even though neighboring particles sample similar regions of the flow. Note that the mean inter-particle distance is \(\approx 0.18\) m, or \(\approx 2\lambda _T\), where \(\lambda _T\) is the Taylor microscale estimated from the nearby sonic anemometer. Since \(\lambda _T\) provides a reasonable estimate of the thickness of shear layers and the size of vortex cores (Heisel et al. 2021), nearby snow particles may still sample different flow topologies.
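Rotating the coordinates so that x follows the mean streamwise direction, and estimating the lateral acceleration \(a_y\) along each track, can be sketched as below. This is a minimal illustration that assumes unfiltered finite differences (`np.gradient`) and a rotation about the vertical z-axis by the heading of the ensemble-mean horizontal velocity; the processing used in this work (e.g., track filtering or polynomial fitting) may differ, and all function names are hypothetical.

```python
import numpy as np


def streamwise_rotation(mean_u, mean_v):
    """3x3 rotation about z that maps the mean horizontal velocity onto +x."""
    theta = np.arctan2(mean_v, mean_u)
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, s, 0.0],
                     [-s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def kinematics(positions, dt):
    """Velocity and acceleration of one track by finite differences.

    positions: (N, 3) array of (x, y, z) in metres sampled every dt seconds.
    np.gradient uses central differences in the interior and one-sided
    differences at the ends; no filtering is applied, so noise in the
    positions is strongly amplified in the acceleration.
    """
    vel = np.gradient(positions, dt, axis=0)
    acc = np.gradient(vel, dt, axis=0)
    return vel, acc


def to_streamwise_frame(tracks, dt):
    """Velocities and accelerations of all tracks in the streamwise frame."""
    kin = [kinematics(np.asarray(p, dtype=float), dt) for p in tracks]
    all_vel = np.concatenate([v for v, _ in kin])
    rot = streamwise_rotation(all_vel[:, 0].mean(), all_vel[:, 1].mean())
    # Row vectors are rotated with r @ rot.T, i.e. rot @ r for each sample.
    return [(v @ rot.T, a @ rot.T) for v, a in kin]


# Usage: one synthetic track drifting diagonally while oscillating laterally
dt = 1.0 / 200.0  # assumed 200 Hz frame rate
t = np.arange(200) * dt
track = np.column_stack([t, t + 0.01 * np.sin(2 * np.pi * 2 * t), -t])
(vel, acc), = to_streamwise_frame([track], dt)
a_y = acc[:, 1]  # lateral acceleration, as used to color trajectories
```

In practice, some smoothing of the positions before differentiation is usually needed, because double differentiation of noisy positions dominates the acceleration signal.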

4.2 Acceleration and curvature

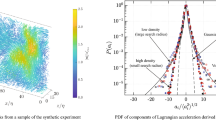

The statistics of the particle kinematics explored qualitatively in the previous section are presented in Fig. 10, which shows p.d.f.s of settling velocity, acceleration components, and curvature. The settling velocity displays an approximately normal distribution, weakly skewed toward lower fall speeds. For now, we do not attempt to correlate settling velocity with particle size, because this relationship is exceedingly complex and will require additional datasets. Particle drag and density are functions of the snow morphological type (e.g., dendrites, plates, graupel), and the projected drag area may vary with particle orientation and falling style. Moreover, turbulence can enhance the settling velocity when the critical Stokes number is approached and particles preferentially sample certain regions of the flow (Li et al. 2021b). Further experiments across a broad range of meteorological conditions beyond those presented herein will hopefully enable such relations to be established on a statistical basis, accounting for these effects.

In terms of acceleration, the spanwise component, \(a_y\), is generally of similar magnitude to \(a_x\) and \(a_z\), though the distribution tails differ; these spanwise motions are therefore important to capture. All three components display wider tails than a normal distribution. The vertical component, \(a_z\), is skewed toward negative accelerations, while the streamwise component, \(a_x\), is more strongly skewed toward positive accelerations.
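The tail and asymmetry comparisons above can be quantified with standardized moments: a Gaussian has skewness 0 and flatness (kurtosis) 3, so flatness above 3 indicates heavier-than-normal tails. The sketch below is one common choice and not necessarily the statistic used for Fig. 10; `acc` is assumed to be an (N, 3) array of accelerations in the streamwise-aligned frame, and the function names are hypothetical.

```python
import numpy as np


def skewness_flatness(samples):
    """Standardized third (skewness) and fourth (flatness) moments.

    A Gaussian has skewness 0 and flatness 3; flatness > 3 indicates tails
    heavier than a normal distribution.
    """
    x = np.asarray(samples, dtype=float)
    d = x - x.mean()
    var = np.mean(d ** 2)
    return np.mean(d ** 3) / var ** 1.5, np.mean(d ** 4) / var ** 2


def summarize_components(acc):
    """Skewness and flatness of each column (a_x, a_y, a_z) of an (N, 3) array."""
    return {name: skewness_flatness(acc[:, i])
            for i, name in enumerate(("a_x", "a_y", "a_z"))}
```

A negative skewness for \(a_z\) and a positive skewness for \(a_x\) would correspond to the asymmetries described above; the same function applies to settling velocity samples.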

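Curvature is given as

\[ \rho = \frac{1}{R} = \frac{\lVert \textbf{v} \times \textbf{a} \rVert}{\lVert \textbf{v} \rVert^{3}} \tag{1} \]
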
where \(\rho\) is the curvature, R is the radius of curvature, \(\textbf{v}\) and \(\textbf{a}\) are the velocity and acceleration vectors, respectively, and double bars indicate the L2-norm. The distribution of R, plotted in Fig. 10c, is calculated in both 2D and 3D; in the 2D case only the x and z components of \(\textbf{v}\) and \(\textbf{a}\) are used, simulating the components that would be resolved by a planar imaging system aligned parallel to the streamwise direction. The two distributions differ markedly: the 2D case is skewed toward larger radii (i.e., weaker curvature), with distribution peaks at approximately 8 m and 6 m for the 2D and 3D cases, respectively. This agrees with the behavior observed in the sample trajectories of Fig. 9c, which showed significant lateral accelerations, and is expected given the p.d.f.s of the acceleration components shown in Fig. 10b. Where a particle oscillates in the y-direction, a 2D tracking system would not register this out-of-plane motion and would thus return larger radii of curvature. Particle inertial properties are known to affect the curvature and acceleration distributions [see, e.g., Bec et al. (2006)], as heavier-than-fluid particles cannot be trapped in vortical flows. Hence, the radius of curvature of inertial particle trajectories, when measured in a fixed reference frame as done here, can be expected to exceed the typical size of vortex cores. However, a more in-depth analysis of coherent fluid structures is beyond the scope of this work and, even for neutrally buoyant particle trajectories, would require careful treatment using objective metrics from a Lagrangian framework (Haller et al. 2021).
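The 2D/3D comparison of the radius of curvature can be sketched with the standard relation \(R = \lVert \textbf{v} \rVert^3 / \lVert \textbf{v} \times \textbf{a} \rVert\), keeping only the x and z components for the planar case. This is an illustrative sketch with a hypothetical function name; `vel` and `acc` are assumed to be (N, 3) arrays ordered (x, y, z).

```python
import numpy as np


def radius_of_curvature(vel, acc, planar=False):
    """Radius of curvature R = ||v||^3 / ||v x a|| for each sample.

    vel, acc: (N, 3) arrays ordered (x, y, z). With planar=True only the x and
    z components are kept, mimicking a planar system aligned with the
    streamwise direction; the 2D cross product then reduces to the scalar
    v_x a_z - v_z a_x. Straight-line motion (zero cross product) returns inf.
    """
    v = np.asarray(vel, dtype=float)
    a = np.asarray(acc, dtype=float)
    if planar:
        v, a = v[:, [0, 2]], a[:, [0, 2]]
        cross_norm = np.abs(v[:, 0] * a[:, 1] - v[:, 1] * a[:, 0])
    else:
        cross_norm = np.linalg.norm(np.cross(v, a), axis=1)
    speed = np.linalg.norm(v, axis=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        return speed ** 3 / cross_norm
```

For a particle circling with radius 2 m in the horizontal x–y plane, the 3D estimate returns 2 m, whereas the x–z projection sees only back-and-forth motion along x and returns an infinite radius; this is the mechanism by which planar measurements bias R upward.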

4.3 Clustering

Three-dimensional particle cloud reconstructions are particularly advantageous compared to 2D planar imaging because they enable direct quantification of particle concentration statistics and clustering behavior without 2D assumptions. This can be achieved using Voronoï diagrams, spatial tessellations of the particle cloud in which the volume of each cell is inversely proportional to the local concentration. This method is preferable to alternatives such as box counting because it avoids bias from input parameter choices such as bin size, and it is robust to particle sub-sampling (up to 50%), which is often unavoidable in experimental data (Monchaux 2012). Each Voronoï cell is defined such that all points within it (except on its boundary) are closer to the single particle lying within the cell than to any other particle. Thus, each Voronoï cell is associated with a given particle, and the cell faces and vertices are equidistant from the neighboring particles that share them. An example of a single Voronoï cell is shown in Fig. 11a, illustrating the complex topology of the cell in 3D space, while the p.d.f. of Voronoï cell size is shown in Fig. 11b. Here, the length scales associated with the cell size are shown for both the full 3D data and quasi-2D data. The 2D case is simulated for comparison by computing the 2D Voronoï tessellation of particles found within sub-volumes oriented in the x–z plane, with their y-coordinate removed. Two sub-volume thicknesses are compared, 0.3 m and 0.1 m, similar to those of previous 2D planar snow tracking experiments (Nemes et al. 2017; Li et al. 2021a, b). In all cases, 2D or 3D, the data are taken from particles within \(\pm 1\) m of the measurement domain center (in all directions) to avoid unwanted effects near the boundaries. The length scale, L, is the cube root of the cell volume for the 3D case and the square root of the cell area for the 2D case. The distribution of L peaks at 0.18 m for the 3D case, indicative of the true inter-particle spacing, \(I_d\), whereas the 2D cases depend on the sub-volume thickness. The thicker 2D sub-volume yields smaller L because more particles are projected onto the x–z plane than for the thin sub-volume (Monchaux 2012).
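A minimal sketch of the tessellation step using SciPy's `Voronoi` and `ConvexHull` is given below. It mirrors the procedure described above (3D cells within \(\pm 1\) m of the domain center, and quasi-2D slabs with the y-coordinate dropped), but the function names are hypothetical and this is not necessarily how the analysis in this work was implemented. Positions are assumed to be in metres, with columns (x, y, z).

```python
import numpy as np
from scipy.spatial import ConvexHull, Voronoi


def voronoi_cell_sizes(points, center, half_width=1.0):
    """Voronoi cell volumes (3D) or areas (2D) for particles near `center`.

    The tessellation uses every particle so that cells near the analysis
    window are bounded by their true neighbours; only particles within
    +/- half_width of `center` in every direction are reported, and
    unbounded cells are skipped.
    """
    pts = np.asarray(points, dtype=float)
    vor = Voronoi(pts)
    inside = np.all(np.abs(pts - np.asarray(center)) <= half_width, axis=1)
    sizes = []
    for i in np.flatnonzero(inside):
        region = vor.regions[vor.point_region[i]]
        if len(region) == 0 or -1 in region:
            continue  # cell extends to infinity
        # Voronoi cells are convex, so the hull of their vertices is the cell;
        # ConvexHull.volume is an area in 2D and a volume in 3D.
        sizes.append(ConvexHull(vor.vertices[region]).volume)
    return np.array(sizes)


def slab_to_2d(points, y_center, thickness):
    """Keep particles with |y - y_center| < thickness / 2 and drop y (x-z plane)."""
    pts = np.asarray(points, dtype=float)
    in_slab = np.abs(pts[:, 1] - y_center) < 0.5 * thickness
    return pts[in_slab][:, [0, 2]]


def cell_length_scale(sizes, dim):
    """Cube root of volumes (dim=3) or square root of areas (dim=2)."""
    return np.asarray(sizes) ** (1.0 / dim)
```

For the quasi-2D case, `slab_to_2d` would be applied first (e.g., with a thickness of 0.3 m or 0.1 m), and the resulting (x, z) points passed to `voronoi_cell_sizes` with a two-component center.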

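Once the volumes (or areas in the 2D case) are normalized by their respective means, the data can be compared with those of randomly distributed particles (Fig. 11c), whose distributions, following Ferenc and Néda (2007), are given for the 2D and 3D cases, respectively, by

\[ f_{2D}(\tilde{V}) = \frac{343}{15}\sqrt{\frac{7}{2\pi}}\,\tilde{V}^{5/2}\exp\left(-\frac{7}{2}\tilde{V}\right) \tag{2} \]

\[ f_{3D}(\tilde{V}) = \frac{3125}{24}\,\tilde{V}^{4}\exp\left(-5\tilde{V}\right) \tag{3} \]

where \(\tilde{V}\) is the cell area (2D) or volume (3D) normalized by its mean.
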
Fig. 11 a Example of a single Voronoï cell produced by the tessellation, where the red dot indicates the snow particle, blue dots indicate vertices, and green lines are the ridges of the cell; b probability density functions of cell length scales, L, comparing the 2D and 3D cases; c probability density functions in log–log space of cell volumes, compared against models from Ferenc and Néda (2007) for randomly distributed particles given by Eqs. 2 and 3

Deviations from these distributions indicate clustering of particles due to flow and particle inertia. In all comparisons between the snow data and the profiles of \(f_{2D}\) and \(f_{3D}\), the snow particles display clustering behavior. Evidence of such clustering can be observed qualitatively in the raw images, as indicated in the inset of Fig. 7b, though the image is a projection of all particles across the depth of the volume, so the apparent spacing in the images may not reflect the true spacing. Furthermore, the distributions suggest that a 2D measurement of the same snow particles would have slightly underestimated the extent of this clustering. This is shown by the disparity between the 3D experimental distribution and the \(f_{3D}\) profile, which is greater than the corresponding disparity for the 2D data. This holds for both the “thick” and “thin” 2D data; note also that the normalization largely removes the differences observed in Fig. 11b. Our results are largely consistent with those of Monchaux (2012) concerning 2D/3D biases in Voronoï analysis, wherein differences between 2D and 3D treatments are minor though appreciable. It may be speculated, however, that the extent of these differences depends on the particular type of flow (e.g., a turbulent boundary layer) and the statistically persistent 3D vortical structures it contains.
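The random-particle reference curves can be evaluated from the Ferenc and Néda (2007) fit, which for dimension d is a unit-mean gamma distribution, \(f(\tilde{V}) = C\,\tilde{V}^{(3d-1)/2}\exp\left(-\tfrac{3d+1}{2}\tilde{V}\right)\). The sketch below also computes one scalar clustering indicator common in the Voronoï literature, the standard deviation of the normalized cell sizes relative to the random case; this indicator is an illustrative assumption and is not reported in this work. Function names are hypothetical.

```python
import numpy as np
from math import gamma


def ferenc_neda_pdf(v, dim):
    """Ferenc & Neda (2007) p.d.f. of normalized Poisson-Voronoi cell size v = V/<V>.

    f(v) = C v**(k - 1) exp(-k v) with k = (3 dim + 1) / 2 and C = k**k / Gamma(k),
    i.e. a gamma distribution with unit mean. dim=2 gives
    C = (343/15) sqrt(7/(2 pi)); dim=3 gives C = 3125/24.
    """
    k = (3 * dim + 1) / 2.0
    v = np.asarray(v, dtype=float)
    return k ** k / gamma(k) * v ** (k - 1) * np.exp(-k * v)


def clustering_ratio(sizes, dim):
    """Std of normalized cell sizes divided by the random-case std, 1/sqrt(k).

    Under this model, values above 1 indicate a broader size distribution
    than for randomly placed particles, i.e. clusters and voids.
    """
    k = (3 * dim + 1) / 2.0
    normalized = np.asarray(sizes, dtype=float) / np.mean(sizes)
    return normalized.std() * np.sqrt(k)
```

Because the fit has unit mean and variance \(1/k\), the random-case standard deviation is about 0.53 in 2D and 0.45 in 3D, which gives a simple reference against which the widened tails in Fig. 11c can be compared.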

5 Conclusions and discussion

The GAIA-PTV system presented herein has been demonstrated to provide unprecedented Lagrangian tracking of natural snow settling motions in 3D using a multiview imaging array. Snow particles are tracked within a volume measuring approximately 4 m \(\times\) 4 m \(\times\) 6 m. The system uses a UAV to enable calibration far from the surface, centered at an altitude of 10 m, providing long trajectories that are free from flow disturbances due to the ground. The apparatus is robust in the field, can be deployed rapidly under harsh snowfall conditions, and is mobile and flexible for alternative camera arrangements as needed. The modular camera and data acquisition system also allows easy expansion by adding cameras, each of which stores data and operates independently; wireless synchronization is also possible at the lower frame rates suitable for larger fields of view.

The results presented herein provide a snapshot of the analyses enabled by GAIA-PTV and demonstrate the utility of measuring particle kinematics in 3D rather than 2D. Owing to the long trajectories that can be measured, further Lagrangian diagnostics not explored herein are also possible, such as relative pairwise dispersion (e.g., Pumir et al. 2000; Gibert et al. 2010; Bragg et al. 2016) or gravitational drift (Berk and Coletti 2021). Such analyses can contribute to fundamental investigations of particle–turbulence interactions and inertial particle dynamics (Brandt and Coletti 2022). With future experiments, systematic investigations of snow settling dynamics across a range of meteorological conditions will be possible, offering unique insights into particle kinematics and clustering. Furthermore, the GAIA-PTV system can be extended to measure the transport of other particles in atmospheric flows, such as droplet sprays and dispersed pollen (Sabban and van Hout 2011). The system was designed to be both robust in the field, as demonstrated under harsh winter conditions, and scalable to the needs of the flow phenomena of interest.

In future work, two key limitations of the current system could be addressed. Firstly, as with most 3D PTV systems, the Lagrangian tracking capability is limited in part by the size of the domain and the fact that it is fixed. If the system could be made mobile, for example by using UAV-mounted cameras instead of fixed tripod-mounted cameras on the ground, it would enable much longer tracking times for studying particle dispersion. As has been done with translating laboratory imaging systems [e.g., the “flying PIV” of Zheng and Longmire (2013)], the system could then move with the particles of interest, keeping them within the measurement domain. Such advances are challenging, however, primarily because of the difficulty of calibrating this type of system.

The use of a UAV for calibration imposes some constraints on the feasible operating conditions. When the mean wind speed at 10 m altitude (typically assessed from forecasts prior to experiments) exceeds 24 km/h (15 mph), UAV flight becomes hazardous, and the camera array cannot be calibrated with the present approach. This can be overcome, however, with a larger, more powerful UAV than the one presently used.

Additionally, under highly concentrated snowfall, the particle image density may become too high for the volume measured herein (4 m \(\times\) 4 m \(\times\) 6 m). In this case, the measurement volume must be reduced by moving the cameras closer to it, which increases the inter-particle spacing in the images. The modular camera approach intentionally adopted by this system makes such adjustments, which must be made in real time in the field, relatively straightforward. Note that the size of snow particles that can be captured by the GAIA-PTV system depends on both the proximity of the cameras and their sensors. In more challenging conditions where particles scatter less light (e.g., due to smaller effective diameters), the cameras can be moved closer to the measurement volume to achieve a smaller field of view. Additionally, more sensitive monochrome cameras with larger pixels may be used; larger sensors with such pixels would also enable the field of view to be expanded to larger measurement domains.
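Why shrinking the measurement volume relieves image density can be seen from a back-of-the-envelope estimate of particles per pixel (ppp), assuming every particle in the volume is imaged once by each camera. The concentration below is derived from the \(\approx\)11,000 particles reported in the 4 m \(\times\) 4 m \(\times\) 6 m volume; the sensor resolution is a hypothetical value, and overlap of particle images and the larger images produced at higher magnification are ignored.

```python
def particles_per_pixel(concentration_m3, volume_m3, sensor_px):
    """Approximate particle image density (ppp) for one camera.

    Assumes every particle in the measurement volume appears once in the
    image, so the number of particle images is concentration x volume.
    """
    return concentration_m3 * volume_m3 / sensor_px


def box_volume(lx, ly, lz):
    """Volume (m^3) of a rectangular measurement domain."""
    return lx * ly * lz


# Concentration implied by ~11,000 particles in a 4 m x 4 m x 6 m volume
conc = 11_000 / box_volume(4.0, 4.0, 6.0)  # ~115 particles per m^3
SENSOR_PX = 1920 * 1080                    # hypothetical sensor resolution

full = particles_per_pixel(conc, box_volume(4.0, 4.0, 6.0), SENSOR_PX)
half = particles_per_pixel(conc, box_volume(2.0, 2.0, 3.0), SENSOR_PX)
print(f"ppp full volume: {full:.4f}, halved dimensions: {half:.5f}")
```

At fixed concentration and sensor size, halving each dimension of the volume reduces the image density eightfold, which is the trade-off described above between domain size and the snowfall intensity that can be handled.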

The need for artificial illumination, as used herein, constrains measurement opportunities to the hours of darkness, when the particles of interest can be illuminated against the night sky. Advances in particle detection methods capable of handling natural daylight illumination could significantly broaden the use cases and simplify deployment by removing the need for high-wattage light sources. The primary challenge is overcoming the low contrast available when imaging during daylight, but rapid developments in computer vision and object detection may yield promising tools in this regard.

Data availability

The data that support the findings of this study are available from the corresponding author, J.H., upon reasonable request.

References

Abraham A, Hong J (2020) Dynamic wake modulation induced by utility-scale wind turbine operation. Appl Energy 257:114003

Aksamit NO, Pomeroy JW (2016) Near-surface snow particle dynamics from particle tracking velocimetry and turbulence measurements during alpine blowing snow storms. Cryosphere 10(6):3043–3062

Aksamit NO, Pomeroy JW (2018) The effect of coherent structures in the atmospheric surface layer on blowing-snow transport. Bound Layer Meteorol 167(2):211–233

Bec J, Biferale L, Boffetta G et al (2006) Acceleration statistics of heavy particles in turbulence. J Fluid Mech 550:349–358

Berk T, Coletti F (2021) Dynamics of small heavy particles in homogeneous turbulence: a Lagrangian experimental study. J Fluid Mech 917:A47

Bragg AD, Ireland PJ, Collins LR (2016) Forward and backward in time dispersion of fluid and inertial particles in isotropic turbulence. Phys Fluids 28(1):013305

Brandt L, Coletti F (2022) Particle-laden turbulence: progress and perspectives. Ann Rev Fluid Mech 54:159–189

Discetti S, Coletti F (2018) Volumetric velocimetry for fluid flows. Meas Sci Technol 29(4):042001

Emanuel K (2018) 100 years of progress in tropical cyclone research. Meteorol Monogr 59:15.1

Ferenc JS, Néda Z (2007) On the size distribution of Poisson Voronoi cells. Phys A Stat Mech Appl 385(2):518–526

Garrett TJ, Yuter SE (2014) Observed influence of riming, temperature, and turbulence on the fallspeed of solid precipitation. Geophys Res Lett 41(18):6515–6522

Garrett TJ, Yuter SE, Fallgatter C et al (2015) Orientations and aspect ratios of falling snow. Geophys Res Lett 42(11):4617–4622

Gibert M, Xu H, Bodenschatz E (2010) Inertial effects on two-particle relative dispersion in turbulent flows. Europhys Lett 90(6):64005

Haller G, Aksamit N, Encinas-Bartos AP (2021) Quasi-objective coherent structure diagnostics from single trajectories. Chaos Interdiscip J Nonlinear Sci 31(4):043131

Hartley R, Zisserman A (2003) Multiple view geometry in computer vision. Cambridge University Press

Heisel M, Dasari T, Liu Y et al (2018) The spatial structure of the logarithmic region in very-high-Reynolds-number rough wall turbulent boundary layers. J Fluid Mech 857:704–747

Heisel M, de Silva C, Hutchins N et al (2021) Prograde vortices, internal shear layers and the Taylor microscale in high-Reynolds-number turbulent boundary layers. J Fluid Mech 920:715–731

Hommema SE, Adrian RJ (2003) Packet structure of surface eddies in the atmospheric boundary layer. Bound Layer Meteorol 106(1):147–170

Hong J, Toloui M, Chamorro LP et al (2014) Natural snowfall reveals large-scale flow structures in the wake of a 2.5-MW wind turbine. Nat Commun 5(1):1–9

Hou J, Kaiser F, Sciacchitano A et al (2021) A novel single-camera approach to large-scale, three-dimensional particle tracking based on glare-point spacing. Exp Fluids 62(5):1–10

Huang J, Liu J, Chen B et al (2015) Detection of anthropogenic dust using CALIPSO lidar measurements. Atmos Chem Phys 15(20):11653–11665

Grazioli J, Ghiggi G, Billault-Roux AC et al (2022) MASCDB, a database of images, descriptors and microphysical properties of individual snowflakes in free fall. Sci Data 9(1):186

Kok JF, Ward DS, Mahowald NM et al (2018) Global and regional importance of the direct dust-climate feedback. Nat Commun 9(1):1–11

Lapotre M, Ewing R, Lamb M et al (2016) Large wind ripples on Mars: a record of atmospheric evolution. Science 353(6294):55–58

Li C, Lim K, Berk T et al (2021a) Settling and clustering of snow particles in atmospheric turbulence. J Fluid Mech 912:A49

Li J, Abraham A, Guala M et al (2021b) Evidence of preferential sweeping during snow settling in atmospheric turbulence. J Fluid Mech 928:A8

Li J, Guala M, Hong J (2022) Snow particle analyzer for simultaneous measurements of snow density and morphology. arXiv preprint arXiv:2209.11129

Lourakis MI, Argyros AA (2009) SBA: a software package for generic sparse bundle adjustment. ACM Trans Math Softw 36(1):1–30

Lundquist JK, Wilczak JM, Ashton R et al (2017) Assessing state-of-the-art capabilities for probing the atmospheric boundary layer: the XPIA field campaign. Bull Am Meteorol Soc 98(2):289–314

Monchaux R (2012) Measuring concentration with Voronoï diagrams: the study of possible biases. New J Phys 14(9):095013

Morris SC, Stolpa SR, Slaboch PE et al (2007) Near-surface particle image velocimetry measurements in a transitionally rough-wall atmospheric boundary layer. J Fluid Mech 580:319–338

Muller K, Hemelrijk C, Westerweel J et al (2020) Calibration of multiple cameras for large-scale experiments using a freely moving calibration target. Exp Fluids 61(1):1–12

Nemes A, Dasari T, Hong J et al (2017) Snowflakes in the atmospheric surface layer: observation of particle-turbulence dynamics. J Fluid Mech 814:592–613

Novara M, Schanz D, Geisler R et al (2019) Multi-exposed recordings for 3D Lagrangian particle tracking with multi-pulse shake-the-box. Exp Fluids 60(3):1–19

Perks MT, Dal Sasso SF, Hauet A et al (2020) Towards harmonisation of image velocimetry techniques for river surface velocity observations. Earth Syst Sci Data 12(3):1545–1559

Pumir A, Shraiman BI, Chertkov M (2000) Geometry of Lagrangian dispersion in turbulence. Phys Rev Lett 85(25):5324

Rosi GA, Sherry M, Kinzel M et al (2014) Characterizing the lower log region of the atmospheric surface layer via large-scale particle tracking velocimetry. Exp Fluids 55(5):1–10

Sabban L, van Hout R (2011) Measurements of pollen grain dispersal in still air and stationary, near homogeneous, isotropic turbulence. J Aerosol Sci 42(12):867–882

Schanz D, Gesemann S, Schröder A (2016a) Shake-The-Box: Lagrangian particle tracking at high particle image densities. Exp Fluids 57(5):1–27

Schanz D, Huhn F, Gesemann S et al (2016b) Towards high-resolution 3D flow field measurements at cubic meter scales. In: Proceedings of the 18th international symposium on the application of laser and imaging techniques to fluid mechanics, Springer

Tan S, Salibindla A, Masuk AUM et al (2020) Introducing OpenLPT: new method of removing ghost particles and high-concentration particle shadow tracking. Exp Fluids 61(2):1–16

Terra W, Sciacchitano A, Shah Y (2019) Aerodynamic drag determination of a full-scale cyclist mannequin from large-scale PTV measurements. Exp Fluids 60(2):1–11

Theriault DH, Fuller NW, Jackson BE et al (2014) A protocol and calibration method for accurate multi-camera field videography. J Exp Biol 217(11):1843–1848

Toloui M, Riley S, Hong J et al (2014) Measurement of atmospheric boundary layer based on super-large-scale particle image velocimetry using natural snowfall. Exp Fluids 55(5):1–14

Vionnet V, Martin E, Masson V et al (2017) High-resolution large eddy simulation of snow accumulation in alpine terrain. J Geophys Res Atmos 122(20):11005–11021

Wang Z, Huang N (2017) Numerical simulation of the falling snow deposition over complex terrain. J Geophys Res Atmos 122(2):980–1000

Wei NJ, Brownstein ID, Cardona JL et al (2021) Near-wake structure of full-scale vertical-axis wind turbines. J Fluid Mech 914:A17

Wieneke B (2012) Iterative reconstruction of volumetric particle distribution. Meas Sci Technol 24(2):024008

Zeugin T, Krol Q, Fouxon I et al (2020) Sedimentation of snow particles in still air in Stokes regime. Geophys Res Lett 47(15):e2020GL087832

Zheng S, Longmire EK (2013) Flying PIV investigation of vortex packet evolution in perturbed boundary layers. In: PIV13; 10th international symposium on particle image velocimetry, Delft, The Netherlands, July 1–3, 2013, Citeseer

Acknowledgements

This work was supported by the National Science Foundation under grants CBET-2018658 and AGS-1822192.

Author information

Contributions

JH and MG oversaw the study conception and design. NB wrote the manuscript text, generated figures, and was the primary contributor to the measurement system development. PH contributed to the development of the measurement system. All authors contributed to the field experiments.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Ethical approval

Not applicable.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bristow, N., Li, J., Hartford, P. et al. Imaging-based 3D particle tracking system for field characterization of particle dynamics in atmospheric flows. Exp Fluids 64, 78 (2023). https://doi.org/10.1007/s00348-023-03619-6
