Abstract

This paper presents a combined colour-infrared imaging technique, based on light refraction and absorption, for measuring the water surface over a non-horizontal fixed bottom known a priori. The procedure requires processing simultaneous visible and near-infrared digital images: on the one hand, the apparent displacement of a suitable pattern between reference and modulated visible images makes it possible to evaluate the refraction effect induced by the water surface; on the other hand, near-infrared images allow an accurate estimate of the penetration depth, owing to the high absorption capacity of water in the near-infrared spectral range. The imaging technique is applied to a series of laboratory tests in order to estimate the overall measurement accuracy. The results show that the proposed method is robust and accurate and can be considered an effective non-intrusive tool for collecting spatially distributed experimental data.



1 Introduction

The availability of laboratory data on the shape of the water surface is important in engineering applications, both for a complete understanding and reliable prediction of free surface flow phenomena and for the validation of numerical codes.

In recent years, various imaging techniques based on stereo imagery or on light reflection, refraction, or absorption have been used to measure water surface slopes and water depths, since these methods have the advantage of not disturbing the water surface. Among the measuring techniques capable of both returning spatially distributed surface data over extended areas and avoiding any interference with the flow, acoustic and photogrammetric methods work well with fixed surfaces but are not convenient for measuring rapidly varying water surfaces. Stereo vision techniques, based on floating particle tracking (e.g. Eaket et al. 2005; Chatellier et al. 2013) or on the analysis of the recorded deformation of a suitable pattern (e.g. Tsubaki and Fujita 2005; Gomit et al. 2013), usually require laborious cross-correlation of images obtained from multiple points of view. Reflection-based techniques, on the other hand, are suitable only for small surface gradients and are penalized by the fact that only a small fraction of the light hitting the water surface is reflected. For this reason, refraction-based techniques are often preferable, especially for measuring the surface slope of short wind waves (e.g. Jähne et al. 1994), and are widely adopted in the literature (e.g. Moisy et al. 2009; Ng et al. 2011; Gomit et al. 2013).

The literature provides thorough surveys of the state of the art in this field (see, for example, Jähne et al. 1994, 2005; Zhang and Cox 1994; Moisy et al. 2009), outlining the advantages and limitations of imaging techniques with reference to their applicability to hydrodynamic processes characterized by surface wave propagation. Furthermore, these techniques are nowadays extensively used in several fields of fluid flow measurement owing to their effectiveness and versatility (e.g. Dabiri 2009; Nitsche and Dobriloff 2009).

In this context, combined techniques based on light refraction and absorption offer the valuable advantage of measuring surface slope and water depth simultaneously. Barter et al. (1993) showed the feasibility of a gauge based on the principle that a laser beam passing through a water body is attenuated by the liquid medium and refracted by the air–water interface, thus carrying information on both surface slope and wave amplitude. However, this gauge performs point measurements and is therefore not suitable for assessing the spatial variability of the water surface.

Jähne et al. (2005) overcame this limitation by developing an imaging technique capable of providing simultaneous wave slope and wave height measurements over an extended surface area. The distinctive feature of this method is the use of a telecentric optical system (in which the image magnification does not change with wave height) combined with a dual-wavelength telecentric illumination device for measuring wave height. Since all the vertical beams refracted by surface elements with the same slope are focused onto the same spot of a horizontal backlit screen placed in the focal plane of the lens, surface slopes are estimated by exploiting a linear illumination gradient generated on this screen. Water depth, on the other hand, is measured through its relationship with the ratio of the irradiances measured at two different infrared wavelengths. The main drawbacks of this technique are the need for a telecentric system (which is difficult to implement and expensive for large-scale experiments) and its inapplicability to non-flat bed topographies.

More recently, Aureli et al. (2011) proposed an absorption-based image processing technique capable of providing distributed water depth measurements, potentially with high spatial resolution, over an extended flow field. A colouring agent was added to the water to artificially increase the absorbing properties of the medium in the visible spectrum. Through a set of dam-break experiments, the authors verified that this technique guarantees an accuracy comparable to that of traditional ultrasonic transducers. However, this method requires a laborious spatial calibration and treats refraction at a sloped surface as an interfering effect: the penetration depth of a beam in the absorbing medium is confused with the vertical depth, which causes an error that could become unacceptable for high surface slopes and/or a non-horizontal bottom.

To overcome this limitation, this paper presents a novel imaging technique for measuring the water surface, even over a non-horizontal (but continuous) bottom known a priori, based on the processing of simultaneous visible and near-infrared digital images. The visible image of a suitable pattern drawn on the bottom, as transmitted through the water surface, makes it possible to evaluate the refraction effect (Kurata et al. 1990; Moisy et al. 2009). The near-infrared image allows an accurate estimate of the penetration depth (Barter et al. 1993; Jähne et al. 2005) without the use of colouring agents, owing to the high absorption capacity of water in the near-infrared spectral range (in this range, the absorption coefficient of water exceeds 10⁻¹ cm⁻¹ for wavelengths above 925 nm). The validity and effectiveness of this technique, along with its accuracy, are assessed in this paper by means of several laboratory tests.

2 Measuring principle

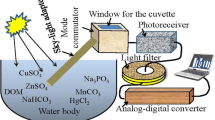

The measuring principle is illustrated in Fig. 1. A camera able to capture simultaneous and co-registered images in the visible and near-infrared (IR) range is placed above the water surface, with the optical axis pointed vertically downwards (parallel to the Z-axis). Combined visible-IR uniform illumination is arranged under a transparent bottom, where a pattern of well-recognizable interest points is drawn.

Considering an image in the visible spectrum captured in dry conditions, a generic feature point A located on the bottom is imaged at point A′ on the sensor plane. If the relationships between the object coordinates X, Y, Z and the image-plane coordinates ξ, η are calibrated and the shape of the bottom is known a priori, the object coordinates $(X_A, Y_A, Z_A)$ of point A follow from the intersection of the bottom topography with the straight line passing through the image point A′ $(\xi_{A'}, \eta_{A'})$ and the projection centre O $(X_O, Y_O, Z_O)$.

In the presence of a water body with an air–water interface, the same feature point A is imaged by the camera at a different point A″ $(\xi_{A''}, \eta_{A''})$ because of the refraction of the visible beam at the water surface. The transmitting point P (on the water surface) lies on the straight line A″O, which appears to come from the bottom point B. In essence, refraction at the air–water interface (assumed sufficiently smooth) displaces each imaged point of the bottom pattern, thereby modulating the structure of the pattern itself. Therefore, corresponding image points A′ and A″ in the two images (reference and modulated) must be identified and matched by means of a suitable image-processing algorithm.

Obviously, infrared radiation is also refracted at the air–water interface. However, the visible and IR beams transmitted through the refraction point P can be assumed to overlap, since the refraction index of water in the near-IR spectrum is practically indistinguishable from the classical visible-light value (4/3), which overestimates it by only about 0.5 % (Hale and Querry 1973). Moreover, because of the attenuation of infrared radiation passing through the water body, the grey tone $G$ recorded at image point A″ in the modulated infrared image is expected to be lower than the grey tone $G_0$ recorded at the same position in the reference image. An estimate of the length L of the optical path AP in the liquid medium can then be obtained from the transmittance $G/G_0$ registered by the IR sensor by means of a calibrated absorption model.

In conclusion, the refracting point P can be identified as the intersection of the straight line A″O with the spherical surface of radius L centred at point A (restricted to its portion above the bottom). In this way, the water surface is detected in discrete form, with a spatial resolution that depends on the number of recognizable points drawn on the bottom. Once the object coordinates of points O, A, and P have been evaluated, the water surface slope components in the X- and Y-directions at the refracting point P can also be obtained from simple trigonometric calculations based on Snell's law, which relates the incidence and refraction angles at the interface between two media. With reference to the one-dimensional ray geometry of Fig. 1b, the surface slope in the X-direction (angle θ) at the refracting point P satisfies the equation:

$$n_w \sin(\beta - \theta) = \sin(\alpha - \theta) \quad\Longrightarrow\quad \tan\theta = \frac{n_w \sin\beta - \sin\alpha}{n_w \cos\beta - \cos\alpha} \qquad (1)$$

where α and β are the angles (measured from the vertical, positive counterclockwise) of the refracted and incident beams, respectively, and $n_w$ is the refraction index of water. Therefore, the local free surface slope at a generic refracting point follows directly from the procedure and requires no information about the points recognized in its surroundings.
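The two geometric steps above (intersecting the camera ray with the sphere of radius L around A, then solving Snell's law $n_w \sin(\beta - \theta) = \sin(\alpha - \theta)$ for the slope angle θ) can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes object-space coordinates with Z pointing upwards, a ray direction `d` already obtained from the calibrated camera model, and angles measured from the vertical, positive counterclockwise in the X–Z plane. The function names are hypothetical.

```python
import math

N_W = 4 / 3  # refraction index of water (visible-light value used in the text)


def refracting_point(O, d, A, L):
    """Intersect the ray P = O + t*d (t > 0) with the sphere |P - A| = L.

    O: projection centre; d: direction of the ray from O through image point A''
    (pointing towards the bottom); A: bottom feature point; L: path length in water.
    The root closest to the camera is returned, i.e. the point above the bottom.
    """
    norm = math.sqrt(sum(c * c for c in d))
    d = [c / norm for c in d]
    oa = [o - a for o, a in zip(O, A)]
    b = sum(di * v for di, v in zip(d, oa))
    c = sum(v * v for v in oa) - L * L
    disc = b * b - c
    if disc < 0:
        raise ValueError("the ray does not reach the sphere of radius L around A")
    t = -b - math.sqrt(disc)  # smaller root: intersection nearer the camera
    return tuple(o + t * di for o, di in zip(O, d))


def vertical_angle(dx, dz):
    """Angle from the vertical (counterclockwise positive) of an upward ray (dx, dz)."""
    return math.atan2(-dx, dz)


def surface_slope_angle(alpha, beta, n_w=N_W):
    """Solve n_w*sin(beta - theta) = sin(alpha - theta) for the slope angle theta.

    alpha: refracted (air) beam angle; beta: incident (water) beam angle.
    """
    return math.atan2(n_w * math.sin(beta) - math.sin(alpha),
                      n_w * math.cos(beta) - math.cos(alpha))


# Usage: camera 120 cm above a feature point, ray pointing straight down, L = 5 cm
O, A = (0.0, 0.0, 120.0), (0.0, 0.0, 0.0)
P = refracting_point(O, (0.0, 0.0, -1.0), A, 5.0)
alpha = vertical_angle(O[0] - P[0], O[2] - P[2])   # air beam P -> O
beta = vertical_angle(P[0] - A[0], P[2] - A[2])    # water beam A -> P
slope_x = math.tan(surface_slope_angle(alpha, beta))  # dZ/dX (zero in this flat case)
```

Taking the smaller root selects the intersection nearer the camera, which corresponds to the restriction of the sphere to its portion above the bottom.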

Furthermore, this measuring technique also works in the presence of irregular, although smooth, bottom topography. Finally, since it does not require a telecentric system, it circumvents the size restrictions typically imposed by such systems (see, for example, Zhang and Cox 1994; Jähne et al. 2005); therefore, its application could in principle be extended relatively easily to larger facilities.

3 Experimental set-up

The imaging technique described above was tested in an experimental facility set up at the Hydraulics Laboratory of Parma University.

The facility is sketched in Fig. 2a and consists of a support frame that can be tilted up to 45° from the vertical (Fig. 2b). Experiments were carried out in a PMMA (polymethyl methacrylate) box with a 0.29 m × 0.29 m square internal section, filled with water to levels in the range 0–12 cm. This test box was rigidly connected to its support and backlit by an illumination source consisting of an array of high-power LEDs, in which white LEDs with a colour temperature of 5,500 K alternated with IR LEDs with a peak wavelength of 940 nm. At this wavelength, water has good absorbing properties: considering the experimental value of 0.1818 cm⁻¹ for the absorption coefficient of water at 22 °C (Kou et al. 1993), the radiation intensity decreases by about 84 % for a 10 cm penetration depth and by almost 94 % for a 15 cm penetration depth. Therefore, for the range of water depths considered here, this choice provides a good overall resolution. However, the IR wavelength must be chosen according to the water depths expected in the experimental facility: IR radiation closer to red-light wavelengths is suitable when greater depths (of the order of 0.5 m) are considered.
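The attenuation figures quoted above follow from the Beer–Lambert–Bouguer law with a single absorption coefficient. The short Python check below is only an illustration (the helper name `absorbed_fraction` is hypothetical); for 10 cm and 15 cm paths it gives about 83.8 % and 93.5 %, consistent with the rounded values in the text.

```python
import math

ALPHA_940 = 0.1818  # cm^-1, absorption coefficient of water at 940 nm, 22 °C (Kou et al. 1993)


def absorbed_fraction(alpha_per_cm, path_cm):
    """Fraction of the incident intensity absorbed over path_cm (single exponential)."""
    return 1.0 - math.exp(-alpha_per_cm * path_cm)


for depth in (10.0, 15.0):
    print(f"{depth:.0f} cm: {100 * absorbed_fraction(ALPHA_940, depth):.1f} % absorbed")
```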

Moreover, the high density of LEDs in the illumination device, along with the presence of an overlying plane diffusive screen, guaranteed uniform illumination. The internal walls of the box were coated with non-reflective black paint so as to eliminate problems due to light reflection. Experiments were performed in a dark room in order to exclude any reflection from the water surface and obtain results insensitive to natural illumination conditions.

A digital camera facing downwards was mounted on an adjustable support at the top of the frame to capture images of the water surface in the test box. A JAI AD-080GE camera equipped with a 16-mm Schneider lens was used. The JAI AD-080GE is a prism-based 2-CCD (4.76 mm × 3.57 mm) progressive area scan camera capable of simultaneously capturing visible and near-infrared (750–1,000 nm) images through the same optical path, by means of an RGB Bayer array and a monochrome sensor, respectively (Fig. 3). The camera has a resolution of 1,024 × 768 pixels and delivers up to 30 frames per second. In the experiments performed, visible images were acquired in native (RAW) format and then converted into 24-bit RGB colour images, whereas IR images were acquired in 8-bit greyscale.

The camera was placed at a distance of about 1.20 m from the bottom of the box and the optical axis arranged approximately parallel to the walls (Fig. 2). Since the optical system is not telecentric, the reduction in the distance between the camera and the target surface in the presence of water produces a magnification of the image of the backlit area that is captured.

Three test boxes with different shapes of the bottom sheet were used (Fig. 4).

A 0.20 m × 0.20 m square colour screen was printed on the inner side of the PMMA bottom of each box. The screen was smaller than the box (and than the imaged area) in order to reduce border effects and vignetting. A regular pattern (Fig. 5) of 400 square elements with 1 cm sides and strongly contrasting colours was carefully designed to provide a suitable number of corners (441) to be recognized during image processing. With the magnification ratio (≅70) of the optical system adopted here, the pattern was clearly resolved by the camera, since a 1-cm segment on the bottom of the box spanned approximately 30 pixels in the image. Each inner corner was uniquely defined by a set of four specific neighbouring colours.
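The pattern figures above are mutually consistent, as the quick Python check below suggests. It is an illustrative sketch: the pixel pitch is derived from the sensor width (4.76 mm) and horizontal resolution (1,024 pixels) given for the camera, and the function name is hypothetical. It yields 441 corners, a bottom footprint of about 0.33 mm per pixel and an object-to-image size ratio of about 72, in line with the quoted ≅70.

```python
def pattern_geometry(n_squares=20, side_mm=10.0, px_per_side=30.0,
                     sensor_w_mm=4.76, sensor_w_px=1024):
    """Corner count, object-space pixel footprint and object/image size ratio."""
    corners = (n_squares + 1) ** 2            # grid nodes of an n x n chequerboard
    footprint_mm = side_mm / px_per_side      # bottom length imaged by one pixel
    pitch_mm = sensor_w_mm / sensor_w_px      # pixel pitch on the CCD
    ratio = footprint_mm / pitch_mm           # object size / image size
    return corners, footprint_mm, ratio


corners, footprint, ratio = pattern_geometry()
print(corners, round(footprint, 2), round(ratio))
```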

On the basis of the square pattern adopted, the global average spatial resolution of the water surface reconstruction was about one point per cm², which was satisfactory for the applications discussed here.

The colour screen was produced by a special digital printing process applied directly to the PMMA, which induced only a slight, uniform absorption of near-infrared radiation: indeed, the square structure of the screen was not recognizable in an IR image taken in dry conditions.

Finally, for comparison purposes, point water depths were measured with a piezoelectric ultrasonic distance meter (Banner S18U) with an accuracy of 0.5 mm.

4 Experimental procedure

The experimental procedure is divided into two main stages: the preliminary calibration of the imaging system and the post-processing of the images captured during the experimental runs.

4.1 Calibration

First, the inner calibration of the camera and lenses was accomplished, once and for all, in order to evaluate focal length, principal point coordinates in the image space coordinate system, and parameters accounting for radial and decentring distortion. The six parameters of the outer orientation of the camera (coordinates of the camera perspective centre and angles of rotation of the camera axes with reference to the object coordinate system) were then evaluated through a system of collinearity equations (Kraus 1997) written for eight control points (black markers in Fig. 5); this outer calibration was repeated prior to each experiment in order to take into account any accidental change in camera orientation. Both steps were performed using the commercial software PhotoModeler Pro 5 (2003).

The last calibration step consisted in setting up a suitable mathematical absorption model, i.e. a function relating the irradiance (expressed in grey tones) recorded by the IR sensor of the camera to the penetration depth in the water body. The calibration data were obtained by measuring different water depths in static conditions with the ultrasonic distance meter while simultaneously recording the grey values at the "centre" of the IR images (where no refraction occurs). It is widely accepted that the attenuation of radiation passing through an absorbing medium is described by the Beer–Lambert–Bouguer law, which states that the natural logarithm of the transmittance decreases linearly with the beam path length, with a slope given by an absorption coefficient that depends on the physical properties of the medium (temperature, salinity, etc.) and on the radiation wavelength. However, since a single exponential function is unable to fully match the calibration data, probably because the near-IR illumination is not strictly monochromatic, the following nonlinear regression model was adopted, which combines the absorption effects of three distinct wavelengths:

$$\frac{G}{G_0} = c_1 e^{-\alpha_1 L} + c_2 e^{-\alpha_2 L} + c_3 e^{-\alpha_3 L} \qquad (2)$$

where $G$ and $G_0$ indicate the irradiance (in grey tones) acquired by the IR sensor in the presence and in the absence of the absorbing medium, respectively; $\alpha_1$, $\alpha_2$, and $\alpha_3$ are the absorption coefficients of water (Hale and Querry 1973; Kou et al. 1993) at wavelengths of 900, 940, and 975 nm ($\alpha_1$ = 0.0680 cm⁻¹, $\alpha_2$ = 0.1818 cm⁻¹, $\alpha_3$ = 0.4485 cm⁻¹); $L$ is the path length in the water body; and $c_1$, $c_2$, and $c_3$ are the weight coefficients of the three wavelengths (to be calibrated by regression).

Figure 6 shows the depth–luminance curve adopted ($c_1$ = 0.3628, $c_2$ = 0.4005, $c_3$ = 0.2367, $\rho^2$ = 0.9990); note that $c_1 + c_2 + c_3 = 1$, so that $G/G_0 = 1$ for $L = 0$. The static sensitivity varies with the penetration length and is higher for thinner water layers. If a fixed photon noise level is assumed for the IR sensor, following Jähne et al. (2005), the smallest measurable change in penetration length increases with water depth; consequently, the accuracy of the method decreases as the water level rises.
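During post-processing the calibrated model G/G0 = c1·exp(−α1 L) + c2·exp(−α2 L) + c3·exp(−α3 L) has to be inverted to recover L from a measured grey-tone ratio. The paper does not say how this was done; the sketch below assumes a simple bisection, which is safe because the model decreases monotonically with L. Coefficients are those reported above; function names and the search bracket are illustrative assumptions. For G/G0 = 0.5 it returns roughly 4.1 cm.

```python
import math

# Absorption coefficients of water (cm^-1) at 900, 940 and 975 nm, and the
# regression weights c1, c2, c3 reported for Fig. 6.
ALPHAS = (0.0680, 0.1818, 0.4485)
WEIGHTS = (0.3628, 0.4005, 0.2367)


def transmittance(path_cm):
    """Normalized irradiance G/G0 predicted by the three-exponential model."""
    return sum(c * math.exp(-a * path_cm) for c, a in zip(WEIGHTS, ALPHAS))


def path_length(ratio, lo=0.0, hi=100.0, tol=1e-9):
    """Invert the model by bisection (G/G0 decreases monotonically with L)."""
    if not transmittance(hi) <= ratio <= transmittance(lo):
        raise ValueError("G/G0 outside the range covered by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if transmittance(mid) > ratio:
            lo = mid  # not enough absorption yet: the path is longer
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Example: path length (cm) corresponding to a 50 % transmittance
print(round(path_length(0.5), 2))
```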

It should be noted that the calibration curve of Fig. 6, based on the normalized irradiance $G/G_0$, is assumed to be valid over the whole measuring field; that is, the coefficients $c_1$, $c_2$, and $c_3$ of Eq. 2 are considered independent of the local value of $G_0$, so that the normalization implicitly accounts for all non-uniformity effects (mainly due to the illuminator and to optical vignetting). It is also required that the square pattern drawn on the bottom induces an almost uniform absorption of the IR radiation. Under this assumption, a spatially distributed calibration is not necessary.

4.2 Image analysis

In order to derive water surface measurements from RGB and IR images captured during the experiments, image post-processing was performed by means of automated algorithms in accordance with the following steps:

- detection of the corners of the coloured pattern printed on the bottom in both the actual (wet) and the reference (dry) RGB images;
- matching of corresponding corners in the actual and reference RGB images;
- extraction of the local penetration length from the near-IR image using the previously calibrated absorption model;
- reconstruction of the water depth and surface slope at every detected point according to the measuring principle described in Sect. 2.

4.2.1 Corner detection

Several corner detection algorithms have been proposed in the literature (e.g. Moravec 1977; Förstner and Gülch 1987; Harris and Stephens 1988; Trajkovic and Hedley 1998; Zheng et al. 1999). In this work, the Moravec operator (Moravec 1977) was preferred because of its simplicity and computational efficiency, combined with a satisfactory detection rate and good localization of the detected corners. Moreover, it is fairly stable and robust with respect to noise.

This technique identifies corners as points characterized by large intensity variation in every direction. Originally proposed for grey images, it was here extended to RGB images by introducing Euclidean distance between two colour vectors in the RGB space (Wyszecki and Stiles 1982) in place of luminance change; therefore, intensity variation must be regarded now as a kind of colour change.

If the colour vector in the RGB space is denoted by $\mathbf{I}$ and a local window W centred on pixel (x, y) of the image is considered, the overall colour change $E_{u,v}$ produced by a shift (u, v) of the window W reads:

$$E_{u,v}(x, y) = \sum_{a,b} \left\lVert \mathbf{I}(x+u+a,\, y+v+b) - \mathbf{I}(x+a,\, y+b) \right\rVert^2 \qquad (3)$$

where the offsets a and b span all the pixels of the window. A square 3 × 3 pixel window (a, b = −1, 0, +1) and a one-pixel shift in the eight reference directions (u, v = −1, 0, +1, excluding u = v = 0) were adopted in this work.

A measure of the cornerness of each pixel is provided by the minimum value of colour change E produced by any of the shifts. Finally, a corner is detected by finding a local maximum in cornerness, above a suitable threshold value.
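A compact pure-Python sketch of the colour Moravec operator described above follows. It is an illustration under stated assumptions, not the authors' code: the image is a list of rows of (R, G, B) tuples, the colour change is the squared Euclidean RGB distance summed over the 3 × 3 window (the classic Moravec form), border pixels are simply skipped, and the additional false-corner check used in the paper is not included.

```python
SHIFTS = [(u, v) for u in (-1, 0, 1) for v in (-1, 0, 1) if (u, v) != (0, 0)]


def colour_dist2(p, q):
    """Squared Euclidean distance between two RGB vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def cornerness(img, x, y):
    """Minimum over the 8 one-pixel shifts of the windowed colour change E(u, v)."""
    best = None
    for u, v in SHIFTS:
        e = sum(colour_dist2(img[y + v + b][x + u + a], img[y + b][x + a])
                for a in (-1, 0, 1) for b in (-1, 0, 1))
        best = e if best is None else min(best, e)
    return best


def moravec_corners(img, threshold):
    """Pixels (x, y) whose cornerness is a local maximum above threshold."""
    h, w = len(img), len(img[0])
    C = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):              # window plus shift must stay inside
        for x in range(2, w - 2):
            C[y][x] = cornerness(img, x, y)
    corners = []
    for y in range(3, h - 3):              # 3 x 3 non-maximum suppression
        for x in range(3, w - 3):
            c = C[y][x]
            if c > threshold and all(c >= C[y + j][x + i]
                                     for i in (-1, 0, 1) for j in (-1, 0, 1)):
                corners.append((x, y))
    return corners
```

Along a straight colour edge the shift parallel to the edge produces no change, so the minimum over shifts vanishes; only where four colour regions meet do all eight shifts produce a change.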

In the applications discussed here, the Moravec operator showed some limitations, mainly the difficulty in detecting corners near the border of the coloured pattern, the sensitivity to isolated pixels, and its inherent lack of rotational invariance. Moreover, the demosaicing process reconstructed colour images with edge artefacts, which degraded the accuracy of corner localization.

To reduce the probability of detecting false corners, a special check was added to the original algorithm, based on the fact that four strongly different colours surround every true corner of the coloured pattern.

In the measurement tests presented in this paper, the detection rate (percentage of corners detected over the total number) was always above 96 %, and the repeatability rate (percentage of corners detected in the modulated image relative to those detected in the reference image) was always above 98 %. Over 93 % of the extracted corners were located with one-pixel accuracy.

4.2.2 Feature matching

After the corner detection stage, a reliable correspondence had to be found between the interest points detected in the reference and modulated images.

To this end, the literature usually describes the neighbourhood of each interest point by means of a suitable descriptor (e.g. Lowe 2004; Bay et al. 2008; Fan et al. 2009). Corresponding points are then matched on the basis of some measure of similarity between their descriptors.

The descriptor adopted here includes the colours present in the neighbourhood of the interest point and stores information about their arrangement. In detail, for each corner detected in the reference image at pixel (x, y), an ordered sequence of four sets of RGB values, sampling the four surrounding colours, is built as follows:

$$V_r = \left[\,\mathbf{I}(x-u,\,y-u),\; \mathbf{I}(x+u,\,y-u),\; \mathbf{I}(x+u,\,y+u),\; \mathbf{I}(x-u,\,y+u)\,\right] \qquad (4)$$

where u is a suitable shifting parameter. Since each square element spanned approximately 30 pixels in the reference image, setting u = 10 made it possible to identify four pixels belonging to regions of approximately homogeneous colour and reasonably guaranteed that any corner misplacement (of the order of 5–6 pixels at most) would not lead to a wrong colour descriptor. Obviously, the value of u has to be tuned according to the grid size, the CCD size, and the magnification ratio adopted.
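Building the four-colour descriptor and screening out false corners can be sketched as follows. The sampling order (top-left, top-right, bottom-right, bottom-left, in image coordinates with rows growing downwards) and the distance threshold `min_dist2` are assumptions made for illustration; the paper only states that the four colours are ordered and strongly different around a true corner. Both function names are hypothetical.

```python
from itertools import combinations


def descriptor(img, x, y, u=10):
    """Four RGB samples at the diagonal offsets (+-u, +-u) around corner (x, y).

    Assumed order: top-left, top-right, bottom-right, bottom-left.
    """
    return (img[y - u][x - u], img[y - u][x + u],
            img[y + u][x + u], img[y + u][x - u])


def is_plausible_corner(desc, min_dist2=60.0 ** 2):
    """Hypothetical false-corner filter: the four colours must differ pairwise."""
    return all(sum((a - b) ** 2 for a, b in zip(p, q)) >= min_dist2
               for p, q in combinations(desc, 2))
```

A point on a straight edge between two squares samples only two distinct colours, so it fails the pairwise test even if the detector flagged it.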

Each corner detected in the modulated image was then compared with each corner found in the reference image. A 31 × 31 pixel square region centred on a generic corner detected in the modulated image was considered, and a segmentation (based on the Euclidean colour distance) was performed to classify the selected pixels into four groups, associated with the four colour components of the reference descriptor $V_r$, plus an undefined class gathering any other colour. Since the colour pattern had been carefully designed so that every corner could be uniquely identified by its four neighbouring colours, the presence of similar colours in the same order in the surroundings was the basic requirement for a potential match. Therefore, two interest points were considered candidates for matching if a sufficient number of pixels fell into each colour class. If several pairs of points exhibited this correspondence, the segmentation procedure was repeated for progressively smaller square windows (29 × 29 pixels, 27 × 27, etc.) until only one pair of candidates fulfilled the matching criterion.
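The segmentation-based matching with shrinking windows can be sketched as below. This is a simplified, hypothetical implementation: each pixel is assigned to the nearest descriptor colour within a tolerance `tol2`, a pixel counts only if it lies in the quadrant where that colour is expected (one possible way of enforcing the "same order" requirement), the descriptor order is assumed to be top-left, top-right, bottom-right, bottom-left, and `min_frac` and `min_half` are illustrative values, not the paper's settings.

```python
QUADRANTS = ((-1, -1), (1, -1), (1, 1), (-1, 1))  # TL, TR, BR, BL (assumed order)


def _sign(v):
    return (v > 0) - (v < 0)


def class_counts(img, x, y, desc, half, tol2):
    """Per descriptor colour k, count the pixels of the (2*half+1)^2 window that
    are nearest to colour k (within tol2) and lie in the quadrant expected for k."""
    counts = [0, 0, 0, 0]
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            if dx == 0 or dy == 0:
                continue  # skip the row and column through the corner itself
            p = img[y + dy][x + dx]
            d2 = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in desc]
            k = min(range(4), key=d2.__getitem__)
            if d2[k] <= tol2 and QUADRANTS[k] == (_sign(dx), _sign(dy)):
                counts[k] += 1
    return counts


def match_corner(img, corner, ref_descs, half=15, min_half=3,
                 min_frac=0.5, tol2=80.0 ** 2):
    """Index of the single reference descriptor matching `corner` in the
    modulated image, or None if no unique match is found."""
    x, y = corner
    candidates = list(range(len(ref_descs)))
    while half >= min_half:
        needed = min_frac * half * half          # pixels required per quadrant
        candidates = [i for i in candidates
                      if min(class_counts(img, x, y, ref_descs[i], half, tol2)) >= needed]
        if len(candidates) <= 1:
            return candidates[0] if candidates else None
        half -= 1                                # shrink: 31x31 -> 29x29 -> ...
    return None
```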

Despite its simplicity, the descriptor implemented is sufficiently distinctive and robust to noise, corner displacement, and geometric deformations. Moreover, the matching process is very effective: its success rate, although dependent on some parameter settings, was always above 95 % (with reference to the total number of corners detected in the reference image) in the applications presented in this paper. If an initial window smaller than 31 pixels is adopted, a gradual reduction in the matching rate is observed.

4.3 Measuring system accuracy assessment

In this section, inaccuracies and restrictions affecting the measurement procedure are listed and discussed.

- Effect of temperature and water quality. In the near-infrared spectrum, the absorption coefficient of water depends significantly on temperature and, to a lesser extent, on salinity and water quality in general. However, the literature shows that the dependence on salinity is less important than the dependence on temperature (Pegau et al. 1997) and can reasonably be neglected as a first approximation. The effect of temperature on the absorption coefficient depends on wavelength. On the basis of the experimental data obtained by Pegau et al. (1997), and assuming a simple linear relationship between absorption coefficient and temperature, at 900 nm the absorption coefficient is expected to increase by about 10⁻³ cm⁻¹ for a temperature increase of 10 °C. Moreover, according to the exponential absorption model of Eq. 2, the error in the estimated penetration length due to temperature depends on the water level (through the normalized irradiance $G/G_0$); for $G/G_0$ = 0.5, this error is 0.02 mm/°C. In any case, to avoid this error, the weight coefficients of the absorption model (Eq. 2) should be calibrated at approximately the same temperature as the experimental tests.

- Effect of water surface steepness. Free surface steepness influences the penetration length as a consequence of refraction, and this effect can become significant in the presence of steep surface slopes. In the experimental configuration considered, the rays reach the outermost corners of the coloured pattern at an angle of approximately 5° from the vertical. In this region, assuming a horizontal bottom, for a water surface slope of ±1 the path length can be up to 4 % longer than the corresponding water depth, whereas in static conditions the penetration length is only 0.2 % longer than the water depth. Because of this small dependence on water surface steepness, no significant deterioration in the accuracy of the penetration length estimate is expected. However, if the surface slope is very steep, boundary effects may arise, since refracted beams could fall outside the area covered by the colour screen.

- Effect of camera resolution. Sensor resolution affects only the "visibility" of the detectable corners and their location on the bottom. The uncertainty in the planimetric position of the detected corners due to image resolution affects the accuracy of the water surface reconstruction. Error propagation analysis shows that the position of point P on the water surface is determined with a precision of 0.1 mm at most for a horizontal bottom and a 0.33 mm displacement (corresponding to the pixel size in object space) of the emitting point A (see Fig. 1).

- Effect of the grid size of the coloured pattern. The grid size of the pattern determines the number of interest points (corners) potentially recognizable by the image processing procedure; hence, it governs the spatial resolution of the free surface detection and sets a lower limit on the wavelength detectable on the free surface. In any case, the pattern must be clearly resolved by the camera, so that the acquired images are not appreciably affected by Bayer-filter artefacts smearing the colour transitions.

- Non-uniformity effects. As already stated in Sect. 4.1, the use of the normalized variable $G/G_0$ in the calibration procedure (Eq. 2) is meant to account implicitly for all non-uniformity effects.
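The path-length figures quoted for the surface-steepness effect can be reproduced with a two-dimensional Snell's-law calculation over a horizontal bottom. The sketch below assumes a refraction index of 4/3 and a camera ray inclined 5° from the vertical; the function name is hypothetical. It gives about +0.2 % in static conditions and about +4 % for a slope of ±1, matching the values in the list above.

```python
import math


def path_to_depth_ratio(ray_deg, slope, n_w=4 / 3):
    """Ratio L/h between the in-water path length and the local water depth.

    Horizontal bottom assumed. ray_deg: inclination of the camera ray from the
    vertical (in air); slope: water surface slope dZ/dX at the refraction point.
    """
    gamma = math.radians(ray_deg)
    theta = math.atan(slope)                 # tilt of the surface normal from vertical
    # Snell's law about the tilted normal: sin(gamma - theta) = n_w * sin(beta - theta)
    beta = theta + math.asin(math.sin(gamma - theta) / n_w)
    return 1.0 / math.cos(beta)              # beta: in-water ray angle from vertical


static = path_to_depth_ratio(5.0, 0.0)
worst = max(path_to_depth_ratio(5.0, s) for s in (-1.0, 1.0))
print(f"static: +{100 * (static - 1):.1f} %, slope +/-1: +{100 * (worst - 1):.1f} %")
```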

5 Test cases and results

5.1 Experimental tests

The measuring technique described in the previous sections was applied to a series of laboratory test measurements in static conditions in order to estimate overall measuring accuracy. Each test is characterized by different inclinations of the experimental apparatus (with reference to the vertical), different shapes of the bottom of the box, and different configurations of the water surface. Table 1 summarizes the test conditions for each experiment.

Finally, an example of application to a test case characterized by a moving water surface propagating approximately one-dimensional waves is presented.

5.2 Results

In Test 1, the water surface was horizontal and parallel to the bottom of the experimental tank. Three horizontal water surfaces at different depths were set up, and the three corresponding "reference" water levels $Z_{\mathrm{ref}}$ were measured with the ultrasonic distance meter (accuracy 0.5 mm).

Figure 7 shows the experimental results for these three test conditions. The average value $Z_{\mathrm{av}}$ of the water depth distribution derived from the images is reported in Table 2, together with some data representing the "bias" of the imaging technique (regarded as a water depth gauge) with respect to the ultrasonic transducer. The absolute value of the relative "systematic" error (with respect to the reference value) increases as the thickness of the water layer decreases.

Table 2 also reports the standard deviations σ of the measured water depth distributions from the reference and from the average values, respectively. These data confirm the overall tendency of the measured points to lie on a horizontal plane and provide an effective estimate of the precision of the procedure. The imprecision of the measurement process increases with water depth because, as already observed, the estimate of the penetration length is less accurate for greater depths. In any case, over 95 % of the experimental data lie within the interval $Z_{\mathrm{av}} \pm 2\sigma$.
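The summary statistics reported in Table 2 can be computed as in the following sketch. Whether the paper uses the population or the sample standard deviation is not stated; the population form is assumed here, and the function and key names are hypothetical.

```python
import math
import statistics


def depth_statistics(z, z_ref):
    """Summary of a measured depth distribution against a reference depth."""
    z_av = statistics.fmean(z)
    sigma_av = statistics.pstdev(z)                                          # about the mean
    sigma_ref = math.sqrt(statistics.fmean([(v - z_ref) ** 2 for v in z]))  # about z_ref
    within = sum(abs(v - z_av) <= 2 * sigma_av for v in z) / len(z)
    return {"Z_av": z_av, "bias": z_av - z_ref, "rel_bias": (z_av - z_ref) / z_ref,
            "sigma_ref": sigma_ref, "sigma_av": sigma_av, "frac_within_2sigma": within}
```

With the population form, the two deviations are linked by σ_ref² = σ_av² + bias², so the deviation from the reference value combines the random scatter with the systematic offset.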

In Tests 2 and 3, the water surface was still horizontal; however, since the Z-axis was set normal to the bottom of the experimental box, all the reconstructed points appeared to lie on an inclined plane. In Test 2, the plane target had an inclination $\theta_2$ = 28.8° (measured with a digital inclinometer accurate to 0.1°) along the Y-direction with respect to the bottom of the experimental box of Fig. 4a. Test 3 was performed in the experimental box of Fig. 4b (with an X-inclined bottom), and the support frame was tilted by $\theta_3$ = 29.9°, so that the water surface was inclined along both the X- and the Y-directions.

For each of the above test configurations, three different water levels were considered; in Tests 2a and 3a, the bottom was only partially submerged. Figures 8 and 9 show the contour maps of the water surface for the three test conditions of Tests 2 and 3, respectively. In all cases, the contour lines are approximately parallel and equally spaced.

For each test condition, Table 3 reports the parameters of the regression plane $Z_r = m_x X + m_y Y + c$, fitted to the measured points by least squares. The estimated coefficients can be compared with the reference values:

- Test 2: $m_x^{ref} = 0$ and $m_y^{ref} = \tan(28.8°) = 0.5498$.
- Test 3: $m_x^{ref} = \tan(9.9°) = 0.1745$ and $m_y^{ref} = \tan(29.9°) = 0.5750$.

Table 3 also reports the coefficient of determination $\rho^2$, which quantifies how well the linear regression describes each experimental data set.
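The regression step can be illustrated with a small least-squares plane fit. This sketch is an assumption on our part: it solves the 3 × 3 normal equations by Cramer's rule and computes $\rho^2$ as the usual coefficient of determination. The paper does not say which solver it used. The synthetic points are noise-free and merely mimic the Test 2 inclination.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of Z = m_x*X + m_y*Y + c to a list of (X, Y, Z) tuples.

    Returns (m_x, m_y, c, r2), r2 being the coefficient of determination.
    """
    # Normal equations (A^T A) p = A^T z, with design-matrix rows (X, Y, 1)
    ata = [[0.0] * 3 for _ in range(3)]
    atz = [0.0] * 3
    for x, y, z in points:
        row = (x, y, 1.0)
        for i in range(3):
            atz[i] += row[i] * z
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d = _det3(ata)
    if abs(d) < 1e-12:  # crude, scale-dependent singularity check
        raise ValueError("points are collinear in the X-Y plane")
    # Cramer's rule: replace column k of A^T A with A^T z
    params = []
    for k in range(3):
        mk = [r[:] for r in ata]
        for i in range(3):
            mk[i][k] = atz[i]
        params.append(_det3(mk) / d)
    m_x, m_y, c = params
    z_mean = sum(z for _, _, z in points) / len(points)
    ss_res = sum((z - (m_x * x + m_y * y + c)) ** 2 for x, y, z in points)
    ss_tot = sum((z - z_mean) ** 2 for _, _, z in points)
    # r2 is undefined for a perfectly level set of points (ss_tot == 0)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return m_x, m_y, c, r2


# Synthetic points on a plane tilted like Test 2 (hypothetical 5 x 5 grid, metres)
m_ref_y = math.tan(math.radians(28.8))
pts = [(0.1 * i, 0.1 * j, m_ref_y * 0.1 * j + 0.05)
       for i in range(5) for j in range(5)]
m_x, m_y, c, r2 = fit_plane(pts)
```

The guard on `ss_tot` reflects a general property of $\rho^2$: it is only informative when the fitted plane is actually inclined. This is consistent with its being reported for the tilted Tests 2 and 3.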

Test 4 aimed to show that the proposed imaging technique can be applied to cases with a non-planar bottom topography known a priori. The test was performed in the experimental box shown in Fig. 4c, filled with still water that completely submerged the curved bottom. Before the experiments, the bottom surface had been carefully surveyed. Figure 10 shows, for two different filling levels, the contour maps of the measured water surface elevation and the corresponding water depth contours.

Table 4 compares the mean water elevation $Z_{av}$ derived from the imaging data with the corresponding reference value $Z_{ref}$. The reference value was measured with the accurate device also used for the bottom survey. Table 4 also reports the standard deviations σ of the imaging data from the reference and average values.

Test 5 was arranged to verify that the imaging technique also works with non-flat water surfaces. To obtain a curved surface target in static conditions, a thin semi-spherical PMMA cap with a radius of about 0.1 m was placed on the air–water interface, generating an almost semi-spherical "water surface".

The thin PMMA layer above the water inevitably caused slight additional absorption of the IR radiation, which could lead to a systematic overestimate of the penetration length. This effect was accounted for by capturing an IR image of the bottom and the cap together before introducing water into the experimental box. The grey tones extracted from this image were then used as reference values $G_0$ in Eq. 2. With this choice, and neglecting the refraction induced by the cap, the previously calibrated absorption model can be assumed to remain valid.

Moreover, the cap showed some surface defects and could not be considered perfectly spherical. A preliminary close-range photogrammetric survey was therefore performed to create a highly accurate (~0.1 mm) reference model of the object.
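The following sketch shows why taking $G_0$ with the cap in place removes the cap's contribution. It is an assumption for illustration only, not the paper's Eq. 2, which is not reproduced here. It assumes a simple multiplicative (Beer–Lambert type) attenuation $G = G_0 e^{-\alpha L}$ and a constant cap transmittance. The values of `alpha` and `t_cap`, and the function name, are hypothetical.

```python
import math


def penetration_length(g, g0, alpha):
    """Invert the ASSUMED model G = G0 * exp(-alpha * L) for the path length L."""
    if g <= 0 or g0 <= 0:
        raise ValueError("grey levels must be positive")
    return math.log(g0 / g) / alpha


# Hypothetical values, for illustration only
alpha = 0.02       # attenuation coefficient in 1/mm
g_bottom = 200.0   # grey level of the dry bottom without the cap
t_cap = 0.9        # fraction of IR transmitted by the PMMA cap
L_true = 30.0      # true path length in water, mm

# Grey level recorded with both the cap and the water layer in place
g_wet = g_bottom * t_cap * math.exp(-alpha * L_true)

L_naive = penetration_length(g_wet, g_bottom, alpha)          # G0 taken without the cap
L_corr = penetration_length(g_wet, g_bottom * t_cap, alpha)   # G0 taken with the cap
```

Under this assumed model, `L_naive` exceeds the true length by $\ln(1/t_{cap})/\alpha$, which is about 5.3 mm with these numbers. That is the systematic overestimate mentioned in the text. `L_corr` recovers the true value, because the cap's attenuation factor cancels in the ratio $G_0/G$.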

Figure 11a compares the contour map of measured water depth with the reference map obtained from the photogrammetric model of the cap. Profiles along the diagonals Y = X and Y = −X are shown in Fig. 11b. The mean absolute difference between reference and measured water depths is 0.8 mm.
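Extracting diagonal profiles and comparing them can be sketched as follows. The sketch assumes a square grid centred on the origin with equal spacing in X and Y. The helper names and the 3 × 3 depth maps are hypothetical. The paper's 0.8 mm figure refers to its own full maps, and `mean_abs_diff` applies equally to flattened maps.

```python
def diagonal_profiles(grid):
    """Profiles along Y = X and Y = -X of a square grid centred on the origin.

    grid[i][j] is the value at X = x0 + j*h, Y = y0 + i*h with
    x0 = y0 = -(n - 1)*h/2, so i == j lies on Y = X and i == n-1-j lies on Y = -X.
    """
    n = len(grid)
    if any(len(row) != n for row in grid):
        raise ValueError("grid must be square")
    main = [grid[k][k] for k in range(n)]            # Y = X
    anti = [grid[n - 1 - k][k] for k in range(n)]    # Y = -X, ordered by increasing X
    return main, anti


def mean_abs_diff(ref, meas):
    """Mean absolute difference between two equally long profiles."""
    if len(ref) != len(meas) or not ref:
        raise ValueError("profiles must be non-empty and of equal length")
    return sum(abs(r - m) for r, m in zip(ref, meas)) / len(ref)


# Hypothetical 3 x 3 depth maps in mm
ref = [[10.0, 11.0, 12.0], [11.0, 12.0, 13.0], [12.0, 13.0, 14.0]]
meas = [[10.5, 11.0, 12.0], [11.0, 11.0, 13.0], [12.0, 13.0, 15.0]]
ref_main, ref_anti = diagonal_profiles(ref)
meas_main, meas_anti = diagonal_profiles(meas)
mad_main = mean_abs_diff(ref_main, meas_main)
```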

Figure 12 shows the contour maps of water surface slopes in the X- and Y-directions. Slope data are returned directly by the measuring procedure, so no post-processing of the reconstructed water surface is needed to extract them. The reference slopes, by contrast, were derived from the gridded surface provided by the survey of the cap.
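Deriving reference slopes from a gridded surface can be sketched with finite differences. The scheme below is an assumption, since the paper does not describe how its reference slopes were computed: central differences inside the grid and one-sided differences at the edges. It is checked against the analytical slope of a hypothetical perfect spherical cap with a 100 mm radius.

```python
import math


def grid_slopes(z, h):
    """Slopes dZ/dX and dZ/dY of a surface sampled on a regular grid of spacing h.

    z[i][j] is the elevation at X = x0 + j*h, Y = y0 + i*h. Central differences
    are used inside the grid, first-order one-sided differences on its edges.
    """
    ny, nx = len(z), len(z[0])
    if nx < 2 or ny < 2:
        raise ValueError("need at least 2 x 2 grid nodes")
    sx = [[0.0] * nx for _ in range(ny)]
    sy = [[0.0] * nx for _ in range(ny)]
    for i in range(ny):
        for j in range(nx):
            jl, jr = max(j - 1, 0), min(j + 1, nx - 1)
            il, ir = max(i - 1, 0), min(i + 1, ny - 1)
            sx[i][j] = (z[i][jr] - z[i][jl]) / ((jr - jl) * h)
            sy[i][j] = (z[ir][j] - z[il][j]) / ((ir - il) * h)
    return sx, sy


# Hypothetical spherical cap of radius R = 100 mm sampled every 1 mm on [-50, 50]^2
R, h = 100.0, 1.0
coords = [-50.0 + k * h for k in range(101)]
cap = [[math.sqrt(R**2 - x**2 - y**2) for x in coords] for y in coords]
sx, sy = grid_slopes(cap, h)
```

For a sphere, the exact slope is $\partial Z/\partial X = -X/\sqrt{R^2 - X^2 - Y^2}$, so the finite-difference result at any node can be checked directly. The central-difference error shrinks as $h^2$.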

Finally, to show that the imaging procedure can reconstruct a moving water surface, the propagation of an approximately one-dimensional gravity wave generated in the box of Fig. 4a is presented. The results for two consecutive frames captured by the camera are shown in Figs. 13 and 14.

6 Conclusions

The measuring technique proposed here can be classified as an imaging remote water surface gauge. It is based on light refraction and absorption and on the simultaneous processing of colour and near-infrared digital images. In addition, the method returns measurements of the water surface slope. It is non-invasive and provides spatially distributed measurements over an extended flow field, even over a smooth bottom topography known a priori. Such data are essential to validate numerical simulation models and assess their accuracy.

The measuring principle does not require a telecentric imaging system. It does, however, need a backlight source emitting in both the visible and the near-infrared range, and the simultaneous acquisition of co-registered visible and near-infrared images. In the implementation described here, the imaging system relies on a 2-CCD camera that captures visible and infrared images through the same optical path. Moreover, the measuring apparatus is simple to set up.

The overall spatial resolution depends jointly on the sensor resolution of the camera and on the grid size of the features depicted on the bottom of the experimental box: the pitch of these interest points limits the shortest resolvable surface wavelength. The temporal resolution depends only on the camera frame rate.
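These dependencies can be made concrete with a rough estimate based on the sampling theorem, which we apply here as an assumption. A grid of pitch $p$ cannot resolve surface wavelengths shorter than $2p$, and a frame rate $f$ cannot resolve frequencies above $f/2$. In practice the usable limits are stricter. The set-up numbers below are hypothetical, not those of the paper.

```python
def resolution_limits(pitch_mm, fov_mm, sensor_px, frame_rate_hz):
    """Rough sampling limits for a feature grid imaged by a camera.

    pitch_mm:      spacing of the interest points on the bottom pattern
    fov_mm:        width of the field of view along one image axis
    sensor_px:     number of pixels along that axis
    frame_rate_hz: camera frame rate
    """
    pixel_mm = fov_mm / sensor_px            # footprint of one pixel on the bottom
    px_per_pitch = pitch_mm / pixel_mm       # pixels available between interest points
    min_wavelength_mm = 2.0 * pitch_mm       # spatial Nyquist limit of the feature grid
    max_frequency_hz = frame_rate_hz / 2.0   # temporal Nyquist limit of the camera
    return pixel_mm, px_per_pitch, min_wavelength_mm, max_frequency_hz


# Hypothetical set-up: 1 m field of view on 1000 pixels, 10 mm pitch, 30 frames/s
limits = resolution_limits(pitch_mm=10.0, fov_mm=1000.0, sensor_px=1000, frame_rate_hz=30.0)
```

The trade-off is visible in `px_per_pitch`. A finer pitch improves the resolvable wavelength but leaves fewer pixels to locate each feature, so the sensor resolution and the pattern pitch have to be chosen together.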

Since the absorption properties of water are more favourable in the near-infrared range than in the visible spectrum, the penetration depth can be estimated with high sensitivity, without colouring agents, using a suitable calibrated absorption model.
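This sensitivity argument can be illustrated under an assumed exponential absorption model $G = G_0 e^{-\alpha L}$. This is not necessarily the calibrated model of the paper. Under that model, a one-count change in grey level maps to a path-length change of $\ln(G/(G-1))/\alpha$. The sketch assumes the dry-bottom reference $G_0$ fills the full A/D range. The attenuation coefficients and the function name are hypothetical.

```python
import math


def depth_step_per_count(L, alpha, g0_counts):
    """Change in inferred path length caused by a one-count drop in grey level.

    Uses the ASSUMED model G = G0 * exp(-alpha * L); g0_counts is the grey level
    of the unattenuated (dry-bottom) reference, in A/D counts.
    """
    g = g0_counts * math.exp(-alpha * L)
    if g <= 1.0:
        return math.inf   # signal has reached the last quantisation level
    # L(G - 1) - L(G) = ln(G / (G - 1)) / alpha
    return math.log(g / (g - 1.0)) / alpha


alpha = 0.02  # hypothetical attenuation coefficient, 1/mm
table = {}
for bits in (8, 12, 16):
    g0 = 2 ** bits - 1  # reference assumed to use the full A/D range
    table[bits] = [depth_step_per_count(L, alpha, g0) for L in (10.0, 50.0, 100.0)]
```

Under these assumptions, the depth step grows roughly as $e^{\alpha L}$, so sensitivity decays with depth. More A/D bits reduce the step at every depth. A larger $\alpha$ improves sensitivity for thin layers but worsens it for thick ones. This is one way to read the suggestion of tuning the near-infrared wavelength to the depth range.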

The experimental tests show that the proposed imaging technique is an effective and reliable method for measuring the water surface in both static and dynamic conditions, even over a non-horizontal bottom known a priori. In particular, the technique estimates water levels with an accuracy of the order of 1 mm. The main practical drawback is the need for a back-lighting device, which restricts the technique to laboratory-scale applications. A further weak point is that the sensitivity of the penetration depth measurement decreases with water depth. Consequently, the technique is not recommended when large water depth excursions are expected. To overcome this limitation, it could be sufficient to adopt a back-lighting source whose peak near-infrared emission wavelength can be set according to the measured depth range.

In general, the estimate of the penetration length is mainly affected by A/D conversion (8, 12, or 16 bit), photon noise, and the structure of the IR absorption model. Moreover, according to Snell's law of refraction, the penetration length in water depends on the steepness of the free surface. This effect can become significant for strong surface slopes, such as those commonly observed in small-amplitude waves (e.g. short gravity or capillary waves). If the surface is very steep, boundary effects may arise, because the refracted rays could fall outside the area covered by the colour screen. Nevertheless, the test cases presented here show that the technique is effective for surface slopes of the order of ±1. It fails when the wave breaks, or when the water surface is so steep that the square structure of the coloured pattern is completely destroyed in the camera image.
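The influence of surface steepness on the penetration length can be sketched with Snell's law. For simplicity, the sketch assumes a vertical viewing ray (a telecentric approximation, although the method itself does not require telecentric optics), a flat bottom and a constant refractive index of 1.33. A ray meeting a surface tilted by $\beta$ refracts to $\theta_t = \arcsin(\sin\beta / n)$ from the normal. It therefore travels through the water at $\delta = \beta - \theta_t$ from the vertical. The function name and the 50 mm depth are hypothetical.

```python
import math

N_WATER = 1.33  # approximate refractive index of water, assumed constant here


def refracted_ray(h, slope, n=N_WATER):
    """Geometry of a vertical viewing ray refracted at a tilted water surface.

    h:     vertical distance from the surface point to a flat bottom
    slope: local surface slope (tangent of the tilt angle)
    Returns (delta, path_length, bottom_shift): tilt of the refracted ray from
    the vertical (rad), path length through the water, and magnitude of the
    horizontal shift of the ray on the bottom.
    """
    beta = math.atan(abs(slope))              # incidence angle equals the surface tilt
    theta_t = math.asin(math.sin(beta) / n)   # Snell: sin(beta) = n * sin(theta_t)
    delta = beta - theta_t                    # refracted ray's tilt from the vertical
    return delta, h / math.cos(delta), h * math.tan(delta)


h = 50.0  # mm, hypothetical water depth
results = {s: refracted_ray(h, s) for s in (0.0, 0.25, 0.5, 1.0)}
```

In this simplified geometry, a slope of ±1 lengthens the optical path in water by roughly 2.6 %. The horizontal shift on the bottom grows much faster, and it is the shift that eventually pushes refracted rays outside the illuminated pattern.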

In theory, the proposed imaging technique could also be applied to large facilities. However, applying it to areas one order of magnitude larger than the one considered here would entail challenging technical problems and a heavy economic effort; a reasonable maximum area is of the order of 1–2 m². For larger facilities, therefore, if the experiments are repeatable, the illumination system and the camera could be moved to image different portions of the domain during different runs. Alternatively, multiple cameras and illumination systems could be used to image different parts of the domain simultaneously.

References

Aureli F, Maranzoni A, Mignosa P, Ziveri C (2011) An image processing technique for measuring free surface of dam-break flows. Exp Fluids 50(3):665–675

Barter JD, Beach KL, Lee PHY (1993) Collocated and simultaneous measurement of surface slope and amplitude of water waves. Rev Sci Instrum 64(9):2661–2665

Bay H, Ess A, Tuytelaars T, Van Gool L (2008) Speeded-up robust features (SURF). Comput Vis Image Underst 110(3):346–359

Chatellier L, Jarny S, Gibouin F, David L (2013) A parametric PIV/DIC method for the measurement of free surface flows. Exp Fluids 54(3):1488–1502

Dabiri D (2009) Digital particle image thermometry/velocimetry: a review. Exp Fluids 46(2):191–241

Eaket J, Hicks FE, Peterson AE (2005) Use of stereoscopy for dam break flow measurement. J Hydraul Eng 131(1):24–29

Fan P, Men A, Chen M, Yang B (2009) Colour-SURF: a SURF detector with local kernel colour histograms. In: Proceedings of the IC-NIDC2009. Beijing, China, pp 726–730

Förstner W, Gülch E (1987) A fast operator for detection and precise location of distinct points, corners and circular features. In: Proceedings of the ISPRS intercommission conference on fast processing of photogrammetric data. Interlaken, Switzerland, pp 281–305

Gomit G, Chatellier L, Calluaud D, David L (2013) Free surface measurement by stereo-refraction. Exp Fluids 54(6):1540

Hale GM, Querry MR (1973) Optical constants of water in the 200-nm to 200-μm wavelength region. Appl Opt 12(3):555–563

Harris C, Stephens M (1988) A combined corner and edge detector. In: Proceedings of the 4th Alvey vision conference, pp 147–151

Jähne B, Klinke J, Waas S (1994) Imaging of short ocean wind waves: a critical theoretical review. J Opt Soc Am A 11(8):2197–2209

Jähne B, Schmidt M, Rocholz R (2005) Combined optical slope/height measurements of short wind waves: principle and calibration. Meas Sci Technol 16:1937–1944

Kou L, Labrie D, Chylek P (1993) Refractive indices of water and ice in the 0.65- to 2.5-μm spectral range. Appl Opt 32(19):3531–3540

Kraus K (1997) Photogrammetry. Dümmler, Bonn

Kurata J, Grattan KTV, Uchiyama H, Tanaka T (1990) Water surface measurement in a shallow channel using the transmitted image of a grating. Rev Sci Instrum 61(2):736–739

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Moisy F, Rabaud M, Salsac K (2009) A synthetic Schlieren method for the measurement of the topography of a liquid interface. Exp Fluids 46(6):1021–1036

Moravec HP (1977) Towards automatic visual obstacle avoidance. In: Proceedings of the 5th international joint conference on artificial intelligence, p 584

Ng I, Kumar V, Sheard GJ, Hourigan K, Fouras A (2011) Experimental study of simultaneous measurement of velocity and surface topography: in the wake of a circular cylinder at low Reynolds number. Exp Fluids 50(3):587–595

Nitsche W, Dobriloff C (eds) (2009) Imaging measurement methods for flow analysis. Springer, Berlin

Pegau WS, Gray D, Zaneveld JRV (1997) Absorption and attenuation of visible and near-infrared light in water: dependence on temperature and salinity. Appl Opt 36(24):6035–6046

PhotoModeler Pro (2003) Version 5.0. Eos Systems Inc., Vancouver

Trajkovic M, Hedley M (1998) Fast corner detection. Image Vis Comput 16(2):75–87

Tsubaki R, Fujita I (2005) Stereoscopic measurement of a fluctuating free surface with discontinuities. Meas Sci Technol 16:1894–1902

Wyszecki G, Stiles WS (1982) Colour science: concepts and methods, quantitative data and formulae. Wiley, New York

Zhang X, Cox CS (1994) Measuring the two-dimensional structure of a wavy water surface optically: a surface gradient detector. Exp Fluids 17(4):225–237

Zheng Z, Wang H, Teoh EK (1999) Analysis of gray level corner detection. Pattern Recogn Lett 20:149–162

Acknowledgments

The authors are grateful to the Reviewers for their valuable comments and helpful suggestions.


Cite this article

Aureli, F., Dazzi, S., Maranzoni, A. et al. A combined colour-infrared imaging technique for measuring water surface over non-horizontal bottom. Exp Fluids 55, 1701 (2014). https://doi.org/10.1007/s00348-014-1701-0
